1. Introduction

We use our five senses to grasp our surroundings, and vision is said to account for about 90% of the information we receive. However, people are thought to use auditory information to compensate for what cannot be confirmed visually because it is to the side, behind, or in shadow. For example, when we cross a road at dusk or at night, when visibility is limited, and a stopped vehicle suddenly moves or turns at an intersection and comes into view, visual information alone is not enough to detect it quickly; auditory information is also required. When crossing the road, we often unconsciously turn our head in the direction of the sound of a vehicle. Because auditory information alone leaves us uneasy, we may turn our face to reconfirm the sound source visually.

On the other hand, hearing-impaired (deaf) people and young people who walk around with earphones listening to music at high volume may not be able to hear the sounds around them, which is assumed to increase the risk of experiencing traffic accidents. Hearing dogs play a role in supporting the daily lives of the hearing impaired. Indoors, they listen to various sounds such as ringing telephones, doorbells, boiling kettles, fire alarms, and human voices on behalf of hearing-impaired people; communicate what the sounds are; and lead them to respond appropriately. A hearing dog does not convey detailed information about the sound source but merely attracts the person’s attention through direct contact or special behavior. It is assumed that the hearing-impaired person, given simple information by the dog, knows what action to take after visually confirming the situation.

In this study, we focus on auditory support that helps the hearing impaired cross roads safely. The purpose of this study is to develop a hearing assistance system that can detect the location of vehicles on roads and present it to the user. To confirm that a vehicle is approaching on the road, it is necessary to continuously track the position of the sound source. If we can develop a hearing assistance system that detects sounds with a microphone, identifies the sound source, and notifies the user of its location, we can improve the quality of life for the hearing impaired. Some basic ideas and technologies related to this system are described below.

Devices that convert sound characteristics into patterns of vibrating tactile stimuli have been proposed as a way for the hearing impaired to understand their acoustic environment [1,2,3,4,5,6,7,8,9,10,11,12,13]. In pioneering work in this field, Bekesy (1955, 1959) created two artificial cochleae and fixed them to a subject’s forearms [1,2]. The sound generated by loudspeakers drove two spatially separated microphones, whose output was amplified to operate the two artificial cochleae, which vibrated on the subject’s arms. As a result, the subject was able to accurately determine the direction of the sound. Bekesy reported that auditory sound source localization depends only on the difference in intensity of sounds. Richardson et al. (1979) reported that, by transmitting sound information through vibration on the skin, subjects can estimate the distance to and direction of the sound source at the same time [4]. They supported Bekesy’s claim by pointing out that sound localization by vibration based on differences in intensity is better than that based on phase or time differences. Frost et al. (1976) showed that tactile localization of a sound source is possible with accuracy comparable to that of normal hearing, that a moving sound source can be tracked, and that attention can be selectively directed by tactile sensation [5]. It has also been reported that people with cochlear implants can track moving sound sources [6]. The distance and direction to the sound source, which are higher-order information derived from the perceived tactile stimuli, are left to the subject’s own judgment. The subject learns the pattern of the tactile stimuli and relates it to the acoustic environment. With a certain amount of training, it is possible not only to localize a sound source but also to track and selectively pay attention to it.

On the other hand, although no robot can act like a hearing dog, many elemental technologies have been proposed for determining the acoustic environment of a disabled person and assisting that person accordingly. Many studies have been reported on the use of microphone array systems for sound source localization, estimation of the trajectory of moving sound sources, and sound source tracking [14,15,16]. A microphone array system consists of many microphones placed far apart, which makes the overall size of the array unsuitable for mobile platforms and places a heavy computational load on the computer. Argentieri et al. listed the following four constraints that are required for mobile platforms [17]: (1) geometric constraints: there must be a trade-off between the combined size of all sensors and the performance of the platform in motion; (2) real-time constraints: short processing time should be guaranteed; (3) wide frequency bands: the computer must be able to process at high speed; and (4) environmental constraints: the system must be operable in dynamic and unpredictable environments. Microphone array systems violate constraints 1, 2, and 3. Acoustic processing using binaural microphones, which can be easily mounted on a mobile platform and satisfy at least constraint 1, is well known, and there are many reports on sound source localization, detection of multiple sound sources, and tracking of moving sound sources [17,18,19,20,21,22,23,24]. When the microphones are mounted on a mobile platform, their orientation can change. This is used for acoustic processing in active audition [18].

In this study, we used an ear microphone that is easy to wear and to fix in place. When a hearing-impaired person wearing an ear microphone moves his or her head, the direction of the microphone changes. Active audition is often installed in moving robots, and tracking of moving sound sources by robots has been reported [24]. Among the algorithms for computer-based localization and tracking of moving sound sources, one that satisfies all of the above constraints should be adopted.

Let us assume that the location of the sound source can be estimated by a computer. Then, how can we convey this information to someone who is hearing impaired? Various methods of presenting the information have been proposed [25,26,27,28,29,30,31,32]. Presenting information on a cell phone screen is the easiest and most convenient way. However, visual communication is not appropriate as a human–machine interface in this case because hearing-impaired people use their vision to understand their surroundings. Ross et al. (2000) proposed a method of using a 3 × 3 array of vibrators on a visually impaired person’s back to indicate the direction of walking by changing the waving pattern [26]. However, nine vibrators are heavy, and it is difficult to place all of them close together. A tactile display using piezoelectric elements has been developed [27]; Khin et al. proposed a soft tactile actuator that can be assembled [31]; and Ontenna, a hearing aid for the deaf, is a hairpin-type device that can convert sound intensity into light or vibration intensity [32].

Haptic interfaces are also used in visual aids for the visually impaired [33,34,35,36,37,38]. In addition to skin stimulation by physical vibration, electrical stimulation of muscles has been proposed as a tactile stimulus [33]. There are proposals to show walking routes to visually impaired persons while avoiding obstacles in the road detected by ultrasonic sensors and to give instructions for a walking route by referring to GPS location information and a map [36,37,38]. In these examples, a computer determines the walking direction of the visually impaired person from the obstacles ahead and the position of the person, both detected by sensors, and conveys the direction to the person by tactile stimuli. A tactile stimulus is more easily transmitted when it is applied directly to the skin, which has high sensory sensitivity, but this is not suitable for long-term use because direct stimulation of the skin tends to cause abrasions. The authors previously proposed a wearable hearing assistance system that estimates the direction of a sound source by computer from acoustic signals detected by ear microphones and notifies the person of the direction of the sound source by vibrators attached to the shoulders [39].

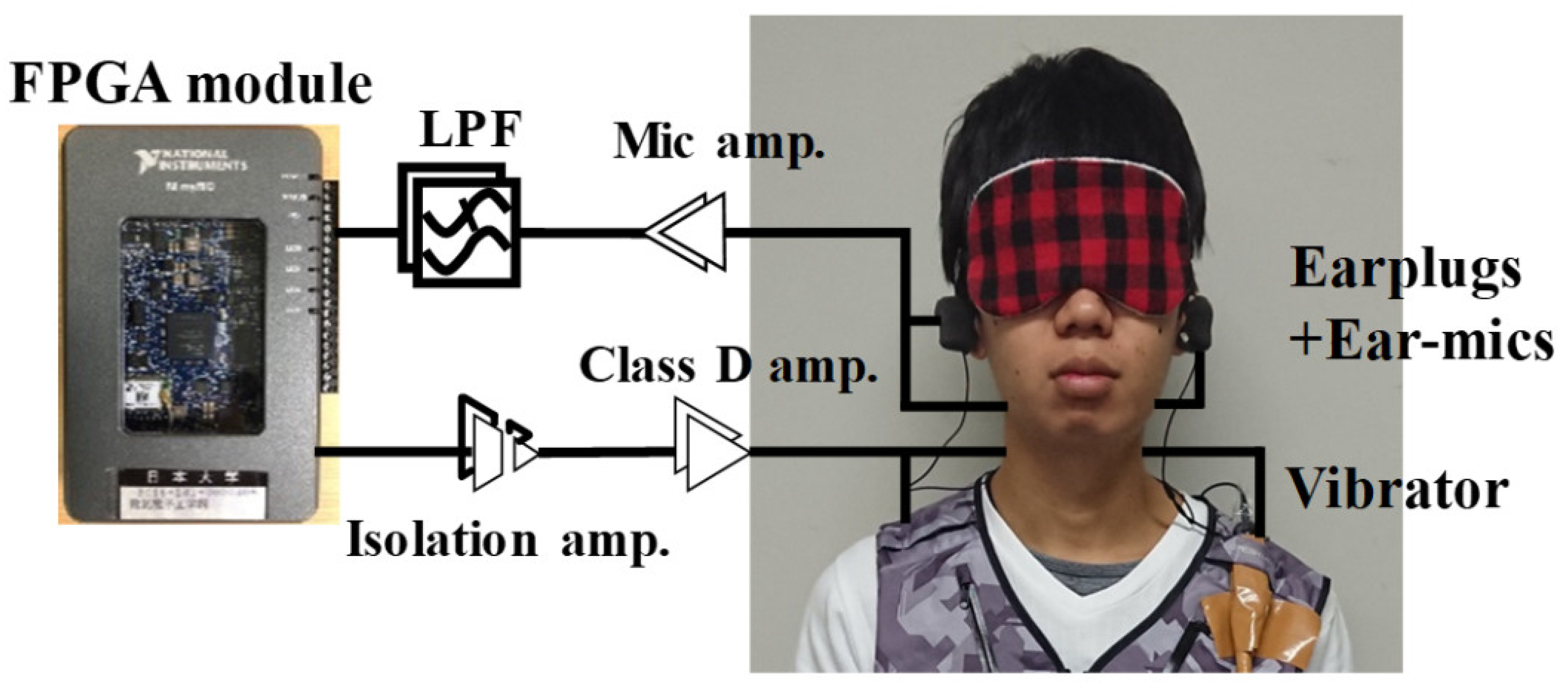

In this study, we modified the previous hearing assistance system so that it can present not only the direction of the sound source but also the distance to the source. The system presents the horizontal movement of the sound source to the person through the vibrators on both shoulders according to the distance to and direction of the sound source estimated from the acoustic signals detected by the ear microphones. Two small speaker drivers attached to a vest are used as vibrators so that the vest can be worn over other clothing outdoors. The ratio of the vibration intensity of the two vibrators varies according to the angle of the sound source, and the closer the sound source, the stronger the vibration intensity; in this way, the relative angle of and distance to the sound source are presented to the person. By turning their face in the direction where the vibration intensity on both shoulders is equal, the person can perceive the approach of the sound source as a change in vibration intensity. We conducted an experiment in which a subject wearing an eye mask and the hearing assistance system tracked a single sound source. By adding an analog vibration pattern for the direction of the sound source, the system made it possible to track the direction of a moving sound source with an error of less than 5°. Presenting the direction of the sound source with high accuracy makes it possible to show the approach and departure of the sound source to the subject.

Section 2 describes the methods. Section 3 describes the configuration of the hearing assistance system, the structure and vibration characteristics of the vibrators, which can be used outdoors, and a flowchart that includes the subject’s behavior. Section 4 describes the tracking of a moving sound source by a subject wearing the system. Section 5 presents the discussion. Finally, the conclusions and future plans are provided.

4. Results

By combining the two ways of driving the vibrators, as described above, it is possible to convey information to the user about the direction of the sound source and the distance to the source through vibration. The vibration intensity ratio of the left and right vibrators indicates the direction of the sound source, and the vibration intensity itself indicates the distance to the source. In principle, if only the intensity of the vibrations is increased without changing the intensity ratio of the vibrators, the user should be able to perceive the source approaching from any direction. However, the directional dependence (directionality) of the mean value of the standard deviation introduces uncertainty into the distance estimate, and thus the system is considered more effective for estimating relative distance than absolute distance. In other words, it may only tell us whether the sound source is approaching or moving away from its current position. In order to simplify the evaluation of the system, the subject tracked a moving sound source.

We examined whether a subject wearing an eye mask could track a loudspeaker fixed on a linearly moving platform as it crossed a point 2 m away from the subject. The subject, a 21-year-old male student with no hearing impairment, was instructed to turn his face in the direction where the vibration intensity of the left and right vibrators was equal. From four seconds after the start of its motion, the loudspeaker continuously generated the sound of a motorcycle engine. A schematic diagram of the experiment is shown in Figure 14. The measurement results are shown in Figure 15: Figure 15a shows the time variation of the interaural time difference, Figure 15b shows the time variation of the mean value of the standard deviation, and Figure 15c shows the output voltage to the left and right vibrators. Immediately after the onset of sound, the interaural time difference was −60 μs because the loudspeaker was located on the subject’s right side (Figure 15a). At that time, the output voltage of the vibrator was higher on the right (Figure 15c), so the subject turned his face to the right. After that, the interaural time difference was gradually corrected to around zero. This is because the subject continued to face the direction of the moving loudspeaker according to the vibration of the vibrators. The mean value of the standard deviation was 70° immediately after the onset of the sound but decreased to around 50° as the loudspeaker approached. The output voltage to the left and right vibrators increased accordingly. As the loudspeaker approached, the vibration of the vibrators gradually intensified, so the subject could perceive that the loudspeaker was approaching. For an indoor sound source that is moving a relatively short distance, it is possible to accurately notify the subject of its movement. As shown in the flowchart in Figure 13, since the subject’s motion is included in the control loop, individual differences in tracking motion are likely to occur. Keeping the head pointed in the direction where the vibrations of both vibrators are equal while the sound source moves is not always easy, especially when the movement is fast. If the subject does not accurately point in the direction of the sound source, the vibration intensity does not correspond to the distance to the source. By training the subject, the accuracy of the motion of pointing the head in the direction of the sound source could be improved to some extent, and the effect of individual differences could be controlled.
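The interaural time difference readings above can be related to a bearing angle with a simple far-field model, in which a source at angle θ from straight ahead produces a time difference of (d/c)·sin θ. The following is a minimal sketch under stated assumptions, not the conversion used in this study: the model itself, the function name `itd_to_angle_deg`, the speed of sound (343 m/s), and the effective microphone spacing (0.23 m) are all hypothetical choices made for illustration. The sign of the result simply follows the sign of the input, so a negative value corresponds to a source on the right, as in Figure 15a.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C
MIC_SPACING_M = 0.23        # assumed effective spacing of the ear microphones

def itd_to_angle_deg(itd_s, spacing_m=MIC_SPACING_M, c=SPEED_OF_SOUND_M_S):
    """Convert an interaural time difference (s) to a bearing angle (degrees).

    Far-field model (an assumption): itd = (spacing / c) * sin(angle).
    """
    ratio = c * itd_s / spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp so that asin stays defined
    return math.degrees(math.asin(ratio))

angle_deg = itd_to_angle_deg(-60e-6)
print(f"{angle_deg:.1f} deg")
```

Under these assumptions, a time difference of 60 μs in magnitude maps to an angle of roughly 5°, which is the order of magnitude quoted in the Discussion. A different spacing or a head-shadow model would shift this figure somewhat.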

5. Discussion

To prompt hearing-impaired people to act, it is more effective to apply tactile stimuli directly to the skin, which is highly sensitive. However, the application of electrical or vibrating stimuli to the skin is not appropriate for long-term use because it tends to cause rashes and abrasions due to friction. In this study, two small loudspeaker drivers attached to a vest were used as vibrators so that the vest could be worn outdoors in layers (Figure 9). The vibration amplitude is up to 1.5 mm, which means that a hearing-impaired person could wear this vest over his or her clothes when going out, even when wearing layers. The placement of the vibrators, shown in Figure 9b, is an important factor that affects the perceptibility of the vibrations. When we tested the placement of the vibrators on the subject’s shoulders and back, we found that the protruding area around the scapula was more sensitive to vibration than the area above the muscles. The shape and size of the scapula vary from person to person, and there may also be individual differences in the sensitivity to vibration and the location where it is easily perceived. The position of the vibrators therefore needs to be adjusted individually. The degree of adhesion of the vibrator is also important for perception. This can be addressed by choosing the size that best fits the hearing-impaired person. The subject who participated in this experiment was not inconvenienced by wearing the hearing assistance system.

In this study, we tracked a moving sound source in a room to verify whether the hearing assistance system could transmit information to the subject about the direction of the sound source and the distance to the sound source through vibration. The vibration intensity ratio of the left and right vibrators indicates the direction of the sound source, and the vibration intensity itself indicates the distance to the source. In principle, if the vibration intensity is increased without changing the intensity ratio, the subject should be able to identify the source approaching from any direction. However, as mentioned in the previous section, the directional dependence of the mean value of the standard deviation (directivity) is expected to cause uncertainty in the distance estimate. Therefore, we examined whether the subject, wearing an eye mask, could track a loudspeaker when it was fixed on a linearly moving platform and crossed a point 2 m away from the subject indoors.

As mentioned in

Section 1, the purpose of this study is to provide hearing assistance to the hearing impaired by detecting the location of a vehicle traveling on a road outdoors. Further improvements are needed in the future. Richardson et al. (1979) reported that the location of a sound source placed at a predetermined discrete direction and distance could be estimated by subjects through learning. In our system, the sound was detected and processed in real time, and the position of the sound source was tracked continuously by the vibration of the vibrator alone, without the subject having to learn. The subject wore an eye mask and did not use visual information to track the loudspeaker. The time variation of the interaural time difference (

Figure 15a) shows that immediately after the onset of the sound, the value of the interaural time difference was −60 μs. This indicates that the subject’s face was initially oriented about 5° to the loudspeaker. As the subject continued to face the direction of the moving loudspeaker according to the vibration of the vibrator, the interaural time difference was gradually corrected to near zero. Since the interaural time difference remained below about 60 μs, the direction of the sound source was below about 5°. As shown in

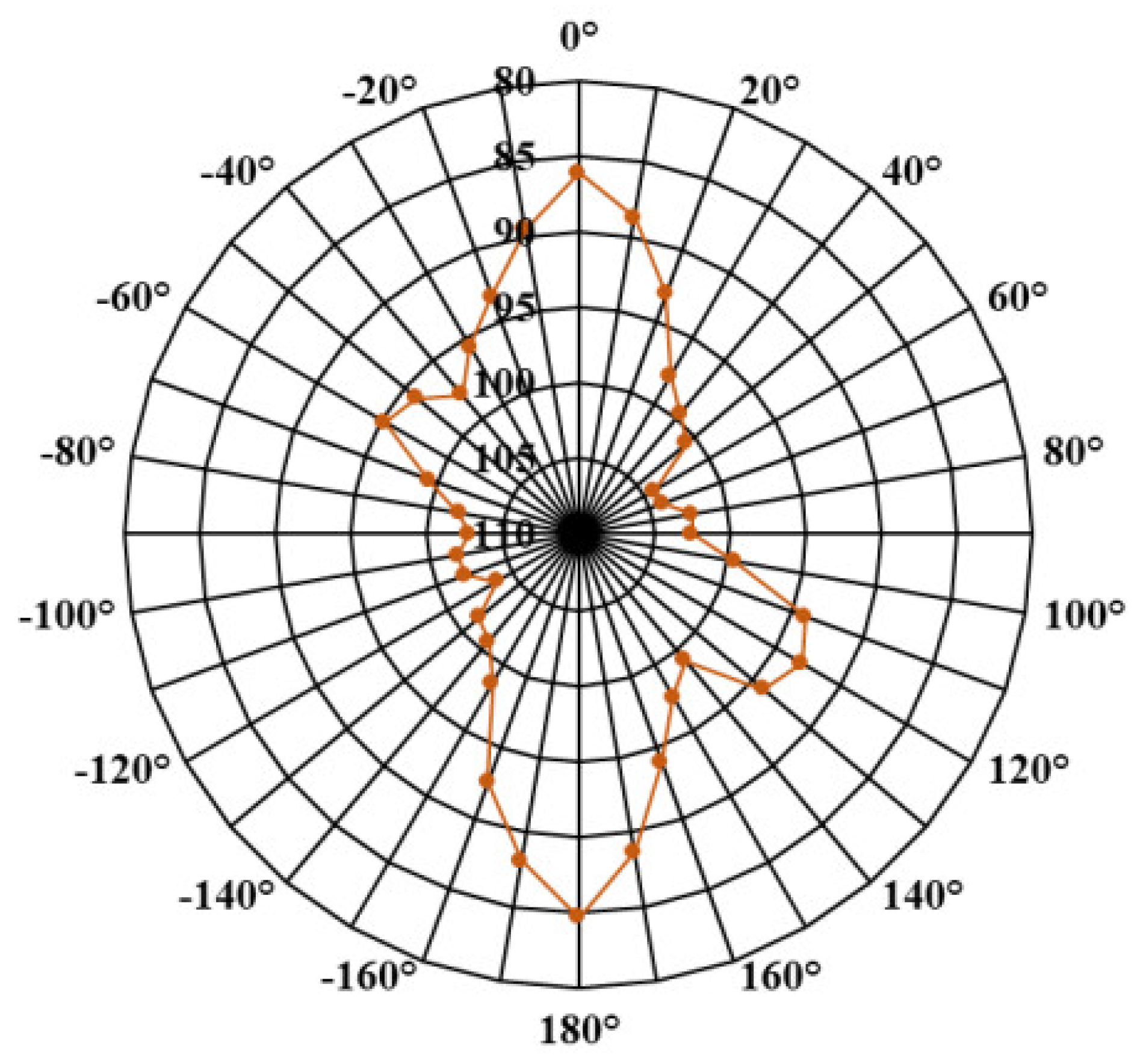

Figure 3, the subject was almost facing the front of the loudspeaker, and the increase in the mean value of the standard deviation estimated from the directional characteristics of the mean was slight (about 2–3°;

Figure 6). Therefore, the time variation of the mean of the standard deviation (

Figure 15b) can be obtained without having to depend on the source direction. As the distance between the subject and the loudspeaker reached about 2.69 to 1.40 m, the mean value of the standard deviation gradually decreased from about 70 to 50°. The time difference between the two ears was near zero, indicating that the subject was directly facing the direction of the loudspeaker. Even though the direction of the loudspeaker from the subject’s point of view changed totally by about 24° before and after the movement, the output voltage to the left and right vibrators (

Figure 15c) remained almost the same. This indicates that the subject kept changing the direction of his face so that the vibration intensity of the vibrators on both shoulders became equal. The movement of the loudspeaker was set to a relatively slow speed of 3.8 cm/s. The speed at which the sound source direction changed from the subject’s perspective was about 0.6°/s. When tracking the sound of a vehicle on a road, it is necessary to consider the validity of the moving speed. The farther away the vehicle, the lower the speed should be at which the direction of the sound source changes.
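The figures quoted above (distances of about 2.69 m and 1.40 m, a total change in direction of about 24°, a platform speed of 3.8 cm/s, and an angular rate of about 0.6°/s) can be cross-checked with elementary geometry. The sketch below assumes that the two distances are the start and end distances from the subject, that 24° is the angle subtended at the subject between those two positions, and that the platform moved in a straight line at constant speed. These readings are an interpretation of the text, and the function name `path_length_m` is introduced here only for illustration.

```python
import math

def path_length_m(r_start_m, r_end_m, swept_angle_deg):
    """Straight-line distance travelled by the source (law of cosines)."""
    swept = math.radians(swept_angle_deg)
    squared = (r_start_m ** 2 + r_end_m ** 2
               - 2.0 * r_start_m * r_end_m * math.cos(swept))
    return math.sqrt(squared)

PLATFORM_SPEED_M_S = 0.038  # 3.8 cm/s, as stated in the text

travel_m = path_length_m(2.69, 1.40, 24.0)
duration_s = travel_m / PLATFORM_SPEED_M_S
mean_rate_deg_s = 24.0 / duration_s

print(f"travel {travel_m:.2f} m, duration {duration_s:.0f} s, "
      f"mean rate {mean_rate_deg_s:.2f} deg/s")
```

Under these assumptions, the path length comes out at about 1.5 m, the travel time at about 40 s, and the mean angular rate at about 0.6°/s, which agrees with the value reported in the text.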

In real scenarios, hearing-impaired people would benefit most if it were possible for them to perceive sound sources from directions that are not included in their visual field. Although experimental data are not shown, the proposed system is capable of detecting the direction of a sound source not only in front of the subject but also to the side and rear, outside the field of vision, and of providing notification. The authors previously reported a method of detecting the direction of lateral and backward sound sources and of providing notification with vibrators attached to the subject’s shoulders [39]. The method of driving the vibrators in that system is simpler than that shown in Figure 11. If the sign of the interaural time difference is positive, the left vibrator is driven by constant voltage, and if it is negative, the right vibrator is driven by constant voltage. The subject was instructed to turn his face to the vibrating side. Both vibrators vibrated only when the subject faced the direction of the sound source. The subject could continue to change the direction of his face by relying on the vibrations to indicate the direction of the sound source, not only in front but also to the side or behind. In the present system, the right and left vibrators are driven by constant voltage when the interaural time difference is less than −300 μs and more than +300 μs, respectively, as shown in Figure 11, so it should be able to present the direction of lateral and backward sound sources as well as the previous system does. Therefore, even if the hearing-impaired person is looking to the right in order to see a vehicle when crossing the road, this system can still provide notification of a vehicle approaching from the left or rear.
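The driving rule described above can be sketched as a small function. This is a hedged illustration rather than the drive law of Figure 11, which is not reproduced in this section. The ±300 μs saturation points and the sign convention (positive favours the left vibrator, negative the right) come from the text. The linear crossfade between the two saturation points, the assumption that the opposite vibrator is off once saturated, the function name `vibrator_levels`, and the `level` parameter (standing in for the overall intensity that encodes distance) are all assumptions.

```python
SATURATION_US = 300.0  # from the text: constant-voltage drive beyond +/-300 us

def vibrator_levels(itd_us, level=1.0):
    """Return (left, right) drive levels, each between 0 and `level`.

    itd_us > 0 favours the left vibrator and itd_us < 0 the right one.
    The linear crossfade inside +/-300 us is an assumed stand-in for the
    analog pattern of Figure 11, which is not given in this section.
    """
    clipped = max(-SATURATION_US, min(SATURATION_US, itd_us))
    balance = clipped / SATURATION_US  # -1 (fully right) to +1 (fully left)
    left = level * (1.0 + balance) / 2.0
    right = level * (1.0 - balance) / 2.0
    return left, right

for itd in (-400.0, -60.0, 0.0, 400.0):
    print(itd, vibrator_levels(itd))
```

With this sketch, a source far to one side drives only the vibrator on that side, a reading of −60 μs drives the right vibrator somewhat harder than the left, and both vibrators receive equal drive once the user faces the source.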

The evaluation was performed by conducting measurements with the sound of a motorcycle engine as the sound source. For real applications, other common sound sources (e.g., the sound of a car engine) need to be considered. In this system, both the direction of the sound source and the distance to the source were obtained from the interaural phase difference of the sound pressure. This was done to take advantage of the fact that phase is independent of amplitude. The phase difference spectrum can be obtained independently of the sound quality. The authors reported that the spectra of the phase difference detected by two spatially separated microphones are almost equal when noise and voice are generated from loudspeakers [42]. In other words, the same results should be obtained when the same experiment is conducted with vehicle sounds instead of the sound of a motorcycle engine. This property of being independent of sound quality is also a drawback in another sense, which is especially apparent when the system is used outdoors: the system reacts to wind noise, about which the user does not need to be alerted. In order to solve this problem, it will be necessary to add a function to the system that can recognize environmental sounds through artificial intelligence.
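The statement that phase is independent of amplitude can be illustrated with a pure tone. The sketch below generates a tone and a copy delayed by 60 μs, estimates the delay from the phase difference at the tone frequency, and repeats the estimate with both signals ten times louder. It is only a single-frequency toy example, not the phase difference spectrum processing used in this system. The sampling rate, tone frequency, helper names, and sign convention (positive when the second signal lags) are assumptions chosen for illustration.

```python
import cmath
import math

def tone(freq_hz, fs_hz, n_samples, delay_s=0.0, gain=1.0):
    """Sampled sine tone with an optional delay and gain."""
    return [gain * math.sin(2.0 * math.pi * freq_hz * (k / fs_hz - delay_s))
            for k in range(n_samples)]

def dft_bin(samples, freq_hz, fs_hz):
    """Complex amplitude of `samples` at a single frequency."""
    return sum(x * cmath.exp(-2j * math.pi * freq_hz * k / fs_hz)
               for k, x in enumerate(samples))

def time_difference_s(first, second, freq_hz, fs_hz):
    """Delay of `second` relative to `first`, from their phase difference."""
    cross = dft_bin(first, freq_hz, fs_hz) * dft_bin(second, freq_hz, fs_hz).conjugate()
    return cmath.phase(cross) / (2.0 * math.pi * freq_hz)

FS_HZ, TONE_HZ, N, DELAY_S = 48_000, 500.0, 960, 60e-6  # 960 samples = 10 periods

quiet = time_difference_s(tone(TONE_HZ, FS_HZ, N),
                          tone(TONE_HZ, FS_HZ, N, DELAY_S), TONE_HZ, FS_HZ)
loud = time_difference_s(tone(TONE_HZ, FS_HZ, N, gain=10.0),
                         tone(TONE_HZ, FS_HZ, N, DELAY_S, gain=10.0), TONE_HZ, FS_HZ)

print(f"quiet: {quiet * 1e6:.1f} us, loud: {loud * 1e6:.1f} us")
```

Both estimates recover the 60 μs delay, because scaling the signals changes only the magnitude of the complex amplitudes and not their phase.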

In this study, the number of sound sources to be tracked was limited to one. Ideally, a hearing assistance system should be able to track the locations of multiple sound sources, to analyze the sound quality of each, and to then prioritize them in order to provide notification. However, tracking multiple sound sources and analyzing the sound quality using artificial intelligence is too computationally demanding to be implemented on a mobile platform and may violate constraints 2 and 3 in Section 1. The proposed system notifies the user of the direction of the loudest sound source in the vicinity. The acoustic signal detected by the microphone is a composite of sounds arriving from various directions. Physically, sound is a scalar quantity because it is pressure fluctuation in the atmosphere, but it can be treated as a vector quantity with a propagation direction by using two microphones. We assume that the direction of a composite vector, which is a combination of several vectors of different sizes, can be approximated by the direction of the vector with the largest amplitude. Strictly speaking, we track one sound source per time frame, and the detected sound source is not limited to one but may switch to others as time passes. In other words, even if the hearing-impaired person happens to be looking to the right when crossing the street, if the sound of a truck approaching from the left (corresponding to the rear) is loud, the system can alert the person to the presence of the approaching truck.
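The assumption that a composite vector points roughly in the direction of its largest component can be checked numerically. The sketch below is a toy two-dimensional illustration in which each source is a hypothetical (amplitude, direction) pair. It is not a model of the acoustic processing in this system, and the amplitudes and directions are invented for the example.

```python
import math

def composite_direction_deg(sources):
    """Direction of the vector sum of (amplitude, direction_deg) pairs."""
    x = sum(a * math.cos(math.radians(d)) for a, d in sources)
    y = sum(a * math.sin(math.radians(d)) for a, d in sources)
    return math.degrees(math.atan2(y, x))

def dominant_direction_error_deg(sources):
    """Angle between the composite direction and the loudest source."""
    _, dominant_dir = max(sources, key=lambda s: s[0])
    return abs(composite_direction_deg(sources) - dominant_dir)

# A loud source at -90 degrees plus a weaker source straight ahead (0 degrees).
much_weaker = [(1.0, -90.0), (0.05, 0.0)]
somewhat_weaker = [(1.0, -90.0), (0.2, 0.0)]

print(f"{dominant_direction_error_deg(much_weaker):.1f} deg")
print(f"{dominant_direction_error_deg(somewhat_weaker):.1f} deg")
```

In this toy case the composite direction stays within about 3° of the loud source when the second source has 5% of its amplitude, and drifts to about 11° when the second source has 20% of its amplitude. The approximation therefore holds best when one source clearly dominates.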

Finally, we compare the function of this system with that of the previous hearing assistance system [39]. The present system is similar in that it can present the direction of a single sound source in all horizontal directions (including lateral and backward directions) to the subject, but it has the additional function of estimating the distance to the sound source. By adding analog vibration patterns for presenting the direction of the sound source, we were able to show the direction of a moving sound source with an error of less than 5°. The high accuracy of the direction compensated for the uncertainty in the estimation of the distance and made it possible to show the approach and departure of the sound source to the subject.