Development and Testing of an AI-Based Specific Sound Detection System Integrated on a Fixed-Wing VTOL UAV

Abstract

1. Introduction

2. Materials and Methods

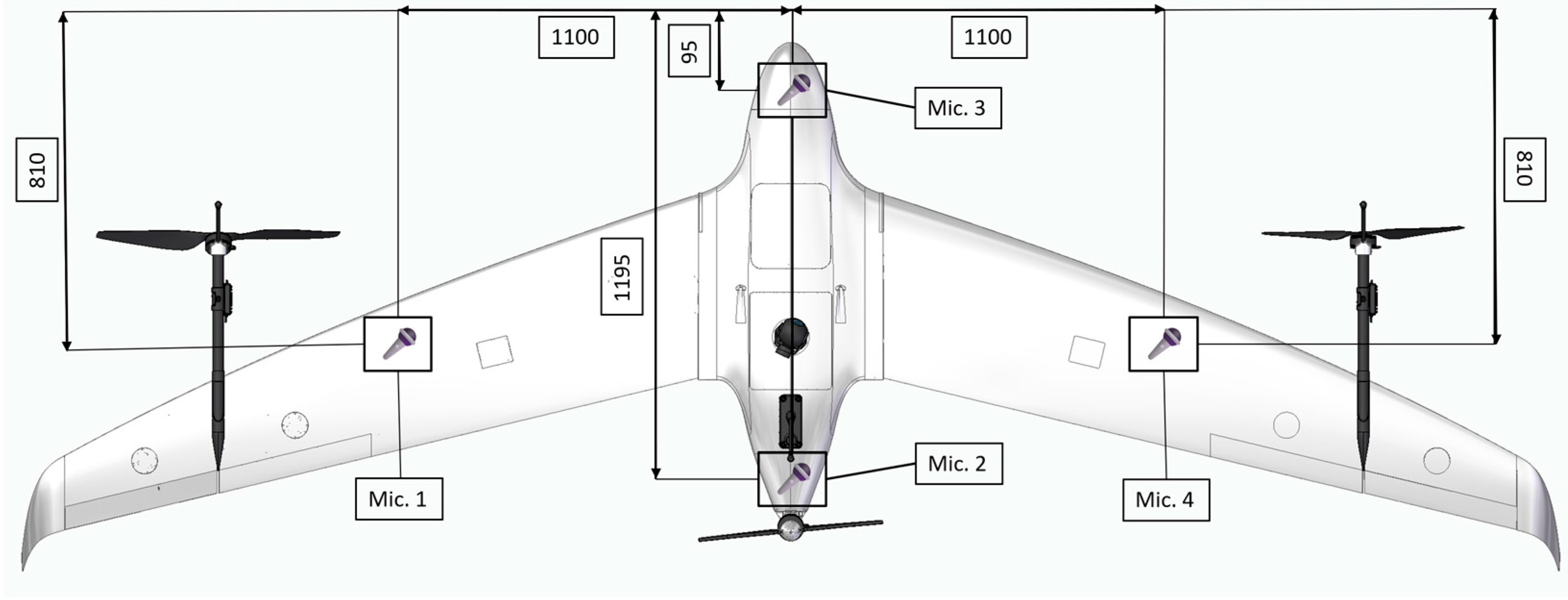

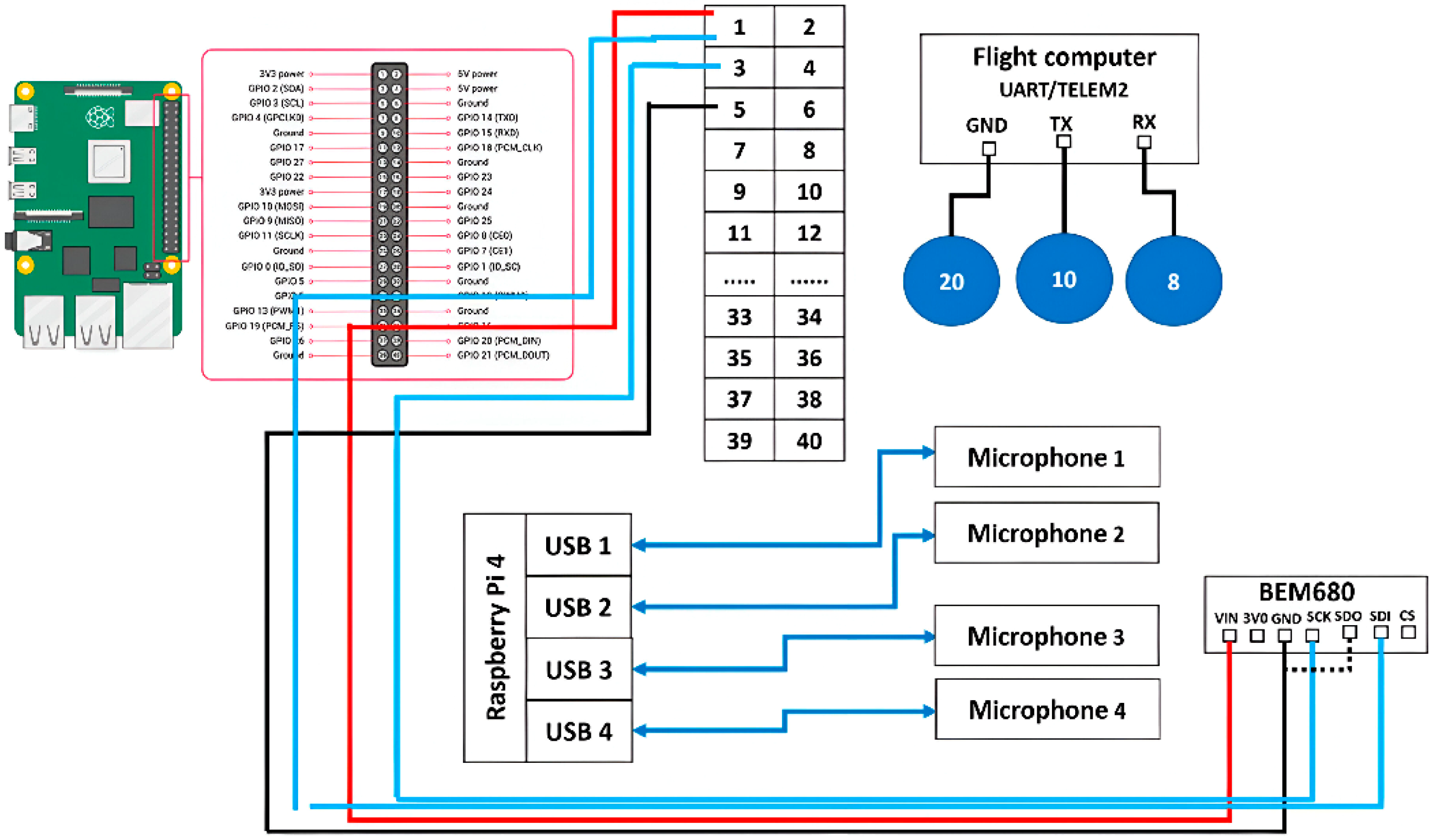

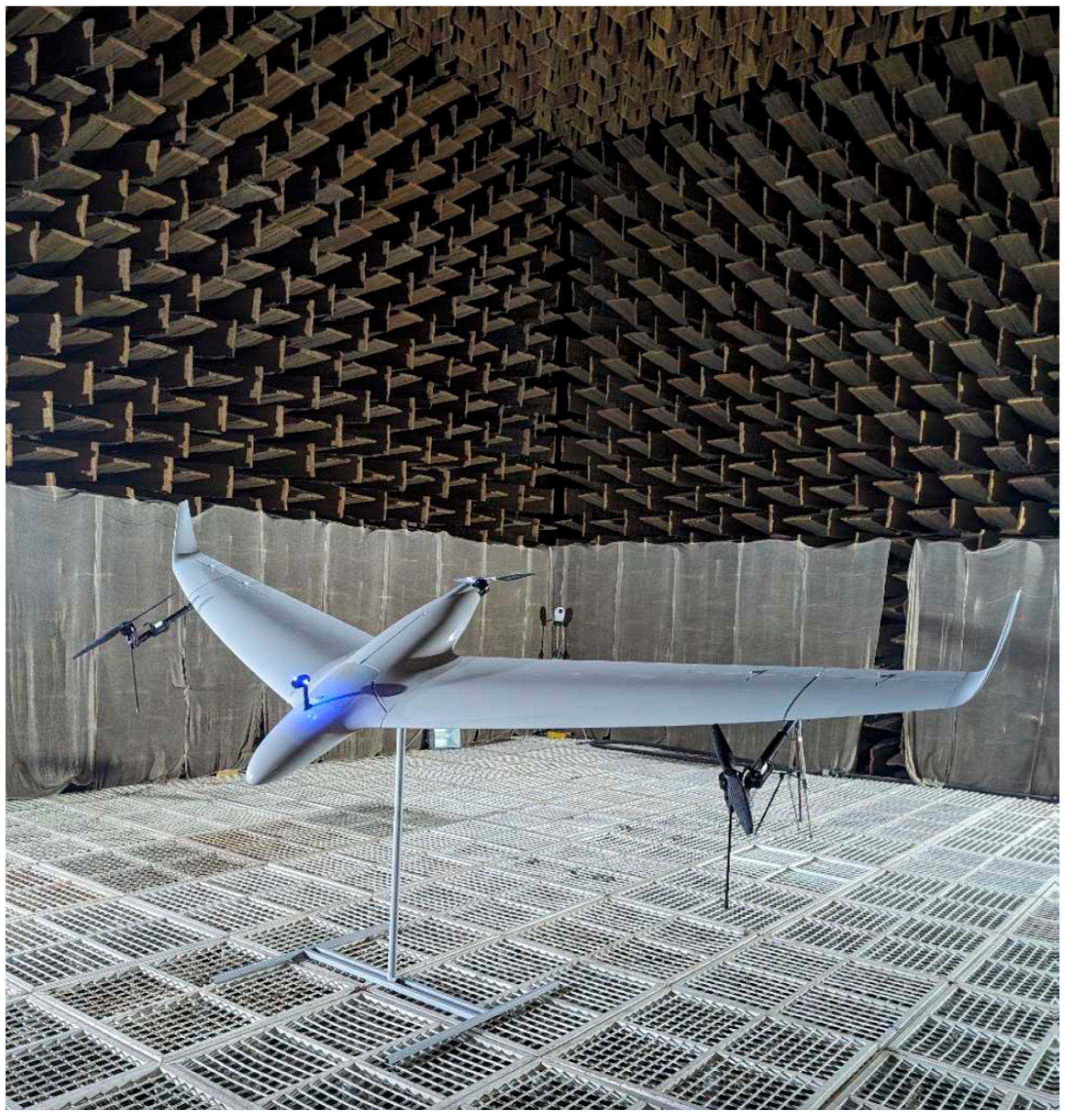

2.1. UAV Platform and Hardware Configuration

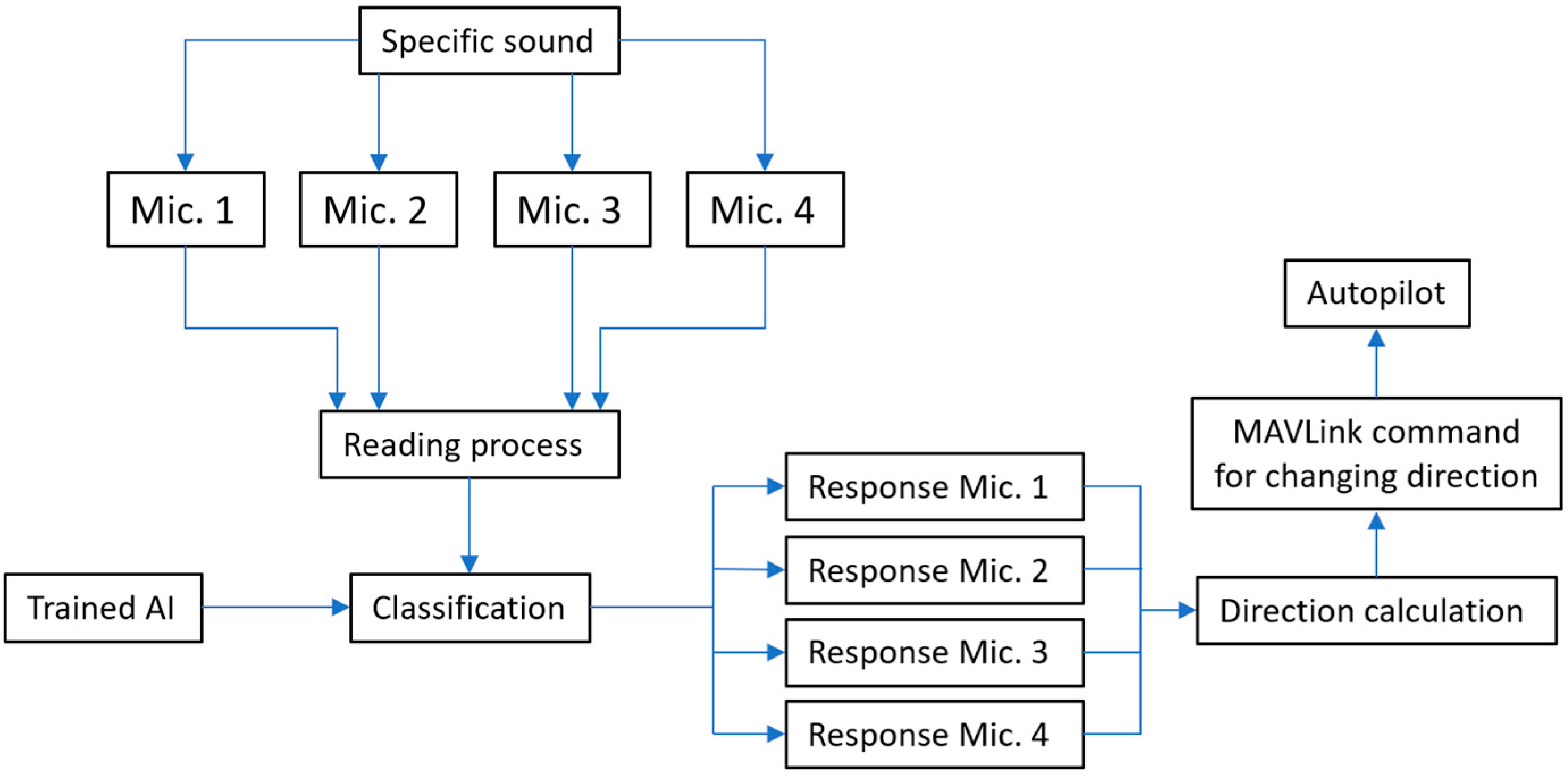

2.2. Algorithm Development

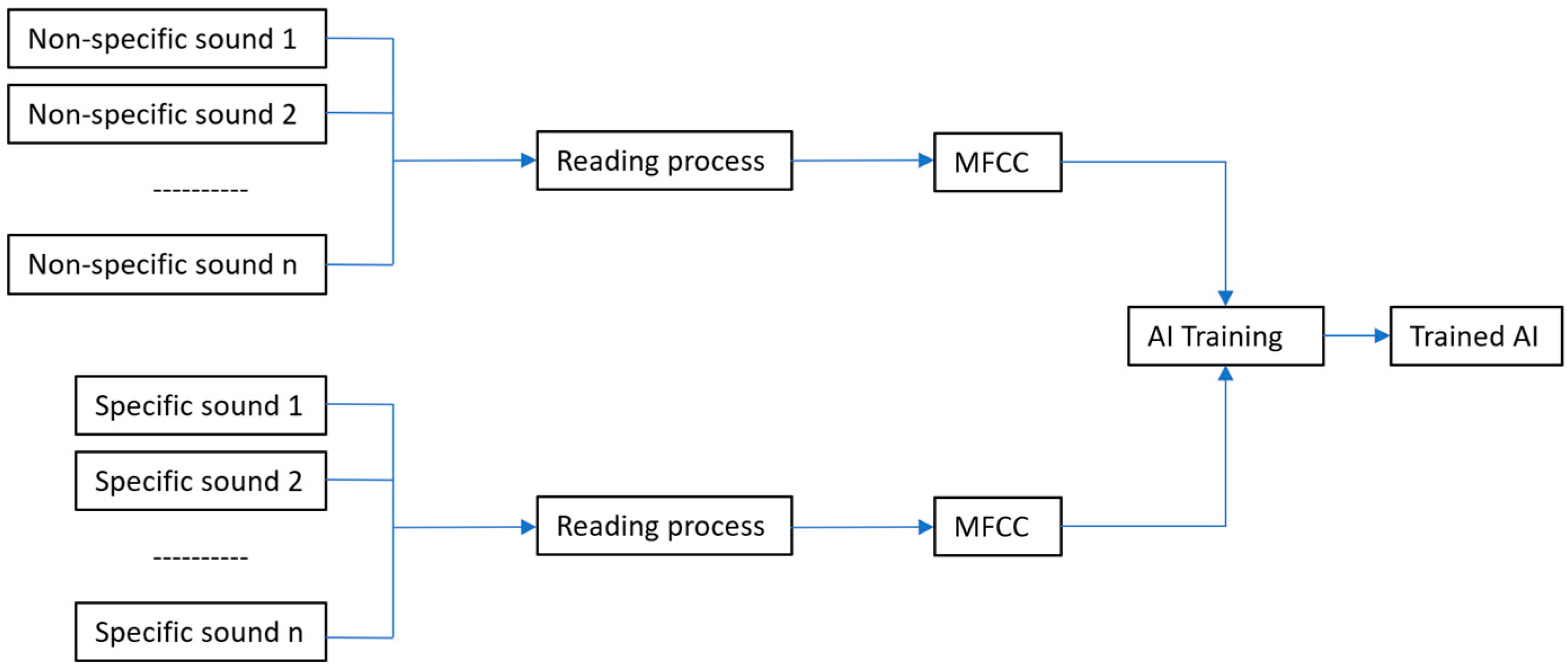

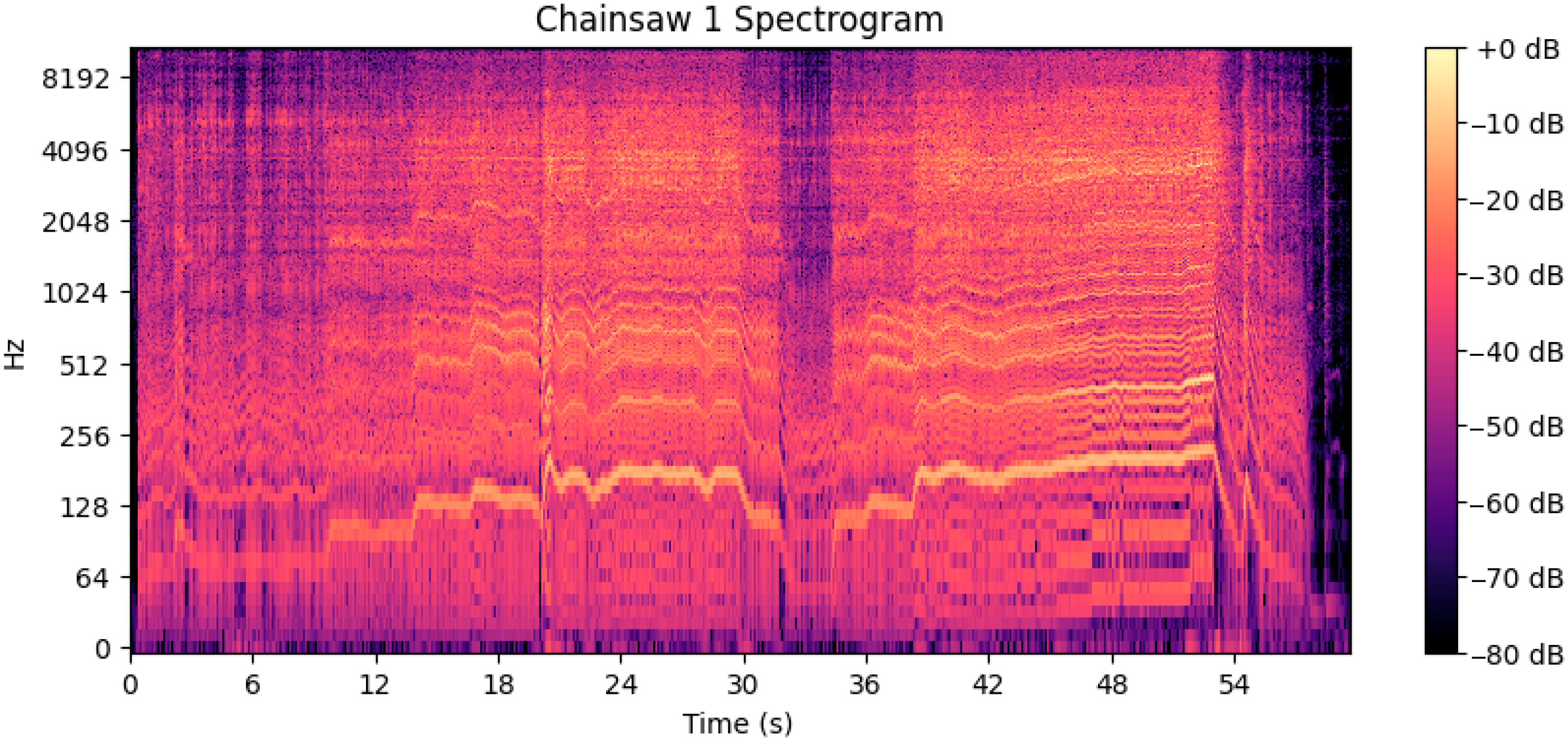

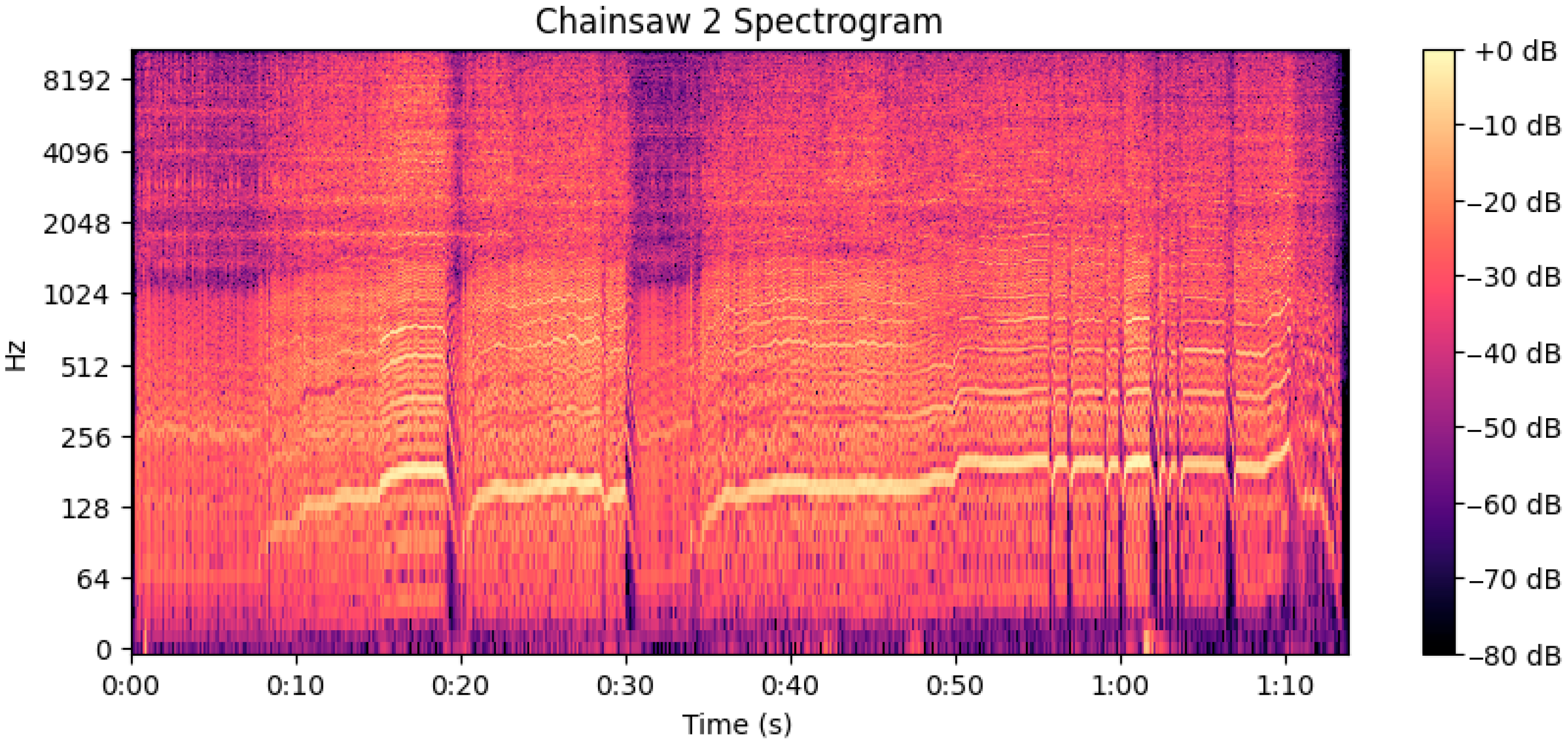

2.2.1. Training the Artificial Intelligence Model for Specific Sound Classification

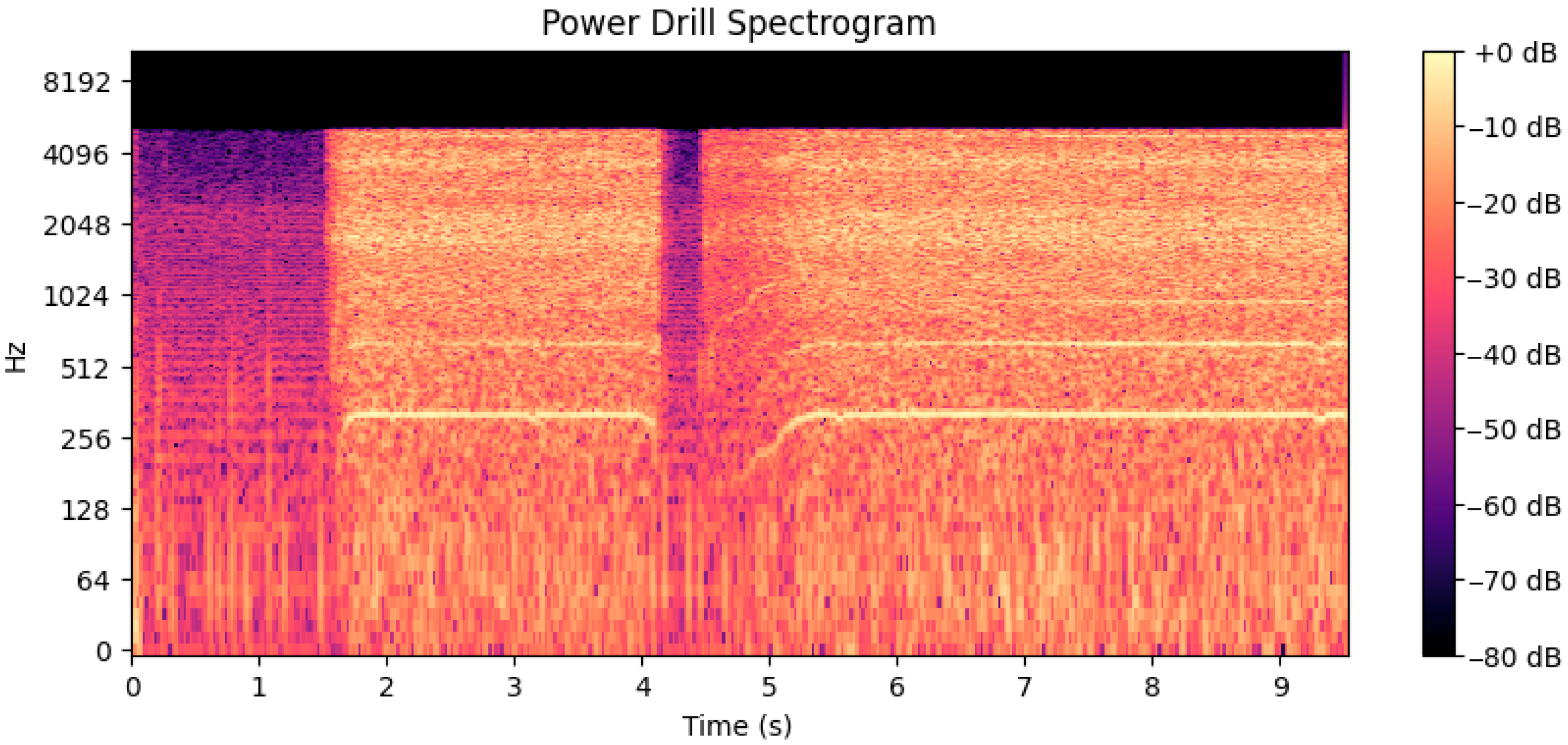

- Non-specific sounds (negative class): environmental and mechanical sounds such as birds, wind, and traffic, as well as power-drill sounds, which were intentionally included because their frequency spectrum is similar to that of a chainsaw;

- Specific sounds (positive class): recordings of chainsaws, electric saws, and combustion-engine (thermal) saws, as typically used in tree-felling operations.
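Both classes reach the classifier as MFCC feature vectors. The sketch below shows one common way to turn a recording into a single fixed-length MFCC vector using only NumPy. The frame length (512), hop (256), 26 mel filters, 13 coefficients, the 0.97 pre-emphasis, and averaging the per-frame coefficients over the clip are all assumptions for illustration; the article's exact extraction settings are not stated here.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(1, n_mels + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):   # rising edge of the triangle
            fb[i - 1, k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge of the triangle
            fb[i - 1, k] = (right - k) / (right - center)
    return fb


def dct_ii_ortho(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    j = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * j + 1) / (2 * n))
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T


def mfcc_vector(signal, sr=16000, n_fft=512, hop=256, n_mels=26, n_mfcc=13):
    """Mean MFCC vector of one clip (assumes len(signal) >= n_fft)."""
    signal = np.asarray(signal, dtype=float)
    emphasized = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])  # pre-emphasis
    n_frames = 1 + (len(emphasized) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(n_fft)           # overlapping windowed frames
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sr).T
    coeffs = dct_ii_ortho(np.log(mel_energy + 1e-10), n_mfcc)  # (n_frames, n_mfcc)
    return coeffs.mean(axis=0)                              # one vector per clip
```

Averaging over frames discards temporal order but gives every clip the same input length, which is what a tree ensemble expects.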

The Random Forest model is defined in terms of the following quantities:

- the MFCC feature vector (model input);

- the output label (0 = non-specific, 1 = specific);

- the number of trees in the forest.
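With a binary label and a forest of T trees, the forest's decision is an aggregate of the individual tree outputs. A minimal sketch of the classic hard majority vote is shown below; the tie rule (favor 0, i.e. no alarm) and the one-split `stump` trees are hypothetical and exist only to make the example runnable.

```python
from collections import Counter
from typing import Callable, List, Sequence

# A "tree" here is any function mapping an MFCC feature vector to a label:
# 0 = non-specific sound, 1 = specific sound.
Tree = Callable[[Sequence[float]], int]


def forest_vote(trees: List[Tree], x: Sequence[float]) -> int:
    """Hard majority vote over the T trees; a tie resolves to 0 (no alarm)."""
    votes = Counter(tree(x) for tree in trees)
    return 1 if votes[1] > votes[0] else 0


def forest_score(trees: List[Tree], x: Sequence[float]) -> float:
    """Fraction of the T trees voting for the specific class."""
    return sum(tree(x) for tree in trees) / len(trees)


def stump(feature: int, threshold: float) -> Tree:
    """Hypothetical one-split tree, used only for illustration."""
    return lambda x: 1 if x[feature] > threshold else 0


trees = [stump(0, 0.5), stump(1, -1.0), stump(2, 2.0)]  # T = 3
print(forest_vote(trees, [1.0, 0.0, 0.0]))  # 1 (two of three trees vote 1)
```

Note that scikit-learn's `RandomForestClassifier` does not use a hard vote: it averages the per-tree class probability estimates and picks the class with the highest mean. `forest_score` is the hard-vote analogue of that score and could be thresholded differently to trade missed detections against false alarms.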

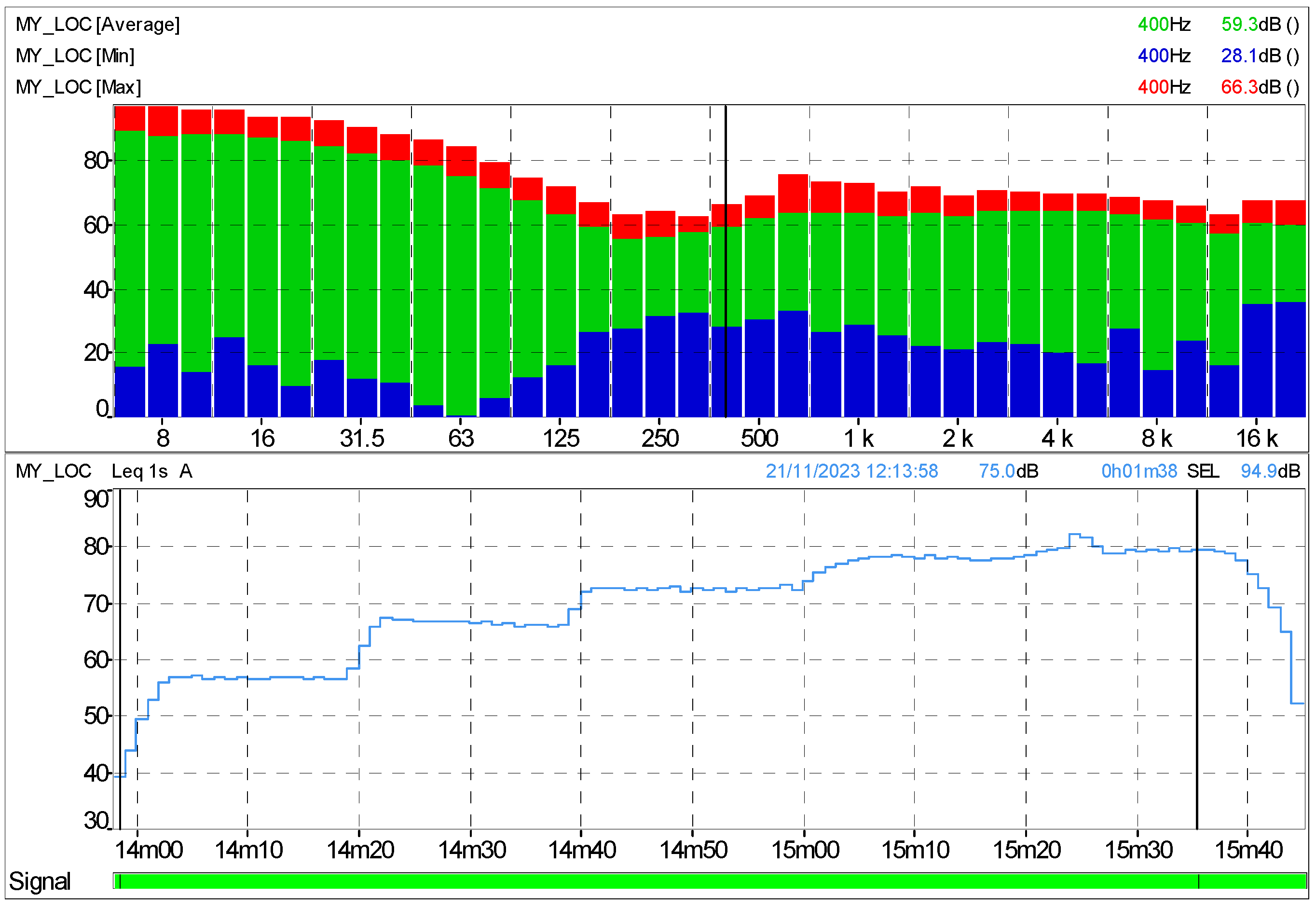

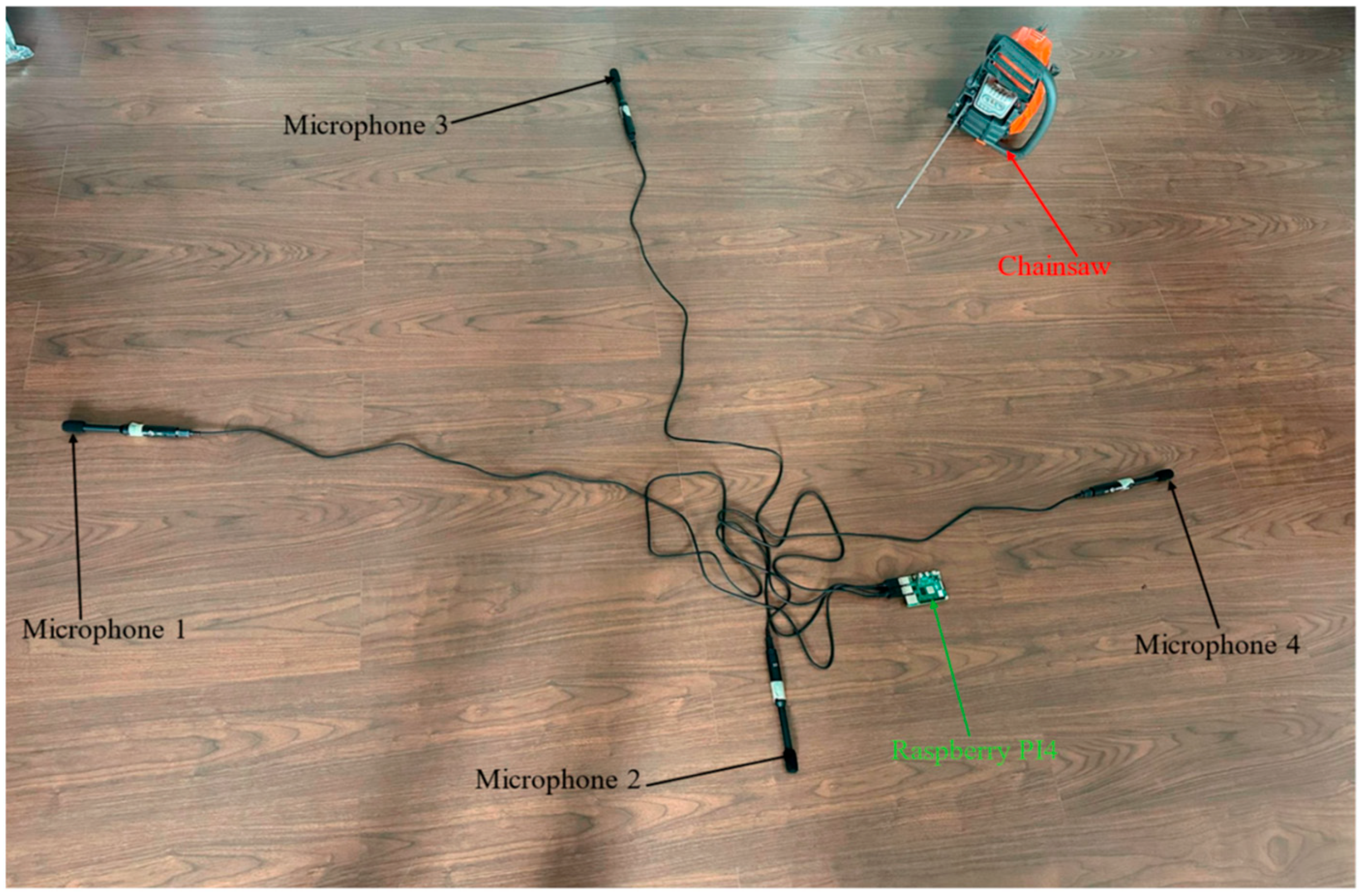

2.2.2. Experimental Validation

- Gasoline-powered chainsaw sounds;

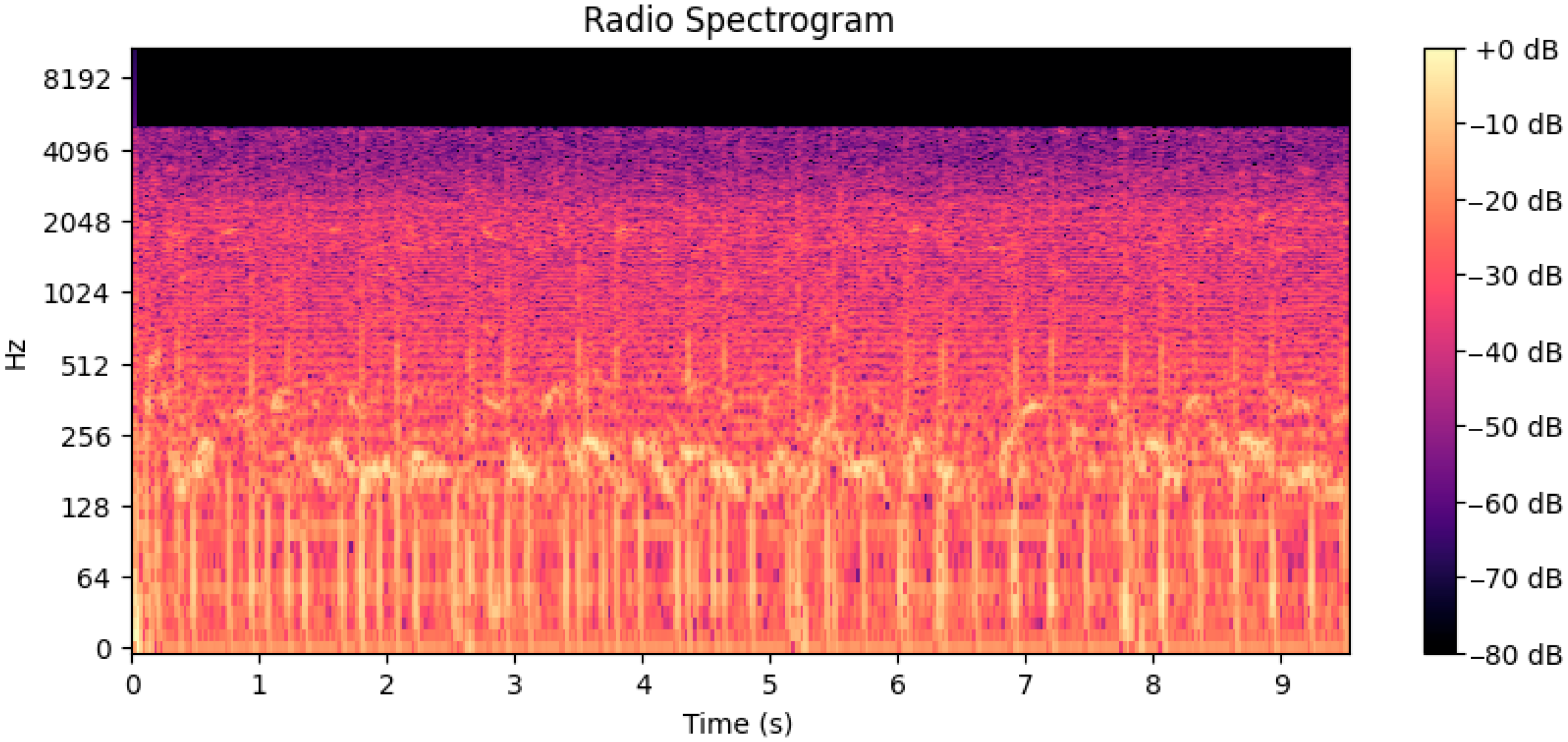

- Radio sounds;

- Power drill sounds;

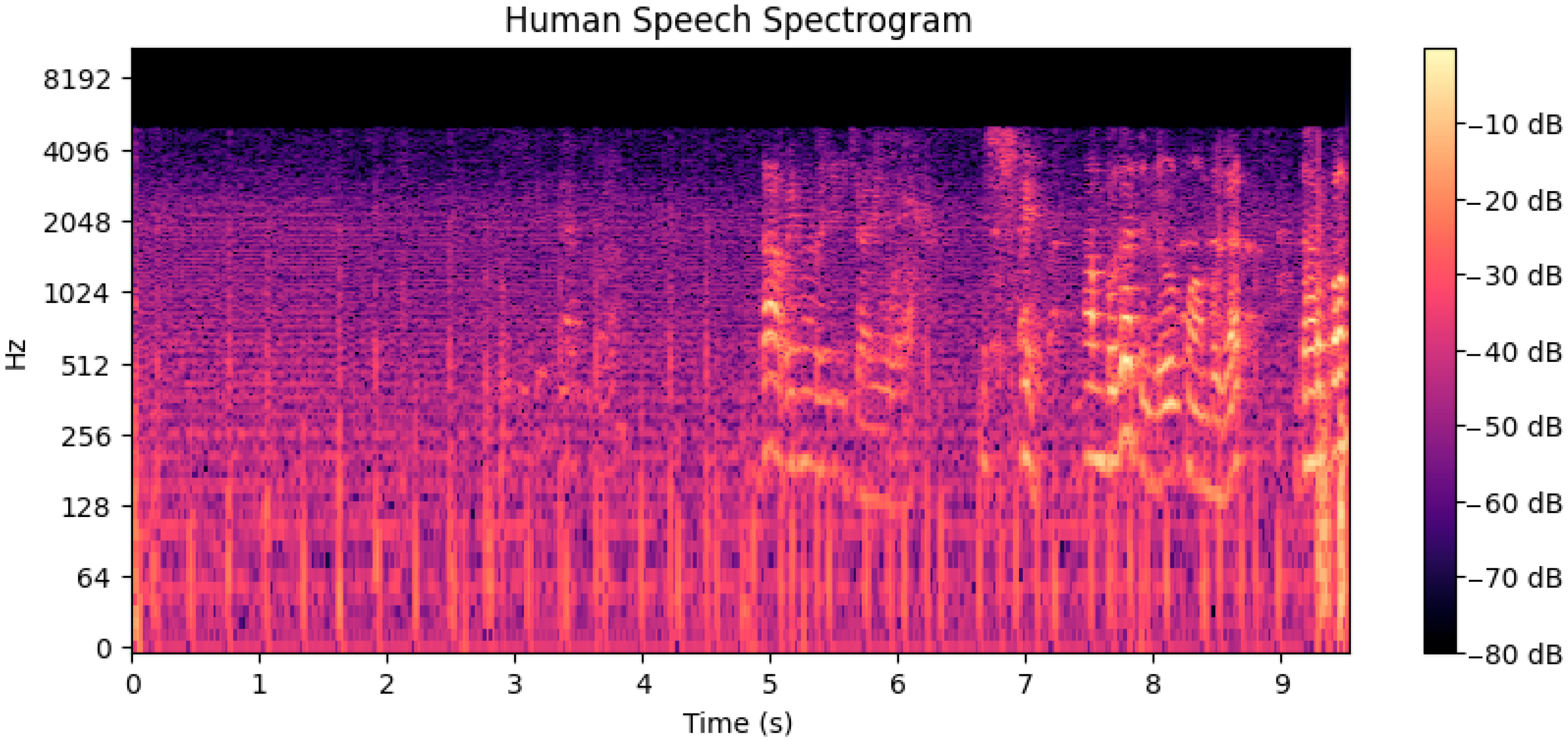

- Human voice recordings.

- Ruris Expert 351, with an engine power of 1.6 kW and a displacement of 45 cm³;

- Husqvarna 135 Mark II, also rated at 1.6 kW, with a displacement of 38 cm³;
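The validation set mixes one category that should trigger a detection (gasoline-powered chainsaws) with three that should not (radio, power drill, human voice). A minimal sketch of how such trials could be tallied is given below, assuming each trial is recorded as a `(category, predicted_label)` pair; the category strings and the report layout are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical category names; only "chainsaw" is a specific (positive) sound,
# the others should NOT trigger a detection.
POSITIVE_CATEGORIES = {"chainsaw"}


def validation_report(trials: Iterable[Tuple[str, int]]) -> Dict[str, object]:
    """trials: (category, predicted_label) pairs, predicted_label in {0, 1}."""
    counts = defaultdict(lambda: [0, 0])  # category -> [times flagged as specific, total]
    for category, predicted in trials:
        counts[category][0] += predicted
        counts[category][1] += 1

    def pooled(positive: bool) -> float:
        # Pool all categories on one side of the positive/negative split.
        rows = [(f, n) for c, (f, n) in counts.items() if (c in POSITIVE_CATEGORIES) == positive]
        total = sum(n for _, n in rows)
        return sum(f for f, _ in rows) / total if total else float("nan")

    return {
        "flagged_rate": {c: f / n for c, (f, n) in counts.items()},
        "true_positive_rate": pooled(True),    # chainsaw clips correctly flagged
        "false_positive_rate": pooled(False),  # radio / drill / voice clips wrongly flagged
    }
```

Keeping the per-category `flagged_rate` alongside the pooled false-positive rate matters here: the power drill was chosen precisely because its spectrum resembles a chainsaw, so its rate can be poor even when the pooled figure looks acceptable.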

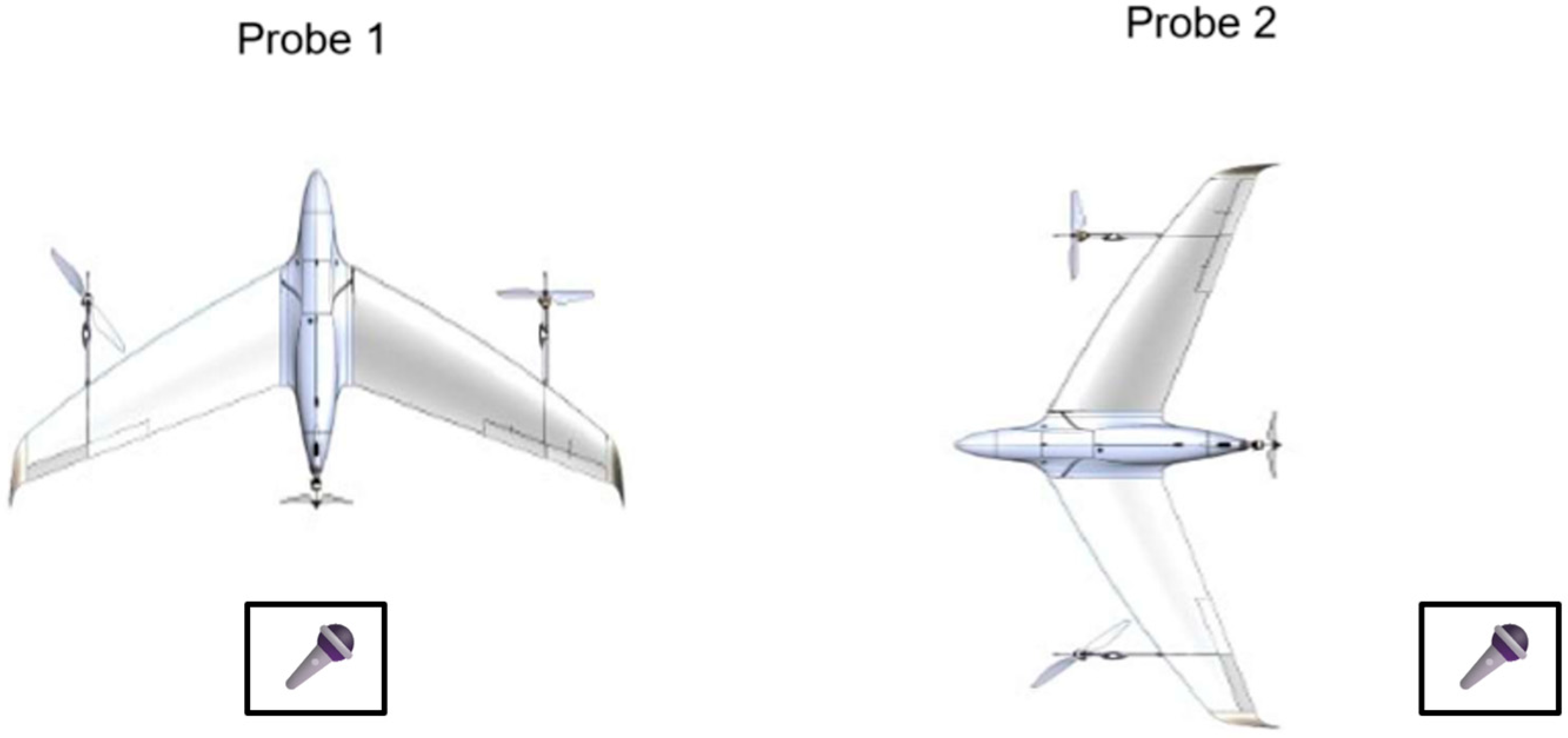

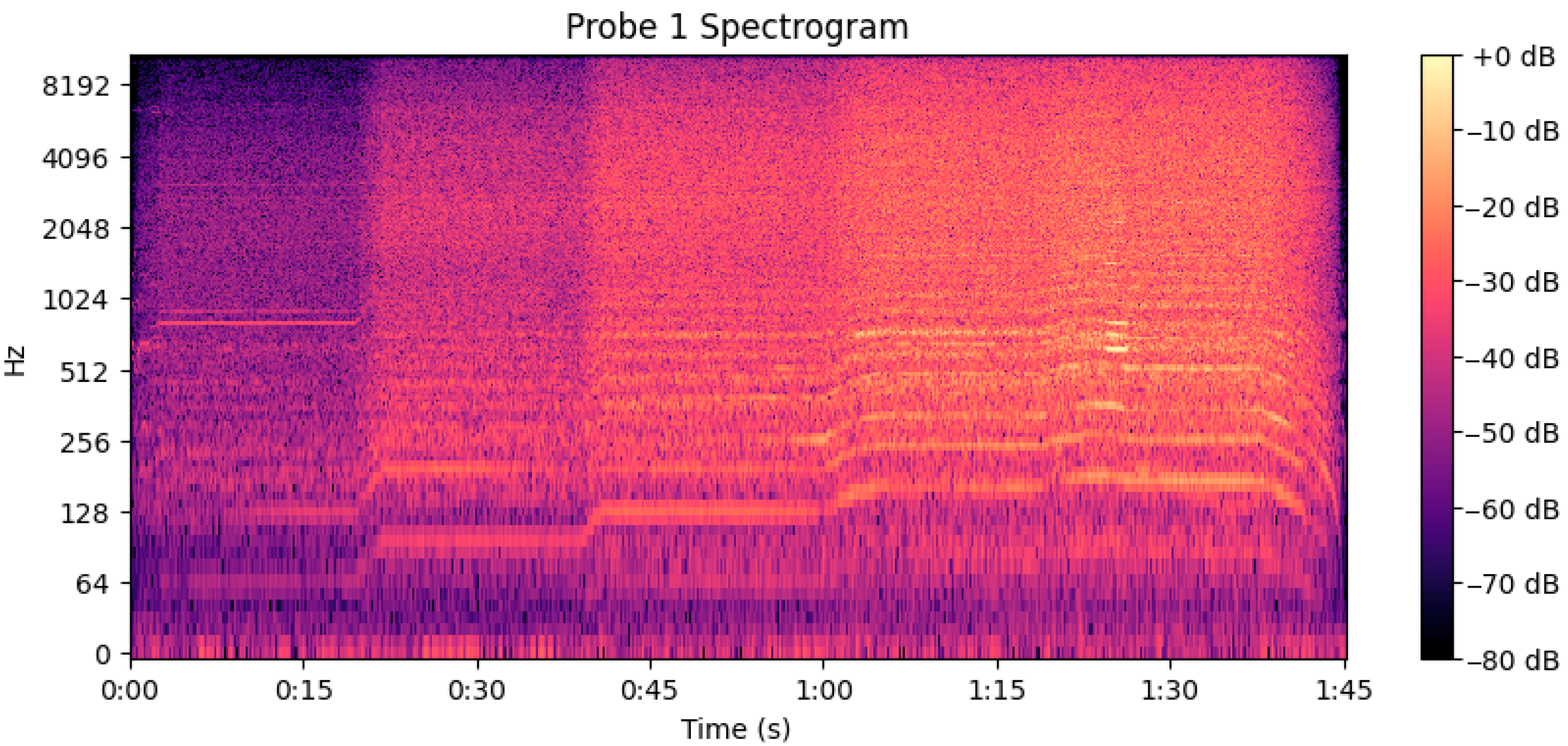

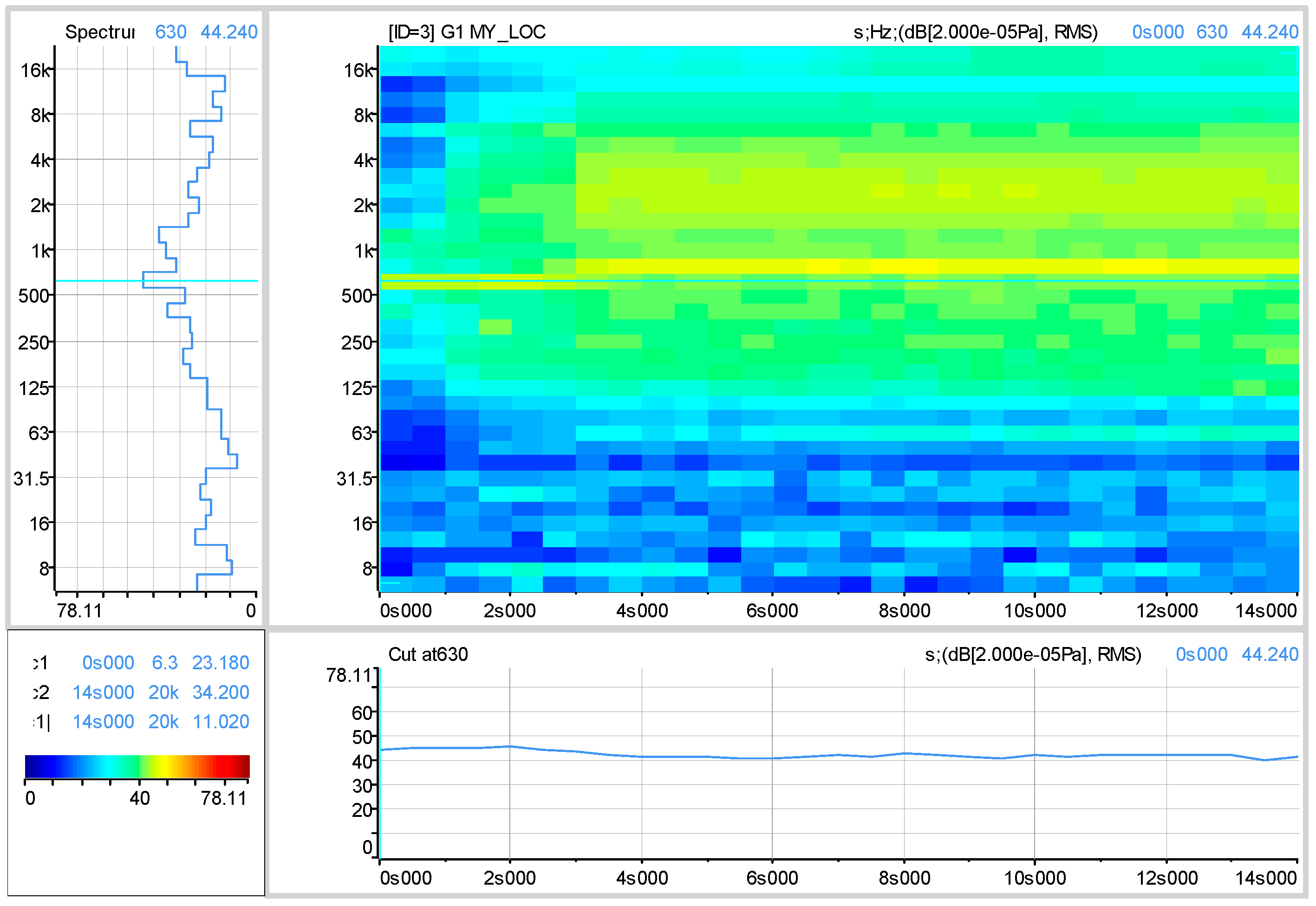

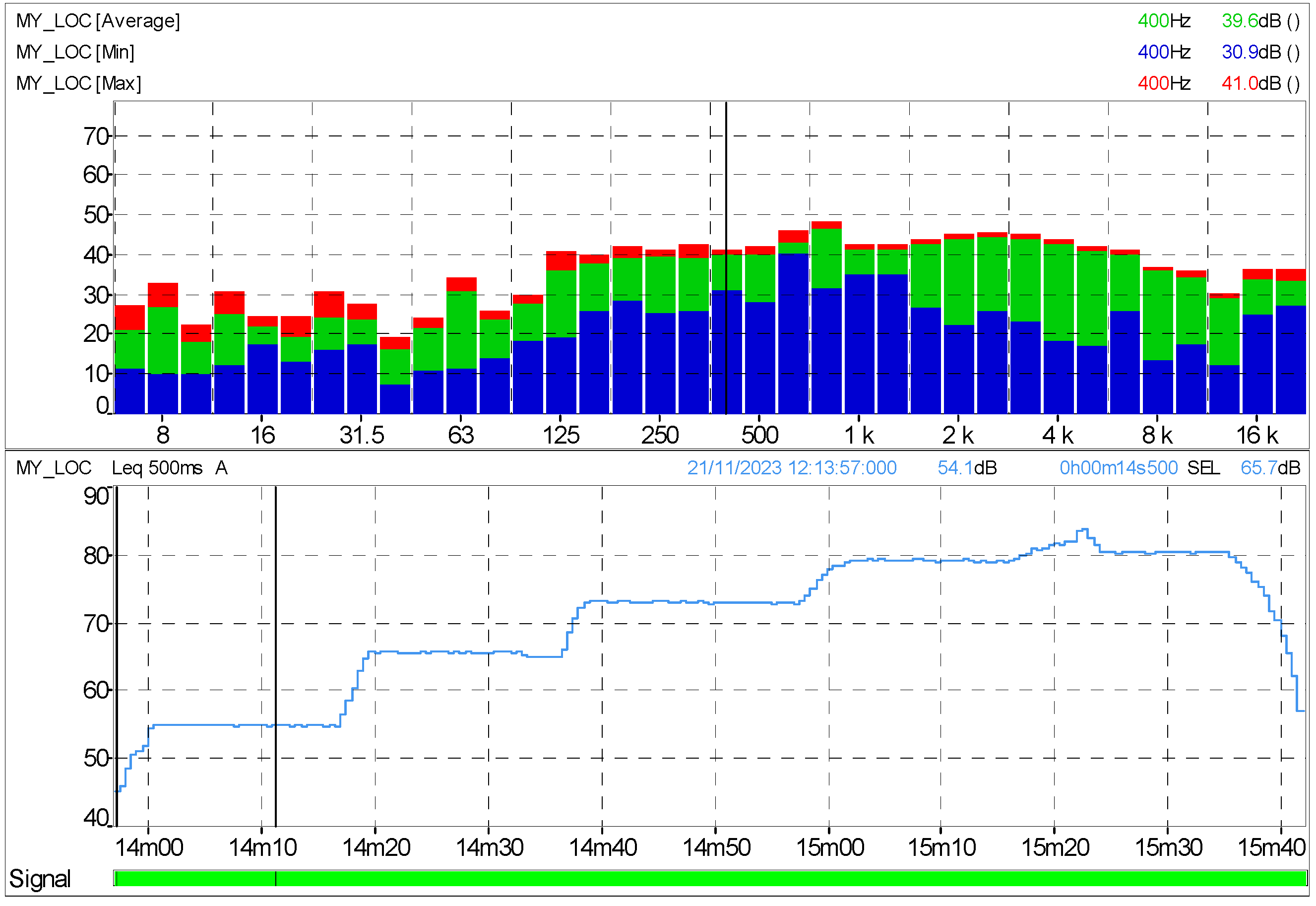

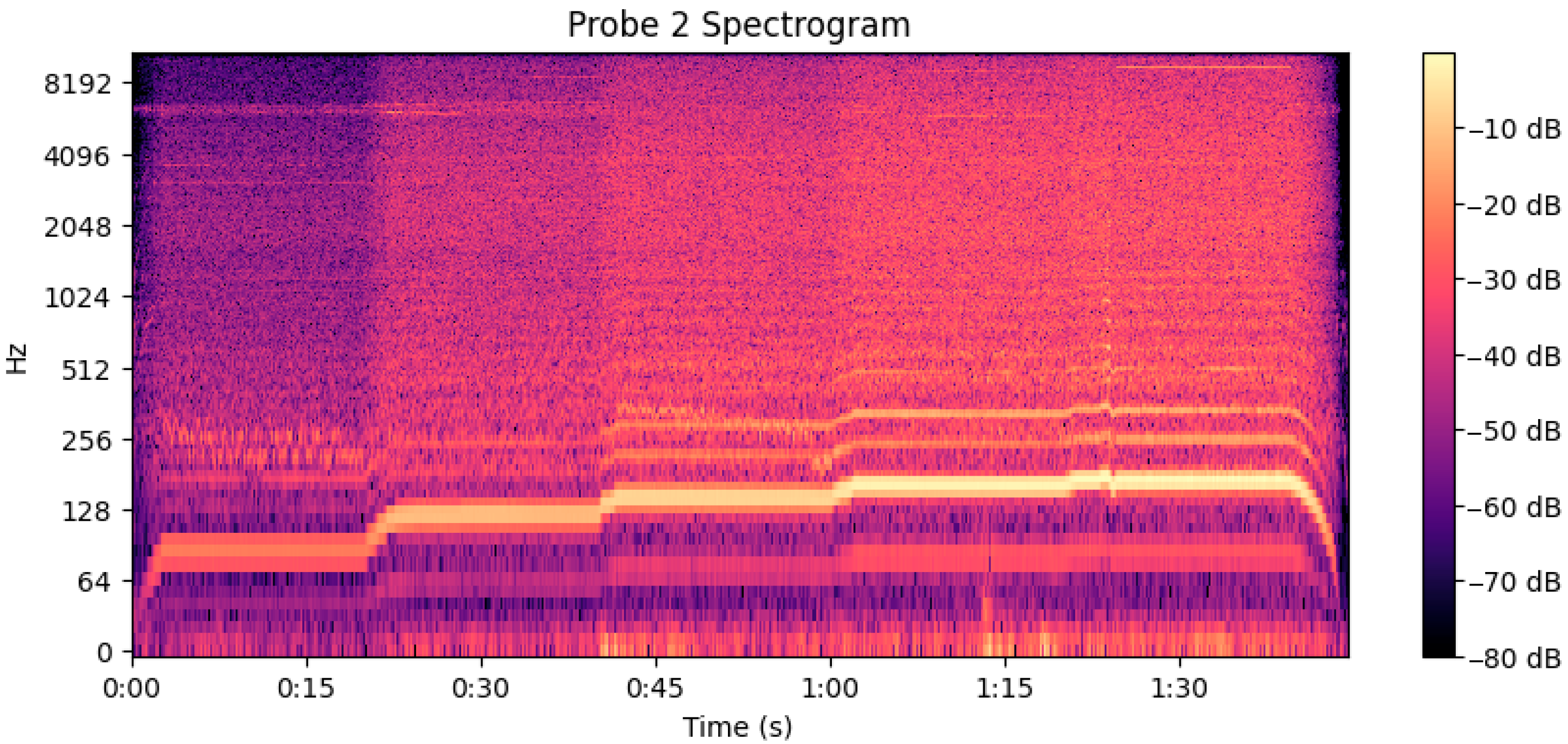

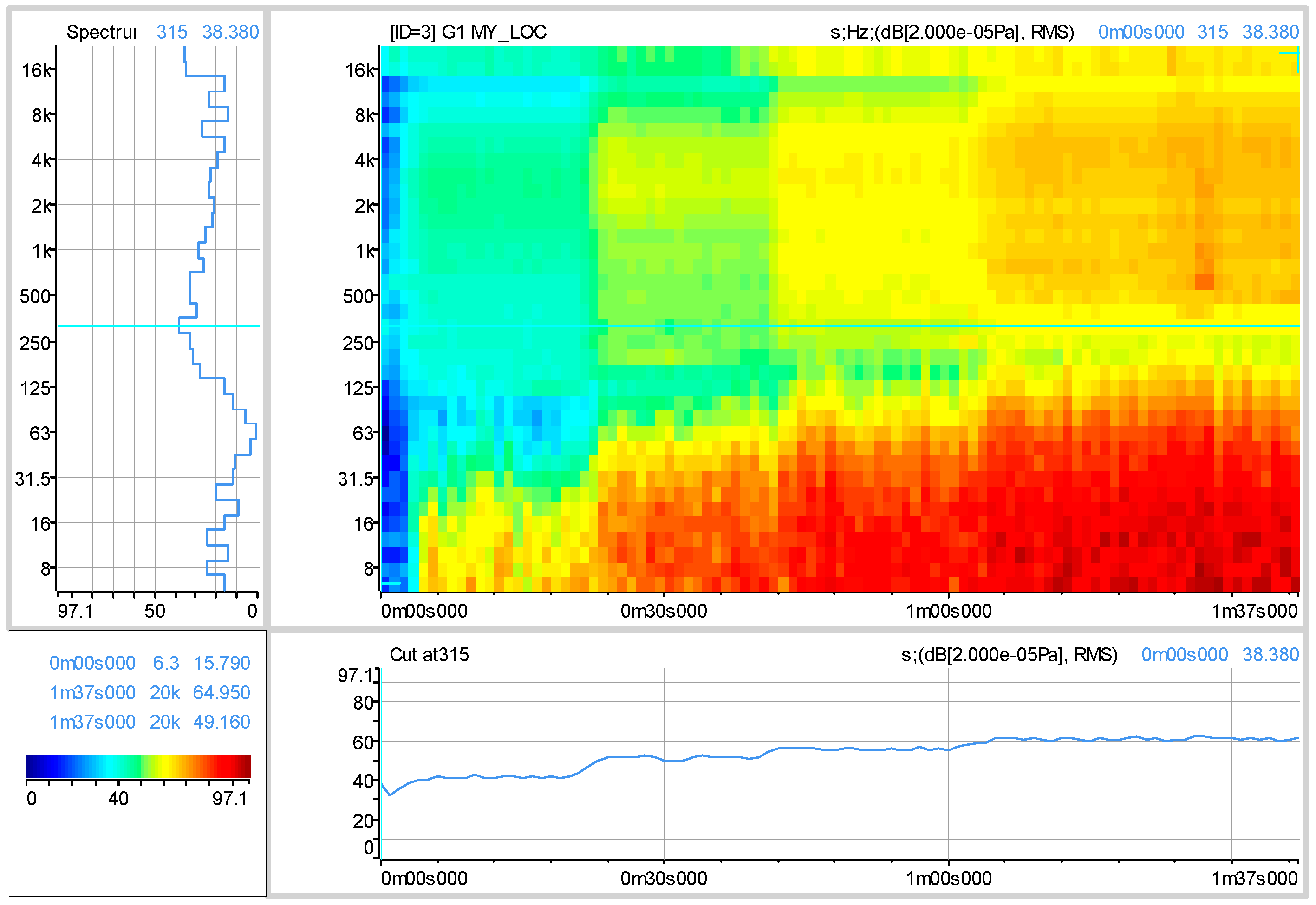

2.2.3. Noise Canceling for Propulsion Sound Isolation

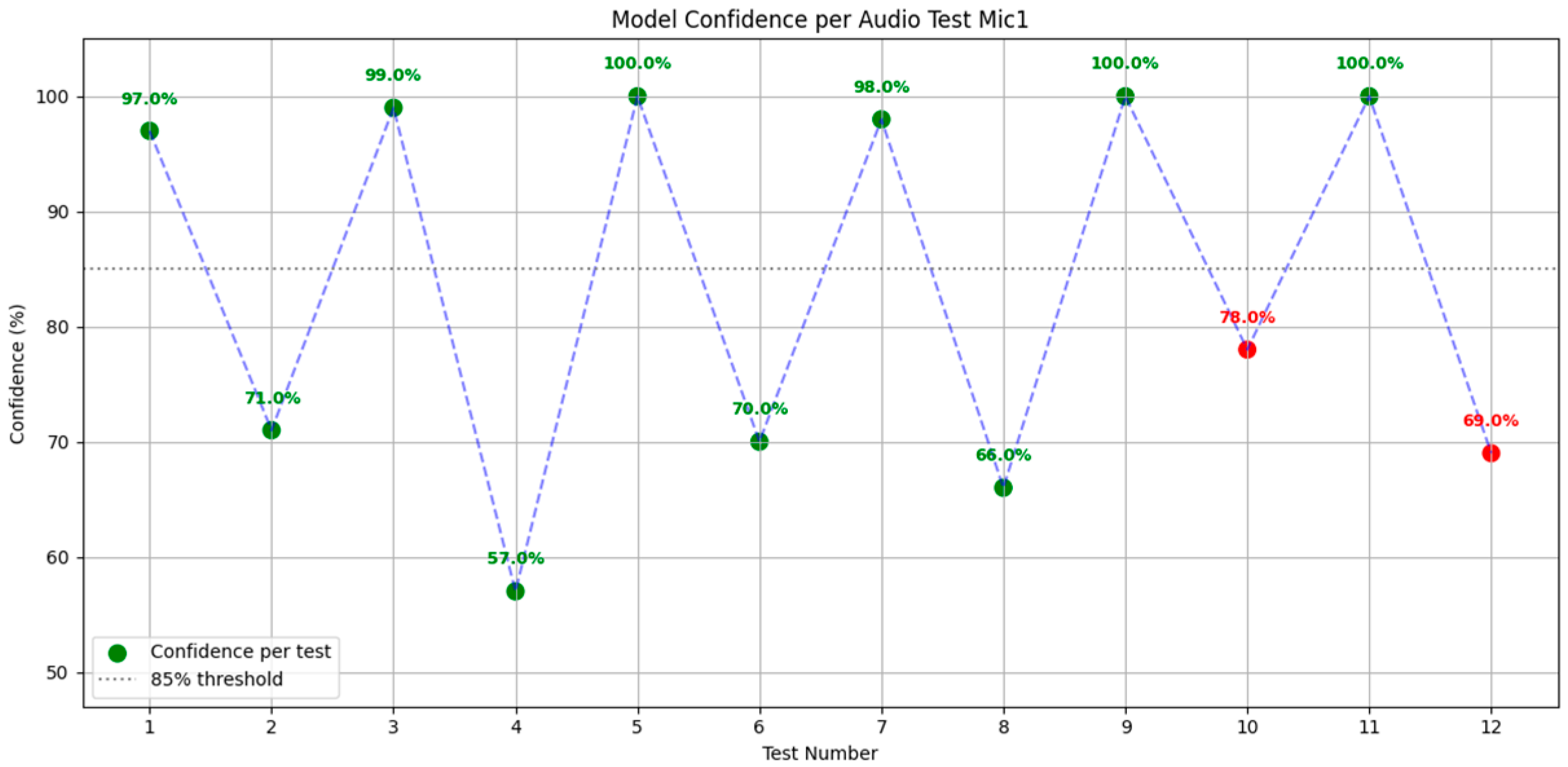

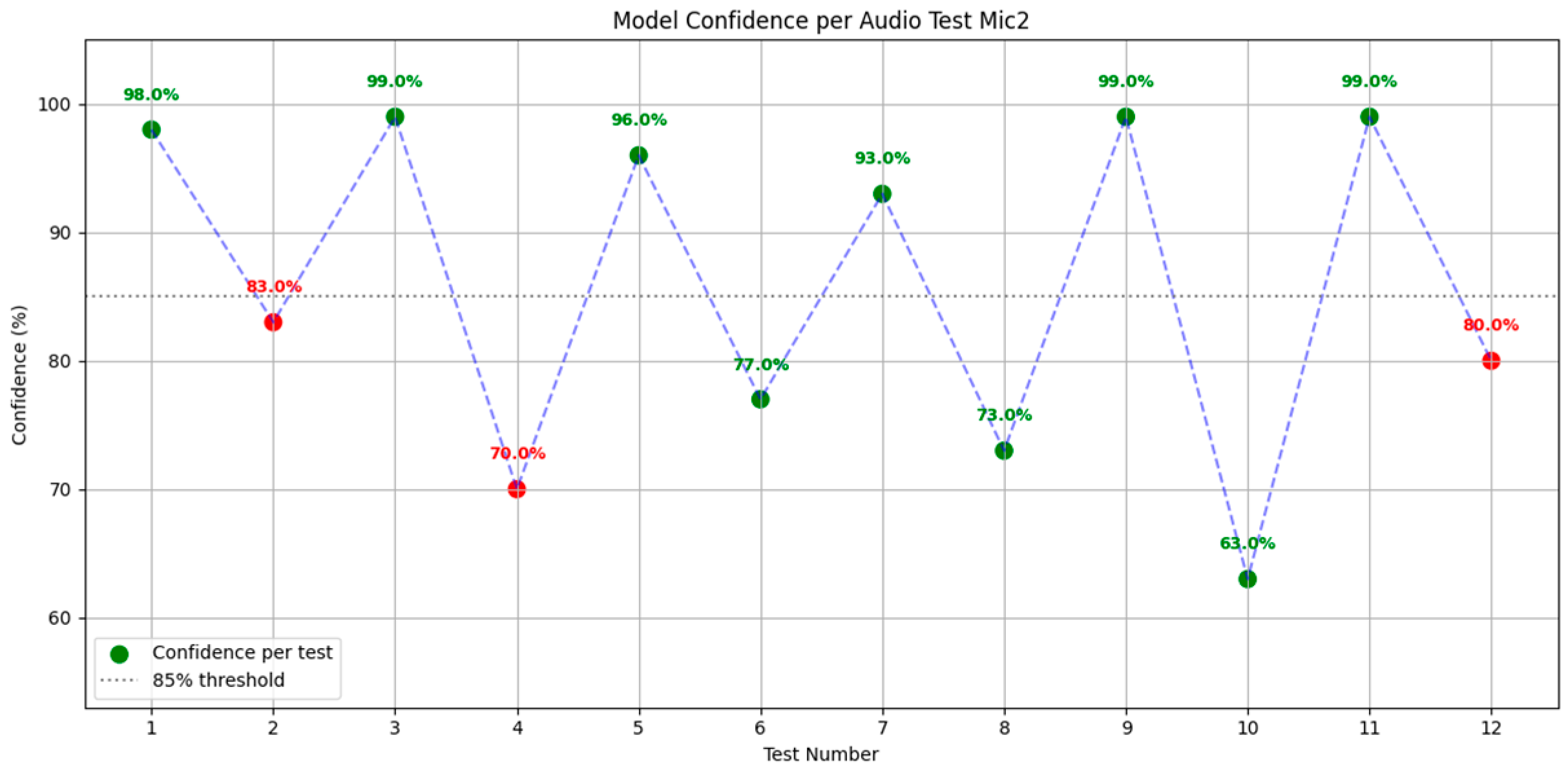

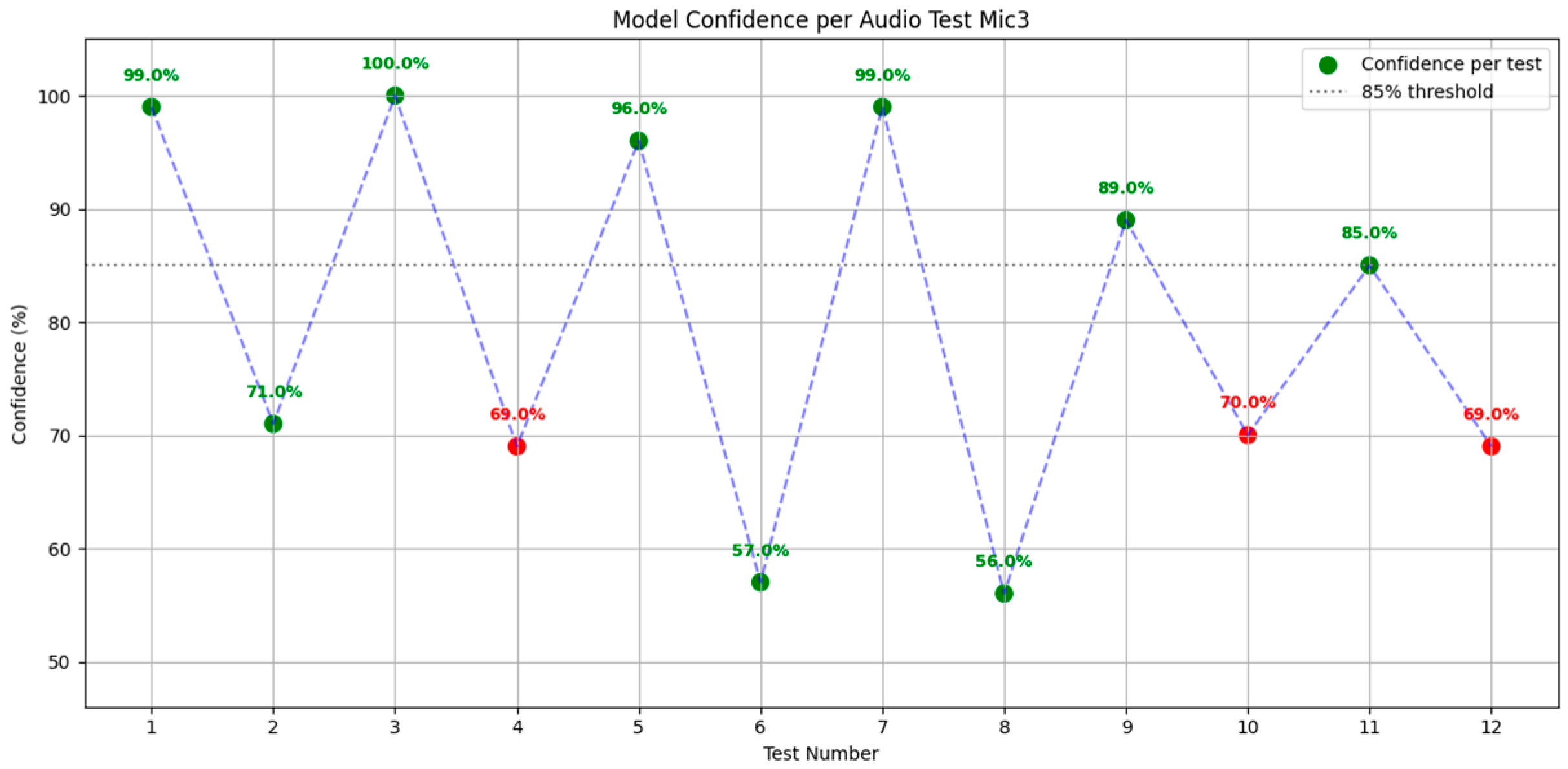

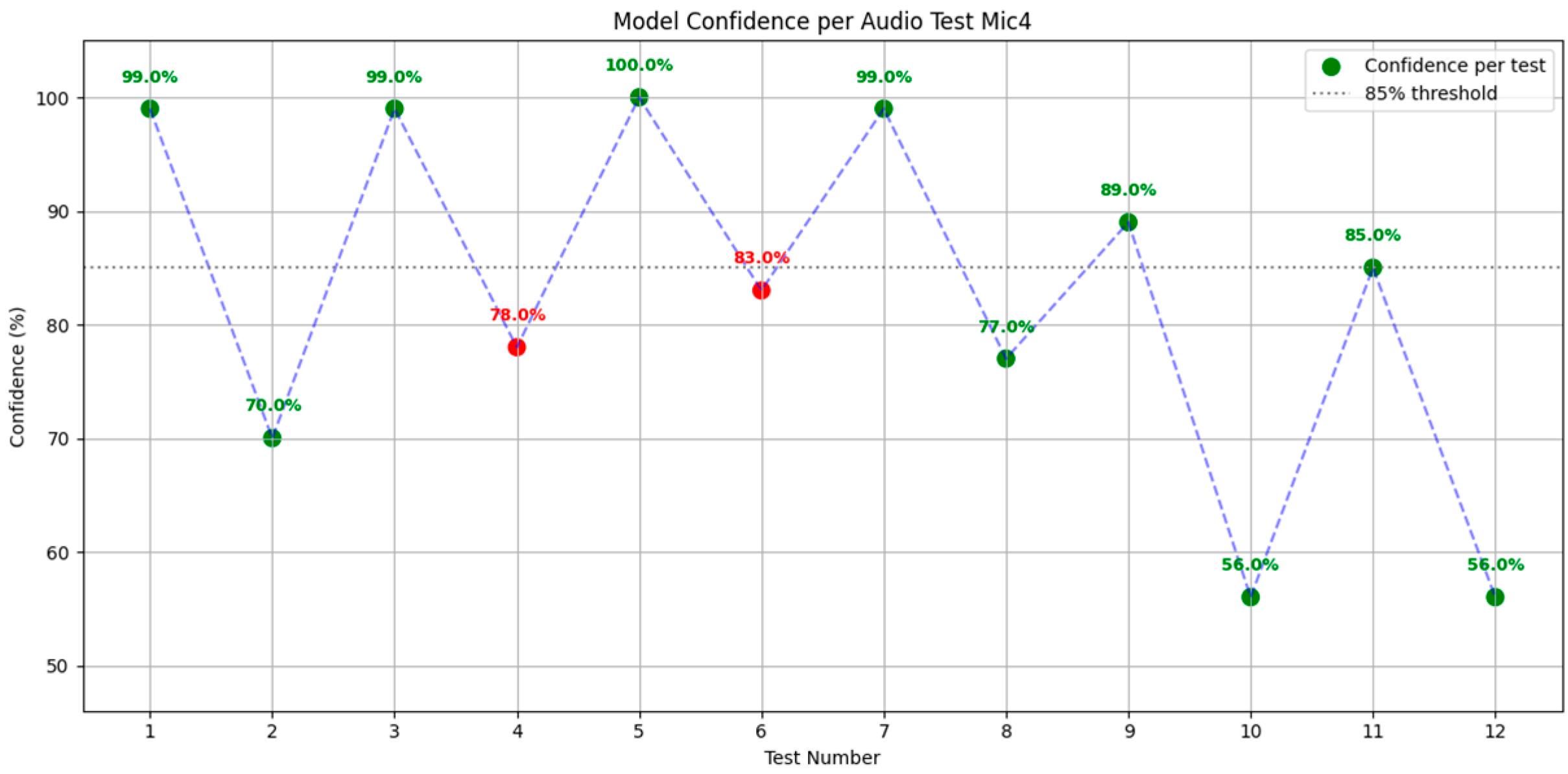
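Suppressing the UAV's own propulsion noise is a classic stationary-noise problem. One widely used technique for it is magnitude spectral subtraction in the short-time Fourier transform (STFT) domain; the sketch below shows that technique, and it is not necessarily the method used in this work. It assumes a propulsion-only recording (`noise_ref`) is available as a noise reference; the window, hop, over-subtraction factor `alpha`, and spectral `floor` are illustrative values.

```python
import numpy as np


def stft(x, n_fft=512, hop=256):
    """Hann-windowed frames of x and their one-sided spectra, shape (frames, n_fft // 2 + 1)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    return np.fft.rfft(x[idx] * window, axis=1)


def istft(spec, n_fft=512, hop=256):
    """Weighted overlap-add inverse; the first and last half-frame are unreliable."""
    window = np.hanning(n_fft)
    frames = np.fft.irfft(spec, n=n_fft, axis=1) * window
    out = np.zeros(n_fft + hop * (len(frames) - 1))
    norm = np.zeros_like(out)
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame
        norm[i * hop:i * hop + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)


def spectral_subtract(noisy, noise_ref, n_fft=512, hop=256, alpha=1.0, floor=0.02):
    """Removes the average propulsion-noise magnitude from every frame of `noisy`."""
    noisy_spec = stft(np.asarray(noisy, dtype=float), n_fft, hop)
    noise_mag = np.abs(stft(np.asarray(noise_ref, dtype=float), n_fft, hop)).mean(axis=0)
    mag = np.abs(noisy_spec)
    # Subtract the noise estimate but never drop below a small fraction of the input
    # magnitude; the spectral floor limits "musical noise" artifacts.
    clean_mag = np.maximum(mag - alpha * noise_mag, floor * mag)
    clean_spec = clean_mag * np.exp(1j * np.angle(noisy_spec))  # reuse the noisy phase
    return istft(clean_spec, n_fft, hop)
```

Averaging the reference magnitude over frames works well for the tonal, near-stationary part of motor and propeller noise; if the propulsion noise changes with throttle, the estimate would need to be updated during flight.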

3. Results

4. Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

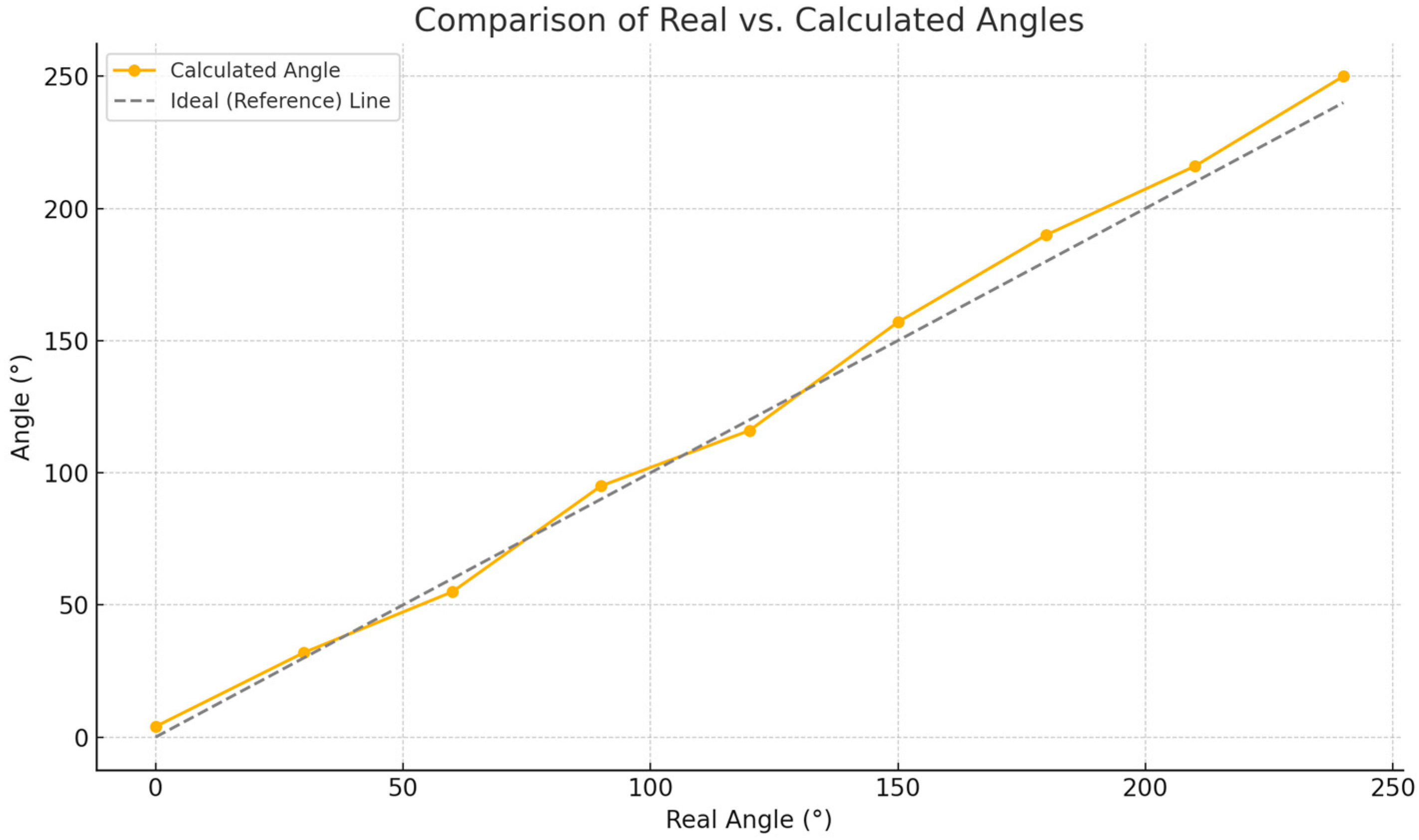

| Test No. | Real Angle [°] | Resulting Angle [°] |
|---|---|---|
| 1 | 0 | 0 |
| 2 | 25 | 27 |
| 3 | 45 | 48.6 |
| 4 | 60 | 64.8 |
| 5 | 70 | 75.6 |
| 6 | 90 | 97.2 |
| 7 | 135 | 145.8 |
| 8 | 180 | 194.4 |
| 9 | 200 | 216 |
| 10 | 240 | 260 |
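The angle tests show a proportional rather than a constant error: every nonzero angle is overestimated by about 8% (for example 25° to 27°, 90° to 97.2°, 180° to 194.4°, all exactly 1.08 times the real angle; 240° to 260° is about 1.083 times). The sketch below quantifies this from the ten tabulated pairs by fitting a line through the origin; dividing by the fitted gain is a hypothetical calibration step for illustration, not a correction reported in the article.

```python
# Angle pairs transcribed from the ten tests in the table (degrees).
REAL = [0, 25, 45, 60, 70, 90, 135, 180, 200, 240]
OBTAINED = [0, 27, 48.6, 64.8, 75.6, 97.2, 145.8, 194.4, 216, 260]


def scale_factor(real, obtained):
    """Least-squares gain k for obtained = k * real (line through the origin)."""
    return sum(r * o for r, o in zip(real, obtained)) / sum(r * r for r in real)


def error_summary(real, obtained):
    """Largest absolute error (deg) and mean relative error over nonzero angles."""
    abs_err = [o - r for r, o in zip(real, obtained)]
    rel_err = [(o - r) / r for r, o in zip(real, obtained) if r != 0]
    return max(abs_err, key=abs), sum(rel_err) / len(rel_err)


k = scale_factor(REAL, OBTAINED)
worst, mean_rel = error_summary(REAL, OBTAINED)
calibrated = [o / k for o in OBTAINED]  # hypothetical gain correction
print(f"k = {k:.4f}, worst error = {worst} deg, mean relative error = {mean_rel:.2%}")
```

With a single gain factor (about 1.081), the worst residual over the ten tests drops from 20° to under 0.5°, which suggests a scaling issue in the angle computation rather than random scatter.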

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Badea, G.-P.; Dombrovschi, M.; Frigioescu, T.-F.; Căldărar, M.; Crunteanu, D.-E. Development and Testing of an AI-Based Specific Sound Detection System Integrated on a Fixed-Wing VTOL UAV. Acoustics 2025, 7, 48. https://doi.org/10.3390/acoustics7030048