AI-Driven Recognition and Sustainable Preservation of Ancient Murals: The DKR-YOLO Framework

Abstract

1. Introduction

1.1. Research on Visual Classification and Detection

1.2. Mural Detection Application Research

1.3. This Paper’s Research

2. Materials and Procedures

2.1. The YOLOv8 Model’s Architecture

2.2. Dynamic Snake Convolution

2.3. Kernel Warehouse Dynamic Convolution

2.4. Lightweight Residual Feature Pyramid Network

3. Experiment and Results

3.1. Hardware and Software Configuration

| Item | Setting |
|---|---|
| Optimizer | SGD (Nesterov = True), momentum 0.937, weight decay 5 × 10⁻⁴ |
| Initial LR | 0.01 (SGD), cosine decay to 1% of LR; warm-up 3 epochs (linear) |
| Epochs | 200 |
| Batch size | 32 images/GPU (RTX 3090 Ti, 24 GB) |
| Input size | 640 × 640 (train & val), letterbox resize, keep aspect ratio |
| Normalization | Pixel range [0, 1]; no per-channel mean/std shifting |
| Augmentation (train) | Horizontal flip 0.5; scale [0.5, 1.5]; translate 0.1; shear 0.0; degrees 0; HSV(h = 0.015, s = 0.7, v = 0.4); no mosaic/mixup for the baseline row (Model1) |
| Model precision | FP16 mixed precision (AMP) |
| Losses | Box: CIoU + DFL (Ultralytics defaults); Cls/Obj: BCE (label smoothing 0.0) |
| Anchor setting | Anchor-free (YOLOv8 head) |
| NMS | Class-agnostic NMS, IoU 0.60; confidence threshold 0.25 |
| Evaluation metric | Primary: mAP@0.5 (IoU = 0.5); values reported in Table 2, Table 3 and Table 4 |
| Dataset split | Stratified 50/50 train/val (per-class proportions preserved) |
| Classes | 20 (per Table 2) |
| Hardware/Env | Windows 11 (64-bit); Python 3.8; PyTorch 1.10; i9-14900K; RTX 3090 Ti |
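The learning-rate row of the settings table (initial LR 0.01, 3-epoch linear warm-up, cosine decay to 1% of the initial LR over 200 epochs) can be illustrated with a minimal sketch. The table does not say where the warm-up starts or whether the cosine phase spans all epochs or only those after warm-up; the version below assumes a ramp from zero and a cosine phase that begins once warm-up ends. The function name `learning_rate` is hypothetical and is not taken from the authors' code or from Ultralytics.

```python
import math

BASE_LR = 0.01         # initial LR from the settings table
FINAL_FRACTION = 0.01  # cosine decay ends at 1% of the initial LR
WARMUP_EPOCHS = 3      # linear warm-up length from the settings table
TOTAL_EPOCHS = 200     # training length from the settings table


def learning_rate(epoch: float) -> float:
    """Return the LR at a (possibly fractional) epoch in [0, TOTAL_EPOCHS]."""
    if epoch < WARMUP_EPOCHS:
        # Assumption: warm-up ramps linearly from 0 up to BASE_LR.
        return BASE_LR * epoch / WARMUP_EPOCHS
    # Assumption: cosine decay covers the epochs that remain after warm-up.
    progress = (epoch - WARMUP_EPOCHS) / (TOTAL_EPOCHS - WARMUP_EPOCHS)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return BASE_LR * (FINAL_FRACTION + (1.0 - FINAL_FRACTION) * cosine)


if __name__ == "__main__":
    for e in (0, 1, 3, 100, 200):
        print(f"epoch {e:3d}: lr = {learning_rate(e):.6f}")
```

Under these assumptions the schedule peaks at 0.01 when warm-up ends and reaches 0.0001 at epoch 200.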

| Category | Name | Description | Sets |
|---|---|---|---|
| Human landscape | Fans | These fans not only have practical uses but also carry rich cultural meanings, embodying the artistic achievements of ancient craftsmen. | 85 |
| | Honeysuckle | At the edges of features in the Dunhuang grottoes, such as caissons, flat tiles, wall layers, arches, niches, and canopies, honeysuckle patterns are used as edge decorations. | 40 |
| | Flame | Flames in Dunhuang murals often appear as decorative patterns such as back lights and halos, symbolizing light, holiness, and power. Around religious figures like Buddhas and Bodhisattvas, flame patterns enhance their holiness and grandeur. | 35 |
| | Bird | Birds are common natural elements in Dunhuang murals. They add vivid life and natural beauty to the murals. | 28 |
| | Pipa | As an important ancient plucked string instrument, the pipa frequently appears in Dunhuang murals, especially in musical and dance scenes. These pipa images not only showcase the form of ancient musical instruments but also reflect the music culture and lifestyle of the time. | 62 |
| | Konghou | The konghou is also an ancient plucked string instrument and is a significant part of musical and dance scenes in Dunhuang murals. | 34 |
| | Tree | Trees in Dunhuang murals, such as pine and cypress trees, often serve as backgrounds or decorative elements. They not only add natural beauty to the mural but also symbolize longevity, resilience, and other virtuous qualities. | 38 |
| Productive labor | Pavilion | Pavilions are common architectural images in Dunhuang murals. These architectural images not only display the artistic style and technical level of ancient architecture but also reflect the cultural life and esthetic pursuits of the time. | 76 |
| | Horses | Horses in Dunhuang murals often appear as transportation or symbolic objects, such as warhorses and horse-drawn carriages. These horse images are vigorous and powerful, reflecting the military strength and lifestyle of ancient society. | 72 |
| | Vehicle | Vehicles, including horse-drawn carriages and ox-drawn carriages, are also common transportation images in Dunhuang murals. These vehicles not only showcase the transportation conditions and technical level of ancient society but also reflect people’s lifestyles and cultural habits. | 49 |
| | Boat | While boats are not as common as land transportation in Dunhuang murals, they do appear in scenes reflecting water-based life. These boat images reflect the water transportation conditions and water culture of ancient society. | 22 |
| | Cattle | Cattle in Dunhuang murals often appear as farming or transportation images, such as working cows and ox-drawn carriages. These cattle images are simple and honest, closely connected to the farming life of ancient society. | 32 |
| Religious activities | Deer | Deer in Dunhuang murals often symbolize goodness and beauty. In some story paintings or decorative patterns, deer images add a sense of vivacity and harmony to the mural. | 52 |
| | Clouds | Clouds in Dunhuang murals often serve as background elements. They may be light and graceful or thick and steady, creating different atmospheres and emotional tones in the mural. The use of clouds also symbolizes good wishes such as good fortune and fulfillment. | 72 |
| | Algae wells | Algae wells are important architectural decorations. Located at the center of the ceiling, they are adorned with exquisite patterns and colors. They not only serve a decorative purpose but also symbolize the suppression of evil spirits and the protection of the building. | 126 |
| | Baldachin | Canopies or halos in Dunhuang murals may appear as head lights or back lights, covering religious figures such as Buddhas and Bodhisattvas and symbolizing holiness and nobility. | 43 |
| | Lotus | The lotus is a common floral pattern in Dunhuang murals, symbolizing purity, elegance, and good fortune. It often appears below or around religious figures such as Buddhas and Bodhisattvas. | 24 |
| | Niche Lintel | Niche lintels are the decorative parts above the niches in Dunhuang murals, often painted with exquisite patterns and colors. These niche lintel images not only serve a decorative purpose but also reflect the artistic achievements and esthetic pursuits of ancient craftsmen. | 10 |
| | Pagoda | Pagodas are important religious architectural images in Dunhuang murals. These pagoda images not only showcase the artistic style and technical level of ancient architecture but also reflect the spread and influence of Buddhist culture. | 66 |
| | Monk Staff | The monastic staff is an implement commonly used by Buddhist monks and may appear as an accessory to monk figures in Dunhuang murals. As an important symbol of Buddhist culture, it adds a strong religious atmosphere to the mural. | 29 |
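The settings table specifies a stratified 50/50 train/val split that preserves per-class proportions. A minimal sketch of such a split follows, using the "Sets" column of the class table as per-class counts. It assumes that "Sets" counts samples per class and that each sample belongs to exactly one class; neither is stated in the source. The function `stratified_half_split` and the `(class, index)` identifiers are hypothetical, and handing the extra sample of an odd-sized class to the training half is likewise an assumption.

```python
import random

# Per-class counts copied from the "Sets" column of the class table.
SETS = {
    "Fans": 85, "Honeysuckle": 40, "Flame": 35, "Bird": 28, "Pipa": 62,
    "Konghou": 34, "Tree": 38, "Pavilion": 76, "Horses": 72, "Vehicle": 49,
    "Boat": 22, "Cattle": 32, "Deer": 52, "Clouds": 72, "Algae wells": 126,
    "Baldachin": 43, "Lotus": 24, "Niche Lintel": 10, "Pagoda": 66,
    "Monk Staff": 29,
}


def stratified_half_split(counts, seed=0):
    """Split each class roughly in half so class proportions are preserved."""
    rng = random.Random(seed)
    train, val = [], []
    for name, n in counts.items():
        # Hypothetical sample identifiers: (class name, index within class).
        ids = [(name, i) for i in range(n)]
        rng.shuffle(ids)
        cut = (n + 1) // 2  # assumption: odd-sized classes favor the train half
        train.extend(ids[:cut])
        val.extend(ids[cut:])
    return train, val


if __name__ == "__main__":
    train, val = stratified_half_split(SETS)
    print(len(train), len(val))
```

With the tabulated counts (995 in total, five of them odd) this yields 500 training and 495 validation items.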

| Models | P/% | R/% | mAP@0.5/% | F1/% | FPS |
|---|---|---|---|---|---|
| YOLOv3-tiny | 79.2 | 79.6 | 81.4 | 78.8 | 557 |
| YOLOv4-tiny | 81.4 | 74.8 | 82.6 | 78.1 | 229 |
| YOLOv5n | 80.1 | 75.2 | 82.3 | 77.2 | 326 |
| YOLOv7-tiny | 81.3 | 73.8 | 81.2 | 76.9 | 354 |
| YOLOv8 | 78.3 | 75.4 | 80.6 | 77.2 | 526 |
| DKR-YOLOv8 | 82.0 | 80.9 | 85.7 | 80.5 | 592 |
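For readers relating the P, R, and F1 columns, F1 is conventionally the harmonic mean of precision and recall. The sketch below applies that standard definition to the aggregate P and R values in the comparison table. The source does not state how its F1 column was averaged, so the tabulated figures need not equal this aggregate computation; the function name `f1_score` is illustrative only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Aggregate P and R for the YOLOv8 and DKR-YOLOv8 rows of the table.
    for name, p, r in (("YOLOv8", 78.3, 75.4), ("DKR-YOLOv8", 82.0, 80.9)):
        print(f"{name}: F1 from aggregate P/R = {f1_score(p, r):.1f}")
```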

| Models | Base model | DSC | KW | RE-FPN | P/% | R/% | mAP@0.5/% | F1/% | FPS | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|
| Model1 | YOLOv8 | | | | 78.3 | 75.4 | 80.6 | 77.2 | 526 | 28.41 |
| Model2 | YOLOv8 | ✓ | | | 81.6 | 73.9 | 80.8 | 78.8 | 868 | 27.74 |
| Model3 | YOLOv8 | | ✓ | | 77.3 | 74.8 | 81.3 | 78.7 | 640 | 26.93 |
| Model4 | YOLOv8 | | | ✓ | 84.0 | 83.2 | 82.1 | 84.5 | 474 | 14.17 |
| Model5 | YOLOv8 | ✓ | ✓ | | 80.9 | 74.8 | 82.0 | 75.9 | 669 | 27.84 |
| Model6 | YOLOv8 | ✓ | | ✓ | 80.6 | 79.8 | 86.2 | 76.5 | 539 | 13.43 |
| Model7 | YOLOv8 | | ✓ | ✓ | 80.6 | 81.4 | 78.9 | 84.3 | 524 | 15.08 |
| Model8 | YOLOv8 | ✓ | ✓ | ✓ | 82.0 | 80.9 | 85.7 | 80.5 | 592 | 16.01 |
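The ablation rows for the baseline (Model1) and the full configuration (Model8) can be compared with simple arithmetic. The sketch below computes the absolute mAP@0.5 gain and the relative changes in FLOPs and FPS directly from the tabulated values; the dictionary keys and the helper `relative_change` are names chosen here for illustration.

```python
# Values copied from the Model1 (baseline) and Model8 (all modules) rows.
BASELINE = {"map50": 80.6, "fps": 526, "gflops": 28.41}
FULL = {"map50": 85.7, "fps": 592, "gflops": 16.01}


def relative_change(new: float, old: float) -> float:
    """Percentage change of `new` relative to `old`."""
    return 100.0 * (new - old) / old


map_gain = FULL["map50"] - BASELINE["map50"]                        # percentage points
flops_change = relative_change(FULL["gflops"], BASELINE["gflops"])  # percent
fps_change = relative_change(FULL["fps"], BASELINE["fps"])          # percent

if __name__ == "__main__":
    print(f"mAP@0.5 gain: {map_gain:+.1f} points")
    print(f"FLOPs change: {flops_change:+.1f}%")
    print(f"FPS change:   {fps_change:+.1f}%")
```

On the tabulated numbers this gives a gain of 5.1 mAP@0.5 points, about 43.6% fewer FLOPs, and about 12.5% higher FPS for Model8 relative to Model1.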

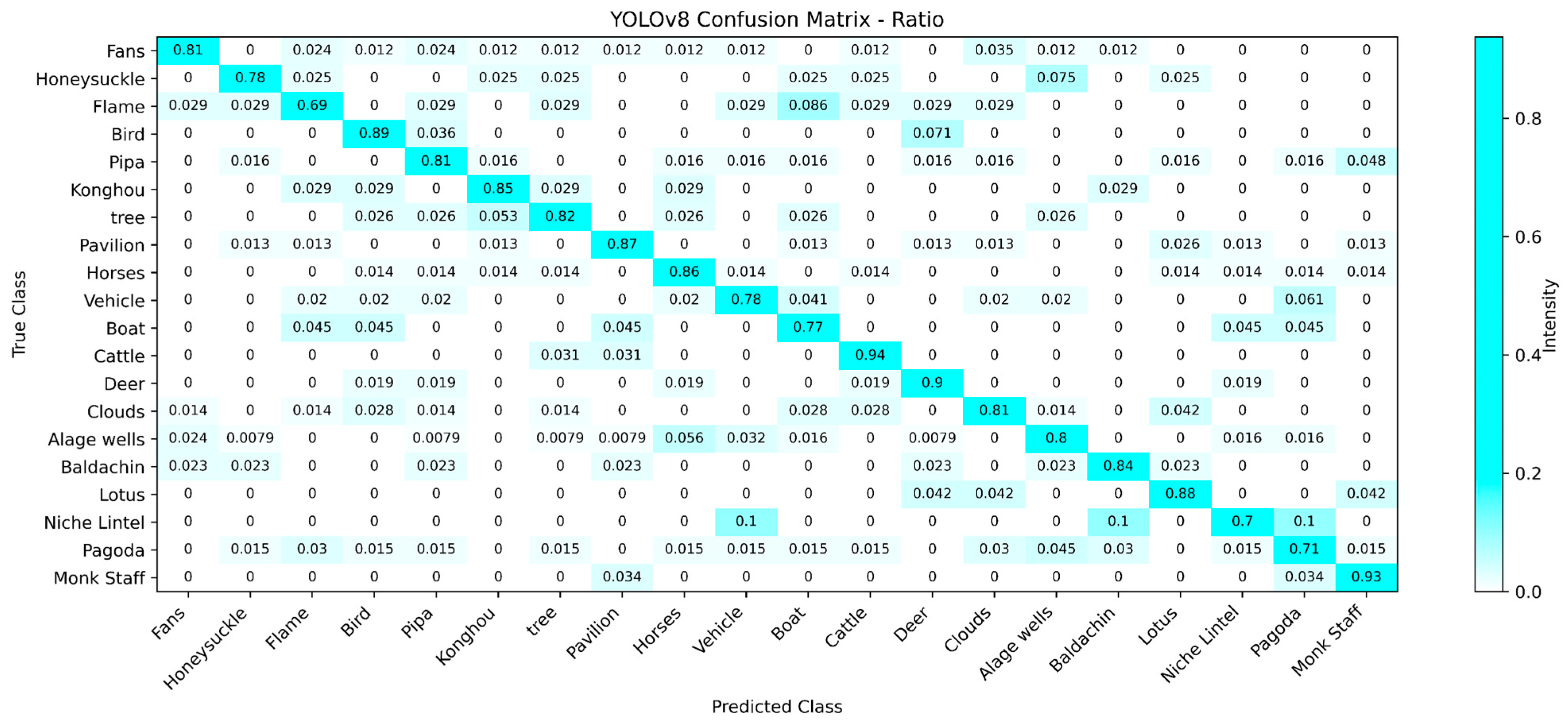

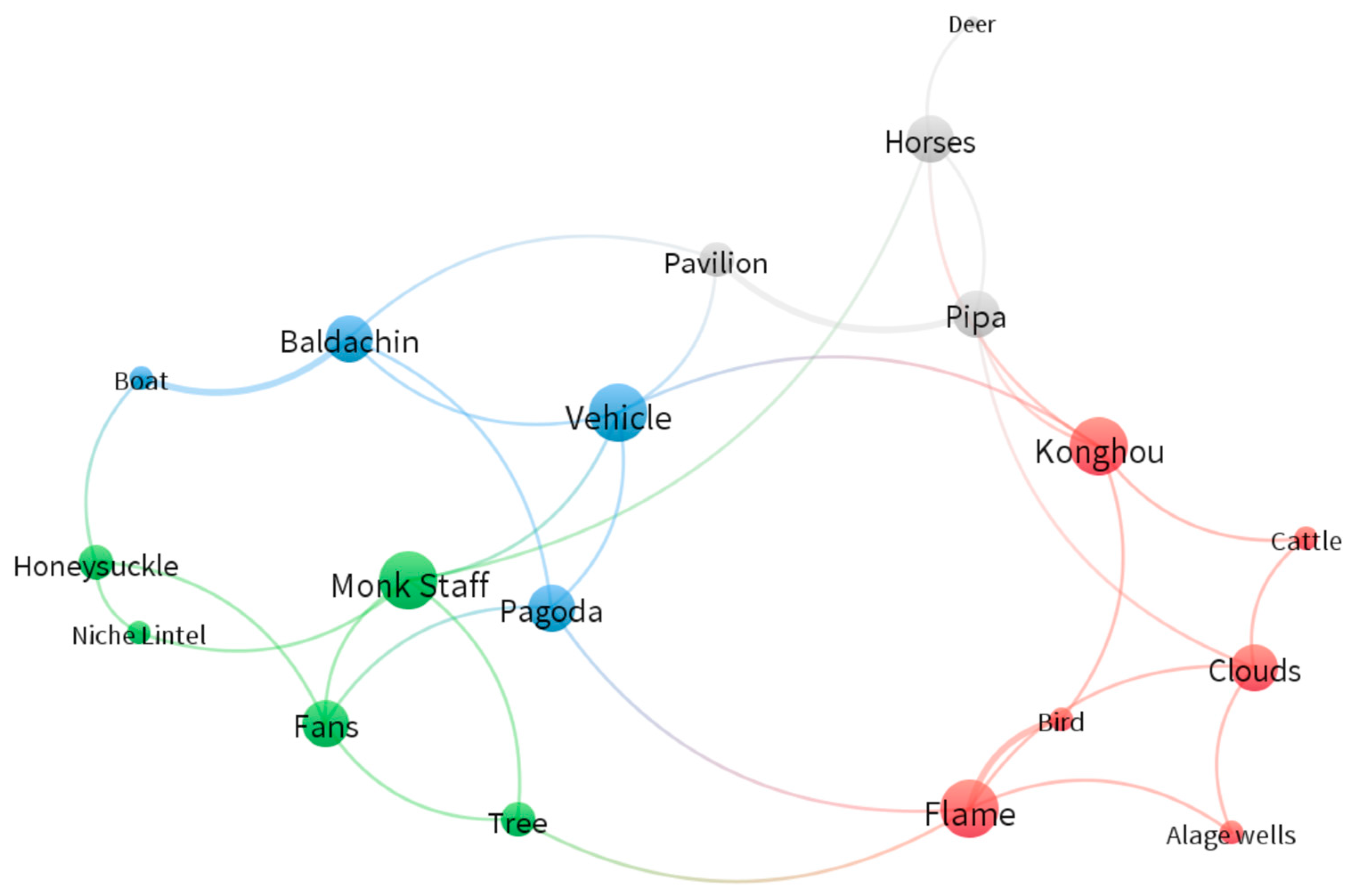

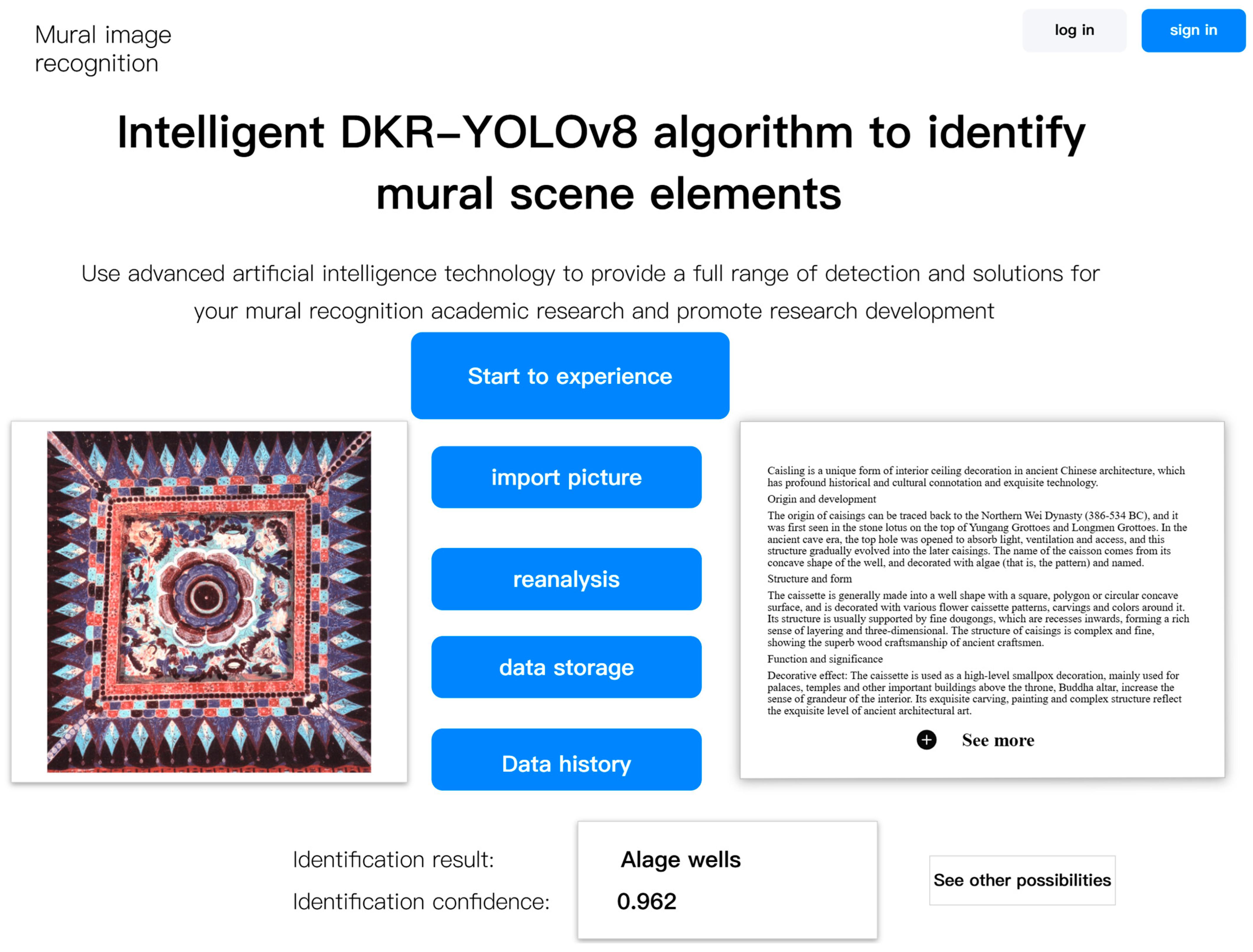

3.2. Recognition Results

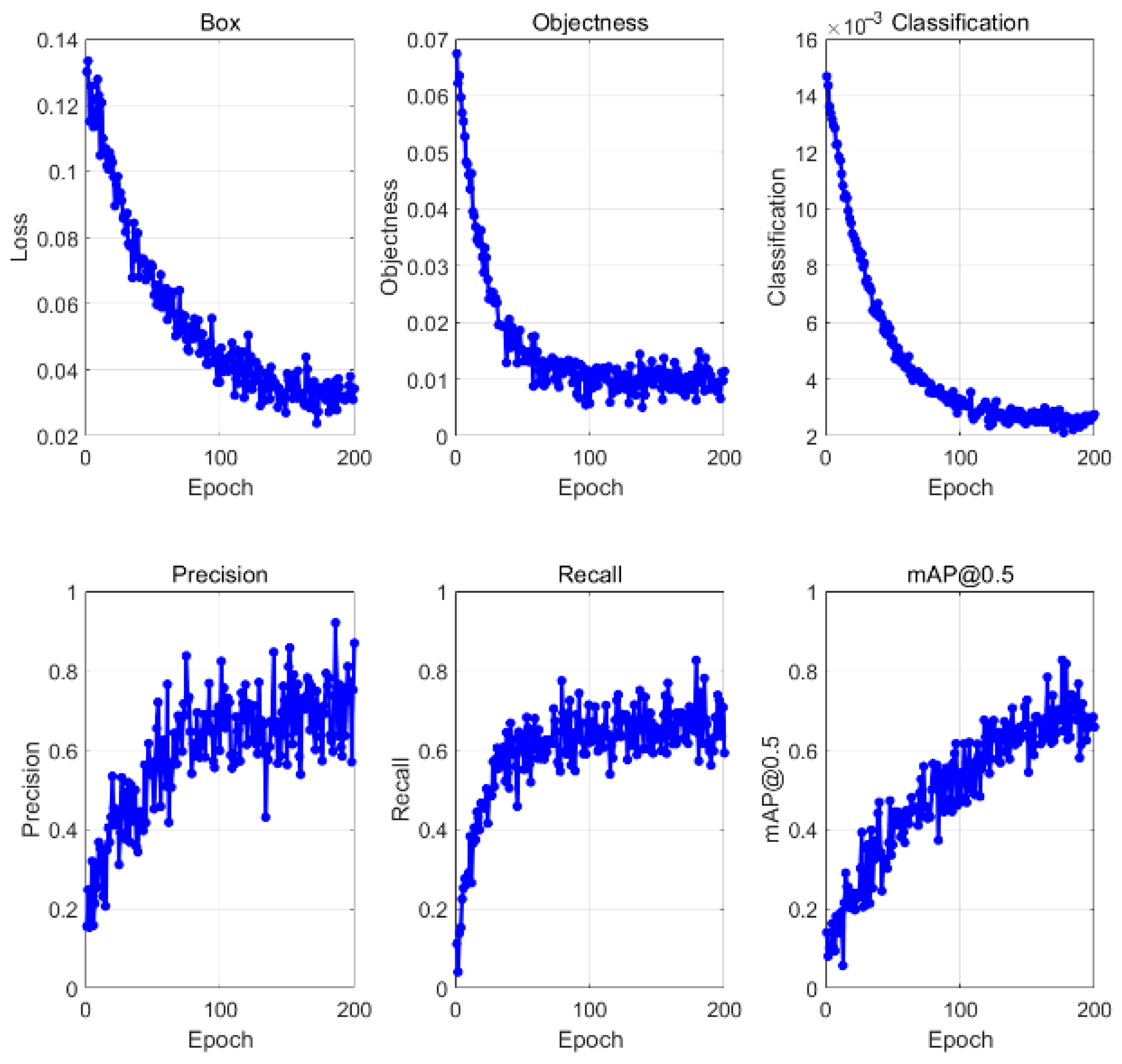

3.3. Test Results on Mural Dataset

3.4. Ablation Experiment

3.5. Grad-CAM Module Analysis

3.6. Model Method Comparison Experiment

3.7. Comparison Experiment Before and After Improvement

4. Discussion

5. Conclusions

5.1. Research Results

5.2. Research Prospects

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RFA | Residual feature augmentation |
| LSTM | Long short-term memory |
| FPN | Feature pyramid network |
| SVMs | Support vector machines |
| GANs | Generative adversarial networks |
| BCE | Binary cross-entropy |
| KW | Kernel Warehouse |
| DWConv | Depthwise convolution |
| SGD | Stochastic gradient descent |
| HE | Histogram equalization |
| DSC | Dynamic Snake Convolution |


| Model | Track | FLOPs (G) | P (%) | R (%) | F1 (%) | mAP@0.5 (%) |
|---|---|---|---|---|---|---|
| DKR-YOLOv8 | Detection | 16.01 | 82.0 | 80.9 | 80.5 | 85.7 |
| YOLOv8 (baseline) | Detection | 28.41 | 78.3 | 75.4 | 77.2 | 80.6 |
| NanoDet-Plus (1.5×) | Detection | 3.9 | 79.0 | 73.8 | 76.3 | 78.7 |
| PP-PicoDet-LCNet (1.5×) | Detection | 2.7 | 78.5 | 72.6 | 75.4 | 77.9 |
| EfficientDet-D0 | Detection | 8.5 | 80.0 | 74.5 | 77.1 | 79.3 |
| MobileNetV3-SSD | Detection | 2.2 | 76.4 | 69.7 | 72.9 | 75.1 |
| U-Net (R34 encoder) | Segmentation | 16.8 | 73.8 | 80.2 | N/A | 76.2 |
| DeepLabV3+ (MV3) | Segmentation | 5.4 | 74.9 | 79.0 | N/A | 76.5 |
| SegFormer-B0 | Segmentation | 8.9 | 77.3 | 80.5 | N/A | 78.3 |
| Mask R-CNN (R50-FPN) | Instance Seg. | 44.7 | 81.0 | 76.4 | 78.6 | 80.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, Z.; Kumar, S.; Wang, H.; Li, J. AI-Driven Recognition and Sustainable Preservation of Ancient Murals: The DKR-YOLO Framework. Heritage 2025, 8, 402. https://doi.org/10.3390/heritage8100402
