1. Introduction

Cultural heritage sites and buildings are more than static structures; they are repositories of memory and stories. Traditionally, the documentation of such sites has relied on architectural descriptions and historical records, often presenting a singular, authoritative narrative. Recent advances in remote sensing and digital preservation have enabled highly detailed capture of built heritage, opening new possibilities for how we record and interpret these memories [1,2]. In particular, unmanned aerial vehicle (UAV) photogrammetry allows us to create precise 3D models of buildings by stitching together numerous images, providing a digital record for conservation and study [3]. Such 3D data have become important for recording the form of significant sites so that, at least in digital form, they can be passed down to future generations. Remote sensing techniques yield geometrically accurate, realistic models usable for historical documentation, monitoring changes, and even virtual reality exhibits [4]. Likewise, terrestrial laser scanning (TLS) is frequently employed in digital heritage documentation, capturing millions of 3D points to preserve architectural geometries with high fidelity [2].

Capturing the physical geometry of heritage is only one part of preserving its significance. Equally important is the intangible cultural memory: the stories, experiences, perceptions, and meanings associated with a place. Conventional heritage discourse often privileges official histories and aesthetic narratives, sometimes neglecting personal or alternative perspectives. Heritage scholars refer to an Authorized Heritage Discourse (AHD) [5] that tends to celebrate grand sites and elite experiences while marginalizing the voices of local communities or non-elites [6]. For example, Brasília’s official World Heritage narrative celebrates its modernist design by renowned architects, whereas local accounts recall the grueling conditions and sacrifices of workers (candangos) who built the city, stories largely absent from the authorized history until recently [7]. Similarly, Rottnest Island (Wadjemup) in Australia has been promoted as a picturesque tourist paradise, while its dark history as an Aboriginal prison was downplayed or erased for decades. The island’s authorized heritage storyline emphasized natural beauty and holiday leisure, obscuring the incarceration, dispossession, and deaths of Indigenous people at the colonial-era Rottnest prison [8,9].

In response to these exclusions, heritage scholars and practitioners increasingly advocate polyvocal approaches that acknowledge multiple, even conflicting, perspectives on heritage sites [10,11,12,13,14]. A polyvocal narrative framework embraces a plurality of stories, thereby diversifying accepted knowledge and challenging the dominance of any single viewpoint in interpreting the past [10]. Such approaches seek to represent how heritage sites are experienced and remembered by different stakeholders and encourage reflection on one’s own opinions and perceptions.

This article explores how machine-assisted storytelling can enhance perception through a polyvocal representation of a built site, specifically through an experimental project centered on McMahon Hall, a Brutalist architectural landmark on the University of Washington campus in Seattle, USA. Completed in 1965 in precast concrete and distinctive for its irregularly protruding balconies [8] as well as its placement in the landscape (atop a hill amongst near-mature pine trees [9]), McMahon Hall embodies an era of architectural ideology and carries decades of campus history. Over time, McMahon Hall has housed thousands of students and even hosted experimental social programs (such as a 1970s language-immersion dormitory and a temporary education initiative for incarcerated individuals). These layers of architectural significance and social history make McMahon Hall an apt subject for exploring layered memory.

Like many mid-20th-century concrete structures, it elicits mixed reactions: it is admired by some for its bold geometry and structural honesty and disliked by others for its heavy, “bunkerlike” appearance [15]. This paper responds to the growing concern that such modernist landmarks are underappreciated, endangered, or even demolished as urban areas (in this case, a campus) evolve. A notable example from the same campus is UW’s More Hall Annex (the Nuclear Reactor Building, 1961), a Brutalist structure that was added to the National Register of Historic Places but was still torn down in 2016 to make way for new development [16]. McMahon’s neighbor Haggett Hall (1963), another Brutalist building designed by the same firm, was condemned and permanently closed in 2021 and was recently demolished to be replaced by new, contemporary student housing (Figure 1 and Figure 2). These cases show how this building typology is at risk of being slowly erased. The authors’ work aligns with recent efforts to document vulnerable modernist structures (e.g., Nonument Group’s use of photogrammetry and 3D modeling to archive derelict Brutalist monuments like Bulgaria’s Buzludzha Memorial Hall before they vanish) [17]. Another reason for choosing this example was accessibility, as the department organizing the summer school where this experiment was developed occupies parts of the lower levels of the building.

Rather than recounting its history from a single authoritative stance, the project engages speculative memory, which involves the imaginative reconstruction of a subject’s potential past, present, or future [18,19,20]. The Building and other non-human perspectives are invited to “speak” about their experiences, aspirations, and observations. The narrative is constructed through multiple AI-generated voices or characters, including: the Building itself (a first-person perspective), the concrete (the material’s viewpoint, witnessing wear and time), an architect (a reflective creator’s voice, not the building’s actual designer), a journalist (investigative, linking the building to broader societal contexts), and a bird (offering a temporal outsider’s view). This polyvocal cast was developed using generative AI for text (large language models to script each character’s monologue) and for voice (leveraging ElevenLabs’ AI voice synthesis to produce distinct, lifelike voices). The result is a narrative installation in which McMahon Hall’s cultural memory is reinterpreted through a conversation of voices (human, non-human, and machine), each providing a unique interpretive lens.

The approach builds upon several interdisciplinary threads of research. In digital heritage and remote sensing, it is increasingly recognized that technical documentation must be complemented by engaging storytelling to truly transmit a site’s value to the public [21,22,23]. At the same time, emerging work in human–computer interaction and heritage design has embraced polyvocality and co-design with communities to surface unofficial memories and participatory narratives [10,21,23]. Generative AI offers new tools to facilitate such narrative diversification; it can lower the barriers for individuals to create and share heritage stories, potentially amplifying diverse voices that were previously unheard [23]. Yet the use of AI in cultural narrative comes with warnings: issues of bias, authenticity, and the risk of homogenization of voice need careful consideration [24]. This experiment serves as a methodological inquiry exploring how precise spatial data from photogrammetric remote sensing can be fused with the imaginative storytelling capabilities of generative AI. The goal is to explore a methodology that could enrich the cultural memory of an architectural site and examine the new epistemological questions that arise when machine intelligence participates in the interpretation of built heritage.

Notably, this study was undertaken as part of a broader initiative. It aligns with the aims of the EpisTeaM project, a consortium that promotes interdisciplinary research bridging technology, art, and cultural heritage while advocating diverse epistemological perspectives in innovation [25]. The project itself was developed during the “Rhetorical Machines & the Hyperlocal” Summer School hosted by the University of Washington’s DXARTS in 2025, an intensive workshop on generative AI and site-specific storytelling which formed part of the MSCA-funded EpisTeaM program. This context provided a collaborative, experimental setting that strongly informed the project’s goals of merging remote sensing precision with machine-augmented narrative.

The 10-day intensive Summer School brought together participants from European institutions, DXARTS, UW Arts Division collaborators, interdepartmental partners, and off-campus guests working at the intersections of art, technology, and cultural production. The participants included educators, researchers, artists, and PhD students. During the summer school, they attended technical demonstrations and hands-on workshops, artist talks and research presentations, and roundtable discussions, and carried out fieldwork across and beyond the Seattle campus. The final results were presented in an exhibition setting, with this project being the only one related to architecture.

2. Theoretical Framework

2.1. Digital Heritage, Photogrammetry, and Remote Sensing

Remote sensing technologies have revolutionized the field of cultural heritage documentation over the past two decades [26,27]. Data collected through remote sensing (e.g., photogrammetry, LiDAR scans, UAV imagery) is highly valuable for the ongoing monitoring and management of built heritage structures [28,29]. The high-resolution 3D data provides accurate baselines for detecting changes over time, planning maintenance interventions, and informing the design of a structural health monitoring (SHM) system [30,31]. These rich datasets often serve as the foundation for creating digital twins of structures: virtual replicas of physical buildings that mirror their state and behavior, enabling simulations of deterioration processes and proactive risk assessments [32].

Photogrammetry, the technique of deriving measurements and models from photographs, has emerged as a particularly powerful tool for heritage recording [33,34]. Modern close-range photogrammetry and structure-from-motion (SfM) algorithms can reconstruct detailed three-dimensional models of monuments and buildings from overlapping image sets, including images captured via drones [3]. These methods, alongside terrestrial laser scanning, have greatly improved the ability to capture fine geometric detail and surface texture of heritage sites in their current state [1]. The importance of such digital recording is widely recognized, especially as many heritage sites suffer from threats like weathering, natural disasters, and human conflict or neglect [35,36]. When a digital record captures a site at a specific moment in time, its spatial data is more likely to be passed on to future generations even if the physical structure is later altered or lost, a possibility supported by the large number of projects developed in recent years [37,38,39,40,41].

Photogrammetric 3D models also enable new forms of analysis and engagement. Once an accurate model of a building is produced, it can serve numerous purposes beyond archival documentation: scholars can perform virtual measurements and structural analyses; conservators can monitor degradation by comparing models over time; and educators or curators can create visualizations or virtual reality experiences for the public. Remote sensing-based models have been used in applications ranging from historical research and condition monitoring to immersive VR exhibits and digital tourism [42,43,44]. Remondino (2011) notes that the availability of high-quality 3D data opens a wide spectrum of further applications and allows new analyses, studies, and interpretations, facilitating everything from simulation of aging processes to multimedia museum presentations [45,46,47,48,49,50,51,52]. Capturing the tangible aspects of heritage through remote sensing not only aids preservation but also provides a platform for innovative interpretive content.
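Monitoring degradation by comparing models over time can be illustrated with a simple cloud-to-cloud comparison. The sketch below is an assumption-laden illustration rather than a method used in this project: it treats each survey as a small list of 3D points in a shared coordinate frame, uses a brute-force nearest-neighbour search that would be far too slow for real scans, and the function names and the tolerance value are hypothetical.

```python
import math

def cloud_to_cloud_distances(reference, current):
    """For each point in `current`, return the distance to its nearest
    neighbour in `reference` (brute force, suitable for small samples only)."""
    return [min(math.dist(p, q) for q in reference) for p in current]

def changed_points(reference, current, tolerance):
    """Return the points of `current` that lie farther than `tolerance`
    (same units as the coordinates) from every point of `reference`."""
    distances = cloud_to_cloud_distances(reference, current)
    return [p for p, d in zip(current, distances) if d > tolerance]

# Two hypothetical surveys of the same surface, coordinates in metres.
survey_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
survey_b = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.005), (2.0, 0.0, 0.05)]

# Flag points that moved by more than 1 cm between surveys.
flagged = changed_points(survey_a, survey_b, tolerance=0.01)
```

In practice, both point clouds would first need to be registered to the same coordinate system, and the tolerance would have to exceed the combined measurement noise of the two surveys.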

Despite these advances, integrating rich spatial data into heritage interpretation remains an area of ongoing development. Most photogrammetry efforts focus on fidelity and accuracy of the models, aligning with traditional conservation goals [53,54,55]. Building on this, the next frontier in digital heritage involves connecting the detailed digital surrogates of buildings with the intangible heritage: the stories, memories, and cultural values associated with them. This gap points to the need for methodologies that can couple the strengths of remote sensing (precision, objectivity, visual realism) with approaches that convey meaning and narrative [53,56,57,58,59,60,61,62,63,64]. In this work, the photogrammetric model of McMahon Hall is used not as a static end product, but as a springboard for storytelling. The 3D model becomes the stage and visual reference for speculative narratives that imbue the “cold” data with cultural context and imaginative life. In doing so, the digital model is treated as part of a spatial computing environment where digital and physical realities merge, enabling immersive heritage experiences.

2.2. Polyvocal Narratives and the Bridge to AI Storytelling

Storytelling is an important way to share knowledge about places. Narratives help transform static information (dates, architectural styles, events) into meaningful stories that resonate with people’s emotions and personal connections. Traditionally, the dominant narratives at heritage sites have been curator- or expert-led, sometimes resulting in a monologic interpretation. Recent scholarship and practice advocate for more inclusive, polyvocal narratives that represent multiple viewpoints, especially those of communities historically left out of official accounts [6,10,65,66]. Embracing polyvocality can democratize heritage interpretation, ensuring that the “story of the site” is not reduced to a single voice of authority. Instead, it becomes a tapestry of voices across different cultures, genders, classes, or even species, each adding to a richer understanding. Tsenova et al. (2022) describe polyvocal design in heritage as a way to diversify accepted knowledges and lived experiences, explicitly challenging the biases of Authorized Heritage Discourse [10,67,68,69]. Such plural narratives acknowledge that heritage sites have layered meanings and that what a place signifies can vary greatly among different observers and participants in its history [70,71,72,73].

Traditionally, polyvocal heritage narratives have been developed through collaborative storytelling with human participants [74,75,76]. Museums, preservationists, and community historians often engage local voices, scriptwriters, artists, and cultural stakeholders to co-create stories, ensuring that multiple viewpoints and memories shape the interpretation of a place [65,77,78,79]. In some narrative projects, even non-human perspectives have been employed to jolt audiences into a new viewpoint, a technique known as defamiliarization. Viktor Shklovsky famously highlighted Tolstoy’s short story “Kholstomer” (1886), narrated by a horse, as a classic example that forces readers to see human society through non-human eyes [80,81]. Similarly, granting a voice to a building or a material can defamiliarize the narrative of a site, prompting fresh reflection on familiar history. This approach resonates with theories of material agency: political theorist Jane Bennett argues that matter possesses a form of vitality or “thing-power”, proposing a vibrant materiality that runs alongside and inside humans, giving “the force of things” its due [82,83]. By figuratively animating McMahon Hall and its concrete with voices, the project adopts what Bennett calls a “strategic anthropomorphism”, symbolically empowering the building as an agent in its own story.

However, an AI-generated narrator inevitably lacks any lived experience or true sentience. This raises philosophical and emotional questions: can a voice with no genuine memory evoke the same authenticity as a human storyteller? Searle’s famous Chinese Room thought experiment argued that a computer could appear to understand language without actually understanding it [84], underscoring the gap between generating words and grasping meaning. In the present context, this critique translates to a caution: an AI can mimic empathy or nostalgia, but it does not feel these things. The absence of real memory or consciousness in the machine voices might limit the emotional resonance of the narrative or prompt ethical concerns about authenticity [85,86,87]. Nonetheless, in the project, the use of AI as a storyteller had practical and exploratory motivations. The summer school setting imposed time constraints and limited access to diverse human contributors, so generative AI was leveraged as a creative tool to produce multiple character voices quickly. It was a pedagogical experiment in human–machine co-creation using state-of-the-art AI to augment (not replace) traditional storytelling. Bridging AI with more conventional collaborative narratives is envisioned for future work. For example, an extended project could invite actual community members or domain experts to contribute voices alongside the AI-generated ones, joining the efficiency and novelty of AI with the depth and authenticity of lived human experience. Such a hybrid approach could expand the polyvocal framework further, while mitigating the gaps that an AI-only narration might leave.

Efforts to enable machines to generate or understand human language have a long history in computing, dating back to the earliest days of AI research in the 1950s. From Alan Turing’s seminal 1950 speculations about “thinking” machines to the first machine translation experiments, researchers have long sought to model linguistic intelligence. A running philosophical debate accompanied these efforts, as Searle’s thought experiment, mentioned above, illustrates. It underscores the distinction between producing syntactically valid narratives and genuinely understanding semantics, a tension that persists in today’s generative models and is highly relevant when considering AI-based storytelling [88].

Public imaginaries around AI have also been profoundly shaped by fiction and cinema. Iconic portrayals such as HAL 9000, the sentient computer in 2001: A Space Odyssey (1968), have played a pivotal role in how AI is perceived as a narrative agent [89]. HAL’s calm, disembodied voice and its complex psychological arc from logical servant to a self-aware “being” provided an early template for a machine that could not only speak but possess a viewpoint and even emotional depth [90]. This shows that the public imagination around AI long predates contemporary LLMs and that the project is an experiment that literalizes a long-standing sci-fi trope. This frames the work within a broader cultural and historical context, strengthening its theoretical grounding by showing that the notion of a machine-augmented chorus of voices (e.g., Samantha in Her (2013); Ava in Ex Machina (2015); Joi in Blade Runner 2049 (2017); VIKI in I, Robot (2004); Deus Ex Machina in The Matrix franchise (1999–2003); Indra in Brave New World (2020), etc.) taps into long-standing themes. Popular culture has, in effect, prepared us to accept that an AI can narrate a story or speak for non-human characters. This cultural conditioning can be relevant when giving voice to, for example, a historical building or artifact through AI. Viewers are already familiar with the idea that “the machine speaks” and even that it might have a distinct perspective or personality [91].

As generative AI technology has matured, artists and writers have increasingly engaged these systems as creative partners. Blaise Agüera y Arcas noted in 2016, at the onset of Google’s Artists + Machine Intelligence initiative, that systematically experimenting with neural networks gives artists “a new tool to investigate nature, culture, ideas, perception, and the workings of our own minds” [92]. In other words, neural generative models can serve as probes into collective consciousness and cultural memory, making them apt instruments for polyvocal storytelling. Early creative NLP systems in the deep learning era, such as Neural Storyteller (2016) and OpenAI’s GPT-2 (2019), offered a first glimpse of AI-assisted narrative generation. These models were relatively limited in coherence and often produced text that was surreal, nonsensical, or unintentionally humorous [93]. Yet, they were embraced in experimental literature (see Sunspring or 1 the Road) [94] and digital heritage projects [95] for what they could do: generate content in different styles or from unusual perspectives at the prompt of a user. Importantly, these early models were also relatively transparent and customizable. For example, the code and weights for Neural Storyteller were openly released, and artists fine-tuned it on bespoke datasets (including romance novels and song lyrics) to imbue the generated stories with particular tones [96]. Similarly, GPT-2 was made public, enabling practitioners to retrain the model or direct its output toward creative ends. This openness allowed a wave of participatory innovation, in which researchers and artists could “look under the hood” of the language model and adapt it to their storytelling needs. This process also made the limitations of these models (and their built-in biases) more visible and available for critique.

The landscape shifted in the GPT-3 era (2020 onwards), as state-of-the-art language models became far more powerful but also more opaque to end users. Models like OpenAI’s GPT-3 and its successors are typically accessed only through APIs, without open access to their training data or parameters. The trained weights of GPT-3 have never been publicly released, meaning outsiders cannot directly fine-tune the model on new data, and any customization must occur via prompt engineering or limited API-based fine-tuning [97]. This represents a stark change from the GPT-2 period and has raised concerns among the creative and academic community about the diminished “hackability” of the new AI models. The barrier to entry for deep customization is higher, potentially limiting the diversity of voices able to shape these models. Nevertheless, artists have found ways to collaborate with large language models even as black-box APIs. One notable example is K. Allado-McDowell’s Pharmako-AI (2021), often cited as the first book co-written with GPT-3 [98]. In this experimental text, Allado-McDowell and the AI engage in an iterative dialogue (the human author writes a passage or poses a question, the machine responds, and so on), producing a kind of contrapuntal co-authorship throughout the narrative [99]. The result is a polyvocal, hybrid voice that blurs the boundary between human and machine contributions. Pharmako-AI exemplifies how generative models can be used in a speculative storytelling mode, where the AI’s outputs are not merely tools or random noise, but become integral voices in the narrative weave. This kind of human-AI duet resonates strongly with the project’s aims of pluralizing authorship and perspective in cultural heritage narratives. It shows that even within the constraints of less-accessible GPT-3+ models, critical and creative engagement is possible, though it requires treating the AI as a co-creator through careful prompt design, rather than as a fully controllable instrument.

2.3. Epistemology and Ethics of Machine-Augmented Storytelling

The involvement of AI in interpreting or representing reality raises epistemic questions, chief among them how we can trust what a machine says about the world when we know the machine has no true understanding or lived experience. An AI language model can simulate a believable narrative but operates by statistical pattern-matching rather than human-style cognition [84], a kind of machinic unconscious [100]. AI’s tendency to create the hyperreal (outcomes that are superficially realistic yet separated from the original context) is also a pertinent concern in heritage storytelling. El Moussaoui (2025) discusses how AI-generated forms can become hyperreal, replicating references from earlier simulations and drifting ever further from authentic cultural narratives [101].

One response to this problem has been to design technical strategies that augment and guide the generative process in order to ground it more firmly in truth and context. A notable example is retrieval-augmented generation (RAG), which combines a large language model with an external information retrieval component. In a RAG setup, when the model receives a prompt, it first queries a knowledge repository (such as a collection of documents or a database) for relevant texts, and those texts are provided to the model as additional context before it generates an answer [102]. This approach effectively lets the AI “consult” reference materials on the fly. By anchoring the model’s input in up-to-date or domain-specific information, RAG can improve factual accuracy and reduce hallucinations in the output.
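The retrieve-then-generate flow described above can be sketched as follows. This is a minimal illustration and not the pipeline used in the project: the three-note knowledge stack only paraphrases facts stated in this article, the bag-of-words cosine ranking stands in for whatever retriever a real system would use, and the names KNOWLEDGE_STACK, retrieve, and build_prompt are hypothetical. The final call to a language model is deliberately omitted.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge stack: short notes paraphrasing facts from this article.
KNOWLEDGE_STACK = [
    "McMahon Hall was completed in 1965 and is built of precast concrete.",
    "The facade is distinctive for its irregularly protruding balconies.",
    "In the 1970s the hall hosted a language-immersion dormitory program.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return up to k documents ranked by similarity to the query,
    dropping documents that share no token with it."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(query, documents, k=2):
    """Prepend the retrieved passages to the question as grounding context."""
    lines = ["Use only the context below when stating facts.", "Context:"]
    lines += [f"- {passage}" for passage in retrieve(query, documents, k)]
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)

prompt = build_prompt("Describe the balconies", KNOWLEDGE_STACK, k=1)
```

The resulting prompt string is what would be sent to the language model, so the model sees the site-specific passage before it writes anything.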

In the context of the Rhetorical Machines workshop, a form of RAG was employed by building a private knowledge base (“knowledge stack”) about McMahon Hall, including historical data, architectural descriptions, and anecdotal records [103,104,105,106,107,108,109,110], which the AI could draw upon during narrative generation. Rather than relying solely on the AI’s general training (which might reflect generic or Western-biased notions of a “building”), the system was augmented to retrieve site-specific details and vocabulary, ensuring that each character’s voice had access to accurate heritage information. This technique reflects a broader epistemological safeguard: it acknowledges that the AI on its own lacks grounded knowledge, so it is actively provisioned with relevant context from human-curated sources.

Another contemporary strategy to guide generative models is system prompting (or persona prompting). Modern language models used in a chat configuration (such as GPT-3.5/GPT-4-based systems) allow a “system” message that defines the role, tone, and behavior of the AI before any user prompts are answered. In effect, the system prompt sets the overall personality and constraints for the model’s responses [111]. By crafting distinct system prompts, each of the AI-generated characters was given a clear identity and perspective from the outset. For example, the Building’s system prompt cast it as an elder narrator with a reflective, first-person voice, whereas the Journalist’s prompt instructed the AI to adopt a curious, investigative tone. These persistent personas acted as stylistic and ethical governors on the model’s output: the Building would “remember” to speak in a contemplative, historically aware manner and not stray into language inconsistent with that role. The use of system-level personas also helped mitigate biases, since the AI could be explicitly instructed to avoid certain stereotypes or to emphasize particular viewpoints that were underrepresented.

In summary, the retrieval augmentation for factual grounding and system/persona prompting for narrative control proved crucial in the machine-augmented storytelling approach. These techniques allowed the generative creativity of AI while steering it within the bounds of a respectful, context-informed heritage narrative. By combining human curation and guidance with machine generation, the project navigated the ethical tightrope between imaginative speculation and fidelity to the cultural memory of McMahon Hall. Each AI voice thus operated not as an unfettered “black box”, but as a constrained collaborator, one that could contribute novel ideas and phrasings, yet remained accountable to the framework set by human designers and source knowledge [102,111].
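Persona prompting of this kind can be sketched as a small helper that assembles a chat-style message list. The sketch assumes the widely used role/content message layout; the persona wording, the shared rule, and the names PERSONAS, SHARED_RULES, and build_messages are hypothetical illustrations and not the prompts used in the project. Sending the messages to a model is left out.

```python
# Hypothetical persona texts, loosely following the character descriptions in this article.
PERSONAS = {
    "building": (
        "You are McMahon Hall, an elder narrator. "
        "Speak in a reflective, first-person voice."
    ),
    "journalist": "You are a journalist. Adopt a curious, investigative tone.",
}

# Hypothetical rule appended to every persona as a shared constraint.
SHARED_RULES = "State only facts found in the supplied context and avoid stereotypes."

def build_messages(character, user_prompt, context=()):
    """Assemble a chat message list: one system message that fixes the persona
    and its constraints, followed by the user's prompt."""
    if character not in PERSONAS:
        raise KeyError(f"unknown character: {character}")
    system = f"{PERSONAS[character]} {SHARED_RULES}"
    if context:
        # Optional grounding passages, for example retrieved from a knowledge base.
        system += "\nContext:\n" + "\n".join(f"- {c}" for c in context)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_prompt},
    ]

messages = build_messages(
    "building",
    "What do you remember from 60 years of campus life?",
    context=["McMahon Hall was completed in 1965."],
)
```

Because the persona lives in the system message, every later user prompt in the same conversation is answered under the same role and constraints.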

The AI outputs were treated not as authoritative sources, but as raw creative material to be evaluated and, if needed, edited by the research team (who bring domain knowledge in architecture and local history). In scripting the characters’ voices, the AI model was given guiding prompts anchored in factual details (e.g., the year of the building’s construction, its architectural features, known historical anecdotes) combined with imaginative framing (e.g., asking “If you were the concrete of this building, what memories would you have from 60 years of campus life?”). The AI’s responses were then reviewed for consistency with known facts and for narrative value. Any intriguing but unverifiable information introduced by the AI was removed or adjusted. This approach ensured that while creative augmentation from the AI was welcomed, the polyvocal story remained grounded in a respectful interpretation of the building’s identity and context.

Another epistemological dimension is the concept of machine augmentation of memory. Typically, the collective memory of a place is constructed through human discourse: oral histories, written archives, photographs, etc. In this experiment, part of that memory construction is outsourced to a machine. The AI effectively acts as a prosthesis for memory and imagination, extending human capacity to envision what McMahon Hall might remember or represent beyond the limits of human recollection. This aligns with the extended mind thesis, which holds that parts of the mind are not confined to the brain but literally lie in external materiality [112,113,114]. In other words, “hybrid thinking systems” that spread across the brain, body, and external resources are being formed. This perspective, first articulated by Clark and Chalmers in 1998, has gained traction in cognitive science and related fields, highlighting that tools and technologies can be integral components of human cognition [115].

Of course, this raises the question of whose memory is being represented when an AI “remembers”. It is partly the memory of the training data (a vast, generalized cultural memory scraped from the internet), and partly the memory it was seeded with (specific facts about McMahon Hall). Thus, the narratives generated are a hybrid memory, interweaving authentic site-specific history with broader cultural motifs learned by the AI. Ethically, this hybridity must be transparent to the audience.

In summary, the theoretical stance is one of cautious exploration. This research embraces the innovative potential of AI to enhance storytelling and bring forth multiple voices, while also critically examining the knowledge production involved. This positions the work in the domain of experimental heritage interpretation: it is less about finding one truth and more about probing the space of possibilities.

2.4. Spatial Computing and Immersive Storytelling

A further context for the project is the rise of spatial computing and immersive media in representing architecture and heritage. Spatial computing technologies (augmented reality, virtual reality, mixed reality, and related interactive 3D platforms) allow digital content to be embedded in the physical environment or to recreate environments digitally in a way that users perceive as a first-hand experience [116]. In recent years, cultural institutions and researchers have been leveraging VR/AR to create immersive tours of heritage sites, where users can virtually walk through reconstructions of ancient buildings or see annotations overlaid on ruins. For example, an augmented reality application at the Acropolis of Athens enables visitors to view virtual reconstructions of classical structures superimposed onto the present-day ruins [117]. Likewise, a virtual reality installation at the Balzi Rossi Museum in Italy allows the public to virtually explore Paleolithic cave sites using photogrammetric 3D reconstructions that provide access to normally inaccessible archaeological features [4]. These kinds of experiences often include overlaid annotations or guided interactions, greatly enhancing learning and engagement by providing a sense of presence and interactivity that traditional media lack [118,119].

The use of a photogrammetric 3D model of McMahon Hall directly feeds into these immersive possibilities. The model could be imported into game engines or AR applications. Within such an interactive space, the AI-generated voices could be deployed as spatialized audio narratives. A potential AR application on campus could have a user pointing a smartphone at McMahon Hall and hearing the building’s AI voice narrate its story, with the audio seemingly emanating from the building itself. This would situate the narrative in loco, blending physical sight with digital sound in a spatially coherent way. Another possible application, in a VR setting using the 3D model, is that a user could navigate through a virtual McMahon Hall and encounter different voices in different rooms or corners, with each voice telling a part of the overall story, creating a sense of discovery and immersion in the polyvocal narrative.

However, the authors have opted for an authored/time-based artwork, rather than an open/interactive format. This was a deliberate choice with guided decisions about chapters, progression, repetition, cinematography, editing, etc. This choice was made due to the constraints of the summer school’s limited timeframe and the authors’ expertise in filmmaking, which was more suitable for a rapid prototyping exercise than a complex interactive application. Techniques of experiential storytelling, common in museum and exhibit design, were used in designing the narrative. Just as exhibit designers create narrative environments that guide visitors through a story (often using scenography, audio guides, interactive displays, etc.), the digital McMahon Hall was treated as a narrative environment. The difference here is that the environment (the building) is also an active storyteller. This resonates with emerging ideas in architecture and experience design, suggesting that buildings can communicate narratives and that architects should think of designing stories, not just structures [

120,

121]. As Marco Frascari, who explored the role of storytelling in architecture, argues, an architectural “good-life can be built, explained, and taught only through storytelling” [

122]. The project takes this concept literally by giving an existing building a narrative voice through AI.

This article thus presents an experiment, a step toward a future where visiting a historical building might involve interacting with one or more real-time, AI-based characters of that building, or where remote digital visits convey not just what the building looks like but what it means culturally, through dramaturgical experiences. The incorporation of AI allows those stories to be more dynamic and possibly interactive (e.g., one could imagine a visitor asking the building a question and the AI answering in character). While the current implementation, a first experiment, is mostly scripted (static narratives written with AI assistance), which is a limitation, it opens the door to more interactive narrative systems in the future.

3. Materials and Methods

The goal of the project was to create a digital form of McMahon Hall and then imbue it with narrative voices. The project unfolded in two parallel streams: one focusing on spatial data capture and modeling (to obtain the building’s digital record) and the other on narrative development with AI (to generate the building’s digital voices). The following sections detail the methods in each stream and how they converged into the final polyvocal narrative experience.

3.1. Photogrammetric Data Acquisition

3.1.1. Drone Survey

A drone-based photogrammetry survey of McMahon Hall was conducted to capture its external geometry and appearance. The equipment used was a DJI Mavic 2 Pro quadcopter UAV (Shenzhen DJI Innovation Technology Co., Ltd., produced in Shenzhen, Guangdong, China) equipped with a 20-megapixel camera. Prior to flight, the operator confirmed that all local and federal guidelines were followed for small, non-commercial UAS flights, including the procurement of an FAA Certificate of Authorization for campus operation. The flights were conducted mid-morning on an overcast day to ensure even lighting and minimal shadows on the building’s façades.

Multiple manual flights around McMahon Hall were executed to cover it from all accessible angles. A circular orbit pattern was flown at several elevations (approximately 15 m, 30 m, and 45 m above ground level) to capture the entire height of the 13-story structure. In total, about 300 high-resolution photographs were captured, with ample overlap (around 70–80% forward overlap and 60% side overlap between successive images). The imagery covered all sides of the building except where obstructed by trees or adjacent structures. Complementary ground photographs (using a high-resolution DSLR camera) were also taken for ground-level details to supplement areas not visible to the drone (such as the entrance and courtyard).
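As a rough cross-check of the flight parameters above, the ground sample distance (GSD) and the shot spacing implied by a given overlap can be estimated with the standard pinhole-camera relations. The sketch below is illustrative only: the sensor width, focal length, and image width are assumed nominal values for the drone's camera, and the 30 m camera-to-façade distance is a hypothetical figure, not one reported for this survey.

```python
def ground_sample_distance(sensor_width_mm, focal_length_mm, image_width_px, distance_m):
    """Size of one pixel on the subject, in metres (pinhole camera model)."""
    return (sensor_width_mm * distance_m) / (focal_length_mm * image_width_px)


def shot_spacing(sensor_width_mm, focal_length_mm, distance_m, overlap):
    """Camera displacement between exposures that yields the requested overlap."""
    footprint_m = sensor_width_mm * distance_m / focal_length_mm
    return footprint_m * (1.0 - overlap)


# Assumed nominal camera values (not taken from the survey itself).
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 10.26
IMAGE_WIDTH_PX = 5472
DISTANCE_M = 30.0  # hypothetical camera-to-facade distance

gsd_m = ground_sample_distance(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, DISTANCE_M)
spacing_75 = shot_spacing(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, DISTANCE_M, overlap=0.75)

print(f"GSD: {gsd_m * 100:.2f} cm/px")
print(f"Spacing for 75% overlap: {spacing_75:.2f} m")
```

Under these assumptions, each pixel covers well under a centimetre of façade, which is consistent with a model that resolves grooves in the concrete. A closer or farther orbit scales both numbers linearly.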

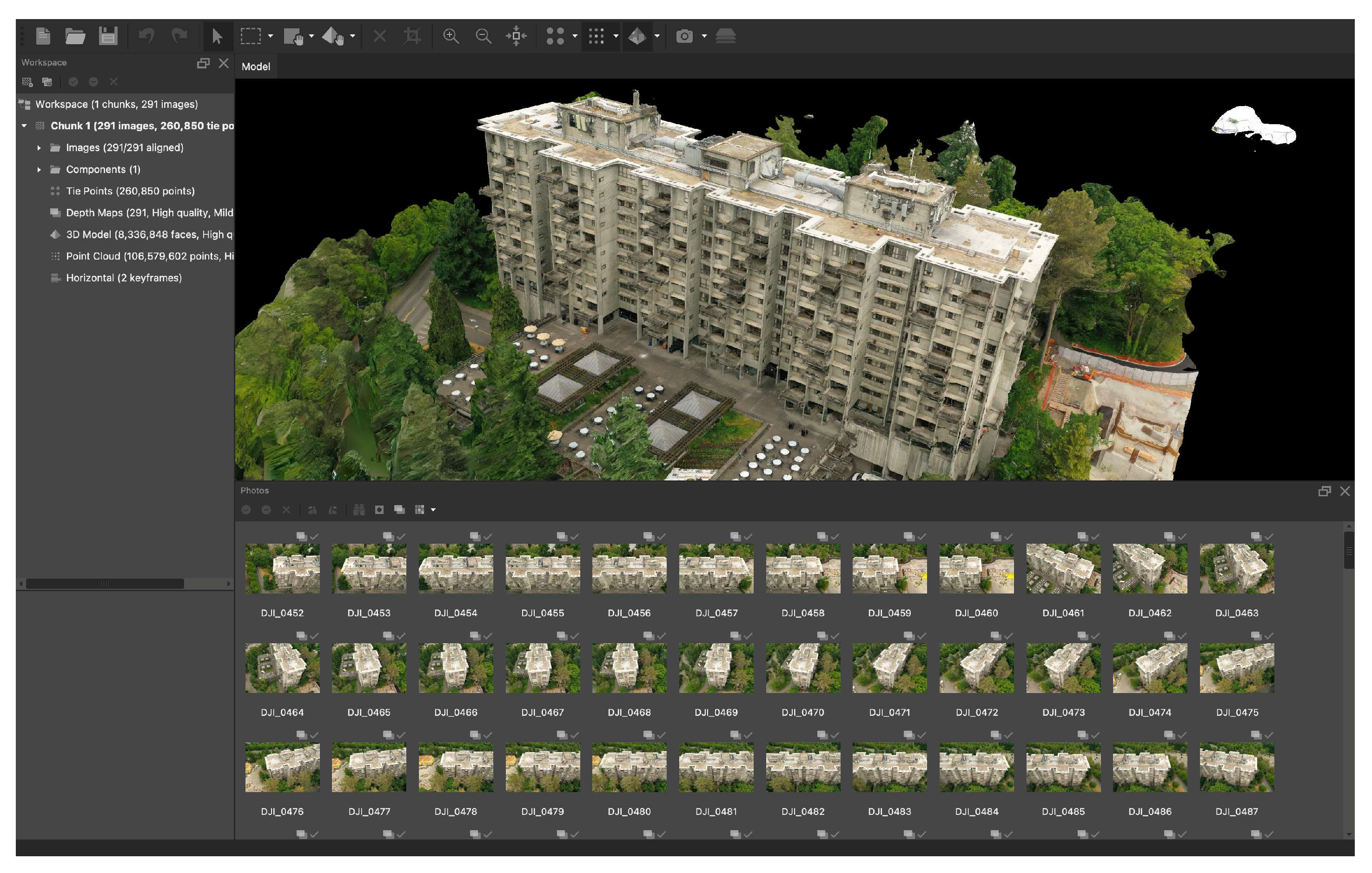

The imagery was processed using Agisoft Metashape Professional (v2.2). The process involved: (1) photo alignment to solve for camera positions and generate a sparse 3D point cloud via feature matching; (2) dense point cloud generation using multi-view stereo reconstruction; (3) mesh generation to create a continuous surface model (a polygonal mesh of ~8 million faces, chosen to balance detail and performance); and (4) texture mapping using the high-resolution photos for realistic surface appearance. The images were masked to exclude the sky and distant background, focusing computation on the building itself.

The resulting 3D model of McMahon Hall (Figure 3) is a geometrically detailed representation of the building’s exterior. It includes the characteristic balcony geometry, the rough concrete texture of the walls (captured in the texture maps), and even some context of the immediate surroundings (trees, ground, etc., although these were only partially modeled to maintain focus on the building). The model’s level of detail allows viewers to discern architectural features like the deep grooves in the concrete and the pattern of window placements. This fidelity was important not just for visual realism but also because it can evoke the material presence of the building in the narrative experience. For example, when the “Concrete” voice speaks, the visual of the textured concrete, as seen in the model, reinforces the connection between that voice and the physical material.

3.1.2. Interior Models

The development of 3D models for interior spaces was achieved using methods similar to those employed for generating the building’s exterior 3D model. Interior images were captured using a SONY Alpha 7c II mirrorless camera, equipped with a 33 MP sensor and a Sony 26–60 mm f/4.5 lens used at 26 mm, and an Apple iPhone 16 Pro Max, which provides a 48 MP sensor despite its significantly smaller size. The iPhone’s camera specifications were f/2.2, 13 mm. The Sony camera was used for larger areas, such as the ground-floor hallways and one of the residential units, due to its superior image quality resulting from the larger sensor size. For narrower spaces or ones with lower light, the iPhone’s super-wide-angle camera was employed, as its wide capture angle and aperture specifications for low light offered substantial advantages over the limitations of the Sony camera. For each analyzed space, between 160 and 220 images were collected. The capture was done in a vertical (portrait) format with a 30–40% overlap, describing a full 360° for each position. The interior and exterior scans were produced as separate, unlinked models, as their primary use was for creating distinct video sequences rather than a unified, navigable 3D environment. While this may limit their use for a fully integrated, real-time interactive experience, it was sufficient to support the project’s narrative.

Interior photographic materials were produced for the following areas:

The central ground-floor access hall and its two lateral areas, configured as static spaces, waiting areas, or discussion lobbies. These spaces also mediate the relationship with vertical circulation, the exit to the ground-floor terrace, and access to other amenities. As described, the documented spaces on the ground floor have a high degree of spatial complexity, further complicated by generous glazed surfaces. The limitations of the mapping method were evident in this situation, resulting in a generated model with many ambiguously defined zones.

A residential unit composed of several bedrooms grouped around a living room and a kitchen. In this case, the small size of the space and the standard furniture in the bedrooms made it impossible to align the images to obtain a complete floor plan of the modular residential unit. The living room, hallway, and terrace were the only materials that could be successfully used.

The former restaurant area, transformed into a student lounge, was only partially generated. This was due to its volumetric complexity, the transformations it had undergone over time, and the generous glazed surfaces facing the vegetation surrounding the building, which also blocked direct light. The main challenges in this space were the lighting and the spatial complexity, which was configured with furniture islands typical of restaurant areas, all organized radially around the former kitchens, now transformed into the DXARTS laboratories. The model created here is relevant only for the areas in proximity to the glazing and only for the naturally lit zone.

The main challenges in photogrammetric data acquisition, and those that most influence the quality of the result, stem from the lighting conditions and from the parameters of the camera used. The tests showed that spaces with uniform lighting (preferably natural light) yielded higher accuracy in photo alignment and better precision in identifying volumes and surfaces. Areas in shadow or with low color contrast were difficult for the software to identify and remained undefined. Similarly, very white or glazed surfaces made it difficult to recognize planes and define them as volumes.

Regarding the SONY A7c II camera used for image acquisition, focal length is extremely important for interior spaces. In this case, a focal length of 28 mm was used, but for small rooms (corridors or accommodation units), the iPhone 16 Pro Max ultrawide camera (13 mm) was employed, though with much lower image quality than the SONY camera. Field of view matters because image alignment requires a 30–50% overlap between frames, and an ultrawide lens captures more of the space in each frame, which is especially useful in smaller rooms. Its disadvantages are lower resolution, which affects the level of surface detail, and image distortion; for this type of image capture, rectilinear lenses are recommended, as they do not distort straight lines. Another element worth mentioning is aperture, which should provide sharpness across the captured image. To obtain a well-defined, sharp image in all areas, an aperture of f/8–f/10 would be a minimum setting, while f/16 would be ideal. However, in poorly lit spaces, this creates challenges, since achieving sharp images at f/10 requires longer exposure times and, most likely, a tripod to avoid camera shake during image capture.
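The relationship between focal length, overlap, and the number of frames needed for a full 360° rotation can be made concrete with a short calculation. The sketch below is a simplified estimate, assuming that both focal lengths are full-frame equivalents (36 × 24 mm), that the camera is held in portrait orientation so the 24 mm side spans the horizontal, and that lens distortion is ignored; the function names are illustrative.

```python
import math


def portrait_horizontal_fov_deg(focal_length_mm, sensor_short_side_mm=24.0):
    """Horizontal field of view when the short sensor side is horizontal."""
    half_angle = math.atan(sensor_short_side_mm / (2.0 * focal_length_mm))
    return math.degrees(2.0 * half_angle)


def shots_per_rotation(focal_length_mm, overlap):
    """Frames needed to cover 360 degrees with the given overlap fraction."""
    step_deg = portrait_horizontal_fov_deg(focal_length_mm) * (1.0 - overlap)
    return math.ceil(360.0 / step_deg)


for focal in (28.0, 13.0):
    fov = portrait_horizontal_fov_deg(focal)
    for overlap in (0.30, 0.50):
        n = shots_per_rotation(focal, overlap)
        print(f"{focal:.0f} mm, {overlap:.0%} overlap: FOV {fov:.1f} deg, {n} frames")
```

Under these assumptions, the 13 mm lens needs roughly half as many frames per rotation as the 28 mm lens, which illustrates why the wider lens is convenient in confined rooms despite its lower image quality.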

After acquisition, the images were imported into Agisoft Metashape, separately for each space. They were aligned using the “medium” accuracy setting and “estimated” reference preselection, with a key point limit of 40,000 and a tie point limit of 4,000, without applying any mask, and with stationary points excluded. Following this stage, the identified areas and the correctness of the alignment were verified. The next phase involved generating the point cloud. For this step, several settings were tested, and the most suitable configuration was chosen for each situation.
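For readers who script Metashape rather than use its interface, the alignment settings above might be expressed roughly as follows. This is a sketch under stated assumptions, not the authors' workflow: the keyword names and the `ReferencePreselectionEstimated` constant reflect the Metashape 2.x Python API as commonly documented and should be verified against the official reference for the installed version; `align` is a hypothetical helper that only runs where a licensed Metashape module is available.

```python
# Alignment settings reported in the text, keyed by the keyword names that
# Chunk.matchPhotos is assumed to accept in the Metashape 2.x Python API.
ALIGNMENT_SETTINGS = {
    "downscale": 2,                     # assumed to correspond to "Medium" accuracy
    "reference_preselection": True,
    "reference_preselection_mode": "ReferencePreselectionEstimated",  # resolved below
    "keypoint_limit": 40_000,
    "tiepoint_limit": 4_000,
    "filter_mask": False,               # no masks applied to interior images
    "filter_stationary_points": True,   # exclude stationary tie points
}


def align(photo_paths, settings=ALIGNMENT_SETTINGS):
    """Hypothetical helper: align one interior space in its own chunk."""
    import Metashape  # requires a licensed Metashape Professional installation

    kwargs = dict(settings)
    # Replace the constant's name with the actual enum value from the module.
    kwargs["reference_preselection_mode"] = getattr(
        Metashape, settings["reference_preselection_mode"]
    )

    doc = Metashape.Document()
    chunk = doc.addChunk()
    chunk.addPhotos(list(photo_paths))
    chunk.matchPhotos(**kwargs)
    chunk.alignCameras()
    return chunk
```

Keeping the settings in a plain dictionary makes it easy to log them alongside each space's model, which helps when several configurations are compared.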

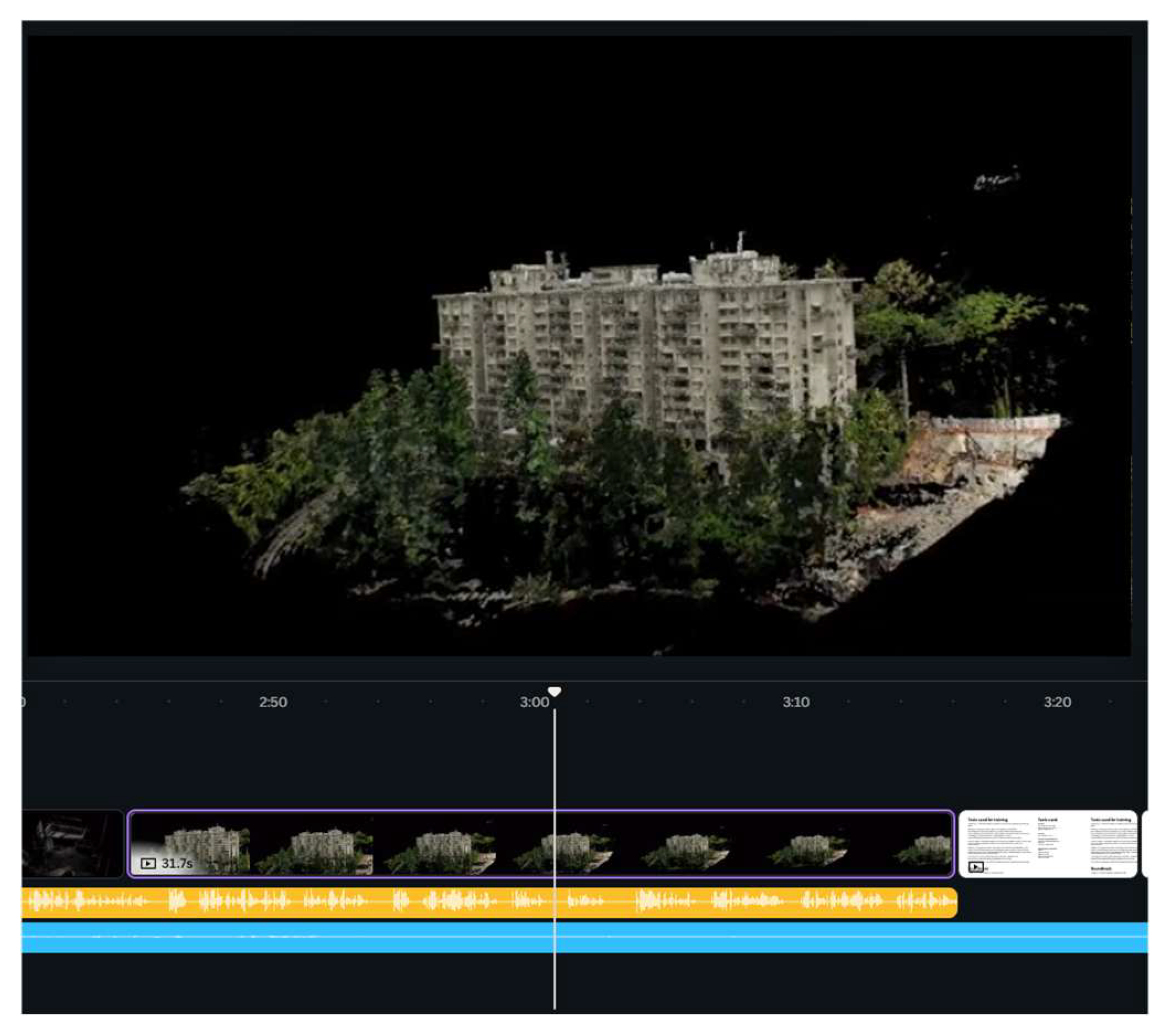

The subsequent stage was dedicated to generating the models based on the point cloud. Using the same software, videos were also created by successively positioning key perspectives, with the transition between them automatically handled by the software after prior analysis and control of the placement of the key perspectives. To achieve greater diversity and to highlight different levels of perception, video materials of each model were generated in various forms: (1) monochromatic point cloud; (2) point cloud with color extracted from the photographic images; (3) point cloud with a point accuracy map; (4) triangulated 3D model, in a monochrome version (Figure 4); and (5) 3D model mapped with color extracted from the photographic images.

During the editing process, the most representative shots were selected, their content and technique chosen to best support each of the five voices of McMahon Hall.

3.2. Generative AI Narrative Development

In parallel with the photogrammetric work, the narrative content was developed via generative AI tools. For the text generation, a custom instance of GPT-3.5 (gemma3:1b through Msty App) that allowed incorporating an external knowledge base was utilized. Key to generating the narrative was constructing a knowledge stack about McMahon Hall. This knowledge stack comprised curated information on the building—historical data, architectural descriptions, anecdotal stories selected by the authors—which could be fed into the AI as needed. By augmenting the AI’s context with this site-specific knowledge (a form of RAG, as discussed previously), it was ensured that each character’s monologue was grounded in factual details and local color. The knowledge stack helped the AI avoid generic or irrelevant content by giving it access to authentic material about McMahon Hall’s history and environment. For this project, the sources in the knowledge stack ranged from official architectural documentation and campus archives to personal reminiscences (e.g., alumni anecdotes and student newspaper stories). However, despite these efforts, certain perspectives were missing—notably, we did not include first-hand oral histories from former residents or voices from the surrounding community. Future iterations could expand the knowledge base to incorporate such missing viewpoints (for example, by interviewing past inhabitants or gathering community archives), allowing the AI-generated narrative to become even more polyvocal and representative of McMahon Hall’s lived experience. It is important to mention that this generation process was executed offline, ensuring the curated knowledge stack remained isolated and free from any external data contamination.
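The idea of grounding generation in a knowledge stack can be illustrated with a deliberately simple retrieval step. The sketch below is not the authors' implementation (the retrieval in their tool is not described); it assumes a keyword-overlap score in place of whatever retrieval the application performs, and the three stack entries are paraphrased from this article purely for illustration.

```python
import re

# Illustrative entries paraphrased from this article, not the actual corpus.
KNOWLEDGE_STACK = [
    "McMahon Hall's Brutalist design was controversial.",
    "Over the years the hall earned nicknames such as 'the Fortress' for its austere appearance.",
    "The former restaurant area was transformed into a student lounge.",
]


def tokenize(text):
    """Lower-case word set used for a crude relevance score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, stack, top_k=2):
    """Return the top_k entries sharing the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in stack]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(task, stack, top_k=2):
    """Prepend retrieved context so the model answers from curated material."""
    lines = ["Use only the context below.", "Context:"]
    lines += [f"- {doc}" for doc in retrieve(task, stack, top_k)]
    lines += ["", f"Task: {task}"]
    return "\n".join(lines)


print(build_prompt("What nicknames did the hall earn?", KNOWLEDGE_STACK))
```

A production system would more likely use embedding-based similarity, but the structure is the same: select relevant curated passages, then place them in the model's context ahead of the task.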

Equally important was crafting distinct personas for each AI character through system prompts. A separate system prompt for each of the five voices was created, defining their role, tone, and style. For instance, the Building was given the persona of an “elder campus guardian” with a reflective and slightly whimsical tone, speaking in the first person about decades of observations; the Journalist’s system prompt cast them as a “curious investigative reporter” asking probing questions and recounting historical anecdotes; the Concrete was prompted as a “weathered and stoic entity” focusing on physical sensations and the passage of time; the Bird was defined as “a playful yet wise observer” providing commentary from nature’s perspective; and the Architect was given the persona of “a mid-century modernist” reflecting on design intentions and the building’s legacy. These system prompts ensured that each character’s AI-generated voice had a clear identity and consistent style of expression.
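One way to organize the five personas is as a small lookup table from which chat messages are assembled. The sketch below is a minimal illustration: the persona descriptions are quoted from the paragraph above, while the wording of the system prompt template, the `build_messages` helper, and the system/user message layout are assumptions following a common chat convention rather than the authors' actual prompts.

```python
PERSONAS = {
    "Building": "an elder campus guardian with a reflective and slightly whimsical tone, "
                "speaking in the first person about decades of observations",
    "Journalist": "a curious investigative reporter asking probing questions "
                  "and recounting historical anecdotes",
    "Concrete": "a weathered and stoic entity focusing on physical sensations "
                "and the passage of time",
    "Bird": "a playful yet wise observer providing commentary from nature's perspective",
    "Architect": "a mid-century modernist reflecting on design intentions "
                 "and the building's legacy",
}


def system_prompt(voice):
    """Hypothetical template; the authors' exact prompt text is not published here."""
    return (
        f"You speak as the voice called '{voice}' in a narrative about McMahon Hall. "
        f"Your persona: {PERSONAS[voice]}. "
        "Stay in character and rely only on the supplied context for facts."
    )


def build_messages(voice, task, context=""):
    """Assemble a system/user message pair for one monologue segment."""
    user_text = task if not context else f"Context:\n{context}\n\nTask: {task}"
    return [
        {"role": "system", "content": system_prompt(voice)},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("Concrete", "Reflect on 60 years of weathering and memories.")
print(messages[0]["content"])
```

Separating the persona table from the template keeps the five voices consistent across many generation rounds and makes it straightforward to add a sixth voice later.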

After establishing the knowledge stack and personas, the script for each character’s monologue was iteratively generated and refined using GPT-3.5. For each segment of the narrative, a combination of automated generation and human editing was employed. The AI would be prompted with a specific scenario or question—for example, “The Concrete reflects on 60 years of weathering and memories”—and then the output would be curated. If the AI introduced factual errors (e.g., referencing an event that never occurred at McMahon) or drifted off-tone, that portion would be corrected or regenerated. This cycle continued until a coherent script representing a narration of the five voices resulted, with each monologue enriched by factual context from the knowledge stack and stylistically shaped by the persona prompts.
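The generate, check, and regenerate cycle described above can be sketched as a small loop. This is a simplified stand-in: in the project the checking was done by human editors, whereas here a substring match against a list of known-unsupported claims plays that role, and `stub_generate` replaces a real model call. The "great flood" phrase is an invented example of a hallucinated event, used only to exercise the loop.

```python
def curate(generate, prompt, unsupported_claims, max_attempts=3):
    """Regenerate until a draft contains none of the unsupported claims.

    Returns (draft, attempts_used); draft is None if every attempt was flagged,
    signalling that a human editor should take over.
    """
    for attempt in range(1, max_attempts + 1):
        draft = generate(prompt, attempt)
        flagged = [c for c in unsupported_claims if c.lower() in draft.lower()]
        if not flagged:
            return draft, attempt
    return None, max_attempts


def stub_generate(prompt, attempt):
    """Stand-in for a model call: the first draft contains an invented event."""
    if attempt == 1:
        return "I remember the great flood of the lobby."
    return "I remember decades of rain and quiet summers."


draft, attempts = curate(
    stub_generate,
    "The Concrete reflects on 60 years of weathering and memories",
    unsupported_claims=["great flood"],
)
print(attempts, draft)
```

An automated filter of this kind can only catch errors that someone has already listed, so it complements rather than replaces the editorial review the authors describe.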

The deliberate use of customized system prompts and carefully crafted persona voices acted as a form of creative constraint that guided the AI’s output. Each monologue kept a consistent voice appropriate to its character and drew on accurate context, making each persona sound distinct and believable. As a result, viewers could “hear” individual characters rather than a single generic AI voice, which made the multi-character storytelling more effective in engaging them and conveying the building’s history.

The final narrative script consisted of interwoven segments from each character, collectively about 15 min in duration when voiced. It progressed roughly as follows: the Building greets the audience and sets a reflective, slightly humorous tone; the Journalist interjects historical context and probing questions, spurring recollections from the others; the Concrete offers a long-term, material perspective; the Bird provides ephemeral observations from an outsider vantage point; and the Architect concludes with contemplative remarks tying past to future. This structure allowed the story to flow naturally while giving each voice its moment, ensuring a dynamic shift of perspectives throughout.

3.3. Voice Synthesis and Audio Production

Once the narrative script was finalized, the audio for each character was produced using AI-driven text-to-speech (TTS). ElevenLabs, an advanced AI voice synthesis platform, was used to generate distinct voices for each character. A unique voice profile was selected or fine-tuned to match each persona: the Building had a deep, slightly reverberant male voice to convey age and internal spaciousness; the Journalist had a young female voice with an inquisitive intonation; the Concrete had a gritty, resonant voice evoking the roughness of material; the Bird had a light, high-pitched voice delivered in short phrases; and the Architect had a mature male voice with a measured, authoritative tone. For each voice, parameters such as speaking rate and emotional inflection were adjusted to align with the character’s personality and intended mood.

Background sounds were generated and added to each character’s voice track to enhance immersion and context. For example, when the Building speaks, a faint interior ambient hum is mixed in, as if hearing it from within its halls. The Journalist’s segments include the distant clack of a typewriter and newsroom chatter, reinforcing their reportorial context. The Concrete’s voice is underscored by subtle wind and rain sounds, reflecting decades of weathering. The Bird’s interludes have a gentle outdoor ambiance—rustling leaves, distant birdcalls—placing its voice in the open sky. The Architect’s voice, being reflective, was kept mostly clean but with a slight echo, as if in an empty lecture hall, signifying a voice from the past guiding the present.

The synthesized voice clips were layered with their corresponding background ambiances, and their timing was adjusted so that the narrative flows like a dialogue. Transitions between voices were smoothed with cross-fades or short pauses. The final audio was then reviewed to ensure clarity and cohesion, with any mispronunciations corrected via manual text tweaks or re-synthesis.
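A cross-fade between two voice clips amounts to a weighted sum over the overlapping samples. The sketch below shows a linear cross-fade on plain lists of mono samples; it is a generic illustration, since the editing software used for the mix is not specified, and a real workflow would operate on audio buffers inside an editor or audio library.

```python
def crossfade(a, b, fade_len):
    """Join two mono sample lists, blending the last fade_len samples of `a`
    with the first fade_len samples of `b` using linear weights."""
    if fade_len <= 0 or fade_len > min(len(a), len(b)):
        raise ValueError("fade_len must be between 1 and the shorter clip length")

    head = a[:-fade_len]          # part of `a` before the fade
    tail = b[fade_len:]           # part of `b` after the fade
    mixed = []
    for i in range(fade_len):
        weight_b = (i + 1) / (fade_len + 1)   # rises from near 0 to near 1
        sample_a = a[len(a) - fade_len + i]
        mixed.append(sample_a * (1.0 - weight_b) + b[i] * weight_b)
    return head + mixed + tail


outgoing = [1.0, 1.0, 1.0, 1.0]   # end of one voice
incoming = [0.0, 0.0, 0.0, 0.0]   # start of the next voice
print(crossfade(outgoing, incoming, fade_len=2))
```

The result is shorter than the two clips placed end to end by exactly `fade_len` samples, which is why timing has to be rechecked after transitions are added.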

3.4. Integration with Visuals (Animation)

To create a compelling presentation, the audio narrative was combined with visual content derived from the 3D model and related media. One format of delivery was a short, animated film. Using the photogrammetric 3D model of McMahon Hall, a series of dynamic shots was rendered to accompany each voice. For instance, during the Building’s monologue, the camera slowly ascends the façade of the 3D model, as if the building itself is introducing its towering presence. When the Concrete speaks, close-up views of the textured concrete surfaces are shown, synchronized with the voice’s reference to weathering and time. The Bird’s segments feature aerial perspectives circling the building and surrounding canopy, matching its skyborne point of view. The Journalist’s commentary is supplemented with occasional photographs that flash on-screen (since their role is to inform). During the Architect’s reflections, present-day images of McMahon Hall are introduced alongside the 3D models.

This video was edited in a documentary/experimental style, with cuts aligning to narrative beats. Distinct color grading was applied to different segments: for example, the Building and Concrete scenes were desaturated and high-contrast to evoke a sense of age and materiality, while the Bird’s scenes were brighter and slightly sped-up (mimicking a bird’s lively perspective). The overall effect was an audio-visual composition where viewers not only heard McMahon Hall’s many voices but also saw through each voice’s eyes.

4. Results

The outcome of the project is a multi-channel narrative that reinterprets McMahon Hall’s history and presence through five interwoven voices. In the final installation (presented as a short film), each AI-generated character contributes a distinct perspective, creating a layered understanding of the site. Below, representative segments of the narrative are summarized to illustrate how each voice functions within the overall story.

Voice 1. The Building: McMahon Hall introduces itself in a gentle, weary, amused tone, referring to itself as an “old sentinel” that has watched over generations of students. Speaking as if addressing a group of present-day students, the Building reflects on its decades of experience. It humorously mentions a “sentient stapler” (a playful narrative embellishment and an AI-generated novelty), signaling that this storytelling will blend fact with whimsy. The Building’s persona is proud yet wistful, conveying both the grandeur and the burdens of being a long-standing structure. It sets a reflective mood and invites the other voices to chime in with their own “memories within the walls”.

Voice 2. The Journalist: In a crisp, inquisitive tone, the Journalist provides context and inquiry. They introduce themselves as if writing a story about McMahon Hall for the campus paper, which frames their interjections. They rely on factual details: that McMahon’s Brutalist design was controversial, that over the years it earned nicknames like “the Fortress” due to its austere appearance, and even that infamous serial killer Ted Bundy once purportedly prowled its halls (a local legend adding a dark tinge to its history). Their segments ground the narrative with real-world context and critical perspective, ensuring the story remains tethered to heritage facts and debates.

Voice 3. The Concrete: With a low, resonant delivery, the Concrete speaks as the very material of McMahon Hall. It muses about the tactile experiences of being a building’s fabric: enduring decades of rain and Seattle dampness, feeling the daily vibrations of footsteps and laughter, the silence of summers when students depart. The Concrete’s monologue is poetic and a bit melancholic. It also shares a sense of pride in the hall’s structural strength, boasting that it withstands every storm unyieldingly. The Concrete’s viewpoint adds a deep sense of time and material continuity to the narrative, giving voice to the inanimate endurance of the hall.

Voice 4. The Bird: A crow perched on the walls of McMahon Hall “speaks” in a light, lilting voice with an almost sing-song cadence. The Bird’s perspective is whimsical and ephemeral. It talks about how the hall looks from above (Figure 5), describing the geometric pattern of the Building as a “bloody, grey boxy monstrosity”. The Bird playfully recounts an event with breathless excitement, detailing a subtle, audacious maneuver that allowed it to snag a whole bag of chips, a “glorious, salty, carbohydrate-rich victory”. It laughs at the European visitors for leaving “unsupervised culinary treasures” for the taking on the terrace of McMahon Hall. The Bird’s monologue complements the Concrete’s long-term perspective with a more fleeting, moment-to-moment awareness.

Voice 5. The Architect: In a measured, thoughtful tone, accompanied by the familiar tune of an architecture docuseries, the Architect introduces McMahon Hall as a “behemoth of Brutalist architecture” that both inspires and frustrates. The voice first describes the “majestic grandeur” of the façade with its imposing bulk and cold, gray concrete. It notes how the material absorbs and reflects light, creating an “aura of solidity and permanence” that is quintessentially Brutalist. Next, the Architect turns to the entrance, describing the narrow doorway leading to a cavernous interior as a design that prioritizes functionality over aesthetics, an approach the Architect admires for its utilitarian ethos. The voice acknowledges the “functional efficiency” of this design, though it admits that it sacrifices “visual interest”. The monologue then turns to the Building’s less-than-stellar relationship with its environment. The speaker, an architect who values contextualism, finds the lack of regard for the surrounding architecture and pedestrian flow to be “jarring”. The segment (and the entire narration) concludes by summarizing McMahon Hall as representing both the “triumphs and pitfalls” of the Brutalist movement. It praises the Building’s commitment to raw concrete and function as a testament to modernist ideals, but also admits that even celebrated examples carry risks. The Architect ultimately sees McMahon Hall as a “magnificent structure” that reflects humanity’s desire to create spaces that honor the “raw materiality” of the world. The video concludes with a zoomed-out 3D image of McMahon Hall and its surroundings (Figure 6).

The polyvocal narrative was presented in an intimate exhibition setting, featuring a film projected on a screen accompanied by spatial audio. The audio–video narrative was screened as a fixed-duration film with a ‘captive audience’; however, an alternative viewing situation could present the material on a loop, or in an installation format with additional contextual artifacts. The ambient atmosphere was intentionally crafted to immerse viewers in the distinct perspectives of the story. The audience was a diverse group, including local artists, academic professors, PhD students, and researchers from several partner universities from the EpisTeaM project, spanning North America, Europe, and South America. Following the presentation, an informal, open-ended discussion with the audience was held; no formal survey was conducted to gather responses.

The viewer response was positive, with many reporting that they found the approach to be both novel and affecting. The use of multiple, distinct voices created a rich tapestry of impressions that moved beyond a traditional, single-narrator documentary. This creative choice transformed the viewing experience from a passive observation into an active, emotional engagement with the subject.

The most consistent feedback was the feeling that the narrative made them perceive the Building as almost alive or, at the very least, in a more personal and intimate manner. Viewers felt that they were not simply learning about a building but were instead introduced to a character with its own hopes, frustrations, and quiet observations. This opened a door for a new appreciation for the Building’s role as a witness to history and a guardian of countless personal stories, altering their relationship to the physical space itself.

The presentation also sparked critical discussions, with some questions regarding the historical fidelity of the polyvocal approach. This method can blur the line between fiction and history, which is a valid point that highlights the careful management required for heritage interpretation. Other questions focused on the potential for AI “hallucinations”, which were reduced by using knowledge stacks and generating the text offline. The authors also addressed the issue of factual exaggerations and pompous vocabulary by using careful prompting and curation. Finally, some audience members were curious about the future possibility of an AI dynamically generating these stories for any building and what that might mean for the roles of historians and interpreters—a question that remains open.

The polyvocal narrative of McMahon Hall stands as an example of how one might re-contextualize a Brutalist building, often criticized for its coldness, into a vessel of warm, multi-layered memory.

5. Discussion

The experiment described above opens several discussions from a technological, methodological, and theoretical point of view. One clear outcome is evidence that a polyvocal, machine-assisted narrative approach can indeed enrich the interpretation and the perception of the built environment. By giving McMahon Hall multiple voices, the project moves beyond a monolithic historical account and instead presents the Building as a convergence of different narratives (designer, material, observer, etc.). This multi-perspectival view aligns with contemporary heritage theory that emphasizes inclusivity and diversity of narratives [

10,

12]. This was operationalized by literally dramatizing those diverse narratives. A building, often perceived as an inert object, was re-imagined as an active participant with its own “voice”, while also being contextualized by external voices. This proved effective in humanizing the Building and making its history more relatable. It validates the idea that polyvocal storytelling can capture intangible heritage values (such as atmosphere, personal memories, and unofficial stories) that standard documentation might miss. In practical terms, this approach could be adapted for other heritage sites. For example, an ancient ruin could have voices of a past inhabitant, an archaeologist, the stone material, and a tourist together painting a richer picture of its significance. The use of generative AI was crucial here because manually writing and coordinating such multi-voice narratives would be labor-intensive, whereas AI helped to rapidly prototype and iterate voices.

The narrative was explicitly speculative yet grounded in an authentic context. This raises the question of how to balance creative storytelling with factual accuracy in heritage interpretation. Our approach was to distinguish layers of “truth”: the factual baseline (dates, events, physical features of the building) versus the interpretive imaginative layer (the inner thoughts of a building, a material, a bird, etc.). Importantly, transparency with the audience about the nature of the piece was maintained. In the installation, a short explanatory introduction was provided, making clear that AI was used to create fictional voices that interpret McMahon Hall’s history, much as a museum might contextualize a historical dramatization. In any published format (such as an online video of the narrative), accompanying text likewise will clarify that what is presented is an imaginative narrative built upon real history.

From a research perspective, the speculative element is not a drawback but rather a means to examine how knowledge about a site is constructed. This approach can show what imagination can reveal that pure analysis might overlook. By connecting to the audience’s emotions and cultural context, it can highlight real societal issues and human experiences connected to the site. In our case, “letting the building speak” surfaced certain poignant themes that one might not think to include in a standard documentary, such as the loneliness a building might feel during quiet summers, or the pride (and burden) of witnessing decades of change. Speculation here served to personalize and dramatize the experience of being architecture. This aligns with epistemological views that understanding a place can be enriched by multiple ways of knowing, including narrative and imaginative ones alongside analytical ones. This approach thus complements, rather than replaces, traditional scholarship. In an academic or pedagogical setting, one could envision pairing a factual lecture on McMahon Hall’s design and history with this narrative as a creative interpretation exercise, then discussing the interplay between fact and fiction.

Another discussion point is how remote sensing data (the photogrammetric model) contributed to the project beyond providing interesting visuals. In the experiment, the 3D model’s role was foundational. It ensured that the visual and spatial authenticity of the representation was intact, which in turn gave the AI narratives a concrete anchor. This is another way of using remote sensing data: not just for measurement or preservation, but as a stimulus for creative interpretation. Essentially, the 3D model became a canvas for storytelling. Using the model in the presentation meant that viewers could actually see the Building as the voices described it, lending a sense of embodied storytelling. The accuracy of the model added credibility as well; audiences notice if a representation is off. For instance, had a generic Brutalist building image been used, those familiar with McMahon Hall might have been less convinced, even if unconsciously. Photogrammetry literally provided the Building’s digital footprint. In addition, from a preservation angle, all the narrative content created could be seen as metadata enriching that photogrammetric dataset. In digital heritage repositories, one might imagine uploading not just the 3D scan of a building, but also oral histories or AI-generated interpretive narratives attached to it. This multi-layer documentation could be valuable for future generations trying to understand both the physical and cultural significance of a site. However, Champion and Rahaman’s “Survey of 3D Digital Heritage Repositories and Platforms” (2020), which examines existing platforms for hosting 3D cultural heritage models, identifies several limitations in current practices [123]. The idea of enriching a photogrammetric 3D model with narrative content (oral histories, AI-generated interpretations, etc.) directly addresses some gaps noted in their survey (e.g., models hosted as stand-alone meshes with minimal metadata and context, limited reuse, lack of documentation, and lack of a preservation strategy). By hosting not just the raw 3D scan but also oral histories, interpretive narratives, and other contextual layers, a digital heritage repository can help preserve both the physical and cultural significance of a site.
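As a purely illustrative sketch of such multi-layer documentation, the following Python fragment shows one way a repository record might attach narrative layers to a 3D model while keeping the factual and speculative layers distinguishable. The class names, field names, layer categories, and file name are all assumptions made for this example; they do not describe any existing repository schema or the data model used in the experiment.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List

# Hypothetical layer categories; a real repository would define its own vocabulary.
ALLOWED_KINDS = {"factual", "oral_history", "ai_speculative"}


@dataclass
class NarrativeLayer:
    voice: str                       # who is "speaking", e.g. "Building"
    kind: str                        # one of ALLOWED_KINDS
    text: str                        # the narrative content itself
    reviewed_by_human: bool = False  # whether a person has curated this layer


@dataclass
class HeritageRecord:
    site_name: str
    model_file: str                  # path to the 3D mesh (hypothetical)
    layers: List[NarrativeLayer] = field(default_factory=list)

    def add_layer(self, layer: NarrativeLayer) -> None:
        # Reject unknown categories so speculative content is always labeled.
        if layer.kind not in ALLOWED_KINDS:
            raise ValueError(f"unknown layer kind: {layer.kind!r}")
        self.layers.append(layer)

    def layers_of_kind(self, kind: str) -> List[NarrativeLayer]:
        # Let a viewer show, for instance, only the factual baseline.
        return [layer for layer in self.layers if layer.kind == kind]

    def to_json(self) -> str:
        # Serialize the model reference and all layers as one metadata document.
        return json.dumps(asdict(self), indent=2)
```

Keeping an explicit `kind` on every layer is one possible way to carry the distinction between the factual baseline and the imaginative layer into the archived metadata, so that later users of the dataset can tell the two apart.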

The approach, while innovative, is not without limitations. One major challenge is the quality and bias of AI output. The AI sometimes generated clichés or irrelevant content (e.g., in an early draft, the Journalist’s voice started talking about an unrelated campus building, a clear hallucination). These instances had to be filtered out through human review. The experiment relied heavily on human judgment to curate AI outputs; fully autonomous generation would likely produce a less coherent or reliable narrative. This underscores that AI is a tool, not an author: the creative vision and critical review by humans remain central. A second limitation is that the narrative currently reflects the authors’ own choices and perspectives. The voices chosen (architect, building, etc.) were our way of representing diversity, but many voices were not included (e.g., a student, a maintenance worker, a neighboring building). In principle, one could extend this method to include even more voices, perhaps by crowdsourcing memories from the community, but there is a scalability issue: too many voices might overwhelm the narrative, so finding the right balance is key.

Ethically, the potential for misrepresenting history had to be considered. Even when care is taken to avoid factual errors, there is always a risk that some audience members will mistake imaginative elements for fact if they are not clearly informed. This is especially sensitive because real people (alumni, staff) may have their own connections to McMahon Hall and might object if they felt the narrative mischaracterized the place. The emphasis on transparency and on framing the piece as an artistic interpretation was intended to mitigate this risk. As a side note, the few AI-generated images and short videos, intentionally inserted in the final video to evoke an imagined atmosphere of students in the Building, were immediately identified as such by the audience: it was summer break at the time of the Summer School, so these scenes did not reflect the reality of the Building.

The work also has implications for how knowledge of architecture and remote sensing might expand. Typically, remote sensing yields quantitative data (dimensions, materials, changes over time) that contribute to an objective documentation of a site. By introducing narrative and AI, the project blends qualitative, subjective knowledge (stories, interpretations) into that framework. It suggests an epistemological model in which a building’s “truth” lies not just in its measurable form, but also in the assemblage of narratives it inspires. This resonates with certain postmodern perspectives in architecture that hold that a building’s meaning is co-created by observers and context. While AI-generated narratives must be used judiciously to avoid misinformation, the project shows that they can add value by capturing emotional and experiential dimensions of heritage. In this case, because human creativity guided the AI output, the trap of AI merely churning out generic content was avoided [102]. Instead, AI was harnessed to extend human creative reach.

Another point is the notion of hyperreality in architecture mentioned earlier [119]. The experiment consciously tried to tether the narrative to reality to prevent it from drifting into pure simulation detached from meaning. Yet the very act of giving a building a voice is in a sense a hyperreal gesture: buildings do not talk, and the illusion that this one does was deliberately created. The positive feedback indicates that people found meaning in that illusion, but philosophically, it is worth considering how far such practices could go.

This project opens several avenues for future exploration. Technologically, one could integrate real-time interaction, allowing users to ask the building’s AI persona questions and receive answers. With advanced language models, one could build a system in which the building’s voice is not pre-scripted but can converse, drawing on a knowledge base of the building’s history.
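To make the idea of a persona that draws on a knowledge base more concrete, the sketch below shows a deliberately simple grounding step in Python: a question is matched against stored entries by keyword overlap, and the persona declines to answer when nothing matches rather than inventing a reply. This is only an assumption about how such a system could be organized, not a description of the experiment; a real system would pass the retrieved entry to a language model, and the function names, the stopword list, and any knowledge-base entries are hypothetical placeholders.

```python
import re
from typing import List, Optional

# Small illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "of", "in", "on",
             "what", "who", "when", "where", "how", "did", "do", "you", "your"}


def tokenize(text: str) -> set:
    # Lowercase the text and keep alphabetic words that are not stopwords.
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def retrieve(question: str, knowledge_base: List[str]) -> Optional[str]:
    # Return the entry sharing the most keywords with the question, or None.
    question_words = tokenize(question)
    best_entry, best_score = None, 0
    for entry in knowledge_base:
        score = len(question_words & tokenize(entry))
        if score > best_score:
            best_entry, best_score = entry, score
    return best_entry


def answer(question: str, knowledge_base: List[str]) -> str:
    # Ground the persona's reply in a stored entry; otherwise admit ignorance.
    fact = retrieve(question, knowledge_base)
    if fact is None:
        return "I do not have a record of that."
    return f"As far as my records show: {fact}"
```

The explicit fallback reflects the concern raised earlier about hallucinated content: in this sketch, the persona only speaks about what the curated knowledge base contains.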

From the remote sensing angle, an interesting extension would be to incorporate change over time. Retrospective photogrammetry from historical images is limited by insufficient image overlap and missing photographic metadata, so the better approach is virtual reconstruction, which synthesizes historical photos with other sources such as plans and drawings. The AI narrative could then reference visual changes and link them to specific moments in time, powerfully showing how environments evolve.

Another direction is evaluating the educational impact of such narratives. Studies could be designed to test whether students or visitors retain more information about a building’s history when it is presented in this polyvocal, AI-assisted way than through a traditional lecture or plaque. The hypothesis is that the story format (especially with emotional content) would enhance memory retention, as narrative is known to aid information recall [119]. This crosses into cognitive psychology and museum studies, suggesting a multidisciplinary research opportunity.

Lastly, it would be worthwhile to explore community co-creation. In this project, the team (the authors) curated the voices and content. One can, however, envision a participatory workshop in which former residents of a building are invited to contribute their stories or sentiments, which are then incorporated or even used to fine-tune the AI’s output. That would ground the fictional voices in real oral histories, blending community-sourced data with AI creativity. Such an approach could empower communities to see their experiences reflected in new media and possibly increase acceptance of these AI interventions as authentic.

6. Conclusions

This research set out to investigate how speculative memory and machine augmentation could reframe the way we engage with architectural heritage, using the case of a Brutalist campus building. The article showcases a workflow that combines the strengths of remote sensing (for precise digital capture of physical reality) with generative AI (for producing rich narrative content) to produce an innovative polyvocal narrative experience. It contributes to several domains:

Remote Sensing and Digital Heritage: The experiment demonstrates the application of photogrammetric models beyond documentation as platforms for storytelling. This suggests a broadened scope for remote sensing in heritage, one that includes interpretation and not just recording. High-fidelity 3D models paired with narratives could become a new paradigm for digital heritage presentations.

AI and the Humanities: The study provides a concrete example of AI as a creative tool in the heritage domain (specifically in heritage interpretation and architectural history). The project illustrates that AI can help articulate multiple perspectives (even non-human ones) in a cohesive narrative, something that would have been considerably harder to achieve manually. It is a step toward human-AI co-authorship in cultural content, underscoring the importance of guiding AI with domain knowledge and critical insight.

Epistemology of the Built Environment: The study challenges traditional epistemic boundaries by engaging voices such as a building and a material, suggesting that understanding a place can be augmented by fictional yet insightful narratives. While these do not replace factual analysis, they enrich the mosaic of understanding. The study also highlights the need to critically manage AI’s contributions so that they add value and do not mislead.

Methodological Innovation: The interdisciplinary method outlined (drone survey, 3D modeling, AI narrative generation, voice synthesis, and multimedia integration) is in itself a contribution. It can be replicated or adapted for other sites or projects aiming to blend technical and creative practices. The challenges encountered and the solutions adopted were documented and can guide future practitioners doing similar work.

In conclusion, the polyvocal rendering of McMahon Hall stands as a proof-of-concept that even the most stoic Brutalist structure can “come alive” through a fusion of bytes and imagination. By hearing a building’s story from multiple angles, not only is factual knowledge of its history and design gained, but also a felt understanding of its presence and role over time. This kind of empathetic connection to architecture is particularly timely as mid-century Brutalist buildings age; many face demolition due to public misunderstanding or aesthetic disfavor. Perhaps giving them a voice can sway hearts and minds towards appreciation, or at least preservation of their stories if not their forms.

Ultimately, this work invites a reconsideration of the phrase “if walls could talk”. With AI, they indeed can, and what they have to say can deepen the perception and appreciation of inhabited spaces. McMahon Hall’s speculative voices represent a narrative modality at the crossroads of remote sensing precision and machine creativity.