Abstract

Today, Web3D technologies and the rise of new standards, combined with faster browsers and better hardware integration, allow the creation of engaging and interactive web applications that target the field of cultural heritage. Functional, accessible, and expressive approaches to discovering the past starting from the present (or vice-versa) are generally a strong requirement. Cultural heritage artifacts, decorated walls, etc. can be considered as palimpsests with a stratification of different actions over time (modifications, restorations, or even reconstruction of the original artifact). The details of such an articulated cultural record can be difficult to distinguish and communicate visually, while entire archaeological sites often exhibit profound changes in terms of shape and function due to human activities over time. The web offers an incredible opportunity to present and communicate enriched 3D content using common web browsers, although it raises additional challenges. We present an interactive 4D technique called “Temporal Lensing”, which is suitable for online multi-temporal virtual environments and offers an expressive, accessible, and effective way to locally peek into the past (or into the future) by targeting interactive Web3D applications, including those leveraging recent standards, such as WebXR (immersive VR on the web). This technique extends previous approaches and presents different contributions, including (1) a volumetric, temporal, and interactive lens approach; (2) complete decoupling of the involved 3D representations from the runtime perspective; (3) a wide range of applications in terms of size (from small artifacts to entire archaeological sites); (4) cross-device scalability of the interaction model (mobile devices, multi-touch screens, kiosks, and immersive VR); and (5) simplicity of use. We implemented and developed the described technique on top of an open-source framework for interactive 3D presentation of cultural heritage content on the web.
We show and discuss applications and results related to three case studies, as well as integrations of the temporal lensing with different input interfaces for dynamically interacting with its parameters. We also assessed the technique within a public event where a remote web application was deployed on tablets and smartphones, without any installation required by visitors. We discuss the implications of temporal lensing, its scalability from small to large virtual contexts, and its versatility for a wide range of interactive 3D applications.

1. Introduction

All 3D visualization projects that target cultural heritage require a scientific approach in order to obtain a consistent and thorough reconstructive model of the past and to avoid the ambiguity of the “black box effect” [1]. As a result of the scientific debate on virtual reconstruction, numerous projects and documents aimed at creating efficient guidelines and good practices in the field of scientific visualization of the past have been produced over the years, such as the London Charter (www.londoncharter.org, accessed on 29 April 2021) and the Principles of Seville [2]. The London Charter is one of the most internationally recognized documents, and it defines a set of principles of computer-based visualization to ensure intellectual and technical integrity, reliability, documentation, sustainability, and accessibility [3]. In particular, it points out the importance of the knowledge claim: it states that any computer-based visualization of heritage should clearly declare its identity (e.g., 3D models of the existing state, evidence-based restoration, or hypothetical reconstruction) and the extent and nature of any factual uncertainty. “Traditional” and consolidated visualization standards in the field of cultural heritage (2D graphical outputs such as footprints, sections, etc.) target formal ways to communicate the archaeological record, focusing on metrically correct representations. This approach has several limitations, not only in the lack of interactivity (typical of analog approaches), but also in the extent and resolution of the representation, since layouts place several images on the same sheet of paper. When it comes to proposing solutions for comparative visualization (for instance, the basic dichotomy of 3D survey versus reconstructive hypothesis), they rely on simple synoptical juxtaposition of images or, in rare cases, on the use of transparent sheet layers to add a reconstruction on top of a photo of the site as it appears today.
Within interactive 3D visualization of cultural heritage content, functional, engaging, accessible, and expressive approaches to discovering the past starting from the present (or vice-versa) are generally a strong requirement. Artifacts, decorated walls, etc. can be considered as palimpsests with a stratification of different actions over time (modifications, restorations, or even reconstruction of the original artifact). The details of such an articulated cultural record can be difficult to distinguish and communicate, while entire archaeological sites often exhibit profound changes in terms of shape and function due to human activities over time (destruction, reconstruction, change in the building’s end use, etc.). Virtual interactive lenses [4] represent an elegant approach to providing alternative visual representations for selected or specific regions of interest. They define a parametrizable selection according to which a base visualization (or context) is temporarily altered. Such approaches are highly valued in the realm of interactive visualization due to the simplicity of their concept, their power, and their versatility. Interactive lens techniques are, in general, strongly coupled with the base visualization system, which raises challenges when transferring existing techniques to specific problems. An important goal in research is, in fact, to develop concepts and methods that facilitate the “implement once and reuse many times” development of such lens techniques. This is the case, for instance, with the implementation of such techniques for the web through WebGL/HTML5 technologies [5]. Additional performance challenges arise on complex 3D scenarios due to the limitations of web browsers, which can undermine the interactive aspects of the lens.
We present and discuss a scalable technique called “temporal lensing” that allows users to interactively compare—in specific selected areas—manufactured artifacts or items, buildings, or large archaeological sites in their current state of preservation along with their hypothetical reconstruction. This tool does not give access to data or paradata related to the reconstructive process, as it is designed to allow the general public an immediate reading and understanding of archaeological contexts. Such a metaphor, in fact, offers users dynamic and local time shifts between present and past. The technique differs from other approaches where the interactive lens is conceived as 2D and see-through; our approach instead operates in a volumetric manner, offering a broader public a powerful, accessible, and expressive 3D tool for discovering the past (or the future).

2. Related Work

Regarding interactive lenses for visualization, extensive surveys in the literature [4] have highlighted their elegance, flexibility, and simplicity when applied to several fields and case studies. This work also offers a robust conceptual model for designing and implementing interactive lenses by formalizing, in particular, a selection (the region affected by the lens) and a lens function (how the visualization is altered). There are, in fact, several past projects and studies developed on top of these building blocks to create interactive lenses for 2D and 3D interaction models. Past works, such as the “Temporal Magic Lens” [6], introduced a 2D lens technique that considers the time dimension to locally alter video streams for analysis by combining temporal and spatial aspects of a video-based query and offering a unified presentation to the user. Magic lens techniques have also been applied in the literature in Augmented Reality (AR), for instance, to inspect and discover portions of physical objects using a hand-held flexible sheet [7]. Within the field of cultural heritage, previous research already explored expressive and accessible interactive lens models in order to discover the past (or the future) in a specific location of a physical or virtual space. Regarding approaches for casual visitors and non-professional users, the “revealing flashlight” [8], on display during the Keys to Rome exhibition (http://keys2rome.eu/, accessed on 29 April 2021) at the Trajan Markets in 2015, represents an excellent example of a spatial augmented reality technique that allows one to locally reveal the details of physical museum artifacts from a specific point of view. The projection mapping in this case can be exploited by users to locally decipher inscriptions on eroded stones or to interactively discover the geometric details and meta-information of cultural artifacts, with proven effectiveness, ease of use, and ease of learning.

Regarding the interactive exploration of cartographic heritage for public exhibits, a previous work [9] exploited the interactive lens approach with temporal alteration to visually communicate old 2D maps to visitors in an efficient manner. Such datasets present dense and precious information that is very difficult to retrieve and study without special techniques, thus offering visitors a very simple, immediate, and efficient interface. “TangibleRings” [10] is another example of a 2D interactive lens concept applied to tangible interfaces, offering visitors an intuitive and haptic interaction to discover different information layers. Within immersive virtual reality (VR) analytics, through consumer-level head-mounted displays (HMDs), recent works investigated applications of interactive lenses that exploited a virtual 3D space [11]. Due to the “see-through” concept offered by the interaction model, different lenses naturally lend themselves to the combination of their individual effects and integrations with six-DoF controllers (hand-held controllers with six degrees of freedom) to naturally control the final effect. Regarding virtual representations of archaeological sites or artifacts, previous research investigated volumetric techniques that allow professionals to locally “carve” space and time in multi-temporal (4D) virtual environments [12] that are supported by formal languages [13] to keep track of virtual reconstruction processes. These techniques fully and interactively operate in a virtual 3D context, thus enabling the adoption of advanced 3D interactions and 3D user interfaces when designing these tools. These interactive techniques are generally employed within ad hoc, desktop-based applications and tools; what about the application of the 3D lens concept to web-based visualization? 
We have witnessed large advancements regarding the presentation and dissemination of interactive 3D content on desktop and mobile web browsers (Google Chrome, Mozilla Firefox, etc.). A prominent example of interactive 3D presentation on the web is offered by the SketchFab platform (https://sketchfab.com/, accessed on 29 April 2021) to present and inspect 3D models through a normal web page. Nowadays, web technologies offer improved hardware integration; thus, a web page (once it has obtained a user’s permissions) is able to access the built-in microphones and cameras, GPS, compass, and much more. Such integrations, especially on mobile web browsers, are very appealing for web applications that target cultural heritage because they do not force the user to install any additional software on their device. Past research projects have already exploited these technologies to present large interpreted or reconstructed 3D landscapes [14], browser-based, city-scale 4D visualization based on historical images [15], augmented museum collections using device orientation [16], or gamified 3D experiences [17,18]. Regarding immersive VR, since the introduction of the first open specification for WebVR [19], it has become possible to consume immersive experiences by using common web browsers and consumer-level HMDs (including cardboard). The API recently evolved into WebXR (https://immersiveweb.dev/, accessed on 29 April 2021) [20]; the new open specification has the goal of unifying the worlds of VR and AR, in combination with a wide range of user inputs (e.g., voice, gestures), thus offering users options for interacting with virtual environments on the web [21]. Immersive computing raises additional challenges and strict requirements for low-latency communication to deliver a consistent and smooth experience. These must be taken into strong consideration, especially when designing interactive techniques for web-based tools or applications dealing with the exploration of the past.

3. Temporal Lensing

3.1. Description of the Technique

The main concept of the temporal lensing technique is based on local, volumetric alteration of time in a virtual 3D space. Thus, the model extends 2D lens techniques to 3D by leveraging a radial alteration of time in a given virtual location. The proposed technique operates on two different scene-graphs:

- Base scene: a base 3D scene (or reference);

- Target scene: a target 3D scene (representing the base scene in a different time period).

The base and target scenes may represent an archaeological site, a building, or a small artifact in two different time periods. Following the conceptual model and taxonomy described by Tominski et al. [4], our technique implements the selection (the region affected by the lens) as a spherical volume defined by (1) a 3D application point c and (2) an influence radius r. The lens function (how the visualization is altered) operates in a 4D space where both time periods coexist (base and target); outside the volume of interest, the target scene is hidden and the base scene is shown, while the exact opposite happens inside (see Figure 1). Thus, depending on the location and radius of the lens, a temporally altered version of the reference scene is shown inside the volume (sphere) of interest. By definition, parameters of the interactive lens can be manipulated at runtime, so users may dynamically change both the location and radial influence of the temporal lens in any given 3D scene.

Figure 1.

Unaffected visualization where only the base scene is shown (A). A temporal lens using a 7.5 m radius (B) and 26.5 m radius (C) allows the discovery of a past period inside the selection.

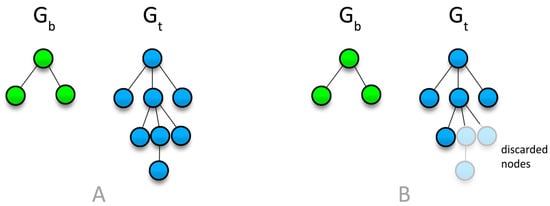

A crucial aspect of the technique is the complete decoupling of the base and target scenes; the two may, in fact, exhibit profound changes due to time (destruction, reconstruction, changes in the building’s end use, etc.), thus presenting different interactive visualization requirements in terms of scene-graph organization, textures and geometry, levels of detail, procedural rules, etc. (see, for instance, the samples in Figure 2, left). This separation allows a wide range of usage scenarios going well beyond basic texture alterations; it offers users a volumetric peek into the past (or future) in a local area of interest, potentially discovering a completely different shape, color, or material. For interactive visualization purposes, the lens function is generally realized on the graphics hardware using GLSL (OpenGL Shading Language) fragment shaders, which are also available via WebGL, to radially discard the scene portions that we do not intend to show.

Figure 2.

Comparison of sample base and target scene-graphs (A); depending on temporal lens parameters, specific nodes can be safely discarded at runtime to improve performance (B).

From a performance perspective, one observation regarding this 3D technique is that the volumetric selection where the temporal lens is applied (visualization of the target scene) is usually smaller than the reference scene. When dealing with scene-graphs, we can thus employ hierarchical culling techniques to discard target nodes outside the radial selection because they will not contribute to the final visualization in any case (see the sample in Figure 2, right).

The inverse is also applicable for the base scene (culling nodes inside the lens selection). This can offer solid performance boosts at runtime, especially when using the technique in web-based visualization through standard WebGL (or through WebXR presentation). Due to the volumetric approach of the technique, it can be easily adapted and deployed in immersive VR sessions, allowing users to interact with the location of the temporal lens and its influence radius. The next section will describe a Web3D implementation of the technique, also including WebXR applications (immersive VR).
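The two culling rules described above can be illustrated with a minimal JavaScript sketch. It assumes that every scene-graph node carries a bounding sphere enclosing all of its descendants; the names `classifyNode`, `cullTargetNode`, `cullBaseNode`, and `collectVisible` are hypothetical and are not taken from the framework used in this work.

```javascript
// Euclidean distance between two points {x, y, z}.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Classify a node's bounding sphere against the spherical lens:
// "outside" = no overlap, "inside" = fully contained, "crossing" = partial.
function classifyNode(bound, lens) {
  const d = distance(bound.center, lens.center);
  if (d >= lens.radius + bound.radius) return "outside";
  if (d + bound.radius <= lens.radius) return "inside";
  return "crossing";
}

// Target-scene content is only shown inside the lens,
// so a target node fully outside the lens can be skipped.
function cullTargetNode(bound, lens) {
  return classifyNode(bound, lens) === "outside";
}

// Base-scene content is only shown outside the lens,
// so a base node fully inside the lens can be skipped.
function cullBaseNode(bound, lens) {
  return classifyNode(bound, lens) === "inside";
}

// Hierarchical traversal: a culled node prunes its whole subtree
// (valid only under the enclosing-bound assumption stated above).
function collectVisible(node, lens, cullFn, out = []) {
  if (cullFn(node.bound, lens)) return out;
  if (node.children && node.children.length > 0) {
    for (const child of node.children) collectVisible(child, lens, cullFn, out);
  } else {
    out.push(node.name);
  }
  return out;
}
```

Nodes that cross the lens boundary are kept in both scene-graphs, and the per-fragment discard then resolves the exact spherical cut.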

3.2. Web Implementation

This section describes a web-based implementation of the temporal lensing technique targeting common web browsers, leveraging WebGL and modern standards (WebXR) for immersive VR sessions. As previously introduced, we deal with two separate 3D representations (base and target) of the same scene in two different time periods. Modern JavaScript libraries, such as Three.js (https://threejs.org/, accessed on 29 April 2021), Babylon.js (https://www.babylonjs.com/, accessed on 29 April 2021), and many others, offer all of the tools for creating and organizing interactive 3D scenes. In order to implement and assess the temporal lensing technique, we used the open-source framework “ATON” by CNR ISPC [14,16,17,18,22]. The framework, which is based on Three.js, allows the crafting of scalable, cross-device, and WebXR-compliant web applications that target cultural heritage, and it also provides spatial UI (user interface) components for developing and presenting advanced immersive interfaces.

The temporal lens function was implemented with common GLSL shaders (see the previous section) that were applied to both the base and target scene-graphs to show/discard specific volumetric portions; these allow the user to change the location and radius of the 3D lens at runtime. Regarding the lens selection (affected radial volume), the implemented interaction model for the web allows one to dynamically choose a location on the visible 3D scene (where the temporal lensing is being applied). In order to determine c, the center location of the lens, a query is performed on visible surfaces using a geometry intersection ray. The first problem to face is which graph to query because the base and target scenes may exhibit completely different shapes, volumes, and surfaces. For our current implementation (JavaScript), we adopted the following algorithm:

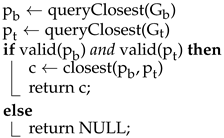

Algorithm 1 queries the geometry of each scene-graph (base and target) for its closest intersection point with the query ray. If both intersection points are found and valid, the temporal lens center (c) is assigned to the one closest to the query ray’s origin; otherwise, the effect is not applied (see Figure 3).

Algorithm 1. Query.
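Since the body of the listing is not reproduced here, the following JavaScript sketch illustrates the selection step as it is described in the text. It is an illustration under assumptions, not the original listing: the hit objects (`{ point, distance }`) are assumed to come from any ray-casting routine, and the function name `pickLensCenter` is hypothetical.

```javascript
// hitBase / hitTarget: closest ray intersection with the base and target
// geometry, each either null (no hit) or { point: {x, y, z}, distance }.
// "distance" is measured from the query ray's origin.
function isValidHit(hit) {
  return (
    hit !== null &&
    hit !== undefined &&
    Number.isFinite(hit.distance) &&
    hit.distance >= 0
  );
}

// Returns the lens center c, or null when the effect should not be applied.
// Following the textual description literally, both hits must be valid;
// falling back to a single valid hit would be a variant, not shown here.
function pickLensCenter(hitBase, hitTarget) {
  if (!isValidHit(hitBase) || !isValidHit(hitTarget)) return null;
  return hitBase.distance <= hitTarget.distance ? hitBase.point : hitTarget.point;
}
```

For example, with a base hit at distance 4 and a target hit at distance 2.5, the lens center would be placed on the target surface.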

Figure 3.

A sample 3D query ray intersecting both the base scene (left; in this case, the present) and the target scene (right; in this case, the past), yielding one intersection point in each as candidate locations for the temporal lens center.

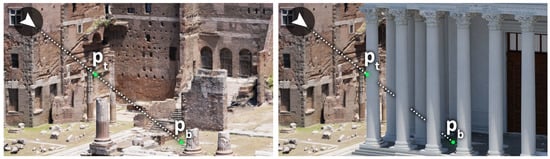

Regarding the visual alteration (the lens function), the steps in Algorithm 2 show an extract of the per-fragment pseudo-code performed on GPU hardware (through GLSL). Two discard routines are applied, respectively, to the base and target scene-graphs (and all their descendants) to properly discard incoming fragments and realize the spherical lens effect. A single fragment, given its world location p, is discarded (hidden) depending on its Euclidean distance from the lens center c (selected in the previous steps) and radius r. Note that such GPU routines completely abstract from the internal complexity of the base and target scene-graphs in terms of geometric detail, texture resolution, or scene hierarchy (for instance, advanced structures can be required to present large, progressive, multi-resolution datasets on the web [14,23,24]). The technique is fully replicable using different WebGL libraries or Web3D frameworks, with or without support for advanced scene-graph features.

Algorithm 2. Fragment pseudo-shaders for the base and target scene-graphs.
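As the listing body is not reproduced here, the sketch below shows one way the two per-fragment routines could be written, based only on the description in the text. The GLSL snippets are held as JavaScript strings, and two plain functions mirror the same test on the CPU so the logic can be checked. The uniform and varying names (`uLensCenter`, `uLensRadius`, `vWorldPos`), the placeholder output color, and the choice to treat the boundary as inside the lens are assumptions of this sketch.

```javascript
// Base scene: fragments inside the lens sphere are hidden.
const BASE_FRAGMENT_GLSL = `
  precision highp float;
  uniform vec3 uLensCenter;   // lens center c (world space)
  uniform float uLensRadius;  // lens radius r
  varying vec3 vWorldPos;     // fragment world location p
  void main() {
    if (distance(vWorldPos, uLensCenter) <= uLensRadius) discard;
    gl_FragColor = vec4(1.0); // placeholder: regular shading goes here
  }`;

// Target scene: fragments outside the lens sphere are hidden.
const TARGET_FRAGMENT_GLSL = `
  precision highp float;
  uniform vec3 uLensCenter;
  uniform float uLensRadius;
  varying vec3 vWorldPos;
  void main() {
    if (distance(vWorldPos, uLensCenter) > uLensRadius) discard;
    gl_FragColor = vec4(1.0); // placeholder: regular shading goes here
  }`;

// CPU mirrors of the two tests, with points given as [x, y, z] arrays.
function dist3(p, c) {
  return Math.hypot(p[0] - c[0], p[1] - c[1], p[2] - c[2]);
}
function discardBaseFragment(p, c, r) {
  return dist3(p, c) <= r;
}
function discardTargetFragment(p, c, r) {
  return dist3(p, c) > r;
}
```

Because the two conditions are exact complements, every world location shows fragments from exactly one of the two scenes.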

Regarding the query routines (see Algorithm 1), big challenges arise due to performance within Web3D contexts, especially when dealing with complex geometries. This issue is even more prominent for immersive VR experiences (through WebXR) because they strictly require low-latency response times in order to deliver a consistent, smooth, and acceptable experience for the final user. This is crucial for allowing the user to interactively alter the lens parameters (mainly the location and radius) at runtime.

In order to overcome this problem, especially in Web3D applications, both the base and target geometries need to be spatially indexed [25]. We use BVH (bounding volume hierarchy) trees, offered by the ATON framework, that allow a web application to query complex geometries very efficiently while maintaining very high frame rates. This solution allows Web3D applications to smoothly use dynamic temporal lensing with different input profiles:

- Touch as the primary input for querying and inspecting locations;

- Mouse as the primary device for querying and inspecting locations;

- HMD using a virtual head or six-DoF controller for interactively querying the 4D space in immersive WebXR sessions (location + direction).
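All of these input profiles depend on ray queries that stay cheap on complex geometry. To illustrate the general idea of BVH-based spatial indexing mentioned above, here is a minimal, self-contained JavaScript sketch. It is not the ATON implementation: primitives are simplified to axis-aligned boxes (`{ id, min, max }`), and the function names are hypothetical.

```javascript
// Smallest axis-aligned box containing all items.
function unionBox(items) {
  const min = [Infinity, Infinity, Infinity];
  const max = [-Infinity, -Infinity, -Infinity];
  for (const it of items) {
    for (let a = 0; a < 3; a++) {
      min[a] = Math.min(min[a], it.min[a]);
      max[a] = Math.max(max[a], it.max[a]);
    }
  }
  return { min, max };
}

// Build the tree by median split along the longest axis.
function buildBVH(items, leafSize = 2) {
  const box = unionBox(items);
  if (items.length <= Math.max(1, leafSize)) return { box, items };
  const extent = [0, 1, 2].map((a) => box.max[a] - box.min[a]);
  const axis = extent.indexOf(Math.max(...extent));
  const sorted = [...items].sort(
    (p, q) => p.min[axis] + p.max[axis] - (q.min[axis] + q.max[axis])
  );
  const mid = sorted.length >> 1;
  return {
    box,
    left: buildBVH(sorted.slice(0, mid), leafSize),
    right: buildBVH(sorted.slice(mid), leafSize),
  };
}

// Slab test: entry distance of the ray into the box, or null on a miss.
function rayBox(origin, dir, box) {
  let tMin = 0;
  let tMax = Infinity;
  for (let a = 0; a < 3; a++) {
    if (dir[a] === 0) {
      if (origin[a] < box.min[a] || origin[a] > box.max[a]) return null;
      continue;
    }
    let t0 = (box.min[a] - origin[a]) / dir[a];
    let t1 = (box.max[a] - origin[a]) / dir[a];
    if (t0 > t1) [t0, t1] = [t1, t0];
    tMin = Math.max(tMin, t0);
    tMax = Math.min(tMax, t1);
    if (tMin > tMax) return null;
  }
  return tMin;
}

// Closest hit along the ray; subtrees whose box is missed are skipped.
function closestHit(node, origin, dir, best = null) {
  const tNode = rayBox(origin, dir, node.box);
  if (tNode === null || (best !== null && tNode > best.distance)) return best;
  if (node.items) {
    for (const it of node.items) {
      const t = rayBox(origin, dir, it);
      if (t !== null && (best === null || t < best.distance)) {
        best = { id: it.id, distance: t };
      }
    }
    return best;
  }
  best = closestHit(node.left, origin, dir, best);
  return closestHit(node.right, origin, dir, best);
}
```

In a real application, the leaves would hold triangles rather than boxes, and one such index would be built for each of the two scene-graphs.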

Such interaction models provide users with a direct interface to discover the past (or the future) using a web browser, without requiring any third-party software installation. The next section will describe, in detail, three case studies that we considered in order to assess the technique and its implementation for the web.

4. Case Studies

In this section, a selection of case studies is presented. Table 1 lists the technical details of the optimized 3D assets employed for each case, including polygon counts and the number and resolution of the textures involved in the Web3D/WebXR presentation. Each case consists of two different scene-graphs, base and target (see Section 3), which are, respectively, the present and past 3D representations.

Table 1.

Details of the 3D assets of the involved case studies, each with two different representations (base and target), optimized for Web3D/WebXR presentation.

4.1. Augustus Forum

The first case study presented is the 3D model of the Forum of Augustus. The model was created in 2018 within the Reveal project (https://cordis.europa.eu/project/id/732599-accessed on 29 April 2021). The project was funded under the European Horizon 2020 Program and supported by Sony Interactive Entertainment; it was born with the aim of using the Sony PlayStation gaming platform as a tool for the dissemination of knowledge of the European historical–artistic–archaeological heritage [26,27].

The project developed a series of tools for guiding video game developers to create engaging experiences that improve the understanding of cultural heritage. The project had two applied games as output: “The chantry” and “A night in the forum”. In the latter, the player could visit the Forum of Augustus in Rome after sunset to discover mysteries and stories of the Roman world. Within the game, two accurate and detailed models were developed (see Figure 4). The former was a digital replica of the archaeological site in its actual state of conservation (2018). The latter was a hypothetical 3D reconstruction of its architecture in the 1st century AD. The reconstruction was developed by employing a large variety of historical and archaeological sources and surveys of the in situ architectures. The models created were used for experimentation in other research projects and were particularly suited for the temporal lensing technique (see Figure 4, bottom). Indeed, the Forum of Augustus is a very important piece of archaeological evidence for Roman history, and its remains are visible to all in the heart of Rome in the archaeological park of the imperial forums. However, the condition of ruin does not allow a clear understanding of the volumes and articulation of the architectural spaces. Therefore, the overlapping of the different models, the past (hypothetical reconstruction) and the present (current state of preservation), allows the user to make a targeted comparison between the two temporal layers.

Figure 4.

Three-dimensional model of the Forum of Augustus in 2018 (base scene, A) and its reconstruction during the 1st century AD from the same view (target scene, B). The temporal lensing (C) was used to interactively discover a portion of the reconstructed portico in real time.

4.2. Domus of Caecilius Iucundus

The house of Caecilius Iucundus is a Roman domus located in the archaeological site of Pompeii (Italy). In 2000, the Swedish Pompeii project (www.pompejiprojektet.se, accessed on 29 April 2021) was initiated by the Swedish Institute in Rome, and since autumn 2010, the research project has been directed by the Department of Archaeology and Ancient History at Lund University. The aim was to record and analyze an entire Pompeian city block, Insula V 1, and to experiment with digital archaeology methods and technologies involving 3D surveys and reconstructions. During the fieldwork activity, the house was completely digitized using laser scanner technology, and the 3D data, along with archaeological and historical sources, were employed to develop different research activities in the area of digital visualization [28]. In particular, 3D simulations of the house were performed in order to discuss and visualize different possible interpretations of the house itself. As in the previous case study, the use of a digital replica of the site as a backdrop for the virtual reconstruction allowed a high level of control of the proposed hypotheses and the possibility of a real-time comparison between models (digital replica and virtual reconstruction) during the interpretation process, thus bringing a significant contribution to the archaeological analysis [29]. The digital replica was textured using manual mapping techniques that started from high-resolution orthophotos. Although semi-automatic alternatives exist, such as multi-photo projection within photogrammetry software like Metashape (https://www.agisoft.com/, accessed on 29 April 2021), this technique allowed a greater coherence of proportions through a nadiral projection of the images of the pictorial apparatus on the optimized geometries (with a considerable reduction of the deformation of the color information due to possible deformities of the optimized geometry with respect to the real object).

The 3D models obtained during the research activities were particularly suitable for temporal lensing experimentation, especially for what concerns the understanding of mosaic and pictorial decorative apparatus. In this case study, although the architectural composition of the living spaces is quite understandable even for non-experts, the decorative apparatus was instead subjected to a rapid degradation, as evidenced by some watercolors of the late 1800s, which show frescoes with vivid colors that have now vanished or are severely altered. Indeed, the tool allows an immediate comparison between the current state of conservation and degradation of colors and decorative motifs and their original appearance and shades (see Figure 5). Furthermore, it allows the users to better perceive the ancient environment and to see details that are no longer visible to the naked eye.

Figure 5.

Temporal lensing to interactively discover the vivid colors of the original decorative apparatus.

4.3. Cerveteri Tomb

The Tomb of Reliefs is an Etruscan underground tomb dating back to the end of the 4th century B.C., discovered in the necropolis of Banditaccia in Cerveteri (Italy) in 1847. It is the only Etruscan tomb that is decorated with stucco reliefs (normally, there are painted frescoes) that represent, hanging on the walls, all of the furnishings that accompanied the deceased on their journey beyond death. The tomb is about 6 m long, while the ceiling is about 2 m high. Given the fragility of the stuccoes, the artistic quality, the fact that it is unique, and its presence inside a UNESCO site, the tomb is kept closed and is visible to the public only through a window in the metal entrance door. The tomb, excavated inside the tufa rock, has a crack that crosses it diagonally. CNR ISPC researchers obtained a very-high-resolution digital replica in 2016 for the purposes of documentation, protection, monitoring, and valorization of the site. The project was funded by the CNR with the scientific cooperation of the SABAP (Soprintendenza Archeologia Belle Arti e Paesaggio per l’Area Metropolitana di Roma, Provincia di Viterbo e Etruria Meridionale). The 3D survey of the tomb was performed using a FARO laser scanner (https://www.faro.com/, accessed on 29 April 2021) with a grid of seven points of view. In the same positions, very-high-resolution equirectangular panoramas (about 50,000 × 25,000 pixels) were acquired. A topographic survey was performed with the total station for the acquisition of removable targets that were included in the photo shooting and laser scanning.

The photogrammetric model was developed from equirectangular images with the Metashape software (https://www.agisoft.com, accessed on 29 April 2021) and was superimposed on the geometric model obtained from the laser scanner’s point cloud. The phase of adding the color information to the latter model was carried out by using 3D Survey Collection (3DSC [30]), a tool specially developed by CNR for the management and reprojection of equirectangular images. The digital replica thus obtained was optimized for real-time use; the geometries were improved and reduced, and the textures were recreated by following the standard metrics ( pixels, each 16 m).

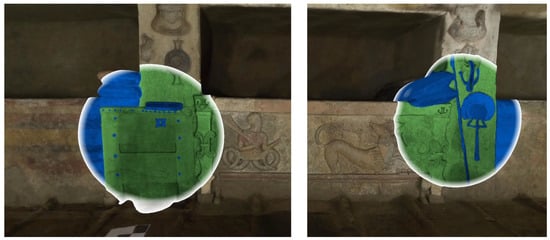

The optimized 3D model was used as the basis for the creation of a reconstructive hypothesis of what the tomb was originally supposed to look like. In particular, a geometric restoration was carried out to integrate fragmentary portions of the stuccoes and a chromatic restoration in the points where the color was lost. For the production of the reconstructive model, a non-destructive method of creating antique materials based on masks and semantics was applied, which mapped the hypothesized pigment (color) and the type of finish of the stucco (smooth, porous, etc.) on the surface. The results of the research activity were two three-dimensional models complete with textures: the digital replica optimized for real time and the reconstructive hypothesis. This case study is particularly suitable for the use of temporal lensing because it helps to understand aspects that are otherwise difficult to understand. Through this technique, not only the difference between what should have been in ancient times and what is today the pictorial decoration, but also the geometrical additions that were necessary to restore the tomb to its former glory, are made immediately evident (see Figure 6). The possibilities of communicating both the pictorial reconstruction and the geometric reconstruction are part of the peculiar and virtuous characteristics of the three-dimensional representation of an archaeological context through temporal lensing.

Figure 6.

Interactive and immersive VR discovery of the tomb using an Oculus Quest HMD and temporal lensing (view-aligned query) to discover original colors through the built-in Oculus web browser.

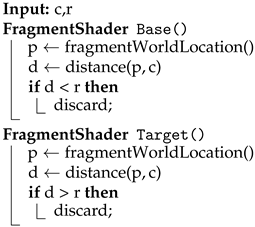

5. Public Installation

In order to perform a technical assessment and a preliminary evaluation of the web implementation of the temporal lensing technique with a broader audience, an interactive installation was developed for the archaeological exhibition “TourismA 2020”, which was held before the COVID-19 pandemic. It is an annual three-day event held in the prestigious location of “Palazzo dei Congressi” in Florence (Italy). The setup consisted of two multi-touch tablets (Samsung Galaxy Tab A 8.0) connected to the internet and arranged on a table (see Figure 7, top strip), which used built-in web browsers to present the interactive web application with temporal lensing, without any additional software installed. Each tablet presented a different virtual scenario to the visitor: the Forum of Augustus in Rome (Italy) and the Caecilius Jucundus house in Pompeii (Italy), as well as their respective 3D reconstructions, which were discussed in the previous section.

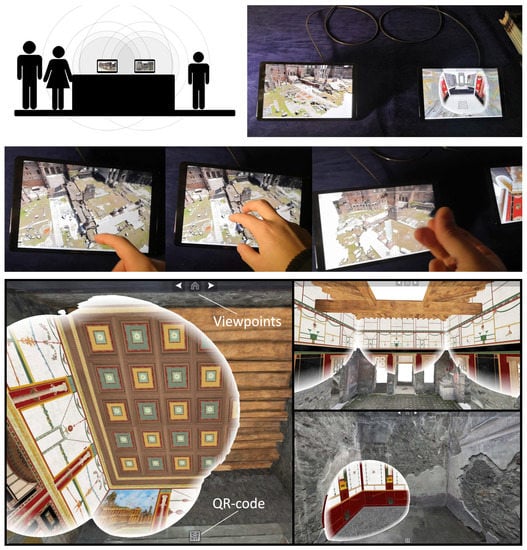

Figure 7.

“TourismA” installation setup (top strip). A visitor interacting with the multi-touch interface to pick a temporal lensing location, panning the camera, and snapping fingers to enlarge the radius, respectively (middle strip). User interface with predefined viewpoints and QR-code buttons (bottom).

The entire interactive web application was served to both tablets in Florence by a public and certified CNR ISPC server node located in Rome (Area della Ricerca di Roma 1) with worldwide reachability. For this setup, visitors could interact with the temporal lensing parameters in two ways: (1) moving the location of the temporal lens through a tap on the screen and (2) enlarging or shrinking the temporal radius using the volume levels captured by the built-in microphone. Visitors could thus move the temporal lens around the Forum of Augustus and the house of Caecilius Iucundus, discovering their corresponding past reconstructions. The user interface presented a simple welcome popup describing the case study and how to interact (move the camera). At the top of the screen, a few buttons allowed the visitor to move to specific viewpoints (predefined camera locations), while at the bottom, a QR code allowed users to “grab” and replicate the entire experience on their smartphones, without requiring any installation (see Figure 7). To control the radius of the temporal lens, the vast majority of the interacting visitors used their voices, finger snapping, or hand clapping as input. Ambient noise from people and surrounding installations occasionally altered the radii in both web applications, which in turn invited passers-by to play with the tablets. In a preliminary assessment, the observations of visitors interviewed after using the devices were, for the vast majority, positive; visitors particularly appreciated the input model that combined touch and sound to discover the past reconstructions of both scenarios (see Supplementary Materials).
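The sound-driven radius control described above can be sketched as follows. This is a minimal Python illustration, not the code of the installation (which ran in the tablets' web browsers): the function names `rms_level` and `update_radius`, the radius bounds, and the smoothing factor are all assumptions introduced here for illustration.

```python
import math
from typing import Sequence


def rms_level(samples: Sequence[float]) -> float:
    """Root-mean-square level of one audio frame (samples assumed in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def update_radius(current: float, level: float,
                  r_min: float = 0.5, r_max: float = 10.0,
                  smoothing: float = 0.2) -> float:
    """Move the lens radius a fraction of the way toward a level-driven target.

    r_min, r_max and smoothing are hypothetical tuning values.
    """
    level = min(max(level, 0.0), 1.0)            # clamp the captured level
    target = r_min + level * (r_max - r_min)     # louder sound -> larger radius
    return current + smoothing * (target - current)


# Example: a quiet frame keeps the lens small, a loud one enlarges it.
radius = 0.5
radius = update_radius(radius, rms_level([0.0, 0.0, 0.0, 0.0]))
radius = update_radius(radius, rms_level([1.0, -1.0, 1.0, -1.0]))
```

The smoothing step is one possible design choice: it keeps short noises such as a finger snap from making the lens jump abruptly, while sustained sound still drives the radius toward its maximum.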

6. Discussion

Temporal lensing is a technique based on local, volumetric alteration of time in a virtual 3D space that offers users an interactive, expressive, and accessible discovery model that targets cultural heritage scenarios. The technique differs from other approaches where the interactive lens is conceived as 2D and see-through; our approach instead operates in a volumetric manner, offering a broader public a powerful 3D tool for discovering the past (or the future). The web and its recent standards offer incredible opportunities for presenting and communicating different temporal layers through expressive and accessible interaction models, without forcing final users to install any third-party software or applications. We assess and describe a web-based implementation of the technique applied to different CH case studies (Section 4), including its application to immersive VR sessions by exploiting the recent WebXR specification.

In the following tables, we first report the performance measurements resulting from the Web3D implementation of temporal lensing (see Section 3.2) on different devices. We recorded the average frame rate (FPS) in three-minute sessions for each case study with different devices: (a) a desktop workstation (CPU: Intel Core i7, GPU: NVIDIA GTX 980), (b) an Oculus Quest 1 (all-in-one HMD), (c) a smartphone (Huawei Honor V10), and (d) the two tablets used for the “TourismA” exhibit (see Section 5). As described in Section 3.2, different input models were employed with different devices to control the temporal lens. More specifically, the following were adopted: mouse-based interaction (a), multi-touch interaction (c, d), and view-aligned query (b).
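As an illustration of the kind of measurement involved, the average frame rate of a session can be derived from per-frame timestamps. The sketch below is an assumption about how such an average may be computed, not a description of the tooling actually used for the tests; the function name `average_fps` is hypothetical.

```python
from typing import Sequence


def average_fps(timestamps_s: Sequence[float]) -> float:
    """Average frames per second over a session.

    timestamps_s holds one timestamp (in seconds) per rendered frame,
    in increasing order. N timestamps delimit N - 1 frame intervals.
    """
    if len(timestamps_s) < 2:
        return 0.0
    elapsed = timestamps_s[-1] - timestamps_s[0]
    if elapsed <= 0.0:
        return 0.0
    return (len(timestamps_s) - 1) / elapsed


# Example: a synthetic three-second capture rendered at a steady 60 FPS.
session = [i / 60 for i in range(181)]
fps = average_fps(session)
```

Dividing the number of frame intervals by the total elapsed time weights slow frames correctly, whereas averaging instantaneous per-frame rates would overstate the result.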

Table 2 shows that the Web3D application maintained good frame rates in all cases throughout each session, partly because BVH trees were adopted for interactive queries (see Section 3.2). For these tests, no radial culling optimization (see Section 3, Figure 2) was employed; thus, the per-fragment GPU routines (see Section 3.2, Algorithm 2) ran on both temporal scene-graphs in their entirety to realize the volumetric lens effect. We performed additional tests using the same devices on a specific case (Caecilius Iucundus domus), this time with the radial culling optimization enabled. For each three-minute session, a fixed lens radius was used; the goal was to assess the impact of different radii and compare the results with the original test. As shown in Table 3, smaller radii (fixed during the whole session) led to small frame rate improvements. As expected, this is a direct result of the per-room segmentation [29] of the scene-graph of the Caecilius Iucundus domus (reconstruction), whose blocks outside the radius are culled.
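The effect of radial culling on a segmented scene-graph can be sketched with a sphere versus bounding-box test: only blocks whose bounds intersect the lens volume need to be processed. The following Python sketch is a simplified illustration under stated assumptions; the `Block` type, the axis-aligned bounding boxes, and the room names and coordinates are hypothetical and are not taken from the actual implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Block:
    """One segment of the reconstruction scene-graph (e.g., a room)."""
    name: str
    box_min: Vec3
    box_max: Vec3


def sphere_intersects_aabb(center: Vec3, radius: float,
                           box_min: Vec3, box_max: Vec3) -> bool:
    """True if the lens sphere touches the axis-aligned box."""
    d2 = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        nearest = min(max(c, lo), hi)   # closest point of the box on this axis
        d2 += (c - nearest) ** 2
    return d2 <= radius * radius


def cull(blocks: List[Block], center: Vec3, radius: float) -> List[Block]:
    """Keep only the blocks that intersect the temporal lens."""
    return [b for b in blocks
            if sphere_intersects_aabb(center, radius, b.box_min, b.box_max)]


# Hypothetical rooms; names and sizes are illustrative only.
rooms = [
    Block("atrium", (0.0, 0.0, 0.0), (4.0, 3.0, 4.0)),
    Block("tablinum", (6.0, 0.0, 0.0), (10.0, 3.0, 4.0)),
]
small = [b.name for b in cull(rooms, (2.0, 1.0, 2.0), 1.0)]
large = [b.name for b in cull(rooms, (2.0, 1.0, 2.0), 5.0)]
```

With the smaller radius, only the room containing the lens survives culling; with the larger one, both rooms must be processed, which is consistent with smaller radii yielding the modest frame rate gains reported.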

Table 2.

Average frame rates (FPS) of the web implementation of temporal lensing on different devices with the presented case studies.

Table 3.

Average frame rates (FPS) using the radial culling optimization with different fixed temporal radii for the Caecilius Iucundus case study.

Temporal lensing can be easily integrated with different input interfaces to exploit the capabilities of modern web browsers and recent standards (e.g., access to built-in microphones, compasses [16], WebXR presentations for cardboard [18], etc.). The web application developed for the public “TourismA” exhibit (see Section 5) was deployed using a public server (with worldwide reachability) to carry out a preliminary assessment of temporal lensing with the general public on two 3D scenarios (the Forum of Augustus in Rome and the house of Caecilius Iucundus in Pompeii). People could interact using a combination of touch and sound to control lens parameters, and through a simple QR code, they could replicate the whole experience on their own or their friends’ devices without any installation. We plan to carry out an extensive evaluation of the integration of the temporal lensing technique with online web applications to reach a larger audience.

The technique was shown to be particularly suited for the virtual restoration of pictorial and mosaic decorations. It ensures the legibility of the decorative elements by re-establishing their formal integrity and showing their possible original appearance, while at the same time keeping the current state of conservation recognizable, in accordance with the principle of guided restoration [31], as in the cases of the house of Caecilius Iucundus and the Tomb of Reliefs. The approach is also well suited for the volumetric discovery of different typologies of 3D representations, such as technical layers that show color-coded materials (see Figure 8) or other maps (preservation status, etc.). The technique can thus be easily employed with different lens functions to visually communicate additional information alongside the pure temporal discovery.

Figure 8.

Alternative lens function (color-coded materials) applied to the Tomb of Reliefs.
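The idea of interchangeable lens functions can be sketched as a per-point selection: inside the lens volume, one layer is sampled; outside it, the present state is shown. The Python sketch below is a CPU-side illustration with a hard lens boundary, not the per-fragment GPU routine referenced in Section 3.2; the function names, layers, and color values are hypothetical.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]
LayerFn = Callable[[Vec3], Color]


def shade(point: Vec3, lens_center: Vec3, lens_radius: float,
          outside: LayerFn, inside: LayerFn) -> Color:
    """Sample the `inside` layer within the lens sphere, `outside` elsewhere."""
    if math.dist(point, lens_center) <= lens_radius:
        return inside(point)
    return outside(point)


# Hypothetical layers; a real application would sample textures instead.
def present_state(p: Vec3) -> Color:
    return (0.6, 0.6, 0.6)          # faded surface as surveyed today


def reconstruction(p: Vec3) -> Color:
    return (0.8, 0.2, 0.1)          # hypothesized original pigment


def material_map(p: Vec3) -> Color:
    # Color-coded materials: green below 1 m, blue above (illustrative rule).
    return (0.0, 1.0, 0.0) if p[1] < 1.0 else (0.0, 0.0, 1.0)


lens = (0.0, 0.0, 0.0)
temporal = shade((0.2, 0.0, 0.0), lens, 1.0, present_state, reconstruction)
technical = shade((0.2, 0.5, 0.0), lens, 1.0, present_state, material_map)
untouched = shade((2.0, 0.0, 0.0), lens, 1.0, present_state, reconstruction)
```

Because the lens function is passed in as a parameter, switching from temporal discovery to a material or preservation map requires no change to the lens logic itself.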

The technique is completely independent of the geometric and texturing complexity of the two temporal representations (and of their scene-graphs), and can thus be replicated on different Web3D frameworks or with different libraries. Furthermore, Algorithms 1 and 2 (described in Section 3.2) can be easily applied to multi-resolution datasets for larger or more complex 3D scenes that require progressive levels of detail.

The temporal lensing technique, as described in Section 3, can also be implemented in desktop game engines (e.g., Unreal Engine 4, Unity, etc.), thus offering a usable and immediate interaction model for high-fidelity real-time 3D applications that deal with multi-temporal CH representations. As shown in the work of Kluge et al. [11], multiple interactive lenses can be combined (overlaid) in immersive VR sessions. Another research direction would be to investigate volumetric combinations of multiple temporal lenses (for instance, by using two different time alterations), thus offering an immediate and natural interaction model. Due to its immediacy and ease of use, we also foresee applications of temporal lensing in gamified online contexts. For instance, the temporary aspect of the lens can be removed and persistent alterations adopted instead (see, for instance, the Pompeii Touch project for 2D; http://www.pompeiitouch.com/en.php, accessed on 29 April 2021), so that users can progressively discover volumetric portions of the 3D scene (even using different periods). This kind of progressive and persistent temporal discovery would suit the application of Web3D/WebXR to games where multiple users on a network have to collaboratively discover a given scenario. We foresee different forms of experimentation in this direction with the adopted web framework, ATON, because it already offers built-in collaborative features.
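The persistent variant mentioned above can be sketched as an accumulating set of revealed volumes. The Python sketch below is speculative and only illustrates the idea; the `DiscoveryMap` class, its methods, and the coverage estimate based on sample points are assumptions, not features of the ATON framework.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class DiscoveryMap:
    """Accumulates the lens volumes that users have revealed so far."""
    spheres: List[Tuple[Vec3, float]] = field(default_factory=list)

    def reveal(self, center: Vec3, radius: float) -> None:
        """Persist one lens placement (possibly from any connected user)."""
        self.spheres.append((center, radius))

    def is_revealed(self, point: Vec3) -> bool:
        """A point stays altered if it lies in any previously revealed sphere."""
        return any(math.dist(point, c) <= r for c, r in self.spheres)

    def coverage(self, samples: Sequence[Vec3]) -> float:
        """Fraction of sample points already discovered (progress estimate)."""
        if not samples:
            return 0.0
        return sum(self.is_revealed(p) for p in samples) / len(samples)


# Example: two placements progressively uncover a hypothetical scene.
scene_samples = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
discovered = DiscoveryMap()
discovered.reveal((0.0, 0.0, 0.0), 1.0)
first = discovered.coverage(scene_samples)
discovered.reveal((2.0, 0.0, 0.0), 0.5)
second = discovered.coverage(scene_samples)
```

In a collaborative session, each user's placements could be appended to a shared map of this kind, and the coverage value could serve as a simple progress indicator for the group.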

Supplementary Materials

A video presentation of the temporal lensing technique is available here: https://www.youtube.com/watch?v=87OInAOkYS0.

Author Contributions

Conceptualization, B.F.; software, B.F.; resources, D.F. and E.D.; data curation, E.D. and D.F.; writing, all. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data and software involved are available through the official ATON framework website, http://osiris.itabc.cnr.it/aton/ (accessed on 29 April 2021), or on GitHub, https://github.com/phoenixbf/aton (accessed on 29 April 2021).

Acknowledgments

The authors would like to thank the Trajan Markets in Rome (Italy) for their support on the reconstructive models of the Forum of Augustus, the Department of Archaeology and Ancient History at Lund University (Sweden), and, in particular, all of the staff of the DarkLab for involving the CNR ISPC in the reconstruction of the house of Caecilius Iucundus and for the fruitful collaboration. For more information about the Swedish Pompeii project, please visit the page https://www.pompejiprojektet.se/ (accessed on 29 April 2021). Furthermore, the authors thank the CNR ISTI, which carried out important work pertaining to the acquisition and post-processing of the digital replica of the house. The authors would like to thank the Soprintendenza Archeologia Belle Arti e Paesaggio per l’Area Metropolitana di Roma, Provincia di Viterbo e Etruria Meridionale (Rita Cosentino, Alfonsina Russo) for their scientific and administrative support for the research project. The authors would also like to thank A. Palombini (CNR ISPC) for the organization of the “Allegro Museo” space in TourismA 2020.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| HMD | Head-mounted display |
| 3/6-DoF | 3/6 degrees of freedom (head-mounted displays and controllers) |

References

1. Demetrescu, E. Virtual reconstruction as a scientific tool. In Digital Research and Education in Architectural Heritage; Springer: Berlin/Heidelberg, Germany, 2017; pp. 102–116.

2. Bendicho, V.M.L.M. International guidelines for virtual archaeology: The Seville principles. In Good Practice in Archaeological Diagnostics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 269–283.

3. Denard, H. Implementing best practice in cultural heritage visualisation: The London charter. In Good Practice in Archaeological Diagnostics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 255–268.

4. Tominski, C.; Gladisch, S.; Kister, U.; Dachselt, R.; Schumann, H. Interactive lenses for visualization: An extended survey. Comput. Graph. Forum 2017, 36, 173–200.

5. Parisi, T. WebGL: Up and Running; O’Reilly Media, Inc.: Newton, MA, USA, 2012.

6. Ryall, K.; Li, Q.; Esenther, A. Temporal magic lens: Combined spatial and temporal query and presentation. In Proceedings of the IFIP Conference on Human-Computer Interaction, Rome, Italy, 12–16 September 2005; pp. 809–822.

7. Looser, J.; Grasset, R.; Billinghurst, M. A 3D flexible and tangible magic lens in augmented reality. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 51–54.

8. Ridel, B.; Reuter, P.; Laviole, J.; Mellado, N.; Couture, N.; Granier, X. The revealing flashlight: Interactive spatial augmented reality for detail exploration of cultural heritage artifacts. J. Comput. Cult. Herit. JOCCH 2014, 7, 1–18.

9. Grammenos, D.; Zabulis, X.; Michel, D.; Argyros, A.A. Augmented Reality Interactive Exhibits in Cartographic Heritage: An implemented case-study open to the general public. e-Perimetron 2011, 6, 57–67.

10. Ebert, A.; Weber, C.; Cernea, D.; Petsch, S. TangibleRings: Nestable circular tangibles. In Proceedings of the CHI’13 Extended Abstracts on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 1617–1622.

11. Kluge, S.; Gladisch, S.; von Lukas, U.F.; Staadt, O.; Tominski, C. Virtual Lenses as Embodied Tools for Immersive Analytics. arXiv 2019, arXiv:1911.10044.

12. Fanini, B.; Demetrescu, E. Carving Time and Space: A Mutual Stimulation of IT and Archaeology to Craft Multidimensional VR Data-Inspection. In Proceedings of the International and Interdisciplinary Conference on Digital Environments for Education, Arts and Heritage, Brixen, Italy, 5–6 July 2018; pp. 553–565.

13. Demetrescu, E. Archaeological stratigraphy as a formal language for virtual reconstruction. Theory and practice. J. Archaeol. Sci. 2015, 57, 42–55.

14. Fanini, B.; Pescarin, S.; Palombini, A. A cloud-based architecture for processing and dissemination of 3D landscapes online. Digit. Appl. Archaeol. Cult. Herit. 2019, 14, e00100.

15. Münster, S.; Maiwald, F.; Lehmann, C.; Lazariv, T.; Hofmann, M.; Niebling, F. An Automated Pipeline for a Browser-based, City-scale Mobile 4D VR Application based on Historical Images. In Proceedings of the 2nd Workshop on Structuring and Understanding of Multimedia heritAge Contents, Seattle, WA, USA, 12–16 October 2020; pp. 33–40.

16. Barsanti, S.G.; Malatesta, S.G.; Lella, F.; Fanini, B.; Sala, F.; Dodero, E.; Petacco, L. The Winckelmann300 Project: Dissemination of Culture with Virtual Reality at the Capitoline Museum in Rome. In International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Proceedings of the 2018 ISPRS TC II Mid-term Symposium “Towards Photogrammetry 2020”, Riva del Garda, Italy, 4–7 June 2018; ISPRS: Riva del Garda, Italy, 2018; Volume 42, p. 42.

17. Turco, M.L.; Piumatti, P.; Calvano, M.; Giovannini, E.C.; Mafrici, N.; Tomalini, A.; Fanini, B. Interactive Digital Environments for Cultural Heritage and Museums. Building a digital ecosystem to display hidden collections. Disegnarecon 2019, 12.

18. Luigini, A.; Fanini, B.; Basso, A.; Basso, D. Heritage education through serious games. A web-based proposal for primary schools to cope with distance learning. VITRUVIO Int. J. Archit. Technol. Sustain. 2020, 5, 73–85.

19. Pakkanen, T.; Hakulinen, J.; Jokela, T.; Rakkolainen, I.; Kangas, J.; Piippo, P.; Raisamo, R.; Salmimaa, M. Interaction with WebVR 360 video player: Comparing three interaction paradigms. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 279–280.

20. González-Zúñiga, L.D.; O’Shaughnessy, P. Virtual Reality… in the Browser. In VR Developer Gems; CRC Press: Boca Raton, FL, USA, 2019; p. 101.

21. MacIntyre, B.; Smith, T.F. Thoughts on the Future of WebXR and the Immersive Web. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018; pp. 338–342.

22. Fanini, B. ATON Framework. Available online: https://github.com/phoenixbf/aton (accessed on 29 April 2021).

23. Ponchio, F.; Dellepiane, M. Multiresolution and fast decompression for optimal web-based rendering. Graph. Model. 2016, 88, 1–11.

24. Meghini, C.; Scopigno, R.; Richards, J.; Wright, H.; Geser, G.; Cuy, S.; Fihn, J.; Fanini, B.; Hollander, H.; Niccolucci, F.; et al. ARIADNE: A research infrastructure for archaeology. J. Comput. Cult. Herit. JOCCH 2017, 10, 1–27.

25. Kontakis, K.; Malamos, A.G.; Steiakaki, M.; Panagiotakis, S. Spatial indexing of complex virtual reality scenes in the web. Int. J. Image Graph. 2017, 17, 1750009.

26. Ferdani, D.; Fanini, B.; Piccioli, M.C.; Carboni, F.; Vigliarolo, P. 3D reconstruction and validation of historical background for immersive VR applications and games: The case study of the Forum of Augustus in Rome. J. Cult. Herit. 2020, 43, 129–143.

27. Pescarin, S.; Fanini, B.; Ferdani, D.; Mifsud, K.; Hamilton, A. Optimising Environmental Educational Narrative Videogames: The Case of ‘A Night in the Forum’. J. Comput. Cult. Herit. JOCCH 2020, 13, 1–23.

28. Demetrescu, E.; Ferdani, D.; Dell’Unto, N.; Touati, A.M.L.; Lindgren, S. Reconstructing the original splendour of the House of Caecilius Iucundus. A complete methodology for virtual archaeology aimed at digital exhibition. SCIRES-IT Sci. Res. Inf. Technol. 2016, 6, 51–66.

29. Dell’Unto, N.; Leander, A.M.; Dellepiane, M.; Callieri, M.; Ferdani, D.; Lindgren, S. Digital reconstruction and visualization in archaeology: Case-study drawn from the work of the Swedish Pompeii Project. In Proceedings of the 2013 Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; Volume 1, pp. 621–628.

30. Demetrescu, E. 3D Survey Collection. 2021. Available online: https://doi.org/10.5281/zenodo.4556757 (accessed on 29 April 2021).

31. Limoncelli, M. Hierapolis di Frigia XIII—Virtual Hierapolis. Virtual Archaeology and Restoration Project (2007–2015); Ege Yayinlari: Istanbul, Turkey, 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).