Integrating Ecological Forecasting into Undergraduate Ecology Curricula with an R Shiny Application-Based Teaching Module

Abstract

1. Introduction

2. Materials and Methods

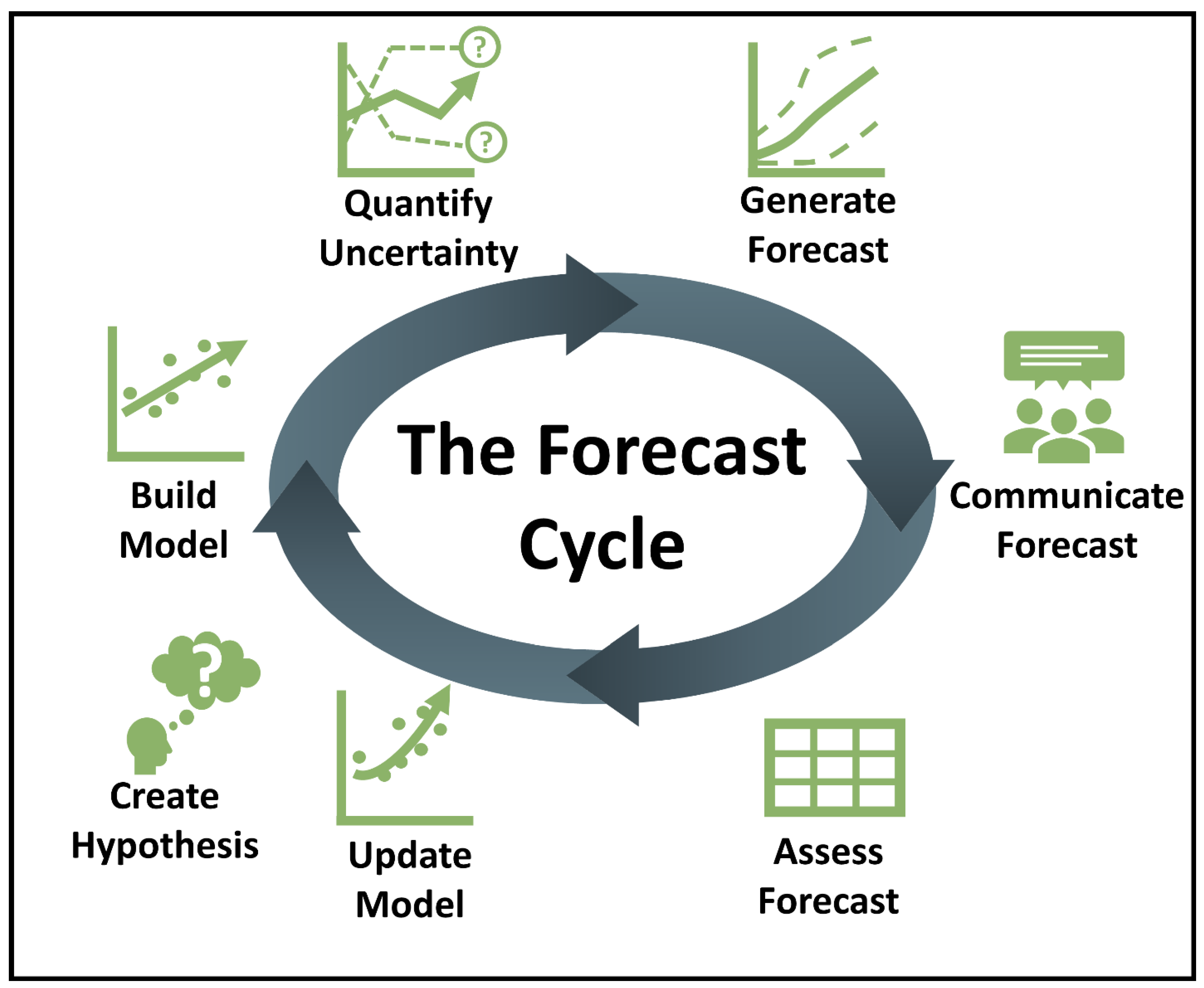

2.1. Module Overview and Learning Objectives

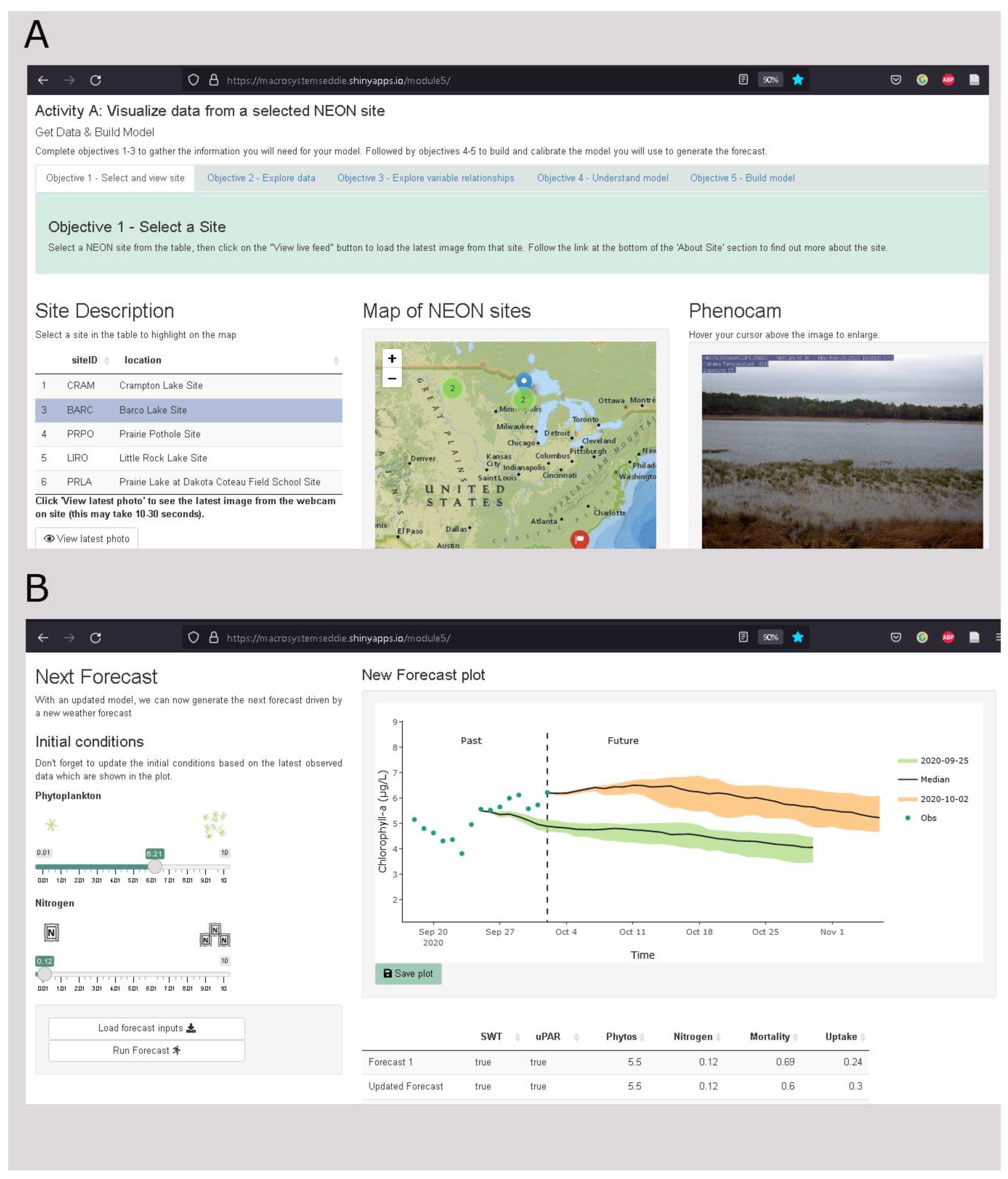

2.2. Module Activities

2.3. Module Availability

2.4. Module Accessibility

2.5. Module Implementation and Assessment

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Detailed Methods of Qualitative Assessment Analysis

| Question Number | Question |

|---|---|

| 1 | How have you used forecasts (ecological, political, sports, any kind!) before in your day-to-day life? |

| 2 | How can ecological forecasts improve both natural resource management and ecological understanding? |

| 3 | How do you think forecasts of freshwater primary productivity will differ between warmer lakes and colder lakes? |

| 4 | Choose one of the ecological forecasts above and use the website to answer the questions below. |

| 4a | Which ecological forecast did you select? |

| 4b | What ecological variable(s) are being forecasted? |

| 4c | How can this forecast help the public and/or managers? |

| 4d | Describe the way(s) in which the forecast is visualized. |

| 5 | Fill out information about your selected NEON site: |

| 5a | Name of selected site: |

| 5b | Four letter site identifier: |

| 5c | Latitude: |

| 5d | Longitude: |

| 5e | Lake area (km²): |

| 5f | Elevation (m): |

| 6 | Fill out the table below with the description of site variables: |

| 6a | Air temperature |

| 6b | Surface water temperature |

| 6c | Nitrogen |

| 6d | Underwater PAR |

| 6e | Chlorophyll-a |

| 7 | Describe the effect of each of the following variables on chlorophyll-a. Chlorophyll-a is used as a proxy measurement for phytoplankton concentration and primary productivity in aquatic environments. |

| 7a | Air temperature |

| 7b | Surface water temperature |

| 7c | Nitrogen |

| 7d | Underwater PAR |

| 8 | Were there any other relationships you found at your site? If so, please describe below. |

| 9 | What is the relationship between each of these driving variables and productivity? For example, if the driving variable increases, will it cause productivity to increase (positive), decrease (negative), or have no effect (stay the same). |

| 9a | Surface water temperature |

| 9b | Incoming light |

| 9c | Available nutrients |

| 10 | Classify the following as either a state variable or a parameter by dragging it into the corresponding bin. |

| 11 | We are using chlorophyll-a as a proxy of aquatic primary productivity. Select how you envision each parameter to affect chlorophyll-a concentrations: |

| 11a | Nutrient uptake by phytoplankton |

| 11b | Phytoplankton mortality |

| 12 | Without using surface water temperature or underwater light (uPAR) as inputs into your model, adjust the initial conditions and parameters of your model to best replicate the observations. Make sure you select the ‘Q12’ row in the parameter table to save your setup. Describe how the model simulation compares to the observations. |

| 13 | Explore the model’s sensitivity to SWT and uPAR: |

| 13a | Switch on surface water temperature by checking the box. Adjust initial conditions and parameters to replicate observations. Is the model sensitive to SWT? Did it help improve the model fit? (Select the “Q13a” row in the Parameter Table to store your model setup there). |

| 13b | Switch on uPAR and switch off surface water temperature. Adjust initial conditions and parameters to replicate observations. Is the model sensitive to uPAR? Did it help improve the model fit? (Select the “Q13b” row in the Parameter Table to store your model setup there). |

| 14 | Develop a scenario (e.g., uPAR is on, low initial conditions of phytoplankton, high nutrients, phytoplankton mortality is high, low uptake, etc.) and hypothesize how you think chlorophyll-a concentrations will respond prior to running the simulation. Switch off observations prior to running the model. |

| 14a | Write your hypothesis of how chlorophyll-a will respond to your scenario here: |

| 14b | Run your model scenario. Select the “Q14” row in the Parameter Table to store your model setup there. Was your hypothesis supported or refuted? Describe what you observed: |

| 15 | Add the observations to the plot. Calibrate your model by selecting sensitive variables and adjusting the parameters until they best fit the observed data. Save the plot and the parameters (Select the “Q15” row in the Parameter Table to store your model setup there), these are what will be used for the forecast. |

| 16 | What is forecast uncertainty? How is forecast uncertainty quantified? |

| 17 | Inspect the weather forecast data for the site you have chosen: |

| 17a | How does increasing the number of ensemble members in the weather forecast affect the size of the uncertainty in future weather? |

| 17b | Which type of plot (line or distribution) do you think visualizes the forecast uncertainty best? |

| 17c | Using the interactivity of the weather forecast plot, compare the air temperature forecasts for the first week (25 September–1 October) to the second week (2–8 October). How does the forecast uncertainty change between the two periods? |

| 18 | How does driver uncertainty affect the forecast? Specifically, does an increase in the number of members increase or decrease the range of uncertainty in the forecasts? How does that change over time? |

| 19 | What do you think are the main sources of uncertainty in your ecological forecast? |

| 20 | How would you describe your forecast of primary productivity at your NEON site so it could be understood by a fellow classmate? |

| 21 | How well did your forecast do compared to observations (include R² value)? Why do you think it is important to assess the forecast? |

| 22 | Did your forecast improve when you updated your model parameters? Why do you think it is important to update the model? |

| 23 | Describe the new forecast of primary productivity. |

| 24 | Why is the forecast cycle described as ‘iterative’ (i.e., repetition of a process)? |

| 25 | Repeat Activity A and B with a different NEON site (ideally from a different region). |

| 25a | Apply the same model scenario (with the same model structure and parameters) which you developed in Q14 to this new site. How do you expect chlorophyll-a concentrations will respond prior to running the simulation? |

| 25b | Was your hypothesis supported or refuted? Why? |

| 25c | Revisit your hypothesis from Q3. What did you find out about the different productivity forecasts in warmer vs. colder sites? |

| 26 | Does forecast uncertainty differ at this site compared to the first selected site? Why do you think that is? |
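The questions above walk students through calibrating a productivity model (Q12 to Q15), generating a forecast with driver uncertainty from a weather ensemble (Q17 and Q18), and assessing it against observations (Q21). The model equations are not given in this section, so the following is only a minimal sketch under stated assumptions: a generic nutrient-phytoplankton (NP) model with uptake and mortality parameters, a hypothetical linear temperature effect on growth, and air temperature ensemble members simulated as random walks. All function names, parameter values, and the form of the temperature response are illustrative and are not taken from the module.

```python
import random
import statistics


def simulate_np(phyto0, nutrient0, uptake, mortality, days, temps=None):
    """Daily Euler steps of a toy nutrient-phytoplankton (NP) model.

    The equations and parameter names are illustrative assumptions,
    not the model used in the teaching module.
    """
    phyto, nutrient = phyto0, nutrient0
    series = [phyto]
    for day in range(days):
        # Hypothetical temperature effect: +5% growth per degree above 20 C.
        if temps is None:
            temp_factor = 1.0
        else:
            temp_factor = max(0.0, 1.0 + 0.05 * (temps[day] - 20.0))
        growth = uptake * temp_factor * nutrient * phyto  # nutrient uptake
        loss = mortality * phyto  # mortality recycles nutrients
        phyto = max(phyto + growth - loss, 0.0)
        nutrient = max(nutrient + loss - growth, 0.0)
        series.append(phyto)
    return series


def forecast_ensemble(n_members, days, seed=1):
    """Run the model once per simulated temperature ensemble member."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        temp, temps = 20.0, []
        for _ in range(days):
            # Random-walk driver: members drift apart as lead time grows.
            temp += rng.gauss(0.0, 0.5)
            temps.append(temp)
        members.append(simulate_np(1.0, 9.0, 0.05, 0.1, days, temps))
    return members


def ensemble_spread(members):
    """Standard deviation across members at each forecast day."""
    return [statistics.stdev(day_values) for day_values in zip(*members)]


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_obs = statistics.fmean(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    members = forecast_ensemble(n_members=30, days=14)
    spread = ensemble_spread(members)
    print(f"spread on day 1: {spread[1]:.3f}, day 14: {spread[14]:.3f}")
```

In this sketch the spread across members is one simple way to express forecast uncertainty, and comparing the spread at short and long lead times mirrors the first-week versus second-week comparison in Q17c. The R² here is the coefficient of determination; the module may compute it differently.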

| Which of the following statements best describes an ecological forecast? |


| When an ecological forecast is generated, how does the uncertainty of the forecast change as it predicts conditions further into the future? For example, if a forecast was generated today for ecological conditions over the next two weeks, how would the uncertainty in the first week of the forecast compare to the uncertainty in the second week of the forecast? |


| Which of the following options is NOT an advantage of iteratively updating forecasts with data over time? |


| Which of the following options is a step in the iterative ecological forecast cycle? |


| Metric | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| Ecological Forecasting | Not at all familiar, I have never heard of ecological forecasting. | Slightly familiar, I have heard of ecological forecasting, but cannot elaborate. | Somewhat familiar, I could explain a little about ecological forecasting. | Moderately familiar, I could explain quite a bit about ecological forecasting. | Extremely familiar, I could explain and instruct others about ecological forecasting. |

| Ecological Forecast Uncertainty | Not at all familiar, I have never heard of ecological forecast uncertainty. | Slightly familiar, I have heard of ecological forecast uncertainty, but cannot elaborate. | Somewhat familiar, I could explain a little about ecological forecast uncertainty. | Moderately familiar, I could explain quite a bit about ecological forecast uncertainty. | Extremely familiar, I could explain and instruct others about ecological forecast uncertainty. |

| Generate Forecast; Communicate Forecast; Quantify Uncertainty | Not at all important, no need to learn | Slightly important, may be of limited use but not necessary to learn | Somewhat important, may be useful to learn | Moderately important, there is a need to learn | Very important, it is necessary to learn |

| Let us pretend that you are an ecological forecaster tasked with forecasting lake algae concentrations for the next 16 days into the future to help lake managers. What steps would you propose to accomplish this goal? If you don’t know the answer, please write “I don’t know” instead of leaving the response blank. |

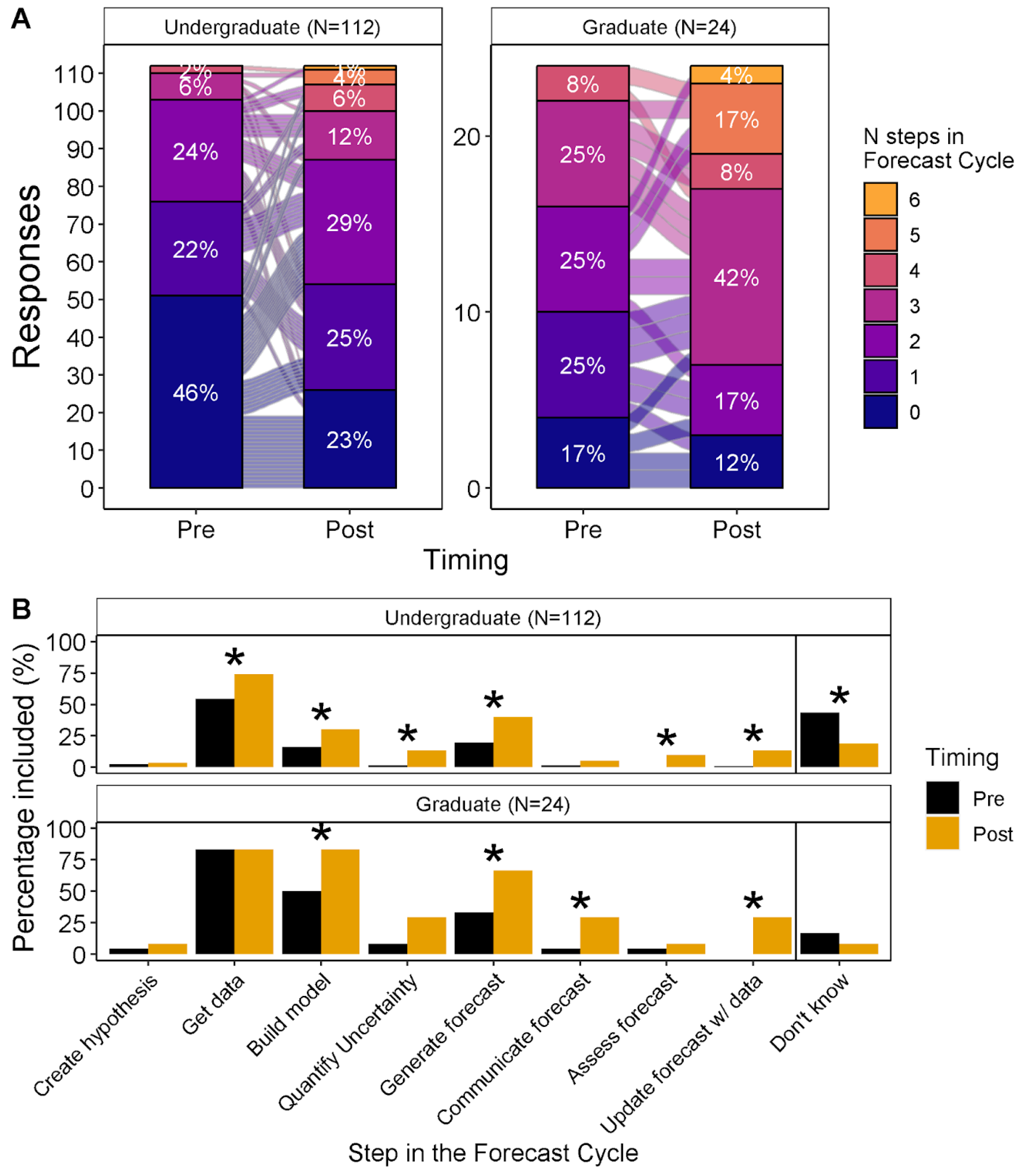

| Thematic bins: Answers were scored for the presence or absence of each “bin” (create hypothesis, build model, quantify uncertainty, generate forecast, communicate forecast, assess forecast, update forecast with data, don’t know). | |

| Create hypothesis | Create hypothesis, ask driving question |

| Build model | Build/construct/run/calibrate/develop model, any mention of model |

| Quantify uncertainty | Quantify/Include uncertainty, ensembles, any mention of uncertainty/certainty |

| Generate forecast | Generate forecast/predictions/projections |

| Communicate forecast | Communicate/present forecast |

| Assess forecast | Assess/validate forecast, compare forecast with observations, confront forecast with data |

| Update forecast with data | Update/readjust/recalibrate model/parameters/states/forecast with data/observations, feed data into model |

| Don’t know | I don’t know |

| Level | Test Statistic | Two-Tailed p-Value | n | Pre-Module Mean (±1 SD) | Pre-Module Median (IQR) | Post-Module Mean (±1 SD) | Post-Module Median (IQR) | Effect Size |

|---|---|---|---|---|---|---|---|---|

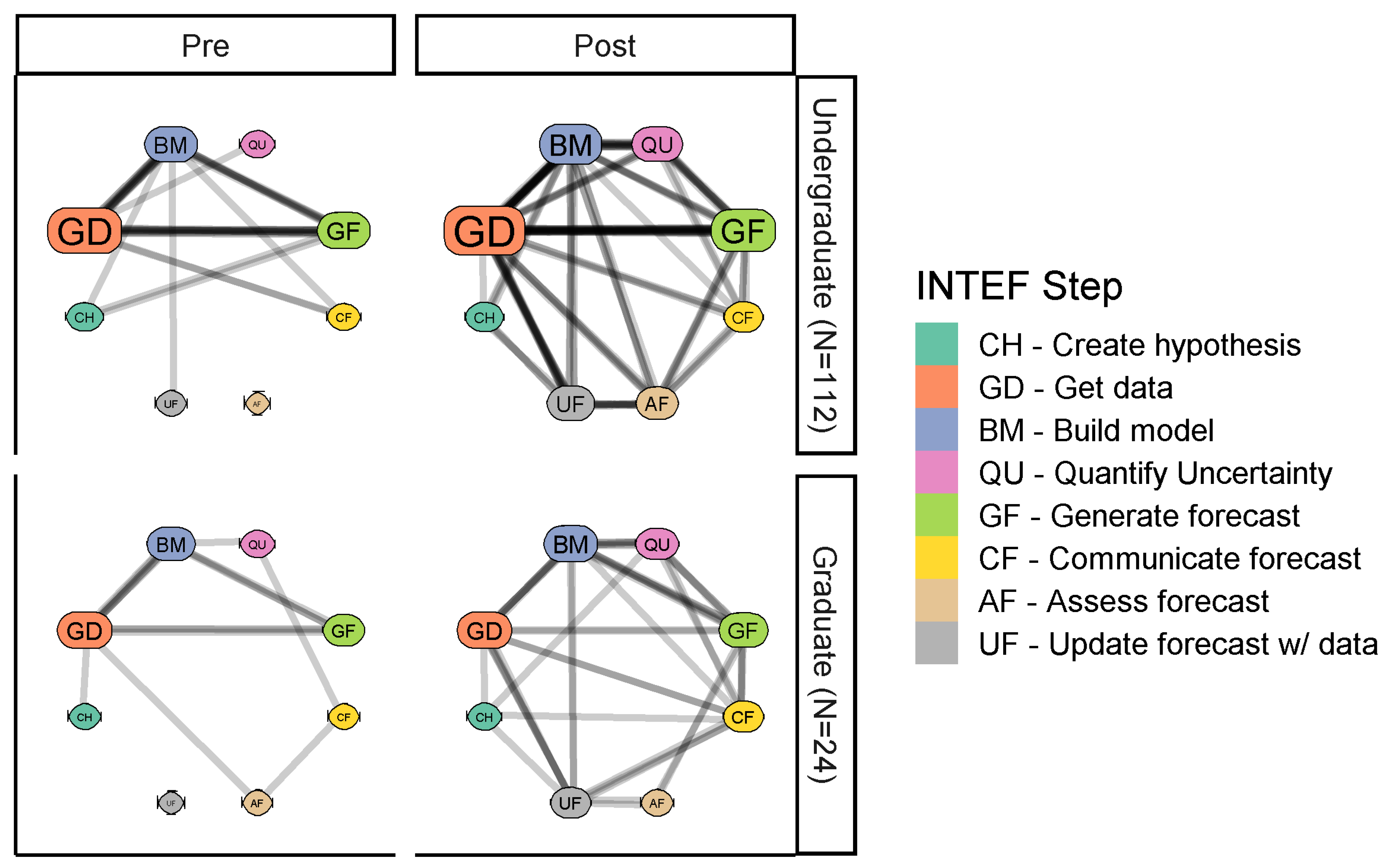

| Grad | 10 | 0.001 | 24 | 1.8 ± 1.2 | 2 (1, 3) | 3.0 ± 1.6 | 3 (2, 4) | 0.68 |

| UG | 647.5 | <0.001 | 112 | 1.0 ± 1.1 | 1 (0, 2) | 1.7 ± 1.4 | 2 (1, 2) | 0.42 |
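The pre/post comparisons in the table above report a test statistic, a two-tailed p-value, and an effect size for paired responses. The test is not named in this section, so the sketch below assumes a paired Wilcoxon signed-rank test with a normal approximation and the common effect size convention r = |Z| / sqrt(n). It illustrates how such quantities can be computed and does not reproduce the authors' analysis; the function names are hypothetical, and the variance omits the tie correction for brevity.

```python
import math


def average_ranks(values):
    """Rank values from 1, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def signed_rank_summary(pre, post):
    """Return (W+, W-, z, r) for paired pre/post scores.

    Assumptions: zero differences are dropped, z uses the normal
    approximation without tie correction, and r = |z| / sqrt(n) with
    n equal to the number of non-zero pairs.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean_w) / sd_w
    r = abs(z) / math.sqrt(n)
    return w_plus, w_minus, z, r


if __name__ == "__main__":
    # Made-up scores for illustration only, not data from the study.
    pre_scores = [1, 2, 3, 4, 5]
    post_scores = [2, 4, 6, 8, 10]
    print(signed_rank_summary(pre_scores, post_scores))
```

Software packages differ in which rank sum they report as the test statistic and in how they count n for the effect size, so values from this sketch should not be expected to match the table.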

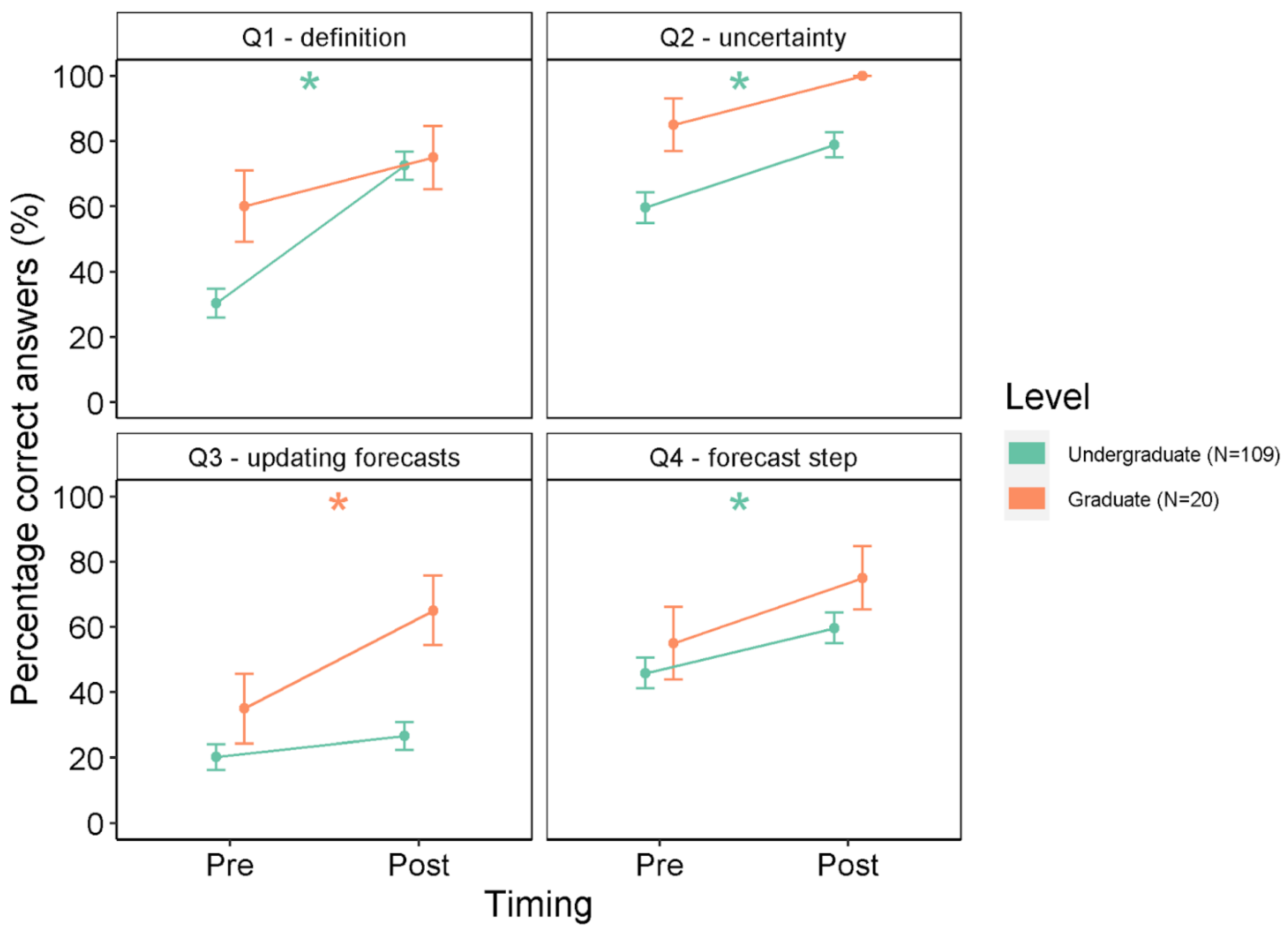

| Question | Level | Test Statistic | Two-Tailed p-Value | n | Pre-Module Correct (%) | Post-Module Correct (%) | Effect Size |

|---|---|---|---|---|---|---|---|

| Q1—definition | Grad | 8 | 0.30 | 20 | 60.0 | 75.0 | 0.23 |

| Q1—definition | UG | 110 | <0.01 | 109 | 30.3 | 72.5 | Inf |

| Q2—uncertainty | Grad | 0 | 0.15 | 20 | 85.0 | 100.0 | 0.32 |

| Q2—uncertainty | UG | 152 | <0.01 | 109 | 59.6 | 78.9 | 0.32 |

| Q3—updating forecasts | Grad | 4.5 | 0.04 | 20 | 35.0 | 65.0 | 0.46 |

| Q3—updating forecasts | UG | 192 | 0.21 | 109 | 20.2 | 26.6 | 0.12 |

| Q4—forecast step | Grad | 0 | 0.07 | 20 | 55.0 | 75.0 | 0.40 |

| Q4—forecast step | UG | 308 | 0.02 | 109 | 45.9 | 59.6 | 0.22 |

| Forecast Step | Level | Test Statistic | Two-Tailed p-Value | n | Pre-Module Included (%) | Post-Module Included (%) | Effect Size |

|---|---|---|---|---|---|---|---|

| Create hypothesis | Grad | 2.0 | 0.77 | 24 | 4.2 | 8.3 | 0.06 |

| Create hypothesis | UG | 12.0 | 0.78 | 112 | 2.7 | 3.6 | 0.03 |

| Get data | Grad | 5.0 | 1.00 | 24 | 83.3 | 83.3 | 0.00 |

| Get data | UG | 129.5 | <0.01 | 112 | 54.5 | 74.1 | 0.35 |

| Build model | Grad | 0.0 | 0.01 | 24 | 50.0 | 83.3 | 0.56 |

| Build model | UG | 157.5 | 0.01 | 112 | 16.1 | 30.4 | 0.26 |

| Quantify uncertainty | Grad | 4.0 | 0.07 | 24 | 8.3 | 29.2 | 0.37 |

| Quantify uncertainty | UG | 8.0 | <0.01 | 112 | 1.8 | 13.4 | 0.31 |

| Generate forecast | Grad | 0.0 | 0.01 | 24 | 33.3 | 66.7 | 0.56 |

| Generate forecast | UG | 189.0 | <0.01 | 112 | 19.6 | 40.2 | 0.34 |

| Communicate forecast | Grad | 0.0 | 0.02 | 24 | 4.2 | 29.2 | 0.48 |

| Communicate forecast | UG | 9.0 | 0.18 | 112 | 1.8 | 5.4 | 0.13 |

| Assess forecast | Grad | 2.0 | 0.77 | 24 | 4.2 | 8.3 | 0.06 |

| Assess forecast | UG | 0.0 | <0.01 | 112 | 0.0 | 9.8 | 0.31 |

| Update forecast w/data | Grad | 0.0 | 0.01 | 24 | 0.0 | 29.2 | 0.52 |

| Update forecast w/data | UG | 0.0 | <0.01 | 112 | 0.9 | 13.4 | 0.35 |

| Don’t know | Grad | 3.0 | 0.35 | 24 | 16.7 | 8.3 | 0.19 |

| Don’t know | UG | 643.5 | <0.01 | 112 | 43.8 | 18.8 | 0.43 |

| Level | Create Hypothesis | Get Data | Build Model | Quantify Uncertainty | Generate Forecast | Communicate Forecast | Assess Forecast | Update Forecast w/Data |

|---|---|---|---|---|---|---|---|---|

| UG | 3 | 7 | 9 | 1 | 9 | 2 | 0 | 0 |

| Grad | 1 | 2 | 0 | 1 | 0 | 0 | 0 | 0 |

| Skill | Level | Test Statistic | Two-Tailed p-Value | n | Pre-Module Mean (±1 SD) | Pre-Module Median (IQR) | Post-Module Mean (±1 SD) | Post-Module Median (IQR) | Effect Size |

|---|---|---|---|---|---|---|---|---|---|

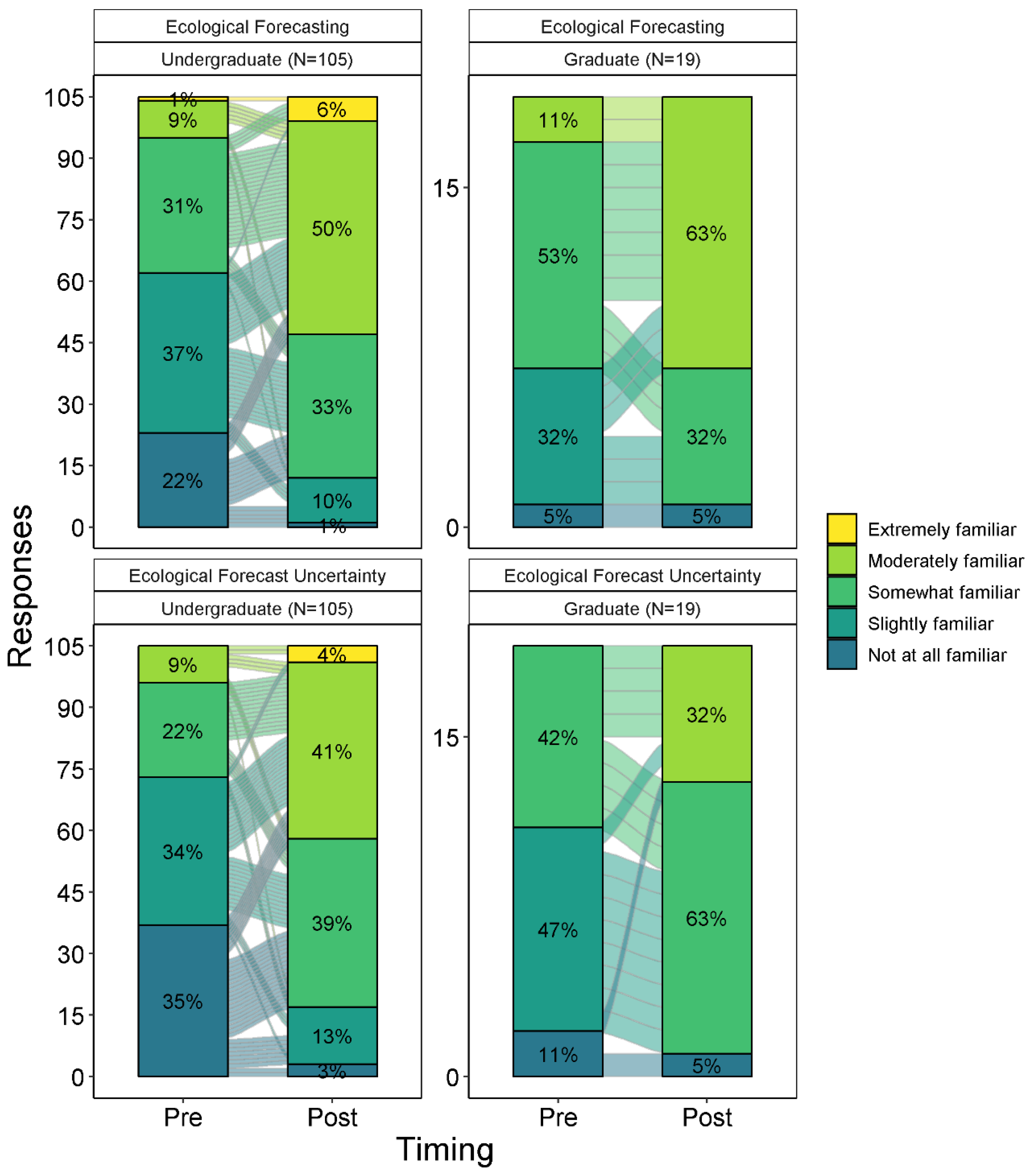

| Ecological Forecasting | Grad | 0 | <0.01 | 19 | 2.7 ± 0.7 | 3 (2, 3) | 3.5 ± 0.8 | 4 (3, 4) | 0.76 |

| Ecological Forecasting | UG | 201 | <0.01 | 105 | 2.3 ± 0.9 | 2 (2, 3) | 3.5 ± 0.8 | 4 (3, 4) | 0.74 |

| Ecological Forecast Uncertainty | Grad | 0 | <0.01 | 19 | 2.3 ± 0.7 | 2 (2, 3) | 3.2 ± 0.7 | 3 (3, 4) | 0.81 |

| Ecological Forecast Uncertainty | UG | 216 | <0.01 | 105 | 2.0 ± 1.0 | 2 (1, 3) | 3.3 ± 0.9 | 3 (3, 4) | 0.74 |

| Skill | Level | Test Statistic | Two-Tailed p-Value | n | Pre-Module Mean (±1 SD) | Pre-Module Median (IQR) | Post-Module Mean (±1 SD) | Post-Module Median (IQR) | Effect Size |

|---|---|---|---|---|---|---|---|---|---|

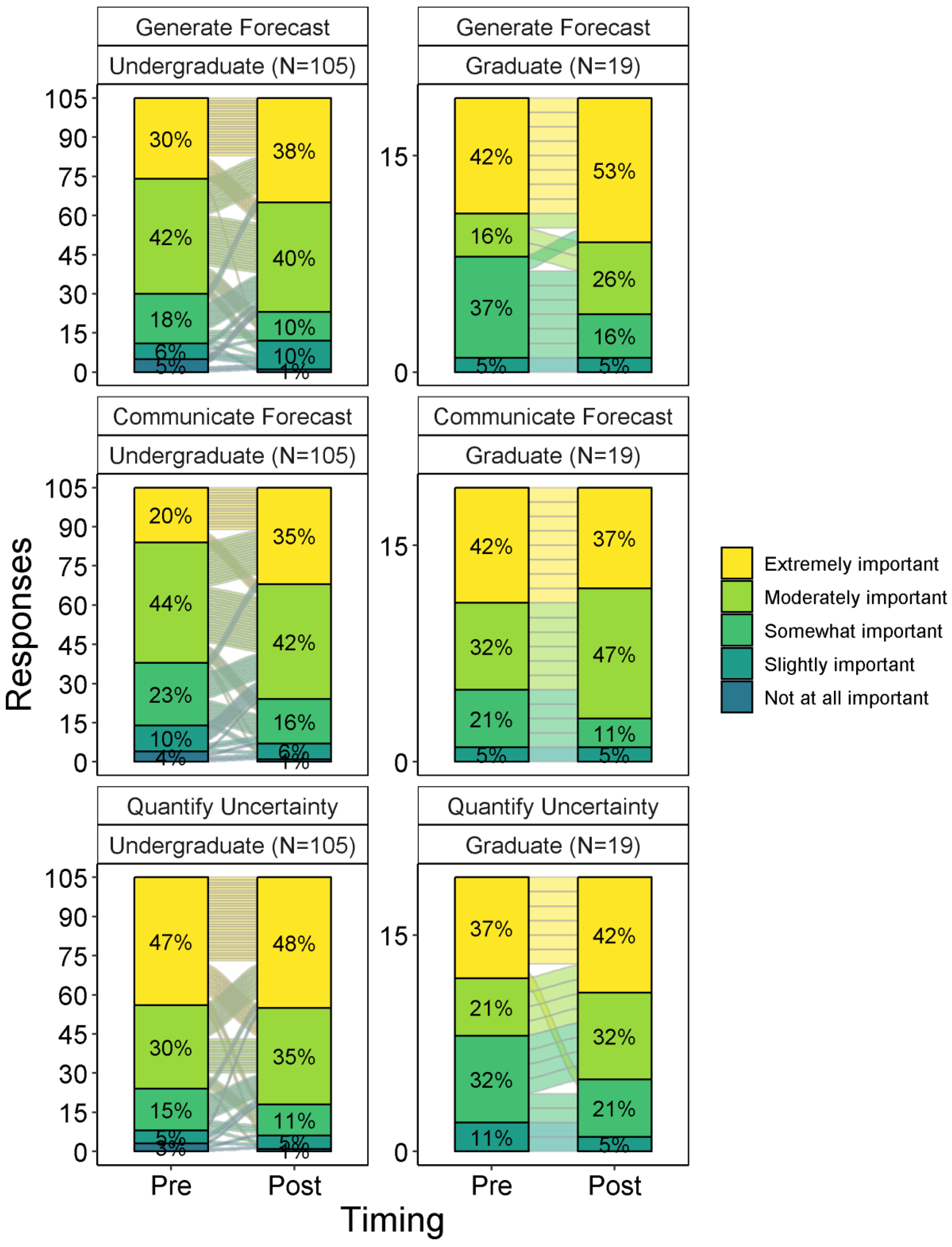

| Generate Forecast | Grad | 0.0 | 0.05 | 19 | 3.9 ± 1.0 | 4 (3, 5) | 4.3 ± 0.9 | 5 (4, 5) | 0.45 |

| Generate Forecast | UG | 653.5 | 0.10 | 105 | 3.9 ± 1.1 | 4 (3, 5) | 4.0 ± 1.0 | 4 (4, 5) | 0.16 |

| Communicate Forecast | Grad | 2.0 | 0.77 | 19 | 4.1 ± 0.9 | 4 (3.5, 5) | 4.2 ± 0.8 | 4 (4, 5) | 0.07 |

| Communicate Forecast | UG | 402.0 | <0.01 | 105 | 3.7 ± 1.0 | 4 (3, 4) | 4.0 ± 0.9 | 4 (4, 5) | 0.38 |

| Quantify Uncertainty | Grad | 8.0 | 0.15 | 19 | 3.8 ± 1.1 | 4 (3, 5) | 4.1 ± 0.9 | 4 (3.5, 5) | 0.33 |

| Quantify Uncertainty | UG | 676.5 | 0.30 | 105 | 4.1 ± 1.0 | 4 (4, 5) | 4.2 ± 0.9 | 4 (4, 5) | 0.10 |


| Activity | EDDIE ABC Conceptual Framework | Introduction to Ecological Forecasting Module |

|---|---|---|

| A | Engage in initial data exploration and skill development using simple analyses | Engage in thinking about how forecasts are used and can advance ecological management. Explore NEON data from a site of the student’s choice and build a simple productivity model. |

| B | Explore and Explain through more detailed analyses and comparisons | Explore how the productivity model can best fit observations. Use the model to step through each stage of the forecast cycle. Explain the forecast and its uncertainty to a classmate. |

| C | Elaborate on developed ideas to other sites, datasets, and concepts. Evaluate knowledge in class discussion and homework | Elaborate on the forecasting framework by applying their model to generate a forecast for a different NEON site and compare results. Evaluate knowledge by answering discussion questions in the R Shiny app and together as a class. |

| Institution | Course Level | Class Name | Carnegie Code | Instructor | Number of Students Enrolled | Mode (Virtual, In-Person or Hybrid) |

|---|---|---|---|---|---|---|

| Rhodes College | 100 (introductory undergraduate) | Intro to Environmental Science | Baccalaureate Colleges: Arts and Sciences Focus | N | 27 | in-person |

| Saint Olaf College | 200 (2nd-year undergraduates, sophomore-level) | Macrosystems Ecology and Data Science | Baccalaureate Colleges: Arts and Sciences Focus | N | 7 | in-person |

| Ohio Wesleyan University | 300 (3rd-year undergraduates, junior level) | Plant Responses to Global Change | Baccalaureate Colleges: Arts and Sciences Focus | N | 20 | virtual |

| University of Richmond | 300 (3rd-year undergraduates, junior level) | Data Visualization and Communication for Biologists | Baccalaureate Colleges: Arts and Sciences Focus | N | 19 | hybrid |

| Longwood University | 300 (3rd-year undergraduates, junior level) | Ecology | M2 | N | 18 | in-person |

| University of California—Berkeley | 300 (3rd-year undergraduates, junior level) | Data Science for Global Change Ecology | R1 | N | 54 | in-person |

| Boston University | 300 (3rd-year undergraduates, junior level) | Introduction to Quantitative Environmental Modeling | R1 | N | 30 | in-person |

| Virginia Tech | 500 (graduate students) | Ecological Modeling and Forecasting | R1 | Y | 16 | virtual |

| University of California—Berkeley | 500 (graduate students) | Reproducible and Collaborative Data Science | R1 | Y | 17 | virtual |

| University of Notre Dame | 500 (graduate students) | Quant Camp | R1 | N | 13 | in-person |

| Global Lake Ecological Observatory Network (GLEON) Workshop | 500 (graduate students) | Introduction to Ecological Forecasting | NA | Y | 20 | virtual |

| Invent Water Workshop | 500 (graduate students, non-American) | Introduction to Ecological Forecasting | NA | N | 15 | in-person |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moore, T.N.; Thomas, R.Q.; Woelmer, W.M.; Carey, C.C. Integrating Ecological Forecasting into Undergraduate Ecology Curricula with an R Shiny Application-Based Teaching Module. Forecasting 2022, 4, 604-633. https://doi.org/10.3390/forecast4030033
