Bootstrap Prediction Intervals of Temporal Disaggregation

Abstract

1. Introduction

2. Model-Based Temporal Disaggregation

2.1. The GLS Disaggregation

2.2. Disaggregate ARIMA Models

- 1. The AR part:

- When m is odd and the AR polynomial of the aggregate model can be factorized, the disaggregate AR parameters are determined from its factors.

- Otherwise, a disaggregate model cannot be uniquely determined.

- 2. The MA part:

- Ref. [19] showed that the m-aggregate model for ARIMA with is ARIMA with . Thus, it is reasonable to assume that the maximum MA order of the disaggregate model is . Consider in (9). Because for depends only on for , the aggregation transformation matrix in (9) can be partitioned as where is a square matrix of size , such as , and and are block matrices of dimensions and , respectively, with .

- The disaggregate AR parameter:

- The disaggregate MA parameters: where and . Moreover, since the AR component implies for , can be rewritten as where . We therefore obtain When the matrix in (21) is not a singular matrix, the autocovariances , , , and can be solved. Consider , which is MA from (18). The autocovariances of are expressed as Here, we can derive the MA parameters , , and from the above autocovariance equations, including the fixed values of , , , , and .
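As a minimal numerical sketch of the AR part, consider the simplest case: a zero-mean AR(1) with coefficient `phi`, aggregated into non-overlapping sums of m terms. The sketch relies on the standard result, assumed here rather than taken from the text above, that the aggregate AR coefficient equals `phi**m`, so a unique real m-th root exists only when m is odd. The function names are hypothetical.

```python
import numpy as np


def aggregate_autocov(phi, m, K):
    """Lag-K autocovariance of non-overlapping m-term sums of an AR(1).

    Assumes unit innovation variance, so the disaggregate autocovariance
    is gamma(k) = phi**|k| / (1 - phi**2).
    """
    def gamma(k):
        return phi ** abs(k) / (1.0 - phi ** 2)

    # Cov(Z_T, Z_{T+K}) is the sum of gamma over all pairs of time points
    # in the two aggregation windows.
    return sum(gamma(m * K + i - j) for i in range(m) for j in range(m))


def disaggregate_ar1(Phi, m):
    """Recover the disaggregate AR(1) coefficient from the aggregate one.

    Assumption: Phi = phi**m. The real m-th root is unique only for odd m.
    """
    if m % 2 == 0:
        raise ValueError("m is even: the sign of the m-th root is not identified")
    return np.sign(Phi) * abs(Phi) ** (1.0 / m)


phi, m = -0.7, 3
# For lags K >= 1 the aggregate autocovariances decay at rate phi**m.
Phi = aggregate_autocov(phi, m, 2) / aggregate_autocov(phi, m, 1)
phi_recovered = disaggregate_ar1(Phi, m)
```

With an even m, both `phi` and `-phi` give the same aggregate coefficient, which is the AR(1) version of the non-uniqueness noted in the list above.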

3. AR-Sieve Bootstrap Prediction Intervals of Temporal Disaggregation

- Step 1:

- Using the disaggregation method introduced in Section 2.2, we identify an ARIMA model for the unknown disaggregate series and obtain the autocovariance estimates and the coefficient estimates of the disaggregate model. Then, we derive an estimated disaggregate time series from the GLS disaggregation described in Section 2.1.

- Step 2:

- The dth differenced series is assumed to be stationary and invertible in (10). Invertibility admits an AR representation of the differenced series, whose ith coefficient estimate is derived numerically. Furthermore, we choose an AR approximation of order s such that the coefficient estimates beyond lag s are smaller in absolute value than a predetermined positive value close to zero.

- Step 3:

- We compute the centered residuals of the AR approximation, that is, the residuals minus their sample mean. In addition, we obtain the empirical distribution function of the centered residuals, which places equal probability mass on each centered residual.

- Step 4:

- We generate a bootstrap resample of i.i.d. observations from the empirical distribution function in (32).

- Step 5:

- We derive a pseudo time series from the forward model of the AR approximation, driven by the bootstrap resample. Then, we replace the first s observations with backward values (see [20], pp. 205–206).

- Step 6:

- For the pseudo series, we obtain the AR coefficient estimates through Yule–Walker estimation (see [21], pp. 239–240).

- Step 7:

- We calculate the predicted bootstrap observations recursively, using the AR coefficient estimates from Step 6.

- Step 8:

- We repeat Steps 4–7 many times and find the bootstrap distribution function of the predicted bootstrap observations. Finally, we construct the prediction interval for the unknown disaggregate observation from the percentiles of this bootstrap distribution.

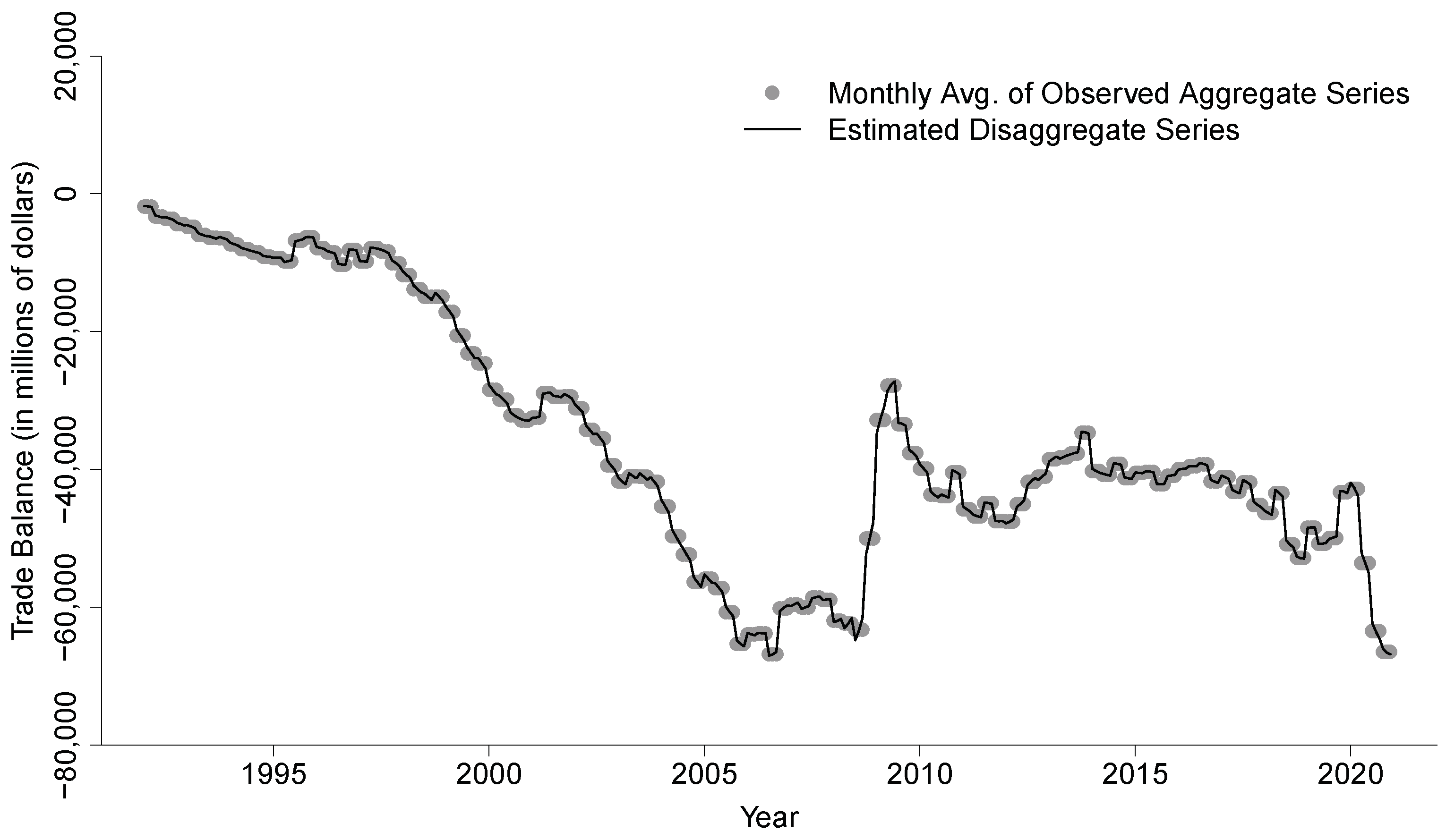

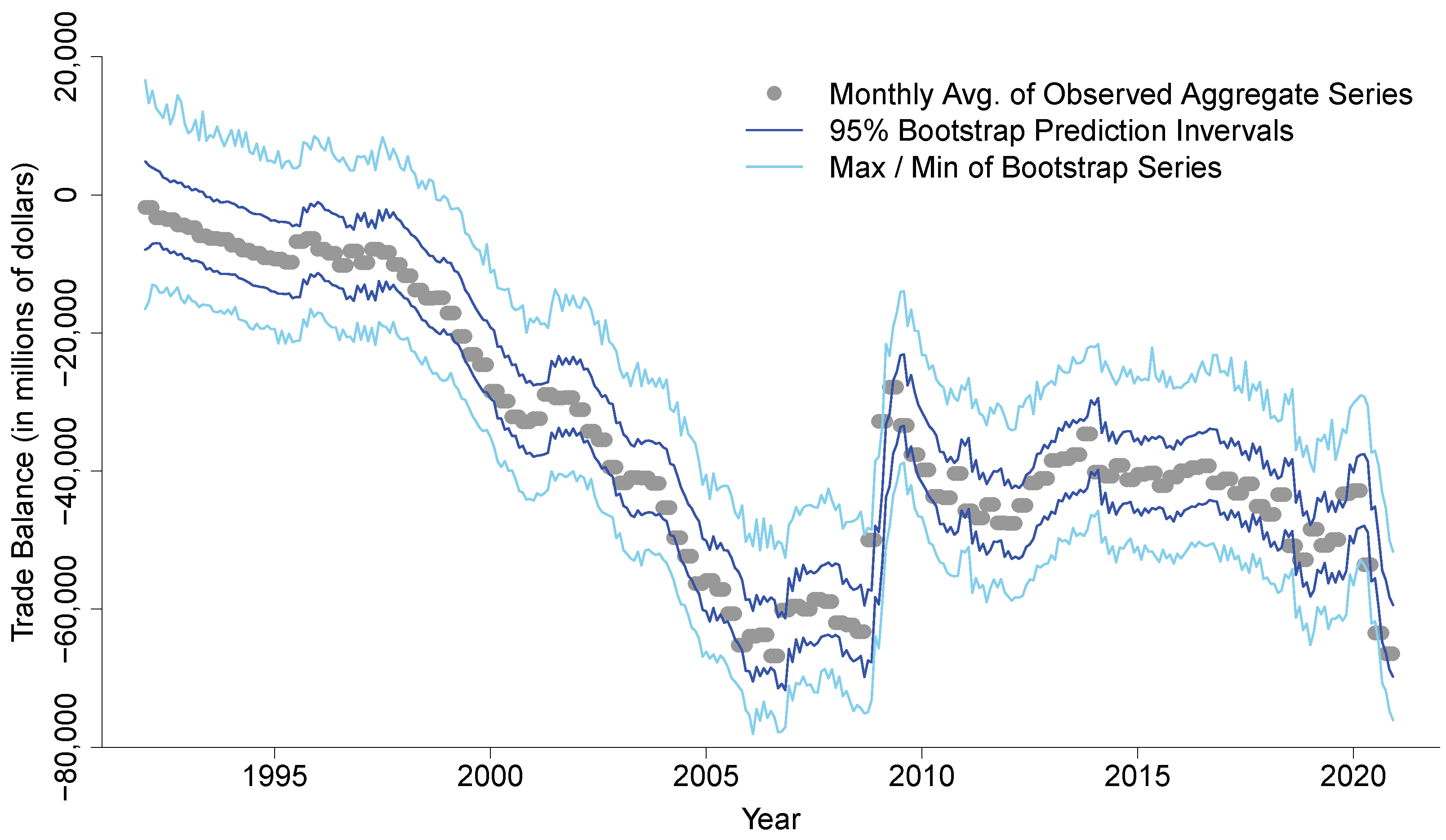

4. Real Data Analysis: The U.S. International Trade in Goods and Services

5. Concluding Remarks

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lee, B.H.; Wei, W.W.S. The use of temporally aggregated data on detecting a mean change of a time series process. Commun. Stat. Theory Methods 2017, 46, 5851–5871.

2. Lee, B.H.; Park, J. A spectral measure for the information loss of temporal aggregation. J. Stat. Theory Pract. 2020, 14, 1–23.

3. Lee, B.H.; Wei, W.W.S. The use of temporally aggregated data in modeling and testing a variance change in a time series. Commun. Stat. Simul. Comput. 2021. Forthcoming.

4. Chow, G.C.; Lin, A. Best linear unbiased interpolation, distribution and extrapolation of time series by related series. Rev. Econ. Stat. 1971, 53, 372–375.

5. Denton, F.T. Adjustment of monthly or quarterly series to annual totals: An approach based on quadratic minimization. J. Am. Stat. Assoc. 1971, 66, 99–102.

6. Fernández, R.B. A methodological note on the estimation of time series. Rev. Econ. Stat. 1981, 63, 471–476.

7. Litterman, R.B. A random walk, Markov model for the distribution of time series. J. Bus. Econ. Stat. 1983, 1, 169–173.

8. Guerrero, V.M. Temporal disaggregation of time series: An ARIMA based approach. Int. Stat. Rev. 1990, 58, 29–46.

9. Stram, D.O.; Wei, W.W.S. A methodological note on the disaggregation of time series totals. J. Time Ser. Anal. 1986, 7, 293–302.

10. Wei, W.W.S.; Stram, D.O. Disaggregation of time series models. J. R. Stat. Soc. Ser. B Stat. Methodol. 1990, 52, 453–467.

11. Feijoó, S.R.; Caro, A.R.; Quintana, D.D. Methods for quarterly disaggregation without indicators: A comparative study using simulation. Comput. Stat. Data Anal. 2003, 43, 63–78.

12. Moauro, F.; Savio, G. Temporal disaggregation using multivariate structural time series models. Econom. J. 2005, 8, 214–234.

13. Sax, C.; Steiner, P. Temporal disaggregation of time series. R J. 2013, 5, 80–87.

14. Alonso, A.M.; Peña, D.; Romo, J. Forecasting time series with sieve bootstrap. J. Stat. Plan. Inference 2002, 100, 1–11.

15. Alonso, A.M.; Peña, D.; Romo, J. On sieve bootstrap prediction intervals. Stat. Probab. Lett. 2003, 65, 13–20.

16. Alonso, A.M.; Sipols, A.E. A time series bootstrap procedure for interpolation intervals. Comput. Stat. Data Anal. 2008, 52, 1792–1805.

17. Stram, D.O.; Wei, W.W.S. Temporal aggregation in the ARIMA process. J. Time Ser. Anal. 1986, 7, 279–292.

18. Wei, W.W.S. Time Series Analysis: Univariate and Multivariate Methods, 2nd ed.; Addison Wesley: Boston, MA, USA, 2006.

19. Wei, W.W.S. Some consequences of temporal aggregation in seasonal time series models. In Seasonal Analysis of Economic Time Series; U.S. Department of Commerce, Bureau of the Census: Washington, DC, USA, 1978; pp. 433–444.

20. Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control, 5th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2016.

21. Brockwell, P.J.; Davis, R.A. Time Series: Theory and Methods, 2nd ed.; Springer: New York, NY, USA, 2006.

22. U.S. Census Bureau Economic Indicators. Available online: https://www.census.gov/economic-indicators (accessed on 28 February 2021).

23. U.S. International Trade Data. Available online: https://www.census.gov/foreign-trade/data/index.html (accessed on 13 February 2021).

24. International Trade in Goods and Services. Available online: https://www.bea.gov/data/intl-trade-investment/international-trade-goods-and-services (accessed on 13 February 2021).

25. Freeland, R.K.; McCabe, B.P.M. Analysis of low count time series data by Poisson autoregression. J. Time Ser. Anal. 2004, 25, 701–722.

26. Fokianos, K.; Rahbek, A.; Tjøstheim, D. Poisson autoregression. J. Am. Stat. Assoc. 2009, 104, 1430–1439.

27. Zhu, F.; Wang, D. Estimation and testing for a Poisson autoregressive model. Metrika 2011, 73, 211–230.

28. Chen, C.W.S.; Lee, S. Generalized Poisson autoregressive models for time series of counts. Comput. Stat. Data Anal. 2016, 99, 51–67.

29. Hotta, L.K.; Vasconcellos, K.L. Aggregation and disaggregation of structural time series models. J. Time Ser. Anal. 1999, 20, 155–171.


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, B.H. Bootstrap Prediction Intervals of Temporal Disaggregation. Stats 2022, 5, 190-202. https://doi.org/10.3390/stats5010013
