Abstract

In this paper, we present the Cumulative Median Estimation (CUMed) algorithm for robust sufficient dimension reduction. Compared with non-robust competitors, this algorithm performs better when there are outliers present in the data and comparably when outliers are not present. This is demonstrated in simulated and real data experiments.

1. Introduction

Sufficient Dimension Reduction (SDR) is a framework of supervised dimension reduction algorithms that have been proposed mainly for regression and classification settings. The main objective is to reduce the dimension of the p-dimensional predictor vector $X$ without losing information on the conditional distribution of $Y \mid X$. In other words, we can state the objective as the effort to estimate a matrix $\beta$, such that the following conditional independence model holds:

$$Y \perp\!\!\!\perp X \mid \beta^{\top} X,$$

where $\beta \in \mathbb{R}^{p \times d}$ and $d \le p$. The space spanned by the columns of $\beta$ is called the Dimension Reduction Subspace (DRS). There are many $\beta$'s that satisfy the conditional independence model above, and therefore, there are many DRSs. The objective is to find the matrix $\beta$ which achieves the minimum d. The space spanned by such $\beta$ is called the Central Dimension Reduction Subspace (CDRS), or simply the Central Space (CS). The CS does not always exist, but if it exists, it is unique. Conditions for the existence of the CS are mild (see [1]) and we assume that these are met in the rest of this work. For a more comprehensive overview of the SDR literature, the interested reader is referred to [2].
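As a small illustration of the conditional independence model (a hypothetical model chosen for exposition, not one of the models used in the experiments of this paper), suppose the response depends on the predictors only through a single linear combination:

```latex
Y = (X_1 + X_2)^2 + \varepsilon, \qquad \varepsilon \perp\!\!\!\perp X
\quad\Longrightarrow\quad
Y \perp\!\!\!\perp X \mid \beta^{\top} X,
\qquad
\beta = \tfrac{1}{\sqrt{2}}\,(1, 1, 0, \ldots, 0)^{\top} \in \mathbb{R}^{p \times 1}.
```

In this case d = 1 and, assuming the CS exists, it is the line spanned by this vector. Any matrix whose column space contains this vector (for example, the p × p identity matrix) also satisfies the model, which is why the DRS is not unique and the smallest one is the target.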

The first approach to the SDR framework was Sliced Inverse Regression (SIR), introduced by [3], which used inverse means to achieve efficient feature extraction. A number of other methods use the idea of inverse moments, such as Sliced Average Variance Estimation (SAVE) by [4], which uses the inverse variance; Directional Regression (DR) by [5], which uses both the inverse mean and variance; and Sliced Inverse Mean Difference (SIMD) by [6], which uses the inverse mean difference. A common aspect of these methods is that one needs to define the number of slices as a tuning parameter. To avoid this, [7] proposed Cumulative Mean Estimation (CUME), which uses the first moment and removes the need to tune the number of slices.

More recently, a number of methods were introduced for robust sufficient dimension reduction. [8] proposed Robust SIR by using the L1 median to achieve sufficient dimension reduction, and [9] proposed the use of Tukey and Oja medians to robustify SIR. Similarly, [10] proposed Sliced Inverse Median Difference (SIMeD), the robust version of SIMD using the L1 median. The main reason for using the L1 median is the fact that it is uniquely defined for $p \ge 2$. On the other hand, Tukey and Oja medians may not be uniquely defined, but they are affine equivariant.

In this paper, we investigate the use of the L1 median to robustify CUME. The new method is called Cumulative Median Estimation (CUMed). As CUMed is the robust version of CUME, it has all the advantages of CUME, with the additional advantage that it is robust to the presence of outliers. The rest of the paper is organised as follows. In Section 2 we discuss the previous methods in more detail, and we introduce the new method in Section 3. We present numerical studies in Section 4, and we close with a short discussion.

2. Literature Review

In this section, we discuss the existing methods in the SDR framework that relate most closely to our proposal. We discuss Sliced Inverse Regression (SIR), Cumulative Mean Estimation (CUME), and Sliced Inverse Median (SIME). We first introduce some standard notation. Let $X$ be the $p$-dimensional predictor vector, $Y$ be the response variable (which we assume to be univariate without loss of generality), $\Sigma = \mathrm{var}(X)$ be the covariance matrix, and $Z = \Sigma^{-1/2}(X - E(X))$ be the standardized predictors.

2.1. Sliced Inverse Regression (SIR)

SIR was introduced by [3] and it is considered the first method introduced in the SDR framework. SIR depends on the linearity assumption (or the linear conditional mean assumption), which states that for any $\beta$, the conditional expectation $E(X \mid \beta^{\top} X)$ is a linear function of $\beta^{\top} X$. The author proposed to standardize the predictors and then use the inverse mean $E(Z \mid Y)$. By performing an eigenvalue decomposition of the variance-covariance matrix $\mathrm{var}(E(Z \mid Y))$, the author finds the directions which span the CS, $\mathcal{S}_{Y|Z}$. One can then use the invariance principle [11] to find the directions that span $\mathcal{S}_{Y|X}$.

A key element in SIR is the use of inverse conditional means, that is, $E(Z \mid Y)$. To find these in a sample setting, one has to discretize Y, that is, to bin the observations into a number of intervals (denoted with $I_1, \ldots, I_H$, where H is the number of intervals). Therefore, the inverse mean $E(Z \mid Y)$ is, in practice, replaced by $E(Z \mid Y \in I_h)$ for $h = 1, \ldots, H$, and thus, it is the eigenvalue decomposition of $\mathrm{var}(E(Z \mid Y \in I_h))$ which is used to find the directions which span the CS, $\mathcal{S}_{Y|Z}$.
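The sliced estimation just described can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the implementation used in this paper: the function name `sir_directions`, the equal-count slicing of the sorted responses, and the use of the ordinary sample covariance for standardization are all choices made for this example.

```python
import numpy as np

def sir_directions(X, y, n_slices=10, d=1):
    """Sketch of SIR: eigen-decompose the weighted outer products of slice means of Z."""
    n, p = X.shape
    # Standardize the predictors: Z = Sigma^{-1/2} (X - mean).
    mu = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    sigma_inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    Z = (X - mu) @ sigma_inv_sqrt
    # Slice the sorted responses into H groups of (nearly) equal size (assumed scheme).
    order = np.argsort(y)
    M = np.zeros((p, p))
    for idx in np.array_split(order, n_slices):
        m_h = Z[idx].mean(axis=0)                  # inverse mean within slice h
        M += (len(idx) / n) * np.outer(m_h, m_h)   # weight by slice proportion
    # The top-d eigenvectors estimate directions on the Z scale.
    w, v = np.linalg.eigh(M)
    V = v[:, np.argsort(w)[::-1][:d]]
    # Map back to the original predictor scale.
    return sigma_inv_sqrt @ V
```

For a response that depends on the first predictor only, the returned p × d matrix should be close to a multiple of the first coordinate vector.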

2.2. Sliced Inverse Median (SIME)

A number of proposals exist in the literature to robustify SIR (see, for example, [8,9]). We discuss here the work by [8], which uses the inverse L1 median instead of means to achieve this. The authors preferred the L1 median due to its uniqueness in cases where $p \ge 2$. In their work, they defined the inverse L1 median as:

Definition 1.

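The inverse L1 median is given by $\tilde{m} = \arg\min_{\mu \in \mathbb{R}^p} E(\|Z - \mu\| \mid Y)$, where $\|\cdot\|$ is the Euclidean norm.

Similarly, they denote with $\tilde{m}_h = \arg\min_{\mu \in \mathbb{R}^p} E(\|Z - \mu\| \mid Y \in I_h)$ the inverse L1 median within slice $I_h$. To estimate the CS $\mathcal{S}_{Y|Z}$, they performed an eigenvalue decomposition of the covariance matrix of the slice medians $\tilde{m}_h$, in the same way that SIR works with the decomposition of the covariance matrix of the slice means.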
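The sample L1 median has no closed form, so it is computed iteratively. The sketch below uses the Weiszfeld iteration, which is one standard way to do this; it is an assumption of this example and is not stated in the text to be the solver used by [8] or in this paper. The function name `l1_median` and its tolerance parameters are hypothetical.

```python
import numpy as np

def l1_median(points, tol=1e-8, max_iter=500):
    """Weiszfeld iteration for the minimizer of sum_i ||z_i - mu|| (Euclidean norm)."""
    points = np.asarray(points, dtype=float)
    mu = points.mean(axis=0)  # start from the sample mean
    for _ in range(max_iter):
        dist = np.linalg.norm(points - mu, axis=1)
        dist = np.maximum(dist, 1e-12)  # crude guard against division by zero
        w = 1.0 / dist
        new_mu = (w[:, None] * points).sum(axis=0) / w.sum()
        if np.linalg.norm(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu
```

With eight points placed symmetrically on the unit circle and one gross outlier added, the sample mean is dragged far from the origin while the L1 median stays inside the circle, which is the robustness property the method relies on.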

2.3. Cumulative Mean Estimation (CUME)

As we described above, to use SIR one needs to define the number of slices. To avoid this, [7] proposed the Cumulative Mean Estimation (CUME) algorithm, which removes the need to tune the number of slices by working with cumulative means. CUME uses the eigenvalue decomposition of the matrix $E[m(\tilde{Y})\, m(\tilde{Y})^{\top}]$ with $m(y) = E[Z\, I(Y \le y)]$, where $I(\cdot)$ is the indicator function and $\tilde{Y}$ is an independent copy of $Y$, to find the directions which span the CS, $\mathcal{S}_{Y|Z}$. To achieve this, one needs to increase the value of y and recalculate the mean each time the value of y is large enough for a new observation to be included in the range $\{Y \le y\}$.
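The cumulative idea can be sketched as follows. This is an illustrative sketch only: it assumes the sample kernel is the average over observed responses y_j of the outer products of m(y_j), where m(y) is the average of Z_i times the indicator that Y_i is at most y, with unit weights and no ties in the responses. The names `standardize` and `cume_directions` are hypothetical.

```python
import numpy as np

def standardize(X):
    """Return Z = Sigma^{-1/2} (X - mean) and Sigma^{-1/2} (sample covariance)."""
    mu = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    return (X - mu) @ inv_sqrt, inv_sqrt

def cume_directions(X, y, d=1):
    """Sketch of CUME: no slicing, one cumulative mean per observed response."""
    n, p = X.shape
    Z, inv_sqrt = standardize(X)
    Z_sorted = Z[np.argsort(y)]
    # Row j holds (1/n) * sum of Z_i over the j+1 smallest responses.
    cum_means = np.cumsum(Z_sorted, axis=0) / n
    # Average of the outer products of the cumulative means.
    M = cum_means.T @ cum_means / n
    w, v = np.linalg.eigh(M)
    V = v[:, np.argsort(w)[::-1][:d]]
    return inv_sqrt @ V  # back to the original predictor scale
```

Because every observed response acts as a cut point, the only remaining input besides the data is the target dimension d.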

Like SIR, SIME also requires the number of slices to be tuned. To our knowledge, there is no other proposal in the literature that removes the need to tune the number of slices while simultaneously being robust to outliers. Therefore, in the next section, we present our proposal to robustify CUME by using the cumulative L1 median.

3. Cumulative Median Estimation

In this section, we introduce our new robust method. Like [8,10], we use the L1 median as the basis for our proposed method because it is unique in higher dimensions. More precisely, [8] used the inverse L1 median as stated in Definition 1, and [10] used the inverse median difference. In this work, we use the cumulative L1 median, defined below:

Definition 2.

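The cumulative L1 median is given by $\tilde{m}(y) = \arg\min_{\mu \in \mathbb{R}^p} E\left(\|Z - \mu\|\, I(Y \le y)\right)$, where $\|\cdot\|$ is the Euclidean norm and $I(\cdot)$ is the indicator function.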

Before we prove the basic theorem of this work, we introduce some basic notation. Let $(X_i, Y_i)$, $i = 1, \ldots, n$, be the data pairs, where the response is considered univariate. Let also $Z_i$ denote the standardized value of $X_i$. It is standard in the SDR literature to work with the regression of $Y$ on $Z$, and then use the invariance principle (see [11]) to find the CS, $\mathcal{S}_{Y|X}$. In the following theorem, we demonstrate that one can use the cumulative L1 median to estimate the CS.

Theorem 1.

Let $M$ be the kernel matrix for our method, as defined in (4). Suppose $Z$ has an elliptical distribution with $p \ge 2$. Then, $\mathrm{span}(M) \subseteq \mathcal{S}_{Y|Z}$.

Proof.

First of all, note that

Now suppose that has an orthonormal basis . Let be the orthonormal basis for , the complement of . Then,

For consider the linear transformation such that

where for and for . Using this notation, Dong et al. (2015) showed that

From this, is the minimizer of . By definition, is also the minimizer of . Therefore, , and therefore, which means for . From the construction at the beginning of the proof , and therefore . □

Estimation Procedure

In this section we will present the algorithm to estimate the CS using the cumulative L1 median.

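- (1) Standardise the data to find $\hat{Z}_i = \hat{\Sigma}^{-1/2}(X_i - \bar{X})$, where $\bar{X}$ is the sample mean of $X$ and $\hat{\Sigma}$ is an estimate of the covariance matrix of $X$.

- (2) Sort Y and then, for each value of y, find the cumulative L1 median $\hat{\tilde{m}}(y)$.

- (3) Using the $\hat{\tilde{m}}(y)$'s from the previous step, estimate the candidate matrix by $\hat{M} = \sum_{y \in R_Y} p_y\, \hat{\tilde{m}}(y)\, \hat{\tilde{m}}(y)^{\top}$, where $R_Y$ is the range of Y and $p_y$ is the proportion of points used to find $\hat{\tilde{m}}(y)$.

- (4) Calculate the eigenvectors $\hat{v}_1, \ldots, \hat{v}_d$, which correspond to the $d$ largest eigenvalues of $\hat{M}$, and estimate $\mathcal{S}_{Y|X}$ with $\hat{\beta}_k = \hat{\Sigma}^{-1/2} \hat{v}_k$, $k = 1, \ldots, d$.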

Note that in the first step it is more appropriate to use a robust estimator for Σ, and in this work we propose the use of the minimum covariance determinant (MCD) estimator, which is implemented in the function covMcd in the R package robustbase (see [12]). Other estimators, such as RFCH and RMVN [13,14] or the forward search estimator [15], could have been used instead. The same estimator is used in the last step as well to recover $\mathcal{S}_{Y|X}$.
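The four steps of the estimation procedure can be sketched in Python as below. This is a hedged illustration, not the authors' code. Three assumptions are made: the ordinary sample covariance is used as a stand-in for the robust MCD estimate recommended above, the L1 median is computed with a Weiszfeld iteration, and the candidate matrix is taken to be the sum of outer products of the cumulative medians weighted by the proportion of points used. The function names are hypothetical.

```python
import numpy as np

def l1_median(points, tol=1e-7, max_iter=200):
    """Weiszfeld iteration for the sample L1 median (assumed solver)."""
    mu = points.mean(axis=0)
    for _ in range(max_iter):
        dist = np.maximum(np.linalg.norm(points - mu, axis=1), 1e-12)
        w = 1.0 / dist
        new_mu = (w[:, None] * points).sum(axis=0) / w.sum()
        if np.linalg.norm(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu

def cumed_directions(X, y, d=1):
    """Sketch of CUMed: cumulative L1 medians in place of cumulative means."""
    n, p = X.shape
    # Step 1: standardize. Sample covariance is a NON-robust stand-in for MCD.
    mu = X.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    Z = (X - mu) @ inv_sqrt
    # Step 2: sort by response; one cumulative L1 median per observed y.
    Z_sorted = Z[np.argsort(y)]
    M = np.zeros((p, p))
    for k in range(1, n + 1):
        m_k = l1_median(Z_sorted[:k])
        # Step 3: weight by the proportion of points used (assumed form).
        M += (k / n) * np.outer(m_k, m_k)
    # Step 4: top-d eigenvectors, mapped back to the scale of X.
    w, v = np.linalg.eigh(M)
    V = v[:, np.argsort(w)[::-1][:d]]
    return inv_sqrt @ V
```

This naive version solves n separate median problems, so its cost grows quadratically in n; it is meant to show the structure of the algorithm rather than to be efficient.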

4. Numerical Results

In this section, we will discuss the numerical performance of the algorithm, both in a simulated as well as a real dataset setting.

4.1. Simulated Datasets

We compare the performance of our algorithm with algorithms based on means, like SIR and CUME, as well as with SIME, the robust version of SIR proposed by [8], which, like our method, uses the L1 median. We ran a number of simulation experiments using the following models:

- (1) Model 1:

- (2) Model 2:

- (3) Model 3:

- (4) Model 4:

- (5) Model 5:

for or 20 and . Note that . For SIR and SIME we used 10 slices. Note also that the predictors come either from multivariate standard normal, which produces no outliers, or from a multivariate standard Cauchy, which produces outliers. We denote them in the result Tables as follows:

- (Outl a): $X$ is from multivariate standard normal.

- (Outl b): $X$ is from multivariate standard Cauchy.
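The two predictor scenarios can be generated as in the sketch below. The exact generator used for the experiments is not given in the text, so this is an assumption: the multivariate standard Cauchy is drawn as a multivariate t distribution with one degree of freedom, that is, a standard normal vector divided by the absolute value of an independent standard normal variable. The function name `draw_predictors` is hypothetical.

```python
import numpy as np

def draw_predictors(n, p, scenario, rng):
    """Draw an n x p predictor matrix under scenario 'a' (normal) or 'b' (Cauchy)."""
    Z = rng.standard_normal((n, p))
    if scenario == "a":
        # (Outl a): multivariate standard normal, no outliers.
        return Z
    if scenario == "b":
        # (Outl b): multivariate standard Cauchy = multivariate t with 1 d.o.f.
        # Each row is divided by one shared |N(0, 1)| draw (assumed construction).
        W = np.abs(rng.standard_normal((n, 1)))
        return Z / W
    raise ValueError("scenario must be 'a' or 'b'")
```

Under scenario (b) a few rows typically lie very far from the bulk of the data, which is what makes mean-based estimators break down.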

We ran 100 simulations and report the average trace correlation and its standard errors to compare performance. Trace correlation is calculated as follows:

where is the projection matrix on the true subspace and the projection matrix on the estimated subspace. It takes values between 0 and 1 and the closer to 1, the better the estimation.
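A computation of this kind can be sketched as follows. The exact normalization is an assumption of this example: it uses the square root of the trace of the product of the two projection matrices divided by d, which is one common form of the trace correlation (some authors report the squared version instead). The function names are hypothetical, and both inputs are assumed to be p × d matrices with full column rank.

```python
import numpy as np

def projection(B):
    """Orthogonal projection matrix onto the column space of a p x d matrix B."""
    Q, _ = np.linalg.qr(np.asarray(B, dtype=float))  # orthonormal basis of span(B)
    return Q @ Q.T

def trace_correlation(B_true, B_hat):
    """sqrt(trace(P_true P_hat) / d); 1 means identical subspaces, 0 orthogonal ones."""
    d = np.asarray(B_true).shape[1]
    value = np.trace(projection(B_true) @ projection(B_hat)) / d
    return float(np.sqrt(max(value, 0.0)))  # clip tiny negative rounding errors
```

The measure depends only on the spanned subspaces, so rescaling or changing the sign of a basis vector leaves it unchanged.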

The results in Table 1 demonstrate the advantages of our algorithm. CUMed performs well when there are no outliers (comparable to CUME) and better than CUME when there are outliers. Compared with SIME (the robust version of SIR), our method performs better, with the exception of Model 2, where SIME performs slightly better. We also ran a more extensive simulation and saw that when , SIME tends to work better than CUMed.

Table 1.

Mean and standard errors (in parentheses) of trace correlation (4) for 100 replications for all the models for different values of p. We use and the number of slices in SIR and SIME.

In the second experiment, we illustrate the importance of using the L1 median by comparing CUMed based on the L1 median with CUMed based on the Oja median. Here, we emphasize that the Oja median is not unique and is also more expensive to compute. We ran the experiment for and under both scenarios, with and without outliers. As we can see from Table 2, the L1 median behaves better for all models and both scenarios.

Table 2.

Mean and standard errors (in parentheses) of trace correlation (4) for 100 replications for the performance of CUMed using L1 and Oja medians for all five models with and without outliers. and .

4.2. Real Data—Concrete Data

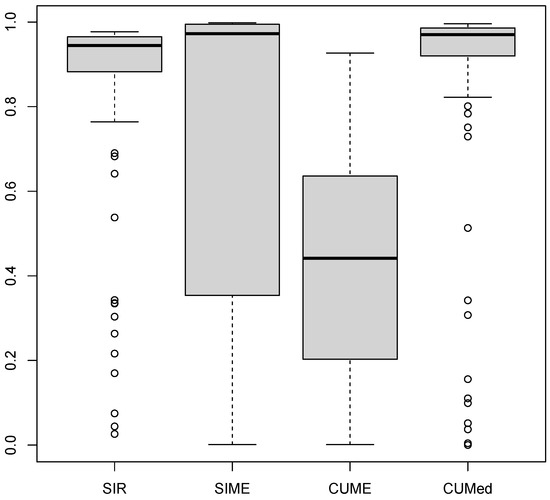

In this section, we compare the SIR, SIME, and CUME methods against our CUMed method on a real data set. We use the Concrete strength dataset (see [16]), which has eight predictors (Cement, Blast Furnace Slag, Fly Ash, Water, Superplasticizer, Coarse Aggregate, Fine Aggregate, Age) and a response variable (Concrete Strength). It has 1030 observations. To highlight our method's ability to estimate the central subspace in the presence of outliers, we ran 100 experiments in which we selected 30 data points at random and multiplied them by 10 (therefore creating about 3% outliers in the dataset). We then computed the trace correlation between the original projection matrix and the estimated projection matrix obtained from the data containing the outliers. As shown in Figure 1, if we compare the median trace correlations, both robust methods, SIME and CUMed, tend to stay much closer to the original projection (the one before introducing outliers) than SIR and CUME. CUMed is, overall, the best method, as most of its trace correlations are above 0.8 and only in a few cases fall below that. On the other hand, for SIME, we can see from the boxplot that Q1 is at 0.35 (as opposed to CUMed, which has Q1 at 0.9).
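The contamination step of this experiment can be sketched as below. This is an illustration under assumptions: the function name `contaminate` is hypothetical, the scaling is applied to whole rows of a predictor matrix (the text does not say whether the response is scaled as well), and the dataset itself is not loaded here.

```python
import numpy as np

def contaminate(X, n_outliers=30, factor=10.0, rng=None):
    """Return a copy of X with n_outliers randomly chosen rows multiplied by factor."""
    rng = np.random.default_rng() if rng is None else rng
    X_out = np.array(X, dtype=float, copy=True)  # leave the input untouched
    # Sample distinct row indices so exactly n_outliers rows are changed.
    rows = rng.choice(X_out.shape[0], size=n_outliers, replace=False)
    X_out[rows] *= factor
    return X_out, rows
```

Repeating this with a fresh random selection in each of the 100 runs, and comparing the subspace estimated from the contaminated data with the one estimated from the original data, gives one trace correlation per run and method.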

Figure 1.

Performance of 100 repetitions of the experiment of the introduction of outliers in the concrete strength dataset.

5. Discussion

In this work, we discussed a robust SDR methodology which uses the L1 median to expand the scope of a previously proposed algorithm in the SDR literature, CUME, which uses the first moments. The new method retains all the advantages of CUME, as it does not require tuning the number of slices, which earlier SDR methods like SIR did. In addition, it is robust to the presence of outliers, which CUME is not, as we demonstrated in our numerical experiments.

A number of papers have recently been introduced in the SDR literature to robustify against outliers. We believe that this work adds to the existing literature and opens new roads to further discuss robustness within the SDR framework. In addition to the methods already discussed, which are based on inverse moments, there is literature on Support Vector Machine (SVM) based SDR (see, for example, [17], who used adaptively weighted schemes for SVM-based robust estimation), as well as literature on iterative methods, like the ones proposed in [18,19].

Author Contributions

Conceptualization, A.A.; methodology, A.A. and S.B.; software, S.B.; validation, A.A. and S.B.; writing—original draft preparation, S.B.; writing—review and editing, A.A.; visualization, S.B. and A.A.; supervision, A.A.; project administration, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The study used publicly available data from the UC Irvine Machine Learning Repository, https://archive.ics.uci.edu/ml/index.php, accessed on 18 February 2021.

Acknowledgments

The authors would like to thank the Editors and three reviewers for their useful comments on the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yin, X.; Li, B.; Cook, R.D. Successive direction extraction for estimating the central subspace in a multiple-index regression. J. Multivar. Anal. 2008, 99, 1733–1757.

2. Li, B. Sufficient Dimension Reduction. Methods and Applications with R; Chapman and Hall/CRC: New York, NY, USA, 2018.

3. Li, K.-C. Sliced inverse regression for dimension reduction (with discussion). J. Am. Stat. Assoc. 1991, 86, 316–342.

4. Cook, R.D.; Weisberg, S. Discussion of “Sliced inverse regression for dimension reduction”. J. Am. Stat. Assoc. 1991, 86, 316–342.

5. Li, B.; Wang, S. On directional regression for dimension reduction. J. Am. Stat. Assoc. 2007, 102, 997–1008.

6. Artemiou, A.; Tian, L. Using slice inverse mean difference for sufficient dimension reduction. Stat. Probab. Lett. 2015, 106, 184–190.

7. Zhu, L.P.; Zhu, L.X.; Feng, Z.H. Dimension Reduction in Regression through Cumulative Slicing Estimation. J. Am. Stat. Assoc. 2010, 105, 1455–1466.

8. Dong, Y.; Yu, Z.; Zhu, L. Robust inverse regression for dimension reduction. J. Multivar. Anal. 2015, 134, 71–81.

9. Christou, E. Robust Dimension Reduction using Sliced Inverse Median Regression. Stat. Pap. 2018, 61, 1799–1818.

10. Babos, S.; Artemiou, A. Sliced inverse median difference regression. Stat. Methods Appl. 2020, 29, 937–954.

11. Cook, R.D. Regression Graphics: Ideas for Studying Regressions through Graphics; Wiley: New York, NY, USA, 1998.

12. Maechler, M.; Rousseeuw, P.; Croux, C.; Todorov, V.; Ruckstuhl, A.; Salibian-Barrera, M.; Verbeke, T.; Koller, M.; Conceicao, E.L.T.; di Palma, M.A. robustbase: Basic Robust Statistics R Package Version 0.93-3. 2018. Available online: http://CRAN.R-project.org/package=robustbase (accessed on 10 February 2021).

13. Olive, D.J. Robust Multivariate Statistics; Springer: Berlin/Heidelberg, Germany, 2017.

14. Olive, D.J. Robust Statistics. 2020. Available online: http://parker.ad.siu.edu/Olive/robbook.htm (accessed on 10 February 2021).

15. Cerioli, A.; Farcomeni, A.; Riani, M. Strong consistency and robustness of the Forward Search estimator of multivariate location and scatter. J. Multivar. Anal. 2014, 126, 167–183.

16. Yeh, I.-C. Modeling of strength of high performance concrete using artificial neural networks. Cem. Concr. Res. 1998, 28, 1797–1808.

17. Artemiou, A. Using adaptively weighted large margin classifiers for robust sufficient dimension reduction. Statistics 2019, 53, 1037–1051.

18. Wang, H.; Xia, Y. Sliced regression for dimension reduction. J. Am. Stat. Assoc. 2008, 103, 811–821.

19. Kong, E.; Xia, Y. An adaptive composite quantile approach to dimension reduction. Ann. Stat. 2014, 42, 1657–1688.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).