1. Introduction

Order picking refers to the process of collecting items from storage in a repository, typically encompassing these key steps: pre-action, searching, picking, transport, and additional supporting actions (Figure 1) [1]. It is a key task in several real contexts, such as warehouse logistics [2], and relies heavily on human expertise [3]. However, it is still performed with Paper Lists, Hand-Held Devices (HHDs), or Pick-by-Voice models. The Paper List is outdated and lacks automatic integration with the Warehouse Management System (WMS), which complicates the workflow, and, like Hand-Held Devices, it keeps the hands busy and causes distractions. Conversely, Pick-by-Voice models lead to frustration and alienation with prolonged use, while their efficiency is constrained by the pace of verbal exchanges [4]. All these models impose a high cognitive load on workers.

A possible solution to increase the performance, safety, and general well-being of pickers is Augmented Reality (AR) [5]. AR systems provide real-time digital information directly in the user’s field of view, without the use of hands, thus streamlining the order-picking process [6,7,8,9,10]. Compared to traditional Paper Lists, adopting AR technologies in industrial settings may involve higher upfront costs and integration challenges, but these represent an investment in process improvement, training time reduction, and greater sustainability. However, the use of AR Headsets in real contexts presents some limitations, such as high cost, heavy weight, visual obstruction, and cable connection for battery duration, leading to discomfort and risk issues [10,11,12].

AR Glasses are a viable alternative to AR Headsets: lightweight devices that have few sensors and limited monocular vision, but offer extended battery life, comfort, and safety at a lower cost [13]. The only drawback is the limited immersion, which is not required for order picking. A simple visualization of key information, such as the target position, can already be beneficial for pickers. To foster the adoption of AR Glasses and maximize their benefits, it is crucial to carefully consider the user interface and experience [14] and to settle visual and optometric issues such as discomfort and difficulty focusing [15]. Nevertheless, there is a research gap concerning the design and optimization of interfaces specifically targeted for AR Glasses, the use of such devices in the literature, and the user testing in real contexts [10]. The novelty of this work lies in the design of visualizations of the target position tailored for AR Glasses, in the use of a lightweight device in the user study, and in the simulation of the order-picking task in a wide laboratory environment.

This paper examines the following research questions:

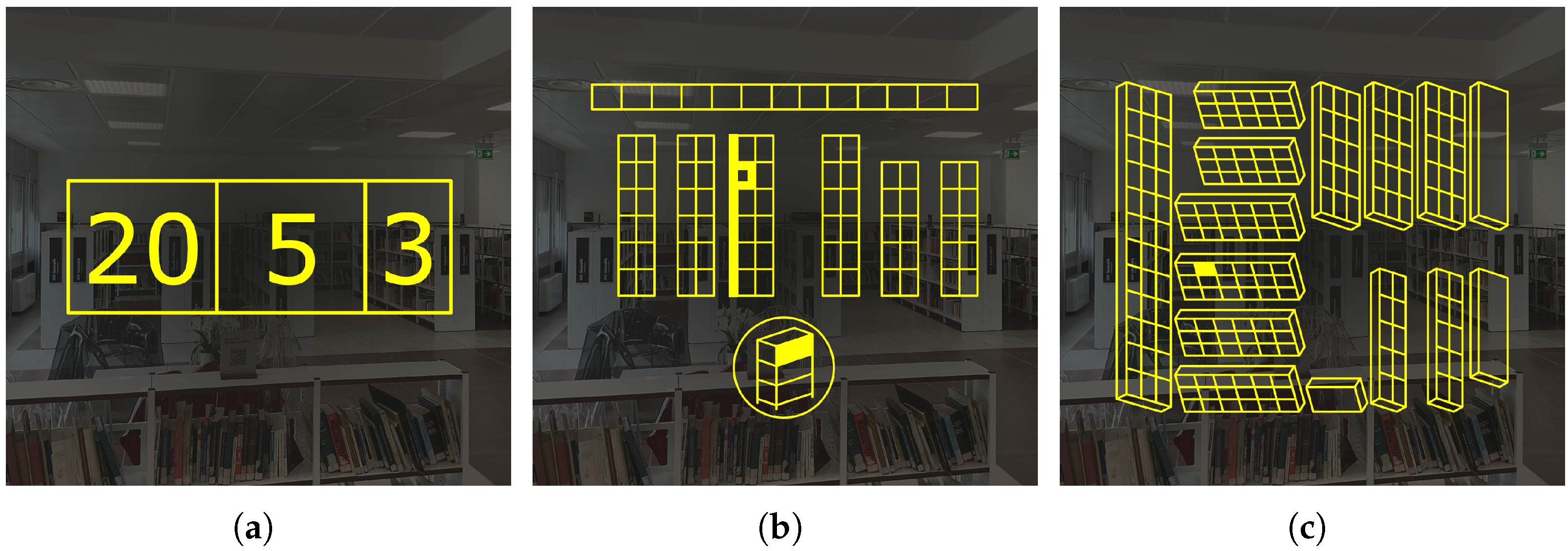

To address these research questions, this work reviews the state of the art and then conducts a within-subjects user study to compare three types of visualizations (Figure 2):

Numeric Code: three numbers related to lane, bay, and shelf of target position;

2D Map: a plan of the repository with the target position highlighted and a bookcase icon to show the shelf;

3D Map: an axonometric projection of the repository with the target position highlighted.

Figure 2.

Mock-Ups of the three visualizations designed for order picking and tested in this research. In picture (a), the Numeric Code visualization, made by lane, bay, and shelf of the target position; lane 20, bay 5, shelf 3 is used as an example. Numbers are placed on a single row, enclosed in a grid, written in regular weight of Verdana font, and are bright on a transparent background. In picture (b), the 2D-Map visualization, representing a section of the repository, with the target position highlighted. In picture (c), the 3D-Map visualization, an axonometric projection of the repository with tilted shelves for visibility purposes, in which the target position is highlighted. Made with Rhinoceros (version 7.29) and Adobe Illustrator (version 24.1.1.376).


This study encompasses an overview of prior research on AR visualizations for order picking, the design of tailored visualizations for AR Glasses, and a user study aimed at gathering empirical data and comparing these visualizations.

2. Related Works

A review by Fernandez et al. [10] on AR applications in industry identifies logistics as a key beneficiary of this technology, primarily due to hands-free operation, real-time process optimization, and increased efficiency. Nonetheless, the most commonly used devices, AR Headsets, remain largely confined to research settings due to ergonomic issues and high costs, which limit their practical deployment.

Jumahat et al. [16,17] conducted a review of 23 studies exploring the positive implications of AR systems in warehouses. Identified benefits include productivity, reduction of errors, better operational planning, safety, and ergonomics. However, the authors express the need for more empirical studies to improve AR systems and achieve greater acceptance.

Several studies have explored AR systems for order picking (Table 1), most of which involve scenarios related to logistics. Depending on the hardware used, different visualizations have proved more effective.

To make user studies more feasible, specific techniques can be utilized, such as the simulation of AR using a Virtual Reality (VR) Headset [28], the Wizard of Oz technique [21,27], in which the researcher (wizard) simulates system interactions by remote control, or the viewing of first-person point-of-view pictures of concepts [29].

To evaluate user interfaces, both quantitative and qualitative metrics are employed, including standard or custom ones. Quantitative ones may include Task Completion Time (TCT) [8,18,19,20,21,22,23,24,25,26,27,28], errors [8,18,19,20,21,22,23,24,25,26,27,28], tracked head movement [28], or distance traveled [23]. Qualitative metrics could consist of standard ones such as NASA-TLX [18,19,21,22,23,24,26,27,28], Comfort Rating Scale (CRS) [8], Simulator Sickness Questionnaire (SSQ) [8], System Usability Scale (SUS) [25], or custom questionnaires about user satisfaction [18,19,21,23,26,27,28], user preference [18,24,26], ease of use [18,24], ease of learning [21], and usability [19,22,23]. Open interviews can also be conducted [29] to gather feedback and design suggestions.

According to the work by Cetin et al. [30] on display dimensions and resolution for visual analytics, larger display sizes tend to increase work performance. Umair et al. [31] also investigated how different media influence spatial awareness, noting that it is significantly enhanced with both desktop 3D visualizations and immersive Head-Mounted Displays (HMDs). These findings highlight the importance of user interface optimization for AR Glasses that only support non-spatial visualizations on limited displays, to offset these limitations. Among the user studies reported (Table 1), there are only a few works with AR Glasses [8,23,24]; however, most of them address simple visualizations that are compatible even with limited displays.

All user studies collected are conducted in a laboratory setting. Setups for the repository environment usually do not exceed 10 lanes and have a regular layout. The most widely adopted baseline is the Paper List, which is also one of the most-used tools by logistics operators. The Paper List includes the code indicating the target position, composed of lane, bay, and shelf information. The Paper List baseline is usually outperformed by AR interfaces. The interfaces showing the best results are the Highlighted Position and the 2D Graph of the lane. One of the strengths of the Highlighted Position is its world-fixed tracking, which provides spatial information and avoids supplemental cognitive processing and eye shifts [32,33,34], but is not easily supported by AR Glasses.

Some studies developed head-fixed or body-fixed interfaces [8,18,25,27], which can work on devices with more basic hardware but can only show floating content distinct from the environment [35]. Hand-Held Devices also work as body-fixed interfaces [26]. Other studies developed world-fixed interfaces [19,20,21,22,23,24,25,26,28,29], in which the visualization is placed directly in the environment [35]. World-fixed interfaces place much higher demands on the hardware, since the environment must be tracked, and are currently out of reach for most AR Glasses.

In conclusion, it is well-established in the literature that AR systems outperform traditional tools and present a promising solution to improve warehouse processes. Although they offer substantial benefits in terms of efficiency and user experience, attention must be paid to ergonomic considerations to ensure that these technologies can be used for the prolonged duration of a work shift. More research and user testing on AR Glasses are needed to make them viable for industrial use.

3. Design of Visualizations

In this section, we design three visualizations for AR Smartglasses, namely Numeric Code, 2D Map, and 3D Map. They consist of static screens showing the target position for the order picking. A comparison between these visualizations is particularly insightful, given their differences in interpretation and visual complexity. The Numeric Code is the AR visualization of the target position as it is written in the Paper List or Tablet. Then, we design the 2D Map, which merges 2D Maps and 2D Graph of the lane present in the literature. Finally, we create a 3D Map as an alternative novel graphical visualization to push the limits of the AR Glasses screen. Unlike the 3D Graph of the single lane in the literature, it displays all the lanes in the environment. Visualizations are tailored specifically for the target repository layout, namely the public library serving as a large lab environment, and are specifically tuned for the implementation on the Engo 2 AR Smartglasses powered by ActiveLook. For visibility purposes, all the visualizations are sized to the maximum dimensions allowed by the screen’s useful area.

The Numeric Code visualization is made up of three numbers in a grid corresponding to lane, bay, and shelf (Figure 3). The Verdana font is chosen for its wide adoption in the literature, where it has become a standard thanks to its excellent readability on screens and in Augmented Reality applications [36,37,38,39,40]. The layout is selected following an internal pre-test and a consultation with a warehouse manager, in which the following factors were evaluated: one or two rows, regular or bold weight, with or without grid lines to separate blocks, and positive versus negative contrast polarity.
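As an illustration of the information carried by the Numeric Code, the following minimal sketch models a target position as three integers and renders them on a single row. The class name `TargetPosition`, the function `format_numeric_code`, and the `" | "` separator standing in for the grid lines are assumptions made for this example; they are not part of the implementation used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetPosition:
    """A storage location identified by lane, bay, and shelf numbers."""
    lane: int
    bay: int
    shelf: int


def format_numeric_code(pos: TargetPosition, sep: str = " | ") -> str:
    """Render the three numbers on a single row, in lane-bay-shelf order.

    The separator is a hypothetical stand-in for the grid lines of the mock-up.
    """
    return sep.join(str(n) for n in (pos.lane, pos.bay, pos.shelf))


# Example from the Figure 2 caption: lane 20, bay 5, shelf 3.
print(format_numeric_code(TargetPosition(lane=20, bay=5, shelf=3)))  # prints "20 | 5 | 3"
```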

The 2D Map consists of the plan of the repository (Figure 4a). The target lane and bay are highlighted with a thicker line. Fill color is not used because an internal pre-test showed that it is too bright and causes glare in the surrounding area. The target shelf is highlighted on a bookcase icon. The icon is axonometric and enclosed in a circle to differentiate it from the orthographic plan. In order to keep the graphic as wide as possible, the repository layout has been divided into two halves, with the visualization showing only the relevant one, and empty spaces are not to scale with the physical library. Moreover, one half is rotated 90 degrees relative to the other to better fit the aspect ratio of the screen. Despite this rotation, the orientation of the map is still deducible from the layout. Walls and landmarks of the library are omitted, keeping only the bookcase outlines and making the map schematic. Landmarks are excluded because they are not typical of a warehouse environment and would introduce bias into the study, since the literature shows that they enhance spatial awareness and navigation [41,42].

The 3D Map consists of the axonometric view of the layout designed with tilted bookcases to sharpen the distinction between the lines of the shelves (Figure 5b). Visualizations from two opposing viewpoints are needed to visualize the front and back of each bookcase, but cannot be shown at the same time because of the limited screen area. For this reason, unlike the 2D Map, no further division into areas is made, to avoid many different screens that may confuse the user. The target position is highlighted with the fill color. Even in this case, the landmarks of the library are intentionally omitted.

4. Methods

We present a within-subjects user study to compare Numeric Code, 2D Map, and 3D Map as AR Glasses visualizations showing the target position. They are tested through a simulated order-picking task in a public library.

4.1. Hypothesis

Numeric Code is expected to be more readable, given its visual simplicity, but could be mentally demanding in recalling the numbering system. In contrast, Maps do not require memory, but could require higher navigational skill and greater visual effort due to their visual complexity. These considerations lead to the formulation of the following hypotheses:

H1. Numeric Code is more efficient than Maps.

H2. Numeric Code is more accurate than Maps.

H3. Numeric Code is associated with lower cognitive load than Maps.

H4. Numeric Code is associated with lower eye strain than Maps.

H5. Numeric Code is preferred to Maps by users.

4.2. Setup

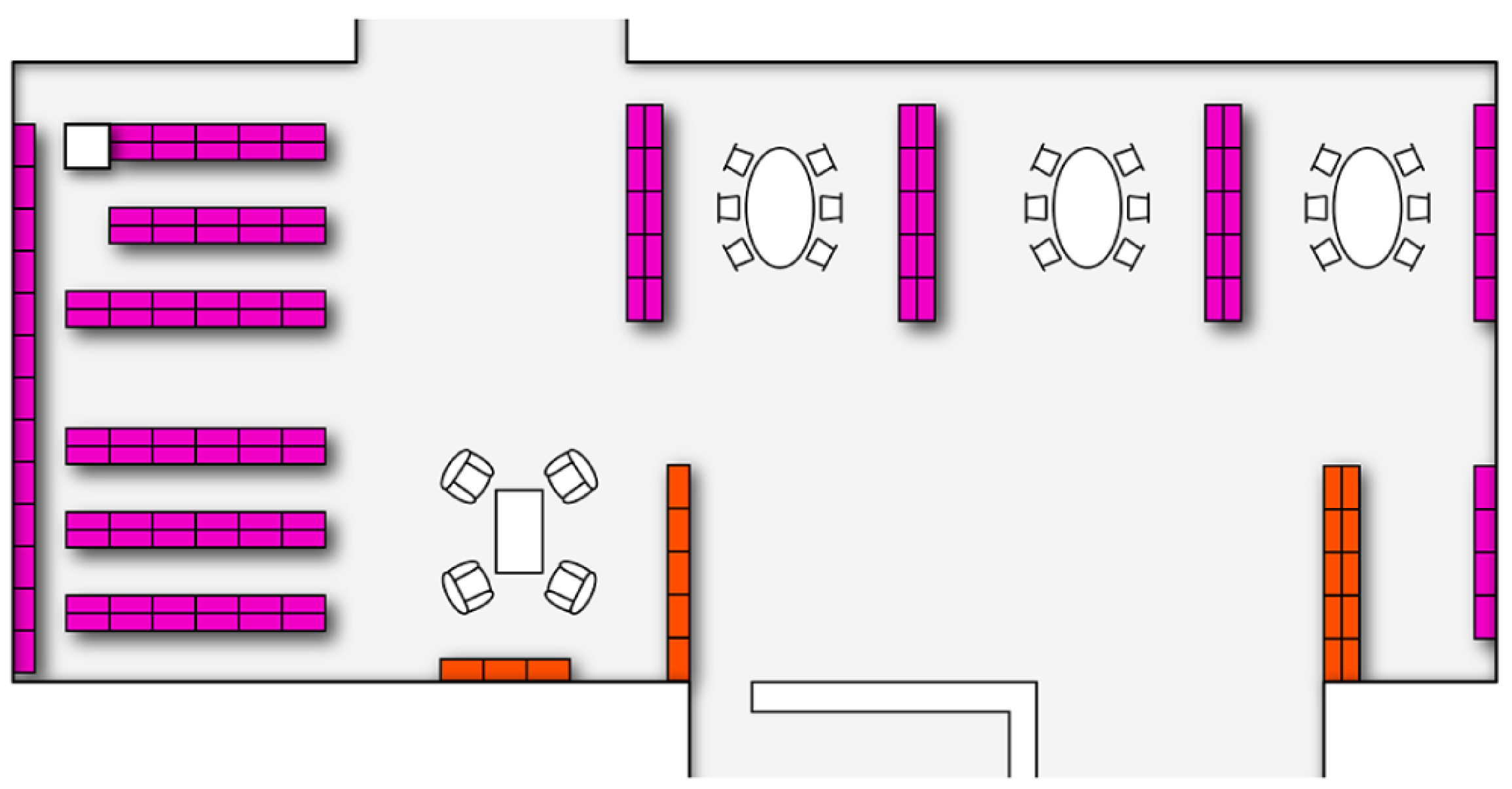

The simulation is performed in a library serving as a lab environment of approximately 286

with a total of 396 target positions (Figure 6). A numbering index is established to identify each target position. Numeric tags with lane numbers are placed at the beginning of each lane, while individual positions do not have tags.

The AR Glasses used are the ActiveLook-powered Engo 2, a commercial device with tinted lenses designed for outdoor sports activities. Following development guidelines [43] and internal pre-tests, margins were applied to the original resolution of

px, resulting in a usable area of

px. We then estimated through lab tests the diagonal FOV (Field of View) to be approximately 9°, significantly less than the 52° diagonal FOV of HoloLens 2 [44], taken as a reference for AR Headsets. The AR Glasses, working as a BLE device paired with a smartphone (Figure 7), display images to the user upon a button press, following a Wizard of Oz approach as in some works in the literature [21,27].
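The lab procedure used to estimate the FOV is not detailed here. One common geometric approach, shown below purely as a sketch, treats the displayed image as a flat rectangle centered on the line of sight and computes the angle subtended by its diagonal. The function names and the 1 m viewing distance are hypothetical choices for the example; only the 9° and 52° values come from the text.

```python
import math


def diagonal_fov_deg(image_diagonal: float, viewing_distance: float) -> float:
    """Angle (degrees) subtended by a flat image of the given diagonal,
    centered on the line of sight at the given distance (same length unit)."""
    return math.degrees(2.0 * math.atan(image_diagonal / (2.0 * viewing_distance)))


def image_diagonal_for_fov(fov_deg: float, viewing_distance: float) -> float:
    """Inverse relation: apparent diagonal size for a given diagonal FOV."""
    return 2.0 * viewing_distance * math.tan(math.radians(fov_deg) / 2.0)


# Apparent diagonal at a hypothetical 1 m distance for the two FOV values in the text.
glasses = image_diagonal_for_fov(9.0, 1.0)
headset = image_diagonal_for_fov(52.0, 1.0)
print(f"AR Glasses: {glasses:.3f} m, AR Headset: {headset:.3f} m")
```

Under this model, the gap between the two devices is larger than the ratio of the angles alone suggests, because the apparent size grows with the tangent of the half-angle.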

4.3. Participants

A total of 30 university student volunteers, 17 men and 13 women, aged 19–32 (mean

) participated in the user study. They had varied levels of education (8 high school diplomas, 6 bachelor’s degrees, 14 master’s degrees, 2 PhDs), and diverse previous experience with AR (mean

/7 Likert Scale) (Table 2). Recruitment was carried out in our institution, and participants received a beverage as an incentive. Requirements for participation were having normal vision or wearing contact lenses and being unfamiliar with the library layout, to avoid bias in navigating the environment.

4.4. Dependent Variables

Quantitative variables measured in this study are the Task Completion Time (TCT) and the errors, which are indicators of the efficiency and accuracy of the system.

Qualitative variables are collected through a user questionnaire, which starts with the standard scale Raw NASA-TLX, preferred to its weighted version, to streamline the filling of the questionnaire. Then there are five custom questions about Ease of Understanding, Required Previous Knowledge, Eye Stress, Visual Appeal, and Novelty, on a 7-point Likert scale. Finally, we record the user preference and collect open-answer feedback.
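For reference, the Raw NASA-TLX score is the unweighted mean of the six subscale ratings, which is what removes the pairwise weighting step of the full procedure. The sketch below shows that computation; the subscale keys and the example ratings are hypothetical and do not reproduce data from this study.

```python
from statistics import mean

# The six NASA-TLX subscales; the key names are chosen for this example.
TLX_SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted mean of the six subscale ratings (each on a 0-100 scale)."""
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[name] for name in TLX_SUBSCALES)


# Hypothetical ratings for one participant and one visualization.
example = {
    "mental_demand": 30,
    "physical_demand": 20,
    "temporal_demand": 40,
    "performance": 25,
    "effort": 35,
    "frustration": 30,
}
print(raw_tlx(example))  # prints 30
```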

4.5. Procedure

The test starts with a preliminary explanation, supported by slides, about the test, the layout of the library, and the task. The user then fills out the preliminary questionnaire. Subsequently, the simulated order picking is carried out with each of the three visualizations. A cross-Latin square design is used to minimize learning effects related to interface order, randomly assigning each participant a permutation of the use sequence. The use of each interface starts with tailored slide instructions and a training task that requires reaching three target positions. Then the actual task begins, which involves reaching 16 target positions in a row. The user is asked to reach and touch the target position shown in the glasses (Figure 8), then the next target position is shown.

The researcher checks the list of target positions and keeps track of any errors. The user has no feedback on the accuracy during the execution of the task and will only find out at the end of the test. Each of the three visualizations has its own list of target positions arranged so that each task requires the same walking distance and the TCT data are not biased. At the end of the task, the questionnaire is filled out. This procedure is repeated for each visualization. At the end of the last session, the user is asked which visualization they prefer.
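The counterbalancing of interface order described in the procedure can be sketched as follows. This example uses full counterbalancing over the six possible orders of three visualizations, which is one way to balance order effects and is an assumption here; it is not necessarily the exact assignment scheme used in the study. The function name `assign_orders` and the seed are hypothetical.

```python
import itertools
import random
from collections import Counter

VISUALIZATIONS = ("Numeric Code", "2D Map", "3D Map")


def assign_orders(n_participants: int, seed: int = 0) -> list[tuple[str, ...]]:
    """Give each participant one of the 3! = 6 presentation orders,
    using every order equally often, then shuffle who gets which."""
    orders = list(itertools.permutations(VISUALIZATIONS))
    if n_participants % len(orders) != 0:
        raise ValueError("participants must be a multiple of the number of orders")
    schedule = orders * (n_participants // len(orders))
    random.Random(seed).shuffle(schedule)
    return schedule


schedule = assign_orders(30)
# With 30 participants each order is used 5 times, so every visualization
# appears 10 times in each of the three positions.
print(Counter(order[0] for order in schedule))
```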

5. Results

JASP and SCImago Graphica are used for data analysis and plotting. The Bonferroni correction is used to determine the statistical significance (marginal significance p < 0.05 *; significance p < 0.01 **; high significance p < 0.001 ***).
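As a reminder of how the Bonferroni correction interacts with the thresholds above, the sketch below multiplies each raw p-value by the number of comparisons (capped at 1) and maps the adjusted value to the star notation. The function names and the raw p-values are hypothetical; the analysis itself was run in the statistical software, not with this code.

```python
def bonferroni_adjust(p_values: list[float]) -> list[float]:
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def significance_label(p_adjusted: float) -> str:
    """Map an adjusted p-value to the star notation used in this section."""
    if p_adjusted < 0.001:
        return "***"
    if p_adjusted < 0.01:
        return "**"
    if p_adjusted < 0.05:
        return "*"
    return "n.s."


# Hypothetical raw p-values for the three pairwise comparisons
# (Numeric Code vs 2D Map, 2D Map vs 3D Map, Numeric Code vs 3D Map).
raw = [0.0002, 0.004, 0.03]
labels = [significance_label(p) for p in bonferroni_adjust(raw)]
print(labels)  # prints ['***', '*', 'n.s.']
```

Note how the second and third comparisons each lose one level of significance once the correction for three comparisons is applied.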

5.1. Quantitative Data

The analysis of TCT (s) shows significant differences between Numeric Code (

s), 2D Map (

s), and 3D Map (

s) (

Figure 9). Comparing the individual TCTs of users, Numeric Code < 2D Map holds for 25/30 users, 2D Map < 3D Map for 24/30, Numeric Code < 3D Map for 29/30, and the full ordering Numeric Code < 2D Map < 3D Map for 19/30.
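The per-user comparison above amounts to counting, for each pairwise or full ordering, how many participants satisfy it. A minimal sketch of that count follows; the function name, the dictionary keys, and the four sample rows are hypothetical and are not the study data.

```python
def count_orderings(tct: list[tuple[float, float, float]]) -> dict[str, int]:
    """Count participants whose times satisfy each pairwise or full ordering.

    Each tuple holds one participant's TCT in seconds for
    (Numeric Code, 2D Map, 3D Map).
    """
    counts = {"NC<2D": 0, "2D<3D": 0, "NC<3D": 0, "NC<2D<3D": 0}
    for nc, map2d, map3d in tct:
        counts["NC<2D"] += nc < map2d
        counts["2D<3D"] += map2d < map3d
        counts["NC<3D"] += nc < map3d
        counts["NC<2D<3D"] += nc < map2d < map3d
    return counts


# Hypothetical times for four participants.
sample = [(100, 120, 150), (110, 105, 140), (130, 150, 145), (90, 95, 99)]
print(count_orderings(sample))
# prints {'NC<2D': 3, '2D<3D': 3, 'NC<3D': 4, 'NC<2D<3D': 2}
```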

The analysis of errors and error rate for Numeric Code (

, ≈

), 2D Map (

, ≈

), and 3D Map (

, ≈

) shows a highly significant difference only between Numeric Code and 3D Map (

Figure 9).
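One plausible reading of the error rate, assumed here for illustration, is the total number of errors divided by the number of picks performed per visualization, that is, 30 participants times 16 target positions, or 480 picks. The function name and the example error count are hypothetical.

```python
def error_rate(total_errors: int, n_participants: int = 30, n_targets: int = 16) -> float:
    """Share of wrong picks over all picks made with one visualization.

    Assumes at most one error per target position and that total_errors
    is summed over all participants.
    """
    trials = n_participants * n_targets
    if not 0 <= total_errors <= trials:
        raise ValueError("total_errors must lie between 0 and the number of picks")
    return total_errors / trials


# Hypothetical count: 24 wrong picks out of 480.
print(f"{error_rate(24):.1%}")  # prints "5.0%"
```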

For each visualization, the correlation between TCT and errors was analyzed using Pearson’s Correlation Coefficient, and it was found to be marginally significant only for the 3D Map (p = 0.012) (Figure 10). To determine the presence of a learning effect, we analyzed the relationship between mean TCT, errors, and task execution order; however, no significant effect was observed.
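For readers less familiar with the statistic, Pearson's correlation coefficient between per-user TCT and error counts can be computed as in the sketch below. It is written in plain Python for transparency, and the sample values are hypothetical; the significance test reported above was obtained from the statistical software and is not reproduced here.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient between two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have the same length of at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-user values: task completion time (s) and number of errors.
tct_seconds = [1, 2, 3, 4, 5]
errors = [2, 1, 4, 3, 5]
print(round(pearson_r(tct_seconds, errors), 3))  # prints 0.8
```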

5.2. Qualitative Data

The overall scores of Raw NASA-TLX reveal increasing values from Numeric Code (29/100) to 2D Map (42/100) and 3D Map (56/100). Lower values correspond to a lower applied load, which is desirable. Specifically, Mental Demand, Physical Demand, and Requested Effort differ significantly among the three visualizations (Figure 11). Concerning Frustration, the 3D Map differs with marginal significance from the 2D Map and with strong significance from the Numeric Code. In terms of Perceived Performance, the 3D Map shows much higher values compared to the Numeric Code, with a strong significance. The only exception to the trend, though not significant, is the Temporal Demand, where the Numeric Code value is higher than the others.

Regarding Ease of Understanding (Figure 12a), compared to Numeric Code (

) and 2D Map (

), the result of 3D Map (

) is strongly significant. Conversely, the Required Previous Knowledge does not show a significant difference between Numeric Code (

), 2D Map (

), and 3D Map (

). About the Eye Stress, Numeric Code (

) has a marginal significance compared to 2D Map (

), which has a marginal significance compared to 3D Map (

). The difference in Eye Stress between Numeric Code and 3D Map is strongly significant. Regarding Visual Appeal, there is a slight, nonsignificant preference for 2D Map (

) over Numeric Code (

) over 3D Map (

), while for Novelty, there is a minor and nonsignificant preference for 2D Map (

) compared to 3D Map (

) compared to Numeric Code (

). Finally, the user preference reveals 19 votes for Numeric Code, 10 for 2D Map, and 1 for 3D Map (Figure 12b).

We report user comments from the questionnaire, including general thoughts and feedback related specifically to the visualizations. Some users described the system as useful, innovative, or pleasant, suggesting improvements such as combining the Numeric Code with the 2D Map or integrating it with an axonometric view of the shelf. Some users declared that their experience was influenced more by the screen’s low resolution than by the visualizations themselves, mainly referring to the 3D Map. Comments about visibility focused on issues such as low resolution, position of the image in the FOV, high or low ambient lighting, and possible discomfort from prolonged use of contact lenses. Concerning the Numeric Code, one user said that it is intuitive, minimizes visual fatigue, and helps to concentrate more on the task. Some noted that, even after brief initial use, learning the numbering system was not difficult and that it would become even less of an issue with more practice. One comment suggested a potential issue with the Numeric Code for users with attention disorders. One user described Maps as somewhat dense, and some users cited the need to consult them more often than the code. Feedback on the 2D Map suggested that it is easy to use and beneficial for those unfamiliar with the space, though it could be improved and the 90° rotation between areas can be confusing. Some feedback about the 3D Map mentioned that it requires more thought and strains the eyes more, that the perspective switch makes memorization and use more difficult, and that the tilted bookcases are an unpleasant feature.

6. Discussion

In terms of performance, cognitive load, and user appreciation, the Numeric Code surpasses the 2D Map and the 3D Map, with an alignment between objective and subjective data.

As indicated by the TCT, H1 is confirmed, with a decrease in efficiency from the Numeric Code to the 2D Map and the 3D Map, especially when analyzing individual results, revealing that the slowdown is widespread. Observation of users’ behavior and received feedback suggest that the delays in Maps, especially for the 3D Map, are linked to changes in the map orientation, the need for multiple lookups, and vision issues like the low resolution, the position in the FOV, and ambient light conditions.

Looking at the errors, H2 is also supported, since Numeric Code is slightly more accurate than 2D Map and considerably more accurate than 3D Map. Looking at individual results, accuracy with Maps appears to be highly subjective, probably depending on orientation and interpretation skills. Most Numeric Code errors are inversions in the bays’ progression. With Maps, instead, users frequently misidentify the lane; specifically, in the 3D Map, misunderstanding the perspective leads to the selection of the lane directly behind the correct one. In this study, the Maps are static visualizations showing only the target position, to match the level of information provided by the Numeric Code. Future developments, leveraging external devices like the connected smartphone, could enable real-time positioning, allowing users to see their current location and heading on the map. This enhancement may increase the informativeness of the Map visualizations and potentially improve their performance. Looking at the correlation between efficiency and accuracy, for Numeric Code and the 2D Map, as expected, there is no significant relationship, since faster users tend to make more mistakes, while the significance observed for the 3D Map further highlights the issue.

Regarding cognitive load, H3 is validated, as the Raw NASA-TLX rates reveal an increasing workload across Numeric Code, 2D Map, and 3D Map. Compared to the average value of

reported by Hertzum [45] for Virtual Reality technologies, we can conclude that Numeric Code applies a low workload, the 2D Map applies a medium workload, and the 3D Map applies a high workload. The most noticeable differences can be found in Mental Demand, Physical Demand, and Effort. Temporal Demand is the only metric that shows an inverse pattern, likely because the Maps captured the attention of users and are more fun to use, leading to a reduced perception of time passing. Seeing a low Mental Demand for the Numeric Code means that remembering the numbering system of the layout is not an issue for users, as confirmed by some feedback. However, a map visualization could help new employees learn the layout. The result of Ease of Understanding further confirms the dominance of the Numeric Code. A slightly higher requirement for Previous Knowledge of the Numeric Code than the Maps did not negatively affect its effectiveness.

It is important to note the high significance of the difference in Physical Demand between the Maps and the Numeric Code; since the walking distance is the same for each visualization, the physical effort is primarily related to vision. This result, along with the one specifically related to Eye Stress, which is also significant, supports H4 by showing that Maps caused more eye fatigue than the Numeric Code. In a warehouse setting, using an interface that strains the eyes for a long time would compromise both practicality and safety. For this reason, it is preferable to avoid small graphical elements like small Maps and instead rely on a clear Numeric Code.

Users’ ratings of Visual Appeal and Novelty provide insights into their attitude toward the visualizations, which may express their own visual identity and personality. The 2D Map is rated as more visually appealing, and both Maps are seen as more novel, though not significantly. However, the overall user preference leans towards the Numeric Code, with 19/30 votes, thus supporting H5. Nevertheless, this result only partially reflects the previous ones, since 10/30 users still preferred the 2D Map. In this regard, as suggested by some users and confirmed in the experiment conducted by Li et al. [8], the combination of Numeric Code and a Map could be an effective solution: even on a graphical interface, it is useful to keep the code as a check. In future work, a novel interface that merges the two kinds of visualizations can be designed and assessed.

Among existing studies, the work by Klinker et al. aligns most closely with these findings, showing superior performance linked to the Numeric Code [26]. This is likely influenced by the devices used in their experiments, characterized by low resolution and small FOV, like the AR Glasses used in our user study. Our results differ from other user studies that utilized full-featured AR Headsets with higher visibility, which support the superiority of graphical visualizations such as 2D Map and 2D/3D Graph of the lane. This further confirms the necessity to design interfaces according to the target device.

This study has some limitations, including the specificity of the conditions examined in terms of environment, which may affect the scalability of the results. Further studies might investigate map designs, given the wide range of their design choices. In addition, future work can conduct additional field tests with professional order pickers in industrial scenarios. An aspect to consider is that the duration of the task in this study was relatively short, which streamlined data collection, but may leave room for future studies to explore longer-term effects and acceptance. Another potential direction for future research is analyzing how the type of errors varies with the visualization method, which could offer deeper insights into users’ spatial awareness and navigation skills.

7. Conclusions

From the literature analysis, it emerged that both textual and graphical AR visualizations represent effective evolutions of the traditional Paper List to visualize the target position in order picking. However, this user study demonstrates that, as a visualization on lightweight AR Glasses with limited resolution and FOV, the Numeric Code interface is the most usable due to its visual simplicity.

In summary, we can determine the following:

Highlighted Position, 2D Graphics, and Numeric Code are the most supportive AR visualizations of target position for order picking in the literature.

Numeric Code is more supportive than 2D and 3D Maps for order picking with AR Glasses.

This implies that device capabilities must be carefully considered when choosing and designing AR interfaces for AR Glasses. If the device is limited, designers should prioritize a minimal interface with only essential information that is graphically simple, adding contextual details only if the device can handle it, and being aware of the cognitive overload and optical issues. Design choices should also be driven by the specific kind of task and the complexity of the environment. Advancements in interfaces for AR Glasses could positively influence increasingly complex workflows in the industry, enhancing efficiency and user experience, and fostering the adoption of the technology. With AR Glasses, a forklift operator can check the target location at any moment with a quick glance, without the need to stop driving and use their hands. We contribute by exploring AR solutions that, despite relying on limited hardware, remain attractive to companies because of their supportiveness, low cost, and comfort. This study builds on previous research, not only reinforcing existing findings in the literature but also adding compelling arguments that drive the debate forward.

Author Contributions

Conceptualization, D.G. and M.F.; methodology, D.G. and M.F.; software, D.G.; validation, D.G.; formal analysis, D.G., F.M., M.D. and M.F.; investigation, D.G.; resources, D.G.; data curation, D.G. and M.D.; writing—original draft preparation, D.G.; writing—review and editing, F.M., M.D. and M.F.; visualization, D.G.; supervision, M.F.; project administration, D.G. and M.F.; funding acquisition, M.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Polytechnic University of Bari (16 June 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

Ph.D. in Smart e Sustainable Industry XXXIX cycle—Polytechnic Institute of Bari, co-funded by DM n. 117/2023, PNRR, Mission 4, component 2 “From Research to Business”—Investment 3.3 “Introduction of innovative Ph.D. programs that address the innovation needs of businesses and promote the hiring of researchers by companies” and Genesys Software Srl. During the preparation of this manuscript, the author(s) used ChatGPT 4o for the purpose of improving language and readability. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AR | Augmented Reality |
| VR | Virtual Reality |
| WMS | Warehouse Management System |
| TCT | Task Completion Time |
| CRS | Comfort Rating Scale |
| SSQ | Simulator Sickness Questionnaire |
| SUS | System Usability Scale |
| HMD | Head-Mounted Display |
| FOV | Field Of View |
| BLE | Bluetooth Low Energy |

References

1. Chen, T.L.; Cheng, C.Y.; Chen, Y.Y.; Chan, L.K. An efficient hybrid algorithm for integrated order batching, sequencing and routing problem. Int. J. Prod. Econ. 2015, 159, 158–167.

2. Giannikas, V.; Lu, W.; Robertson, B.; McFarlane, D. An interventionist strategy for warehouse order picking: Evidence from two case studies. Int. J. Prod. Econ. 2017, 189, 63–76.

3. Wang, W.; Wang, F.; Song, W.; Su, S. Application of augmented reality (AR) technologies in inhouse logistics. E3S Web Conf. 2020, 145, 02018.

4. Nicole, Y. Designing SpeechActs: Issues in Speech User Interfaces. In Proceedings of the CHI95, Denver, CO, USA, 7–11 May 1995.

5. Stoltz, M.H.; Giannikas, V.; McFarlane, D.; Strachan, J.; Um, J.; Srinivasan, R. Augmented reality in warehouse operations: Opportunities and barriers. IFAC-PapersOnLine 2017, 50, 12979–12984.

6. Moon, P. Js-04 effects of augmented reality control method on task performance and subjective experience during order picking. Jpn. J. Ergon. 2023, 59, JS-04.

7. Vidovič, E.; Gajšek, B. Analysing picking errors in vision picking systems. Logist. Sustain. Transp. 2020, 11, 90–100.

8. Li, K. Assessments of order-picking tasks using a paper list and augmented reality glasses with different order information displays. Appl. Sci. 2023, 13, 12222.

9. Gajšek, B.; Herzog, N. Potential of smart glasses in manual order picking systems. Destech Trans. Soc. Sci. Educ. Hum. Sci. 2020, 1, 101–107.

10. Fernández-Moyano, J.A.; Remolar, I.; Gómez-Cambronero, Á. Augmented Reality’s Impact in Industry—A Scoping Review. Appl. Sci. 2025, 15, 2415.

11. Epe, M.; Azmat, M.; Islam, D.M.Z.; Khalid, R. Use of Smart Glasses for Boosting Warehouse Efficiency: Implications for Change Management. Logistics 2024, 8, 106.

12. Odell, D.; Dorbala, N. The effects of head mounted weight on comfort for helmets and headsets, with a definition of “comfortable wear time”. Work 2024, 77, 651–658.

13. Pádua, L.; Adão, T.; Narciso, D.; Cunha, A.; Magalhães, L.; Peres, E. Towards modern cost-effective and lightweight augmented reality setups. Int. J. Web Portals (IJWP) 2015, 7, 33–59.

14. Sever, M. Service process enhancement in medical tourism with support of augmented reality. Pamukkale Üniversitesi Işletme Araştırmaları Dergisi 2023, 10, 854–867.

15. Herzog, N.; Buchmeister, B.; Beharic, A.; Gajšek, B. Visual and optometric issues with smart glasses in industry 4.0 working environment. Adv. Prod. Eng. Manag. 2018, 13, 417–428.

16. Jumahat, S.; Sidhu, M.; Shah, S. A review on the positive implications of augmented reality pick-by-vision in warehouse management systems. Acta Logist. 2023, 10, 1–10.

17. Jumahat, S.; Sidhu, M.S.; Shah, S.M. Pick-by-vision of Augmented Reality in Warehouse Picking Process Optimization—A Review. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 13–15 September 2022; pp. 1–6.

18. Guo, A.; Raghu, S.; Xie, X.; Ismail, S.; Luo, X.; Simoneau, J.; Gilliland, S.; Baumann, H.; Southern, C.; Starner, T. A comparison of order picking assisted by head-up display (HUD), cart-mounted display (CMD), light, and paper pick list. In Proceedings of the 2014 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 13–17 September 2014; pp. 71–78.

19. Reif, R.; Günthner, W.A.; Schwerdtfeger, B.; Klinker, G. Evaluation of an augmented reality supported picking system under practical conditions. Comput. Graph. Forum 2010, 29, 2–12.

20. Schwerdtfeger, B.; Reif, R.; Günthner, W.A.; Klinker, G. Pick-by-vision: There is something to pick at the end of the augmented tunnel. Virtual Real. 2011, 15, 213–223.

21. Hanson, R.; Falkenström, W.; Miettinen, M. Augmented reality as a means of conveying picking information in kit preparation for mixed-model assembly. Comput. Ind. Eng. 2017, 113, 570–575.

22. Maio, R.; Santos, A.; Marques, B.; Ferreira, C.; Almeida, D.; Ramalho, P.; Batista, J.; Dias, P.; Santos, B.S. Pervasive Augmented Reality to support logistics operators in industrial scenarios: A shop floor user study on kit assembly. Int. J. Adv. Manuf. Technol. 2023, 127, 1631–1649.

23. Fang, W.; An, Z. A scalable wearable AR system for manual order picking based on warehouse floor-related navigation. Int. J. Adv. Manuf. Technol. 2020, 109, 2023–2037.

24. Rehman, U.; Cao, S. Augmented-reality-based indoor navigation: A comparative analysis of handheld devices versus google glass. IEEE Trans. Hum.-Mach. Syst. 2016, 47, 140–151.

25. Albawaneh, A.; Agnihothram, V.; Wu, J.; Singla, G.; Kim, H. Augmented reality for warehouse: Aid system for foreign workers. In Proceedings of the 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 25–29 March 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 432–433.

26. Klinker, G.; Frimor, T.; Pustka, D.; Schwerdtfeger, B. Mobile information presentation schemes for supra-adaptive logistics applications. In Proceedings of the 16th International Conference on Artificial Reality and Telexistence, Hangzhou, China, 29 November–1 December 2006; pp. 998–1007.

27. Iben, H.; Baumann, H.; Ruthenbeck, C.; Klug, T. Visual based picking supported by context awareness: Comparing picking performance using paper-based lists versus lists presented on a head mounted display with contextual support. In Proceedings of the 2009 International Conference on Multimodal Interfaces, Cambridge, MA, USA, 2–4 November 2009; pp. 281–288.

28. Renner, P.; Pfeiffer, T. [POSTER] Augmented Reality Assistance in the Central Field-of-View Outperforms Peripheral Displays for Order Picking: Results from a Virtual Reality Simulation Study. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 176–181.

29. Elbert, R.; Sarnow, T. Augmented reality in order picking—Boon and bane of information (over-) availability. In Proceedings of the Intelligent Human Systems Integration 2019: Proceedings of the 2nd International Conference on Intelligent Human Systems Integration (IHSI 2019): Integrating People and Intelligent Systems, San Diego, CA, USA, 7–10 February 2019; Springer: Cham, Switzerland, 2019; pp. 400–406.

30. Cetin, G.; Stuerzlinger, W.; Dill, J. Visual analytics on large displays: Exploring user spatialization and how size and resolution affect task performance. In Proceedings of the 2018 International Symposium on Big Data Visual and Immersive Analytics (BDVA), Konstanz, Germany, 17–19 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–10.

31. Umair, M.; Sharafat, A.; Lee, D.E.; Seo, J. Impact of virtual reality-based design review system on user’s performance and cognitive behavior for building design review tasks. Appl. Sci. 2022, 12, 7249.

32. Tang, A.; Owen, C.; Biocca, F.; Mou, W. Comparative effectiveness of augmented reality in object assembly. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003; pp. 73–80.

33. Henderson, S.; Feiner, S. Exploring the benefits of augmented reality documentation for maintenance and repair. IEEE Trans. Vis. Comput. Graph. 2010, 17, 1355–1368.

34. Laera, F.; Manghisi, V.M.; Evangelista, A.; Uva, A.E.; Foglia, M.M.; Fiorentino, M. Evaluating an augmented reality interface for sailing navigation: A comparative study with a immersive virtual reality simulator. Virtual Real. 2023, 27, 929–940.

35. Syed, T.A.; Siddiqui, M.S.; Abdullah, H.B.; Jan, S.; Namoun, A.; Alzahrani, A.; Nadeem, A.; Alkhodre, A.B. In-depth review of augmented reality: Tracking technologies, development tools, AR displays, collaborative AR, and security concerns. Sensors 2022, 23, 146.

36. Matsuura, Y.; Terada, T.; Aoki, T.; Sonoda, S.; Isoyama, N.; Tsukamoto, M. Readability and legibility of fonts considering shakiness of head mounted displays. In Proceedings of the 2019 ACM International Symposium on Wearable Computers, London, UK, 9–13 September 2019; pp. 150–159.

37. Ali, A.Z.M.; Wahid, R.; Samsudin, K.; Idris, M.Z. Reading on the Computer Screen: Does Font Type Have Effects on Web Text Readability? Int. Educ. Stud. 2013, 6, 26–35.

38. Hojjati, N.; Muniandy, B. The effects of font type and spacing of text for online readability and performance. Contemp. Educ. Technol. 2014, 5, 161–174.

39. Sheedy, J.E.; Subbaram, M.V.; Zimmerman, A.B.; Hayes, J.R. Text legibility and the letter superiority effect. Hum. Factors 2005, 47, 797–815.

40. Cardenas, D.G.; Ginters, E.; del Rio, M.S.; Martin-Gutierrez, J. Determining the Legibility of Fonts Displayed in Augmented Reality Apps for Senior Citizens. In Proceedings of the 2021 62nd International Scientific Conference on Information Technology and Management Science of Riga Technical University (ITMS), Riga, Latvia, 14–15 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

41. Puikkonen, A.; Haveri, M.; Sarjanoja, A.H.; Huhtala, J.; Häkkilä, J. Improving the UI design of indoor navigation maps. In Proceedings of the UIST’09, Victoria, BC, Canada, 4–7 October 2009.

42. Fang, H.; Xin, S.; Zhang, Y.; Wang, Z.; Zhu, J. Assessing the influence of landmarks and paths on the navigational efficiency and the cognitive load of indoor maps. ISPRS Int. J. Geo-Inf. 2020, 9, 82.

43. ActiveLook. Developer Guide ActiveLook. 2023. Available online: https://www.activelook.net/news-blog/developing-with-activelook-getting-started (accessed on 10 January 2025).

44. Goode, L. Microsoft’s HoloLens 2 Puts a Full-Fledged Computer on Your Face. 2019. Available online: https://www.wired.com/story/microsoft-hololens-2-headset/ (accessed on 10 January 2025).

45. Hertzum, M. Reference values and subscale patterns for the task load index (TLX): A meta-analytic review. Ergonomics 2021, 64, 869–878.

Figure 1.

This sketch shows the execution of the order-picking task. The operator has a list of products to collect, which they consult using either a Paper List or a mobile barcode scanner. The key information needed includes the product, its location, and the quantity to be picked. In this case, the operator must assemble a multi-reference pallet, meaning a pallet containing different types of items picked from different locations in the warehouse. Made with Procreate (version 5.3.15).

Figure 3.

Mock-Ups of Numeric Code variations explored during an internal pre-test. Lane 20, bay 5, shelf 3 is used as an example. Variations with respect to the final design are 1/2 row (a), regular/bold (b), with/without grid (c), and positive/negative (d). Made with Adobe Illustrator (version 24.1.1.376).

Figure 4.

The first half of the 2D Map representing the environment (a) and the second half (b). They are displayed individually and are rotated 90 degrees relative to each other with respect to the real environment. The lane and the bay of the target position are highlighted with thicker lines, while the shelf is highlighted with the fill color in the bookcase icon. Made with Adobe Illustrator (version 24.1.1.376).

Figure 5.

The front viewpoint of the 3D Map (a) and the back viewpoint (b). The target position is highlighted with the fill color. Bookcases are tilted to sharpen the distinction between the lines of the shelves. Made with Adobe Illustrator (version 24.1.1.376).

Figure 6.

Plan of the library serving as lab environment. The following are indicated: in hot pink, 3-level shelves; in orange, 2-level shelves; and in white, furniture. Two areas can be recognized according to the layout: the left one with 6 shelves in series, arranged very close to each other in a comb pattern, and the right one with shelves spaced far apart. There are 25 lanes, most of which consist of 5 or 6 bays, each typically containing three shelves. The lane count follows the perimeter of the library, the bay count follows the direction from the center to the library’s walls, and the shelf count goes from the bottom to the top. Made with Adobe Illustrator (version 24.1.1.376).

Figure 7.

Picture of the hardware setup. The AR Glasses connect to the smartphone via BLE (Bluetooth Low Energy).

Figure 8.

A user executing the simulated order-picking task in the user study, in the act of touching the target position (a). Pictures taken from behind the AR Glasses’ lenses of the visualizations compared in the test: Numeric Code (b), 2D Map (c), and 3D Map (d). The employed device does not support screen mirroring; therefore, the visualizations were photographed through the lenses.

Figure 9.

The charts illustrate the TCTs and errors for each visualization. A box plot displays the TCTs (a). Black dots represent the outliers, “**” indicates significance and “***” indicates high significance. A line chart shows individual users’ results (b); each user is represented by a line; the trend shows an upward pattern, with the only exceptions highlighted and clustered with colors. A box plot illustrates the number of errors users made while performing the task (c). Black dots represent the outliers and “***” indicates high significance. A line chart shows the errors of each user (d); each user is represented by a line; the trend reveals an upward pattern, with the only exceptions highlighted and clustered with colors. Made with Scimago Graphica (version 1.0.50) and Adobe Illustrator (version 4.1.1.376).

Figure 10.

Correlation between TCT and errors for each visualization. The Numeric Code (a) and the 2D Map (b) show no significant relationship. The 3D Map (c), shown in larger detail, shows a marginally significant correlation, indicated by “*”. Made with Scimago Graphica (version 1.0.50) and Adobe Illustrator (version 4.1.1.376).

Figure 11.

Raw NASA-TLX ratings referring to each visualization, along with their associated significance levels. “*” indicates marginal significance, “**” indicates significance and “***” indicates high significance. A shift to the left indicates a lower workload, reflecting a more favorable outcome. Made with Scimago Graphica (version 1.0.50) and Adobe Illustrator (version 4.1.1.376).

Figure 12.

Answers to the questionnaire along with their significance levels are shown, with the first two derived from the UEQ (a). “*” indicates marginal significance and “***” indicates high significance. The user preference is shown (b). Made with Scimago Graphica (version 1.0.50) and Adobe Illustrator (version 4.1.1.376).

Table 1.

Overview of user studies about AR systems for order picking found in the literature. Employed devices, visualizations with better performances, and the other visualizations involved in the comparison are reported.

| Study | Employed Devices | Best Visualizations | Other Visualizations |
|---|---|---|---|
| [8] | Smartglasses | Numeric Code + 2D Graph of the Lane, Numeric Code + 3D Graph of the Lane | Paper List |
| [7] | AR Headset, Cart-Mounted Display, Pick By Light | Highlighted Position | Paper List |
| [18] | AR Headset, Cart-Mounted Display, Head-Up Display | 2D Graph of the Lane | Paper List, Highlighted Position |
| [19] | AR Headset | 2D Graph of the Lane | Paper List |
| [20] | AR Headset | Highlighted Position with Frame, Highlighted Position with Tunnel | Highlighted Position with Arrow |
| [21] | AR Headset | Highlighted Position with Tunnel | Paper List |
| [22] | AR Headset | Highlighted Position | Paper List |
| [23] | AR Headset | Highlighted Position | Paper List |
| [24] | Smartglasses, Hand-Held Device | Spatial Arrows | Paper Map |
| [25] | AR Headset | Highlighted Path | Paper List |
| [26] | AR Headset (low res.), Hand-Held Device (low res.) | Numeric Code | 2D Map, Spatial Arrows |
| [27] | AR Headset | Numeric Code | Paper List |

Table 2.

Summary of participants’ demographics and AR experience.

| Gender | Male | Female | | |
|---|---|---|---|---|
| | 17 | 13 | | |
| Age | Min | Max | Mean | St. Dev |
| | 19 | 32 | 25 | 3 |
| Level of education | High school diploma | Bachelor’s degree | Master’s degree | PhD |
| | 8 | 6 | 14 | 2 |
| Experience with AR (1–7) | Min | Max | Mean | St. Dev |
| | 1 | 7 | 4 | 2.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).