A Brief Introduction to Nonlinear Time Series Analysis and Recurrence Plots

Abstract

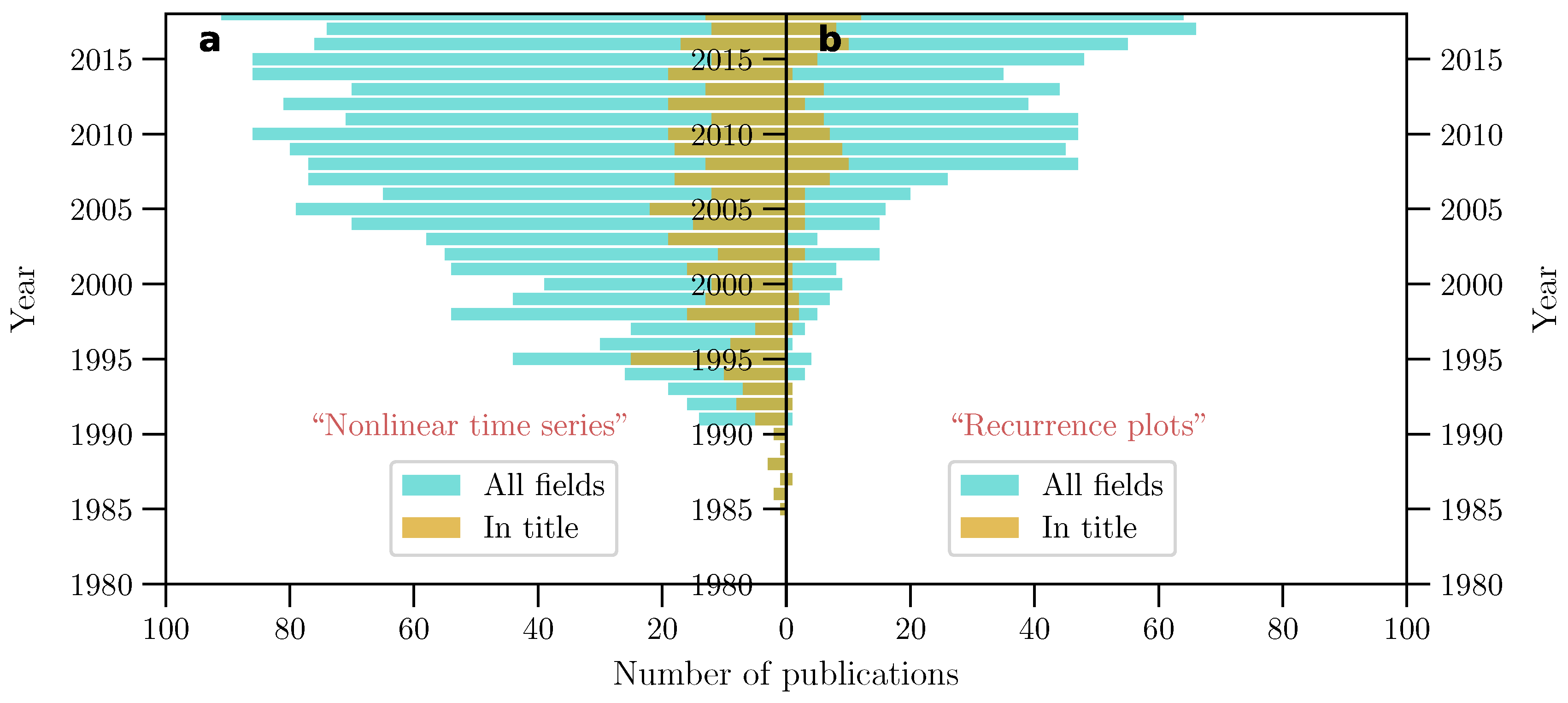

1. Historical Background

2. Recurrence Plots in Engineering Research

3. About This Tutorial Review

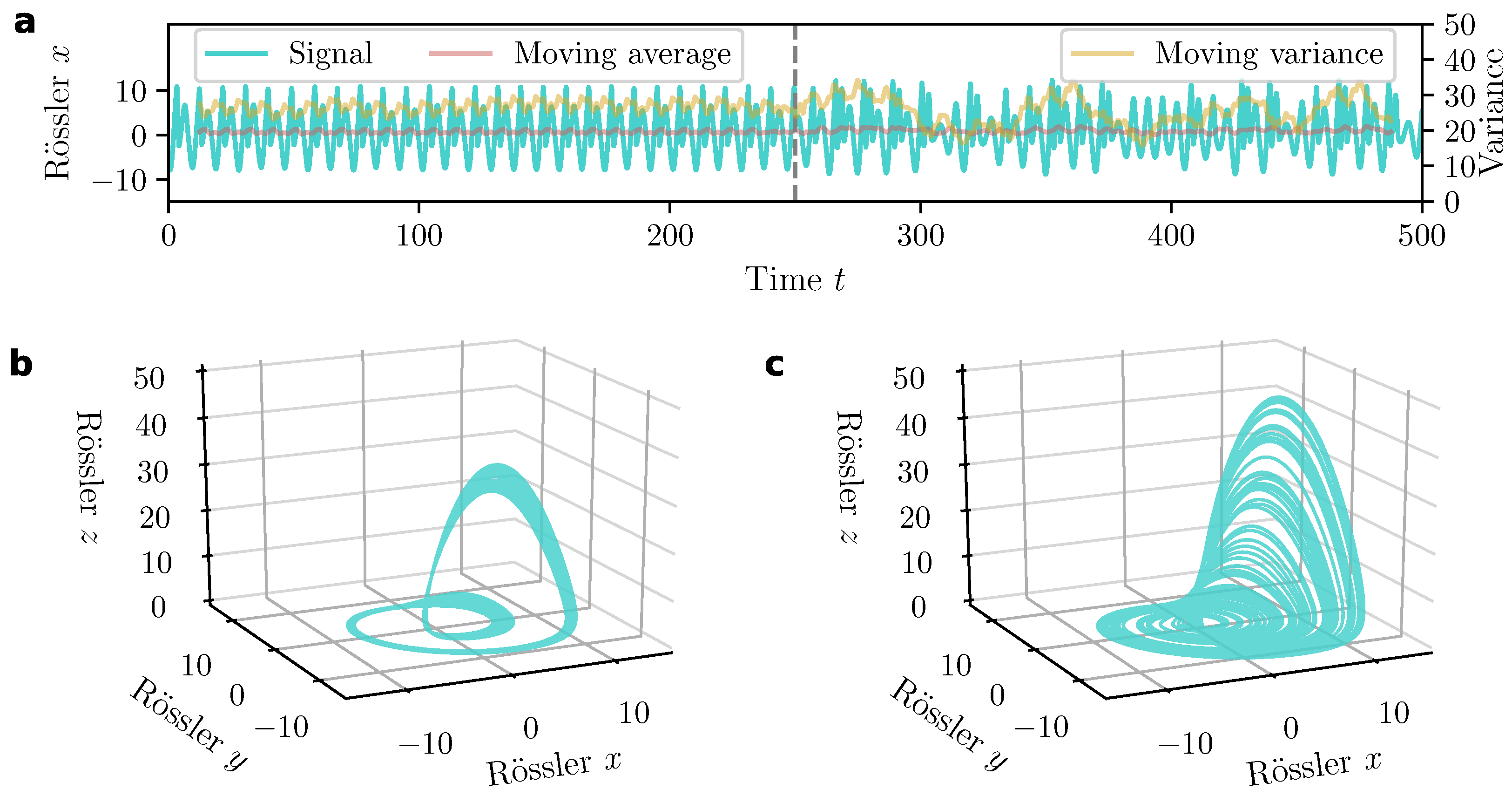

4. Consequences of Nonlinearity

4.1. Predictability

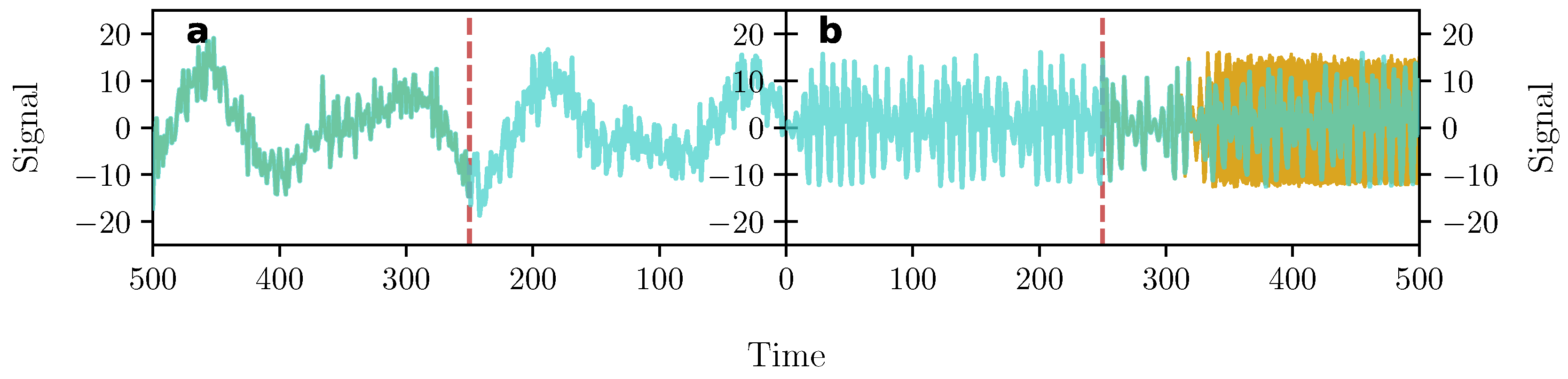

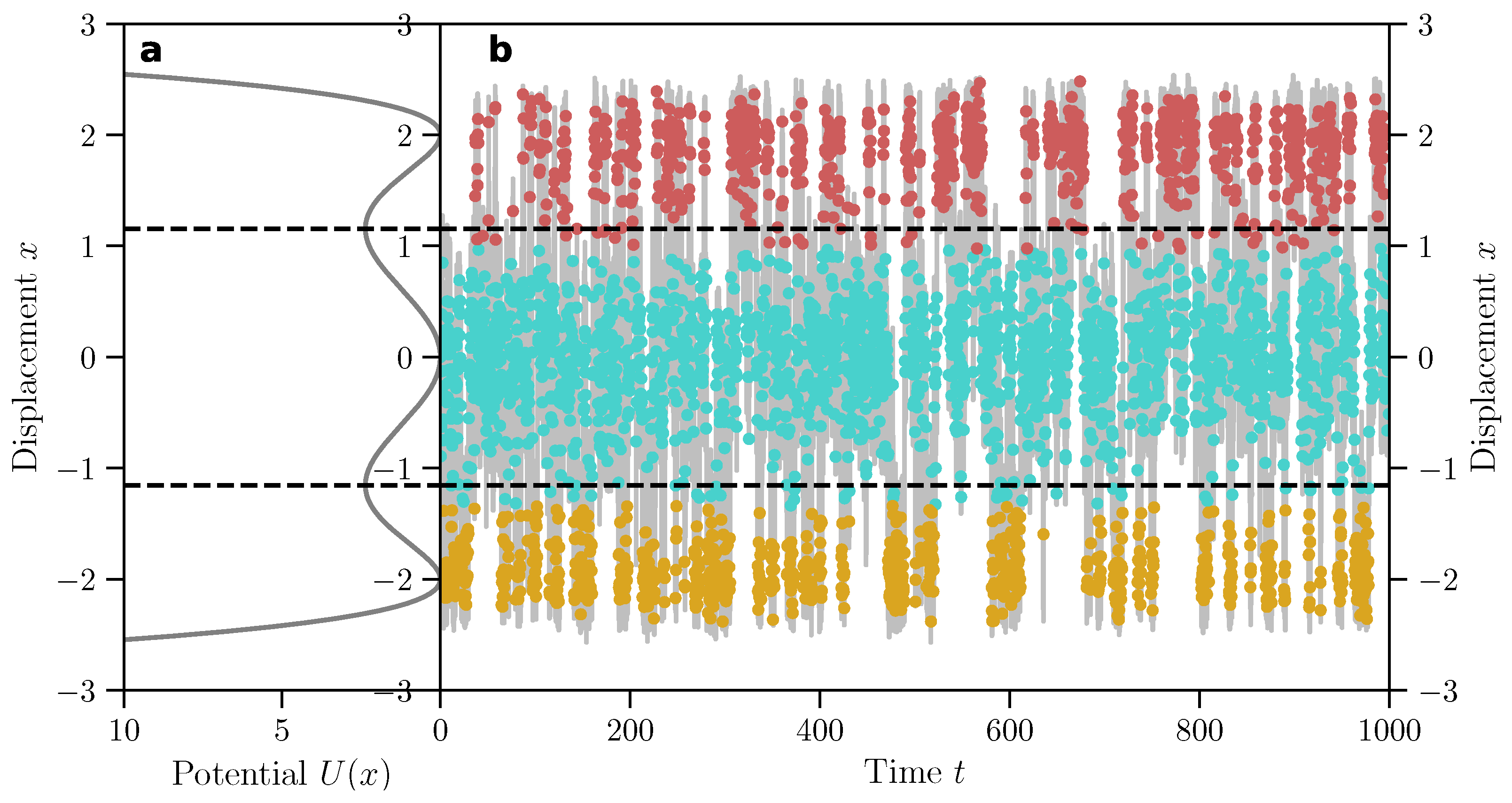

4.2. Transitions

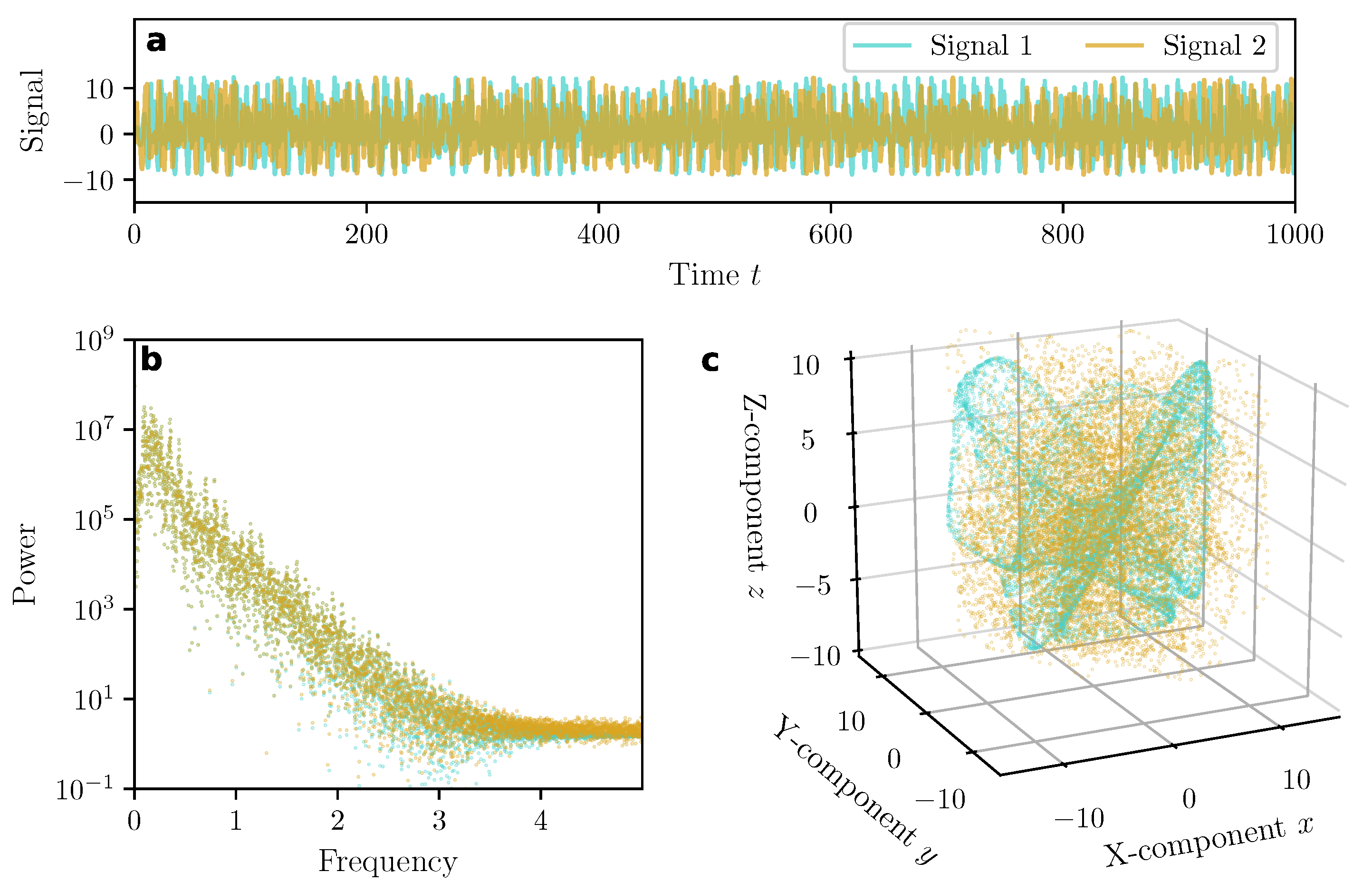

4.3. Synchronization

4.4. Characterization

5. Dynamical Systems: The Basics

5.1. What Is a Dynamical System?

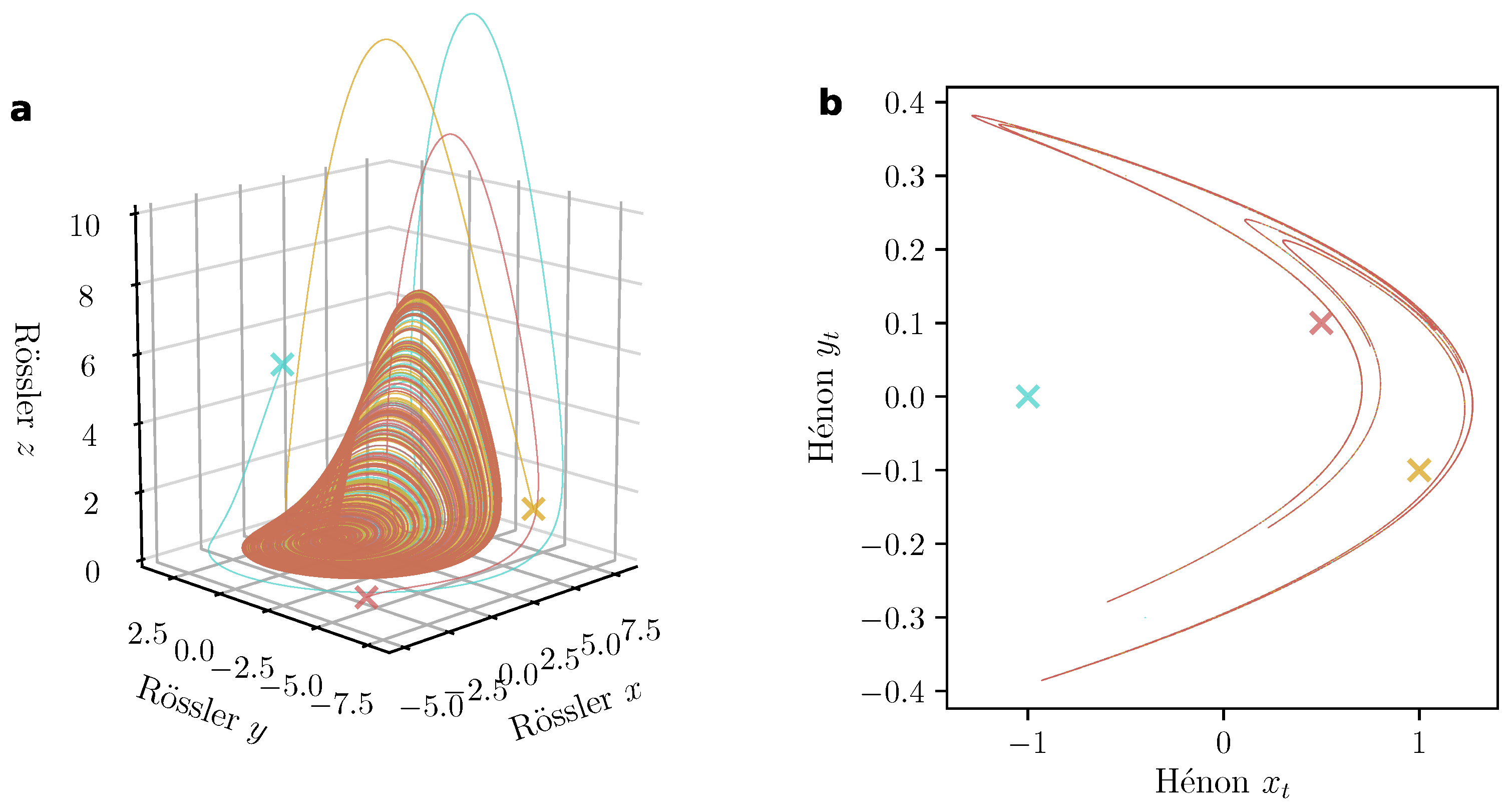

5.2. Attractors

- Invariance: the attractor should map to itself under the dynamics of the system.

- Attractivity: any set of initial conditions in the state space should, for large $t$, i.e., $t \to \infty$, converge to the attractor.

- Irreducibility: the attracting set of states should be connected by one trajectory, and it should not be possible to decompose the attractor into subsets of states which have non-overlapping trajectories. If such a decomposition were possible, each subset would itself be an attractor, rather than their union.

- Persistence: the attractor should be stable under small perturbations, i.e., small deviations from the trajectory on the attractor should return back to the attractor.

- Compactness: the attracting set of states for the dynamic should be compact.

5.3. Bifurcations

6. State Space Reconstruction

6.1. The Measurement Paradigm and Time Delay Embedding

6.2. Time Delay Embedding in Practice

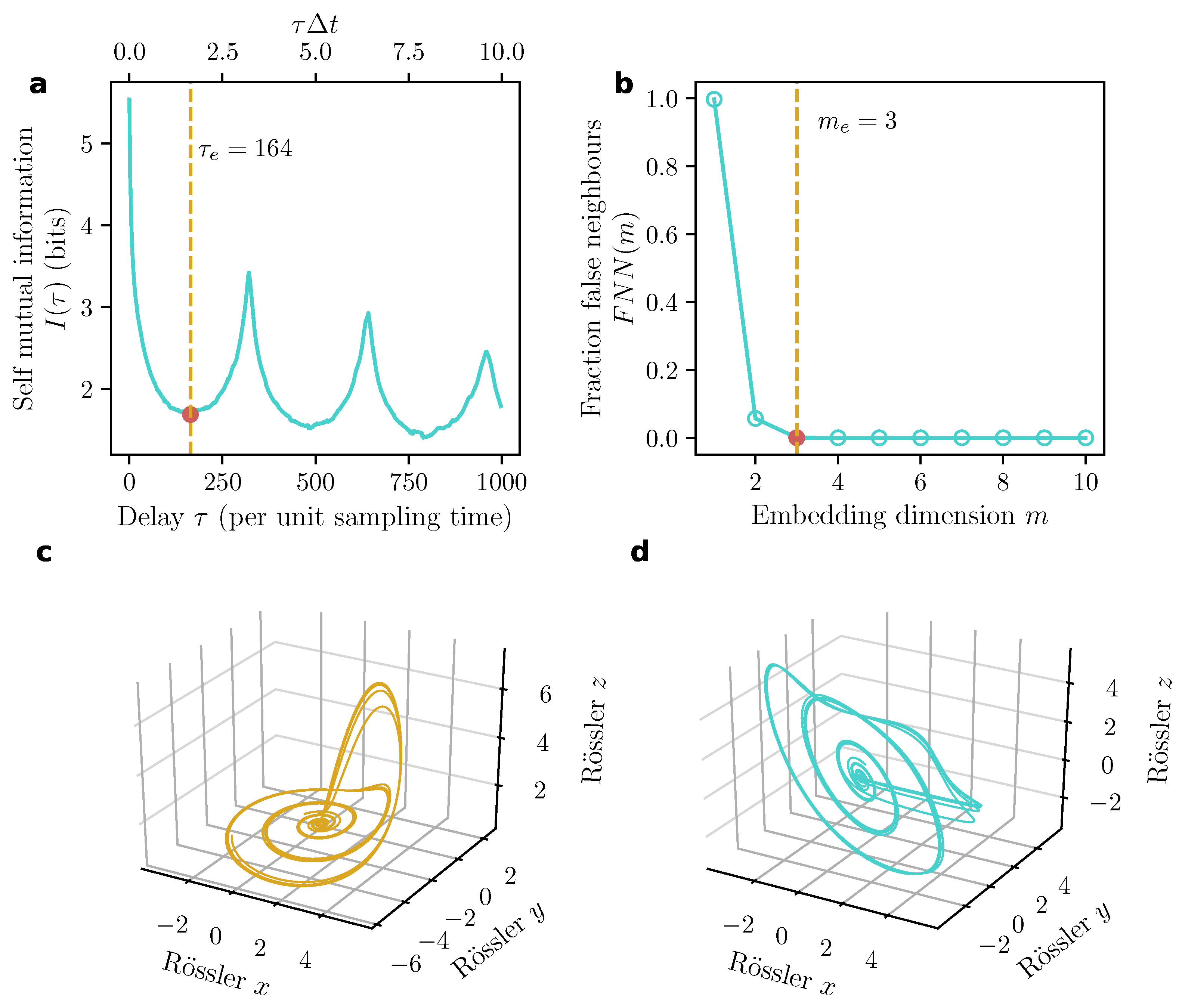

- Determining the time delay. The choice of $\tau$ critically impacts the resulting embedding. When $\tau$ is smaller than the desired value, consecutive coordinates of the reconstructed vector are correlated and the attractor is not sufficiently unfolded. When $\tau$ is larger than the desired value, successive coordinates are almost independent, resulting in a largely uncorrelated cloud of points in $\mathbb{R}^m$ without much structure. The fundamental idea in determining the time delay is that each coordinate of the reconstructed $m$-dimensional vector must be functionally independent. To achieve this, it is recommended to set $\tau$ to the first zero-crossing of the autocorrelation function. However, the autocorrelation function captures only linear self-interrelations, and it is preferable to use the first minimum of the self-mutual information function (Figure 8a), as first shown in [94]. For the scalar time series $x_t$, the self-mutual information at lag $\tau$ is

  $$I(\tau) = I(X_t; X_{t+\tau}) = \sum_{x_t,\, x_{t+\tau}} p(x_t, x_{t+\tau}) \log \frac{p(x_t, x_{t+\tau})}{p(x_t)\, p(x_{t+\tau})},$$

  where $X_t$ is a random variable underlying the sample $x_t$ and $X_{t+\tau}$ is the random variable underlying the sample $x_{t+\tau}$. In this notation, the optimal value of $\tau$ is given by

  $$\tau^{*} = \min \{\tau \geq 1 : I(\tau - 1) > I(\tau) \leq I(\tau + 1)\},$$

  that is, the smallest lag at which $I(\tau)$ attains a local minimum.

- Determining the embedding dimension. The method of false nearest neighbours (FNN), put forward by Kennel, Brown and Abarbanel in 1992 [95], is typically used to determine the embedding dimension once a time delay $\tau$ is chosen. This approach is based on the geometric reasoning that, given an embedding in dimension $m$, it is possible to differentiate between ‘true’ and ‘false’ neighbours of points on the reconstructed trajectory. In this method, we first choose a reasonable definition of ‘neighbourhood’. Based on this definition, we identify the neighbours of all points on the trajectory in $\mathbb{R}^m$. Next, we look for the false neighbours, defined as those neighbours which cease to be neighbours in $m+1$ dimensions, i.e., when we consider the trajectory in $\mathbb{R}^{m+1}$. The false neighbours were neighbours in the lower dimensional embedding solely because the attractor was not properly unfolded and we were actually looking at a projection of the attractor rather than the attractor itself. As an example, consider the 2D limit cycle trajectory on a circle, where opposite points that are almost on the same vertical line would be seen as neighbours if the same dynamic were projected onto the horizontal axis, i.e., the 1D real line $\mathbb{R}$. Once the attractor is properly unfolded, however, the number of false neighbours would go to zero. In practice, this notion is implemented by the following formula (after Equation (3.8) of [86]),

  $$X_{\mathrm{fnn}}(r) = \frac{\sum_{i} \Theta\left(\dfrac{\|\vec{x}_i^{(m+1)} - \vec{x}_{k(i)}^{(m+1)}\|}{\|\vec{x}_i^{(m)} - \vec{x}_{k(i)}^{(m)}\|} - r\right) \Theta\left(\dfrac{\sigma}{r} - \|\vec{x}_i^{(m)} - \vec{x}_{k(i)}^{(m)}\|\right)}{\sum_{i} \Theta\left(\dfrac{\sigma}{r} - \|\vec{x}_i^{(m)} - \vec{x}_{k(i)}^{(m)}\|\right)},$$

  where the sums run over all points $i$ for which an $(m+1)$-dimensional delay vector exists, $\Theta(\cdot)$ denotes the Heaviside function which is one for all positive arguments and zero otherwise, $\|\cdot\|$ denotes the maximum norm, and $k(i)$ is the index of the point closest to $\vec{x}_i^{(m)}$ in the $m$-dimensional embedding based on the maximum norm. The first term in the numerator counts all those cases in which the distance between a point and its closest neighbour increases by more than a factor of $r$ in going from $m$ dimensions to $m+1$ dimensions. However, in order not to count those cases where the points are already far apart in $m$ dimensions, the second term in the numerator is used as a weight and also as a normalisation factor in the denominator. The second term ensures that we count only those cases where the closest neighbour in $m$ dimensions is closer than $\sigma/r$, where $\sigma$ is the standard deviation of the data. The final embedding dimension is the smallest value of $m$ for which the fraction $X_{\mathrm{fnn}}$ is zero (Figure 8b).
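
The two steps above can be sketched in a few lines of Python. This is a minimal illustration and not the code accompanying this review: the function names, the 16-bin histogram estimator of the mutual information, the threshold `r = 10`, and the test signal (a sine wave with a period of roughly 50 samples) are all assumptions made here for the example.

```python
import numpy as np

def mutual_information(x, lag, bins=16):
    """Histogram estimate (in nats) of the self-mutual information at lag >= 1."""
    joint, _, _ = np.histogram2d(x[:-lag], x[lag:], bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x_t, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of x_{t+lag}, shape (1, bins)
    nz = pxy > 0                          # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def first_minimum(values):
    """Index of the first local minimum of a sequence, or None if there is none."""
    for k in range(1, len(values) - 1):
        if values[k - 1] > values[k] <= values[k + 1]:
            return k
    return None

def delay_embed(x, m, tau):
    """Rows are the delay vectors (x_t, x_{t+tau}, ..., x_{t+(m-1)tau})."""
    n = len(x) - (m - 1) * tau
    return np.column_stack([x[i * tau : i * tau + n] for i in range(m)])

def fnn_fraction(x, m, tau, r=10.0):
    """Fraction of false nearest neighbours when going from m to m+1 dimensions."""
    sigma = np.std(x)
    emb_next = delay_embed(x, m + 1, tau)
    n = len(emb_next)
    emb = delay_embed(x, m, tau)[:n]      # keep only points that exist in m+1 dims
    # Pairwise maximum-norm distances in m dimensions (O(n^2) memory).
    d = np.max(np.abs(emb[:, None, :] - emb[None, :, :]), axis=2)
    np.fill_diagonal(d, np.inf)           # a point is not its own neighbour
    k = np.argmin(d, axis=1)              # index of the closest neighbour k(i)
    d_m = d[np.arange(n), k]
    d_m1 = np.max(np.abs(emb_next - emb_next[k]), axis=1)
    close = d_m < sigma / r               # second Heaviside term
    false = close & (d_m1 > r * d_m)      # first Heaviside term, weighted by the second
    return false.sum() / max(close.sum(), 1)

# Example signal: a sine wave whose period is not an integer number of samples.
x = np.sin(np.arange(1000) / 8.0)
lags = range(1, 31)
mi = [mutual_information(x, lag) for lag in lags]
k = first_minimum(mi)
tau = lags[k] if k is not None else 12    # fall back to about a quarter period
for m in (1, 2, 3):
    print(m, fnn_fraction(x, m, tau))
```

The brute-force distance matrix keeps the sketch short but scales quadratically with the series length; for long series a tree-based neighbour search would be the more practical choice.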

7. Recurrence-Based Analysis

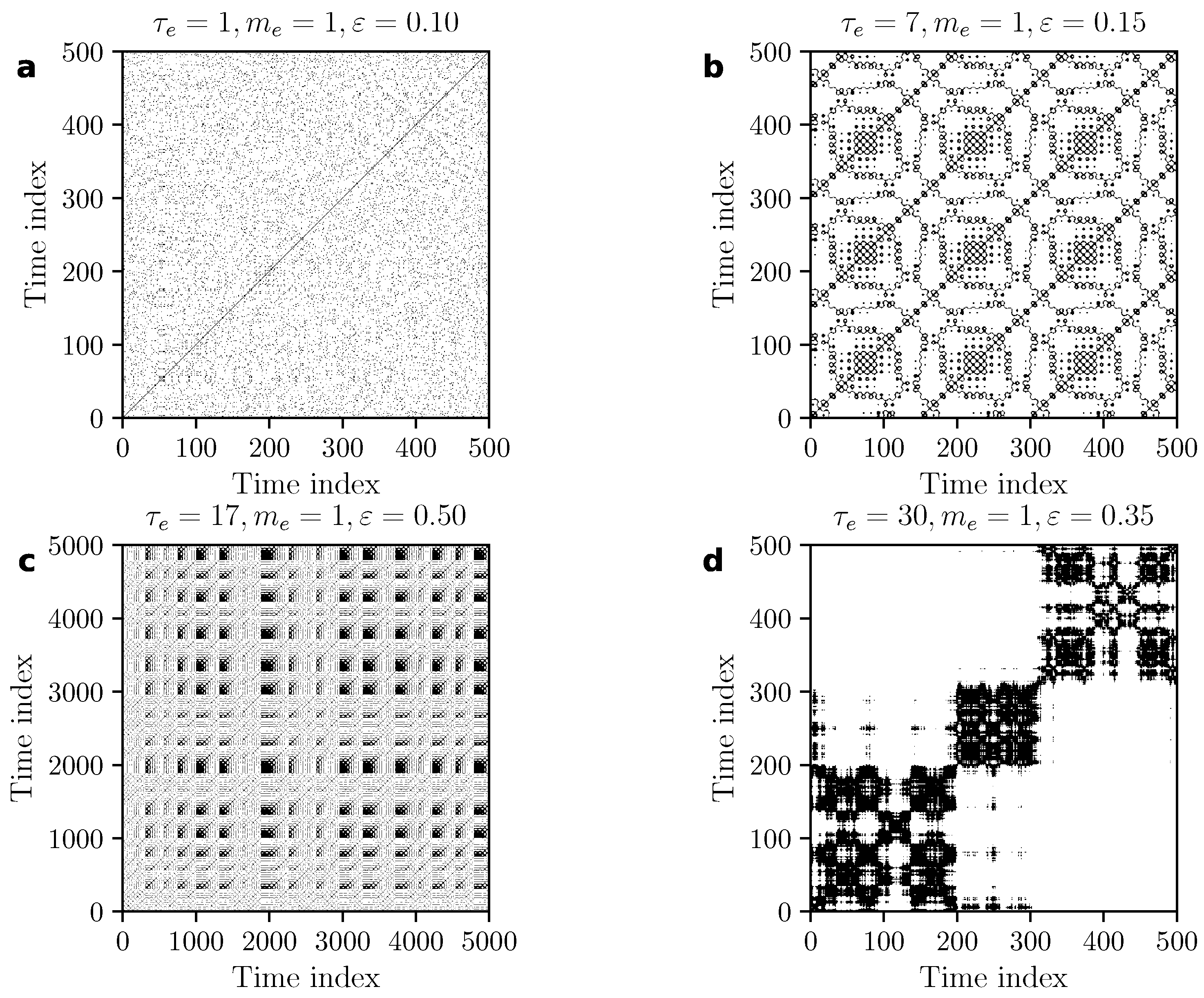

7.1. Recurrence Plots

7.2. Recurrence Networks

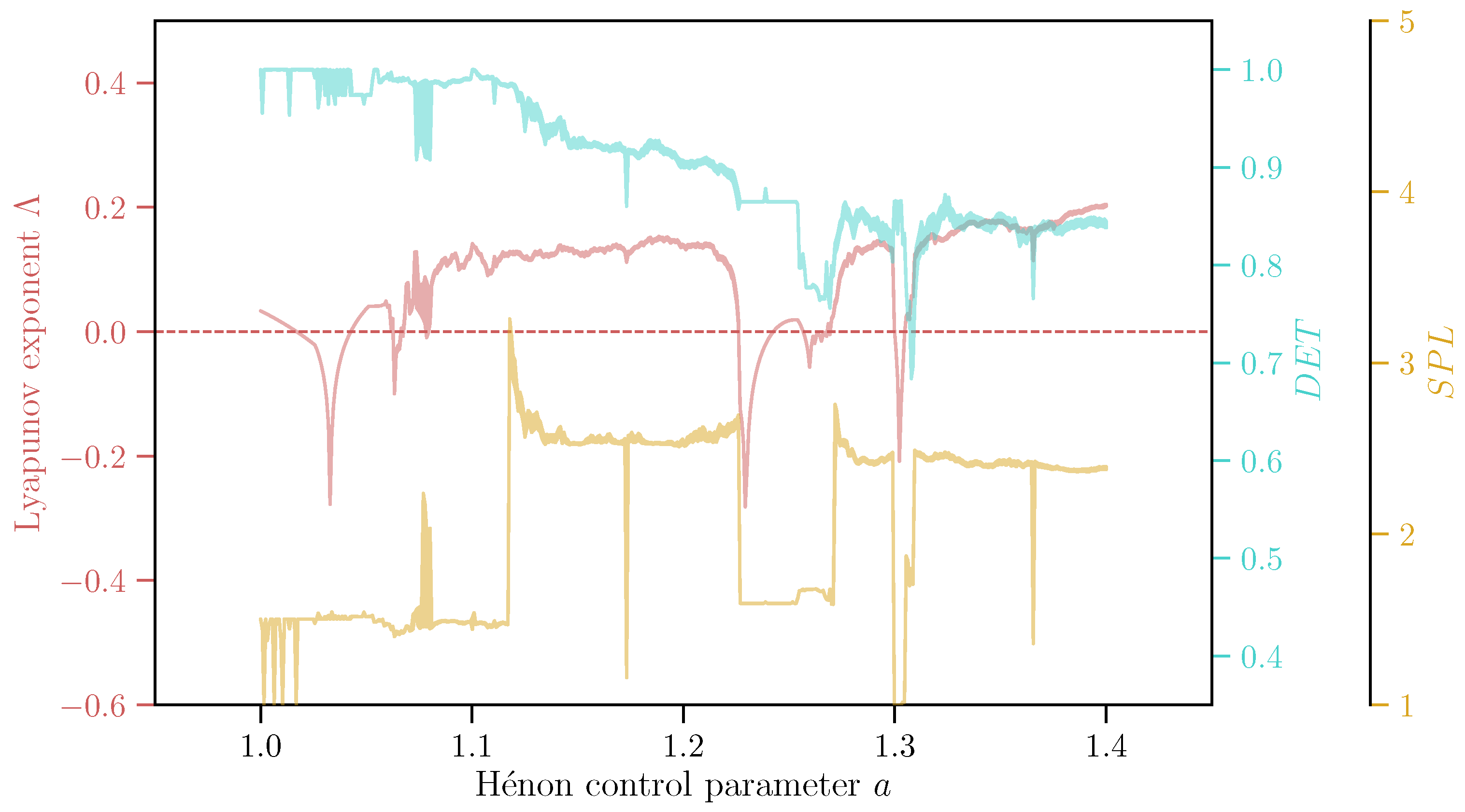

7.3. Quantification Based on Recurrence Patterns

- Determinism. A prevalent feature found in most recurrence plots is the diagonal line, which shows up when there are periods in which trajectories evolve in parallel to each other. A diagonal line of length $l$ occurs when the following condition is satisfied: $R_{i,j} = 1$, $R_{i+1,j+1} = 1$, $R_{i+2,j+2} = 1$, …, $R_{i+l-1,j+l-1} = 1$. This condition can hold only when the two sections of the trajectory (one between $\vec{x}_i$ and $\vec{x}_{i+l-1}$ and the other between $\vec{x}_j$ and $\vec{x}_{j+l-1}$) are parallel to each other in the reconstructed state space, which occurs for periodically repeating portions of the trajectory. A higher number of such periodically repeating sections of the trajectory would imply that the state of the system can be predicted on timescales equal to the period of oscillation which, in this example, would be the time difference $|t_j - t_i|$. Diagonal lines are thus typically used as an indicator of deterministic behavior, as is also seen in the recurrence plots given in Figure 9. To quantify the extent of determinism contained in the recurrence plot, the recurrence plot-based measure $DET$ is defined as

  $$DET = \frac{\sum_{l=l_{\min}}^{N} l\, P(l)}{\sum_{l=1}^{N} l\, P(l)},$$

  where the denominator is the total number of recurring points, $P(l)$ is the number of lines of length $l$, and $l_{\min}$ is the minimum number of points required to form a line. Although technically $l_{\min}$ should be 2, higher values can be chosen in certain cases. For instance, in noisy systems, one can expect very short diagonal lines to occur purely by chance. Such lines do not encode determinism of the system and are better avoided in the estimation of $DET$. In such situations, we can set $l_{\min}$ to a larger value so as to count only longer lines, as they are less likely to occur due to randomness and more likely to indicate (any) deterministic component of the underlying process. $DET$ gives a number between 0 and 1 such that a periodic signal (e.g., a sinusoid) will have a value of 1 and a purely stochastic signal will result in a value extremely close to 0.

- Average shortest path length. A ‘path’ between two nodes $i$ and $j$ in a network is defined as a sequence of nodes that needs to be traversed in order to go to node $j$ from node $i$. In general, there exist many possible paths between any pair of nodes in a network, and there can even be several possible shortest paths between a pair of nodes. However, it is possible to uniquely define a shortest path length $l_{ij}$ between two nodes $i$ and $j$, which is the smallest number of edges that need to be traversed in order to reach $j$ from $i$. Often the average shortest path length is a characteristic feature of networks that can help distinguish the topology of one network from another. In recurrence networks, the shortest path length helps to characterize the topology of nearest-neighbor relationships. Each shortest path is the distance between two states $i$ and $j$ of the system measured by laying out straight line segments between them such that: (i) each line segment cannot be more than $\varepsilon$ units long, and (ii) the ends of each line segment must lie on a measured state, the first and last of which are $i$ and $j$ respectively. Thus, $\varepsilon\, l_{ij}$ is bounded from below by the straight line between the states $i$ and $j$, i.e., $\varepsilon\, l_{ij}$ is an upper bound for the Euclidean distance between two states on the attractor [115,117], and its average value is an upper bound for the mean separation of states of the attractor [117]. The average shortest path length, $\mathcal{L}$, is estimated as

  $$\mathcal{L} = \frac{2}{N(N-1)} \sum_{i<j} l_{ij}, \tag{12}$$

  where $N$ is the size of the recurrence network and the normalization factor $N(N-1)/2$ is the possible number of unique paths between $N$ nodes. In order for Equation (12) to be estimable, we should not have self-loops in the network, which is ensured by Equation (10), and the network also has to be connected, in the sense that there must exist at least one path between any pair of nodes in the network. Note that $\mathcal{L}$ quantifies the topology of the recurrence networks embedded in the state space and, in several situations, such as multiple attractors existing for the same parameter set, can help quantify differences between the different attractors based on their geometric layout. This might not be reflected in estimations of the mean separation of states for the same attractors.
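
Both quantifiers in this list can be computed directly from a thresholded distance matrix. The following is a rough sketch under stated assumptions rather than a reference implementation: it uses the maximum norm, excludes the main diagonal when counting diagonal lines, obtains shortest path lengths by a plain breadth-first search, and the function names and the example trajectory (a two-dimensional delay embedding of a sine wave with $\varepsilon = 0.2$) are hypothetical choices for illustration.

```python
from collections import deque

import numpy as np

def recurrence_matrix(points, eps):
    """R[i, j] = 1 when states i and j are closer than eps (maximum norm)."""
    d = np.max(np.abs(points[:, None, :] - points[None, :, :]), axis=2)
    return (d < eps).astype(int)

def diagonal_line_lengths(R):
    """Lengths of all diagonal line segments, with the main diagonal excluded."""
    n = len(R)
    lengths = []
    for offset in range(-(n - 1), n):
        if offset == 0:
            continue
        run = 0
        for value in np.diagonal(R, offset=offset):
            if value:
                run += 1
            elif run:
                lengths.append(run)
                run = 0
        if run:
            lengths.append(run)
    return np.array(lengths)

def determinism(R, l_min=2):
    """Share of recurring points that lie on diagonal lines of length >= l_min."""
    lengths = diagonal_line_lengths(R)
    total = lengths.sum()
    return lengths[lengths >= l_min].sum() / total if total else 0.0

def average_path_length(R):
    """Mean shortest path length of the recurrence network A = R - I."""
    A = R - np.eye(len(R), dtype=int)     # remove self-loops
    n = len(A)
    neighbours = [np.flatnonzero(A[i]) for i in range(n)]
    total = 0
    for source in range(n):
        dist = np.full(n, -1)
        dist[source] = 0
        queue = deque([source])
        while queue:                      # breadth-first search from `source`
            u = queue.popleft()
            for v in neighbours[u]:
                if dist[v] < 0:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        if (dist < 0).any():
            raise ValueError("recurrence network is not connected")
        total += dist.sum()
    return total / (n * (n - 1))          # ordered pairs, same as 2/(N(N-1)) over i<j

t = np.arange(300)
sine = np.column_stack([np.sin(t / 8.0), np.sin((t + 12) / 8.0)])
eps = 0.2
R = recurrence_matrix(sine, eps)
print("DET:", determinism(R))
print("average path length:", average_path_length(R))
```

Raising an error for a disconnected network mirrors the requirement stated above that at least one path must exist between every pair of nodes; in practice one might instead restrict the average to the largest connected component.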

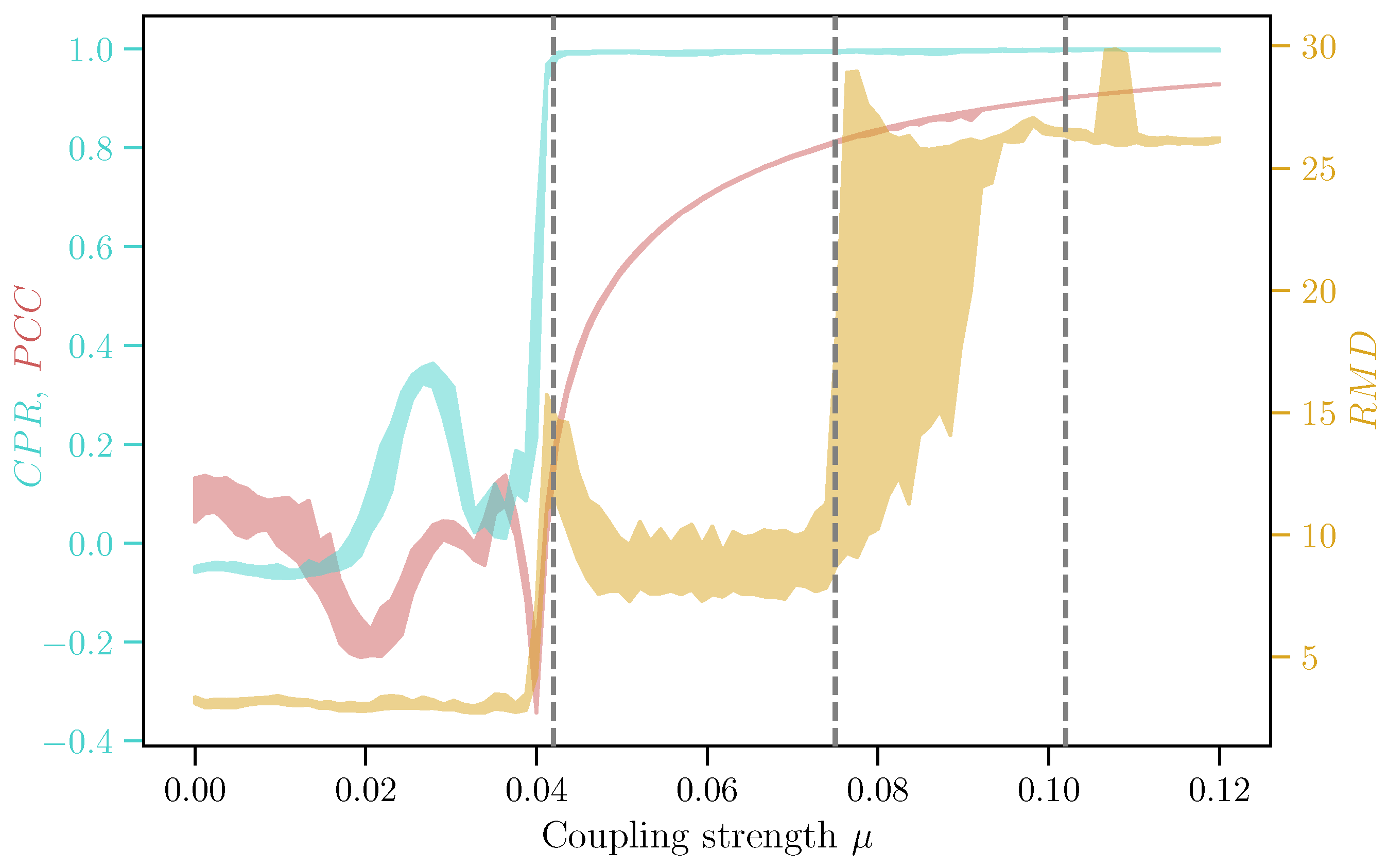

7.4. Inferring Dependencies Using Recurrences

- Correlation of probabilities of recurrence. The determination of the phase from the measured time series of a chaotic oscillation is a challenging task. Especially when the attractor is in a non-phase-coherent dynamical regime, it is nontrivial to determine which particular combination of the state space variables would result in a reliable definition of the ‘phase’ of the motion, in the sense that with every time period, the phase should increase by $2\pi$. In their study, Romano et al. [125] exploit this idea to note that for complex systems, we need to relax the condition $\vec{x}(t + T) = \vec{x}(t)$ (which is true for a purely periodic system with a single well-defined period $T$) to rather have $\vec{x}(t + \tau) \approx \vec{x}(t)$, i.e., $\|\vec{x}(t + \tau) - \vec{x}(t)\| < \varepsilon$, which allows us to define the function

  $$q(\tau) = \frac{1}{N - \tau} \sum_{i=1}^{N - \tau} \Theta\left(\varepsilon - \|\vec{x}_i - \vec{x}_{i+\tau}\|\right) = \frac{1}{N - \tau} \sum_{i=1}^{N - \tau} R_{i,i+\tau},$$

  where the normalisation ensures that the average number of recurring points for every possible period $\tau$ is considered. The function $q(\tau)$, also referred to as the $\tau$-recurrence rate, can be considered as a kind of generalized autocorrelation function of the system which peaks at multiples of the dominant periods of the dynamics (cf. [61]). An important point here is that $\tau$ should typically be greater than the correlation timescales of the system. To estimate the extent of phase synchronisation, Romano et al. suggest using Pearson’s cross-correlation coefficient of the $q^X(\tau)$ and $q^Y(\tau)$ curves from two dynamical systems $X$ and $Y$, in combination with an appropriately selected so-called Theiler window [133] to take into account the autocorrelations of the dynamics. This was updated in a later study [134] to consider only those values $\tau > \tau_c$, where $\tau_c$ is the maximum of the two decorrelation times for the two systems, and the decorrelation time was taken as the smallest value of $\tau$ for which the autocorrelation function is less than $1/e$. Here, we suggest considering the decorrelation time with respect to $q(\tau)$ instead of the autocorrelation function, i.e., the smallest $\tau$ for which $q(\tau) < 1/e$. Thus, the phase synchronisation between $X$ and $Y$ can be obtained by

  $$CPR = \frac{\left\langle \left(\tilde{q}^X(\tau) - \tilde{\mu}^X\right) \left(\tilde{q}^Y(\tau) - \tilde{\mu}^Y\right) \right\rangle}{\tilde{\sigma}^X\, \tilde{\sigma}^Y}, \tag{16}$$

  where $\mu$ and $\sigma$ denote the sample mean and standard deviations, and the tilde symbol is used to denote estimates based on the $\tau > \tau_c$ condition. Equation (16) is thus nothing but the sample cross-correlation coefficient of the $q(\tau)$ values obtained for $X$ and $Y$.

- Recurrence-based measure of dependence. Goswami et al. [131] recently proposed a statistically motivated measure of dependence based on recurrence plots. This idea was further developed by Ramos et al. [132] to include conditional dependences as well, which helped to identify and remove ‘common driver’ effects in multivariate analyses. The so-called recurrence-based measure of dependence ($RMD$ in Equation (20) below) is the mutual information of the probabilities of recurrence of two dynamical systems $X$ and $Y$. Consider the recurrence plot $\mathbf{R}^X$ constructed from the measured/embedded series $\vec{x}_t$: we can estimate the probability that the system recurs to the state at time $t$ as

  $$p^X_t = \frac{1}{N} \sum_{j=1}^{N} R^X_{t,j},$$

  and similarly, we get $p^Y_t$ for system $Y$. Now, consider the joint recurrence plot [88],

  $$JR^{XY}_{i,j} = R^X_{i,j}\, R^Y_{i,j}, \tag{18}$$

  which encodes the joint recurrence patterns of systems $X$ and $Y$ by looking at those pairs of time points where a recurrence in $X$ coincides with a recurrence in $Y$ and vice versa. The joint recurrence plot allows us to define the joint probability

  $$p^{XY}_t = \frac{1}{N} \sum_{j=1}^{N} JR^{XY}_{t,j},$$

  which encodes the joint probability that recurrences of the system $X$ to its state at time $t$ coincide with recurrences of system $Y$ to its state at the same time $t$. The three quantities $p^X_t$, $p^Y_t$, and $p^{XY}_t$ allow us to define the mutual information of these probabilities of recurrence, i.e.,

  $$RMD = \sum_{t=1}^{N} p^{XY}_t \log \frac{p^{XY}_t}{p^X_t\, p^Y_t}, \tag{20}$$

  which encodes the extent to which $X$ and $Y$ are non-independent. For the case where the two systems are completely synchronized, $p^{XY}_t = p^X_t = p^Y_t$, which means, according to Equation (20), $RMD = -\sum_t p^X_t \log p^X_t$, i.e., the Shannon entropy of the recurrences of states of the system. If the systems are independent, $RMD$ is zero as the joint probability is simply the product of the two individual probabilities of recurrence. We can understand this by observing that the product in Equation (18) involves the element-wise product of two corresponding columns of the individual recurrence plots. For independent systems $X$ and $Y$, this amounts to estimating the probability of getting overlapping 1s from multiplying two binary series where the 1s in each series have been distributed independently according to probabilities $p^X_t$ and $p^Y_t$ respectively, which is simply $p^X_t\, p^Y_t$.
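
As a rough illustration of the two measures above, the sketch below estimates both from a pair of recurrence matrices. It assumes the forms $q(\tau)$ = mean of the $\tau$-th diagonal, CPR = Pearson correlation of the two $q(\tau)$ curves for $\tau > \tau_c$, and RMD = mutual information of the row-wise recurrence probabilities; the function names, thresholds, the user-supplied `tau_c`, and the test signals (an embedded sine wave and uniform noise) are assumptions made for this example only.

```python
import numpy as np

def recurrence_matrix(points, eps):
    """R[i, j] = 1 when states i and j are closer than eps (maximum norm)."""
    d = np.max(np.abs(points[:, None, :] - points[None, :, :]), axis=2)
    return (d < eps).astype(int)

def tau_recurrence_rate(R, max_lag):
    """q(tau) for tau = 1..max_lag: the mean of the tau-th diagonal of R."""
    return np.array([np.diagonal(R, offset=tau).mean()
                     for tau in range(1, max_lag + 1)])

def cpr(Rx, Ry, max_lag, tau_c):
    """Pearson correlation of the two q(tau) curves, restricted to tau > tau_c."""
    qx = tau_recurrence_rate(Rx, max_lag)[tau_c:]   # index tau_c holds tau_c + 1
    qy = tau_recurrence_rate(Ry, max_lag)[tau_c:]
    return float(np.corrcoef(qx, qy)[0, 1])

def rmd(Rx, Ry):
    """Mutual information of the recurrence probabilities of X and Y (in nats)."""
    px = Rx.mean(axis=1)                  # probability of recurring to state t in X
    py = Ry.mean(axis=1)                  # same for Y
    pxy = (Rx * Ry).mean(axis=1)          # joint recurrence plot, averaged per row
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px[nz] * py[nz]))))

t = np.arange(400)
sine = np.column_stack([np.sin(t / 8.0), np.sin((t + 12) / 8.0)])
noise = np.random.default_rng(0).random((400, 1))
Rx = recurrence_matrix(sine, 0.2)
Ry = recurrence_matrix(noise, 0.1)

print("CPR(X, X):", cpr(Rx, Rx, max_lag=150, tau_c=5))
print("CPR(X, Y):", cpr(Rx, Ry, max_lag=150, tau_c=5))
print("RMD(X, X):", rmd(Rx, Rx))
print("RMD(X, Y):", rmd(Rx, Ry))
```

For finite series the estimate of RMD for independent systems is small but not exactly zero, which is one reason to assess such values against a surrogate-based null distribution.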

7.5. Detecting Dynamical Regimes Using Recurrences

8. Surrogate-Based Hypothesis Testing

- Estimate the time series analysis quantifier $Q$ from the original time series and denote it as $Q_{\mathrm{obs}}$.

- Generate $K$ surrogate time series using an appropriate surrogate generation method.

- Estimate the same quantifier $Q$ from each of the surrogate time series in exactly the same manner as was done for the original time series. This results in a sample of $K$ values of $Q$, which we denote as $\{Q_k\}_{k=1}^{K}$.

- Estimate the probability distribution $P_{\mathrm{null}}(Q)$ from the sample $\{Q_k\}_{k=1}^{K}$ using a histogram function or a kernel density estimate. This distribution is known as the ‘null distribution’, as it is the distribution of values of $Q$ when the null hypothesis is true, i.e., for whatever characteristic the surrogates preserve.

- Using $P_{\mathrm{null}}(Q)$, estimate the so-called ‘p-value’, defined as the total probability of obtaining a value at least as extreme as the observed value $Q_{\mathrm{obs}}$, i.e., for a one-sided test in which larger values count as more extreme,

  $$p = \int_{Q_{\mathrm{obs}}}^{\infty} P_{\mathrm{null}}(Q)\, \mathrm{d}Q.$$

  The p-value encodes how unlikely it is that the observed value $Q_{\mathrm{obs}}$ was obtained from the null distribution $P_{\mathrm{null}}(Q)$.

- Based on a chosen significance level of the test, $\alpha$, determine whether $Q_{\mathrm{obs}}$ is statistically significant at level $\alpha$ by checking whether $p < \alpha$ or not. When $p < \alpha$, the observed value is statistically significant with respect to the chosen null hypothesis, and we reject the null hypothesis, indicating that the observed $Q_{\mathrm{obs}}$ is caused by characteristics other than what is retained in the surrogates. By convention, $\alpha$ is typically chosen at 5%, i.e., $\alpha = 0.05$, or in some cases at 1%, i.e., $\alpha = 0.01$. Values of $\alpha$ higher than 5%, such as 10%, are not recommended, as the statistical evidence in such cases is rather weak.

- In cases when there is more than one statistical test, we have to take into account the problem of multiple comparisons. This situation commonly arises in a sliding window analysis, where we divide a time series into smaller (often overlapping) sections and estimate the quantifier $Q$ for each section. If $\alpha = 0.05$, then about 5% of the windows are expected to be false positives even when the null hypothesis holds in every window. To reduce the effect of false positives, ‘correction factors’ such as the Bonferroni correction or the Dunn-Šidák correction are used [144]. In particular, Holm’s method [145] is preferable as it does not require that the different tests be independent. The fundamental idea behind correction factors is to use a corrected value of $\alpha$ which is far lower than the actual reported $\alpha$, thereby reducing the effective number of false positives at the reported level of confidence.
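
The steps above can be sketched as follows. This is a simplified illustration, not the procedure used for the results in this review: it uses random-shuffle surrogates (which preserve only the amplitude distribution), the lag-1 autocorrelation as a stand-in quantifier $Q$, and a one-sided empirical p-value computed by counting surrogate values rather than from a histogram or kernel density estimate. All function names are hypothetical.

```python
import numpy as np

def shuffle_surrogates(x, K, rng):
    """K random-shuffle surrogates; these destroy all temporal structure."""
    return [rng.permutation(x) for _ in range(K)]

def lag1_autocorr(x):
    """Lag-1 autocorrelation, used here only as an example quantifier Q."""
    x = x - x.mean()
    return float(np.dot(x[:-1], x[1:]) / np.dot(x, x))

def surrogate_p_value(x, quantifier, K, rng):
    """One-sided empirical p-value of quantifier(x) against shuffle surrogates."""
    q_obs = quantifier(x)
    q_null = np.array([quantifier(s) for s in shuffle_surrogates(x, K, rng)])
    # The +1 terms count the observed value itself, so p is never exactly zero.
    return (1 + np.sum(q_null >= q_obs)) / (K + 1)

def holm_reject(p_values, alpha=0.05):
    """Holm's step-down procedure: True where the null hypothesis is rejected."""
    p = np.asarray(p_values, dtype=float)
    order = np.argsort(p)
    reject = np.zeros(len(p), dtype=bool)
    for rank, idx in enumerate(order):
        # Compare the (rank+1)-th smallest p-value with alpha / (n - rank).
        if p[idx] <= alpha / (len(p) - rank):
            reject[idx] = True
        else:
            break                         # all larger p-values are retained
    return reject

rng = np.random.default_rng(42)
x = np.sin(np.arange(500) / 8.0)
p = surrogate_p_value(x, lag1_autocorr, K=199, rng=rng)
print("p-value:", p)
print("Holm decisions:", holm_reject([0.001, 0.04, 0.03, 0.5]))
```

In a sliding window analysis, `surrogate_p_value` would be evaluated once per window and the resulting list of p-values passed to `holm_reject`. Shuffle surrogates test only against the null hypothesis of uncorrelated noise; other null hypotheses need other surrogate generators.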

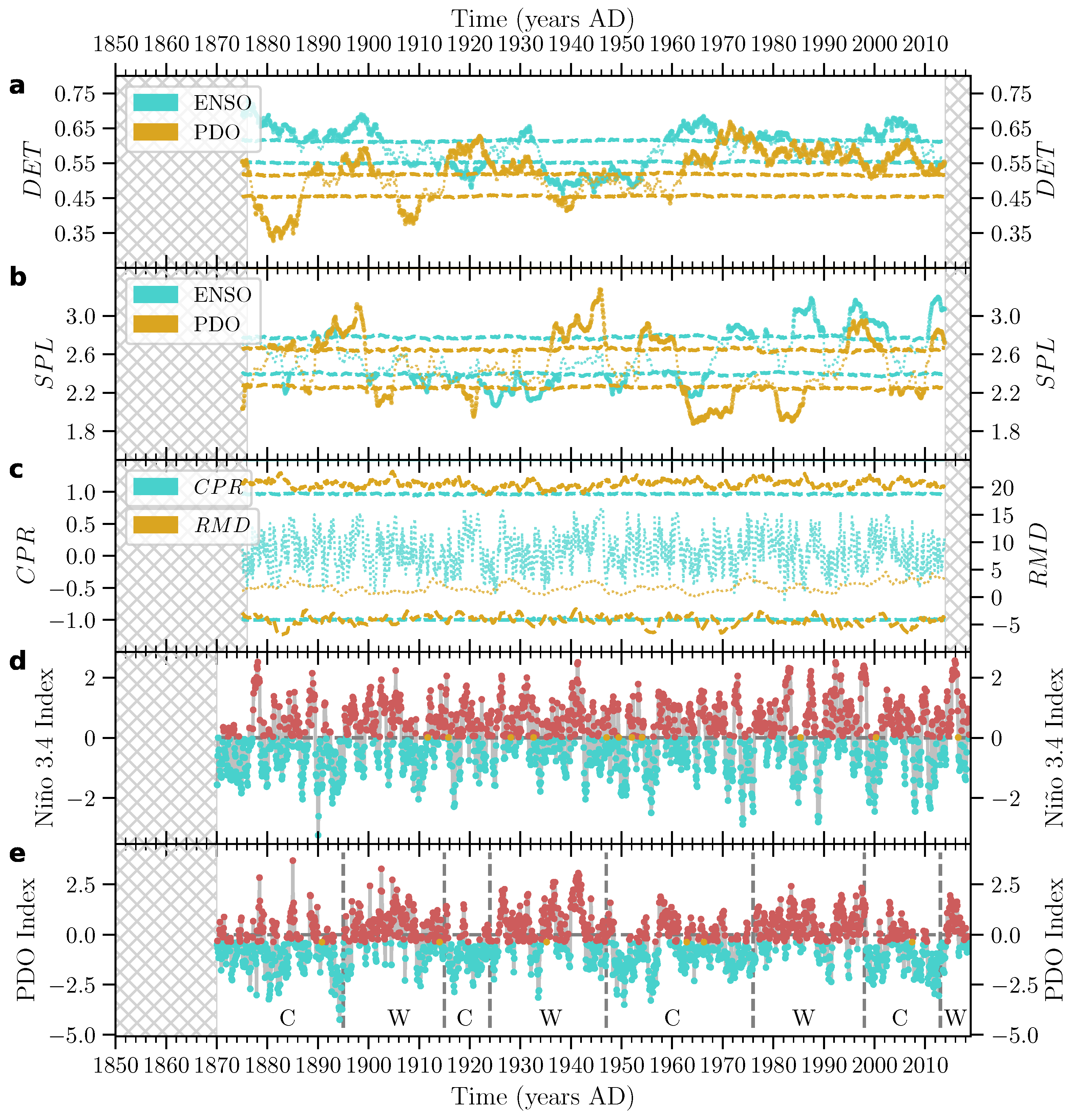

9. Application: Climatic Variability in the Equatorial and Northern Pacific

10. Summary and Outlook

Funding

Acknowledgments

Conflicts of Interest

Code Availability

References

- Kantz, H. Nonlinear time series analysis—Potentials and limitations. In Nonlinear Physics of Complex Systems: Current Status and Future Trends; Parisi, J., Müller, S.C., Zimmermann, W., Eds.; Springer: Berlin/Heidelberg, Germany, 1996; pp. 213–228.

- Packard, N.H.; Crutchfield, J.P.; Farmer, J.D.; Shaw, R.S. Geometry from a time series. Phys. Rev. Lett. 1980, 45, 712–716.

- Sauer, T.; Yorke, J.A.; Casdagli, M. Embedology. J. Stat. Phys. 1991, 65, 579–616.

- Takens, F. Detecting strange attractors in turbulence. In Dynamical Systems and Turbulence; Rand, D.A., Young, L.-S., Eds.; Springer: Berlin/Heidelberg, Germany, 1981; pp. 366–381.

- Hutchinson, J. Fractals and self-similarity. Indiana Univ. Math J. 1981, 30, 713–747.

- Mandelbrot, B.B. Self-affine fractals and fractal dimension. Phys. Scr. 1985, 32, 257–260.

- Graf, S. Statistically self-similar fractals. Prob. Theor. Rel. Fields 1987, 74, 357–392.

- Shaw, R. Strange attractors, chaotic behavior, and information flow. Z. Naturforsch. 1981, 36, 80–112.

- Ruelle, D. Small random perturbations of dynamical systems and the definition of attractors. Commun. Math. Phys. 1981, 82, 137–151.

- Grassberger, P.; Procaccia, I. Characterization of strange attractors. Phys. Rev. Lett. 1983, 50, 346–349.

- Grebogi, C.; Ott, E.; Pelikan, S.; Yorke, J.A. Strange attractors that are not chaotic. Physica D 1984, 13, 261–268.

- Eckmann, J.-P.; Ruelle, D. Ergodic theory of chaos and strange attractors. Rev. Mod. Phys. 1985, 57, 617–656.

- Rand, R.H.; Holmes, P.J. Bifurcation of periodic motions in two weakly coupled van der Pol oscillators. Int. J. Nonlinear Mech. 1980, 15, 387–399.

- Collet, P.; Eckmann, J.-P.; Koch, H. Period doubling bifurcations for families of maps on $\mathbb{R}^n$. J. Stat. Phys. 1981, 25, 1–14.

- Gardini, L.; Lupini, R.; Messia, M.G. Hopf bifurcation and transition to chaos in Lotka-Volterra equation. J. Math. Biol. 1989, 27, 259–272.

- Afraimovich, V.S.; Verichev, N.N.; Rabinovich, M.I. Stochastic synchronization of oscillation in dissipative systems. Radiophys. Quant. Electron. 1986, 29, 795–803.

- Rulkov, N.F.; Sushchik, M.M.; Tsimring, L.S.; Abarbanel, H.D.I. Generalized synchronization of chaos in directionally coupled chaotic systems. Phys. Rev. E 1995, 51, 980–994.

- Rosenblum, M.G.; Pikovsky, A.S.; Kurths, J. Phase Synchronization of Chaotic Oscillators. Phys. Rev. Lett. 1996, 76, 1804–1807.

- Pecora, L.M.; Carroll, T.L.; Johnson, G.A.; Maraun, D.J.; Heagy, J.F. Fundamentals of synchronization in chaotic systems, concepts, and applications. Chaos 1997, 7, 520–543.

- Carroll, T.L.; Pecora, L.M. Synchronizing chaotic circuits. IEEE Trans. Circuits Syst. 1991, 38, 453–456.

- Chua, L.O.; Kocarev, L.; Eckhart, K.; Itoh, M. Experimental chaos synchronization in Chua’s circuit. Int. J. Bifurc. Chaos 1992, 2, 705–708.

- Takiguchi, Y.; Fujino, H.; Ohtsubo, J. Experimental synchronization of chaotic oscillations in externally injected semiconductor lasers in a low-frequency fluctuation regime. Opt. Lett. 1999, 24, 1570–1572.

- Olsen, L.F.; Truty, G.L.; Schaffer, W.M. Oscillations and chaos in epidemics: A nonlinear dynamic study of six childhood diseases in Copenhagen, Denmark. Theor. Popul. Biol. 1988, 33, 344–370.

- Hsieh, D.A. Chaos and Nonlinear Dynamics: Application to Financial Markets. J. Financ. 1991, 46, 1839–1877.

- Turchin, P.; Taylor, A.D. Complex dynamics in ecological time series. Ecology 1992, 73, 289–305.

- Hilborn, R.C. Chaos and Nonlinear Dynamics: An Introduction for Scientists and Engineers; Oxford University Press: Oxford, UK, 2000.

- Sugihara, G.; May, R. Nonlinear forecasting as a way of distinguishing chaos from measurement error in time series. Nature 1990, 344, 734–741.

- Bjørnstad, O.N.; Grenfell, B.T. Noisy Clockwork: Time Series Analysis of Population Fluctuations in Animals. Science 2001, 293, 638–644.

- Sugihara, G.; May, R.; Ye, H.; Hsieh, C.; Deyle, E.; Fogarty, M.; Munch, S. Detecting Causality in Complex Ecosystems. Science 2012, 338, 496–500.

- Lehnertz, K.; Elger, C.E. Can epileptic seizures be predicted? Evidence from nonlinear time series analysis of brain electrical activity. Phys. Rev. Lett. 1998, 80, 5019–5022.

- Costa, M.; Goldberger, A.L.; Peng, C.-K. Multiscale Entropy Analysis of Complex Physiologic Time Series. Phys. Rev. Lett. 2002, 89, 068102.

- Voss, B.A.; Schulz, S.; Schroeder, R.; Baumert, M.; Caminal, P. Methods derived from nonlinear dynamics for analysing heart rate variability. Philos. Trans. R. Soc. A 2009, 367, 277–296.

- Sangoyomi, T.B.; Lall, U.; Abarbanel, H.D.I. Nonlinear dynamics of the Great Salt Lake: Dimension estimation. Water Resour. Res. 1996, 32, 149–159.

- Ghil, M.; Allen, M.R.; Dettinger, M.D.; Ide, K.; Kondrashov, D.; Mann, M.E.; Robertson, A.W.; Saunders, A.; Tian, Y.; Varadi, F.; et al. Advanced spectral methods for climatic time series. Rev. Geophys. 2002, 40, 1–41.

- Jevrejeva, S.; Grinsted, A.; Moore, J.C.; Holgate, S. Nonlinear trends and multiyear cycles in sea level records. J. Geophys. Res. 2006, 111, C09012.

- Eckmann, J.-P.; Kamphorst, S.O.; Ruelle, D. Recurrence Plots of Dynamical Systems. Europhys. Lett. 1987, 4, 973–977.

- Kantz, H. A robust method to estimate the maximal Lyapunov exponent of a time series. Phys. Lett. A 1994, 185, 77–87.

- Farmer, J.D. Information Dimension and the Probabilistic Structure of Chaos. Z. Naturforsch. 1982, 37, 1304–1326.

- Webber, C.L., Jr.; Zbilut, J.P. Dynamical assessment of physiological systems and states using recurrence plot strategies. J. Appl. Physiol. 1994, 76, 965–973.

- Marwan, N.; Wessel, N.; Meyerfeldt, U.; Schirdewan, A.; Kurths, J. Recurrence-plot-based measures of complexity and their application to heart-rate-variability data. Phys. Rev. E 2002, 66, 026702.

- Silva, D.F.; De Souza, V.M.; Batista, G.E. Time series classification using compression distance of recurrence plots. In 2013 IEEE 13th International Conference on Data Mining; Xiong, H., Karypis, G., Thuraisingham, B., Cook, D., Wu, X., Eds.; IEEE Computer Society: Danvers, MA, USA, 2013; pp. 687–696.

- Strozzi, F.; Zaldívar, J.M.; Zbilut, J.P. Application of nonlinear time series analysis techniques to high-frequency currency exchange data. Physica A 2002, 312, 520–538.

- Bastos, J.A.; Caiado, J. Recurrence quantification analysis of global stock markets. Physica A 2011, 390, 1315–1325.

- Palmieri, F.; Fiore, U. A nonlinear, recurrence-based approach to traffic classification. Comput. Netw. 2009, 53, 761–773.

- Yang, Y.-G.; Pan, Q.-X.; Sun, S.-J.; Xu, P. Novel Image Encryption based on Quantum Walks. Sci. Rep. 2015, 5, 7784.

- Serrà, J.; Serra, X.; Andrzejak, R.G. Cross recurrence quantification for cover song identification. New J. Phys. 2009, 11, 093017.

- Moore, J.M.; Corrêa, D.C.; Small, M. Is Bach’s brain a Markov chain? Recurrence quantification to assess Markov order for short, symbolic, musical compositions. Chaos 2018, 28, 085715.

- Richardson, D.C.; Dale, R. Looking To Understand: The Coupling Between Speakers’ and Listeners’ Eye Movements and Its Relationship to Discourse Comprehension. Cogn. Sci. 2005, 29, 1045–1060.

- Duran, N.D.; Dale, R.; Kello, C.T.; Street, C.N.H.; Richardson, D.C. Exploring the movement dynamics of deception. Front. Psychol. 2013, 4, 1–16.

- Konvalinka, I.; Xygalatas, D.; Bulbulia, J.; Schjødt, U.; Jegindø, E.M.; Wallot, S.; Van Orden, G.; Roepstorff, A. Synchronized arousal between performers and related spectators in a fire-walking ritual. Proc. Natl. Acad. Sci. USA 2011, 108, 8514–8519.

- Acharya, U.R.; Sree, S.V.; Chattopadhyay, S.; Yu, W.; Ang, P.C.A. Application of recurrence quantification analysis for the automated identification of epileptic EEG signals. Int. J. Neur. Syst. 2011, 21, 199–211.

- Zolotova, N.V.; Ponyavin, D.I. Phase asynchrony of the north-south sunspot activity. Astron. Astrophys. 2006, 449, L1–L4.

- Stangalini, M.; Ermolli, I.; Consolini, G.; Giorgi, F. Recurrence quantification analysis of two solar cycle indices. J. Space Weather Space Clim. 2017, 7, A5.

- Li, S.; Zhao, Z.; Wang, Y.; Wang, Y. Identifying spatial patterns of synchronization between NDVI and climatic determinants using joint recurrence plots. Environ. Earth Sci. 2011, 64, 851–859.

- Zhao, Z.Q.; Li, S.C.; Gao, J.B.; Wang, Y.L. Identifying spatial patterns and dynamics of climate change using recurrence quantification analysis: A case study of Qinghai-Tibet Plateau. Int. J. Bifurc. Chaos 2011, 21, 1127–1139.

- Marwan, N.; Trauth, M.H.; Vuille, M.; Kurths, J. Comparing modern and Pleistocene ENSO-like influences in NW Argentina using nonlinear time series analysis methods. Clim. Dyn. 2003, 21, 317–326.

- Eroglu, D.; McRobie, F.H.; Ozken, I.; Stemler, T.; Wyrwoll, K.-H.; Breitenbach, S.F.M.; Marwan, N.; Kurths, J. See–saw relationship of the Holocene East Asian–Australian summer monsoon. Nat. Commun. 2016, 7, 12929.

- Zaitouny, A.; Walker, D.M.; Small, M. Quadrant scan for multi-scale transition detection. Chaos 2019, 29, 103117.

- Rapp, P.E.; Darmon, D.M.; Cellucci, C.J. Hierarchical Transition Chronometries in the Human Central Nervous System. Proc. Int. Conf. Nonlinear Theor. Appl. 2013, 2, 286–289.

- Feeny, B.F.; Liang, J.W. Phase-Space Reconstructions and Stick-Slip. Nonlinear Dyn. 1997, 13, 39–57.

- Zbilut, J.P.; Marwan, N. The Wiener-Khinchin theorem and recurrence quantification. Phys. Lett. A 2008, 372, 6622–6626.

- Wendeker, M.; Litak, G.; Czarnigowski, J.; Szabelski, K. Nonperiodic oscillations of pressure in a spark ignition combustion engine. Int. J. Bifurc. Chaos 2004, 14, 1801–1806.

- Litak, G.; Kamiński, T.; Czarnigowski, J.; Żukowski, D.; Wendeker, M. Cycle-to-cycle oscillations of heat release in a spark ignition engine. Meccanica 2007, 42, 423–433.

- Longwic, R.; Litak, G.; Sen, A.K. Recurrence plots for diesel engine variability tests. Z. Naturforsch. A 2009, 64, 96–102.

- Nichols, J.M.; Trickey, S.T.; Seaver, M. Damage detection using multivariate recurrence quantification analysis. Mech. Syst. Signal Proc. 2006, 20, 421–437.

- Iwaniec, J.; Uhl, T.; Staszewski, W.J.; Klepka, A. Detection of changes in cracked aluminium plate determinism by recurrence analysis. Nonlinear Dyn. 2012, 70, 125–140.

- Qian, Y.; Yan, R.; Hu, S. Bearing Degradation Evaluation Using Recurrence Quantification Analysis and Kalman Filter. IEEE Trans. Instrum. Meas. 2014, 63, 2599–2610.

- Zhou, C.; Zhang, W. Recurrence plot based damage detection method by integrating T2 control chart. Entropy 2015, 17, 2624–2641.

- Holmes, D.S.; Mergen, A.E. Improving the performance of the T2 control chart. Qual. Eng. 1993, 5, 619–625.

- García-Ochoa, E.; González-Sánchez, J.; Acuña, N.; Euan, J. Analysis of the dynamics of Intergranular corrosion process of sensitised 304 stainless steel using recurrence plots. J. Appl. Electrochem. 2009, 39, 637–645.

- Yang, Y.; Zhang, T.; Shao, Y.; Meng, G.; Wang, F. Effect of hydrostatic pressure on the corrosion behaviour of Ni-Cr-Mo-V high strength steel. Corros. Sci. 2010, 52, 2697–2706.

- Hou, Y.; Aldrich, C.; Lepkova, K.; Machuca, L.L.; Kinsella, B. Monitoring of carbon steel corrosion by use of electrochemical noise and recurrence quantification analysis. Corros. Sci. 2016, 112, 63–72.

- Barrera, P.R.; Gómez, F.J.R.; García-Ochoa, E. Assessing of new coatings for iron artifacts conservation by recurrence plots analysis. Coatings 2019, 9, 12.

- Oberst, S.; Lai, J.C.S. Statistical analysis of brake squeal noise. J. Sound Vib. 2011, 330, 2978–2994.

- Wernitz, B.A.; Hoffmann, N.P. Recurrence analysis and phase space reconstruction of irregular vibration in friction brakes: Signatures of chaos in steady sliding. J. Sound Vib. 2012, 331, 3887–3896.

- Stender, M.; Oberst, S.; Tiedemann, M.; Hoffmann, N. Complex machine dynamics: Systematic recurrence quantification analysis of disk brake vibration data. Nonlinear Dyn. 2019, 97, 2483–2497. [Google Scholar] [CrossRef]

- Stender, M.; Di Bartolomeo, M.; Massi, F.; Hoffmann, N. Revealing transitions in friction-excited vibrations by nonlinear time-series analysis. Nonlinear Dyn. 2019, 1–18. [Google Scholar] [CrossRef]

- Kabiraj, L.; Sujith, R.I. Nonlinear self-excited thermoacoustic oscillations: Intermittency and flame blowout. J. Fluid Mech. 2012, 713, 376–397. [Google Scholar] [CrossRef]

- Nair, V.; Sujith, R.I. Identifying homoclinic orbits in the dynamics of intermittent signals through recurrence quantification. Chaos 2013, 23, 033136. [Google Scholar] [CrossRef] [PubMed]

- Nair, V.; Thampi, G.; Sujith, R.I. Intermittency route to thermoacoustic instability in turbulent combustors. J. Fluid Mech. 2014, 756, 470–487. [Google Scholar] [CrossRef]

- Elias, J.; Narayanan Namboothiri, V.N. Cross-recurrence plot quantification analysis of input and output signals for the detection of chatter in turning. Nonlinear Dyn. 2014, 76, 255–261. [Google Scholar] [CrossRef]

- Harris, P.; Litak, G.; Iwaniec, J.; Bowen, C.R. Recurrence Plot and Recurrence Quantification of the Dynamic Properties of Cross-Shaped Laminated Energy Harvester. Appl. Mech. Mater. 2016, 849, 95–105. [Google Scholar] [CrossRef]

- Oberst, S.; Niven, R.K.; Lester, D.R.; Ord, A.; Hobbs, B.; Hoffmann, N. Detection of unstable periodic orbits in mineralising geological systems. Chaos 2018, 28, 085711. [Google Scholar] [CrossRef] [PubMed]

- Parlitz, U. Nonlinear time-series analysis. In Nonlinear Modeling: Advanced Black-Box Techniques; Suykens, J.A.K., Vandewalle, J., Eds.; Springer: Boston, MA, USA, 1998; pp. 209–239. [Google Scholar]

- Schreiber, T. Interdisciplinary application of nonlinear time series methods. Phys. Rep. 1999, 308, 1–64. [Google Scholar] [CrossRef]

- Kantz, H.; Schreiber, T. Nonlinear Time Series Analysis, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004. [Google Scholar]

- Bradley, E.; Kantz, H. Nonlinear time-series analysis revisited. Chaos 2015, 25, 097610. [Google Scholar] [CrossRef] [PubMed]

- Marwan, N.; Romano, M.C.; Thiel, M.; Kurths, J. Recurrence plots for the analysis of complex systems. Phys. Rep. 2007, 438, 237–329. [Google Scholar] [CrossRef]

- Marwan, N. A historical review of recurrence plots. Eur. Phys. J. Spec. Top. 2008, 164, 3–12. [Google Scholar] [CrossRef]

- Strogatz, S.H. Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar]

- Schreiber, T.; Schmitz, A. Improved Surrogate Data for Nonlinearity Tests. Phys. Rev. Lett. 1996, 77, 635–638. [Google Scholar] [CrossRef] [PubMed]

- Ruelle, D. What is a strange attractor? Not. Am. Math. Soc. 2006, 53, 764–765. [Google Scholar]

- Feigenbaum, M.J. The universal metric properties of nonlinear transformations. J. Stat. Phys. 1979, 21, 669–706. [Google Scholar] [CrossRef]

- Fraser, A.M.; Swinney, H.L. Independent coordinates for strange attractors from mutual information. Phys. Rev. A 1986, 33, 1134–1140. [Google Scholar] [CrossRef]

- Kennel, M.B.; Brown, R.; Abarbanel, H.D.I. Determining embedding dimension for phase-space reconstruction using a geometrical construction. Phys. Rev. A 1992, 45, 3403–3411. [Google Scholar] [CrossRef]

- Barrow-Green, J. Poincaré and the Three Body Problem; American Mathematical Society: Providence, RI, USA, 1997. [Google Scholar]

- Robinson, G.; Thiel, M. Recurrences determine the dynamics. Chaos 2009, 19, 023104. [Google Scholar] [CrossRef]

- Thiel, M.; Romano, M.C.; Kurths, J. How much information is contained in a recurrence plot? Phys. Lett. A 2004, 330, 343–349. [Google Scholar] [CrossRef]

- Hirata, Y.; Horai, S.; Aihara, K. Reproduction of distance matrices and original time series from recurrence plots and their applications. Eur. Phys. J. Spec. Top. 2008, 164, 13–22. [Google Scholar] [CrossRef]

- Iwanski, J.S.; Bradley, E. Recurrence plots of experimental data: To embed or not to embed? Chaos 1998, 8, 861–871. [Google Scholar] [CrossRef] [PubMed]

- March, T.K.; Chapman, S.C.; Dendy, R.O. Recurrence plot statistics and the effect of embedding. Physica D 2005, 200, 171–184. [Google Scholar] [CrossRef]

- Thiel, M.; Romano, M.C.; Kurths, J. Spurious Structures in Recurrence Plots Induced by Embedding. Nonlinear Dyn. 2006, 44, 299–305. [Google Scholar] [CrossRef]

- Schinkel, S.; Dimigen, O.; Marwan, N. Selection of recurrence threshold for signal detection. Eur. Phys. J. Spec. Top. 2008, 164, 45–53. [Google Scholar] [CrossRef]

- Marwan, N. How to avoid potential pitfalls in recurrence plot based data analysis. Int. J. Bifurc. Chaos 2011, 21, 1003–1017. [Google Scholar] [CrossRef]

- Eroglu, D.; Marwan, N.; Prasad, S.; Kurths, J. Finding recurrence networks’ threshold adaptively for a specific time series. Nonlinear Proc. Geophys. 2014, 21, 1085–1092. [Google Scholar] [CrossRef]

- Beim Graben, P.; Sellers, K.K.; Fröhlich, F.; Hutt, A. Optimal estimation of recurrence structures from time series. Europhys. Lett. 2016, 114, 38003. [Google Scholar] [CrossRef]

- Kraemer, K.H.; Donner, R.V.; Heitzig, J.; Marwan, N. Recurrence threshold selection for obtaining robust recurrence characteristics in different embedding dimensions. Chaos 2018, 28, 085720. [Google Scholar] [CrossRef]

- Marwan, N.; Kurths, J. Nonlinear analysis of bivariate data with cross recurrence plots. Phys. Lett. A 2002, 302, 299–307. [Google Scholar] [CrossRef]

- Romano, M.C.; Thiel, M.; Kurths, J.; von Bloh, W. Multivariate recurrence plots. Phys. Lett. A 2004, 330, 214–223. [Google Scholar] [CrossRef]

- Casdagli, M.C. Recurrence plots revisited. Physica D 1997, 108, 12–44. [Google Scholar] [CrossRef]

- Eroglu, D.; Peron, T.K.D.; Marwan, N.; Rodrigues, F.A.; Costa, L.D.F.; Sebek, M.; Kiss, I.Z.; Kurths, J. Entropy of weighted recurrence plots. Phys. Rev. E 2014, 90, 042919. [Google Scholar] [CrossRef] [PubMed]

- Pham, T.D. Fuzzy recurrence plots. Europhys. Lett. 2016, 116, 50008. [Google Scholar] [CrossRef]

- Schinkel, S.; Marwan, N.; Kurths, J. Order patterns recurrence plots in the analysis of ERP data. Cogn. Neurodyn. 2007, 1, 317–325. [Google Scholar] [CrossRef] [PubMed]

- Fukino, M.; Hirata, Y.; Aihara, K. Coarse-graining time series data: Recurrence plot of recurrence plots and its application for music. Chaos 2016, 26, 023116. [Google Scholar] [CrossRef] [PubMed]

- Marwan, N.; Donges, J.F.; Zou, Y.; Donner, R.V.; Kurths, J. Complex network approach for recurrence analysis of time series. Phys. Lett. A 2009, 373, 4246–4254. [Google Scholar] [CrossRef]

- Xu, X.; Zhang, J.; Small, M. Superfamily phenomena and motifs of networks induced from time series. Proc. Natl. Acad. Sci. USA 2008, 105, 19601–19605. [Google Scholar] [CrossRef]

- Donner, R.V.; Zou, Y.; Donges, J.F.; Marwan, N.; Kurths, J. Recurrence networks—A novel paradigm for nonlinear time series analysis. New J. Phys. 2010, 12, 033025. [Google Scholar] [CrossRef]

- Donges, J.F.; Donner, R.V.; Trauth, M.H.; Marwan, N.; Schellnhuber, H.-J.; Kurths, J. Nonlinear detection of paleoclimate-variability transitions possibly related to human evolution. Proc. Natl. Acad. Sci. USA 2011, 108, 20422–20427. [Google Scholar] [CrossRef]

- Eroglu, D.; Marwan, N.; Stebich, M.; Kurths, J. Multiplex recurrence networks. Phys. Rev. E 2018, 97, 012312. [Google Scholar] [CrossRef] [PubMed]

- Zou, Y.; Donner, R.V.; Marwan, N.; Donges, J.; Kurths, J. Complex network approaches to nonlinear time series analysis. Phys. Rep. 2019, 787, 1–97. [Google Scholar] [CrossRef]

- Bradley, E.; Mantilla, R. Recurrence plots and unstable periodic orbits. Chaos 2002, 12, 596–600. [Google Scholar] [CrossRef] [PubMed]

- Zou, Y.; Thiel, M.; Romano, M.C.; Kurths, J. Characterization of stickiness by means of recurrence. Chaos 2007, 17, 043101. [Google Scholar] [CrossRef]

- Facchini, A.; Kantz, H.; Tiezzi, E. Recurrence plot analysis of nonstationary data: The understanding of curved patterns. Phys. Rev. E 2005, 72, 021915. [Google Scholar] [CrossRef]

- Hutt, A.; beim Graben, P. Sequences by Metastable Attractors: Interweaving Dynamical Systems and Experimental Data. Front. Appl. Math. Stat. 2017, 3, 1–14. [Google Scholar] [CrossRef]

- Romano, M.C.; Thiel, M.; Kurths, J.; Kiss, I.Z.; Hudson, J.L. Detection of synchronization for non-phase-coherent and non-stationary data. Europhys. Lett. 2005, 71, 466–472. [Google Scholar] [CrossRef]

- Romano, M.C.; Thiel, M.; Kurths, J.; Grebogi, C. Estimation of the direction of the coupling by conditional probabilities of recurrence. Phys. Rev. E 2007, 76, 036211. [Google Scholar] [CrossRef]

- Feldhoff, J.H.; Donner, R.V.; Donges, J.F.; Marwan, N.; Kurths, J. Geometric detection of coupling directions by means of inter-system recurrence networks. Phys. Lett. A 2012, 376, 3504–3513. [Google Scholar] [CrossRef]

- Groth, A. Visualization of coupling in time series by order recurrence plots. Phys. Rev. E 2005, 72, 046220. [Google Scholar] [CrossRef]

- Tanio, M.; Hirata, Y.; Suzuki, H. Reconstruction of driving forces through recurrence plots. Phys. Lett. A 2009, 373, 2031–2040. [Google Scholar] [CrossRef]

- Hirata, Y.; Aihara, K. Identifying hidden common causes from bivariate time series: A method using recurrence plots. Phys. Rev. E 2010, 81, 016203. [Google Scholar] [CrossRef] [PubMed]

- Goswami, B. How do global temperature drivers influence each other? A network perspective using recurrences. Eur. Phys. J. Spec. Top. 2013, 222, 861–873. [Google Scholar] [CrossRef]

- Ramos, A.M.T.; Builes-Jaramillo, A.; Poveda, G.; Goswami, B.; Macau, E.; Kurths, J.; Marwan, N. Recurrence measure of conditional dependence and applications. Phys. Rev. E 2017, 95, 052206. [Google Scholar] [CrossRef]

- Theiler, J. Spurious dimension from correlation algorithms applied to limited time-series data. Phys. Rev. A 1986, 34, 2427–2432. [Google Scholar] [CrossRef]

- Goswami, B.; Ambika, G.; Marwan, N.; Kurths, J. On interrelations of recurrences and connectivity trends between stock indices. Physica A 2012, 391, 4364–4376. [Google Scholar] [CrossRef]

- Graben, P.B.; Hutt, A. Detecting Recurrence Domains of Dynamical Systems by Symbolic Dynamics. Phys. Rev. Lett. 2013, 110, 154101. [Google Scholar] [CrossRef]

- Iwayama, K.; Hirata, Y.; Suzuki, H.; Aihara, K. Change-point detection with recurrence networks. Nonlinear Theor. Appl. IEICE 2013, 4, 160–171. [Google Scholar] [CrossRef]

- Goswami, B.; Boers, N.; Rheinwalt, A.; Marwan, N.; Heitzig, J.; Breitenbach, S.F.M.; Kurths, J. Abrupt transitions in time series with uncertainties. Nat. Commun. 2018, 9, 48. [Google Scholar] [CrossRef]

- Newman, M.E.J. Modularity and community structure in networks. Proc. Natl. Acad. Sci. USA 2006, 103, 8577–8582. [Google Scholar] [CrossRef]

- Newman, M.E.J.; Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E 2004, 69, 026113. [Google Scholar] [CrossRef] [PubMed]

- Fortunato, S. Community detection in graphs. Phys. Rep. 2010, 486, 75–174. [Google Scholar] [CrossRef]

- Csardi, G.; Nepusz, T. The igraph software package for complex network research. InterJournal Complex Syst. 2006, 1695, 1–9. [Google Scholar]

- Thiel, M.; Romano, M.C.; Kurths, J.; Rolfs, M.; Kliegl, R. Twin surrogates to test for complex synchronisation. Europhys. Lett. 2006, 75, 535–541. [Google Scholar] [CrossRef]

- Lancaster, G.; Iatsenko, D.; Pidde, A.; Ticcinelli, V.; Stefanovska, A. Surrogate data for hypothesis testing of physical systems. Phys. Rep. 2018, 748, 1–60. [Google Scholar] [CrossRef]

- Abdi, H. Bonferroni and Šidák corrections for multiple comparisons. In Encyclopedia of Measurement and Statistics; Salkind, N.J., Ed.; Sage Publications: Thousand Oaks, CA, USA, 2007; pp. 103–107. [Google Scholar]

- Abdi, H. Holm’s Sequential Bonferroni Procedure. In Encyclopedia of Research Design; Salkind, N.J., Ed.; Sage Publications: Thousand Oaks, CA, USA, 2007; pp. 1–8. [Google Scholar]

- Chan, J.C.L.; Zhou, W. PDO, ENSO and the early summer monsoon rainfall over south China. Geophys. Res. Lett. 2005, 32, L08810. [Google Scholar] [CrossRef]

- Pavia, E.G.; Graef, F.; Reyes, J. PDO–ENSO effects in the climate of Mexico. J. Clim. 2006, 19, 6433–6438. [Google Scholar] [CrossRef]

- Hu, Z.-Z.; Huang, B. Interferential impact of ENSO and PDO on dry and wet conditions in the U.S. Great Plains. J. Clim. 2009, 22, 6047–6065. [Google Scholar] [CrossRef]

- Trenberth, K.E. The definition of El Niño. Bull. Am. Meteorol. Soc. 1997, 78, 2771–2778. [Google Scholar] [CrossRef]

- Newman, M.; Alexander, M.A.; Ault, T.R.; Cobb, K.M.; Deser, C.; Di Lorenzo, E.; Mantua, N.J.; Miller, A.J.; Minobe, S.; Nakamura, H.; et al. The Pacific Decadal Oscillation, revisited. J. Clim. 2016, 29, 4399–4427. [Google Scholar] [CrossRef]

- Verdon, D.C.; Franks, S.W. Long-term behaviour of the ENSO: Interactions with the PDO over the past 400 years inferred from paleoclimate records. Geophys. Res. Lett. 2006, 33, L06712. [Google Scholar] [CrossRef]

- Working Group on Surface Pressure, NOAA ESRL Physical Sciences Division. Available online: https://www.esrl.noaa.gov/psd/gcos_wgsp/Timeseries/Data/nino34.long.data (accessed on 21 February 2019).

- Pacific Decadal Oscillation (PDO), NOAA, Climate Monitoring, Teleconnections. Available online: https://www.ncdc.noaa.gov/teleconnections/pdo/data.csv (accessed on 21 February 2019).

- Brunton, S.L.; Proctor, J.L.; Kutz, J.N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 2016, 113, 3932–3937. [Google Scholar] [CrossRef] [PubMed]

- Lu, Z.; Hunt, B.R.; Ott, E. Attractor reconstruction by machine learning. Chaos 2018, 28, 061104. [Google Scholar] [CrossRef] [PubMed]

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Goswami, B. A Brief Introduction to Nonlinear Time Series Analysis and Recurrence Plots. Vibration 2019, 2, 332-368. https://doi.org/10.3390/vibration2040021
