All-Weather Forest Fire Automatic Monitoring and Early Warning Application Based on Multi-Source Remote Sensing Data: Case Study of Yunnan

Abstract

1. Introduction

- (1)

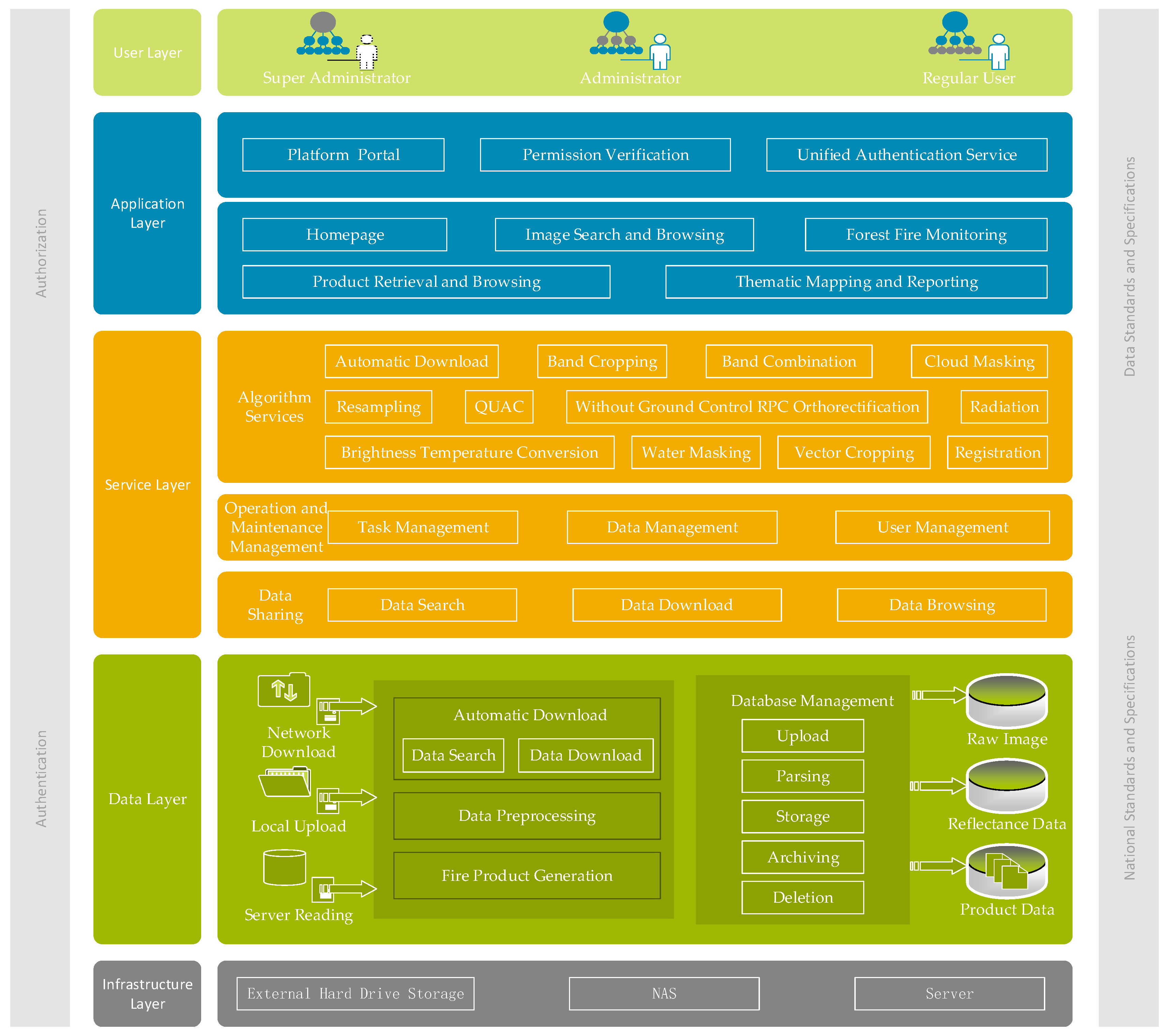

- The study aims to explore and optimize forest fire monitoring algorithms tailored to three types of multi-source remote sensing imagery—GF-4, Landsat 8, and Sentinel-2. The study aims to systematically evaluate the applicability and performance differences of the U-Net deep learning algorithm, improved automatic threshold algorithm, and traditional empirical threshold algorithm across various fire scales. Special attention is given to enhancing fire pixel detection under complex environmental conditions by incorporating statistical method and data augmentation strategies. Finally, the feasibility of deep learning approaches in the domain of forest fire monitoring is also explored.

- (2)

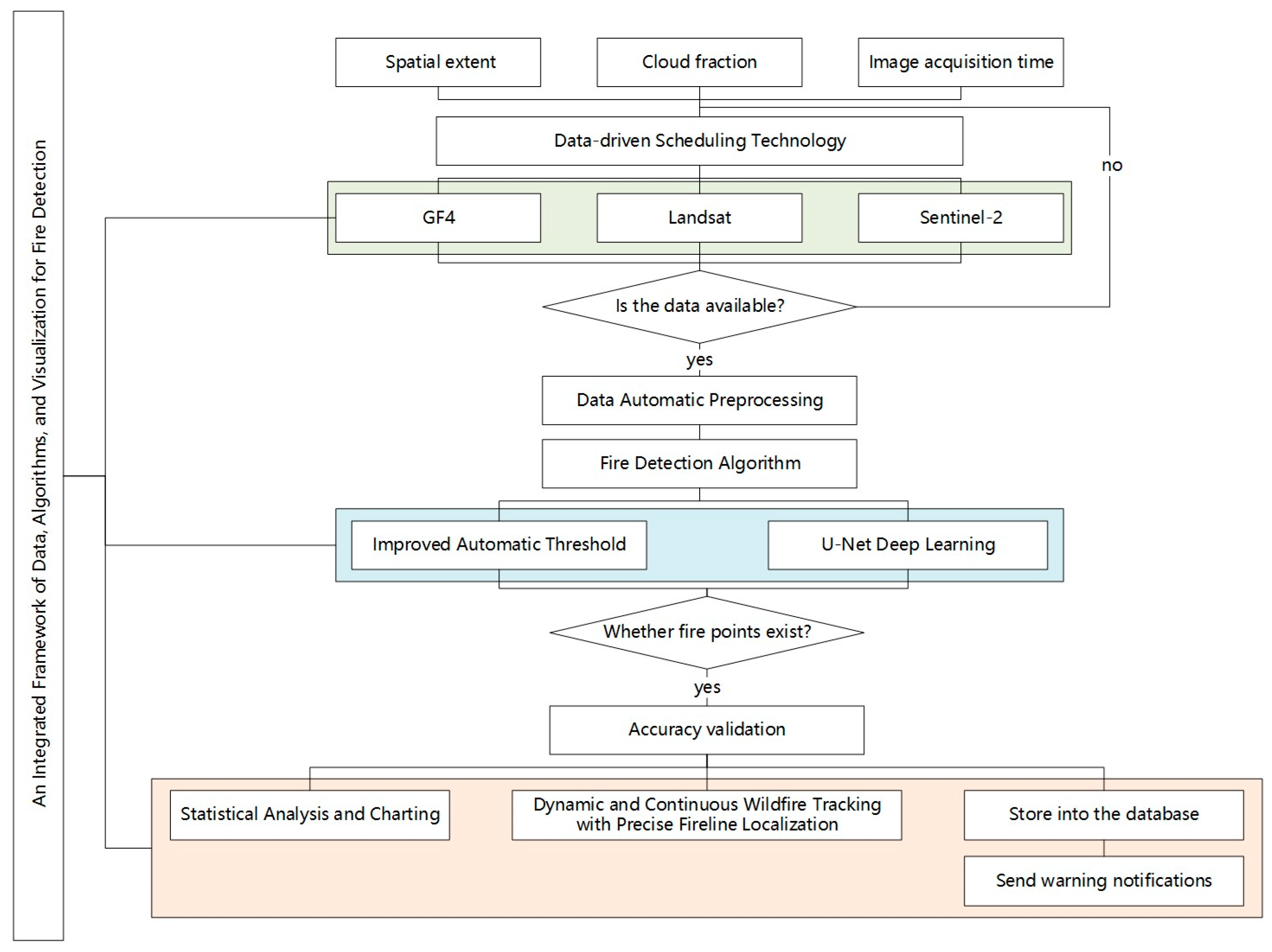

- The study aims to design and implement a data-driven scheduling technology that integrates autonomous data acquisition and automated task scheduling. By constructing an end-to-end data processing workflow covering data acquisition, preprocessing, fire detection, and visualization, the level of intelligence and response efficiency in forest fire monitoring can be significantly enhanced. The aim is to achieve a fully automated closed-loop process from data to results while supporting a priority handling mechanism for emergency events. The study further aims to provide more scientific and timely decision support for forestry management departments, promoting the transformation of forest fire monitoring from event-driven to data-driven approaches.

- (3)

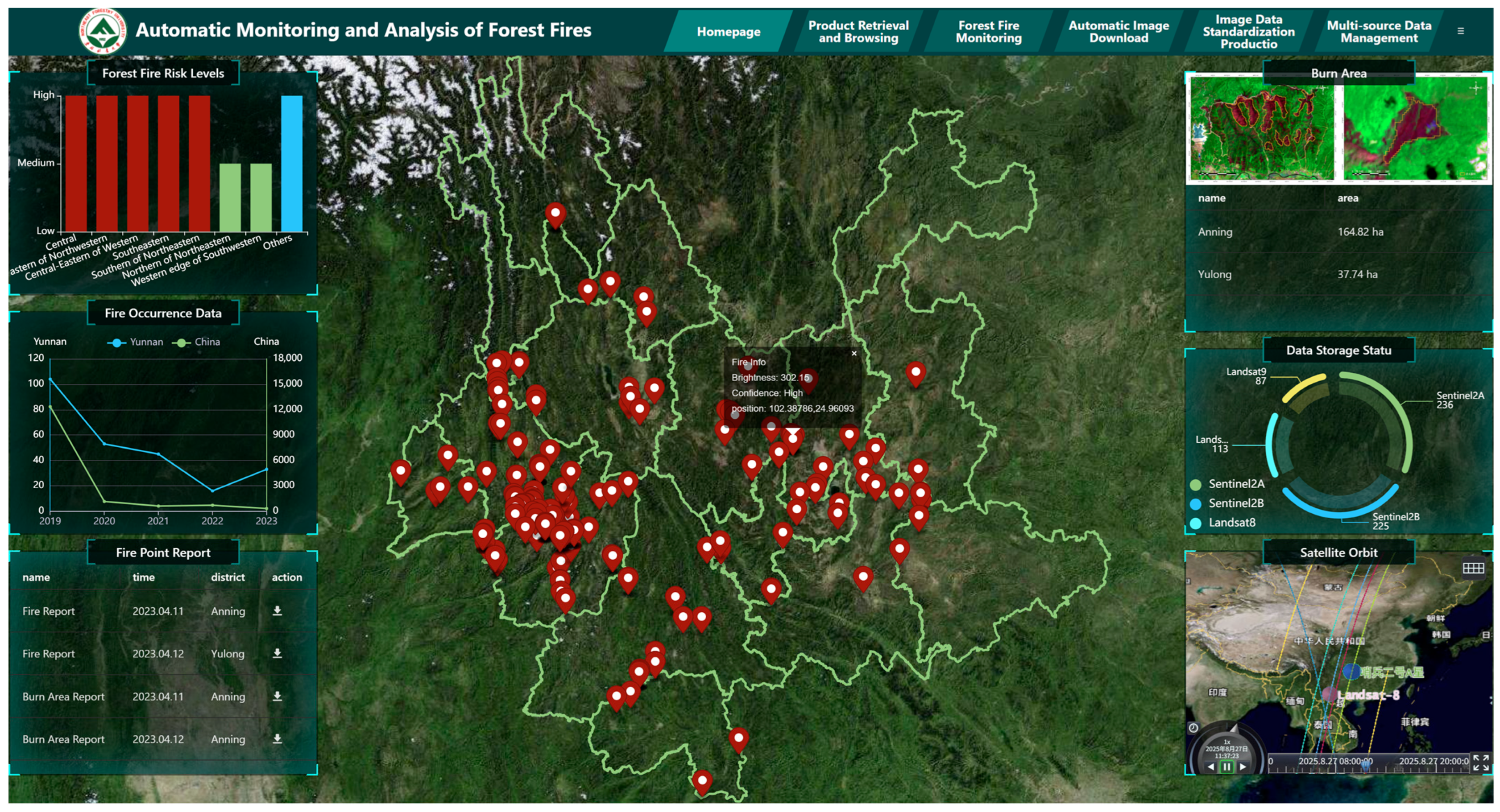

- A framework integrating data processing, algorithms, and a visualization interface was designed to fully exploit the spatiotemporal characteristics of multi-source remote sensing data for the dynamic tracking and fine-scale detection of forest fires. Using representative forest fire events in Yunnan Province as case studies, the effectiveness and scalability of the proposed framework were validated in practical applications. The ultimate goal is to provide a generalizable and scalable technical solution for rapid fire detection and intelligent early warning of forest fires. The framework also includes a visualization interface featuring spatial querying, temporal evolution analysis, and administrative-level statistics, thereby enhancing the interpretability and practical usability of the results.
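Objective (2) calls for a priority handling mechanism so that emergency tasks are processed ahead of routine acquisitions. The sketch below shows one minimal way to express that idea with Python's `heapq`; the `Task` and `PriorityScheduler` classes, the two priority levels, and the task names are hypothetical illustrations, not the authors' implementation.

```python
from __future__ import annotations

import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical priority levels: a lower number is served first.
EMERGENCY = 0
ROUTINE = 1


@dataclass(order=True)
class Task:
    priority: int
    seq: int                              # tie-breaker: FIFO order within one priority
    name: str = field(compare=False)
    sensor: str = field(compare=False)


class PriorityScheduler:
    """Minimal priority queue: emergency tasks are dequeued before routine ones."""

    def __init__(self) -> None:
        self._heap: list[Task] = []
        self._counter = itertools.count()

    def submit(self, name: str, sensor: str, priority: int = ROUTINE) -> None:
        heapq.heappush(self._heap, Task(priority, next(self._counter), name, sensor))

    def next_task(self) -> Task | None:
        return heapq.heappop(self._heap) if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    sched = PriorityScheduler()
    sched.submit("routine-landsat-scene", "Landsat 8")
    sched.submit("routine-sentinel-scene", "Sentinel-2")
    sched.submit("emergency-fire-report", "GF-4", priority=EMERGENCY)
    print([sched.next_task().name for _ in range(len(sched))])
    # → ['emergency-fire-report', 'routine-landsat-scene', 'routine-sentinel-scene']
```

The sequence counter keeps tasks of equal priority in submission order, so routine scenes are still handled first-come, first-served once no emergency task is waiting.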

2. Related Works

2.1. Fire Detection Methods

2.2. All-Weather Automated Forest Fire Monitoring Technology

3. Study Area and Data

3.1. Study Area

3.2. Datasets

4. Methods

4.1. Data-Driven Scheduling Technology

4.2. Data Automatic Preprocessing

4.3. Fire Detection Algorithm

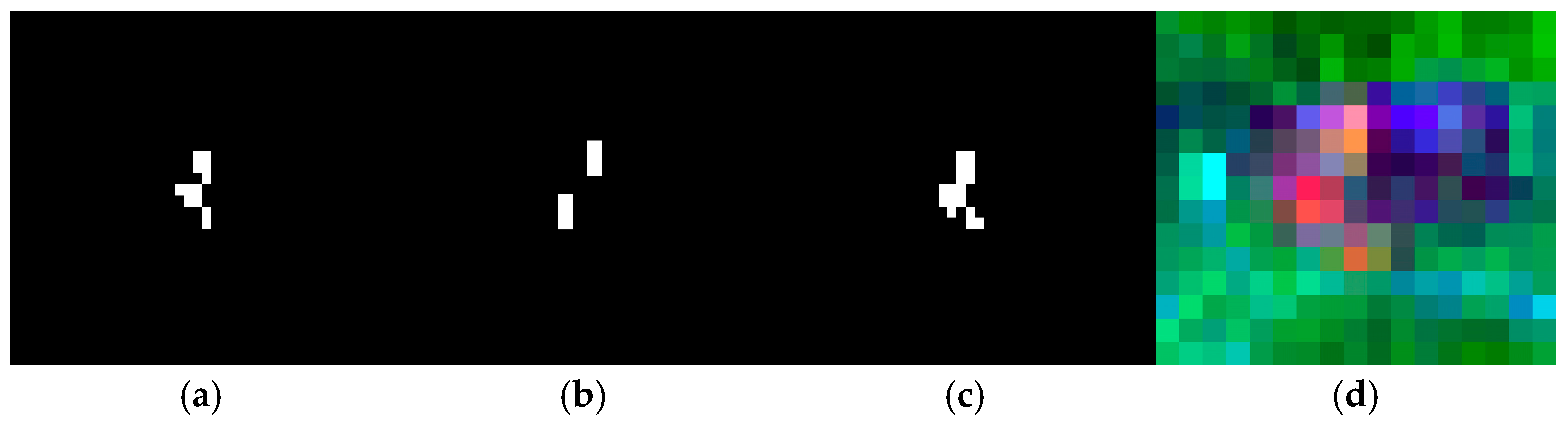

4.3.1. Fire Detection Algorithm for GF-4 Data

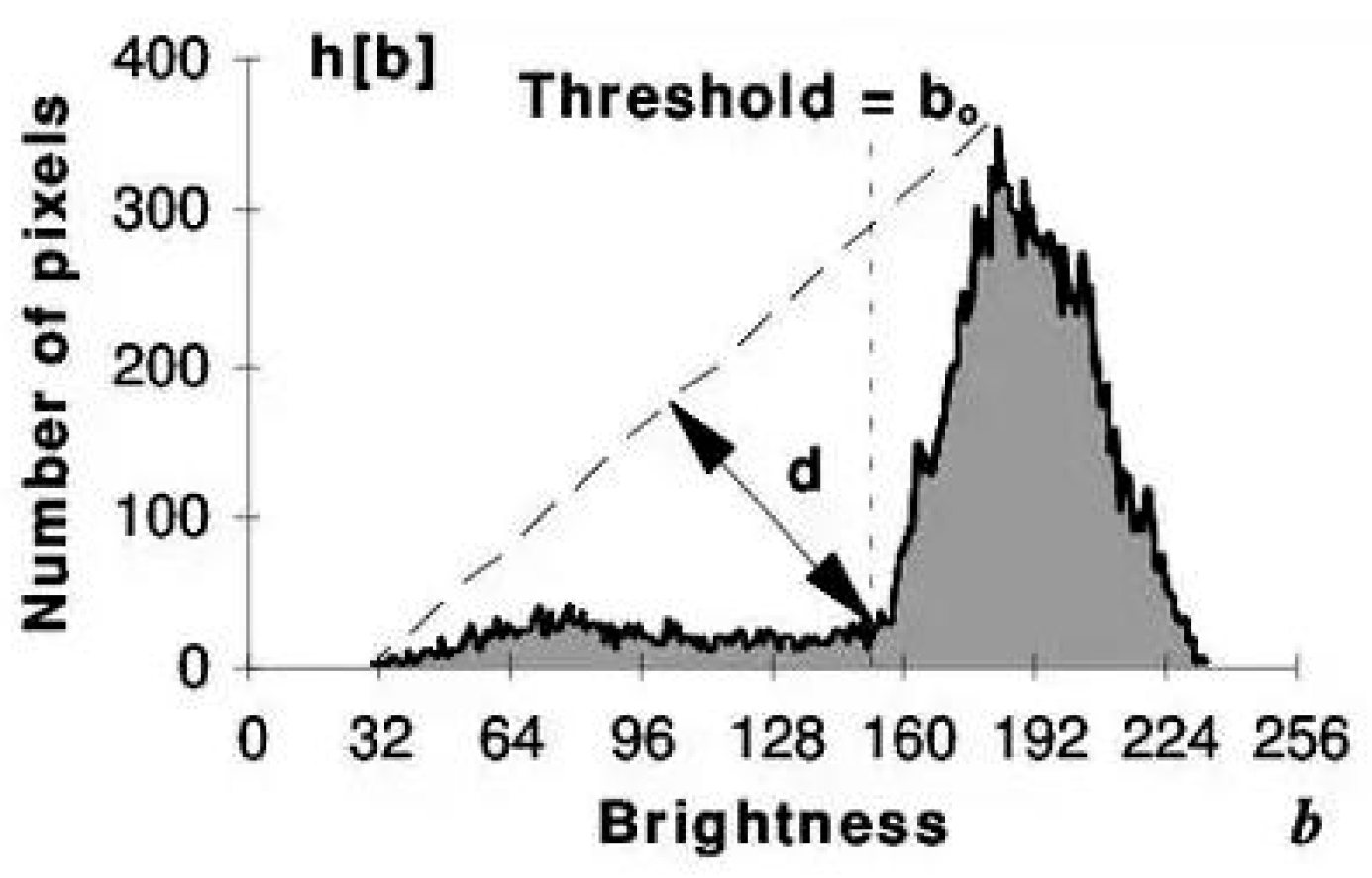

- Improved Automatic Threshold Algorithm

- Fixed Threshold Algorithm

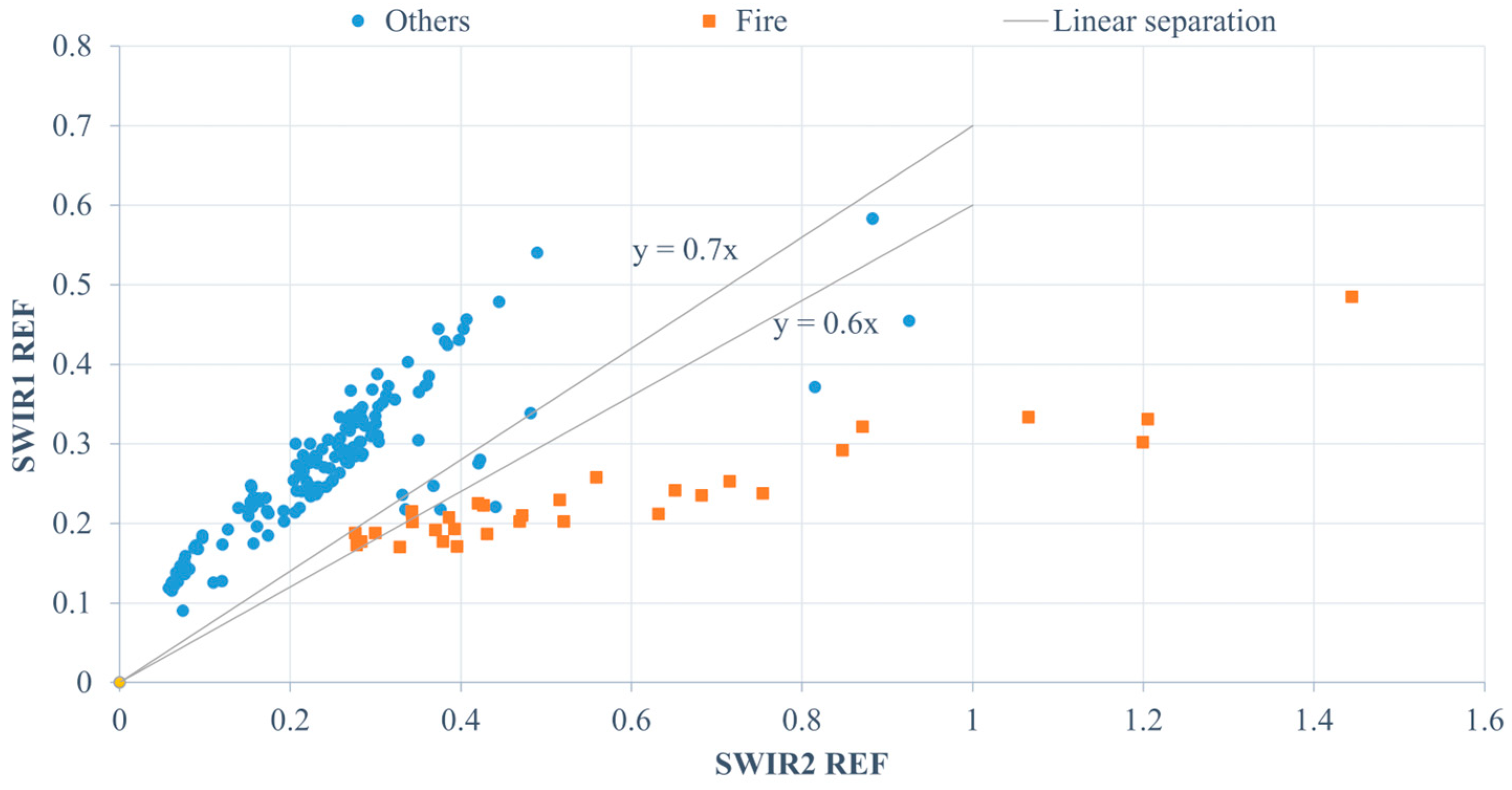

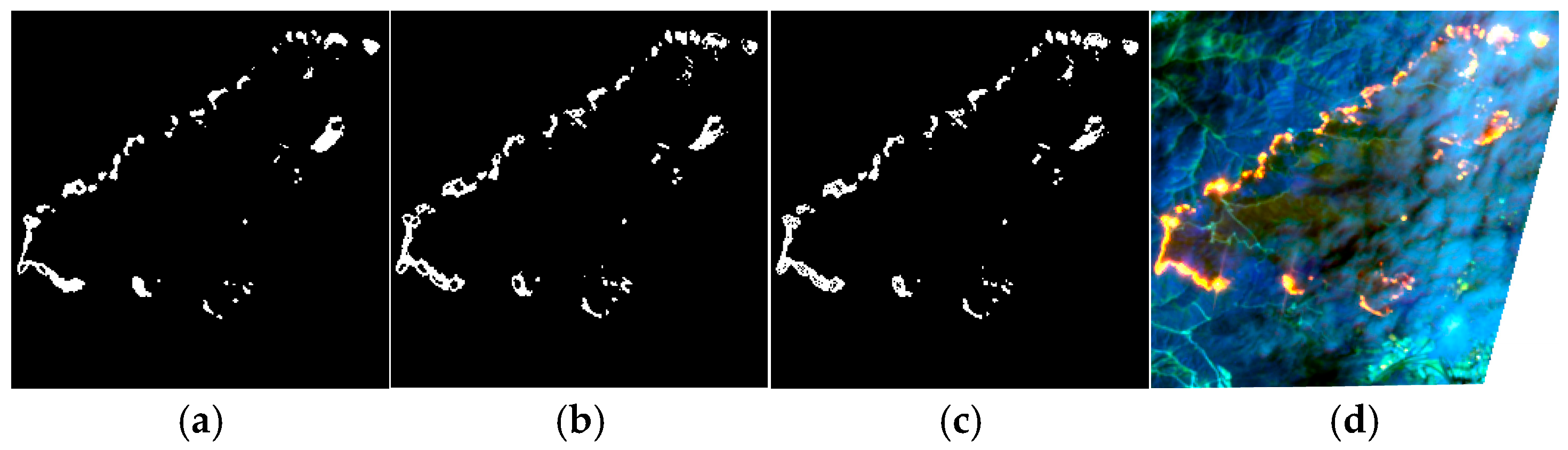
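Section 4.3.1 contrasts an improved automatic threshold algorithm with a fixed threshold algorithm for GF-4. The details of the authors' improved algorithm are not given in this excerpt, so the following is only a generic illustration of the difference: a fixed rule applies one empirical brightness-temperature cutoff everywhere, while a contextual rule derives the threshold from statistics of the surrounding background window. The cutoff of 320 K, the 7 × 7 window, `k = 3`, `delta_min = 5` K, and the synthetic scene are all hypothetical choices.

```python
import numpy as np


def fixed_threshold(bt: np.ndarray, t_fixed: float = 320.0) -> np.ndarray:
    """Empirical rule: flag every pixel whose brightness temperature (K) exceeds t_fixed."""
    return bt > t_fixed


def contextual_threshold(bt: np.ndarray, window: int = 7, k: float = 3.0,
                         delta_min: float = 5.0) -> np.ndarray:
    """Adaptive rule: flag a pixel if it exceeds the mean of its surrounding
    window by more than max(k * std, delta_min). The pixel itself is excluded
    from its own background statistics."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    padded = np.pad(bt.astype(float), half, mode="reflect")
    centre = (window * window) // 2          # flat index of the window centre
    fire = np.zeros(bt.shape, dtype=bool)
    for i in range(bt.shape[0]):
        for j in range(bt.shape[1]):
            win = padded[i:i + window, j:j + window].ravel()
            background = np.delete(win, centre)
            threshold = background.mean() + max(k * background.std(), delta_min)
            fire[i, j] = bt[i, j] > threshold
    return fire


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    scene = 295.0 + rng.normal(0.0, 0.5, size=(20, 20))  # synthetic background (K)
    scene[5, 5] = 312.0    # small, weak fire
    scene[14, 12] = 340.0  # large, strong fire
    print("fixed:", np.argwhere(fixed_threshold(scene)).tolist())
    print("contextual:", np.argwhere(contextual_threshold(scene)).tolist())
```

On this synthetic scene the fixed rule should flag only the strong fire, whereas the contextual rule also picks up the weak one because its threshold tracks the cool local background; this mirrors, in simplified form, why an adaptive threshold can help with small fires.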

4.3.2. Fire Detection Algorithm for Landsat 8/Sentinel-2 Data

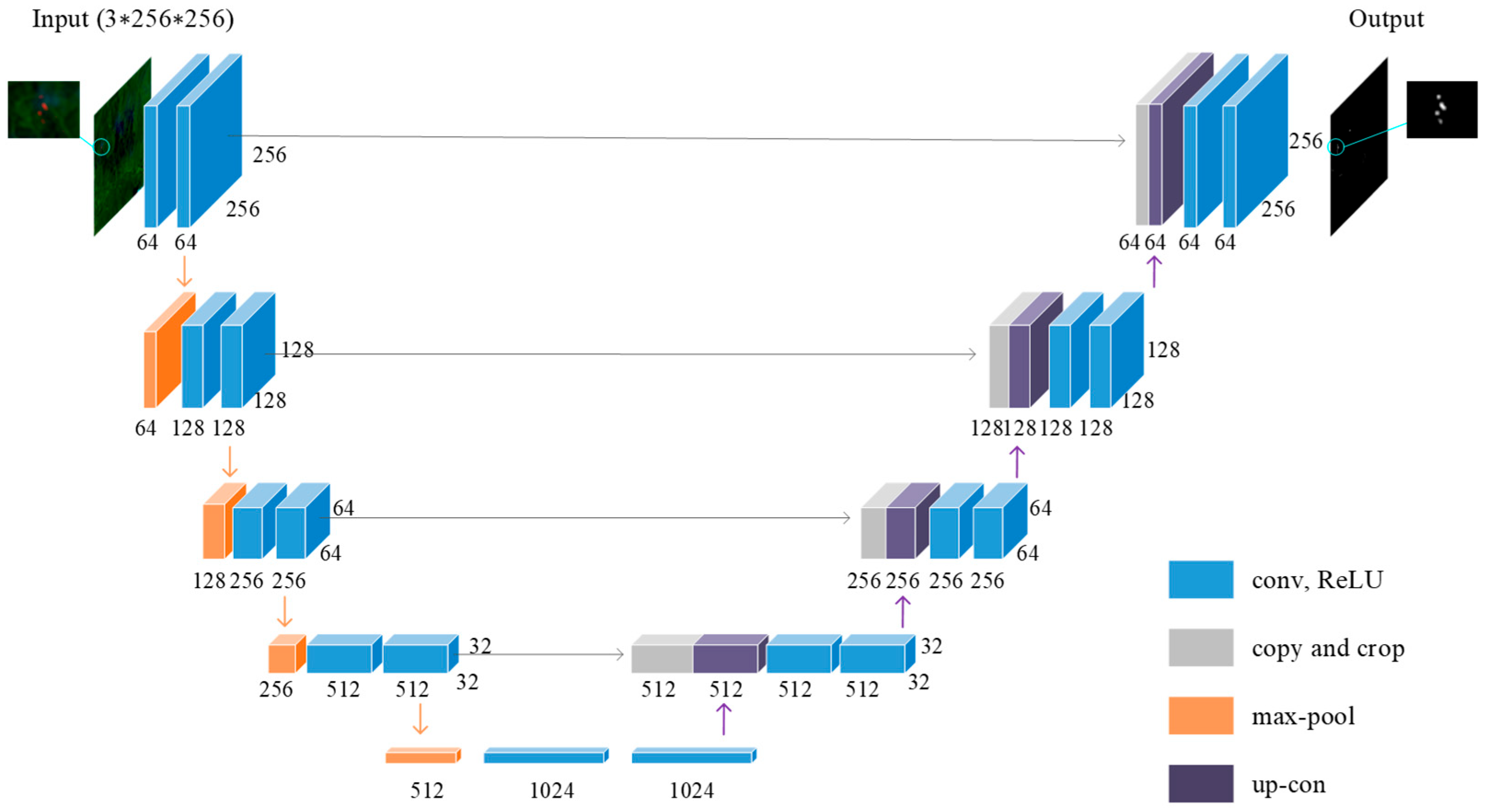

- U-Net Deep Learning

- Improved Automatic Threshold Algorithm
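The objectives mention data augmentation strategies for improving fire pixel detection, which matters for a U-Net trained on relatively few fire patches. The augmentation scheme actually used is not described in this excerpt; the sketch below assumes a common one: random 90° rotations and flips applied identically to a multiband image patch and its fire mask, plus oversampling of patches that contain fire. The function names, the (H, W, bands) layout, and the number of copies are hypothetical.

```python
from __future__ import annotations

import numpy as np


def augment_pair(image: np.ndarray, mask: np.ndarray,
                 rng: np.random.Generator) -> tuple[np.ndarray, np.ndarray]:
    """Apply one random rotation/flip identically to an (H, W, bands) patch
    and its (H, W) fire mask, keeping labels aligned with pixels.
    Assumes square patches so that rotation does not change the shape."""
    k = int(rng.integers(0, 4))                  # 0-3 quarter turns
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:                       # left-right flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:                       # up-down flip
        image, mask = image[::-1], mask[::-1]
    return image.copy(), mask.copy()             # contiguous copies for DL frameworks


def oversample_fire_patches(patches: list[np.ndarray], masks: list[np.ndarray],
                            copies: int = 3, seed: int = 0):
    """Hypothetical imbalance handling: add `copies` augmented versions of
    every patch that contains at least one fire pixel."""
    rng = np.random.default_rng(seed)
    out_x, out_y = list(patches), list(masks)
    for x, y in zip(patches, masks):
        if y.any():
            for _ in range(copies):
                ax, ay = augment_pair(x, y, rng)
                out_x.append(ax)
                out_y.append(ay)
    return out_x, out_y
```

Because the spectral axis is never touched, each augmented patch keeps the same band values at the (moved) fire pixel, so the mask remains a valid label.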

4.4. An Integrated Framework of Data, Algorithms, and Visualization for Fire Detection

4.5. Validation Method

5. Results

5.1. Performance of Different Algorithms

5.1.1. Performance of Different Algorithms for GF-4

5.1.2. Performance of Different Algorithms for Landsat 8/Sentinel-2

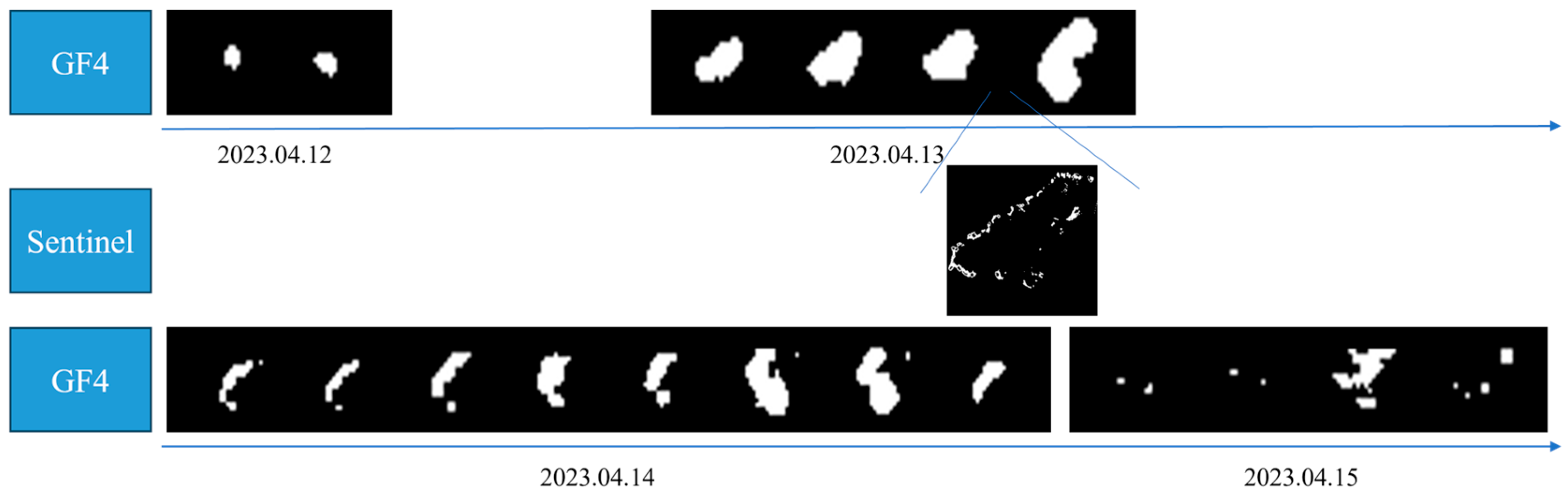

5.2. All-Weather Monitoring and Cross-Utilization of Data

6. Discussion

6.1. Perspective on Algorithms and Datasets

6.2. Perspective on Data-Driven Technology and Management

6.3. Perspective on an Integrated Framework of Data, Algorithms, and Visualization for Fire Detection

7. Conclusions

- (1) Data-driven scheduling technology: The proposed technology addresses the complexity of multi-source data processing by automating the entire workflow, from data acquisition to fire detection and early warning, within one hour. It also supports priority scheduling of data to improve emergency responsiveness.

- (2) Integrated data–algorithm–visualization framework: Validated on real fire events in the Anning and Jinning Districts of Yunnan Province, the framework performed well in all-weather, real-time monitoring and rapid response. It enables dynamic fire tracking and fine-scale detection by exploiting the cross-applicability of heterogeneous data sources. The framework also incorporates asynchronous task scheduling and a robust data storage and management architecture, which keep the system efficient and stable, together with an intuitive visualization interface that supports disaster analysis and statistics.
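The conclusions describe a fully automated workflow from data acquisition to early warning, run with asynchronous task scheduling. The sketch below illustrates that pattern with Python's `asyncio`: one worker per stage, connected by queues, so a new scene can enter acquisition while an earlier one is still in detection. The stage names follow the workflow in the text, but the stage bodies are hypothetical placeholders that only log their progress, and the choice of `asyncio` queues is an assumption rather than the authors' stack.

```python
import asyncio
from typing import List, Optional, Tuple

STAGES = ["acquire", "preprocess", "detect", "warn"]  # hypothetical stage names


async def stage(name: str, inbox: asyncio.Queue, outbox: Optional[asyncio.Queue],
                log: List[Tuple[str, str]]) -> None:
    """Worker for one pipeline stage: consume scenes, 'process' them, forward them."""
    while True:
        scene = await inbox.get()
        if scene is None:                  # shutdown sentinel
            if outbox is not None:
                await outbox.put(None)     # propagate shutdown downstream
            return
        await asyncio.sleep(0)             # placeholder for real I/O-bound work
        log.append((name, scene))
        if outbox is not None:
            await outbox.put(scene)


async def run_pipeline(scenes: List[str]) -> List[Tuple[str, str]]:
    """Chain one worker per stage with queues and push every scene through."""
    queues = [asyncio.Queue() for _ in STAGES]
    log: List[Tuple[str, str]] = []
    workers = []
    for i, name in enumerate(STAGES):
        outbox = queues[i + 1] if i + 1 < len(STAGES) else None
        workers.append(asyncio.create_task(stage(name, queues[i], outbox, log)))
    for scene in scenes:
        await queues[0].put(scene)
    await queues[0].put(None)
    await asyncio.gather(*workers)
    return log


if __name__ == "__main__":
    for entry in asyncio.run(run_pipeline(["GF-4_scene", "Sentinel-2_scene"])):
        print(entry)
```

Entries from different scenes may interleave in the log, but each scene always passes through the stages in order, which is the property a closed-loop workflow needs.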

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Area | Method | TP | FN | FP | Precision (P) | Recall (R) | F-Score | IoU | F-Score 95% CI | McNemar-p |
|---|---|---|---|---|---|---|---|---|---|---|
| Anning * | Improved automatic threshold | 12 | 4 | 0 | 1.000 | 0.750 | 0.857 | 0.750 | 0.6667–0.9714 | 0.0078 |
| Anning * | Fixed threshold | 4 | 12 | 0 | 1.000 | 0.250 | 0.400 | 0.250 | 0.1053–0.6667 | |
| Jinning | Improved automatic threshold | 92 | 14 | 3 | 0.968 | 0.868 | 0.915 | 0.844 | 0.8725–0.9524 | 0.6072 |
| Jinning | Fixed threshold | 87 | 19 | 1 | 0.989 | 0.821 | 0.897 | 0.813 | 0.8478–0.9384 | |
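The P, R, F-score and IoU columns follow from the TP/FN/FP counts through the standard definitions P = TP/(TP + FP), R = TP/(TP + FN), F = 2PR/(P + R) and IoU = TP/(TP + FN + FP); the sketch below recomputes them for the GF-4 rows. It also includes an exact (binomial) McNemar test. The table does not report the discordant counts b and c, so the example assumes, as the Anning TP/FN counts permit but do not prove, that every fire pixel found by the fixed threshold was also found by the improved algorithm (b = 8, c = 0). Under that assumption the two-sided p-value is 2 × 0.5⁸ ≈ 0.0078, which agrees with the reported value.

```python
from __future__ import annotations

from math import comb


def metrics(tp: int, fn: int, fp: int) -> dict[str, float]:
    """Precision, recall, F-score and IoU from confusion counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    iou = tp / (tp + fn + fp) if tp + fn + fp else 0.0
    return {"P": p, "R": r, "F": f, "IoU": iou}


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from discordant counts b and c
    (b: detected only by method A, c: detected only by method B)."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    rows = {
        "Anning, improved automatic threshold": (12, 4, 0),
        "Anning, fixed threshold": (4, 12, 0),
        "Jinning, improved automatic threshold": (92, 14, 3),
        "Jinning, fixed threshold": (87, 19, 1),
    }
    for name, counts in rows.items():
        print(name, {k: round(v, 3) for k, v in metrics(*counts).items()})
    # Assumed discordant counts for Anning (not reported in the table)
    print(round(mcnemar_exact(8, 0), 4))  # → 0.0078
```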

| Sensor | Area | Method | TP | FN | FP | Precision (P) | Recall (R) | F-Score | IoU | F-Score 95% CI | McNemar-p |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Landsat 8 | Anning | Improved automatic threshold | 41 | 1 | 7 | 0.854 | 0.976 | 0.911 | 0.837 | 0.8421–0.9655 | 1 |
| Landsat 8 | Anning | U-Net | 41 | 1 | 6 | 0.872 | 0.976 | 0.921 | 0.854 | 0.8537–0.9739 | |
| Sentinel-2 | Jinning * | Improved automatic threshold | 1823 | 176 | 216 | 0.894 | 0.912 | 0.903 | 0.823 | 0.8932–0.9122 | 1.3061 × 10⁻¹⁴ |
| Sentinel-2 | Jinning * | U-Net transfer | 1559 | 440 | 173 | 0.900 | 0.780 | 0.836 | 0.718 | 0.8228–0.8482 | |
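Both accuracy tables report a 95% confidence interval for each F-score, but this excerpt does not state how the intervals were obtained. One common approach, assumed here purely for illustration, is a percentile bootstrap over the evaluated units: rebuild the list of TP/FN/FP outcomes, resample it with replacement, recompute the F-score each time, and take the 2.5th and 97.5th percentiles. The resampling unit, the number of resamples, and the seed are hypothetical, so the resulting bounds need not reproduce the published ones.

```python
import numpy as np


def f_score(tp: int, fn: int, fp: int) -> float:
    """F-score written as 2TP / (2TP + FN + FP), equivalent to 2PR / (P + R)."""
    denom = 2 * tp + fn + fp
    return float(2 * tp / denom) if denom else 0.0


def bootstrap_f_ci(tp: int, fn: int, fp: int, n_boot: int = 2000,
                   alpha: float = 0.05, seed: int = 0):
    """Percentile-bootstrap confidence interval for the F-score.

    Each evaluated unit is encoded by its outcome (0 = TP, 1 = FN, 2 = FP);
    true negatives are not needed because the F-score ignores them."""
    rng = np.random.default_rng(seed)
    outcomes = np.repeat([0, 1, 2], [tp, fn, fp])
    stats = np.empty(n_boot)
    for b in range(n_boot):
        sample = rng.choice(outcomes, size=outcomes.size, replace=True)
        counts = np.bincount(sample, minlength=3)
        stats[b] = f_score(int(counts[0]), int(counts[1]), int(counts[2]))
    lo, hi = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return f_score(tp, fn, fp), (float(lo), float(hi))


if __name__ == "__main__":
    # Counts taken from the Landsat 8 / Sentinel-2 accuracy table
    for label, counts in {
        "Landsat 8, Anning, improved threshold": (41, 1, 7),
        "Sentinel-2, Jinning, U-Net transfer": (1559, 440, 173),
    }.items():
        point, (lo, hi) = bootstrap_f_ci(*counts)
        print(f"{label}: F = {point:.3f}, 95% CI approx. {lo:.4f}-{hi:.4f}")
```

Treating each TP/FN/FP unit as independent is a simplification; spatially clustered fire pixels would call for a block bootstrap if the intervals are to be interpreted strictly.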

| Field Name | Data Type | Length | Nullable | Unique | Description |
|---|---|---|---|---|---|
| id | String | 100 | No | Yes | Unique identifier |
| autotask_id | String | 100 | No | Yes | Auto-download task ID |
| username | String | 50 | No | No | Associated user |
| jobId | Integer | / | No | Yes | Job ID |
| acquisition_time | DateTime | / | No | No | Image acquisition time |
| production_time | DateTime | / | Yes | No | Fire detection time |
| confidence | String | 100 | No | No | Fire confidence level |
| bright_data | Float | / | No | No | Pixel brightness (temperature) value |
| sensor | String | 50 | Yes | No | Sensor type |
| lon | Float | / | No | No | Longitude |
| lat | Float | / | No | No | Latitude |
| province | String | 50 | No | No | Province-level administrative region |
| city | String | 50 | No | No | City-level administrative region |
| county | String | 50 | No | No | County-level administrative region |
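The fire-point table stores province, city and county fields, which is what the administrative-level statistics in the visualization interface rely on. The sketch below mirrors a subset of the schema as a Python dataclass (String → `str`, Integer → `int`, Float → `float`, DateTime → `datetime`; nullable fields become `Optional`) and counts fire points per administrative unit. The `FirePoint` class, the `count_by_level` helper and all sample records are hypothetical; in a deployed system this would more likely be a database `GROUP BY` query.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass
class FirePoint:
    """Subset of the fire-point table fields (names as in the schema)."""
    id: str
    jobId: int
    acquisition_time: datetime
    confidence: str
    bright_data: float                           # pixel brightness (temperature) value
    lon: float
    lat: float
    province: str
    city: str
    county: str
    sensor: Optional[str] = None                 # nullable in the schema
    production_time: Optional[datetime] = None   # nullable in the schema


LEVELS = ("province", "city", "county")


def count_by_level(points: Iterable[FirePoint], level: str) -> Counter:
    """Count fire points per administrative unit at the given level.

    City and county keys include their parent units so that identically
    named units in different regions are not merged."""
    if level not in LEVELS:
        raise ValueError(f"level must be one of {LEVELS}")
    depth = LEVELS.index(level) + 1
    return Counter(tuple(getattr(p, lvl) for lvl in LEVELS[:depth]) for p in points)


if __name__ == "__main__":
    t = datetime(2025, 1, 1, 12, 0)              # hypothetical timestamp
    pts = [
        FirePoint("p1", 1, t, "high", 330.2, 102.47, 24.92, "Yunnan", "Kunming", "Anning"),
        FirePoint("p2", 2, t, "low", 318.5, 102.48, 24.91, "Yunnan", "Kunming", "Anning"),
        FirePoint("p3", 3, t, "high", 335.0, 102.60, 24.67, "Yunnan", "Kunming", "Jinning"),
    ]
    print(count_by_level(pts, "county"))
```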

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, B.; Jia, W.; Wang, Q.; Yang, G. All-Weather Forest Fire Automatic Monitoring and Early Warning Application Based on Multi-Source Remote Sensing Data: Case Study of Yunnan. Fire 2025, 8, 344. https://doi.org/10.3390/fire8090344
