Abstract

Forest fires are a global environmental threat to human life and ecosystems. This study compiles smoke alarm images collected over one year from five high-definition surveillance cameras in Foshan City, Guangdong, China, to create a smoke-based early warning dataset. The dataset presents two key challenges: (1) high false positive rates caused by pseudo-smoke interference, including non-fire conditions like cooking smoke and industrial emissions, and (2) significant regional data imbalances, influenced by varying human activity intensities and terrain features, which impair the generalizability of traditional pre-train–fine-tune strategies. To address these challenges, we explore the use of vision-language models to differentiate between true alarms and false alarms. Our method incorporates a prompt tuning strategy that improves performance by at least 12.45% in zero-shot learning tasks and also improves performance in few-shot learning tasks, demonstrating better regional generalization than the baselines.

1. Introduction

Forest fires represent a catastrophic phenomenon with far-reaching implications for the global ecosystem, economy, and human well-being. The impact of these uncontrolled blazes extends from the loss of biodiversity and habitats to the exacerbation of climate change through the release of vast amounts of carbon dioxide into the atmosphere [1,2]. From an economic perspective, forest fires impose significant financial burdens in several respects, such as the allocation of resources to firefighting efforts, the reconstruction of affected areas, and the loss of forest resources [3,4]. Moreover, the societal impact is profound, with the potential for loss of human life and the displacement of communities [5,6]. The destructive nature of forest fires necessitates robust and proactive detection systems to mitigate their occurrence and impact [7,8].

In the context of forest fire detection, smoke is typically detected before the flames become visible, making it a crucial indicator for early detection systems. Therefore, current mainstream technologies often focus on identifying the occurrence of forest fires by observing smoke [9,10]. Traditional detection methods rely on smoke detectors to sense smoke produced by combustion [11]. However, in open outdoor environments, smoke tends to disperse rapidly and is challenging to monitor effectively. Additionally, fine particulate matter such as dust in the atmosphere may interfere with the proper functioning of detectors, leading to reduced detection efficiency and accuracy. In contrast, image-based smoke recognition technology, with its broad detection range and cost-effectiveness, has been widely applied in the field of forest fire early warning detection [12]. Traditional image processing techniques for smoke detection have relied on features such as color, texture, and motion to distinguish smoke from the background [13,14]. However, these methods are often limited by their inability to generalize across varying environmental conditions and the diversity of smoke appearances.

The evolution of forest fire detection methods reflects both technological progress and persistent challenges. Early approaches relied on physical patrols and lookout stations [15], later supplemented by sensor networks and satellite monitoring. The advent of deep learning brought significant breakthroughs through architectures like DeepSmoke’s CNN framework [16] and Xu et al.’s dual-stream network combining pixel-level and object-level analysis [17]. Subsequent innovations include Yuan et al.’s waveform neural network for smoke concentration prediction [18], Azim’s fuel classification model for emergency planning [19], and Qiang et al.’s TRPCA-enhanced video analysis [20]. While these methods improved detection accuracy, practical deployment revealed limitations in handling geographic variations and pseudo-smoke interference. The YOLO-PCA algorithm [21] addressed efficiency through principal component analysis, and FireFormer [22] demonstrated exceptional performance in specific wildland–urban interface (WUI) environments. Nevertheless, cross-regional adaptation remains challenging due to data distribution shifts.

The deep learning revolution has transformed wildfire detection through enhanced feature extraction capabilities. Modern architectures overcome manual feature engineering limitations via end-to-end learning [23,24], with transfer learning enabling effective knowledge transfer from pre-trained models [25]. Notable advancements include FireFormer [22], which integrates Swin Transformer’s multi-scale hierarchical representation [26] for smoke pattern recognition in wildland–urban interface environments. Nevertheless, three fundamental challenges persist.

First, current detection systems demonstrate heightened sensitivity to pseudo-smoke phenomena—including cooking smoke and industrial emissions—resulting in excessive false positives. This limitation stems from disproportionate emphasis on feature localization over comprehensive risk assessment. Second, smoke classification research predominantly operates within constrained datasets exhibiting low inter-class confusion, creating significant gaps in adversarial robustness research. Third, practical deployment encounters data scarcity challenges due to wildfire unpredictability and geographical diversity, resulting in dispersed feature distributions that complicate model adaptation [27].

Few-shot learning emerges as a promising paradigm for addressing data paucity through prior knowledge utilization from large labeled datasets [28]. However, it must resolve two critical challenges: category shifts between training and testing distributions and extreme label scarcity [29]. While conventional mitigation strategies like data augmentation provide partial solutions, they fail to prevent model overfitting (where a model memorizes limited examples instead of learning general patterns) when fine-tuning with limited samples.

Recent advances in prompt engineering offer novel adaptation strategies for large pre-trained models. By integrating task-specific prompts—either through linguistic instructions [30,31] or learnable embeddings [32]—models can better leverage pre-acquired knowledge for downstream tasks.

Building on CLIP’s vision-language alignment capabilities [33], recent prompt tuning methods have enhanced model adaptability. CoOp [34] introduced learnable text prompts, while MaPLe [35] extended this to multi-modal prompting through deep visual–linguistic adaptation. Subsequent innovations like PromptSRC’s self-regularization [36] and ProMetaR’s meta-regularization [37] improved generalization through the explicit prevention of representation overfitting. Architectural advancements include DePT’s feature space decoupling [38] and MetaPrompt’s domain-invariant tuning [39], which address the base–new tradeoff through meta-learning strategies. These developments establish prompt engineering as a powerful paradigm for few-shot adaptation—a critical requirement for wildfire detection systems facing data scarcity.

The prompt tuning paradigm demonstrates particular promise through its parameter-efficient adaptation mechanism, achieving superior few-shot performance compared to conventional transfer learning [32].

In this work, we introduce the FireCLIP model for forest fire smoke detection that integrates prompt tuning (adapting models via task-specific input instructions rather than full retraining) to address the challenges of transfer learning in new regions and few-shot learning due to data scarcity. FireCLIP is based on the large-scale vision-language pre-trained model CLIP [33] and is adapted to the specific task of smoke detection through prompt tuning. This method aims to enhance the model’s ability to generalize from a small number of examples and to adapt to the unique characteristics of smoke in a new domain.

Key Innovations:

- Identification of dual challenges in forest fire detection: pseudo-smoke false positives and regional data imbalance.

- Empirical validation of vision-language prompt learning’s effectiveness in addressing these challenges through adaptive feature extraction and cross-modal understanding.

- Contribution of a novel smoke recognition benchmark dataset featuring high inter-class confusion, specifically designed to evaluate model robustness against pseudo-smoke phenomena.

2. Materials and Methods

2.1. Wildland–Urban Interface Smoke Alarm Dataset (WUISAD)

Foshan City, located in the central-southern part of Guangdong Province, China, covers a total area of 3797.72 square kilometers. The city is one of the most important bases for manufacturing in the country, with a highly developed industrial system. Geographically, the western part of Foshan City is primarily characterized by low hills and hillocks. In terms of climate, the city is located within the subtropical monsoon climate zone, with an average annual temperature of about 22.5 °C and an average annual precipitation of approximately 1681.2 mm. Such climatic conditions are extremely conducive to the growth and flourishing of forests and various types of vegetation. Consequently, in areas where forests and urban residential or industrial zones are adjacent, a typical wildland–urban interface (WUI) landscape has been formed.

We used high-resolution cameras equipped with conventional detection algorithms to capture images exhibiting smoke characteristics. To obtain a diverse and representative dataset, we deployed five long-range surveillance cameras across various regions of Foshan City. Each camera can operate within a radius of 0–8 km. The cameras combine visible-light and infrared imaging, use DC brushless motors for rotation, and carry a visible-light lens with a focal length of 8–560 mm, enabling up to 70× zoom. With a resolution exceeding eight megapixels and a maximum image resolution of 3840 × 2160 pixels, these cameras are well suited to remote monitoring applications, particularly in protected areas such as forest fire prevention zones.

After excluding images without distinct smoke features, we meticulously categorized the remaining samples into five types: Field Burning and Wildfires, Illuminated by Light, Industrial Emissions, Mist and Water Vapor, and Village Cooking Smoke, as shown in Table 1. The “Field Burning and Wildfires” class focuses on detecting agricultural burning activities, which are not major wildfire incidents themselves, but represent critical fire-ignition behaviors demanding early identification for effective forest fire prevention.

Table 1.

Various examples of smoke alarm images. All labels are concatenated with the prompt before being input into the text encoder of CLIP. This allows the model to better differentiate between various types of samples based on text labels rather than abstract numerical labels.
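The label-to-text step described in this caption can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the five class names listed above and the template 'a photo of a' mentioned in the implementation details. The helper name `build_text_prompts`, the lowercasing, and the trailing period are assumptions made for the example.

```python
# Class names as listed for the five WUISAD categories.
CLASS_NAMES = [
    "Field Burning and Wildfires",
    "Illuminated by Light",
    "Industrial Emissions",
    "Mist and Water Vapor",
    "Village Cooking Smoke",
]


def build_text_prompts(class_names, template="a photo of a"):
    """Concatenate a prompt template with each text label.

    Lowercasing and the trailing period are assumptions for this sketch;
    the paper only states that labels are concatenated with the prompt.
    """
    return [f"{template} {name.lower()}." for name in class_names]


prompts = build_text_prompts(CLASS_NAMES)
for prompt in prompts:
    print(prompt)
```

Each resulting string would then be tokenized and passed to the text encoder, so the classifier works with descriptive text rather than integer class indices.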

Furthermore, we subdivided these samples into five sub-datasets based on geographic regions. Images from Datasets A to E were collected by multiple high-definition cameras located in various areas of Foshan City. The specific locations of each camera and the distribution of images within the datasets are shown in Table 2.

Table 2.

Distribution of images in each dataset.

Given that Datasets A and B are both of considerable size, and that their combination can form a more evenly distributed dataset, we decided to merge these two datasets into a single unified dataset. This integration aimed to construct a pre-training dataset that was more balanced across various label classifications, thereby enhancing the model’s generalization capability and performance.

Due to the distinct characteristics of the remaining three datasets and the relatively limited amount of available data, we decided to utilize them as datasets for downstream tasks. This approach was designed to simulate the practical challenges that may be encountered during the generalization process of pre-trained models, thereby assessing and enhancing the model’s adaptability and application potential in data-scarce environments.

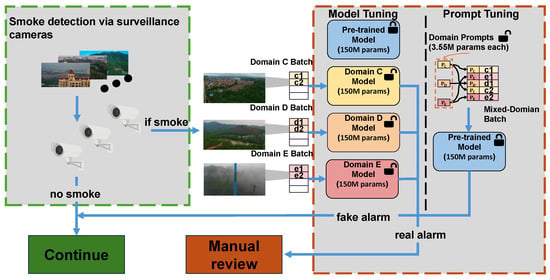

2.2. Forest Fire Detection System

Figure 1 presents a forest fire detection system that detects and classifies potential fire threats through a series of steps. The system initially utilizes high-definition surveillance cameras deployed in areas prone to fires to capture real-time environmental images.

Figure 1.

Forest fire detection system. In this figure, C, D, and E represent the downstream task regions, as detailed in Table 2. c1, d1, e1, etc., denote the image data corresponding to the respective regions, and the prompt symbols shown alongside each region refer to region-specific prompts. The locked symbols in the figure indicate pre-trained models that have not been fine-tuned for specific regions, while the unlocked symbols represent models or prompts that have been fine-tuned for those regions. The model parameters in the figure are based on the CLIP ViT-B/16 version. For a detailed explanation of the system in Figure 1, please refer to Section 2.2.

These cameras integrate cutting-edge imaging technology, enabling the continuous detection of smoke features. After capturing the images, the system initiates a preliminary screening process that automatically identifies and locates potential problem areas, such as the presence of smoke, through image processing algorithms. This recognition process is executed by analyzing patterns and features related to smoke within the images. Once the system detects smoke, it further classifies the smoke based on risk levels, distinguishing between high-risk situations that may indicate an imminent forest fire and low-risk situations.

The classification phase is a complex and critical step. Traditionally, it has been handled by fine-tuning pre-trained models on large task-specific datasets. Fine-tuning (model tuning) [40] refers to the process of adjusting and optimizing the parameters of a pre-trained model to improve its performance on a specific task or dataset. This typically involves updating certain layers of the model on a task-specific dataset and choosing hyperparameters such as the learning rate, regularization terms, or the number of training epochs. The goal of model tuning is to enhance the model’s generalization ability and accuracy without overfitting to the new data. However, this approach often performs poorly in scenarios where data is scarce or unevenly distributed across regions.

The system can also adopt a prompt tuning strategy, an innovative method to address the aforementioned challenges. Prompt tuning enables a model to adapt to new tasks and datasets with minimal additional training by introducing task-specific prompts into the input of a pre-trained model. This method is particularly effective in data-limited contexts as it allows the model to generalize effectively by leveraging the knowledge encoded in the pre-trained parameters.

The advantages of prompt tuning over traditional fine-tuning are multifaceted. Firstly, it significantly reduces the computational resources required, as the model does not need to relearn all parameters. Secondly, it enhances the model’s adaptability, enabling it to quickly adjust to new scenarios or regions with varying environmental conditions. Lastly, by utilizing region-specific prompts, the system can deploy a single model to handle forest fire detection tasks across multiple areas.

2.3. Multi-Modal Prompt Tuning (MaPLe)

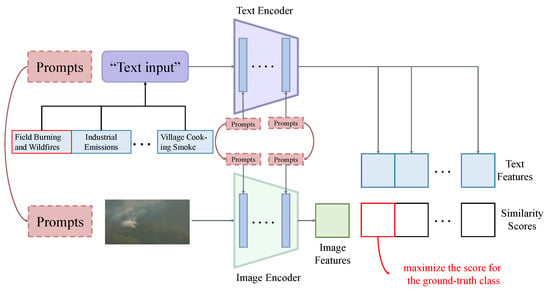

MaPLe [35] is an improvement over the CLIP framework, incorporating learnable prompts into both the visual and linguistic branches of CLIP to enhance the model’s generalization capabilities. The framework is shown in Figure 2.

Figure 2.

Working principle of MaPLe in forest fire detection. We concatenate the text and image with their corresponding prompt vectors and input them into the model simultaneously. The model then extracts features for both the text and the image. Subsequently, the similarity between these two types of features is computed, and the candidate label with the highest similarity score is selected as the prediction result.
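The final matching step in this caption (compute the similarity between image and text features, then pick the best-scoring label) can be sketched as below. The example is only illustrative: the three-dimensional vectors are toy stand-ins for real encoder outputs, cosine similarity is assumed as the similarity measure (as in CLIP), and `predict_label` is a hypothetical helper name.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def predict_label(image_feature, text_features, labels):
    """Return the label whose text feature is most similar to the image."""
    scores = [cosine_similarity(image_feature, t) for t in text_features]
    best = max(range(len(labels)), key=lambda i: scores[i])
    return labels[best], scores


# Toy features standing in for the outputs of the two encoders.
labels = [
    "Field Burning and Wildfires",
    "Industrial Emissions",
    "Mist and Water Vapor",
]
text_features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_feature = [0.2, 0.9, 0.1]

predicted, scores = predict_label(image_feature, text_features, labels)
print(predicted)
```

With these toy vectors the image feature points mostly along the second text feature, so the second label is selected.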

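Specifically, MaPLe introduces $b$ learnable tokens $\{P^i\}_{i=1}^{b}$ into the linguistic branch of the CLIP model. The input embeddings take the form of $[P^1, P^2, \ldots, P^b, W_0]$, with $W_0 = [w^1, w^2, \ldots, w^N]$ corresponding to fixed input tokens. Additional learnable tokens are integrated at each transformer block of the language encoder ($\mathcal{L}_i$) up to a specified depth $J$. Following the notation of MaPLe [35], the equations are as follows:

$$[\,\_\,, W_i] = \mathcal{L}_i([P_{i-1}, W_{i-1}]), \quad i = 1, \ldots, J \tag{1}$$

Here, $[\cdot, \cdot]$ denotes the concatenation operation. After the $J$th transformer layer, subsequent layers (up to the last layer $K$) process the previous layer’s prompts and compute the final text representation $z$, which is projected into a common latent embedding space via TextProj,

$$[P_j, W_j] = \mathcal{L}_j([P_{j-1}, W_{j-1}]), \quad j = J+1, \ldots, K \tag{2}$$

$$z = \mathrm{TextProj}(w_K^N) \tag{3}$$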

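Similarly to language prompt learning methods, learnable vision prompts (denoted $\tilde{P}$) are introduced within the visual branch of the CLIP model, with new learnable tokens being integrated deeper into the transformer layers of the image encoder ($\mathcal{V}_i$) up to depth $J$.

Following the notation of MaPLe [35], with $c_i$ the class token and $E_i$ the image patch embeddings at layer $i$, the equations are presented as follows:

$$[c_i, E_i, \_\,] = \mathcal{V}_i([c_{i-1}, E_{i-1}, \tilde{P}_{i-1}]), \quad i = 1, \ldots, J \tag{4}$$

$$[c_j, E_j, \tilde{P}_j] = \mathcal{V}_j([c_{j-1}, E_{j-1}, \tilde{P}_{j-1}]), \quad j = J+1, \ldots, K \tag{5}$$

$$x = \mathrm{ImageProj}(c_K) \tag{6}$$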

A multimodal approach is essential for prompt tuning: both the vision and language branches of CLIP should be adapted simultaneously to achieve comprehensive context optimization. MaPLe therefore proposes a multimodal prompting method that tunes the vision and language branches of CLIP together by sharing prompts across both modalities. Language prompt tokens are introduced in the language branch up to the $J$th transformer block, akin to deep language prompting. To ensure synergy between prompts, vision prompts $\tilde{P}$ are obtained by projecting language prompts $P$ via a vision-to-language projection, referred to as the coupling function $\mathcal{F}(\cdot)$.

The coupling is given by

$$\tilde{P}_k = \mathcal{F}_k(P_k) \tag{7}$$

Here, $\mathcal{F}_k(\cdot)$ is a linear layer that maps $d_l$-dimensional inputs to $d_v$. This serves as a bridge between the two modalities, encouraging the mutual propagation of gradients.

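The full equations, in which the vision prompts of the first $J$ layers are replaced by projected language prompts, are as follows (notation as in MaPLe [35]):

$$[c_i, E_i, \_\,] = \mathcal{V}_i([c_{i-1}, E_{i-1}, \mathcal{F}_{i-1}(P_{i-1})]), \quad i = 1, \ldots, J \tag{8}$$

$$[c_j, E_j, \tilde{P}_j] = \mathcal{V}_j([c_{j-1}, E_{j-1}, \tilde{P}_{j-1}]), \quad j = J+1, \ldots, K \tag{9}$$

$$x = \mathrm{ImageProj}(c_K) \tag{10}$$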

Through the aforementioned methodology, MaPLe effectively captures the interaction between the visual and linguistic branches when fine-tuning pre-trained CLIP models, thereby improving the model’s generalization capabilities to novel categories, new target datasets, and unseen domain shifts.

3. Results

3.1. Benchmark Setting

To evaluate the effectiveness of FireCLIP, this study selected FireFormer [22], ConvNeXt [41], and EfficientNetV2 [42] as the primary baseline models. FireFormer has demonstrated outstanding performance in relevant domains. ConvNeXt, an optimized version of traditional convolutional neural networks, integrates design principles from Vision Transformers to enhance model expressiveness. EfficientNetV2, a highly efficient convolutional neural network, achieves an optimal balance among network depth, width, and resolution, exhibiting superior computational efficiency and feature representation capabilities. Through comparative analysis with these baseline models, this study aims to comprehensively assess the advantages and application potential of FireCLIP.

The experimental setup is as follows:

- Pre-training: We trained the baseline models and FireCLIP on the pre-training dataset and compared their performance and efficiency.

- Zero-shot Transfer: We directly applied the pre-trained model to the dataset of a downstream task to observe the differences in generalization ability between baselines and FireCLIP methods.

- Few-shot Learning: We conducted few-shot learning with the pre-trained model on the dataset of a downstream task. The learned model was then tested on both the pre-training dataset and the downstream task dataset to assess the performance of baselines and FireCLIP in 4-, 8-, 16-, and 32-shot settings.

For fair comparison, all baseline methods underwent identical training settings during both the pre-training and few-shot learning phases. Model versions are specified in Table 3.

Table 3.

Comparison of models, parameters, and FPS. The FPS in the table indicates the number of images per second that the model is able to process during evaluation. “ViT-B/16” for FireCLIP refers to its visual encoder architecture and 154 M represents the total parameters including the language encoder.

3.2. Evaluation Setting

To account for class imbalance and to measure single-category precision in the classification task, four commonly used classification metrics were adopted to comprehensively evaluate model performance, as defined in Equations (11)–(14):

where TP is true positives with a positive label and positive model prediction, TN is true negatives with a negative label and negative model prediction, FP is false positives with positive prediction but a negative label, and FN is false negatives with negative prediction but a positive label.
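As an illustration of how such metrics follow from the four counts, the sketch below assumes that the four metrics are accuracy, precision, recall, and F1-score. That choice is an assumption (the text here does not name them), and the function name and the example counts are hypothetical.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute four common metrics from confusion-matrix counts.

    Assumed metric set: accuracy, precision, recall, F1-score.
    Zero denominators yield 0.0 instead of raising an error.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts, chosen only to exercise the formulas.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Precision penalizes false alarms (FP), while recall penalizes missed fires (FN), which is why both are tracked alongside overall accuracy.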

3.3. Implementation Details

We conducted experiments on two datasets, namely the WUISAD and FASDD [43] datasets. FASDD is a large-scale, multi-source collection comprising 122,634 samples of fire-related phenomena (flames and smoke) and non-fire distractors. This community-driven dataset features crowdsourced annotations and multimodal visual data collected from three distinct visual sensor modalities: surveillance cameras, unmanned aerial vehicles (UAVs), and satellite imaging. We performed binary classification tasks on the two subsets, FASDD_CV (surveillance camera-captured data) and FASDD_UAV (UAV-acquired imagery), to distinguish between the presence and absence of fire and smoke.

We employed a few-shot training strategy in all experiments, with random sampling for each class. All experiments were conducted on a single A100 80 GB GPU, with the batch size set to 32 and images resized to 224 × 224. The reported results for all few-shot learning tasks are averages over three random samplings. We performed a five-class classification task on the WUISAD dataset, training all models for 100 epochs. For the FASDD dataset, we conducted a two-class classification task and limited training to five epochs. This configuration was adopted because the WUISAD dataset is significantly more complex and harder to classify than FASDD.
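The per-class random sampling and the averaging over three samplings can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names `sample_k_shot` and `mean_over_runs`, the seeds, and the toy sample pool are all hypothetical, and the accuracy values passed to `mean_over_runs` are placeholders rather than results from the paper.

```python
import random
from collections import defaultdict


def sample_k_shot(samples, k, seed):
    """Draw up to k random samples per class.

    samples: list of (image_id, label) pairs.
    Returns a list of (image_id, label) pairs.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for image_id, label in samples:
        by_class[label].append(image_id)
    subset = []
    for label in sorted(by_class):
        ids = by_class[label]
        chosen = rng.sample(ids, min(k, len(ids)))
        subset.extend((image_id, label) for image_id in chosen)
    return subset


def mean_over_runs(values):
    """Average a metric over repeated samplings."""
    return sum(values) / len(values)


# Toy pool: three classes with ten images each.
pool = [(f"img_{label}_{i}", label) for label in ("a", "b", "c") for i in range(10)]

# Three independent 4-shot samplings, one per seed.
subsets = [sample_k_shot(pool, k=4, seed=s) for s in (0, 1, 2)]

# Placeholder accuracies for the three runs (not values from the paper).
average_accuracy = mean_over_runs([0.5, 0.75, 1.0])
print(len(subsets[0]), average_accuracy)
```

Fixing the seed per run keeps each sampling reproducible, while averaging over several seeds reduces the variance that comes from drawing very small training sets.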

Prompt tuning was applied to a pre-trained ViT-B/16 CLIP model, which was trained on the WIT dataset, containing 400 million (image, text) pairs. We set prompt depth J to 9 and the language and vision prompt lengths to 2. We initialized the language prompts with the pre-trained CLIP word embeddings of the template ‘a photo of a’. Other settings are the same as in MaPLe.

3.4. Performance Comparison

3.4.1. Pre-Training

This section investigates the learning capacities of the baseline models and FireCLIP across datasets from various regions. During training, the baseline models fine-tune all parameters, whereas FireCLIP employs a more parameter-efficient approach by freezing the pre-trained parameters of CLIP and solely learning prompts. Consequently, there is a substantial difference in the number of parameters being learned, with the baseline models utilizing at least 119.5 million parameters compared to FireCLIP’s 3.55 million parameters.
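The 3.55 million figure can be checked with a short back-of-the-envelope calculation. The sketch below uses the prompt depth of 9 and prompt length of 2 given in Section 3.3, and assumes token widths of 512 (text) and 768 (vision) for CLIP ViT-B/16. It further assumes one linear coupling layer with bias per prompted layer; these architectural details are assumptions for the estimate, not a statement of the authors' exact implementation.

```python
def maple_trainable_params(prompt_len, depth, d_text, d_vision, bias=True):
    """Estimate the number of learnable parameters in MaPLe-style tuning.

    Assumes learnable language prompts at each of `depth` layers and one
    linear coupling layer (d_text -> d_vision) per prompted layer.
    """
    # Learnable language prompt tokens across all prompted layers.
    prompt_params = prompt_len * d_text * depth
    # Weights (and optional biases) of the coupling layers.
    per_layer = d_text * d_vision + (d_vision if bias else 0)
    coupling_params = depth * per_layer
    return prompt_params + coupling_params


total = maple_trainable_params(prompt_len=2, depth=9, d_text=512, d_vision=768)
print(total)  # 3555072
print(f"{total / 1e6:.2f} M")
```

Under these assumptions almost all trainable parameters sit in the coupling layers (about 3.546 million), and the prompt tokens themselves contribute fewer than ten thousand.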

Table 4 presents the pre-training results of various methods on the FASDD dataset. Despite being trained for only five epochs, all methods demonstrated commendable performance. These results further underscore the relatively lower discriminative complexity of the FASDD dataset.

Table 4.

Binary classification accuracy (%) of each model after pre-training on the FASDD dataset.

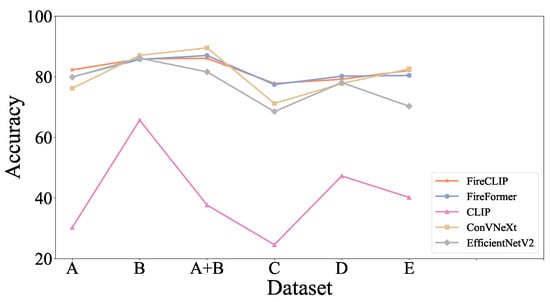

According to Figure 3, the FireCLIP and baseline models demonstrate comparable performance across various datasets of WUISAD. This suggests that integrating CLIP’s prompt tuning method can achieve performance levels similar to the fine-tuned FireFormer, even with a significant reduction in the number of parameters. This finding underscores the potential of parameter-efficient learning approaches.

Figure 3.

Test accuracy of each model across the different datasets of WUISAD. All models were trained except for the CLIP model. The detailed information regarding the dataset can be found in Table 2.

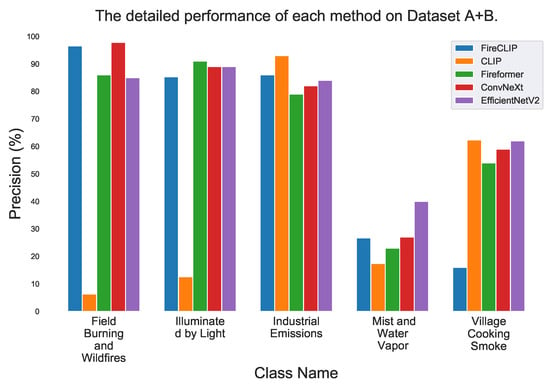

Figure 4 reveals significant differences in precision across five categories among various models. FireCLIP achieves the highest precision, approaching 96.5%, in the “Field Burning and Wildfires” category, but underperforms in “Village Cooking Smoke”.

Figure 4.

Test accuracy of each model on Dataset A + B. All models were trained except for the CLIP model.

In contrast, CLIP exhibits generally weak performance, particularly showing extremely low precision in both “Field Burning and Wildfires” and “Illuminated by Light”, rendering it inadequate for classification purposes. The marked performance improvement of FireCLIP over CLIP highlights the advantages of prompt learning in fire scene classification tasks, suggesting that optimizing prompt learning strategies can effectively enhance the model’s feature extraction and generalization capabilities.

FireFormer and EfficientNetV2 demonstrate relatively stable performance, maintaining high precision across multiple categories, especially excelling in “Industrial Emissions” and “Village Cooking Smoke”, with precision exceeding 80%. ConvNeXt approaches optimal performance in “Field Burning and Wildfires” but shows slightly inferior results in “Village Cooking Smoke”. Overall, “Mist and Water Vapor” emerges as the most challenging category to classify, with all models performing poorly, whereas “Field Burning and Wildfires” is comparatively easier to classify. This performance is consistent with the data distribution.

3.4.2. Zero-Shot Transfer on WUISAD

Before exploring the model’s ability in zero-shot transfer, we first need to grasp the distinctions among the datasets to assess the generalization capabilities of different methods effectively.

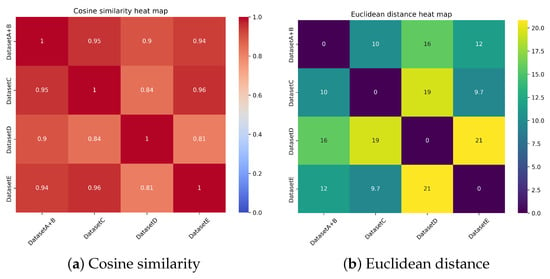

To evaluate this, we utilized a pre-trained ResNet50 [44] model to extract features from multiple image datasets and calculated the cosine similarity and Euclidean distance between these datasets. Specifically, we input all images from each dataset into the pre-trained ResNet50 model to obtain feature vectors for each image. The average of these feature vectors was computed to represent the overall feature profile of each dataset.

Based on the extracted feature vectors, we quantified the similarity and divergence between datasets using cosine similarity and Euclidean distance. Cosine similarity measures the alignment of vector directions, reflecting semantic similarity, with values ranging from −1 to 1, where a higher value indicates greater similarity. In contrast, the Euclidean distance assesses absolute differences by computing the straight-line distance between vectors, with smaller distances indicating closer feature proximity.
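The dataset comparison described above can be sketched in a few lines. The example assumes per-image feature vectors are already available (in the paper they come from a pre-trained ResNet50); the three-dimensional toy vectors and the names `features_c` and `features_d` are placeholders for illustration only.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_similarity(u, v):
    """Alignment of vector directions, in the range [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def euclidean_distance(u, v):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Toy per-image features for two hypothetical datasets.
features_c = [[1.0, 2.0, 0.0], [3.0, 2.0, 0.0]]
features_d = [[0.0, 1.0, 2.0], [0.0, 3.0, 2.0]]

profile_c = mean_vector(features_c)  # [2.0, 2.0, 0.0]
profile_d = mean_vector(features_d)  # [0.0, 2.0, 2.0]

similarity = cosine_similarity(profile_c, profile_d)
distance = euclidean_distance(profile_c, profile_d)
print(f"cosine similarity: {similarity:.3f}")
print(f"euclidean distance: {distance:.3f}")
```

Repeating this for every pair of datasets yields the two symmetric matrices that a heatmap visualizes. The two measures can disagree, because cosine similarity ignores vector magnitude while Euclidean distance does not.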

Figure 5 provides information on similarities and differences between the datasets. The cosine similarity heatmap reveals that Datasets C and E exhibit the highest level of similarity (0.96), indicating their highly aligned feature distributions. Dataset A + B also demonstrates significant similarity with both Dataset C and Dataset E, with values exceeding 0.95. However, Dataset D shows relatively lower similarity with other datasets, particularly with Dataset C and Dataset E (values ranging between 0.84 and 0.9), suggesting that Dataset D possesses more distinct feature characteristics.

Figure 5.

Heatmap comparison of similarity metrics in high-dimensional spaces.

The Euclidean distance heatmap corroborates these findings, where shorter distances signify greater similarity. Dataset C and Dataset E exhibit the smallest Euclidean distance (9.7), reaffirming their close relationship in feature space. Dataset A + B also shows relatively low distances with Dataset C and Dataset E (10–12), supporting their shared feature alignment. Conversely, Dataset D is characterized by consistently higher distances (16–21) compared to other datasets, emphasizing its unique feature distribution.

Table 5 shows the performance of the baseline models and FireCLIP in zero-shot transfer across different domains (Domains C, D, and E). Zero-shot transfer is a method to test the model’s generalization ability, i.e., directly evaluating the model’s performance without any additional training on the target dataset.

Table 5.

Zero-shot transfer performance of different methods on WUISAD.

FireCLIP exhibits the strongest transfer capability across all target domains (Domains C, D, and E), achieving accuracy rates of 70.24% and 66.73% in Domain C and Domain E, respectively. These results significantly outperform other methods, demonstrating its superior cross-domain generalization abilities. Moreover, FireCLIP achieves an average accuracy of 65.07%, which is substantially higher than FireFormer (52.62%), ConvNeXt (45.64%), and EfficientNetV2 (42.34%). The margin over the strongest baseline, FireFormer, is 12.45 percentage points, which highlights FireCLIP’s overall advantage in zero-shot transfer learning.

FireFormer performs second to FireCLIP in the target domains, attaining an accuracy of 66.51% in Domain C, which indicates its robust cross-domain transfer capabilities. However, its performance in certain target domains, such as Domain D and Domain E, still falls short of FireCLIP, suggesting that its adaptability in zero-shot scenarios has room for improvement.

In contrast, ConvNeXt and EfficientNetV2 demonstrate weaker performance, especially in Domain E, where their accuracy rates are only 26.32% and 26.90%, respectively. This reveals a notable deficiency in cross-domain transfer capabilities, indicating that these two methods have limited generalization abilities when faced with tasks involving significant domain distribution differences.

3.4.3. Few-Shot Learning

Table 6 demonstrates the binary classification performance of pre-trained models on the FASDD dataset under few-shot settings. FireCLIP achieves high accuracy (98.33–98.56% across 4–32 shots), significantly outperforming FireFormer (83.62–87.23%), ConvNeXt (81.04–88.60%), and EfficientNetV2 (80.61–82.69%), with performance gaps widening as sample size decreases. These results highlight FireCLIP’s superior few-shot adaptability through vision-language alignment while revealing conventional models’ limitations in low-data regimes.

Table 6.

Binary classification accuracy (%) of each model after pre-training on the FASDD dataset under few-shot settings.

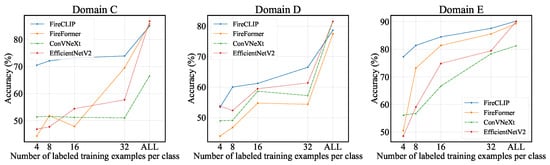

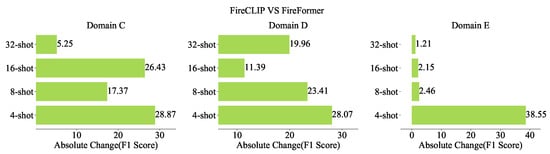

The experimental results depicted in Figure 6 reveal significant performance disparities among the models in few-shot learning scenarios across the different domains (Domain C, Domain D, and Domain E). Notably, FireCLIP consistently performs best. Under few-shot conditions, such as having only four or eight labeled samples per class, FireCLIP achieves markedly higher accuracy than the other benchmark models, demonstrating robust generalization capabilities and affirming its suitability for few-shot learning tasks. In contrast, FireFormer’s performance improves incrementally as the number of samples increases, nearing FireCLIP’s performance when ample data is available (e.g., the ALL condition), yet it still shows a noticeable performance gap under limited sample conditions. EfficientNetV2 and ConvNeXt, meanwhile, show only limited gains in few-shot settings, indicating weaker adaptability to few-shot learning tasks.

Figure 6.

Few-shot learning performance of the different models.

Overall, FireCLIP’s superior performance in few-shot learning underscores its ability to maintain high classification accuracy despite limited labeled data, which is crucial for data-scarce applications such as forest fire detection. FireFormer, on the other hand, demonstrates strong learning capabilities when sufficient samples are available, highlighting its adaptability in resource-rich environments. In comparison, the constrained performance of EfficientNetV2 and ConvNeXt under few-shot conditions limits their potential applicability in related tasks.

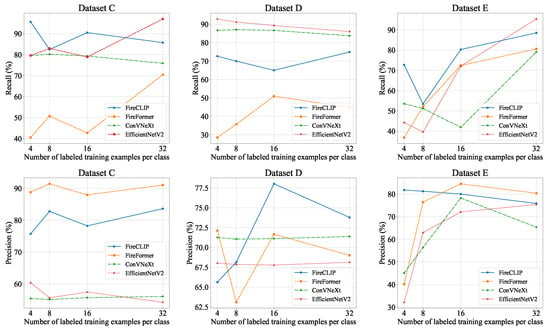

The analysis above shows how well the models identify different types of smoke. In practice, however, a forest fire detection system must both detect fires accurately and keep the false alarm rate low. We therefore focus next on the precision and recall of positive samples, which quantify the models’ performance in detecting forest fire events more directly. Precision is the proportion of actual forest fire events among all events that the model predicts as positive, so it reflects how trustworthy an alarm is. Recall is the proportion of actual forest fire events that the model identifies, so it reflects how completely the model captures fire events.
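The two definitions above can be written as a short Python sketch. The function name `precision_recall`, the label encoding (1 for a real fire event, 0 for pseudo-smoke), and the toy predictions are assumptions made for illustration and are not taken from the study.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall of the positive class from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Guard against empty denominators (no positive predictions or no positives).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical toy example: 3 true positives, 1 false alarm, 1 missed fire.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
precision, recall = precision_recall(y_true, y_pred)  # (0.75, 0.75)
```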

The experimental results presented in Figure 7 demonstrate significant fluctuations in both recall and precision across different methods, particularly when the number of labeled samples is limited (e.g., four or eight samples). For instance, FireCLIP shows a decrease in recall with increasing sample size in certain domains, potentially due to the lack of dedicated optimization for positive sample accuracy during model training.

Figure 7.

The evaluation of recall and precision for positive samples (Field Burning and Wildfires) across different datasets for each model.

In comparison, FireCLIP exhibits the strongest overall performance, maintaining high recall and precision as the sample size increases. FireFormer’s performance is notably affected by the number of samples, yet it still demonstrates some capability for few-shot learning. ConvNeXt and EfficientNetV2 show generally average performance and struggle with few-shot learning in Datasets C and D.

These findings suggest that maintaining high recall and precision for positive samples while enhancing overall accuracy remains a significant research challenge.

Considering the overall strong performance of FireFormer as a baseline, we will focus on comparing FireCLIP with FireFormer on WUISAD in the subsequent analysis.

To evaluate the models comprehensively, we introduce the F1 score, the harmonic mean of precision and recall, which balances the correctness of positive predictions against their completeness. Figure 8 indicates that FireCLIP outperforms FireFormer in predicting positive samples, particularly when the number of training samples is limited.
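As a minimal sketch of the harmonic mean, the F1 score can be computed as follows. The helper name `f1_from_pr` and the example values are hypothetical and are not results from this study.

```python
def f1_from_pr(precision, recall):
    """F1 score: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # defined as 0 when both precision and recall are 0
    return 2 * precision * recall / (precision + recall)


# Hypothetical values showing that F1 penalizes an imbalance between the two.
balanced = f1_from_pr(0.9, 0.9)  # equal inputs give the same value back
skewed = f1_from_pr(1.0, 0.5)    # about 0.667, below the arithmetic mean 0.75
```

Because the harmonic mean is pulled toward the smaller input, a model cannot reach a high F1 score by maximizing precision alone or recall alone.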

Figure 8.

Few-shot learning performance of the different models (F1).

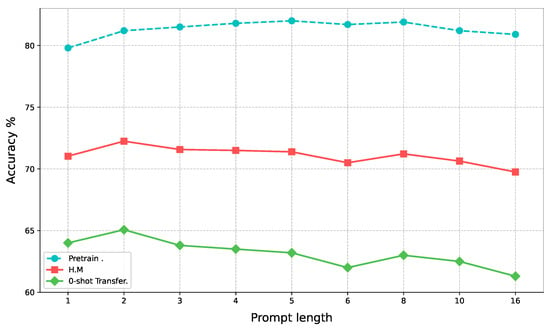

3.4.4. Ablation Experiments

Figure 9 presents an ablation study evaluating the impact of prompt length on FireCLIP’s performance during pre-training (Dataset A + B) and subsequent zero-shot transfer (average across Datasets C, D, E). The harmonic mean (H.M) quantifies the balance between source domain proficiency and cross-domain generalization capability.

Figure 9.

Ablation study on the impact of prompt length during pre-training (Dataset A + B) and subsequent zero-shot transfer performance (average across Datasets C, D, E). H.M represents the harmonic mean of pre-training accuracy and zero-shot transfer accuracy.

The results reveal some sensitivity to prompt length. Longer prompts generally improve pre-training accuracy on the source domain, indicating better adaptation to its specific characteristics, but this benefit comes at a cost to generalization: prompts exceeding an optimal length degrade zero-shot transfer performance across the target domains.
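The trade-off captured by H.M can be sketched in Python as below. The accuracy values in `hypothetical_results` are invented purely for illustration and are not the values plotted in Figure 9. The helper names `harmonic_mean` and `best_prompt_length` are also assumptions.

```python
def harmonic_mean(a, b):
    """Harmonic mean of two non-negative scores."""
    return 0.0 if a + b == 0 else 2 * a * b / (a + b)


def best_prompt_length(results):
    """Pick the prompt length whose (pre-train, zero-shot) pair maximizes H.M.

    results maps prompt length -> (pre-training accuracy, zero-shot accuracy).
    """
    return max(results, key=lambda length: harmonic_mean(*results[length]))


# Invented numbers (NOT from the paper): pre-training accuracy keeps rising
# with prompt length while zero-shot accuracy peaks and then falls.
hypothetical_results = {
    2: (90.0, 60.0),
    4: (92.0, 64.0),
    8: (94.0, 62.0),
    16: (96.0, 55.0),
}
chosen = best_prompt_length(hypothetical_results)
```

With these invented numbers the longest prompt has the highest source-domain accuracy, yet the selection favors a shorter prompt because H.M rewards configurations that stay strong on both axes.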

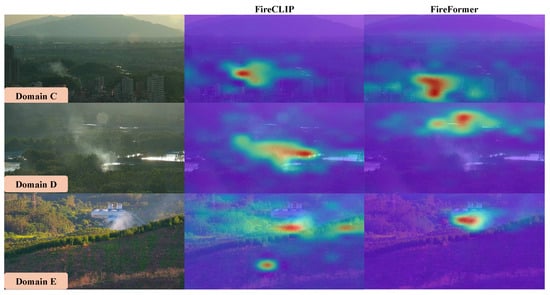

3.4.5. Visualization

To further demonstrate the robustness of FireCLIP in complex scenarios, more challenging cases are collected for evaluation, as illustrated in Figure 10. All hard cases are labeled as “Field Burning and Wildfires” and originate from three distinct domains. We select the FireCLIP and FireFormer models under a 32-shot setting for visualization analysis.

Figure 10.

Attention visualization of FireCLIP (correct prediction) vs. FireFormer (incorrect prediction). FireCLIP focuses more on surrounding environmental features, demonstrating its robustness against false positives.

Domain C: The challenge in the Domain C hard case arises from the smoke generated in a wildland–urban interface (WUI) area, which can be confused with cooking smoke. The FireCLIP model primarily focuses on the wooded areas, which might be more typical of wildfires, whereas FireFormer directs its attention towards the buildings. This difference suggests that FireCLIP may better discriminate between different smoke sources in this environment, while FireFormer might be distracted by structures that could also be common in non-wildfire smoke scenarios.

Domain D: In this hard case, multiple distractions such as sunlight reflections on water surfaces present challenges. FireCLIP successfully concentrates on the correct smoke areas among the trees, indicating a robust capability to ignore misleading bright reflections. In contrast, FireFormer seems to be attracted by the sunlight, potentially reducing its effectiveness in accurately identifying smoke amidst environmental reflections.

Domain E: Similar to Domain C’s hard case, Domain E’s hard case also features obscured smoke sources near buildings. Although FireFormer correctly identifies the smoke’s location, its focus on the buildings can lead to misjudgments. FireCLIP, on the other hand, maintains a broader focus on the expansive wooded areas, potentially offering a more reliable detection of wildfire smoke when it originates from or spreads into forested regions.

Based on the aforementioned analysis, the FireCLIP model exhibits a relatively broad distribution of attention, particularly demonstrating heightened focus on forested areas. In contrast, the FireFormer model tends to be more easily attracted to the representational features of non-forested areas.

4. Discussion

FireCLIP demonstrates three advantages through its parameter-efficient architecture and multimodal prompt tuning strategy.

First, it achieves computational efficiency with only 3.55 M trainable parameters, roughly 34 to 56 times fewer than FireFormer (197 M), ConvNeXt-L (198 M), and EfficientNetV2-L (119.5 M), while maintaining a high inference speed (91 FPS), enabling scalable deployment in resource-constrained environments. Second, the framework exhibits strong zero-shot cross-domain generalization, outperforming the baseline models by at least 12.45 percentage points in average accuracy (65.07% vs. FireFormer’s 52.62%) across geographically distinct regions (Table 5), which mitigates regional data imbalances through CLIP’s vision-language alignment. Third, FireCLIP excels in few-shot adaptation, achieving 98.33% accuracy in four-shot FASDD classification (Table 6), a 4.71–17.72% improvement over the other models, by leveraging task-specific prompts to extract discriminative smoke features from limited samples.

This unified combination of parameter efficiency, robust cross-regional adaptability, and data-scarce learning superiority addresses core challenges in wildfire detection, including pseudo-smoke interference and geographic distribution shifts, while minimizing computational overheads.
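The parameter and zero-shot figures quoted in this section can be checked with simple arithmetic. The sketch below uses only numbers stated in the text. The variable names are arbitrary, and the baseline counts are used exactly as quoted, so the ratios are only as precise as those rounded figures.

```python
# Parameter counts in millions, as quoted in the Discussion.
params_m = {
    "FireCLIP": 3.55,
    "FireFormer": 197.0,
    "ConvNeXt-L": 198.0,
    "EfficientNetV2-L": 119.5,
}

# How many times larger each baseline is than FireCLIP's trainable parameters.
ratios = {
    name: count / params_m["FireCLIP"]
    for name, count in params_m.items()
    if name != "FireCLIP"
}

# Zero-shot average accuracy gap, in percentage points (Table 5 figures).
zero_shot_gain = 65.07 - 52.62

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x FireCLIP's trainable parameters")
print(f"Zero-shot gain over FireFormer: {zero_shot_gain:.2f} percentage points")
```

The ratios fall between about 34 and 56, and the zero-shot gap is an absolute difference in accuracy rather than a relative improvement.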

5. Conclusions

This study develops an efficient early warning system for forest fires, addressing a critical global environmental threat. We constructed a smoke-based dataset and introduced two strategies: a visual language framework to differentiate fire and non-fire samples and prompt tuning to enhance model generalization in data-scarce regions. Our model, FireCLIP, was evaluated using accuracy, precision, recall, and F1 scores, demonstrating superior performance in pre-training and zero-shot transfer, especially in new domain generalization. Compared to traditional fine-tuning, FireCLIP leverages CLIP’s pre-trained knowledge with prompt tuning for rapid adaptation with minimal parameter adjustments. In few-shot learning tasks, FireCLIP significantly outperformed FireFormer, showing improved accuracy and stable performance as the number of training samples increased. These results highlight FireCLIP’s high accuracy and reliability in predicting forest fires, enhancing the effectiveness of detection systems.

The forest fire detection early warning system proposed in this study has demonstrated strong performance both theoretically and practically; however, it also faces certain limitations and challenges:

- Vision-Aware Dynamic Prompting: The current prompt templates rely primarily on static textual descriptions, which inherently struggle to adapt to the diverse visual manifestations of smoke. Incorporating fine-grained visual semantics specific to the content of the current input image is a promising future direction. Future work can focus on developing mechanisms to dynamically inject image-specific visual features or semantic descriptions into the prompt template generation process. Successfully integrating these dynamic, vision-guided elements with static contextual knowledge has the potential to significantly refine the model’s ability to recognize subtle smoke characteristics, leading to enhanced generalization and robustness across varied environmental scenes.

- Environmental and Seasonal Variability: In addition to the challenges of large and diverse negative samples and regional data imbalance discussed in the present work, it is important to consider the influence of environmental changes and seasonal variations. Forest fire detection models may be sensitive to factors such as varying vegetation, weather conditions, and seasonal changes, which could impact model performance. Future research should explore how to adapt and optimize detection systems for different environmental contexts and seasons to ensure consistent performance year-round.

Author Contributions

Conceptualization, F.W., S.W. and Y.Q.; methodology, F.W., S.W. and Y.Q.; software, S.W. and Y.Q.; validation, S.H. and J.Z.; formal analysis, S.H. and J.Z.; investigation, S.H. and J.Z.; resources, Z.W. and X.L.; data curation, Z.W. and X.L.; writing—original draft preparation, F.W., S.W. and Y.Q.; writing—review and editing, S.W. and Y.Q.; visualization, S.W. and Y.Q.; supervision, F.W.; project administration, F.W.; funding acquisition, F.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Yunnan Provincial Science and Technology Plan Project (Forest-Grass Joint Project) grant number 202404CB090017, the Shenzhen Science and Technology Innovation Commission grant number JCYJ20180508152055235, and the Key Field Research and Development Program of Guangdong, China grant number 2019B111104001.

Data Availability Statement

Requests for more data can be addressed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).