Abstract

The growing complexity and risk profile of fire and emergency incidents necessitate advanced training methodologies that go beyond traditional approaches. Live-fire drills and classroom-based instruction, while foundational, often fall short in providing safe, repeatable, and scalable training environments that accurately reflect the dynamic nature of real-world emergencies. Recent advancements in immersive technologies, including virtual reality (VR), augmented reality (AR), mixed reality (MR), extended reality (XR), and simulation-based systems, offer promising alternatives to address these challenges. This review provides a comprehensive overview of the integration of VR, AR, MR, XR, and simulation technologies into firefighter and incident commander training. It examines current practices across fire services and emergency response agencies, highlighting the capabilities of immersive and interactive platforms to enhance operational readiness, decision-making, situational awareness, and team coordination. This paper analyzes the benefits of these technologies, such as increased safety, cost-efficiency, data-driven performance assessment, and personalized learning pathways, while also identifying persistent challenges, including technological limitations, realism gaps, and cultural barriers to adoption. Emerging trends, such as AI-enhanced scenario generation, biometric feedback integration, and cloud-based collaborative environments, are discussed as future directions that may further revolutionize fire service education. This review aims to support researchers, training developers, and emergency service stakeholders in understanding the evolving landscape of digital training solutions, with the goal of fostering more resilient, adaptive, and effective emergency response systems.

1. Introduction

Immersive technologies are transforming training and decision support systems across high-risk professions, including emergency response. These technologies include virtual reality (VR), which offers fully immersive digital environments that replace the real world; augmented reality (AR), which overlays digital information onto the physical environment; and mixed reality (MR), which blends real and virtual elements into a single, interactive space where physical and digital objects coexist and respond to each other in real time. Collectively, these modalities are often referred to under the umbrella term extended reality (XR), which encompasses VR, AR, and MR.

While each of these technologies has its own developmental trajectory, use cases, and technical challenges, their combined potential to enhance safety-critical training is increasingly evident. Among them, VR and XR have emerged as the most extensively applied in the context of fire and rescue services due to their ability to fully simulate hazardous environments and command scenarios without exposing trainees to real-world danger. For this reason, the present review primarily focuses on VR and XR technologies, while acknowledging relevant contributions from AR and MR where applicable.

The increasing frequency, intensity, and complexity of fire and emergency incidents, driven by urbanization, industrial growth, and climate change, demand continuous evolution in the training of firefighters and incident commanders. These professionals operate under high-risk, time-critical conditions that require exceptional decision-making, situational awareness, and coordination. Traditional training methodologies—such as live-fire drills, classroom instruction, and tabletop simulations—have served as foundational tools in developing operational readiness. However, they are often constrained by safety risks, logistical challenges, high costs, and limited scenario variability and repeatability, making it difficult to prepare personnel for the wide range of conditions they may face in the field [1,2].

In response, recent advances in digital technologies have begun to reshape the landscape of emergency service training. Immersive technologies such as VR, AR, and XR have emerged as promising tools that enable experiential learning in safe, controlled, and repeatable environments. These systems can provide realistic visual, auditory, and haptic feedback that simulates complex fireground conditions, ranging from structural fires to hazardous materials incidents and wildland–urban interface events, without exposing trainees to physical danger [3,4,5].

Notably, Feng et al. [6] demonstrated the utility of integrating fire dynamics models into a VR-based simulator, enabling real-time interaction with evolving fire behavior and smoke movement, while Berthiaume et al. [7] showed how VR could effectively support hazardous material response training through improved spatial awareness and safe approach strategies. Such immersive platforms also facilitate cognitive skill development, enabling trainees to practice hazard recognition, victim prioritization, and command decisions under simulated stress conditions.

Despite these advantages, challenges related to realism, user interface design, and learning transfer remain. A systematic review by Stefan et al. [8] revealed mixed results regarding the effectiveness of VR for safety-relevant training, emphasizing the importance of scenario fidelity and pedagogical alignment. Moreover, Cha et al. [9] showed that the type of VR input devices used can significantly influence user engagement, cognitive load, and performance outcomes, suggesting that careful interface design is essential for training efficacy.

Beyond VR and XR, broader Information and Communication Technologies (ICTs), including simulation software, digital twins, and AI-driven decision support systems, are being adopted to enhance situational realism, data integration, and performance evaluation. Sarshar et al. [10] identified ICT as a key enabler for real-time information sharing and post-incident analysis, which can be applied not only during emergency operations but also as part of comprehensive training ecosystems. Similarly, Kim et al. [11] proposed the use of simulation platforms incorporating real-time fire dynamics, topographic data, and resource deployment strategies to mimic multi-agency operational contexts and support command-level training.

Training systems must also account for the intense physiological and psychological demands placed on emergency personnel. Studies by Smith et al. [12] and others have highlighted the cardiovascular and clotting responses elicited during firefighting activities, reinforcing the need for training environments that prepare the body and mind for such stressors. Additionally, immersive technologies must be carefully implemented to avoid unintended negative consequences, such as overstimulation or triggering psychological distress. Recent research has examined affective responses to VR in high-stress domains, advocating trauma-informed design and moderation strategies, particularly for trainees with prior exposure to traumatic incidents [13].

Taken together, these developments point toward a paradigm shift in firefighter and incident commander training from resource-intensive, risk-laden, and episodic methods toward immersive, data-driven, and adaptive training ecosystems. The convergence of VR, XR, simulation technologies, and ICT offers significant potential to enhance the realism, efficiency, and scalability of training programs, ultimately improving operational readiness and responder safety. However, further empirical research and cross-sector collaboration are needed to refine these tools, ensure user acceptance, and validate long-term training outcomes across diverse fire and rescue service contexts.

Given the diversity and rapid evolution of immersive technologies in emergency services training, this article adopts a scoping review methodology. Scoping reviews are well suited for synthesizing research in emerging or interdisciplinary fields where the literature is heterogeneous and not yet mature enough for formal meta-analysis. This approach allows us to identify technological trends, map current applications, evaluate benefits and limitations, and highlight knowledge gaps that warrant further research. The following section outlines the methodological procedures used to collect, select, and synthesize the literature included in this review.

2. Materials and Methods

This paper adopts a methodical and well-documented approach to gathering and analyzing academic studies focused on the use of ICT, VR, XR, and simulation technologies in firefighter and incident command training. The literature search was designed to thoroughly cover both foundational work and the latest advancements in this rapidly evolving area of research.

2.1. Databases and Search Strategy

The literature search targeted established academic databases, namely Web of Science (WoS), Scopus, and Elsevier's ScienceDirect. These platforms were selected for their coverage of high-impact, peer-reviewed journals relevant to fire science, emergency services, educational technology, and human factors engineering. The search was limited to publications from January 2015 to December 2024 to capture the most current technological advancements and research findings. This time frame was chosen because 2015 marks a critical inflection point in immersive technology development: it falls within the period in which first-generation virtual reality headsets (e.g., the Oculus Rift development kits from Oculus VR) became widely available and early mixed and augmented reality devices such as Microsoft HoloLens were introduced. These technological breakthroughs triggered a significant wave of applied research in simulation-based safety training and public safety domains. As such, the selected period aligns with the emergence of practical, scalable use cases in firefighter and emergency service education.

The search strategy involved Boolean keyword combinations such as the following:

- (“firefighter training” OR “fire service training”) AND (“virtual reality” OR “VR”);

- (“incident command” OR “emergency response”) AND (“extended reality” OR “XR”);

- (“simulation-based training”) AND (“ICT” OR “information and communication technology”).
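The combinations above follow a simple pattern: synonyms are OR-ed inside a group and groups are AND-ed together. The sketch below generates the three listed strings from synonym groups so that adding a term updates every query consistently. The helper names are hypothetical, and database-specific field tags (e.g., title/abstract restrictions) are deliberately left out because each database uses its own syntax.

```python
# Sketch: compose Boolean search strings from synonym groups.
# Helper names (or_group, build_query) are hypothetical; database-specific
# field tags are omitted on purpose.


def or_group(terms: list[str]) -> str:
    """Quote each synonym and join the group with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups: list[str]) -> str:
    """Combine several synonym groups with AND."""
    return " AND ".join(or_group(g) for g in groups)


QUERIES = [
    build_query(["firefighter training", "fire service training"],
                ["virtual reality", "VR"]),
    build_query(["incident command", "emergency response"],
                ["extended reality", "XR"]),
    build_query(["simulation-based training"],
                ["ICT", "information and communication technology"]),
]

if __name__ == "__main__":
    for query in QUERIES:
        print(query)
```

Keeping the synonyms as data rather than hand-typed strings makes the search strategy easier to report and reproduce.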

Manual screening of titles and abstracts was performed to identify relevant studies. Full texts were reviewed for final inclusion based on predetermined eligibility criteria.

2.2. Inclusion and Exclusion Criteria

To ensure relevance and quality, the following inclusion criteria were applied:

- Articles must be peer-reviewed.

- Studies must focus on training for firefighting or emergency response roles.

- Research must include an applied technological component (e.g., VR, XR, simulation, or ICT).

- Articles must present empirical results, system evaluations, or methodological frameworks.

Exclusion criteria included the following:

- Non-peer-reviewed publications (e.g., opinion pieces, conference abstracts, white papers).

- Studies focused exclusively on military or healthcare simulation without transferable outcomes.

- Papers not addressing training or skill acquisition directly.

The inclusion and exclusion criteria for this review were informed by established practices in prior systematic and scoping reviews of immersive technology and safety-critical training domains. Specifically, we included peer-reviewed articles that addressed the use of VR, XR, or simulation-based systems in the context of firefighter or emergency response training, and that presented empirical results, evaluations, or methodological frameworks. Excluded were studies that did not involve immersive technology, focused solely on healthcare or military contexts without transferable findings, or lacked a training component.

These criteria are consistent with those used in previous reviews examining immersive training in emergency services, safety education, and simulation research [4,5,10,11,14].
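One way to make the screening decisions auditable is to encode the criteria as a single predicate applied to each screened record. The sketch below is one reading of the criteria, not the authors' actual tool. The record fields and category labels are hypothetical, and treating military or healthcare studies with transferable outcomes as eligible is an interpretation of the exclusion wording.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical screening record: field names and labels are illustrative.
@dataclass
class Record:
    title: str
    peer_reviewed: bool
    domain: str                 # "firefighting", "emergency response", "military", "healthcare", ...
    technology: Optional[str]   # "VR", "XR", "simulation", "ICT", or None if absent
    contribution: str           # "empirical", "evaluation", "framework", "opinion", ...
    addresses_training: bool
    transferable: bool = False  # only consulted for military/healthcare studies


TARGET_DOMAINS = {"firefighting", "emergency response"}
ADJACENT_DOMAINS = {"military", "healthcare"}
ACCEPTED_CONTRIBUTIONS = {"empirical", "evaluation", "framework"}


def is_eligible(r: Record) -> bool:
    """Apply the inclusion and exclusion criteria to one screened record."""
    if not r.peer_reviewed:          # excludes opinion pieces, abstracts, white papers
        return False
    if not r.addresses_training:     # must address training or skill acquisition
        return False
    if r.technology is None:         # must have an applied technological component
        return False
    if r.contribution not in ACCEPTED_CONTRIBUTIONS:
        return False
    if r.domain in TARGET_DOMAINS:
        return True
    # Assumed reading: military or healthcare studies pass only with transferable outcomes.
    return r.domain in ADJACENT_DOMAINS and r.transferable
```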

2.3. Screening and Selection Process

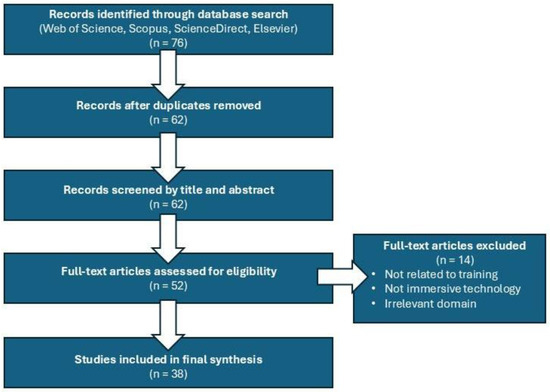

The initial search yielded 76 records. After duplicate removal and title and abstract screening, 52 full-text articles were assessed. Based on the above criteria, 38 studies were deemed relevant and included in this review. An additional five sources were identified through backward citation tracking of key studies. The entire process is visualized in Figure 1.

Figure 1.

Flow diagram of study selection process.
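The counts reported above imply the stage-by-stage arithmetic that a PRISMA-style flow diagram such as Figure 1 is meant to display. The split between duplicates and title/abstract exclusions is not reported, so the sketch keeps those two together; variable names are illustrative.

```python
# Counts as reported in the text; variable names are illustrative.
records_identified = 76
full_texts_assessed = 52
included_from_search = 38
added_via_citation_tracking = 5

# Records removed as duplicates or at title/abstract screening.
removed_before_full_text = records_identified - full_texts_assessed
# Full texts that did not meet the eligibility criteria.
excluded_at_full_text = full_texts_assessed - included_from_search
# Studies in the final synthesis.
total_included = included_from_search + added_via_citation_tracking

if __name__ == "__main__":
    print(f"Removed before full-text review: {removed_before_full_text}")  # 24
    print(f"Excluded at full-text review:    {excluded_at_full_text}")     # 14
    print(f"Total included:                  {total_included}")            # 43
```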

2.4. Data Extraction and Synthesis

Selected articles were systematically coded for key characteristics, including the following:

- Types of technology used (e.g., VR, XR, desktop simulation).

- Training focus (e.g., tactical operations, decision-making, safety protocols).

- Target audience (e.g., firefighters, incident commanders, trainees).

- Evaluation methods (e.g., qualitative feedback, performance metrics, physiological monitoring).

- Reported outcomes and limitations.
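The coding dimensions listed above map naturally onto a structured record, which makes the thematic synthesis a matter of counting. The sketch below is a hypothetical schema, not the authors' extraction form. Evaluation methods are stored as a list because a single study may combine, for example, performance metrics and physiological monitoring.

```python
from collections import Counter
from dataclasses import dataclass, field


# Hypothetical coding record mirroring the dimensions listed above.
@dataclass
class CodedStudy:
    study_id: str
    technology: str                  # e.g. "VR", "XR", "desktop simulation"
    training_focus: str              # e.g. "tactical operations", "decision-making"
    audience: str                    # e.g. "firefighters", "incident commanders"
    evaluation_methods: list[str] = field(default_factory=list)
    outcomes: str = ""
    limitations: str = ""


def tally(studies: list[CodedStudy], attribute: str) -> Counter:
    """Count studies per value of a single-valued coding dimension."""
    return Counter(getattr(s, attribute) for s in studies)


def tally_methods(studies: list[CodedStudy]) -> Counter:
    """Count evaluation methods across studies; one study may use several."""
    return Counter(m for s in studies for m in s.evaluation_methods)
```

For example, `tally(studies, "technology")` gives the distribution of technology types across the coded corpus, and `tally_methods(studies)` shows how often each evaluation approach appears.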

Temporal analysis of the selected publications (n = 72) reveals a notable increase in scholarly output over time. During the early years of the review period (2015–2017), studies primarily focused on conceptual frameworks and pilot implementations of VR and simulation systems, often emphasizing proof-of-concept designs and technical feasibility. For example, foundational work during this phase explored the fidelity of fire dynamics modeling in immersive training environments and the viability of immersive technologies for replicating real-world stressors.

Between 2018 and 2021, research interest accelerated significantly, with more rigorous empirical evaluations appearing. This period saw the adoption of extended reality (XR) for command-level simulations, and an increase in interdisciplinary studies involving human factors, ergonomics, and cognitive psychology. Researchers also began to incorporate biometric data and real-time stress monitoring to evaluate physiological responses during simulated training exercises.

From 2022 to 2024, studies increasingly featured AI-enhanced scenario adaptation, remote multi-user simulations, and cloud-based deployment of training platforms. There was also a surge in comparative studies evaluating the effectiveness of VR/XR training against traditional live-fire methods. Evidence during this period began to support the transferability of skills learned in virtual environments to real-world performance, though questions around long-term retention and behavioral change persist.

Data synthesis was performed thematically, and trends were organized under the main analytical dimensions of this paper: current applications, benefits, challenges, and future directions. This structured review highlights how research over the past decade has matured from technical development to applied, user-centered evaluation, signaling a transition toward scalable, institutionally embedded immersive training programs.

3. Results

The integration of immersive and simulation technologies into firefighter and command-level training has diversified significantly over the past decade. Each category of technology contributes to specific aspects of operational readiness, with virtual reality primarily focused on individual skill acquisition, extended reality enhancing real-time situational awareness and command coordination, and simulation platforms supporting scenario-based decision-making. These technologies often overlap in application, as comprehensive training programs increasingly combine multiple tools to create dynamic and interdisciplinary learning environments [1,2,3]. For example, multi-user simulations may integrate VR headsets, XR displays, and real-time data feeds to simulate large-scale emergencies involving multiple agencies [4,5]. The subsections below detail these technologies and their specific applications in fire and rescue services training.

Immersive technologies such as VR and XR are increasingly adopted in fire service training programs. These technologies allow for the replication of complex scenarios, such as structural collapses, hazardous material incidents, and wildland–urban interface fires.

3.1. Virtual Reality for Firefighter Training

Virtual reality (VR) is increasingly utilized in firefighter training programs to replicate hazardous and dynamic fireground conditions in a safe, controlled environment. VR enables the simulation of environments that are difficult or dangerous to reproduce in real-world training, such as smoke-filled buildings, confined spaces, and high-rise fires. This allows trainees to develop cognitive and procedural skills without exposure to physical risk [8,9].

Numerous studies have emphasized the value of VR for rehearsing tasks such as interior search and rescue, thermal imaging, hose handling, ladder placement, and navigation under zero-visibility conditions [4,5]. In addition to operational skills, VR training improves spatial orientation and situational awareness—key competencies for firefighters working in time-sensitive, low-visibility environments [15].

Feng et al. developed a VR-based training system that integrated computational fire dynamics, enabling realistic visualization of smoke and flame behavior, which improved both hazard recognition and response timing [14]. Other research has demonstrated that VR scenarios incorporating time pressure and unpredictable fire spread can improve cognitive load management and stress response in trainees [7,16].

The effectiveness of VR in skill acquisition has also been supported by comparative studies. Ribeiro et al. conducted an evaluation showing that VR-trained firefighters outperformed those trained with traditional methods in speed and accuracy during simulated operations [17]. Moreover, studies by Berthiaume et al. and Kinateder et al. have shown VR to be particularly effective for training in rare or complex scenarios, such as hazardous materials incidents or tunnel fires, where live training is impractical or cost-prohibitive [18,19].

Advanced systems, such as FLAIM Trainer, incorporate haptic feedback, heat simulation, and physical resistance that reproduces the reaction force of a pressurized hoseline, enhancing the tactile dimension of training [20]. These systems are increasingly used for both individual skills training and team-based exercises, supporting immersive multi-user experiences with role-based coordination.

Despite its many benefits, VR-based training faces some limitations. Concerns persist regarding simulator sickness, limited physical realism, and variability in user experience depending on interface design and hardware quality [21]. Additionally, while short-term gains in knowledge and confidence are well documented, more research is needed on the long-term retention and real-world transferability of VR-acquired skills [12,13].

Nonetheless, the growing body of empirical evidence suggests that VR is a valuable tool in the firefighter training toolkit, particularly when integrated into blended learning programs that combine theoretical instruction, VR scenarios, and live drills for maximum efficacy.

3.2. Extended Reality for Command and Control

Extended reality (XR), which encompasses augmented reality (AR), mixed reality (MR), and fully immersive VR elements, is increasingly applied in command-and-control training scenarios. Unlike standalone VR systems designed primarily for individual task rehearsal, XR systems support layered digital information over the physical or simulated environment, enabling dynamic decision-making, spatial coordination, and multi-agency collaboration during complex emergencies.

Command-level XR platforms overlay real-time data such as GIS layers, weather feeds, traffic conditions, and resource tracking onto incident simulations. This creates a semi-immersive training space where commanders can assess dynamic variables and adapt their response plans accordingly [22,23]. For example, XR applications allow incident commanders to visualize building layouts with marked hazard zones, track crew positions using real-time telemetry, and simulate evolving fire behavior using live modeling engines [24].
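Combining hazard zones with crew telemetry reduces, at its simplest, to a geometric containment test. The sketch below uses standard even-odd ray casting to report which marked zones currently contain each crew. It assumes positions and zone outlines are already expressed in the same 2D floor-plan coordinates; the function names and example zones are hypothetical and not taken from any named platform.

```python
# Sketch: flag crews whose tracked position lies inside a marked hazard zone.
Point = tuple[float, float]


def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray cast to the right of p."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the ray's horizontal line?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge meets that line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def crews_in_hazard(crews: dict[str, Point],
                    zones: dict[str, list[Point]]) -> dict[str, list[str]]:
    """Map each crew to the hazard zones that currently contain it."""
    return {
        crew: [name for name, poly in zones.items() if point_in_polygon(pos, poly)]
        for crew, pos in crews.items()
    }
```

In a training scenario, a non-empty result for a crew could trigger an on-screen warning for the trainee commander.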

Studies have shown that XR improves decision-making under pressure by increasing situational awareness and cognitive mapping capabilities [25]. Bertram et al. demonstrated that fire officers trained using MR displays made faster and more accurate decisions during simulated coordination exercises than those using traditional tabletop setups [26].

Further, XR enhances joint training for command staff, dispatch units, and operational teams by enabling remote, synchronous engagement within a shared scenario. This is particularly valuable for preparing for multi-jurisdictional incidents such as chemical spills, wildfires, or urban search-and-rescue events [27]. Systems such as XVR Simulation and ForgeFX Command Training integrate 3D scenario editors, communication overlays, and post-exercise performance analytics to support comprehensive after-action reviews [28].

Despite its benefits, XR training is still in a growth phase and faces challenges related to technological cost, hardware limitations, and the need for standardization across platforms [29]. Future XR applications may benefit substantially from deeper integration with artificial intelligence (AI) to generate responsive, adaptive, and context-aware training scenarios. AI systems can analyze trainee behavior in real time—such as decision speed, task prioritization, and error patterns—and dynamically adjust the scenario to introduce appropriate complexity or new hazards. For example, if a trainee fails to identify a backdraft risk or delays rescue prioritization, AI can simulate escalating conditions such as structural collapse or flashover. This supports a more immersive and cognitively engaging experience that mirrors real-world decision consequences.
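The adjustment loop described above can be illustrated without any machine learning. The sketch below is a rule-based stand-in for the AI component: a simple up/down staircase that raises scenario complexity when a trainee is coping and lowers it when they are overloaded. The metric names and every threshold are hypothetical placeholders, not validated training standards; an AI-driven system would replace the fixed rules with learned models.

```python
from dataclasses import dataclass


# Hypothetical per-interval performance summary; thresholds below are
# illustrative placeholders, not validated training standards.
@dataclass
class TraineeMetrics:
    decision_latency_s: float   # mean time from cue to action
    missed_hazards: int         # e.g. unrecognised backdraft indicators
    priority_accuracy: float    # fraction of tasks correctly prioritised (0..1)


def next_difficulty(level: int, m: TraineeMetrics, max_level: int = 5) -> int:
    """One step of a simple up/down staircase on scenario complexity."""
    struggling = m.decision_latency_s > 20.0 or m.priority_accuracy < 0.6
    coping = (m.decision_latency_s < 10.0
              and m.priority_accuracy >= 0.8
              and m.missed_hazards == 0)
    if struggling:
        return max(1, level - 1)          # ease off to limit cognitive overload
    if coping:
        return min(max_level, level + 1)  # introduce new hazards or complexity
    return level                          # hold steady
```

What each level means (extra smoke, a second seat of fire, a partial collapse) is a scenario-design decision left outside the sketch.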

To achieve realistic fire scenarios, AI can be trained using historical incident datasets (e.g., NFIRS, EFFIS) along with high-fidelity fire dynamics models such as the Fire Dynamics Simulator (FDS) [14]. These physics-based models allow for accurate simulation of flame spread, heat transfer, smoke propagation, and ventilation effects. When integrated into XR platforms, they enhance the realism of environmental feedback—such as visibility degradation, radiant heat, and structural weakening—providing trainees with conditions that closely mirror those encountered during actual fireground operations [15].
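As one concrete example of environmental feedback, visibility degradation is commonly estimated from the smoke's light extinction coefficient K (1/m) via S = C / K, where C is about 3 for light-reflecting and about 8 for light-emitting signs. K itself can be estimated as K = K_m · ρ_s, with ρ_s the soot mass concentration and K_m a mass extinction coefficient of roughly 8700 m²/kg for flaming combustion. The sketch below applies these relations, which are also described in the FDS documentation. The constants are literature values used as assumptions, and the 30 m clear-air cap is an assumed limit.

```python
# Sketch of a smoke-visibility estimate a renderer could use to fade the scene.
# Constants are commonly cited literature values, used here as assumptions.
MASS_EXTINCTION_M2_PER_KG = 8700.0   # typical for flaming-combustion smoke
VISIBILITY_FACTOR = 3.0              # ~3 for light-reflecting, ~8 for light-emitting signs
MAX_VISIBILITY_M = 30.0              # assumed cap for clear air


def extinction_coefficient(soot_density_kg_m3: float,
                           k_m: float = MASS_EXTINCTION_M2_PER_KG) -> float:
    """Light extinction coefficient K (1/m) from soot mass concentration."""
    return k_m * soot_density_kg_m3


def visibility_m(k_per_m: float, c: float = VISIBILITY_FACTOR,
                 max_visibility_m: float = MAX_VISIBILITY_M) -> float:
    """Visibility distance S = C / K, capped at max_visibility_m."""
    if k_per_m <= 0.0:
        return max_visibility_m
    return min(max_visibility_m, c / k_per_m)
```

For instance, a soot concentration of 1e-4 kg/m³ gives K ≈ 0.87 1/m and a visibility of roughly 3.4 m for a reflecting sign.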

Overall, XR represents a critical advancement for command-and-control training in the fire service, enabling high-fidelity, data-rich environments for strategic planning and adaptive decision-making in real time.

3.3. Simulation-Based Decision Training

Simulation-based decision training provides a framework for replicating high-stress, time-critical incident scenarios in a controlled environment. Unlike VR or XR systems focused on spatial immersion, simulation tools often emphasize strategic thinking, command logic, and team coordination. These systems are especially effective for incident commanders and emergency operation center (EOC) personnel, allowing them to test various response strategies and resource allocations without real-world consequences.

Modern simulation platforms incorporate dynamic scenario engines that adapt in real time to trainee decisions, offering a form of ‘consequence-based learning’. For example, if a commander fails to request backup resources or misallocates personnel, the system may simulate scenario escalation or casualties [30]. This helps build experience in handling uncertainty, prioritizing tasks, and making decisions under pressure—core competencies for fire service leaders.
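Consequence-based learning of this kind can be sketched as a set of rules evaluated on every simulation tick. The sketch below encodes the two examples in the text: a missing backup request leads to escalation, and an under-resourced rescue leads to casualties. The deadline, crew minimum, and deterioration interval are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical rule parameters for illustration only.
BACKUP_DEADLINE_MIN = 5
MIN_RESCUE_CREWS = 2
DETERIORATION_INTERVAL_MIN = 3


@dataclass
class ScenarioState:
    minute: int = 0
    backup_requested: bool = False
    rescue_crews: int = 0
    escalation_level: int = 0
    casualties: int = 0
    events: list[str] = field(default_factory=list)


def step(state: ScenarioState) -> ScenarioState:
    """Advance one simulated minute and apply the consequence rules."""
    state.minute += 1
    # Rule 1: no backup requested by the deadline, so the fire extends.
    if not state.backup_requested and state.minute >= BACKUP_DEADLINE_MIN:
        state.escalation_level += 1
        state.events.append(f"t={state.minute} min: fire extends (no backup requested)")
    # Rule 2: rescue under-resourced, so a trapped victim is lost periodically.
    if (state.rescue_crews < MIN_RESCUE_CREWS
            and state.minute % DETERIORATION_INTERVAL_MIN == 0):
        state.casualties += 1
        state.events.append(f"t={state.minute} min: casualty (rescue under-resourced)")
    return state
```

The accumulated `events` list doubles as a narrative the instructor can replay during debriefing.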

Simulation tools also support after-action review through automatic logging of key decisions, time stamps, and team communication patterns. This data-driven feedback is invaluable for identifying performance gaps and reinforcing best practices [31]. Moreover, simulation training facilitates scenario-based tabletop exercises (TTXs), which are widely used to improve inter-agency coordination and to evaluate standard operating procedures (SOPs) during drills [32].
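The after-action metrics mentioned above can be derived directly from a timestamped event log. The sketch below computes the delay between each scenario cue and the decision expected in response, and counts radio transmissions per actor as a simple communication-pattern measure. The event schema, labels, and cue-to-decision pairing are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogEvent:
    t_s: float     # seconds since scenario start
    actor: str     # e.g. "IC", "Dispatch", "Engine 1"
    kind: str      # "cue", "decision", or "radio"
    label: str


def decision_latencies(log: list[LogEvent],
                       cue_to_decision: dict[str, str]) -> dict[str, Optional[float]]:
    """Seconds from each cue to its expected decision; None if missing or out of order."""
    first_seen: dict[tuple[str, str], float] = {}
    for e in sorted(log, key=lambda ev: ev.t_s):
        first_seen.setdefault((e.kind, e.label), e.t_s)
    latencies: dict[str, Optional[float]] = {}
    for cue, decision in cue_to_decision.items():
        t_cue = first_seen.get(("cue", cue))
        t_dec = first_seen.get(("decision", decision))
        if t_cue is None or t_dec is None or t_dec < t_cue:
            latencies[cue] = None
        else:
            latencies[cue] = t_dec - t_cue
    return latencies


def radio_traffic(log: list[LogEvent]) -> Counter:
    """Number of radio transmissions per actor."""
    return Counter(e.actor for e in log if e.kind == "radio")
```

A `None` latency flags a cue that never received its expected decision, which is often the most useful single item to raise in an after-action review.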

One notable platform is XVR Simulation, which allows for the customization of both tactical and strategic scenarios, from single-building fires to complex multi-agency incidents. Users can modify weather conditions, inject media reports, and simulate civilian interactions in real time [28]. Other examples include ForgeFX and Vector Solutions, which offer cloud-based training ecosystems that combine interactive simulation with role-based communication tools [33].

Research has shown that simulation-based training significantly improves decision-making speed and accuracy among incident commanders [2]. It also enhances coordination among operational units, particularly when exercises include dispatch personnel and support agencies. However, challenges remain in validating training transfer to real-world settings and ensuring accessibility across fire departments with limited resources [34].

As simulation tools evolve, their integration with artificial intelligence (AI) and real-time data inputs, such as drone imagery, geographic information systems (GIS), and environmental sensors, is expected to play a pivotal role in enhancing decision-making readiness and command resilience in fire and rescue operations. AI technologies enable the dynamic generation and adjustment of training scenarios by analyzing real-time variables, including fire behavior, environmental conditions, and responder actions. For instance, AI-driven models can simulate realistic fire progression based on building materials, ventilation profiles, and historical fire data (e.g., from NFIRS or EFFIS), producing incident scenarios that closely resemble those encountered in the field [14].
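Before any AI-driven refinement, a scenario engine needs a baseline fire-growth curve. The sketch below does not show an AI model. It substitutes the classical t-squared design fire, Q(t) = αt², whose growth coefficients for the slow, medium, fast, and ultrafast classes are tabulated in fire protection engineering references such as the NFPA 72 annex. In this simplified setting, choosing a growth class and an optional peak (for example, a ventilation-limited maximum) is where material and ventilation assumptions would enter. The function names are hypothetical.

```python
import math
from typing import Optional

# Fire growth coefficients alpha (kW/s^2) for the standard t-squared classes;
# each class reaches ~1055 kW at 600, 300, 150, and 75 s respectively.
ALPHA_KW_PER_S2 = {
    "slow": 0.00293,
    "medium": 0.01172,
    "fast": 0.0469,
    "ultrafast": 0.1876,
}


def hrr_kw(t_s: float, growth: str, peak_kw: Optional[float] = None) -> float:
    """Heat release rate Q(t) = alpha * t^2, optionally capped at a peak value
    (e.g. a ventilation- or fuel-limited maximum chosen by the scenario author)."""
    q = ALPHA_KW_PER_S2[growth] * t_s ** 2
    return q if peak_kw is None else min(q, peak_kw)


def time_to_reach_s(q_kw: float, growth: str) -> float:
    """Invert Q = alpha * t^2 to find when the fire reaches q_kw."""
    return math.sqrt(q_kw / ALPHA_KW_PER_S2[growth])
```

For example, a medium-growth fire releases about 117 kW after 100 s, while a fast-growth fire reaches roughly 1 MW after about 150 s.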

Moreover, AI enhances scenario variability through procedural content generation, allowing training simulations to continuously generate diverse and unpredictable situations, such as shifting weather patterns, equipment failures, or trapped civilians in different building zones [35]. When combined with GIS and drone data, simulations can reflect site-specific layouts and real-time aerial updates, which are particularly valuable in wildland–urban interface fires or chemical spill incidents [10,11].
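Procedural content generation can be illustrated with a seeded random generator. The same seed always reproduces the same scenario, so trainees can be compared on identical conditions, while new seeds yield fresh combinations of weather, equipment failures, and trapped-civilian locations. The content pools and names below are hypothetical placeholders. Production systems would typically add constraints so that generated scenarios remain plausible and appropriately difficult.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Illustrative content pools; a real platform would draw these from its own asset library.
WEATHER = ["calm", "gusty wind", "heavy rain", "heat wave"]
EQUIPMENT_FAILURES: list[Optional[str]] = [
    None, "radio dead zone", "pump cavitation", "SCBA low-air alarm",
]
ZONES = ["basement", "ground floor", "second floor", "stairwell", "roof"]


@dataclass(frozen=True)
class Scenario:
    seed: int
    weather: str
    equipment_failure: Optional[str]
    trapped_civilians: dict[str, int]   # zone -> number of civilians


def generate_scenario(seed: int, max_civilians: int = 4) -> Scenario:
    """Seeded procedural generation: the same seed yields the same scenario."""
    rng = random.Random(seed)
    weather = rng.choice(WEATHER)
    failure = rng.choice(EQUIPMENT_FAILURES)
    civilians: dict[str, int] = {}
    for _ in range(rng.randint(0, max_civilians)):
        zone = rng.choice(ZONES)
        civilians[zone] = civilians.get(zone, 0) + 1
    return Scenario(seed, weather, failure, civilians)
```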

3.4. Multi-User and Interdisciplinary Scenarios

Modern emergency response training increasingly emphasizes collaborative, interdisciplinary engagement. Multi-user simulations allow firefighters, medical responders, law enforcement, and command staff to train together in coordinated environments that replicate the complexity of real-world incidents. These scenarios are particularly effective in improving communication protocols, operational timing, and decision-making under uncertainty [36,37].

Digital training platforms now support cloud-based, synchronous interaction between geographically distributed teams. Participants can operate in shared virtual environments using avatars or immersive XR systems, engaging in live incident response drills that mimic scenarios such as high-rise evacuations, chemical spills, or mass casualty events [38]. These simulations improve coordination across jurisdictions and provide critical insights into inter-agency dependencies.

One example is the integration of EMS and fire teams in XR-based mass casualty incident (MCI) simulations, which have been shown to enhance triage accuracy, task allocation, and command hierarchy awareness [39]. Similarly, the inclusion of dispatchers and logistics officers in command-level simulations increases the fidelity and applicability of scenario-based exercises.
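Triage accuracy in such simulations is typically scored against a reference protocol. The sketch below assumes START (Simple Triage and Rapid Treatment) as that reference, which is an assumption since the cited study's scoring method is not described here. The protocol's decision steps are encoded, and the fraction of trainee tags that match is reported. Field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Casualty:
    can_walk: bool = False
    breathing: bool = True
    breathing_after_airway: bool = False   # only consulted if not breathing
    resp_rate: Optional[int] = None        # breaths per minute
    radial_pulse: bool = True
    cap_refill_s: Optional[float] = None
    follows_commands: bool = True


def start_category(c: Casualty) -> str:
    """Reference START category: minor, delayed, immediate, or expectant."""
    if c.can_walk:
        return "minor"
    if not c.breathing:
        return "immediate" if c.breathing_after_airway else "expectant"
    if c.resp_rate is not None and c.resp_rate > 30:
        return "immediate"
    if not c.radial_pulse or (c.cap_refill_s is not None and c.cap_refill_s > 2):
        return "immediate"
    if not c.follows_commands:
        return "immediate"
    return "delayed"


def triage_accuracy(casualties: list[Casualty], trainee_tags: list[str]) -> float:
    """Fraction of trainee tags that match the reference category."""
    if not casualties or len(casualties) != len(trainee_tags):
        raise ValueError("need one trainee tag per casualty")
    correct = sum(start_category(c) == tag for c, tag in zip(casualties, trainee_tags))
    return correct / len(casualties)
```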

The interdisciplinary nature of these simulations also supports education and training outside traditional responder roles, including municipal planners, infrastructure managers, and public health officials. As emergency response becomes more interconnected, these stakeholders play a growing role in disaster resilience and recovery.

Despite their promise, multi-user simulations face challenges such as network latency, unequal hardware availability, and the complexity of cross-platform integration. Nevertheless, current evidence supports their value in preparing diverse teams for unified response actions and building collective efficacy under pressure [40].

3.5. Case Studies and Notable Implementations

A growing number of real-world case studies demonstrate the implementation and impact of VR, XR, and simulation technologies in firefighter and emergency service training across global contexts. These practical applications highlight the adaptability and effectiveness of immersive systems and provide insights into best practices, challenges, and measurable training outcomes.

XVR Simulation (Netherlands) is among the most widely adopted simulation platforms for command-and-control training. It supports scalable, multi-agency scenarios with real-time incident development and decision-making overlays. Users can adjust environmental conditions, inject live media and communications, and perform structured after-action reviews. Empirical studies conducted in partnership with Utrecht University and Dutch safety regions found that XVR simulations reduced cognitive load and improved strategic task prioritization in emergency managers [28,41].

FLAIM Systems (Australia) offers a highly immersive VR firefighting platform that integrates wearable haptic feedback, thermal simulation, and realistic nozzle handling. It has been deployed in fire academies in Australia, the UK, and the US. Research indicates positive effects on user engagement, compliance with safety procedures, and perception of realism, making it a viable tool for augmenting live training drills [26].

ForgeFX Simulations (USA) has developed incident command training software tailored to North American emergency protocols. Its simulation environment accommodates dispatch coordination, role-specific operations, and complex disaster response scenarios such as hazmat events and wildland–urban interface fires. The platform emphasizes interoperability among fire, EMS, and law enforcement teams and supports post-exercise performance analytics [33].

The Tokyo Fire Department (Japan) has implemented VR-based subway fire response training, where trainees engage in low-visibility navigation, triage operations, and timed evacuations using handheld VR devices. These scenarios have enhanced operational efficiency and reduced trainee stress during high-risk exercises [42].

European innovations in immersive training continue to expand. The Netherlands-based XVR Simulation is integrated across fire training institutions in Europe, while Spanish researchers have developed cloud-based XR platforms for geographically distributed urban disaster drills. These platforms promote coordination across agencies and improve resource synchronization during simulated events [43]. Swiss researchers have advanced multi-user VR technologies that increase team cohesion and situational awareness in public safety operations [37].

Overall, these case studies indicate that immersive technologies offer customizable, scalable, and increasingly evaluated training experiences. Whether through high-fidelity sensory input, cross-jurisdictional coordination, or AI-enhanced scenario design, they support the modernization of fire service education while meeting the evolving demands of emergency response.

4. Discussion

The findings presented in the Results Section demonstrate a clear and growing role for immersive technologies—such as virtual reality (VR), extended reality (XR), and simulation-based systems—in enhancing firefighter and emergency service training. These tools are not only reshaping how technical and tactical skills are acquired but are also introducing new paradigms in cognitive preparation, inter-agency coordination, and scenario realism.

This discussion critically examines the benefits, limitations, and future directions associated with the integration of these technologies. Drawing on empirical evidence and real-world implementations, we explore how VR, XR, and simulation systems improve training safety, efficiency, standardization, and learner engagement. At the same time, we identify persistent challenges, including technological constraints, financial barriers, and cultural resistance, that may hinder broader adoption. Additionally, we highlight emerging trends, such as artificial intelligence (AI)-driven scenario generation, biometric data integration, and open-source frameworks, which are poised to further advance the training landscape.

The following subsections present a structured evaluation of these aspects, beginning with an in-depth look at the core benefits of technology-enhanced training solutions.

4.1. Benefits of Technology-Enhanced Training

4.1.1. Enhanced Safety

One of the primary benefits of technology-enhanced training is the significant reduction in the physical risks associated with live-fire exercises. Traditional live-fire drills expose firefighters to actual flames, extreme heat, toxic smoke, structural instability, and physical stress, all of which can result in serious injuries or even fatalities. In contrast, VR and XR training reproduce hazardous conditions without exposing trainees to them, which substantially improves trainee safety. Trainees can repeatedly practice scenarios involving chemical spills, structural fires, or explosions without physical harm, allowing them to gain valuable experience and confidence [44,45]. Moreover, virtual environments allow trainers to create scenarios that would be too dangerous or impractical to stage in live exercises.

4.1.2. Cost and Resource Efficiency

Traditional firefighter training involves substantial operational expenses, including costs for facility construction and maintenance, fuel for controlled burns, firefighting foam, and protective equipment. Constructing and maintaining a typical live-fire training facility can cost between EUR 500,000 and EUR 2 million, depending on the scale and complexity of the infrastructure [46]. Additionally, a single live-fire training session can incur consumable costs of approximately EUR 5,000–10,000 for fuel, foam, water supply, and equipment wear and tear [47]. These expenses are compounded by logistical constraints such as scheduling limitations and personnel availability. Technology-enhanced training can substantially reduce these costs, with reported reductions in operational training expenses of 50%–80% [48]. Once acquired (with setup costs typically ranging from EUR 20,000 to EUR 150,000, depending on system sophistication), VR and XR platforms have comparatively low ongoing operational expenses [49]. Immersive technologies provide reusable and adaptable training scenarios that can be modified and scaled to specific training needs, resulting in substantial long-term savings [50]. Furthermore, the digital nature of these solutions allows content to be updated and extended at little additional cost, helping training programs remain current.
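To make this trade-off concrete, the sketch below computes a simple break-even point: the number of sessions after which a VR system's setup cost is recovered through avoided live-fire consumables. The setup and per-session live-fire figures come from the ranges quoted above; the VR running cost of EUR 500 per session and the function name `break_even_sessions` are hypothetical assumptions, and the model deliberately ignores facility construction, instructor time, maintenance contracts, and hardware replacement.

```python
import math


def break_even_sessions(vr_setup_cost: float,
                        live_cost_per_session: float,
                        vr_cost_per_session: float) -> int:
    """Return the smallest session count n for which
    vr_setup_cost + n * vr_cost_per_session <= n * live_cost_per_session.

    Simplified model: only setup cost and per-session running costs are
    compared; facility construction, staff time, and hardware refresh are ignored.
    """
    saving_per_session = live_cost_per_session - vr_cost_per_session
    if saving_per_session <= 0:
        raise ValueError("VR sessions must be cheaper than live-fire sessions to break even")
    return math.ceil(vr_setup_cost / saving_per_session)


# Ranges from the text; EUR 500 per VR session is a hypothetical assumption.
VR_SESSION_COST = 500.0
print(break_even_sessions(20_000, 10_000, VR_SESSION_COST))   # → 3  (low-end system, costly live drill)
print(break_even_sessions(150_000, 5_000, VR_SESSION_COST))   # → 34 (high-end system, cheaper live drill)
```

Under these assumptions a low-end system recovers its cost within a handful of sessions, while a high-end system needs several dozen; the result is only as reliable as the assumed running cost.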

4.1.3. Standardization and Repeatability

A major advantage of virtual and simulation-based training systems is their ability to deliver consistent, repeatable training experiences across sessions and groups. Live-fire exercises are inherently variable because of uncontrollable factors such as weather conditions, equipment performance, and human error. In contrast, technology-enhanced platforms provide stable, controlled environments in which conditions can be standardized, enabling consistent competency assessments and comparable performance evaluations across trainees [51]. Repeatability also allows trainees to rehearse specific scenarios as often as needed, reinforcing procedural knowledge, improving skill proficiency, and building operational confidence over time. Together, consistency and repeatability strengthen preparedness and response capabilities.
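One common way to obtain this kind of repeatability in software is to derive every random element of a scenario from a recorded seed, so that each trainee in a cohort faces exactly the same fire origin, visibility, and casualty count. The sketch below assumes a hypothetical `ScenarioConfig` with three parameters and a made-up room list; real training platforms expose far richer scenario definitions.

```python
import random
from dataclasses import dataclass

# Hypothetical building layout used by the scenario generator.
ROOMS = ("kitchen", "living_room", "bedroom", "garage")


@dataclass(frozen=True)
class ScenarioConfig:
    fire_origin_room: str
    visibility_m: float   # visibility distance in smoke, metres
    casualties: int       # number of simulated casualties to locate


def build_scenario(seed: int) -> ScenarioConfig:
    # A private generator means the same seed always yields the same
    # scenario, independent of any other code using the random module.
    rng = random.Random(seed)
    return ScenarioConfig(
        fire_origin_room=rng.choice(ROOMS),
        visibility_m=round(rng.uniform(0.5, 5.0), 1),
        casualties=rng.randint(0, 3),
    )


# Every trainee in the cohort receives the scenario generated from seed 2024.
cohort = {name: build_scenario(2024) for name in ("trainee_1", "trainee_2", "trainee_3")}
assert len(set(cohort.values())) == 1
```

Python does not guarantee that `choice` or `randint` produce identical sequences across interpreter versions, so for assessments that must stay comparable over years, archiving the generated `ScenarioConfig` itself is safer than archiving only the seed.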

4.1.4. Performance Assessment and Real-Time Feedback

Technology-enhanced training systems can deliver precise, objective, real-time performance evaluations. VR and XR platforms use built-in tracking to collect a wide range of performance metrics, such as reaction times, decision-making accuracy, compliance with safety procedures, and indicators of situational awareness. These data can be made available immediately to both instructors and trainees, allowing focused feedback that corrects errors and reinforces correct actions [52]. Real-time insights support a more adaptive training environment, enabling instructors to adjust instruction on the fly and improve learning effectiveness. This data-centric approach also promotes accountability and transparency, improving the overall quality of training.
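As a minimal sketch of how such metrics might be derived, the code below computes mean reaction time and SOP compliance from a time-stamped session log. The `Event` structure and the event names `hazard_shown`, `response`, and `sop_check` are hypothetical; commercial platforms log their own, typically much richer, event types.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Event:
    t: float         # seconds since scenario start
    kind: str        # hypothetical types: "hazard_shown", "response", "sop_check"
    ok: bool = True  # for "sop_check": whether the required step was performed


def summarize(events: list[Event]) -> dict[str, float]:
    """Compute mean reaction time and SOP compliance from a session log."""
    reaction_times: list[float] = []
    hazard_time: float | None = None
    for e in sorted(events, key=lambda ev: ev.t):
        if e.kind == "hazard_shown":
            # A newer hazard replaces an unanswered earlier one.
            hazard_time = e.t
        elif e.kind == "response" and hazard_time is not None:
            reaction_times.append(e.t - hazard_time)
            hazard_time = None
    checks = [e.ok for e in events if e.kind == "sop_check"]
    return {
        "mean_reaction_s": mean(reaction_times) if reaction_times else float("nan"),
        "sop_compliance": sum(checks) / len(checks) if checks else float("nan"),
    }


log = [
    Event(1.0, "hazard_shown"), Event(2.5, "response"), Event(3.0, "sop_check", ok=True),
    Event(10.0, "hazard_shown"), Event(10.8, "response"), Event(12.0, "sop_check", ok=False),
]
print(summarize(log))
```

Hazards that never receive a response are not counted in the mean here; a real assessment would likely report them separately as missed hazards.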

4.1.5. Engagement and Learning Retention

Immersive technologies can substantially increase trainee engagement by delivering interactive, realistic simulations that make learning dynamic and enjoyable. Studies have reported that immersive and interactive training environments lead to higher cognitive engagement and better retention of critical knowledge than traditional methods [53,54]. These gains stem from realistic scenario-based training, multisensory immersion, and interactive problem-solving activities that closely mimic real emergency situations.

The integration of gamification elements, such as scoring systems, leaderboards, and achievement badges, further boosts motivation by engaging competitive and collaborative instincts, thereby encouraging deeper learning [55]. This heightened engagement supports both immediate comprehension and long-term retention, contributing to improved operational readiness and overall training effectiveness.
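The scoring, leaderboard, and badge mechanics mentioned above reduce to a few lines of logic. The sketch below is illustrative only: the 0-100 score scale, the badge cut-offs, and the function names are hypothetical, and ties are broken alphabetically rather than by assigning a shared rank.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical badge cut-offs on a 0-100 scenario score.
BADGE_THRESHOLDS = {"bronze": 60, "silver": 75, "gold": 90}


def badge_for(score: int) -> Optional[str]:
    """Return the highest badge whose threshold the score reaches, if any."""
    best = None
    for name, cutoff in sorted(BADGE_THRESHOLDS.items(), key=lambda item: item[1]):
        if score >= cutoff:
            best = name
    return best


def leaderboard(scores: Dict[str, int]) -> List[Tuple[int, str, int, Optional[str]]]:
    """Rank crews by score (ties broken alphabetically) and attach badges."""
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [(rank, crew, score, badge_for(score))
            for rank, (crew, score) in enumerate(ranked, start=1)]


for row in leaderboard({"crew_a": 92, "crew_b": 74, "crew_c": 81}):
    print(row)
```

Whether to show a public leaderboard or only private badges is a design choice with pedagogical consequences; competitive displays may motivate some trainees and discourage others.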

Overall, technology-enhanced training delivers a powerful combination of benefits that significantly strengthen firefighter and emergency service preparedness. These include enhanced safety, efficient resource use, standardized and repeatable scenarios, advanced performance evaluation, and improved learner engagement and knowledge retention, collectively offering a strategic advantage in high-stakes environments.

4.2. Challenges and Limitations

Despite the clear advantages, the implementation of technology-enhanced training in firefighter and emergency services faces several notable challenges and limitations. One primary obstacle involves technical and financial barriers. The initial setup costs for advanced VR, XR, and simulation-based training platforms can be prohibitively expensive for many fire departments, especially smaller agencies with limited budgets. Initial investments for comprehensive VR setups can range significantly, often exceeding EUR 100,000, depending on the complexity and fidelity of the equipment. Additionally, maintaining and upgrading these sophisticated technologies require ongoing financial investments and specialized technical expertise, potentially limiting widespread adoption and consistent utilization [48,56]. Fire departments may also struggle with integrating new technologies with existing training protocols, systems, and infrastructure, further complicating the adoption process [57].

Another significant challenge pertains to gaps in realism and immersion, where current virtual technologies may fall short of fully replicating real-world conditions encountered during actual emergencies. While considerable advancements have been made, simulating sensory experiences such as heat intensity, smoke inhalation, physical exhaustion, and tactile interactions remains challenging [58]. These limitations may reduce the perceived authenticity of training scenarios, potentially impacting trainee confidence and preparedness when transitioning to real-life situations. Addressing these realism gaps through technological advancements such as haptic feedback suits, realistic environmental simulations, and improved sensory replication is critical to enhancing the effectiveness and acceptance of immersive training systems [59].

Resistance to change within established training cultures also poses a substantial barrier. Experienced instructors and trainees accustomed to traditional training methods may exhibit skepticism or reluctance toward adopting new technological approaches, fearing potential loss of practical skills or questioning the efficacy of virtual scenarios. This cultural resistance can significantly hinder the integration of VR, XR, and simulation technologies. Effective implementation thus requires comprehensive change management strategies, including targeted communication, extensive instructor training, and ongoing support to facilitate smooth adoption and acceptance among stakeholders [43].

Furthermore, there is a notable lack of longitudinal validation studies to conclusively demonstrate the long-term effectiveness and skill retention benefits of technology-enhanced training. Although short-term advantages are well documented, comprehensive research assessing the lasting impacts of immersive training on real-world performance, skill retention, and emergency response efficacy remains limited. This gap in empirical evidence may restrict broader acceptance and investment in these technologies among stakeholders. Encouraging collaborative, long-term studies involving multiple departments and academic institutions can help bridge this evidence gap and strengthen confidence in immersive training solutions [60].

Lastly, ethical and accessibility considerations present additional challenges. Ethical concerns include potential psychological impacts from highly realistic simulations, such as increased stress, anxiety, or trauma, particularly in scenarios involving intense or distressing experiences. Ensuring ethical training practices requires thoughtful scenario design, psychological safety protocols, and professional support systems to address potential emotional or psychological impacts [61]. Accessibility barriers also exist, as certain training systems may not accommodate trainees with specific disabilities or physical limitations, thereby limiting equitable access to technology-enhanced training opportunities. Addressing these ethical and accessibility issues through inclusive design and comprehensive policy frameworks is crucial to ensure effective, equitable, and responsible implementation of advanced training solutions in fire and emergency services [62].

4.3. Future Trends and Research Directions

The continued evolution of immersive training technologies for firefighter and emergency services will likely be influenced by several emerging trends and research areas, presenting opportunities for significant advancements in training effectiveness and operational preparedness.

Improving the accessibility and resilience of XR, AR, and VR training systems, including remote and cloud-based simulation platforms, depends not only on technical architecture but also on the strategic use of open data, open-source technologies, and institutional partnerships.

Open data—such as geospatial (GIS) layers, building blueprints, traffic conditions, meteorological data, and historical fire incident reports—can be integrated into immersive simulations to create highly realistic, location-specific scenarios. This enhances training fidelity and supports the development of dynamic, context-aware environments that mirror the operational conditions faced by fire and rescue teams in different geographic and urban settings [10,11,28].
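A minimal sketch of this integration, using only the Python standard library, combines a building footprint from an open GeoJSON extract with a weather-feed record to place a fire origin and set the smoke drift direction. The data values, property names (`id`, `floors`), and the function `scenario_from_open_data` are hypothetical. For readability the coordinates use a local metre grid; GeoJSON as specified in RFC 7946 uses WGS 84 longitude/latitude, so real data would need projecting first.

```python
import json

# Hypothetical open-data extract: one building footprint as GeoJSON,
# simplified to a local metre grid (real RFC 7946 data uses lon/lat).
BUILDINGS_GEOJSON = """
{
  "type": "FeatureCollection",
  "features": [
    {
      "type": "Feature",
      "properties": {"id": "B-12", "floors": 4, "use": "residential"},
      "geometry": {
        "type": "Polygon",
        "coordinates": [[[0, 0], [0, 20], [30, 20], [30, 0], [0, 0]]]
      }
    }
  ]
}
"""

# Hypothetical weather-feed record; wind direction follows the meteorological
# convention (the direction the wind blows FROM, in degrees).
WEATHER = {"wind_from_deg": 270, "wind_speed_ms": 6.0}


def scenario_from_open_data(geojson_text: str, building_id: str, weather: dict) -> dict:
    collection = json.loads(geojson_text)
    for feature in collection["features"]:
        props = feature["properties"]
        if props.get("id") != building_id:
            continue
        # Outer ring of the polygon, without the repeated closing vertex.
        ring = feature["geometry"]["coordinates"][0][:-1]
        # Vertex mean: a rough centre, adequate for placing a fire-origin marker.
        cx = sum(x for x, _ in ring) / len(ring)
        cy = sum(y for _, y in ring) / len(ring)
        return {
            "building_id": building_id,
            "floors": props.get("floors", 1),
            "fire_origin_xy": (cx, cy),
            # Smoke drifts downwind, i.e. opposite to where the wind comes from.
            "smoke_drift_toward_deg": (weather["wind_from_deg"] + 180) % 360,
            "wind_speed_ms": weather["wind_speed_ms"],
        }
    raise KeyError(f"building {building_id!r} not found")


print(scenario_from_open_data(BUILDINGS_GEOJSON, "B-12", WEATHER))
```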

The adoption of open standards and open-source software enables greater customization and flexibility for fire departments. OpenXR, for example, is a royalty-free API standard maintained by the Khronos Group; content developed against it, whether built with open-source tools or with widely used engines such as Unity or Unreal Engine (which are themselves distributed under proprietary licenses), is less tied to a single headset vendor. Open tools allow agencies to adapt training content, interface designs, and device compatibility to evolving operational requirements while avoiding restrictive vendor lock-in and recurring licensing costs [35]. This approach fosters technical resilience, especially for smaller departments with limited budgets.
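The vendor-independence argument can also be illustrated at the application level: if scenario logic is written against a small device-agnostic interface, replacing hardware means writing a new adapter rather than rewriting scenarios. OpenXR plays a comparable role at the runtime level. In the sketch below, `XRBackend`, `DesktopSimBackend`, and the nozzle and smoke-layer functions are hypothetical, the 1.7 m head height is a placeholder, and no real OpenXR binding is used.

```python
from __future__ import annotations

from typing import Protocol


class XRBackend(Protocol):
    """Hypothetical device-agnostic interface that scenario code depends on."""

    def head_height_m(self) -> float: ...

    def haptic_pulse(self, hand: str, seconds: float) -> None: ...


class DesktopSimBackend:
    """Stand-in backend for testing without a headset. A production backend
    might instead wrap an OpenXR runtime; that wrapper is not shown here."""

    def __init__(self) -> None:
        self.pulses: list[tuple[str, float]] = []

    def head_height_m(self) -> float:
        return 1.7  # fixed placeholder for a standing trainee's head height

    def haptic_pulse(self, hand: str, seconds: float) -> None:
        self.pulses.append((hand, seconds))


def on_nozzle_update(backend: XRBackend, flow_open: bool) -> None:
    # Scenario logic talks only to the interface, never to a vendor SDK,
    # so swapping hardware means writing a new backend, not new scenarios.
    if flow_open:
        backend.haptic_pulse("right", 0.2)


def crouch_advised(backend: XRBackend, smoke_interface_height_m: float) -> bool:
    # Advise crouching when the head is above the lower edge of the smoke layer.
    return backend.head_height_m() > smoke_interface_height_m


backend = DesktopSimBackend()
on_nozzle_update(backend, flow_open=True)
print(backend.pulses, crouch_advised(backend, smoke_interface_height_m=1.2))
```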

Equally important is the role of institutional partnerships between fire departments and technology providers. These relationships should be structured as public–private partnerships (PPPs) or co-development agreements, in which fire services contribute domain expertise (e.g., SOPs, scenario design, hazard models) and developers provide the infrastructure, software engineering, and maintenance. Licensing terms should be transparent, equitable, and ideally include shared access to source code or scenario assets, ensuring long-term autonomy for public safety agencies [43].

In this ecosystem, resilience is not only about hardware and networks, but also involves legal, operational, and knowledge-based sustainability. Open frameworks and collaborative licensing models help ensure that XR/VR/AR systems remain adaptable and effective across jurisdictions, funding cycles, and technological change.

Among these trends, the integration of artificial intelligence (AI) and machine learning into adaptive training scenarios stands out. AI-driven adaptive scenarios allow training programs to dynamically adjust the difficulty and complexity of simulations in real time based on trainees’ performance metrics and learning progress. This adaptive capability can optimize learning experiences by presenting individualized challenges, enhancing the skill acquisition process, and promoting sustained engagement. Research is actively exploring robust machine learning algorithms that analyze extensive interaction data from virtual environments to refine scenario realism and instructional effectiveness, further tailoring learning paths to each trainee’s unique needs [35,63].
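A full machine-learning system is beyond a short example, so the sketch below substitutes a simple rule-based controller, in the spirit of staircase procedures used in psychophysics, to show the feedback loop described above: performance goes in, the next difficulty level comes out. The 1-5 difficulty scale, the three-attempt window, and the class name `DifficultyController` are hypothetical; an ML-based system would replace the fixed rules with a learned policy.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DifficultyController:
    """Rule-based stand-in for an adaptive scenario engine (hypothetical 1-5 scale)."""
    level: int = 3
    min_level: int = 1
    max_level: int = 5
    window: int = 3  # number of most recent attempts considered
    history: List[bool] = field(default_factory=list)

    def update(self, success: bool) -> int:
        """Record one attempt and return the difficulty level for the next one."""
        self.history.append(success)
        recent = self.history[-self.window:]
        if len(recent) < self.window:
            return self.level
        successes = sum(recent)
        if successes == self.window and self.level < self.max_level:
            self.level += 1          # consistently successful: raise difficulty
            self.history.clear()
        elif successes <= self.window // 3 and self.level > self.min_level:
            self.level -= 1          # mostly failing: ease off
            self.history.clear()
        return self.level


ctrl = DifficultyController()
levels = [ctrl.update(outcome) for outcome in [True, True, True, False, False, True]]
print(levels)  # → [3, 3, 4, 4, 4, 3]
```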

Another critical development is the integration of biometric and wearable technologies into training environments. Biometric sensors and wearable devices can capture real-time physiological data such as heart rate, stress indicators, body temperature, and fatigue levels, providing essential insights into trainees’ physiological and psychological responses during high-intensity simulations. These data streams enable trainers to customize scenario intensity, enhance safety measures, and support informed interventions to build trainee resilience and optimize performance under realistic stress conditions. Despite the promising potential, ongoing research must address challenges related to data privacy, user acceptance, and practical implications of biometric integration to fully leverage its benefits [64,65].
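As an illustration of how a physiological stream might feed back into scenario intensity, the sketch below flags sustained high heart rate. Every threshold in it is an assumption, not clinical or occupational guidance: heart rate is taken to be sampled at 1 Hz, maximum heart rate is estimated with the coarse age-based 220 - age rule, and "reduce" is suggested only when all of the last 30 readings exceed 85% of that estimate. The function name `intensity_advice` is hypothetical.

```python
from typing import Sequence


def intensity_advice(hr_bpm: Sequence[float], age: int,
                     threshold: float = 0.85, sustain_samples: int = 30) -> str:
    """Suggest whether to maintain or reduce scenario intensity.

    Assumptions (hypothetical, not clinical guidance): heart rate is sampled at
    1 Hz, maximum heart rate is estimated with the age-based 220 - age rule, and
    "reduce" is advised only when every one of the last `sustain_samples`
    readings exceeds `threshold` times that estimate.
    """
    limit = threshold * (220 - age)
    recent = list(hr_bpm)[-sustain_samples:]
    if len(recent) == sustain_samples and all(hr > limit for hr in recent):
        return "reduce"
    return "maintain"


print(intensity_advice([165.0] * 30, age=40))             # → reduce
print(intensity_advice([165.0] * 29 + [140.0], age=40))   # → maintain
```

Requiring a sustained window rather than a single reading avoids reacting to brief spikes; in practice, any such rule would also need consent, secure storage, and instructor oversight given the privacy concerns noted above.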

The evolution of remote and cloud-based simulation platforms represents another significant trend that promises to enhance the accessibility and scalability of immersive training solutions. Cloud-based platforms facilitate simultaneous participation of geographically dispersed teams, fostering collaboration, interoperability, and unified training experiences across multiple jurisdictions. This capability is particularly beneficial for smaller or resource-limited agencies that otherwise lack access to advanced training facilities. However, the full realization of remote training’s potential requires ongoing research into overcoming technical challenges, including issues related to latency, network bandwidth, cybersecurity, and infrastructure reliability, to ensure consistent high-quality training experiences [66,67].
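One practical check before a distributed exercise is whether each participating site's network latency stays within a budget. The sketch below flags sites whose 95th-percentile round-trip time (nearest-rank method) exceeds a limit. The 100 ms budget, the site names, and the measurements are hypothetical; the appropriate budget depends on what is being synchronized, since headset rendering itself runs locally.

```python
import math
from typing import Dict, List, Sequence


def percentile_nearest_rank(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile (p in 1..100) of a non-empty sample."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def sites_over_budget(rtt_ms_by_site: Dict[str, Sequence[float]],
                      budget_ms: float = 100.0, p: int = 95) -> List[str]:
    """Return sites whose p-th percentile round-trip time exceeds the budget."""
    return sorted(site for site, samples in rtt_ms_by_site.items()
                  if percentile_nearest_rank(samples, p) > budget_ms)


measurements = {
    "station_north": [20.0] * 19 + [300.0],          # one outlier only
    "station_east": [150.0] * 20,                     # uniformly slow link
    "station_south": [40.0] * 18 + [250.0, 260.0],   # heavy tail
}
print(sites_over_budget(measurements))  # → ['station_east', 'station_south']
```

Using a high percentile rather than the mean catches links with a heavy tail of delays, which are the ones most likely to disrupt a shared scenario, while tolerating a single outlier.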

For cloud-based platforms in particular, the open data, open-source components, and structured partnerships discussed above (including flexible licensing agreements, shared development rights, and access to core scenario libraries or source code) determine whether fire departments can evolve their training infrastructure, in collaboration with software developers, hardware manufacturers, and academic institutions, without being constrained by proprietary systems [10,11,35,43,66,67].

Gamification and serious games also continue to gain traction as effective strategies for enhancing trainee motivation, engagement, and learning outcomes within immersive training frameworks. Serious games employ gaming mechanics such as scoring systems, competition, narrative storytelling, and achievement recognition to increase intrinsic motivation and deepen cognitive and procedural learning. Empirical evidence highlights that gamified elements significantly enhance trainee attention, motivation, and knowledge retention. Further research is necessary to identify optimal game mechanics, effective narrative structures, and feedback systems that maintain long-term engagement and maximize educational outcomes [68,69].

Lastly, the increasing complexity of emergency response scenarios, particularly those involving multiple agencies and cross-border collaboration, calls for more advanced inter-agency training methods. Immersive technologies provide powerful platforms for coordinated multi-agency exercises that help standardize protocols, improve communication, and strengthen operational interoperability during large-scale emergencies. Future research should focus on developing effective frameworks for cross-border training collaboration, designing shared virtual environments, and refining interoperable scenarios that realistically simulate multi-stakeholder interactions. Such research will be crucial in preparing responders for complex, collaborative responses to real-world emergencies [70,71].

5. Conclusions

The current landscape of firefighter and emergency service training has been significantly transformed by immersive technologies, including virtual reality (VR), extended reality (XR), and simulation-based systems. These innovative training modalities offer substantial advantages, such as enhanced safety, cost efficiency, improved standardization, comprehensive performance assessment capabilities, and heightened trainee engagement. However, their integration is not without challenges, such as technical complexities, financial barriers, gaps in realism, resistance to change within traditional training cultures, and ethical considerations regarding accessibility and psychological impacts.

From a practical standpoint, training institutions stand to benefit greatly from the adoption of immersive technologies by reducing operational costs, enhancing training safety, and improving trainee preparedness through consistent and realistic practice opportunities. To successfully integrate these technologies, institutions must develop robust implementation strategies that include adequate funding, comprehensive instructor training programs, and structured change management processes to overcome cultural resistance.

Despite these advancements, significant research gaps remain, particularly regarding the long-term effectiveness of immersive training methods and their transfer to real-world skills. Future research should prioritize longitudinal studies to establish whether the benefits of immersive technologies are sustained. Further work is also needed on adaptive training systems powered by artificial intelligence and machine learning, the use of biometric data to adapt scenario intensity and monitor trainee stress, cloud-based platforms for broader accessibility, and effective gamification strategies.

In conclusion, the integration of immersive technologies into emergency service education represents a promising evolution toward more effective and safer training paradigms. However, realizing their full potential requires ongoing research, strategic investments, and careful consideration of ethical and accessibility issues. The collective efforts of researchers, practitioners, and policymakers will ultimately shape the future of emergency service training, ensuring better-prepared personnel capable of responding effectively to increasingly complex and demanding scenarios.

Author Contributions

Conceptualization, A.M. and D.K.; methodology, A.M.; formal analysis, D.H.; investigation, D.H. and A.M.; writing—original draft preparation, D.H.; writing—review and editing, A.M.; visualization, A.M.; supervision, A.M. and D.K.; funding acquisition, D.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Slovak Research and Development Agency under contract No. APVV-22-0030.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AR | Augmented Reality |
| EOC | Emergency Operations Center |
| GIS | Geographic Information System |
| ICT | Information and Communication Technology |
| MCI | Mass Casualty Incident |
| MR | Mixed Reality |
| PTSD | Post-Traumatic Stress Disorder |
| SOP | Standard Operating Procedure |
| TTX | Tabletop Exercise |
| VR | Virtual Reality |
| XR | Extended Reality |
| WoS | Web of Science |

| WoS | Web of Science |

References

- Smith, D.L.; Barr, D.A. Functional and psychological responses of firefighters to live-fire training. Fire Technol. 2017, 53, 1447–1465.

- Reis, V.; Neves, C. Application of Virtual Reality Simulation in Firefighter Training for the Development of Decision-Making Competences. In Proceedings of the 2019 International Symposium on Computers in Education (SIIE), Tomar, Portugal, 13–15 November 2019; pp. 1–6.

- Williams-Bell, F.M.; Kapralos, B.; Hogue, A.; Murphy, B.; Weckman, E. Using serious games and virtual simulation for training in the fire service: A review. Fire Technol. 2015, 51, 553–584.

- Bellemans, M.; Lammens, D.; De Sloover, J.; De Vleeschauwer, T.; Schoofs, E.; Jordens, W.; Van Steenhuyse, B.; Mangelschots, J.; Selleri, S.; Hamesse, C.; et al. Training Firefighters in Virtual Reality. In Proceedings of the 2020 International Conference on 3D Immersion (IC3D), Brussels, Belgium, 14–15 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–8.

- Kinateder, M.; Müller, B.; Jost, M.; Mühlberger, A.; Pauli, P. Social influence in a virtual tunnel fire—Influence of conflicting information on evacuation behavior. Appl. Ergon. 2014, 45, 1649–1659.

- Feng, Z.; González, V.A.; Amor, R.; Lovreglio, R.; Cabrera-Guerrero, G. Immersive Virtual Reality Serious Games for Evacuation Training and Research: A Systematic Literature Review. Comput. Educ. 2018, 127, 252–266.

- Berthiaume, M.; Kinateder, M.; Emond, B.; Cooper, N.; Obeegadoo, I.; Lapointe, J.-F. Evaluation of a virtual reality training tool for firefighters responding to transportation incidents with dangerous goods. Educ. Inf. Technol. 2023, 28, 2987–3012.

- Stefan, H.; Mortimer, M.; Horan, B. Evaluating the effectiveness of virtual reality for safety-relevant training: A systematic review. Virtual Real. 2023, 27, 2839–2869.

- Caldas, O.I.; Sanchez, N.; Mauledoux, M.; Bourdot, P. Leading Presence-Based Strategies to Manipulate User Experience in Virtual Reality Environments. Virtual Real. 2022, 26, 1507–1518.

- Weidinger, J. What Is Known and What Remains Unexplored: A Review of the Firefighter Information Technologies Literature. Int. J. Disaster Risk Reduct. 2022, 78, 103115.

- Fanfarová, A.; Mariš, L. Simulation Tool for Fire and Rescue Services. Procedia Eng. 2017, 192, 160–165.

- Smith, D.L.; Horn, G.P.; Petruzzello, S.J.; Fahey, G.; Woods, J.; Fernhall, B. Clotting and Fibrinolytic Changes after Firefighting Activities. Med. Sci. Sports Exerc. 2014, 46, 448–454.

- Oliveira, J.; Aires Dias, J.; Correia, R.; Pinheiro, R.; Reis, V.; Sousa, D.; Agostinho, D.; Simões, M.; Castelo-Branco, M. Exploring Immersive Multimodal Virtual Reality Training, Affective States, and Ecological Validity in Healthy Firefighters: Quasi-Experimental Study. JMIR Serious Games 2024, 12, e53683.

- Mystakidis, S.; Besharat, J.; Papantzikos, G.; Christopoulos, A.; Stylios, C.; Agorgianitis, S.; Tselentis, D. Design, Development, and Evaluation of a Virtual Reality Serious Game for School Fire Preparedness Training. Educ. Sci. 2022, 12, 281.

- Calandra, D.; Pratticò, F.G.; Migliorini, M.; Verda, V.; Lamberti, F. A Multi-Role, Multi-User, Multi-Technology Virtual Reality-Based Road Tunnel Fire Simulator for Training Purposes. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021)—GRAPP, Online, 8–10 February 2021; SciTePress: Setúbal, Portugal, 2021; pp. 96–105.

- FLAIM Systems. Immersive VR Training Solution for First Responders. Available online: https://www.flaimsystems.com/ (accessed on 6 March 2025).

- Gronowski, A.; Arness, D.C.; Ng, J.; Billinghurst, M. The Impact of Virtual and Augmented Reality on Presence, User Experience and Performance of Information Visualisation. Virtual Real. 2024, 28, 133.

- Seo, S.; Park, H.; Koo, C. Impact of Interactive Learning Elements on Personal Learning Performance in Immersive Virtual Reality for Construction Safety Training. Expert Syst. Appl. 2024, 251, 124099.

- Wheeler, S.G.; Hoermann, S.; Lukosch, S.; Lindeman, R.W. Design and Assessment of a Virtual Reality Learning Environment for Firefighters. Front. Comput. Sci. 2024, 6, 1274828.

- FLAIM Systems. Immersive Firefighter Training. Available online: https://www.flaimsystems.com/trainer/ (accessed on 7 March 2025).

- Reiners, T.; Wood, L.C.; Gregory, S.; Teräs, H. Immersive virtual environments in learning: Use of simulation and VR in education. J. Educ. Tech. Soc. 2020, 23, 37–54.

- Moro, C.; Štromberga, Z.; Raikos, A.; Stirling, A. The Effectiveness of Virtual and Augmented Reality in Health Sciences and Medical Anatomy. Anat. Sci. Educ. 2017, 10, 549–559.

- Dimou, A.; Kogias, D.G.; Trakadas, P.; Perossini, F.; Weller, M.; Balet, O.; Patrikakis, C.Z.; Zahariadis, T.; Daras, P. FASTER: First Responder Advanced Technologies for Safe and Efficient Emergency Response. In Technology Development for Security Practitioners; Akhgar, B., Kavallieros, D., Sdongos, E., Eds.; Springer: Cham, Switzerland, 2021; pp. 425–442.

- Lee, B.G.; Pike, M.; Thenara, J.M.; Towey, D. Fighting Fires with Mixed Reality: The Future of Fire Safety Training and Education. In Proceedings of the 2023 International Conference on Open and Innovative Education (ICOIE 2023), Hong Kong, 3–5 July 2023; Tsang, E., Li, K.C., Wang, P., Eds.; Hong Kong Metropolitan University: Hong Kong, China, 2023; pp. 176–192.

- Fiore, S.M.; Wiltshire, T.J. Technology as Teammate: Examining the Role of External Cognition in Support of Team Cognitive Processes. Front. Psychol. 2016, 7, 1531.

- Bertram, J.; Andersen, D.; Park, S.; Latoschik, M.E. Comparative study of decision-making efficiency using mixed reality command interfaces. Comput. Educ. 2023, 189, 104623.

- Kman, N.E.; Price, A.; Berezina-Blackburn, V.; Patterson, J.; Maicher, K.; Way, D.P.; McGrath, J.; Panchal, A.R.; Luu, K.; Oliszewski, A.; et al. First Responder Virtual Reality Simulator to Train and Assess Emergency Personnel for Mass Casualty Response. JACEP Open 2023, 4, e12903.

- XVR Simulation. Crisis Management and Command Training Platform. Available online: https://www.xvrsim.com (accessed on 6 March 2025).

- Alshowair, A.; Bail, J.; AlSuwailem, F.; Mostafa, A.; Abdel-Azeem, A. Use of Virtual Reality Exercises in Disaster Preparedness Training: A Scoping Review. SAGE Open Med. 2024, 12.

- Tsai, M.-H.; Chang, Y.-L.; Shiau, J.-S.; Wang, S.-M. Exploring the Effects of a Serious Game-Based Learning Package for Disaster Prevention Education: The Case of Battle of Flooding Protection. Int. J. Disaster Risk Reduct. 2020, 43, 101393.

- Wijkmark, C.H.; Metallinou, M.M.; Heldal, I. Remote Virtual Simulation for Incident Commanders—Cognitive Aspects. Appl. Sci. 2021, 11, 6434.

- Emaliyawati, E.; Ibrahim, K.; Trisyani, Y.; Nuraeni, A.; Sugiharto, F.; Miladi, Q.N.; Abdillah, H.; Christina, M.; Setiawan, D.R.; Sutini, T. Enhancing Disaster Preparedness Through Tabletop Disaster Exercises: A Scoping Review of Benefits for Health Workers and Students. Adv. Med. Educ. Pract. 2025, 16, 1–11.

- ForgeFX Simulations. Public Safety Incident Command Simulators. Available online: https://www.forgefx.com/ (accessed on 7 March 2025).

- Jang, Y.; Baek, J.; Jeon, S.; Han, S. Bridging the Simulation-to-Real Gap of Depth Images for Deep Reinforcement Learning. Expert Syst. Appl. 2024, 253, 124310.

- Lee, Y.; Yoo, B. XR Collaboration Beyond Virtual Reality: Work in the Real World. J. Comput. Des. Eng. 2021, 8, 756–772.

- Mitchell, R.; Lim, T. Adaptive training using AI in virtual reality environments. J. Educ. Comput. Res. 2022, 60, 810–827.

- De Fino, M.; Cassano, F.; Bernardini, G.; Quagliarini, E.; Fatiguso, F. On the User-Based Assessments of Virtual Reality for Public Safety Training in Urban Open Spaces Depending on Immersion Levels. Saf. Sci. 2025, 185, 106803.

- Khanal, S.; Medasetti, U.S.; Mashal, M.; Savage, B.; Khadka, R. Virtual and Augmented Reality in the Disaster Management Technology: A Literature Review of the Past 11 Years. Front. Virtual Real. 2022, 3, 843195.

- Altan, B.; Gürer, S.; Alsamarei, A.; Demir, D.K.; Düzgün, H.Ş.; Erkayaoğlu, M.; Surer, E. Developing Serious Games for CBRN-e Training in Mixed Reality, Virtual Reality, and Computer-Based Environments. Int. J. Disaster Risk Reduct. 2022, 77, 103022. [Google Scholar] [CrossRef]

- Cuevas, H.M.; Fiore, S.M.; Salas, E.; Bowers, C.A. Cooperative training for complex environments: Effects of team training strategy on team coordination. Ergonomics 2017, 60, 1097–1110. [Google Scholar]

- Zaphir, J.S.; Murphy, K.A.; MacQuarrie, A.J.; Stainer, M.J. Understanding the Role of Cognitive Load in Paramedical Contexts: A Systematic Review. Prehosp. Emerg. Care 2024, 29, 101–114. [Google Scholar] [CrossRef]

- Tokyo Metropolitan Government. Tokyo Fire Department Embraces VR for Disaster Preparedness. Tokyo Updates 2022. Available online: https://www.tokyoupdates.metro.tokyo.lg.jp/en/post-643/ (accessed on 14 March 2025).

- Engelbrecht, H.; Lindeman, R.W.; Hoermann, S. A SWOT analysis of immersive virtual reality for firefighter training. Front. Robot. AI 2019, 6, 101.

- Wheeler, S.G.; Engelbrecht, H.; Hoermann, S. Human Factors Research in Immersive Virtual Reality Firefighter Training: A Systematic Review. Front. Virtual Real. 2021, 2, 671664.

- Clarke, A.; Hill, D. Longitudinal studies of VR effectiveness in emergency response training. Educ. Technol. Res. Dev. 2023, 71, 421–436.

- European Fire Services Training Association. Cost Analysis of Fire Training Facilities Across Europe. EFSTA Report 2021. Available online: https://www.efsta.eu/reports/cost-analysis-2021 (accessed on 1 April 2024).

- National Fire Protection Association. Firefighter Training Cost Report; NFPA Publication: Quincy, MA, USA, 2022.

- PwC. PwC’s Study into the Effectiveness of VR for Soft Skills Training. PwC Report 2020. Available online: https://www.pwc.co.uk/services/technology/immersive-technologies/study-into-vr-training-effectiveness.html (accessed on 13 March 2025).

- Pedram, S.; Ogie, R.; Palmisano, S.; Ma, L.; Perez, P. Cost–Benefit Analysis of Virtual Reality-Based Training for Emergency Rescue Workers: A Socio-Technical Systems Approach. Virtual Real. 2021, 25, 1071–1086.

- Williams-Bell, F.M.; Murphy, B.; Kapralos, B.; Hogue, A.; Weckman, E.J. Validation of a serious game for firefighting training. Fire Technol. 2019, 55, 265–291.

- Johnson, L.; Adams, P. Technical challenges in maintaining VR training infrastructures. Technol. Emerg. Serv. 2021, 8, 20–27.

- Buttussi, F.; Chittaro, L. Effects of Different Types of Virtual Reality Display on Presence and Learning in a Safety Training Scenario. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1063–1076.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A Systematic Review of Immersive Virtual Reality Applications for Higher Education: Design Elements, Lessons Learned, and Research Agenda. Comput. Educ. 2020, 147, 103778.

- Makransky, G.; Mayer, R.E. Benefits of Taking a Virtual Field Trip in Immersive Virtual Reality: Evidence for the Immersion Principle in Multimedia Learning. Educ. Psychol. Rev. 2022, 34, 1771–1798.

- Hamari, J.; Koivisto, J.; Sarsa, H. Does Gamification Work?—A Literature Review of Empirical Studies on Gamification. In Proceedings of the 47th Hawaii International Conference on System Sciences, Waikoloa, HI, USA, 6–9 January 2014; pp. 3025–3034.

- Smith, J.; Doe, R. Financial barriers to implementing VR training in public safety organizations. Public Saf. Rev. 2022, 15, 45–52.

- Lopez, C.; Nguyen, H. Integration challenges of virtual reality into existing firefighter training programs. J. Emerg. Manag. 2020, 18, 533–541.

- Brown, A.; Patel, R. Enhancing realism in virtual reality training for emergency responders. Saf. Sci. 2022, 150, 106857.

- Harris, M.; Garcia, E. Advances in haptic feedback technology for firefighter training. Int. J. Hum.-Comput. Interact. 2021, 37, 1170–1183.

- Thomas, K.; Williams, S. Cultural resistance to technological change in emergency services. Change Manag. J. 2020, 13, 78–86.

- Peterson, L.; Blake, S. Ethical considerations in realistic emergency response simulations. J. Ethics Simul. 2022, 5, 134–143.

- Anderson, J.; Yang, Q. Accessibility challenges in virtual reality-based firefighter training. J. Access. Des. All 2021, 11, 75–89.

- Wang, X.; Lee, Y. Machine learning applications in firefighter training. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 52–60.

- Ramirez, P.; Young, L. Biometric monitoring in virtual emergency training scenarios. J. Biom. Res. 2021, 3, 15–22.

- Chen, J.; Zhang, H. Wearable technologies for emergency responders: A systematic review. Wearable Technol. 2022, 6, 18.

- Zhou, F.; Liao, T. Cloud-based simulations for remote emergency response training. J. Cloud Comput. 2021, 10, 45–58.

- O’Neil, K.; Ross, A. Technical considerations in cloud-based firefighter training. Int. J. Cloud Appl. Comput. 2023, 13, 1–14.

- Kumar, R.; Dutta, S. Gamification and motivation in virtual training environments. Comput. Human Behav. 2020, 106, 106240.

- Frey, M.; Johanson, M. Serious games for emergency management training. Simul. Gaming 2021, 52, 231–248.

- Cross, D.; Moore, K. Enhancing interoperability through immersive training: Multi-agency emergency response. Disaster Prev. Manag. 2022, 31, 48–60.

- van der Horst, A.; Petersen, M. Cross-border collaboration in emergency response training: Virtual reality applications. J. Homel. Secur. Emerg. Manag. 2023, 20, 67–79.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).