Lightweight YOLOv5s Model for Early Detection of Agricultural Fires

Abstract

1. Introduction

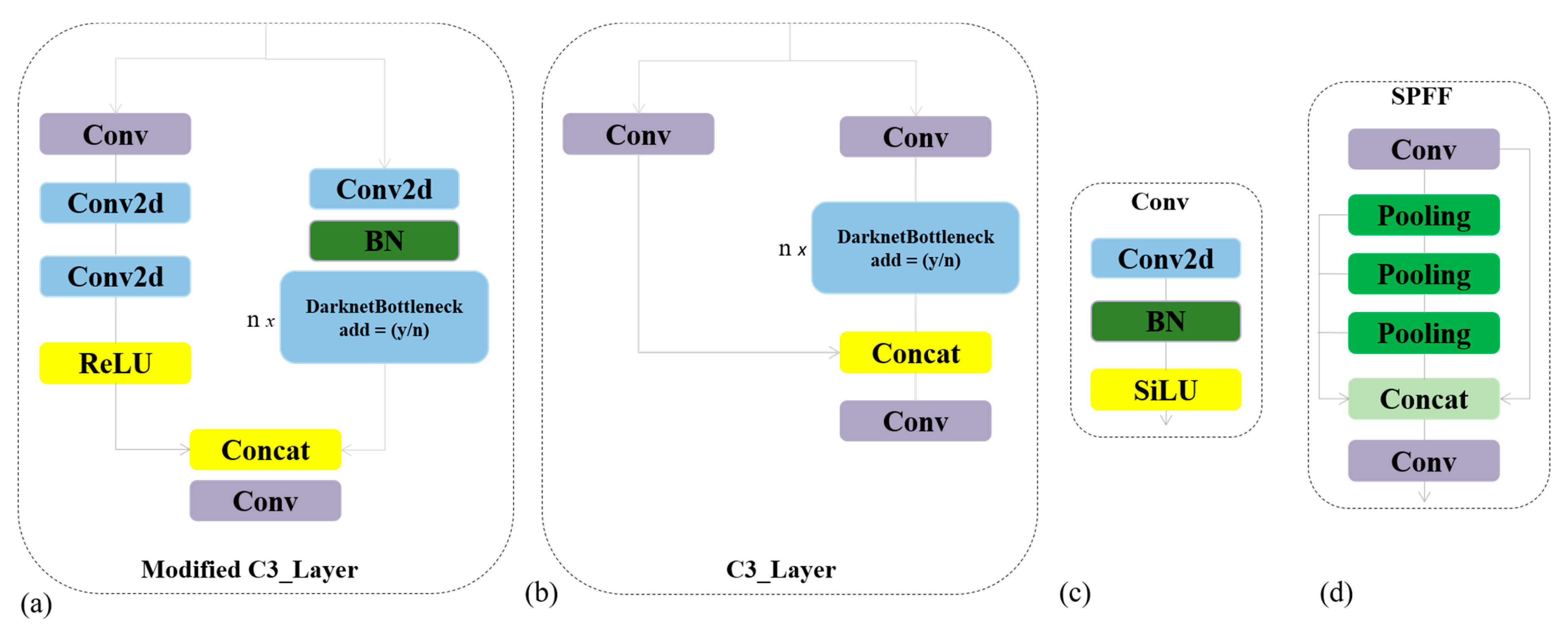

- We propose a modified C3 block within YOLOv5s, incorporating a deeper structure and DarknetBottleneck modules to improve the extraction of fine-grained features critical for early fire detection.

- A comparative study of SiLU, ReLU, and Leaky ReLU functions is conducted to determine the optimal activation mechanism for fire-specific feature learning, with SiLU showing superior convergence and accuracy.

- We introduce a sensitivity analysis evaluating the influence of architectural components on key performance metrics, providing insight into design choices that enhance model robustness.

- A custom dataset of annotated agricultural fire imagery was compiled and preprocessed, providing diverse, representative training data.

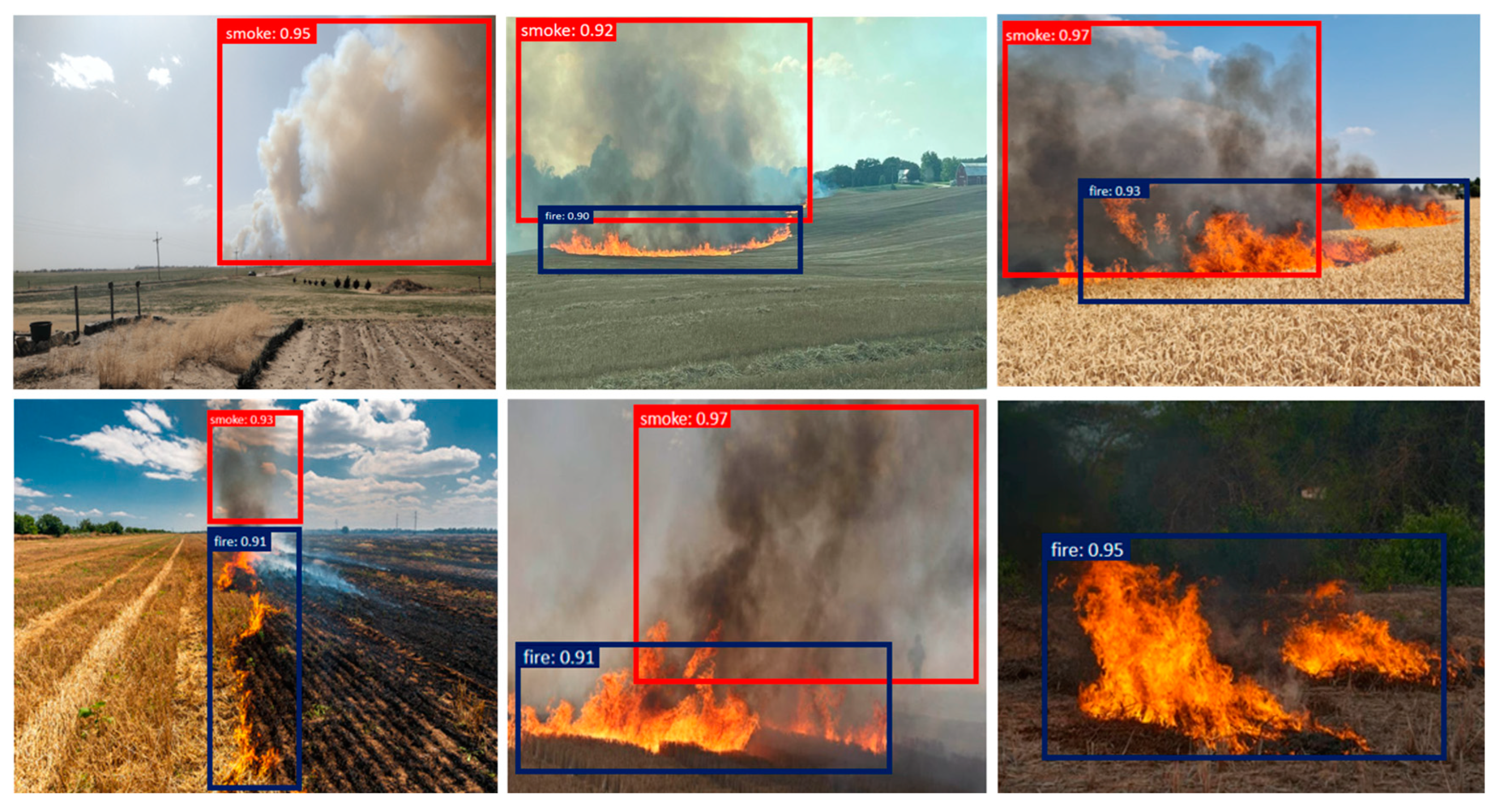

- Our proposed model achieves higher precision, recall, mAP, and F1-score than YOLOv7-tiny, YOLOv8n, YOLO-Fire, and other state-of-the-art lightweight detectors, while maintaining computational efficiency.
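To make the activation comparison in the contributions concrete, the following minimal sketch defines the three functions in plain Python and evaluates them at a few points. The `negative_slope` of 0.01 is an assumption (it is the PyTorch default for Leaky ReLU), not a value taken from the paper.

```python
import math


def relu(x: float) -> float:
    """ReLU: passes positive inputs, zeroes out negative ones."""
    return max(0.0, x)


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Leaky ReLU: like ReLU, but negative inputs keep a small slope.

    negative_slope=0.01 is an assumed value, not one reported in the paper.
    """
    return x if x >= 0.0 else negative_slope * x


def silu(x: float) -> float:
    """SiLU (also known as swish): x * sigmoid(x), smooth and non-monotonic."""
    if x >= 0.0:
        return x / (1.0 + math.exp(-x))
    # Equivalent form that avoids overflow for large negative x.
    e = math.exp(x)
    return x * e / (1.0 + e)


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  "
          f"leaky_relu={leaky_relu(x):.4f}  silu={silu(x):.4f}")
```

Unlike ReLU, SiLU is smooth and lets small negative values through, so gradients do not vanish abruptly for negative pre-activations.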

2. Related Works

2.1. Traditional Fire Monitoring Approaches

2.2. IoT-Based Ground Surveillance Systems

2.3. Deep Learning in Fire Detection

3. Methodology

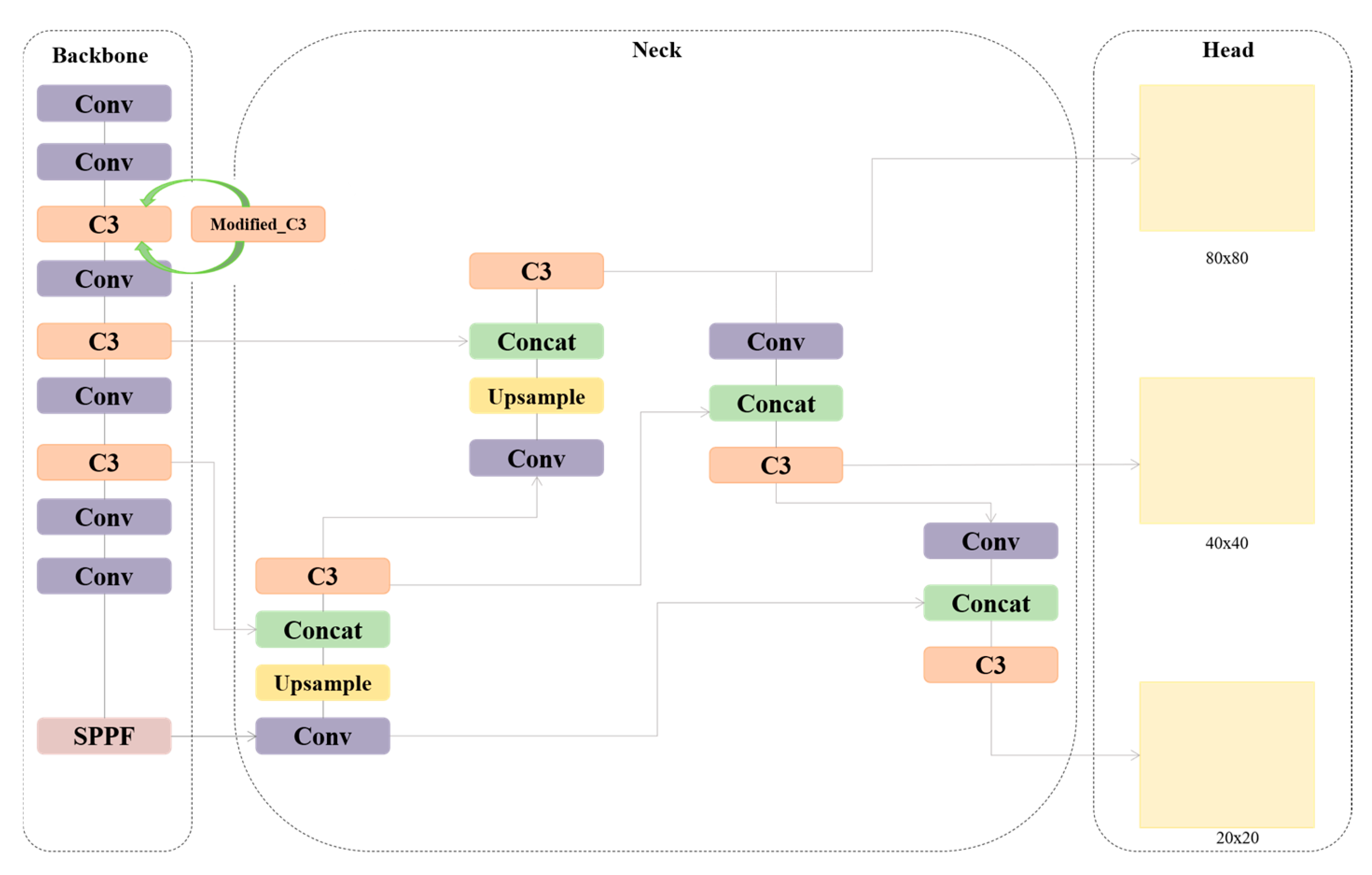

3.1. YOLOv5s

3.2. The Proposed Method

| Algorithm 1. Pseudocode for the modified C3 layer used in the enhanced YOLOv5s architecture, integrating convolution, pooling, SiLU activation, and DarknetBottleneck modules to improve early-stage agricultural fire detection. |
|---|
| 1: class modified(C3_layer): |
| 2: conv: |
| 3: Conv2d(channels, channels, kernel, padding, stride) |
| 4: BatchNorm2d(channels) |
| 5: SiLU(); |
| 6: Conv2d(channels, channels, kernel, padding, stride) |
| 7: Pooling(); |
| 8: Conv2d(channels, channels, kernel, padding, stride) |
| 9: (n*) DarknetBottleneck(add(y/n)); |
| 10: Concatenation(5, 9); |
| 11: conv; |
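One possible reading of Algorithm 1 can be sketched in PyTorch as follows. This is an illustration under stated assumptions, not the authors' implementation. The steps are read sequentially, so the output of step 5 feeds step 6. The pooling is taken to be a 3×3 max pool with stride 1 and padding 1, so that the two concatenated tensors keep the same spatial size. The kernel sizes, the 1×1 then 3×3 layout inside `DarknetBottleneck`, and the class names `ConvBNSiLU` and `ModifiedC3` are all hypothetical choices.

```python
import torch
import torch.nn as nn


class ConvBNSiLU(nn.Module):
    """Steps 2-5 of Algorithm 1: Conv2d -> BatchNorm2d -> SiLU."""

    def __init__(self, c_in: int, c_out: int, kernel: int = 1, stride: int = 1):
        super().__init__()
        # padding = kernel // 2 keeps the spatial size when stride is 1.
        self.conv = nn.Conv2d(c_in, c_out, kernel, stride, kernel // 2, bias=False)
        self.bn = nn.BatchNorm2d(c_out)
        self.act = nn.SiLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.act(self.bn(self.conv(x)))


class DarknetBottleneck(nn.Module):
    """1x1 conv followed by 3x3 conv, with an optional residual add (assumed layout)."""

    def __init__(self, channels: int, add: bool = True):
        super().__init__()
        self.cv1 = ConvBNSiLU(channels, channels, kernel=1)
        self.cv2 = ConvBNSiLU(channels, channels, kernel=3)
        self.add = add

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y = self.cv2(self.cv1(x))
        return x + y if self.add else y


class ModifiedC3(nn.Module):
    """Sketch of the modified C3 layer; comments map to Algorithm 1 step numbers."""

    def __init__(self, channels: int, n: int = 1, add: bool = True):
        super().__init__()
        self.stem = ConvBNSiLU(channels, channels, kernel=1)        # steps 3-5
        self.conv_a = nn.Conv2d(channels, channels, 3, 1, 1)        # step 6
        self.pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)  # step 7 (assumed max pool)
        self.conv_b = nn.Conv2d(channels, channels, 3, 1, 1)        # step 8
        self.blocks = nn.Sequential(                                # step 9
            *(DarknetBottleneck(channels, add) for _ in range(n))
        )
        self.fuse = ConvBNSiLU(2 * channels, channels, kernel=1)    # step 11

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y1 = self.stem(x)
        y2 = self.blocks(self.conv_b(self.pool(self.conv_a(y1))))
        # Step 10: concatenate the outputs of step 5 and step 9 along channels.
        return self.fuse(torch.cat((y1, y2), dim=1))


# Usage: the layer preserves both channel count and spatial size.
layer = ModifiedC3(channels=16, n=2).eval()
with torch.no_grad():
    out = layer(torch.randn(1, 16, 32, 32))
print(tuple(out.shape))
```

Because the concatenation doubles the channel count, the final convolution in this sketch maps `2 * channels` back to `channels` so the layer can replace a standard C3 block without changing the shapes seen by neighbouring layers.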

4. The Experiment and Results

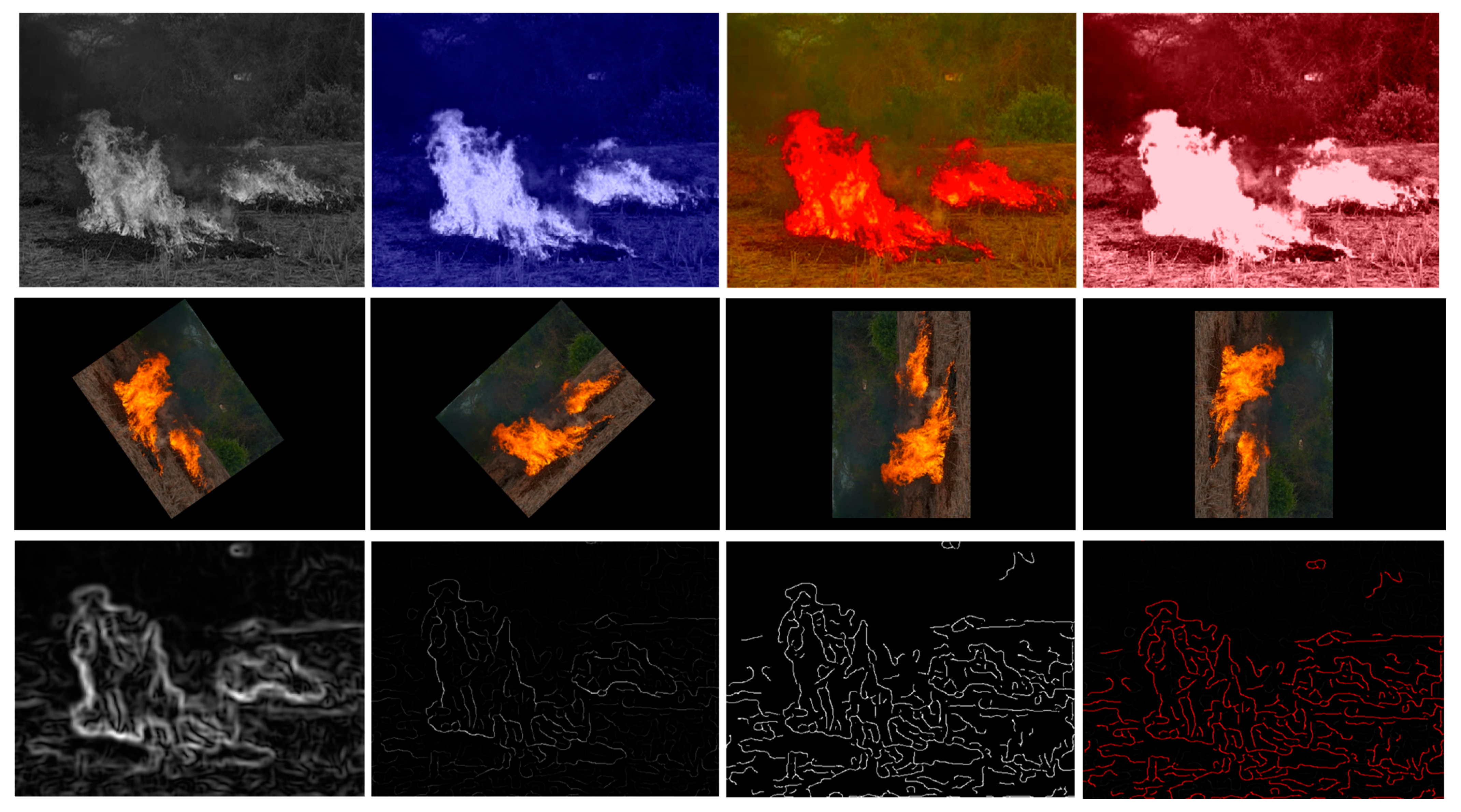

4.1. Dataset

4.2. Data Preprocessing

4.3. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| YOLOv5s (Baseline) | 85.5 | 83.2 | 84.6 | 81.3 |
| Proposed Model | 88.9 | 85.7 | 87.3 | 87.3 |
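As a quick consistency check on the table above, the F1-score can be recomputed as the harmonic mean of precision and recall. The sketch below assumes that the tabulated precision and recall are aggregate values for a single detection class. The helper name `f1_score` is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (output is in the same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) copied from the table above.
rows = {
    "YOLOv5s (Baseline)": (85.5, 83.2),
    "Proposed Model": (88.9, 85.7),
}

for name, (p, r) in rows.items():
    print(f"{name}: F1 = {f1_score(p, r):.1f}%")
```

For the proposed model this reproduces the tabulated 87.3%. For the baseline row the harmonic mean comes out at about 84.3% rather than the tabulated 81.3%, which suggests that the baseline F1 figure was computed under a different averaging scheme or was mis-transcribed.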

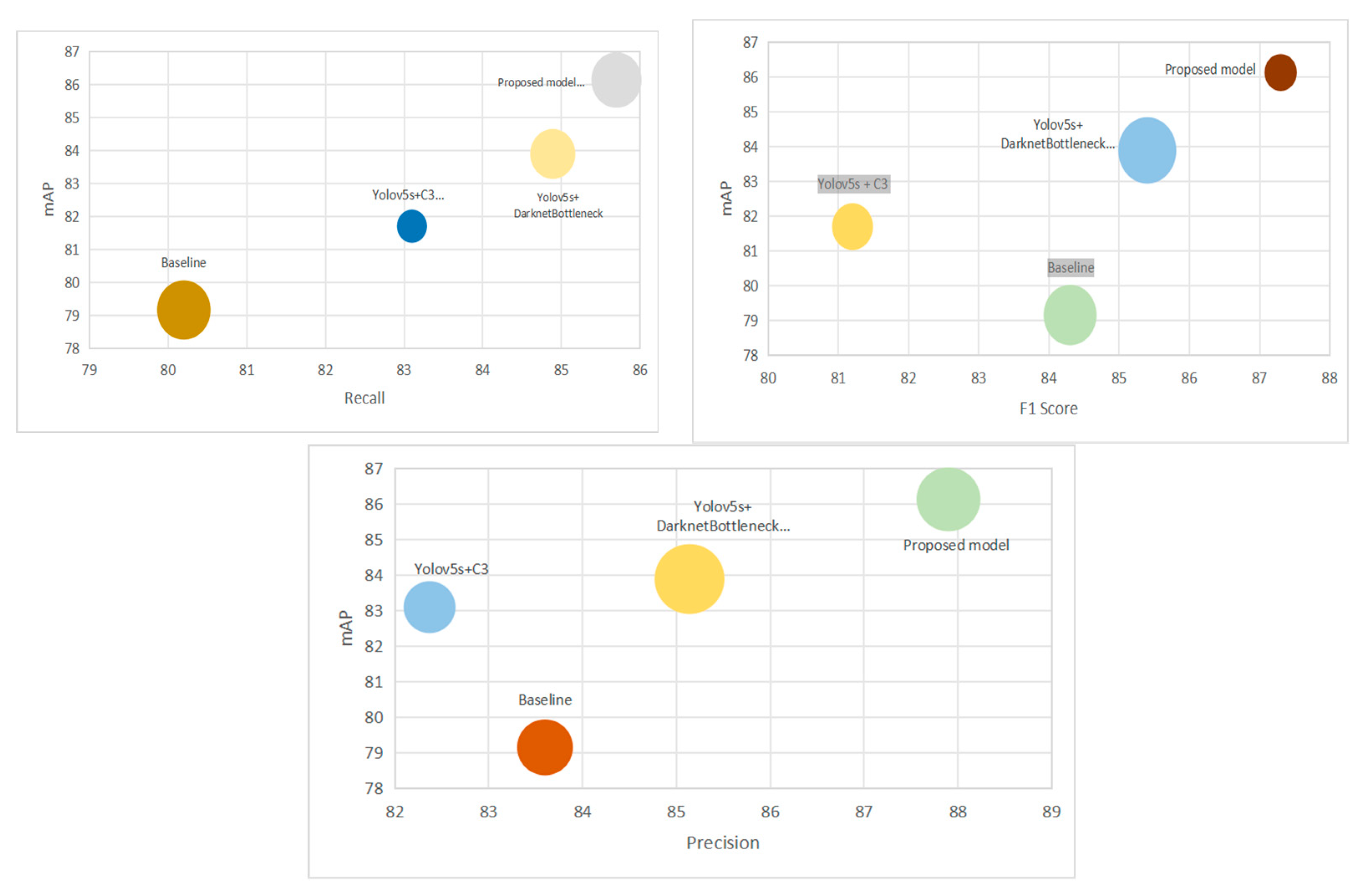

| Modification | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) |
|---|---|---|---|---|
| Baseline YOLOv5s | 83.6 | 80.2 | 79.16 | 84.3 |
| YOLOv5s + DarknetBottleneck | 85.14 | 84.89 | 83.89 | 85.4 |
| YOLOv5s + C3 | 82.37 | 83.1 | 81.7 | 81.2 |
| Proposed model + modified C3 | 87.9 | 85.7 | 86.13 | 87.3 |
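The contribution of each modification in the table above is easier to read as a difference from the baseline row. The following minimal sketch computes those differences in percentage points. The values are copied from the table, and the helper name `deltas` is illustrative.

```python
METRICS = ("precision", "recall", "mAP", "F1")

# Values (%) copied from the table above, in the order given by METRICS.
variants = {
    "Baseline YOLOv5s": (83.6, 80.2, 79.16, 84.3),
    "YOLOv5s + DarknetBottleneck": (85.14, 84.89, 83.89, 85.4),
    "YOLOv5s + C3": (82.37, 83.1, 81.7, 81.2),
    "Proposed model + modified C3": (87.9, 85.7, 86.13, 87.3),
}


def deltas(variant, baseline):
    """Per-metric change relative to the baseline, in percentage points."""
    return tuple(round(v - b, 2) for v, b in zip(variant, baseline))


baseline = variants["Baseline YOLOv5s"]
for name, values in variants.items():
    if name == "Baseline YOLOv5s":
        continue
    change = ", ".join(
        f"{metric} {delta:+.2f}" for metric, delta in zip(METRICS, deltas(values, baseline))
    )
    print(f"{name}: {change}")
```

Read this way, the full proposed configuration improves every metric over the baseline row, with the largest gain in mAP (about 7 percentage points).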

| Model | Parameters (M) | Inference Speed (FPS) | Training Time/Epoch |
|---|---|---|---|
| YOLOv5s (baseline) | 7.2 | 78 | 2 h 45 min |
| Proposed model | 7.5 | 74 | 3 h 10 min |

| Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) | Parameters (M) |
|---|---|---|---|---|---|
| SSD300 | 76.4 | 74.8 | 75.9 | 75.6 | 24.1 |
| Faster R-CNN (ResNet50) | 82.3 | 80.9 | 81.7 | 81.6 | 41.2 |
| YOLOv3 | 81.5 | 80.2 | 80.7 | 80.8 | 61.5 |
| YOLOv4 | 84.2 | 82.5 | 83.1 | 83.3 | 64 |
| YOLOv5n | 82.6 | 81.4 | 81.6 | 82 | 1.9 |
| YOLOv5m | 85.3 | 84 | 84.9 | 85.1 | 21.2 |
| YOLOv6n | 83.1 | 81.3 | 82.1 | 82.1 | 4.3 |
| YOLOv7-tiny | 85.1 | 82.7 | 83.4 | 83.8 | 6.2 |
| YOLOv8n | 86.7 | 83.5 | 85.1 | 85 | 6.2 |
| EfficientDet-D0 | 79.4 | 77.2 | 78 | 78.3 | 3.9 |
| CenterNet | 77.8 | 76.9 | 77.1 | 77.3 | 52.3 |
| RetinaNet | 81 | 79.6 | 80.2 | 80.3 | 34.6 |
| YOLO-LFD | 84.5 | 83.1 | 83.9 | 83.8 | 5.8 |
| LUFFD-YOLO | 85.9 | 84.7 | 85.6 | 85.2 | 6.1 |
| YOLO-Fire | 85.7 | 84.5 | 85 | 85.1 | 6 |
| Proposed model | 88.9 | 85.7 | 87.3 | 87.3 | 7.5 |

| Model | Parameters (M) | FLOPs (G) | Inference Speed (FPS) | Training Time/Epoch |
|---|---|---|---|---|
| YOLOv5s (baseline) | 7.2 | 16.5 | 78 | 2 h 45 min |
| YOLOv7-tiny | 6.2 | 17.8 | 70 | 3 h 00 min |
| YOLOv8n | 6.2 | 18.1 | 71 | 2 h 50 min |
| YOLO-Fire | 6.0 | 17.2 | 73 | 2 h 55 min |
| Proposed model | 7.5 | 17.0 | 74 | 3 h 10 min |
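The efficiency figures in the table above can be turned into per-frame latency and relative overhead with a few lines of arithmetic. The sketch below assumes that the FPS values were measured on one frame at a time, so that latency is simply the reciprocal of throughput. The helper names `latency_ms` and `percent_change` are illustrative.

```python
# (parameters in M, FLOPs in G, inference speed in FPS) copied from the table above.
models = {
    "YOLOv5s (baseline)": (7.2, 16.5, 78),
    "YOLOv7-tiny": (6.2, 17.8, 70),
    "YOLOv8n": (6.2, 18.1, 71),
    "YOLO-Fire": (6.0, 17.2, 73),
    "Proposed model": (7.5, 17.0, 74),
}


def latency_ms(fps: float) -> float:
    """Per-frame latency in milliseconds, assuming single-frame inference."""
    return 1000.0 / fps


def percent_change(new: float, old: float) -> float:
    """Relative change of `new` with respect to `old`, in percent."""
    return 100.0 * (new - old) / old


for name, (_, _, fps) in models.items():
    print(f"{name}: {latency_ms(fps):.2f} ms per frame")

base_params, base_flops, base_fps = models["YOLOv5s (baseline)"]
params, flops, fps = models["Proposed model"]
print(f"parameters: {percent_change(params, base_params):+.1f}%")
print(f"FLOPs:      {percent_change(flops, base_flops):+.1f}%")
print(f"FPS:        {percent_change(fps, base_fps):+.1f}%")
```

Under that assumption, the proposed model adds roughly 0.7 ms per frame over the YOLOv5s baseline, for about 4% more parameters and 3% more FLOPs.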

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Saydirasulovich, S.N.; Umirzakova, S.; Nabijon Azamatovich, A.; Mukhamadiev, S.; Temirov, Z.; Abdusalomov, A.; Cho, Y.I. Lightweight YOLOv5s Model for Early Detection of Agricultural Fires. Fire 2025, 8, 187. https://doi.org/10.3390/fire8050187
