AI-Driven UAV Surveillance for Agricultural Fire Safety

Abstract

1. Introduction

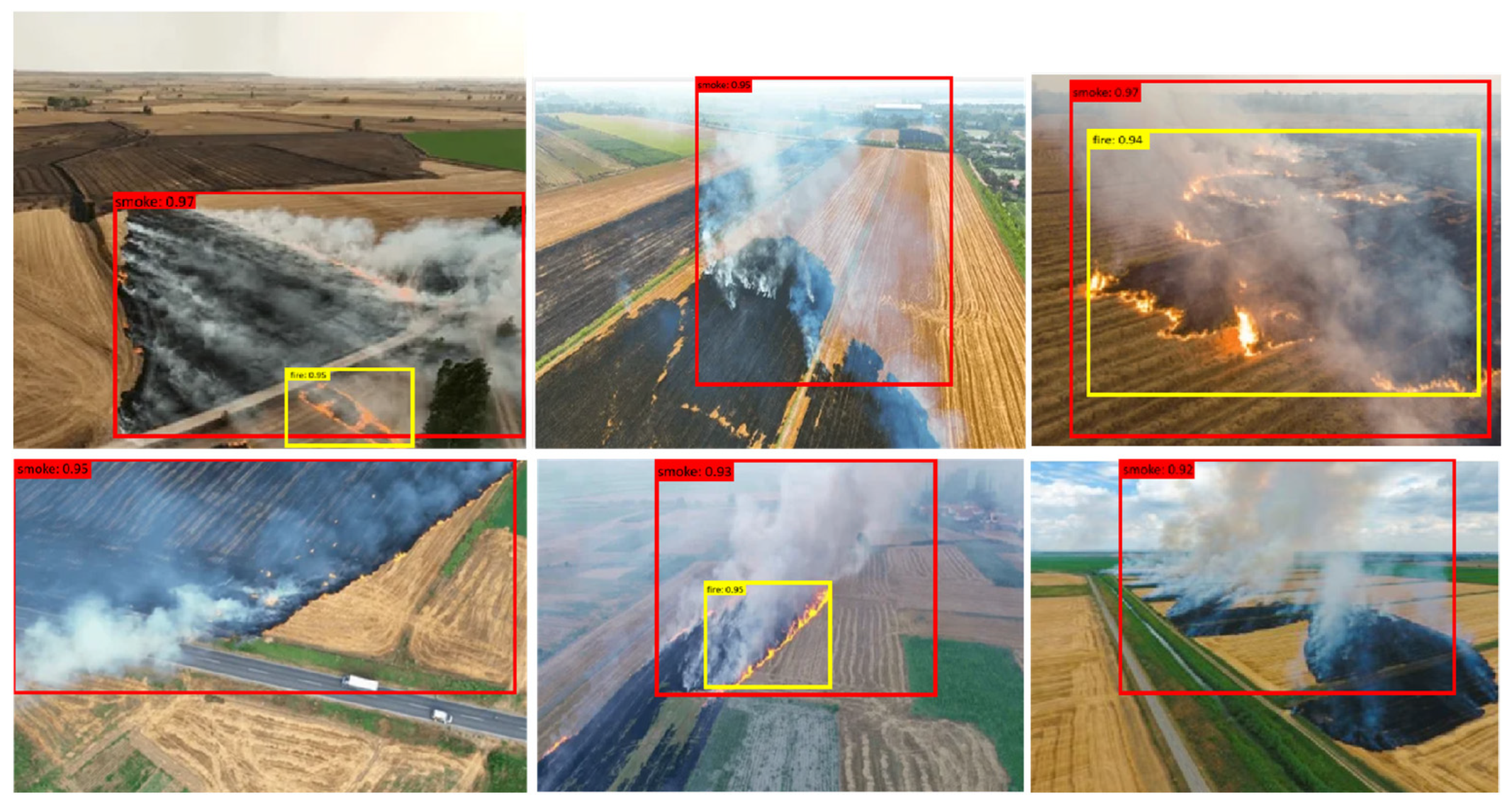

- Integrating SSD with a MobileNetV2 backbone significantly improves fire-detection accuracy and reduces false positives.

- The proposed model is optimized for deployment on edge devices, significantly reducing computational demands without compromising detection performance.

- Enhanced detection speed of up to 45 frames per second, which makes the model well suited to UAV-based agricultural fire monitoring.

- The application of advanced augmentation and preprocessing techniques to enhance model generalization and performance in diverse fire scenarios.

- The development of a detection framework adaptable to varying agricultural fire scenarios, including different environmental conditions and fire intensities.

- Contribution towards sustainable fire-management practices through AI-driven monitoring and early warning systems, thereby aiding in the reduction of fire-related economic and environmental damages.

2. Related Works

3. Methodology

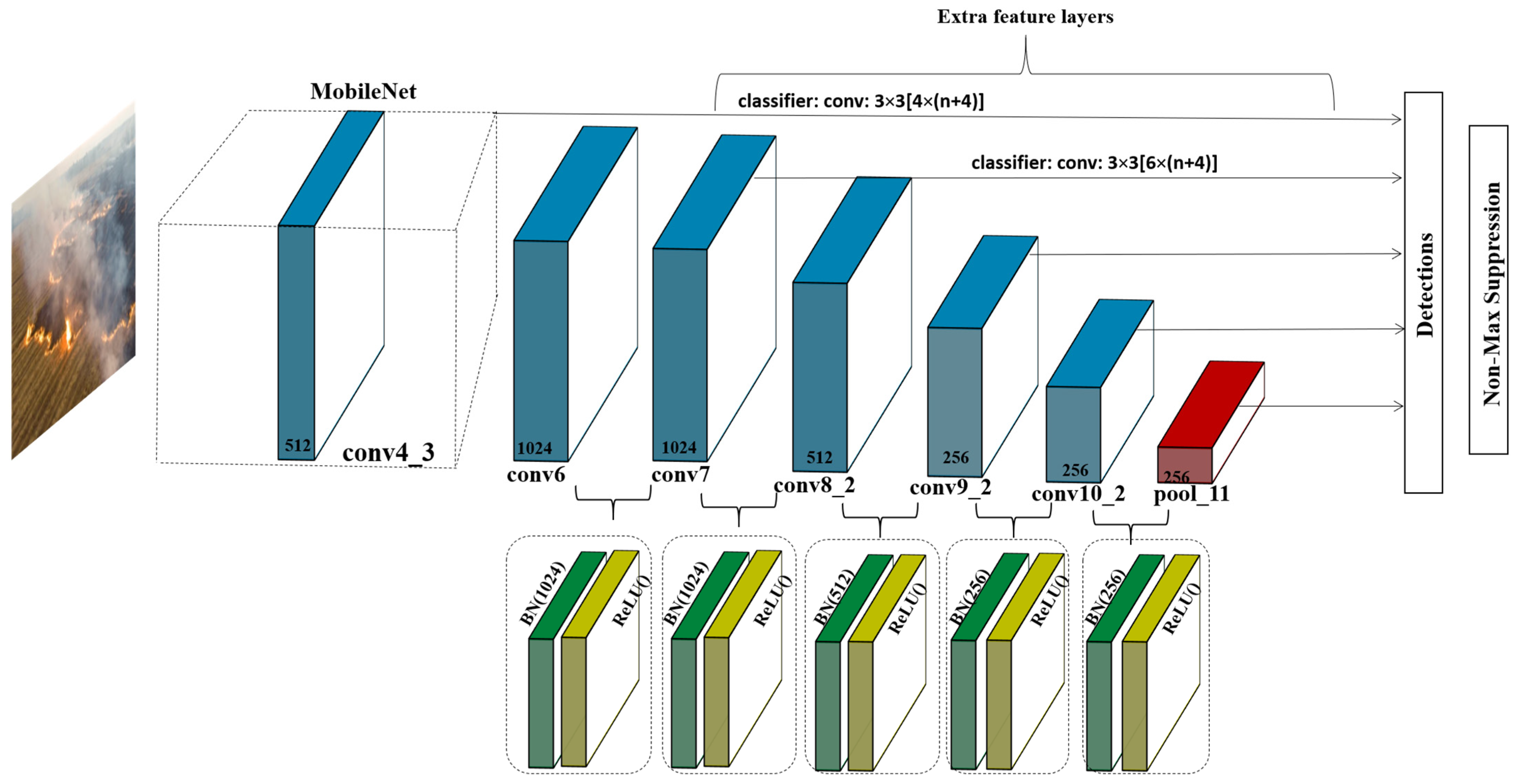

3.1. Single-Shot MultiBox Detector

3.2. Proposed Method

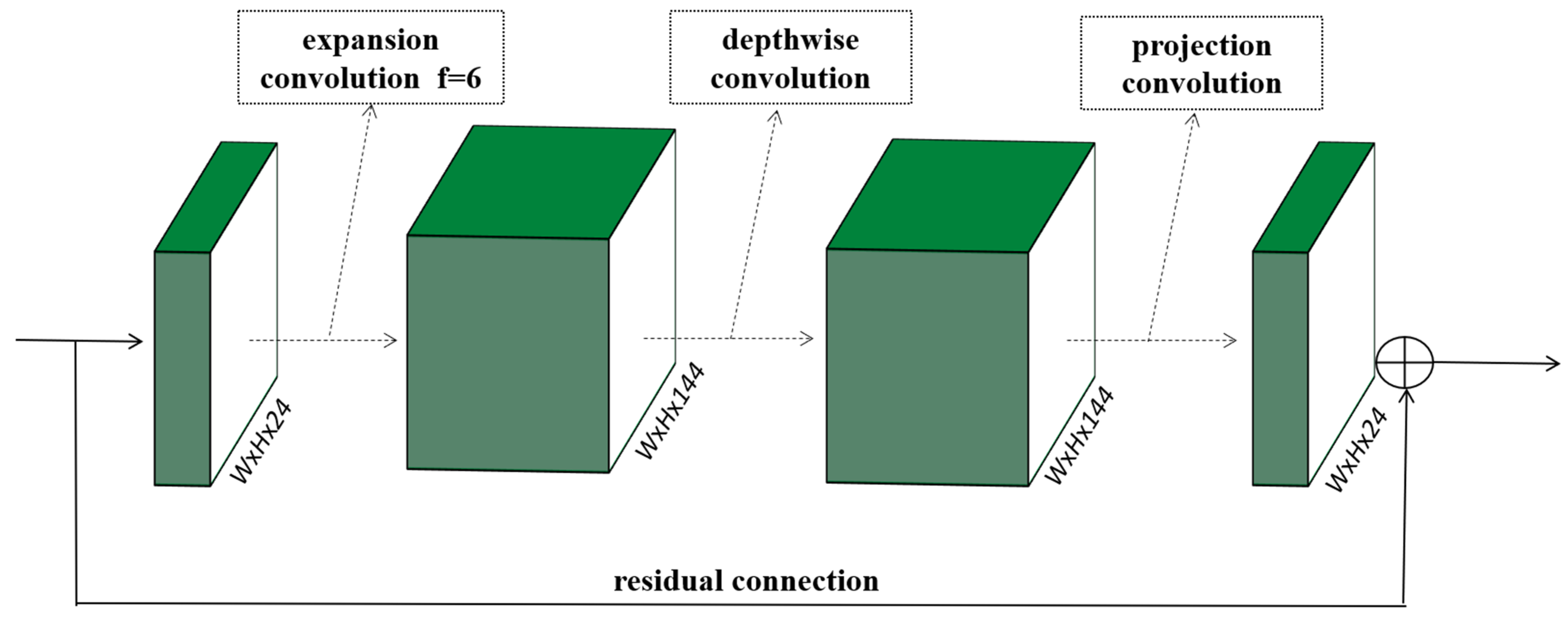

- Expansion layer: A 1 × 1 convolution that expands the number of channels of the input feature map, increasing the dimensionality available to the depthwise convolution.

- Depthwise convolution: A 3 × 3 convolution applied separately to each channel. This layer is computationally efficient and allows for the extraction of rich spatial features.

- Projection layer: Another 1 × 1 convolution that projects the expanded channel dimension back to a lower dimension. This layer uses linear activation, unlike the other layers, to preserve the representational capacity without nonlinear distortions.
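The cost of the three layers described above can be estimated with a short calculation. The sketch below counts multiply-accumulate operations (MACs) for one block, assuming stride 1, "same" padding, and an expansion ratio of 6; the function names, the ratio, and the example feature-map size are illustrative assumptions, not values taken from this paper.

```python
def conv_macs(h, w, c_in, c_out, k, groups=1):
    """MACs for a k x k convolution over an h x w map (stride 1, 'same' padding)."""
    return h * w * (c_in // groups) * c_out * k * k


def inverted_residual_macs(h, w, c_in, c_out, expand_ratio=6):
    """MACs for expansion (1x1) -> depthwise (3x3) -> projection (1x1).

    expand_ratio=6 is an assumed default, not a value reported in the text.
    """
    c_mid = c_in * expand_ratio
    expansion = conv_macs(h, w, c_in, c_mid, k=1)
    # Depthwise: one 3x3 filter per channel, so groups equals the channel count.
    depthwise = conv_macs(h, w, c_mid, c_mid, k=3, groups=c_mid)
    projection = conv_macs(h, w, c_mid, c_out, k=1)
    return {
        "expansion": expansion,
        "depthwise": depthwise,
        "projection": projection,
        "total": expansion + depthwise + projection,
    }


if __name__ == "__main__":
    # Hypothetical 19 x 19 feature map with 64 input and output channels.
    costs = inverted_residual_macs(19, 19, 64, 64)
    dense = conv_macs(19, 19, 64 * 6, 64 * 6, k=3)
    print(costs)
    print("dense 3x3 at expanded width:", dense)
```

Under these assumptions the depthwise layer costs a factor of `c_mid` less than a dense 3 × 3 convolution at the same width, which is why the block can afford to expand the channel count before filtering.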

4. The Experiment and Results

4.1. Dataset

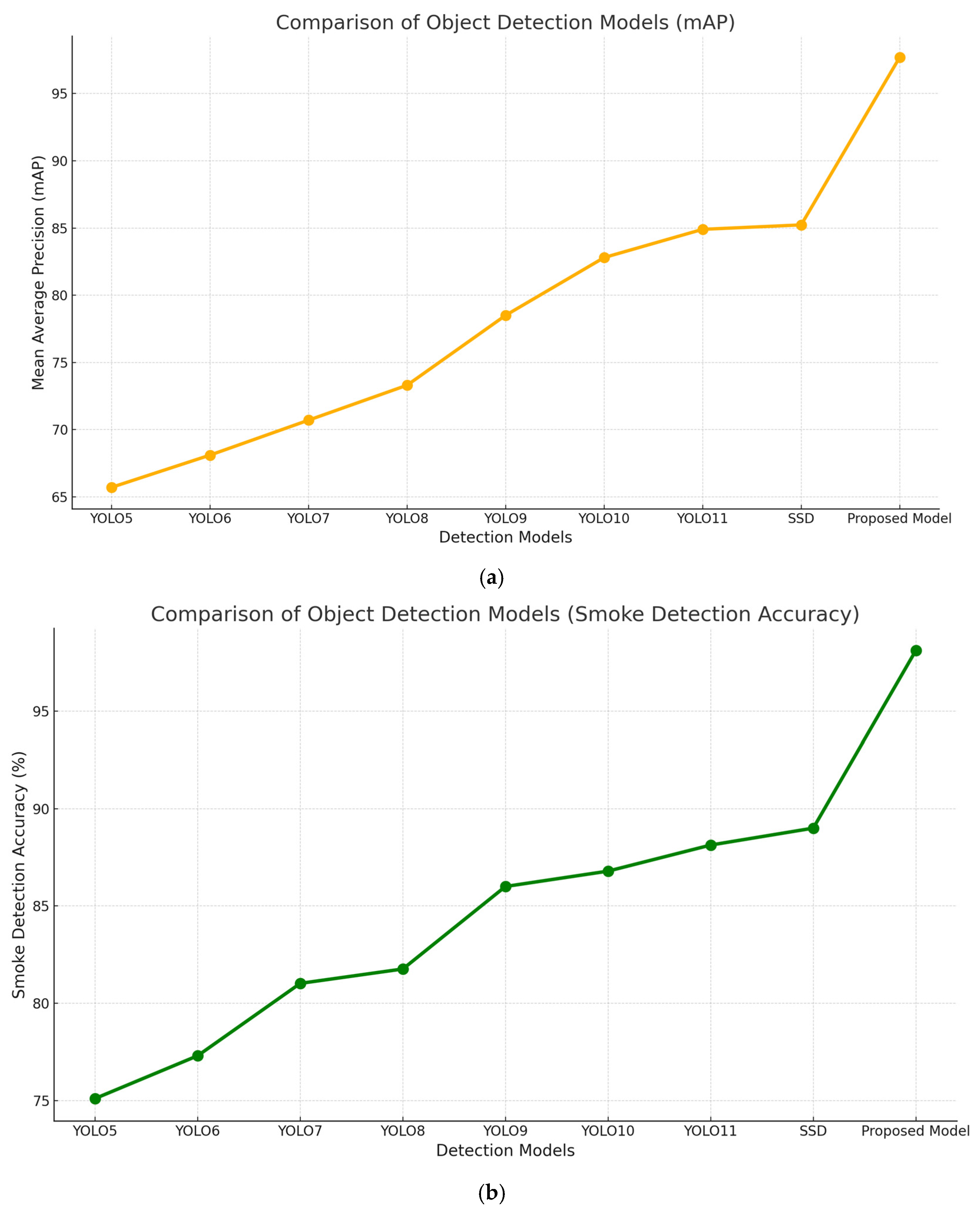

4.2. Comparison Results

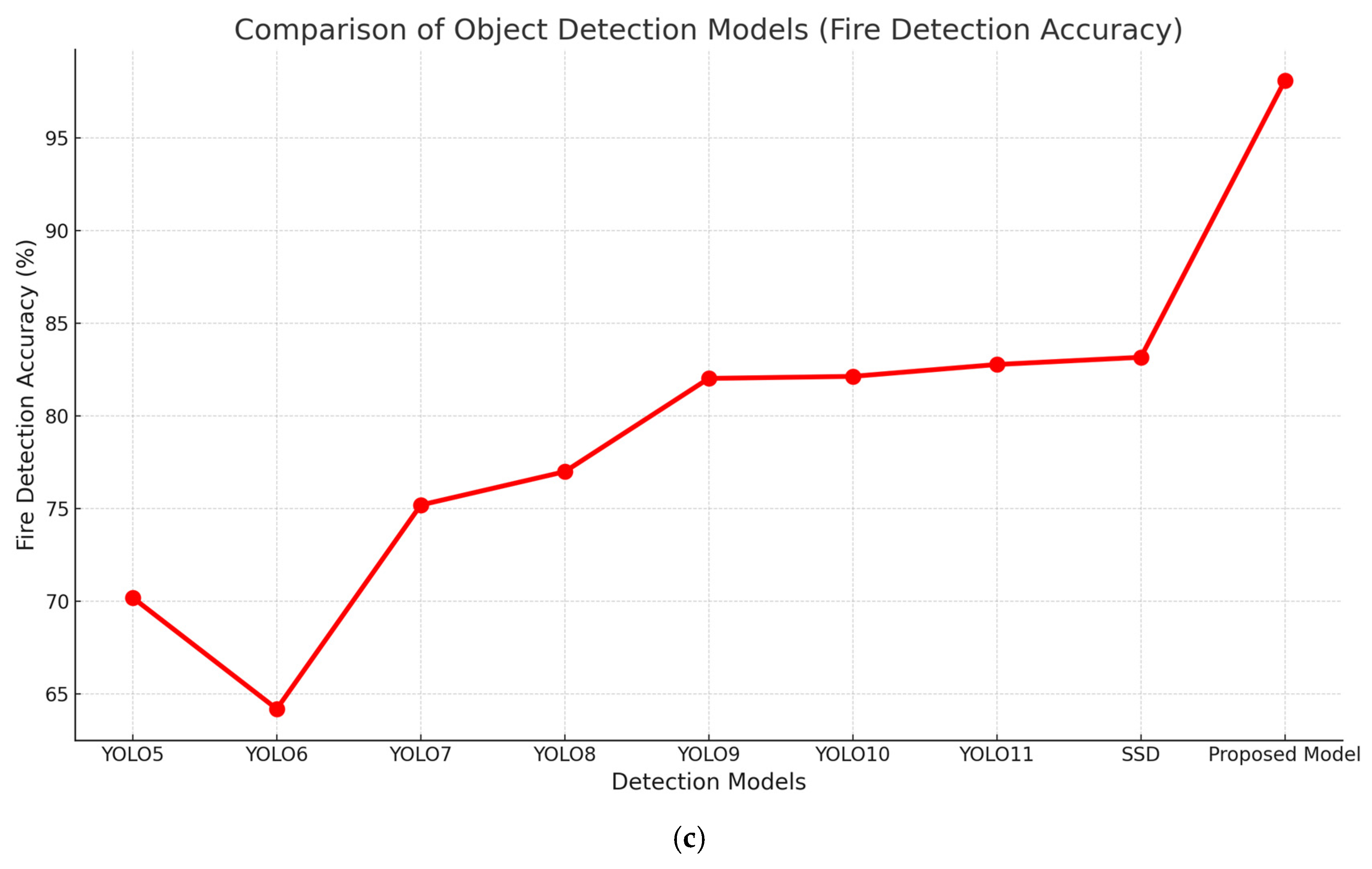

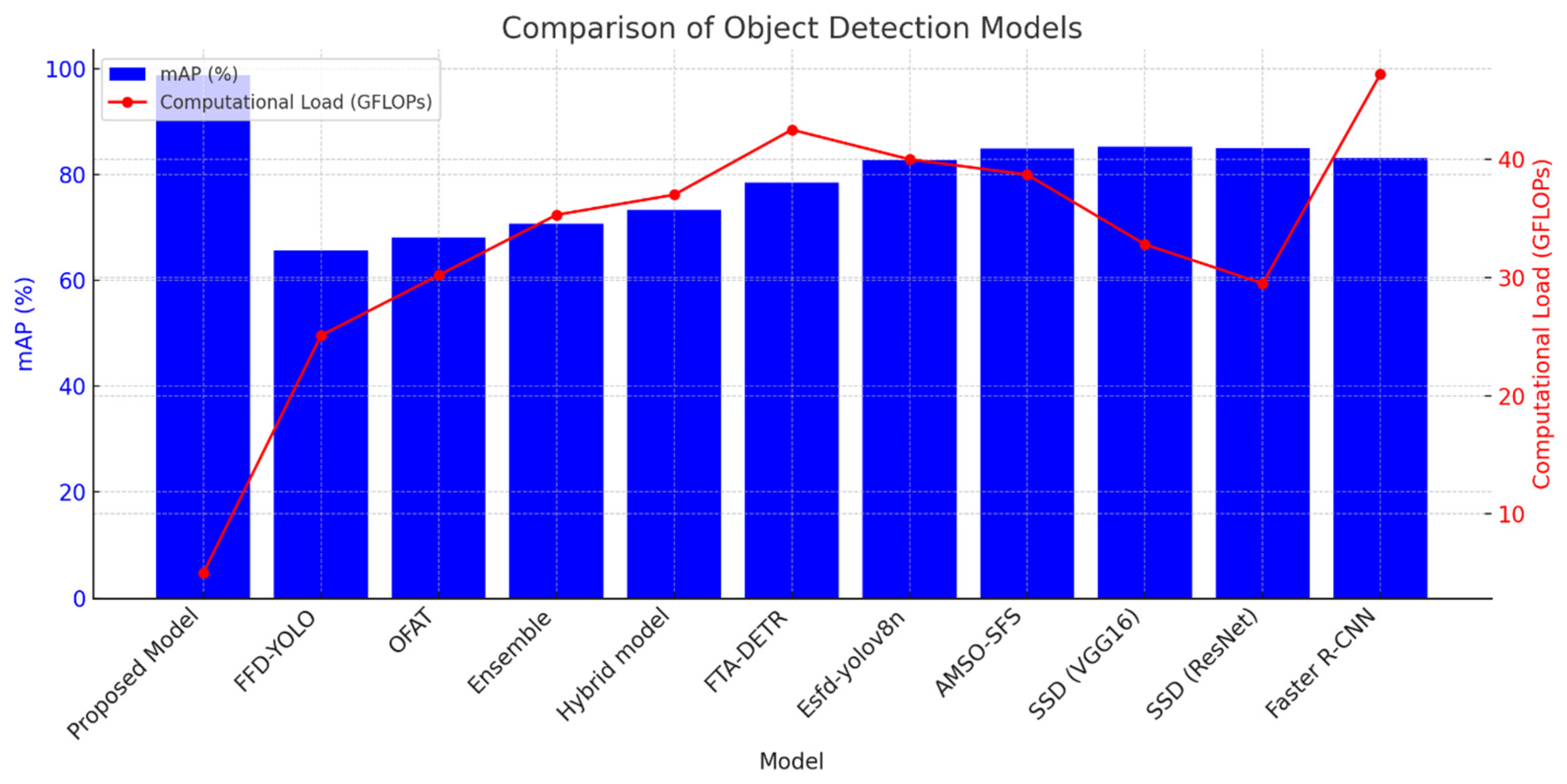

4.3. Comparison with State-of-the-Art Models

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Morchid, A.; Oughannou, Z.; El Alami, R.; Qjidaa, H.; Jamil, M.O.; Khalid, H.M. Integrated internet of things (IoT) solutions for early fire detection in smart agriculture. Results Eng. 2024, 24, 103392.

2. Maity, T.; Bhawani, A.N.; Samanta, J.; Saha, P.; Majumdar, S.; Srivastava, G. MLSFDD: Machine Learning-Based Smart Fire Detection Device for Precision Agriculture. IEEE Sens. J. 2025, 25, 8921–8928.

3. Li, L.; Awada, T.; Shi, Y.; Jin, V.L.; Kaiser, M. Global Greenhouse Gas Emissions from Agriculture: Pathways to Sustainable Reductions. Glob. Change Biol. 2025, 31, e70015.

4. Makhmudov, F.; Umirzakova, S.; Kutlimuratov, A.; Abdusalomov, A.; Cho, Y.-I. Advanced Object Detection for Maritime Fire Safety. Fire 2024, 7, 430.

5. Patrick, M.; Mass, C. Weather Associated with Rapid-Growth California Wildfires. Weather Forecast. 2025, 40, 347–366.

6. Yang, S.; Huang, Q.; Yu, M. Advancements in remote sensing for active fire detection: A review of datasets and methods. Sci. Total Environ. 2024, 943, 173273.

7. Ramos, L.; Casas, E.; Bendek, E.; Romero, C.; Rivas-Echeverría, F. Hyperparameter optimization of YOLOv8 for smoke and wildfire detection: Implications for agricultural and environmental safety. Artif. Intell. Agric. 2024, 12, 109–126.

8. Duong, O.; Crew, J.; Rea, J.; Haghani, S.; Striki, M. A Multi-Functional Drone for Agriculture Maintenance and Monitoring in Small-Scale Farming. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–4.

9. Xie, X.; Zhang, Y.; Liang, R.; Chen, W.; Zhang, P.; Wang, X.; Zhou, Y.; Cheng, Y.; Liu, J. Wintertime Heavy Haze Episodes in Northeast China Driven by Agricultural Fire Emissions. Environ. Sci. Technol. Lett. 2024, 11, 150–157.

10. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

11. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

12. Sharma, A.; Singh, K. UAV-based framework for effective data analysis of forest fire detection using 5G networks: An effective approach towards smart cities solutions. Int. J. Commun. Syst. 2025, 38, e4826.

13. Yan, X.; Wang, W.; Lu, F.; Fan, H.; Wu, B.; Yu, J. GFRF R-CNN: Object Detection Algorithm for Transmission Lines. Comput. Mater. Contin. 2025, 82, 1439–1458.

14. Cheknane, M.; Bendouma, T.; Boudouh, S.S. Advancing fire detection: Two-stage deep learning with hybrid feature extraction using faster R-CNN approach. Signal Image Video Process. 2024, 18, 1–8.

15. Wang, Z.; Xu, L.; Chen, Z. FFD-YOLO: A modified YOLOv8 architecture for forest fire detection. Signal Image Video Process. 2025, 19, 265.

16. Wen, H.; Hu, X.; Zhong, P. Detecting rice straw burning based on infrared and visible information fusion with UAV remote sensing. Comput. Electron. Agric. 2024, 222, 109078.

17. Verma, P.; Bakthula, R. Empowering fire and smoke detection in smart monitoring through deep learning fusion. Int. J. Inf. Technol. 2024, 16, 345–352.

18. Yan, X.; Chen, R. Application Strategy of Unmanned Aerial Vehicle Swarms in Forest Fire Detection Based on the Fusion of Particle Swarm Optimization and Artificial Bee Colony Algorithm. Appl. Sci. 2024, 14, 4937.

19. Gupta, H.; Nihalani, N. An efficient fire detection system based on deep neural network for real-time applications. Signal Image Video Process. 2024, 18, 6251–6264.

20. Zheng, H.; Wang, G.; Xiao, D.; Liu, H.; Hu, X. FTA-DETR: An efficient and precise fire detection framework based on an end-to-end architecture applicable to embedded platforms. Expert Syst. Appl. 2024, 248, 123394.

21. Mamadaliev, D.; Touko, L.M.; Kim, J.H.; Kim, S.C. ESFD-YOLOv8n: Early smoke and fire detection method based on an improved YOLOv8n model. Fire 2024, 7, 303.

22. Abdusalomov, A.; Umirzakova, S.; Bakhtiyor Shukhratovich, M.; Mukhiddinov, M.; Kakhorov, A.; Buriboev, A.; Jeon, H.S. Drone-Based Wildfire Detection with Multi-Sensor Integration. Remote Sens. 2024, 16, 4651.

| Reference | Study Focus | Key Findings |
|---|---|---|
| [12] | Intersection of AI and environmental monitoring for fire detection | AI-driven solutions enhance fire detection in agricultural settings |
| [13] | Revolution of computer vision through YOLO, Faster R-CNN, and SSD | Modern object detection models improve fire-detection accuracy |
| [14] | Integration of Faster R-CNN with UAV for early forest fire detection | Faster R-CNN with UAV achieved notable success in early detection |
| [15] | Modification of YOLO for fire and smoke detection, reducing false positives | YOLO-based detection significantly reduces false positives |
| [16] | Fusion of infrared and visible imagery to detect rice-straw burning | Infrared-visual fusion overcomes smoke occlusion and enhances detection |
| [7] | Evaluation of YOLOv8 for wildfire detection using hyperparameter tuning | Fine-tuned YOLOv8l with OFAT method improves wildfire detection |
| [9] | Sparse Vision Transformer (sparse-VIT) for optimized fire detection | Sparse attention mechanisms improve spectral efficiency and accuracy |
| [17] | Deep Surveillance Unit (DSU) combining InceptionV3, MobileNetV2, and ResNet50v2 | Deep learning fusion enhances fire and smoke classification performance |
| [18] | Fusion of PSO and ABC algorithms for UAV-based fire detection and suppression | UAV swarms optimize fire-detection routes and suppression efficiency |
| [19] | Hybrid model combining ResNet152V2 and InceptionV3 for fire detection | Hybrid deep feature extraction improves accuracy but requires high computation |
| [20] | FTA-DETR model using Deformable-DETR and DDPM for robust fire detection | Deformable-DETR with DDPM enhances detection but needs large datasets |
| [21] | ESFD-YOLOv8n: Optimized YOLOv8 with Wise-IoU v3 and residual blocks | YOLOv8n optimizations improve accuracy but require higher processing power |
| [22] | AMSO-SFS: Adaptive multi-sensor fire-detection model with SFS-Conv module | Multi-sensor model enhances detection but limits real-time UAV deployment |

| Section | Description | Key Components | Limitations of Baseline Model |
|---|---|---|---|
| 3.1 SSD | SSD represents a significant advancement in object detection, capable of detecting multiple objects within a single image in real-time. It is built upon a foundational convolutional network for feature extraction, employing multiple convolutional layers that decrease in size to handle objects of various sizes. | Modified pre-trained networks like VGG16 or ResNet, convolutional layers, predefined bounding boxes (‘anchors’), Non-Maximum Suppression (NMS) technique, and the joint optimization of localization and classification tasks. | High computational load unsuitable for edge devices, difficulty handling scale variability, less efficient for real-time applications on devices with limited processing capabilities. |
| 3.2 The proposed method | Our method enhances the SSD by integrating MobileNetV2 as its backbone to reduce computational demands, essential for deploying drones equipped with real-time fire-detection capabilities. Includes batch normalization and activation functions to improve efficiency. | MobileNetV2 architecture, streamlined stochastic gradient descent, linear bottlenecks, inverted residual structure, depthwise separable convolutions, batch normalization, and ReLU activation functions. | Proposed model integrates MobileNetV2 to reduce computational demand and improve scale detection; optimized for real-time applications on mobile and edge devices using advanced architectural modifications. |
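The table above lists Non-Maximum Suppression among SSD's key components. As a rough illustration of that post-processing step, the sketch below implements greedy NMS over corner-format boxes in plain Python. The box format `(x1, y1, x2, y2)`, the function names, and the 0.5 IoU threshold are assumptions made for the example; the paper does not state the settings it used.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first.

    iou_threshold=0.5 is an assumed value, not one reported in the text.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Two overlapping detections of one flame and one separate detection.
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))
```

In a detector this would typically be run per class (for example, once for fire and once for smoke) on the boxes decoded from the anchors.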

| Aspect | Description |

|---|---|

| Dataset Source | Global and local agricultural fire incidents, YouTube sources |

| Types of Data | Imagery and video content depicting agricultural fires |

| Data-Collection Methods | Curated dataset with annotations from various real-world sources |

| Standardization Process | Uniform resolution standardization for computational efficiency |

| Image Resolution | All images resized to 300 × 300 pixels |

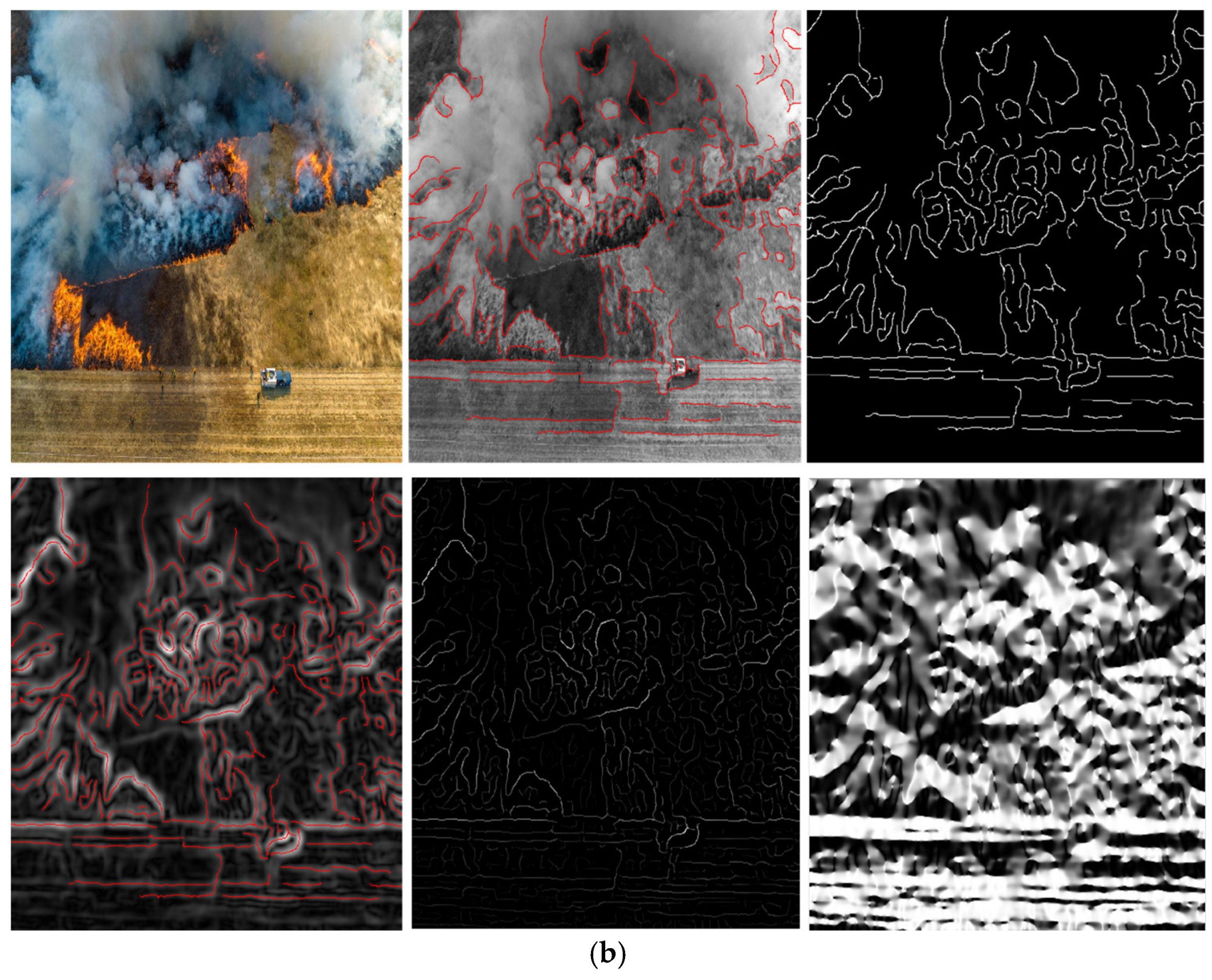

| Preprocessing Techniques | Normalization to adjust pixel intensity values across varying lighting conditions |

| Augmentation Methods | Random rotations, mirroring, scaling, cropping, and color adjustments |

| Objective of Data Processing | Improve model robustness, reduce overfitting, and enhance real-world detection accuracy |
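Three of the steps listed in the dataset table (resizing to 300 × 300, intensity normalization, and mirroring) also affect the bounding-box annotations, which is easy to get wrong. The sketch below shows one possible way to keep pixels and boxes consistent, using plain nested lists for a single-channel image. All function names, the `(x1, y1, x2, y2)` box format, and the division by 255 are assumptions for illustration; the paper does not describe its exact pipeline.

```python
TARGET_SIZE = 300  # resolution stated in the dataset table


def rescale_boxes(boxes, width, height, target=TARGET_SIZE):
    """Map (x1, y1, x2, y2) boxes from a width x height image to target x target."""
    sx, sy = target / width, target / height
    return [(x1 * sx, y1 * sy, x2 * sx, y2 * sy) for (x1, y1, x2, y2) in boxes]


def normalize(image):
    """Scale 8-bit pixel values (0-255) to floats in [0, 1] (assumed scheme)."""
    return [[px / 255.0 for px in row] for row in image]


def mirror(image, boxes):
    """Flip an image left-right and update its boxes to match."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # A box spanning [x1, x2) lands on [width - x2, width - x1) after the flip.
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


if __name__ == "__main__":
    image = [[0, 255, 128, 64]]   # hypothetical 1 x 4 grayscale image
    boxes = [(0, 0, 1, 1)]        # box over the left-most pixel
    flipped, flipped_boxes = mirror(normalize(image), boxes)
    print(flipped, flipped_boxes)
    print(rescale_boxes([(0, 0, 600, 300)], width=600, height=300))
```

Rotation, scaling, and cropping need the same care: any geometric transform applied to the image has to be applied to the annotations as well, while color adjustments leave the boxes unchanged.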

| Model | Data | mAP (%) | Smoke (%) | Fire (%) |
|---|---|---|---|---|
| YOLO5 | Custom | 65.7 | 75.1 | 70.2 |
| YOLO6 | Custom | 68.1 | 77.3 | 64.2 |
| YOLO7 | Custom | 70.7 | 81.02 | 75.2 |
| YOLO8 | Custom | 73.3 | 81.76 | 77.0 |
| YOLO9 | Custom | 78.5 | 86.0 | 82.03 |
| YOLO10 | Custom | 82.8 | 86.79 | 82.14 |
| YOLO11 | Custom | 84.9 | 88.13 | 82.78 |
| SSD | Custom | 85.23 | 89.00 | 83.17 |
| The proposed model | Custom | 97.7 | 98.12 | 98.10 |

| Model | mAP (%) | Detection Speed (fps) | Computational Load (GFLOPs) | Environmental Adaptability |
|---|---|---|---|---|
| Proposed Model | 98.7 | 45 | 5.0 | High |
| FFD-YOLO [15] | 65.7 | 60 | 25.1 | Moderate |
| OFAT [7] | 68.1 | 58 | 30.2 | Moderate |
| Ensemble [17] | 70.7 | 55 | 35.3 | Moderate |
| Hybrid model [19] | 73.3 | 53 | 37.0 | Moderate |
| FTA-DETR [20] | 78.5 | 50 | 42.5 | Good |
| ESFD-YOLOv8n [21] | 82.8 | 47 | 40.0 | Good |
| AMSO-SFS [22] | 84.9 | 45 | 38.7 | Good |
| SSD (VGG16) | 85.2 | 35 | 32.8 | Good |
| SSD (ResNet) | 85.0 | 32 | 29.5 | Good |
| Faster R-CNN | 83.1 | 25 | 47.2 | High |
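The speed and compute columns above are easier to compare once converted to per-frame latency and relative cost. The sketch below does that arithmetic for a few rows copied from the table; the helper names are illustrative, and the calculation assumes the reported fps and GFLOPs were measured under comparable conditions, which the table itself does not confirm.

```python
def frame_latency_ms(fps):
    """Average time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def compute_reduction(baseline_gflops, model_gflops):
    """How many times fewer GFLOPs the model needs than the baseline."""
    return baseline_gflops / model_gflops


# (fps, GFLOPs) pairs copied from the comparison table.
ROWS = {
    "Proposed Model": (45, 5.0),
    "SSD (VGG16)": (35, 32.8),
    "Faster R-CNN": (25, 47.2),
}

if __name__ == "__main__":
    proposed_gflops = ROWS["Proposed Model"][1]
    for name, (fps, gflops) in ROWS.items():
        latency = frame_latency_ms(fps)
        ratio = compute_reduction(gflops, proposed_gflops)
        print(f"{name}: {latency:.1f} ms/frame, {ratio:.2f}x the proposed model's GFLOPs")
```

At 45 fps the proposed model has roughly 22 ms per frame, and its 5.0 GFLOPs is about 6.6 times lower than the 32.8 GFLOPs listed for SSD (VGG16).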

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdusalomov, A.; Umirzakova, S.; Tashev, K.; Egamberdiev, N.; Belalova, G.; Meliboev, A.; Atadjanov, I.; Temirov, Z.; Cho, Y.I. AI-Driven UAV Surveillance for Agricultural Fire Safety. Fire 2025, 8, 142. https://doi.org/10.3390/fire8040142