YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images

Abstract

1. Introduction

- The recent YOLO models are adopted and implemented in detecting and localizing smoke and wildfires using ground and aerial images, thereby reducing false detection and improving the performance of deep learning-based smoke and fire detection methods;

- The reliability of YOLO models is shown using two public datasets, D-Fire and WSDY (WildFire Smoke Dataset YOLO). Extensive analysis confirms their performance over baseline fire detection methods;

- YOLO models showed a robust potential to address challenging limitations, including background complexity; detecting small smoke and fire zones; varying smoke and fire features regarding intensity, flow pattern, shape, and colors; visual similarity between fire, lighting, and sun glare; and the visual resemblances among smoke, fog, and clouds;

- YOLO models are introduced in this study, achieving fast detection times, which are useful for real-time fire detection and early fire ignition. This shows the reliability of YOLO models when used on wildland fire monitoring systems. They can also help enhancing wildfire intervention strategies and reducing fire spread and the area of burnt forest, thus providing effective protection for ecosystem and human communities.

2. Related Works

| Ref. | Methodology | Dataset | Object Detected | mAP (%) |

|---|---|---|---|---|

| [28] | Modified YOLOv5n Modified YOLOv5x | Private dataset: 2462 images | Fire Smoke | 83.20 90.50 |

| [29] | DFFT | Private dataset: 5900 fire images Fire smoke dataset: 23,730 images | Fire Smoke | 87.40 81.12 |

| [32] | YOLOv5s and YOLOv5l | Private dataset: 937 images | Fire Smoke | 76.00 |

| [33] | Modified YOLOv7 | Private dataset: 9005 images (6605 smoke images and 2400 non-smoke images) | Smoke | 93.70 |

| [30] | Deformable DETR | Private dataset:10,250 forest smoke images | Smoke | 88.40 |

| [34] | Improved YOLOv5s | Private dataset: 450 forest fire smoke images | Fire Smoke | 58.80 |

| [35] | ForestFireDetector | Private dataset: 3966 images of forest fires | Smoke | 90.20 |

| [36] | LMDFS | Private dataset: 5311 aerial smoke images | Smoke | 80.20 |

| [37] | Modified YOLOv3 | Private dataset: 30,411 images | Fire Smoke | 95.00 |

| [38] | AERNet | SF-dataset: 9246 fire and smoke images; FIRESENSE dataset: 49 videos of smoke and fire | Fire Smoke | 69.42 |

| [39] | Modified YOLOv3 | Private dataset:10,029 images of smoke and fire | Fire Smoke | 73.30 |

| [40] | Modified YOLOv7 | Private dataset: 14,904 images | Fire Smoke | 87.90 |

| [41] | Modified YOLOv7 | Private dataset: 6500 aerial images | Smoke | 86.40 |

| [42] | Pruned YOLOv4 | D-Fire: 21,527 images of smoke and fire | Fire Smoke | 73.98 |

| [43] | YOLOv5 | D-Fire: 21,527 images of smoke and fire | Fire Smoke | 79.46 |

| [44] | Optimized YOLOv5 | Public dataset: 6000 aerial images | Smoke | 73.60 |

3. Materials and Methods

3.1. Proposed Models

3.1.1. YOLOv5

3.1.2. YOLOv7

3.1.3. YOLOv8

3.1.4. YOLOv5u

3.2. Wildland Fire Challenges

3.3. Dataset

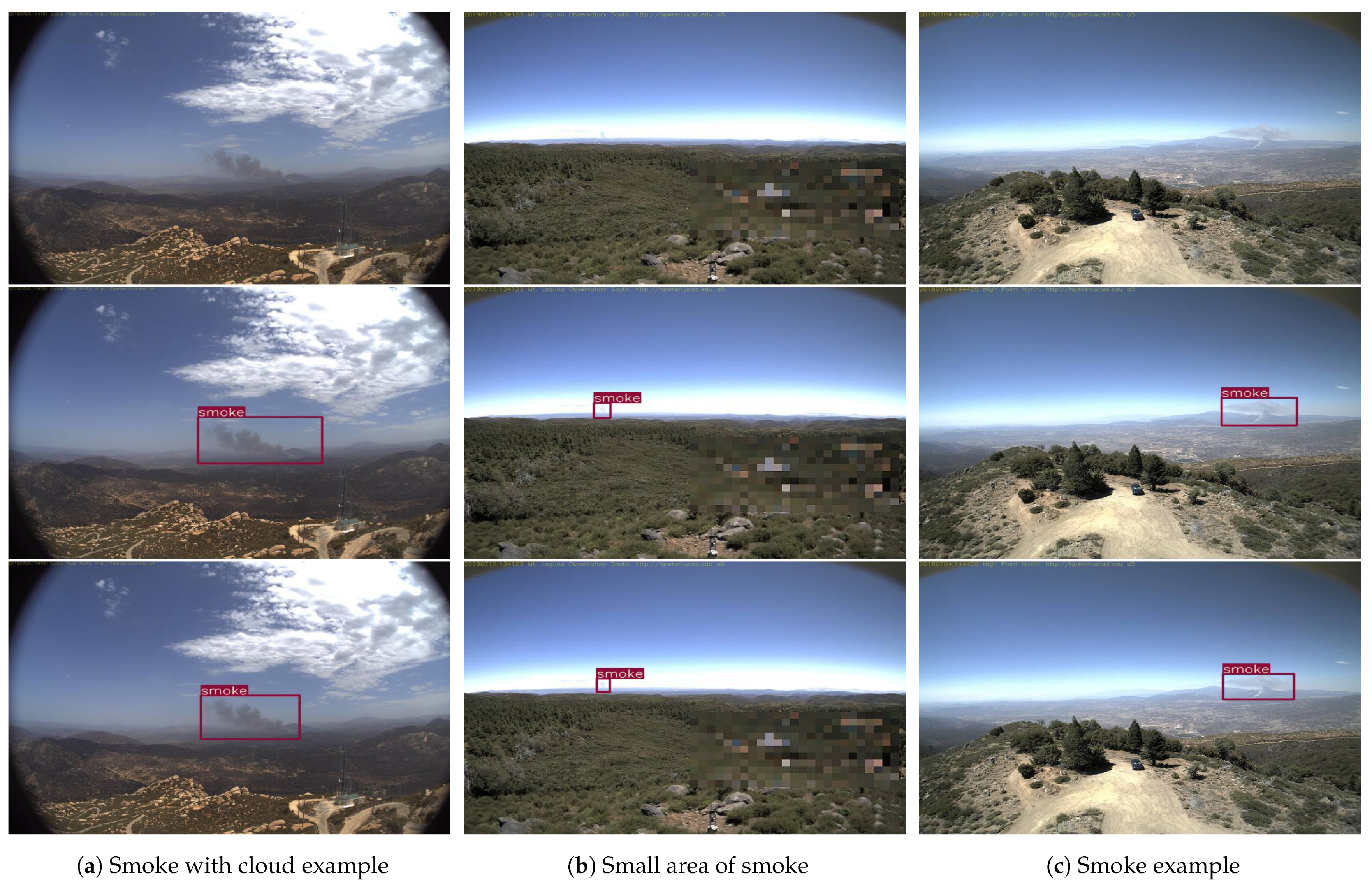

- The D-Fire dataset was introduced by Venâncio et al. [42,51] for the detection of smoke and fires. It includes aerial and ground images. It consists of a total of 21,527 images, of which 1164 images contain only fires, 5867 are smoke images, and 4658 are smoke and fire images, while the remaining 9838 images are non-fire and non-smoke images. It presents smoke and fires with different shapes, textures, intensities, sizes, and colors. The D-Fire dataset also includes images with challenging conditions, including scenarios with insects obstructing the camera, raindrops scattered, lighting, fog, clouds, and sun glare. These variations in the environmental factors provide diversity to the dataset and enhance its representation of the real challenges faced when detecting smoke and fires. Figure 1 presents some examples of the D-Fire dataset.

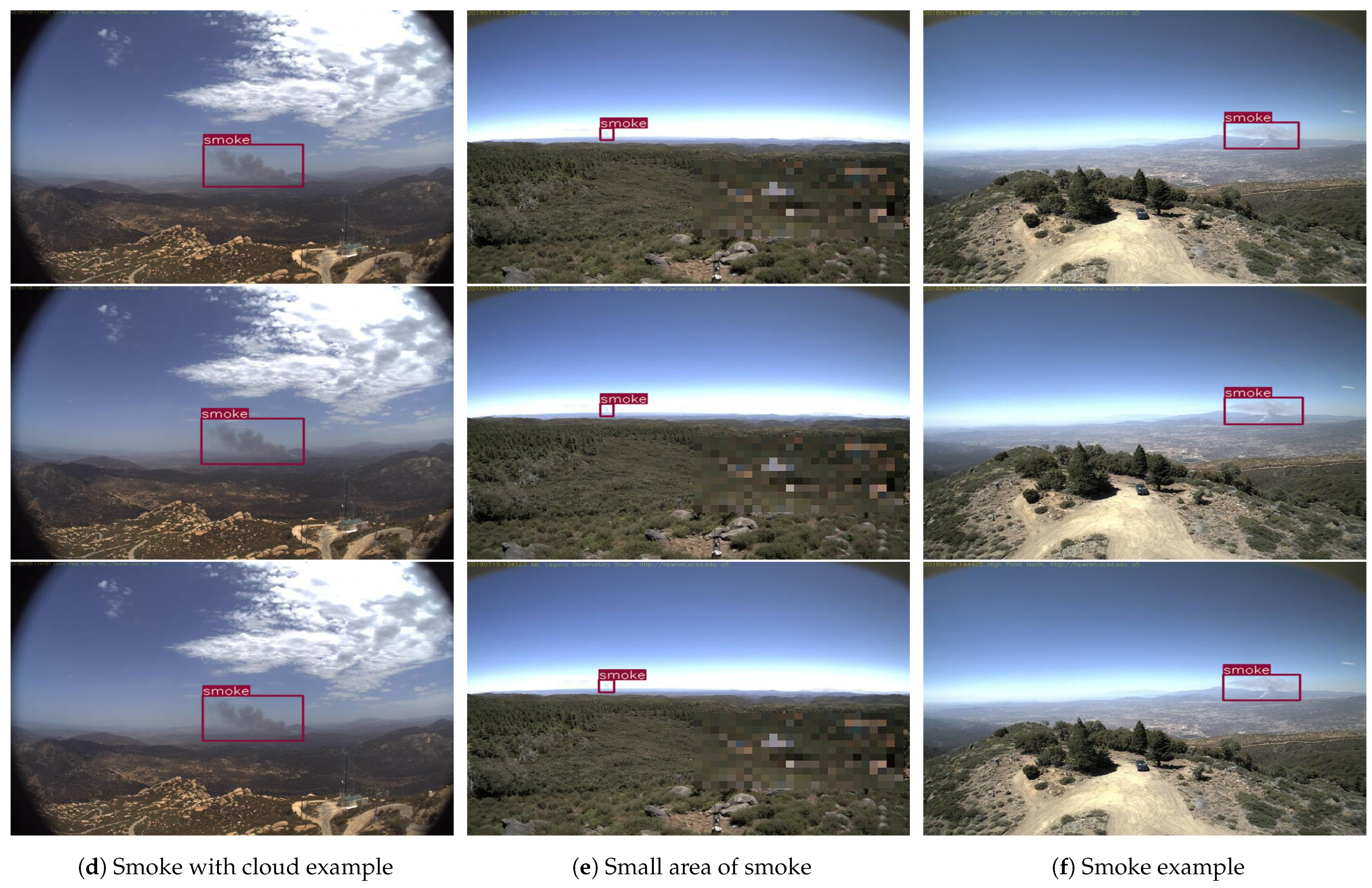

- The WSDY dataset [52] is a publicly available dataset developed by Hemateja for detecting and localizing wildfire smoke. It contains 737 smoke images, divided into training, validation, and test sets with their corresponding YOLO annotations. It depicts numerous wildland fire smoke scenarios with challenging situations such as the presence of clouds, as depicted in Figure 2.

3.4. Evaluation Metrics

4. Results and Discussion

4.1. Implementation Details

4.2. Result Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| YOLO | You Only Look Once |

| CNN | Convolutional Neural Network |

| MAP | Mean Average Precision |

| DFFT | Decoder-Free Fuly Transformer |

| MCCL | Multi-scale Context Contrasted Local Feature Module |

| DPPM | Dense Pyramid Pooling module |

| CA | Coordinate Attention |

| RFB | Receptive Field Block |

| CARAFE | Content-Aware Reassembly of Features |

| SE | Squeeze and Excitation |

| UAV | Unmanned Aerial Vehicle |

| GFLOPS | Billions of Floating-Point Operations per Second |

| FPN | Feature Pyramid Network |

| PANet | Path Aggregation Network |

| E-ELAN | Extended Efficient Layer Aggregation Network |

| ECA | Efficient Channel Attention |

| Bi-FPN | Bidirectional Feature Pyramid Network |

| SPD-Conv | Space-to-Depth Convolution |

| GSConv | Ghost Shuffle Convolution |

| CBAM | Convolutional Block Attention Module |

| SPPF | Spatial Pyramid Pooling Fast |

| IoU | Intersection over Union |

| AP | Average Precision |

| P | Precision |

| TP | True Positive |

| FP | False Positive |

| R | Recall |

| FN | False Negative |

| DFL | Distribution Focal Loss |

| CIoU | Complete Intersection over Union |

| BCE | Binary Cross-Entropy |

| WSDY | WildFire Smoke Dataset YOLO |

| PUE | Power Usage Effectiveness |

References

- European Commission. Wildfires in the Mediterranean. Available online: https://joint-research-centre.ec.europa.eu/jrc-news-and-updates/wildfires-mediterranean-monitoring-impact-helping-response-2023-07-28_en (accessed on 14 November 2023).

- Government of Canada. Forest Fires. Available online: https://natural-resources.canada.ca/our-natural-resources/forests/wildland-fires-insects-disturbances/forest-fires/13143 (accessed on 14 November 2023).

- Government of Canada. Protecting Communities. Available online: https://natural-resources.canada.ca/our-natural-resources/forests/wildland-fires-insects-disturbances/forest-fires/protecting-communities/13153 (accessed on 14 November 2023).

- Government of Canada. Social Aspects of Wildfire Management; Natural Resources Canada: Ottawa, ON, Canada, 2020.

- Alkhatib, A. A Review on Forest Fire Detection Techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368. [Google Scholar] [CrossRef]

- Geetha, S.; Abhishek, C.S.; Akshayanat, C.S. Machine Vision Based Fire Detection Techniques: A Survey. Fire Technol. 2021, 57, 591–623. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Remote Sensing: Classification, Detection, and Segmentation. Remote Sens. 2023, 15, 1821. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. Wildfires Detection and Segmentation Using Deep CNNs and Vision Transformers. In Proceedings of the Pattern Recognition, Computer Vision, and Image Processing, ICPR 2022 International Workshops and Challenges, Montreal, QC, Canada, 21–25 August 2023; pp. 222–232. [Google Scholar]

- Yuan, F. Video-based Smoke Detection with Histogram Sequence of LBP and LBPV Pyramids. Fire Saf. J. 2011, 46, 132. [Google Scholar] [CrossRef]

- Long, C.; Zhao, J.; Han, S.; Xiong, L.; Yuan, Z.; Huang, J.; Gao, W. Transmission: A New Feature for Computer Vision Based Smoke Detection. In Proceedings of the Artificial Intelligence and Computational Intelligence, Sanya, China, 23–24 October 2010; pp. 389–396. [Google Scholar]

- Ho, C.C. Machine Vision-based Real-time Early Flame and Smoke Detection. Meas. Sci. Technol. 2009, 20, 045502. [Google Scholar] [CrossRef]

- Tian, H.; Li, W.; Wang, L.; Ogunbona, P. Smoke Detection in Video: An Image Separation Approach. Int. J. Comput. Vis. 2014, 106, 192. [Google Scholar] [CrossRef]

- Calderara, S.; Piccinini, P.; Cucchiara, R. Vision-based Smoke Detection System Using Image Energy and Color Information. Mach. Vis. Appl. 2011, 22, 705. [Google Scholar] [CrossRef]

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep Learning vs. Traditional Computer Vision. In Proceedings of the Advances in Computer Vision, Las Vegas, NV, USA, 2–3 May 2020; p. 128. [Google Scholar]

- Ghali, R.; Akhloufi, M.A.; Souidene Mseddi, W.; Jmal, M. Wildfire Segmentation Using Deep-RegSeg Semantic Segmentation Architecture. In Proceedings of the 19th International Conference on Content-Based Multimedia Indexing, Graz, Austria, 14–16 September 2022; pp. 149–154. [Google Scholar]

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Lan, W.; Dang, J.; Wang, Y.; Wang, S. Pedestrian Detection Based on YOLO Network Model. In Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1547–1551. [Google Scholar]

- Rjoub, G.; Wahab, O.A.; Bentahar, J.; Bataineh, A.S. Improving Autonomous Vehicles Safety in Snow Weather Using Federated YOLO CNN Learning. In Proceedings of the Mobile Web and Intelligent Information Systems, Virtual, 23–25 August 2021; pp. 121–134. [Google Scholar]

- Andrie Dazlee, N.M.A.; Abdul Khalil, S.; Abdul-Rahman, S.; Mutalib, S. Object Detection for Autonomous Vehicles with Sensor-based Technology Using YOLO. Int. J. Intell. Syst. Appl. Eng. 2022, 10, 129–134. [Google Scholar] [CrossRef]

- Yang, W.; Jiachun, Z. Real-time Face Detection Based on YOLO. In Proceedings of the 1st IEEE International Conference on Knowledge Innovation and Invention (ICKII), Jeju Island, Republic of Korea, 23–27 July 2018; pp. 221–224. [Google Scholar]

- Ashraf, A.H.; Imran, M.; Qahtani, A.M.; Alsufyani, A.; Almutiry, O.; Mahmood, A.; Attique, M.; Habib, M. Weapons Detection For Security and Video Surveillance Using CNN and YOLOv5s. CMC-Comput. Mater. Contin. 2022, 70, 2761–2775. [Google Scholar] [CrossRef]

- Nie, Y.; Sommella, P.; O’Nils, M.; Liguori, C.; Lundgren, J. Automatic Detection of Melanoma with YOLO Deep Convolutional Neural Networks. In Proceedings of the E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–4. [Google Scholar]

- Salman, M.E.; Çakirsoy Çakar, G.; Azimjonov, J.; Kösem, M.; Cedimoğlu, I.H. Automated Prostate Cancer Grading and Diagnosis System Using Deep Learning-based YOLO Object Detection Algorithm. Expert Syst. Appl. 2022, 201, 117148. [Google Scholar] [CrossRef]

- Yao, Z.; Jin, T.; Mao, B.; Lu, B.; Zhang, Y.; Li, S.; Chen, W. Construction and Multicenter Diagnostic Verification of Intelligent Recognition System for Endoscopic Images From Early Gastric Cancer Based on YOLOv3 Algorithm. Front. Oncol. 2022, 12, 815951. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A.; Jmal, M.; Mseddi, W.S.; Attia, R. Forest Fires Segmentation using Deep Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 2109–2114. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. BoucaNet: A CNN-Transformer for Smoke Recognition on Remote Sensing Satellite Images. Fire 2023, 6, 455. [Google Scholar] [CrossRef]

- Islam, A.; Habib, M.I. Fire Detection From Image and Video Using YOLOv5. arXiv 2023, arXiv:2310.06351. [Google Scholar] [CrossRef]

- Wang, X.; Li, M.; Gao, M.; Liu, Q.; Li, Z.; Kou, L. Early Smoke and Flame Detection Based on Transformer. J. Saf. Sci. Resil. 2023, 4, 294. [Google Scholar] [CrossRef]

- Huang, J.; Zhou, J.; Yang, H.; Liu, Y.; Liu, H. A Small-Target Forest Fire Smoke Detection Model Based on Deformable Transformer for End-to-End Object Detection. Forests 2023, 14, 162. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV, Glasgow, UK, 23–28 August 2020; pp. 213–229. [Google Scholar]

- Bahhar, C.; Ksibi, A.; Ayadi, M.; Jamjoom, M.M.; Ullah, Z.; Soufiene, B.O.; Sakli, H. Wildfire and Smoke Detection Using Staged YOLO Model and Ensemble CNN. Electronics 2023, 12, 228. [Google Scholar] [CrossRef]

- Chen, X.; Xue, Y.; Hou, Q.; Fu, Y.; Zhu, Y. RepVGG-YOLOv7: A Modified YOLOv7 for Fire Smoke Detection. Fire 2023, 6, 383. [Google Scholar] [CrossRef]

- Li, J.; Xu, R.; Liu, Y. An Improved Forest Fire and Smoke Detection Model Based on YOLOv5. Forests 2023, 14, 833. [Google Scholar] [CrossRef]

- Sun, L.; Li, Y.; Hu, T. ForestFireDetector: Expanding Channel Depth for Fine-Grained Feature Learning in Forest Fire Smoke Detection. Forests 2023, 14, 2157. [Google Scholar] [CrossRef]

- Chen, G.; Cheng, R.; Lin, X.; Jiao, W.; Bai, D.; Lin, H. LMDFS: A Lightweight Model for Detecting Forest Fire Smoke in UAV Images Based on YOLOv7. Remote Sens. 2023, 15, 3790. [Google Scholar] [CrossRef]

- Yang, Y.; Pan, M.; Li, P.; Wang, X.; Tsai, Y.T. Development and Optimization of Image Fire Detection on Deep Learning Algorithms. J. Therm. Anal. Calorim. 2023, 148, 5089. [Google Scholar] [CrossRef]

- Sun, B.; Wang, Y.; Wu, S. An Efficient Lightweight CNN Model for Real-time Fire Smoke Detection. J. Real-Time Image Process. 2023, 20, 74. [Google Scholar] [CrossRef]

- Sun, Y.; Feng, J. Fire and Smoke Precise Detection Method Based on the Attention Mechanism and Anchor-Free Mechanism. Complex Intell. Syst. 2023, 9, 5185. [Google Scholar] [CrossRef]

- Jin, C.; Zheng, A.; Wu, Z.; Tong, C. Real-Time Fire Smoke Detection Method Combining a Self-Attention Mechanism and Radial Multi-Scale Feature Connection. Sensors 2023, 23, 3358. [Google Scholar] [CrossRef]

- Kim, S.Y.; Muminov, A. Forest Fire Smoke Detection Based on Deep Learning Approaches and Unmanned Aerial Vehicle Images. Sensors 2023, 23, 5702. [Google Scholar] [CrossRef]

- Venâncio, P.V.A.B.; Lisboa, A.C.; Barbosa, A.V. An Automatic Fire Detection System Based on Deep Convolutional Neural Networks for Low-Power, Resource-Constrained Devices. Neural Comput. Appl. 2022, 34, 15349. [Google Scholar] [CrossRef]

- Venâncio, P.V.A.B.; Campos, R.J.; Rezende, T.M.; Lisboa, A.C.; Barbosa, A.V. A Hybrid Method for Fire Detection Based on Spatial and Temporal Patterns. Neural Comput. Appl. 2023, 35, 9349. [Google Scholar] [CrossRef]

- Mukhiddinov, M.; Abdusalomov, A.B.; Cho, J. A Wildfire Smoke Detection System Using Unmanned Aerial Vehicle Images Based on the Optimized YOLOv5. Sensors 2022, 22, 9384. [Google Scholar] [CrossRef]

- Ultralytics. YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 27 November 2023).

- Lin, H.; Liu, Z.; Cheang, C.; Fu, Y.; Guo, G.; Xue, X. YOLOv7: Trainable Bag-of-freebies Sets New State-of-the-art for Real-time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7464–7475. [Google Scholar]

- Ultralytics. YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 28 November 2023).

- Ultralytics. YOLOv5u. Available online: https://docs.ultralytics.com/models/yolov5/ (accessed on 27 November 2023).

- Taylor, S.W.; Woolford, D.G.; Dean, C.B.; Martell, D.L. Wildfire Prediction to Inform Fire Management: Statistical Science Challenges. Stat. Sci. 2013, 28, 586–615. [Google Scholar] [CrossRef]

- Artés, T.; Oom, D.; De Rigo, D.; Durrant, T.H.; Maianti, P.; Libertà, G.; San-Miguel-Ayanz, J. A global wildfire dataset for the analysis of fire regimes and fire behaviour. Sci. Data 2019, 6, 296. [Google Scholar] [CrossRef] [PubMed]

- Venâncio, P. D-Fire: An Image Dataset for Fire and Smoke Detection. Available online: https://github.com/gaiasd/DFireDataset/tree/master (accessed on 14 November 2023).

- Hemateja, A.V.N.M. WildFire Smoke Dataset YOLO. Available online: https://www.kaggle.com/datasets/ahemateja19bec1025/wildfiresmokedatasetyolo?resource=download (accessed on 25 March 2024).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 8026–8037. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

| Model | mAP (%) | AP-Smoke (%) | AP-Fire (%) | GFLOPS | Training Time (hour) | Power Consumption (Wh) |

|---|---|---|---|---|---|---|

| YOLOv5n | 75.90 | 80.70 | 71.00 | 4.10 | 2.100 | 693.00 |

| YOLOv5s | 78.30 | 82.90 | 73.60 | 15.80 | 2.573 | 849.09 |

| YOLOv5m | 78.70 | 84.90 | 72.40 | 47.90 | 4.088 | 1349.04 |

| YOLOv5l | 79.50 | 85.70 | 73.40 | 107.70 | 6.610 | 2181.3 |

| YOLOv5x | 78.90 | 85.30 | 72.50 | 203.80 | 9.872 | 3257.76 |

| YOLOv7 | 80.20 | 85.10 | 75.30 | 103.20 | 9.031 | 2980.23 |

| YOLOv7x | 80.40 | 85.50 | 75.40 | 188.00 | 11.655 | 3846.15 |

| YOLOv8n | 77.80 | 84.00 | 71.50 | 8.10 | 2.002 | 660.66 |

| YOLOv8s | 78.70 | 84.90 | 72.50 | 28.40 | 2.767 | 913.11 |

| YOLOv8m | 79.00 | 84.90 | 73.10 | 78.70 | 4.726 | 1559.58 |

| YOLOv8l | 79.10 | 85.00 | 73.20 | 164.80 | 6.806 | 2245.98 |

| YOLOv8x | 79.70 | 85.60 | 73.90 | 257.40 | 10.795 | 3562.35 |

| YOLOv5nu | 76.70 | 83.60 | 69.90 | 7.10 | 2.210 | 729.30 |

| YOLOv5su | 78.50 | 84.20 | 72.90 | 23.80 | 2.825 | 932.25 |

| YOLOv5mu | 79.40 | 85.90 | 73.00 | 64.00 | 4.535 | 1496.55 |

| YOLOv5lu | 79.70 | 86.00 | 73.40 | 134.70 | 6.415 | 2116.95 |

| YOLOv5xu | 79.50 | 86.00 | 72.90 | 246.00 | 11.032 | 3640.56 |

| Faster R-CNN | 35.95 | 31.90 | 40.00 | 406.00 | 2.420 | 798.60 |

| Tiny YOLOv4 [43] | 63.34 | 62.20 | 64.48 | 16.07 | — | — |

| YOLOv4 [43] | 76.56 | 83.48 | 69.94 | 59.57 | — | – |

| YOLOv5s [43] | 78.30 | 84.29 | 72.78 | 15.80 | — | — |

| YOLOv5l [43] | 79.46 | 86.38 | 72.84 | 107.80 | — | — |

| Model | mAP (%) | GFLOPS | Training Time (Hour) | Power Consumption (Wh) |

|---|---|---|---|---|

| YOLOv5n | 95.90 | 4.10 | 0.085 | 28.05 |

| YOLOv5s | 94.60 | 15.80 | 0.106 | 34.98 |

| YOLOv5m | 93.10 | 47.90 | 0.165 | 54.45 |

| YOLOv5l | 92.90 | 107.70 | 0.240 | 79.20 |

| YOLOv5x | 90.80 | 203.80 | 0.401 | 132.33 |

| YOLOv7 | 92.60 | 103.20 | 0.355 | 117.15 |

| YOLOv7x | 96.00 | 188.00 | 0.353 | 116.49 |

| YOLOv8n | 97.30 | 8.10 | 0.179 | 59.07 |

| YOLOv8s | 98.10 | 28.40 | 0.241 | 79.53 |

| YOLOv8m | 93.50 | 78.70 | 0.401 | 132.33 |

| YOLOv8l | 92.40 | 164.80 | 0.573 | 189.09 |

| YOLOv8x | 94.10 | 257.40 | 0.886 | 292.38 |

| YOLOv5nu | 95.70 | 7.10 | 0.192 | 80.84 |

| YOLOv5su | 94.20 | 23.80 | 0.248 | 81.51 |

| YOLOv5mu | 95.10 | 64.00 | 0.275 | 90.75 |

| YOLOv5lu | 94.30 | 134.70 | 0.525 | 173.25 |

| YOLOv5xu | 93.80 | 246.00 | 0.965 | 318.45 |

| Faster R-CNN | 50.34 | 406.00 | 2.097 | 692.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gonçalves, L.A.O.; Ghali, R.; Akhloufi, M.A. YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images. Fire 2024, 7, 140. https://doi.org/10.3390/fire7040140

Gonçalves LAO, Ghali R, Akhloufi MA. YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images. Fire. 2024; 7(4):140. https://doi.org/10.3390/fire7040140

Chicago/Turabian StyleGonçalves, Leon Augusto Okida, Rafik Ghali, and Moulay A. Akhloufi. 2024. "YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images" Fire 7, no. 4: 140. https://doi.org/10.3390/fire7040140

APA StyleGonçalves, L. A. O., Ghali, R., & Akhloufi, M. A. (2024). YOLO-Based Models for Smoke and Wildfire Detection in Ground and Aerial Images. Fire, 7(4), 140. https://doi.org/10.3390/fire7040140