An Indoor Autonomous Inspection and Firefighting Robot Based on SLAM and Flame Image Recognition

Abstract

1. Introduction

2. Related Work

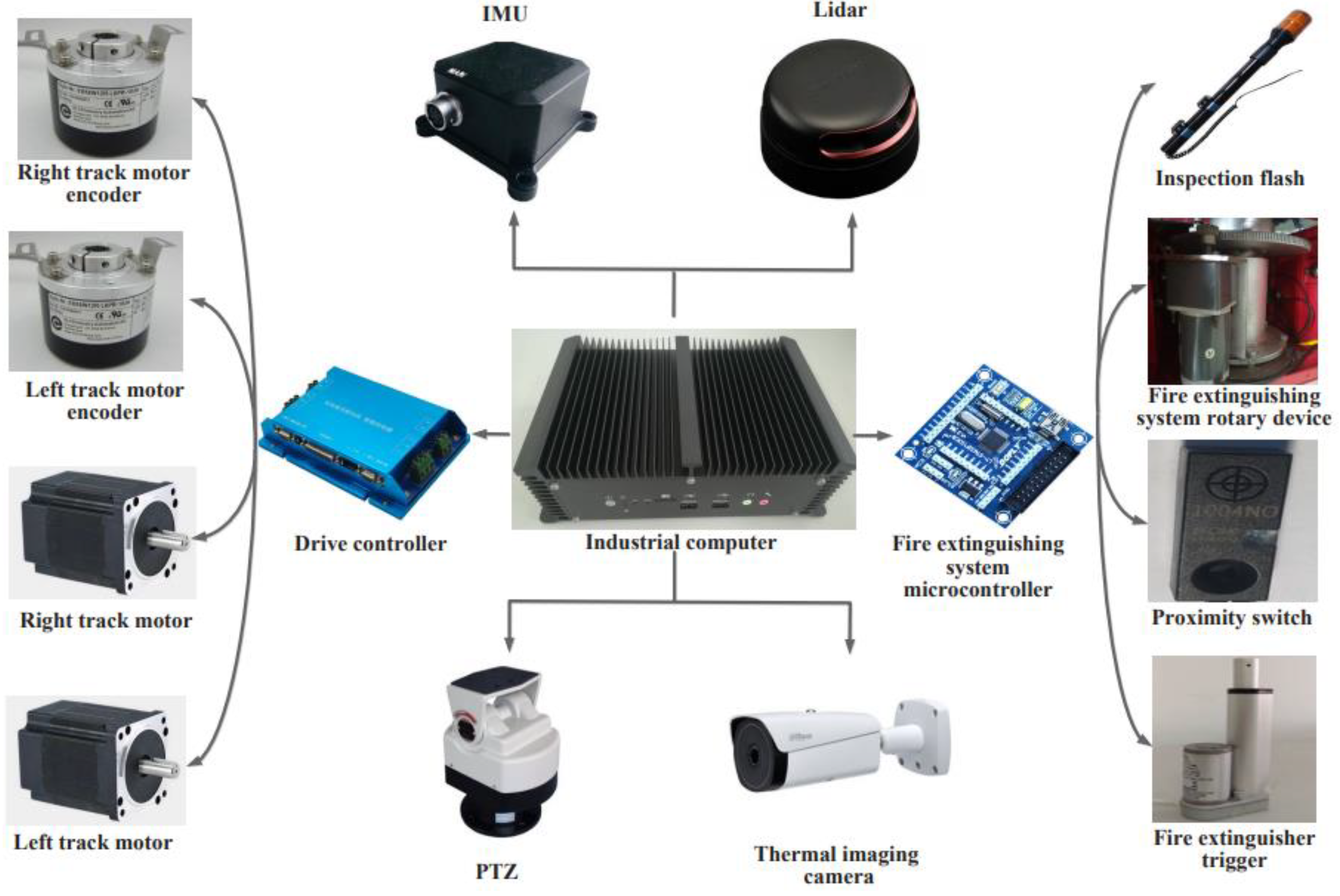

3. Hardware Design of the Autonomous Inspection and Firefighting Robot

3.1. Design of the Robot Map Construction System

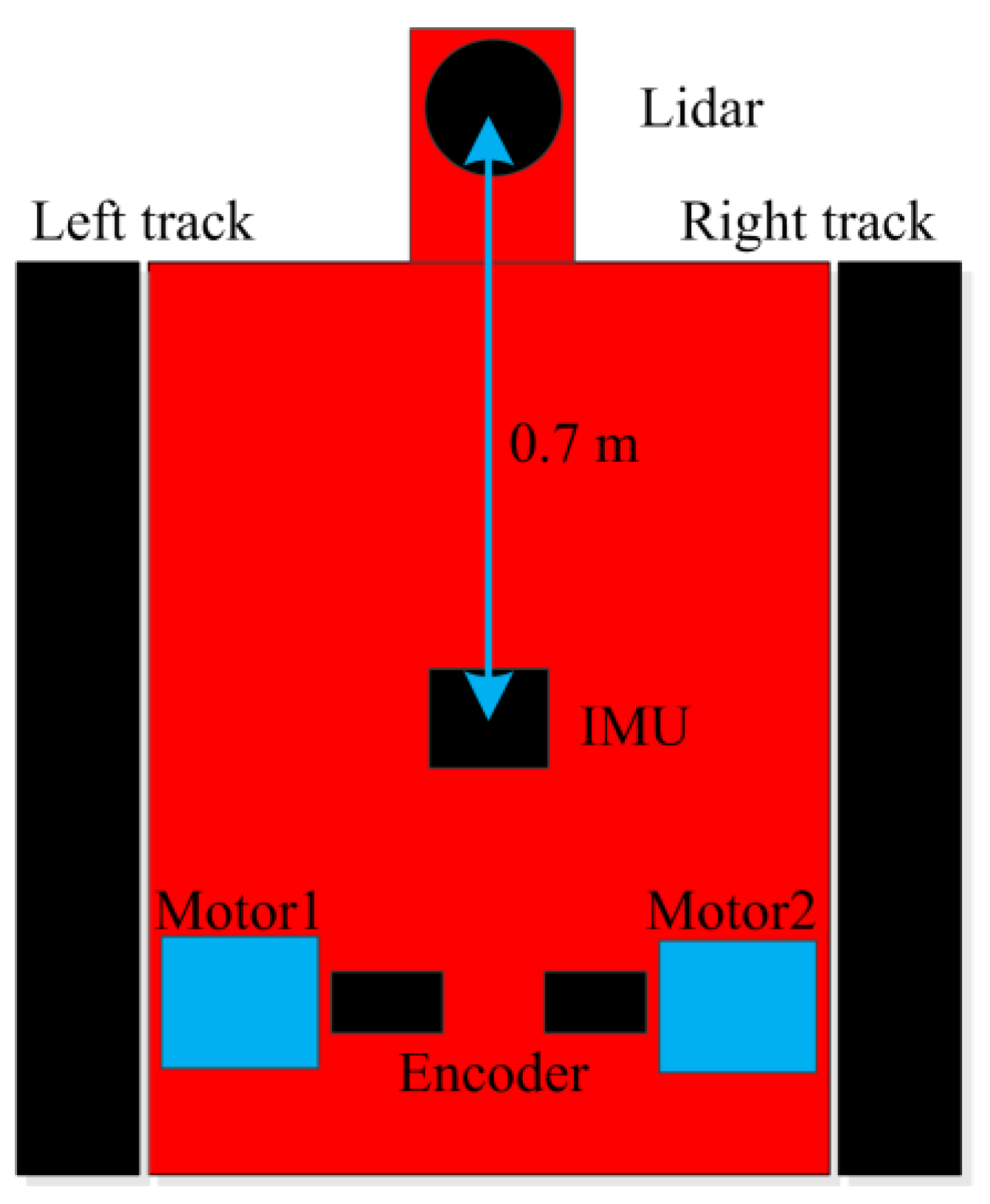

3.1.1. Robot Motion Unit

3.1.2. Robot Sensor Unit

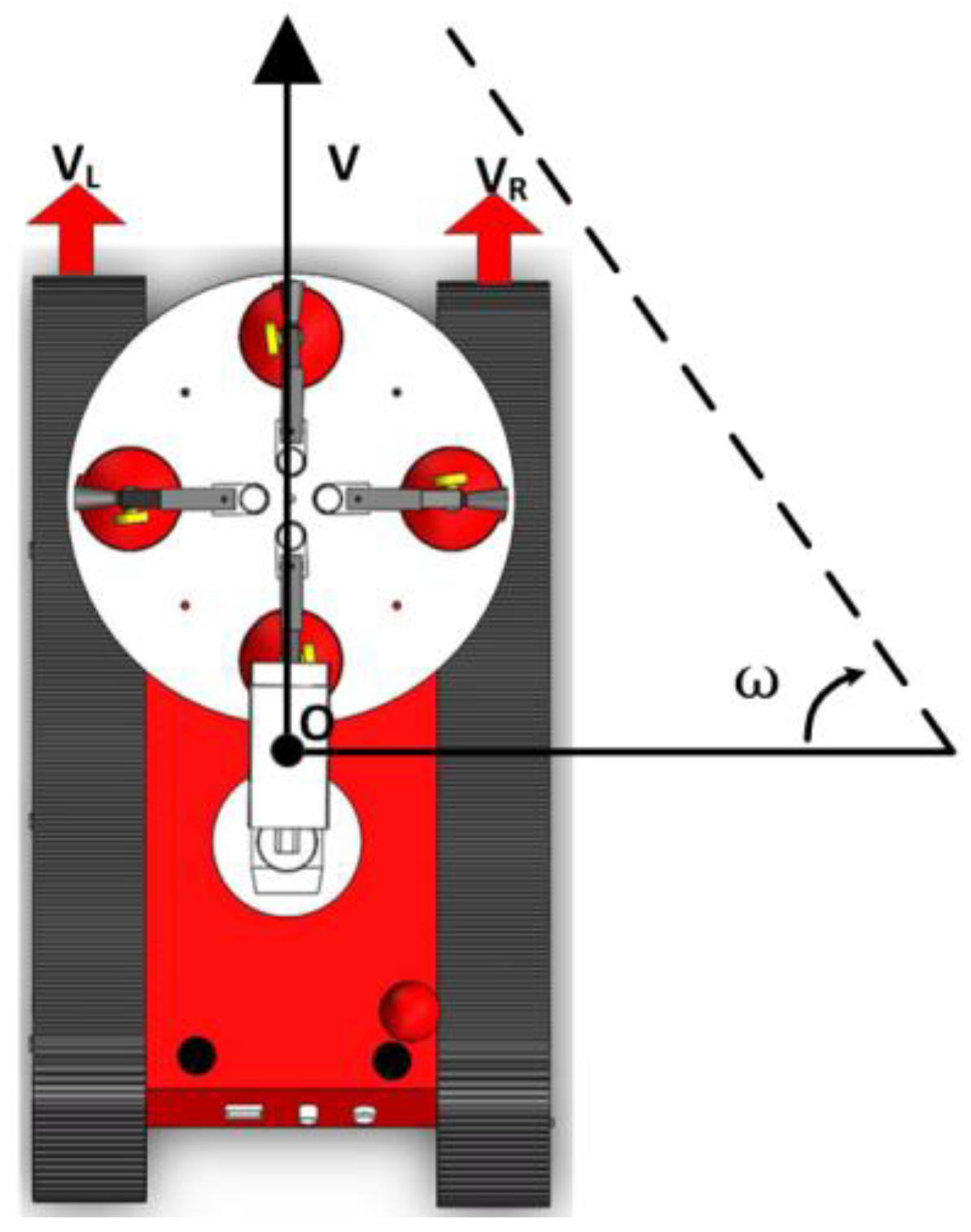

3.1.3. Robot Kinematics Model

3.2. Design of the Robot’s Automatic Fire-Extinguishing System

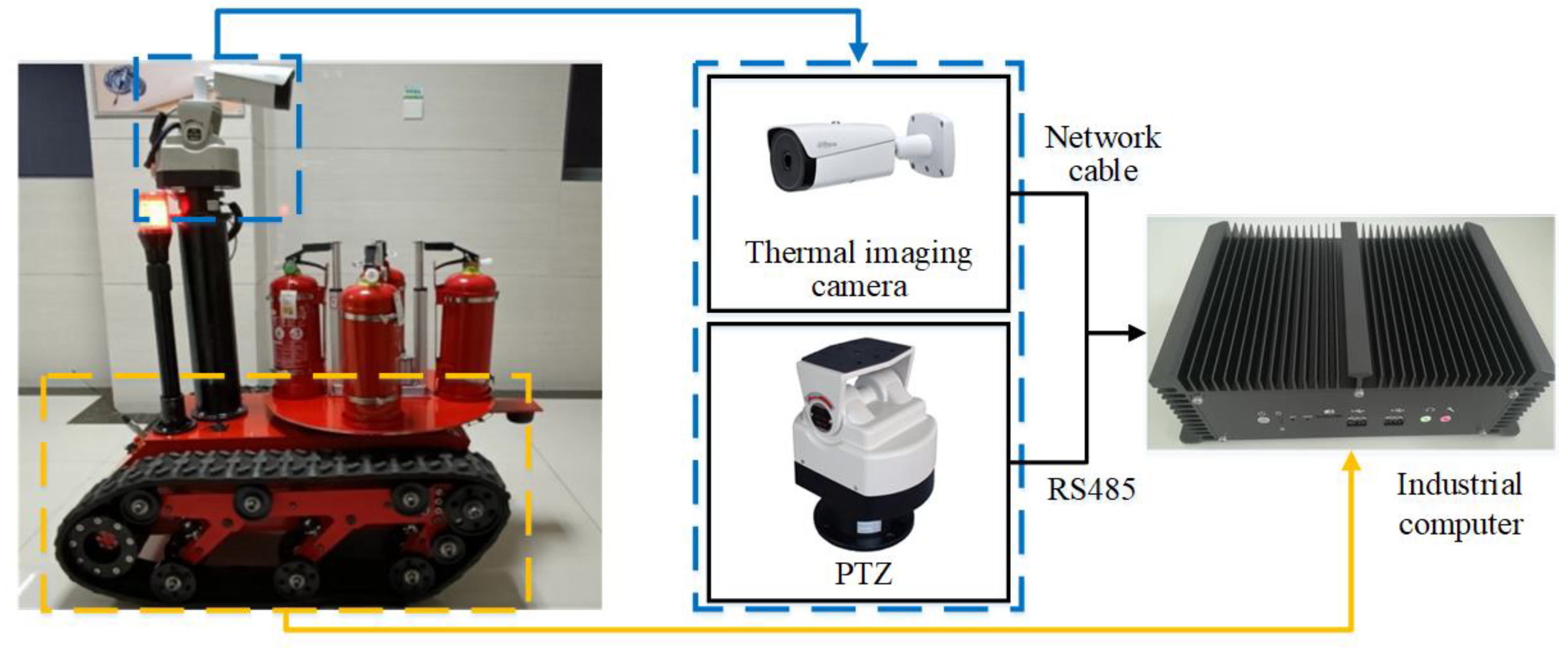

3.2.1. Video Surveillance System

3.2.2. Fire-Extinguishing System

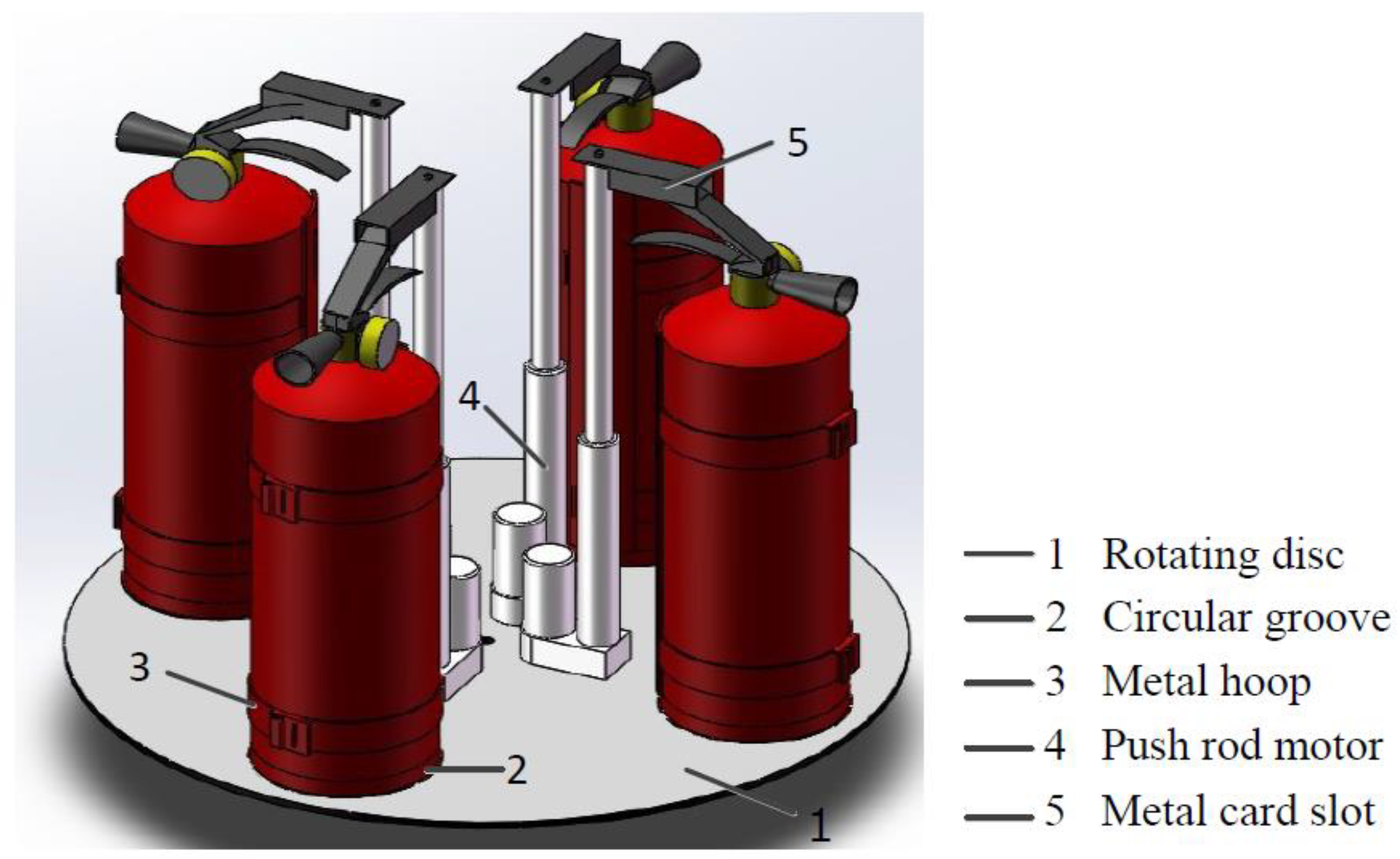

- (1) Fire extinguisher fixtures

- (2) Fire extinguisher trigger device

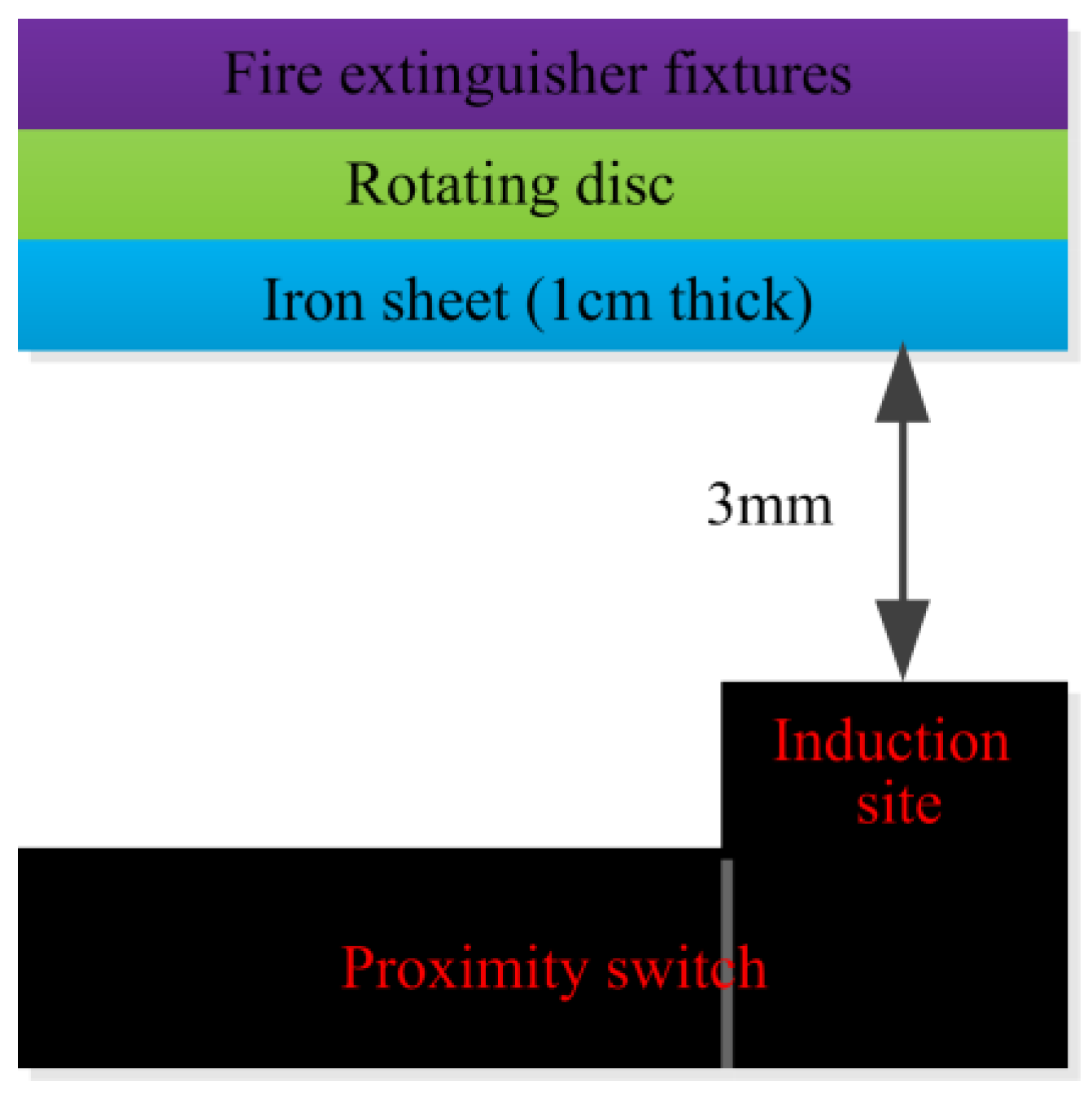

- (3) Rotating device

- (4) System control device

4. ROS-Based Map-Building System and Automatic Fire-Extinguishing System Implementation

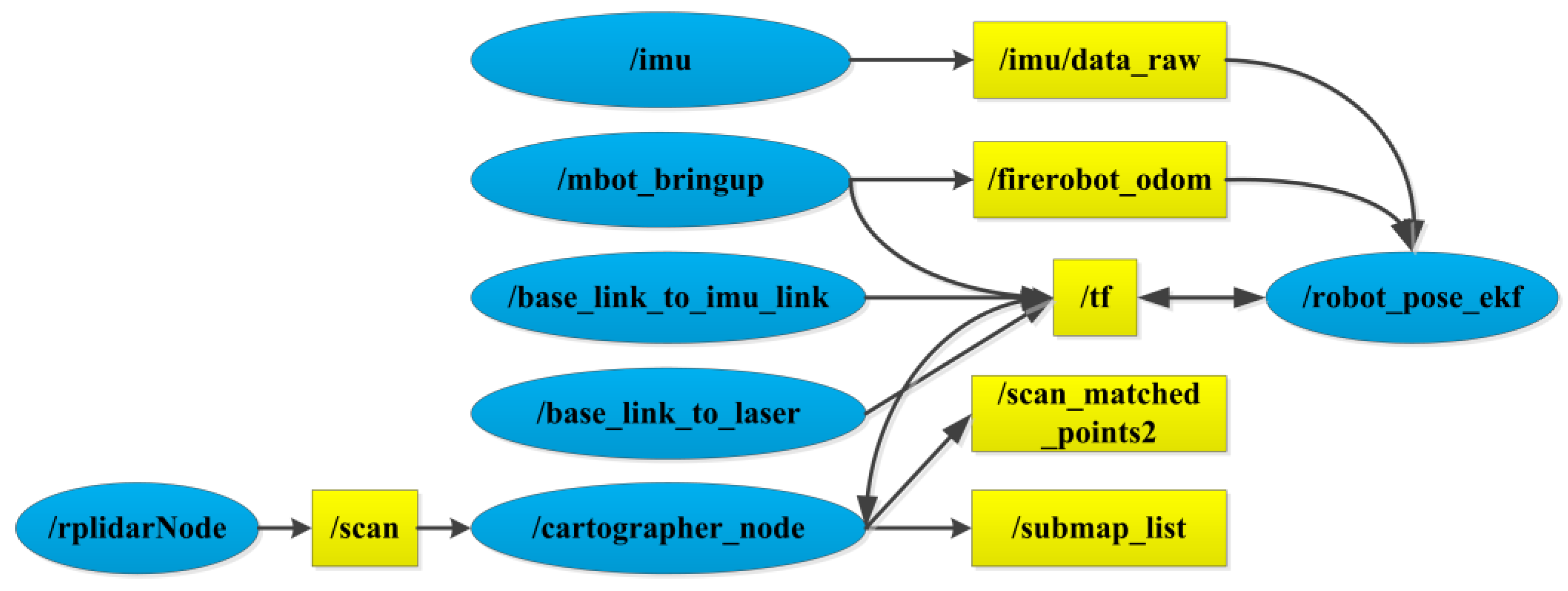

4.1. Map-Building System Implementation

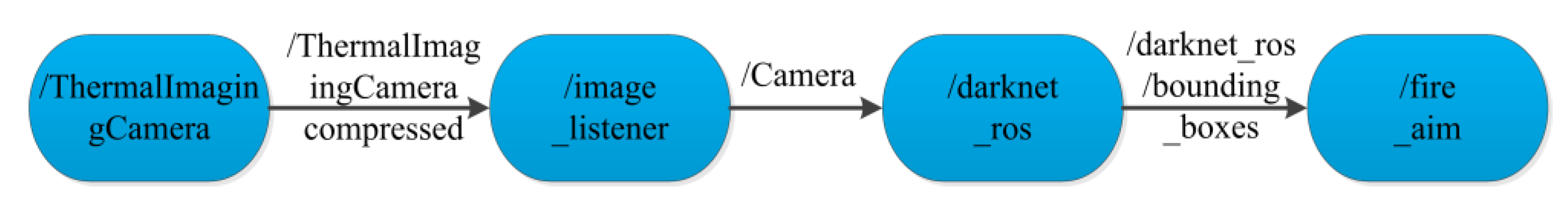

4.2. Realization of the Automatic Fire-Extinguishing System

5. Results and Analysis

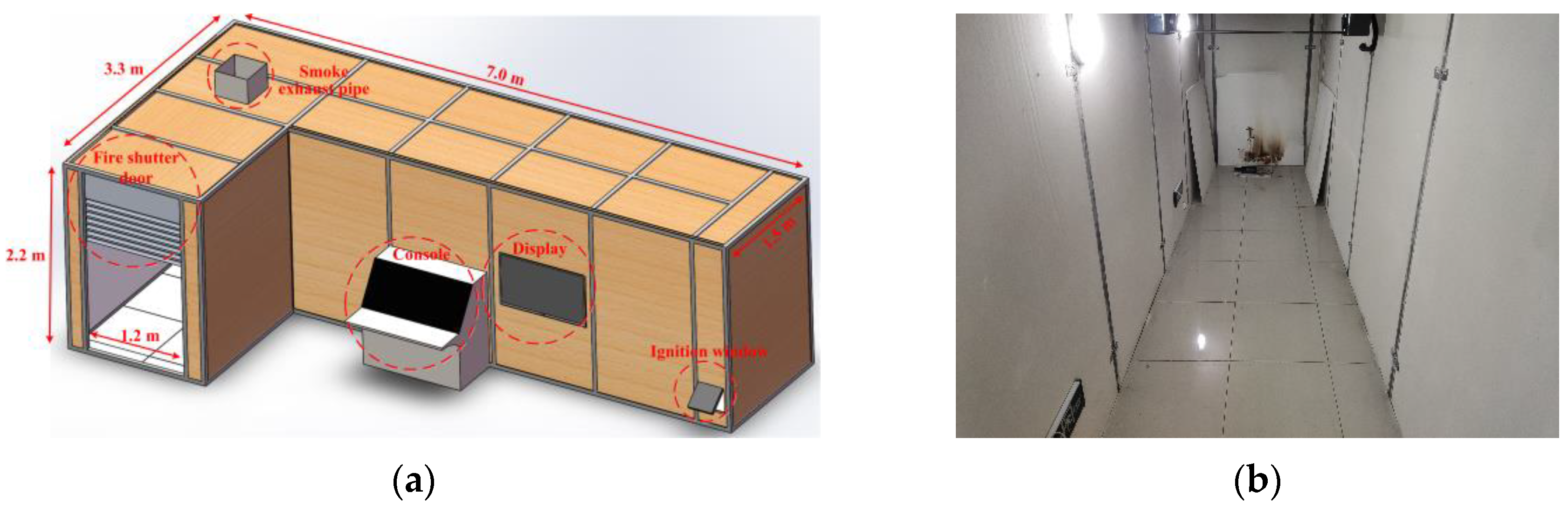

5.1. Building Fire Simulation Experimental Device Setup

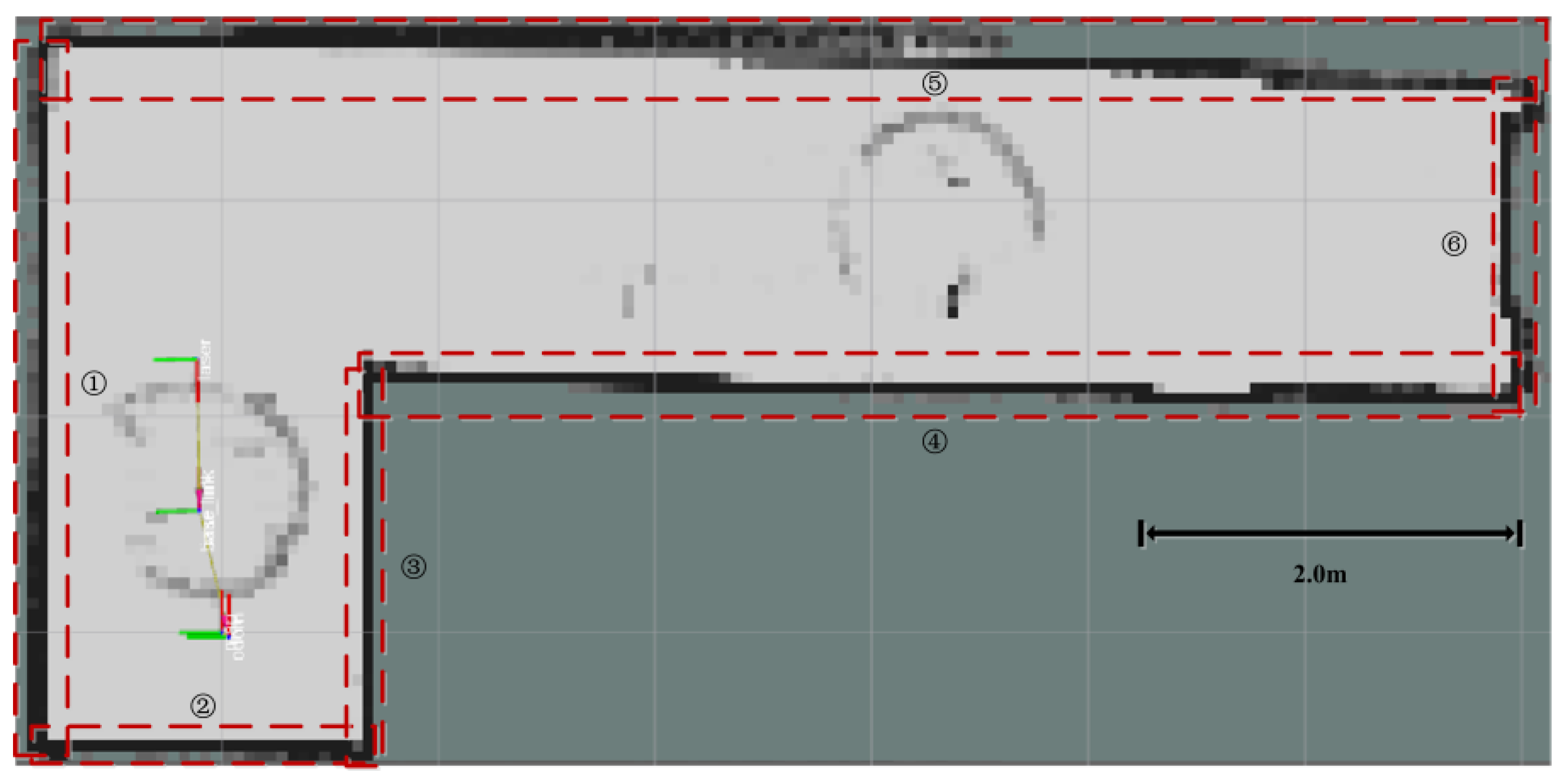

5.2. Robot Map Construction

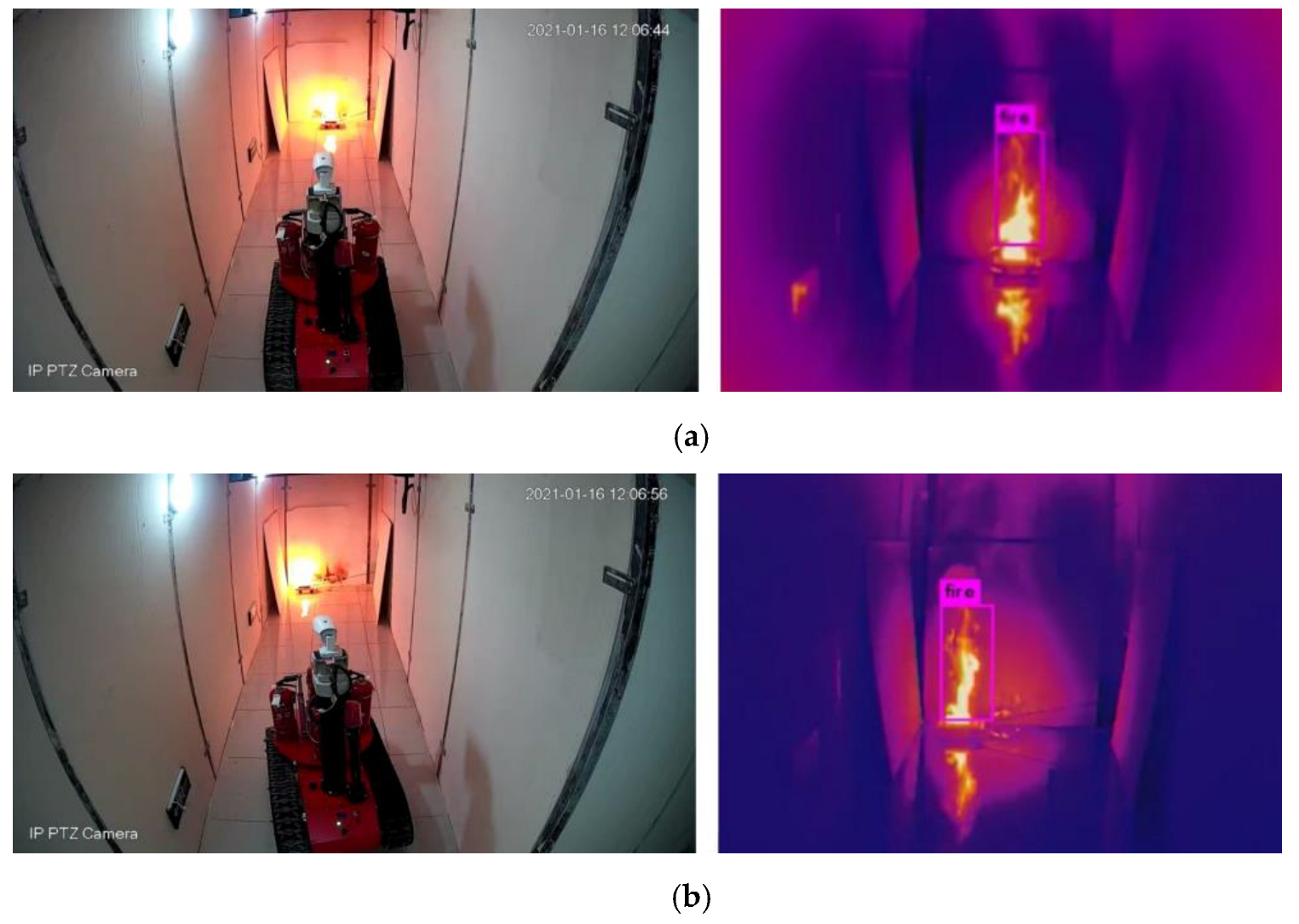

5.3. Robot Flame Detection and Automatic Aiming

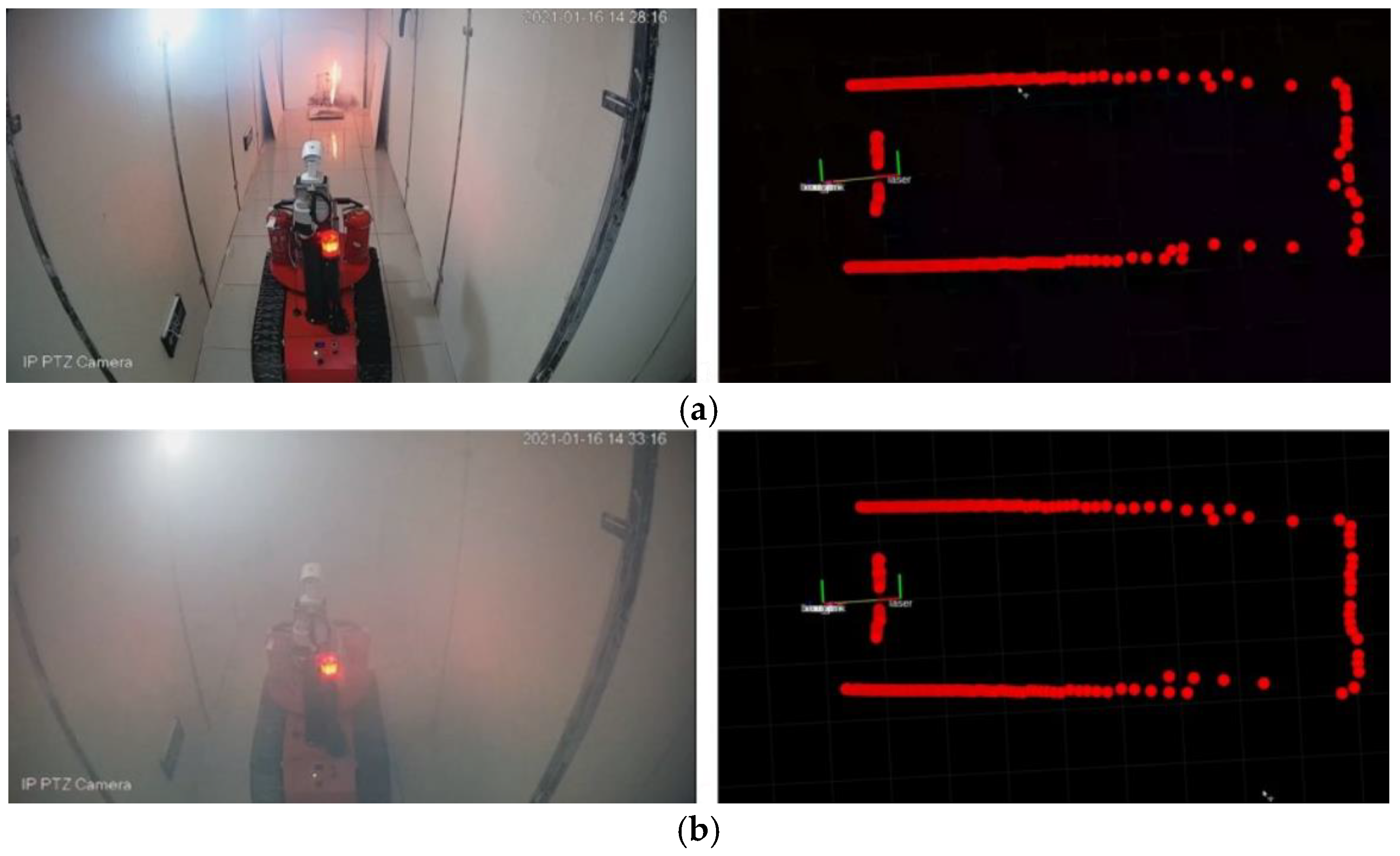

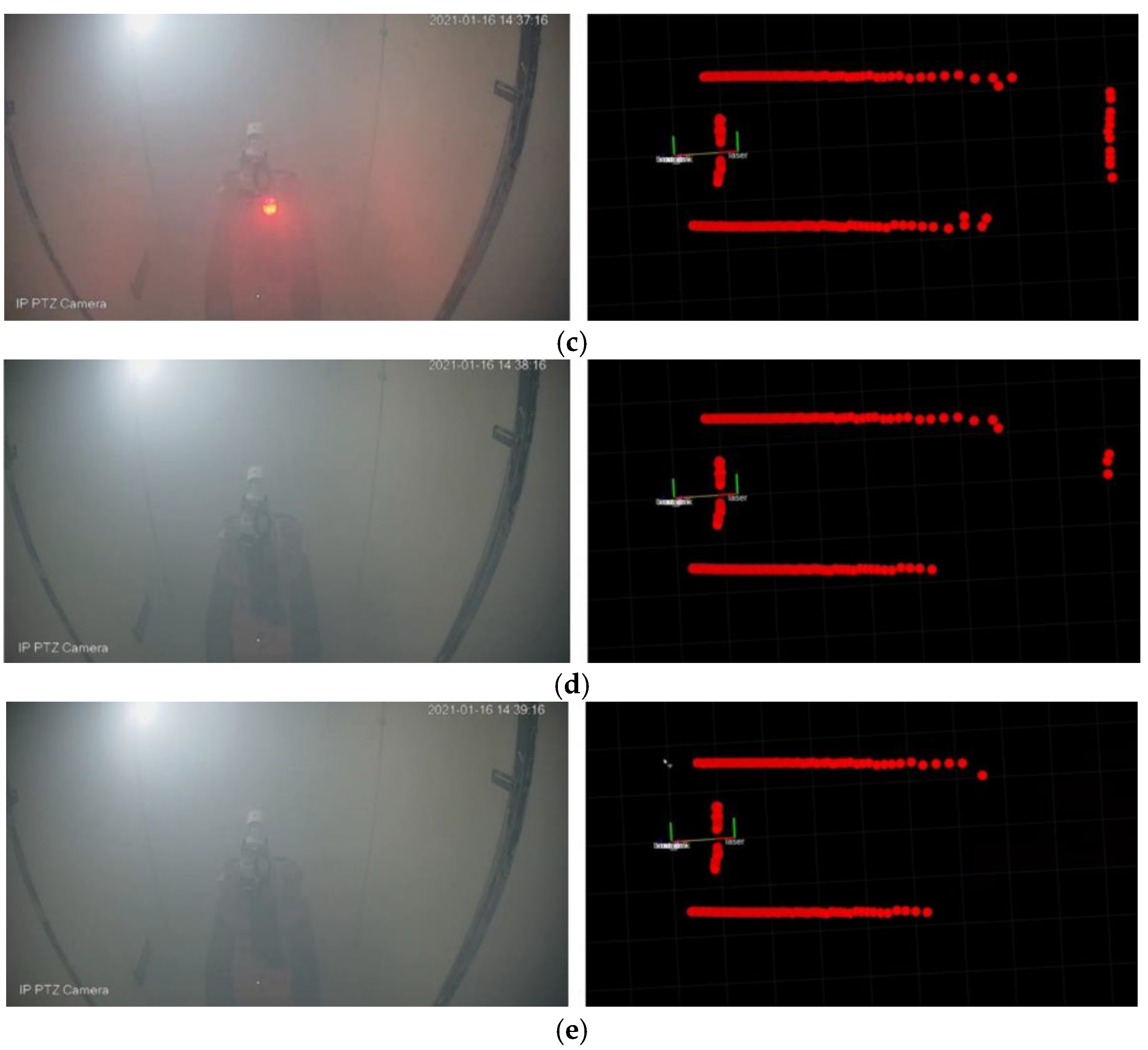

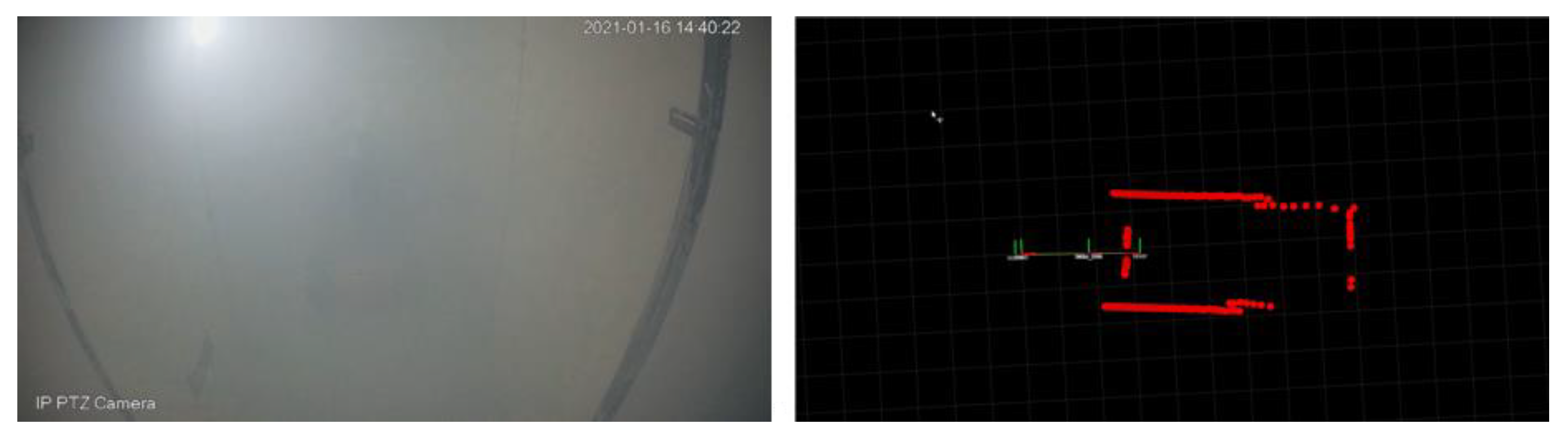

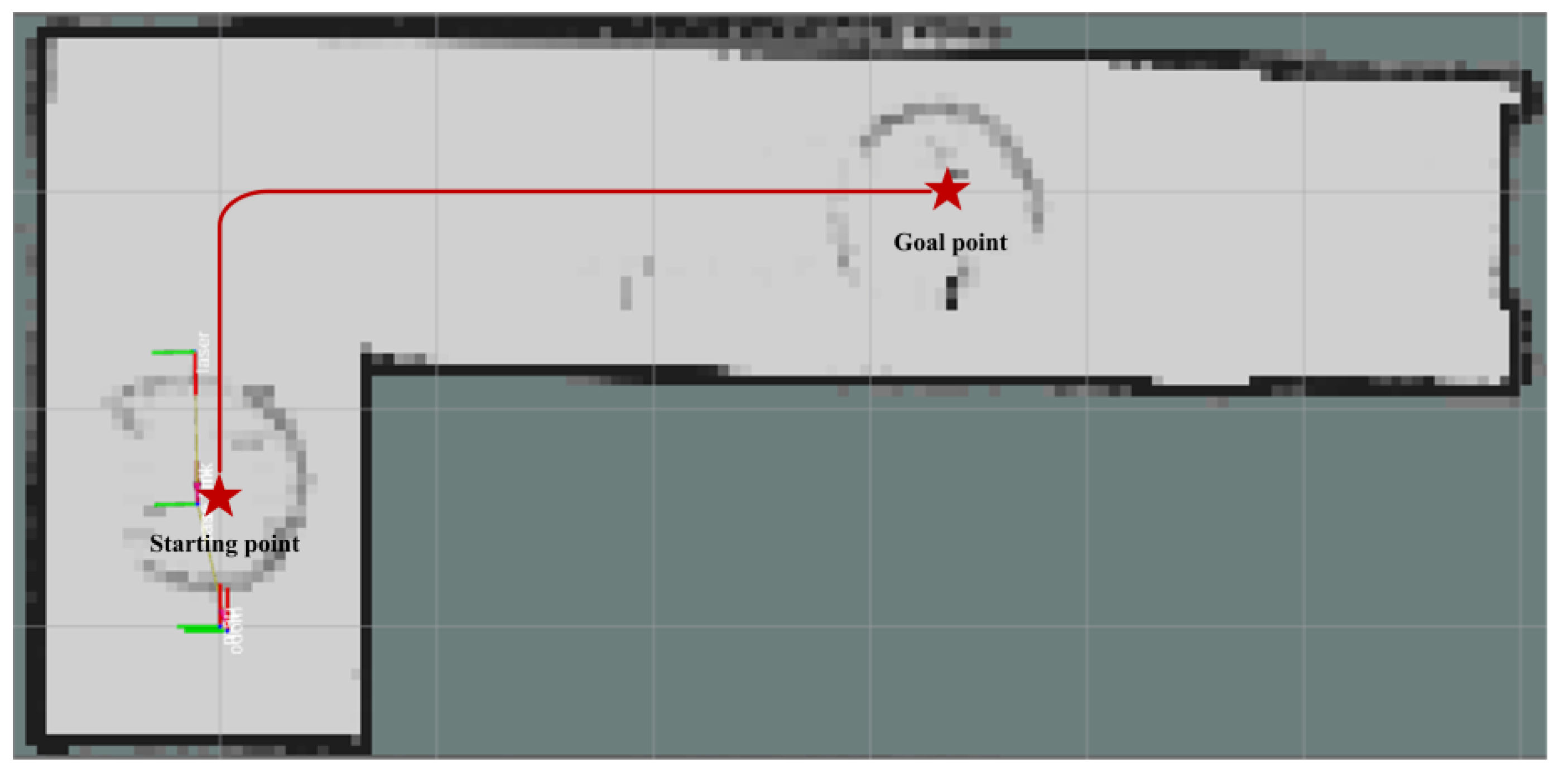

5.4. Robot Autonomous Inspection and Automatic Fire Extinguishing

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Technical Indicators | Typical Value | Maximum Value |
|---|---|---|
| Ranging range | 0.2–12 m | Not applicable |
| Ranging resolution | <0.5 mm | Not applicable |
| Scan angle | 0–360° | Not applicable |
| Scanning frequency | 10 Hz | 15 Hz |
| Measurement frequency | Magnetization | 8010 Hz |
| Angular resolution | Magnetization | 1.35° |
| Laser wavelength | 785 nm | 795 nm |

| Measuring Position | Actual Length (cm) | Map Build Length (cm) | Absolute Error (cm) | Error Percentage (%) |
|---|---|---|---|---|
| 1 | 330.00 | 326.20 | 3.80 | 1.15 |
| 2 | 150.00 | 151.50 | 1.50 | 1.00 |
| 3 | 180.00 | 178.10 | 1.90 | 1.06 |
| 4 | 550.00 | 541.20 | 8.80 | 1.60 |
| 5 | 700.00 | 688.20 | 11.80 | 1.69 |
| 6 | 150.00 | 147.80 | 2.20 | 1.47 |
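
The absolute and percentage errors in the mapping-accuracy table above follow from the actual and map-built lengths. The short Python sketch below recomputes them; the function name `mapping_errors` and the `MEASUREMENTS` list are illustrative names introduced here, and the percentage is assumed to be taken relative to the actual length, which matches the tabulated values.

```python
# Values copied from the mapping-accuracy table:
# (measuring position, actual length in cm, map-built length in cm).
MEASUREMENTS = [
    (1, 330.00, 326.20),
    (2, 150.00, 151.50),
    (3, 180.00, 178.10),
    (4, 550.00, 541.20),
    (5, 700.00, 688.20),
    (6, 150.00, 147.80),
]


def mapping_errors(actual_cm, mapped_cm):
    """Return (absolute error in cm, error as a percentage of the actual length).

    Assumption: the percentage is relative to the actual length.
    Both values are rounded to two decimals, as in the table.
    """
    abs_err = abs(actual_cm - mapped_cm)
    return round(abs_err, 2), round(100.0 * abs_err / actual_cm, 2)


if __name__ == "__main__":
    for position, actual, mapped in MEASUREMENTS:
        abs_err, pct = mapping_errors(actual, mapped)
        print(f"position {position}: abs error {abs_err:.2f} cm, {pct:.2f} %")
```

Under this assumption every row of the table is reproduced, with the largest relative error, 1.69 %, at measuring position 5.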

| Hardware Environment | Software Environment | Programming Language |
|---|---|---|
| NVIDIA Quadro K2000 4 G | Operating system Ubuntu 16.04, deep learning framework CSPDarknet53 | Python, C language |

| Parameter | Parameter Definition | Parameter Value |
|---|---|---|
| max_batches | Maximum number of training iterations | 4000 |
| batch | Number of samples in one training batch | 64 |
| subdivision | Number of sub-batches each batch is split into | 16 |
| momentum | Momentum parameter in gradient descent | 0.9 |
| decay | Weight decay regularization coefficient | 0.0005 |
| learning_rate | Learning rate | 0.001 |
| ignore_thresh | IoU threshold used in the loss calculation | 0.5 |
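
To make the relationship between `batch`, `subdivision`, and `max_batches` concrete, the sketch below works through the arithmetic in Python. It assumes the usual Darknet-style convention, in which each batch is split into equal sub-batches that are processed one at a time and the weights are updated once per full batch; the source does not spell this out, and the helper names are illustrative only.

```python
# Training parameters copied from the parameter table above.
TRAINING_PARAMS = {
    "max_batches": 4000,
    "batch": 64,
    "subdivision": 16,
    "momentum": 0.9,
    "decay": 0.0005,
    "learning_rate": 0.001,
    "ignore_thresh": 0.5,
}


def images_per_sub_batch(batch, subdivision):
    """Samples processed in one forward/backward pass.

    Assumption: the batch is split evenly into `subdivision` sub-batches.
    """
    if batch % subdivision != 0:
        raise ValueError("batch must be divisible by subdivision")
    return batch // subdivision


def total_samples_processed(max_batches, batch):
    """Samples drawn over the whole run (one batch per iteration)."""
    return max_batches * batch


if __name__ == "__main__":
    p = TRAINING_PARAMS
    print("samples per sub-batch:", images_per_sub_batch(p["batch"], p["subdivision"]))
    print("samples over training:", total_samples_processed(p["max_batches"], p["batch"]))
```

With the tabulated values, this gives 4 samples per sub-batch and 256,000 samples drawn over the 4000 iterations. A small sub-batch of this kind is a common way to fit training into limited GPU memory.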

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Yun, J.; Feng, C.; Gao, Y.; Yang, J.; Sun, G.; Zhang, D. An Indoor Autonomous Inspection and Firefighting Robot Based on SLAM and Flame Image Recognition. Fire 2023, 6, 93. https://doi.org/10.3390/fire6030093