Recent Advances and Emerging Directions in Fire Detection Systems Based on Machine Learning Algorithms

Abstract

1. Introduction

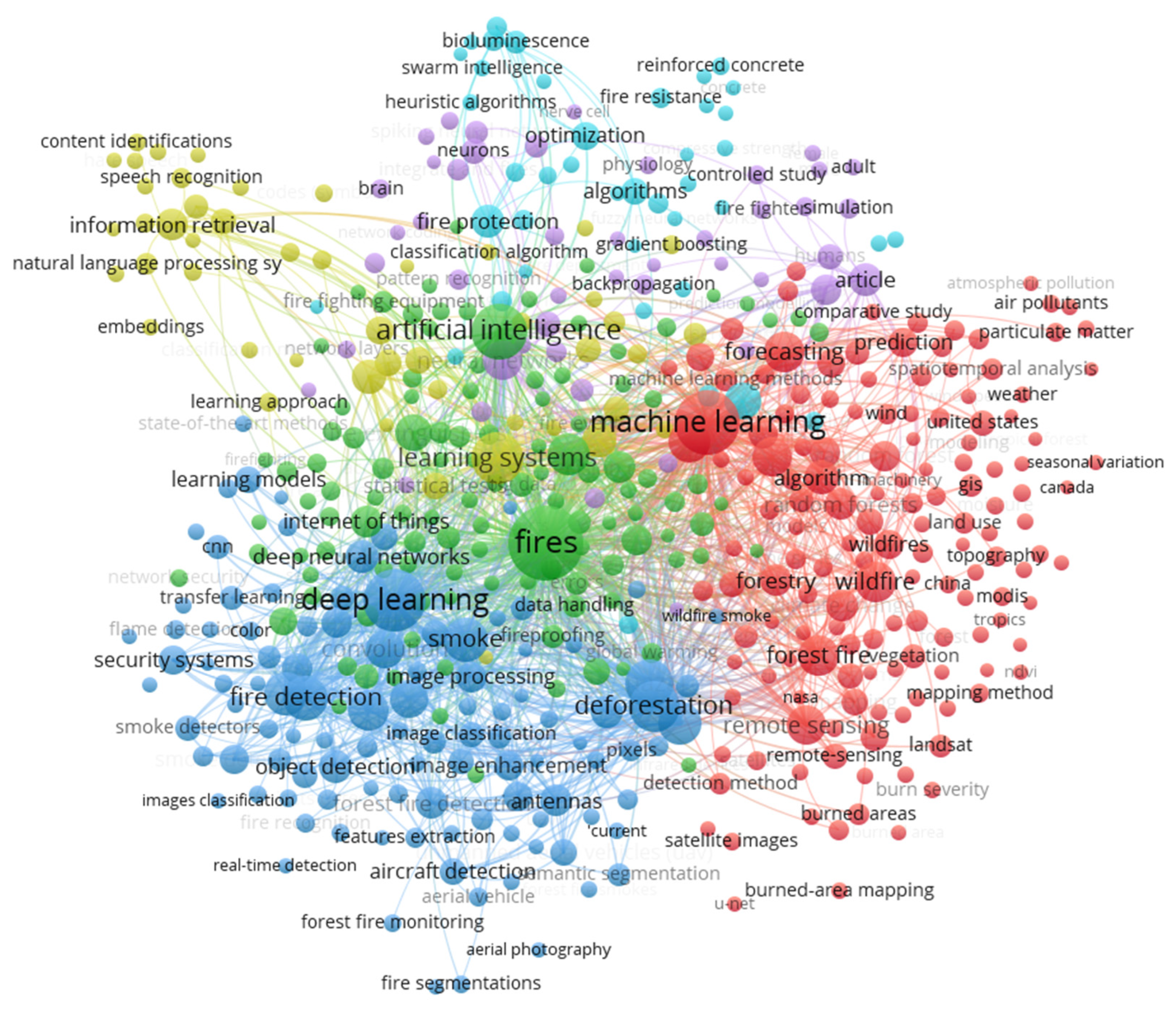

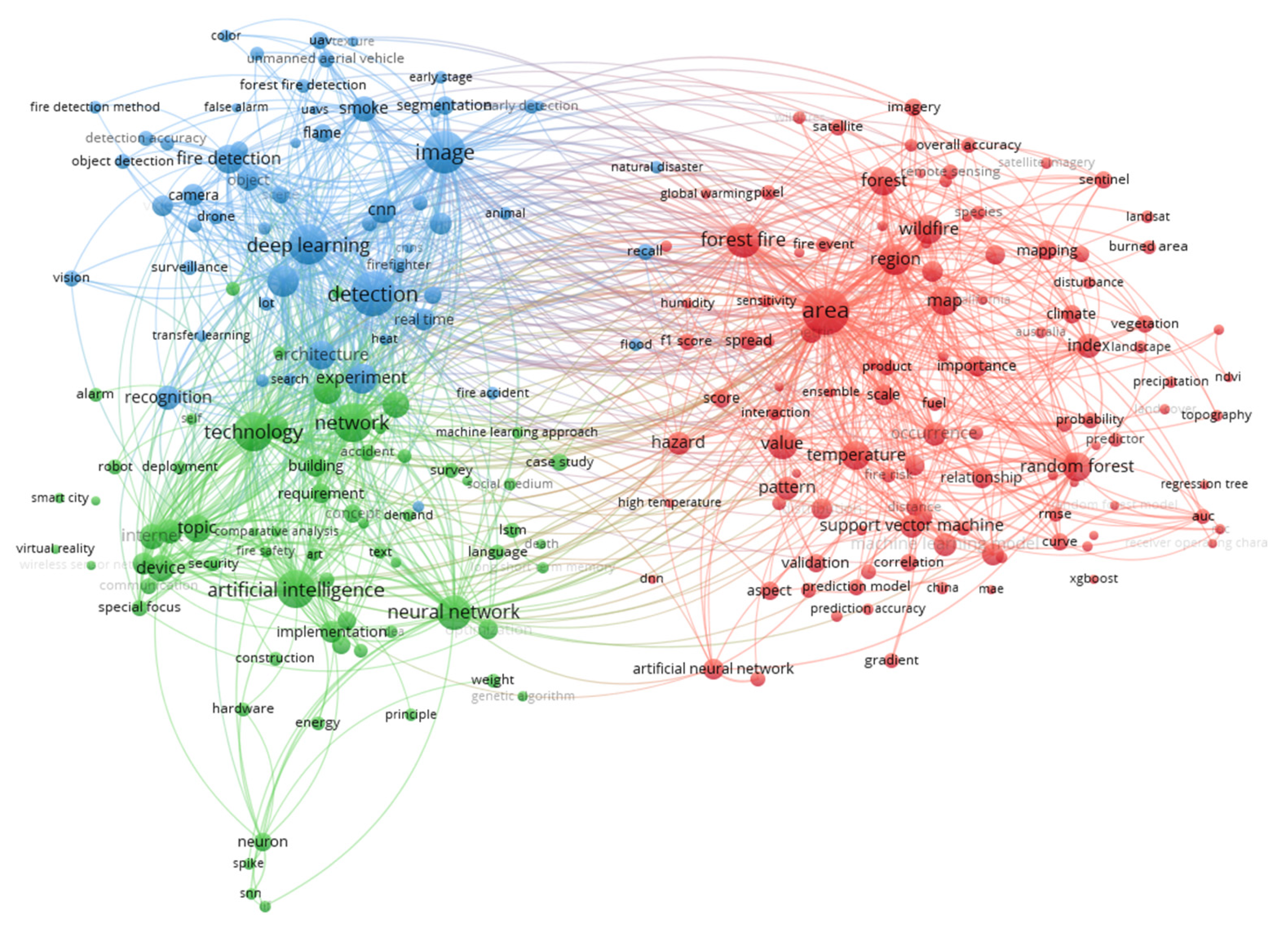

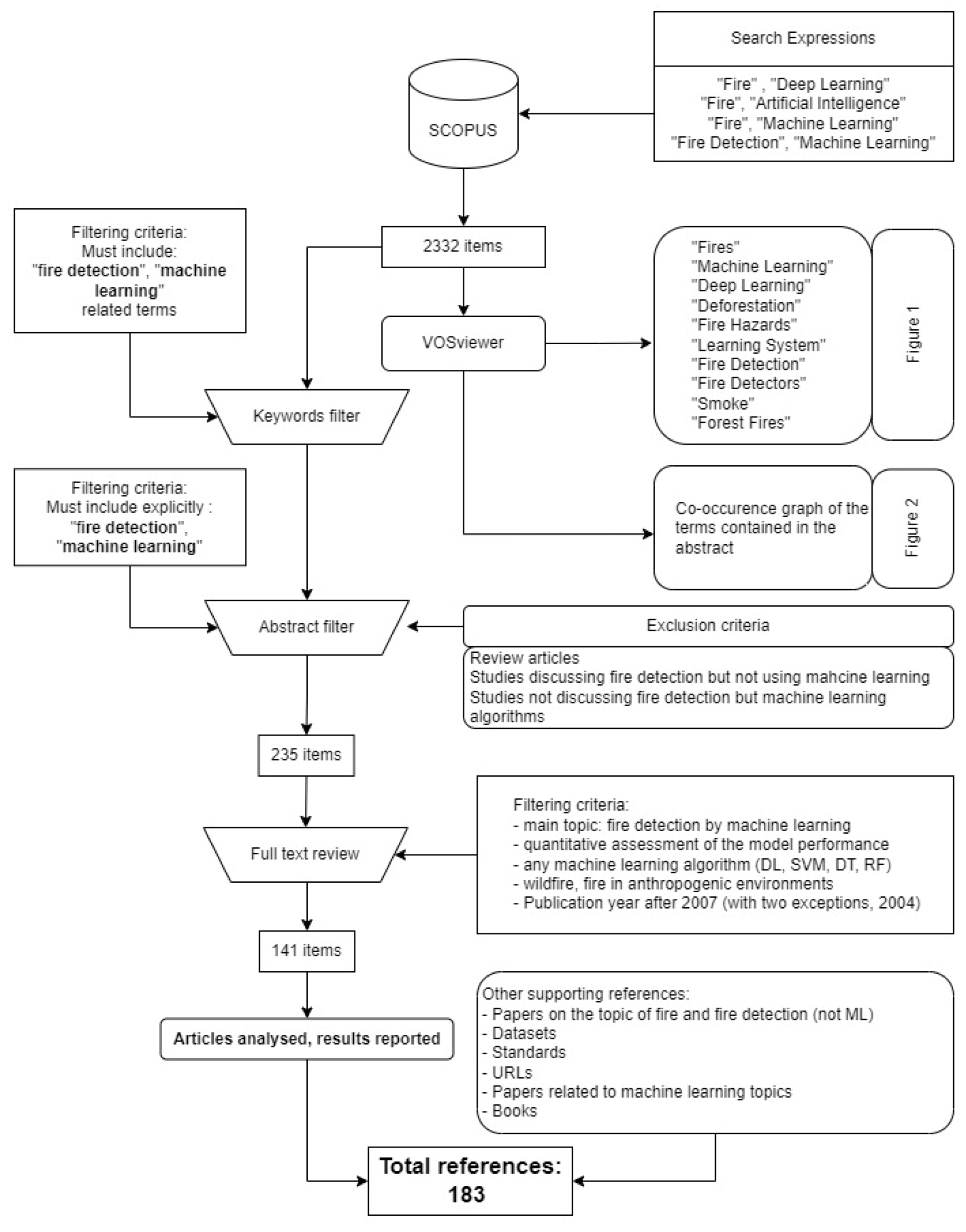

2. Materials and Methods

3. Results and Discussion

3.1. Machine Learning and Deep Learning Techniques in Fire Detection Sensory Systems

- DL techniques are more suitable for large datasets and for data with a large number of features, such as images or video streams. However, their computational cost is significantly higher than that of ML techniques, and in some cases specialized hardware, such as GPUs, is required.

- In DL techniques, feature extraction is performed automatically (e.g., by CNNs processing images or video streams). This is not possible with ML techniques, which require some form of manual feature extraction.
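To make the contrast between manual and automatic feature extraction concrete, the sketch below computes the kind of hand-crafted feature vector a classical ML classifier (e.g., an SVM or RF) would receive. It is a minimal illustration under stated assumptions: the input is an RGB image held in a NumPy array, and the features (per-channel means and standard deviations, plus the fraction of pixels passing a simple "fire-colored" rule, R > 180 and R ≥ G > B) are hypothetical choices, not those of any reviewed study. A CNN would instead learn its features directly from the raw pixels.

```python
import numpy as np

def handcrafted_fire_features(image: np.ndarray, red_threshold: int = 180) -> np.ndarray:
    """Return a small hand-crafted feature vector for an RGB image (H x W x 3).

    Hypothetical features: per-channel means, per-channel standard deviations,
    and the fraction of pixels that satisfy an illustrative fire-color rule.
    """
    img = image.astype(np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    # Illustrative color rule: strong red component with R >= G > B.
    fire_mask = (r > red_threshold) & (r >= g) & (g > b)
    pixels = img.reshape(-1, 3)
    means = pixels.mean(axis=0)
    stds = pixels.std(axis=0)
    fire_fraction = fire_mask.mean()
    return np.concatenate([means, stds, [fire_fraction]])

# Usage: a synthetic 4x4 image whose top half is "flame-colored".
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[:2] = (230, 140, 40)   # orange pixels satisfy the rule
image[2:] = (60, 90, 120)    # bluish pixels do not
features = handcrafted_fire_features(image)
print(features.shape)        # (7,)
print(features[-1])          # 0.5
```

In a classical pipeline, one such vector per image would be passed to the classifier; the color rule and the threshold of 180 are manual design choices of exactly the kind a DL model avoids.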

3.1.1. Forest Fire

- Due to the large areas over which they can occur, forest fires are extremely difficult to detect at early stages, when suppression could still be achieved with little effort;

- Forest fires are a non-negligible source of GHG and pollutant emissions (CO, CO2, CH4, NOx and particulate matter) and have a direct effect on atmospheric chemistry and the atmospheric heat budget (Saha et al. [1]);

- Forest fires reduce terrestrial ecosystem productivity and deplete the carbon stock of the forest environment (Amiro et al. [25]);

- Forest fires degrade soil fertility, crop productivity, and water quality and quantity (Venkatesh et al. [26]).

3.1.2. Civil and Industrial Facilities

3.1.3. Office and Residential Facilities

3.2. Machine Learning Algorithms for Forest Fire Detection

3.3. Smoke Detection

- DS1, consisting of four classes: (1) “smoke”, (2) “non-smoke”, (3) “smoke with fog”, and (4) “non-smoke with fog”. The total number of images was 72,012: 18,532 each for “smoke” and “smoke with fog”, and 17,474 each for “non-smoke” and “non-smoke with fog”.

- DS2, consisting of seven videos of smoke, captured in different environments: smoke at long distance, smoke in a parking lot with other moving objects, and smoke released by a burning cotton rope.

- DS3, consisting of 252 images annotated from DS1 by considering two classes (“smoke” and “smoke with fog”).

- Superpixel segmentation;

- Redefinition of the local density of sample points;

- Adaptive double truncation distance;

- Automatic selection of cluster centers.
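The steps listed above refine the basic density peak clustering (DPC) procedure, in which each sample point receives a local density, a distance to the nearest denser point, and cluster centers are the points where both are large. The sketch below is a minimal, hedged illustration of that plain DPC baseline only: it uses a single fixed truncation distance `d_c`, a user-supplied number of clusters, and generic feature vectors (for example, mean superpixel colors), and it deliberately omits the superpixel segmentation, the redefined density and the adaptive double truncation distance. The function and parameter names are hypothetical.

```python
import numpy as np

def density_peak_clustering(points: np.ndarray, d_c: float, n_clusters: int) -> np.ndarray:
    """Plain density peak clustering with a single, fixed truncation distance d_c.

    Hypothetical helper: TSDPC-specific refinements are intentionally omitted.
    """
    n = len(points)
    # Pairwise Euclidean distances between all sample points.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    # Local density rho: number of other points closer than d_c (self excluded).
    rho = (dist < d_c).sum(axis=1) - 1
    # Process points from highest to lowest density; a stable sort breaks ties by index.
    order = np.argsort(-rho, kind="stable")
    delta = np.zeros(n)
    nearest_higher = np.full(n, -1)
    for rank, i in enumerate(order):
        if rank == 0:
            delta[i] = dist[i].max()  # densest point: distance to the farthest point
            continue
        denser = order[:rank]  # points ranked before i (at least as dense)
        j = denser[np.argmin(dist[i, denser])]
        delta[i] = dist[i, j]
        nearest_higher[i] = j
    # Centers: largest gamma = rho * delta. The global density peak is always kept
    # as a center so that every other point can be traced back to some center.
    gamma = rho * delta
    gamma[order[0]] = np.inf
    centers = np.argsort(-gamma, kind="stable")[:n_clusters]
    labels = np.full(n, -1)
    labels[centers] = np.arange(n_clusters)
    # Remaining points, in decreasing density, inherit their nearest denser neighbor's label.
    for i in order:
        if labels[i] == -1:
            labels[i] = labels[nearest_higher[i]]
    return labels

# Usage: two well-separated groups of 2-D feature vectors.
pts = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],
                [5.0, 5.0], [5.1, 5.0], [5.0, 5.1], [5.1, 5.1]])
labels = density_peak_clustering(pts, d_c=0.5, n_clusters=2)
print(labels)  # [0 0 0 0 1 1 1 1]
```

Choosing `d_c` by hand is exactly the weakness that an adaptive truncation distance and automatic center selection are meant to remove; here both are fixed inputs.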

3.4. Machine Learning Algorithms for Fire Detection in Civil and Industrial Facilities

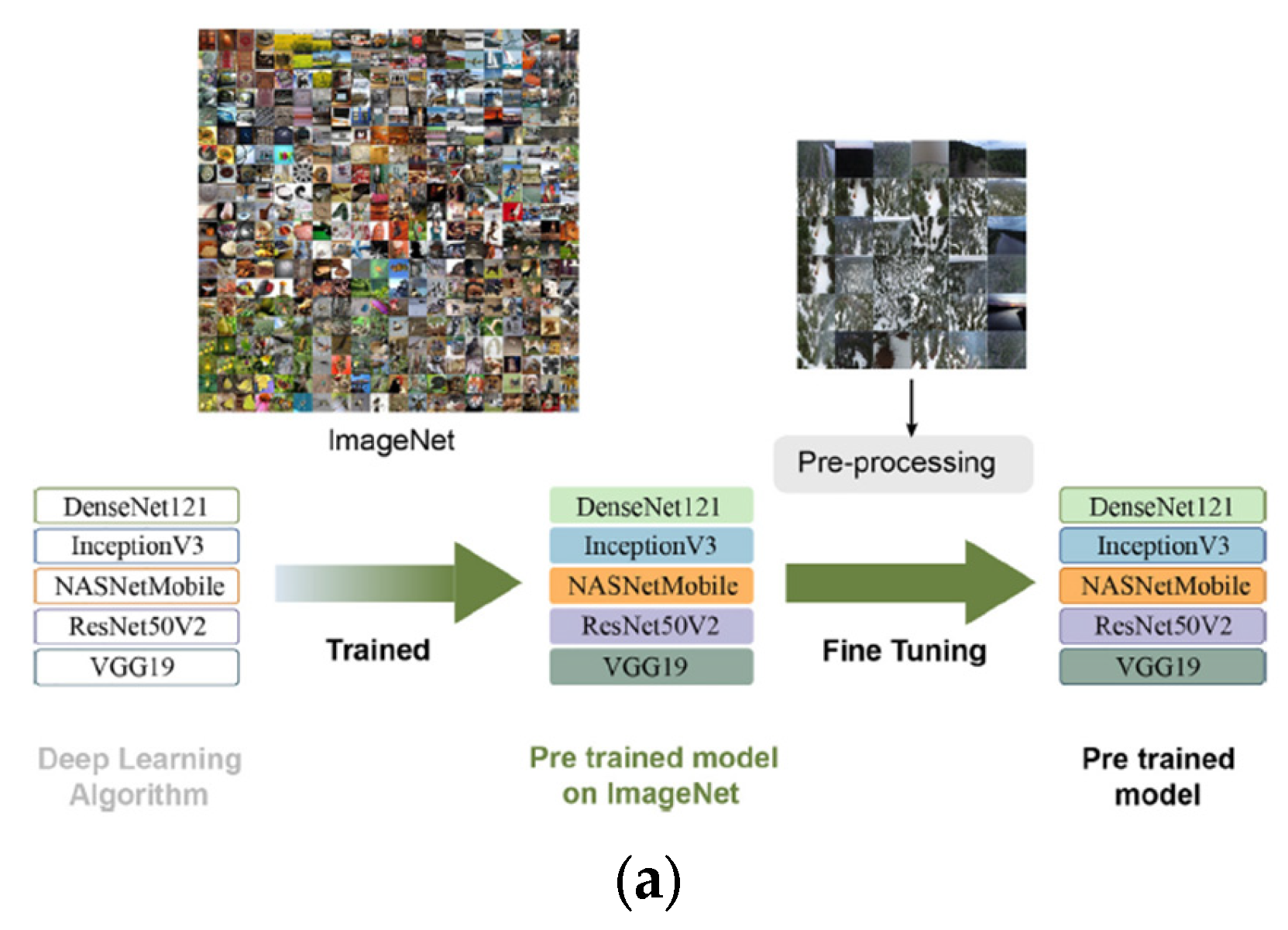

3.5. Transfer Learning in Fire Detection with Deep Learning Techniques

3.6. Image Datasets for Fire Detection Models

3.7. Remote Sensing Based on Image Capture

3.7.1. Optical Cameras

3.7.2. Thermal IR Cameras

3.8. Performance Metrics, Class Imbalance and Data Augmentation

- Cross-entropy loss, commonly used for multiclass datasets; it is not particularly effective for highly imbalanced datasets.

- Dice loss, frequently used in image segmentation problems. Dice loss is defined as one minus the Dice coefficient, so minimizing it maximizes the overlap between prediction and ground truth. One of its limitations is that it weighs FPs and FNs equally. For highly imbalanced data, such as small burned areas against a large unburned background, FNs can be weighted more heavily than FPs to improve recall.

- Lovász loss (Berman et al. [170]), a tractable surrogate for optimizing the intersection-over-union (Jaccard) index, is used in image segmentation problems (flaw detection in industrial production lines, tumor segmentation, and road line detection). The Lovász-Softmax variant is appropriate for multiclass tasks.

- Online Hard Example Mining (OHEM) loss, Shrivastava et al. [171]. OHEM puts more emphasis on hard (high-loss) examples; however, unlike focal loss, which only down-weights easy examples, OHEM discards them completely.

- Focal loss, Lin et al. [172]. It was designed for one-stage object detection, where the imbalance between classes is extreme (of the order of 1:1000). It extends the standard binary cross-entropy loss with a modulating factor (1 − p_t)^γ, which reduces the loss contribution from easy examples and extends the range in which a sample receives low loss.

- For semantic segmentation problems, a list of loss functions was reviewed by Jadon [173]: Tversky Loss (a generalization of the Dice coefficient that assigns separate weights to FPs and FNs), Focal Tversky Loss (focuses on hard examples by down-weighting easy, common samples), Sensitivity-Specificity Loss, Shape-aware Loss, Combo Loss (a weighted sum of Dice loss and a modified cross-entropy), Exponential Logarithmic Loss, Distance map-derived loss penalty term, Hausdorff Distance Loss, Correlation Maximized Structural Similarity Loss, and Log-Cosh Dice Loss.
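Several of the losses listed above can be compared side by side in a few lines. The sketch below is a minimal NumPy illustration, assuming a binary segmentation mask flattened to arrays of per-pixel fire probabilities `p` and labels `y` in {0, 1}; the smoothing constant, the default weights (0.3 for FPs, 0.7 for FNs in the Tversky loss, γ = 2 in the focal loss) and all function names are illustrative assumptions. Lovász and OHEM are not covered, and framework implementations (PyTorch, TensorFlow) would compute the same quantities on tensors so that they can be differentiated.

```python
import numpy as np

EPS = 1e-7  # clipping constant that keeps log() finite

def binary_cross_entropy(p, y):
    """Mean binary cross-entropy between predicted probabilities p and labels y (0/1)."""
    p = np.clip(p, EPS, 1 - EPS)
    return float(np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p))))

def soft_counts(p, y):
    """Soft true-positive, false-positive and false-negative counts."""
    tp = np.sum(p * y)
    fp = np.sum(p * (1 - y))
    fn = np.sum((1 - p) * y)
    return tp, fp, fn

def dice_loss(p, y, smooth=1.0):
    """1 - Dice coefficient: minimizing it maximizes prediction/ground-truth overlap."""
    tp, fp, fn = soft_counts(p, y)
    return float(1 - (2 * tp + smooth) / (2 * tp + fp + fn + smooth))

def tversky_loss(p, y, fp_weight=0.3, fn_weight=0.7, smooth=1.0):
    """1 - Tversky index; fn_weight > fp_weight penalizes missed fire pixels more.

    With fp_weight = fn_weight = 0.5 (and smooth = 0) it reduces to the Dice loss.
    """
    tp, fp, fn = soft_counts(p, y)
    return float(1 - (tp + smooth) / (tp + fp_weight * fp + fn_weight * fn + smooth))

def focal_loss(p, y, gamma=2.0, alpha=None):
    """Focal loss: cross-entropy scaled by (1 - p_t)**gamma to down-weight easy pixels."""
    p = np.clip(p, EPS, 1 - EPS)
    p_t = np.where(y == 1, p, 1 - p)   # probability assigned to the true class
    weight = (1 - p_t) ** gamma
    if alpha is not None:              # optional class-balancing factor alpha_t
        weight = weight * np.where(y == 1, alpha, 1 - alpha)
    return float(np.mean(-weight * np.log(p_t)))

# Usage: one fire pixel among ten (a highly imbalanced mask).
y = np.array([1, 0, 0, 0, 0, 0, 0, 0, 0, 0])
p = np.array([0.6, 0.2, 0.1, 0.1, 0.3, 0.1, 0.05, 0.1, 0.2, 0.1])
for name, loss in [("BCE", binary_cross_entropy), ("Dice", dice_loss),
                   ("Tversky", tversky_loss), ("Focal", focal_loss)]:
    print(f"{name}: {loss(p, y):.4f}")
```

Setting γ = 0 (and no α) turns the focal loss back into plain cross-entropy, which makes the effect of the modulating factor easy to isolate when experimenting.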

- Suboptimal Warping Time Series Generator (SPAWNER), Kamycki et al. [177]. The SPAWNER algorithm creates a new time series by averaging two randomly selected time series with the same class label. Before averaging, the two series are aligned using dynamic time warping (DTW), a standard method that warps the time dimension of two time series to find an optimal alignment between them (Sakoe and Chiba [178]).

- Discriminative Guided Warping, a dynamic time warping-based method that uses a reference time series to guide the warping of the time steps of another time series, aligning the two on the basis of high-level features.
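The align-then-average idea behind SPAWNER can be sketched with a textbook dynamic-programming DTW. This is a simplified illustration under stated assumptions: univariate series, absolute-difference cost, no warping-window constraint, and plain averaging of the matched points along the optimal DTW path. It does not reproduce the specific suboptimal warping of the published algorithm, and the names `dtw_path` and `warp_average` are hypothetical.

```python
import numpy as np

def dtw_path(a: np.ndarray, b: np.ndarray):
    """Optimal DTW alignment of two 1-D series (absolute-difference cost, no window)."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: match (diagonal), step in a, step in b.
            cost[i, j] = d + min(cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])
    # Backtrack from (n, m) to (1, 1) to recover the matched index pairs.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = int(np.argmin([cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1]]))
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return float(cost[n, m]), path[::-1]

def warp_average(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Average two same-class series point by point along their DTW path."""
    _, path = dtw_path(a, b)
    return np.array([(a[i] + b[j]) / 2.0 for i, j in path])

# Usage: the same "rise and decay" shape, with b delayed by one time step.
a = np.array([0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0])
b = np.array([0.0, 0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0])
distance, path = dtw_path(a, b)
synthetic = warp_average(a, b)   # new sample for the shared class label
print(distance)                  # 0.0
```

The synthetic series has the length of the warping path, which can exceed both inputs; in practice it would usually be resampled to the original length before being added to the training set.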

4. Conclusions and Recommendations for Further Investigation and Limitations

- Very few references report critical dataset properties, such as class imbalance, content diversity and difficulty (e.g., fire-resembling objects that could confuse the algorithm);

- The volume of the datasets is insufficient in some cases, such as the Fire & Smoke dataset [143];

- The implications and, ultimately, the cost of FPs and FNs must be thoroughly assessed. In this respect, a practical, result-oriented workflow could be the following:

- (i) Defining a misclassification cost function that quantifies the implications of FPs and FNs. This function depends on the fire type (e.g., forest fire, civil building fire, ship fire) and requires a thorough assessment of the consequences of FPs and FNs. In the context of the reliability concept mentioned earlier, the lower the value of the misclassification cost function, the higher the reliability of the model;

- (ii) Adjusting the model hyperparameters so that the model minimizes the misclassification cost function, with performance assessed through relevant metrics (recall, i.e., sensitivity, confusion matrix, ROC-AUC and P-R curve). Ideally, the misclassification cost function should be used for model training instead of the standard loss functions for classification (categorical cross-entropy, binary cross-entropy, etc.).

- Further investigation should be carried out to understand the mechanisms that lead to misclassified samples, which are scarcely discussed (e.g., [42]). This could be particularly useful for CNN-based algorithms, where visualizing the processed image after each layer could reveal how features are extracted and explain how and why misclassification occurs.

- Very few studies discuss class stratification when preparing the training/validation/test sets, and even fewer discuss cross-validation (e.g., [45]). More generally, in classification problems, issues such as overfitting occur frequently and are sometimes not thoroughly investigated. The usual approach consists of randomly splitting a dataset into training and test subsets (and sometimes a validation subset), with or without cross-validation. However, most datasets consist of samples with some degree of similarity to one another, so a reasonably well-designed and well-trained model can produce results that falsely suggest that overfitting is low or nonexistent. Testing on samples from other datasets (which could be considered outliers with respect to the training/test datasets) can sometimes reveal the true degree of overfitting.

- Although several public datasets exist, more effort is required to build new, high-quality datasets or to improve the existing ones. Among the dataset properties that are important for the fire classification problem, several deserve special attention: dataset volume, class imbalance, positive samples that are difficult to classify or negative samples resembling positives (discussed in very few studies, e.g., [49]), and content diversity.

- The implications of class imbalance, and ways of addressing it, are rarely discussed in the literature considered in this review. This is especially important because class imbalance was identified in quite a few of the datasets examined here.

- For computer vision-based techniques, an interesting approach could be adding a new spectral channel to the RGB channels (Kim and Ruy [92]) or adding another feature to the dataset. A recent trend is the so-called sensor fusion approach, which consists of collecting and processing information from two or more different types of sensors, such as an RGB camera and a thermal IR camera. Besides optical/IR fusion, other sensor combinations have also been reported: Benzekri et al. [190] used a network of wireless sensors to record parameters such as temperature and carbon monoxide concentration as input data for a wildfire detection DL algorithm.

- Although the literature search yielded a large number of relatively recent studies, both theoretical (based on datasets only) and experimental (involving some kind of practical approach), no report of an operational, production-scale system was identified.

- The main source of information for this review was the SCOPUS database, chosen for its comprehensiveness and because it already includes other databases (such as IEEE Xplore, arXiv and ChemRxiv). However, the search could be extended to other important information sources, such as SCIE.

- Some topics covered in this review (such as transfer learning in fire detection problems) are overrepresented in the literature set considered. Transfer learning in fire detection could be the topic of a standalone review.
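Steps (i) and (ii) of the workflow proposed above can be illustrated with a minimal sketch in which the misclassification cost is a weighted sum of FPs and FNs, and the adjusted parameter is the decision threshold applied to the model's fire probability. This is only one simple instance of step (ii) under stated assumptions: the cost values (1 per FP, 10 per FN), the validation labels and scores, and the function names are all hypothetical. In practice, the costs would come from the fire-type-specific assessment of step (i), and the threshold would be tuned on a validation set rather than the test set.

```python
import numpy as np

def misclassification_cost(y_true, y_pred, cost_fp: float, cost_fn: float) -> float:
    """Total cost of binary predictions (1 = fire, 0 = no fire)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    fp = np.sum((y_pred == 1) & (y_true == 0))  # false alarms
    fn = np.sum((y_pred == 0) & (y_true == 1))  # missed fires
    return float(cost_fp * fp + cost_fn * fn)

def select_threshold(y_true, scores, cost_fp: float, cost_fn: float):
    """Return the decision threshold (and its cost) that minimizes the total cost."""
    scores = np.asarray(scores, dtype=float)
    # Candidates: every observed score, plus +inf (i.e., never predict fire).
    candidates = np.append(np.unique(scores), np.inf)
    costs = [misclassification_cost(y_true, (scores >= t).astype(int), cost_fp, cost_fn)
             for t in candidates]
    best = int(np.argmin(costs))
    return float(candidates[best]), costs[best]

# Usage with hypothetical validation labels and fire probabilities.
y_val = [0, 0, 0, 1, 0, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.70, 0.90]
print(select_threshold(y_val, scores, cost_fp=1.0, cost_fn=1.0))   # (0.7, 1.0)
print(select_threshold(y_val, scores, cost_fp=1.0, cost_fn=10.0))  # (0.35, 2.0)
```

With equal costs, the selected threshold is 0.70 (no false alarms, one missed fire). Weighting a missed fire ten times more than a false alarm lowers the threshold to 0.35, accepting two false alarms so that no fire is missed, which is the kind of trade-off the misclassification cost function is meant to make explicit.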

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| AP | Average Precision |
| AUC | Area under the Curve |
| CFD | Computational Fluid Dynamics |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| FC | Fully Connected |
| FN | False Negative |
| FNR | False Negative Rate |
| FP | False Positive |
| FPR | False Positive Rate |
| FPS | Frames per Second |
| FT | Fine Tuning |
| HRR | Heat Release Rate |
| LSTM | Long Short-Term Memory |
| MTBS | Monitoring Trends in Burn Severity |
| NDVI | Normalized Difference Vegetation Index |
| NIR | Near Infrared |
| P | Precision |
| PSNR | Peak Signal-to-Noise Ratio |
| R | Recall |
| RF | Random Forest |
| ROC | Receiver Operating Characteristic |
| SHAP | SHapley Additive exPlanations |
| SMOTE | Synthetic Minority Oversampling Technique |
| SVM | Support Vector Machine |
| SVR | Support Vector Regression |
| TSDPC | Truncation distance Self-adaptive Density Peak Clustering |

References

- Saha, S.; Bera, B.; Shit, P.K.; Bhattacharjee, S.; Sengupta, D.; Sengupta, N.; Adhikary, P.P. Recurrent forest fires, emission of atmospheric pollutants (GHGs) and degradation of tropical dry deciduous forest ecosystem services. Total Environ. Res. Themes 2023, 7, 100057.

- Kala, C.P. Environmental and socioeconomic impacts of forest fires: A call for multilateral cooperation and management interventions. Nat. Hazards Res. 2023, 3, 286–294.

- Elogne, A.G.; Piponiot, C.; Zo-Bi, I.C.; Amani, B.H.; Van der Meersch, V.; Hérault, B. Life after fire—Long-term responses of 20 timber species in semi-deciduous forests of West Africa. For. Ecol. Manag. 2023, 538, 120977.

- Lowesmith, B.; Hankinson, G.; Acton, M.; Chamberlain, G. An Overview of the Nature of Hydrocarbon Jet Fire Hazards in the Oil and Gas Industry and a Simplified Approach to Assessing the Hazards. Process Saf. Environ. Prot. 2007, 85, 207–220.

- Aydin, N.; Seker, S.; Şen, C. A new risk assessment framework for safety in oil and gas industry: Application of FMEA and BWM based picture fuzzy MABAC. J. Pet. Sci. Eng. 2022, 219, 111059.

- Solukloei, H.R.J.; Nematifard, S.; Hesami, A.; Mohammadi, H.; Kamalinia, M. A fuzzy-HAZOP/ant colony system methodology to identify combined fire, explosion, and toxic release risk in the process industries. Expert Syst. Appl. 2022, 192, 116418.

- Park, H.; Nam, K.; Lee, J. Lessons from aluminum and magnesium scraps fires and explosions: Case studies of metal recycling industry. J. Loss Prev. Process Ind. 2022, 80, 104872.

- Zhou, J.; Reniers, G. Dynamic analysis of fire induced domino effects to optimize emergency response policies in the chemical and process industry. J. Loss Prev. Process Ind. 2022, 79, 104835.

- Østrem, L.; Sommer, M. Inherent fire safety engineering in complex road tunnels—Learning between industries in safety management. Saf. Sci. 2021, 134, 105062.

- Ibrahim, M.A.; Lönnermark, A.; Hogland, W. Safety at waste and recycling industry: Detection and mitigation of waste fire accidents. Waste Manag. 2022, 141, 271–281.

- Wang, S.; Zhang, Y.; Hsieh, T.-H.; Liu, W.; Yin, F.; Liu, B. Fire situation detection method for unmanned fire-fighting vessel based on coordinate attention structure-based deep learning network. Ocean Eng. 2022, 266, 113208.

- Davies, H.F.; Visintin, C.; Murphy, B.P.; Ritchie, E.G.; Banks, S.C.; Davies, I.D.; Bowman, D.M. Pyrodiversity trade-offs: A simulation study of the effects of fire size and dispersal ability on native mammal populations in northern Australian savannas. Biol. Conserv. 2023, 282, 110077.

- Lindenmayer, D.; MacGregor, C.; Evans, M.J. Multi-decadal habitat and fire effects on a threatened bird species. Biol. Conserv. 2023, 283, 110124.

- Liu, W.; Zhang, Z.; Li, J.; Wen, Y.; Liu, F.; Zhang, W.; Liu, H.; Ren, C.; Han, X. Effects of fire on the soil microbial metabolic quotient: A global meta-analysis. CATENA 2023, 224, 106957.

- Batista, E.K.L.; Figueira, J.E.C.; Solar, R.R.C.; de Azevedo, C.S.; Beirão, M.V.; Berlinck, C.N.; Brandão, R.A.; de Castro, F.S.; Costa, H.C.; Costa, L.M.; et al. In Case of Fire, Escape or Die: A Trait-Based Approach for Identifying Animal Species Threatened by Fire. Fire 2023, 6, 242.

- Courbat, J.; Pascu, M.; Gutmacher, D.; Briand, D.; Wöllenstein, J.; Hoefer, U.; Severin, K.; de Rooij, N. A colorimetric CO sensor for fire detection. Procedia Eng. 2011, 25, 1329–1332.

- Derbel, F. Performance improvement of fire detectors by means of gas sensors and neural networks. Fire Saf. J. 2004, 39, 383–398.

- ASTM. ASTM Standard Terminology of Fire Standards; ASTM: West Conshohocken, PA, USA, 2004.

- Fonollosa, J.; Solórzano, A.; Marco, S. Chemical Sensor Systems and Associated Algorithms for Fire Detection: A Review. Sensors 2018, 18, 553.

- National Research Council. Fire and Smoke: Understanding the Hazards; National Academies Press: Washington, DC, USA, 1986; ISBN 0309568609.

- Chagger, R.; Smith, D. The Causes of False Fire Alarms in Buildings; Briefing Paper; BRE Global Ltd.: Watford, UK, 2014.

- Ishii, H.; Ono, T.; Yamauchi, Y.; Ohtani, S. An algorithm for improving the reliability of detection with processing of multiple sensors’ signal. Fire Saf. J. 1991, 17, 469–484.

- Han, W.; Zhang, X.; Wang, Y.; Wang, L.; Huang, X.; Li, J.; Wang, S.; Chen, W.; Li, X.; Feng, R.; et al. A survey of machine learning and deep learning in remote sensing of geological environment: Challenges, advances, and opportunities. ISPRS J. Photogramm. Remote. Sens. 2023, 202, 87–113.

- Dietterich, T.G. Ensemble Methods in Machine Learning. In Multiple Classifier Systems. MCS 2000; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1857.

- Amiro, B.D.; Chen, J.M.; Liu, J. Net primary productivity following forest fire for Canadian ecoregions. Can. J. For. Res. 2000, 30, 939–947.

- Venkatesh, K.; Preethi, K.; Ramesh, H. Evaluating the effects of forest fire on water balance using fire susceptibility maps. Ecol. Indic. 2020, 110, 105856.

- Alharbi, B.H.; Pasha, M.J.; Al-Shamsi, M.A.S. Firefighter exposures to organic and inorganic gas emissions in emergency residential and industrial fires. Sci. Total Environ. 2021, 770, 145332.

- Iliadis, L.S.; Papastavrou, A.K.; Lefakis, P.D. A computer-system that classifies the prefectures of Greece in forest fire risk zones using fuzzy sets. For. Policy Econ. 2002, 4, 43–54.

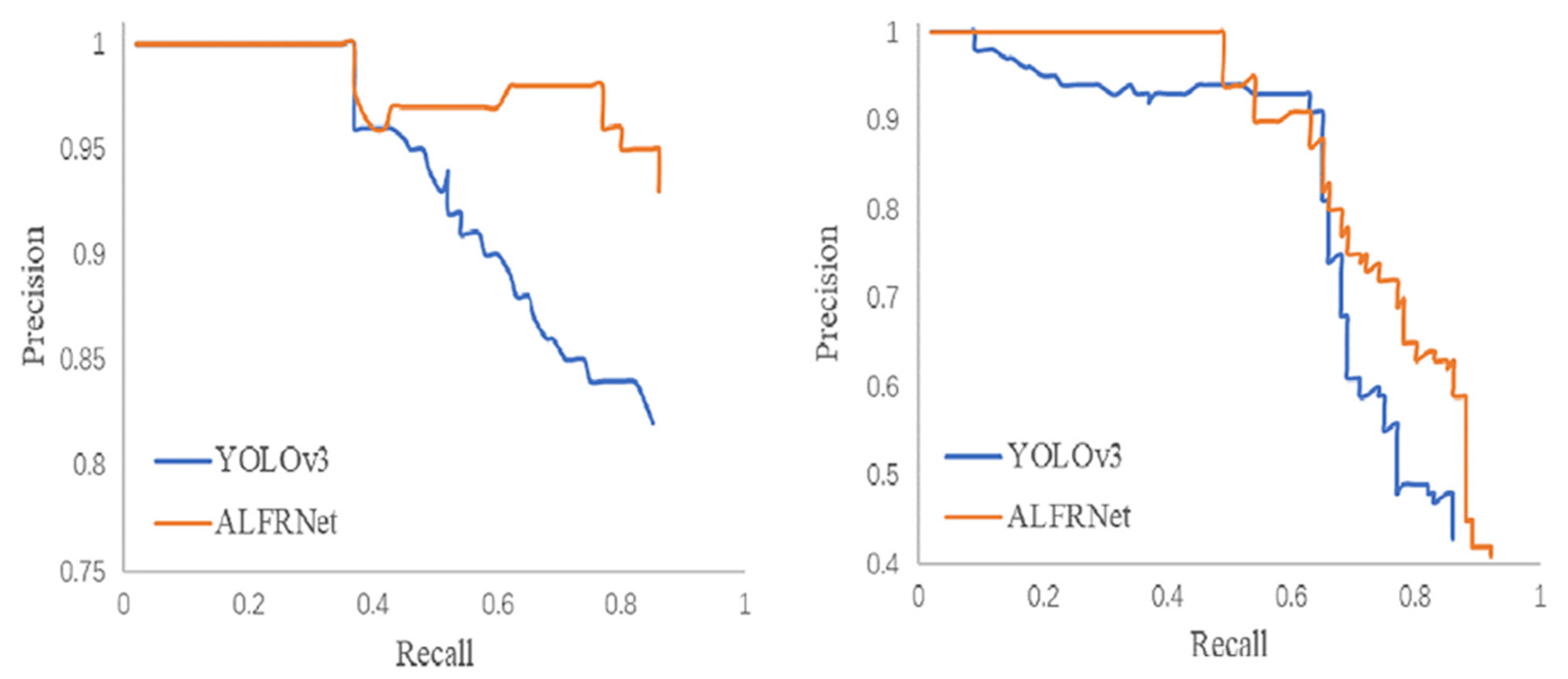

- Li, J.; Zhou, G.; Chen, A.; Wang, Y.; Jiang, J.; Hu, Y.; Lu, C. Adaptive linear feature-reuse network for rapid forest fire smoke detection model. Ecol. Inform. 2022, 68, 101584.

- Ma, H.; Liu, Y.; Ren, Y.; Yu, J. Detection of Collapsed Buildings in Post-Earthquake Remote Sensing Images Based on the Improved YOLOv3. Remote Sens. 2020, 12, 44.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Available online: https://arxiv.org/abs/1610.02357 (accessed on 1 August 2023).

- Zhan, J.; Hu, Y.; Zhou, G.; Wang, Y.; Cai, W.; Li, L. A high-precision forest fire smoke detection approach based on ARGNet. Comput. Electron. Agric. 2022, 196, 106874.

- Zhang, Q.; Xu, J.; Xu, L.; Guo, H. Deep Convolutional Neural Networks for Forest Fire Detection. In Proceedings of the International Forum on Management, Education and Information Technology Application (IFMEITA 2016), Guangzhou, China, 30–31 January 2016.

- Reis, H.C.; Turk, V. Detection of forest fire using deep convolutional neural networks with transfer learning approach. Appl. Soft Comput. 2023, 143, 110362.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Song, X.; Gao, S.; Liu, X.; Chen, C. An outdoor fire recognition algorithm for small unbalanced samples. Alex. Eng. J. 2021, 60, 2801–2809.

- Fernandes, A.M.; Utkin, A.B.; Lavrov, A.V.; Vilar, R.M. Development of neural network committee machines for automatic forest fire detection using lidar. Pattern Recognit. 2004, 37, 2039–2047.

- Ahn, Y.; Choi, H.; Kim, B.S. Development of early fire detection model for buildings using computer vision-based CCTV. J. Build. Eng. 2023, 65, 105647.

- AIHuB. 2022. Available online: https://aihub.or.kr/ (accessed on 29 July 2023).

- Biswas, A.; Ghosh, S.K.; Ghosh, A. Early Fire Detection and Alert System using Modified Inception-v3 under Deep Learning Framework. Procedia Comput. Sci. 2023, 218, 2243–2252.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. arXiv 2016, arXiv:1602.07261.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:1512.00567.

- Muhammad, K.; Ahmad, J.; Baik, S.W. Early fire detection using convolutional neural networks during surveillance for effective disaster management. Neurocomputing 2018, 288, 30–42.

- Foggia, P.; Saggese, A.; Vento, M. Real-Time Fire Detection for Video-Surveillance Applications Using a Combination of Experts Based on Color, Shape, and Motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.

- Chino, D.Y.T.; Avalhais, L.P.S.; Rodrigues, J.F.; Traina, A.J.M. BoWFire: Detection of Fire in Still Images by Integrating Pixel Color and Texture Analysis. In Proceedings of the 28th 2015 Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 95–102.

- Yar, H.; Khan, Z.A.; Ullah, F.U.M.; Ullah, W.; Baik, S.W. A modified YOLOv5 architecture for efficient fire detection in smart cities. Expert Syst. Appl. 2023, 231, 120465.

- Valikhujaev, Y.; Abdusalomov, A.; Cho, Y.I. Automatic Fire and Smoke Detection Method for Surveillance Systems Based on Dilated CNNs. Atmosphere 2020, 11, 1241.

- Gong, X.; Hu, H.; Wu, Z.; He, L.; Yang, L.; Li, F. Dark-channel based attention and classifier retraining for smoke detection in foggy environments. Digit. Signal Process. 2022, 123, 103454.

- Liu, Z.; Yang, X.; Liu, Y.; Qian, Z. Smoke-Detection Framework for High-Definition Video Using Fused Spatial- and Frequency-Domain Features. IEEE Access 2019, 7, 89687–89701.

- Gubbi, J.; Marusic, S.; Palaniswami, M. Smoke detection in video using wavelets and support vector machines. Fire Saf. J. 2009, 44, 1110–1115.

- Yuan, F.; Fang, Z.; Wu, S.; Yang, Y.; Fang, Y. Real-time image smoke detection using staircase searching-based dual threshold AdaBoost and dynamic analysis. IET Image Process. 2015, 9, 849–856.

- Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional Neural Networks Based Fire Detection in Surveillance Videos. IEEE Access 2018, 6, 18174–18183.

- Lin, G.; Zhang, Y.; Xu, G.; Zhang, Q. Smoke Detection on Video Sequences Using 3D Convolutional Neural Networks. Fire Technol. 2019, 55, 1827–1847.

- Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- He, L.; Gong, X.; Zhang, S.; Wang, L.; Li, F. Efficient attention based deep fusion CNN for smoke detection in fog environment. Neurocomputing 2021, 434, 224–238.

- Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Wu, X.; Lu, X.; Leung, H. A Video Based Fire Smoke Detection Using Robust AdaBoost. Sensors 2018, 18, 3780.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zeng, J.; Lin, Z.; Qi, C.; Zhao, X.; Wang, F. An Improved Object Detection Method Based On Deep Convolution Neural Network for Smoke Detection. In Proceedings of the International Conference on Machine Learning and Cybernetics (ICMLC), Chengdu, China, 15–18 July 2018; pp. 184–189.

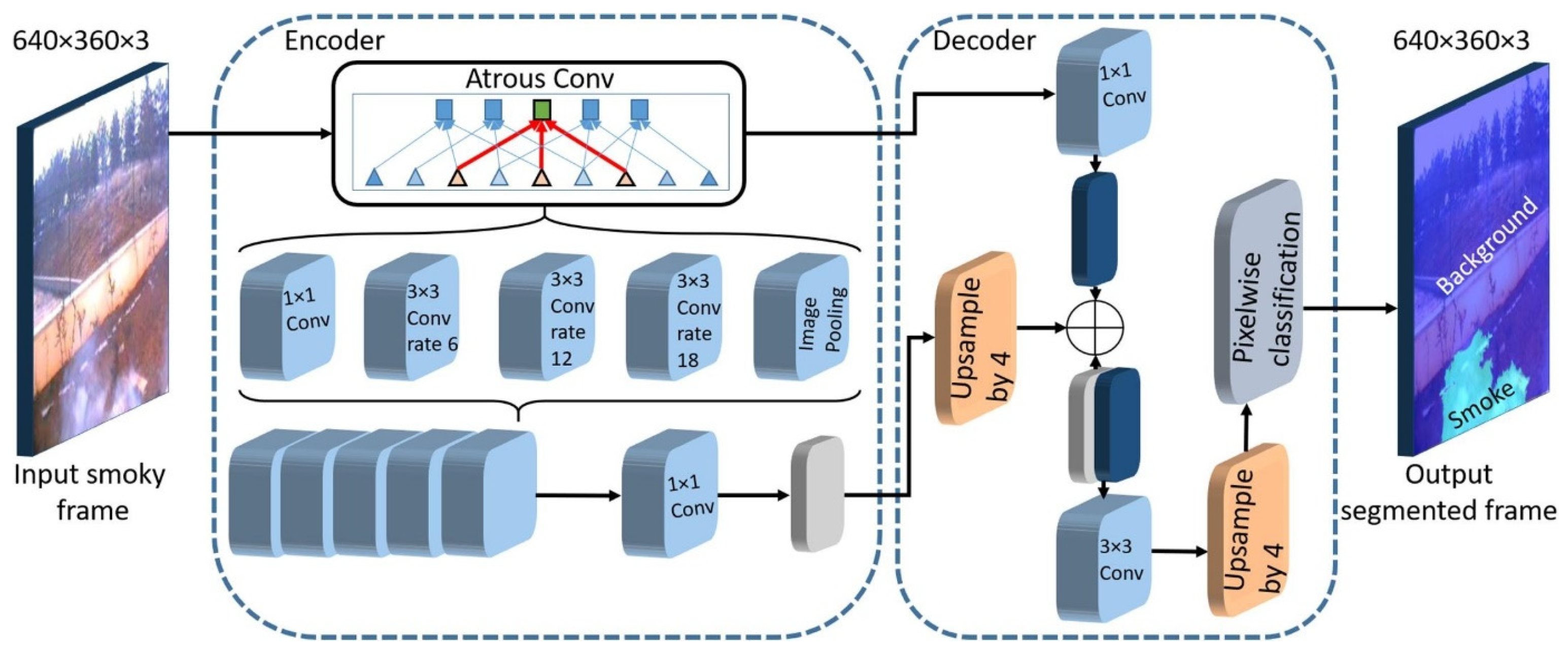

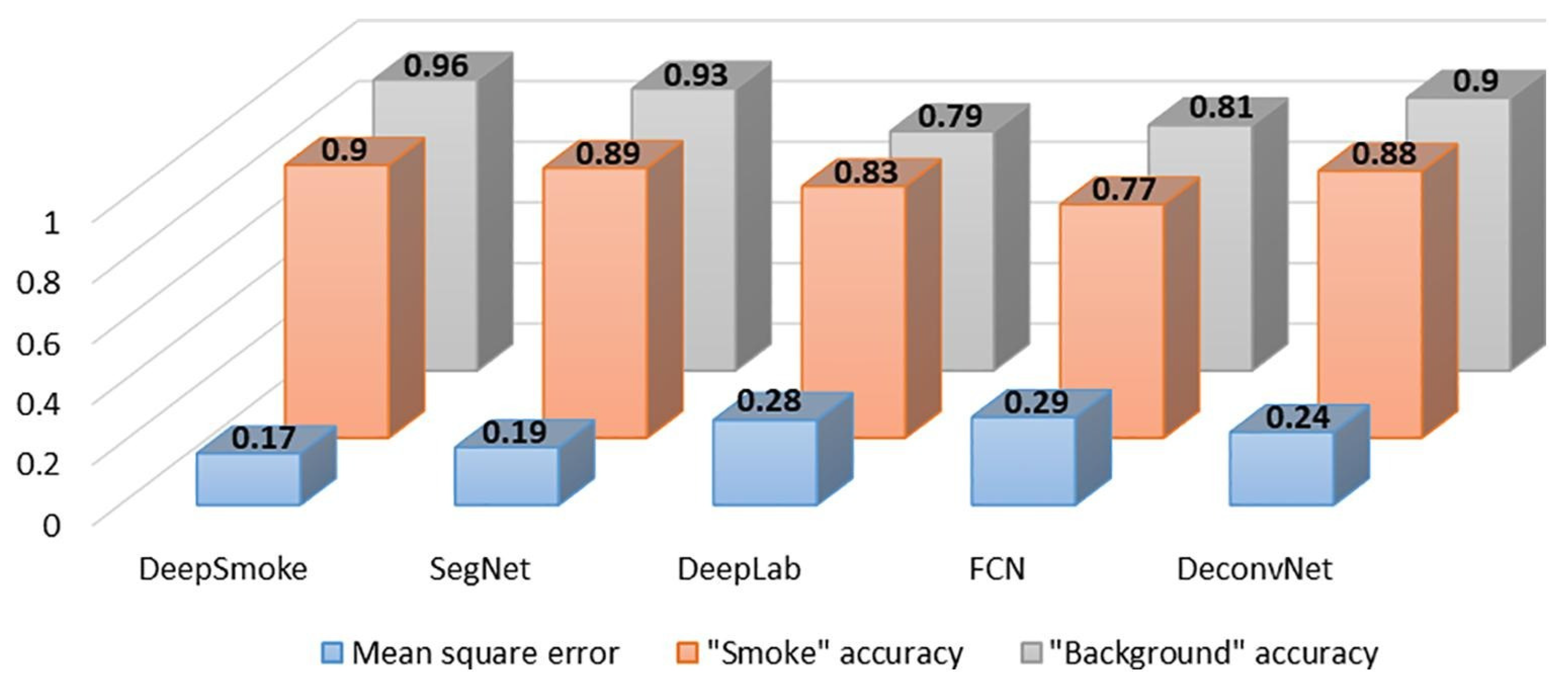

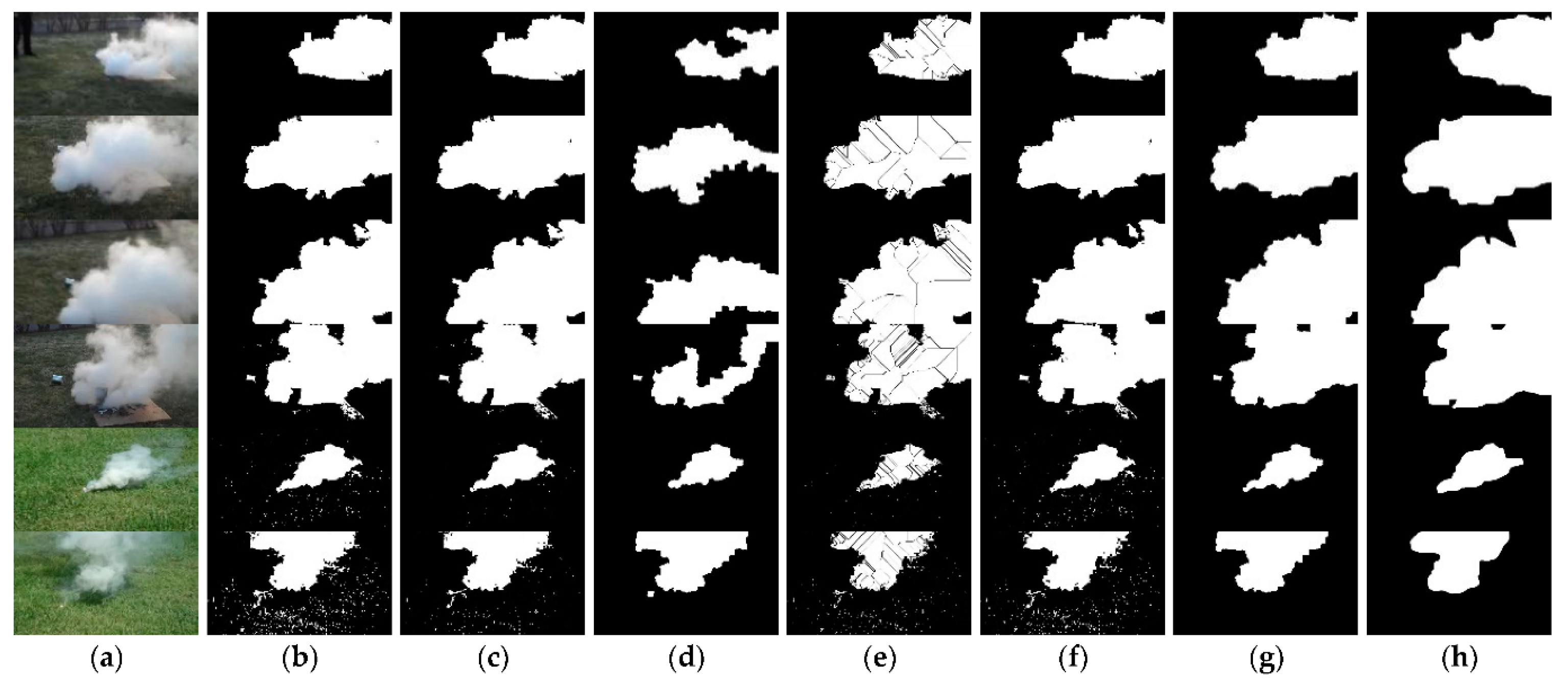

- Khan, S.; Muhammad, K.; Hussain, T.; Del Ser, J.; Cuzzolin, F.; Bhattacharyya, S.; Akhtar, Z.; de Albuquerque, V.H.C. DeepSmoke: Deep learning model for smoke detection and segmentation in outdoor environments. Expert Syst. Appl. 2021, 182, 115125.

- Yuan, F.; Li, K.; Wang, C.; Fang, Z. A lightweight network for smoke semantic segmentation. Pattern Recognit. 2023, 137, 109289.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Noh, H.; Hong, S.; Han, B. Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Ma, Z.; Cao, Y.; Song, L.; Hao, F.; Zhao, J. A New Smoke Segmentation Method Based on Improved Adaptive Density Peak Clustering. Appl. Sci. 2023, 13, 1281.

- Available online: https://github.com/sonvbhp199/Unet-Smoke/tree/main (accessed on 12 July 2023).

- Guan, J.; Li, S.; He, X.; Chen, J. Peak-Graph-Based Fast Density Peak Clustering for Image Segmentation. IEEE Signal Process. Lett. 2021, 28, 897–901.

- Kim, S.-Y.; Muminov, A. Forest Fire Smoke Detection Based on Deep Learning Approaches and Unmanned Aerial Vehicle Images. Sensors 2023, 23, 5702.

- Ashiquzzaman, A.; Lee, D.S.S.; Oh, S.M.M.; Kim, Y.G.G.; Lee, J.H.H.; Kim, J.S.S. Video Key Frame Extraction & Fire-Smoke Detection with Deep Compact Convolutional Neural Network. In Proceedings of the SMA 2020: The 9th International Conference on Smart Media and Applications, Jeju, Republic of Korea, 17–19 September 2020.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hu, Y.; Zhan, J.; Zhou, G.; Chen, A.; Cai, W.; Guo, K.; Hu, Y.; Li, L. Fast forest fire smoke detection using MVMNet. Knowl. Based Syst. 2022, 241, 108219.

- Available online: https://github.com/guokun666/Forest_Fire_Smoke_DATA (accessed on 18 July 2023).

- Liu, Y.; Qin, W.; Liu, K.; Zhang, F.; Xiao, Z. A Dual Convolution Network Using Dark Channel Prior for Image Smoke Classification. IEEE Access 2019, 7, 60697–60706.

- Yuan, F.; Shi, Y.; Zhang, L.; Fang, Y. A cross-scale mixed attention network for smoke segmentation. Digit. Signal Process. 2023, 134, 103924.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 2015 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR, Boston, MA, USA, 7–12 June 2015.

- Yuan, F.; Zhang, L.; Xia, X.; Huang, Q.; Li, X. A Wave-Shaped Deep Neural Network for Smoke Density Estimation. IEEE Trans. Image Process. 2020, 29, 2301–2313.

- Available online: http://saliencydetection.net/duts/#orga661d4c (accessed on 24 July 2023).

- Li, M.; Zhang, Y.; Mu, L.; Xin, J.; Yu, Z.; Jiao, S.; Liu, H.; Xie, G.; Yingmin, Y. A Real-time Fire Segmentation Method Based on A Deep Learning Approach. IFAC-PapersOnLine 2022, 55, 145–150.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611v3, 801–818.

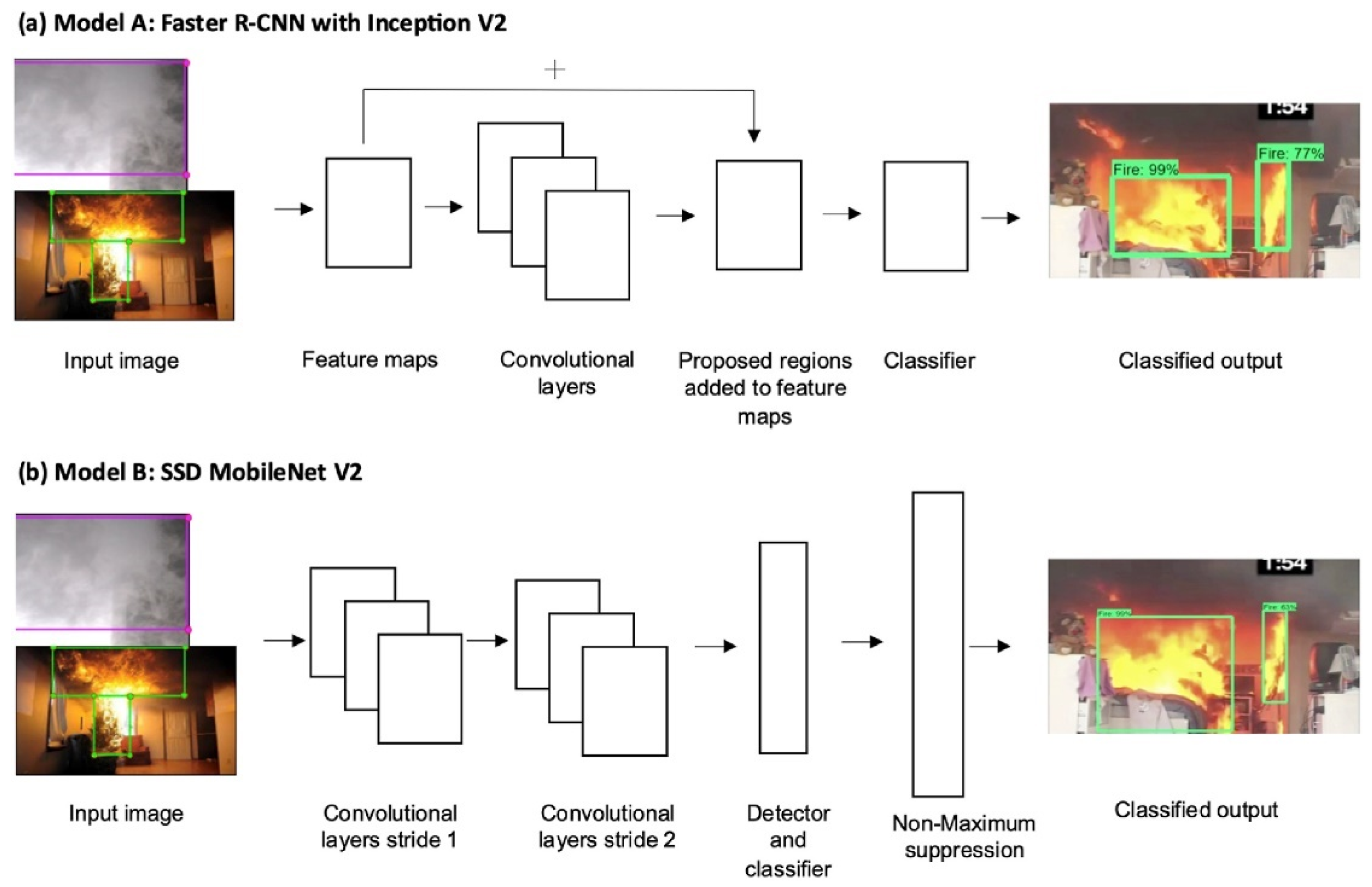

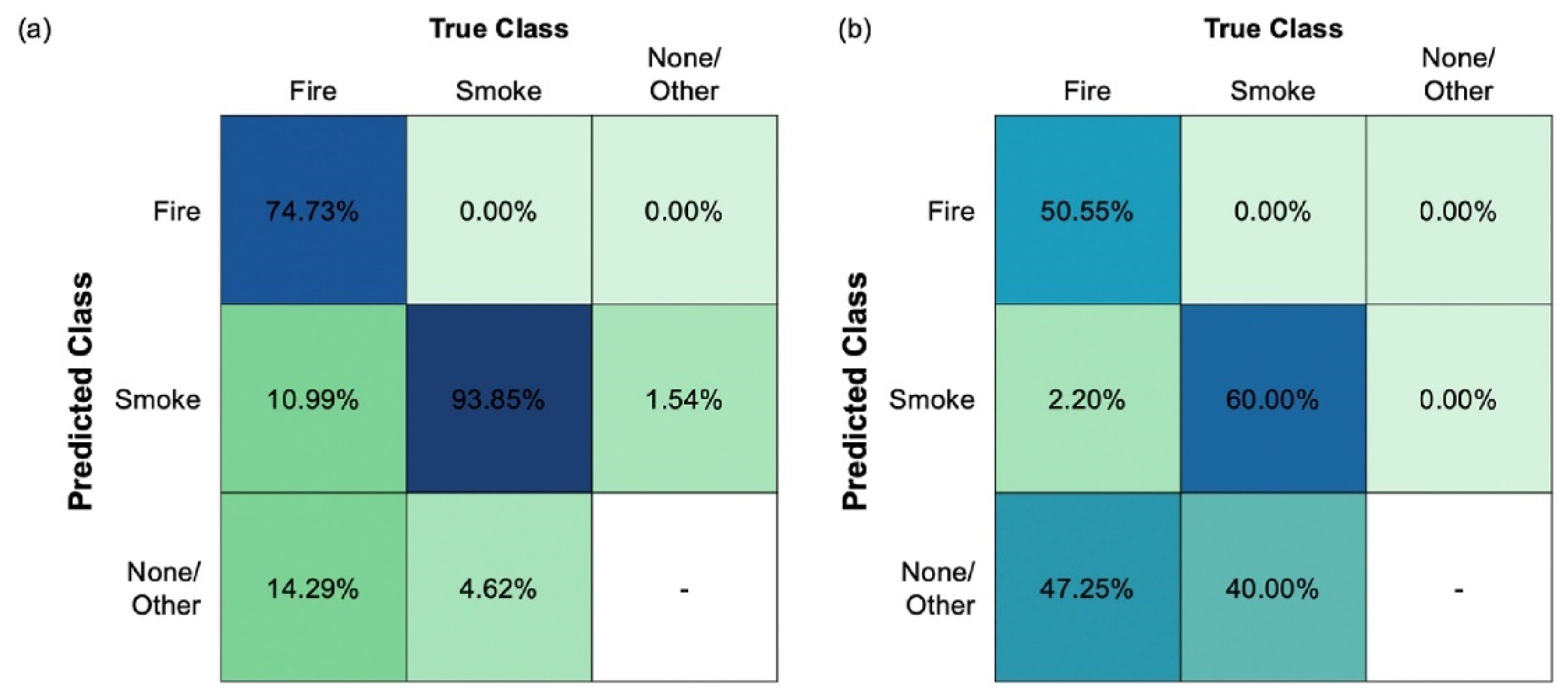

- Pincott, J.; Tien, P.W.; Wei, S.; Calautit, J.K. Indoor fire detection utilizing computer vision-based strategies. J. Build. Eng. 2022, 61, 105154.

- Avazov, K.; Mukhiddinov, M.; Makhmudov, F.; Cho, Y.I. Fire Detection Method in Smart City Environments Using a Deep-Learning-Based Approach. Electronics 2022, 11, 73.

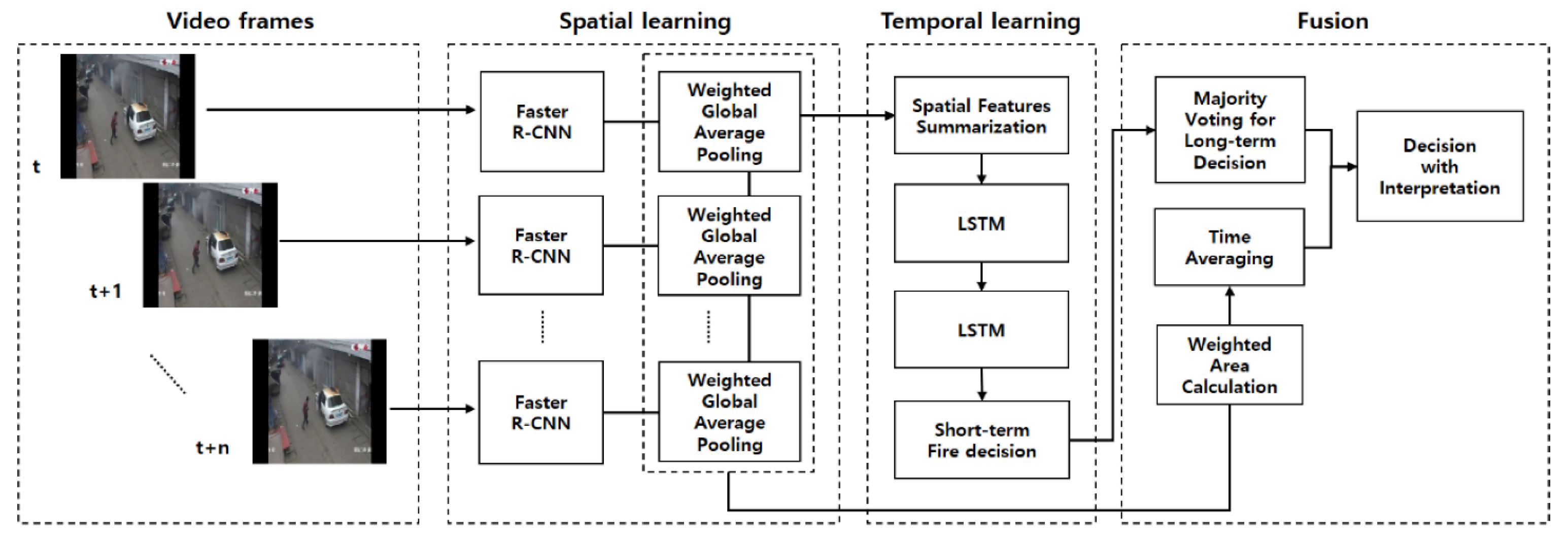

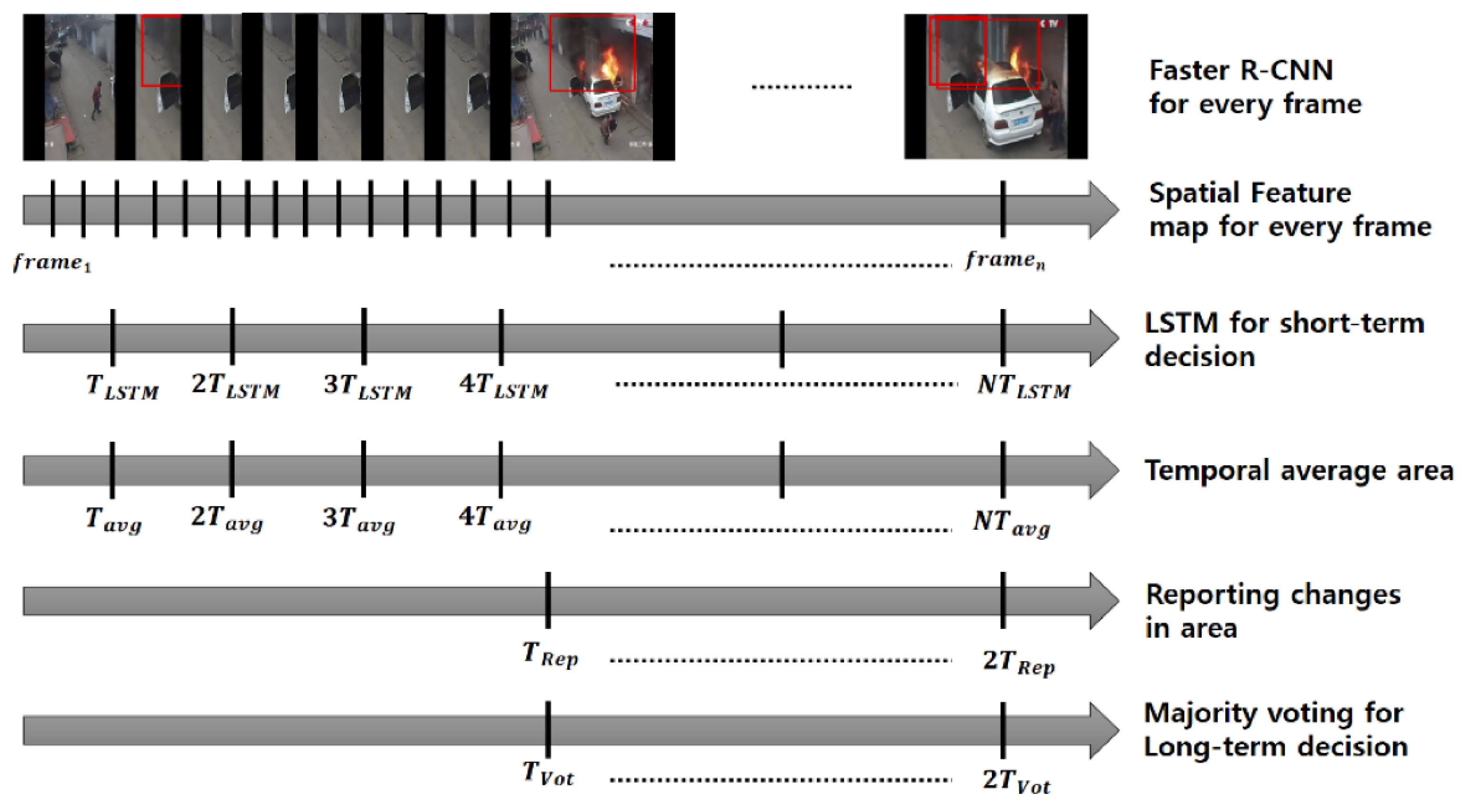

- Kim, B.; Lee, J. A Video-Based Fire Detection Using Deep Learning Models. Appl. Sci. 2019, 9, 2862.

- Jadon, A.; Omama, M.; Varshney, A.; Ansari, M.S.; Sharma, R. FireNet: A Specialized Lightweight Fire & Smoke Detection Model for Real-Time IoT Applications. arXiv 2019, arXiv:1905.11922.

- Shees, A.; Ansari, M.S.; Varshney, A.; Asghar, M.N.; Kanwal, N. FireNet-v2: Improved Lightweight Fire Detection Model for Real-Time IoT Applications. Procedia Comput. Sci. 2023, 218, 2233–2242.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Exploiting R-CNN for video smoke/fire sensing in antifire surveillance indoor and outdoor systems for smart cities. In Proceedings of the 2020 IEEE International Conference on Smart Computing (SMARTCOMP), Bologna, Italy, 14–17 September 2020.

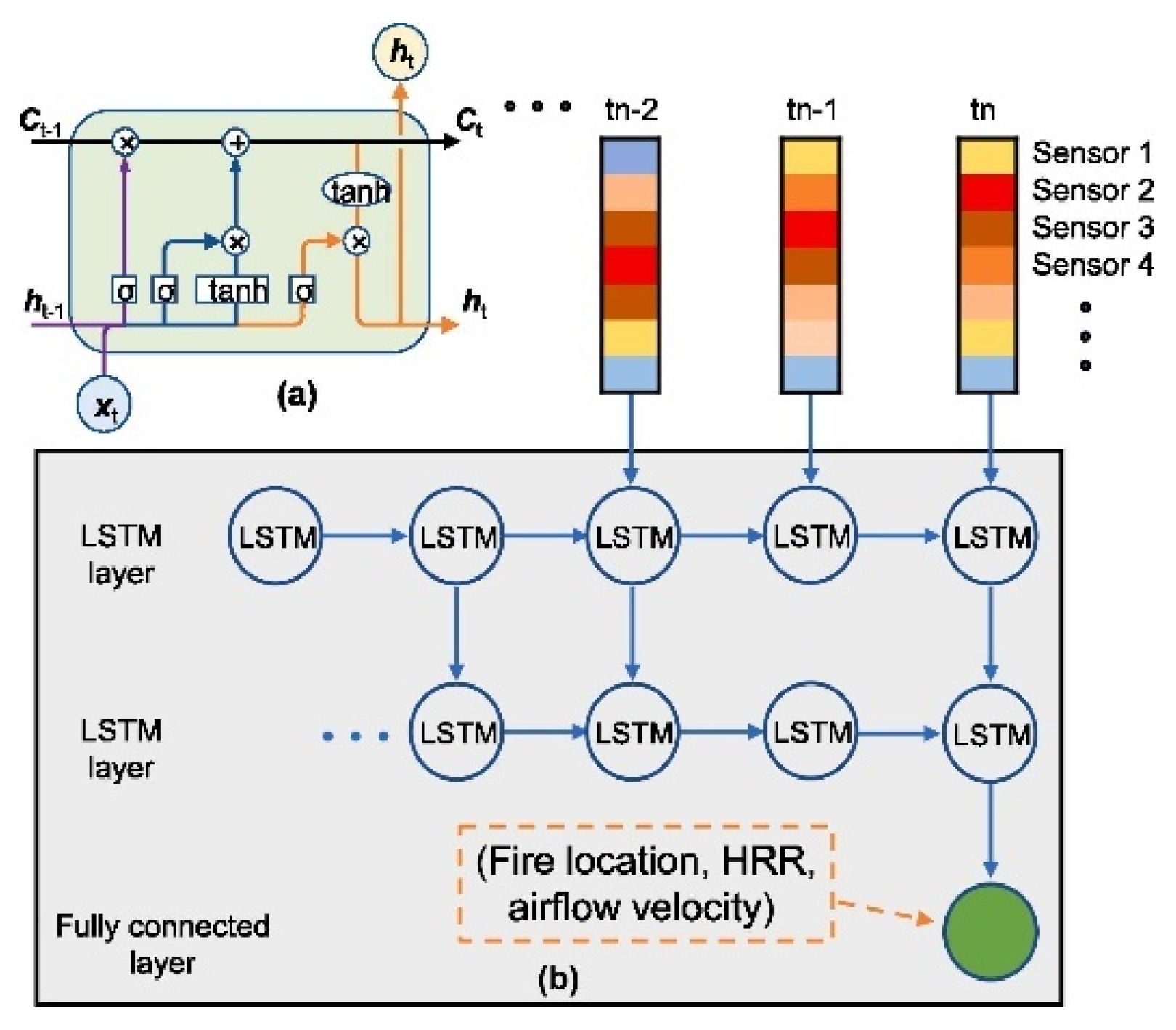

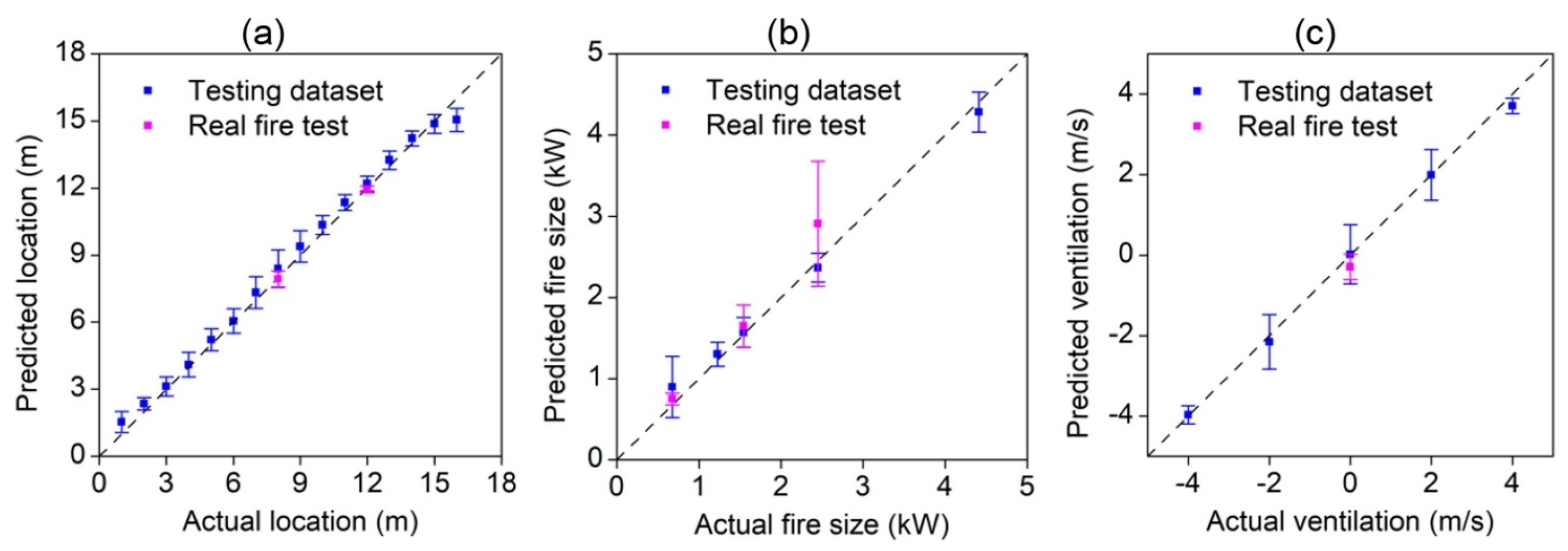

- Sun, B.; Xu, Z.-D. A multi-neural network fusion algorithm for fire warning in tunnels. Appl. Soft Comput. 2022, 131, 109799.

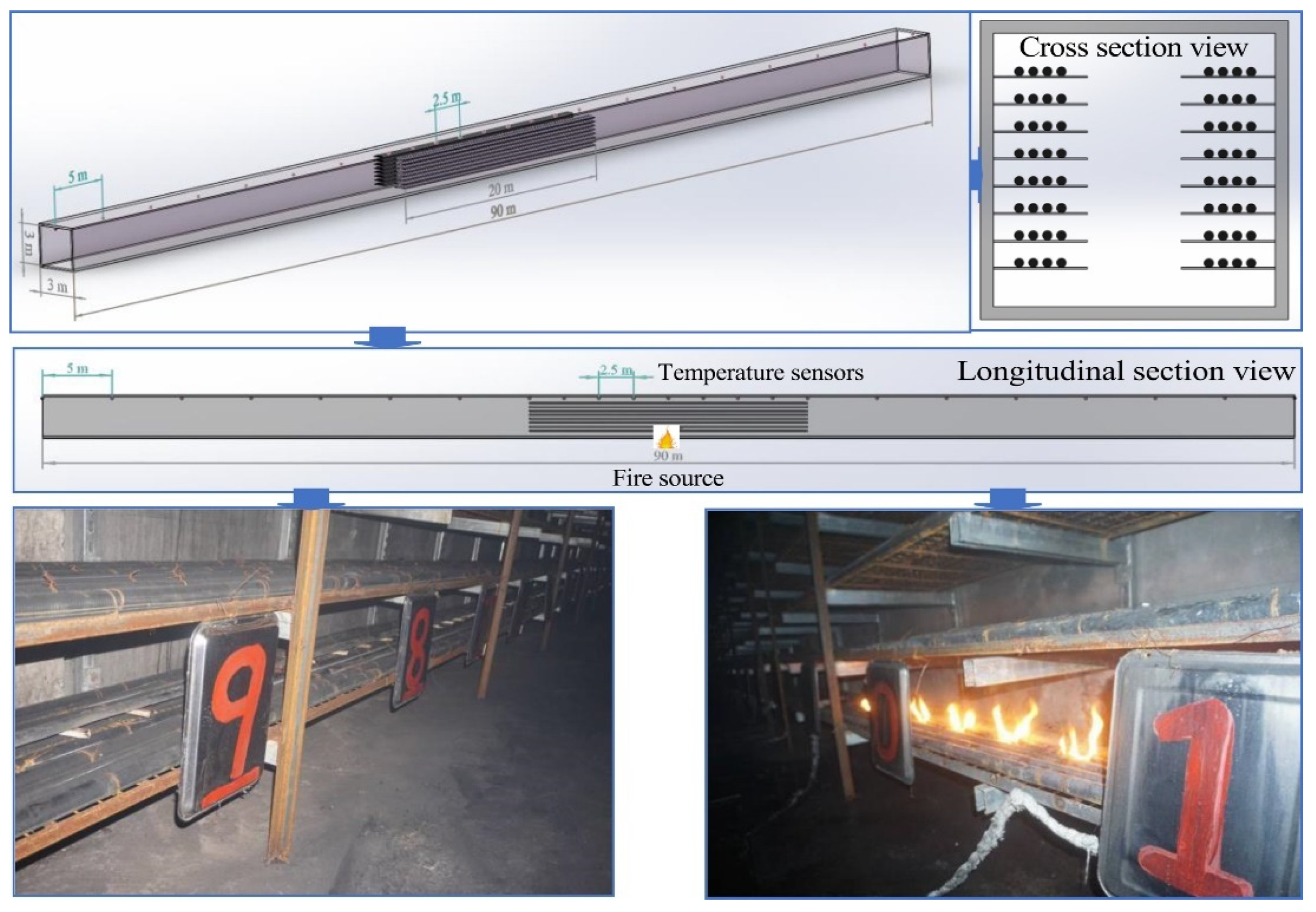

- Wu, X.; Zhang, X.; Jiang, Y.; Huang, X.; Huang, G.G.; Usmani, A. An intelligent tunnel firefighting system and small-scale demonstration. Tunn. Undergr. Space Technol. 2022, 120, 104301.

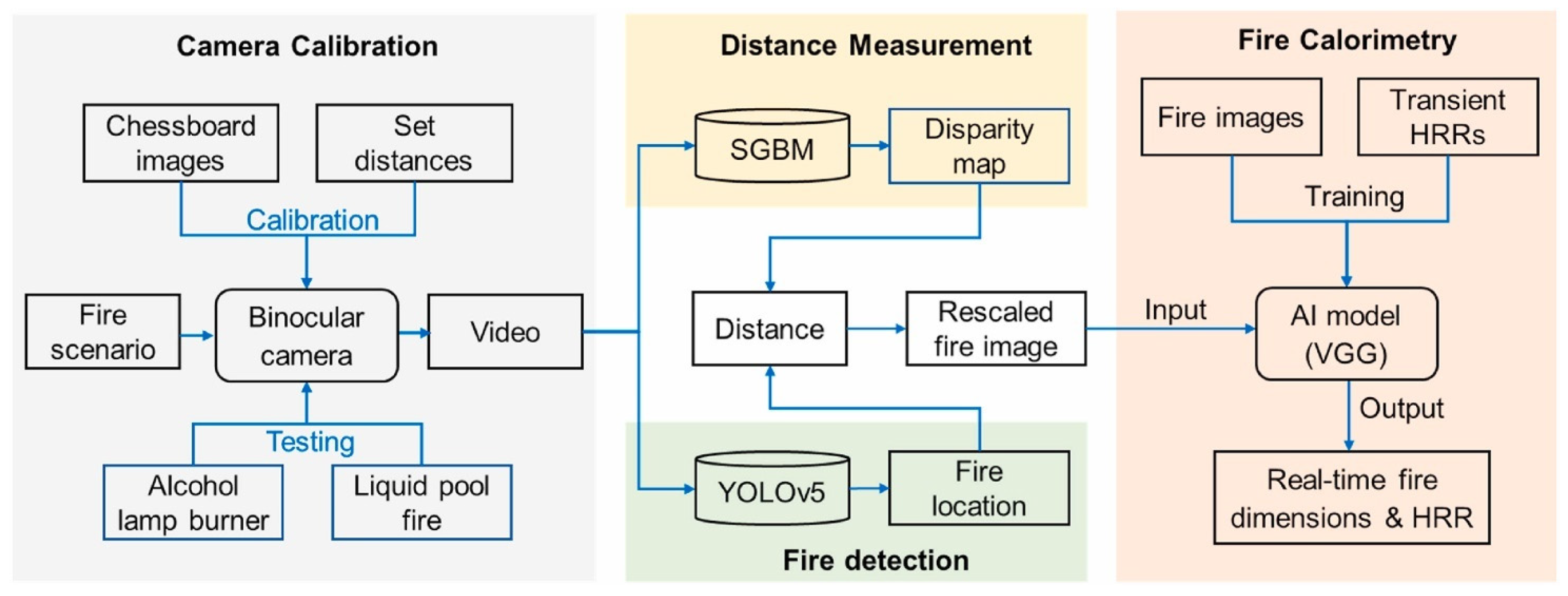

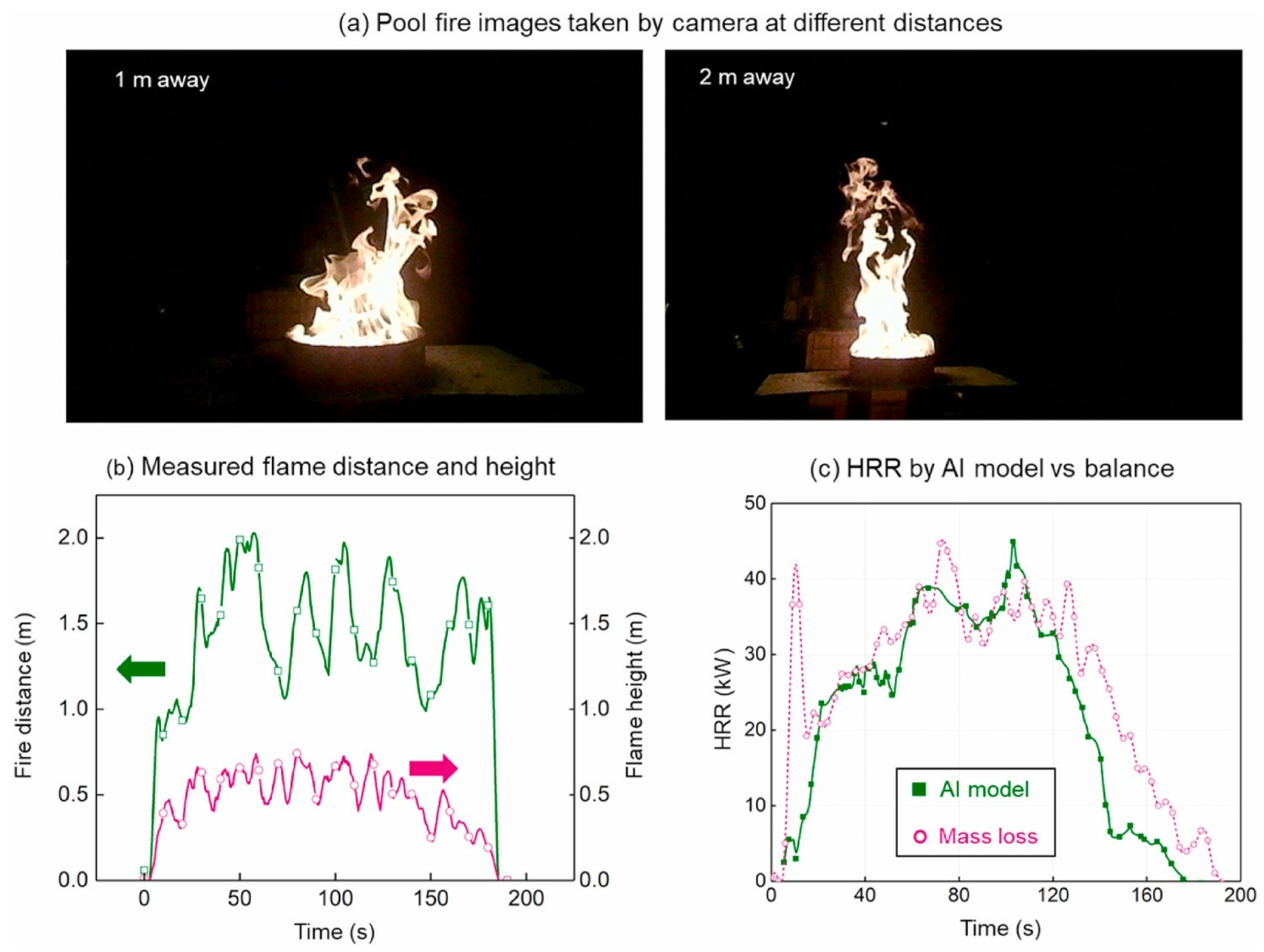

- Wang, Z.; Ding, Y.; Zhang, T.; Huang, X. Automatic real-time fire distance, size and power measurement driven by stereo camera and deep learning. Fire Saf. J. 2023, 140, 103891. [Google Scholar] [CrossRef]

- Mcgrattan, K. Heat Release Rates of Multiple Transient Combustibles; US Department of Commerce, National Institute of Standards and Technology: Washington, DC, USA, 2020. [CrossRef]

- Kim, D.; Ruy, W. CNN-based fire detection method on autonomous ships using composite channels composed of RGB and IR data. Int. J. Nav. Arch. Ocean Eng. 2022, 14, 100489. [Google Scholar] [CrossRef]

- Available online: https://www.tensorflow.org/api_docs/python/tf/keras/applications/xception (accessed on 5 September 2023).

- Available online: https://image-net.org (accessed on 2 August 2023).

- Liu, X.; Sun, B.; Xu, Z.D.; Liu, X. An adaptive Particle Swarm Optimization algorithm for fire source identification of the utility tunnel fire. Fire Saf. J. 2021, 126, 103486. [Google Scholar] [CrossRef]

- Fang, H.; Xu, M.; Zhang, B.; Lo, S. Enabling fire source localization in building fire emergencies with a machine learning-based inverse modeling approach. J. Build. Eng. 2023, 78, 107605. [Google Scholar] [CrossRef]

- Truong, T.X.; Kim, J.-M. Fire flame detection in video sequences using multi-stage pattern recognition techniques. Eng. Appl. Artif. Intell. 2012, 25, 1365–1372. [Google Scholar] [CrossRef]

- Available online: http://www.ultimatechase.com/Fire_Video.htm (accessed on 7 September 2023).

- Available online: http://signal.ee.bilkent.edu.tr/VisiFire/Demo/FireClips/ (accessed on 7 September 2023).

- Wang, Z.; Zhang, T.; Huang, X. Predicting real-time fire heat release rate by flame images and deep learning. Proc. Combust. Inst. 2023, 39, 4115–4123. [Google Scholar] [CrossRef]

- Available online: https://www.nist.gov/el/fcd (accessed on 7 September 2023).

- Wang, Z.; Zhang, T.; Wu, X.; Huang, X. Predicting transient building fire based on external smoke images and deep learning. J. Build. Eng. 2022, 47, 103823.

- Hu, P.; Peng, X.; Tang, F. Prediction of maximum ceiling temperature of rectangular fire against wall in longitudinally ventilation tunnels: Experimental analysis and machine learning modeling. Tunn. Undergr. Space Technol. 2023, 140, 105275.

- Hosseini, A.; Hashemzadeh, M.; Farajzadeh, N. UFS-Net: A unified flame and smoke detection method for early detection of fire in video surveillance applications using CNNs. J. Comput. Sci. 2022, 61, 101638.

- Chen, S.-J.; Hovde, D.C.; Peterson, K.A.; Marshall, A.W. Fire detection using smoke and gas sensors. Fire Saf. J. 2007, 42, 507–515.

- Qu, N.; Li, Z.; Li, X.; Zhang, S.; Zheng, T. Multi-parameter fire detection method based on feature depth extraction and stacking ensemble learning model. Fire Saf. J. 2022, 128, 103541.

- ISO/TR 7240-9:2022; Fire Detection and Alarm Systems. ISO: Geneva, Switzerland, 2022.

- Kim, J.-H.; Lattimer, B.Y. Real-time probabilistic classification of fire and smoke using thermal imagery for intelligent firefighting robot. Fire Saf. J. 2015, 72, 40–49.

- Favorskaya, M.; Pyataeva, A.; Popov, A. Spatio-temporal Smoke Clustering in Outdoor Scenes Based on Boosted Random Forests. Procedia Comput. Sci. 2016, 96, 762–771.

- Smith, J.T.; Allred, B.W.; Boyd, C.S.; Davies, K.W.; Jones, M.O.; Kleinhesselink, A.R.; Maestas, J.D.; Naugle, D.E. Where There’s Smoke, There’s Fuel: Dynamic Vegetation Data Improve Predictions of Wildfire Hazard in the Great Basin. Rangel. Ecol. Manag. 2023, 89, 20–32.

- Usmani, I.A.; Qadri, M.T.; Zia, R.; Alrayes, F.S.; Saidani, O.; Dashtipour, K. Interactive Effect of Learning Rate and Batch Size to Implement Transfer Learning for Brain Tumor Classification. Electronics 2023, 12, 964.

- Tsalera, E.; Papadakis, A.; Voyiatzis, I.; Samarakou, M. CNN-based, contextualized, real-time fire detection in computational resource-constrained environments. Energy Rep. 2023, 9, 247–257.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- Available online: https://github.com/DeepQuestAI/Fire-Smoke-Dataset (accessed on 2 August 2023).

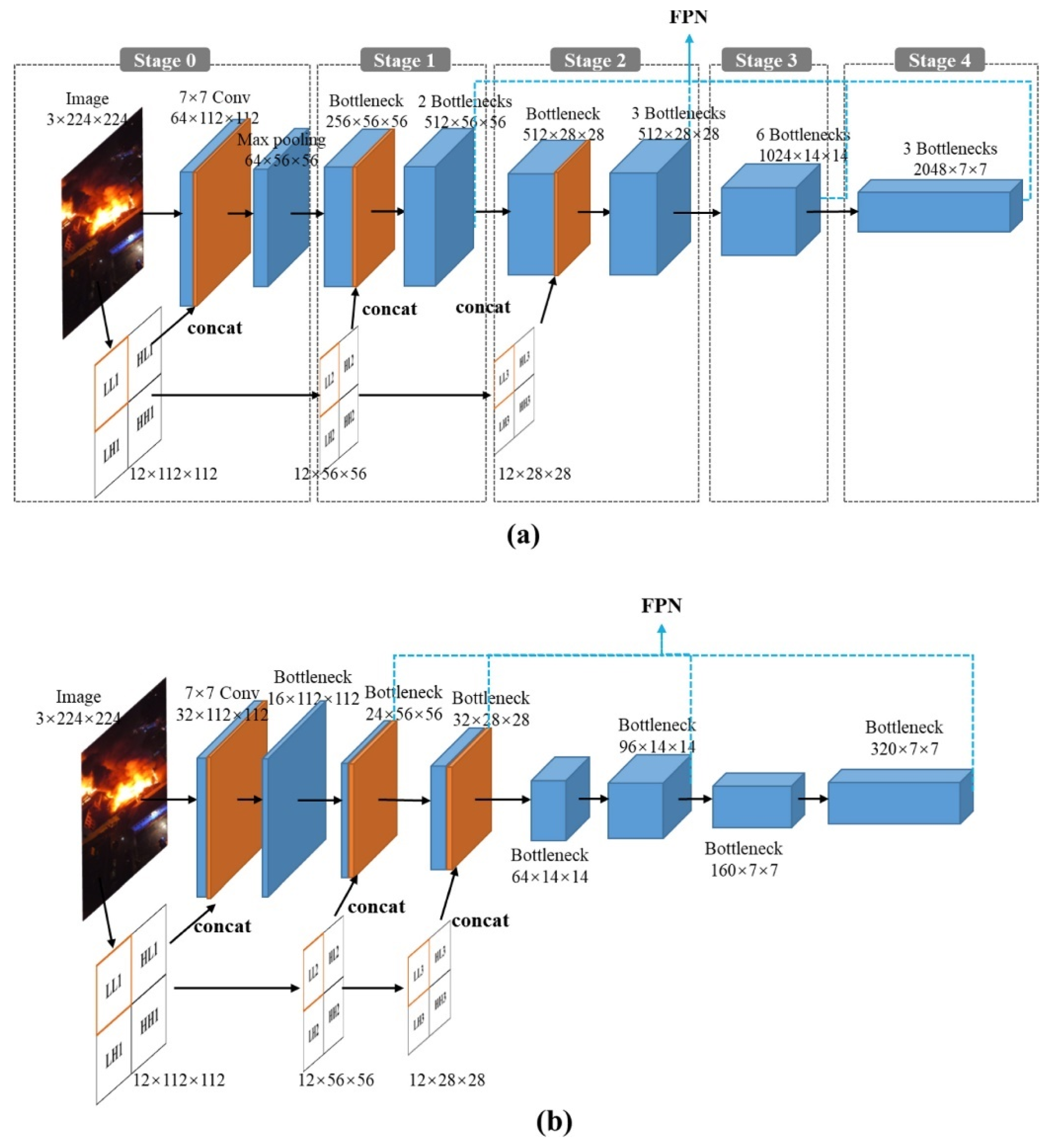

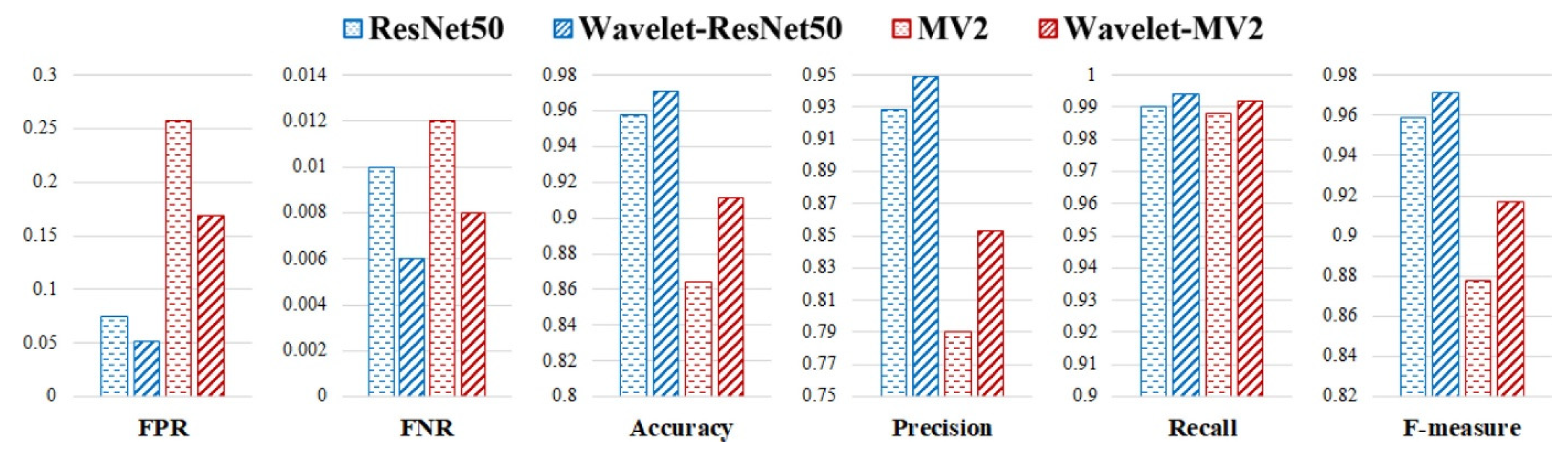

- Huang, L.; Liu, G.; Wang, Y.; Yuan, H.; Chen, T. Fire detection in video surveillances using convolutional neural networks and wavelet transform. Eng. Appl. Artif. Intell. 2022, 110, 104737.

- Available online: https://cfdb.univ-corse.fr/ (accessed on 3 August 2023).

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Gong, F.; Li, C.; Gong, W.; Li, X.; Yuan, X.; Ma, Y.; Song, T. A Real-Time Fire Detection Method from Video with Multifeature Fusion. Comput. Intell. Neurosci. 2019, 2019, 1939171.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Computer Vision–ECCV 2016. ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9908.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

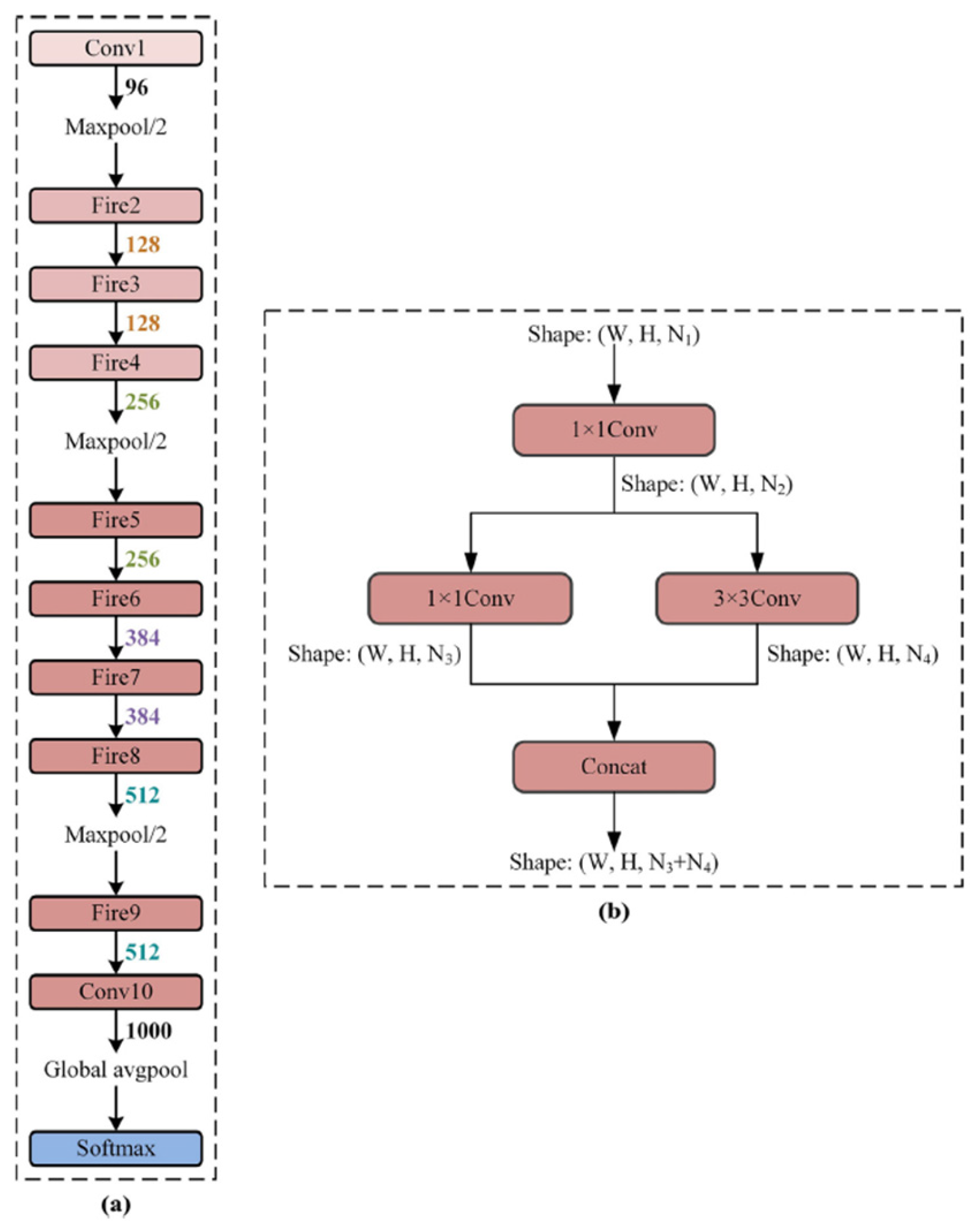

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Peng, Y.; Wang, Y. Real-time forest smoke detection using hand-designed features and deep learning. Comput. Electron. Agric. 2019, 167, 105029.

- Khan, S.; Khan, A. FFireNet: Deep Learning Based Forest Fire Classification and Detection in Smart Cities. Symmetry 2022, 14, 2155.

- Forest Fire Dataset. Available online: https://www.kaggle.com/datasets/alik05/forest-fire-dataset (accessed on 28 August 2023).

- Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

- Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation. Sensors 2022, 22, 1977.

- Bahhar, C.; Ksibi, A.; Ayadi, M.; Jamjoom, M.M.; Ullah, Z.; Soufiene, B.O.; Sakli, H. Wildfire and Smoke Detection Using Staged YOLO Model and Ensemble CNN. Electronics 2023, 12, 228.

- Alexandrov, D.; Pertseva, E.; Berman, I.; Pantiukhin, I.; Kapitonov, A. Analysis of Machine Learning Methods for Wildfire Security Monitoring with an Unmanned Aerial Vehicles. In Proceedings of the 24th Conference of Open Innovations Association FRUCT, Moscow, Russia, 8–12 April 2019.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

- Zhang, Q.-X.; Lin, G.-H.; Zhang, Y.-M.; Xu, G.; Wang, J.-J. Wildland Forest Fire Smoke Detection Based on Faster R-CNN using Synthetic Smoke Images. Procedia Eng. 2018, 211, 441–446.

- Xie, X.; Chen, K.; Guo, Y.; Tan, B.; Chen, L.; Huang, M. A Flame-Detection Algorithm Using the Improved YOLOv5. Fire 2023, 6, 313.

- Majid, S.; Alenezi, F.; Masood, S.; Ahmad, M.; Gündüz, E.S.; Polat, K. Attention based CNN model for fire detection and localization in real-world images. Expert Syst. Appl. 2022, 189, 116114.

- Available online: https://ieee-dataport.org/open-access/flame-dataset-aerial-imagery-pile-burn-detection-using-drones-uavs (accessed on 31 August 2023).

- Dogan, S.; Barua, P.D.; Kutlu, H.; Baygin, M.; Fujita, H.; Tuncer, T.; Acharya, U. Automated accurate fire detection system using ensemble pretrained residual network. Expert Syst. Appl. 2022, 203, 117407.

- FIRE Dataset. Available online: https://www.kaggle.com/datasets/phylake1337/fire-dataset (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/atulyakumar98/test-dataset (accessed on 31 August 2023).

- Available online: https://bitbucket.org/gbdi/bowfire-dataset/src/master/ (accessed on 31 August 2023).

- Available online: https://zenodo.org/record/836749 (accessed on 31 August 2023).

- Available online: https://mivia.unisa.it/datasets/video-analysis-datasets/fire-detection-dataset/ (accessed on 31 August 2023).

- Available online: https://mivia.unisa.it/datasets/video-analysis-datasets/smoke-detection-dataset/ (accessed on 31 August 2023).

- Available online: https://cvpr.kmu.ac.kr/ (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/dataclusterlabs/fire-and-smoke-dataset (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/mohnishsaiprasad/forest-fire-images (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/elmadafri/the-wildfire-dataset (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/anamibnjafar0/flamevision (accessed on 31 August 2023).

- Available online: https://www.kaggle.com/datasets/amerzishminha/forest-fire-smoke-and-non-fire-image-dataset (accessed on 31 August 2023).

- Wu, S.; Zhang, X.; Liu, R.; Li, B. A dataset for fire and smoke object detection. Multimed. Tools Appl. 2023, 82, 6707–6726.

- Wang, M.; Yu, D.; He, W.; Yue, P.; Liang, Z. Domain-incremental learning for fire detection in space-air-ground integrated observation network. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103279.

- Harkat, H.; Nascimento, J.M.; Bernardino, A.; Ahmed, H.F.T. Fire images classification based on a handcraft approach. Expert Syst. Appl. 2023, 212, 118594.

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309.

- Pourbahrami, S.; Hashemzadeh, M. A geometric-based clustering method using natural neighbors. Inf. Sci. 2022, 610, 694–706.

- Sun, L.; Qin, X.; Ding, W.; Xu, J. Nearest neighbors-based adaptive density peaks clustering with optimized allocation strategy. Neurocomputing 2022, 473, 159–181.

- Available online: https://github.com/ckyrkou/AIDER (accessed on 1 September 2023).

- Saini, N.; Chattopadhyay, C.; Das, D. E2AlertNet: An explainable, efficient, and lightweight model for emergency alert from aerial imagery. Remote Sens. Appl. Soc. Environ. 2023, 29, 100896.

- Kyrkou, C.; Theocharides, T. EmergencyNet: Efficient Aerial Image Classification for Drone-Based Emergency Monitoring Using Atrous Convolutional Feature Fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 1687–1699.

- Wang, M.; Jiang, L.; Yue, P.; Yu, D.; Tuo, T. FASDD: An Open-access 100,000-level Flame and Smoke Detection Dataset for Deep Learning in Fire Detection [DS/OL]. V3. Science Data Bank. 2022. Available online: https://cstr.cn/31253.11.sciencedb.j00104.00103 (accessed on 1 November 2023).

- Chen, Y.; Zhang, Y.; Xin, J.; Yi, Y.; Liu, D.; Liu, H. A UAV-based forest fire detection algorithm using convolutional neural network. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018.

- Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.

- Novac, I.; Geipel, K.R.; Gil, J.E.d.D.; de Paula, L.G.; Hyttel, K.; Chrysostomou, D. A Framework for Wildfire Inspection Using Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020.

- Çetin, A.E.; Dimitropoulos, K.; Gouverneur, B.; Grammalidis, N.; Günay, O.; Habiboğlu, Y.H.; Töreyin, B.U.; Verstockt, S. Video fire detection – Review. Digit. Signal Process. 2013, 23, 1827–1843.

- Pereira, G.H.d.A.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

- Al-Bashiti, M.K.; Naser, M. Machine learning for wildfire classification: Exploring blackbox, eXplainable, symbolic, and SMOTE methods. Nat. Hazards Res. 2022, 2, 154–165.

- Short, K.C. Spatial Wildfire Occurrence Data for the United States, 1992–2015 (FPA_FOD_20170508), 4th ed.; Forest Service Research Data Archive: Fort Collins, CO, USA, 2017.

- Stocks, B.J.; Lynham, T.J.; Lawson, B.D.; Alexander, M.E.; Van Wagner, C.E.; McAlpine, R.S.; Dubé, D.E. The Canadian Forest Fire Danger Rating System: An Overview. For. Chron. 1989, 65, 450–457.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4768–4777.

- Hu, X.; Zhang, P.; Ban, Y. Large-scale burn severity mapping in multispectral imagery using deep semantic segmentation models. ISPRS J. Photogramm. Remote Sens. 2023, 196, 228–240.

- Available online: https://www.mtbs.gov/direct-download (accessed on 9 September 2023).

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015.

- Berman, M.; Triki, A.R.; Blaschko, M.B. The Lovász-Softmax Loss: A Tractable Surrogate for the Optimization of the Intersection-Over-Union Measure in Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4413–4421.

- Shrivastava, A.; Gupta, A.; Girshick, R. Training Region-Based Object Detectors with Online Hard Example Mining. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Jadon, S. A survey of loss functions for semantic segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Viña del Mar, Chile, 27–29 October 2020.

- Fernandez, A.; Garcia, S.; Galar, M.; Prati, R.; Krawczyk, B.; Herrera, F. Learning from Imbalanced Data Sets; Springer Nature: Cham, Switzerland, 2018; ISBN 978-3-319-98073-7.

- Iwana, B.K.; Uchida, S. An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 2021, 16, e0254841.

- Tian, S.; Zhang, Y.; Feng, Y.; Elsagan, N.; Ko, Y.; Mozaffari, M.H.; Xi, D.D.; Lee, C.-G. Time series classification, augmentation and artificial-intelligence-enabled software for emergency response in freight transportation fires. Expert Syst. Appl. 2023, 233, 120914.

- Kamycki, K.; Kapuscinski, T.; Oszust, M. Data Augmentation with Suboptimal Warping for Time-Series Classification. Sensors 2020, 20, 98.

- Sakoe, H.; Chiba, S. Dynamic programming algorithm optimization for spoken word recognition. IEEE Trans. Acoust. Speech Signal Process. 1978, 26, 43–49.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2020, 142, 112975.

- Sun, K.; Zhao, Q.; Wang, X. Using knowledge inference to suppress the lamp disturbance for fire detection. J. Saf. Sci. Resil. 2021, 2, 124–130.

- Zhao, H.; Jin, J.; Liu, Y.; Guo, Y.; Shen, Y. FSDF: A high-performance fire detection framework. Expert Syst. Appl. 2024, 238, 121665.

- Shahid, M.; Hua, K.-L. Fire detection using transformer network. In Proceedings of the 2021 International Conference on Multimedia Retrieval, Taipei, Taiwan, 21–24 August 2021.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual, 3–7 May 2021.

- Lin, J.; Lin, H.; Wang, F. STPM_SAHI: A Small-Target Forest Fire Detection Model Based on Swin Transformer and Slicing Aided Hyper Inference. Forests 2022, 13, 1603.

- Ali, A.; Touvron, H.; Caron, M.; Bojanowski, P.; Douze, M.; Joulin, A.; Laptev, I.; Neverova, N.; Synnaeve, G.; Verbeek, J.; et al. XCiT: Cross-covariance image transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 20014–20027.

- Yang, C.; Pan, Y.; Cao, Y.; Lu, X. CNN-Transformer Hybrid Architecture for Early Fire Detection. In Artificial Neural Networks and Machine Learning – ICANN 2022. ICANN 2022; Pimenidis, E., Angelov, P., Jayne, C., Papaleonidas, A., Aydin, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13532.

- Wang, X.; Li, M.; Gao, M.; Liu, Q.; Li, Z.; Kou, L. Early smoke and flame detection based on transformer. J. Saf. Sci. Resil. 2023, 4, 294–304.

- Zhang, K.; Wang, B.; Tong, X.; Liu, K. Fire detection using vision transformer on power plant. Energy Rep. 2022, 8, 657–664.

- Qian, J.; Bai, D.; Jiao, W.; Jiang, L.; Xu, R.; Lin, H.; Wang, T. A High-Precision Ensemble Model for Forest Fire Detection in Large and Small Targets. Forests 2023, 14, 2089.

- Benzekri, W.; Moussati, A.E.; Moussaoui, O.; Berrajaa, M. Early Forest Fire Detection System using Wireless Sensor Network and Deep Learning. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 496–503.

| Pos | Keyword | Occurrences | Total Link Strength |
|---|---|---|---|
| 1 | “fires” | 1315 | 14,293 |
| 2 | “machine learning” | 836 | 8501 |
| 3 | “deep learning” | 736 | 7665 |
| 4 | “deforestation” | 401 | 5339 |
| 5 | “fire hazards” | 422 | 4919 |
| 6 | “learning systems” | 348 | 4696 |
| 7 | “fire detection” | 535 | 4167 |
| 8 | “fire detectors” | 259 | 3424 |
| 9 | “smoke” | 269 | 3191 |
| 10 | “forest fires” | 277 | 2960 |

| Architecture | FPS | No. of Parameters | ||

|---|---|---|---|---|

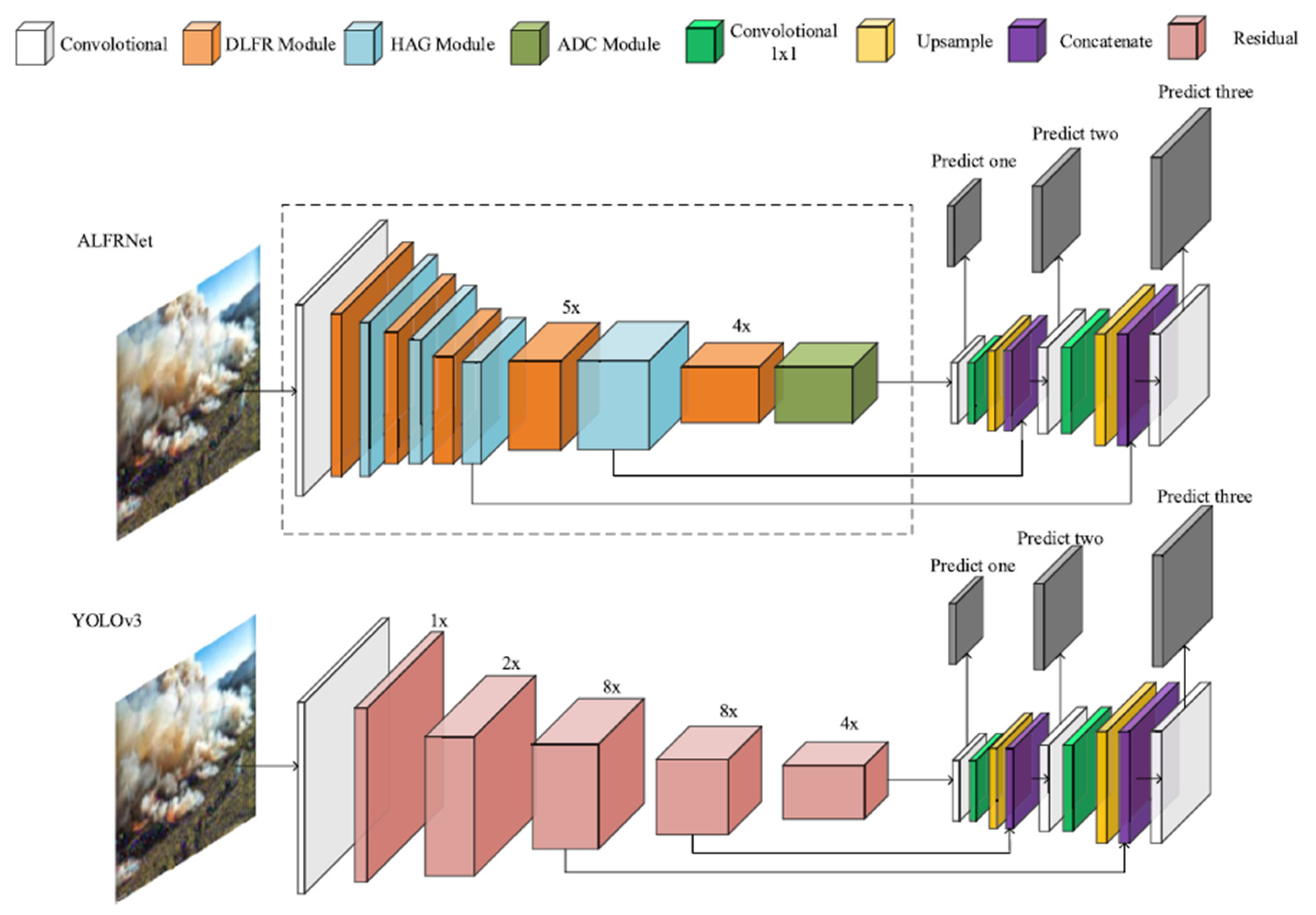

| YOLOv3 | 84.94 | 74.77 | 33 | 61,576,342 |

| ALFRNet | 87.26 | 79.95 | 43 | 23,336,302 |

| Dataset | Accuracy | Loss |
|---|---|---|
| Test | 0.7623 | 0.7414 |
| Validation | 0.9431 | 0.1506 |
| Training | 0.9679 | 0.0857 |

| True label (rows) / Predicted label (columns) | Fire | Non-Fire |
|---|---|---|
| Fire | 3888 | 799 |
| Non-Fire | 1248 | 2680 |
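
The standard detection metrics follow directly from the four cells of this matrix. The minimal sketch below treats Fire as the positive class; the function name is ours, not the source's. It gives an accuracy of 6568/8615 ≈ 0.7624, consistent with the 0.7623 test accuracy listed in the preceding table, together with a precision of about 0.757 and a recall of about 0.830.

```python
# Binary confusion matrix from the table above (rows: true label, columns: predicted label).
TP, FN = 3888, 799   # true Fire predicted as Fire / as Non-Fire
FP, TN = 1248, 2680  # true Non-Fire predicted as Fire / as Non-Fire


def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard detection metrics, treating Fire as the positive class."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)  # share of Fire predictions that are real fires
    recall = tp / (tp + fn)     # share of real fires that are detected
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


metrics = binary_metrics(TP, FN, FP, TN)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```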

| Ref | Dataset | Fire Type | Architecture | Metrics |
|---|---|---|---|---|
| Zhan [33] (2022) | I. A total of 6913 smoke images captured as screenshots from the video dataset. II. A total of 5832 images from the video dataset of the FLAME dataset [31]. III. A total of 1093 images simulating smoke generation during a forest fire. | Forest | ARGNet: adjacent-layer composite network based on a recursive feature pyramid with deconvolution and dilated convolution, with global optimal non-maximum suppression. | mAP50: 90; mAP75: 82; FPS: 122; Jaccard index: 0.84 |
| Zhang [34] (2016) | Images captured from a video stream (every fifth frame): 21 positive and 4 negative sequences. Images resized to 240 × 320. Fire patches annotated manually with 32 × 32 boxes. Train set: 178 images, 12,460 patches (1307 positive, 11,153 negative). Test set: 59 images, 4130 patches (539 positive, 3591 negative). | Forest | Three convolutional layers; three pooling layers; two fully connected layers; Softmax activation in the output layer. | Accuracy: 0.931; Detection rate: 0.845; False positive rate: 0.039; Time per image: 2.1 s |
| Reis [35] (2023) | FLAME dataset [31]: 14,357 negative and 25,018 positive instances in the training set; 3480 no-fire and 5137 fire instances in the test set. | Forest | DenseNet121 (Huang [36]): CNN in which each layer is directly connected to all previous layers; 121 layers with weights (120 convolutional, one fully connected), four dense blocks, three transition layers, and a SoftMax output layer. Input image 224 × 224 × 3. | Accuracy; P; R; F1-Score; Cohen’s Kappa; confusion matrix |
| Song [37] (2021) | UIA-CAIR/Fire-Detection-Image-Dataset (620 three-channel JPEG files): 110 positive and 510 negative samples. Image augmentation (translation, flipping, and rotation) was applied to the positive samples to balance the classes. After augmentation, 950 images (190 training, 760 test), normalized to 64 × 64 × 3. | Outdoor | C1: 64 convolution kernels of 5 × 5, stride 2, followed by MaxPooling. C2: 128 kernels of 5 × 5, stride 2, followed by MaxPooling. C3: 256 kernels of 5 × 5, stride 2, followed by MaxPooling. A 1024-node fully connected layer. Activation functions: LeakyReLU (C1, C2), ReLU (C3). | Accuracy: 99.5% (training set); 94% (test set) |
| Fernandes [38] (2004) | A total of 1410 patterns containing smoke signatures, 17,174 atmospheric noise patterns with 21 points, and 8334 atmospheric noise patterns with 41 points for each value of the added noise amplitude. | Forest | Committee of neural networks connected sequentially: each unit eliminates the patterns it classifies as atmospheric noise and passes on those considered to be smoke. The model was trained with the same smoke signatures and noise patterns that caused false alarms in the previous neural network. | False alarms: 0.9%; Misdetections: 15.5% |
| Ahn [39] (2023) | A total of 10,163 images of early-stage and indoor fires from AI HUB [40]. False fire alarm response items for video fire detectors: direct sunlight and solar-related sources, electric welding, black-body sources (electric heater), artificial lighting (incandescent, fluorescent, and halogen lamps), candles, rainbows, laundry, flags, red cloths, yellow wires, light reflections, and cigarette smoke. | Indoor | Pre-trained YOLOv5 model with the default YOLOv5 hyperparameters; 300 epochs, batch size 32. Training, validation, and test sets were 70%, 20%, and 10% of the image dataset. | P (Val/Test): 0.94/0.91; R (Val/Test): 0.93/0.97; mAP (Val/Test): 0.96/0.96 |
| Biswas [41] (2023) | Three-channel JPEG images. Training set: 694 positive, 286 negative. Validation set: 171 positive, 68 negative. | Indoor/Outdoor | CNN: pretrained Inception-ResNet-v2 [42] (164 layers, 1000 output classes); Inception-ResNet-v3 [43]; modified Inception-ResNet-v3 [41]. | Training and validation accuracy |
| Muhammad [44] (2018) | A. Foggia [45]: 31 videos of indoor and outdoor environments (14 positive, 17 negative). B. Chino [46]: 226 images (119 positive, 107 negative), the negatives being fire-like images: sunsets, fire-like lights, and sunlight coming through windows. | Indoor/Outdoor | CNN with five convolution layers, three pooling layers, and three fully connected layers. Input layer size 224 × 224 × 3. | FPR: 0.0907 (with FT), 0.0922 (without FT); FNR: 0.0213 (with FT), 0.106 (without FT); Accuracy: 0.94 (with FT), 0.90 (without FT) |
| Yar [47] (2023) | Building fire: 723 images; indoor electric fire: 118; vehicle fire: 1116. | Building (B); Indoor electric (I); Vehicle (V) | YOLOv5-based, modified to detect both small and large fire areas. | P (B/I/V): 0.8/0.95/0.87; R (B/I/V): 0.64/0.95/0.86; F1-Score (B/I/V): 0.7/0.95/0.86; mAP (B/I/V): 0.7/0.96/0.92 |
| Valikhujaev [48] (2020) | A total of 8430 fire images and 8430 smoke images. | Indoor/Outdoor | CNN with four convolution layers using dilation rates 1, 2, and 3, each followed by a MaxPooling layer; a final Dropout layer; and two FC layers, each followed by a Dropout layer. | Train set accuracy: 0.996; Test set accuracy: 0.995; R: 0.97; inference time |
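
The patch counts in the Zhang [34] row are consistent with tiling each 240 × 320 frame into a non-overlapping grid of 32 × 32 patches: floor(240/32) × floor(320/32) = 7 × 10 = 70 patches per image, and 178 × 70 = 12,460 and 59 × 70 = 4130. This tiling scheme is our inference from the numbers, not something the source states. The sketch assumes tiles anchored at the top-left corner, with the leftover 16 rows discarded; `patch_boxes` is a hypothetical helper.

```python
def patch_boxes(height: int, width: int, patch: int = 32) -> list:
    """Top-left (row, col) corners of non-overlapping patch x patch tiles.

    Assumption: tiles start at (0, 0) and any remainder that does not fill a
    whole tile (here the last 240 - 7 * 32 = 16 rows) is discarded.
    """
    return [
        (r, c)
        for r in range(0, height - patch + 1, patch)
        for c in range(0, width - patch + 1, patch)
    ]


boxes = patch_boxes(240, 320)
per_image = len(boxes)
print(per_image, 178 * per_image, 59 * per_image)  # train and test patch totals
```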

| Dataset split | Fire | No Fire | Smoke | Smoke Fire |
|---|---|---|---|---|
| Train | 2161 | 2150 | 490 | 510 |
| Validation | 200 | 200 | 200 | 200 |
| Test | 200 | 200 | 200 | 200 |
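
The training split above is imbalanced: the two smoke classes have roughly a quarter of the samples of the fire and no-fire classes, while the validation and test splits are balanced. The source does not say whether this was compensated. One common option, assumed here, is to weight the loss by inverse class frequency, w_c = N / (K · n_c), the heuristic scikit-learn calls "balanced"; `balanced_class_weights` is a hypothetical helper.

```python
# Training-split class counts from the table above.
train_counts = {"Fire": 2161, "No Fire": 2150, "Smoke": 490, "Smoke Fire": 510}


def balanced_class_weights(counts: dict) -> dict:
    """Inverse-frequency weights w_c = N / (K * n_c).

    A class holding exactly N / K samples gets weight 1; rarer classes get more,
    so every class contributes the same total weight N / K to the loss.
    """
    n_total = sum(counts.values())
    n_classes = len(counts)
    return {label: n_total / (n_classes * n) for label, n in counts.items()}


weights = balanced_class_weights(train_counts)
for label, w in weights.items():
    print(f"{label}: {w:.3f}")
```

With these counts the smoke classes receive weights of about 2.7 and 2.6, against about 0.61 to 0.62 for fire and no fire.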

| Algorithm | Accuracy | F1-Score |
|---|---|---|
| PGDPC | 45.57% | 39.14% |
| PSO | 64.11% | 45.81% |
| TSDPC | 64.74% | 51.27% |

| Ref | Architecture | Dataset | Image Augmentation | Performance Metrics |
|---|---|---|---|---|
| Kim [70] (2023) | Enhanced bidirectional feature pyramid network (BiFPN) applied to the head of YOLOv7 and integrated with a convolutional block attention module. | Aerial photos of wildfire smoke and forest backgrounds: 3500 forest fire smoke images and 3000 non-forest fire smoke images. All images resized to 640 × 640. | Rotation; flipping | AP: 0.786; FPS: 153 |
| Ashiquzzaman [71] (2020) | Deep architecture: six convolutional layers (each followed by Batch Normalization and MaxPooling) and three fully connected layers (each followed by Batch Normalization). Activation function: ELU. | Foggia et al. [45]: 12 fire videos, 11 smoke videos, and 1 normal video; keyframes selected manually. Classes: Fire; Smoke; Negative. | N/A | Class 0: P 0.84, R 0.79, F1 0.81; Class 1: P 0.91, R 0.89, F1 0.90; Class 2: P 0.87, R 0.95, F1 0.91; confusion matrix |
| He [56] (2021) | VGG16 (He et al. [72]): 13 convolution layers and 3 fully connected layers. The convolution layers are grouped into five blocks (of 2, 2, 3, 3, and 3 layers), each followed by MaxPooling. SoftMax output activation, 1000 classes. | Train/Test images per class: Smoke 6662/1680; Smoke and fog 6842/1680; Non-smoke 6720/1681; Non-smoke and fog 6720/1681. | N/A | Accuracy: 0.999; P: 0.999; R: 0.999; F1-Score: 0.999 |
| Hu [73] (2022) | Architecture built on YOLOv5 by replacing the SPP module with Soft-SPP and adding the VAM module. | Available online [74] | N/A | AP: 0.79; FPS: 122 |
| Liu [75] (2019) | Two channels. First channel: AlexNet-based network with a residual block to extract generalizable features. Second channel: a dark-extracted convolutional neural network trained on the dark channel dataset. | Positive/Negative class images: Train 3967/3967; Val 430/430; Test 500/500. | N/A | Accuracy: 0.986; Binary cross-entropy: 0.077 |
| Yuan [76] (2023) | VGG16 (Simonyan [77]) backbone to obtain contextual features from the input image; a 3D attention module built by fusing attention maps along three axes; multi-scale feature maps obtained through atrous convolutions and attention mechanisms, followed by mixed average and maximum pooling. | A total of 1000 virtual smoke images with different background images (Yuan [78]) | N/A | MAE (the DUTS dataset [79] was used for performance comparison) |
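
The second channel in the Liu [75] row works on a "dark channel" dataset, but the source does not describe the extraction step. The sketch below assumes the standard dark channel prior definition of He, Sun and Tang: each pixel takes the minimum intensity over the RGB channels and then over a local square window Ω(x), i.e. J_dark(x) = min over y in Ω(x) of min over c in {r, g, b} of J_c(y). Haze-like regions such as thin smoke tend to raise this value, which is presumably why it is informative here. The window size and edge padding are assumed parameters.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image: np.ndarray, window: int = 15) -> np.ndarray:
    """Dark channel of an H x W x 3 image (assumed dark-channel-prior definition).

    Step 1: per-pixel minimum over the three colour channels.
    Step 2: minimum over a window x window neighbourhood; the map is
    edge-padded so the output keeps the input's height and width.
    """
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an H x W x 3 array")
    if window % 2 == 0:
        raise ValueError("window must be odd")
    min_rgb = image.min(axis=2)
    pad = window // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    windows = sliding_window_view(padded, (window, window))  # H x W x window x window
    return windows.min(axis=(-2, -1))


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)  # stand-in for a video frame
dc = dark_channel(frame, window=15)
print(dc.shape, dc.dtype)
```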

| True class (rows) / Predicted class (columns) | Foggia Dataset [45]: Fire | Foggia Dataset [45]: No Fire | FireNet Dataset [85]: Fire | FireNet Dataset [85]: No Fire |
|---|---|---|---|---|
| Fire | 314 | 49 | 553 | 40 |
| No Fire | 4 | 3017 | 4 | 274 |
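
False positive and false negative rates, the metrics reported for example by Muhammad [44], can be read directly off these two matrices. A short sketch, with Fire as the positive class and a hypothetical helper name; on both datasets the errors are dominated by missed fires (49 and 40) rather than false alarms (4 on each).

```python
# Confusion matrices from the table above, as (TP, FN, FP, TN) with Fire as positive.
matrices = {
    "Foggia [45]": (314, 49, 4, 3017),
    "FireNet [85]": (553, 40, 4, 274),
}


def alarm_rates(tp: int, fn: int, fp: int, tn: int) -> tuple:
    """Return (false positive rate, false negative rate)."""
    fpr = fp / (fp + tn)  # fraction of no-fire samples flagged as fire (false alarms)
    fnr = fn / (fn + tp)  # fraction of fire samples the model missed
    return fpr, fnr


for name, cm in matrices.items():
    fpr, fnr = alarm_rates(*cm)
    print(f"{name}: FPR = {fpr:.4f}, FNR = {fnr:.4f}")
```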

| Dataset | R [%] | P [%] | F1-Score [%] |
|---|---|---|---|
| Red, Green, Blue | 98.9 | 99.8 | 99.3 |
| Green, Green, Blue | 97.3 | 80.0 | 87.8 |
| Red, Red, Blue | 90.9 | 86.5 | 88.6 |
| Zero, Green, Blue | 16.9 | 91.1 | 28.5 |
| Red, Zero, Blue | 0 | 0 | 0 |
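
The table above compares inputs whose three planes are drawn from different sources. The source does not spell out how these composites were built. The sketch below assumes that each name lists the source of the first, second, and third input plane in order, and that "Zero" denotes an all-zero plane; `composite` is a hypothetical helper.

```python
import numpy as np


def composite(image: np.ndarray, spec: tuple) -> np.ndarray:
    """Build a 3-plane input from an H x W x 3 RGB array.

    spec names the source of each output plane, e.g. ("G", "G", "B") or
    ("Zero", "G", "B"); "Zero" is assumed to mean an all-zero plane.
    """
    index = {"R": 0, "G": 1, "B": 2}
    planes = []
    for name in spec:
        if name == "Zero":
            planes.append(np.zeros(image.shape[:2], dtype=image.dtype))
        else:
            planes.append(image[..., index[name]])
    return np.stack(planes, axis=-1)  # new H x W x 3 array, same dtype as the input


rgb = np.arange(2 * 2 * 3, dtype=np.uint8).reshape(2, 2, 3)  # toy 2 x 2 image
ggb = composite(rgb, ("G", "G", "B"))
zgb = composite(rgb, ("Zero", "G", "B"))
```

Because every composite keeps three planes, a network pretrained on RGB input can be applied to it unchanged, which is one plausible reason for this design.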

| True class (rows) / Predicted class (columns) | Fire | No Fire |
|---|---|---|
| Fire | 6863 | 313 |
| No Fire | 0 | 7030 |

| Ref | Algorithm | Setting | Dataset/Data Source | Objectives |
|---|---|---|---|---|
| Liu [95] (2021) | PSO | Tunnel | Temperatures from sensor arrays | Identify the fire source locations |
| Fang [96] (2023) | Feed-forward ANN | Building consisting of a large number of rooms | Temperatures from sensor arrays | Localize the fire source |
| Yar [47] (2023) | YOLOv5 with a Stem module in the backbone; larger kernels replaced with smaller ones in the neck; a P6 module in the head. | Building fire (seen from outside); indoor electric fire; vehicle fire | Fire images: 723 building fire; 118 indoor electric fire; 1116 vehicle fire. | Fire detection and classification |
| Truong [97] (2012) | Moving-region detection by an adaptive Gaussian mixture model; fuzzy C-means clustering for color segmentation; SVM classification. | Indoor; outdoor | Two-class image dataset: 25,000 fire frames; 15,000 non-fire frames. Test sets: Fire Video [98], VisiFire [99]. | Fire detection |
| Wang [100] (2023) | VGG16 | Various indoor fires; vehicle fires | NIST Fire Calorimetry Database [101], consisting of HRR versus time values | Predict the heat release rate (HRR) from flame images |
| Wang [102] (2022) | VGG16 | Indoor fires in an ISO 9705 compartment | CFD-generated dataset (FDS 6.7.5) | Predict the HRR |
| Hu [103] (2023) | Feed-forward ANN | Longitudinally ventilated tunnel | CFD-generated dataset | Predict the maximum ceiling temperature |
| Hosseini [104] (2022) | Deep CNN | Outdoor (vegetation, industrial settings); indoor | Eight classes: flame; white smoke; black smoke; flame and white smoke; flame and black smoke; black smoke and white smoke; flame, white smoke and black smoke; normal. | Fire and smoke detection and classification |
| Chen [105] (2007) | Decision Tree | Aircraft cargo compartment | CO and CO2 concentrations; smoke density | Fire and smoke detection |
| Qu [106] (2022) | Stacking ensemble learning: LSTM; Decision Tree; Random Forest; Support Vector Machine; K-Nearest Neighbor; XGBoost. | Test fires defined by ISO/TR 7240-9:2022 [107]: cotton rope smoldering fire; polyurethane plastic open flame; heptane open flame. | European standard fires: time histories of CO and smoke concentrations and temperature | Fire detection |
| Kim [108] (2015) | Bayesian classification | Indoor environment: a hallway with two adjacent rooms | Frames extracted from videos | Fire and smoke detection |
| Favorskaya [109] (2016) | Support Vector Machine; Random Forests; Boosted Random Forests | Outdoor | Frames extracted from videos | Smoke detection |
| Smith [110] (2023) | Random Forests | Wildfire | Pixel maps | Predict the wildfire hazard |
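
Liu [95] identifies fire-source locations in a utility tunnel with an adaptive PSO driven by sensor-array temperatures. The sketch below illustrates only the general inverse-problem pattern, using plain global-best PSO rather than the adaptive variant of [95]: it searches for the source position that minimizes the squared misfit between observed temperatures and a forward model. The 1D tunnel, the sensor spacing, the exponential-decay forward model, and the PSO coefficients are all hypothetical choices made for illustration.

```python
import math
import random

# Hypothetical 1D tunnel: thermocouples every 10 m along a 100 m section.
SENSORS = [float(x) for x in range(0, 101, 10)]


def forward_model(source_x: float, sensor_x: float) -> float:
    """Assumed toy model: ceiling temperature decays exponentially with distance."""
    return 20.0 + 300.0 * math.exp(-abs(sensor_x - source_x) / 15.0)


def misfit(source_x: float, observed: list) -> float:
    """Sum of squared differences between modelled and observed temperatures."""
    return sum((forward_model(source_x, s) - t) ** 2 for s, t in zip(SENSORS, observed))


def pso_locate(observed, lo=0.0, hi=100.0, n_particles=30, n_iter=100,
               w=0.7, c1=1.5, c2=1.5, seed=0):
    """Standard (non-adaptive) global-best PSO over the source position."""
    rng = random.Random(seed)
    pos = [rng.uniform(lo, hi) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    pbest = pos[:]
    pbest_val = [misfit(p, observed) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g], pbest_val[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()
            # Inertia + pull towards the particle's own best + pull towards the swarm's best.
            vel[i] = (w * vel[i]
                      + c1 * r1 * (pbest[i] - pos[i])
                      + c2 * r2 * (gbest - pos[i]))
            pos[i] = min(max(pos[i] + vel[i], lo), hi)  # keep the particle inside the tunnel
            val = misfit(pos[i], observed)
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i], val
    return gbest, gbest_val


true_x = 37.0
observed = [forward_model(true_x, s) for s in SENSORS]  # noise-free synthetic readings
estimate, err = pso_locate(observed)
print(f"estimated source at {estimate:.2f} m (true {true_x} m), misfit {err:.3g}")
```

In a real application the forward model would come from correlations or CFD runs, and with noisy measurements the misfit no longer reaches zero at the true location.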

| Parameter | Forest Fire | Fire-Flame | Unknown Images |
|---|---|---|---|
| Classes | Fire/No Fire | Fire/No Fire | Fire/No Fire |
| Image acquisition | Terrestrial & Aerial | Terrestrial & Aerial | Terrestrial & Aerial |
| Fire type | Forest | General | General |
| Resolution | 250 × 250 pixels | Varies | Varies |
| View angle | Front & Top | Front | Front & Top |
| No. of images | 1900 | 2000 | 200 |
| Class balance | Balanced | Balanced | Balanced |

| CNN | SqueezeNet | ShuffleNet | MobileNet v2 | ResNet50 |
|---|---|---|---|---|
| Layers | 18 | 50 | 53 | 50 |
| Parameters | | | | |
| Forest-Fire: accuracy (%) / training time (s) | 97.11 / 45 | 97.89 / 68 | 98.95 / 151 | 97.63 / 166 |
| Fire-Flame: accuracy (%) / training time (s) | 95.00 / 77 | 96.00 / 90 | 97.5 / 164 | 96.00 / 175 |

| Architecture | No. of Classes, Input Size | Structure | Ref |

|---|---|---|---|

| DenseNet121 | Total of 1000 classes, input image size 224 × 224 × 3 | Total of 121 convolution layers Four dense blocks Three transition layers One SoftMax layer (output) | [36] |

| InceptionV3 | Total 1000 classes, input shape 229 × 229 × 3 Total of 23,851,784 parameters | 154-layer Three modules: (A) 5 × 5 convolution is divided into two 5 × 5 convolutions; (B) 7 × 7 convolution is divided into a 1 × 7 and a 7 × 1 layers; (C) 3 × 3 convolution is divided into two layers, 1 × 3 and a 3 × 1 | [43] |

| ResNet50V2 | Total of 1000 classes, input image size | Three network variants, with 50/101/152 layers | [119] |

| VGG-19 | Total of 1000 classes, 143,667,240 trainable parameters | Total of 16 convolutional, 5 max-pooling and 3 FC layers, plus a SoftMax output layer | [77] |

| NASNetMobile | Total of 1000 classes, total of 5,326,716 trainable parameters, input image size 224 × 224 × 3 | Scalable CNN architecture built from repeated cells whose structure was found by a reinforcement-learning-based architecture search | [120] |
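The InceptionV3 factorizations listed above are motivated by weight count: a convolution with a k_h × k_w kernel, C_in input and C_out output channels has k_h·k_w·C_in·C_out weights (bias ignored). The short sketch below makes that arithmetic explicit, assuming each factorized pair is applied sequentially and every layer keeps the same channel count C; the value C = 64 is a hypothetical choice and cancels out of the percentages.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Weights of one 2-D convolution layer (bias terms ignored)."""
    return kh * kw * c_in * c_out

def stack_weights(kernels, c):
    """Weights of a sequence of convolutions that all keep c channels."""
    return sum(conv_weights(kh, kw, c, c) for kh, kw in kernels)

C = 64  # hypothetical channel count, held constant through each stack

factorizations = {
    "5x5 -> two 3x3": ([(5, 5)], [(3, 3), (3, 3)]),
    "7x7 -> 1x7 + 7x1": ([(7, 7)], [(1, 7), (7, 1)]),
    "3x3 -> 1x3 + 3x1": ([(3, 3)], [(1, 3), (3, 1)]),
}

for name, (original, factorized) in factorizations.items():
    before, after = stack_weights(original, C), stack_weights(factorized, C)
    print(f"{name}: {before} -> {after} weights ({1 - after / before:.0%} fewer)")
```

The three lines report reductions of 28% (25 → 18 weights per channel pair), 71% (49 → 14) and 33% (9 → 6), independent of C, while the stacked layers keep the same receptive field as the original kernel.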

| Architecture | Precision (%) | Recall (%) | Accuracy (%) | F1-Score (%) |

|---|---|---|---|---|

| SqueezeNet1 | 97.92 | 93.77 | 95.80 | 95.71 |

| SqueezeNet2 | 96.48 | 96.07 | 96.27 | 96.26 |

| SqueezeNet3 | 97.95 | 96.31 | 97.12 | 97.10 |

| Xception | 94.14 | 96.49 | 95.30 | 95.36 |

| MobileNet | 94.43 | 99.53 | 96.91 | 96.99 |

| MobileNetV2 | 99.00 | 96.58 | 97.78 | 97.75 |

| ShuffleNet | 94.43 | 98.44 | 96.39 | 96.47 |

| ShuffleNetV2 | 98.99 | 97.33 | 98.15 | 98.13 |

| AlexNet | 94.27 | 96.06 | 95.16 | 95.20 |

| VGG16 | 96.50 | 98.55 | 97.51 | 97.54 |
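All four columns above derive from the counts of a binary confusion matrix (TP, TN, FP, FN). The sketch below computes them and, because some studies report a class-averaged F1-score, also the support-weighted mean of the per-class F1 scores. It uses the counts reported for Khan [123] (TP 189, TN 185, FP 5, FN 1) and Ghali [127] (TP 4839, TN 2496, FP 984, FN 298) in the transfer-learning comparison; the function names are illustrative.

```python
def binary_metrics(tp, tn, fp, fn):
    """Precision, recall, accuracy and F1 of the positive (fire) class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = 2 * precision * recall / (precision + recall)  # equals 2TP / (2TP + FP + FN)
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}

def weighted_f1(tp, tn, fp, fn):
    """Support-weighted mean of the F1 scores of the fire and no-fire classes."""
    f1_pos = 2 * tp / (2 * tp + fp + fn)
    # For the no-fire class the roles swap: its TP is tn, its FP is fn, its FN is fp.
    f1_neg = 2 * tn / (2 * tn + fn + fp)
    n_pos, n_neg = tp + fn, tn + fp  # class supports
    return (f1_pos * n_pos + f1_neg * n_neg) / (n_pos + n_neg)

for ref, counts in {"Khan [123]": (189, 185, 5, 1), "Ghali [127]": (4839, 2496, 984, 298)}.items():
    m = binary_metrics(*counts)
    print(ref, {k: round(100 * v, 2) for k, v in m.items()},
          "weighted F1:", round(100 * weighted_f1(*counts), 2))
```

For Khan's counts, accuracy and F1 are both about 98.4%. For Ghali's counts, the F1 of the fire class alone is about 88.3%, whereas the support-weighted average is 84.77%, the value reported in the table. This suggests (the source does not state it) that the reported figure is a weighted average over both classes.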

| Ref | Transfer Learning Architecture | Dataset/Classification Type | Dataset Volume/Classes | Image Augmentation | Performance Metrics |

|---|---|---|---|---|---|

| Khan [123] (2022) | FFireNet derived from MobileNetV2 by freezing the original weights and replacing the FC output layer. | Forest Fire Dataset [124]/Binary classification | Total of 1900 RGB forest environment images, resolution . Train/Test: 80/20. | Rotation 1–50° Scaling 0.1–0.2 Shear 0.1–0.2 Translation 0.1–0.2 | TP: 189 TN: 185 FP: 5 FN: 1 |

| Zhang [125] (2022) | Backbone: ResNet variants (18, 34, 50 and 101), VGGNet and Inception pretrained on ImageNet dataset. Shallow feature extraction blocks frozen, deep feature extraction blocks fine-tuned. | Selected from FLAME [31]: 31,501, 7874 and 8617 image samples as training, validation and test, respectively. | FLIR camera images: 640 . Zenmuse camera: . Phantom camera: . | Mix-up Zhang [126] (sample expansion algorithm for computer vision, which creates new images by mixing different types of images). Image augmentation (rotate, flip, pan, combine). | F1-Score: (ResNet101): 81.45% FNs: VGGNet: 1148 Inception: 964 ResNet: 925 |

| Ghali [127] (2022) | EfficientNet-B5 and DenseNet-201 fed independently with the unprocessed image. The outputs are concatenated and passed through a Pooling layer. | FLAME [31]/Binary classification | Training set: 20,015 fire/11,500 no-fire images. Validation set: 5003 fire /2875 no-fire images. Test set: 5137 fire/3480 no-fire images. | N/A | Test dataset: TP: 4839 TN: 2496 FP: 984 FN: 298 F1-Score: 84.77% Inference speed: 0.018 s/image |

| Bahhar [128] (2023) | YOLOv5 Xception MobileNetV2 ResNet-50 DenseNet121 | Images captured from FLAME [31] dataset (29 FPS video streams)/Binary classification | 25,018 fire class 14,357 no fire class 70% training set 20% validation set 10% test set | Image augmentation (Horizontal flip Rotation) | F1-Score: 0.93 |

| Alexandrov [129] (2019) | Local Binary Patterns [52] Haar YOLOv2 Faster R-CNN Single Shot Multibox Detector (Liu [130]) | Image captures from video streams of wildfires recorded from UAVs. Real smoke + Forest background dataset (Favorskaya [109]) Simulative smoke + Forest background dataset (Zhang [131]) | 6600 Fire 15,600 No-Fire 12,000 images 12,000 images | N/A | FN: 0 (LBP and Haar) FPS: 14.62 (Haar); 22.4 (LBP) |

| Xie [132] (2023) | YOLOv5 with a Small Target-Detection Layer | Total of 20,000 flame images collected by a fire brigade/Binary classification. | RGB images Total of 13,733 images in the test set | Image augmentation (Rotation, Flip) Brightness balance | mAP: 96.6% FPS: 68 |

| Majid [133] (2022) | VGG16 GoogLeNet ResNet50 EfficientNetB0 | DeepQuestAI [114] Saied (FIRE Dataset) [134] | Total of 3988 fire images and 3989 non-fire images. Training/Test: 80/20. RGB images. | N/A | F1-Score: VGG16: 73.73% GoogLeNet: 87.06% ResNet50: 93.27% EfficientNetB0: 94.00% |

| Dogan [135] (2022) | Ensemble learning based on ResNet18, ResNet50, ResNet101 and IResNetV2 | Compilation from FIRE Dataset [136] and [137]/Binary classification: Wildfires; Outdoor fires; Building fires; Indoor fires. | PNG files 865 positive class 785 negative class (including fire-resembling objects) | N/A | Specificity Sensitivity P F1-Score Accuracy Confusion matrix |
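Zhang [125] expands the FLAME training data with mix-up (Zhang [126]). In its standard formulation, a synthetic sample is a convex combination of two training samples and of their one-hot labels, x̃ = λx_i + (1 − λ)x_j and ỹ = λy_i + (1 − λ)y_j, with λ drawn from a Beta(α, α) distribution. The NumPy sketch below applies this to a batch by pairing every image with a randomly permuted partner. The value α = 0.2, the (N, H, W, C) layout and the two-class one-hot labels are assumptions, not details of Zhang [125]'s pipeline.

```python
import numpy as np

def mixup_batch(images, labels, alpha=0.2, rng=None):
    """Mix-up augmentation for one batch.

    images: float array of shape (N, H, W, C); labels: one-hot float array (N, K).
    Returns the mixed images, the mixed (soft) labels and the coefficient lambda.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)             # one mixing coefficient per batch
    perm = rng.permutation(len(images))      # random partner for every sample
    mixed_x = lam * images + (1.0 - lam) * images[perm]
    mixed_y = lam * labels + (1.0 - lam) * labels[perm]
    return mixed_x, mixed_y, lam

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.random((4, 8, 8, 3))             # four toy 8 x 8 RGB images in [0, 1)
    y = np.eye(2)[[0, 1, 0, 1]]              # one-hot: column 0 = no fire, column 1 = fire
    mx, my, lam = mixup_batch(x, y, rng=rng)
    print("lambda =", round(lam, 3))
    print(my)                                # soft labels, each row sums to 1
```

Small α values concentrate λ near 0 or 1, so most mixed images stay close to one of the two originals; the classifier is then trained on the soft labels with the usual cross-entropy loss.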

| Dataset | File Format | Classes | Environment | Content | Sensor Type/Collection Methodology |

|---|---|---|---|---|---|

| FLAME [134] | Raw video Image files Images and masks | Fire No Fire | Wildfires | Total of 39,375 JPEG frames resized to 254 × 254 (training and validation, 1.3 GB). Total of 8617 JPEG frames resized to 254 × 254 (test), 301 MB. A total of 2003 fire frames for fire segmentation problems, 5.3 GB. Total of 2003 ground truth mask frames, for fire segmentation problems, 23.4 MB. | Full HD and 4K camera: Zenmuse X4S camera, Phantom 3 Camera Thermal Camera: FLIR Vue Pro R Drones: DJI Matrice 200, DJI Phantom 3 Professional |

| BoWFire [138] | Image files (JPG) | Fire No-Fire | Buildings Industrial Urban | Training dataset: 80/160 Fire/No-Fire images of 50 × 50 pixels. Test dataset: 119/107 Fire/No-Fire images of various resolutions. | Sensor type: N/A. Source: image scraper (Google); organized and examined by team members. |

| FIRESENSE [139] | Video files (AVI) | Fire No-Fire Smoke No-Smoke | Buildings Urban Forest | Smoke: 13 positive files, 9 negative files. Fire: 13 positive files, 16 negative files. | Not provided |

| MIVIA Fire Detection Dataset [140] | Video files (AVI) | Fire No-Fire | Buildings Urban Forest | 11 positive video files 16 negative video files Various resolutions from to . | Not provided |

| MIVIA Smoke Detection Dataset [141] | Video files (AVI) | Smoke Smoke and red sun reflections Mountains Clouds Red reflections Sun | Outdoor, non-urban | Total of 149 files, each consisting of an approximately 15 min recording. | Not provided |

| KMU [142] | Video files (AVI) | Fire Smoke Normal | Indoor Outdoor Wildfire | Indoor/outdoor flame, 22 files, 216 MB. Indoor/outdoor smoke, 2 files, 17.9 MB. Wildfire smoke, 4 files, 15.6 MB. Smoke- or flame-like moving objects, 10 files, 13.6 MB. | Not provided |

| Fire & Smoke [143] | Image files (JPG) Annotations (XML) | Fire No-Fire | Indoor Outdoor Urban | or above. Various lighting conditions, varied distance and view point. | Mobile phones Crowdsource contribution |

| Forest Fire Images [144] | Image files (JPG) | Fire No-Fire | Forest | Train dataset: 2500/2500 Fire/No-Fire images. Test dataset: 25/25 Fire/No-Fire images. Total dataset size: 406.51 MB. | Sensor type: not provided. Combined and merged from other datasets. |

| Wildfire dataset [145] | Image files (JPG, PNG) | Smoke and Fire Smoke only Fire-like objects Forested areas Smoke-like objects | Forest | Train dataset, test dataset and validation dataset. Maximum resolution: Minimum resolution: Total dataset size: 2701 files, 10.74 GB | Source: online platforms such as government databases, Flickr, and Unsplash |

| FlameVision [146] | Image files: JPG for detection tasks, PNG for classification. Total of 4500 annotation files for detection tasks. | Fire No-Fire | Wildfire Outdoor | Classification dataset: 5000 positive, 3600 negative images, split into train, validation and test. Total dataset size: 4.01 GB | Source: Videos of real-life wildfires were collected from the internet. |

| ForestFireSmoke [147] | Image files (JPG) | Fire Smoke Normal | Forest | Training set: 10,800 files class-balanced from all three classes. Test set: 3500 files class-balanced from all three classes. Total size: 7.12 GB. | Not provided |

| VisiFire [99] | Video files | Fire Smoke Normal | Outdoor | 13 fire videos 21 smoke videos 21 forest smoke videos 2 normal videos | Not provided |

| DSDF [49] | Image files | Smoke without fog (SnF) Non-smoke with fog (nSF) Non-smoke without fog (nSnF) Smoke with fog (SF) | Outdoor | 6528 SnF images 6907 nSnF images 1518 SF images 3460 nSF images Difficult samples were included: local blur and image smear; non-smoke images with smoke-like objects; different colors and shapes of the target smoke. | Source: collected from public online resources; photos taken under laboratory conditions. |

| DFS [148] | Image files | Large flame Medium flame Small flame Other | | Total of 9462 images, of which: 3357 Large flame; 4722 Medium flame; 349 Small flame; 1034 Other. | Source: collected from public online resources. |
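Several datasets in the table ship without fixed subsets; FlameVision [146], for example, lists 5000 positive and 3600 negative classification images split into train, validation and test. A stratified split keeps the class proportions identical in every subset, which matters for imbalanced sets such as DFS (349 small-flame images out of 9462). The stdlib sketch below is one way to do it. The 70/20/10 ratios are borrowed from Bahhar [128] purely for illustration, and the function name is hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.7, 0.2, 0.1), seed=0):
    """Return (train, val, test) index lists with per-class proportions preserved.

    labels: one class label per sample.
    fractions: share of every class assigned to train, validation and test.
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)                # fixed seed -> reproducible split
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        train.extend(indices[:n_train])
        val.extend(indices[n_train:n_train + n_val])
        test.extend(indices[n_train + n_val:])  # remainder goes to the test set
    return train, val, test

if __name__ == "__main__":
    labels = ["fire"] * 5000 + ["no_fire"] * 3600
    train, val, test = stratified_split(labels)
    print(len(train), len(val), len(test))   # 3500+2520, 1000+720, 500+360
```

Splitting per class rather than over the pooled indices guarantees that a rare class is not left out of the validation or test subset by chance.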

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Diaconu, B.M. Recent Advances and Emerging Directions in Fire Detection Systems Based on Machine Learning Algorithms. Fire 2023, 6, 441. https://doi.org/10.3390/fire6110441

Diaconu BM. Recent Advances and Emerging Directions in Fire Detection Systems Based on Machine Learning Algorithms. Fire. 2023; 6(11):441. https://doi.org/10.3390/fire6110441

Chicago/Turabian Style: Diaconu, Bogdan Marian. 2023. "Recent Advances and Emerging Directions in Fire Detection Systems Based on Machine Learning Algorithms" Fire 6, no. 11: 441. https://doi.org/10.3390/fire6110441

APA Style: Diaconu, B. M. (2023). Recent Advances and Emerging Directions in Fire Detection Systems Based on Machine Learning Algorithms. Fire, 6(11), 441. https://doi.org/10.3390/fire6110441