1. Introduction

Machine Learning (ML) analytics have become increasingly vital in providing condition monitoring and predictive maintenance for industrial machinery over the last decade [1]. ML provides several advantages over purely statistical methods, as it can utilise historic data to improve the accuracy of inference on problems for which sufficient data is available.

In Industrial Internet of Things systems for mechanical equipment, reliability is a critical design factor; systems need to be reliable at every layer (physical, software and networking) to prevent loss of data, downtime and loss of service. Utilising analytics for industrial machinery can enable breakdowns to be pre-empted and can play a role in extending the life of components [2]. This has become vital in ensuring the reliability of many types of industrial machinery, especially machinery that is deployed remotely and does not receive regular physical inspection. These analytics systems often rely on data produced by on-board sensors (for example, a Hall-effect sensor on a motor or an internal temperature sensor), which is then collated and sent to the Cloud via an Internet of Things (IoT) device connected to the internet. Cloud integration with Industrial IoT systems has many advantages, from access to powerful computers able to provide advanced analytics, to reducing the need to store data on the local device.

Without internet access, the advantages of Cloud integration cannot be utilised. If Cloud access is strictly necessary, a more reliable link such as satellite communications may be used; however, such links tend to carry a higher cost, which may not be feasible depending on the scale of the project. To address this, many businesses and developers have turned to deploying analytics systems at the Edge: as close as possible to the source of data, to capture value as quickly as possible and provide near-instant analytics results [3].

Not all Edge systems have reliable access to the Cloud. Despite the prevalence of modern mobile networks, many worksites may still lack reliable internet access, especially if the system to be monitored is restricted by geographic factors, interference, cost constraints or lack of infrastructure [3]. Generally, a low-power IoT device can be used for an IoT workload when connected to the Cloud, as less local compute power, storage and software complexity are required than with an Edge-only strategy [4]. If there is no internet access, or network access is restricted or limited by any means, this approach may not be feasible: data is unlikely to arrive in time to be useful on the device, and data or updates may not arrive at all [5].

In this paper, a novel architecture is proposed that combines elements of gateway IoT architecture with an Edge-based ML Operations (MLOps) system, which manages ML models at the Edge independently of the Cloud. This system is analogous to Cloud MLOps, but instead pulls models from the Cloud, versions them at the Edge and trains its own versions of models, maintaining continuity under network failure while keeping a consistent version management strategy.

This solution is agnostic to the model type and the libraries used to build it, and prioritises the deployment and management of models at the Edge regardless of circumstances, while mirroring functionality normally only present on the Cloud. This addresses a limitation of existing solutions, in which the Edge is not treated as a central component of the MLOps cycle but as the end stage or a minor use-case. As a result, most systems require full Cloud integration and constant, reliable networking, which is often unavailable in fully remote scenarios.

The objective of this work is to produce the architecture and prototype of this system to showcase the benefit of a novel Edge MLOps mirror over a conventional Cloud-Edge hybrid system or a Cloud-only solution.

The remainder of this paper is organised as follows.

Section 2 identifies related work in the area of ML model deployment on Edge devices and the current limitations.

Section 3 outlines some challenges and drawbacks of running ML at the Edge, with a view into why these systems are necessary for IoT and ML.

Section 4 defines the proposed system and presents a top-level architecture for an Edge MLOps system, with an outline of its components and their use.

Section 5 details a prototype implementation built from off-the-shelf components and describes how they work together.

Section 6 describes the evaluation methods for the prototype, which use artificial packet loss to simulate network instability in an Edge environment, and discusses the results.

Finally, Section 7 discusses conclusions and future work.

2. Related Work

Many previous projects have shown the value of ML analytics for the IoT and the ability of low-cost hardware to run advanced models such as Neural Networks, with great benefits to the monitoring and control of devices through improved failure detection, optimisation and efficiency [6]. An often-addressed limitation is the inability to train or retrain Neural Networks on IoT devices due to high compute overhead [3]. There is also a noted gap in the capabilities of ML for IoT: networking, power and hardware constraints combine to prevent large models from being sent to, and run on, devices [7]. This leads to the research area of Edge computing and, by extension, the paradigm of Fog computing, wherein Cloud functionality is brought to a local network or to individual devices [5].

These methods of analysing data can provide fault detection, predictive maintenance alerts, future load forecasting and condition monitoring. By deploying close to the source of data, there is scope to analyse data at higher resolution with lower latency and to offer fast feedback or remedial action when issues occur [3], while keeping running costs low. However, this often comes with a large increase in technical labour, as models must be deployed and kept updated remotely with intervention from Data Scientists and operations teams, which may be unfeasible when a deployment spans thousands of devices. This scale requirement is noted by multiple authors and projects, who observe that, when built in a reliable and scalable manner, Edge deployments can handle extreme environments with massive data throughput [8].

The issue of reliable ML deployment is often addressed using MLOps practices, which serve as a reliable means of building, versioning, deploying and monitoring ML models [9]. Many open-source and commercial MLOps tools are available; however, many are Cloud-based and do not account for usage at the Edge [10], nor do they combine Edge and Cloud management, instead opting for single-source model management on the Cloud [10]. This limits their usability at the Edge: where access to the Cloud is limited or unreliable, models may not be manageable under failure or drift conditions [11].

Much of the Edge and Mobile ML landscape does not consider bringing MLOps to the Edge, but instead prioritises moving models from Cloud to Edge without consideration of uptime reliability or network conditions. Nor does it include monitoring or condition management for models built for individual devices, focusing instead on models that can be re-used [10]. This leads to poor support for devices in constrained remote networks, reducing the feasibility of these solutions in far-Edge environments and, therefore, the overall viability of Edge ML deployments in network-constrained settings.

The limitations of related projects are therefore reliability and reliance on the Cloud: most projects do not consider an offline scenario, and either give devices direct internet access to the Cloud instead of using a gateway device, or use a hybrid system in which a Cloud connection is still required for MLOps. This is a disadvantage compared with a fully contained Edge system when reliability and speed of deployment are factors.

3. Challenges of ML Deployment

3.1. Edge Computing

With IoT, industrial machinery can gain the capability to power local analytics and the required software toolchains directly on the device or as close as physically possible, and with Edge capabilities, advanced analytics and data processing can be accessed locally while reducing the overall burden on the Cloud. Edge systems can range from a small microcontroller running very simple analytical models on real-time data, up to entire “Fog” (local Cloud) networks deployed on a worksite.

While microcontrollers may be small, low-power and cheap, they rarely provide the power required for running larger ML models or Neural Networks, as they often have reduced instruction set CPUs and limited RAM, and rarely have storage beyond what is necessary for their base operating systems and user code [4]. Conversely, Fog networks can provide the compute power and storage to run highly advanced, data-heavy analytics, but carry a higher cost burden and require considerable power and logistical planning [12].

IoT gateways are commonly used to provide network access, data storage and process offloading for less powerful devices in a local network, such as a microcontroller unit (MCU) or the onboard controller of a machine. An IoT gateway is a more powerful device that sits between the internet and far-Edge devices and can act as a router or relay for data to the Cloud [13]. Gateways can provide many of the advantages of Fog networks for limited-scale device groups, without the cost and logistical concerns of managing many different devices [14].

3.2. ML Operations

MLOps techniques can be used to deliver pre-built models to the Edge. The field of MLOps is relatively immature, having only come into the mainstream as a concept within the last ten years. MLOps is analogous to the Continuous Integration/Continuous Deployment (CI/CD) and version control systems that exist for code, but instead allows ML models to be versioned, monitored, updated and deployed. MLOps systems rarely store code; instead, they store the parameters, weights, biases, context, data format, libraries and artifacts required to run a pre-built ML model [9].

Once built, stored and versioned, models are ready to be deployed to many devices or environments without needing to be retrained or individually built for each system. This can reduce the technical labour of deploying models, which is especially high when deploying to remote or Edge systems, as MLOps systems allow for automated deployment and management [9]. This simplifies the deployment of ML at scale while enabling models to be deployed remotely without developer or user intervention. To deploy more powerful ML models, the model will often be built on the Cloud and deployed with code in a container, or with configuration, to run at the Edge. Inference is less resource-intensive than training, and large models are commonly run on a lower-powered device than the one used for training, such as a microcontroller unit (MCU), provided the weaker device has the storage, RAM and compute power needed to run the model at all [6]. RAM and storage are critical factors for running ML on a device because, as with any computer program, the model must be small enough to be stored on the system and loaded into RAM with space left to handle incoming data. Models that exceed the available RAM will run slowly due to memory swapping.
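To make the storage and RAM constraint concrete, the following minimal Python sketch estimates whether a model's weights, an input data buffer and a safety margin fit in a device's RAM. It assumes that weights dominate the model's footprint and approximates runtime overhead with a flat fraction (`overhead_fraction`, an illustrative assumption rather than a measured value); real runtimes add interpreter, library and activation memory that this ignores.

```python
# Rough fit check for a model on a device. Assumption: weights dominate the
# footprint and runtime overhead is a flat fraction of weights + data buffer.

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_footprint_bytes(num_params: int, dtype: str = "float32") -> int:
    """Approximate in-memory size of the model weights alone."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits_in_ram(num_params: int, dtype: str, input_buffer_bytes: int,
                available_ram_bytes: int, overhead_fraction: float = 0.2) -> bool:
    """True if weights, an input buffer and a safety margin fit in RAM."""
    weights = model_footprint_bytes(num_params, dtype)
    required = (weights + input_buffer_bytes) * (1 + overhead_fraction)
    return required <= available_ram_bytes
```

For example, a one-million-parameter model quantised to int8 still needs about 1 MB for its weights, which would not fit on a microcontroller with 256 KiB of RAM, whereas the same model in float32 (about 4 MB) fits comfortably on a gateway with 1 GiB.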

Access to Edge systems may be limited by network connection, and analytics deployment in some businesses may not be sufficiently advanced as to be able to deploy outside of a controlled environment [

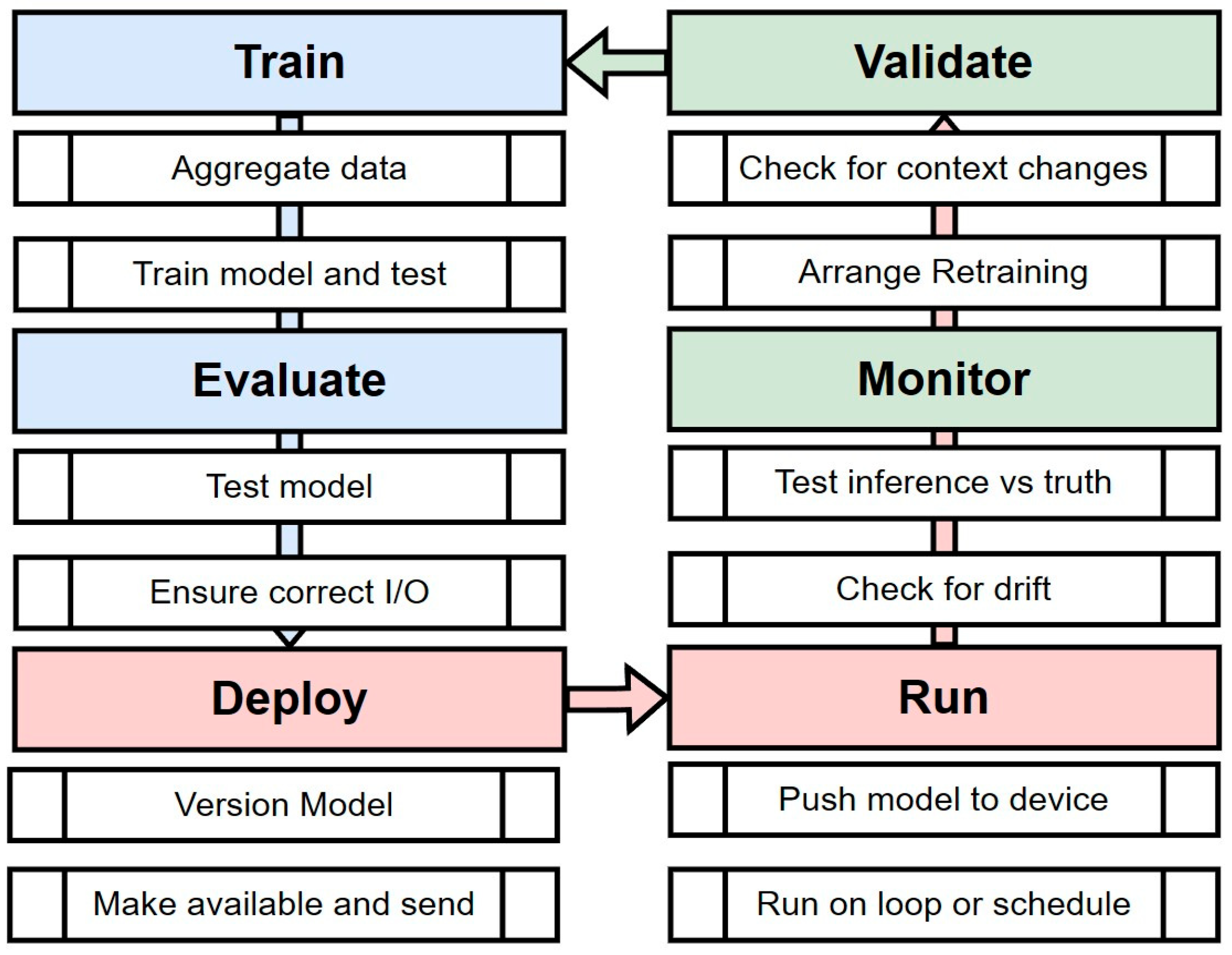

12]. An MLOps versioning system on the Cloud can work to keep models up to date and ensure accountability in model deployment. This could be mirrored on the Edge to allow the same benefits to devices connected to an IoT Edge Gateway. This process is outlined in

Figure 1, starting at the train step and continuing after validation wherein retraining may occur when necessary.

ML models are rarely built to run indefinitely without support. Many factors affect the running of a model, and over time model accuracy may decrease due to changes in the context of the data (e.g., new sensors installed), or due to changes in the model itself if the model uses online learning. ML models may need to be updated when model accuracy or data context has drifted. Drift occurs when the underlying data changes due to, for example, a change in the source of the data, such as a new part being fitted to a machine [15].

A functional MLOps strategy is an important factor in an industrial-scale Edge ML system. Edge deployments can be global in scale, with thousands of devices, each of which may have dozens of attached devices gathering data. This scale exceeds the human ability to individually build, deploy and manage the models required for intelligent forecasting, error detection and any other required process. Scale therefore links MLOps and Edge ML very closely: without MLOps, deploying ML in a secure, reliable, repeatable and accountable way would be almost impossible due to the human cost.

3.3. Limitations of Cloud Processing for IoT

For many industrial monitoring systems, data is produced at a sub-second cadence. For example, signal monitoring in power production equipment can record data at several hundred Hz. If this data were fully recorded, uploaded to the Cloud for analysis and the results sent back, the cost of transmission, processing and storage could be prohibitive, especially at a large scale [2]. The volume of data may also slow its delivery to the Cloud: a remote work site could have limited bandwidth, meaning large volumes of data may arrive slowly and out of order, with some data potentially lost. In these scenarios, reliance on the Cloud may make the required analytics system unusable. This can lead to failures or missed alerts if the analytics are vital to detecting a fault.
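The scale of this problem can be illustrated with a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, a single device sampling 100 fields at 500 Hz with 8-byte values; it ignores timestamps, protocol overhead and compression, so real figures would differ.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def raw_rate_bytes_per_s(sample_rate_hz, num_fields, bytes_per_value):
    """Raw payload rate for one device, before timestamps, headers or compression."""
    return sample_rate_hz * num_fields * bytes_per_value


def daily_volume_bytes(sample_rate_hz, num_fields, bytes_per_value, devices=1):
    """Raw bytes produced per day across `devices` identical devices."""
    return raw_rate_bytes_per_s(sample_rate_hz, num_fields, bytes_per_value) * devices * SECONDS_PER_DAY


def required_uplink_mbit_s(sample_rate_hz, num_fields, bytes_per_value, devices=1):
    """Sustained uplink needed to ship all raw data to the Cloud, in Mbit/s."""
    return raw_rate_bytes_per_s(sample_rate_hz, num_fields, bytes_per_value) * devices * 8 / 1e6


# Illustrative figures only, not measurements from a real site.
print(f"{daily_volume_bytes(500, 100, 8) / 1e9:.2f} GB per day")                # 34.56 GB per day
print(f"{required_uplink_mbit_s(500, 100, 8):.1f} Mbit/s sustained uplink")     # 3.2 Mbit/s sustained uplink
```

Dozens of such devices on one site would multiply both figures accordingly, which is the kind of transmission and storage cost pressure described in the paragraph above.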

The required latency of interactions with an industrial system may also inhibit Cloud analytics. The return time of sending data to the Cloud, processing, and sending a response may be longer than is allowable for responding to issues that happen quickly. In this situation, fast responses with minimum latency between detection and action may be required, which would necessitate the deployment of the relevant analytics systems as close as possible to the data source.

An IoT device deployed outside of a controlled environment may suffer from network instability; when model updates or new models are required, this may cause issues in delivering them to the Edge due to timeouts or lost packets. If the network connection is limited for any reason, it may be difficult or impossible to deploy or update a model at the Edge by streaming or file transfer. Resource constraint is also an important factor: sensor devices are limited in what they can host, so models either require more storage and RAM than is available or need significant pruning and optimisation. While this constraint leads to models being smaller and easier to transport, it also leads to a requirement for more frequent retraining, especially for forecasting and classification models, due to the lower number of samples [4].

3.4. Limitations of Deploying ML to the Edge

Deploying ML models to the Edge can be restricted by low network speed, low bandwidth, network instability and limited resources on local devices. IoT control and sensing devices are unlikely to be able to manage their own ML models due to a lack of storage space or other compute resources. This often leads to IoT devices running small, low-power models that may not provide high accuracy and may not be able to adapt to changing data or context. Updating a model on an Edge device that experiences drift or requires contextual changes may require either significant downtime while models are updated or a device restart for the model change to take effect. A model that remains in use without an update may continue to degrade or become otherwise useless. By leveraging MLOps versioning on the Edge, with Quality of Service (QoS) for reliably receiving models from the Cloud, the reach of analytics can be extended out to the source of data. This would reduce latency and reliance on the Cloud while allowing local analytics and data processing to take place [7].

When model drift occurs, it can degrade accuracy and lead to incorrect classifications or predictions, which may cause system failure if left unchecked [15]. To prevent this, models should be monitored and updated as necessary. It may also be necessary to build and deploy entirely new models when a new sensor is added to a machine, or to change the inputs and outputs (I/O) of an existing model. Monitoring information can be relayed back to system operators to inform them of calibration issues or sensor failures. An operator may need to update the model whenever the device's sensors are calibrated or changed, to compensate for this in the model. Such changes can be made more easily than a full model update, as the model-hosting container or running code can be updated with the new interface, or the model I/O can be updated with the new fields while still online [1].

Lack of connectivity and model management for far-Edge devices can be addressed by using an Edge gateway, or any more powerful Edge device such as the control node in a fog network, to manage and distribute ML models while handling model downloads, versioning and accuracy testing at the Edge [14]. This requires a software package capable of receiving, managing, deploying and monitoring models in production on Edge devices, and of updating or removing them when necessary.

Forecasting models at industrial sites can experience significant drift when context changes, due to new hardware being added or to sensor changes and re-calibration. For example, suppose a portable system that has been running in an arctic environment, with an operational data history attuned to the cold climate, is switched off and moved to a desert climate. A model designed to detect overheating may constantly declare the device to be overheating, and the rapid shift may cause temperature forecasts during operation to constantly show impending overheat events, possibly causing the device to be shut down if the model has some control over overheating prevention systems.

Under network constraints, this can lead to a reduction in service if the model is not properly maintained, leading eventually to gross inaccuracy or, at the most extreme end, machine failure. The demand for immediate access to a new model at the Edge may not be met under a constrained network, as the time taken for a model to be trained and sent from the Cloud could leave the machine offline or inaccurate for an extended period [6].

3.5. Example

The limitations of Cloud processing for IoT (network instability, latency, data mismatch and model version lag) create the need for an Edge layer to help manage and mitigate these issues. The volume of models and management required at scale quickly outpaces the ability of a human operator to keep up with deployment and retraining. For example, consider an industrial power site with power generators, transformers, battery storage and solar panels. This site would have dozens of IoT devices, each gathering hundreds of data fields at sub-second intervals. Constant delivery of this data to the Cloud would require reliable, high-bandwidth internet access with appropriate security. Each device would require internet access, and any disruption would mean that data for certain periods could be lost.

Cloud-based systems would process the data and run it through ML models to perform the required analysis, before transmitting instructions to devices or human operators based on the results. Again, any network disruption would result in lost actions. Depending on factors such as geography and local infrastructure, network instability could be somewhat remedied with satellite access if the site is remote. However, the cost of transmission at this scale could quickly become prohibitive, especially if high volumes of data are required. Lower volumes of data could be sent, though the accuracy of inference would likely suffer as a result.

Some devices may run ML models if they have the capability (CPU power, storage and RAM); however, each device would then be responsible for downloading and swapping new models when required.

In this scenario, introducing an Edge gateway would give the site a larger buffer for data when offline and allow it to replicate many Cloud functions, such as data processing and model management. This can be coupled with Edge MLOps, allowing the gateway to manage model accuracy measurements, swap models when they require changing and handle multiple versions across many models. The Edge gateway therefore reduces the need for a reliable internet connection and removes the need for smaller, less powerful devices in the network to access the internet directly, which has the side-effect of improving security.

With the Edge gateway, individual devices can run local ML models, transmit the inference alongside the raw data to the gateway and have it processed and stored alongside a live evaluation of the accuracy of the model. This can be used to constantly monitor the models—which can be replaced or retrained with the Edge gateway as necessary, given that the gateway MLOps system would store multiple versions.
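The live accuracy evaluation on the gateway could take many forms. As one hedged illustration, the sketch below compares each device inference with the value later observed and keeps a rolling mean absolute error (MAE); the choice of MAE, the window size and the threshold are all assumptions made for this example, not details of the proposed system.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Rolling mean absolute error over the last `window` predictions.

    `window` and `threshold` are illustrative assumptions, chosen per model.
    """

    def __init__(self, window: int, threshold: float):
        self.errors = deque(maxlen=window)  # oldest errors drop out automatically
        self.threshold = threshold

    def record(self, predicted: float, actual: float) -> None:
        """Store the error between a device's inference and the observed value."""
        self.errors.append(abs(predicted - actual))

    def mean_error(self) -> float:
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_attention(self) -> bool:
        """Flag the model for replacement or retraining once the window is full
        and the rolling error exceeds the threshold."""
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.mean_error() > self.threshold
```

Waiting for a full window before flagging avoids reacting to one or two noisy samples; when the monitor does flag a model, the gateway could fall back to a previously stored version while a retrained one is prepared.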

Overall, this would reduce the networking overhead required to run an Edge site with ML on each device, while extending analytics coverage, as the gateway can deploy small models to devices that may struggle with a direct internet connection or may not be capable of connecting to the internet by themselves.

The novelty of such a system lies in mirroring Cloud MLOps practices on the Edge, allowing a fully featured MLOps system on a local network with all the benefits that MLOps provides: reliability, accountability, continuous delivery and continuous improvement, especially when scale is a factor both worldwide and locally.

4. Definition of the Proposed System

A Cloud-to-Edge MLOps architecture is proposed for deploying, managing and running machine learning models on IoT devices at the Edge. It allows reliable, offline-capable deployment of ML models on network- and resource-constrained devices in a fog or distributed Edge architecture, focusing specifically on the IoT Gateway use-case.

4.1. System Components

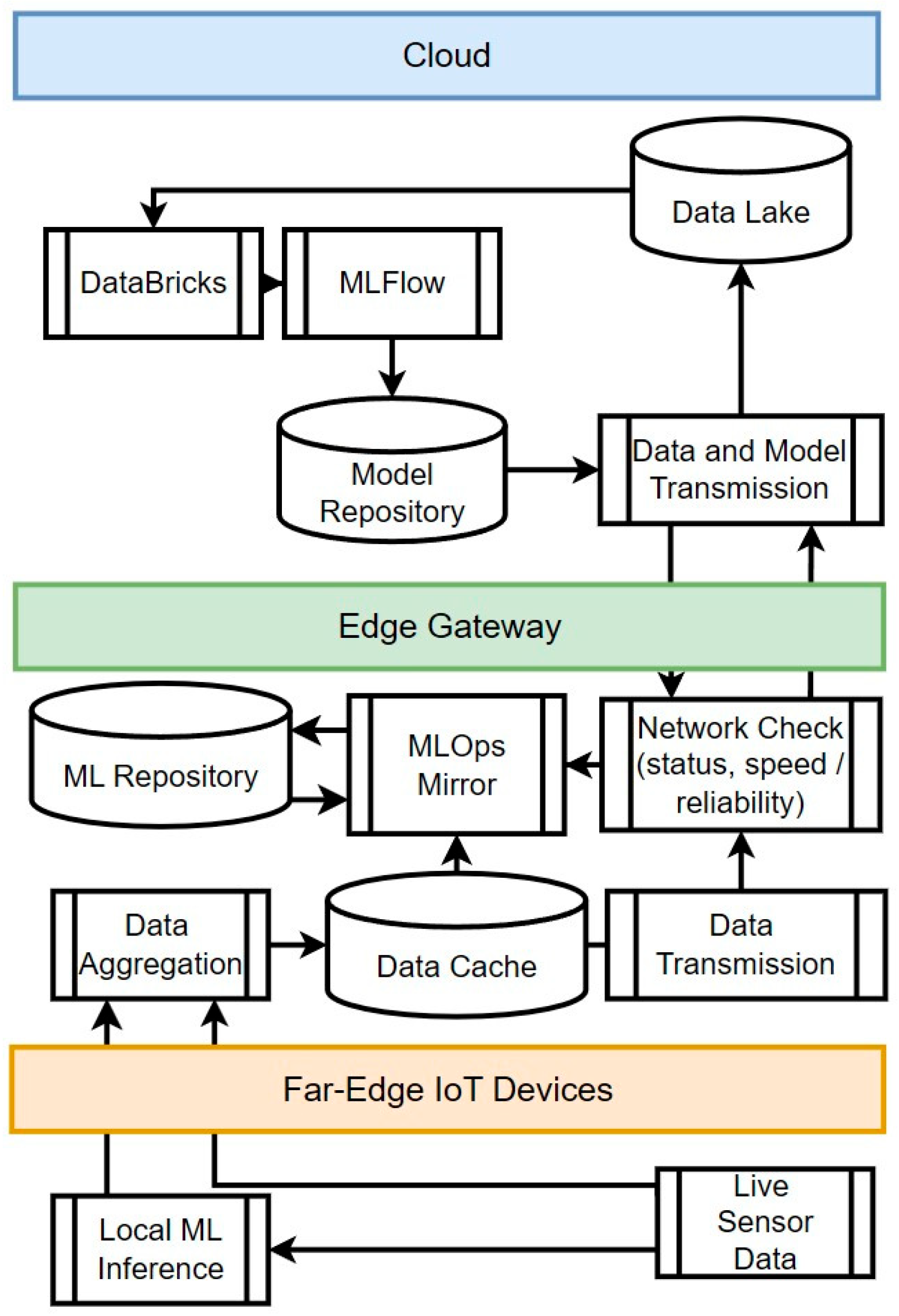

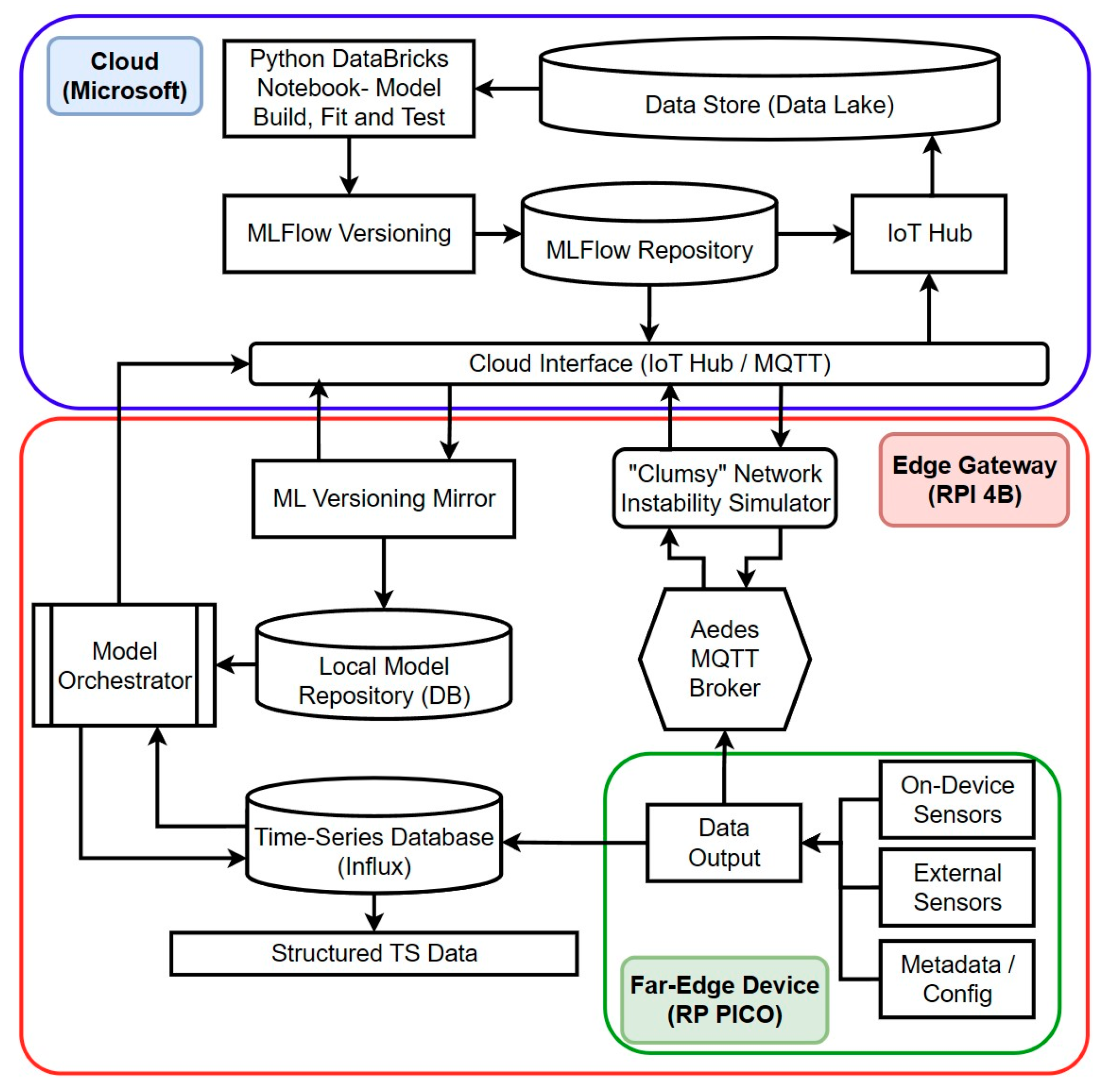

The system consists of three layers, whose major components are shown in Figure 2:

Cloud Layer;

Edge Gateway Layer;

Edge Device Layer.

The Cloud layer controls the distribution of ML models and is most closely linked to user input. Cloud access is required for larger volumes of data and complex analytics; however, it is assumed that Cloud access may be limited or non-existent under certain circumstances. This is accounted for in the architecture's delivery method, which prioritises QoS-based model delivery and offline-first functionality. The Edge gateway is a device that sits between far-Edge devices and the Cloud; it requires a database and an access interface compatible with the message protocol used, so that models may dynamically access previous data as necessary. Key to the system described is an Edge model “Mirror” that holds a copy of models as they would appear on the Cloud versioning service, allowing local systems to access them as they would on the Cloud. By using an Edge gateway, less powerful machines on the network can access ML models without needing to host their own version of the software or to connect to the Cloud. This allows low-power devices to receive model updates more frequently and reliably.

Additionally, an Edge gateway may process data extracted from all devices, including those without the power or capability to process real-time data, before it is sent to the Cloud or stored.

Models can be deployed on the data collection devices, or on the Gateway device, either to monitor group-level data or where devices cannot run ML models themselves due to resource constraints. The far-Edge IoT Device Layer interacts with machinery and sensors, allowing for data gathering and control of machines and the environment via existing interfaces (e.g., Modbus, Message Queuing Telemetry Transport (MQTT)) [16]. This layer may already exist in the system, with the Edge gateway being used as an interface to include ML in a “brownfield” IoT deployment.

4.2. System Communications

Lightweight message queuing protocols can be used on the IoT device to enable broker-managed communication between systems. Using these protocols also allows communication to be extended to local devices, either by secure relay or direct connection, so that data can be shared from one device to many on the same site. MQTT allows messages to be identified by topic. It also eases scaling up the number of data fields, as the multi-level topic structure allows messages to be handled dynamically and new fields to be recognised instantly. MQTT can be used for Cloud-to-Edge communication as well as inter-device communication [16]. Network instability can be partially countered by taking advantage of MQTT's built-in QoS levels, which use acknowledgements and retransmission to guarantee that a message is delivered at least once (QoS 1) or exactly once (QoS 2), even over temperamental connections. This helps ensure that model components arrive intact at the Edge. End-to-end encryption with token-based authentication is used to secure messages and connections, preventing model and data theft in transit.
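The topic-based routing described above relies on MQTT topic filters, where `+` matches exactly one topic level and `#` matches the current level and everything below it. The following is a simplified sketch of that matching rule, written for illustration; it does not implement the special handling of topics beginning with `$`, and the `site/device/field` topic layout in the usage example is a hypothetical convention, not one defined by the system.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified MQTT topic-filter matching.

    '+' matches exactly one level; '#' (last level only) matches the parent
    level and any number of levels below it.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == "#":
            return True  # matches this level and everything below it
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if sub != "+" and sub != topic_levels[i]:
            return False  # literal level mismatch
    return len(sub_levels) == len(topic_levels)


# Hypothetical <site>/<device>/<field> layout: one subscription picks up the
# temperature field of every device, including devices added later.
print(topic_matches("site1/+/temperature", "site1/generator1/temperature"))  # True
print(topic_matches("site1/+/temperature", "site1/gen1/bearing/temperature"))  # False
```

Because a wildcard subscription is resolved by the broker at publish time, a new device or field that follows the same topic convention is picked up without reconfiguring subscribers.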

4.3. Modular Code and Containers

Containerised services are of great benefit to Edge ML applications. Containerised software allows systems to run in parallel, while making it simple to add and remove software modules as necessary. Container orchestrators, such as Docker Swarm and Kubernetes, allow for automatic provisioning of container groups, as well as scaling based on demand. These orchestrators allow an Edge system to be configured once at setup, then managed and updated remotely with inline or zero-downtime model upgrades. This system uses prebuilt containers whose codebase allows an ML model to be combined with a container image and spun up quickly without rebuilding the image. The container uses configuration supplied with the model to identify the correct libraries, communication channels and run schedule. Model run schedules are defined by the model builder. Models can run when data is received (e.g., for a time-series prediction model) or at a set delay after the previous run (e.g., for classifiers requiring multiple data points). By defining a schedule, models can run out of sync with data arrival, allowing control over when compute resources are used and preventing storage or CPU from being over-utilised when they are required by higher-priority software. In this case, models are controlled by a custom orchestrator, although a prebuilt solution such as Kubernetes could also be used, either with Cron scheduling or with a more dynamic approach dependent on model and data requirements.
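The two schedule types could be evaluated by an orchestrator loop along the lines of the following sketch. The `Schedule` structure, its `mode` values (`"on_data"`, `"interval"`) and the `should_run` function are hypothetical names chosen for this example; the actual configuration schema of the custom orchestrator is not specified here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Schedule:
    """Hypothetical run-schedule entry supplied by the model builder.

    mode "on_data":  run whenever new input data has arrived.
    mode "interval": run once `interval_s` seconds have passed since the last run.
    """
    mode: str
    interval_s: float = 0.0


def should_run(schedule: Schedule, now: float,
               last_run: Optional[float], new_data: bool) -> bool:
    """Decide whether the orchestrator should trigger a model run now."""
    if schedule.mode == "on_data":
        return new_data
    if schedule.mode == "interval":
        # A model that has never run is due immediately.
        return last_run is None or now - last_run >= schedule.interval_s
    raise ValueError(f"unknown schedule mode: {schedule.mode}")
```

Keeping the decision in a pure function makes it easy to add further conditions later, for example deferring a due run while a higher-priority service is using the CPU.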

4.4. Model Versioning on the Cloud

Once a model is built, the user can choose to push it to the versioning system with defined tags that dictate the model's purpose and destination systems. The versioning system is deployed on the Cloud alongside model development systems. Model versioning software keeps track of models, configuration and results, allowing each model to be packaged as a transferable file with all the components required to run anywhere. Edge systems request a list of available model versions via a message channel, and new versions are sent over MQTT as a series of messages. Model files are split into smaller parts according to the on-disk size of the model, and these parts are sent in batches, with confirmation of receipt required before the deployment continues. This ensures reliability and prevents network overload in constrained systems.
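The batched, acknowledged transfer can be sketched in-process as follows. This is an illustration of the idea under stated assumptions, not the prototype's transport: `LossyLink` is a hypothetical stand-in for the MQTT channel that drops a random fraction of parts and acknowledges only those it received, and the chunk size, batch size and retry limit are arbitrary example values. A real link can also lose acknowledgements, which is why the receiver stores parts idempotently by index.

```python
import hashlib
import random


def split_into_chunks(data: bytes, chunk_size: int) -> list[bytes]:
    """Split a serialised model file into fixed-size parts (the last may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


class LossyLink:
    """Hypothetical simulated Cloud-to-Edge link that drops a fraction of parts."""

    def __init__(self, loss_rate: float, seed: int = 0):
        self.rng = random.Random(seed)
        self.loss_rate = loss_rate
        self.received: dict[int, bytes] = {}

    def send_batch(self, batch: dict[int, bytes]) -> set[int]:
        """Deliver a batch; return the indices the receiver acknowledges."""
        acked = set()
        for index, part in batch.items():
            if self.rng.random() >= self.loss_rate:
                self.received[index] = part  # re-sent duplicates simply overwrite
                acked.add(index)
        return acked

    def assemble(self) -> bytes:
        return b"".join(self.received[i] for i in sorted(self.received))


def send_in_batches(chunks: list[bytes], link: LossyLink,
                    batch_size: int = 4, max_rounds: int = 20) -> None:
    """Send parts batch by batch; a batch must be fully acknowledged before
    the next one starts, and unacknowledged parts are re-sent."""
    for start in range(0, len(chunks), batch_size):
        pending = set(range(start, min(start + batch_size, len(chunks))))
        for _ in range(max_rounds):
            pending -= link.send_batch({i: chunks[i] for i in pending})
            if not pending:
                break
        else:
            raise TimeoutError(f"parts {sorted(pending)} not acknowledged")


def checksum(data: bytes) -> str:
    """SHA-256 digest used to confirm the reassembled model file is intact."""
    return hashlib.sha256(data).hexdigest()
```

Comparing `checksum(link.assemble())` with a checksum sent alongside the model confirms that the reassembled file is intact before it is handed to the versioning system.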

4.5. Model Versioning on the Edge

The Edge model versioning system is location-flexible and only needs to be as close to the IoT devices as is feasible in deployment. It need not be local to the devices and may instead be deployed in the nearest data centre, or even on a custom-hosted server that is nearer to the devices than the Cloud. The Edge model “Mirror” receives models generically from the Cloud and makes them available to local devices according to device needs and operator parameters. It also acts as the endpoint for model issues, allowing it to request new models or retraining from Cloud systems. The system can run any model stored in the defined format.
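A minimal in-memory sketch of the Mirror's bookkeeping is shown below, assuming that versions are semantic-version tuples and that destination tags such as `"gateway"` or `"device"` select which models a target may receive. `ModelVersion`, `EdgeMirror` and the tag names are hypothetical, introduced only for illustration; a real mirror would persist this index alongside the file store.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical record for one mirrored model version."""
    name: str
    version: tuple[int, int, int]  # semantic version, e.g. (1, 2, 0)
    tags: frozenset[str]           # destination tags, e.g. {"gateway", "device"}
    artifact_path: str


class EdgeMirror:
    """In-memory index of model versions received from the Cloud or retrained locally."""

    def __init__(self):
        self._versions: dict[str, list[ModelVersion]] = {}

    def receive(self, mv: ModelVersion) -> None:
        """Store a version; duplicate deliveries of the same version are ignored."""
        versions = self._versions.setdefault(mv.name, [])
        if any(v.version == mv.version for v in versions):
            return
        versions.append(mv)
        versions.sort(key=lambda v: v.version)  # keep oldest-to-newest order

    def latest_for(self, name: str, tag: str) -> Optional[ModelVersion]:
        """Newest version of `name` that is tagged for the given destination."""
        matching = [v for v in self._versions.get(name, []) if tag in v.tags]
        return matching[-1] if matching else None

    def previous(self, name: str, version: tuple[int, int, int]) -> Optional[ModelVersion]:
        """Newest version older than `version`, e.g. to roll back a failing model."""
        older = [v for v in self._versions.get(name, []) if v.version < version]
        return older[-1] if older else None
```

Ignoring duplicate deliveries matters here because an at-least-once transport can deliver the same version more than once.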

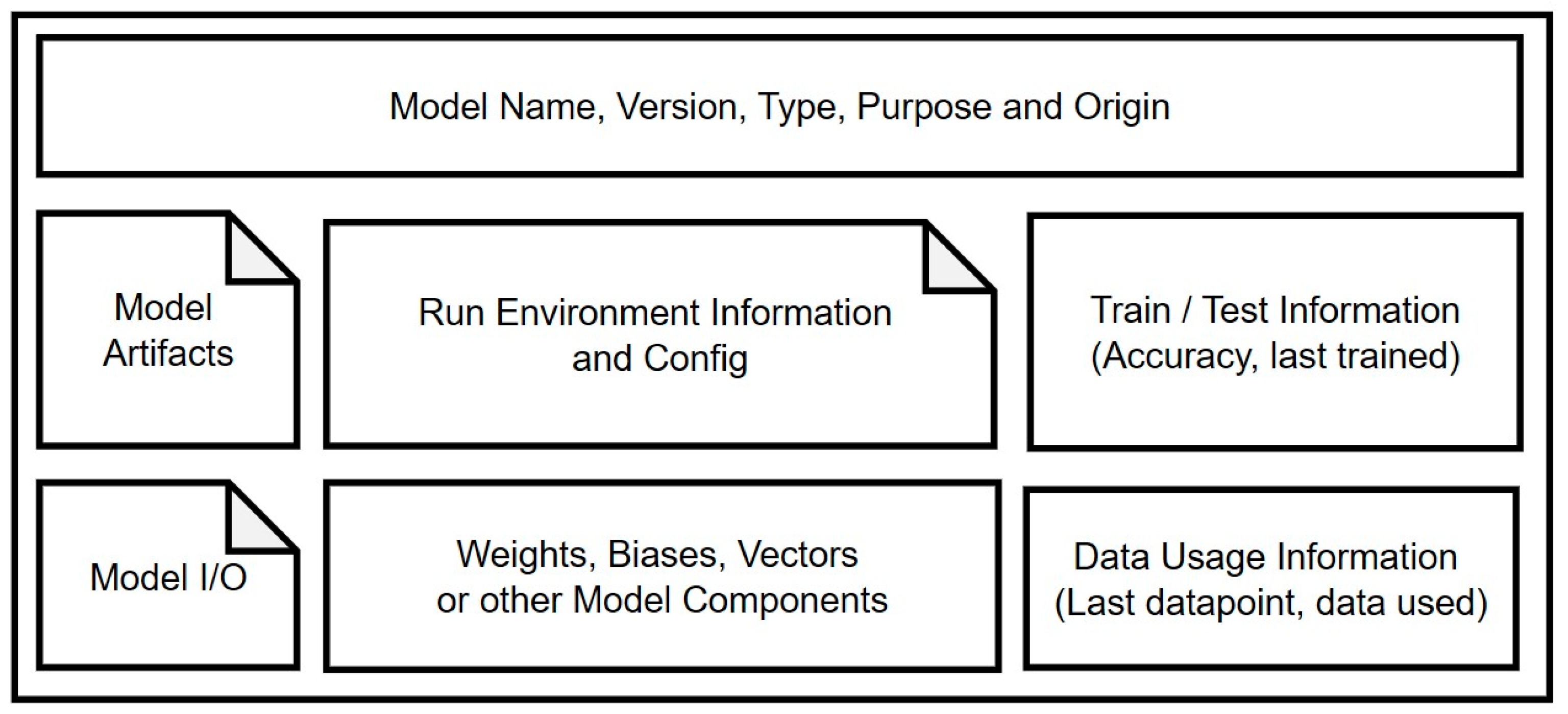

Models are stored as shown in Figure 3, as file artifacts, with configuration data and any required information, such as the I/O format, included in the file store. A generic configuration and a standard model format are used. This system can make models in the file store available to local programs, or package them for sending to devices in the network using the same send strategy as described for Cloud to Edge. If networking is entirely unavailable, flexibility in the MLOps lifecycle allows models to be updated by a user bringing a new model on a USB drive, or via another system on the same network that has previously received the models.
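One possible on-disk layout for such a file store is sketched below: each version gets its own directory containing the model artifact and a JSON manifest holding its configuration plus a SHA-256 checksum. The directory layout, the file names `model.bin` and `manifest.json`, and the manifest keys are assumptions for this example, not the standard format used by the system.

```python
import hashlib
import json
from pathlib import Path


def store_model(root: Path, name: str, version: str,
                artifact: bytes, config: dict) -> Path:
    """Write the artifact and a manifest (config + checksum) to <root>/<name>/<version>/."""
    model_dir = root / name / version
    model_dir.mkdir(parents=True, exist_ok=True)
    (model_dir / "model.bin").write_bytes(artifact)
    manifest = dict(config, sha256=hashlib.sha256(artifact).hexdigest())
    (model_dir / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return model_dir


def load_model(root: Path, name: str, version: str) -> tuple[bytes, dict]:
    """Read a stored model back, refusing it if the artifact does not match its checksum."""
    model_dir = root / name / version
    manifest = json.loads((model_dir / "manifest.json").read_text())
    artifact = (model_dir / "model.bin").read_bytes()
    if hashlib.sha256(artifact).hexdigest() != manifest["sha256"]:
        raise ValueError(f"{name} {version}: artifact checksum mismatch")
    return artifact, manifest
```

Because each version lives in a self-contained directory, the same directory could be copied to a USB drive or to another gateway and loaded there unchanged, with the checksum guarding against a corrupted copy.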

4.6. Machine Learning at the Edge

An ML model requires context about how it is to be run. At build time, it should therefore be supplied with library versions, a schedule and an I/O format. With these supplied, the Edge systems can automatically assign and run ML models on the appropriate system. When a model run is compiled, the orchestrator combines the required components shown in Figure 3 and provides the schedule. Models are loaded from a shared volume and cached in the run container.
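Inside a run container, caching and the declared I/O format might be handled as in the following sketch. `ModelCache`, `check_inputs` and the type names (`"float"`, `"int"`, `"str"`) are hypothetical; the `loader` callable stands in for whatever library-specific deserialiser a given model needs, since the system is agnostic to the model library.

```python
from pathlib import Path
from typing import Any, Callable


class ModelCache:
    """Caches deserialised models in a run container, so the shared volume
    is read only once per model path."""

    def __init__(self, loader: Callable[[Path], Any]):
        self._loader = loader  # library-specific deserialiser (assumption)
        self._cache: dict[Path, Any] = {}

    def get(self, path: Path) -> Any:
        if path not in self._cache:
            self._cache[path] = self._loader(path)
        return self._cache[path]


# Hypothetical mapping from declared I/O type names to accepted Python types.
ACCEPTED_TYPES = {"float": (int, float), "int": (int,), "str": (str,)}


def check_inputs(io_format: dict[str, str], record: dict[str, Any]) -> None:
    """Raise if a record lacks a declared input field or has the wrong type."""
    for field_name, type_name in io_format.items():
        if field_name not in record:
            raise KeyError(f"missing input field: {field_name}")
        if not isinstance(record[field_name], ACCEPTED_TYPES[type_name]):
            raise TypeError(f"{field_name} should be {type_name}")
```

Checking each record against the declared I/O format before inference turns a renamed or missing data field into an explicit error that can be reported to operators, rather than a silently wrong prediction.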

Models can run on the far-Edge device, or on the Edge gateway as required. Models cannot be trained on the far-Edge device, but can be, and are, trained on the Edge gateway when required. Edge training will be slower than on the Cloud, with access to lower volumes of data, a smaller library of possible models and limited compute resources with which to train.

A model built for a Cloud system may not always be compatible with an Edge device: the two may use different compute architectures, or the model may not account for the difference in available compute power. This is not always a problem, as the model is agnostic to architecture as long as the library is compatible. However, if a model is incompatible, input from the model builder is required to fix it; some models need a different library and, in some cases, a rebuild on compatible hardware. In most Cloud environments, an ARM/RISC build machine can be incorporated into the build chain to ensure compatibility.

4.7. Updates and Model Operations

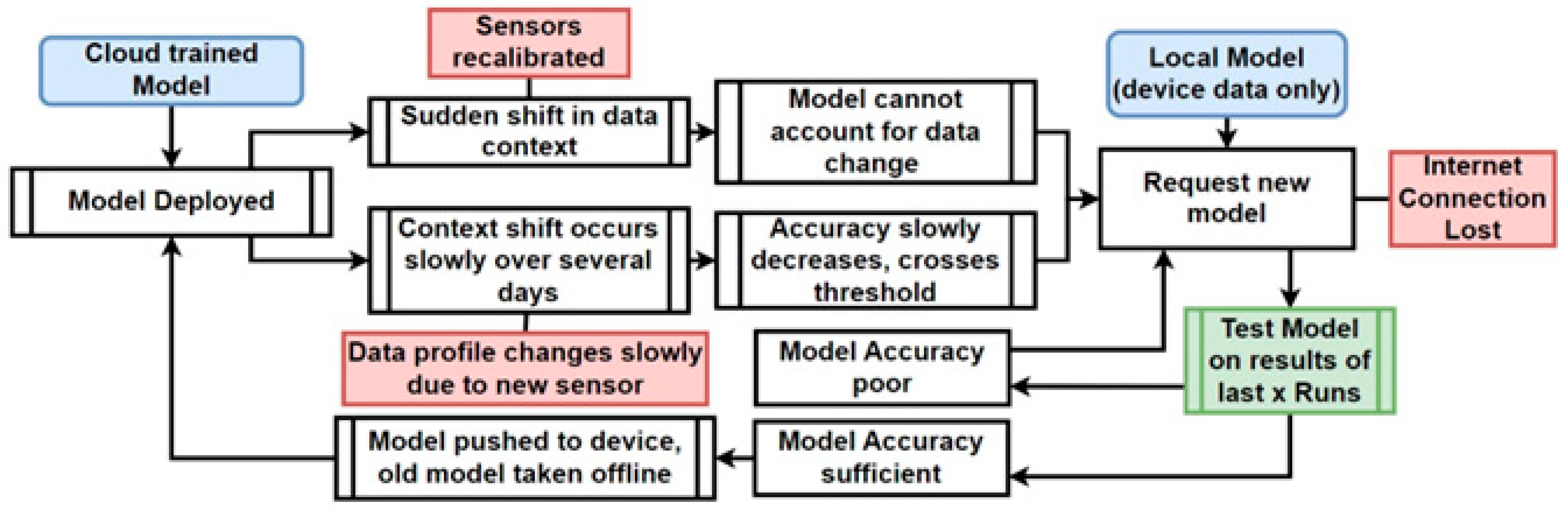

If deployed at scale, each separate model could receive retraining requests multiple times a day from the various devices running a version of it. This could occur when a model fails across many nodes at once, or when an unstable or poorly built model produces vastly different results on each device. In these cases, it would be logistically preferable not to retrain the model each time a request arrives. While outlier cases could have a new model delivered as necessary, for failures affecting many in-production models it may be best to compile the data and metadata from a period before the failures and, if possible, feed this back into a new model. Alternatively, the issue can be fed back to the model’s owner to fix the root cause and redeploy the model. This requires human intervention and would likely only be necessary when a model has been built incorrectly, or when the system encounters an issue it cannot handle, such as data fields changing name or a significant change to the structure of the data or the devices gathering it. The method for retraining the model is outlined in Figure 4.
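One way to avoid retraining on every request is to collect requests per model over a time window and decide once per window. The sketch below is a hypothetical policy, not the paper's: repeated requests from the same device collapse into one, a handful of devices are treated as outliers, a moderate share triggers a single retrain on pooled data, and a majority of the fleet is escalated to the model's owner. The thresholds are placeholders that would need tuning.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RetrainDecision:
    model: str
    action: str          # "per_device", "retrain_once", or "escalate_to_owner"
    devices: list


class RetrainCoordinator:
    """Collect retrain requests per model and decide once per time window."""

    def __init__(self, fleet_size: dict, outlier_max: int = 2,
                 escalate_fraction: float = 0.5):
        self.fleet_size = fleet_size              # model -> number of devices running it
        self.outlier_max = outlier_max            # assumed outlier threshold
        self.escalate_fraction = escalate_fraction
        self.pending = defaultdict(set)

    def request(self, model: str, device: str) -> None:
        # Repeated requests from the same device collapse into one entry.
        self.pending[model].add(device)

    def flush(self) -> list:
        """Called at the end of each window; returns one decision per model."""
        decisions = []
        for model, devices in self.pending.items():
            share = len(devices) / self.fleet_size[model]
            if len(devices) <= self.outlier_max:
                action = "per_device"          # outliers: deliver new models individually
            elif share >= self.escalate_fraction:
                action = "escalate_to_owner"   # fleet-wide failure: likely a root cause to fix
            else:
                action = "retrain_once"        # one retrain on pooled data and metadata
            decisions.append(RetrainDecision(model, action, sorted(devices)))
        self.pending.clear()
        return decisions
```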

4.8. Data Storage for Edge ML

How data is stored and accessed is vital to the operation of models at the Edge. Data should be accessible to the model in a known format, with a clear method of accessing it. This depends on the exact configuration of the Edge system, including the type of database used, how data moves through the system, and the kind of internal networking in use. In this case, an interface to a time-series database that is accessible via dynamic MQTT channels is used. This allows new input and output data fields to be added dynamically on the Edge when new data is encountered, when a model introduces new output fields, or when its existing ones change. The flow of data is shown in Figure 5; it is designed to bring data to models promptly, with dynamic assignment of data fields and quantity.
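The dynamic addition of fields can be pictured with a small in-memory stand-in for the time-series interface: a field is registered the first time a value arrives, and any model subscribed to that field is notified of each new sample. This is a hypothetical sketch; the paper's system uses a time-series database behind MQTT, whose API is not reproduced here.

```python
from __future__ import annotations

from collections import defaultdict
from collections.abc import Callable

Callback = Callable[[str, float, float], None]  # (field name, timestamp, value)


class FieldRegistry:
    """In-memory stand-in for a time-series store with dynamically added fields."""

    def __init__(self) -> None:
        self.series: dict[str, list[tuple[float, float]]] = {}
        self.subscribers: dict[str, list[Callback]] = defaultdict(list)

    def write(self, name: str, timestamp: float, value: float) -> bool:
        """Store a sample and notify subscribers; return True if the field is new."""
        is_new = name not in self.series
        self.series.setdefault(name, []).append((timestamp, value))
        for callback in self.subscribers[name]:
            callback(name, timestamp, value)
        return is_new

    def subscribe(self, name: str, callback: Callback) -> None:
        """Register a model's input handler for a field, even before data exists."""
        self.subscribers[name].append(callback)

    def fields(self) -> list[str]:
        return sorted(self.series)
```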

4.9. Edge Model Retraining

Retraining of models can be performed at the Edge when necessary, using the recent data produced by the connected devices. This overlaps with the concept of federated learning and extends it with the ability to deploy to devices that cannot participate in the learning process, whether through lack of power or the demands of their primary function, such as microcontroller units or embedded devices. Using the compute power available at the Edge to train a model makes delivering it to a far-Edge device more reliable and expedient than training it in the Cloud. An ML system may require a model update for various reasons; drift, context change, and requirements change are all possible. The process for this is described in Figure 4. An Edge gateway device will only have immediate and reliable access to data from its attached devices, whereas the Cloud platform will have data from all devices. The Cloud platform therefore has scope to build more advanced models that can diagnose issues at scale, or issues hidden deeper in the data. The devices in an Edge gateway setup will be low-power devices, such as MCUs and LP IoT devices, which may be unable to access the internet on their own or may be locked down for security reasons. These devices will likely be unable to run very complex models, so the models they run will be small enough that training them on the Edge gateway is likely to be feasible. However, when a model requires significant updates or must be replaced entirely, the Edge gateway will need to communicate with the Cloud, which may not always be possible in an unreliable network environment. Additionally, staging and storing multiple versions of code and models at the Edge ensures that every model has at least one fallback version if the latest version fails. Striking a balance between when to request a model from the Cloud and when to use a local model is a key function of the system, and this capability allows functionality to continue even when there are significant context changes.
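The fallback behaviour can be sketched as a store that keeps the last few versions of each model and always serves the newest version not marked as failed. The retention count, the `source` tag, and the health flag are illustrative assumptions rather than details taken from the prototype.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    source: str           # "cloud" or "edge": where this version was trained
    healthy: bool = True  # set to False when the version fails in production


@dataclass
class VersionStore:
    """Hypothetical store keeping the last `keep` versions of each model."""
    keep: int = 3
    versions: dict[str, list[ModelVersion]] = field(default_factory=dict)

    def stage(self, model: str, mv: ModelVersion) -> None:
        history = self.versions.setdefault(model, [])
        history.append(mv)
        history.sort(key=lambda v: v.version)
        if len(history) > self.keep:
            del history[: len(history) - self.keep]  # discard the oldest versions

    def mark_failed(self, model: str, version: int) -> None:
        for mv in self.versions.get(model, []):
            if mv.version == version:
                mv.healthy = False

    def active(self, model: str) -> ModelVersion | None:
        """Newest healthy version: the latest model, or its fallback if it failed."""
        healthy = [mv for mv in self.versions.get(model, []) if mv.healthy]
        return healthy[-1] if healthy else None
```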

Model training is performed in a Docker container on the gateway, using a script that considers the model configuration and the libraries and containers available on the Edge device in order to build a suitable model.
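The library-aware part of such a training script might look like the following: for a requested model family, pick the first builder whose library is importable inside the container, using the standard `importlib.util.find_spec` check. The family names and builder identifiers in `BUILDERS` are hypothetical placeholders.

```python
from __future__ import annotations

import importlib.util
from collections.abc import Callable

# Hypothetical mapping from a model family in the configuration to
# (library module, builder name) pairs, in order of preference.
BUILDERS = {
    "lstm_forecaster": [("tensorflow", "keras_lstm"), ("torch", "torch_lstm")],
    "linear_forecaster": [("sklearn", "sklearn_linear"), ("numpy", "numpy_lstsq")],
}


def library_available(module: str) -> bool:
    """True if the top-level module can be imported in this container."""
    return importlib.util.find_spec(module) is not None


def choose_builder(model_family: str,
                   available: Callable[[str], bool] = library_available) -> str | None:
    """Return the first builder whose library is installed, or None if none is."""
    for module, builder in BUILDERS.get(model_family, []):
        if available(module):
            return builder
    return None
```

Passing `available` as a parameter keeps the selection logic testable without installing heavy ML libraries.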

5. Prototype Implementation

A prototype, using a mix of open-source and commercially available components, was built as detailed in Figure 2. MLflow was used in the Cloud, with a custom deployment system and Edge versioning system built in Python 3.12 to provide Edge functionality. Databricks on Azure Cloud was used to build models and version them in MLflow. Node-RED was used externally as an MQTT broker and an orchestrator, and to provide any pipelines and system connections. All functionality was contained in Docker containers and managed using a Python orchestrator, as shown in Figure 6.
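A container orchestrator of this kind commonly works by reconciliation: compare the desired set of services with what is running, stop anything missing or outdated, and start what is absent. The sketch below shows that loop against a minimal, hypothetical `ContainerRuntime` interface; a real implementation might wrap the Docker SDK for Python, but no SDK calls are assumed here.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ServiceSpec:
    name: str    # container name, e.g. a model run container
    image: str   # image tag the container should be running


class ContainerRuntime(Protocol):
    """Hypothetical minimal runtime interface."""
    def running(self) -> dict: ...                 # container name -> image
    def start(self, spec: ServiceSpec) -> None: ...
    def stop(self, name: str) -> None: ...


def reconcile(desired: list, runtime: ContainerRuntime) -> list:
    """Bring running containers in line with the desired set; return actions taken."""
    actions = []
    wanted = {s.name: s for s in desired}
    for name, image in runtime.running().items():
        # Stop containers that are no longer wanted or run an outdated image.
        if name not in wanted or wanted[name].image != image:
            runtime.stop(name)
            actions.append(f"stop {name}")
    current = runtime.running()
    for spec in desired:
        if spec.name not in current:
            runtime.start(spec)
            actions.append(f"start {spec.name}")
    return actions
```

Running `reconcile` periodically makes the orchestrator self-healing: a crashed container is simply restarted on the next pass.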

Dynamic MQTT channels are used to manage the flow of data on the Edge: the flow of data shown in Figure 5 is mostly handled by MQTT, with the exception of internal container networking. Data is routed to a channel defined by Device/Source/Name (e.g., Engine/Sensor/Temperature), with a unique identifier applied to each message. The database is also accessible through an MQTT interface.
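The Device/Source/Name routing convention and per-message identifier can be made concrete with a few helpers. The sketch below builds and parses three-level topics, rejecting levels that contain the MQTT level separator `/` or the wildcards `+` and `#`, and wraps each value in a JSON payload with a UUID4 identifier. The payload layout is an assumption; the resulting topic and payload would be handed to whichever MQTT client library is in use.

```python
import json
import time
import uuid


def make_topic(device: str, source: str, name: str) -> str:
    """Build a Device/Source/Name topic, rejecting separators and wildcards."""
    for part in (device, source, name):
        if not part or any(ch in part for ch in "/+#"):
            raise ValueError(f"invalid topic level: {part!r}")
    return f"{device}/{source}/{name}"


def parse_topic(topic: str) -> tuple:
    """Split a topic into (device, source, name); ValueError if not three levels."""
    device, source, name = topic.split("/")
    return device, source, name


def make_payload(value: float) -> str:
    """Assumed JSON payload carrying a unique identifier for each message."""
    return json.dumps({"id": str(uuid.uuid4()), "ts": time.time(), "value": value})
```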

Models are sent out from the Cloud versioning system, rebuilt on the Edge, and stored as file artifacts, which can then be sent to run containers to be loaded. Model configuration is stored as JSON strings, allowing easy changes to configuration and running parameters. Run containers are built generically and contain the libraries needed to run many kinds of models, so many models can be deployed to a single managed container.
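Because configuration is held as JSON strings, changing running parameters amounts to parsing, overriding, and re-serialising. The sketch below assumes a hypothetical schema with required `name` and `artifact` keys and a small set of running parameters with defaults; unknown overrides are rejected so that typos do not silently create new settings.

```python
from __future__ import annotations

import json

# Hypothetical running parameters with defaults; the paper does not specify the schema.
DEFAULTS = {"schedule_seconds": 60, "window": 24, "batch_size": 32}
REQUIRED = ("name", "artifact")


def load_config(raw: str, overrides: dict | None = None) -> dict:
    """Parse a model's JSON configuration string and apply running-parameter overrides."""
    cfg = {**DEFAULTS, **json.loads(raw)}
    missing = [key for key in REQUIRED if key not in cfg]
    if missing:
        raise ValueError(f"missing required keys: {missing}")
    for key, value in (overrides or {}).items():
        if key not in DEFAULTS:
            raise KeyError(f"{key!r} is not a running parameter")
        cfg[key] = value
    return cfg


def dump_config(cfg: dict) -> str:
    """Serialise back to a JSON string for storage alongside the model artifact."""
    return json.dumps(cfg, sort_keys=True)
```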

Devices on the Edge can request managed models, and a model can be upgraded inline, with Docker or Kubernetes used to swap I/O from the old model to the new one without taking the system fully offline. If no appropriate model is available and internet access is, a message can be sent requesting transfer of a new model or updated parameters.
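Within a single run container, the inline swap can be reduced to replacing a model reference under a lock, so that callers always see either the old or the new model and the slot never goes offline. The sketch below is a hypothetical illustration of that idea (the paper performs the swap at the container level); the `on_missing` hook stands in for sending a request for a new model when none is installed.

```python
from __future__ import annotations

import threading
from collections.abc import Callable

Model = Callable[[list], float]  # hypothetical: a loaded model as a callable


class ModelSlot:
    """Routes inputs to whichever model is currently installed."""

    def __init__(self, model: Model | None = None,
                 on_missing: Callable[[], None] | None = None):
        self._model = model
        self._lock = threading.Lock()
        self._on_missing = on_missing  # e.g. publish a request for a new model

    def swap(self, new_model: Model) -> Model | None:
        """Install a new model atomically and return the old one for cleanup."""
        with self._lock:
            old, self._model = self._model, new_model
        return old

    def predict(self, features: list) -> float | None:
        with self._lock:
            model = self._model
        if model is None:
            if self._on_missing is not None:
                self._on_missing()
            return None
        return model(features)
```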

The device in use is a Raspberry Pi 4B, a lower-power IoT device with limited RAM and CPU. Many IoT gateway devices and Edge deployments use higher-powered hardware, such as x86-64 mini PCs or mini servers. However, the Raspberry Pi is a very common Edge IoT device and represents a specification shared by a wide range of devices. Other devices with more compute and newer networking cards may give better results, but since this depends on the actual deployment, using a common device should provide a reasonable middle-of-the-range scenario to work from.

The ML model in use is a time-series forecaster based on a Keras/TensorFlow LSTM with consistent parameters, trained on datasets of varying sample size. The model requires frequent retraining because the context of the data changes constantly. For testing and comparison, a single model specification is used with four different dataset sizes. The data is a subset of the MORED Morocco energy dataset [17], with median filtering and a weighted rolling average applied to remove outliers. The time between model retraining and deployment to the test device was measured for both Cloud deployment and Edge retraining. This also considers the time required to send data to the Cloud for training and the time taken to extract data (assumed in this case to be approximately similar on the Edge and in the Cloud).
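The two preprocessing steps can be sketched in plain Python: a centred median filter to suppress isolated spikes, followed by a trailing rolling average with linearly increasing weights. The window sizes and the linear weighting scheme are assumptions; the paper does not state which were used.

```python
from statistics import median


def median_filter(xs: list, window: int = 5) -> list:
    """Replace each point by the median of a centred window (shrunk at the edges)."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    return [median(xs[max(0, i - half): i + half + 1]) for i in range(len(xs))]


def weighted_rolling_average(xs: list, window: int = 3) -> list:
    """Trailing average with linearly increasing weights (newest sample weighted most)."""
    out = []
    for i in range(len(xs)):
        chunk = xs[max(0, i - window + 1): i + 1]
        weights = range(1, len(chunk) + 1)
        out.append(sum(w * x for w, x in zip(weights, chunk)) / sum(weights))
    return out
```

For example, `median_filter([1, 1, 100, 1, 1], 3)` removes the spike entirely, and `weighted_rolling_average([3, 6, 9], 3)` returns `[3.0, 5.0, 7.0]`.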

Network instability is simulated by random packet loss, at a percentage chosen for the scenario being simulated. The mid-range scenario uses 50 percent random packet loss to resemble a slow or unreliable connection. The extreme scenario uses 90 percent packet loss to simulate a connection in a remote, under-serviced far-Edge environment, where time-outs and connection loss are common.
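Under the simplest model of random loss, where each packet is lost independently with probability $p$ and resent until it arrives, the number of sends per packet is geometric with mean $1/(1-p)$: about 2 sends at 50 percent loss and 10 at 90 percent. The sketch below computes that expectation and checks it with a seeded simulation. It deliberately ignores retransmission time-outs and backoff, which in real TCP make transfers degrade faster than this model suggests.

```python
import random


def expected_attempts(loss: float) -> float:
    """Mean sends per packet when each send is lost independently with probability `loss`."""
    if not 0 <= loss < 1:
        raise ValueError("loss must be in [0, 1)")
    return 1 / (1 - loss)  # mean of a geometric distribution


def simulate_transfer(n_packets: int, loss: float, rng: random.Random) -> int:
    """Count total sends needed to deliver n_packets with blind retransmission."""
    sends = 0
    for _ in range(n_packets):
        while True:
            sends += 1
            if rng.random() >= loss:  # this send survived
                break
    return sends
```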

When internet access is unavailable, the Edge gateway can be relied upon to train a model with up-to-date data. This can facilitate continuity of systems even under extended loss of network connectivity.

Other factors not considered here that will affect the transmission of a model are network downtime, interference, and latency. These factors are unpredictable, and each can only increase the time taken to transmit data and models.

6. Evaluation

A controlled transmission of the models with no network constraint was compared with two network-constrained scenarios and a local deployment at the Edge. Table 1 compares the time from a retraining request being received to the model being deployed. A single model trained with different train/test sample sizes is used, which allows the effect of the simulated network instability to be observed. This setup is intended to resemble how a production forecasting model might operate in an Edge scenario when data from the Cloud is unavailable: the same model parameters, retraining process, and build container are reused with some newer data.

The simulation also includes an extreme network-loss scenario at 90 percent, representing the most constrained networks in low-infrastructure areas; owing to the random nature of the packet loss, this helps simulate dropped and slow connections. It did not include time-outs or failed connections, which are common when the network is this unstable. Nor did it include latency, which varies between networks and cannot easily be simulated randomly in a way that is fair to the results, since it would add a flat increase in sending time regardless of model size.

It can be observed in Table 1, with time results highlighted in bold, and in Figure 7 that in a highly unstable networking environment it is faster, on average, to train a model on the Edge than to await a larger Cloud model; however, this leads to lower accuracy over time on our test model and requires repeated retraining. On an unstable network, as in the mid scenario, Cloud delivery takes a similar time to Edge training, with a slight timing advantage for the Cloud model. This advantage would likely be negated by network time-outs and disconnections, although these are not simulated. It therefore makes sense to retrain on the Edge when there is little connectivity. However, the benefits of a larger Cloud model may outweigh the increased time to receive it.

While the results shown here are for relatively small models with short train-test times and a low number of samples, the time to train, test and transmit will only scale upwards with the increase in model and data sizes. The larger scale of some of the most modern ML models, especially generative AI models which are now commonly in use in an increasingly large subset of utilities, necessitates striking a balance between deployment time, accuracy and usability.

The time difference between Cloud and Edge deployment will vary with the stability of the network and the size of the model. A large model is not guaranteed to arrive in full over an unstable or low-bandwidth network. For this reason, even in a scenario such as the one above, where the model takes twice as long to train on the Edge as in the Cloud, it may make sense to use the Edge model more often if its accuracy is equivalent. This will of course vary between models and data. Alternatively, an Edge model may be used only as a fallback in case of complete Cloud isolation, where an output is necessary but the existing model can no longer make an accurate prediction. This evaluation only covered the transmission of the ML model to the Edge, however, and does not account for sending data to the Cloud to train the model; missing data can significantly reduce accuracy, and the upload increases the round-trip time for training and sending a model. It can safely be assumed that, under the same network constraints, receiving data in the Cloud would be significantly harder, further compounding the issues described. In brief, under network constraints, coupled with other issues that may arise, it can be more time-efficient and reliable to train a model on the Edge.
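The trade-off described here can be expressed as a simple decision rule: estimate the Cloud path as training time plus transfer time inflated by $1/(1-p)$ for packet loss $p$ (optionally including the data upload that the evaluation did not measure), and prefer Edge retraining when it is faster and its accuracy is within a tolerance of the Cloud model. The rule, the tolerance, and the example figures in the usage note are hypothetical, not results from Table 1.

```python
def cloud_delivery_seconds(train_s: float, model_bytes: int, bandwidth_bps: float,
                           loss: float, data_bytes: int = 0) -> float:
    """Cloud path: optional data upload + training + model download.

    Transfers are inflated by 1 / (1 - loss), the mean number of sends per packet
    under independent random loss; time-outs and latency are ignored.
    """
    transfer_s = (data_bytes + model_bytes) * 8 / bandwidth_bps
    return train_s + transfer_s / (1 - loss)


def prefer_edge(edge_train_s: float, cloud_train_s: float, model_bytes: int,
                bandwidth_bps: float, loss: float, edge_accuracy: float,
                cloud_accuracy: float, max_accuracy_drop: float = 0.02,
                data_bytes: int = 0) -> bool:
    """Prefer Edge retraining if it is faster and loses at most max_accuracy_drop."""
    cloud_s = cloud_delivery_seconds(cloud_train_s, model_bytes, bandwidth_bps,
                                     loss, data_bytes)
    return edge_train_s < cloud_s and cloud_accuracy - edge_accuracy <= max_accuracy_drop
```

With illustrative numbers (Edge training 60 s, Cloud training 30 s, a 1 MB model over 1 Mbit/s), the Cloud path takes 38 s with no loss but about 110 s at 90 percent loss, so the rule switches to the Edge model only in the constrained case.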

Limitations

The limitations of this system are apparent in a few areas. First, there is no automatic conversion of models for the Edge, which could be realised with pruning or quantisation steps that are not addressed in this system. Manual intervention is therefore required to reduce model size while maintaining accuracy, or to build a model for a device with special requirements, such as a microcontroller with extremely limited storage capacity. A generic size-reduction system for the architecture described could allow higher quantities of data to be used in producing Edge-capable models. It remains an open question whether this would be worthwhile, as size-reduction steps may also reduce accuracy.
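To make the size/accuracy tension concrete, the sketch below applies symmetric per-tensor int8 quantisation to a list of weights: each float is mapped to an integer in [-127, 127] via a single scale factor, cutting storage from 4 bytes (float32) to 1 byte per weight, at the cost of a rounding error of up to half the scale. This is a generic illustration of one size-reduction technique, not a step in the system described.

```python
from array import array


def quantise_int8(weights: list) -> tuple:
    """Symmetric per-tensor int8 quantisation: w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q: list, scale: float) -> list:
    return [scale * v for v in q]


def storage_bytes(weights: list, q: list) -> tuple:
    """Bytes needed as float32 versus int8 ('f' and 'b' array type codes)."""
    return len(array("f", weights).tobytes()), len(array("b", q).tobytes())
```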

There is also the trade-off between model size and accuracy on one side and the speed and reliability of transmission on the other. This trade-off is not modelled and may not be linear, as it will vary with the model and data. There is potential to model and explore the acceptable boundaries of model size for the Edge, and how size affects accuracy relative to an “ideal” version of the model trained and deployed with no limitations.

A third, and major, limitation is the lack of a clear strategy for managing dynamic individual versions of models at scale. If a model is retrained for every individual device, creating thousands of offshoots of a single model, it may become impossible to manage them all when major changes are required, such as moving to a new model type. While this system addresses the training and deployment of these individual models, it is an open question whether devices on constrained networks would be updated in a timely manner. Human intervention may also be required to resolve version mismatches, especially if an Edge network has been offline for a significant period and has retrained its own versions of models. This raises the question of which model is the most current or best fit: could an Edge model be better than a new one, and should this cause the Edge Mirror to reject the new model?

The issue of disparate and potentially orphaned model versions closely resembles the branching model used in CI/CD for code, which commonly requires human intervention to resolve merge conflicts, orphaned code, and mismatched versions. An autonomous solution to these problems for ML models would greatly reduce the technical labour required of human operators.
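Borrowing from version control, a first step towards automating this would be to classify a Cloud/Edge version pair by walking parent links to their common ancestor (the "merge base"): if one version descends from the other, the newer one can be adopted directly, and only genuinely diverged versions need a merge policy or a human. The sketch below assumes each model version records its parent version, which is a hypothetical metadata field.

```python
from __future__ import annotations


def ancestors(version: str | None, parent: dict) -> list:
    """Walk parent links from a version back to the root (inclusive)."""
    chain = []
    while version is not None:
        chain.append(version)
        version = parent[version]
    return chain


def merge_base(a: str, b: str, parent: dict) -> str | None:
    """Nearest common ancestor of two versions, or None if unrelated."""
    seen = set(ancestors(a, parent))
    for v in ancestors(b, parent):
        if v in seen:
            return v
    return None


def compare_versions(cloud: str, edge: str, parent: dict) -> str:
    """Classify like a branch merge: same, fast-forward either way, or diverged."""
    if cloud == edge:
        return "same"
    base = merge_base(cloud, edge, parent)
    if base == edge:
        return "cloud_ahead"   # Edge can adopt the Cloud version directly
    if base == cloud:
        return "edge_ahead"    # Edge retrained on top of the latest Cloud version
    return "diverged"          # both retrained independently: needs a merge policy
```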

7. Conclusions

A Machine Learning Operations (MLOps) deployment architecture for reliable Edge computing is proposed in this paper. It is designed for an Edge gateway monitoring many low-power devices on an industrial worksite, such as remote-site power generation, and provides reliable offline ML Operations features as well as features for ensuring reliability when the network is unstable.

This system provides a method by which Data Scientists can easily deploy and manage large numbers of Edge models across a wide array of devices. It provides an alternative to deployments that require significant user intervention and reduces the technical labour involved in large-scale deployment of machine learning analytics.

Adoption of such an Edge ML versioning and deployment system can allow large-scale analytics deployments in a manner that reduces issues with unmonitored machines and analytics, while providing the ability to keep the model up to date as and when it is necessary.

The addition of a local MLOps system to a fog, gateway, or single-device Edge deployment can significantly increase the reliability of analytics systems deployed to the Edge, while avoiding many of the issues normally associated with Edge deployments, especially in network constrained environments. The combination of these factors leads to a novel implementation of MLOps, wherein the Edge comes first and mirrors Cloud actions to create a local ML delivery network.

In conclusion, this architecture shows a reliable and multi-purpose Edge gateway for the enablement of MLOps. In addition to Industrial usage, it could prove useful to researchers conducting ML experiments at the Edge—allowing them to more effectively and reliably version, manage, record and update experimental models.

7.1. Advantages

A major advantage of this implementation is the ability of local networks to manage and version models with or without a Cloud connection, allowing models to be retrained and provided when internet access is unavailable. Another advantage is the ability of fog/local networks to deploy models to low-power devices which may not have direct internet access and may lack the space or capability to manage model swapping themselves. While this is similar to, and overlaps in many ways with, the concept of federated learning, it differs in that it can manage differing versions of models and host many models for different purposes or device sizes, allowing smaller devices to be managed locally. Federated learning is ultimately a learning concept; this system extends it with deployment and running paradigms, as well as functionality for smaller devices that cannot participate in the learning. The system may use federated learning to create these smaller models, while offering a platform for storing and managing versions separately from the devices running them.

The increased reliability of the connected systems stems from several angles, chiefly removing overall reliance on the Cloud while still allowing ML models to be deployed when internet access is unavailable, whether by choice or circumstance. It also reduces downtime in connected devices by removing the need for them to participate in the learning process if they are not capable of it.

7.2. Improvements

As this continues to be developed and improved, there are many possible evolutions that could occur, including the ability to autonomously convert or modify a built model to work on a different computer architecture or to prune/optimise the model to reduce resource overheads. This type of automated transformation would be performed after versioning but before deploying a model. It could also be prudent in future to further explore the circumstances and requirements for drift detection and automatic retraining, to find under which circumstances models should be retrained, and to optimise the process by updating the model through sending small messages over any available communication such as cellular networks.

There are areas not considered in this paper; disparate and clashing versions of models are a major issue. This is commonly seen in CI/CD branching mechanisms for code, when two branches are merged into the same main branch with no indication of which one is “correct”. This has to be resolved by human intervention. In the system presented here, human intervention may be unlikely or infeasible, so an autonomous response would be required, though building one requires further work.

7.3. Future Work

In future, the exploration of the potential trade-offs in model accuracy versus size could further aid the question of whether it is better, in the case of a model that has drifted significantly, to train on Edge or wait for a better model to be delivered from the Cloud.

There is also potential for the exploration of further automation of components in the Edge MLOps workflow—including quantisation and size reduction in models destined for constrained devices, at-scale creation and management of “tailored” individual models for individual devices, and the automation of management of mismatched model versions/conflicting versions which is currently only resolvable by human intervention.

Expansion of the Edge MLOps workflow to also include Large Language Models (LLM) and other new types of large AI may prove to bring new areas of opportunity to the Edge, especially when Agentic workflows and intelligent resolutions to problems may be required. It may require further research, but it is possible that an LLM would be able to resolve clashing version problems.