1. Introduction

The number and importance of graphical user interfaces (GUIs), and thus of user interface (UI) and user experience (UX) design, are constantly increasing [1,2]. A GUI is defined as the graphical interface between a human and a machine [3]. It enables interfaces to be operated using graphical elements [4], such as images, symbols, and color. The aim when designing such GUIs is to make them as usable as possible. Usability is defined as the “Extent to which a system, product or service can be used by specific users in a specific context of use to achieve specific goals effectively, efficiently and satisfactorily.” [5] (p. 9).

As computer technology continues to advance, the range of information that can be displayed in a graphical user interface has expanded, and interfaces have become increasingly functional and informative [6]. This presents a challenge for designers, as enriched layouts may lead to overly complicated interfaces that overwhelm users because human attention and cognitive resources are limited [6,7,8]. One potential solution to this issue is to direct users’ attention strategically by manipulating specific design elements [2]. In this context, visual attention and salience are of great importance. The term “design elements”, as used in this work, refers to the fundamental components employed in the development of designs [9], including, but not limited to, lines, shapes, and colors. Attention is understood as a cognitive process through which individuals select certain information while ignoring other information [10]. The capacity for selective attention enables individuals to prioritize or disregard specific aspects or stimuli [11]. Visual attention can be effectively directed through visual salience [12]. A stimulus is considered visually salient if it stands out in a particular context [13]. The salience of design elements plays a crucial role in emphasizing features within a GUI and guiding users’ focus toward important areas [14,15,16,17]. For example, according to a study by Ebert et al. [18], the visual design of a warning should receive at least as much consideration as the information it conveys; the study also suggests that highly salient warnings are more likely to be noticed.

Limitations in human attention occur particularly when the spatial or temporal mechanisms of selective attention are overloaded [19]. For instance, an excess of visual stimuli in a given space has been shown to impede effective information processing [20]. In visual search tasks, performance declines when the number of objects increases or when the objects move more rapidly [21], which suggests inherent limits on our ability to track multiple objects concurrently. This raises concerns in both academia and industry, as many catastrophic accidents are caused by inattention [22,23]. It is therefore important to understand the limitations of attention. Processing limitations include, for example, inattentional blindness and change blindness [19]. Inattentional blindness refers to the failure to perceive an object or event in the field of view because the individual’s attention is focused on something else [24]. Such a perceptual error is attributed to a lack of attention to an unexpected element [25], particularly if the element does not appear relevant to the current task or if the current task involves a high degree of task commitment [23,26]. Change blindness is the failure to perceive a change in the environment because the focus of attention is often not on the changing part of the corresponding area [19,27]. It suggests that the content of the representations in a person’s short-term memory depends heavily on selective attention [27]. These phenomena have already been observed in various settings [27,28,29,30]. According to Davies and Beeharee [31], change blindness and inattentional blindness also occur during mobile device use. Their findings indicate that newly inserted objects on a screen are consistently identified more often than changes made to existing objects.

The human visual system is tasked with processing a vast array of information, and its performance is shaped not only by attentional constraints but also by various cognitive biases [32]. A distinction is made here between the attentional bias, the sequential bias, and the salience bias. With the attentional bias, a person tends to focus their attention more strongly on certain stimuli than on others [33]. The perception and interpretation of information can be influenced by previous experiences, emotions, and beliefs [34]. The sequential bias, also referred to as the sequence effect, describes how decisions are influenced by preceding decisions or experiences [35]. Such biases can be difficult to recognize and eliminate [36], which is particularly important when conducting studies. The salience bias refers to the tendency of people to pay more attention to information that is salient or conspicuous than to less salient information [37]. Companies such as Apple Inc. use the salience bias, for example, in the customer experience in their stores and on their website [14].

Because users allocate fewer attentional resources to each element within a more complex design, it is advantageous to gather information on the attention allocated to individual elements [38,39]. Eye tracking is a valuable method for obtaining such data [40,41,42,43,44]. In the context of GUI design, search tasks are particularly pertinent [32]. While users search for information within an interface, their eye movements provide objective indicators of attention distribution and information processing [45]. Key eye-tracking metrics include fixation duration, defined as the time the eyes remain fixated on a specific target [46]. Fixation duration is regarded as an implicit measure of visual attention. Another important metric is the area of interest (AOI) attention ratio, defined as the time an individual spends looking at a designated area of interest during a task divided by the total time spent on the task [47]. The AOI attention ratio can therefore be used to determine which areas of a user interface attract the most attention from users [48]. Because such data offer reliable insights into how individuals distribute their attention, they are used across various research domains, including the automotive industry [49,50]. Furthermore, this information is crucial for the future design of graphical user interfaces [51].
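The AOI attention ratio defined above is simple arithmetic once fixations are available: the summed fixation time inside an AOI divided by the total task time. The sketch below is a minimal illustration, assuming fixations have already been detected and assigned to AOIs by the eye-tracking software; the `Fixation` class, the AOI labels, and the timings are hypothetical and are not the study's data or analysis pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Fixation:
    """One fixation with onset/offset in ms and the AOI it fell in (None = outside every AOI)."""
    start_ms: float
    end_ms: float
    aoi: Optional[str]

    @property
    def duration_ms(self) -> float:
        # Fixation duration: how long the eyes stayed on the target.
        return self.end_ms - self.start_ms


def aoi_attention_ratios(fixations: List[Fixation], task_duration_ms: float) -> Dict[str, float]:
    """Return, per AOI, the summed fixation time divided by the total task time."""
    if task_duration_ms <= 0:
        raise ValueError("task_duration_ms must be positive")
    dwell: Dict[str, float] = defaultdict(float)
    for fix in fixations:
        if fix.aoi is not None:
            dwell[fix.aoi] += fix.duration_ms
    return {aoi: total / task_duration_ms for aoi, total in dwell.items()}


# Hypothetical trial: two buttons shown side by side for 1000 ms.
trial = [
    Fixation(0, 300, "left"),
    Fixation(350, 500, "right"),
    Fixation(550, 950, "left"),
]
ratios = aoi_attention_ratios(trial, task_duration_ms=1000)
print(ratios)  # {'left': 0.7, 'right': 0.15}
```

Time spent on saccades or outside both buttons is not attributed to any AOI, so the ratios need not sum to 1.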

The salience of design elements such as color has already been investigated in several studies [2,52,53,54], one of which demonstrated that salient stimuli attract more attention than others [55]. According to Heimann and Schuetz [56], when observing a scene or image, people’s eyes naturally linger on and repeatedly return to the parts that contain the most relevant or meaningful information from their perspective. Previous work [57,58,59] has already shown that people look longer at stimuli that they subsequently select. Additionally, preference is reflected in allocated attention [56,58,60,61].

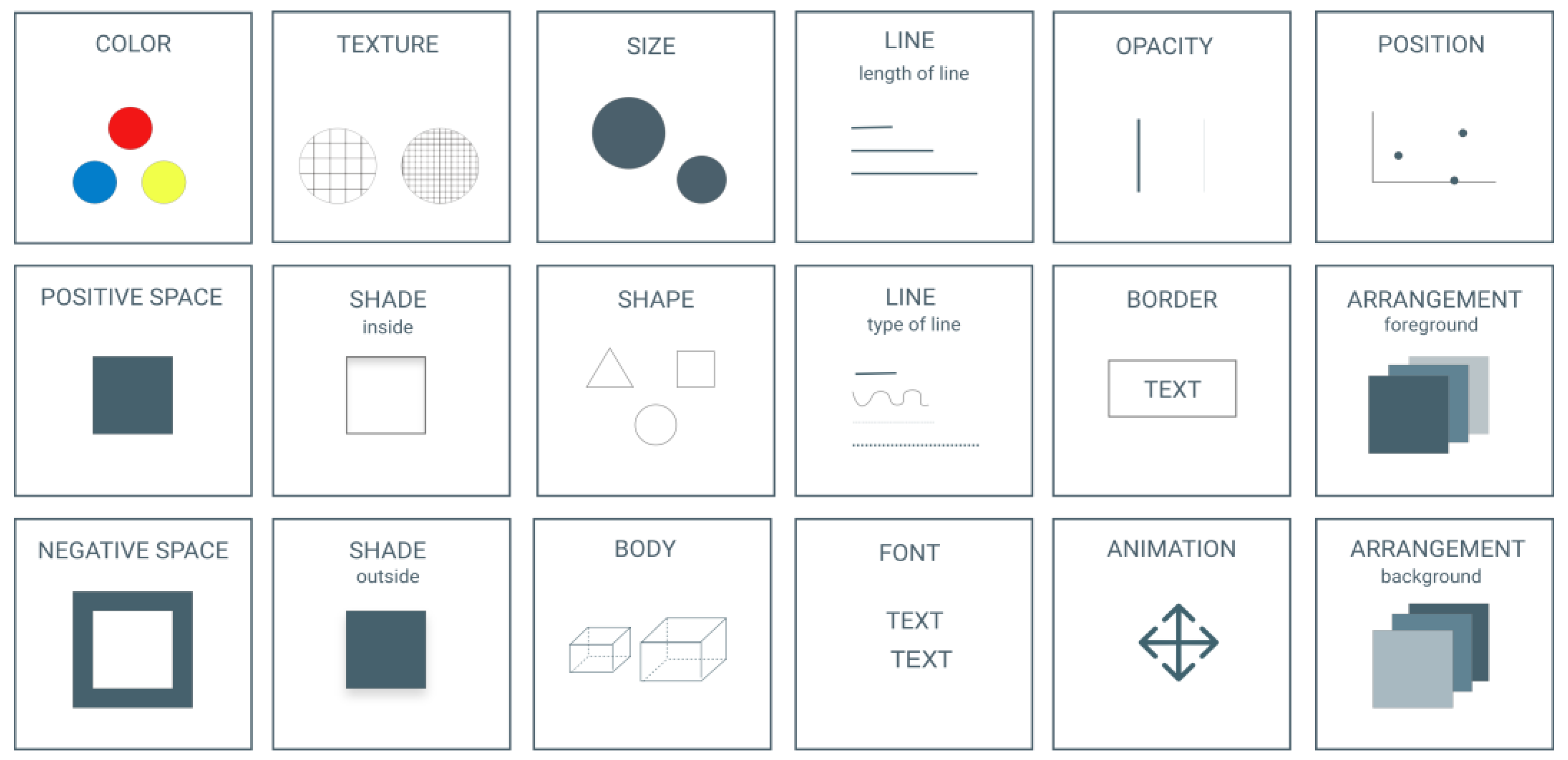

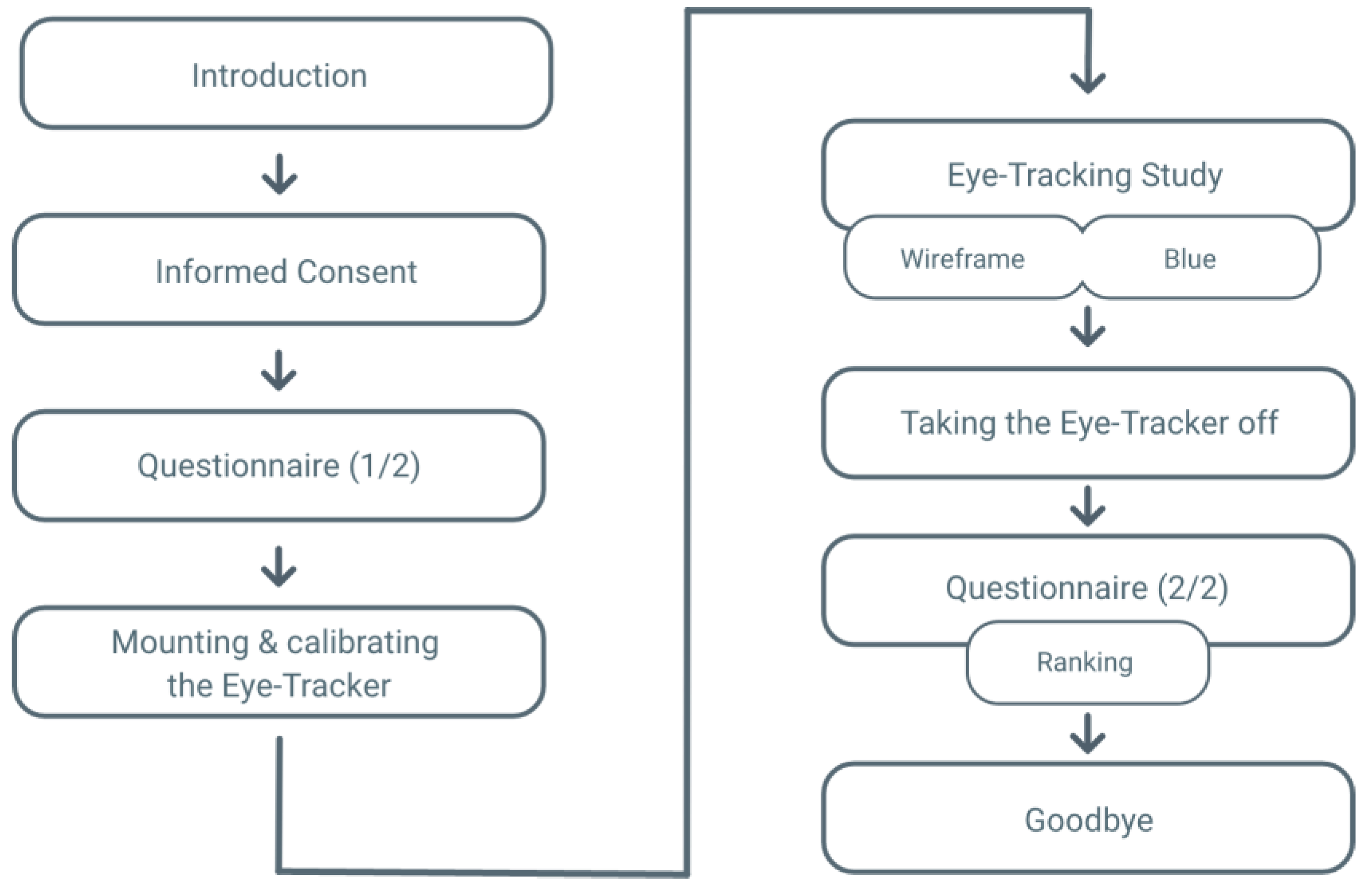

This study was preceded by a comprehensive examination of the topic based on the design thinking process [62]. Prior to the experimental study, four expert interviews were conducted with professionals in the field of user experience and user interface design. These interviews provided valuable insight into the limitations, challenges, and methodologies associated with GUI design. Subsequently, manipulable design elements within a GUI were defined to provide a corresponding overview. In this process, 16 distinct design elements were identified, as illustrated in Figure 1. Color and its impact have already been studied extensively in different contexts [46,52,54,63,64]. Among the elements presented in Figure 1, the design element “shape”, in the form of a button, was selected as a systematically manipulable design feature. Buttons are relevant to UI and UX design because they are used in various contexts and frequently appear in GUIs. A subjective evaluation of buttons has already been conducted in a study by Becker et al. [65].

This study investigates the relationship between button shape and user perception, isolated from contextual influences. By analyzing shape preferences, attention distribution, and perceived pleasantness, the research aims to translate visual perception theory into practical UI design recommendations. The study bridges psychological principles and user interface design, providing evidence-based insights into fundamental aspects of visual perception. The goal is to support designers by developing more intuitive and effective design recommendations for graphical user interfaces.

The three research questions were:

RQ1: What shape of button do subjects prefer in a pairwise comparison?

RQ2: Is the objective attention distribution reflected in the subjective preference?

RQ3: Which of the presented buttons do subjects find most pleasant?

4. Discussion

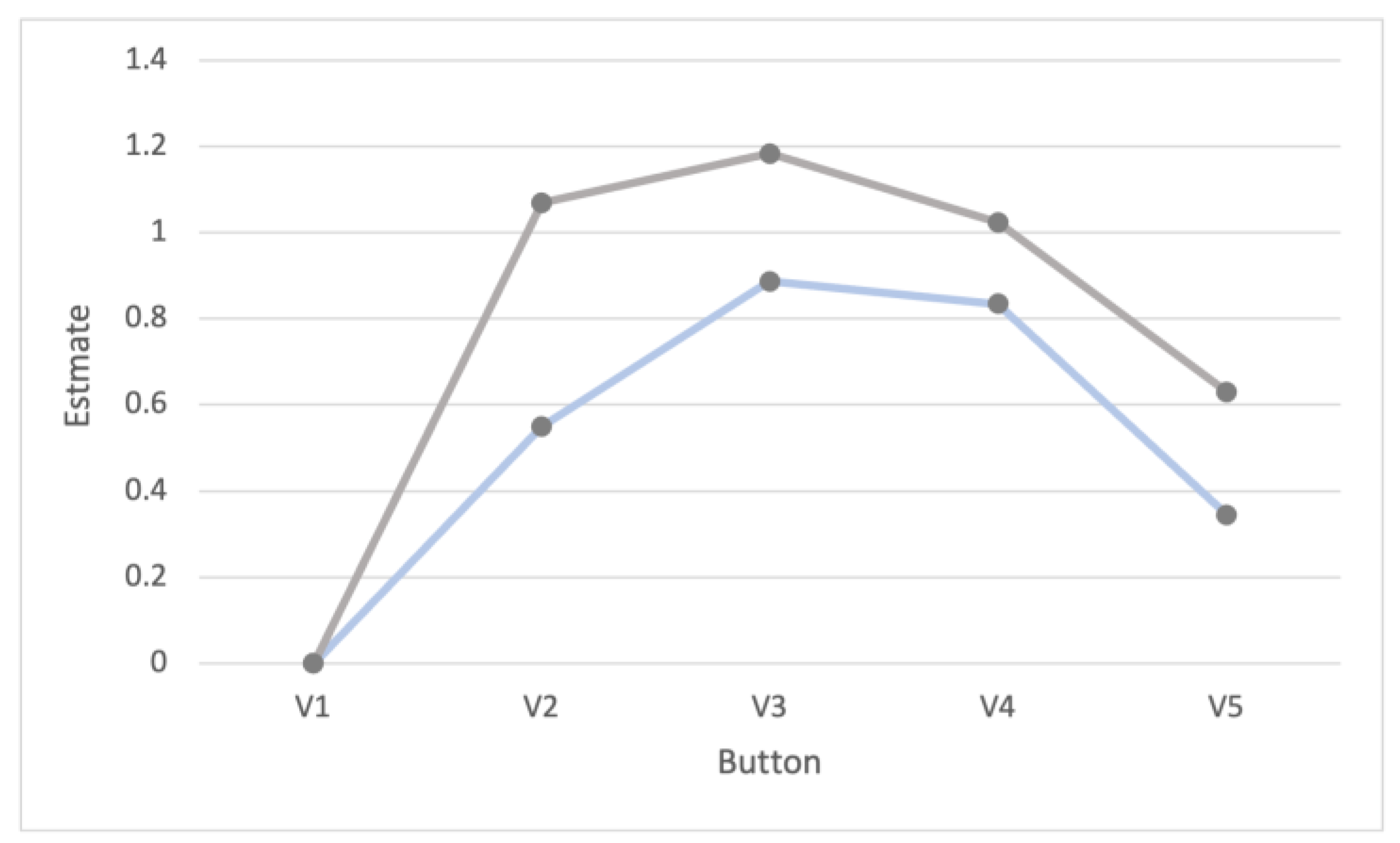

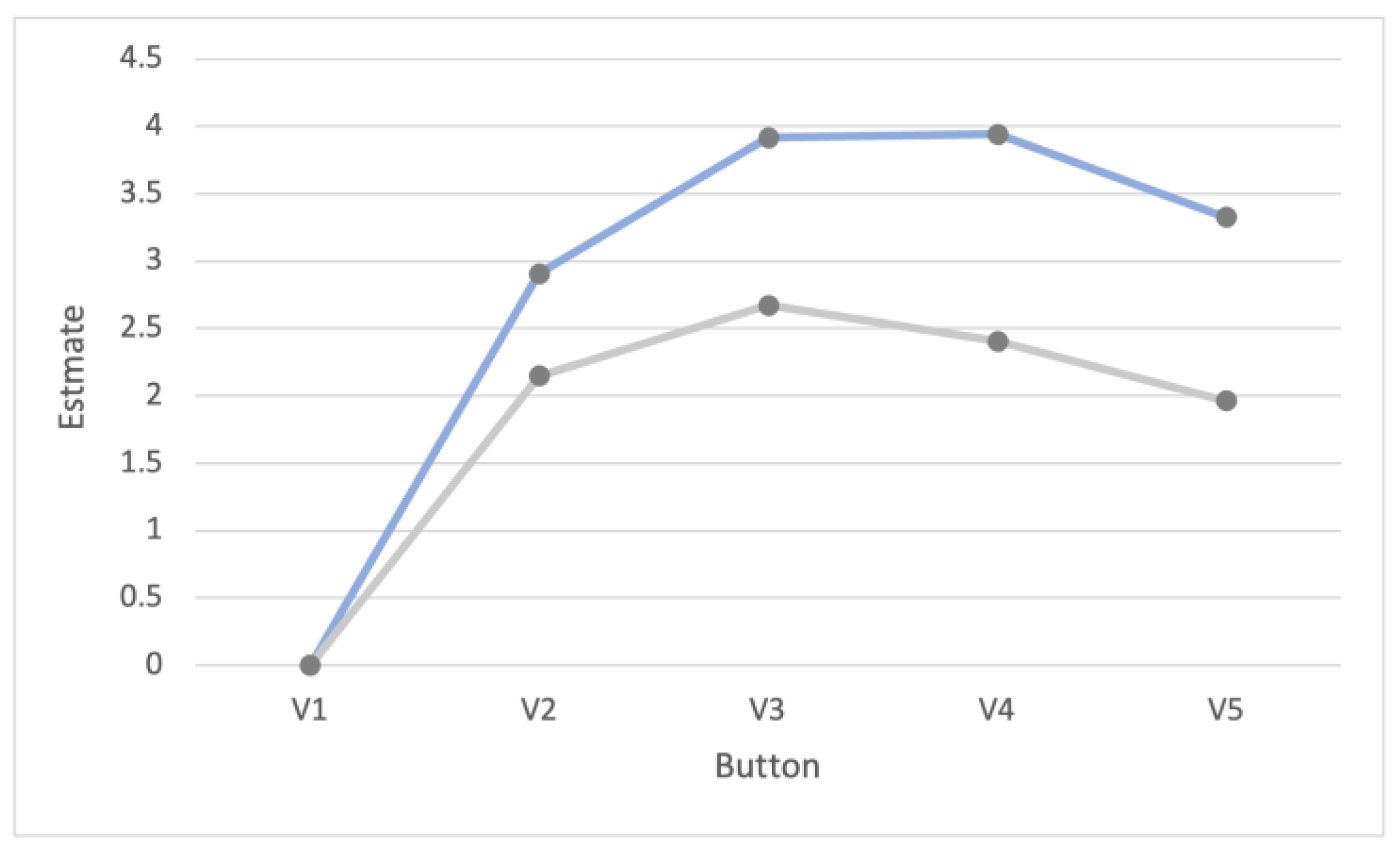

The objective of the eye-tracking study was to determine which button subjects preferred in a pairwise comparison and whether the objective attention distribution was reflected in the subjective preference. Table 10 provides an overview of the ranks derived from both the objective eye-tracking data and the subjective decisions made in the pairwise comparisons.

The results indicate that in all four rankings (objective wireframe, objective blue, subjective wireframe, subjective blue), V1, the most angular button version, ranks fifth and last. In contrast, V5, the roundest button version, ranks fourth in all cases except the subjective evaluation of the wireframe buttons. These findings largely align with a study by Becker et al. [65], which showed that completely angular and completely curved button corners are the least preferred.

For both the wireframe and the blue buttons, the ranks derived from the objective and the subjective data of the pairwise comparison differ. For the wireframe buttons, ranks 1 and 2 as well as ranks 3 and 4 are swapped between the two rankings. The coefficient estimates indicate only minimal differences between ranks 1 and 2, which correspond to button versions V3 and V4. For the blue buttons, ranks 2 and 3 are swapped. The ranking derived from the eye-tracking data (objective) places V3 first among the wireframe buttons, which is consistent with the rank of V3 among the blue button versions. A larger sample size or multiple iterations might provide a clearer evaluation of these ranks. The minimal difference between the rankings for the blue buttons suggests that preferences for specific button versions manifest in allocated visual attention. This finding corroborates the literature reviewed in the Introduction [56,58,60,61]. According to Milosavljevic et al. [77], visual saliency biases can potentially influence everyday choices. This raises the question of whether the buttons ranked higher are more salient than those ranked lower. It is important to acknowledge that the present study examined the allocation of attention to button shapes exclusively in the absence of contextual information.
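The discussion refers to coefficient estimates behind the pairwise rankings but does not name the model in this section. One common way to turn pairwise choices into ranks is the Bradley-Terry model, and the sketch below assumes that model (an assumption, not necessarily the authors' method), fitted with Hunter's minorization-maximization (MM) update. For the objective ranking, the "winner" of a trial could, for instance, be the button with the longer dwell time; that mapping is also an assumption. The function name and win counts are hypothetical toy data.

```python
from typing import Dict, List, Tuple

Wins = Dict[Tuple[str, str], int]


def bradley_terry(wins: Wins, items: List[str], iterations: int = 500) -> Dict[str, float]:
    """Fit Bradley-Terry strengths with the MM update.

    wins[(a, b)] is the number of trials in which a was chosen over b.
    Strengths are rescaled to sum to 1 after each update.
    """
    strength = {i: 1.0 for i in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            total_wins = sum(wins.get((i, j), 0) for j in items if j != i)
            denom = 0.0
            for j in items:
                if j == i:
                    continue
                n_ij = wins.get((i, j), 0) + wins.get((j, i), 0)
                if n_ij:
                    denom += n_ij / (strength[i] + strength[j])
            # Items never compared with anything keep their previous strength.
            updated[i] = total_wins / denom if denom else strength[i]
        scale = sum(updated.values())
        strength = {i: s / scale for i, s in updated.items()}
    return strength


# Hypothetical toy data: every pair judged 10 times; the "better" button wins 8 of 10.
versions = ["V1", "V2", "V3", "V4", "V5"]
toy_order = ["V3", "V4", "V2", "V5", "V1"]
toy_wins: Wins = {}
for pos, better in enumerate(toy_order):
    for worse in toy_order[pos + 1:]:
        toy_wins[(better, worse)] = 8
        toy_wins[(worse, better)] = 2

strengths = bradley_terry(toy_wins, versions)
ranking = sorted(strengths, key=strengths.get, reverse=True)
print(ranking)  # ['V3', 'V4', 'V2', 'V5', 'V1']
```

Because every pair in this toy design is judged equally often, the fitted order coincides with the order of total wins; with unbalanced designs the two can diverge, which is one reason to fit a model rather than simply count wins.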

When the rankings derived from the eye-tracking data are compared between the wireframe and the blue buttons, ranks 1, 4, and 5 are identical. V3 is ranked first, indicating that it received more focal attention than the other button versions among both the blue and the wireframe buttons. V1 is in the last position, while V5 is ranked fourth. Assuming that preference is reflected in allocated attention, these findings are consistent with those of Becker et al. [65], who, however, did not report a ranking between completely angular and completely round buttons. Based on the current results, it can be concluded that completely angular buttons are less preferred than completely round buttons. This is further supported by the rankings from the subjective data, in which V1 is also ranked last among both the blue and the wireframe buttons. V5 ranks fourth among the blue buttons and in the objective ranking of the wireframe buttons, and third in the subjective ranking of the wireframe buttons. When the remaining positions in the subjective rankings are compared, ranks 1–4 differ notably between the wireframe and the blue buttons. This prompts two questions: first, to what extent does color influence subjective evaluation, and second, if it does, what mechanisms underlie this influence?

The research question of which buttons participants perceive as most pleasant can be answered by examining the frequency distributions shown in Table 11 and Table 12. These distributions are derived from the questionnaire administered during the study and reflect the ranking of button versions by perceived pleasantness. The results clearly show that V1 frequently occupies the last rank for both the wireframe and the blue buttons in terms of pleasantness, which matches the results from the objective and subjective data of the pairwise comparison. Among the wireframe buttons, V3 was never assigned to the fourth or fifth rank, indicating a higher ranking. These results confirm the preference and greater attention reflected in the rankings from both the subjective and the objective data shown in Table 10. Among the blue buttons, V1 was never assigned to the first or second rank, and V2 was assigned to the first rank once. V3 was also never assigned to the fifth rank among the blue buttons, showing a tendency toward higher ranks (ranks 1–4). Button V4 was assigned to higher ranks (1–3) more often than to lower ranks (4–5). It should be noted that the salience of a button in the pairwise comparison may have influenced the participants’ answers. The results must therefore be evaluated in light of a possible salience bias [77].
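The frequency distributions in Tables 11 and 12 count how often each button version was assigned to each pleasantness rank. A minimal sketch of that tabulation follows, assuming each participant's questionnaire answer is stored as a complete ordering of the five versions (most pleasant first); the function name and the three answers are hypothetical and are not study data.

```python
from collections import Counter
from typing import Dict, List, Sequence


def rank_frequencies(rankings: Sequence[Sequence[str]], versions: Sequence[str]) -> Dict[str, List[int]]:
    """Count how often each version was placed on each rank (list index 0 = rank 1 = most pleasant)."""
    counts = {v: Counter() for v in versions}
    for ranking in rankings:
        # Each questionnaire answer must be a complete ordering of all versions.
        if sorted(ranking) != sorted(versions):
            raise ValueError(f"incomplete or duplicate ranking: {ranking}")
        for rank, version in enumerate(ranking, start=1):
            counts[version][rank] += 1
    n_ranks = len(versions)
    return {v: [counts[v][r] for r in range(1, n_ranks + 1)] for v in versions}


# Three hypothetical questionnaire answers, most pleasant first.
answers = [
    ["V3", "V4", "V2", "V5", "V1"],
    ["V4", "V3", "V5", "V2", "V1"],
    ["V3", "V2", "V4", "V1", "V5"],
]
table = rank_frequencies(answers, ["V1", "V2", "V3", "V4", "V5"])
for version, row in table.items():
    print(version, row)
# V1 [0, 0, 0, 1, 2]
# V2 [0, 1, 1, 1, 0]
# V3 [2, 1, 0, 0, 0]
# V4 [1, 1, 1, 0, 0]
# V5 [0, 0, 1, 1, 1]
```

Each row sums to the number of participants, and so does each column, which is a quick sanity check on a reconstructed frequency table.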

In summary, V1 received the least attention and was rated least preferable and least pleasant in comparison to the other button versions. In terms of eye-tracking data, V3 ranks first, a result which aligns with the rankings of the blue buttons derived from subjective comparisons. However, this is not the case for wireframe buttons. The frequency distributions in the questionnaire indicate that V1 is also ranked lowest in terms of perceived pleasantness. Furthermore, V3 tends to be assigned ranks 1–3 concerning pleasantness.

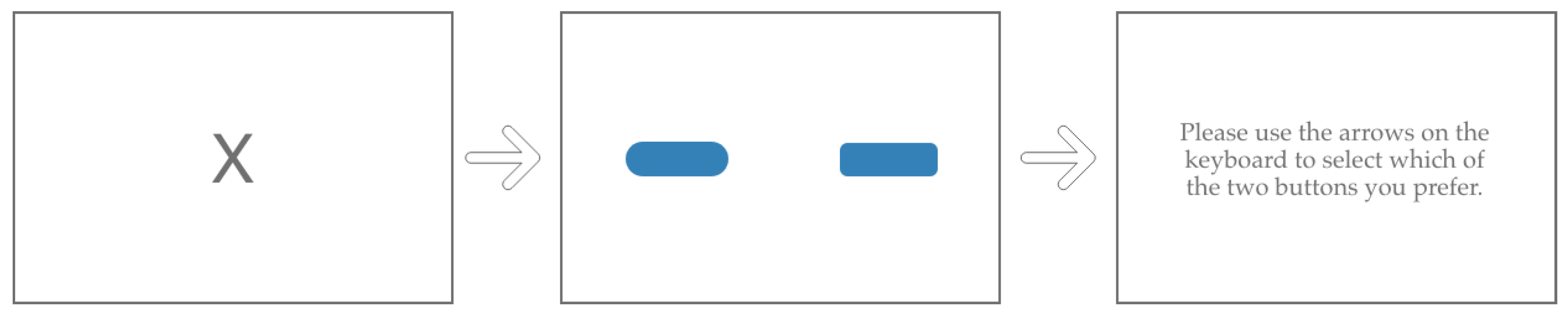

The present study is subject to several limitations. Prior to the pairwise comparison task, participants were asked to define the term “preference” for the task themselves. They were not advised on how or in what context to define “preference” when selecting the preferred button, which may have contributed to the deviating ranks derived from the subjective data. Additionally, during the eye-tracking study, participants looked at the fixation X for 10 s each time. This duration may have been sufficient for the previously displayed button pairs to be forgotten; however, a potential influence of visual memory should be considered. Furthermore, although the button shapes were presented in randomized order, the wireframe buttons were always presented before the blue buttons, so the order of presentation should also be taken into account.
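The limitation above separates two design features: a randomized order of shapes within a block and a fixed wireframe-then-blue block order. The sketch below illustrates one way such a trial sequence could be generated; it assumes that each of the 10 possible pairs of the five versions is shown once per block and that the left/right position of the two buttons is randomized, neither of which is stated in this section. The function name and seed are hypothetical.

```python
import random
from itertools import combinations
from typing import Dict, List, Sequence


def build_trial_sequence(versions: Sequence[str], seed: int) -> List[Dict[str, str]]:
    """Return a pairwise-comparison trial list: wireframe block first, then blue block.

    Within each block, every unordered pair appears once in shuffled order,
    and the left/right position of the two buttons is randomized per trial.
    """
    rng = random.Random(seed)  # a per-participant seed makes the sequence reproducible
    trials: List[Dict[str, str]] = []
    for block in ("wireframe", "blue"):  # fixed block order, as described in the study
        pairs = list(combinations(versions, 2))
        rng.shuffle(pairs)
        for a, b in pairs:
            left, right = (a, b) if rng.random() < 0.5 else (b, a)
            trials.append({"block": block, "left": left, "right": right})
    return trials


sequence = build_trial_sequence(["V1", "V2", "V3", "V4", "V5"], seed=7)
print(len(sequence))  # 20: 10 pairs per block
print(sequence[0])
```

Shuffling the tuple `("wireframe", "blue")` per participant as well would counterbalance the block order, which is the order effect the limitation points to.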

The present study employed a multidimensional approach that combined a pairwise comparison with eye tracking. This approach is regarded as very useful, although the results for subjective preference and attention distribution are not completely consistent. Future research should therefore adopt a multidimensional approach that combines different eye-tracking parameters and measurement methods. Incorporating a questionnaire with open-ended questions into the study design would also be beneficial, as it facilitates the collection of feedback on both the methodology and the various design elements [78].

A further significant limitation pertains to the quality of the eye-tracking data: four eye-tracking data sets had to be discarded from the final sample. Although the two runs of the pairwise comparison were limited to a maximum of 15 min, a thorough examination revealed signs of participant fatigue, as well as inaccurate calibration and the use of make-up, all of which resulted in poor data quality. Fatigue was evidenced by prolonged periods during which participants’ eyes were closed. Such factors must be considered in future investigations.
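Excluding recordings for fatigue or calibration problems is easier to report consistently when the criterion is explicit. The sketch below shows one possible screening rule, assuming gaze samples are exported with lost samples (for example, while the eyes are closed) marked as missing; the function names and both thresholds (`min_valid=0.8`, `max_gap=3` samples) are hypothetical placeholders, not the criteria used in this study.

```python
from typing import List, Mapping, Optional, Sequence, Tuple

Sample = Optional[Tuple[float, float]]  # gaze position, or None when tracking was lost


def valid_ratio(samples: Sequence[Sample]) -> float:
    """Share of samples with a valid gaze position."""
    if not samples:
        return 0.0
    return sum(s is not None for s in samples) / len(samples)


def longest_gap(samples: Sequence[Sample]) -> int:
    """Length of the longest run of consecutive lost samples (e.g., closed eyes)."""
    longest = current = 0
    for s in samples:
        current = current + 1 if s is None else 0
        longest = max(longest, current)
    return longest


def flag_recordings(recordings: Mapping[str, Sequence[Sample]],
                    min_valid: float = 0.8, max_gap: int = 3) -> List[str]:
    """Return IDs of recordings that fail either hypothetical quality criterion."""
    return sorted(
        pid for pid, samples in recordings.items()
        if valid_ratio(samples) < min_valid or longest_gap(samples) > max_gap
    )


# Hypothetical recordings of 10 samples each.
ok = [(0.5, 0.5)] * 9 + [None]
closed_eyes = [(0.5, 0.5)] * 5 + [None] * 5
print(flag_recordings({"P01": ok, "P02": closed_eyes}))  # ['P02']
```

The gap criterion targets prolonged eye closure specifically, which an overall valid-sample ratio can miss when the rest of a recording is clean. In practice, `max_gap` would be set in milliseconds and converted using the tracker's sampling rate.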

Another limitation of the study was the presentation of the buttons in isolation from any contextual framework. The buttons were presented solely as shapes, without labels, because the aim was an abstract initial study of attentional allocation without distraction. The evaluation of these buttons might vary if they were labeled or viewed within a context, as is usually the case with graphical user interfaces; this possibility will be examined in subsequent research. It is noteworthy that Becker et al. [65] presented their buttons in the context of a radio screen of a multimedia display in a vehicle and obtained similar results.

The measurement of salience also constitutes a limitation of this study, as only preference and the visual attention distribution were measured. Based on the results presented and the existing literature, it can be inferred that the rankings may also reflect the salience of the buttons, as visually salient objects are more likely to be chosen [77]. However, this assumption should be validated through a more detailed analysis of the eye-tracking data. The initial gaze could serve as a useful metric for this purpose, as demonstrated in the work by Weber et al. [2]. Additionally, it would be advantageous to evaluate the salience of design elements subjectively. The findings of this study may provide valuable insights for the future design of GUIs. Notably, clear results were observed for the completely angular button (V1), which ranked fifth: it received the least visual attention and was neither preferred nor perceived as particularly pleasant compared with the other button designs. However, these results should be interpreted with caution, as the button versions need to be tested in an appropriate context before specific design recommendations for GUIs can be made. Future research will extend this work to include an analysis of the salience of design elements in relevant contexts.

For future research, a combination of pairwise comparisons, eye tracking, and interviews appears promising. In such a design, users would choose between two GUIs that differ in specific design elements, and the integration of eye-tracking data and interview responses would provide insight into the rationale underlying their decisions. Additionally, the influence of primary and secondary tasks performed with GUIs should be examined comprehensively to clarify the salience of design elements.