Smart Diet Diary: Real-Time Mobile Application for Food Recognition

Abstract

1. Introduction

- We developed a semi-automated diet-tracking smartphone application based on a food recognition engine trained using Faster R-CNN.

- We adapted our food recognition model for a mobile application and extended it with a mechanism to estimate food volume and approximate calorie value.

- We generated a customized food image dataset composed of over 16,000 images from fourteen classes to train and test the system.

- We achieved an overall accuracy of approximately 80.1%, reaching a maximum of 90.2% for individual classes.

2. Related Work

3. Materials and Methods

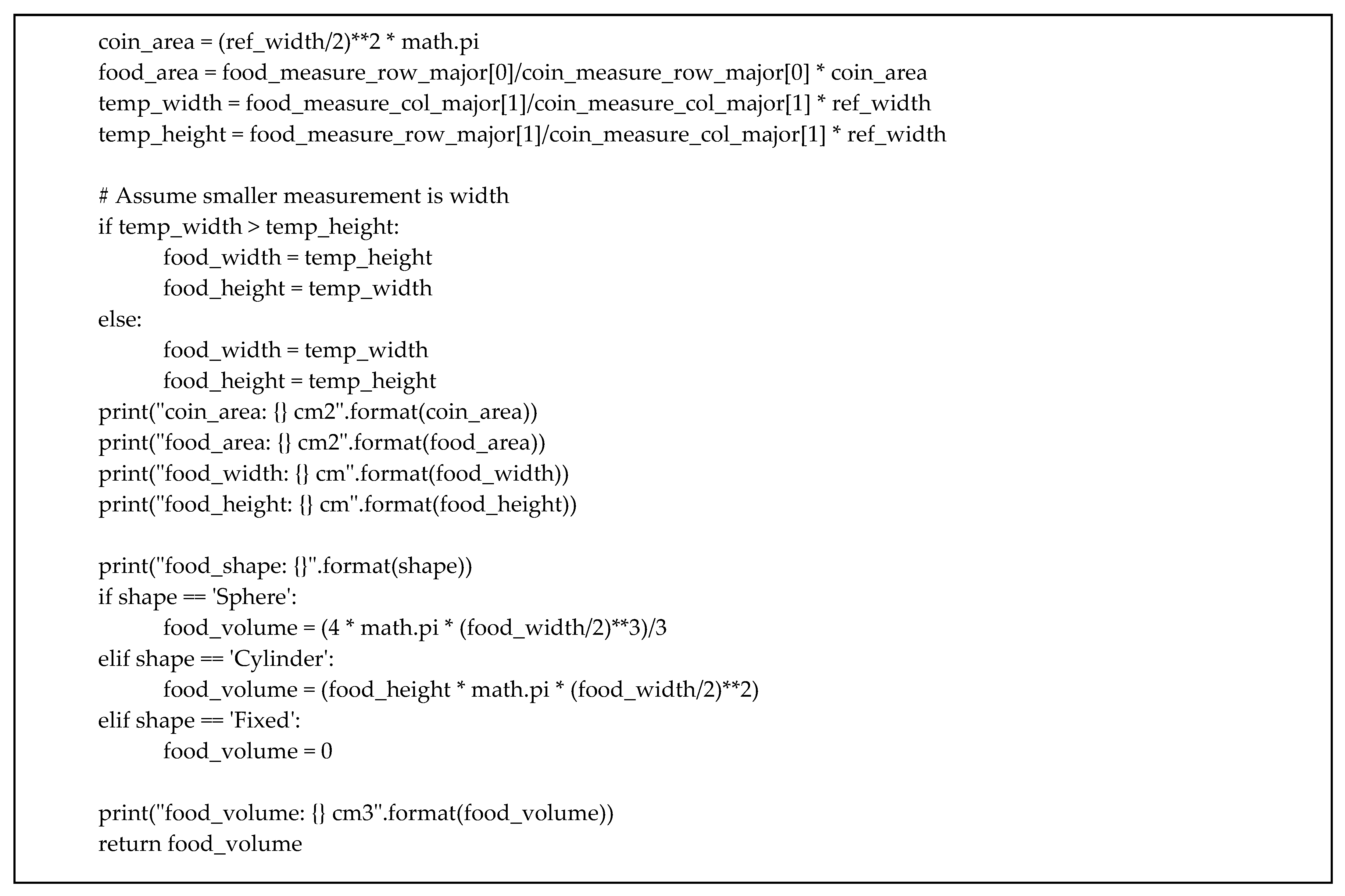

3.1. System Architecture

3.2. Application Development

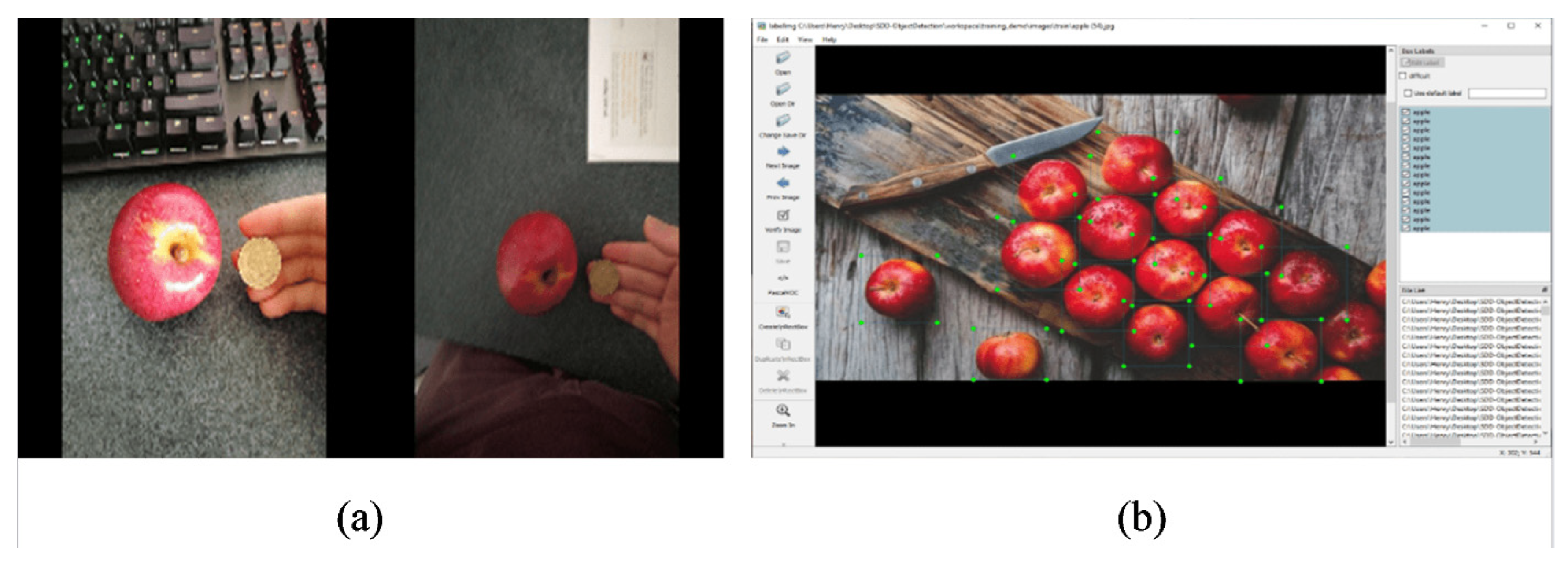

3.3. Dataset

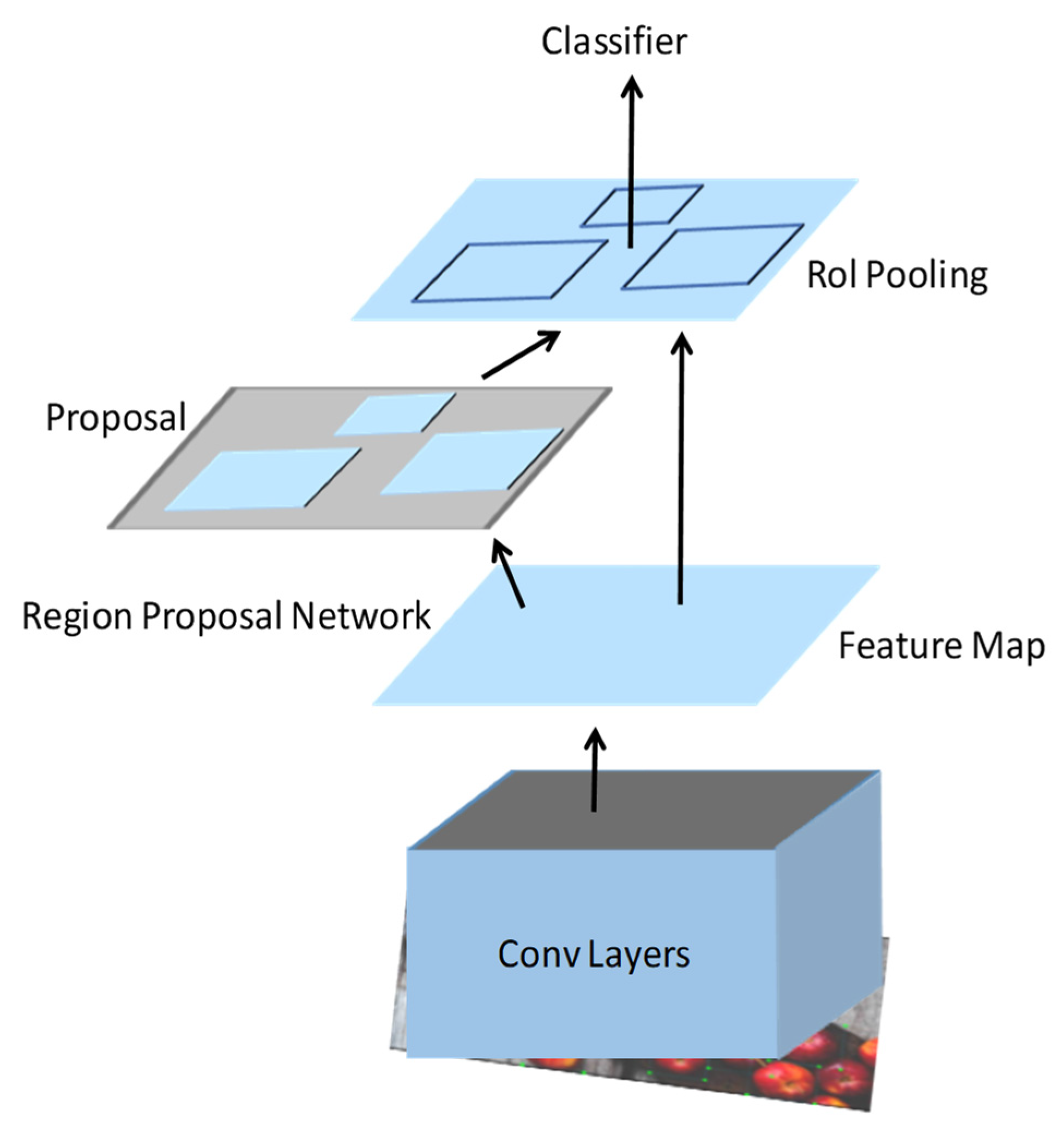

3.4. Object Detection and Classification

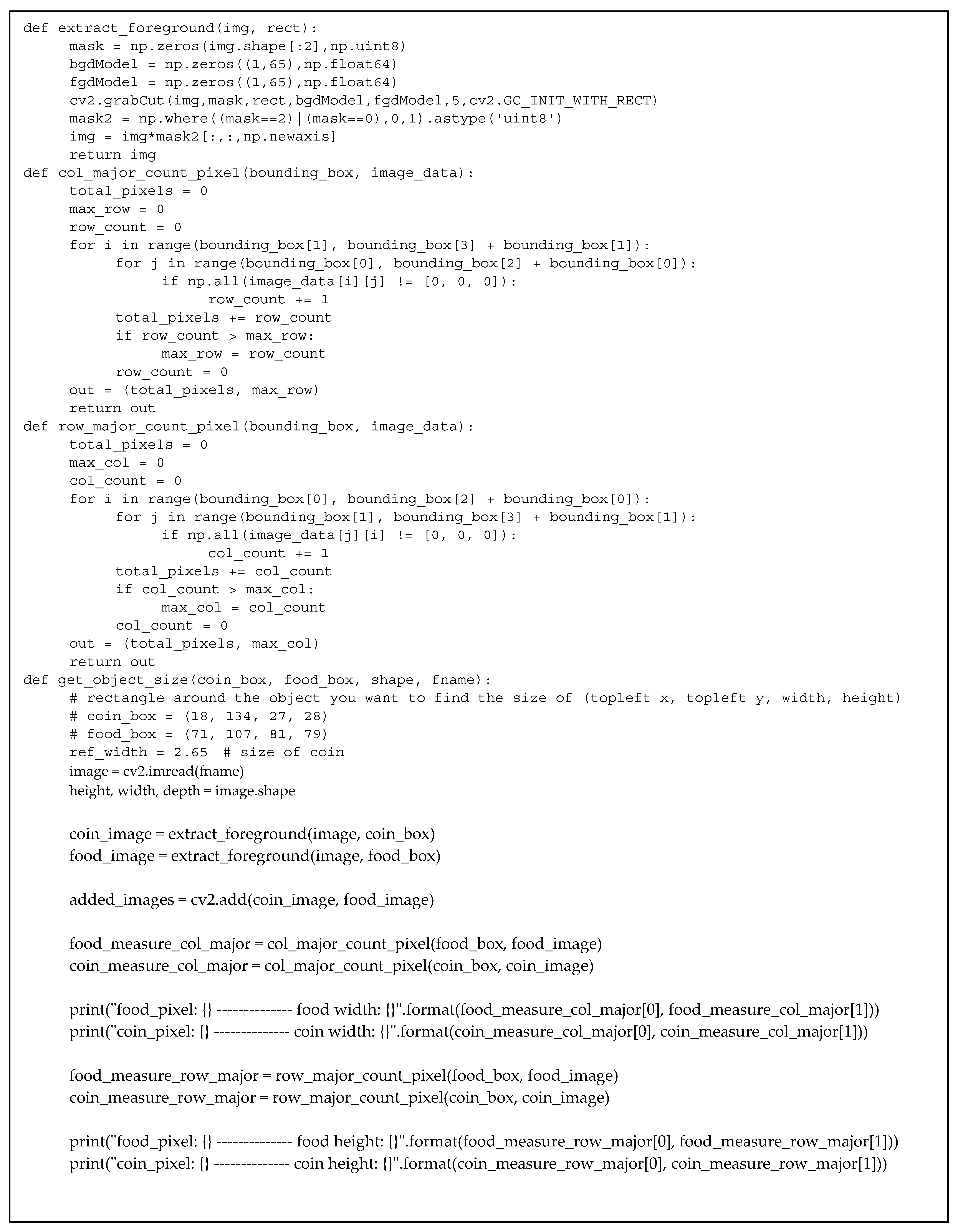
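Faster R-CNN's region proposal network labels anchors by their intersection over union (IoU) with ground-truth boxes, and both proposals and final detections are pruned with non-maximum suppression (NMS). The sketch below shows these two building blocks in plain Python as a generic illustration, assuming boxes given as `(x_min, y_min, x_max, y_max)` tuples; the function names `iou` and `nms` and the 0.5 threshold are illustrative choices, not details of the authors' TensorFlow Model Garden configuration.

```python
from typing import List, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Overlapping rectangle; width or height is clamped to 0 when disjoint.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], threshold: float = 0.5) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the boxes kept."""
    # Visit boxes from highest to lowest confidence score.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    detections = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
    print(round(iou(detections[0], detections[1]), 3))  # 0.818
    print(nms(detections, [0.9, 0.8, 0.7]))  # [0, 2]
```

In the example, the second box overlaps the first with an IoU of about 0.82, so NMS suppresses it and keeps only the two distinct detections.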

3.5. Volume Estimation

Algorithm 1. GrabCut
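Algorithm 1 uses GrabCut to separate each detected food item from its background, and the dataset includes a Coin class, which suggests that a coin of known size serves as a scale reference. Under that assumption, the sketch below converts a segmented mask into an approximate volume: the coin mask calibrates the area covered by one pixel, and the food's projected area is turned into a volume with a spherical shape model. The coin diameter, the sphere model (reasonable only for round items such as apples and oranges), and all function names are illustrative assumptions, not the authors' formulas.

```python
import math
from typing import List

# Binary mask, e.g. the foreground labels produced by GrabCut: 1 = object, 0 = background.
Mask = List[List[int]]

# Hypothetical reference size; substitute the diameter of the coin actually used.
COIN_DIAMETER_CM = 2.0


def mask_area_px(mask: Mask) -> int:
    """Number of foreground pixels in a binary mask."""
    return sum(sum(row) for row in mask)


def cm2_per_pixel(coin_mask: Mask, coin_diameter_cm: float = COIN_DIAMETER_CM) -> float:
    """Physical area covered by one pixel, calibrated from the coin's known size."""
    coin_area_cm2 = math.pi * (coin_diameter_cm / 2.0) ** 2
    return coin_area_cm2 / mask_area_px(coin_mask)


def spherical_volume_cm3(food_mask: Mask, scale_cm2_per_px: float) -> float:
    """Volume of a roughly spherical item (assumed shape model).

    A sphere of radius r projects to a disk of area pi * r**2, so
    r = sqrt(area / pi) and V = 4/3 * pi * r**3.
    """
    area_cm2 = mask_area_px(food_mask) * scale_cm2_per_px
    radius_cm = math.sqrt(area_cm2 / math.pi)
    return 4.0 / 3.0 * math.pi * radius_cm ** 3


def disk_mask(size: int, radius_px: float) -> Mask:
    """Synthetic circular mask centred in a size x size image (for testing)."""
    c = (size - 1) / 2.0
    return [
        [1 if (x - c) ** 2 + (y - c) ** 2 <= radius_px ** 2 else 0 for x in range(size)]
        for y in range(size)
    ]


if __name__ == "__main__":
    # A 2 cm coin spanning 20 px gives roughly 0.1 cm per pixel side.
    scale = cm2_per_pixel(disk_mask(25, 10))
    # An 80 px wide fruit is then about 8 cm across; an ideal sphere of that
    # size has 4/3 * pi * 4**3, about 268 cm^3, and pixelation shifts this slightly.
    print(round(spherical_volume_cm3(disk_mask(85, 40), scale), 1))
```

Because both areas are measured in pixels of the same image, the calibration cancels the camera distance, which is the main reason to photograph a reference object next to the food.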

3.6. Calorie Estimation

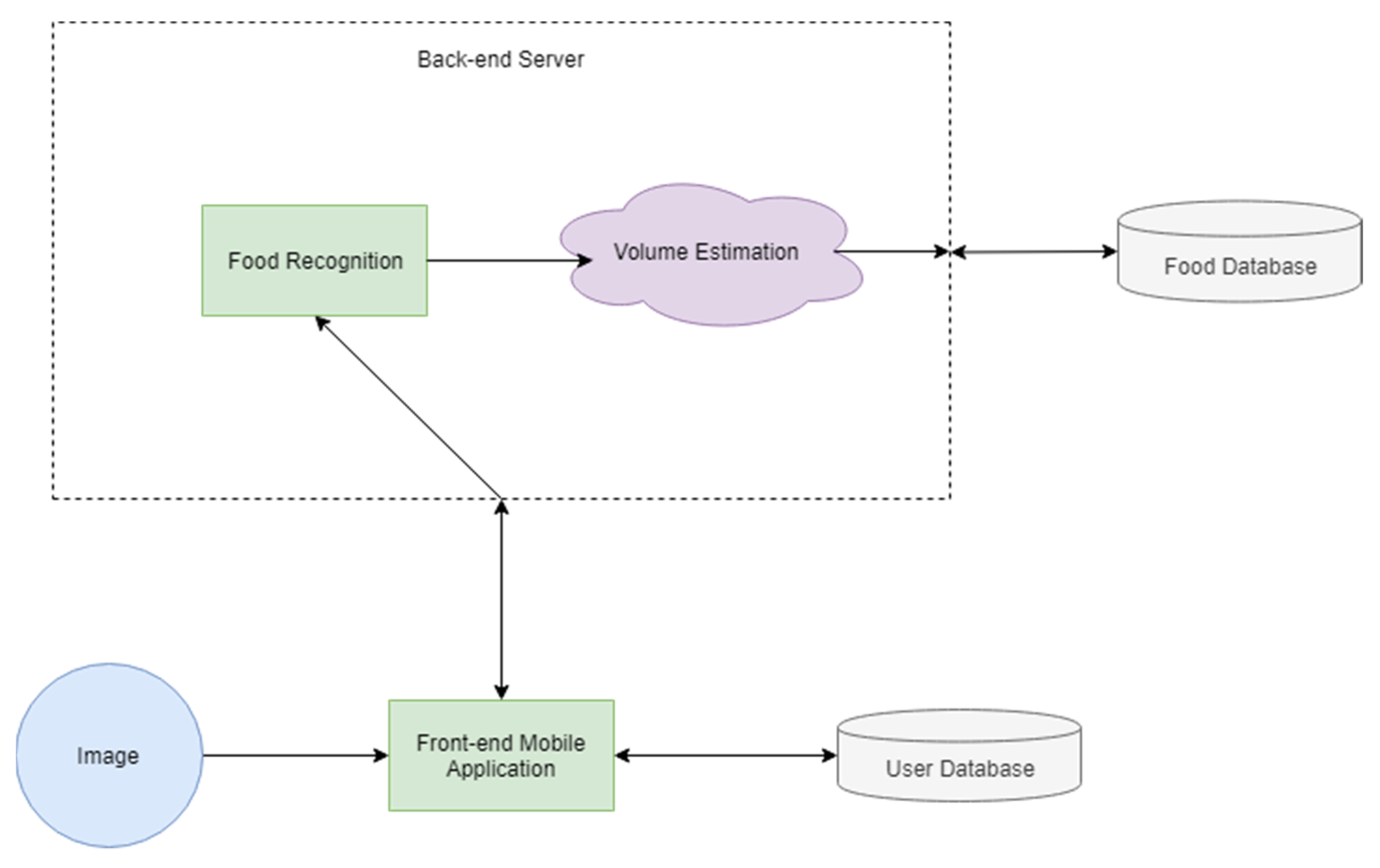

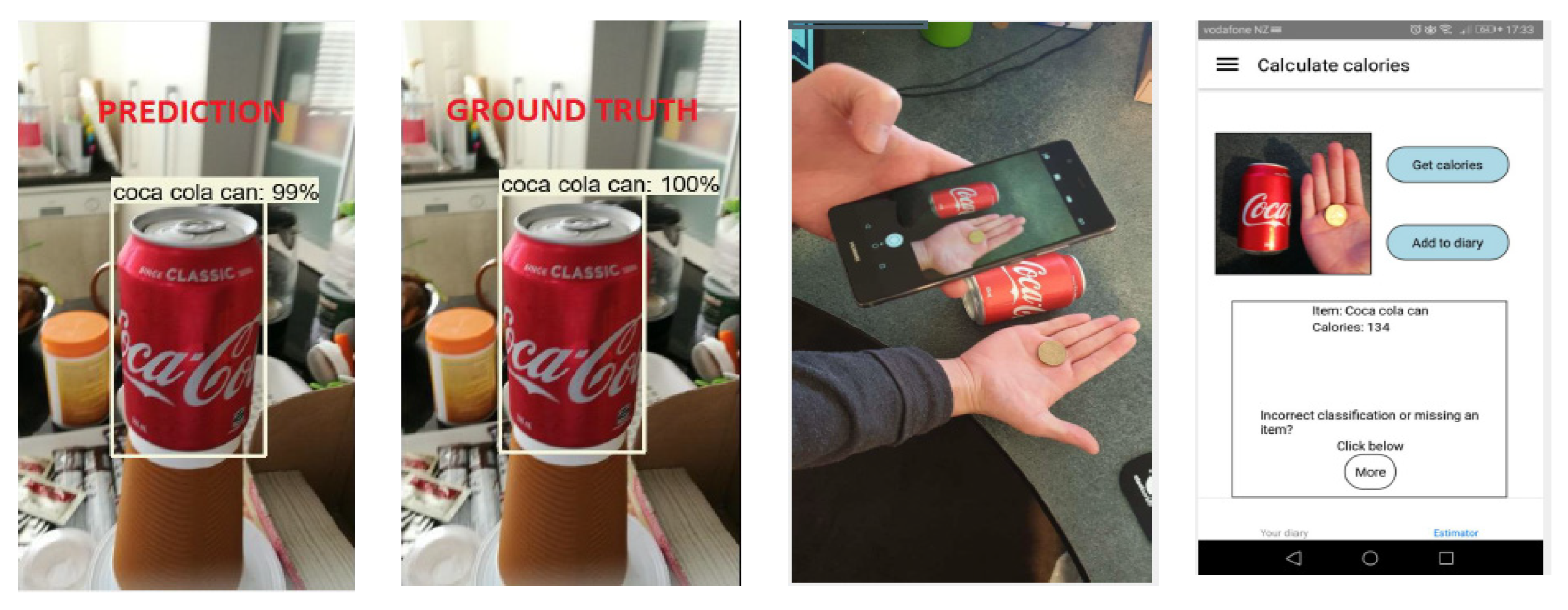

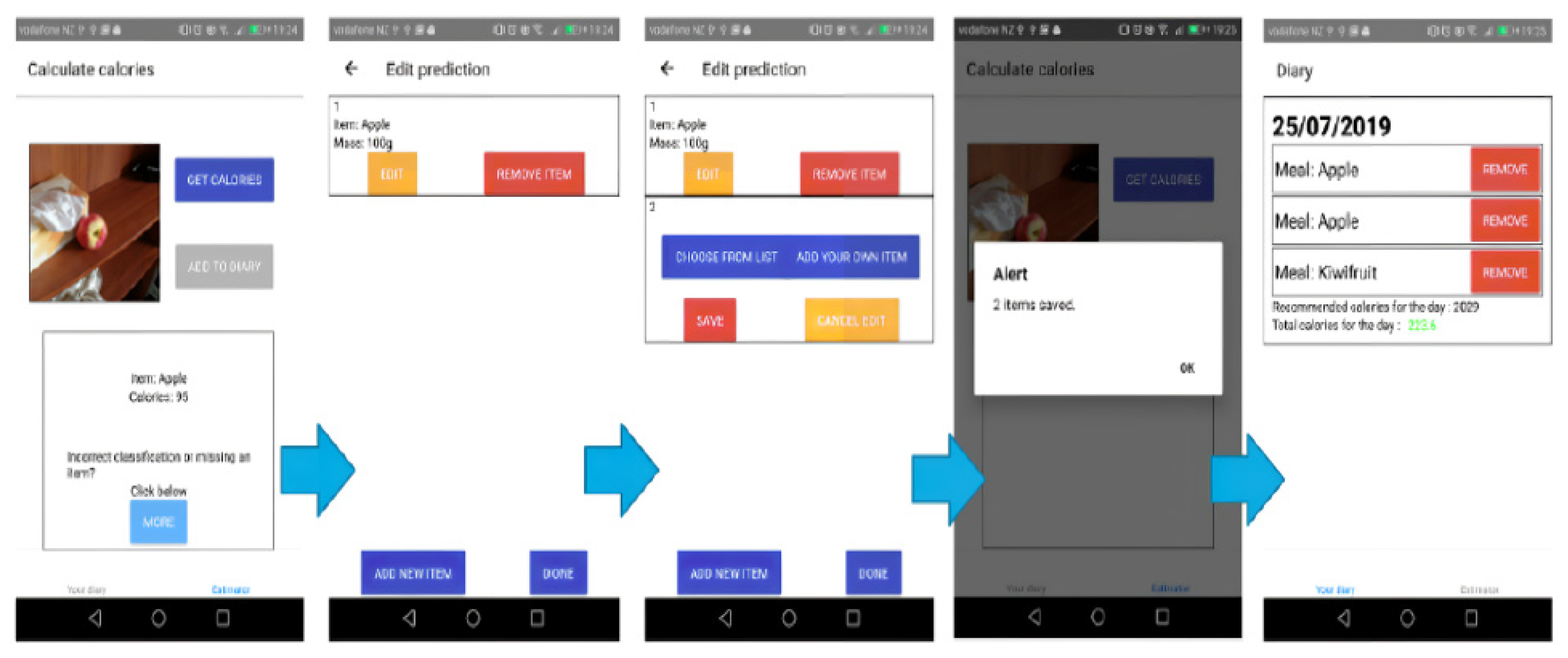
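A common way to turn an estimated volume into an energy value is to multiply by the food's density to obtain mass, then by its energy density (kcal per gram) from a nutrition table. The sketch below assumes that two-step chain; the `NutritionEntry` type, the lookup table, and its density and kcal/g figures are hypothetical placeholders, not the paper's food database.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class NutritionEntry:
    density_g_per_cm3: float  # mass per unit volume
    kcal_per_g: float  # energy density, e.g. from a food-composition table


def estimate_calories(volume_cm3: float, entry: NutritionEntry) -> float:
    """Volume -> mass (via density) -> energy (via kcal per gram)."""
    mass_g = volume_cm3 * entry.density_g_per_cm3
    return mass_g * entry.kcal_per_g


# Placeholder numbers for illustration only; they are not values from the paper.
HYPOTHETICAL_TABLE: Dict[str, NutritionEntry] = {
    "apple": NutritionEntry(density_g_per_cm3=0.8, kcal_per_g=0.5),
}

if __name__ == "__main__":
    print(round(estimate_calories(200.0, HYPOTHETICAL_TABLE["apple"]), 1))  # 80.0
```

Keeping density and energy density as separate fields makes it easy to swap in values from a real nutrition database without changing the estimation logic.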

3.7. Mobile Application

3.7.1. User Profile

3.7.2. Diary Component and Food Database
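The diary component records what the user ate together with the associated calories. As a hedged illustration of one possible data model (the field names and the per-day aggregation are assumptions, not the app's actual schema), the sketch below stores timestamped entries and totals calories per calendar day.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, datetime
from typing import Dict, List


@dataclass
class DiaryEntry:
    food: str  # label from the classifier (or the user's correction)
    kcal: float  # estimated energy for this portion
    eaten_at: datetime


def daily_totals(entries: List[DiaryEntry]) -> Dict[date, float]:
    """Sum logged calories per calendar day."""
    totals: Dict[date, float] = defaultdict(float)
    for entry in entries:
        totals[entry.eaten_at.date()] += entry.kcal
    return dict(totals)


if __name__ == "__main__":
    # Example values only.
    log = [
        DiaryEntry("Apple", 77.0, datetime(2023, 1, 9, 8, 30)),
        DiaryEntry("Orange", 53.0, datetime(2023, 1, 9, 15, 0)),
        DiaryEntry("Apple", 77.0, datetime(2023, 1, 10, 9, 0)),
    ]
    for day, kcal in sorted(daily_totals(log).items()):
        print(day.isoformat(), kcal)
```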

3.7.3. Food Classifier Component

3.7.4. Food Correction Component

4. Results and Discussion

4.1. Evaluation Metrics

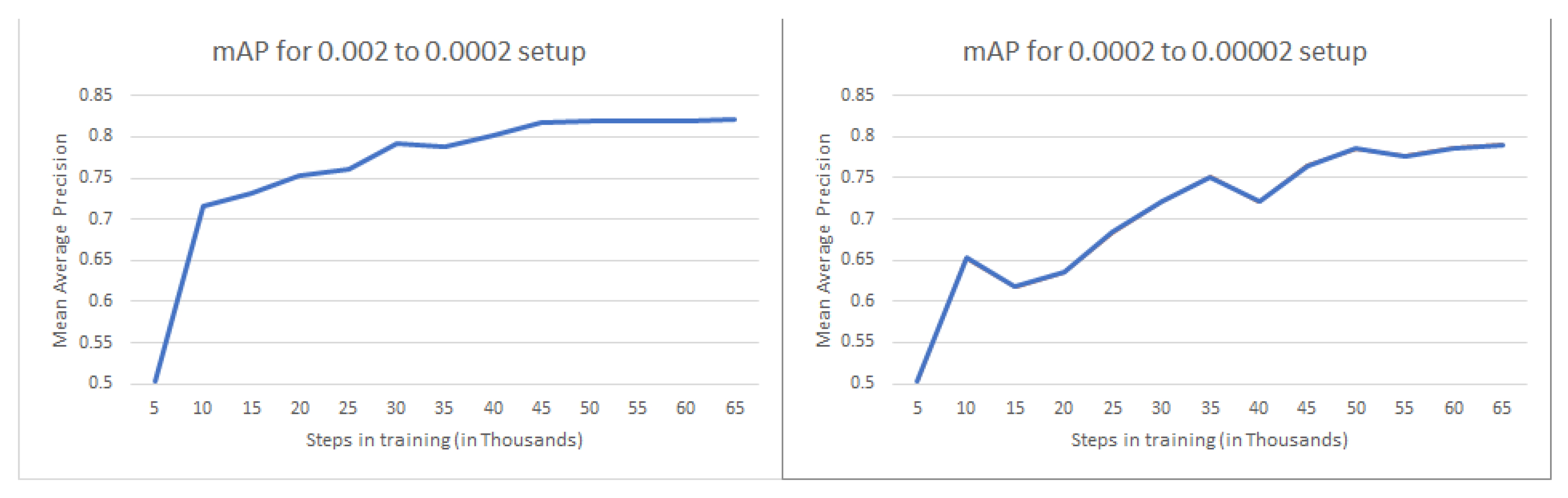
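Per-class detection results are commonly summarised with precision, recall, and their harmonic mean F1, computed from true-positive, false-positive, and false-negative counts (a detection usually counts as a true positive when its IoU with a ground-truth box passes a threshold). The sketch below shows these standard definitions; whether this evaluation uses exactly these metrics is not stated in the text here, and the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    tp: int  # correct detections of the class
    fp: int  # detections assigned to the class that are wrong
    fn: int  # instances of the class that were missed


def precision(c: Counts) -> float:
    return c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def recall(c: Counts) -> float:
    return c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0


def f1(c: Counts) -> float:
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if p + r else 0.0


if __name__ == "__main__":
    apple = Counts(tp=180, fp=15, fn=20)  # hypothetical counts
    print(f"P={precision(apple):.3f} R={recall(apple):.3f} F1={f1(apple):.3f}")
```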

4.2. Evaluation Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

1. Okunogbe, A.; Nugent, R.; Spencer, G.; Powis, J.; Ralston, J.; Wilding, J. Economic impacts of overweight and obesity: Current and future estimates for 161 countries. BMJ Glob. Health 2022, 7, e009773.

2. Tang, K.; Kumar, A.; Nadeem, M.; Maaz, I. CNN-Based Smart Sleep Posture Recognition System. IoT 2021, 2, 119–139.

3. Muppavram, S.; Patel, N.; Nadeem, M.P. Posture Alert. In Proceedings of the 2018 IEEE Region Ten Symposium (Ten-symp), Sydney, Australia, 4–6 July 2018.

4. Loos, R.J.; Yeo, G.S. The genetics of obesity: From discovery to biology. Nat. Rev. Genet. 2022, 23, 120–133.

5. Basiotis, P.P.; Welsh, S.O.; Cronin, F.J.; Kelsay, J.L.; Mertz, W. Number of days of food intake records required to estimate individual and group nutrient intakes with defined confidence. J. Nutr. 1987, 117, 1638–1641.

6. 24-Hour Dietary Recall (24HR) at a Glance | Dietary Assessment Primer. 17 October 2021. Available online: https://dietassessmentprimer.cancer.gov/profiles/recall/ (accessed on 1 December 2021).

7. Food Frequency Questionnaire at a Glance | Dietary Assessment Primer. 17 October 2021. Available online: https://dietassessmentprimer.cancer.gov/profiles/questionnaire/ (accessed on 1 December 2021).

8. Holmes, B.; Dick, K.; Nelson, M. A comparison of four dietary assessment methods in materially deprived households in England. Public Health Nutr. 2008, 11, 444–456.

9. Wohlers, E.M.; Sirard, J.R.; Barden, C.M.; Moon, J.K. Smart phones are useful for food intake and physical activity surveys. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009.

10. Vu, T.; Lin, F.; Alshurafa, N.; Xu, W. Wearable food intake monitoring technologies: A comprehensive review. Computers 2017, 6, 4.

11. Boushey, C.J.; Spoden, M.; Zhu, F.M.; Delp, E.J.; Kerr, D.A. New mobile methods for dietary assessment: Review of image-assisted and image-based dietary assessment methods. Proc. Nutr. Soc. 2017, 76, 283–294.

12. Sun, M.; Burke, L.; Mao, Z.; Chen, Y.; Chen, H.; Bai, Y. eButton: A wearable computer for health monitoring and personal assistance. In Proceedings of the 51st Annual Design Automation Conference, San Francisco, CA, USA, 1–5 June 2014; pp. 1–6.

13. Glanz, K.; Murphy, S.; Moylan, J.; Evensen, D.; Curb, J.D. Improving dietary self-monitoring and adherence with hand-held computers: A pilot study. Am. J. Health Promot. 2006, 20, 165–170.

14. Meyers, A.; Johnston, N.; Rathod, V.; Korattikara, A.; Gorban, A.; Silberman, N.; Guadarrama, S.; Papandreou, G.; Huang, J.; Murphy, K. Im2Calories: Towards an automated mobile vision food diary. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

15. Ocay, A.B.; Fernandez, J.M.; Palaoag, T.D. NutriTrack: Android-based food recognition app for nutrition awareness. In Proceedings of the 3rd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2017.

16. Tzutalin. LabelImg. Free Software: MIT License. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 10 September 2021).

17. TensorFlow. Welcome to the Model Garden for TensorFlow. 2021. Available online: https://github.com/tensorflow/models/blob/7a1da146446d783f1fa41d38e403d04afae453be/research/object_detect (accessed on 17 October 2021).

18. Ege, T.; Yanai, K. Simultaneous estimation of food categories and calories with multi-task CNN. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017.

19. Chen, J.; Ngo, C.W. Deep-based ingredient recognition for cooking recipe retrieval. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016.

20. Kong, F.; Tan, J. DietCam: Automatic dietary assessment with mobile camera phones. Pervasive Mob. Comput. 2012, 8, 147–163.

21. Martinel, N.; Foresti, G.L.; Micheloni, C. Wide-slice residual networks for food recognition. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018.

22. Hippocrate, E.A.A.; Suwa, H.; Arakawa, Y.; Yasumoto, K. Food weight estimation using smartphone and cutlery. In Proceedings of the First Workshop on IoT-Enabled Healthcare and Wellness Technologies and Systems, Singapore, 30 June 2016.

23. Hassannejad, H.; Matrella, G.; Ciampolini, P.; Munari, I.D.; Mordonini, M.; Cagnoni, S. A new approach to image-based estimation of food volume. Algorithms 2017, 10, 66.

24. Dehais, J.; Anthimopoulos, M.; Shevchik, S.; Mougiakakou, S. Two-view 3D reconstruction for food volume estimation. IEEE Trans. Multimed. 2016, 19, 1090–1099.

25. Vodopivec-Jamsek, V.; de Jongh, T.; Gurol-Urganci, I.; Atun, R.; Car, J. Mobile phone messaging for preventive health care. Cochrane Database Syst. Rev. 2021, 12, CD007457.

26. Nasi, G.; Cucciniello, M.; Guerrazzi, C. The role of mobile technologies in health care processes: The case of cancer supportive care. J. Med. Internet Res. 2015, 17, 3757.

27. Marcolino, M.S.; Oliveira, J.A.Q.; D’Agostino, M.; Ribeiro, A.L.; Alkmim, M.B.M.; Novillo-Ortiz, D. The impact of mHealth interventions: Systematic review of systematic reviews. JMIR MHealth UHealth 2018, 6, e23.

28. Moorhead, S.A.; Hazlett, D.E.; Harrison, L.; Carroll, J.K.; Irwin, A.; Hoving, C. A new dimension of health care: Systematic review of the uses, benefits, and limitations of social media for health communication. J. Med. Internet Res. 2013, 15, e1933.

29. Samad, S.; Ahmed, F.; Naher, S.; Kabir, M.A.; Das, A.; Amin, S.; Shariful, I.M. Smartphone apps for tracking food consumption and recommendations: Evaluating artificial intelligence-based functionalities, features and quality of current apps. Intell. Syst. Appl. 2022, 15, 200103.

30. Hussain, G.; Javed, K.; Cho, J.; Yi, J. Food intake detection and classification using a necklace-type piezoelectric wearable sensor system. IEICE Trans. Inf. Syst. 2018, 101, 2795–2807.

31. Kalantarian, H.; Alshurafa, N.; Le, T.; Sarrafzadeh, M. Monitoring eating habits using a piezoelectric sensor-based necklace. Comput. Biol. Med. 2015, 48, 46–55.

32. Alshurafa, N.; Kalantarian, H.; Pourhomayoun, M.; Liu, J.; Sarin, S.; Shahbazi, B.; Sarrafzadeh, M. Recognition of nutrition intake using time-frequency decomposition in a wearable necklace using a piezoelectric sensor. IEEE Sens. J. 2015, 15, 3909–3916.

33. Bi, Y.; Mingsong, L.; Song, C.; Xu, W.; Guan, N.; Yi, W. AutoDietary: A wearable acoustic sensor system for food intake recognition in daily life. IEEE Sens. J. 2015, 16, 806–816.

34. Zhou, B.; Cheng, J.; Sundholm, M.; Reiss, A.; Huang, W.; Amft, O.; Lukowicz, P. Smart Table Surface: A Novel Approach to Pervasive Dining Monitoring. In Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communications (PerCom), St. Louis, MO, USA, 23–27 March 2015.

35. Lee, K. Automatic Estimation of Food Intake Amount Using Visual and Ultrasonic Signals. Electronics 2021, 10, 2153.

36. Anderez, D.O.; Lotfi, A.; Pourabdollah, A. A deep learning based wearable system for food and drink intake recognition. J. Ambient Intell. Humaniz. Comput. 2021, 12, 9435–9447.

37. Hussain, G.; Maheshwari, M.K.; Memon, M.L.; Jabbar, M.S.; Javed, K. A CNN based automated activity and food recognition using wearable sensor for preventive healthcare. Electronics 2019, 8, 1425.

38. Amugongo, L.M.; Kriebitz, A.; Boch, A.; Lütge, C. Mobile Computer Vision-Based Applications for Food Recognition and Volume and Calorific Estimation: A Systematic Review. Healthcare 2023, 11, 59.

39. Kong, F.; He, H.; Raynor, H.A.; Tan, J. DietCam: Multi-view regular shape food recognition with a camera phone. Pervasive Mob. Comput. 2015, 19, 108–121.

40. Beijbom, O.; Joshi, N.; Morris, D.; Saponas, S.; Khullar, S. Menu-match: Restaurant-specific food logging from images. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015.

41. Horiguchi, S.; Amano, S.; Ogawa, M.; Aizawa, K. Personalized classifier for food image recognition. IEEE Trans. Multimed. 2018, 20, 2836–2848.

42. Miyazaki, T.; Chamin, D.S.G.; Aizawa, K. Image-based calorie content estimation for dietary assessment. In Proceedings of the IEEE International Symposium on Multimedia, Dana Point, CA, USA, 5–7 December 2011.

43. Chen, M.; Yang, Y.; Ho, C.; Wang, S.; Liu, S.; Chang, E.; Yeh, C.; Ouhyoung, M. Automatic Chinese food identification and quantity estimation. In Proceedings of the SIGGRAPH Asia 2012 Technical Briefs, Singapore, 28 November–1 December 2012.

44. Zhou, L.; Zhang, C.; Liu, F.; Qiu, Z.; He, Y. Application of deep learning in food: A review. Compr. Rev. Food Sci. Food Saf. 2019, 18, 1793–1811.

45. Zhang, W.; Yu, Q.; Siddiquie, B.; Divakaran, A.; Sawhney, H. “snap-n-eat” food recognition and nutrition estimation on a smartphone. J. Diabetes Sci. Technol. 2015, 9, 525–533.

46. Kawano, Y.; Yanai, K. FoodCam: A real-time food recognition system on a smartphone. Multimed. Tools Appl. 2015, 74, 5263–5287.

47. Joutou, T.; Yanai, K. A food image recognition system with multiple kernel learning. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009.

48. Tanno, R.; Okamoto, K.; Yanai, K. DeepFoodCam: A DCNN-based real-time mobile food recognition system. In Proceedings of the 2nd International Workshop on Multimedia Assisted Dietary Management, Amsterdam, The Netherlands, 16 October 2016.

49. Yanai, K.; Kawano, Y. Food image recognition using deep convolutional network with pre-training and fine-tuning. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Torino, Italy, 29 June–3 July 2015.

50. Kawano, Y.; Yanai, K. Real-time mobile food recognition system. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013; pp. 1–7.

51. Boykov, Y.Y.; Jolly, M.P. Interactive graph cuts for optimal boundary & region segmentation of objects in ND images. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001.

52. Fard, M.A.; Hadadi, H.; Tavakoli Targhi, A. Fruits and vegetables calorie counter using convolutional neural networks. In Proceedings of the 6th International Conference on Digital Health Conference, Montréal, QC, Canada, 11–13 April 2016.

53. Babaeian Jelodar, A.; Sun, Y. Calorie Aware Automatic Meal Kit Generation from an Image. arXiv 2021, arXiv:2112.09839.

54. Pouladzadeh, P.; Shirmohammadi, S. Mobile multi-food recognition using deep learning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2017, 13, 1–21.

55. Chopra, M.; Purwar, A. Food Image Recognition Using CNN, Faster R-CNN and YOLO. In Applications of Artificial Intelligence, Big Data and Internet of Things in Sustainable Development; CRC Press: Boca Raton, FL, USA, 2023; pp. 81–89.

56. Davies, T.; Louie, J.C.Y.; Ndanuko, R.; Barbieri, S.; Perez-Concha, O.; Wu, J.H. A Machine Learning Approach to Predict the Added-Sugar Content of Packaged Foods. J. Nutr. 2022, 152, 343–349.

57. Davies, T.; Louie, J.C.Y.; Scapin, T.; Pettigrew, S.; Wu, J.H.; Marklund, M.; Coyle, D.H. An innovative machine learning approach to predict the dietary fiber content of packaged foods. Nutrients 2021, 13, 3195.

58. Kumar, G.K.; Rani, D.M.; Neeraja, K.; Philip, J. Food Calorie Estimation System Using ImageAI with RetinaNet Feature Extraction. In Advanced Techniques for IoT Applications: Proceedings of EAIT 2020; Springer: Singapore, 2020.

59. Yang, H.; Zhang, D.; Lee, D.; Huang, M. A sparse representation based classification algorithm for Chinese food recognition. In Advances in Visual Computing: 12th International Symposium, ISVC 2016, Las Vegas, NV, USA, 12–14 December 2016, Proceedings, Part II 12; Springer: Cham, Switzerland, 2016.

60. Fahira, P.K.; Rahmadhani, Z.P.; Mursanto, P.; Wibisono, A.; Wisesa, H.A. Classical machine learning classification for Javanese traditional food image. In Proceedings of the 2020 4th International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 10–11 November 2020.

61. Chun, M.; Jeong, H.; Lee, H.; Yoo, T.; Jung, H. Development of Korean Food Image Classification Model Using Public Food Image Dataset and Deep Learning Methods. IEEE Access 2022, 10, 128732–128741.

62. Ramesh, A.; Sivakumar, A.; Angel, S. Real-time Food-Object Detection and Localization for Indian Cuisines using Deep Neural Networks. In Proceedings of the 2020 IEEE International Conference on Machine Learning and Applied Network Technologies (ICMLANT), Hyderabad, India, 20–21 December 2020.

63. Khan, T.A.; Islam, M.S.; Ullah, S.A.; Rabby, A.S.A. A machine learning approach to recognize junk food. In Proceedings of the 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019.

64. Shifat, S.M.; Parthib, T.; Pyaasa, S.T.; Chaity, N.M.; Kumar, N.; Morol, M.K. A Real-time Junk Food Recognition System based on Machine Learning. In Proceedings of the Bangabandhu and Digital Bangladesh: First International Conference, ICBBDB 2021, Dhaka, Bangladesh, 30 December 2021; Springer: Cham, Switzerland, 2021.

65. Valueva, M.V.; Nagornov, N.N.; Lyakhov, P.A.; Valuev, G.V.; Chervyakov, N.I. Application of the residue number system to reduce hardware costs of the convolutional neural network implementation. Math. Comput. Simul. 2020, 177, 232–243.

66. Fukushima, K. Artificial vision by multi-layered neural networks: Neocognitron and its advances. Neural Netw. 2013, 37, 103–119.

67. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

68. Orchard, M.T.; Bouman, C.A. Color quantization of images. IEEE Trans. Signal Process. 1991, 39, 2677–2690.

69. Lu, Y. Food Image Recognition by Using Convolutional Neural Networks (CNNs). arXiv 2016, arXiv:1612.00983.

70. Subhi, M.; Ali, S. A Deep Convolutional Neural Network for Food Detection and Recognition. In Proceedings of the 2018 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuching, Malaysia, 3–6 December 2018.

71. Islam, M.; Siddique, B.K.; Rahman, S.; Jabid, T. Food Image Classification with Convolutional Neural Network. In Proceedings of the 2018 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Bangkok, Thailand, 21–24 October 2018.

72. Jeny, A.; Junayed, M.; Ahmed, T.; Habib, M.; Rahman, M. FoNet-Local food recognition using deep residual neural networks. In Proceedings of the 2019 International Conference on Information Technology, ICIT 2019, Bhubaneswar, India, 20–23 December 2019.

73. Razali, M.; Moung, E.; Yahya, F.; Hou, C.; Hanapi, R.; Mohamed, R.; Hashem, I. Indigenous Food Recognition Model Based on Various Convolutional Neural Network Architectures for Gastronomic Tourism Business Analytics. Information 2021, 12, 322.

| Sr. No. | Category | Training | Test | Total |
|---|---|---|---|---|
| 1 | Apple | 671 | 200 | 871 |
| 2 | Bluebird sour cream | 805 | 200 | 1005 |
| 3 | Chicken curry | 651 | 200 | 851 |
| 4 | Coca-Cola can | 1035 | 200 | 1230 |
| 5 | Coin | 1546 | 400 | 1946 |
| 6 | ETA chicken | 817 | 200 | 1017 |
| 7 | ETA sour cream | 809 | 200 | 1009 |
| 8 | Fanta can | 1059 | 150 | 1209 |
| 9 | McDonalds Big-Mac | 849 | 200 | 1049 |
| 10 | McDonalds Fillet-o-Fish | 872 | 200 | 1072 |
| 11 | McDonalds Fries | 849 | 200 | 1049 |
| 12 | McDonalds McChicken | 836 | 200 | 1036 |
| 13 | Orange | 718 | 200 | 918 |
| 14 | Pizza Hut | 846 | 200 | 1046 |

| Item | Accuracy | Average Estimated Calories (kcal) | Actual Calories (kcal) |
|---|---|---|---|
| Coca-Cola can | 60% | 153 | 142 |
| Apple | 92% | 77 | 78 |
| Orange | 90% | 53 | 58 |
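The table lists both average and actual calorie values, so the gap between them can be summarised as an absolute percentage error, |average - actual| / actual. This is a derived figure, separate from the Accuracy column; the sketch below simply computes it for the three listed items.

```python
# (average kcal, actual kcal) as listed in the table above
CALORIE_RESULTS = {
    "Coca-Cola can": (153, 142),
    "Apple": (77, 78),
    "Orange": (53, 58),
}


def abs_pct_error(estimated: float, actual: float) -> float:
    """Absolute percentage error of an estimate relative to the true value."""
    return abs(estimated - actual) / actual * 100.0


if __name__ == "__main__":
    for item, (est, act) in CALORIE_RESULTS.items():
        print(f"{item}: {abs_pct_error(est, act):.1f}%")
    # Coca-Cola can: 7.7%, Apple: 1.3%, Orange: 8.6%
```

By this measure, all three averages fall within about 9% of the actual values, with the apple closest at about 1.3%.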

| Authors | Dataset | Categories | Techniques | Accuracy |
|---|---|---|---|---|
| Proposed Approach | Self-collected | 10 | Faster R-CNN | 80.06% |
| Yanai et al. (2021) [50] | UEC-FOOD100 | 1000 | DCNN | 78.77% |
| | UEC-FOOD256 | 1000 | DCNN | 67.57% |
| Joutou et al. (2009) [47] | | 50 | Multiple kernel learning | 61.34% |
| Zhang et al. (2015) [45] | Online image database, e.g., ImageNet, Flickr, and Google Images | 15 | HOG/SIFT | 80.30% |
| Chen et al. (2016) [19] | VIREO Food-172 | 100 | Multi-task DCNN | 82.12 to 97.29% |
| Lu (2016) [69] | Small-scale dataset | 10 | CNN, 3 convolution-pooling layers, 1 fully connected layer | 74.0% |
| Ege et al. (2017) [18] | Web image mining | 15 | Multi-task CNN | 77.90 to 80.60% |
| Horiguchi et al. (2018) [28,52] | FoodLog-FLD | 213 | CNN-based fixed-class | 54.60 to 72.40% |
| Subhi and Ali (2018) [70] | Self-collected Malaysian foods | 11 | Modified VGG19-CNN, 21 convolutional layers, 3 fully connected layers | Not reported |
| Islam et al. (2018) [71] | Food-11 dataset | 11 | CNN, 5 convolution layers, 3 max-pooling layers, 1 fully connected layer | 74.70% |
| | Food-11 dataset | 11 | Inception V3 pre-trained, 2 fully connected layers | 92.86% |
| Jeny et al. (2019) [72] | Self-collected, Bangladeshi foods | 6 | FoNet-based deep residual neural network with 47 layers | 98.16% |
| Razali et al. (2021) [73] | Sabahan foods | 11 | EFFNet + CNN | 94.01% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Nadeem, M.; Shen, H.; Choy, L.; Barakat, J.M.H. Smart Diet Diary: Real-Time Mobile Application for Food Recognition. Appl. Syst. Innov. 2023, 6, 53. https://doi.org/10.3390/asi6020053

AMA Style: Nadeem M, Shen H, Choy L, Barakat JMH. Smart Diet Diary: Real-Time Mobile Application for Food Recognition. Applied System Innovation. 2023; 6(2):53. https://doi.org/10.3390/asi6020053

Chicago/Turabian Style: Nadeem, Muhammad, Henry Shen, Lincoln Choy, and Julien Moussa H. Barakat. 2023. "Smart Diet Diary: Real-Time Mobile Application for Food Recognition" Applied System Innovation 6, no. 2: 53. https://doi.org/10.3390/asi6020053

APA Style: Nadeem, M., Shen, H., Choy, L., & Barakat, J. M. H. (2023). Smart Diet Diary: Real-Time Mobile Application for Food Recognition. Applied System Innovation, 6(2), 53. https://doi.org/10.3390/asi6020053