A Low-Cost Collaborative Robot for Science and Education Purposes to Foster the Industry 4.0 Implementation

Abstract

1. Introduction

2. Materials and Methods

2.1. Hardware

2.1.1. 3D Printing

2.1.2. Control and Movement

2.1.3. ESP32 Microcontroller and Communication Protocols

- UART: used for communication between the ESP32 of each robot axis and its respective stepper motor controller, and between the main ESP32 and the PC (MATLAB interface) through a USB-to-TTL converter.

- ESP-NOW: used for communication between the main ESP32 and the ESP32 of each axis.

- I2C: used for communication between the ESP32 of each axis and the analog-to-digital converter (ADC) that monitors the electrical current drawn by each stepper motor.

2.2. Software

2.3. Methods and Algorithms

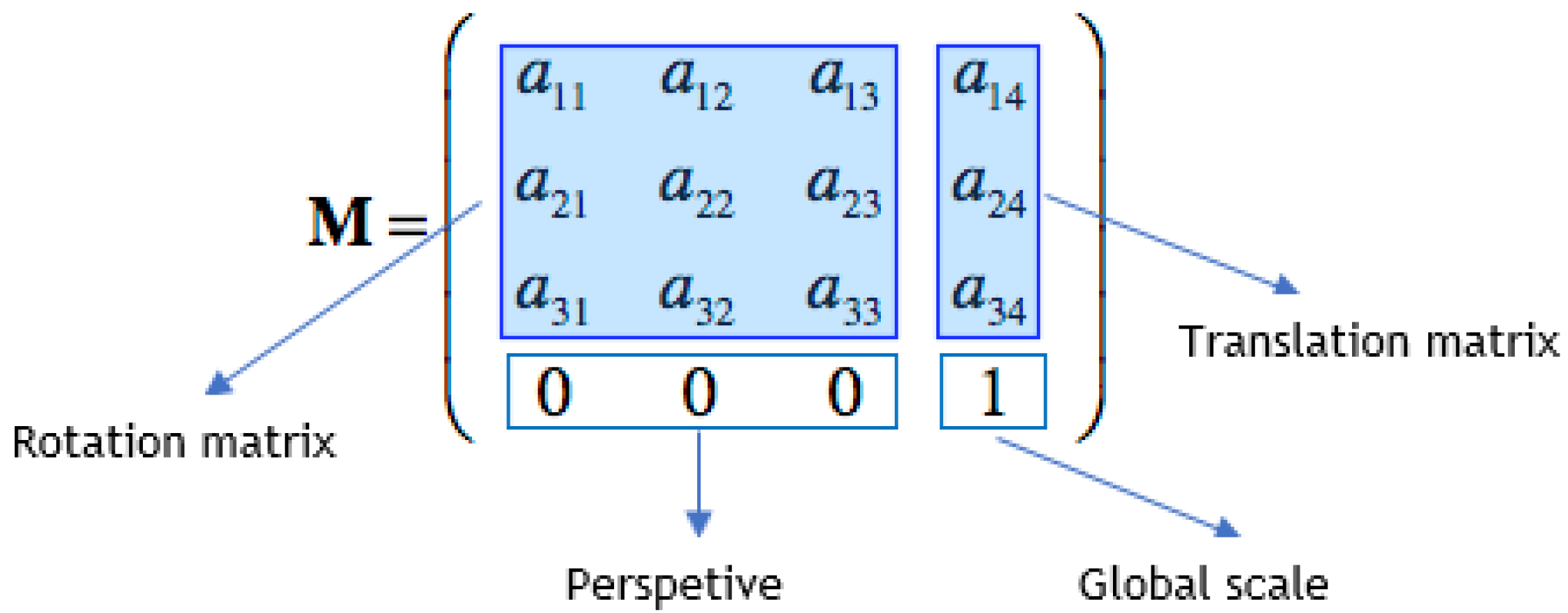

2.3.1. Homogeneous Transformation Matrix

2.3.2. Direct Kinematics

- ai: link length;

- αi: link twist;

- di: link offset;

- θi: joint angle.
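
For reference, the four parameters above can be combined into a single link transform. The expression below is a sketch that assumes the standard (distal) Denavit-Hartenberg convention, since the variant used is not stated here; the frame labels $i-1$ and $i$ are notation introduced for this illustration.

```latex
{}^{i-1}A_i
= \mathrm{Rot}_z(\theta_i)\,\mathrm{Trans}_z(d_i)\,\mathrm{Trans}_x(a_i)\,\mathrm{Rot}_x(\alpha_i)
= \begin{bmatrix}
\cos\theta_i & -\sin\theta_i\cos\alpha_i & \sin\theta_i\sin\alpha_i & a_i\cos\theta_i \\
\sin\theta_i & \cos\theta_i\cos\alpha_i & -\cos\theta_i\sin\alpha_i & a_i\sin\theta_i \\
0 & \sin\alpha_i & \cos\alpha_i & d_i \\
0 & 0 & 0 & 1
\end{bmatrix},
\qquad
{}^{0}T_6 = {}^{0}A_1\,{}^{1}A_2\,{}^{2}A_3\,{}^{3}A_4\,{}^{4}A_5\,{}^{5}A_6
```

Under this assumption, the direct kinematics of a six-joint arm is the product of the six link transforms, which gives the position and orientation of the end effector relative to the base frame.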

2.3.3. Inverse Kinematics

- There may be multiple, or even infinite, solutions;

- There may be no solution (the generalized position is outside the workspace);

- The kinematic equations are non-linear, so a closed-form (analytical) solution is not always available.
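
The first two difficulties can be seen on a much simpler mechanism than the six-axis arm. The sketch below solves the inverse kinematics of a planar two-link arm in closed form; it is an illustration only, not the method used for the robot. The function names `planar_2r_ik` and `planar_2r_fk` are hypothetical, and the link lengths simply reuse the values of d2 and d3 as plausible magnitudes.

```python
import math


def planar_2r_ik(x, y, l1, l2):
    """Return every (theta1, theta2) pair, in radians, that reaches (x, y)."""
    # The law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0:
        return []  # target outside the workspace: no solution exists
    solutions = []
    for sign in (1.0, -1.0):  # the two elbow configurations
        theta2 = sign * math.acos(c2)
        theta1 = math.atan2(y, x) - math.atan2(
            l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
        )
        solutions.append((theta1, theta2))
    return solutions


def planar_2r_fk(theta1, theta2, l1, l2):
    """Forward kinematics of the same arm, used to check each solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


# Illustrative link lengths in mm (assumption: values of d2 and d3).
L1, L2 = 204.0, 230.0

for target in [(300.0, 200.0), (500.0, 0.0)]:
    sols = planar_2r_ik(target[0], target[1], L1, L2)
    print(target, "->", len(sols), "solution(s)")
    for t1, t2 in sols:
        print("   joint angles [deg]:",
              round(math.degrees(t1), 2), round(math.degrees(t2), 2))
```

The reachable target yields two distinct joint configurations for the same point, while the target at 500 mm lies beyond the combined link length of 434 mm and returns an empty list. For a six-axis arm the same effects appear with more branches, which is one reason numerical or learned solvers are often considered.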

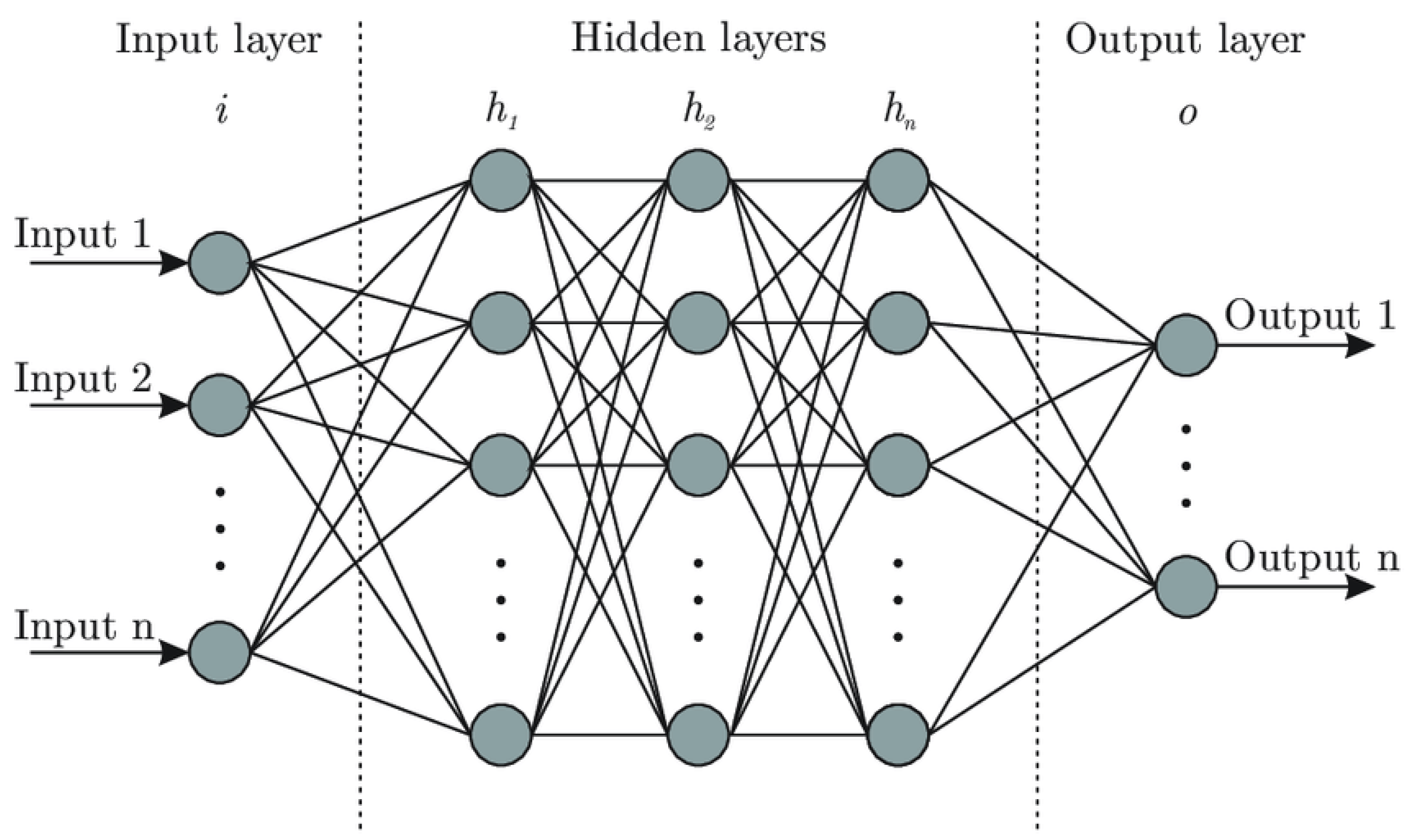

2.3.4. ANN—Artificial Neural Network

3. Results

3.1. Parts and Structure of the Robotic Arm

3.2. Mathematical Formulation of the DH Convention and Direct/Inverse Kinematics

3.3. Electrical Circuit and Control

3.4. Graphical User Interface—MATLAB

3.5. 3D Printing and Assembly of the Robotic Arm

4. Analysis and Discussion of Results

4.1. Test No. 1: Payload

4.2. Test No. 2: Positioning Error

4.3. Test No. 3: Accuracy and Repeatability

4.4. Test No. 4: Electric Current with Payload Variation

- For payloads below 100 g, the electric current is low, so the measurement is influenced by the common-mode gain of the amplifier used in the current measurement circuit.

- When the movement involves only one joint axis (P (0,50,0) and P (0,0,50)), the increase in current with increasing payload can be distinguished. The same could not be verified for P (−50,0,0), because the current variation was small and was influenced by the differential gain.

- During the movement from P (0,0,0) to P (−50,50,50), the current does not increase significantly with the payload, because more than one joint moves and the force exerted by each joint depends on the angles of the other joints. This is why the graph for P (0,50,0) differs from the graph for P (0,50,0) [θ2], and the graph for P (0,0,50) differs from the graph for P (0,0,50) [θ3].

4.5. Test No. 5: Collision Detection

5. Conclusions

Suggestions for Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| d | Distance [mm] |
|---|---|
| d1 | 163.00 |
| d2 | 204.00 |
| d3 | 230.00 |
| d4 | 100.00 |
| d5 | 100.00 |
| d6 | 100.00 |

| Link | a [mm] | α [°] | d [mm] | θ [°] |
|---|---|---|---|---|
| L01 | 0 | 90 | d1 | θ1 |
| L12 | d2 | 0 | 0 | θ2 |
| L23 | d3 | 0 | 0 | θ3 |
| L34 | 0 | 90 | d4 | θ4 |
| L45 | 0 | −90 | d5 | θ5 |
| L56 | 0 | 0 | d6 | θ6 |
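
The DH table and the link distances are enough to evaluate the direct kinematics numerically. The sketch below chains the six link transforms in plain Python. It assumes the standard DH link matrix and that the joint angles θ1 to θ6 enter without additional zero offsets, neither of which is stated here; the helper names `dh_matrix`, `matmul` and `forward_kinematics` are hypothetical.

```python
import math

# Link distances in mm, copied from the distance table.
D1, D2, D3, D4, D5, D6 = 163.0, 204.0, 230.0, 100.0, 100.0, 100.0

# One (a [mm], alpha [deg], d [mm]) tuple per link, in DH-table order.
DH_ROWS = [
    (0.0, 90.0, D1),
    (D2, 0.0, 0.0),
    (D3, 0.0, 0.0),
    (0.0, 90.0, D4),
    (0.0, -90.0, D5),
    (0.0, 0.0, D6),
]


def dh_matrix(a, alpha_deg, d, theta_deg):
    """Standard DH link transform as a 4x4 nested list (assumed convention)."""
    ca = math.cos(math.radians(alpha_deg))
    sa = math.sin(math.radians(alpha_deg))
    ct = math.cos(math.radians(theta_deg))
    st = math.sin(math.radians(theta_deg))
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(thetas_deg):
    """Base-to-end-effector transform for six joint angles in degrees."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for (a, alpha, d), theta in zip(DH_ROWS, thetas_deg):
        T = matmul(T, dh_matrix(a, alpha, d, theta))
    return T


T = forward_kinematics([0.0] * 6)
print("End-effector position [mm]:", [round(T[i][3], 3) for i in range(3)])
```

With all six joint angles set to zero, this chain places the end effector at approximately (434, −200, 63) mm in the base frame. Whether that corresponds to the physical home pose of the robot depends on the joint zero offsets, which are an assumption here.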

| Filament | Temperature [°C] | Bed Temperature [°C] | Print Speed [mm/s] | Layer Height [mm] | Infill Density [%] |
|---|---|---|---|---|---|
| PLA | 215 | 70 | 80 | 0.2 | 20–50 |
| PETG | 240 | 80 | 80 | 0.2 | 40 |

| | P1 (still, drive active) | P2 (still, drive active) | P3 (still, drive active) | P4 (still, drive active) | P5 (still, drive active) | P1 (after drive disabled) | P2 (after drive disabled) | P3 (after drive disabled) | P4 (after drive disabled) | P5 (after drive disabled) |
|---|---|---|---|---|---|---|---|---|---|---|
| Joints [°] | 3.51 × 10⁻² | 1.41 × 10⁻² | 1.29 × 10⁻² | 8.16 × 10⁻³ | 1.41 × 10⁻² | 4.08 × 10⁻² | 7.79 × 10⁻² | 5.35 × 10⁻² | 1.83 × 10⁻² | 4.93 × 10⁻² |
| Position [mm] | 1.42 × 10⁻¹ | 9.99 × 10⁻² | 8.80 × 10⁻² | 1.78 × 10⁻² | 7.25 × 10⁻² | 1.65 × 10⁻¹ | 6.27 × 10⁻¹ | 1.71 × 10⁻¹ | 8.44 × 10⁻² | 1.06 |
| Orientation [°] | 3.51 × 10⁻² | 1.73 × 10⁻² | 1.73 × 10⁻² | 0.00 | 1.83 × 10⁻² | 4.04 × 10⁻² | 1.10 × 10⁻¹ | 8.16 × 10⁻³ | 2.31 × 10⁻² | 2.54 × 10⁻¹ |

| | Joint θ1 [°] | Joint θ2 [°] | Joint θ3 [°] | Position X [mm] | Position Y [mm] | Position Z [mm] | Orientation X [°] | Orientation Y [°] | Orientation Z [°] |
|---|---|---|---|---|---|---|---|---|---|
| P1 | 0.005 | 0.002 | 0.018 | 0.025 | 0.044 | 0.063 | 0.00 | 0.00 | 0.02 |
| P2 | 0.004 | 0.004 | 0.018 | 0.031 | 0.013 | 0.068 | 0.00 | 0.00 | 0.02 |
| P3 | 0.005 | 0.009 | 0.013 | 0.031 | 0.018 | 0.085 | 0.00 | 0.00 | 0.02 |
| P4 | 0.000 | 0.002 | 0.021 | 0.034 | 0.031 | 0.069 | 0.00 | 0.00 | 0.02 |
| P5 | 0.004 | 0.003 | 0.018 | 0.026 | 0.052 | 0.050 | 0.00 | 0.00 | 0.02 |

| Position | Accuracy [mm] | Repeatability [mm] | Accuracy [°] | Repeatability [°] |
|---|---|---|---|---|
| P5 | APp = 0.090; APx = 0.041; APy = 0.074; APz = −0.031 | RP = 0.2811 | APo = 0.0191 | RPo = ±0.0459 |
| P4 | APp = 0.100; APx = 0.051; APy = −0.047; APz = −0.072 | RP = 0.3423 | APo = 0.0173 | RPo = ±0.0579 |
| P3 | APp = 0.091; APx = −0.047; APy = −0.014; APz = −0.076 | RP = 0.254 | APo = 0.0217 | RPo = ±0.0213 |
| P2 | APp = 0.170; APx = 0.022; APy = 0.026; APz = 0.166 | RP = 0.3835 | APo = 0.0344 | RPo = ±0.0524 |
| P1 | APp = 0.131; APx = −0.013; APy = −0.051; APz = 0.119 | RP = 0.2836 | APo = 0.0316 | RPo = ±0.0364 |
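
The relationship between the per-axis components and the overall positioning accuracy in this table can be checked with a few lines of code. The sketch below assumes the ISO 9283 definitions: APp is the Euclidean norm of (APx, APy, APz), and RP is the mean distance of the attained positions from their barycentre plus three sample standard deviations. The function names and the sample measurements are hypothetical and are not data from the tests.

```python
import math


def positioning_accuracy(ap_x, ap_y, ap_z):
    """APp: Euclidean norm of the per-axis accuracy components."""
    return math.sqrt(ap_x ** 2 + ap_y ** 2 + ap_z ** 2)


def positioning_repeatability(points):
    """RP: mean distance to the barycentre plus 3 sample standard deviations."""
    n = len(points)
    centre = [sum(p[i] for p in points) / n for i in range(3)]
    dists = [
        math.sqrt(sum((p[i] - centre[i]) ** 2 for i in range(3)))
        for p in points
    ]
    mean = sum(dists) / n
    std = math.sqrt(sum((l - mean) ** 2 for l in dists) / (n - 1))
    return mean + 3.0 * std


# (APx, APy, APz) in mm, copied from the table.
COMPONENTS = {
    "P5": (0.041, 0.074, -0.031),
    "P4": (0.051, -0.047, -0.072),
    "P3": (-0.047, -0.014, -0.076),
    "P2": (0.022, 0.026, 0.166),
    "P1": (-0.013, -0.051, 0.119),
}
for name, comps in COMPONENTS.items():
    print(name, "APp [mm] =", round(positioning_accuracy(*comps), 3))

# Hypothetical attained positions in mm, for illustration only.
sample = [(0.10, 0.02, -0.05), (0.05, -0.03, 0.01),
          (0.12, 0.00, 0.04), (0.07, 0.05, -0.02)]
print("RP of the hypothetical sample [mm] =",
      round(positioning_repeatability(sample), 4))
```

The recomputed APp values agree with the tabulated ones to within about 0.001 mm; the small differences are consistent with the components having been rounded to three decimals.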

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ananias, E.; Gaspar, P.D. A Low-Cost Collaborative Robot for Science and Education Purposes to Foster the Industry 4.0 Implementation. Appl. Syst. Innov. 2022, 5, 72. https://doi.org/10.3390/asi5040072
