1. Introduction

The number of computing devices that people encounter in their daily lives is increasing. Beyond the typical form factor of a desktop computer, computing devices are becoming smaller, more abundant, and ubiquitously available. The vision of “ubiquitous computing” is more apparent today owing to the myriad of connected everyday appliances known as the Internet of Things (IoT). Both ubiquitous computing and IoT emphasize environment-centric sensing and service provision through ambient intelligence. Wearable computing, on the other hand, complements this environment-centric view with an intimate first-person view realized by always-on, always-accessible, and always-connected wearables. Rapid advances in ubiquitous and wearable computing have established a viable infrastructure for connecting people over long distances and overcoming the barriers of physical location. The recent COVID-19 pandemic has accelerated the use of such technology [1,2] in the form of remote collaboration, working from home, teleconferencing, online education, and metaverses enriched with various configurations of digital twins (DTs) [3], augmented reality (AR), virtual reality (VR), mixed reality (MR), and extended reality (XR).

Numerous reports and surveys support the widespread adoption of wearables, XR, and metaverses in the foreseeable future. According to BCG and Mordor Intelligence, XR markets are growing and will reach 30.7 billion USD in 2021 and 300 billion USD by 2024, respectively. Another report by IDC forecast that consumer spending on AR and VR technology would be approximately 6.36 billion USD in 2020, accounting for 53% of global AR and VR spending. A survey published in February 2021 by ARtillery Intelligence projects that enterprise AR glasses hardware and software revenue will rise to 2.05 billion USD in 2021 and 11.99 billion USD by 2024. XR industry experts forecast that such immersive technologies will have a great impact on the healthcare and medical sector [4].

In this paper, we explore the opportunities and challenges of smartglass-assisted interactive telementoring and its applications. Our contribution is threefold:

First, we elicit requirements and trends by reviewing recent telementoring studies;

Second, we define the term “smartglass-assisted interactive telementoring (SAIT)” to refer to specialized use cases of telementoring and elaborate on its distinguishing characteristics;

Third, we identify opportunities and challenges of SAIT applications through five illustrative and descriptive scenarios.

2. Related Work

In this section, we review recent attempts at remote collaboration [5] and XR collaboration [6] using wearable displays and various interaction styles [7,8,9] to elicit requirements for effective telementoring. Rather than following the systematic review or meta-review approaches of [10,11], we focus specifically on remote collaboration and telementoring studies published from 2015 to 2021.

Schäfer et al. presented a comprehensive survey of synchronous remote collaboration systems based on AR/VR/MR technology [5]. They identified a “remote experts” category of collaboration systems involving a local and a remote user [5]. This category shares its fundamental concepts and application areas with XR collaboration [6], as well as with the smartglass-assisted interactive telementoring approach we define in Section 3.

2.1. Wearable Displays

Remote collaboration attempts to remove the physical barriers between remote workers. One such technique is to share a remote worker’s view and display it on the local site’s displays. Wearable displays such as head-mounted displays (HMDs) worn by collaborators enable users to share their views while naturally supporting hands-free actions. Because wearable displays can present additional information to the wearer, they have enabled a wide range of applications. Wearable displays can be categorized as optical see-through (OST) or video see-through (VST) displays, and each type involves trade-offs. In OST HMDs, the wearer sees the real world directly through half-transparent mirrors placed in front of the eyes, which optically combine the real scene with computer-generated images without processing latency or delays [12]. In VST HMDs, the real world is first captured by video cameras with a wide field of view (FoV) mounted on the HMD, and the computer-generated images are then electronically combined with the captured video [12].
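The electronic combination step in VST HMDs can be illustrated with standard alpha compositing (the “over” operator). The minimal sketch below blends a computer-generated RGBA layer over a captured RGB camera frame, pixel by pixel. The nested-list frame representation and the `composite_vst` name are our own illustrative assumptions, not an API of any HMD SDK; real devices perform this step on the GPU and must also compensate for camera-to-eye offset and latency.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]        # camera pixel: red, green, blue in 0..255
RGBA = Tuple[int, int, int, int]  # overlay pixel: red, green, blue, alpha in 0..255


def composite_vst(camera: List[List[RGB]], overlay: List[List[RGBA]]) -> List[List[RGB]]:
    """Blend a computer-generated RGBA overlay over a captured RGB camera frame.

    Applies the "over" operator per channel: out = a * overlay + (1 - a) * camera,
    where a is the overlay alpha scaled to [0, 1].
    """
    if len(camera) != len(overlay) or any(
        len(cam_row) != len(cg_row) for cam_row, cg_row in zip(camera, overlay)
    ):
        raise ValueError("camera frame and overlay must have the same dimensions")

    result: List[List[RGB]] = []
    for cam_row, cg_row in zip(camera, overlay):
        out_row: List[RGB] = []
        for (cam_r, cam_g, cam_b), (cg_r, cg_g, cg_b, cg_a) in zip(cam_row, cg_row):
            a = cg_a / 255.0
            out_row.append((
                round(a * cg_r + (1 - a) * cam_r),
                round(a * cg_g + (1 - a) * cam_g),
                round(a * cg_b + (1 - a) * cam_b),
            ))
        result.append(out_row)
    return result


# A 1x2 frame: the first pixel is fully covered by a red annotation,
# the second is left untouched by a fully transparent overlay pixel.
frame = [[(10, 20, 30), (100, 100, 100)]]
layer = [[(255, 0, 0, 255), (0, 0, 0, 0)]]
print(composite_vst(frame, layer))  # [[(255, 0, 0), (100, 100, 100)]]
```

In an OST HMD, by contrast, no such per-pixel blending of camera data takes place, because the real scene reaches the eye optically.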

Google Glass, launched in 2013, demonstrated a viable use of OST HMDs through various situated applications in information visualization and augmented reality using images and instructions. Since then, commercial smartglasses from Microsoft [13,14,15,16,17,18,19,20,21], Google [22,23], HTC [7,8], Vuzix [24], Epson [25,26], and Brother [27] have integrated a wearable display with a sufficiently powerful computing unit that provides connectivity and a multimodal user interface. For example, recent OST HMDs such as HoloLens 2 and Magic Leap One provide high-resolution displays (e.g., up to 2048 × 1080), a wide FoV (52 and 50 degrees, respectively), and tracking and control capabilities. For a comprehensive review of HMDs for medical use, readers are referred to [28].

2.2. Medical and Surgical Telementoring

Telementoring is most beneficial when local and remote users have different degrees of knowledge, experience, and expertise. Promising application domains for telementoring (satisfying the conditions of remoteness and mentoring shown in Table 1) are the medical, surgical, and healthcare domains, in which there is a sufficient gap in knowledge and experience between two groups of people (i.e., mentors and mentees). Medical, and often surgical, telementoring is a well-examined application area that exploits these conditions to improve the overall performance of the users involved, as shown in Table 1.

Ballantyne et al. stated that telementoring “permits an expert surgeon, who remains in their own hospital, to instruct a novice in a remote location on how to perform a new operation or use a new surgical technology” [29]. El-Sabawi and Magee III explained that “surgical telementoring involves the use of information technology to provide real-time guidance and technical assistance in performing surgical procedures from an expert physician in a different geographical location. Similar to traditional mentoring, it plays a dual role of educating and providing care at the same time” [30]. Semsar et al. noted that “these remote work practices entail a local worker performing a physical task supported by a remote expert who monitors the work and gives instructions when needed—sometimes referred to as telementoring” [31]. From these views and previous medical and surgical telementoring studies, two types of telementoring can be identified. In the first type, the mentor’s view is shared so that mentees can learn from the mentor’s direct, real-time practice through learning-by-example or demonstration strategies. In the second type, the mentee’s view is shared so that mentors can give appropriate advice and deliver real-time feedback for training and instruction. For example, guidance can be provided to surgeons to train them to perform new operations [29,32]. Regardless of who takes the initiative, both types of telementoring can benefit from high-definition videoconferencing, wearable technology, robotic telementoring platforms, and augmented reality [30]. For a comprehensive review of surgical, medical, and healthcare telementoring studies, readers are referred to [10,11].

2.3. Interaction Styles of Telementoring

Telementoring systems have employed numerous interaction techniques, which have often been dictated by the devices and displays used (e.g., mobile devices, wearables, and large-screen displays). Schäfer et al. identified nine common interactive elements in remote collaboration systems: 3D object manipulation, media sharing, AR annotations, 2D drawing, AR viewport sharing, mid-air drawing in 3D, hand gestures, shared gaze awareness, and conveying facial expressions [5]. In our review of recent telementoring studies, we similarly identified four main interaction styles: touch-based, voice-based, gesture-based, and telestration user interfaces.

2.3.1. Touch-Based User Interface

A touch-based user interface (UI) is a common and direct interaction style used in many telementoring systems [16,17,18,19,33]. Most users are already familiar with touchscreens on smartphones and tablets, so touch-based UIs require little training time. However, in the medical and surgical domains, touching devices with the hands is strictly restricted or prohibited to maintain hygiene. In other domains, touch-based UIs for wearables are popular and considered the default. Smartglasses such as Google Glass, HoloLens, and Vuzix Blade all provide on-device touch-based UIs.

Figure 1 illustrates touch-based interaction styles in which the wearer controls the device by touching it (e.g., via a touchpad).

2.3.2. Voice-Based User Interface

A voice-based UI is another common interaction style in telementoring systems. Mentors and mentees use voice-based UIs to provide timely feedback and to request appropriate guidance through spoken conversation. Unlike touch-based UIs, voice-based UIs can be implemented entirely hands-free, avoiding hygiene concerns in medical and surgical telementoring. Naturally spoken language is intuitive and highly expressive, and the performance of speech recognizers has improved greatly in recent years thanks to artificial intelligence. Nonetheless, environments with many speakers or high noise levels, limited microphone quality, and large-vocabulary requirements pose implementation and deployment issues. A microphone is often integrated into the device used for telementoring (e.g., smartglasses and notebooks). Previous telementoring studies using smartglasses have provided variations of voice-based UIs [13,14,16,17,18,19,23,31,42].

Figure 2 illustrates hands-free, voice-based interaction styles.

2.3.3. Gesture-Based User Interface

A gesture-based UI mostly refers to the use of hand gestures in telementoring systems. Commercial HMDs such as the Oculus Quest 2 and Microsoft HoloLens 2 provide hand tracking via computer vision or separate controllers. Furthermore, specialized hardware and software such as data gloves and suits from Manus, BeBop Sensors, Feel the Same, and Teslasuit can be adopted to add gesture-based UIs. Like voice-based UIs, gesture-based UIs can be used without touching or holding a device. On the downside, gesture-based UIs are less expressive and often require additional, costly sensors, processing units, and heavy computation. In our review, several studies incorporated gesture-based UIs into their systems [22,36,42].

Figure 3 demonstrates typical touchless, gesture-based interaction styles.

2.3.4. Telestration User Interface

A telestrator is a device that can draw sketches over still or video images. In many telementoring systems, the similar functionalities of pointing, annotating, and drawing are called telestration, as depicted in Figure 4. Telestration enables mentors to instruct visually and point out areas of interest quickly, and mentees can use it to highlight problematic areas. Several studies in our review used some form of telestration in their systems [14,15,16,17,18,19,20,21,26,31,37,38,39,40], often together with voice-based UIs [14,16,17,18,19,20,31,39].
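To illustrate how a telestration stroke might be exchanged between mentor and mentee, the sketch below stores stroke points in normalized coordinates (0 to 1) relative to the shared video frame, so the same annotation can be redrawn on displays with different resolutions. The `Stroke` type and this normalization scheme are hypothetical choices made for illustration; they are not a format used by the reviewed systems.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Stroke:
    """A hypothetical telestration stroke drawn over a shared video frame."""

    author: str                  # e.g., "mentor" or "mentee"
    color: str = "#FF0000"       # drawing color as a hex RGB string
    # Points in normalized frame coordinates: (0, 0) is top-left, (1, 1) is bottom-right.
    points: List[Tuple[float, float]] = field(default_factory=list)

    def add_pixel_point(self, x: int, y: int, width: int, height: int) -> None:
        """Record a point drawn on a width x height view, normalized to [0, 1]."""
        if not (0 <= x <= width and 0 <= y <= height):
            raise ValueError("point lies outside the view")
        self.points.append((x / width, y / height))

    def to_pixels(self, width: int, height: int) -> List[Tuple[int, int]]:
        """Map the stroke onto a display with a (possibly different) resolution."""
        return [(round(u * width), round(v * height)) for u, v in self.points]


# The mentor draws on a 1280x720 view of the mentee's shared video ...
stroke = Stroke(author="mentor")
stroke.add_pixel_point(640, 360, 1280, 720)
stroke.add_pixel_point(320, 180, 1280, 720)
# ... and the mentee's 1920x1080 view redraws the same stroke.
print(stroke.to_pixels(1920, 1080))  # [(960, 540), (480, 270)]
```

Normalized 2D coordinates keep an annotation aligned with the shared frame regardless of each participant’s display resolution; anchoring annotations to tracked 3D positions in the scene would be a more involved alternative.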

2.3.5. Other Types of User Interfaces

Other interaction styles include tangible UIs [25], foot pedals [27], and avatars [21]. We believe that foot pedals, depicted in Figure 5, could work well with the aforementioned types of UIs (touch-based, voice-based, and gesture-based) and compensate for their shortcomings. For example, foot pedals could replace on-device, touch-based UIs in medical and surgical settings. This interaction style could also be used to confirm correctly recognized gestures or cancel incorrectly recognized ones.

Table 2 lists telementoring systems using smartglasses and the interaction styles discussed in this section that they employ.
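The confirm-or-cancel role suggested above for foot pedals can be sketched as a small state machine: a recognized gesture is held as pending and executed only when the pedal confirms it. The `GestureConfirmer` class, the pedal event names, the gesture labels, and the 3-second timeout are hypothetical and chosen only to illustrate the idea.

```python
from typing import Callable, Dict, Optional


class GestureConfirmer:
    """Hold a recognized gesture until a foot pedal confirms or cancels it (hypothetical)."""

    def __init__(self, actions: Dict[str, Callable[[], str]], timeout_s: float = 3.0) -> None:
        self.actions = actions          # gesture label -> action executed once confirmed
        self.timeout_s = timeout_s      # a pending gesture expires after this many seconds
        self.pending: Optional[str] = None
        self.pending_since = 0.0

    def on_gesture(self, label: str, now: float) -> None:
        """Called by the gesture recognizer; the gesture is only queued, not executed."""
        if label in self.actions:
            self.pending, self.pending_since = label, now

    def on_pedal(self, pedal: str, now: float) -> Optional[str]:
        """'confirm' executes the pending gesture; any other pedal discards it."""
        label = self.pending
        self.pending = None  # a pedal press always clears the pending gesture
        if label is None or now - self.pending_since > self.timeout_s:
            return None      # nothing pending, or the gesture has expired
        if pedal == "confirm":
            return self.actions[label]()
        return None          # "cancel": drop the misrecognized gesture


confirmer = GestureConfirmer({"pinch": lambda: "zoom in", "swipe_left": lambda: "previous image"})
confirmer.on_gesture("pinch", now=0.0)
print(confirmer.on_pedal("confirm", now=1.0))  # zoom in
confirmer.on_gesture("swipe_left", now=2.0)
print(confirmer.on_pedal("cancel", now=2.5))   # None
```

Keeping the pedal as a separate confirmation channel means a misrecognized gesture costs one extra pedal press instead of an unintended action, which matters most in sterile settings where the hands cannot touch the device.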

3. Smartglass-Assisted Interactive Telementoring

In our review of recent remote collaboration and telementoring studies in the previous section, we identified studies that use smartglasses and satisfy telementoring requirements, as shown in Table 2. Based on these studies, we define a specialized use case of telementoring, which we call “smartglass-assisted interactive telementoring (SAIT)”. As the term suggests, smartglasses are the required display hardware, unlike traditional medical and surgical telementoring, which allows alternative displays [31]. More specifically, the SAIT has the following characteristics that distinguish it from typical remote collaboration or telementoring in general.

Mentors and Mentees of Different Levels. The SAIT involves two groups of users categorized as mentors and mentees. Mentors are the substantially more knowledgeable and experienced group, with professional or technical expertise. Mentees are the less knowledgeable and experienced group, who would benefit from, or improve their performance with, the help received from their mentors.

Sharing of First-person and Eye-level View via Smartglass. Mentors, mentees, or both share their eye-level view captured by their HMDs, with sufficient FoV to provide enough detail and video quality for the intended telementoring tasks.

Extended Use Cases with Bi-directional Interaction. The SAIT aims to cover flexible and casual use cases beyond medical and surgical telementoring scenarios. In the SAIT, mentors and mentees are preferably mobile, situated, and hands-free, so that they can exploit multiple interaction channels on various occasions.
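To make the three defining characteristics easier to apply, the sketch below encodes them as checks over a hypothetical session description. The `Participant` fields, the ordinal expertise scale, and the reading of “different levels” as “every mentor outranks every mentee” are our own illustrative assumptions; the definition above fixes no particular values, and the “sufficient FoV” condition is left out because its threshold depends on the task.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Participant:
    """Hypothetical description of one SAIT participant."""

    role: str                 # "mentor" or "mentee"
    expertise: int            # assumed ordinal scale; higher means more experienced
    wears_smartglass: bool
    shares_view: bool         # streams the first-person, eye-level view from the HMD
    channels: List[str] = field(default_factory=list)  # e.g., ["voice", "telestration"]


def is_sait_session(participants: List[Participant]) -> bool:
    """Check a session against the three SAIT characteristics (illustrative only)."""
    mentors = [p for p in participants if p.role == "mentor"]
    mentees = [p for p in participants if p.role == "mentee"]
    if not mentors or not mentees:
        return False
    # 1. Mentors and mentees of different levels: every mentor outranks every mentee.
    different_levels = min(m.expertise for m in mentors) > max(e.expertise for e in mentees)
    # 2. Either or both sides share an eye-level view captured by smartglasses.
    shared_view = any(p.wears_smartglass and p.shares_view for p in participants)
    # 3. Bi-directional interaction: both sides have at least one interaction channel.
    bidirectional = any(m.channels for m in mentors) and any(e.channels for e in mentees)
    return different_levels and shared_view and bidirectional


# Paramedics scenario (Section 4.1): only the mentee wears smartglasses and shares the view.
session = [
    Participant("mentor", expertise=3, wears_smartglass=False, shares_view=False, channels=["voice"]),
    Participant("mentee", expertise=1, wears_smartglass=True, shares_view=True, channels=["voice"]),
]
print(is_sait_session(session))  # True
```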

To elaborate on these characteristics of the SAIT applications, five illustrative and descriptive scenarios are presented in the next section.

4. Five Scenarios for Smartglass-Assisted Interactive Telementoring

As discussed in Section 2, many remote collaboration and telementoring systems have been developed in the healthcare sector. In a survey of XR industry experts, training simulations in the healthcare sector were the most favored application [4].

Table 3, Table 4, Table 5, and Table 6 show XR industry experts’ views on promising XR applications in various domains, as reported in the 2020 Augmented and Virtual Reality Survey Report [4]. Training simulations and assisted surgeries are the top applications of immersive technologies in the healthcare sector, as shown in Table 3. Immersive teaching experiences and soft skills development are expected to be major applications of XR technologies in the education sector, as shown in Table 4. In the manufacturing sector, real-time remote assistance and workforce training are expected to be major XR applications, as shown in Table 5. Improvements in navigation solutions and urban planning are the top applications of immersive technologies in smart cities, as shown in Table 6.

Based on various XR applications across different domains, we have chosen five specific scenarios to elaborate on the concept and characteristics of the SAIT. The selected telementoring scenarios include the SAIT for paramedics, maintenance, culinary education and certification, hardware engineers, and foreigner navigation.

4.1. SAIT for Paramedics Scenario

Mentors and Mentees of Different Levels. This SAIT scenario involves dispatched paramedics as mentees and more experienced mentors at the medical center. Paramedics are trained to perform various skills in emergency settings (e.g., airway management, breathing, circulation, cardiac arrest, intravenous infusion, patient assessment, and wound management) and to administer emergency medications. Mentors with more medical expertise can guide and instruct less-experienced mentees (e.g., newly employed paramedics or those treating exceptional cases).

Sharing of First-person and Eye-level View via Smartglass. The mentees can share the views captured by their HMDs when they need assistance. The mentors can then intervene to assess a patient’s condition visually or walk through the medical treatment together with the mentee.

Figure 6 illustrates dispatched paramedic mentees in this SAIT scenario.

Extended Use Cases with Bi-directional Interaction. In this scenario, voice-based UIs are the dominant means of real-time communication between mentors and mentees.

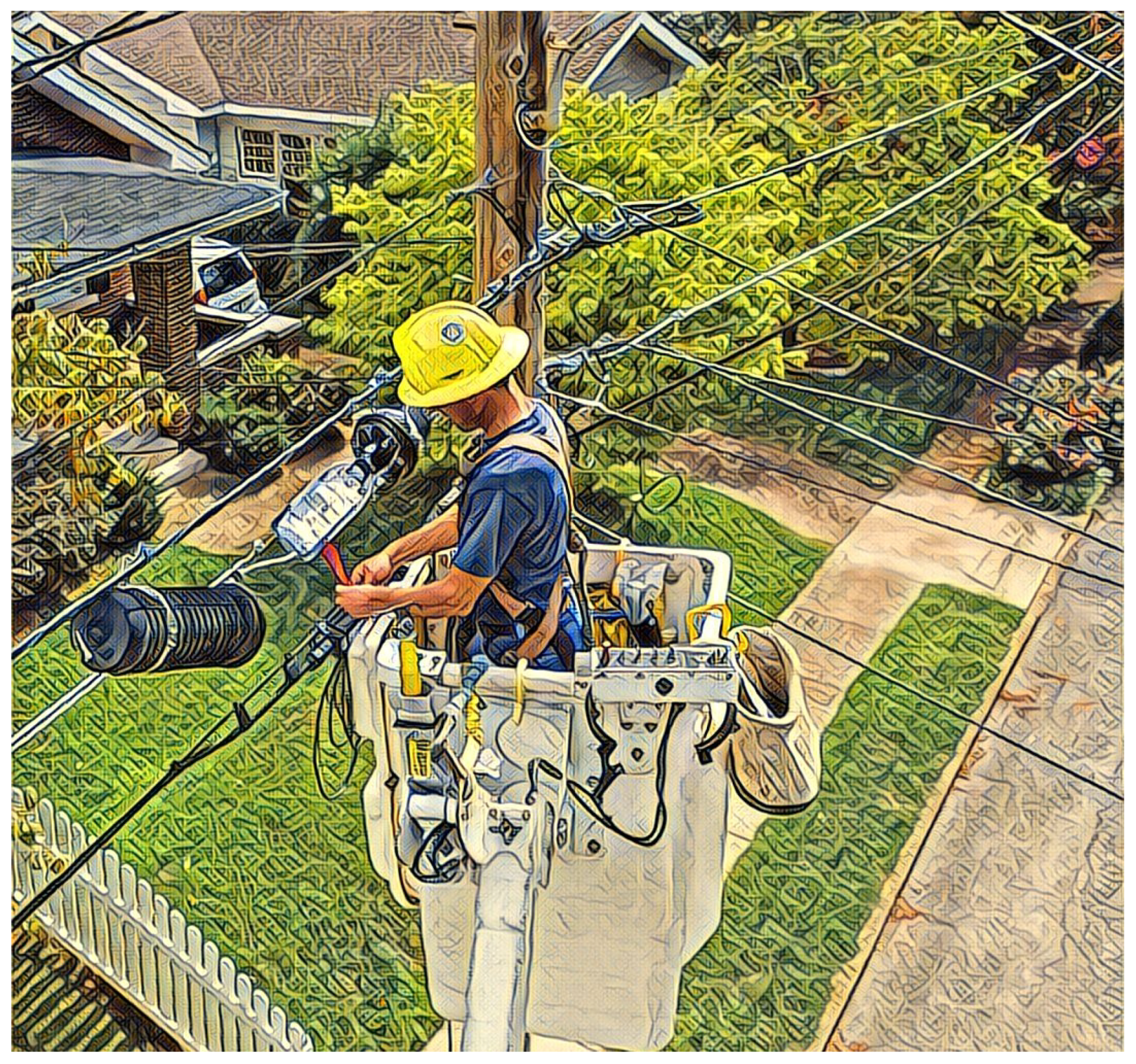

4.2. SAIT for Maintenance Scenario

Mentors and Mentees of Different Levels. This SAIT scenario involves on-site workers as mentees and remote experts as mentors. As illustrated in Figure 7, the work site may be too narrow and confined to physically accommodate multiple workers. In this scenario, mentors with more experience and expertise support and assist mentees in carrying out maintenance or repair tasks successfully.

Sharing of First-person and Eye-level View via Smartglass. The mentees share the views captured by their HMDs with remote expert mentors. The mentors can then intervene to assess the equipment and cables visually and to instruct the mentees through complex procedures.

Extended Use Cases with Bi-directional Interaction. In this scenario, voice-based UIs are used for real-time communication between mentors and mentees. Furthermore, telestration UIs make communication clearer and allow problematic areas of interest to be identified. For example, the mentor can highlight parts to be examined or replaced.

4.3. SAIT for Culinary Education and Certification Scenario

Mentors and Mentees of Different Levels. This scenario involves mentees who are interested in culinary education or in obtaining a culinary certification. Training by experienced chefs is helpful when learning to cook something new that requires new recipes or techniques. For example, a barista trainee can learn from winners of the World Barista Championship, or a cook can learn from renowned chefs, as illustrated in Figure 8.

Sharing of First-person and Eye-level View via Smartglass. The mentees’ eye-level view captured by their HMDs can be shared with mentors when the mentees are assessed for certification. In another use case, the mentor’s view captured by their HMD is shared with multiple mentees to educate and train them through demonstration strategies.

Extended Use Cases with Bi-directional Interaction. In this scenario, voice-based UIs are used to communicate between mentors and mentees in real-time. Furthermore, telestration UIs can be used to draw attention to specific areas of interest. For example, the mentor can highlight where to cut.

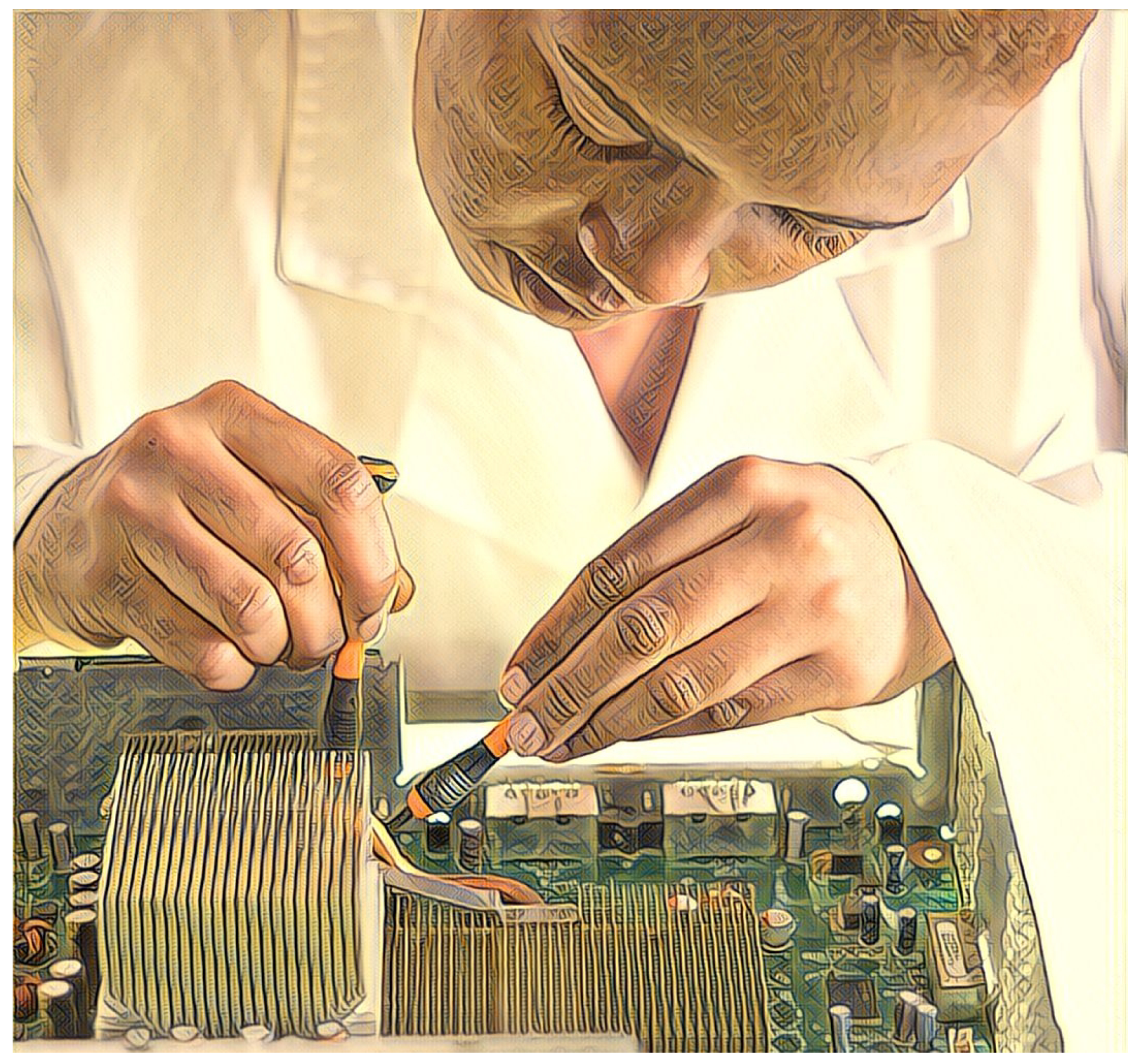

4.4. SAIT for Hardware Engineers Scenario

Mentors and Mentees of Different Levels. This scenario enables mentees to receive help from expert hardware engineers. For example, teachers as mentors can teach school courses that require hardware engineering, as shown in Figure 9. Remote mentors can also support do-it-yourself (DIY) mentees.

Sharing of First-person and Eye-level View via Smartglass. It is often difficult to figure out hardware problems or explain the problem clearly. In this scenario, mentees share their eye-level view captured by their HMD smartglasses with mentors to receive supportive feedback.

Extended Use Cases with Bi-directional Interaction. In this scenario, voice-based UIs are used for real-time communication between mentors and mentees. Furthermore, telestration UIs can be used to draw attention to specific areas of interest. For example, the mentor can highlight where to connect or dismantle.

4.5. SAIT for Foreigner Navigation Scenario

Mentors and Mentees of Different Levels. This scenario involves foreign travelers as mentees who have little information on where their destination is and how to reach it, as illustrated in Figure 10. It would be helpful if local experts could accompany them to guide their navigation. For this purpose, local residents can act as mentors and help the mentees during their travel.

Sharing of First-person and Eye-level View via Smartglass. The mentees’ eye-level view captured by their HMDs is shared with mentors. Since the mentors are familiar with the local site, they can give useful information such as where to find good restaurants or which public transportation to use.

Extended Use Cases with Bi-directional Interaction. In this scenario, voice-based UIs are used to communicate between mentors and mentees in real-time. Furthermore, telestration UIs can be used to draw attention to specific areas to explore and navigate. For example, the mentor can highlight and recommend popular landmarks to visit.

5. Opportunities and Challenges of SAIT

In this section, we discuss the foreseeable opportunities and challenges of the proposed use case of the SAIT.

5.1. Opportunities

As reviewed in Section 2, a large body of remote collaboration and telementoring studies focuses on the medical, surgical, and healthcare domains. We presented a specialized use case of telementoring, the SAIT, with three defining characteristics: mentors and mentees of different levels, sharing of a first-person and eye-level view via smartglass, and extended use cases with bi-directional interaction. We believe that more application domains remain to be explored for remote collaboration and telementoring. To go beyond typical medical and surgical applications, we presented five illustrative and descriptive SAIT scenarios that are more casual, tied to the use of smartglasses, and span the healthcare, education, manufacturing, and smart city domains, as shown in Table 3, Table 4, Table 5, and Table 6.

Several interaction styles for the SAIT, such as touch-based UIs [33,43], voice-based UIs [23], and telestration UIs, are already mature (e.g., supported by Microsoft HoloLens 2, as shown in Table 2), while novel interaction styles are emerging. For example, gesture-based UIs with finer granularity, such as HaptX Gloves, and interfaces that engage more of the human senses, such as the Virtuix Omni treadmill, are being developed. Facebook Reality Labs envisions a contextually aware, AI-powered interface for AR glasses that uses electromyography signals to perform an “intelligent click” and wristband haptics for feedback. Facebook has also patented an artificial reality hat as an alternative to traditional AR headsets and smartglasses. Apple announced AssistiveTouch for Apple Watch, which can detect subtle differences in muscle movement and tendon activity. Moreover, the widespread adoption of IoT and edge computing [44] enables flexible, adaptive, and seamless orchestration among smart devices and objects [44,45,46,47].

5.2. Technical and Societal Challenges

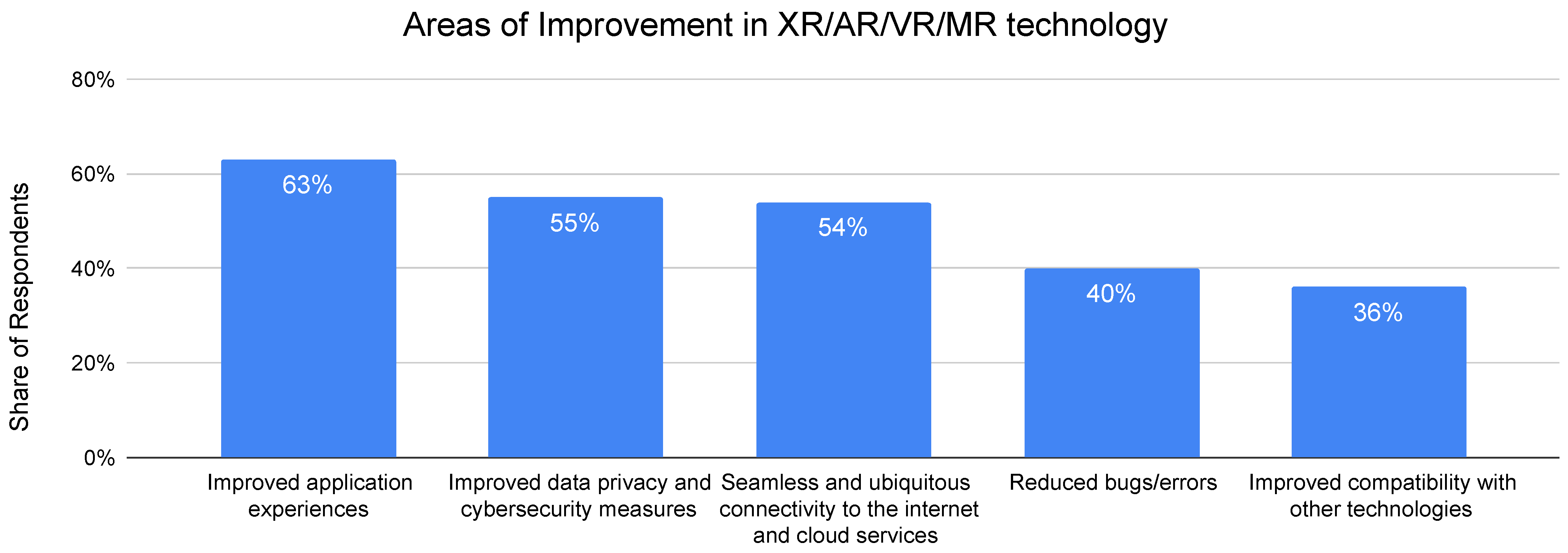

For the SAIT to succeed in XR telementoring, many technical and societal challenges must be overcome. XR industry experts have also identified urgent areas for improvement [4], as shown in Figure 11.

Since the SAIT requires mentors and mentees to use smartglasses, it inherits the challenges of smartglasses that have been identified and are being addressed [48,49]. For example, to support the SAIT on everyday occasions, HMDs should be lighter. To provide a clear and crisp view captured by the HMD’s camera, a wider FoV and higher resolution are preferred. Specifications and performance measures of smartglasses, including latency, content reproducibility, repeatability, synchronization, and warnings, should be enforced through guidelines and standards. For example, there are international standards for VR and AR concerning environment safety (IEEE P2048.5), immersive user interfaces (IEEE P2048.6), eyewear displays (IEC TR 63145-1-1:2018), and VR content (ISO/IEC TR 23842:2020).

Furthermore, the XR experiences in our scenarios are limited, since most of the informational content (e.g., live video streams and annotations) can hardly be considered XR content. Easy-to-use XR authoring tools for developers and service providers are desirable for delivering multiple AR, VR, and XR applications [50] and experiences in the SAIT scenarios. Moreover, sufficient network bandwidth for high-resolution video is important for sharing the views and content [51] of mentors or mentees without interruptions or delays. As more interaction data between mentors and mentees accumulate, deep learning approaches [52,53,54] can enhance various steps of the XR pipeline (e.g., registration, tracking, rendering and interaction, and task guidance [55,56]).
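A back-of-the-envelope calculation illustrates why bandwidth matters when views are shared. The sketch below computes the uncompressed bitrate of a video stream and the bitrate after compression. We borrow the 2048 × 1080 display resolution mentioned in Section 2.1 as a stand-in for the captured video; the 24-bit color depth, 30 frames per second, and 100:1 compression ratio are assumptions chosen only for illustration.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bitrate in bits per second."""
    return width * height * bits_per_pixel * fps


def compressed_bitrate_bps(raw_bps: float, compression_ratio: float) -> float:
    """Bitrate after a codec achieving the given (assumed) compression ratio."""
    return raw_bps / compression_ratio


raw = raw_bitrate_bps(2048, 1080, bits_per_pixel=24, fps=30)
print(f"raw: {raw / 1e9:.2f} Gbit/s")  # raw: 1.59 Gbit/s
print(f"compressed at 100:1: {compressed_bitrate_bps(raw, 100) / 1e6:.1f} Mbit/s")  # compressed at 100:1: 15.9 Mbit/s
```

Under these assumptions, each shared view still needs roughly 16 Mbit/s of sustained throughput; actual codec ratios vary widely with content and encoder settings, so the figure is indicative only.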

There are also societal challenges for the SAIT. Concerns about the security, safety, and privacy of the users involved should be carefully managed. As witnessed in the medical and surgical sectors, the use of cameras in the operating room is very sensitive and controversial; in many countries, installing closed-circuit television (CCTV) in operating rooms and monitoring with it are strictly restricted or prohibited. In such sensitive and intimate scenarios, mentors or mentees could be disallowed from using smartglasses at all. Society should reach a general consensus and establish regulations on the need for, and social acceptance of, smartglasses in the discussed SAIT scenarios.

6. Conclusions

In this paper, we explored XR concepts of remote collaboration and telementoring, focusing on the use of smartglasses. To elicit the requirements and trends of telementoring, we reviewed recent studies from 2015 to 2021. Based on this literature review, we defined three characteristics of the SAIT (smartglass-assisted interactive telementoring). To elaborate on this specialized use case of telementoring, we presented five illustrative and descriptive scenarios: paramedics, maintenance, culinary education and certification, hardware engineers, and foreigner navigation. Lastly, we identified the opportunities, as well as the technical and societal challenges to overcome, for successfully realizing the SAIT.

Our current approach has limitations that deserve further study. First, our approach was not a systematic review or meta-review, such as those conducted by other researchers [10,11]. We mostly investigated studies using commercially available smartglasses and might therefore have missed custom-designed prototypes presented before 2015. Indeed, our approach only covered high-fidelity smartglasses with stable hardware designs, whereas there could be low-fidelity approaches to surgical telementoring [10,11] that we did not include in our analysis. Another limitation is that our newly proposed SAIT scenarios are often situated outdoors and involve multiple simultaneous user interactions. This complexity calls for carefully orchestrated security and privacy measures in wearable computing and XR settings, which were beyond the scope of this paper.

Nonetheless, we expect the proposed SAIT to support various telementoring applications beyond medical and surgical telementoring, while harmoniously fostering cooperation through the smart devices of mentors and mentees at different scales for collocated, distributed, and remote collaboration.