Digital Deforestation: Comparing Automated Approaches to the Production of Digital Terrain Models (DTMs) in Agisoft Metashape

Abstract

1. Introduction

2. Materials and Methods

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

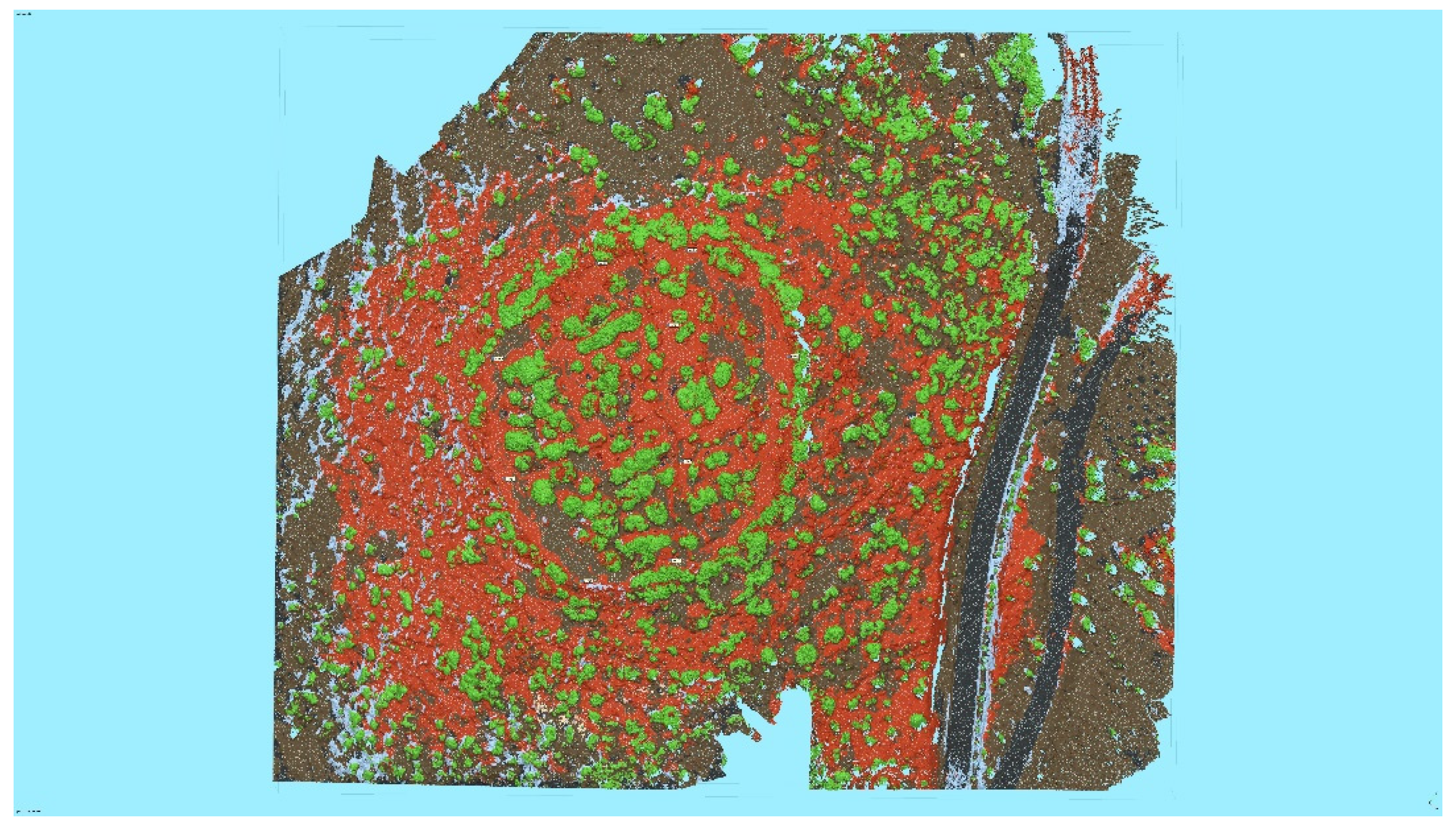

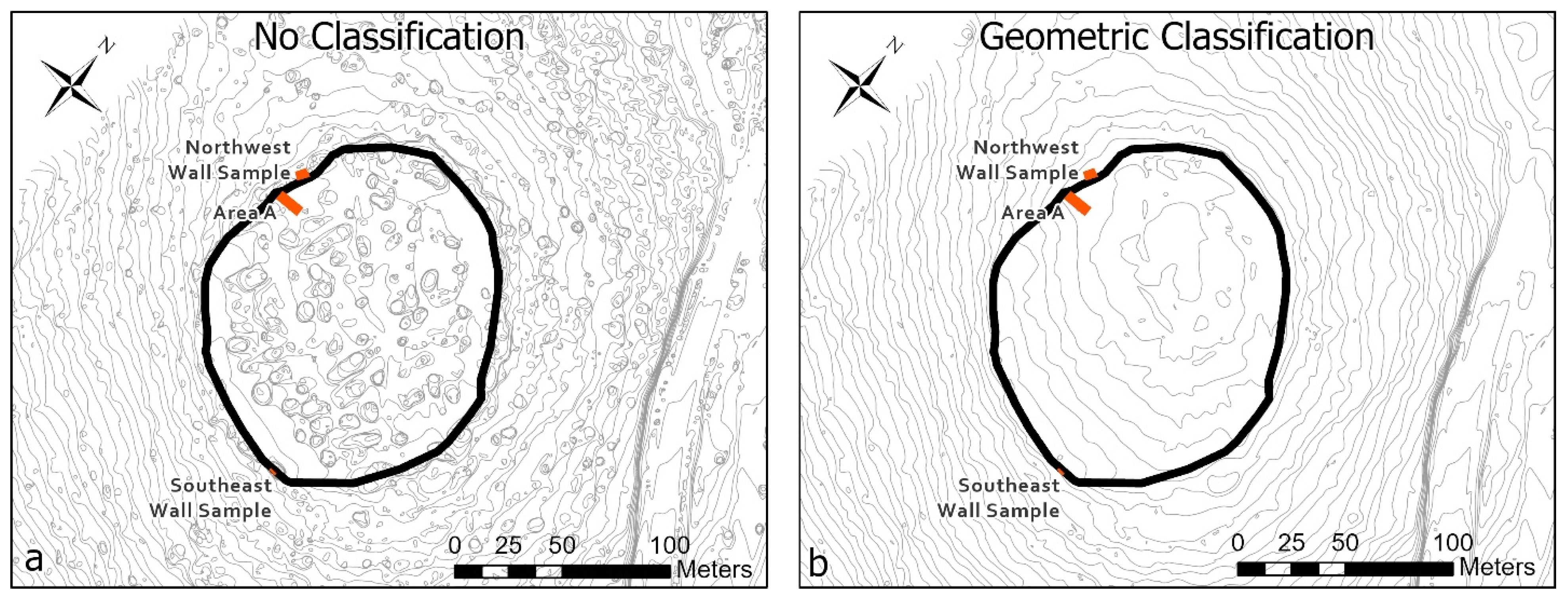

| Point Cloud Classification Method | User Parameters |
|---|---|
| Classify Points | Confidence: 0.00 |
| Classify Ground Points | Max angle (deg): 15; Max distance (m): 0.05; Cell size (m): 10 |
| Select Points by Color | Color: #b69b8a; Tolerance: 15; Channels: red, green, blue, hue, saturation, value |
| Assign Class | Fully manual |
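Agisoft describes Classify Ground Points as a two-step procedure. First, the cloud is divided into cells of the given Cell size, and the lowest point in each cell seeds a triangulated first approximation of the terrain. Second, a further point joins the ground class only if it lies within Max distance of that terrain model and the line joining it to a ground point makes an angle with the model smaller than Max angle. The Python below is a minimal, hypothetical sketch of those two tests, not Metashape's implementation. The function names are our own. Following the progressive TIN densification approach, we assume the angle is measured to each vertex of the terrain triangle beneath the point, so Metashape's exact rule may differ. The sketch also assumes the caller has already found that triangle, and it omits triangulation and iteration.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres
Facet = Tuple[Point, Point, Point]  # one triangle of the provisional terrain model


def seed_ground_points(points: Sequence[Point], cell_size: float) -> List[Point]:
    """Step 1 (hypothetical sketch): keep the lowest point of each XY grid cell."""
    lowest: Dict[Tuple[int, int], Point] = {}
    for p in points:
        cell = (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))
        if cell not in lowest or p[2] < lowest[cell][2]:
            lowest[cell] = p
    return list(lowest.values())


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _length(a: Point) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def accepts_as_ground(p: Point, facet: Facet,
                      max_angle_deg: float, max_distance: float) -> bool:
    """Step 2 (hypothetical sketch): distance and angle tests against one facet."""
    a, b, c = facet
    normal = _cross(_sub(b, a), _sub(c, a))
    n_len = _length(normal)
    if n_len == 0.0:
        raise ValueError("degenerate facet")
    # Perpendicular distance from p to the plane of the facet.
    d = abs(sum(n * o for n, o in zip(normal, _sub(p, a)))) / n_len
    if d > max_distance:
        return False
    # Angle between the facet plane and the line from p to each vertex,
    # using sin(angle) = d / |p - vertex|.
    for v in facet:
        r = _length(_sub(p, v))
        if r == 0.0:
            continue  # p coincides with a vertex, so the angle is zero
        angle = math.degrees(math.asin(min(1.0, d / r)))
        if angle > max_angle_deg:
            return False
    return True


# Parameter values taken from the table above.
MAX_ANGLE_DEG, MAX_DISTANCE_M, CELL_SIZE_M = 15.0, 0.05, 10.0

flat = ((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0))
print(accepts_as_ground((2.0, 2.0, 0.03), flat, MAX_ANGLE_DEG, MAX_DISTANCE_M))  # True
print(accepts_as_ground((2.0, 2.0, 0.50), flat, MAX_ANGLE_DEG, MAX_DISTANCE_M))  # False
```

In a full progressive densification, accepted points are added to the terrain model and the test is repeated until no further points qualify. Raising Max distance or Max angle therefore admits more points into the ground class, including more low vegetation.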

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Howland, M.D.; Tamberino, A.; Liritzis, I.; Levy, T.E. Digital Deforestation: Comparing Automated Approaches to the Production of Digital Terrain Models (DTMs) in Agisoft Metashape. Quaternary 2022, 5, 5. https://doi.org/10.3390/quat5010005
