Toward Affirmation of Recovery of Deeply Embedded Autobiographical Memory with Background Music and Identification of an EEG Biomarker in Combination with EDA Signal Using Wearable Sensors

Abstract

1. Introduction

1.1. Types of Memories and Their Regions

1.2. Music Therapy

1.3. Biomarker

“A characteristic that is objectively measured and evaluated as an indicator of normal biological processes, pathogenic processes, or pharmacologic responses to a therapeutic intervention.”

1.4. Related Works

2. Materials and Methods

2.1. Experimental Protocols

2.2. Materials

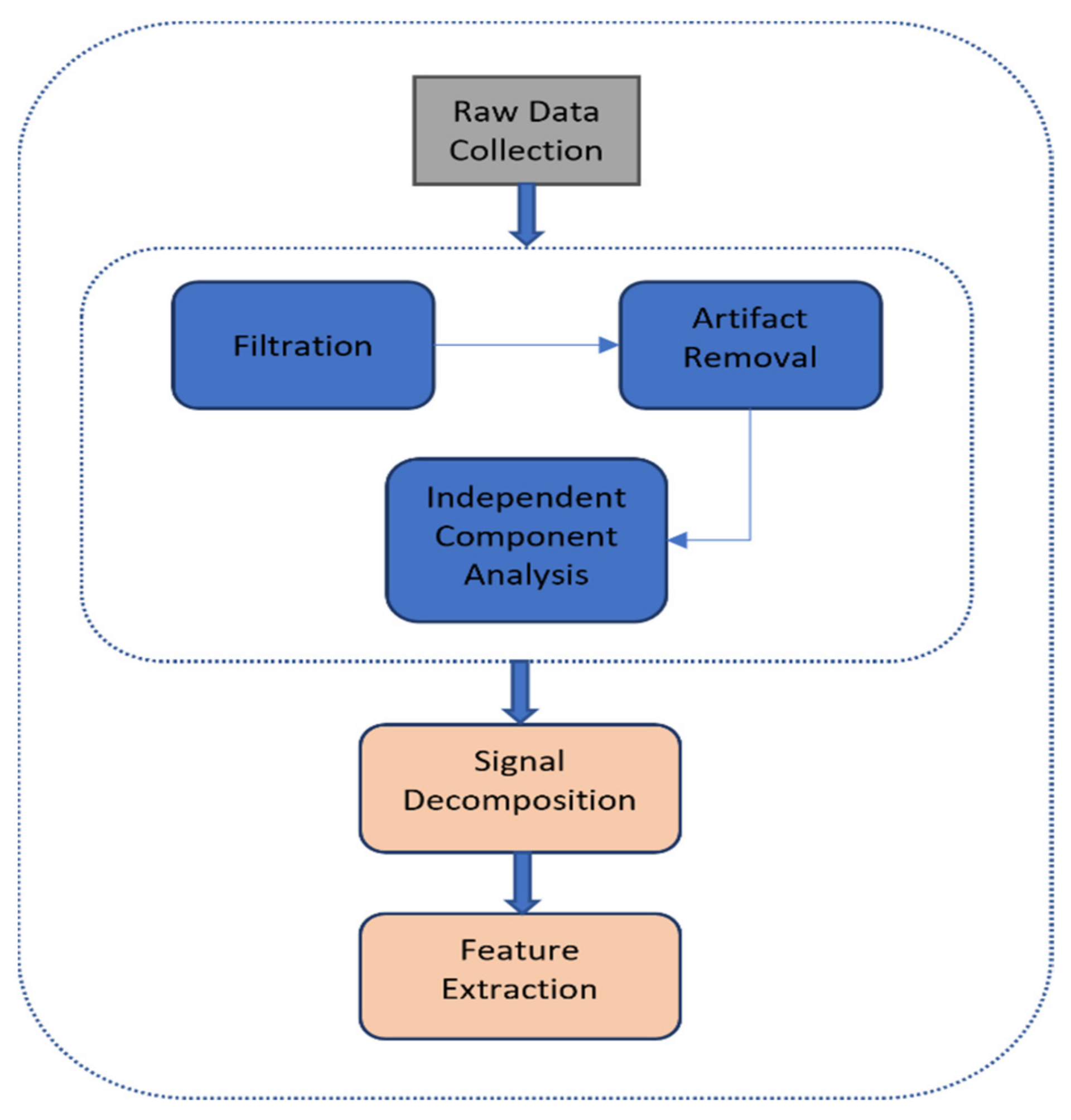

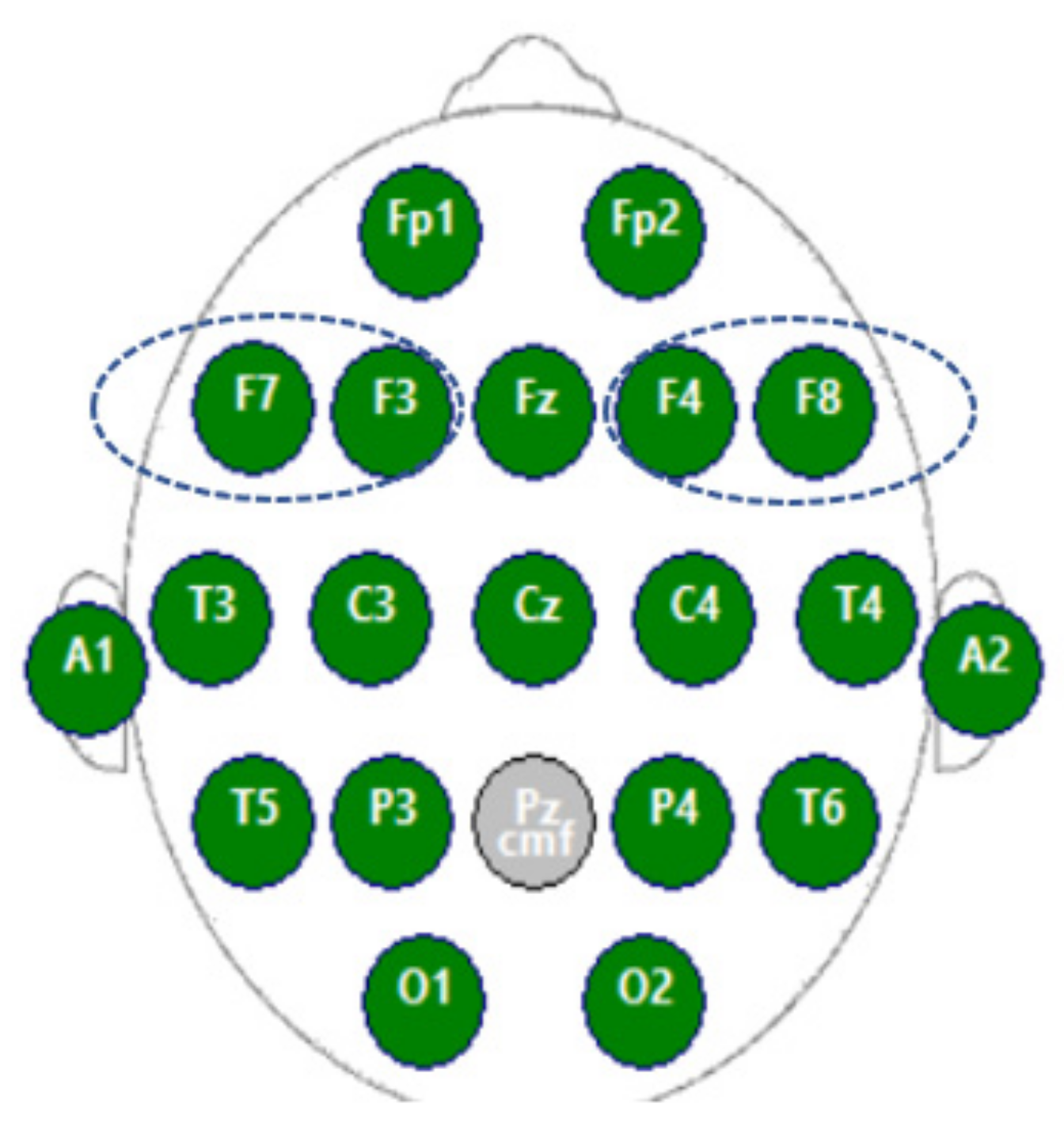

2.3. EEG Data Processing

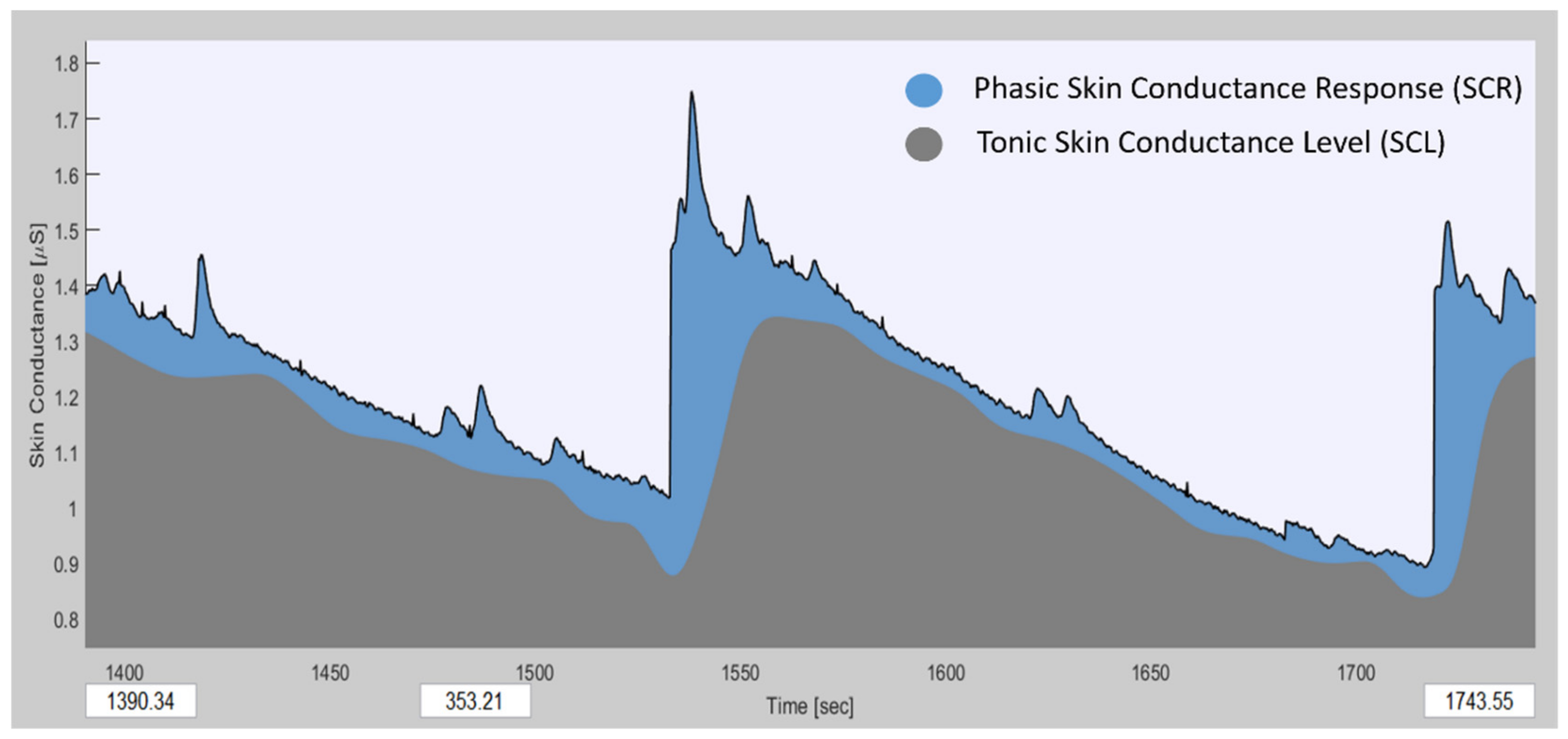

2.4. EDA Data Processing

2.5. Extracted Features

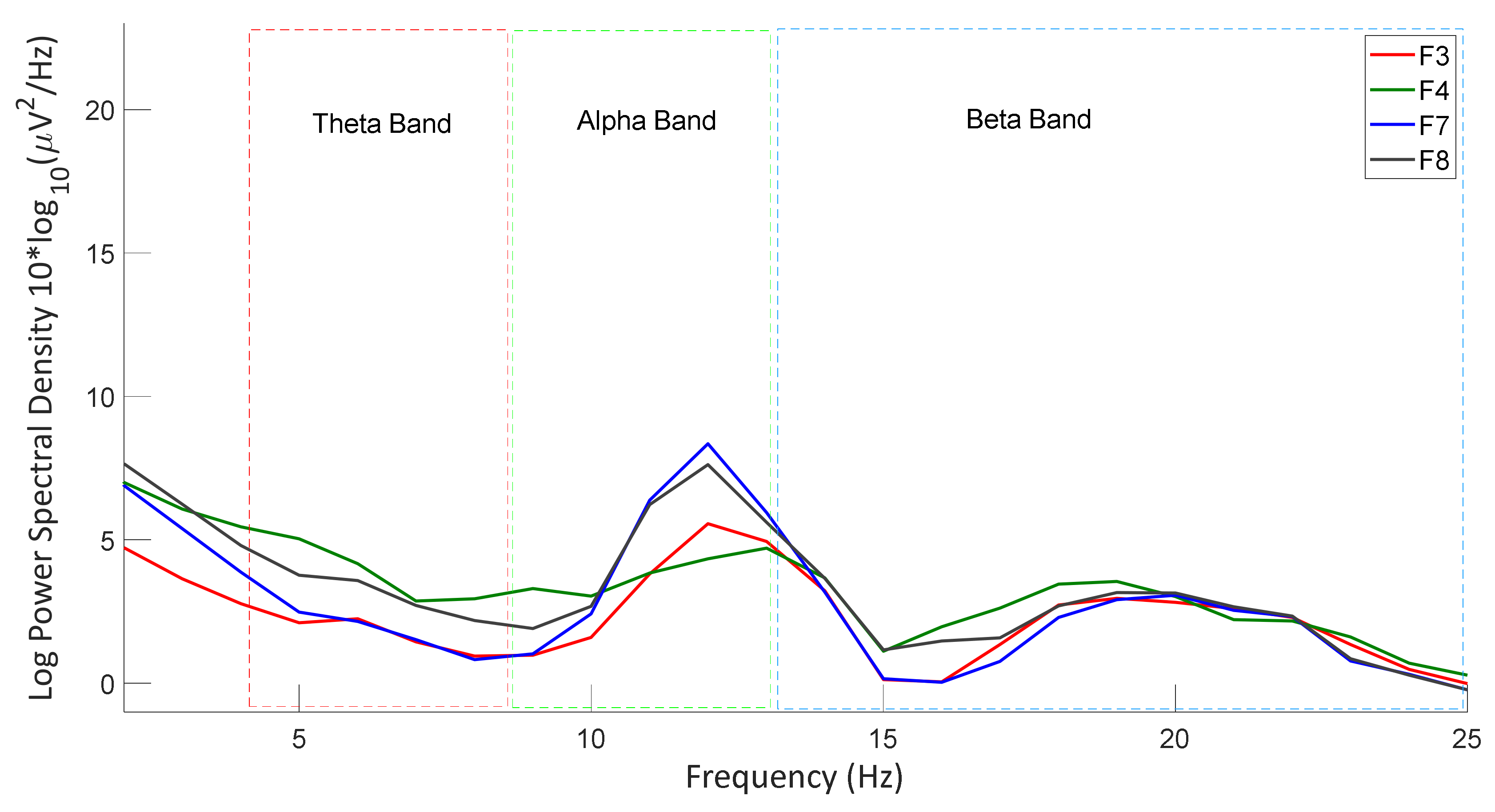

2.5.1. EEG Features

2.5.2. EDA Features

2.6. Statistical Tools

3. Results

3.1. Statistical Analysis of Memory Recall

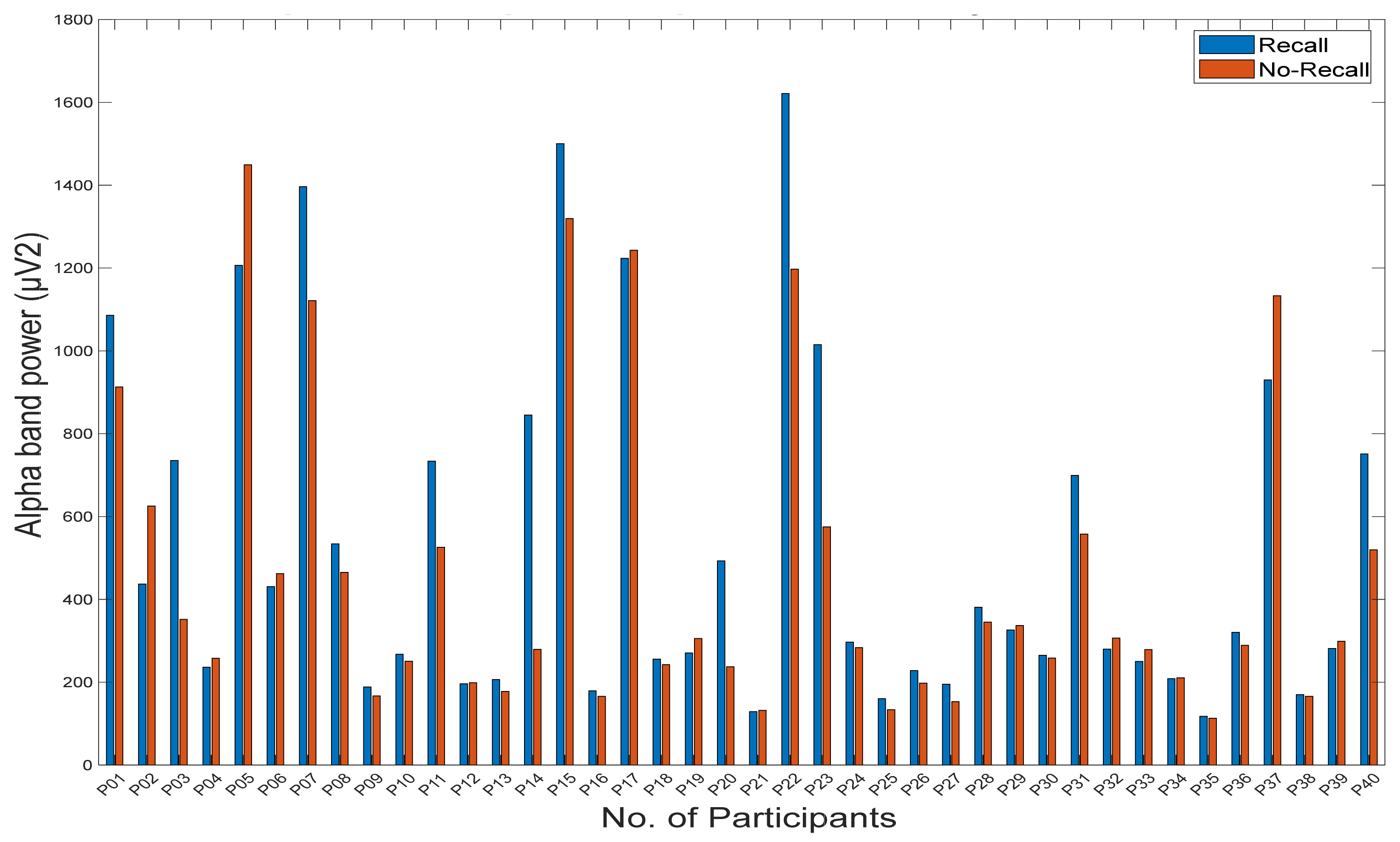

3.2. EEG Data Analysis Results

3.2.1. The t-Test between Two Scenarios

3.2.2. Pearson Correlation Coefficient between Paired Electrodes

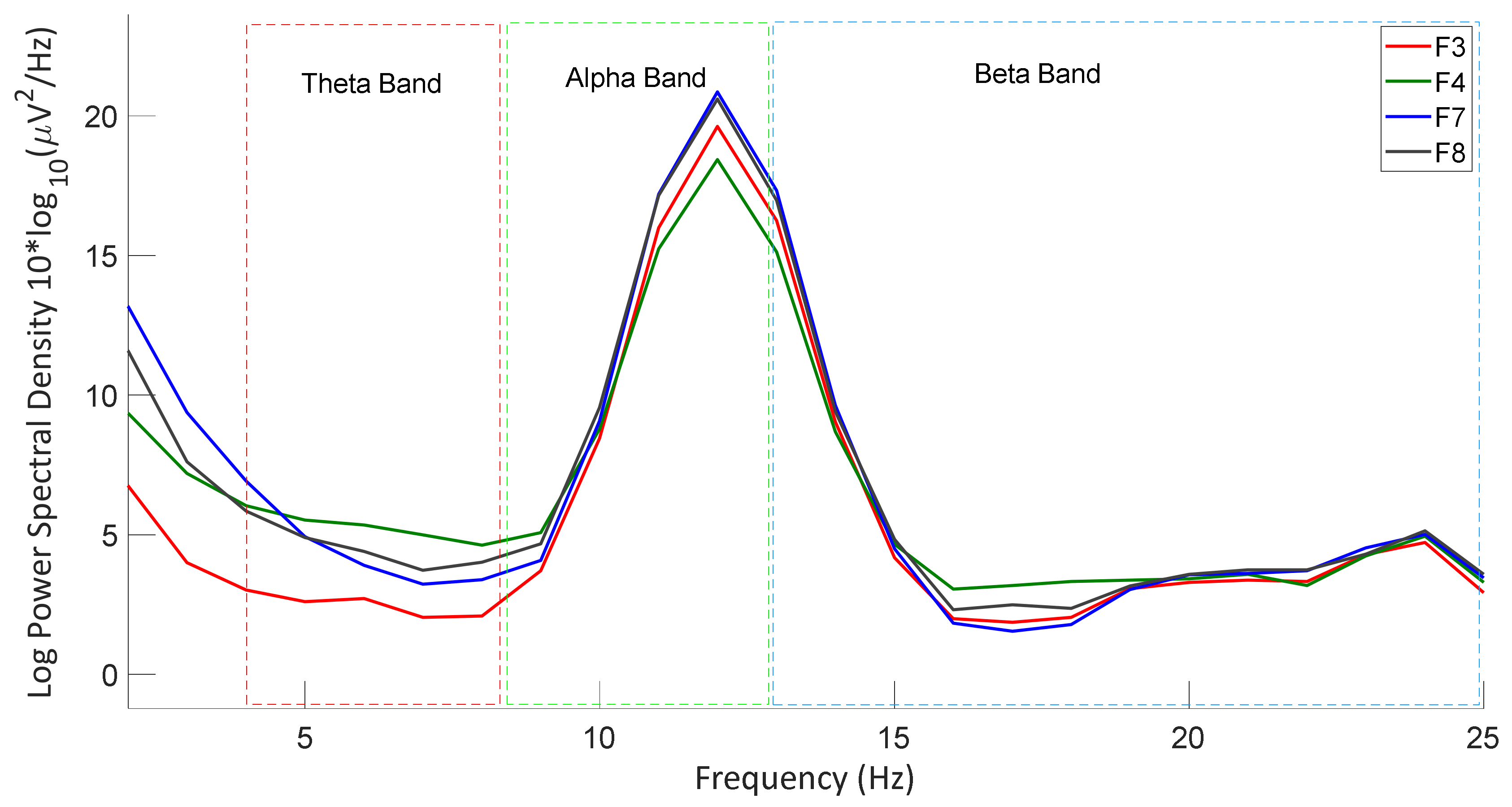

3.2.3. Power Spectral Density Analysis

3.3. EDA Data Analysis Results

4. Discussion

4.1. Contribution

4.2. Limitations

4.3. Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EEG | electroencephalogram |
| EDA | electrodermal activity |
| ABM | autobiographical memory |
| BPSD | behavioral and psychological symptoms of dementia |
| qEEG | quantitative EEG |
| mTBI | mild traumatic brain injury |
| DAR | delta–alpha ratio |
| DTR | delta–theta ratio |
| AD | Alzheimer’s disease |
| MEAM | music-evoked autobiographical memory |
| IRB | Institutional Review Board |
| GSR | galvanic skin response |
| BVP | blood volume pulse |
| IBI | interbeat interval |
| HRV | heart rate variability |
| DSI | dry sensor interface |
| ICA | independent component analysis |
| FIR | finite impulse response |
| ASR | artifact subspace reconstruction |
| EKF | extended Kalman filter |
| PF | particle filter |
| SCR | skin conductance response |
| SCL | skin conductance level |
| PSD | power spectral density |
| PCC | Pearson correlation coefficient |

Appendix A

| Participant No. | Happy 1 | Happy 2 | Happy 3 | Sad 1 | Sad 2 | Sad 3 |
|---|---|---|---|---|---|---|
| 1 | To Binaziri (by Farshid Amin) | Engar Na Engar (by Mansour) | Aroosi (by Sattar) | Khoob Shod (by Homayoun Shajarian) | Monge (by Heydoo Hedayati) | Abr Mibarad (by Homayoun Shajarian) |
| 2 | Love You Zindagi (by Amit Trivedi, Jasleen Royal) | Don’t Let Her (by Walker Hayes) | Price Tag (by Jessie J) | One Light (by Linkin Park) | Gravity (by Coldplay) | Pal (by Arijit Singh) |
| 3 | Blue Bird (by Ikimonogakari) | Gulabi Aankhen (by Sanam Puri) | Raabta (by Arijit Singh) | Main Dhoondne Ko Zamaane Mein (by Arijit Singh) | Breathless (by Shayne Ward) | Older (by Sasha Sloan) |
| 4 | Priyathama (by Samantha, Nani, Ilayaraja) | A Thousand Years (by Christina Perri) | Samjhawan (by Alia Bhatt) | Selavanuko (by Nithiin and Adah) | Aaja Sanam Madhur Chandni (by Nargis, Raj K) | Agar Tum Saath Ho (by Alka Yagnik, Arijit Singh) |
| 5 | Come and Get Your Love (by Redbone) | Walking on Sunshine (by Katrina and the Waves) | Beethoven Symphony No. 7, 4th Movement | Schubert Sonata No. 18 (by Mitsuko Uchida) | Blue Spotted Tail (by Fleet Foxes) | Vissi d’arte (from Tosca) |
| 6 | Heat Waves (by Glass Animals) | Never Been in Love (by Hailey Campbell) | Who Says (by Selena Gomez) | Just Go to Hell Dil (by Amit Trivedi) | Let Me Down Slowly (by Alec Benjamin) | Malupu (by Vinay Shanmukh) |
| 7 | Tomake (by Shandhi Sovvota) | Wavin’ Flag (by K’naan Warsame) | Holding Out for a Hero (by Bonnie Tyler) | Here Without You (by 3 Doors Down) | You and Me (by Lifehouse) | Hallelujah (by Leonard Cohen) |
| 8 | Believer (by Imagine Dragons) | Zaalima (by Arijit Singh & Harshdeep Kaur) | All of Me (by John Legend) | Easy on Me (by Adele) | Tere Sang Yaara (by Atif Aslam) | The Scientist (by Coldplay) |
| 9 | Mr. Blue Sky (by Electric Light Orchestra) | Killer Queen (by Queen) | Static Space Lover (by Foster the People) | Royals (by Lorde) | Hurt (by Johnny Cash) | Something in the Way (by Nirvana) |
| 10 | Mr. Blue Sky (by Electric Light Orchestra) | Let Me Live/Let Me Die (by Des Rocs) | Rock It for Me (by Caravan Palace) | Ai to Shuu (by Toshiro Masuda) | Pain (by Three Days Grace) | Easy (by Son Lux) |
| 11 | Understanding in a Car Crash (by Thursday) | Live Wire (by Motley Crue) | DESTINY (by Chris Klumpp) | Diamond Lost (by The Devil Wears Prada) | sugar honey ice & tea (by Bring Me the Horizon) | Bury Your Head (by Saosin) |
| 12 | Dil Kya Kare (by Adnan Sami) | Dil Ke Dastakk (by Karthik Rao, Shilpa Surroch) | Hey Ya! (by Loy Mendonca) | Beete Lamhe (by Emraan Hashmi, Geeta Basra) | Agar Tum Saath Ho (by Alka Yagnik, Arijit Singh) | Sach Keh Raha Hai (by K.K.) |
| 13 | Un Vizhigalil (by Anirudh Ravichander & Shruti Haasan) | Pallikoodam (by Sanjith Hegde) | Naan Pizhai (by Ravi G & Shashaa Tirupati) | Kanave Kanave (by Anirudh) | Usure (by Sudharshan Ashok, Jothi Pushpa) | Nee Nenacha (by Sid Sriram) |
| 14 | Highway to Hell (by AC/DC) | Faguner Mohonay (by Bhumi) | Take Me Home, Country Roads (by John Denver) | Soldier of Fortune (by Deep Purple) | Aamar Vanga Ghore Vanga Chala (by Sabina Yesmin) | Channi posor raite jeno (by S.I. Tutul) |
| 15 | Golden (by Harry Styles) | Levitating (by Dua Lipa) | Holding Out for a Hero (by Bonnie Tyler) | No Way Out (by Phil Collins) | Angel (by Sarah McLachlan) | Gavi’s Song (by Lindsey Stirling) |
| 16 | Yeh Jawaani Hai Deewani (by Pritam) | Nightingale (by Yanni) | Havana (by Camila Cabello) | Dhoro Jodi Hothat Sondhye (by Baundule) | Shiddat (by Manan Bhardwaj) | Amaro Parano Jaha Chay (by Arijit Singh) |
| 17 | New South Africa (by Béla Fleck, Abigail Washburn) | Alone (by Masayoshi Takanaka) | Feather (by Nujabes) | Ride To U (by Béla Fleck & Abigail Washburn) | 1913 Massacre (by Woody Guthrie) | Give A Rose (by Nina May) |
| 18 | Unstoppable (by Sia) | Lazarus (by Porcupine Tree) | Nightdriver (by EIDA) | Heaven (by Nathan Grisdale) | Tumi Robe Nirobe (by Esraj and Shubhayu) | Abhi Mujh Mein Kahin (by Sonu Nigam) |
| 19 | Take Me Home, Country Roads (by John Denver) | Yellow (by Coldplay) | Something Just Like This (by Coldplay) | Tum Pe Hum Toh (by Jyotica Tangri) | Amake Amar Moto Thakte Dao (by Anupam Roy) | Hariye Giyechi (by Arnob) |
| 20 | Don’t Be Shy (by Tiësto & Karol G) | Bad Habits (by Ed Sheeran) | Know Me Too Well (by New Hope Club, Danna Paola) | No Time to Die (by Billie Eilish) | Parga’dan Beri (by Fahir Atakoglu) | Dönmek (by Fahir Atakoglu) |
| 21 | When We Feel Young (by When Chai Met Toast) | My Universe (by Coldplay X BTS) | CAN’T STOP THE FEELING! (by Justin Timberlake) | Fix You (by Coldplay) | Creep (by Radiohead) | Rivers and Roads (by The Head and the Heart) |
| 22 | Thunderstruck (by AC/DC) | Deutschland (by Rammstein) | Without Me (by Eminem) | Zombie (by Bad Wolves) | Jodi Konodin (by Aurthohin) | Dukkho Bilash (by Artcell) |
| 23 | Wavin’ Flag (by K’naan Warsame) | Fireflies (by Owl City) | Something Just Like This (by Coldplay) | Sadness and Sorrow (by MUSASHI) | Brothers (by Taylor Davis) | Loneliness & What is Brox3n (by Brox3n) |
| 24 | Zip-A-Dee-Doo-Dah (by James Baskett) | Mussorgsky: Pictures at an Exhibition (by Kurt Masur) | The Four Seasons (by Antonio Vivaldi) | Climb Every Mountain (by Peggy Wood) | Suzanne (by Elektra Asylum) | Madame Butterfly (by Maria Callas) |
| 25 | Some Kind of Wonderful (by Marvin Gaye) | All I Wanna Do (by Sheryl Crow) | The Luckiest Guy (by The Magnetic Fields) | Color of the Blues (by George Jones) | Rainy Night in Georgia (by Brook Benton) | Love Will Tear Us Apart (by Joy Division) |
| 26 | Hard Rock Hallelujah (by Lordi) | Blame It on the Boogie (by The Jacksons) | September (by Earth, Wind & Fire) | Beautiful Savior (by F. Melius Christiansen) | Oh Danny Boy (Irish Traditional) | Leaving on a Jet Plane (by Peter, Paul & Mary) |
| 27 | Rock Me (by Steppenwolf) | Hey Pocky A-Way (by The Meters) | Back in Black (by AC/DC) | Fire and Rain (by James Taylor) | Landslide (by Fleetwood Mac) | Cat’s in the Cradle (by Harry Chapin) |
| 28 | Fxxk It (by BIGBANG) | We Like 2 Party (by BIGBANG) | I am the Best (by 2NE1) | Still Life (by BIGBANG) | Let’s Not Fall in Love (by BIGBANG) | Untitled, 2014 (by G-Dragon) |
| 29 | Bad Habits (by Ed Sheeran) | Fairytale (by Alexander Rybak) | Namehraboon (by Fataneh) | From the Northern Country (by Ashkan) | Koja Bayad Beram (by Roozbeh Bemani) | Sedaye Baroon (by Sattar) |
| 30 | Walk of Life (by Dire Straits) | Aaj Phir Jeene Ki Tamanna Hai (by Lata Mangeshkar) | Stayin’ Alive (by Bee Gees) | Barber (by Vienna Philharmonic) | Streets of Philadelphia (by Bruce Springsteen) | Tujhse Naraz Nahi Zindagi (by Lata Mangeshkar) |
| 31 | Livin’ on a Prayer (by Bon Jovi) | Fix You (by Coldplay) | Breaking the Habit (by Linkin Park) | Tumi rabe nirobe (by Sen Majumdar) | Jokhon Nirobe Dure (by Nageeb Hassan) | Adagio (by HAUSER) |
| 32 | Yellow (by Coldplay) | Sign of the Times (by Harry Styles) | Let Her Go (by Passenger) | How to Save a Life (by The Fray) | Mockingbird (by Eminem) | Shallow (by Lady Gaga, Bradley Cooper) |
| 33 | Pepas (by Farruko) | Party On My Mind (by Honey Singh) | Lat Lag Gayee Lyrical (by Benny Dayal, Shalmali) | Tu Jaane Na (by Atif Aslam) | Agar Tum Saath Ho (by Alka Yagnik, Arijit Singh) | Mat Kar Maya Ko Ahankar (by Neeraj Arya’s Kabir Café) |
| 34 | Love You Zindagi (by Amit Trivedi, Jasleen Royal) | Beat It (by Michael Jackson) | Levitating (by Dua Lipa) | Wolves (by Selena Gomez) | Amake Amar Moto Thakte Dao (by Anupam Roy) | Kill Em with Kindness (by Selena Gomez) |
| 35 | Hairat Lyrical (by Lucky Ali) | DOLLAZ ON MY HEAD (by Gunna) | Dus Bahane Karke Le Gaye Dil (by K.K., Shaan) | I Know (by Polo G) | Kaun Hoon Main (by Atif Aslam) | Give Me Some Sunshine (by Shantanu Moitra) |
| 36 | Calma (by Pedro Capó, Farruko) | Muy Feliz (by Nicky Jam) | Djadja Remix (by Aya Nakamura) | Say Something (by Christina Aguilera) | Inolvidable (by Beéle & Ovy on the Drums) | Thunder and Lightning (by Passenger) |
| 37 | The Best Time of My Life (by Cloud Cult) | Three Little Birds (by Bob Marley & The Wailers) | There’s So Much Energy in Us (by Cloud Cult) | Love You All (by Cloud Cult) | Love You All (by Cloud Cult) | Sigh No More (by Mumford & Sons) |
| 38 | Telephone (by Lady Gaga) | Storm (by Antonio Vivaldi) | Run Run (by Indila) | Vois sur ton chemin (by Les Choristes) | La Llorona (by Angela Aguilar) | Canción Mixteca (by Cuco Sánchez) |
| 39 | 10,000 Hours (by Dan + Shay, Justin Bieber) | As I Am (by Harry Styles) | All that Glitters (by Earl) | Easy on Me (by Adele) | Shallow (by Lady Gaga) | The Night We Met (by Lord Huron) |
| 40 | Zaalima (by Arijit Singh & Harshdeep Kaur) | Love You Zindagi (by Amit Trivedi, Jasleen Royal) | Matargasht (by Mohit Chauhan) | Maa (by Shankar Mahadevan) | Tere Sang Yaara (by Atif Aslam) | Tum Hi Ho (by Arijit Singh) |

References

- Irish, M.; Cunningham, C.J.; Walsh, J.B.; Coakley, D.; Lawlor, B.A.; Robertson, I.H.; Coen, R.F. Investigating the enhancing effect of music on autobiographical memory in mild Alzheimer’s disease. Dement. Geriatr. Cogn. Disord. 2006, 22, 108–120.

- Types of Memory. Queensland Brain Institute, University of Queensland. Available online: https://qbi.uq.edu.au/brain-basics/memory/types-memory (accessed on 23 November 2022).

- Where Are Memories Stored in the Brain? Queensland Brain Institute, University of Queensland. Available online: https://qbi.uq.edu.au/brain-basics/memory/where-are-memories-stored (accessed on 2 December 2016).

- Jasvinder, S.; Ratnawat, A. Algorithmic Music Generation for the Stimulation of Musical Memory in Alzheimer’s. In Proceedings of the 2018 4th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 14–15 December 2018.

- Thomas, D.W.; Smith, M. The effect of music on caloric consumption among nursing home residents with dementia of the Alzheimer’s type. Act. Adapt. Aging 2009, 33, 1–16.

- Aysha, M. Finding memories in the ‘music mirror’. Nurs. Resid. Care 2021, 23, 1–3.

- Schulkind, M.D.; Hennis, L.K.; Rubin, D.C. Music, emotion, and autobiographical memory: They’re playing your song. Mem. Cogn. 1999, 27, 948–955.

- Music Can Boost Memory and Mood. Harvard Health. Available online: https://www.health.harvard.edu/mind-and-mood/music-can-boost-memory-and-mood (accessed on 14 February 2015).

- Raglio, A.; Bellelli, G.; Traficante, D.; Gianotti, M.; Ubezio, M.C.; Villani, D.; Trabucchi, M. Efficacy of music therapy in the treatment of behavioral and psychiatric symptoms of dementia. Alzheimer Dis. Assoc. Disord. 2008, 22, 158–162.

- Svansdottir, H.B.; Snædal, J. Music therapy in moderate and severe dementia of Alzheimer’s type: A case–control study. Int. Psychogeriatr. 2006, 18, 613–621.

- Biomarkers Definitions Working Group; Atkinson, A.J., Jr.; Colburn, W.A.; DeGruttola, V.G.; DeMets, D.L.; Downing, G.J.; Hoth, D.F.; Oates, J.A.; Peck, C.C.; Spilker, B.A.; et al. Biomarkers and surrogate endpoints: Preferred definitions and conceptual framework. Clin. Pharmacol. Ther. 2001, 69, 89–95.

- Pérez-Elvira, R.; Oltra-Cucarella, J.; Carrobles, J.; Teodoru, M.; Bacila, C.; Neamtu, B. Individual alpha peak frequency, an important biomarker for live z-score training neurofeedback in adolescents with learning disabilities. Brain Sci. 2021, 11, 167.

- Lewine, J.D.; Plis, S.; Ulloa-Cerna, A.; Williams, C.; Spitz, M.; Foley, J.; Paulson, K.; Davis, J.; Bangera, N.; Snyder, T.; et al. Quantitative EEG biomarkers for mild traumatic brain injury. J. Clin. Neurophysiol. 2019, 36, 298–305.

- Hussain, I.; Hossain, A.; Jany, R.; Bari, A.; Uddin, M.; Kamal, A.R.M.; Ku, Y.; Kim, J.-S. Quantitative Evaluation of EEG-Biomarkers for Prediction of Sleep Stages. Sensors 2022, 22, 3079.

- Chan, A.S.; Ho, Y.-C.; Cheung, M.-C. Music training improves verbal memory. Nature 1998, 396, 128.

- Ho, Y.-C.; Cheung, M.-C.; Chan, A.S. Music training improves verbal but not visual memory: Cross-sectional and longitudinal explorations in children. Neuropsychology 2003, 17, 439.

- El Haj, M.; Postal, V.; Allain, P. Music enhances autobiographical memory in mild Alzheimer’s disease. Educ. Gerontol. 2012, 38, 30–41.

- Belfi, A.M.; Karlan, B.; Tranel, D. Music evokes vivid autobiographical memories. Memory 2015, 24, 979–989.

- Martin, M.A.; Metha, A. Recall of early childhood memories through musical mood induction. Arts Psychother. 1997, 24, 447–454.

- Wallace, W.T. Memory for music: Effect of melody on recall of text. J. Exp. Psychol. Learn. Mem. Cogn. 1994, 20, 1471.

- Simmons-Stern, N.R.; Deason, R.G.; Brandler, B.J.; Frustace, B.S.; O’Connor, M.K.; Ally, B.A.; Budson, A.E. Music-based memory enhancement in Alzheimer’s disease: Promise and limitations. Neuropsychologia 2012, 50, 3295–3303.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Klug, M.; Gramann, K. Identifying key factors for improving ICA-based decomposition of EEG data in mobile and stationary experiments. Eur. J. Neurosci. 2020, 54, 8406–8420.

- Abhang, P.A.; Gawali, B.W.; Mehrotra, S.C. Introduction to EEG- and Speech-Based Emotion Recognition; Academic Press: Cambridge, MA, USA, 2016.

- Kumar, J.S.; Bhuvaneswari, P. Analysis of Electroencephalography (EEG) signals and its categorization–A study. Procedia Eng. 2012, 38, 2525–2536.

- Föll, S.; Maritsch, M.; Spinola, F.; Mishra, V.; Barata, F.; Kowatsch, T.; Fleisch, E.; Wortmann, F. FLIRT: A feature generation toolkit for wearable data. Comput. Methods Programs Biomed. 2021, 212, 106461.

- Greco, A.; Valenza, G.; Lanata, A.; Scilingo, E.P.; Citi, L. cvxEDA: A convex optimization approach to electrodermal activity processing. IEEE Trans. Biomed. Eng. 2015, 63, 797–804.

- Benedek, M.; Kaernbach, C. Decomposition of skin conductance data by means of nonnegative deconvolution. Psychophysiology 2010, 47, 647–658.

- Sanei, S.; Chambers, J.A. EEG Signal Processing; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Warren, W.; Bandeali, A. Spyder: Scientific Python Development Environment. 2009. Available online: https://github.com/spyder-ide/spyder (accessed on 15 December 2017).

- Bhavsar, R.; Sun, Y.; Helian, N.; Davey, N.; Mayor, D.; Steffert, T. The correlation between EEG signals as measured in different positions on scalp varying with distance. Procedia Comput. Sci. 2018, 123, 92–97.

- Bastiaansen, M.C.; Oostenveld, R.; Jensen, O.; Hagoort, P. I see what you mean: Theta power increases are involved in the retrieval of lexical semantic information. Brain Lang. 2008, 106, 15–28.

| Characteristics | Information |
|---|---|
| Number of participants | 40 |
| Age, mean (years) | 31.025 |
| Age, standard deviation (years) | 11.53 |
| Age range (years) | 20–72 |
| Gender | M: 20, F: 20 |

| Step | Activity | Time (min) |
|---|---|---|
| 1 | Fill out the consent form | 3 |
| 2 | Put on the EEG headset and EDA wristband | 5 |
| 3 | Baseline data collection | 3 |
| 4 | Data collection while listening to music | 27 |
| 5 | Fill out the post-survey | 54 |
| Total | | 92 |

| Participant No. | Memory Recall | Arithmetic Mean (µV) | Standard Deviation (µV) | Hjorth Activity (µV²) | Hjorth Complexity | Hjorth Mobility | Mean Energy (µV²) | Shannon Entropy | Band Power-Gamma (µV²) | Band Power-Beta (µV²) | Band Power-Alpha (µV²) | Band Power-Theta (µV²) | Mean-Gamma (µV) | Mean-Beta (µV) | Mean-Alpha (µV) | Mean-Theta (µV) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 36 | Recalled | −0.112 | 8.599 | 73.94 | 2.116 | 0.262 | 73.98 | 14.65 | 9.666 | 71.76 | 320.5 | 534.4 | 0.0001 | 0.026 | −0.070 | 0.229 |
| 36 | No-Recall | −0.227 | 8.208 | 67.37 | 2.086 | 0.268 | 67.42 | 14.55 | 9.521 | 69.40 | 289.1 | 462.3 | −0.0002 | −0.003 | −0.059 | −0.137 |
| 37 | Recalled | 0.475 | 33.98 | 1154 | 3.646 | 0.167 | 1155 | 14.21 | 26.81 | 230.4 | 929.6 | 1924 | 0.0004 | −0.021 | 0.021 | −0.363 |
| 37 | No-Recall | 0.284 | 14.95 | 223.4 | 1.902 | 0.269 | 223.7 | 14.54 | 28.08 | 262.3 | 1133 | 1327 | −0.001 | −0.017 | −0.389 | 0.398 |
| 38 | Recalled | −0.194 | 8.397 | 70.51 | 2.236 | 0.314 | 70.57 | 14.50 | 17.79 | 75.08 | 170.0 | 337.5 | 0.0002 | −0.006 | 0.078 | 0.162 |
| 38 | No-Recall | −0.049 | 8.099 | 65.60 | 2.110 | 0.332 | 65.69 | 14.52 | 18.59 | 79.47 | 165.9 | 304.8 | 0.0000 | −0.055 | 0.031 | −0.049 |
| 39 | Recalled | −0.440 | 11.18 | 124.9 | 2.502 | 0.269 | 125.1 | 14.52 | 21.26 | 107.2 | 281.6 | 617.7 | −0.0007 | 0.022 | −0.075 | −0.438 |
| 39 | No-Recall | −1.888 | 13.09 | 171.4 | 2.943 | 0.229 | 174.9 | 13.82 | 20.94 | 105.3 | 299.1 | 769.1 | 0.005 | 0.032 | −0.015 | −0.480 |
| 40 | Recalled | −0.144 | 12.54 | 157.3 | 2.107 | 0.303 | 157.4 | 14.52 | 30.19 | 169.3 | 751.1 | 873.8 | −0.0001 | 0.006 | −0.044 | 0.033 |
| 40 | No-Recall | −0.050 | 11.73 | 137.6 | 2.117 | 0.316 | 137.5 | 14.52 | 31.46 | 153.8 | 519.5 | 757.8 | 0.001 | −0.177 | 0.063 | −0.044 |
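Most columns of the table above can be computed per channel from a cleaned EEG segment. The following is a minimal Python sketch, not the authors' pipeline. It uses the standard Hjorth definitions (activity is the variance, which matches the table, where activity equals the squared standard deviation), takes mean energy as the mean of the squared signal (consistent with the tabulated values, where mean energy ≈ activity + mean²), and estimates absolute band power by integrating a Welch PSD. Three choices are assumptions because this excerpt does not specify them: the sampling rate `FS`, the band edges in `BANDS`, and the Shannon entropy variant (here, the entropy of the normalized squared amplitudes).

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.signal import welch

FS = 300.0  # assumed EEG sampling rate in Hz (not stated in this excerpt)
# Assumed band edges in Hz, used as half-open intervals [lo, hi).
BANDS = {
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def hjorth(x):
    """Hjorth activity, mobility and complexity of a 1-D signal (per-sample units)."""
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    # Complexity = mobility of the first difference divided by mobility of x.
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


def shannon_entropy(x):
    """Shannon entropy in bits of the normalized energy distribution x**2 / sum(x**2)."""
    energy = x ** 2
    p = energy / energy.sum()
    p = p[p > 0]  # skip zero-probability samples to avoid log2(0)
    return float(-np.sum(p * np.log2(p)))


def band_power(x, fs, lo, hi):
    """Absolute power in [lo, hi) Hz: trapezoidal integral of the Welch PSD."""
    freqs, psd = welch(x, fs=fs, nperseg=min(x.size, int(2 * fs)))
    mask = (freqs >= lo) & (freqs < hi)
    return float(trapezoid(psd[mask], freqs[mask]))


def eeg_features(x, fs=FS):
    """Per-channel features for one segment (e.g. recall or no-recall)."""
    x = np.asarray(x, dtype=float)
    activity, mobility, complexity = hjorth(x)
    feats = {
        "mean": float(np.mean(x)),
        "std": float(np.std(x)),
        "hjorth_activity": float(activity),
        "hjorth_mobility": float(mobility),
        "hjorth_complexity": float(complexity),
        "mean_energy": float(np.mean(x ** 2)),
        "shannon_entropy": shannon_entropy(x),
    }
    for name, (lo, hi) in BANDS.items():
        feats[f"band_power_{name}"] = band_power(x, fs, lo, hi)
    return feats
```

For a 60 s segment dominated by a 10 Hz rhythm plus noise, `eeg_features(segment)` returns an alpha band power far above the beta band power. The 2 s Welch window is also a free choice: longer windows give finer frequency resolution at the cost of noisier PSD estimates.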

| EEG Feature | F3 p | F3 h | F7 p | F7 h | F4 p | F4 h | F8 p | F8 h |
|---|---|---|---|---|---|---|---|---|
| Arithmetic Mean (EEG) | 0.426 | 0 | 0.800 | 0 | 0.129 | 0 | 0.276 | 0 |
| Standard Deviation (EEG) | 0.063 | 0 | 0.296 | 0 | 0.017 | 1 | 0.146 | 0 |
| Hjorth Activity | 0.133 | 0 | 0.627 | 0 | 0.052 | 0 | 0.216 | 0 |
| Hjorth Complexity | 0.188 | 0 | 0.708 | 0 | 0.042 | 1 | 0.611 | 0 |
| Hjorth Mobility | 0.057 | 0 | 0.097 | 0 | 0.002 | 1 | 0.275 | 0 |
| Mean Energy (EEG) | 0.120 | 0 | 0.716 | 0 | 0.056 | 0 | 0.225 | 0 |
| Shannon Entropy | 0.751 | 0 | 0.736 | 0 | 0.358 | 0 | 0.978 | 0 |
| Band Power-Gamma | 0.275 | 0 | 0.641 | 0 | 0.234 | 0 | 0.418 | 0 |
| Band Power-Beta | 0.010 | 1 | 0.679 | 0 | 0.021 | 1 | 0.081 | 0 |
| Band Power-Alpha | 0.007 | 1 | 0.039 | 1 | 0.002 | 1 | 0.029 | 1 |
| Band Power-Theta | 0.062 | 0 | 0.709 | 0 | 0.022 | 1 | 0.362 | 0 |
| Mean-Gamma | 0.225 | 0 | 0.692 | 0 | 0.926 | 0 | 0.853 | 0 |
| Mean-Beta | 0.409 | 0 | 0.850 | 0 | 0.643 | 0 | 0.576 | 0 |
| Mean-Alpha | 0.411 | 0 | 0.699 | 0 | 0.515 | 0 | 0.444 | 0 |
| Mean-Theta | 0.559 | 0 | 0.548 | 0 | 0.476 | 0 | 0.941 | 0 |

h = 1 marks features with p < 0.05 (null hypothesis of equal recall and no-recall means rejected); h = 0 otherwise.
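In the table, h = 1 exactly when p < 0.05. The excerpt does not state which t-test variant was used; because every participant contributes one recall and one no-recall value per feature, the sketch below assumes a two-sided paired t-test (`scipy.stats.ttest_rel`). The helper `compare_conditions` is hypothetical. The usage lines feed in the alpha band power values of participants 36 to 40 from the feature table purely as illustrative input; five participants are not expected to reproduce the p-values reported above.

```python
import numpy as np
from scipy import stats


def compare_conditions(recall, no_recall, alpha=0.05):
    """Two-sided paired t-test between recall and no-recall values.

    recall, no_recall: one value per participant, in the same participant order.
    Returns (p, h): h = 1 if equal means are rejected at level alpha, else 0.
    """
    recall = np.asarray(recall, dtype=float)
    no_recall = np.asarray(no_recall, dtype=float)
    if recall.shape != no_recall.shape:
        raise ValueError("a paired test needs one value per participant in both conditions")
    p = float(stats.ttest_rel(recall, no_recall).pvalue)
    return p, int(p < alpha)


# Illustrative input: alpha band power (µV²) of participants 36 to 40 from the feature table.
recall_alpha = [320.5, 929.6, 170.0, 281.6, 751.1]
no_recall_alpha = [289.1, 1133.0, 165.9, 299.1, 519.5]
p, h = compare_conditions(recall_alpha, no_recall_alpha)
print(f"p = {p:.3f}, h = {h}")
```

A paired test is used here because it removes between-participant differences in overall signal level, which are large in these data (compare participant 37 with participant 38).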

| Feature | Electrode | F3 | F7 |
|---|---|---|---|
| Alpha band power during recall | F3 | 1.000 | 0.968 |
| | F7 | 0.968 | 1.000 |
| Alpha band power during no-recall | F3 | 1.000 | 0.837 |
| | F7 | 0.837 | 1.000 |
| Beta band power during recall | F3 | 1.000 | 0.748 |
| | F7 | 0.748 | 1.000 |
| Beta band power during no-recall | F3 | 1.000 | 0.511 |
| | F7 | 0.511 | 1.000 |

| Feature | Electrode | F4 | F8 |
|---|---|---|---|
| Alpha band power during recall | F4 | 1.000 | 0.738 |
| | F8 | 0.738 | 1.000 |
| Alpha band power during no-recall | F4 | 1.000 | 0.696 |
| | F8 | 0.696 | 1.000 |
| Beta band power during recall | F4 | 1.000 | 0.438 |
| | F8 | 0.438 | 1.000 |
| Beta band power during no-recall | F4 | 1.000 | 0.313 |
| | F8 | 0.313 | 1.000 |
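Each 2 × 2 block above is a Pearson correlation matrix for one feature measured at two electrodes on the same side of the head. A minimal sketch, assuming one feature value per participant at each electrode: `np.corrcoef` builds the matrix (its diagonal is always 1.000, as in the tables) and `scipy.stats.pearsonr` adds a two-sided p-value for the off-diagonal coefficient. The helper name `electrode_correlation` and the input numbers are hypothetical.

```python
import numpy as np
from scipy import stats


def electrode_correlation(values_a, values_b):
    """Pearson correlation of one feature between two electrodes.

    values_a, values_b: one value per participant (e.g. alpha band power at
    F3 and at F7 during recall), in the same participant order.
    Returns (matrix, r, p): the 2x2 correlation matrix, the coefficient and
    its two-sided p-value.
    """
    a = np.asarray(values_a, dtype=float)
    b = np.asarray(values_b, dtype=float)
    matrix = np.corrcoef(a, b)  # [[1, r], [r, 1]]
    r, p = stats.pearsonr(a, b)
    return matrix, float(r), float(p)


# Hypothetical band power values (µV²) for five participants.
f3 = [320.0, 930.0, 170.0, 280.0, 750.0]
f7 = [300.0, 880.0, 180.0, 250.0, 700.0]
matrix, r, p = electrode_correlation(f3, f7)
print(np.round(matrix, 3))
```

Only the off-diagonal entry carries information; reporting the full symmetric matrix, as the tables do, simply makes the electrode pairing explicit.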

| Participant No. | Memory Recall | Tonic Mean (µS) | Tonic Std (µS) | Tonic Min (µS) | Tonic Max (µS) | Tonic Energy (µS²) | Phasic Mean (µS) | Phasic Std (µS) | Phasic Min (µS) | Phasic Max (µS) | Phasic Energy (µS²) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 36 | Recalled | 0.487 | 0.065 | 0.282 | 0.792 | 167.3 | 0.009 | 0.027 | −0.002 | 0.335 | 0.574 |
| 36 | No-Recall | 0.362 | 0.051 | 0.236 | 0.730 | 99.79 | 0.008 | 0.023 | −0.003 | 0.228 | 0.416 |
| 37 | Recalled | 9.665 | 1.287 | 2.212 | 14.89 | 75792 | 0.985 | 1.353 | −0.381 | 9.889 | 2166 |
| 37 | No-Recall | 5.946 | 0.507 | 4.024 | 7.682 | 27144 | 0.584 | 0.575 | −0.165 | 3.314 | 537.9 |
| 38 | Recalled | 0.663 | 0.619 | −3.818 | 2.158 | 711.1 | 0.211 | 0.498 | −0.011 | 5.322 | 251.2 |
| 38 | No-Recall | 0.432 | 0.196 | 0.094 | 1.397 | 186.4 | 0.032 | 0.109 | −0.166 | 0.881 | 10.31 |
| 39 | Recalled | 0.536 | 0.228 | −1.763 | 1.036 | 289.2 | 0.079 | 0.213 | −0.194 | 3.157 | 43.11 |
| 39 | No-Recall | 0.432 | 0.092 | 0.302 | 0.612 | 148.6 | 0.009 | 0.014 | −0.005 | 0.061 | 0.208 |
| 40 | Recalled | 0.104 | 0.017 | 0.084 | 0.209 | 8.647 | 0.001 | 0.002 | −0.001 | 0.025 | 0.002 |
| 40 | No-Recall | 0.145 | 0.003 | 0.085 | 0.219 | 8.949 | 0.001 | 0.001 | −0.001 | 0.009 | 0.001 |
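These columns are summary statistics of the tonic (skin conductance level) and phasic (skin conductance response) components after the raw EDA signal has been decomposed. The reference list includes cvxEDA and the FLIRT toolkit, but this excerpt does not show which tool produced the decomposition, so the sketch below starts from already-separated components. Energy is taken as the sum of squared samples; this is an assumption, consistent with the tabulated magnitudes (tonic energy far exceeds the squared tonic mean) but not defined in this excerpt. `component_stats` and `eda_features` are hypothetical helper names.

```python
import numpy as np


def component_stats(signal, prefix):
    """Mean, std, min, max and energy of one EDA component (values in µS)."""
    x = np.asarray(signal, dtype=float)
    return {
        f"{prefix}_mean": float(np.mean(x)),
        f"{prefix}_std": float(np.std(x)),
        f"{prefix}_min": float(np.min(x)),
        f"{prefix}_max": float(np.max(x)),
        # Assumed definition: energy = sum of squared samples (µS²).
        f"{prefix}_energy": float(np.sum(x ** 2)),
    }


def eda_features(tonic, phasic):
    """Tonic and phasic statistics for one listening segment, in one dictionary."""
    features = component_stats(tonic, "tonic")
    features.update(component_stats(phasic, "phasic"))
    return features


# Hypothetical, already-decomposed components (µS).
tonic = [0.40, 0.50, 0.60]
phasic = [0.00, 0.10, -0.10]
print(eda_features(tonic, phasic))
```

Because a sum-of-squares energy grows with segment length, comparing it between recall and no-recall segments is only meaningful when the segments have similar durations.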

| EDA Feature | p-Value |
|---|---|
| Tonic Mean | 0.133 |
| Tonic Standard Deviation | 0.017 |
| Tonic Min | 0.009 |
| Tonic Max | 0.081 |
| Tonic Energy | 0.185 |
| Phasic Mean | 0.073 |
| Phasic Standard Deviation | 0.026 |
| Phasic Max | 0.001 |
| Phasic Energy | 0.048 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Das, R.K.; Imtiaz, N.Z.; Khan, A. Toward Affirmation of Recovery of Deeply Embedded Autobiographical Memory with Background Music and Identification of an EEG Biomarker in Combination with EDA Signal Using Wearable Sensors. Clin. Transl. Neurosci. 2022, 6, 26. https://doi.org/10.3390/ctn6040026
