1. Introduction

In supervised classification, the primary objective is to learn a decision rule that minimizes classification error. The Bayes classifier is the theoretical optimum for this criterion when the true class-conditional distributions are known [1,2,3]. In practice, however, estimating a nonparametric, potentially high-dimensional joint density is often infeasible [4,5], for example because the amount of data required grows exponentially with the dimension. Building a fully parameterized model of the joint distribution can be equally impractical. Real distributions are often too complex for simple parametric forms, and high-dimensional feature spaces require estimating too many parameters from too little data, which leads to unstable or inaccurate models [6].

Consequently, a wide range of classifiers and pragmatic approximations has been developed for practical classification tasks. One family of methods is nonparametric instance-based learning. k-nearest neighbors (kNN) is conceptually simple and, with a suitable choice of k, is a consistent approximation to the Bayes rule as the sample size grows [7]. Another popular approach is the naïve Bayes classifier. It drastically reduces estimation complexity by assuming feature independence and often performs well despite this strong simplification [6,8]. Both kNN and naïve Bayes offer fast and straightforward alternatives to the full Bayes classifier, although they do not always achieve the same level of performance.

Therefore, the naïve Bayes and kNN classifiers are commonly used as baseline algorithms for classification. For naïve Bayes, however, the user must choose the most suitable model-fitting strategy for the specific use case. Available strategies include fitting a mixture of Gaussian distributions or using a nonparametric approach based on kernel density estimation. These methods usually do not account for distributions that vary across features (see Appendix C for examples of varying feature distributions). Analyzing the distributions of all features to select the optimal strategy is itself a complex task. Similarly, for kNN, the user must choose an appropriate distance measure, which is an equally complex decision [9], as well as the number k of nearest neighbors.

Although the feature independence assumption is quite restrictive, naïve Bayes classifiers have been reported to achieve high performance in many cases [10,11,12]. Interestingly, a naïve Bayes classifier can perform well even when dependencies exist among features [12], and the absence of correlation does not necessarily imply independence [13]. The impact of feature correlation on performance depends both on the degree of correlation and on the specific measure used to quantify it. This becomes apparent when several correlation measures are applied to the same datasets (see Table 1). Likewise, the class assignments of the cases within a dataset also influence performance. This can be illustrated by evaluating a correlated dataset under two different classifications.

A pitfall in Bayesian classification occurs when observations with low evidence are assigned to the class with the higher likelihood. This ignores the fact that a probability density with higher variance decays more slowly than one with lower variance. As a result, a distant class with high variance can be chosen over a closer class with smaller variance [14].

Human body height by gender is an example. The female (Gaussian) distribution has a higher variance than the male (Gaussian) distribution, and the female mean is lower than the male mean. In that case, the classical Bayes rule assigns a giant's height to the female class, because the likelihood of the male distribution decays faster than that of the female distribution. The counterintuitive and hardly interpretable consequence is that all giants are classified as female. Instead, for observations with low evidence (such as a giant's height), the class with the closest mode could be chosen.
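The pitfall can be reproduced numerically. The following sketch uses two hypothetical Gaussian height models: female N(160, 10²) and male N(180, 5²) in cm, with equal priors. These numbers are illustrative only and are not taken from any dataset. The sketch compares the maximum-likelihood Bayes decision with a closest-mode rule.

```python
import math

# Hypothetical height models in cm: (mean, standard deviation).
# The female class has the larger variance and the lower mean.
CLASSES = {"female": (160.0, 10.0), "male": (180.0, 5.0)}


def log_gauss(x, mu, sigma):
    """Log density of N(mu, sigma^2) at x."""
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma) - 0.5 * math.log(2 * math.pi)


def bayes_label(x):
    """Bayes decision with equal priors, i.e., the class with the highest likelihood."""
    return max(CLASSES, key=lambda c: log_gauss(x, *CLASSES[c]))


def closest_mode_label(x):
    """Alternative rule: the class whose mode (the mean of a Gaussian) is nearest to x."""
    return min(CLASSES, key=lambda c: abs(x - CLASSES[c][0]))


for height in (150, 175, 200, 230):
    print(height, bayes_label(height), closest_mode_label(height))
```

At 230 cm the observation is 50 cm from the male mean and 70 cm from the female mean. Even so, `bayes_label` returns "female" because the male density decays faster, whereas `closest_mode_label` returns "male".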

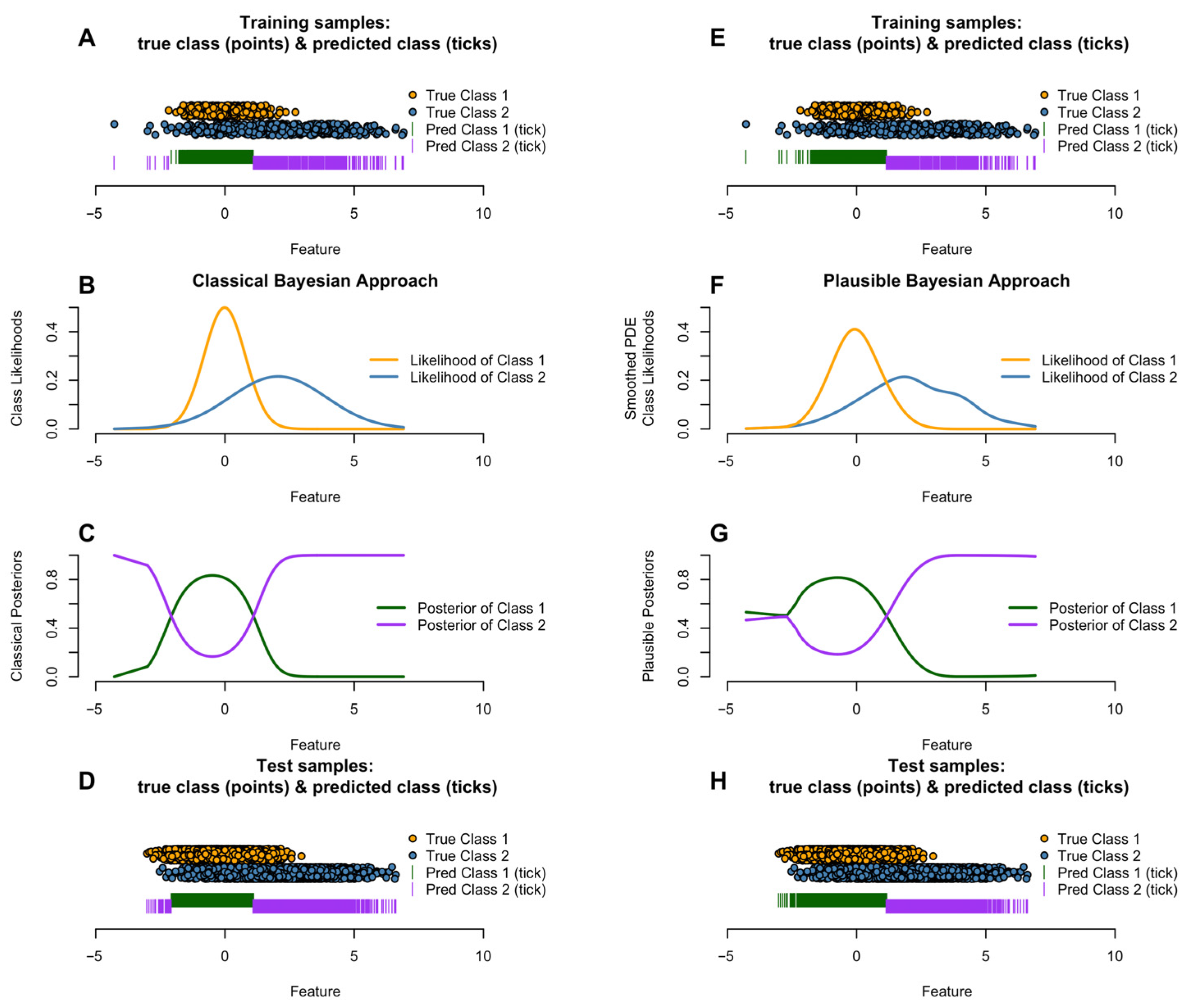

Figure 1 outlines this situation for the univariate case (left) and sketches the influence of the flexible approach of smoothed Pareto density estimation (PDE) combined with plausibility correction.

We propose a new methodology for the naïve Bayes classifier that overcomes these challenges and improves performance. Our approach makes no prior assumption about the data distribution, and it has no parameters that need to be optimized [15]. We use a density estimation model based on information theory. It is defined by a data-driven kernel radius without intrinsic assumptions about the data and empirically outperforms typical density estimation approaches [16]. The main contributions of our work are as follows:

Solution to the above-mentioned pitfall of the Bayes theorem within a Naïve Bayes classifier framework.

Empirical benchmark showing a robust classification performance of the plausible naïve Bayes classifier using the Pareto Density Estimation (PDE).

Visualization of class-conditional likelihoods and posteriors to support model interpretability.

The aim of our work is to provide a classifier that can serve as a baseline. In this context, we show that the performance of naïve Bayes is hard to relate to dependency measures. We also show that design decisions based on such measures shift with both the degree of correlation and the choice of correlation measure. We developed an open-access R package, PDEnaiveBayes (version 0.2.8), available on CRAN (https://CRAN.R-project.org/package=PDEnaiveBayes, accessed on 17 November 2025).

2. Materials and Methods

Let a classification $\mathcal{C} = \{C_1, \dots, C_k\}$ be a partition of a dataset D consisting of $N = |D|$ data points into $k \in \mathbb{N}$ non-empty, disjoint subsets (classes) [17]. Each class $C_i \subseteq D$ contains a subset of the data points. Each data point $x \in D$ is assigned a class label $c \in \{1, \dots, k\}$ via the hypothesis function $h: D \to \{1, \dots, k\}$. The task of a classifier is to learn the mapping function h given training data and labels $\{(x_n, h(x_n))\}_{n=1}^{N}$.

2.1. Bayes Classification

Assume a set of continuous input variables $X_1, \dots, X_d$, with vector $x = (x_1, \dots, x_d)^{\top}$. In Bayesian classification, the prior $P(C_i)$ is the initial belief (“knowledge”) about class membership.

The Bayesian classifier picks the class whose posterior $P(C_i \mid x)$ has the highest probability:

$$\hat{c}(x) = \arg\max_{i \in \{1, \dots, k\}} P(C_i \mid x). \tag{1}$$

The posterior probability captures what is known about $C_i$ once $x$ has been incorporated. It is obtained with Bayes' theorem:

$$P(C_i \mid x) = \frac{p(x \mid C_i)\, P(C_i)}{p(x)}. \tag{2}$$

Here, $p(x \mid C_i)$ is the conditional probability (density) of $x$ given class $C_i$, called the class likelihood, and the denominator $p(x)$ is the evidence. Let $H$ be the hypothesis space, $h_{\mathrm{MAP}} \in H$ a MAP hypothesis, and $D$ the (not necessarily i.i.d.) data set. A Bayes optimal classifier [18] is then defined by

$$c^{*}(x) = \arg\max_{i \in \{1, \dots, k\}} \sum_{h \in H} P(C_i \mid x, h)\, P(h \mid D). \tag{3}$$

Equation (3) yields the optimal class assignment for a data point in the sense that it minimizes the average probability of error [6].

The general approach to Bayesian modeling is to set up the full probability model, i.e., the joint distribution of all entities in accordance with everything that is known about the problem [19]. In practice, given sufficient knowledge about the problem, distributions can be assumed for the class likelihoods $p(x \mid C_i)$ and the priors, with hyperparameters θ that are estimated.

The goal of this work is to use a Bayesian classifier to establish a performance baseline. For this purpose, we assume that the classification of a data point can be computed from the marginal class likelihoods

$$\hat{c}(x) = \arg\max_{i \in \{1, \dots, k\}} P(C_i) \prod_{j=1}^{d} p(x_j \mid C_i) \tag{4}$$

with

$$p(x \mid C_i) = \prod_{j=1}^{d} p(x_j \mid C_i).$$

This is called the naïve assumption because it assumes that the features are conditionally independent given the class. In some domains, the performance of a naïve Bayes classifier has been shown to be comparable to that of neural networks and decision trees [18]. We will show in the results that, for typical datasets, an acceptable baseline performance can be achieved even if this assumption does not hold.

Our objective is to exploit the empirical information in the training set to derive an estimate of each feature's distribution within every class $C_i$, in a fully data-driven manner and under as few assumptions as possible. We then evaluate classifier performance on unseen test samples. To this end, we adopt a frequentist strategy. We estimate the class-conditional densities directly from the observed data, and we compute the class priors $P(C_i)$ as the relative frequencies of each class $C_i$ in the training sample, assuming that the sample faithfully represents the underlying population. Our decision rule, Equation (4), compares unnormalized scores across classes. The evidence term (the denominator in Bayes' theorem) is identical for all classes and can therefore be omitted.
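A minimal sketch of Equation (4) with frequentist priors follows. The per-feature likelihoods are passed in as callables. The uniform densities used here (`uniform_pdf`) are hypothetical stand-ins for estimated class likelihoods, and all function names are illustrative rather than part of the PDEnaiveBayes API.

```python
from collections import Counter
from math import prod


def fit_priors(labels):
    """Class priors P(C_i) as relative frequencies of the training labels."""
    counts = Counter(labels)
    n = len(labels)
    return {c: m / n for c, m in counts.items()}


def naive_bayes_predict(x, priors, likelihoods):
    """Equation (4): argmax_i P(C_i) * prod_j p(x_j | C_i).

    likelihoods[c][j] is a callable returning p(x_j | C_c) for feature j.
    The evidence p(x) is omitted because it is identical for every class.
    """
    scores = {
        c: priors[c] * prod(likelihoods[c][j](xj) for j, xj in enumerate(x))
        for c in priors
    }
    return max(scores, key=scores.get)


def uniform_pdf(a, b):
    """Hypothetical stand-in for an estimated univariate likelihood."""
    return lambda v: 1.0 / (b - a) if a <= v <= b else 0.0


priors = fit_priors(["a", "a", "a", "b"])  # {'a': 0.75, 'b': 0.25}
likelihoods = {
    "a": [uniform_pdf(0, 10), uniform_pdf(0, 10)],
    "b": [uniform_pdf(0, 2), uniform_pdf(0, 2)],
}
print(naive_bayes_predict([1.0, 1.0], priors, likelihoods))  # b
print(naive_bayes_predict([5.0, 5.0], priors, likelihoods))  # a
```

At (1, 1), the rarer class "b" wins (0.25 · 0.5 · 0.5 = 0.0625 versus 0.75 · 0.1 · 0.1 = 0.0075) because its likelihoods are more concentrated. At (5, 5), its likelihood is zero.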

The challenge is to estimate the likelihood $p(x \mid C_i)$ from the samples of the training data. The naïve assumption reduces the estimation of a $d$-dimensional density to the estimation of $d$ one-dimensional densities per class. In prior work, the first naïve Bayes classifier using density estimation was introduced as flexible Bayes [8]. This work improves substantially on that approach by using parameter-free univariate density estimation without implicit assumptions about the data (cf. Ref. [16]).

2.2. Density Estimation

Density estimation can be approached in two general ways.

The first is parametric statistics, i.e., fitting a superposition of parameterized functions. The fit can be judged by a quality measure or by statistical testing. The drawback is the large number of possible distributional assumptions to choose from. A default approach is to fit Gaussian distributions to the data [20].

The second is nonparametric statistics, i.e., local parameterized approximations using the neighboring data around each available data point. Methods differ in whether they use fixed or variable kernels (a global or a locally estimated radius). The drawback is that tuning bandwidths for optimal kernel estimation is a computationally hard task [21]. A default approach is a Gaussian kernel whose bandwidth is selected according to Silverman's rule of thumb [5].
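For comparison with the default nonparametric approach, here is a sketch of a fixed-bandwidth Gaussian kernel density estimate. Its bandwidth comes from one common (robust) form of Silverman's rule of thumb, 0.9 · min(sd, IQR/1.34) · n^(-1/5). Other variants exist, such as 1.06 · sd · n^(-1/5), and the function names are illustrative.

```python
import numpy as np


def silverman_bandwidth(x):
    """Silverman's rule of thumb (robust form): 0.9 * min(sd, IQR / 1.34) * n^(-1/5)."""
    x = np.asarray(x, dtype=float)
    q75, q25 = np.percentile(x, [75, 25])
    spread = min(x.std(ddof=1), (q75 - q25) / 1.34)
    return 0.9 * spread * x.size ** (-0.2)


def gaussian_kde(x, grid, h=None):
    """Fixed-bandwidth Gaussian kernel density estimate evaluated at the points of `grid`."""
    x = np.asarray(x, dtype=float)
    grid = np.asarray(grid, dtype=float)
    h = silverman_bandwidth(x) if h is None else h
    z = (grid[:, None] - x[None, :]) / h
    return np.exp(-0.5 * z**2).sum(axis=1) / (x.size * h * np.sqrt(2.0 * np.pi))


rng = np.random.default_rng(0)
sample = rng.normal(size=1000)
grid = np.linspace(-5.0, 5.0, 501)
density = gaussian_kde(sample, grid)
```

A single global bandwidth derived from a normal reference tends to oversmooth multimodal or strongly skewed features. This is the kind of structure the variable-radius PDE described next is meant to preserve.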

In this work, we perform density estimation with the data-driven Pareto Density Estimation (PDE) [15], which drops any prior model assumption. The PDE is a nonparametric estimation method with a variable kernel radius. For each available data point in the dataset, the PDE estimates a radius that is used to estimate the density at this point. It uses a rule from information theory: the PDE maximizes the information content (reward) while minimizing the hypersphere radius (effort). This reward/effort trade-off is historically known as the Pareto rule (or 80/20 rule), hence the name Pareto Density Estimation.

2.3. Pareto Density Estimation

Let a subset $S \subseteq D$ of data points have a relative size of $p = |S|/N$. If there is an equal probability that an arbitrary point x is observed, then the information content of $S$ is $I(p) = -p \ln p$. The optimal set size minimizes the Euclidean distance of S from the ideal point, which is the empty set with 100% information [15]. The unrealized potential is $U(p) = \sqrt{p^2 + \left(1 - I(p)/I_{\max}\right)^2}$, where $I_{\max} = 1/e$ is the maximum of $I(p)$. Minimizing $U(p)$ yields the optimal set size $p_u \approx 20\%$. This set retrieves 88% of the maximum information [15].

For univariate density estimation, the univariate Euclidean distance must be computed. Under the MMI assumption, the squared Euclidean distances are chi-square distributed [22], leading to

where $\chi^2_d$ is the chi-square cumulative distribution function for d degrees of freedom [15]. The Pareto radius is approximated by

for

.
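The 20%/88% figures can be checked by minimizing the unrealized potential $U(p)$ numerically. This sketch uses a plain grid search over (0, 1) and assumes the ideal point (0, 1) in the normalized (p, I/I_max) plane, as described above.

```python
import math

I_MAX = 1.0 / math.e  # maximum of -p*ln(p), attained at p = 1/e


def information(p):
    """Information content I(p) = -p ln p of a subset with relative size p."""
    return -p * math.log(p)


def unrealized_potential(p):
    """Euclidean distance of (p, I(p)/I_max) from the ideal point (0, 1)."""
    return math.hypot(p, 1.0 - information(p) / I_MAX)


# Simple grid search over the open interval (0, 1)
grid = [i / 100_000 for i in range(1, 100_000)]
p_u = min(grid, key=unrealized_potential)
share = information(p_u) / I_MAX
print(f"p_u = {p_u:.3f}, retained information = {share:.1%}")
```

The minimizer lies just above 0.2 and retains roughly 88% of the maximal information, consistent with the values quoted from [15].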

The PDE is an adaptive technique that estimates the density at a data point following the Pareto rule (80/20 rule): it maximizes the information content using a minimal neighborhood size. It is well suited to estimating univariate feature distributions from sufficiently large samples. It also highlights non-normal characteristics (multimodality, skewness, clipped ranges) that standard density estimators with default parameters often miss. An empirical study [16] evaluated multiple density estimation approaches. It demonstrated that PDE-based visualizations (mirrored-density plots, MD plots for short) revealed fine-scale distributional features more faithfully than standard visualization defaults (histograms, violin plots, and bean plots). Accordingly, PDE frequently yields superior empirical performance relative to commonly used density estimators with default parameters [16].

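Given the Pareto radius R, the raw PDE can be estimated as a discrete function solely at given kernel points $m_1, \dots, m_M$ with

$$\hat{p}(m_l) = \frac{1}{A} \sum_{i=1}^{n} \mathbb{1}\left( |x_i - m_l| \le R \right), \quad l = 1, \dots, M,$$

In this equation, $\mathbb{1}$ is an indicator function that is 1 if the condition holds and 0 otherwise, and A normalizes $\hat{p}$ to unit area. Hence, $\hat{p}$ is a quantized function proportional to the number of samples falling within the interval $[m_l - R, m_l + R]$.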
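The raw PDE above amounts to counting samples in a window of half-width R around each kernel point and normalizing. In this sketch, R is fixed by hand rather than derived from the Pareto rule. The normalizer A is chosen so that the trapezoidal area over the kernel grid equals 1, which is an assumption about how A is defined.

```python
import numpy as np


def raw_pde(x, kernels, R):
    """Raw PDE: count samples within [m_l - R, m_l + R] for every kernel point m_l,
    then divide by A so that the trapezoidal area under the curve equals 1."""
    x = np.asarray(x, dtype=float)
    m = np.asarray(kernels, dtype=float)
    counts = (np.abs(x[None, :] - m[:, None]) <= R).sum(axis=1).astype(float)
    area = np.sum((counts[1:] + counts[:-1]) * np.diff(m)) / 2.0  # normalizer A
    return counts / area


rng = np.random.default_rng(1)
sample = rng.normal(size=2000)
kernels = np.linspace(-4.0, 4.0, 161)
density = raw_pde(sample, kernels, R=0.3)  # R chosen by hand, not via the Pareto rule
```

Because each value is a count, the result is piecewise constant and noisy at fine scales. This roughness motivates the smoothing steps described below.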

For the mirrored-density (MD) plots used in [16], we previously applied piecewise-linear interpolation of the discrete PDE $\hat{p}$. This filled gaps between kernel grid points and gave a continuous visual approximation of a feature's pdf. Linear interpolation produces a visually faithful representation and is adequate for exploratory plots, because it preserves continuity and is trivial to compute. However, it inherits the small-scale irregularities of the underlying discrete PDE estimate. If linearly interpolated densities are used directly as class-conditional likelihoods in Bayes' theorem, their high-frequency noise propagates into the posteriors and can produce unstable or incorrect classifications.

Accordingly, in this work we proceed as follows. After obtaining the raw, discrete conditional PDE $\hat{p}(\cdot \mid C_i)$, we replace linear interpolation with the smoothing steps described in the next section. These steps produce a class likelihood that (i) preserves the genuine distributional structure (modes, skewness, tails) and (ii) suppresses spurious high-frequency fluctuations that would destabilize posterior estimates.

2.4. Smoothed Pareto Density Estimation

Although the PDE of the class likelihoods captures the overall shape of the distribution, it can be rough or piecewise constant because of its uniform kernel and finite sampling. This is disadvantageous because irregular or noisy estimates yield fluctuating posteriors and unstable decisions. Smoothing the class likelihoods therefore acts as a form of regularization. It suppresses sample-level noise and prevents high-frequency fluctuations, such as erratic spikes or dips in the likelihoods, that lead to brittle posterior assignments. The smoothing balances fidelity to the genuine features of the PDE against the removal of artificial high-frequency components. This yields closer approximations of the true underlying distributions and, in turn, more reliable posterior estimates that are less influenced by random sample noise.

For smoothing, we exploit the insight that a kernel estimate is a convolution of the data with the kernel, which can be computed using fast Fourier transforms ([5], p. 61). To produce a smooth, continuous density estimate, we convolve the PDE output with a Gaussian kernel that uses the Pareto radius as its bandwidth. Hence, the Gaussian smoothing kernel is defined as

$$g(u) = \frac{1}{\sqrt{2\pi}\,R} \exp\left(-\frac{u^2}{2R^2}\right),$$

where R is the Pareto radius. We implement this convolution efficiently with the Fast Fourier Transform (FFT), leveraging the convolution theorem, as follows.

First, we evaluate the Gaussian kernel on the same grid $m_1, \dots, m_M$ as the PDE, where M is the number of grid points. Let $\Delta$ be the grid spacing, computed as the mean of the adjacent differences $m_{l+1} - m_l$ to avoid numerical instabilities in the spacing. Then the Gaussian kernel vector

yields a normalized kernel vector aligned with the PDE grid.

To perform a linear convolution without wrap-around artifacts, we zero-pad both the density vector $\hat{\mathbf{p}}$ and the kernel vector $\mathbf{g}$ before applying the FFT ([23], part 1, pp. 260 ff.). We choose the padding length L as the next power of two that is at least the length of the full linear convolution (the sum of both vector lengths minus one). This length ensures that the circular convolution computed via the FFT equals the linear convolution of the original sequences. Using a power of two also leverages FFT efficiency [24]. We create the padded vectors $\hat{\mathbf{p}}_L$ ($\hat{\mathbf{p}}$ with zero padding) and $\mathbf{g}_L$ ($\mathbf{g}$ with zero padding), each of length L. This padding prevents the signal from overlapping with itself during the convolution.

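Next, we compute the FFT of both padded vectors, multiply them element-wise in the frequency domain, and apply the inverse FFT. By the convolution theorem, the inverse FFT of the product

$$\mathbf{s}_L[n] = \frac{1}{L} \sum_{\kappa=0}^{L-1} \mathcal{F}(\hat{\mathbf{p}}_L)[\kappa]\, \mathcal{F}(\mathbf{g}_L)[\kappa]\, e^{2\pi i \kappa n / L}, \quad n = 0, \dots, L-1, \tag{9}$$

yields the linear convolution on the padded length. Following the usual normalization convention, we divide by L in Equation (9) when taking the inverse FFT.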

The central M elements of $\mathbf{s}_L$ correspond to the convolved density over the original grid. This middle segment is the smoothed density vector $\tilde{\mathbf{p}}$, aligned with the original kernel grid. The approach is motivated by [25].
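The smoothing steps can be sketched compactly in NumPy under the following assumptions:

- the grid is evenly spaced;
- the Gaussian weights are sampled on 2⌊M/2⌋+1 offsets centered at zero, so that the "central M elements" are well defined;
- the weights are normalized to sum to one.

These details are choices of this sketch and are not necessarily those of the PDEnaiveBayes package. Note that `np.fft.ifft` already applies the 1/L factor of Equation (9).

```python
import numpy as np


def smooth_pde_fft(grid, pde, R):
    """Convolve a discrete PDE on an evenly spaced grid with a Gaussian kernel
    (standard deviation R) via zero-padded FFT; returns values aligned with `grid`."""
    grid = np.asarray(grid, dtype=float)
    pde = np.asarray(pde, dtype=float)
    M = grid.size
    dx = np.mean(np.diff(grid))                 # mean of adjacent differences as spacing
    h = M // 2
    offsets = np.arange(-h, h + 1) * dx         # odd-length kernel support centered at 0
    kernel = np.exp(-0.5 * (offsets / R) ** 2)
    kernel /= kernel.sum()                      # normalized kernel vector
    full_len = M + kernel.size - 1              # length of the linear convolution
    L = 1 << int(np.ceil(np.log2(full_len)))    # next power of two >= full_len
    f_pad = np.zeros(L)
    f_pad[:M] = pde
    g_pad = np.zeros(L)
    g_pad[:kernel.size] = kernel
    # Element-wise product in the frequency domain; numpy's ifft includes the 1/L factor
    conv = np.fft.ifft(np.fft.fft(f_pad) * np.fft.fft(g_pad)).real
    return conv[h:h + M]                        # central M elements


grid = np.linspace(-5.0, 5.0, 101)
spike = np.zeros(grid.size)
spike[50] = 1.0                                 # a single spike at x = 0
smoothed = smooth_pde_fft(grid, spike, R=0.5)
```

Smoothing a single spike returns the sampled Gaussian kernel itself, centered on the spike. This is a quick check that the padding and the extraction of the central segment are aligned.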

Finally, the monotone Hermite spline approximation [26] of $\tilde{\mathbf{p}}$ yields the likelihood function $p(x_j \mid C_i)$, which allows new points to be evaluated quickly in functional form.
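As one concrete monotonicity-preserving Hermite scheme, SciPy's `PchipInterpolator` turns a smoothed density vector into a callable likelihood that does not overshoot between grid points. Whether [26] uses exactly this variant is not stated here. The density values below are illustrative, and returning 0 outside the grid is a choice of this sketch.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator

# Illustrative smoothed density values on a kernel grid (not real data)
grid = np.linspace(0.0, 4.0, 9)
smoothed = np.array([0.0, 0.05, 0.30, 0.45, 0.20, 0.25, 0.10, 0.02, 0.0])

# Monotonicity-preserving piecewise cubic Hermite interpolant (PCHIP)
likelihood = PchipInterpolator(grid, smoothed, extrapolate=False)


def evaluate(x):
    """Likelihood at new points; outside the grid the sketch returns 0 instead of NaN."""
    return np.nan_to_num(likelihood(np.asarray(x, dtype=float)), nan=0.0)


print(evaluate([0.25, 1.3, 2.75, 5.0]))
```

Unlike an unconstrained cubic spline, PCHIP keeps every interval between the values at its endpoints. It therefore cannot introduce new spurious bumps or negative dips into the likelihood.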

In sum, the empirical class PDE $\hat{p}(\cdot \mid C_i)$ in dimension $j$ can be rough because the data are noisy. For a visualization task, this roughness would be inconsequential [16]. To prevent data noise from influencing the posteriors, we combine filtering by convolution (cf. Ref. [27]) with monotone spline approximation (cf. Ref. [28]), yielding the smoothed likelihood $p(x_j \mid C_i)$.

2.5. Plausible Naïve Bayes Classification

Ref. [14] showed that misclassification can occur when Bayes' theorem is applied to cases with low evidence, i.e., cases that lie below a certain threshold ε. The authors define cases below ε as uncertain and provide two solutions [14]: reasonable Bayes (i.e., suspending a decision) and plausible Bayes (a correction of Equation (4)). To derive ε, they propose the computed ABC analysis [29], which computes precise thresholds that partition a dataset into interpretable subsets.

Closely related to the Lorenz curve, the ABC curve graphically represents the cumulative distribution function. Using this curve, the algorithm determines optimal cutoffs by leveraging the distributional properties of the data. Positive-valued data are divided into three disjoint subsets: A (the most profitable or largest values, representing the ‘important few’), B (values where yield matches effort), and C (the least profitable or smallest values, representing the ‘trivial many’).

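Let $Y = \{y_1, \dots, y_n\}$ be a set of n positive observations. For the purpose of defining the plausible naïve Bayes likelihoods in dimension $j$, the observations are indexed in non-decreasing order, i.e., $y_1 \le y_2 \le \dots \le y_n$. Let $p_i = i/n$ for $i = 1, \dots, n$; the Lorenz curve $L(p_i)$ is defined [30] as

$$L(p_i) = \frac{\sum_{l=1}^{i} y_l}{\sum_{l=1}^{n} y_l}, \qquad L(0) = 0. \tag{11}$$

For all other p ∈ [0, 1] with p ≠ p_i, L(p) is calculated by linear, spline, or other suitable interpolation on the points $(p_i, L(p_i))$ [31].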
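Equation (11), together with linear interpolation between the points $(p_i, L(p_i))$, can be sketched as follows. Linear interpolation is only one of the options mentioned above, and the function names are illustrative.

```python
import numpy as np


def lorenz_curve(values):
    """Points (p_i, L(p_i)) of the Lorenz curve, including (0, 0)."""
    y = np.sort(np.asarray(values, dtype=float))  # non-decreasing order
    if y.size == 0 or np.any(y < 0) or y.sum() <= 0:
        raise ValueError("expects non-negative values with a positive sum")
    p = np.arange(y.size + 1) / y.size             # p_0 = 0, p_i = i / n
    L = np.concatenate(([0.0], np.cumsum(y) / y.sum()))
    return p, L


def lorenz_at(values, q):
    """L(q) for arbitrary q in [0, 1] by linear interpolation between the points."""
    p, L = lorenz_curve(values)
    return np.interp(q, p, L)


print(lorenz_at([4.0, 1.0, 3.0, 2.0], [0.25, 0.5, 0.625]))
```

For the values 1, 2, 3, 4, the cumulative shares are 0.1, 0.3, 0.6, and 1.0. Halfway between p = 0.5 and p = 0.75, the interpolated value is 0.45. Equal values give the diagonal L(p) = p.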

Let L(p) be the Lorenz curve in Equation (11); then the ABC curve is formally defined as

Then the break-even point satisfies

and the submarginal point

is located by minimizing the distance from the ABC curve to the maximal-yield point at (1,1) after passing the break-even point with

. The break-even point yields the BC limit, and, hence, the threshold

ε with

Equation (12) defines the BC limit.

Inspired by this idea, we reformulate the computation of ε from the posterior to the joint likelihood, as follows. An observation $x$ is considered uncertain in feature $j$ whenever the joint likelihood of every class falls below the confidence threshold ε, i.e.,

$$P(C_i)\, p(x_j \mid C_i) < \varepsilon \quad \text{for all } i \in \{1, \dots, k\}, \tag{13}$$

where $p(x_j \mid C_i)$ denotes the marginal of the class distribution in dimension $j$. We apply the threshold ε to identify low-evidence regions where the plausibility correction (Equation (15)) may be considered. Such uncertain cases might be classified, against human intuition, into a class whose probability density center is far away, even though closer class centers are available [14]. A “reasonable” assignment is then to assign the case to the class whose probability centroid is closest, which can be calculated using Voronoi cells for d > 1. For the one-dimensional case, [14] allow the closest mode to be used for classification.

We estimate the univariate location of each class's likelihood mode on a per-feature basis using the half-sample mode [32]. For small sample sizes (n < 100), we use the

estimator recommended by [33]. As a safeguard, estimated modes are only considered for resolving uncertain cases if they are well separated, i.e., if their distance from each other is at least the 10th percentile within the training data:

This safeguard is motivated by the potential presence of inaccurately estimated modes or of overlapping (non-separable) classes.
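A sketch of the half-sample mode [32] follows. It repeatedly keeps the ⌈n/2⌉ consecutive sorted values with the smallest range until at most three values remain. The final 1–3 values are resolved by averaging the closer pair, which is the usual convention. Tie handling (the first minimal window wins) is a choice of this sketch.

```python
import math


def half_sample_mode(values):
    """Half-sample mode: repeatedly keep the ceil(n/2) consecutive sorted values
    with the smallest range, then resolve the last 1-3 values directly."""
    x = sorted(values)
    if not x:
        raise ValueError("needs at least one value")
    while len(x) > 3:
        h = math.ceil(len(x) / 2)
        start = min(range(len(x) - h + 1), key=lambda i: x[i + h - 1] - x[i])
        x = x[start:start + h]
    if len(x) == 3:
        left, right = x[1] - x[0], x[2] - x[1]
        if left < right:
            return (x[0] + x[1]) / 2
        if right < left:
            return (x[1] + x[2]) / 2
        return x[1]
    return sum(x) / len(x)


print(half_sample_mode([1, 5, 5, 5, 6, 9, 20]))  # 5.0
```

The estimator needs no bandwidth, and the outliers 1 and 20 do not pull it away from the dense region around 5.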

The correction applies when the class likelihood for an observation x is uncertain in feature $j$ (Equation (13) holds) and some class has a mode that is well separated from that of the highest-likelihood class (Equation (14)). In that case, we perform a conservative, local two-class correction of the class likelihoods of that feature as follows.

Let $i^{*}$ be the index of the uncorrected class likelihood with the largest value. Let $l$ be the index of the class likelihood whose mode is closest to $x_j$ among those for which Equation (14) holds for $i^{*}$ and $l$.

Then we update the two involved class likelihoods by replacing the value of the class likelihood $p(x_j \mid C_l)$ with the (former) top class likelihood $p(x_j \mid C_{i^{*}})$. In addition, the operation subtracts δ from the former top likelihood and adds δ to the runner-up, so the relative advantage of the runner-up over the former top increases by 2δ. This is sufficient to resolve many marginal posterior ties or implausibilities (as shown in the example in Figure 1) while remaining conservative. All other class likelihoods for this feature remain unchanged in Equation (15):

$$\tilde{p}(x_j \mid C_i) = \begin{cases} p(x_j \mid C_{i^{*}}) - \delta, & i = i^{*}, \\ p(x_j \mid C_{i^{*}}) + \delta, & i = l, \\ p(x_j \mid C_i), & \text{otherwise.} \end{cases} \tag{15}$$

Equation (4) is then applied to the locally corrected class likelihoods $\tilde{p}(x_j \mid C_i)$. As δ → 0, the correction vanishes and the method reduces to the reasonable-Bayes rule. A positive δ introduces a conservative “plausible-Bayes” adjustment, with δ controlling the strength of the plausibility correction.
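The following sketch applies the correction to a single feature. It uses the uncertainty test of Equation (13) and the two-class transfer of Equation (15) as reconstructed above. A plain minimum distance `min_sep` stands in for the percentile criterion of Equation (14). The values of `eps`, `min_sep`, and `delta` are hypothetical (the paper derives ε from the computed ABC analysis). The extra guard that the runner-up's mode must be closer to $x_j$ is an assumption of this sketch. The example reuses the hypothetical height models from the introduction.

```python
import math


def plausible_correction(lik, priors, modes, x_j, eps, min_sep, delta):
    """Sketch of the local two-class plausibility correction for one feature j.

    lik[c] is p(x_j | C_c), priors[c] is P(C_c), modes[c] is the estimated class mode.
    Returns a corrected copy of `lik`; unchanged if the case is not uncertain.
    """
    corrected = dict(lik)
    # Uncertainty test (Equation (13)): every joint likelihood is below eps
    if any(priors[c] * lik[c] >= eps for c in lik):
        return corrected
    top = max(lik, key=lik.get)
    # Classes whose mode is well separated from the top class's mode (stand-in for Eq. (14))
    candidates = [c for c in lik if c != top and abs(modes[c] - modes[top]) >= min_sep]
    if not candidates:
        return corrected
    runner = min(candidates, key=lambda c: abs(x_j - modes[c]))
    # Extra guard of this sketch: only act if the runner-up's mode is closer to x_j
    if abs(x_j - modes[runner]) >= abs(x_j - modes[top]):
        return corrected
    corrected[runner] = lik[top] + delta         # runner-up takes the former top value + delta
    corrected[top] = max(lik[top] - delta, 0.0)  # former top loses delta, clipped at zero
    return corrected


def gauss_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


x = 230.0  # the "giant" from the height example (hypothetical class models)
lik = {"female": gauss_pdf(x, 160, 10), "male": gauss_pdf(x, 180, 5)}
priors = {"female": 0.5, "male": 0.5}
modes = {"female": 160.0, "male": 180.0}
fixed = plausible_correction(lik, priors, modes, x, eps=1e-6, min_sep=5.0, delta=1e-14)
print(max(lik, key=lik.get), "->", max(fixed, key=fixed.get))  # female -> male
```

Observations with sufficient evidence, such as 165 cm, are returned unchanged. The transfer only acts in the low-evidence tail.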

2.6. Practical Considerations

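To avoid numerical underflow, Equation (4) can be computed in log scale, with either the uncorrected or the locally corrected class likelihoods $\tilde{p}(x_j \mid C_i)$:

$$\hat{c}(x) = \arg\max_{i \in \{1, \dots, k\}} \left[ \log P(C_i) + \sum_{j=1}^{d} \log p(x_j \mid C_i) \right] \tag{16}$$

This selects the label of the class with the highest probability.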

In practice, before this function can be computed, it must be determined whether there are enough samples to yield a proper PDE. Based on empirical benchmarks [16], $p(x_j \mid C_i)$ can be estimated with the PDE if there are more than 50 samples and at least 12 unique values; otherwise, the estimates might deviate. If there are too few samples, the density estimation defaults to simple histogram binning with the bin width defined by Scott's rule [34].
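The fallback rule can be sketched as follows. It uses Scott's normal-reference bin width, h = 3.49 · sd · n^(-1/3). The thresholds 50 and 12 are taken from the text. Returning `None` when the PDE criteria are met, and using a single bin for degenerate data, are choices of this sketch; the PDE branch itself is not shown.

```python
import numpy as np


def scott_bin_width(x):
    """Scott's normal-reference rule: h = 3.49 * sd * n^(-1/3)."""
    x = np.asarray(x, dtype=float)
    return 3.49 * x.std(ddof=1) * x.size ** (-1.0 / 3.0)


def histogram_fallback(x, min_n=50, min_unique=12):
    """Return (density, edges) from Scott-rule binning when there are too few samples
    for the PDE; return None when the PDE criteria are met."""
    x = np.asarray(x, dtype=float)
    if x.size > min_n and np.unique(x).size >= min_unique:
        return None
    h = scott_bin_width(x) if x.size > 1 else 0.0
    span = x.max() - x.min()
    n_bins = int(np.ceil(span / h)) if h > 0 and span > 0 else 1
    density, edges = np.histogram(x, bins=max(n_bins, 1), density=True)
    return density, edges


print(histogram_fallback([1, 2, 2, 3, 3, 3, 4, 4, 5]))
```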

Let $\eta > 0$ be a small constant. To ensure numerical stability in Equation (16), the likelihoods $p(x_j \mid C_i)$ are clipped to the range $[\eta, 1]$. The reason is that the density after smoothing may take values slightly below zero because of the convolution (cf. [5]). In addition, density estimates can have spikes above 1. Moreover, we ensure numerical safety by clipping the corrected likelihoods to be non-negative after applying Equation (15).

Equation (4) may also fail to produce a decision when two or more posteriors are equal after the priors are taken into account. In such cases, the class is assigned at random among the tied classes.
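The following sketch covers Equation (16) with clipping to $[\eta, 1]$ and random tie-breaking. It shows why the log scale matters: with 500 features, the plain products in Equation (4) underflow to 0.0 for every class, whereas the log scores still separate the classes. The value η = 1e-12 and the function names are hypothetical.

```python
import numpy as np


def log_scores(likelihoods, priors, eta=1e-12):
    """Log-scale version of Equation (4): log P(C_i) + sum_j log p(x_j | C_i).

    likelihoods has shape (k classes, d features); values are clipped to [eta, 1]
    before taking logs (eta is a hypothetical choice for this sketch)."""
    lik = np.clip(np.asarray(likelihoods, dtype=float), eta, 1.0)
    return np.log(np.asarray(priors, dtype=float)) + np.log(lik).sum(axis=1)


def predict(likelihoods, priors, rng=None, eta=1e-12):
    """Index of the class with the highest log score; exact ties are broken at random."""
    scores = log_scores(likelihoods, priors, eta)
    tied = np.flatnonzero(scores == scores.max())
    rng = np.random.default_rng() if rng is None else rng
    return int(rng.choice(tied))


d = 500
lik = np.vstack([np.full(d, 1e-3), np.full(d, 2e-3)])
priors = np.array([0.5, 0.5])
raw = priors * lik.prod(axis=1)  # both products underflow to 0.0
print(raw, predict(lik, priors))
```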

Equation (15) rests on the assumption that the mode of each class can be estimated correctly from the data, which may fail in practice. As a safeguard, we offer the user the following option. On the training data, we compute the classification assignments defined by Equation (4), transformed according to Equation (16), both with and without the correction of Equation (15).

Assuming the priors are not excessively imbalanced, we evaluate the Shannon entropy of each classification result and choose the configuration that yields the highest entropy. The Shannon entropy H of a classification with priors (class proportions) $p_i$ for $i = 1, \dots, k$ is defined as

$$H = -\frac{1}{Z} \sum_{i=1}^{k} p_i \log p_i$$

with the normalization factor $Z = \log k$.

A higher entropy indicates a potentially more informative classification.
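The following sketch implements the entropy-based choice between the corrected and uncorrected configurations. It assumes the normalization Z = log k, so that H lies in [0, 1]. Giving ties to the corrected variant is a choice made only for this sketch.

```python
import numpy as np


def normalized_entropy(labels, k):
    """Shannon entropy of the predicted class proportions, divided by Z = log(k)."""
    if k < 2:
        raise ValueError("needs at least two classes")
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log(p)).sum() / np.log(k))


def choose_configuration(pred_corrected, pred_uncorrected, k):
    """Keep the configuration whose training-set predictions have the higher entropy
    (ties go to the corrected variant, a choice made only for this sketch)."""
    h_c = normalized_entropy(pred_corrected, k)
    h_u = normalized_entropy(pred_uncorrected, k)
    return ("corrected", h_c) if h_c >= h_u else ("uncorrected", h_u)


print(choose_configuration([0, 1, 0, 1], [0, 0, 0, 1], k=2))
```

The criterion favors predictions spread evenly over the classes. This is why the text restricts it to datasets whose priors are not excessively imbalanced.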

Finally, because of the independence assumption underlying Equation (4) and the subsequent equations, we provide a scalable multicore implementation of the plausible Bayes classifier that processes every feature separately. It proceeds as follows:

- For each feature dimension, we estimate a single, class-independent Pareto radius, as defined in Equation (5), rather than a separate radius for each feature–class combination. Empirical evaluations indicate that this approximation is sufficiently accurate for practical applications.
- For each class in each dimension, we compute the raw conditional PDE $\hat{p}(\cdot \mid C_i)$ on an evenly spaced kernel grid covering the range of the data.
- Then, we compute the smoothed likelihood functions $p(x_j \mid C_i)$ per feature dimension from the discrete conditional PDE.

We call this approach the Plausible Pareto Density Estimation-based flexible Naïve Bayes classifier (PDENB).

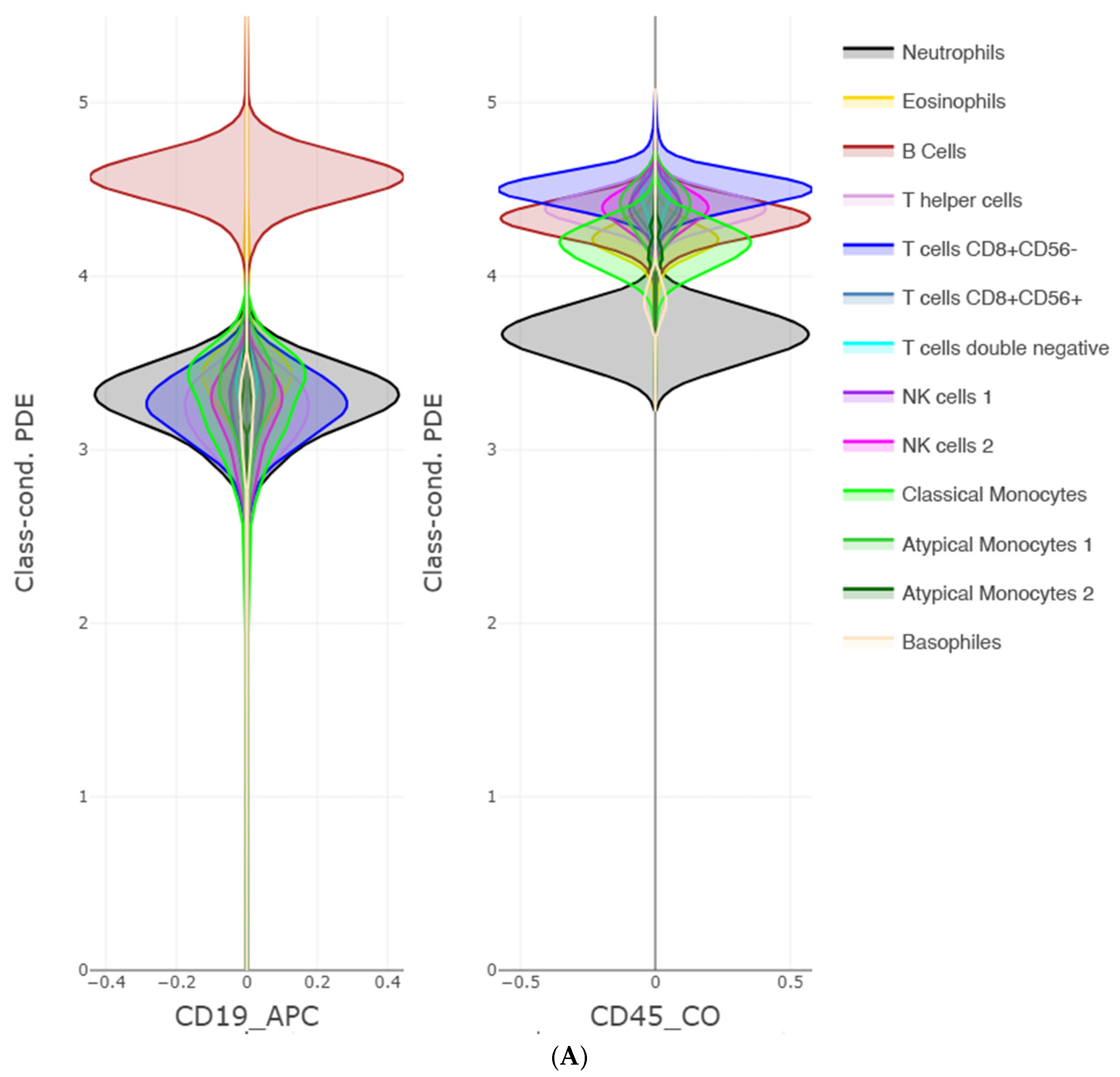

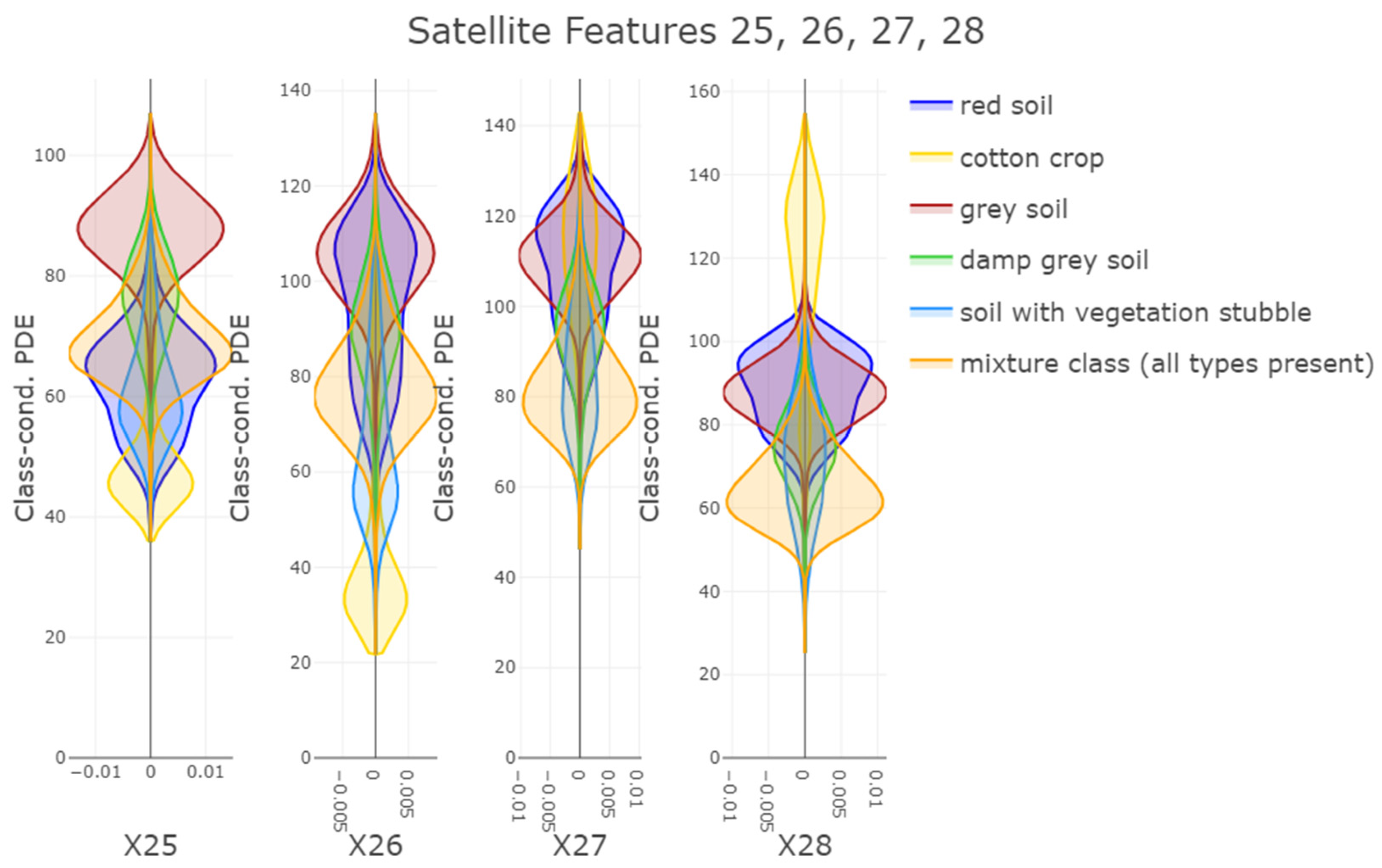

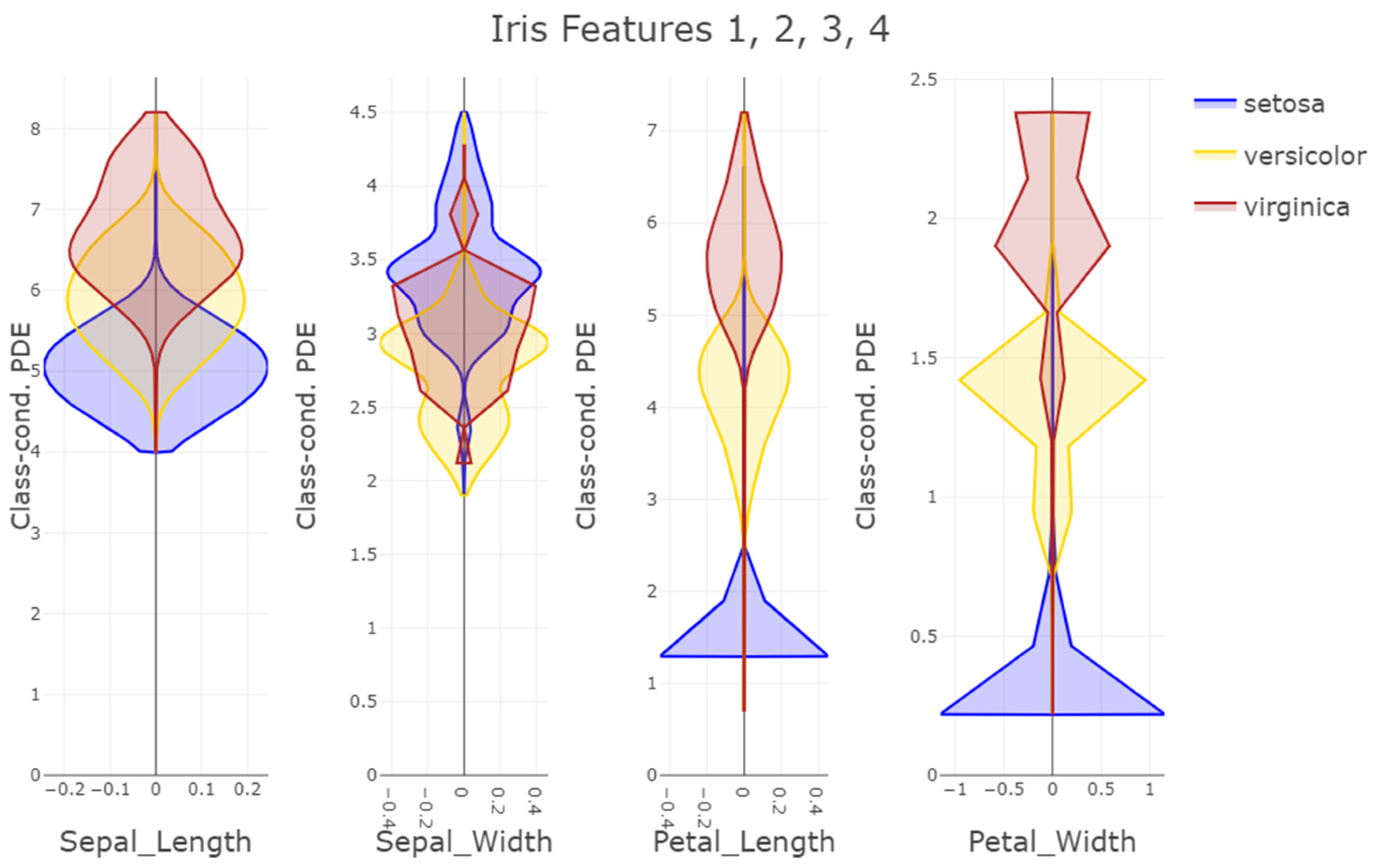

2.7. Interpretability of PDENB

A one-dimensional density estimation is required for the Naïve Bayes Classifier to compute the class-conditional likelihood of a feature as one of three parts of the Bayes theorem, yielding the final Posterior. This class-conditional likelihood allows a two-dimensional visualization as a line plot for a single feature. The plot gives insight into the class-wise distribution of the feature. Scaling the likelihoods with the weight of the prior obtained from the frequentist approach [

6] yields correct probabilistic proportions between the class-conditional likelihoods, which can be represented by different colors. Rotating the plots by 90 degrees and mirroring them in a similar way to that used for violin and mirrored density plots [

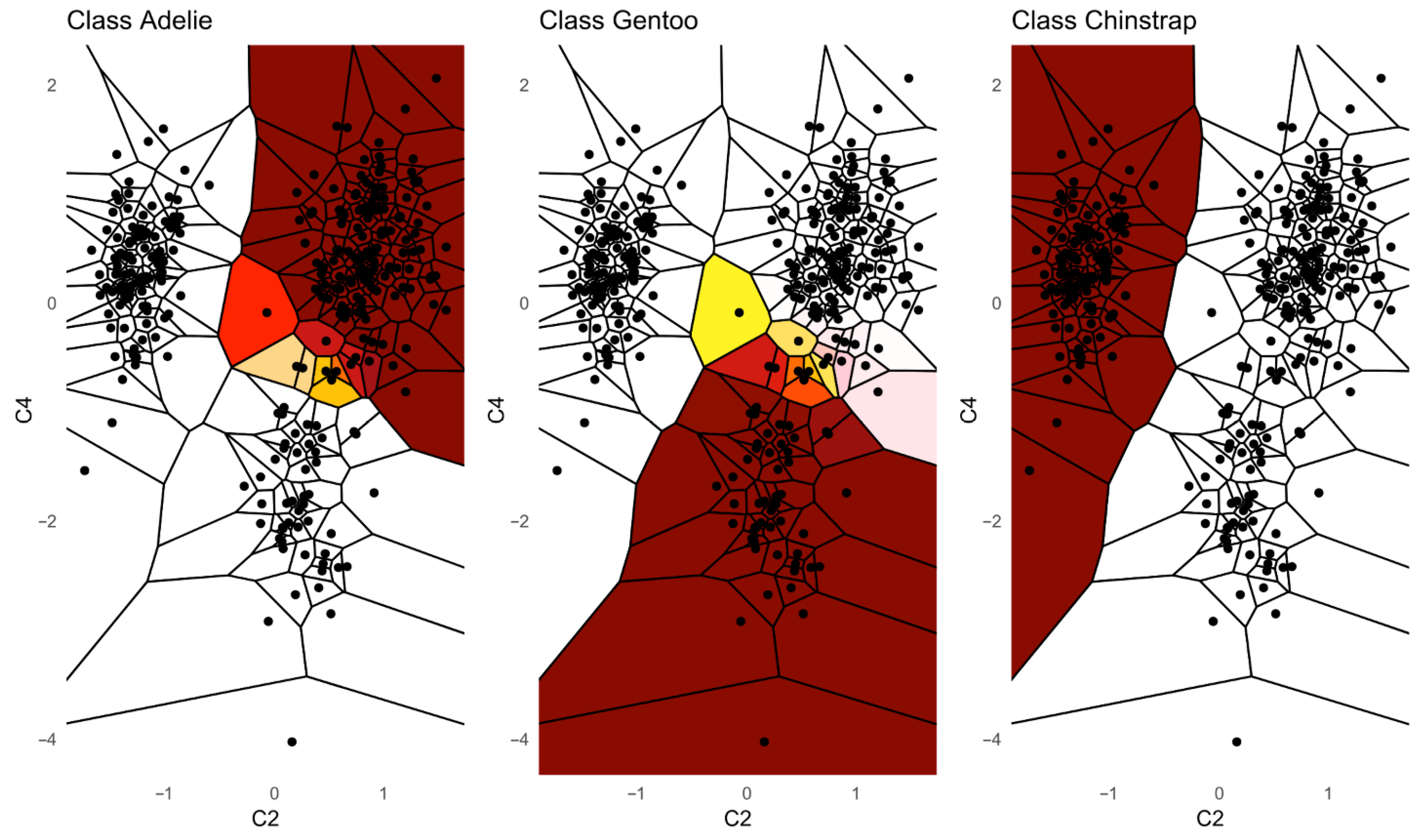

16] allows a lineup of the likelihoods to be created for multiple features at once. Such a visualization allows for interpretation based on the class-wise distribution of features. Most often, the colored class conditional likelihoods are overlapping and non-separable by solely one feature. However, in case of a high performing naïve Bayes classifier, overlaps do not indicate non-separability, but rather, first of all, a class tendency for each feature, and second, the existence of certain combinations and the disqualification of other combinations in question. These visual implications can be the starting point for a domain expert to find relations between features and classes, resulting in explanations.

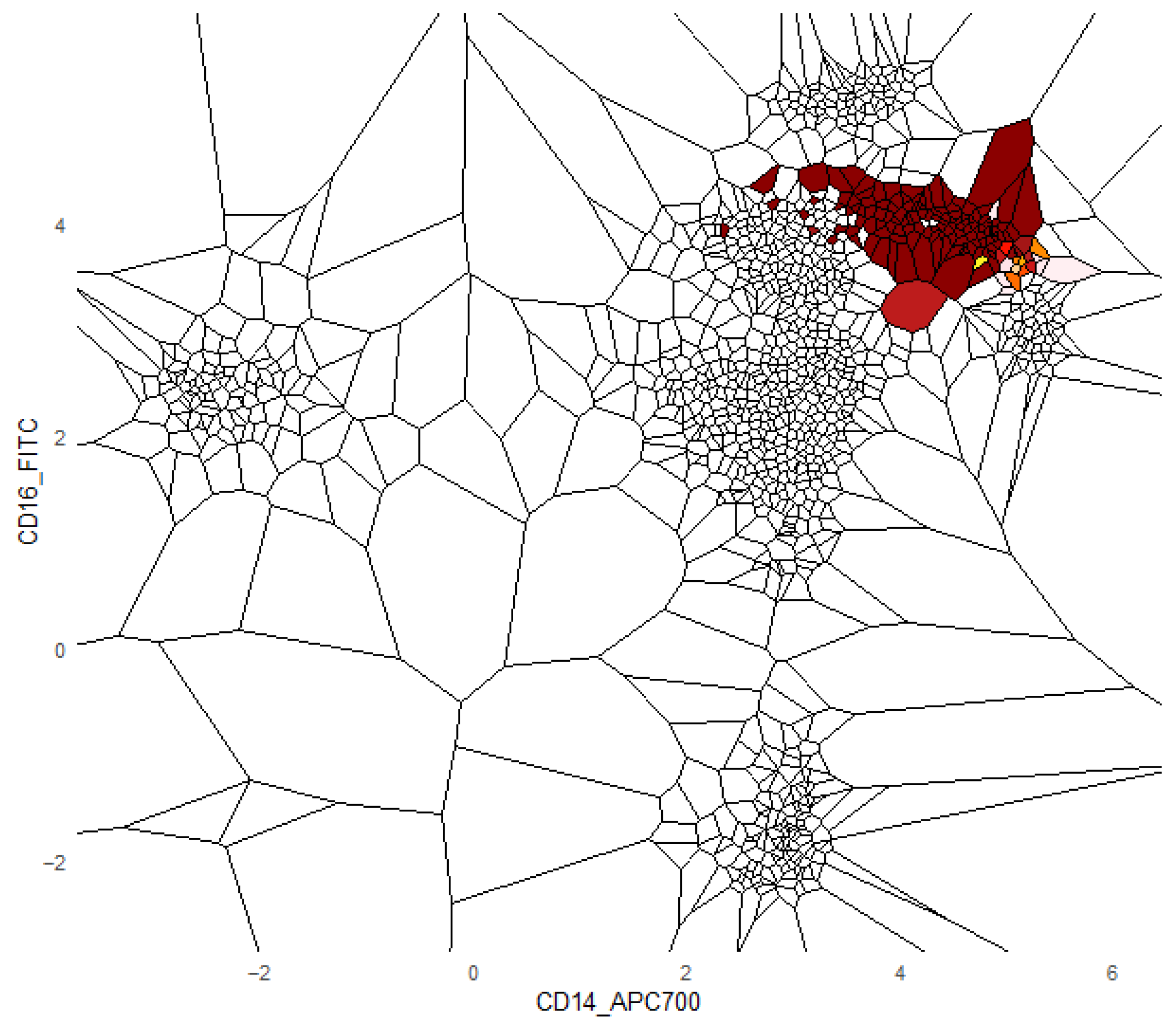

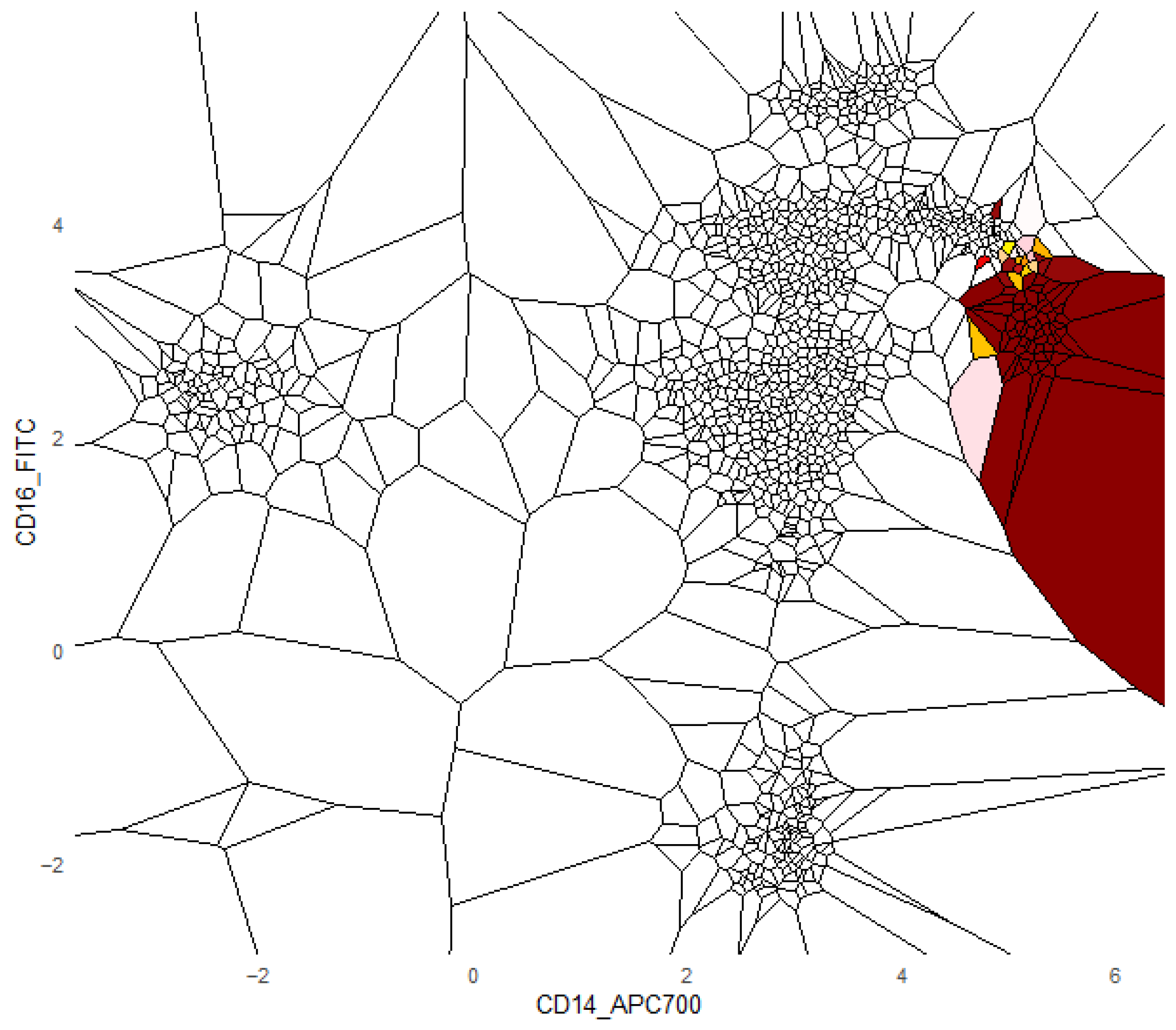

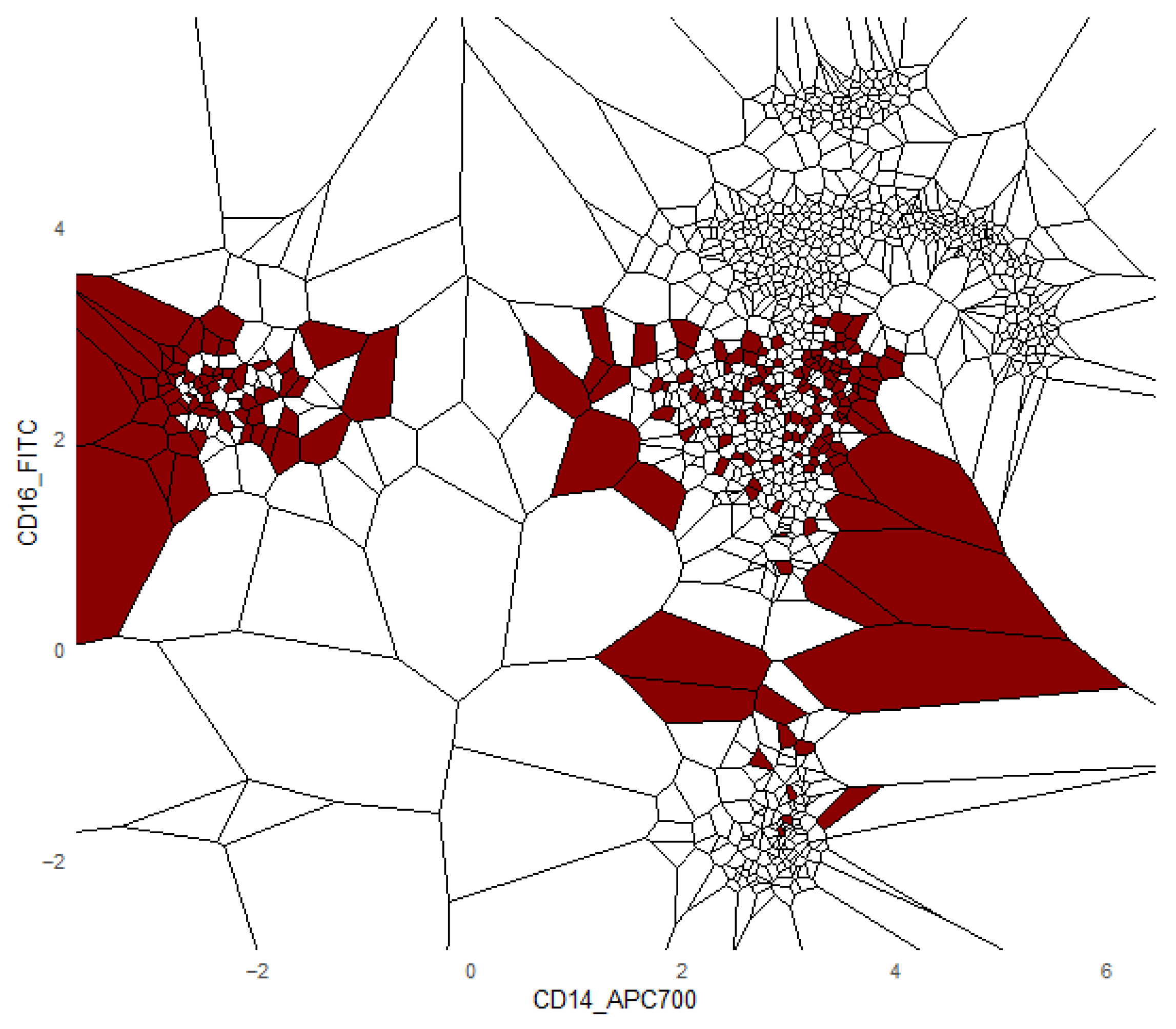

Additionally, we provide a visualization of one-class-versus-all decision boundaries in 2D, as follows. Given a two-dimensional slice $G \subset \mathbb{R}^2$ of the data, the Voronoi cell associated with a point $g \in G$ is the region of the plane consisting of all points that are closer to g than to any other point v in the slice. It is defined by

$$V(g) = \left\{ y \in \mathbb{R}^2 : \lVert y - g \rVert \le \lVert y - v \rVert \ \text{for all } v \in G \setminus \{g\} \right\}.$$

That is, $V(g)$ contains all points y whose Euclidean distance to g is less than or equal to their distance to any other point $v \in G$. Each Voronoi cell $V(g)$ is colored according to the binned posterior probability $P(C_i \mid g)$, thereby mapping regions of the plane to their inferred class likelihoods. The binning can be performed either with equal-width bins, using Scott's rule for the bin width [34], or, less efficiently, with the DDCAL clustering algorithm [35]. The user can visualize any set of slices of interest. This approach is motivated by human pattern recognition and the subsequent classification of diseases from patterns identified in two-dimensional slices of data [36]. Such slices appear to be sufficient for a large variety of multivariate data distributions.
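Instead of computing exact Voronoi polygons (for which `scipy.spatial.Voronoi` could be used), this sketch rasterizes the map. Each grid point takes the binned posterior of its nearest data point, which is exactly the Voronoi cell it falls in. The bins use equal widths from Scott's rule. The posterior values here are hypothetical and only increase from left to right.

```python
import numpy as np


def scott_edges(values):
    """Equal-width bin edges with Scott's rule width 3.49 * sd * n^(-1/3)."""
    v = np.asarray(values, dtype=float)
    h = 3.49 * v.std(ddof=1) * v.size ** (-1.0 / 3.0) if v.size > 1 else 0.0
    span = v.max() - v.min()
    n_bins = int(np.ceil(span / h)) if h > 0 and span > 0 else 1
    return np.linspace(v.min(), v.max(), n_bins + 1)


def voronoi_posterior_map(points, posterior, grid_x, grid_y):
    """Rasterized Voronoi map: every grid point receives the posterior bin of its nearest data point."""
    pts = np.asarray(points, dtype=float)      # (n, 2): one 2D slice of the data
    post = np.asarray(posterior, dtype=float)  # (n,): P(C_i | g) for one class i
    edges = scott_edges(post)
    if edges.size > 2:
        bin_id = np.digitize(post, edges[1:-1])  # bin indices 0 .. n_bins - 1
    else:
        bin_id = np.zeros(post.size, dtype=int)
    gx, gy = np.meshgrid(grid_x, grid_y)
    cells = np.column_stack([gx.ravel(), gy.ravel()])
    d2 = ((cells[:, None, :] - pts[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)                # generator of the Voronoi cell of each grid point
    return bin_id[nearest].reshape(gx.shape)


xs = np.linspace(0.0, 1.0, 200)
points = np.column_stack([xs, np.zeros_like(xs)])
posterior = xs  # hypothetical posterior increasing to the right
bins = voronoi_posterior_map(points, posterior, np.linspace(0.0, 1.0, 11), [0.0])
print(bins)
```

The returned integer array can be passed to any image or contour plotting routine with a color map.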

2.8. Benchmark Datasets and Conventional Naïve Bayes Algorithms

For the benchmark, we selected 14 datasets: 13 from the UCI repository and a 14th dataset (“Cell populations”) containing manually identified cell populations [37]. Full descriptions of the UCI datasets, attribute definitions, and links to the original sources are available on the repository page of each dataset [38] (for example, Iris: https://archive.ics.uci.edu/dataset/53/iris, accessed on 15 December 2025). The Cell populations dataset is an extended version of the data used in [37]. It contains more populations than that publication because the authors labeled populations at a finer granularity. A detailed description of the Cell populations dataset is given in [37].

Prior to analysis, the datasets were preprocessed using the rotation methods of [39,40], taking into consideration Refs. [41,42], as implemented in the R package “ProjectionBasedClustering”, available on CRAN [43]. A signed log transformation was applied to the Cell populations dataset for better interpretability, but no Euclidean optimized variance scaling was used [44]. Thereafter, the correlations of the features were computed.

Table 1 presents important properties, meta information, and correlations of the processed datasets. These include the number of classes, the distribution of cases per class (to judge class imbalance), and various dependency measures related to the feature independence assumption of the naïve Bayes classifier. Because the measured correlation depends on the choice of coefficient, the Pearson, Spearman's rank, Kendall's rank, and Xi correlation coefficients [45] are each summarized by their minimum and maximum values. The Pearson and Spearman correlation coefficients tend to be higher than the Xi correlation coefficient by around 0.3. Kendall's tau values also tend to be higher than the Xi correlation coefficients, but not as high as the Pearson or Spearman coefficients.
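The gap between the coefficients is easiest to see for a non-monotone dependence. The following sketch implements the Xi coefficient of [45] in its no-ties form, 1 − 3·Σ|r_{i+1} − r_i| / (n² − 1), where r_i is the rank of y_i after sorting the pairs by x. With ties, the general formula of [45] applies instead. The tiny linear term in the example only serves to avoid ties.

```python
import numpy as np


def xi_correlation(x, y):
    """Chatterjee's xi coefficient, no-ties form: 1 - 3 * sum|r_{i+1} - r_i| / (n^2 - 1),
    where r_i is the rank of y_i after the pairs are sorted by x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    y_sorted_by_x = y[np.argsort(x, kind="stable")]
    ranks = np.argsort(np.argsort(y_sorted_by_x, kind="stable"), kind="stable") + 1
    return 1.0 - 3.0 * np.abs(np.diff(ranks)).sum() / (n**2 - 1)


x = np.linspace(-1.0, 1.0, 200)
y = x**2 + 1e-9 * x  # non-monotone functional dependence, no ties
print(np.corrcoef(x, y)[0, 1], xi_correlation(x, y))
```

Pearson's r is essentially zero here, although y is a function of x, whereas ξ is close to 1. Conversely, for monotone relations ξ is usually lower than Pearson's or Spearman's coefficient.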

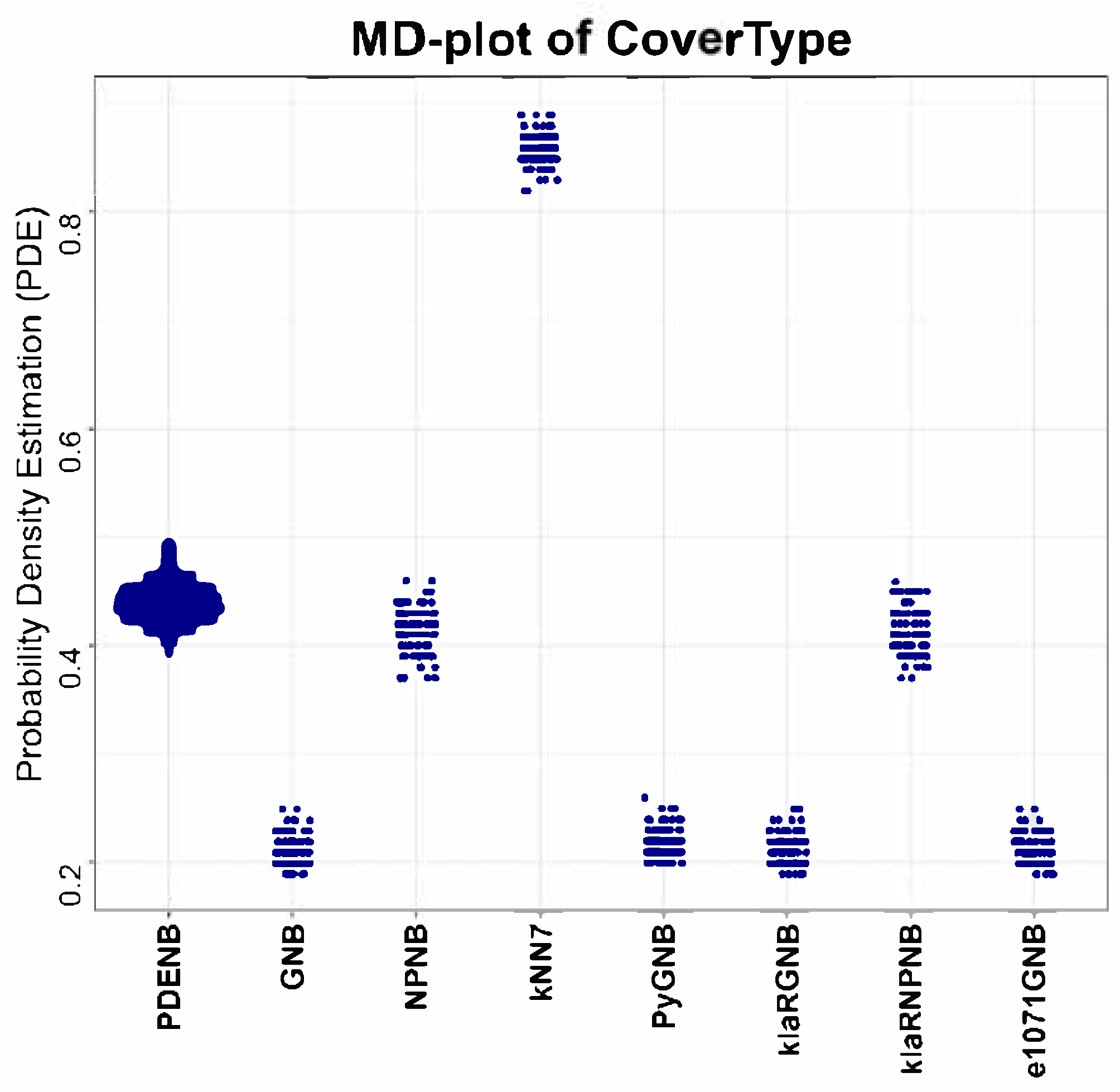

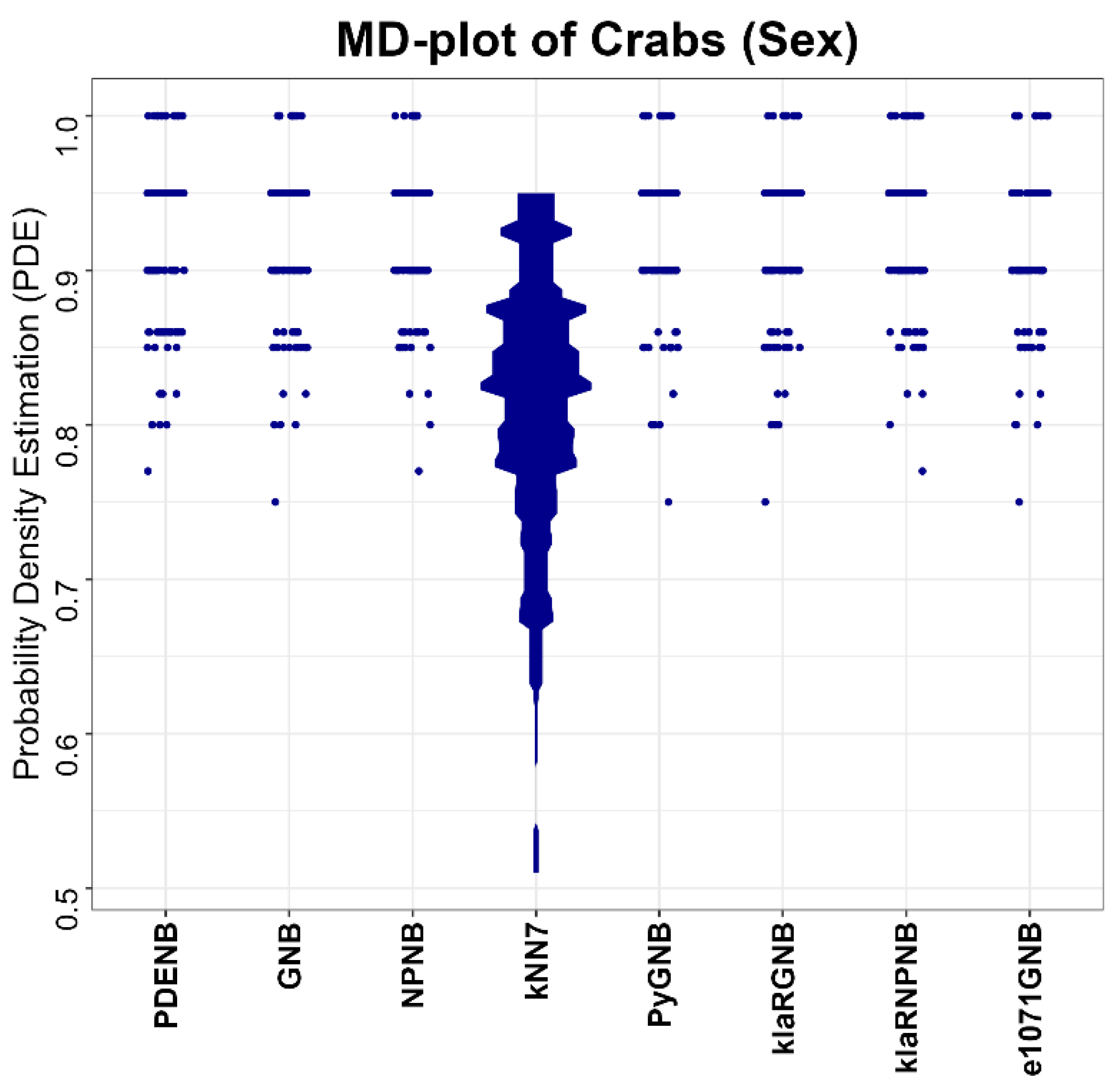

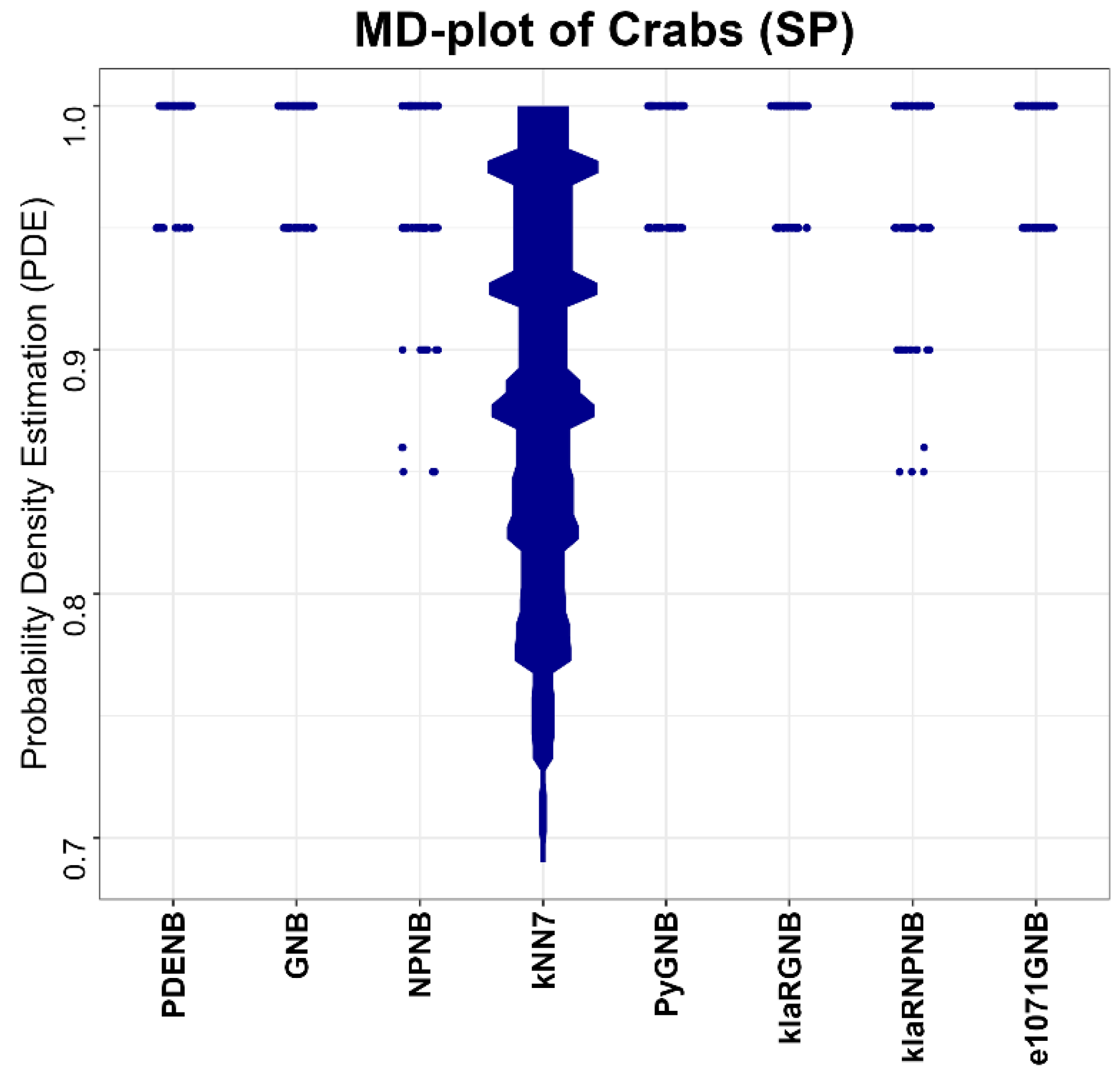

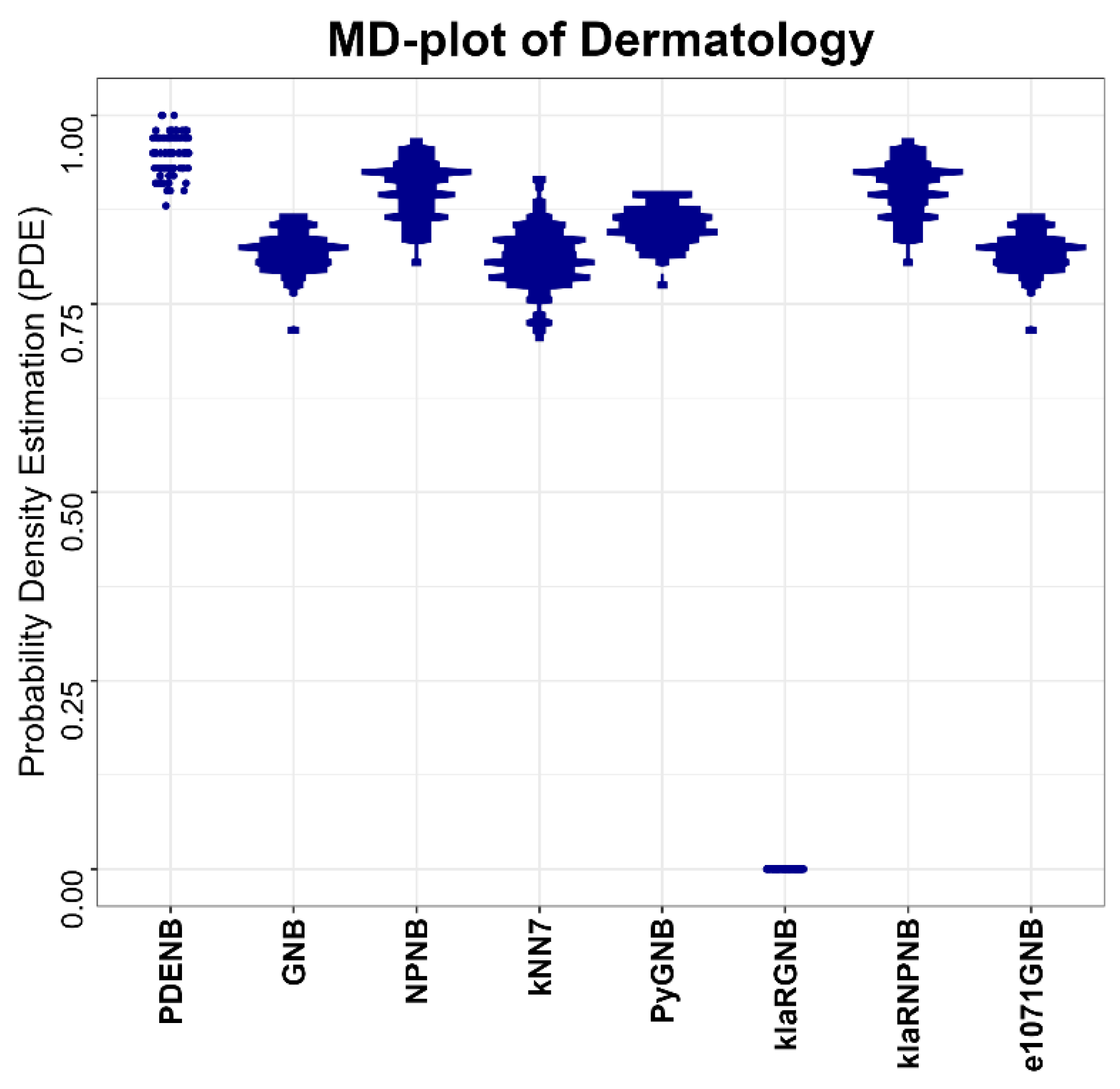

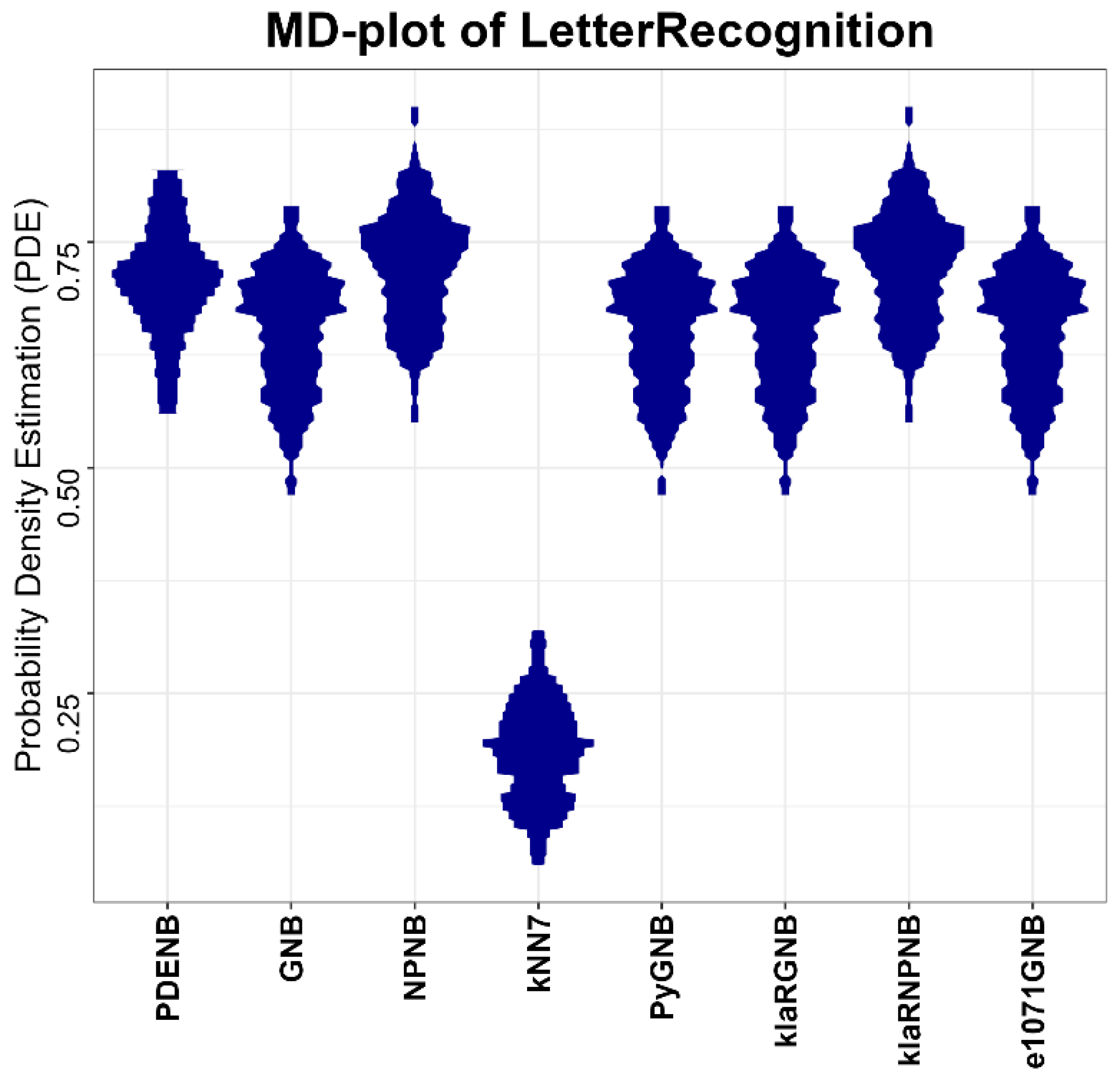

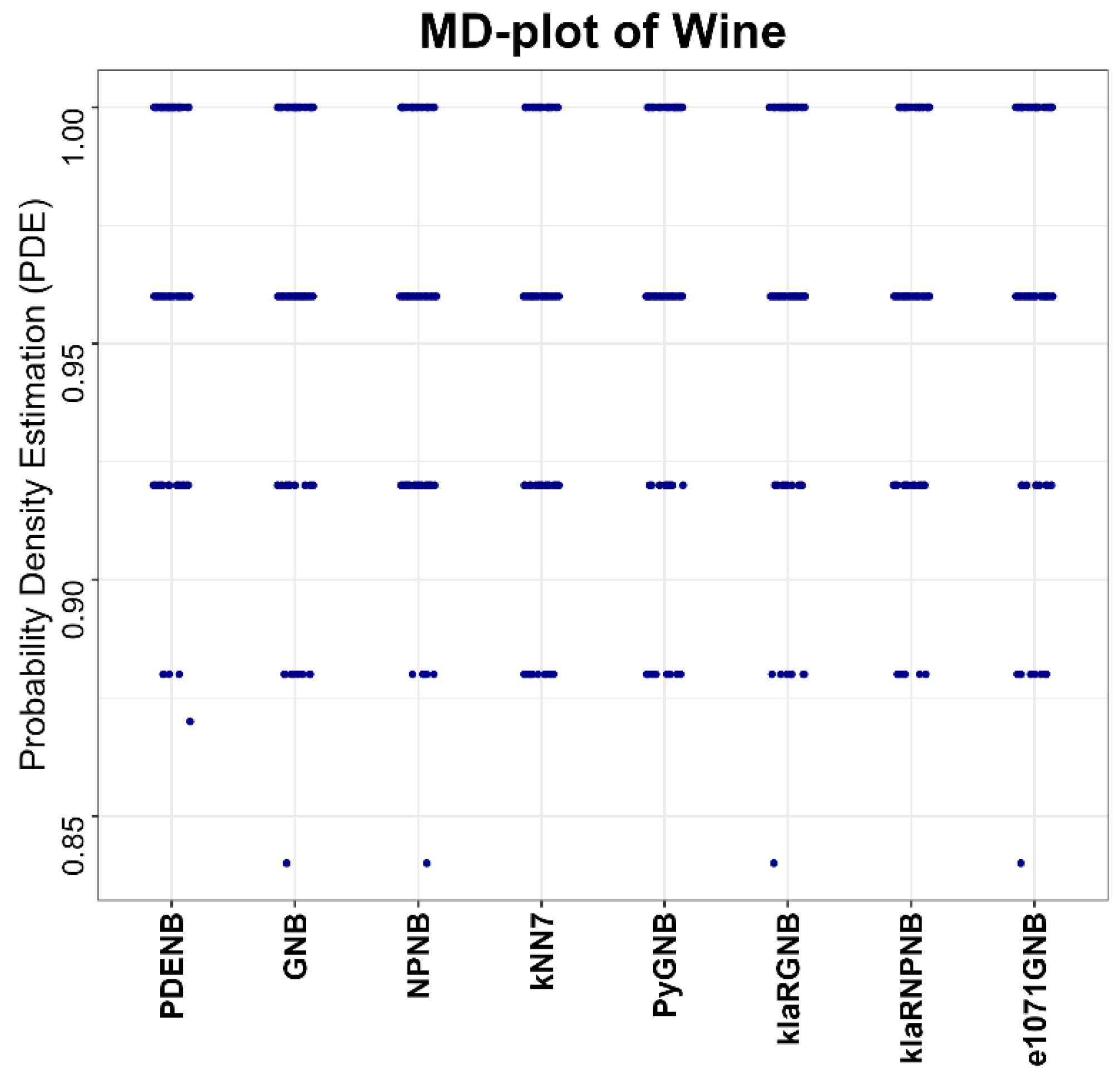

Performance is evaluated for the Plausible Pareto Density Estimation-based flexible Naïve Bayes classifier (PDENB) in comparison to the following classifiers:

- a Gaussian naïve Bayes classifier (GNB) and a nonparametric naïve Bayes classifier (NPNB) from the R package “naivebayes”, available on CRAN [20];
- a fast implementation of the k-nearest neighbor classifier (kNN, used as 7NN) from the R package “FNN”, available on CRAN [46];
- a Gaussian naïve Bayes classifier from the Python package “sklearn” [47] (PyGNB);
- a Gaussian (klaRGNB) and a nonparametric naïve Bayes classifier (klaRNPNB) from the R package “klaR”, available on CRAN [48];
- a Gaussian naïve Bayes classifier from the R package “e1071”, available on CRAN [49] (e1071GNB).

The naïve Bayes methods were applied with their default settings, distinguishing between the “Gaussian” and “nonparametric” versions. The parameter k of the kNN classifier was set to 7.

4. Discussion

We introduced PDENB, a Pareto Density-based Plausible Naïve Bayes classifier that combines assumption-free, neighborhood-based density estimation with smoothing and visualization tools to produce robust, interpretable classification. Our empirical benchmark across 14 datasets and its dedicated application to multicolor flow cytometry demonstrate several consistent advantages of this approach.

First, PDENB is competitive with, and frequently superior to, established naïve Bayes implementations and nonparametric variants. Using repeated 80/20 hold-out evaluations (or resampling for very large datasets) and the Matthews Correlation Coefficient (MCC) as the performance measure, PDENB attains top average ranks (Table 3) and achieves very high per-dataset performance on several problems (e.g., MCC ≥ 0.95 for Iris, Penguins, Wine, Dermatology, and the Cell populations dataset). The permutation tests (with multiple-comparison correction) aggregated in Table 3 indicate that these improvements are not merely random fluctuations: they translate into statistically detectable differences for many dataset–classifier pairs (see also Appendix Tables A1 and A2). Note that we did not apply variance-optimized feature scaling for the benchmark; because the decisions of k-nearest neighbors are distance-based, kNN is not expected to attain its best possible performance under our preprocessing. The choice of scaling and distance is often empirical and context-dependent, and there is no single universally “correct” recipe.
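MCC is the performance measure throughout the benchmark. For more than two classes, a common generalization is Gorodkin's R_K statistic, computed from the confusion matrix. The sketch below assumes this form, since the text does not state which multiclass variant or implementation was used. The helper name `multiclass_mcc` is hypothetical.

```python
import numpy as np


def multiclass_mcc(y_true, y_pred):
    """Matthews Correlation Coefficient for K >= 2 classes (Gorodkin's R_K form)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    labels = np.unique(np.concatenate([y_true, y_pred]))
    pos = {lab: i for i, lab in enumerate(labels)}
    C = np.zeros((len(labels), len(labels)))
    for t, p in zip(y_true, y_pred):
        C[pos[t], pos[p]] += 1       # rows: true class, columns: predicted class
    t_k = C.sum(axis=1)               # number of cases that truly belong to class k
    p_k = C.sum(axis=0)               # number of cases predicted as class k
    c = np.trace(C)                   # correctly classified cases
    s = C.sum()                       # all cases
    numerator = c * s - t_k @ p_k
    denominator = np.sqrt((s ** 2 - p_k @ p_k) * (s ** 2 - t_k @ t_k))
    # Convention: MCC = 0 when a marginal is degenerate (e.g. a single predicted class)
    return 0.0 if denominator == 0 else float(numerator / denominator)


print(multiclass_mcc([0, 0, 1, 1], [0, 1, 1, 1]))        # 2 / sqrt(12), about 0.577
print(multiclass_mcc(["a", "b", "c"], ["a", "b", "c"]))  # 1.0
```

For two classes the result coincides with the familiar binary formula (TP·TN − FP·FN)/√((TP+FP)(TP+FN)(TN+FP)(TN+FN)).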

Second, PDENB’s core strength is its flexibility in modeling complex, non-Gaussian feature distributions without parametric assumptions. The Satellite dataset illustrates this point: its feature distributions (see Appendix C) and class-conditional distributions display long tails, multimodality, and skewness that violate Gaussian assumptions. In this setting, PDENB captures fine structure that classical Gaussian naïve Bayes misses, yielding substantially better discriminative performance. This example underscores the value of highly adaptive density estimation methods when confronted with complex, non-Gaussian data structures. The same pattern, with nonparametric methods outperforming Gaussian approximations when data depart from normality, holds across the benchmark: nonparametric naïve Bayes variants tend to outrank their Gaussian counterparts (see Table 2).
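The effect of multimodality on a Gaussian likelihood can be reproduced with a toy example. The sketch below fits a single Gaussian (as Gaussian naïve Bayes does per class and feature) and, for comparison, a simple neighborhood-count density in the spirit of PDE, which counts the points inside a window around each evaluation point. The fixed radius of 0.5 and the deterministic bimodal sample are illustrative assumptions. They are not the Pareto radius or any benchmark data.

```python
import numpy as np


def gaussian_pdf(x, data):
    """Single Gaussian fitted by mean and standard deviation, as in Gaussian naive Bayes."""
    mu, sigma = np.mean(data), np.std(data)
    x = np.asarray(x, dtype=float)
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))


def neighborhood_density(x, data, radius):
    """Share of data points within `radius` of x, divided by the window width 2 * radius."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    data = np.asarray(data, dtype=float)
    counts = (np.abs(data[None, :] - x[:, None]) <= radius).sum(axis=1)
    return counts / (len(data) * 2 * radius)


# Deterministic bimodal sample: two clusters on [-3, -1] and [1, 3], nothing near 0
data = np.concatenate([np.linspace(-3, -1, 50), np.linspace(1, 3, 50)])
grid = np.array([-2.0, 0.0, 2.0])
print(gaussian_pdf(grid, data))               # largest value at 0, where there are no data
print(neighborhood_density(grid, data, 0.5))  # exactly 0 in the empty valley at 0
```

The single Gaussian places its highest density in the empty valley between the two modes. The neighborhood count reports zero there and higher values at the modes.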

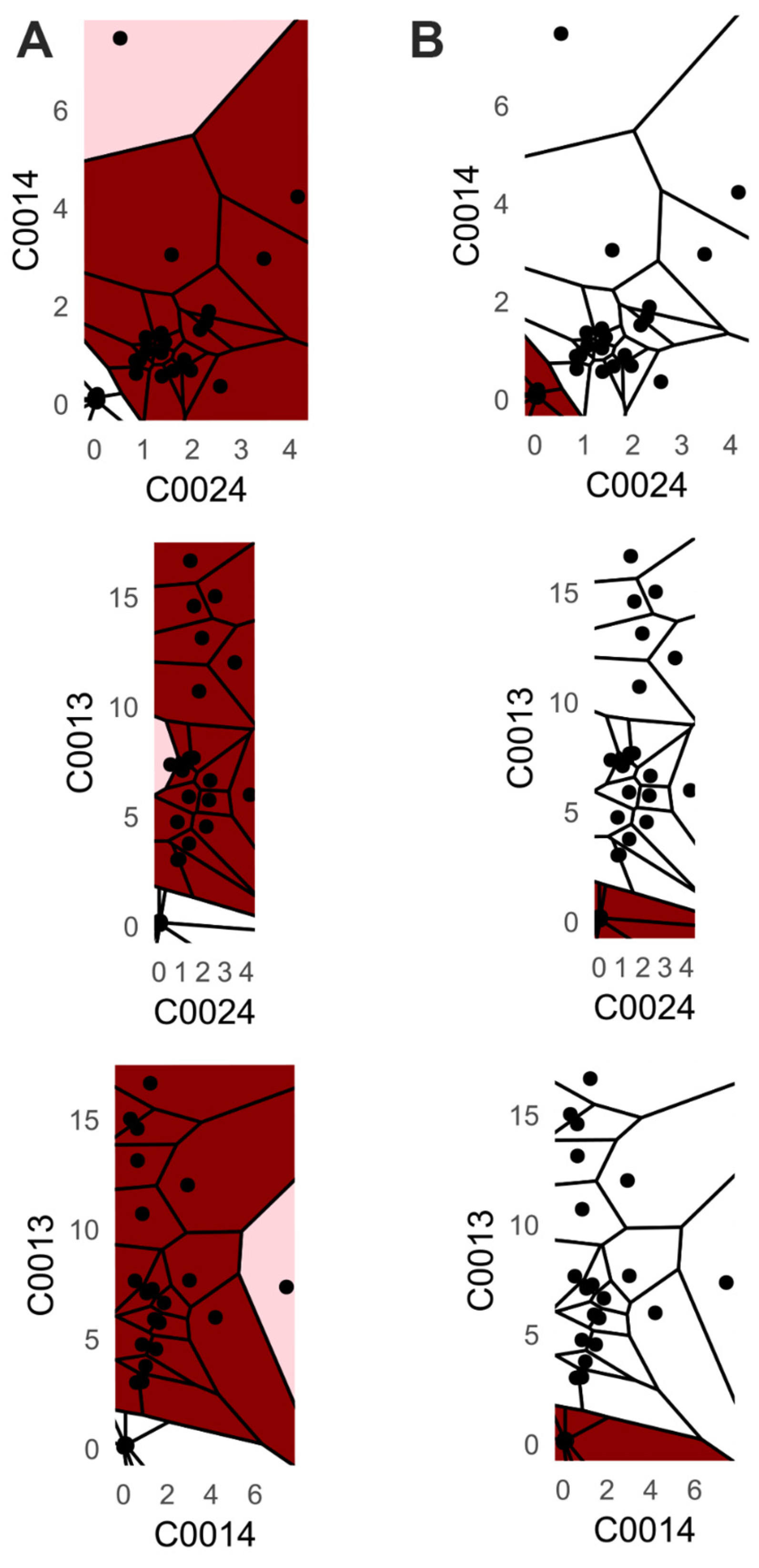

Third, PDENB directly supports interpretability through visualization. The class-conditional mirrored density (MD) plots and the customized 2D Voronoi posterior maps provide intuitive feature-level and case-level explanations, as outlined in Figures 2, 3, 4, and 5: users can inspect the shapes of class-conditional likelihoods (modes, skewness, overlaps) and identify the feature combinations that produce compact, high-posterior decision areas. These visual diagnostics do not replace formal model evaluation, but they materially aid exploratory analysis and hypothesis generation. They also help explain why a particular prediction was made in cases where two or more features jointly determine a compact posterior region (see Figures 6, 7, 8, 9, and 10).

The FlowCytometry application, distinguishing peripheral blood from bone marrow, illustrates a practical use case in which PDENB’s combination of sensitivity to distributional fine structure, feature selection, and interpretability is valuable. For such features (cell population frequencies, absolute counts, and percentages), classical Gaussian assumptions are violated (see Appendix C, Figures A16 and A17). PDENB achieved an MCC of 98.8% (i.e., 0.988) under cross-validation. This improved baseline performance is practically meaningful: higher sample-level accuracy reduces the risk of mislabeling whether an aspirate originates from bone marrow or peripheral blood, which in turn can reduce downstream diagnostic errors. This result is a first indication that PDENB could support clinically relevant classification tasks even with modest sample sizes, provided that careful feature selection and validation are applied.

After preprocessing, the influence of feature dependency was tracked with four different measures (Table 1). Table 1 shows high correlation values (>0.8) on all four measures for all datasets except three: CoverType, Swiss, and Wine (Crabs and Penguins had low correlations due to rotation by ICA or PCA). Although naïve Bayes theoretically assumes feature independence, in practice correlated features did not necessarily imply low performance (MCC < 0.8). For example, the Cell populations dataset retained high performance despite feature correlations above 0.9. Likewise, removing correlated features did not necessarily improve performance. Still, correlations can affect interpretability and sometimes classifier reliability; feature decorrelation, conditional modeling, or methods that explicitly capture dependencies may further improve the model’s performance in specific domains. In our benchmark, high feature correlation did not uniformly impair PDENB.

Our benchmark covers a diverse but limited set of datasets. Broader evaluations, especially in high-dimensional, noisy, or highly imbalanced settings, would strengthen claims of generality, although benchmarking against all families of classifiers is a controversial topic [63,64]. We emphasize several methodological points and caveats. PDENB’s robust performance hinges on three design choices: (i) estimating a single, class-independent Pareto radius per feature; (ii) smoothing the raw class-conditional PDE output before using it as a likelihood; and (iii) applying a plausibility correction to the class likelihoods. While these design choices increase estimator stability and interpretability, they come at the cost of greater computational demand. To mitigate this cost, we provide a multicore, shared-memory [65] implementation for large-scale applications. Finally, embedding PDENB visualizations within formal explainable-AI workflows (e.g., counterfactual analyses, local-explanation wrappers) would enhance their utility for decision-makers.
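Design choices (i) and (ii) can be illustrated for a single feature: one radius is shared by all classes, and the raw count-based class-conditional estimate is smoothed before it is used as a likelihood. The moving-average smoother, its window width, and the function name are assumptions made for this sketch. The section does not specify PDENB’s actual smoothing procedure, and the plausibility correction (iii) is not shown.

```python
import numpy as np


def smoothed_class_likelihoods(x, y, grid, radius, window=5):
    """Class-conditional densities of one feature, evaluated on `grid`.

    radius: one radius shared by all classes (design choice (i)).
    window: width of a moving-average smoother (stand-in for design choice (ii)).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    grid = np.asarray(grid, dtype=float)
    kernel = np.ones(window) / window
    likelihoods = {}
    for c in np.unique(y):
        xc = x[y == c]
        # Raw estimate: share of class-c points within `radius` of each grid point
        raw = (np.abs(xc[None, :] - grid[:, None]) <= radius).sum(axis=1)
        raw = raw / (len(xc) * 2 * radius)
        # Smooth the raw estimate before it is used as a likelihood
        likelihoods[c] = np.convolve(raw, kernel, mode="same")
    return likelihoods


x = np.concatenate([np.linspace(0, 2, 40), np.linspace(1, 4, 40)])
y = np.array([0] * 40 + [1] * 40)
grid = np.linspace(-1, 5, 61)
lik = smoothed_class_likelihoods(x, y, grid, radius=0.3)
print({int(c): round(float(v.max()), 3) for c, v in lik.items()})
```

Sharing the radius across classes means that both curves are estimated at the same resolution, so differences between them reflect the data rather than class-specific bandwidths.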

Several design alternatives (e.g., kernels, global or local Pareto radii) were explored during preliminary experimentation. For example, we approximate the Pareto radius with a single, class-independent parameter rather than estimating separate radii for each feature–class combination. Although this could reduce the model’s flexibility, preliminary internal experiments and benchmarking indicate that the resulting approximation error is small and that the simplification is sufficiently accurate for practical applications. The final architecture and parameter configuration were fixed after extensive testing, because this setup consistently provided the most stable and reliable performance across validation settings. This applies in particular to the radius estimation (class-dependent or global), the choice of kernel, and the choice among several competing approaches for assessing low-evidence areas in naïve Bayes. The curious reader will find even more parameters that could be varied in the presented setting.

As an example, Appendix D presents results from our preliminary experimentation that informed the decision between a global and a local (class-dependent) Pareto radius, and it illustrates our reasoning. As Appendix D shows, the global Pareto radius achieves better results on the high-performing examples, whereas the local radius achieves better results on the low- and moderately performing examples. It is therefore justifiable to use the global strategy as the default setting, although users can switch the radius estimation to the local mode.
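The difference between a global and a local (class-dependent) radius is easy to see on a feature where one class is much wider than the other. The radius rule below, the 20% quantile of all pairwise absolute differences, is a hypothetical stand-in chosen for illustration and is not necessarily how PDENB computes its Pareto radius. The function names are likewise hypothetical.

```python
import numpy as np


def radius_from_distances(v, q=0.2):
    """Hypothetical radius rule: q-quantile of all pairwise absolute differences of v."""
    v = np.asarray(v, dtype=float)
    i, j = np.triu_indices(len(v), k=1)
    return float(np.quantile(np.abs(v[i] - v[j]), q))


def global_and_local_radii(x, y, q=0.2):
    """Global: one radius from the pooled feature. Local: one radius per class.

    Integer class labels are assumed.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    global_radius = radius_from_distances(x, q)
    local_radii = {int(c): radius_from_distances(x[y == c], q) for c in np.unique(y)}
    return global_radius, local_radii


# A wide class (spread 1.0) and a narrow class (spread 0.1) on the same feature
x = np.concatenate([np.linspace(0, 1, 30), np.linspace(10, 10.1, 30)])
y = np.array([0] * 30 + [1] * 30)
g, loc = global_and_local_radii(x, y)
print(g, loc)
```

In this toy example the global radius falls between the two local radii. It is larger than the narrow class would choose for itself and smaller than the wide class would choose.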

PDENB’s plausibility threshold ε is computed by a data-driven, optimal selection of the group of cases with the smallest joint likelihood across all classes (see ABC analysis [29]). Therefore, the relevant cases for which a plausible class assignment could be considered are determined automatically.

The δ-based adjustment provides a controlled way to shift likelihood mass from the current top class towards a nearby mode without distorting the global posterior landscape. The parameter δ governs the trade-off between conservatism and decisiveness: a small δ produces minor, local adjustments that yield stable and interpretable posterior changes, whereas a larger δ increases the chance of MAP flips in low-evidence regions. Because Equation (15) transfers a bounded amount of mass from one class to another, the overall likelihood geometry is preserved, and posterior trajectories remain smooth rather than exhibiting abrupt thresholding, particularly near class boundaries. This produces a gradual change in the likelihood profile (illustrated in Figure 1 and resembling a fading-variance effect) and strengthens the MAP estimate for the class with the closest mode while retaining Bayesian coherence and interpretability.
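Equation (15) is not reproduced in this section. The purely hypothetical sketch below only illustrates the general idea described above, a bounded transfer of likelihood mass from the top class to a closest-mode class. The proportional rule `moved = delta * top_likelihood`, the function names, and the example values are our assumptions.

```python
import numpy as np


def transfer_mass(likelihoods, target, delta):
    """Hypothetical rule (not the paper's Equation (15)): move the fraction `delta`
    of the top class's likelihood to class `target`, keeping the total unchanged."""
    if not 0.0 <= delta <= 1.0:
        raise ValueError("delta must lie in [0, 1]")
    lik = np.asarray(likelihoods, dtype=float).copy()
    top = int(np.argmax(lik))
    if top != target:
        moved = delta * lik[top]   # bounded by the mass the top class holds
        lik[top] -= moved
        lik[target] += moved
    return lik


def posterior(lik, prior):
    """Bayes' theorem for one case: prior times likelihood, renormalised to sum to 1."""
    p = np.asarray(prior, dtype=float) * lik
    return p / p.sum()


lik = [0.5, 0.4, 0.1]          # class 1 is assumed to be the closest-mode class
prior = [1 / 3, 1 / 3, 1 / 3]
for delta in (0.05, 0.2):
    post = posterior(transfer_mass(lik, target=1, delta=delta), prior)
    print(delta, post.round(3), "MAP class:", int(np.argmax(post)))
```

With δ = 0.05 the MAP class remains class 0, whereas δ = 0.2 flips it to class 1, mirroring the trade-off between conservatism and decisiveness. The total likelihood mass is unchanged in both cases.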

In Appendix E, we show that random forest (RF) yields a small but consistent edge in raw MCC on four imbalanced datasets, while PDENB provides a transparent, well-calibrated, and parameter-free baseline whose errors are interpretable from its class-conditional likelihood plots. The benchmark on these four imbalanced datasets suggests that PDENB does not necessarily achieve high performance for minority classes when samples are scarce, since the minority class may be underrepresented. When two classes overlap strongly in feature space, one class can suffer disproportionately poor performance, especially if it is less prevalent or more heterogeneous. Differences in class prevalence (priors) or in within-class variability shift posterior probabilities toward the dominant (or more concentrated) class, which increases misclassification of the disadvantaged class. Estimating misclassification risks and reweighting the posterior probabilities accordingly might help if the false negative rate of a specific minority class must be minimized.
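One standard way to implement such risk-based reweighting is the minimum-expected-cost (Bayes risk) decision rule, which weights the posterior probabilities by a misclassification cost matrix. The sketch below assumes that posteriors are available from any classifier. The cost values are hypothetical and would have to be set for the application.

```python
import numpy as np


def min_risk_decision(posteriors, cost):
    """Choose, for each case, the class with the smallest expected misclassification cost.

    posteriors: (n_cases, K) array of P(class k | x).
    cost:       (K, K) array, cost[j, k] = cost of deciding j when the true class is k.
    """
    # risk[n, j] = sum_k P(k | x_n) * cost[j, k]
    risk = np.asarray(posteriors, dtype=float) @ np.asarray(cost, dtype=float).T
    return np.argmin(risk, axis=1)


post = [[0.7, 0.3]]                 # class 1 is the minority class
zero_one = [[0, 1], [1, 0]]         # plain MAP decision
miss_minority = [[0, 5], [1, 0]]    # hypothetical: missing class 1 costs 5x more
print(min_risk_decision(post, zero_one), min_risk_decision(post, miss_minority))  # [0] [1]
```

With a 0–1 cost matrix the rule reduces to the MAP decision. Raising the cost of missing the minority class lowers the posterior probability needed to assign a case to it.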

PDENB is applicable to numeric tabular data, as shown in the benchmark study. Theoretically, it is limited to independent features; in practice, however, it performs well despite high values of the dependency measures within the data. PDENB can also process large and high-dimensional data, as shown in the benchmark study. For CoverType (over 450,000 training samples and over 100,000 test samples with 17 features), model training takes under 35 s and classification around 3 s. Larger datasets with millions of cases could be processed within a few minutes. A more explicit runtime test was executed and documented in Appendix F: PDENB can process datasets of one million cases with up to 100 feature dimensions within a few minutes (<7 min). Training slows down drastically for hundreds of features and several million observations, but it remains feasible, with a runtime of under an hour. Prediction remains comparatively fast, with a runtime of under 13 min for 4 million cases and 100 features.

PDENB assumes continuous feature domains. For features with only a few distinct numeric values or very small class-specific sample sizes, we therefore fall back to a simple histogram-based likelihood with an automatically chosen number of bins [16], as described in Appendix G. Truly categorical variables, i.e., unordered labels without a meaningful numeric scale, are mathematically different: they do not admit a probability density in the sense defined in Section 2.3 ff., and we do not implement a dedicated categorical likelihood model for them in the present work. In principle, PDENB could be extended to such features by replacing the continuous density with a multinomial/Dirichlet class-conditional model over categories, or by embedding categories into a continuous representation and applying PDE in that space. We regard this as a natural direction for future work and, in this study, deliberately restrict ourselves to continuous and quasi-continuous features, for which PDE and the histogram fallback are well defined and interpretable.
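The histogram fallback can be sketched as a lookup of the bin density for each query value. The cited rule for choosing the number of bins [16] is not reproduced here. NumPy’s `bins="auto"` estimator is used as a stand-in, and the function name is hypothetical.

```python
import numpy as np


def histogram_likelihood(train_values, query_values):
    """Histogram likelihood for a feature with few distinct values.

    The number of bins comes from NumPy's 'auto' rule, a stand-in for the rule cited in the text.
    """
    dens, edges = np.histogram(np.asarray(train_values, dtype=float), bins="auto", density=True)
    q = np.atleast_1d(np.asarray(query_values, dtype=float))
    idx = np.searchsorted(edges, q, side="right") - 1   # bin index of each query value
    idx[q == edges[-1]] = len(dens) - 1                 # the last bin is closed on the right
    inside = (idx >= 0) & (idx < len(dens))
    out = np.zeros(q.shape)
    out[inside] = dens[idx[inside]]                     # outside the training range: density 0
    return out


train = [1, 1, 1, 2, 2, 3]
print(histogram_likelihood(train, [1.0, 2.0, 3.0, 10.0]))
```

The bin assignment follows NumPy’s own convention (half-open bins, last bin closed), so each query lands in the same bin as an equal training value.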

The plausibility correction in PDENB relies on effective mode separation, which is determined using quantile-based criteria. If class separation is not feasible, the classical Bayes theorem is used instead of the plausibility correction. Conversely, when classes are separable, skewed distributions do not affect the computation of the plausibility correction, provided that mode recognition remains accurate; incorrect mode recognition, however, may degrade the correction. The presented framework also offers a foundation for the development of autogating approaches. In future work, the two-dimensional representation based on Voronoi cells, together with the posterior probability derived from PDENB, could be used to identify regions of interest within the feature space. Convex hulls could then be employed to characterize specific properties associated with particular feature–class combinations. By combining these properties, it would be possible to define automated, data-driven class assignment strategies, thereby enabling fully autonomous gating within this framework.

The main advantages of PDENB are its assumption-free modeling of the data and its robust performance. PDENB can be applied to leverage fine details of the data distributions. Its assumption-free smoothed density estimation, combined with a plausibility correction of Bayes’ theorem, yields reliable and robust posterior estimates.

Patterns arising from the multiplicative combination of feature-wise likelihoods can be recognized by the user and serve as intuitive explanations of how different features jointly contribute to the final class assignment.
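The multiplicative combination can be made explicit for a single case: each feature contributes one class-conditional likelihood per class, and the posterior is proportional to the prior times their product. The sketch below shows the plain naïve Bayes combination in log space, without PDENB’s plausibility correction. The names and example numbers are illustrative assumptions.

```python
import numpy as np


def naive_bayes_posterior(feature_likelihoods, priors):
    """Combine per-feature class-conditional likelihoods multiplicatively (naive Bayes).

    feature_likelihoods: (n_features, K) array with p(x_d | class k) for one case.
    priors:              (K,) array of class priors.
    Sums logs instead of multiplying raw values to avoid numerical underflow.
    Assumes at least one class has a non-zero product of likelihoods.
    """
    L = np.asarray(feature_likelihoods, dtype=float)
    with np.errstate(divide="ignore"):          # log(0) = -inf is intended here
        log_post = np.log(np.asarray(priors, dtype=float)) + np.log(L).sum(axis=0)
    log_post -= log_post.max()                  # stabilise before exponentiating
    post = np.exp(log_post)
    return post / post.sum()


# Feature 0 favours class 0 and feature 2 favours class 1; their effects cancel
L = [[0.2, 0.1],
     [0.5, 0.5],
     [0.3, 0.6]]
print(naive_bayes_posterior(L, [0.5, 0.5]))  # both entries equal 0.5 up to rounding
```

Inspecting the rows of the likelihood array shows which feature pushes the decision toward which class. This is the kind of feature-level explanation the visualizations make accessible.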