Zero-Shot Elasmobranch Classification Informed by Domain Prior Knowledge

Abstract

1. Introduction

2. Related Work

3. Methodology

- Precise base descriptions provided by experts for each category.

- Schematic illustrations from field guides and specialized sources that highlight distinctive traits.

- Hierarchical taxonomy that organizes categories and groups shared visual features.

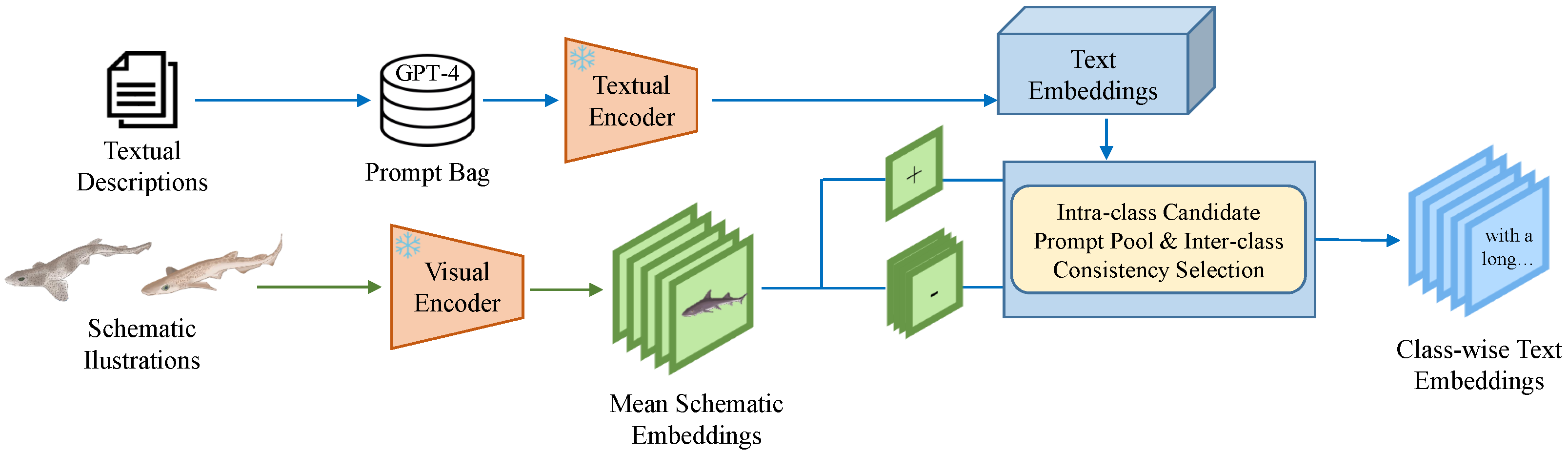

3.1. Prompt Extraction Through Prior Knowledge: Illustrations and Expert Descriptions

3.1.1. Taxonomy-Aware Classification Strategies

- General aggregation: each image is simultaneously evaluated across multiple taxonomic levels, including subclass (Elasmobranchii, which comprises sharks and rays), order, family, and species, by summing the similarity scores at each level to obtain an accumulated score.

- Sequential classification: the decision is taken hierarchically, starting from the most general level (shark or ray) and progressively restricting the options at each step to the corresponding subgroup. This reduces ambiguity and simplifies prompt selection.
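The difference between the two strategies can be sketched on a toy taxonomy. This is a minimal illustration under stated assumptions, not the authors' implementation: the dictionaries `TAXONOMY` and `SIMS` and the helper names are hypothetical, the "group" level stands in for the shark/ray split, the similarity scores are made up (in practice they would be image-to-prompt cosine similarities from a vision-language encoder), and the general aggregation sums the four levels with equal weights.

```python
# Hypothetical toy taxonomy: species -> (group, order, family).
TAXONOMY = {
    "Galeus melastomus": ("shark", "Carcharhiniformes", "Scyliorhinidae"),
    "Scyliorhinus canicula": ("shark", "Carcharhiniformes", "Scyliorhinidae"),
    "Torpedo marmorata": ("ray", "Torpediniformes", "Torpedinidae"),
}
LEVELS = ("group", "order", "family")

# Made-up image-to-prompt similarity scores for a single image, per level and label.
SIMS = {
    "group": {"shark": 0.30, "ray": 0.22},
    "order": {"Carcharhiniformes": 0.28, "Torpediniformes": 0.25},
    "family": {"Scyliorhinidae": 0.27, "Torpedinidae": 0.26},
    "species": {"Galeus melastomus": 0.24, "Scyliorhinus canicula": 0.26,
                "Torpedo marmorata": 0.31},
}


def general_aggregation(sims):
    """Score each species by summing its own similarity and those of its ancestors."""
    def total(species):
        ancestors = TAXONOMY[species]
        return sims["species"][species] + sum(
            sims[level][label] for level, label in zip(LEVELS, ancestors))
    return max(TAXONOMY, key=total)


def sequential(sims):
    """Pick the best label level by level, keeping only the species below it."""
    candidates = list(TAXONOMY)
    for idx, level in enumerate(LEVELS):
        labels = sorted({TAXONOMY[s][idx] for s in candidates})
        chosen = max(labels, key=lambda lab: sims[level][lab])
        candidates = [s for s in candidates if TAXONOMY[s][idx] == chosen]
    return max(candidates, key=lambda s: sims["species"][s])


print(max(SIMS["species"], key=SIMS["species"].get))  # flat argmax → Torpedo marmorata
print(general_aggregation(SIMS))                      # → Scyliorhinus canicula
print(sequential(SIMS))                               # → Scyliorhinus canicula
```

In this made-up case a flat species-level argmax is misled by a single high species score, while both taxonomy-aware strategies recover a shark because the higher levels agree. The sequential variant never compares a shark prompt against a ray prompt below the first level.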

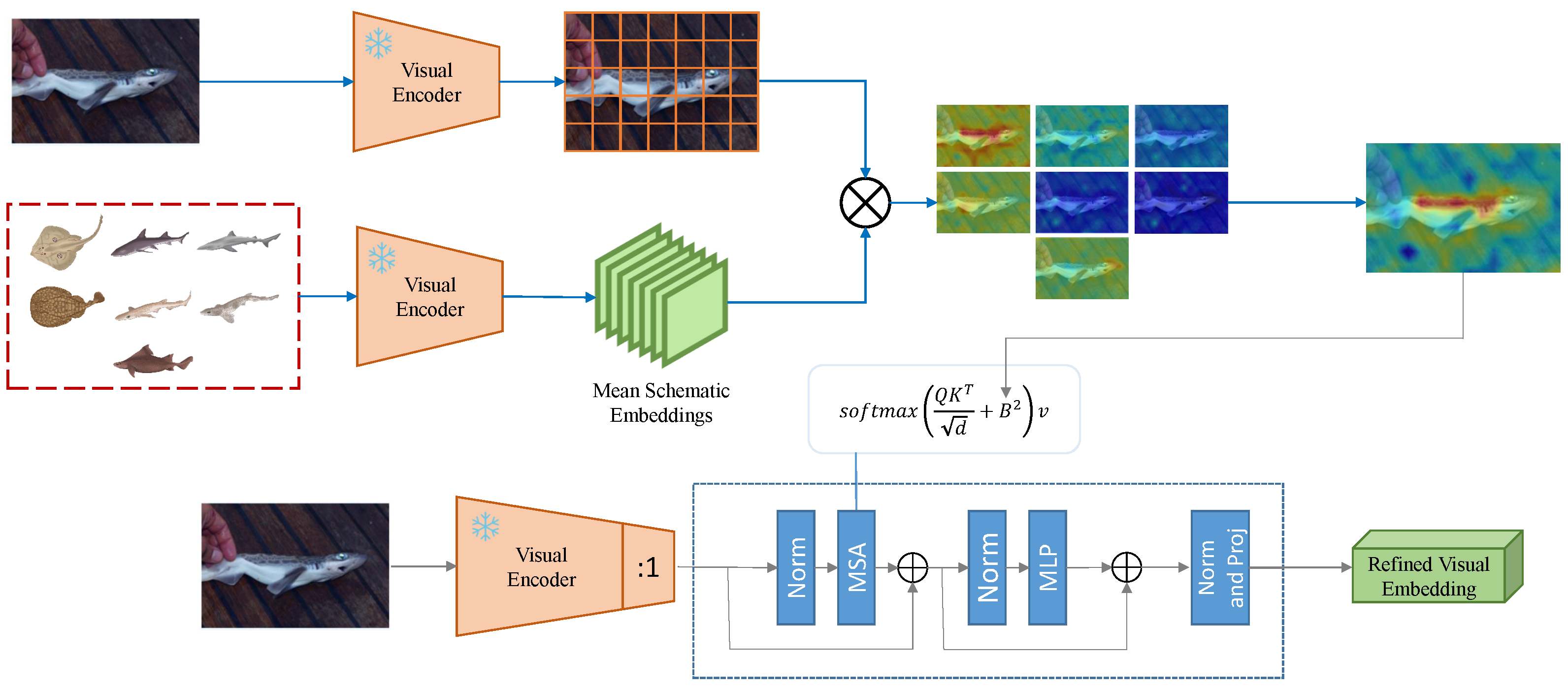

3.2. Prototype-Guided Cross-Attention from Illustrations

4. Experimentation

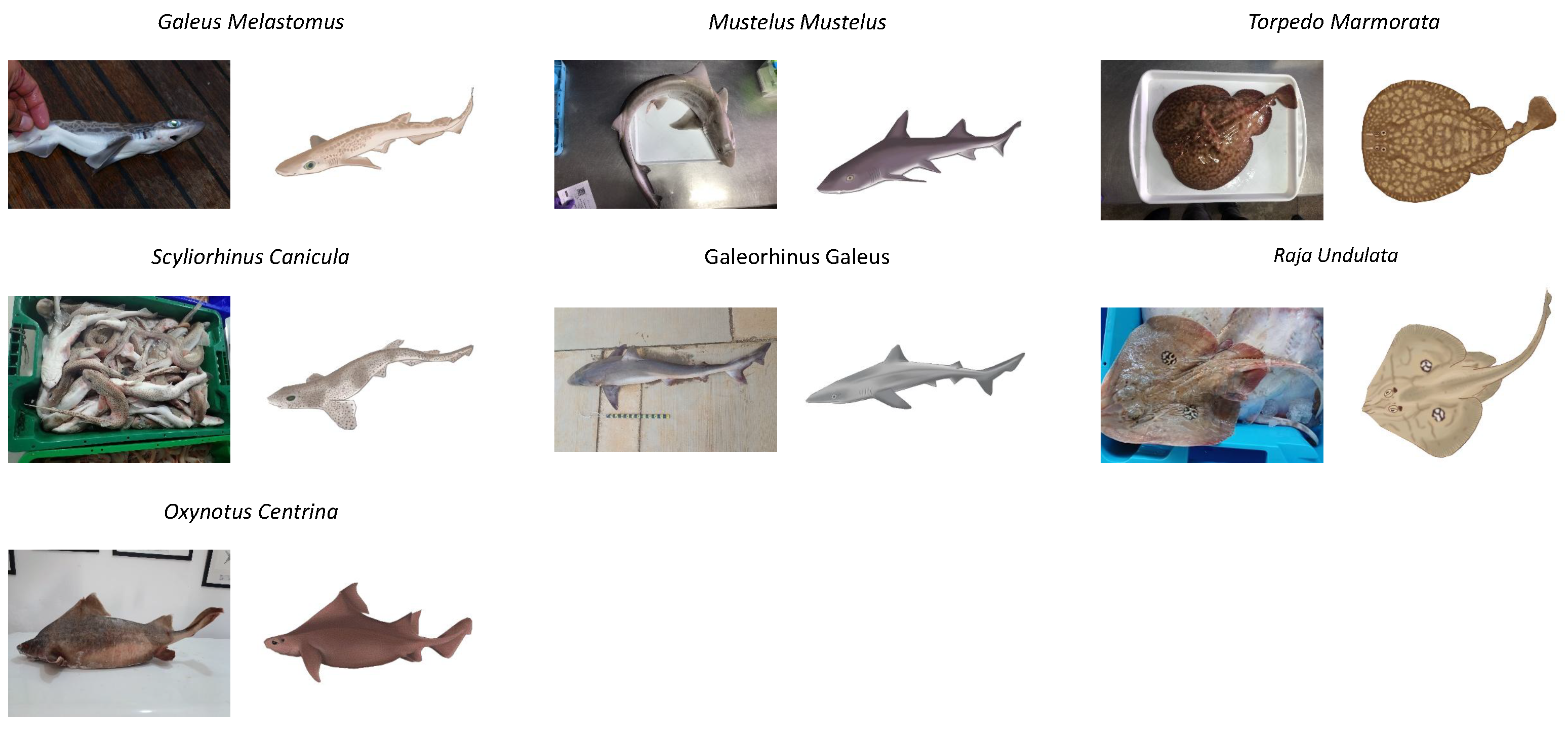

4.1. Dataset

4.2. Overview of the Methodological Workflow

- First, the prompt selection stage (Section 3.1) involves generating expert and category-specific textual descriptions from schematic illustrations that capture the distinctive morphological traits of each class. These descriptions are evaluated by measuring the similarity between their embeddings and those derived from the illustrations, and the prompts that best represent each class while minimizing confusion with the others are selected. For example, for the species Galeus melastomus, the prompt “a shark with reddish-brown coloration and oval spots encircled in white” was selected because it showed the highest correspondence with its schematic illustration and minimal confusion with other species of the same order. The circular white-bordered spots and characteristic coloration reinforce its distinctiveness.

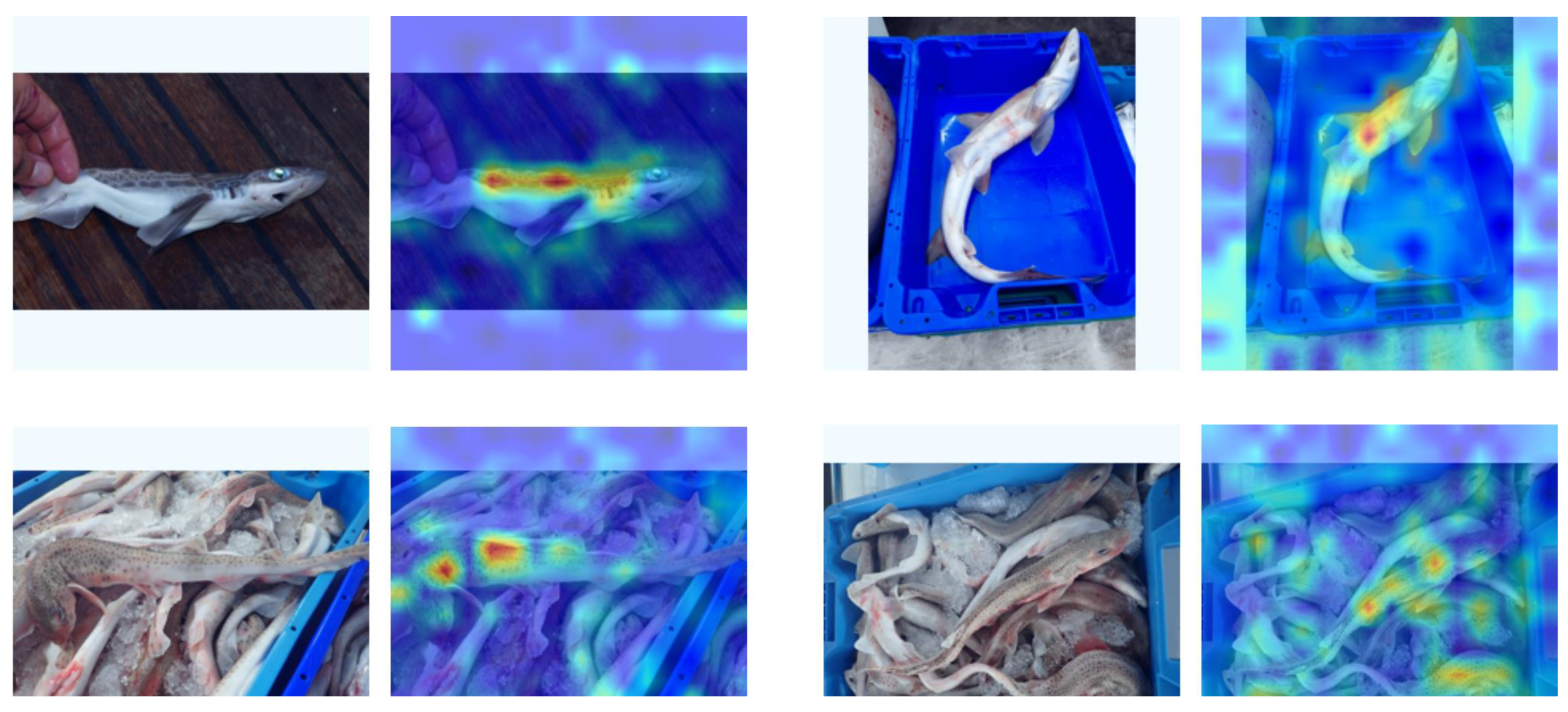
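The selection criterion described in the first step can be sketched as a margin between a prompt's similarity to its own class illustration and its highest similarity to any other class illustration. This is a hedged sketch, not the authors' exact rule: it assumes one illustration embedding per class and several candidate prompt embeddings per class (for example, from a CLIP-style text and image encoder), and the margin score and the names `select_prompts` and `l2_normalize` are illustrative assumptions. Restricting "other classes" to siblings of the same order, as in the Galeus melastomus example, would only change which columns are compared.

```python
import numpy as np


def l2_normalize(x):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def select_prompts(prompt_emb, illus_emb):
    """Return, for each class, the index of its candidate prompt with the largest
    margin: similarity to its own illustration minus the highest similarity
    to any other class illustration (requires at least two classes)."""
    classes = list(illus_emb)
    illus = l2_normalize(np.stack([illus_emb[c] for c in classes]))  # (C, d)
    chosen = {}
    for i, c in enumerate(classes):
        sims = l2_normalize(prompt_emb[c]) @ illus.T           # (P_c, C) cosine sims
        own = sims[:, i]                                       # match with own illustration
        confusion = np.delete(sims, i, axis=1).max(axis=1)     # worst-case confusion
        chosen[c] = int(np.argmax(own - confusion))
    return chosen


# Toy 2-D embeddings: two classes, two candidate prompts each.
illus_emb = {"A": np.array([1.0, 0.0]), "B": np.array([0.0, 1.0])}
prompt_emb = {"A": np.array([[1.0, 1.0], [1.0, 0.1]]),
              "B": np.array([[0.2, 1.0], [1.0, 0.9]])}
print(select_prompts(prompt_emb, illus_emb))  # → {'A': 1, 'B': 0}
```

Prompt 0 of class A is rejected even though it matches its own illustration reasonably well, because it is equally close to class B's illustration. This is the "minimal confusion" half of the criterion.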

- Second, the visual representations (Section 3.2) of the images to be classified are refined by using the illustrations to strengthen the visual embeddings within the encoder. This increases the attention given to semantically matching features in the last layer of the model, improving the expressiveness of the resulting representations. For instance, in an image of Galeus melastomus, the illustrations highlight the pale circular marks on the dorsal area, guiding the model to assign higher weight to these features when building the representation.

- Finally, both components (the optimized prompts and the enriched representations guided by semantically shared features) are integrated into a taxonomy-aware hierarchical decision workflow (Section 3.1.1). This process progressively narrows the decision space from broader to more specific categories, reducing ambiguity and improving the overall consistency of the classification results. For example, Galeus melastomus and Torpedo marmorata both exhibit dorsal spots; however, the hierarchical decision process only compares categories at the same taxonomic level and within the same branch. Because sharks and rays are separated at the first step, the two species never compete directly, which enables more precise and consistent differentiation.
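The illustration-guided refinement of the second step can be sketched as a parameter-free cross-attention in the spirit of CALIP, where illustration prototypes act as queries over the image's last-layer patch tokens. Everything here is an assumption for illustration, not the paper's exact formulation: the prototype is taken as the mean of an illustration's tokens, the temperature `tau` and residual weight `alpha` are arbitrary, and the function name is hypothetical.

```python
import numpy as np


def _l2n(x):
    """Normalize along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def _softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def prototype_guided_attention(cls_token, patch_tokens, illus_tokens, alpha=0.5, tau=0.07):
    """
    cls_token:    (d,)      global token of the image to classify (last encoder layer)
    patch_tokens: (N, d)    patch tokens of that image (last encoder layer)
    illus_tokens: (K, M, d) tokens of K schematic illustrations
    Returns the refined global embedding (d,) and the (K, N) attention map.
    """
    prototypes = _l2n(illus_tokens.mean(axis=1))        # (K, d) one prototype per illustration
    logits = prototypes @ _l2n(patch_tokens).T / tau    # (K, N) scaled cosine similarities
    attn = _softmax(logits, axis=-1)                    # each prototype spreads weight over patches
    attended = attn @ patch_tokens                      # (K, d) summary of matching patches
    refined = cls_token + alpha * attended.mean(axis=0) # residual update of the global token
    return refined, attn
```

Patches that resemble an illustration (for instance, the pale dorsal marks of Galeus melastomus) receive most of the attention mass, so they dominate the residual update of the global embedding that is later compared with the text prompts.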

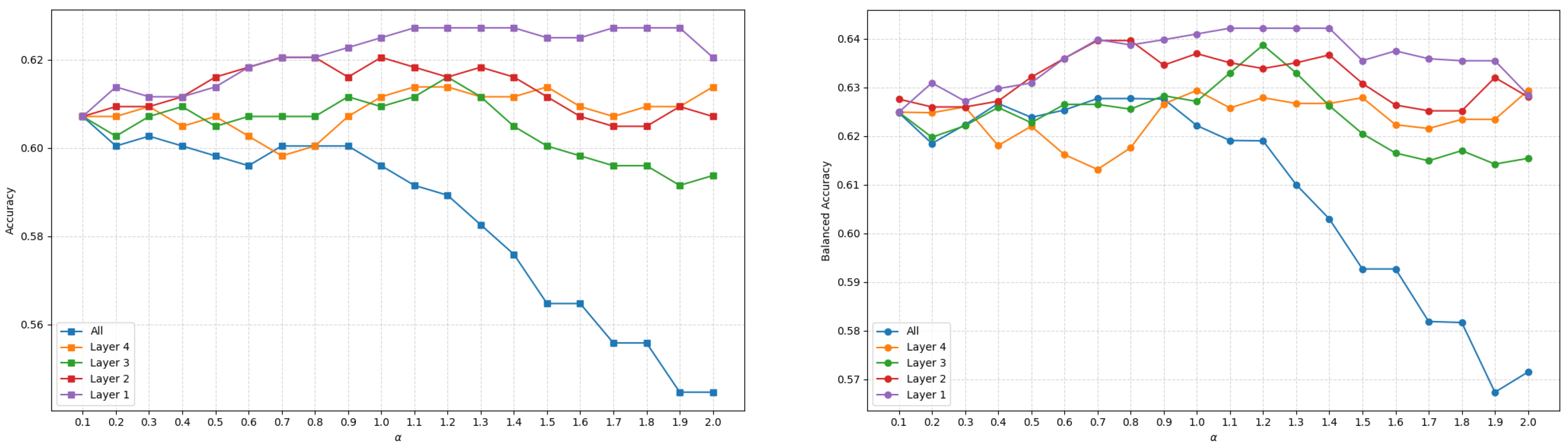

4.3. Hierarchical Taxonomic Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Order | Family | Species | Common Name | No. of Images |
|---|---|---|---|---|
| Squaliformes | Oxynotidae | Oxynotus centrina | Angular roughshark | 36 |
| Carcharhiniformes | Triakidae | Mustelus mustelus | Smooth-hound | 76 |
| | | Galeorhinus galeus | Tope shark | 38 |
| | Scyliorhinidae | Scyliorhinus canicula | Small-spotted catshark | 121 |
| | | Galeus melastomus | Blackmouth catshark | 37 |
| Torpediniformes | Torpedinidae | Torpedo marmorata | Spotted torpedo | 50 |
| Rajiformes | Rajidae | Raja undulata | Undulate ray | 90 |

| Level | BAcc. | Acc. | Prec. | Rec. | F1 |
|---|---|---|---|---|---|
| Order | 0.37 | 0.70 | 0.60 | 0.37 | 0.36 |
| Family | 0.18 | 0.18 | 0.17 | 0.18 | 0.13 |
| Species (SC) | 0.13 | 0.15 | 0.06 | 0.14 | 0.06 |
| Species (CN) | 0.37 | 0.51 | 0.16 | 0.13 | 0.12 |
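The gap between Acc. and BAcc. in the Order row (0.70 versus 0.37) is the kind of gap that appears when predictions concentrate on the most frequent classes. The sketch below assumes BAcc. denotes balanced accuracy in its usual sense, the mean of per-class recall (the definition used by scikit-learn's `balanced_accuracy_score`), which would also explain why BAcc. matches Rec. in most rows if Rec. is macro-averaged. The labels and predictions are invented for illustration and are not the paper's data.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of all samples predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall: every class weighs the same regardless of size."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(hits[c] / support[c] for c in support) / len(support)


# Invented imbalanced labels and a classifier that always predicts the majority order.
y_true = ["Carcharhiniformes"] * 7 + ["Rajiformes"] * 2 + ["Torpediniformes"]
y_pred = ["Carcharhiniformes"] * 10
print(accuracy(y_true, y_pred))           # → 0.7
print(balanced_accuracy(y_true, y_pred))  # → 0.3333333333333333
```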

| Level | Method | BAcc. | Acc. | Prec. | Rec. | F1 |
|---|---|---|---|---|---|---|
| Order | PA | 0.79 | 0.79 | 0.71 | 0.79 | 0.72 |
| | P-TC | 0.83 | 0.82 | 0.74 | 0.83 | 0.76 |
| | P-TS | 0.83 | 0.82 | 0.74 | 0.83 | 0.76 |
| | P-TS+B | 0.82 | 0.82 | 0.74 | 0.82 | 0.76 |
| | P-TS+SB | 0.83 | 0.82 | 0.74 | 0.83 | 0.76 |
| Family | PA | 0.70 | 0.74 | 0.70 | 0.70 | 0.69 |
| | P-TC | 0.78 | 0.79 | 0.75 | 0.78 | 0.76 |
| | P-TS | 0.78 | 0.75 | 0.73 | 0.78 | 0.73 |
| | P-TS+B | 0.78 | 0.77 | 0.74 | 0.78 | 0.74 |
| | P-TS+SB | 0.79 | 0.77 | 0.74 | 0.79 | 0.74 |
| Species | PA | 0.51 | 0.50 | 0.60 | 0.51 | 0.48 |
| | P-TC | 0.61 | 0.58 | 0.63 | 0.61 | 0.57 |
| | P-TS | 0.62 | 0.60 | 0.63 | 0.62 | 0.58 |
| | P-TS+B | 0.64 | 0.63 | 0.63 | 0.64 | 0.60 |
| | P-TS+SB | 0.64 | 0.63 | 0.63 | 0.64 | 0.61 |

| Level | Category | Selected Prompt |
|---|---|---|
| Order | Carcharhiniformes | Shark slim elongated figure and white-edged oval spots |
| | Squaliformes | Shark with a fat triangular body, stout compressed silhouette |
| | Torpediniformes | Stingray rounded silhouette, brown shade covered in patches |
| | Rajiformes | Stingray diamond-shaped figure, slender elongated tail tip, brown-gray body with dark banding, prominent circular mark in each fin, pale underside, pelvic fins gray |
| Family | Scyliorhinidae | Shark slim elongated shape, light brown shade, covered with small round black speckles |
| | Triakidae | Shark pointed body and light gray color |
| | Oxynotidae | Shark black body color and triangular shape |
| | Rajidae | Stingray rhomboid figure, large black rounded patch on each fin |
| | Torpedinidae | Stingray rounded silhouette, brown shade covered in patches |
| Species | Galeus melastomus | Shark reddish brown coloration with oval spots encircled |
| | Galeorhinus galeus | Shark with long snout, second dorsal fin tiny like anal, slender elongated figure, deep caudal notch, light gray body, white belly |
| | Oxynotus centrina | Shark with a fat triangular body, stout compressed silhouette |
| | Mustelus mustelus | Shark slim elongated body and gray to brown tone |
| | Scyliorhinus canicula | Shark slim elongated figure, brown shade with tiny circular black speckles |
| | Raja undulata | Stingray rhomboid figure, long thin tail, gray-brown body with narrow and broad bands, big round spot located in each fin center, underside white, gray pelvic fins |
| | Torpedo marmorata | Stingray rounded silhouette, brown shade covered in patches |

| Level | Category | Selected Prompt |
|---|---|---|
| Order (Sharks) | Carcharhiniformes | Shark slim elongated figure and white-edged oval spots |
| | Squaliformes | Shark with a fat triangular body, stout compressed silhouette |
| Family (Carcharhiniformes) | Scyliorhinidae | Shark elongated narrow silhouette, light brown tone patterned with round black speckles |
| | Triakidae | Shark slim narrow form and gray coloration |
| Species (Scyliorhinidae) | Galeus melastomus | Shark elongated slender body, long low anal fin, narrow caudal fin ridged, gray or brownish red coloration with oval markings bordered in white |
| | Scyliorhinus canicula | Shark slender long body and dotted with tiny black speckles |
| Species (Triakidae) | Mustelus mustelus | Shark elongated slender build, pointed profile, small mouth, uniform light gray or brown |
| | Galeorhinus galeus | Shark narrow elongated outline, long snout, second dorsal fin small like anal, deep notched caudal fin, light gray body, white belly |
| Order (Rays) | Torpediniformes | Stingray rounded silhouette, brown shade covered in patches |
| | Rajiformes | Stingray diamond-shaped figure, slender elongated tail tip, brown-gray body with dark banding, prominent circular mark in each fin, pale underside, pelvic fins gray |

| Taxonomic Level | CLIP (Zero-Shot) | CALIP | PGCA (Ours) |
|---|---|---|---|
| Order | 0.77/0.76 | 0.77/0.77 | 0.82/0.81 |
| Family | 0.71/0.67 | 0.75/0.70 | 0.79/0.80 |
| Species | 0.50/0.50 | 0.52/0.53 | 0.59/0.62 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Beviá-Ballesteros, I.; Jerez-Tallón, M.; Aranda-Garrido, N.; Saval-Calvo, M.; Abel-Abellán, I.; Fuster-Guilló, A. Zero-Shot Elasmobranch Classification Informed by Domain Prior Knowledge. Mach. Learn. Knowl. Extr. 2025, 7, 146. https://doi.org/10.3390/make7040146
