1. Introduction

Artificial intelligence (AI) and machine learning (ML) are growing rapidly and reshaping industries ranging from healthcare and finance to education and sports. ML, a subfield of AI focused on systems that learn from data without being explicitly programmed, has demonstrated strong potential for solving complex prediction and classification problems across domains [1,2]. Several converging factors fuel this progress: the abundance of data (Big Data), advances in computing infrastructure (particularly GPUs and cloud computing), and the maturation of sophisticated algorithms, including deep learning [3,4].

Despite these advances, the practical application of ML is often constrained by a major challenge: accessibility. Developing, training, and deploying ML models traditionally requires specialized knowledge in software programming (e.g., Python, R), mathematical foundations (e.g., linear algebra, probability), and data science techniques (e.g., feature engineering, model validation) [5]. This technical barrier limits adoption among professionals in fields like healthcare, education, or sports, where data are plentiful but computational expertise may be limited.

In response to this gap, low-code and no-code (LC/NC) ML platforms have emerged as promising solutions that aim to democratize access to ML [6]. These tools offer visual interfaces, automated pipelines, and pre-built components that enable users to build and evaluate ML models with little or no programming. No-code platforms are fully graphical and require no programming, while low-code tools allow minimal scripting to offer greater flexibility. Major cloud providers such as Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure now offer LC/NC solutions (e.g., SageMaker Canvas, Vertex AI, Azure Machine Learning Studio) tailored to non-experts [7,8,9].

This study is motivated by the belief that such tools can significantly extend the reach of ML to professionals who deeply understand their domain but lack technical training. The sports domain, in particular, represents a compelling use case: Modern athletic environments generate extensive biometric and performance data that could be harnessed for injury prevention, performance optimization, and tactical analysis [10,11].

However, transforming these large, heterogeneous datasets into actionable insights typically requires advanced technical expertise, because traditional ML pipelines demand programming skills, data engineering, and experience in algorithm tuning and evaluation [12,13]. As a result, much of this potentially valuable information remains underutilized, or exploiting it incurs the additional cost of hiring a specialist. In this context, LC/NC AutoML platforms could enable non-technical users to build predictive models directly from their data without writing code or configuring complex pipelines.

In this study, we focus on the injury risk prediction problem, a recurring challenge in sports science where the goal is to estimate the likelihood of athlete injury based on training load, physiological indicators, and historical performance. The problem involves multiple steps—from data ingestion and preprocessing to model training, evaluation, and interpretation—that can now be executed visually through LC/NC interfaces.

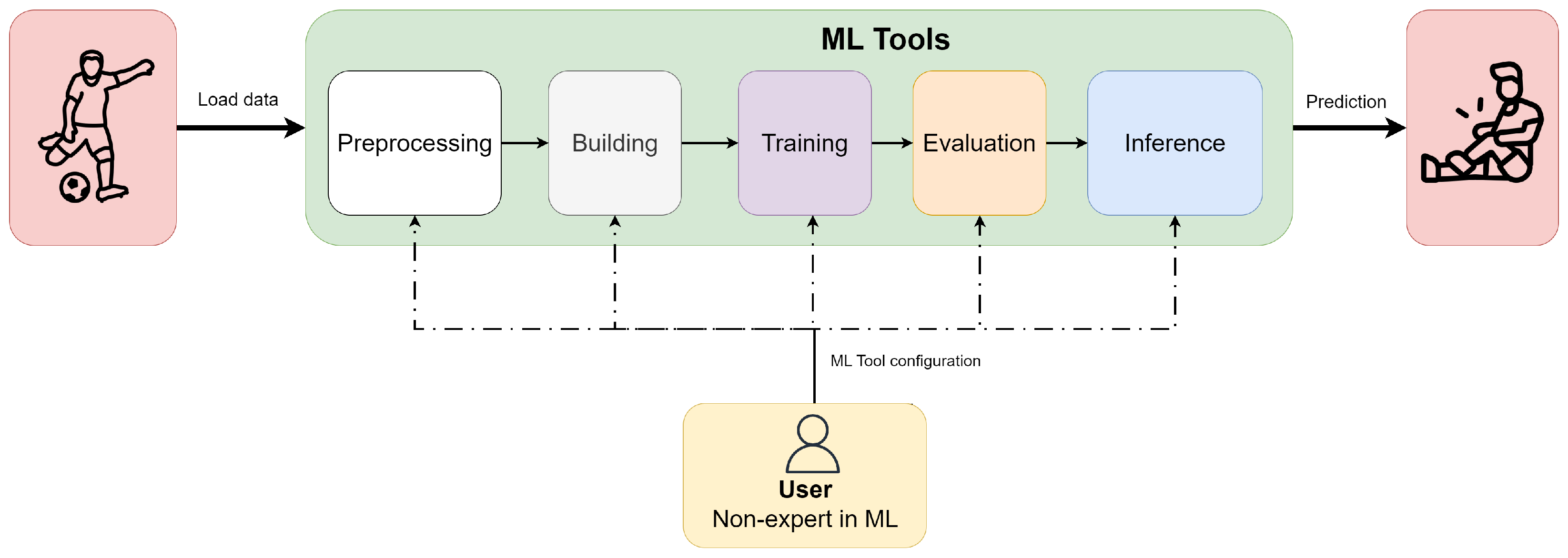

To clarify this process, Figure 1 depicts the end-to-end workflow adopted in this study. Raw sports data (biometrics, training load, and injury history) are first preprocessed and then ingested into the three LC/NC AutoML platforms evaluated. Within each platform, the pipeline is executed by a non-expert user and comprises five stages: (i) preprocessing (basic cleaning and filtering), (ii) building (setting up the model as regression or classification), (iii) training (AutoML model search), (iv) model evaluation (default internal validation), and (v) inference. The predicted injury risk is subsequently examined through interpretability outputs (feature importance), which provide actionable insights for coaches or physiotherapists.

To date, there is a lack of practical research examining whether LC/NC platforms can truly empower non-technical professionals. Although a few preliminary studies have explored these tools [14,15,16], their scope has often been limited, focusing mainly on technical users, simplified use cases, or evaluations restricted to model performance alone. These works do not systematically assess usability, transparency, costs, or real-world applicability from the perspective of subject matter experts without programming knowledge. This gap highlights the need for applied studies that examine how LC/NC platforms can effectively bridge the divide between data availability and technical capacity across specialized environments.

Therefore, this paper presents a comparative evaluation of three leading LC/NC platforms, applied to a real-world dataset on sports injury risk. The study assesses whether these platforms enable non-technical users to carry out core ML tasks—such as data preparation, model training, evaluation, and interpretation—while also analyzing other critical aspects often overlooked: predictive performance, feature importance extraction, training time, usability, and cost management.

The findings are intended to inform both technical researchers and industry professionals, particularly those who lack the technical skills to develop ML models from scratch but are interested in incorporating ML into their daily workflows. By evaluating how each platform supports the full ML lifecycle and the trade-offs involved, this work contributes to the ongoing debate on the democratization of AI and offers practical insight into the capabilities and limitations of LC/NC tools in real-world decision-making contexts.

The remainder of this paper is structured as follows. Section 2 reviews related work on the democratization of ML through LC/NC platforms. Section 3 details the methodological approach, including the selection criteria for the evaluated platforms, the characteristics of the dataset used for the case study, and the evaluation metrics employed to assess predictive performance. Section 4 presents the results obtained from applying the workflows of each platform to the injury risk prediction problem. Section 5 offers a critical discussion of these results, comparing the platforms across multiple usability and performance dimensions, and interpreting their implications for real-world adoption by non-technical users. Finally, Section 6 presents the main conclusions and outlines directions for future research related to LC/NC platforms.

2. Related Work

The democratization of ML has become a focal point in both academic research and industry, with an increasing emphasis on enabling non-technical users to build, train, and deploy models through LC/NC platforms. AutoML tools, which automate key stages of the ML workflow such as feature engineering, model selection, and hyperparameter optimization, have been widely studied for their potential to reduce entry barriers [13,17]. However, despite their promise, researchers have identified persistent challenges, including transparency, reproducibility, and the risk of over-reliance on automation without understanding underlying processes [18,19]. Similarly, ref. [20] evaluated AutoML tools under multiple experimental configurations, revealing trade-offs between performance and interpretability. These empirical studies emphasize quantitative metrics but do not assess usability or accessibility for non-technical professionals.

In parallel, the rapid emergence of AI-augmented LC/NC platforms, sometimes incorporating agentic and memory-augmented frameworks, reflects a growing trend toward more intelligent and context-aware usability [21]. Complementary empirical studies also reinforce the role of LC/NC technologies in accelerating organizational adoption and innovation cycles across sectors [22]. Nevertheless, these contributions focus mainly on conceptual frameworks and broad adoption patterns, while lacking systematic evaluations of usability, cost transparency, and end-to-end workflows in domain-specific scenarios.

Recent studies have evaluated these platforms from a technical perspective, often focusing on performance and reproducibility rather than usability for domain experts without programming experience [23,24]. While some works explore the educational potential of LC/NC tools in teaching ML concepts to non-technical audiences [25], systematic comparisons that assess the real-world applicability of these platforms for professionals in specialized domains remain scarce.

Other recent contributions examine LC/NC ML from human–computer interaction and governance perspectives. Sundberg and Holmström [26] explored how no-code AI integrates with MLOps pipelines to streamline iteration cycles, while ref. [27] identified governance and dependency challenges in long-term low-code adoption. D’Aloisio et al. [28] introduced MANILA, a low-code fairness benchmarking tool extending LC/NC use to ethical AI, and Lehmann and Buschek [29] discussed prompt-based natural language interfaces as a new layer of accessibility for novice users. However, these studies analyze design paradigms or single-platform prototypes, and they do not offer a comparative, cross-platform evaluation that integrates usability, cost, and interpretability, as carried out in this work.

Comparative reviews have highlighted that LC/NC approaches can streamline ML adoption in organizations by reducing development times and enabling faster prototyping [26,30]. In parallel, user acceptance research emphasizes perceived ease of use and usefulness as critical factors influencing adoption [31]. Nonetheless, these works prioritize organizational and managerial perspectives, overlooking hands-on evaluations of usability, cost transparency, and technical barriers faced by non-expert users during real ML workflows.

The lack of applied studies testing LC/NC AutoML solutions for non-technical professionals is notable, with only a handful of preliminary efforts attempting to bridge this gap. This underexplored intersection highlights the need for empirical evaluations of LC/NC tools in realistic domain-specific scenarios, where professionals have deep contextual expertise but limited technical training.

In this context, the present work contributes by conducting a practical, comparative evaluation of three leading cloud-based LC/NC platforms applied to a real sports injury risk prediction use case. By combining predictive performance assessment with usability and cost management analysis, this study aims to provide a more holistic understanding of the strengths and limitations of these platforms for non-technical domain experts.

3. Materials and Methods

This section outlines the methodological framework adopted for evaluating the usability and effectiveness of LC/NC platforms in developing ML models within a sports context. It describes the criteria used for selecting the platforms under study, the characteristics of the dataset employed, the performance metrics applied to assess model quality, and the step-by-step experimental procedure followed. The aim was to ensure consistency across platforms while maintaining conditions reflective of real-world use by non-technical users.

3.1. Platform Selection Criteria

The selection of cloud-based platforms for this practical study was guided by their strong market presence in cloud computing services and their capabilities in the field of ML, with a specific focus on tools that support LC/NC development. The main selection criteria were as follows:

Cloud Market Leadership: Platforms offered by the three major cloud providers, Microsoft Azure [32], AWS [33], and Google Cloud [34], were chosen for their robustness, scalability, and wide range of integrated services. Their leadership positions are consistently recognized in industry analyses [35,36].

Availability of LC/NC Platforms: A key requirement was the existence and maturity of tools designed for users with limited programming experience.

Support for the Full ML Lifecycle: Platforms needed to support all stages of the ML pipeline, including data ingestion, preprocessing, model training, evaluation, and deployment, to ensure a complete and consistent user experience.

Pricing Model and Free Tier Access: The platforms’ pricing structures and availability of free-tier credits were also considered, as accessibility within constrained budgets is essential for individuals, students, and small organizations exploring ML applications.

Based on these criteria, three cloud-based platforms were selected for the comparative study shown in Table 1: Amazon SageMaker Canvas [7], Google Cloud Vertex AI [8], and Azure Machine Learning (Azure ML) [9]. These platforms represent the leading options in the current market, combining robust cloud infrastructure with accessible LC/NC interfaces, making them suitable candidates for evaluating the democratization of ML for non-technical users. In addition, they include AutoML capabilities, which automate model selection, training, and evaluation, making them especially suitable for users without prior experience in ML development [37].

3.2. Dataset Description

The dataset used in this study focuses on sports injury risk prediction and was obtained from the open data platform Kaggle [38]. Several publicly available datasets relevant to sports and physical activity were initially considered. These included (i) Baseball Players, containing height, weight, and age to predict player position (classification) [39]; (ii) Fitness Tracker, which included daily steps, calories burned, distance, sleep duration, and heart rate to predict mood (classification) [40]; (iii) Gym Members, aimed at predicting calories burned from personal and workout-related features (regression) [41]; and (iv) Injury Data, a binary classification dataset predicting injury occurrence based on physical characteristics and training intensity [42].

Finally, the Sports Injury Detection [43] dataset was selected due to its greater feature richness and suitability for both regression and classification tasks. It encodes injury risk as a continuous score ranging from 0 to 1, offering a more nuanced target relevant to real-world sports performance monitoring. For classification evaluation, we use the same tabular features and the variable Injury_Occurred as the target (positive = injury). This preserves the domain and data modalities while allowing us to assess whether the platforms’ behavior generalizes across problem types. The dataset consists of 10,000 rows and includes key input variables such as sport type, cumulative fatigue index, impact force, and heart rate. Two columns, Athlete_ID and Session_Date, were excluded to prevent redundancy or data leakage. The dataset was clean and formatted as a standard CSV file, requiring no additional preprocessing before use.

Table 2 shows the features of the Sports Injury Risk dataset.
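The column handling described above can be sketched in a few lines of Python. This is only an illustration of what the platforms' column pickers do visually. The helper `select_columns`, the constant names, and the two sample rows are hypothetical. Dropping the unused target column in the classification case is an assumption, since the text states it explicitly only for the regression runs.

```python
import csv
import io

# Identifier and temporal columns excluded in the study.
ID_COLUMNS = {"Athlete_ID", "Session_Date"}
# Target column per task, as named in the text.
TARGETS = {
    "regression": "Injury_Risk_Score",    # continuous score in [0, 1]
    "classification": "Injury_Occurred",  # binary label (positive = injury)
}


def select_columns(rows, task):
    """Split each row into a feature dict and a target value for the task."""
    target = TARGETS[task]
    # Assumption: both candidate targets are removed from the features,
    # so the unused one cannot leak information about the used one.
    dropped = ID_COLUMNS | set(TARGETS.values())
    features = [{k: v for k, v in row.items() if k not in dropped} for row in rows]
    labels = [row[target] for row in rows]
    return features, labels


# Two made-up rows for illustration only (not taken from the dataset).
SAMPLE_CSV = """Athlete_ID,Session_Date,Cumulative_Fatigue_Index,Impact_Force_Newtons,Heart_Rate_BPM,Injury_Risk_Score,Injury_Occurred
A001,2024-01-05,0.42,1350,142,0.31,0
A002,2024-01-05,0.88,2900,165,0.78,1
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
features, labels = select_columns(rows, "regression")
print(sorted(features[0]))  # remaining feature names
print(labels)               # target values, still strings as read from CSV
```

Keeping the exclusion rule in one place makes it easy to apply the same feature set to both tasks, which is what allows the regression and classification runs to be compared.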

3.3. Model Evaluation Metrics

To assess the performance of the ML models built using the selected platforms, a set of regression-specific evaluation metrics was employed, since the target variable Injury_Risk_Score is continuous. The primary metrics considered were Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Root Mean Squared Logarithmic Error (RMSLE), Coefficient of Determination ($R^2$), and Normalized RMSE (NRMSE). These metrics were provided directly by the platforms and interpreted in the context of the study, and they are among the most commonly used evaluation metrics [44].

From a practical perspective, two aspects were prioritized in evaluating model quality: the typical magnitude of prediction error, and the model’s ability to explain variance in the data. RMSE, being expressed in the same units as the predicted outcome, offers an intuitive indication of average prediction error. A lower RMSE value suggests higher accuracy in estimating injury risk scores. Similarly, the $R^2$ metric indicates the proportion of variability in the target variable that can be explained by the model. An $R^2$ value near 1 denotes excellent model fit and predictive power.

Table 3 shows a summary of the evaluation metrics selected.
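For readers who want to see how the regression metrics relate to one another, the following is a minimal sketch using only the Python standard library. The function name `regression_metrics` and the sample values are hypothetical. NRMSE is normalized here by the observed target range, which is one common convention and an assumption on our part, since each platform may normalize differently.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, RMSLE, R^2, and range-normalized RMSE."""
    n = len(y_true)
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    mse = sum(squared_errors) / n
    rmse = math.sqrt(mse)
    # RMSLE compares log(1 + y), so it needs non-negative values;
    # injury risk scores in [0, 1] satisfy this.
    log_errors = [(math.log1p(p) - math.log1p(t)) ** 2 for t, p in zip(y_true, y_pred)]
    rmsle = math.sqrt(sum(log_errors) / n)
    # R^2 = 1 - (residual sum of squares / total sum of squares).
    mean_true = sum(y_true) / n
    ss_total = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - sum(squared_errors) / ss_total
    # Assumption: normalize RMSE by the observed range of the target.
    nrmse = rmse / (max(y_true) - min(y_true))
    return {"MSE": mse, "RMSE": rmse, "RMSLE": rmsle, "R2": r2, "NRMSE": nrmse}


# Made-up risk scores for illustration only.
y_true = [0.10, 0.40, 0.70, 0.90]
y_pred = [0.12, 0.38, 0.69, 0.93]
metrics = regression_metrics(y_true, y_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because RMSE is the square root of MSE, the two always move together. $R^2$ adds information that neither of them carries, namely how the error compares with the spread of the target itself.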

For the classification evaluation, we report the standard metrics provided by each platform’s internal validation, as shown in Table 4 [45]: ROC AUC, F1-score, Accuracy, and Recall.
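The four classification metrics can likewise be sketched in plain Python. The function name, the 0.5 decision threshold, and the sample scores are assumptions for illustration. The platforms compute these values internally and may choose thresholds differently.

```python
def classification_metrics(y_true, scores, threshold=0.5):
    """Compute Accuracy, Recall, F1, and ROC AUC for binary labels (1 = injury)."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    # ROC AUC equals the probability that a randomly chosen positive case
    # receives a higher score than a randomly chosen negative case
    # (ties count as half). It does not depend on the threshold.
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    roc_auc = wins / (len(pos) * len(neg))

    return {"ROC AUC": roc_auc, "F1": f1, "Accuracy": accuracy, "Recall": recall}


# Made-up labels and predicted probabilities for illustration only.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
results = classification_metrics(y_true, scores)
for name, value in results.items():
    print(f"{name}: {value:.3f}")
```

Accuracy, Recall, and F1 depend on the chosen threshold, whereas ROC AUC summarizes ranking quality across all thresholds. This is why the two kinds of metric can disagree on which model is better.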

3.4. Experimental Environment

All experiments were executed entirely within cloud-based low-code/no-code (LC/NC) AutoML environments, which handle computation, storage, and model execution remotely. Therefore, no specific local hardware configuration was required.

The experiments involved the three LC/NC AutoML platforms:

Amazon SageMaker Canvas, which automates data import, cleaning, feature engineering, model training, and evaluation through a graphical interface.

Google Cloud Vertex AI, offering an AutoML workflow that integrates preprocessing, model selection, training, validation, and explainability components.

Microsoft Azure Machine Learning Studio, providing a drag-and-drop interface for data preprocessing, automated training, model evaluation, and deployment.

Each platform was configured with its default “out-of-the-box” settings to simulate realistic usage by non-technical users.

3.5. Experimental Procedure

The experimental process carried out on each of the selected cloud-based platforms followed a common methodology, adapted to the specific interfaces and workflows of each platform, as shown in Figure 1. The goal was to maintain a consistent sequence of steps to ensure a fair comparison of user experience and model performance. While the overall process was similar across platforms, inherent differences such as internal data splitting strategies and AutoML automation mechanisms meant that a fixed external test set was not used. Instead, each model was evaluated using the default internal validation procedures provided by the platforms.

The procedure consisted of the following main steps:

1. Dataset Preparation: The injury risk dataset was uploaded to each platform. Initial exploration was conducted using built-in visual tools to examine variable types and distributions and detect any outliers or missing values. The dataset was configured for training by specifying the target variable Injury_Risk_Score and excluding irrelevant columns. No manual preprocessing was required, as all platforms offered automated handling of data formatting and type inference.

2. Model Building: Each platform’s graphical interface was used to define the ML task as either a Regression or Classification problem. The corresponding target variable was selected, and all configuration settings such as optimization metric, data split strategy, and runtime parameters were left at their default values to ensure methodological consistency across systems. No manual coding or custom configuration was involved.

For the regression tasks, the models automatically selected by each platform were identified as follows: Amazon SageMaker Canvas [46] generated an XGBoostRegressor ensemble model; Google Cloud Vertex AI [47] selected a Gradient Boosted Trees model (up to 100 iterations with early stopping); and Azure Machine Learning [48] built a Voting Ensemble based on the top-performing regressors (maximum depth = 10; 100 trees). For the classification tasks, SageMaker Canvas produced an XGBoostClassifier, Vertex AI selected a Gradient Boosted Trees Classifier, and Azure ML Studio again selected a Voting Ensemble combining LightGBM, Random Forest, and Logistic Regression models.

Each platform applies its own default data partition strategy when training and validating models for regression and classification. Amazon SageMaker Canvas performs an 80/20 split between training and validation data, Google Cloud Vertex AI uses a three-way split with 80% training, 10% validation, and 10% testing, and Azure Machine Learning applies a default partition of approximately 70% training, 15% validation, and 15% testing (depending on dataset size and configuration). In all cases, the performance metrics reported in this study correspond specifically to the validation subsets automatically generated by each platform.

3. Model Training: Training was carried out using each platform’s default AutoML workflow, which automatically handled feature preprocessing, algorithm selection, and hyperparameter optimization. This approach was intended to assess the ability of LC/NC tools to produce high-quality models without manual intervention or expert knowledge. All experiments were executed using free-tier or default compute configurations to reflect realistic user constraints.

4. Model Evaluation: Once training was complete, model performance was assessed using the metrics defined in Section 3.3. For regression tasks, the primary indicators were RMSE, MSE, RMSLE, $R^2$, and NRMSE. For classification tasks, evaluation included ROC AUC, F1-score, Accuracy, and Recall. Built-in interpretability tools were then employed to identify the most influential features contributing to predictions, providing insight into the variables most strongly associated with injury risk estimation.

To ensure cost consistency, training was executed using only the basic compute resources included in each platform’s free tier. The total training duration was recorded for comparison purposes. Participants had no technical knowledge. Specifically, one of the authors, a sports science professional with no formal training in data science or artificial intelligence, carried out the practical interactions with each platform, together with colleagues from the sports field. Their experience and comments were used to qualitatively assess the usability of each LC/NC workflow.
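To make the effect of the default partitions from step 2 concrete, the sketch below applies the three stated ratios to a 10,000-row dataset. The function `split_indices`, the fixed seed, and the simple shuffle are assumptions for illustration. Each platform performs its own internal split, and the Azure ratios are approximate, as noted in the text.

```python
import random

# (train, validation, test) fractions as described in the text.
DEFAULT_SPLITS = {
    "SageMaker Canvas": (0.80, 0.20, 0.00),
    "Vertex AI": (0.80, 0.10, 0.10),
    "Azure ML": (0.70, 0.15, 0.15),  # approximate, per the text
}


def split_indices(n_rows, fractions, seed=0):
    """Shuffle row indices and cut them into train/validation/test parts."""
    train_fraction, valid_fraction, _ = fractions
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)  # assumption: plain random split
    n_train = round(n_rows * train_fraction)
    n_valid = round(n_rows * valid_fraction)
    train = indices[:n_train]
    valid = indices[n_train:n_train + n_valid]
    test = indices[n_train + n_valid:]    # whatever remains
    return train, valid, test


sizes = {
    name: tuple(len(part) for part in split_indices(10_000, fractions))
    for name, fractions in DEFAULT_SPLITS.items()
}
for name, (n_train, n_valid, n_test) in sizes.items():
    print(f"{name}: train={n_train}, validation={n_valid}, test={n_test}")
```

Under these ratios the validation subsets contain 2000, 1000, and 1500 rows, respectively, so the reported validation metrics are not computed on the same rows or on samples of equal size. This is worth keeping in mind when comparing small differences between platforms.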

For clarity and reproducibility, the workflow followed across all platforms is also summarized in Algorithm 1.

Algorithm 1 LC/NC Procedure Across Platforms for Regression and Classification

- Require: Tabular dataset (CSV); target variable: Injury_Risk_Score (regression) or Injury_Occurred (classification); excluded columns: Athlete_ID, Session_Date
- Ensure: Trained ML model (regression or classification); recorded metrics; feature importance; training time
- 1: Dataset Ingestion: Upload CSV file to the platform’s data section.
- 2: Schema Review: Verify inferred data types for all variables.
- 3: Target & Exclusions: Define the target variable according to the task and exclude identifier or temporal columns.
- 4: Task Definition: Select Regression or Classification as the problem type.
- 5: Defaults: Retain default data partitioning and optimization metric (platform default: e.g., MSE/RMSE for regression, Log Loss/AUC for classification).
- 6: AutoML Training: Launch the automated training using the default configuration (no manual hyperparameter tuning or sampling adjustments).
- 7: Metrics Collection: Regression: record RMSE, MSE, RMSLE, $R^2$, and NRMSE. Classification: record ROC AUC, F1-score, Accuracy, and Recall.
- 8: Explainability: Retrieve feature importance or SHAP-based explanations provided by the platform.
- 9: Timing & Resource Notes: Log total training duration and compute tier used (e.g., free tier, credits, or custom cluster).
- 10: (Optional) Export/Deploy: Export the model artifact or enable batch/online predictions if supported.
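One possible way to keep the outputs of steps 7 to 9 of Algorithm 1 comparable across platforms is to log each run in a small structured record. The sketch below is an assumption about how such a log could look. The class `RunRecord` and its field names are hypothetical and are not part of any platform. The example values are those reported for the Vertex AI regression run in Section 4.2.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    """Outputs of one pass through Algorithm 1 on one platform."""
    platform: str
    task: str                    # "regression" or "classification"
    target: str
    excluded_columns: list
    compute_tier: str            # step 9: e.g., "free tier"
    training_minutes: float      # step 9: total training duration
    metrics: dict = field(default_factory=dict)             # step 7
    feature_importance: dict = field(default_factory=dict)  # step 8, in percent

    def to_json(self):
        """Serialize the record so runs can be archived and compared."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = RunRecord(
    platform="Google Cloud Vertex AI",
    task="regression",
    target="Injury_Risk_Score",
    excluded_columns=["Athlete_ID", "Session_Date", "Injury_Occurred"],
    compute_tier="free tier",
    training_minutes=127.0,  # approximately 2 h 7 min
    metrics={"RMSE": 0.003, "RMSLE": 0.002, "R2": 1.000},
    feature_importance={
        "Cumulative_Fatigue_Index": 51.69,
        "Impact_Force_Newtons": 27.96,
        "Heart_Rate_BPM": 19.59,
    },
)
print(record.to_json())
```

A flat record like this can be filled in by hand from each platform's dashboard, which fits the no-code setting in which the metrics are read off the interface instead of being computed in code.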

4. Results

This section presents the results obtained from the practical experimentation carried out on the three selected LC/NC platforms, Amazon SageMaker Canvas, Google Cloud Vertex AI, and Azure ML Studio, using the same dataset (Sports Injury Risk) and identical methodological steps described in the previous section. Each platform was evaluated independently to ensure a fair and consistent comparison, focusing on model accuracy, training duration, feature importance analysis, and user interface behavior. The findings aim to assess the platforms not only in terms of raw performance metrics but also in terms of their practical usability for non-technical users attempting to develop machine learning solutions in applied domains.

4.1. Amazon SageMaker Canvas

Amazon SageMaker Canvas was the first platform tested in this study. It is a no-code solution from AWS that enables users to build ML models through a visual interface. Before experimentation, initial setup steps were required, including AWS account creation and SageMaker domain configuration. Once the environment was active, the workflow proceeded through dataset preparation, model configuration, training, and evaluation, as described below.

4.1.1. Dataset Preparation

The dataset was uploaded through the Datasets section of the Canvas interface. The platform automatically recognized variable types and provided a clear summary view. From the original 13 columns, 10 were retained for modeling, while Athlete_ID, Session_Date, and Injury_Occurred were excluded due to their lack of predictive relevance or potential to introduce data leakage. No additional cleaning or transformation was required, as the dataset was already formatted as a clean CSV file.

4.1.2. Model Building

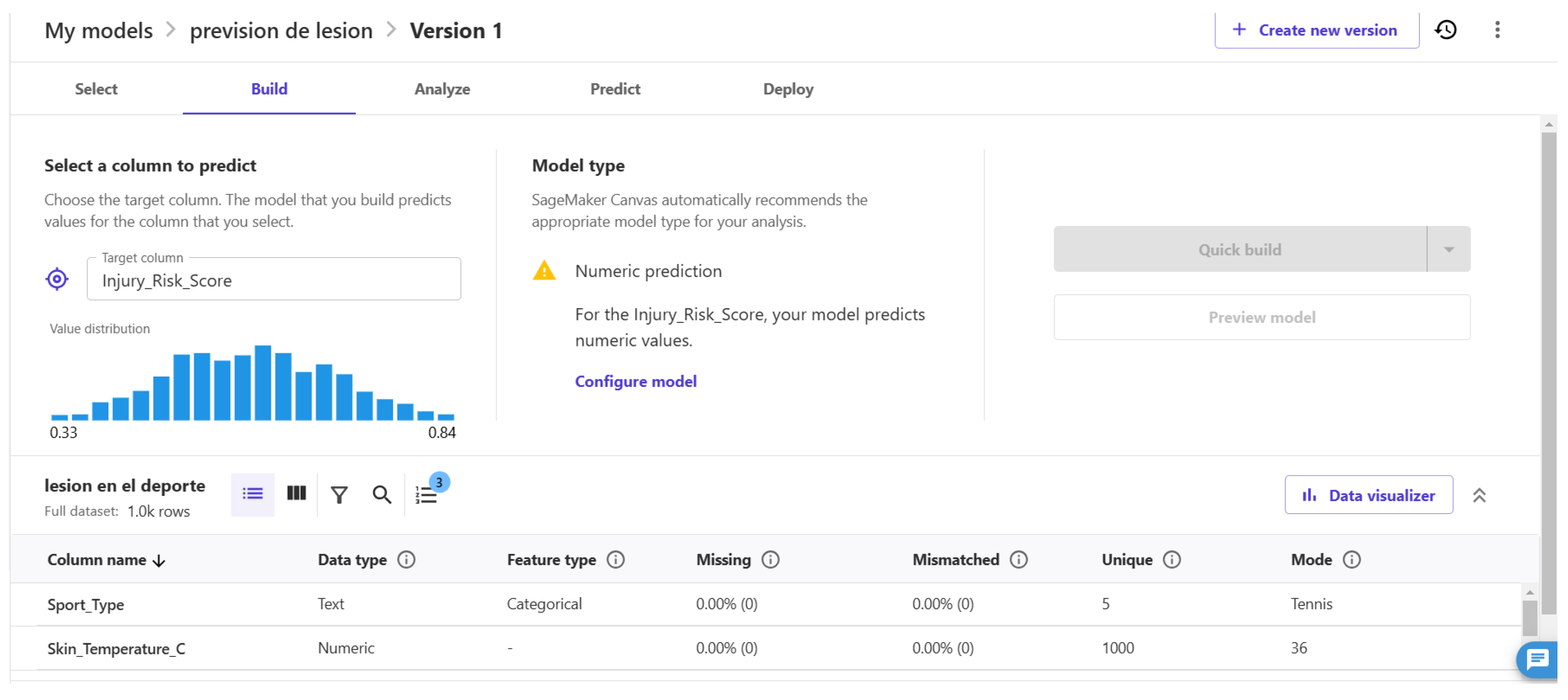

Using the Auto Pilot feature, the task was set up as a numerical prediction problem with Injury_Risk_Score as the target variable, as recommended by the tool itself, as shown in Figure 2. The platform automatically selected appropriate preprocessing steps and ML algorithms. The default optimization metric, Mean Squared Error (MSE), was applied without user adjustment. The process was fully guided and required no manual parameter tuning.

For the classification setup, the task type was defined as Binary Classification, using the variable Injury_Occurred as the prediction target. Canvas automatically handled data preprocessing, feature encoding, and class balance adjustments through its integrated AutoML pipeline. The default optimization objective, Log Loss, was applied, and additional metrics such as Accuracy, F1-score, Recall, and ROC AUC were reported in the model summary. No manual configuration of hyperparameters, sampling strategies, or model selection criteria was performed, ensuring consistency with the regression experiment. The final model automatically selected was an XGBoostClassifier, which achieved the highest validation performance according to the platform’s internal evaluation.

4.1.3. Model Training

The model was trained using the Quick Build option, which prioritizes faster results over exhaustive optimization. This mode was selected deliberately to align with the study’s primary objective: to evaluate whether individuals without technical expertise can successfully generate predictive models using LC/NC platforms. The training process was executed using the standard computational resources provided in AWS’s free tier. Model training and internal validation were completed in less than 30 min.

4.1.4. Model Evaluation

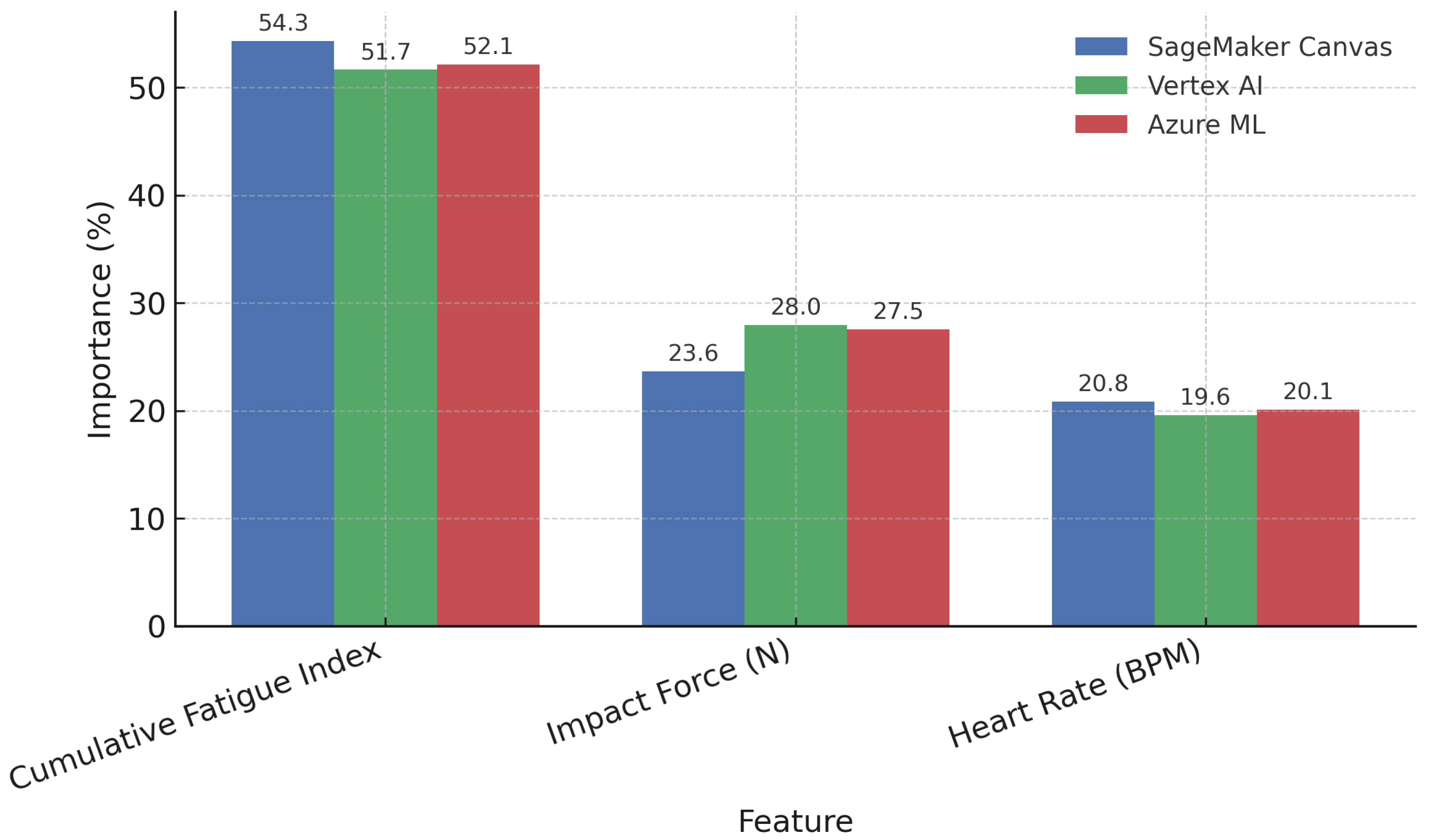

Upon completion, the platform presented only two evaluation metrics: an RMSE of 0.008 and an MSE of 0.000. Feature importance was also provided under the Analyze tab, highlighting Cumulative_Fatigue_Index (54.29%), Impact_Force_Newtons (23.65%), and Heart_Rate_BPM (20.83%) as the most influential predictors, as can be seen in Figure 4. This presentation enables intuitive interpretation without requiring technical expertise.
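The reported MSE of 0.000 may look surprising next to a nonzero RMSE, but it is what three-decimal rounding produces. The short check below squares the reported RMSE. It assumes the displayed values are rounded to three decimals, which the text does not state explicitly.

```python
rmse = 0.008          # RMSE as reported by SageMaker Canvas
mse = rmse ** 2       # MSE is the square of RMSE

print(f"{mse:.6f}")   # 0.000064
print(f"{mse:.3f}")   # 0.000, i.e., what a three-decimal display would show
```

For errors this small, MSE carries little visible information at the displayed precision, so RMSE is the more useful of the two figures for comparison.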

For the classification task, Canvas reported a ROC AUC of 0.91, an F1-score of 0.87, an Accuracy of 0.89, and a Recall of 0.85 on its internal validation dataset. These results indicate that the model was able to reliably distinguish between injured and non-injured cases, achieving a balanced trade-off between Precision and Recall. As with the regression experiment, the classification outputs were accompanied by interactive dashboards and feature importance plots, which highlighted Cumulative_Fatigue_Index and Impact_Force_Newtons as the main contributors to the predictive outcome. This consistency across tasks reinforces the interpretability and reliability of the automated modeling process.
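Precision is not among the reported metrics, but it can be recovered from the F1-score and Recall, because F1 is their harmonic mean: from $F_1 = 2PR/(P+R)$ it follows that $P = F_1 R / (2R - F_1)$. The sketch below applies this to the reported Canvas values. The function name is hypothetical, and since the inputs are rounded to two decimals, the result is only approximate.

```python
def precision_from_f1_recall(f1, recall):
    """Solve F1 = 2PR / (P + R) for precision P."""
    denominator = 2 * recall - f1
    if denominator <= 0:
        # No valid precision exists when F1 >= 2 * recall.
        raise ValueError("inconsistent F1 and recall values")
    return f1 * recall / denominator


# Values reported by SageMaker Canvas on its internal validation data.
canvas_precision = precision_from_f1_recall(f1=0.87, recall=0.85)
print(f"Implied precision: {canvas_precision:.2f}")
```

The implied Precision of roughly 0.89 is close to the reported Recall of 0.85, which is consistent with the balanced trade-off described above.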

4.2. Google Cloud Vertex AI

Google Cloud Vertex AI was the second platform evaluated in this study. It offers a fully integrated ML environment through the Google Cloud Console. The experiment was conducted using a free-tier account, with the dataset hosted in Google Cloud Storage. Unlike other platforms, Vertex AI requires no additional infrastructure setup beyond enabling the Vertex AI API, resulting in a smooth and centralized workflow suitable for non-technical users. The entire process from dataset handling to model training and evaluation was accessible from a single, unified interface.

4.2.1. Dataset Preparation

The dataset was first uploaded to Google Cloud Storage and then linked during the creation of a tabular dataset in Vertex AI. The platform enabled automatic schema recognition and provided summary statistics for all variables. As in previous cases, only 10 of the original 13 columns were retained for modeling, excluding Athlete_ID, Session_Date, and Injury_Occurred. The graphical interface made variable selection intuitive, requiring no manual data transformation.

4.2.2. Model Building

The regression task was configured using the AutoML interface. The target variable Injury_Risk_Score was selected, and the problem type was defined as a regression task. Vertex AI provides an optional weighting column feature, but this was left blank to preserve comparability across platforms. As Figure 3 shows, the default settings were used throughout, including a random data split (80% training, 10% validation, 10% testing) and Root Mean Squared Logarithmic Error (RMSLE) as the optimization metric.

For the classification experiment, the same workflow and data configuration were retained. The task type was set to Classification, with the binary variable Injury_Occurred defined as the target label. No class weights or custom sampling strategies were applied, allowing the AutoML system to handle balancing internally. Vertex AI automatically selected Log Loss as the primary optimization metric and additionally reported ROC AUC, F1-score, Recall, and Accuracy on its internal validation set. Default hyperparameter search and feature preprocessing options were kept unchanged to ensure methodological consistency with the regression task. The final model automatically chosen by Vertex AI corresponded to a gradient-boosted tree ensemble, which provided the best trade-off between accuracy and interpretability.

4.2.3. Model Training

Model training was launched through a series of intuitive clicks in the GUI, requiring no scripting or configuration files. The training process was executed automatically using Google’s AutoML backend. To evaluate accessibility for non-technical users, no custom hyperparameter tuning or manual adjustments were performed. The training process was completed in approximately 2 h and 7 min using the compute resources provided within the free usage tier.

4.2.4. Model Evaluation

The trained model was evaluated using the platform’s built-in metrics dashboard. Vertex AI reported an RMSE of 0.003, an RMSLE of 0.002, and an $R^2$ score of 1.000. Feature importance was provided using Shapley values, clearly indicating the most influential variables as shown in Figure 4: Cumulative_Fatigue_Index (51.69%), Impact_Force_Newtons (27.96%), and Heart_Rate_BPM (19.59%). The results were presented through intuitive visualizations, facilitating interpretation without the need for technical explanation.
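To give an intuition for what Shapley-based feature importance measures, the sketch below computes exact Shapley values for a toy scoring function with three features. Everything here is an assumption for illustration: `toy_risk` is a made-up linear score and not the model trained by Vertex AI, the feature values are normalized placeholders, and converting contributions to percentage shares is only one plausible way such percentages could be derived. Platforms approximate these values for a trained model and aggregate them over many rows.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    names = list(instance)
    totals = dict.fromkeys(names, 0.0)
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)           # start from the baseline input
        previous = predict(current)
        for name in order:
            current[name] = instance[name]  # reveal one feature at a time
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_risk(x):
    """Hypothetical linear risk score on normalized features."""
    return (0.5 * x["Cumulative_Fatigue_Index"]
            + 0.3 * x["Impact_Force_Newtons"]
            + 0.2 * x["Heart_Rate_BPM"])


# Placeholder normalized values, for illustration only.
instance = {"Cumulative_Fatigue_Index": 0.8, "Impact_Force_Newtons": 0.6, "Heart_Rate_BPM": 0.5}
baseline = {"Cumulative_Fatigue_Index": 0.2, "Impact_Force_Newtons": 0.1, "Heart_Rate_BPM": 0.3}

phi = shapley_values(toy_risk, instance, baseline)
total = sum(abs(v) for v in phi.values())
shares = {name: 100 * abs(v) / total for name, v in phi.items()}
for name in phi:
    print(f"{name}: contribution={phi[name]:.3f}, share={shares[name]:.1f}%")
```

The contributions always sum to the difference between the prediction for the instance and the prediction for the baseline. This additivity is the property that makes Shapley values suitable for percentage-style importance charts.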

For the classification experiment, Vertex AI achieved consistently strong results across all evaluation metrics, with a ROC AUC of 0.93, an F1-score of 0.89, an Accuracy of 0.91, and a Recall of 0.88 on its internal validation split. These metrics confirm the platform’s ability to generate highly discriminative models with minimal user configuration.
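As a reference for interpreting these figures, the following sketch shows how Accuracy, Recall, and F1-score follow from confusion-matrix counts, and how ROC AUC can be read as the probability that a randomly chosen positive case is scored above a randomly chosen negative one. The function names are illustrative; the platforms' own implementations are not described in this study.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts.

    Assumes at least one predicted positive and one actual positive.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))
```

In an injury-prediction setting, Recall is of particular interest because a false negative corresponds to an at-risk athlete who is not flagged.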

4.3. Azure Machine Learning Studio

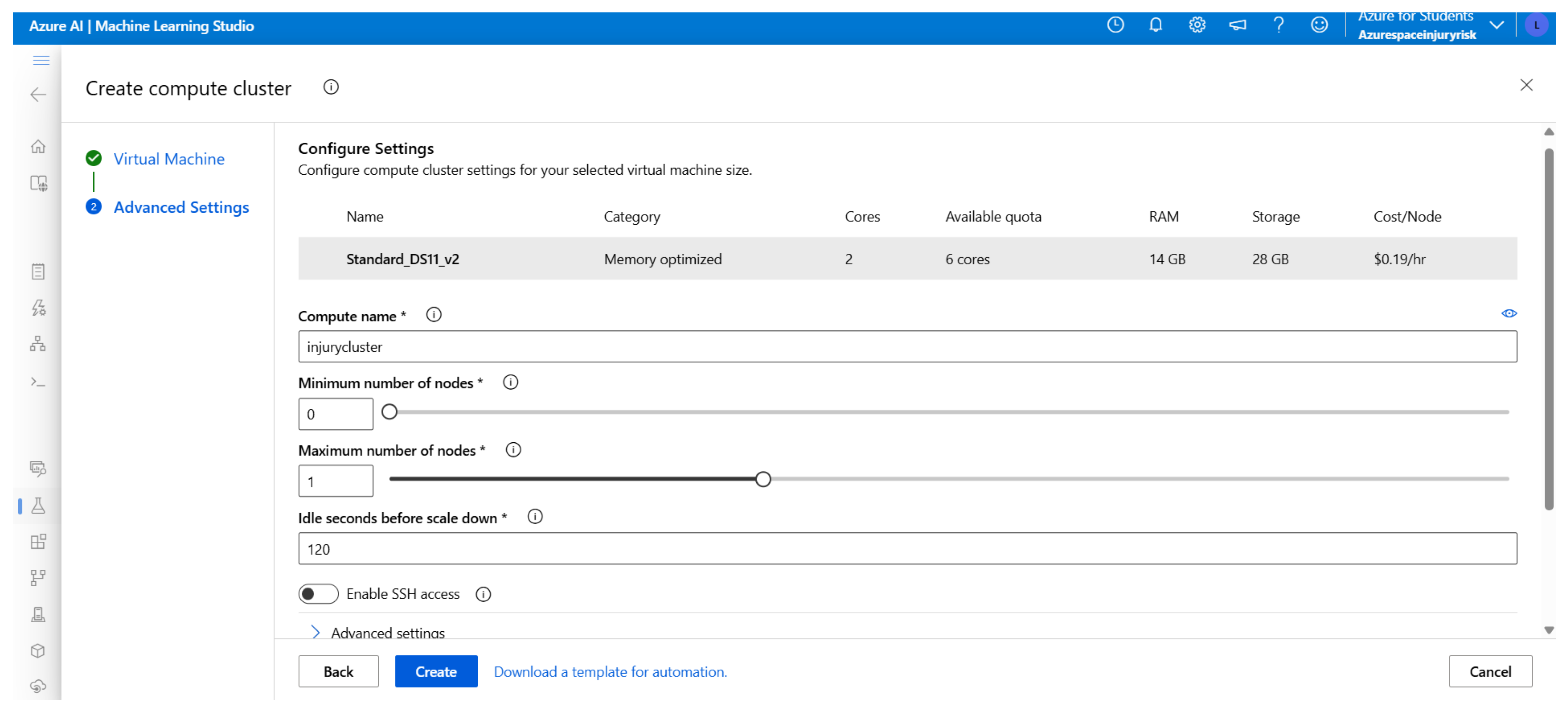

Azure ML Studio was the final platform evaluated in this study. Unlike the previous platforms, it required preliminary infrastructure configuration, including the creation of a dedicated workspace and compute cluster. An initial training attempt failed due to an unavailable CPU in the free tier, as the compute resource had not been correctly set. This issue, stemming from a lack of familiarity with Azure’s resource management, required additional research and reconfiguration of the cluster to proceed with training, as shown in Figure 5. Although the Studio interface is user-friendly, the compute options and resolution of errors demanded a deeper level of exploration than in other platforms.

4.3.1. Dataset Preparation

The dataset was uploaded via the Datasets tab in Azure ML Studio. The platform allowed users to register and version datasets, which were then categorized as tabular data. During schema review, as in the previous platforms, three non-relevant columns, Athlete_ID, Session_Date, and Injury_Occurred, were excluded from the analysis. The visual interface streamlined this process without requiring code or transformation steps.

4.3.2. Model Building

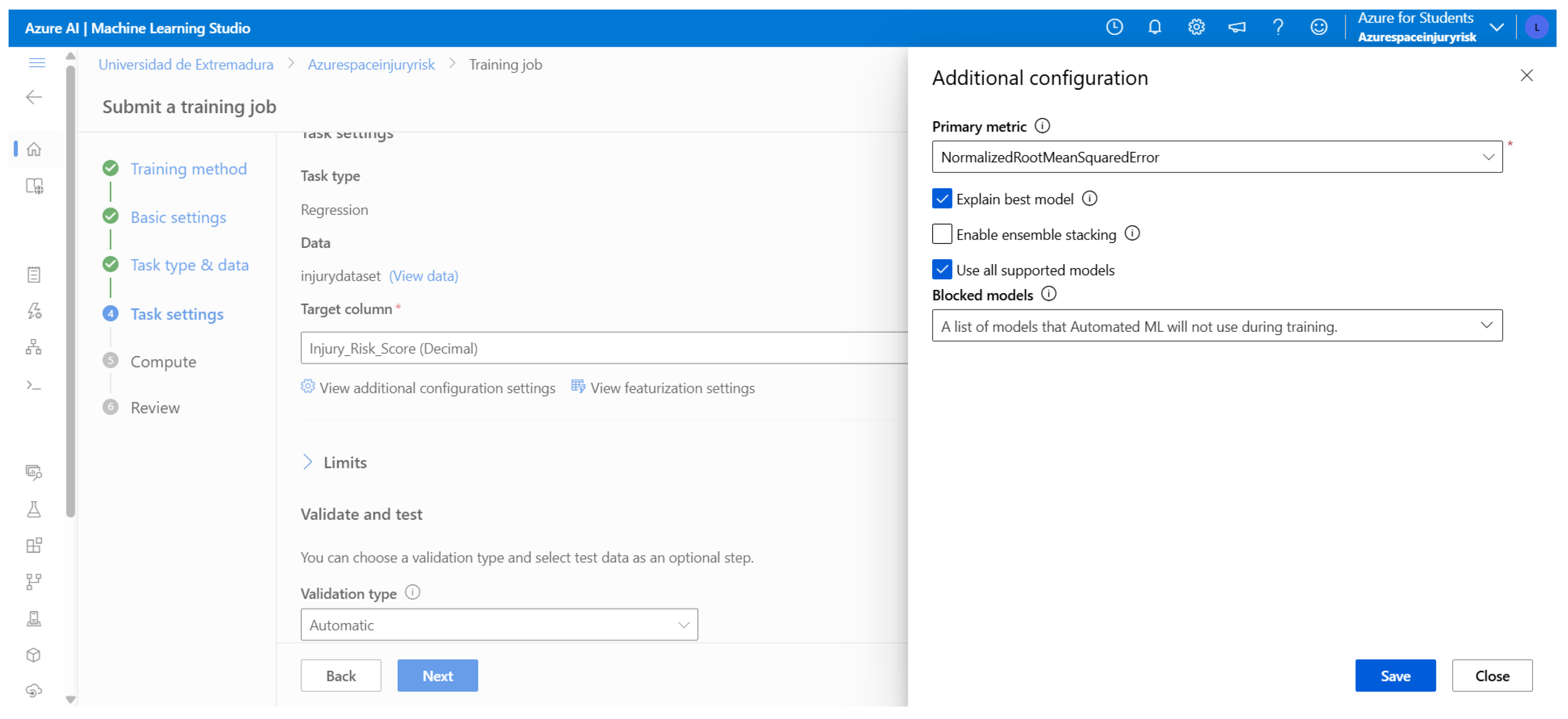

The experiment was configured using the graphical interface within Azure ML Studio, as shown in Figure 6. The task was defined as a regression problem, and Injury_Risk_Score was selected as the target variable. A compute cluster was manually assigned, and the default optimization metric, Normalized Root Mean Squared Error (NRMSE), was used. Azure provided advanced settings such as experiment and iteration timeouts, and a metric score threshold to control cost and resource usage. These were left unchanged to maintain a baseline configuration. The platform selected a final model named VotingEnsemble, based on an ensemble of the best individual models identified during the training process.
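The NRMSE metric can be sketched as follows, assuming normalization by the range of the observed target values, which is the convention described in Azure's AutoML documentation. The function names are illustrative.

```python
import math


def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    squared_errors = [(p - t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def nrmse(y_true, y_pred):
    """RMSE divided by the range (max - min) of the observed targets."""
    return rmse(y_true, y_pred) / (max(y_true) - min(y_true))
```

Under this convention, NRMSE is scale-free, so values can be compared across targets measured in different units.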

For the classification setup, the workflow followed an analogous configuration process. The task type was set to Classification, with the binary variable Injury_Occurred defined as the prediction target. Azure ML automatically used Weighted AUC as the default optimization metric, while retaining the same compute cluster and time constraints from the regression setup. No manual hyperparameter tuning or sampling adjustments were applied, ensuring comparability across platforms. Once the AutoML run was completed, Azure again selected a VotingEnsemble as the final model, combining multiple high-performing classifiers such as LightGBM, Random Forest, and Logistic Regression to maximize predictive robustness.
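The idea behind a voting ensemble for classification can be illustrated with weighted soft voting, in which the predicted class probabilities of several models are averaged. This is a minimal sketch under stated assumptions: the study does not report how Azure weights the ensemble members, so the weights and probabilities below are hypothetical.

```python
def soft_vote(probabilities, weights):
    """Weighted average of positive-class probabilities from several models.

    probabilities: one list of per-example probabilities per model.
    weights: one non-negative weight per model.
    """
    total_weight = sum(weights)
    n_examples = len(probabilities[0])
    return [
        sum(w * model[i] for w, model in zip(weights, probabilities)) / total_weight
        for i in range(n_examples)
    ]


# Hypothetical outputs of three member models for two athletes.
member_probs = [
    [0.9, 0.2],  # e.g., a gradient-boosted model
    [0.7, 0.4],  # e.g., a random forest
    [0.6, 0.1],  # e.g., a logistic regression
]
ensemble_probs = soft_vote(member_probs, weights=[2, 1, 1])
labels = [int(p >= 0.5) for p in ensemble_probs]  # 0.5 threshold is an assumption
```

Averaging across heterogeneous models tends to reduce the variance of individual predictions, at the cost of training and scoring several models instead of one.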

4.3.3. Model Training

The training process was initiated using the free-tier compute cluster. Although the model configuration required slightly more manual setup than in other platforms, all steps were performed through the graphical interface, maintaining the no-code approach. The training process was completed in approximately 6 h and 12 min, significantly longer than the training durations observed in SageMaker Canvas and Vertex AI. This increase was attributed to the ensemble strategy and the compute tier limitations.

4.3.4. Model Evaluation

Table 5 shows that Azure ML Studio reported exceptionally high performance metrics for the VotingEnsemble model: RMSE = 0.0001968, RMSLE = 0.0001263, R² = 0.9999965, and NRMSE = 0.0003735. Figure 4 shows that feature importance analysis revealed consistent results with the other platforms, highlighting Cumulative_Fatigue_Index (52.13%), Impact_Force_Newtons (27.52%), and Heart_Rate_BPM (20.08%) as the top predictors. The visual representation, a bar chart within the Studio interface, provided an accessible summary for users without technical expertise.

For the classification task, Azure ML Studio achieved similarly strong performance, with a ROC AUC of 0.90, an F1-score of 0.85, an Accuracy of 0.87, and a Recall of 0.84. These results demonstrate that the automatically generated ensemble was able to generalize effectively across both regression and classification problems, maintaining high discriminative capacity while minimizing false negatives.

4.4. Cross-Platform Comparative Analysis

Beyond the platform-specific workflows described in the previous sections, a cross-platform perspective is necessary to understand how the tools compare in practice. This section brings together the results obtained on Amazon SageMaker Canvas, Google Cloud Vertex AI, and Azure ML Studio, focusing on key dimensions that determine their practical viability for non-technical users. The comparison covers predictive performance, feature importance, training time, usability and interface design, as well as cost management.

4.4.1. Predictive Performance Comparison

To evaluate the predictive accuracy of the regression models generated by each platform for the task of sports injury risk detection, several performance metrics were used, as defined in Section 3.5. Table 6 shows the results for the best-performing model selected by each LC/NC platform using their internal validation dataset.

All three platforms achieved exceptionally high levels of predictive performance on the given dataset. Azure ML produced the most favorable results, with nearly zero values in RMSE, MSE, RMSLE, and NRMSE, and an R² score of 0.999997. Google Cloud Vertex AI also demonstrated outstanding accuracy, reaching an R² of 1.000, along with low RMSE (0.003) and RMSLE (0.002) values. Although Amazon SageMaker Canvas reported a slightly higher RMSE (0.008), it achieved an MSE of 0.000, indicating that its predictions closely matched the true values.

However, these metrics must be interpreted with caution. The near-perfect performance of all platforms suggests that the dataset may contain relatively linear or simple relationships between input features and the target variable, making it highly compatible with automated modeling systems. While this reflects well on the usability of AutoML for structured data, generalizability may be limited. An external test set or a noisier, more complex dataset would be needed to properly assess model robustness and prevent overfitting.

Table 7 summarizes the classification performance achieved by each platform according to their internal validation results. Overall, all three LC/NC AutoML systems achieved strong classification accuracy, with ROC AUC values at or above 0.90, indicating robust separability between injured and non-injured cases. Google Cloud Vertex AI achieved the highest overall performance, particularly in terms of F1-score and Recall, suggesting a balanced handling of false positives and false negatives. Azure ML Studio also produced highly competitive results, supported by its ensemble-based models, while Amazon SageMaker Canvas performed slightly lower on Recall but maintained stable Accuracy across runs.

These results are consistent with the regression findings, reinforcing that all three LC/NC platforms are capable of automatically selecting and tuning high-quality models for structured tabular data. Small variations across systems likely reflect differences in their internal AutoML optimization strategies and model search spaces. Importantly, the classification results confirm that the usability and predictive effectiveness of these tools extend beyond continuous prediction tasks, supporting their applicability to a broader range of supervised learning problems.

A key methodological consideration is that the reported metrics were generated using each platform’s default internal validation procedures. Since the platforms apply different data splitting and evaluation strategies, the values are not strictly comparable across tools. To mitigate this limitation, we repeated the experiments three additional times per platform and observed highly consistent results across runs. This suggests that, although absolute fairness in cross-platform comparison cannot be guaranteed, the relative trends in performance are reliable.

4.4.2. Feature Importance Analysis

A particularly noteworthy outcome of the analysis was the consistent identification of key predictors across all platforms. The three most influential features, Cumulative_Fatigue_Index, Impact_Force_Newtons, and Heart_Rate_BPM, were ranked at the top by each LC/NC platform. Figure 4 summarizes their relative importance.

This remarkable consistency strengthens the trust in the models’ interpretability and the reliability of AutoML solutions for insight generation. Even without manual feature selection or domain-specific tuning, these platforms were able to converge on the most relevant variables for injury risk prediction.

What was particularly surprising was the degree to which these three variables dominated the model’s decision-making process. While their importance was expected, the minimal influence of other features, such as session duration, which accounted for less than 2%, was unexpected. The visual tools provided by each platform for feature importance were especially helpful in conveying this information in an accessible way, offering a strong sense of clarity and interpretability to users unfamiliar with ML mechanics.

These results suggest that AutoML tools not only streamline model development but also facilitate meaningful knowledge extraction, empowering domain professionals to make data-driven decisions without requiring deep technical expertise.

4.4.3. Training Time and Resource Usage

Training durations and resource usage varied significantly among platforms, as summarized in Table 8. Amazon SageMaker Canvas completed its training in under 30 min using the “Quick Build” mode. Google Cloud Vertex AI required approximately 2 h and 7 min, which is reasonable for a dataset of this size. Azure ML Studio took 6 h and 12 min. This long duration, despite the quality of the results, highlights the impact of the default compute setup and the complexity of the automatically selected VotingEnsemble model.

Although Azure offers powerful modeling capacity, its longer default execution time may present a barrier for users with limited compute quotas or time constraints. This delay, although justified by the advanced ensemble modeling techniques used, may frustrate novice users who expect faster feedback cycles.

Training duration and computational requirements for the classification tasks also varied across platforms, as shown in Table 9. Amazon SageMaker Canvas completed training in approximately 24 min using the “Quick Build” mode. Google Cloud Vertex AI required about 1 h and 45 min to finalize model selection and evaluation. Azure ML Studio took considerably longer, at around 5 h and 38 min, primarily due to its automatic use of ensemble-based algorithms within the free-tier compute configuration.
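To put the classification runtimes on a common scale, the short calculation below converts the approximate durations reported above to minutes and expresses each as a multiple of the fastest run. The variable and function names are illustrative.

```python
def to_minutes(hours, minutes):
    """Convert an (hours, minutes) duration to total minutes."""
    return 60 * hours + minutes


# Approximate classification training durations reported in the text.
classification_runtime_min = {
    "SageMaker Canvas": to_minutes(0, 24),
    "Vertex AI": to_minutes(1, 45),
    "Azure ML Studio": to_minutes(5, 38),
}

fastest = min(classification_runtime_min.values())
slowdown = {
    name: runtime / fastest
    for name, runtime in classification_runtime_min.items()
}
```

On these approximate figures, the Vertex AI run took roughly 4 times as long as the Quick Build run in SageMaker Canvas, and the Azure ML Studio run roughly 14 times as long.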

While all platforms successfully completed the classification workflow without manual configuration, the differences in runtime reflect variations in their AutoML optimization strategies and resource allocation defaults. As in the regression experiments, Azure ML’s longer training duration is associated with its use of complex ensemble models and constrained compute resources in the free tier. For non-technical users, such extended runtimes may pose a usability limitation, especially in iterative experimentation settings where rapid model feedback is desirable.

4.4.4. Usability and Interface Evaluation

Interface design and user experience are critical factors in the effectiveness of LC/NC platforms, particularly in the context of democratizing ML for non-expert users. To assess the usability of the three platforms, qualitative insights from the practical experimentation were organized using the logic of Nielsen’s Usability Heuristics [49] and the structure of the System Usability Scale (SUS) [50]. Although no formal survey was conducted, these frameworks offered a conceptual lens to evaluate intuitiveness, error prevention, transparency, and overall user confidence. Beyond graphical workflows, some tools integrate prompt-based assistants that guide users through natural language, further lowering barriers for non-technical users [29].

Amazon SageMaker Canvas stood out for its highly structured and visual interface, built around a clear workflow of sequential stages (“Select”, “Build”, “Analyze”, “Predict”, and “Deploy”). This guided experience minimized the need for prior ML knowledge. Basic tasks such as dataset preparation and model training with the “Auto Pilot” feature required minimal configuration. The graphical presentation of model metrics and feature importance further enhanced comprehension. However, initial setup friction emerged from the need to configure a SageMaker domain and locate the Canvas interface within the broader AWS environment, an extra step that may discourage first-time users unfamiliar with cloud infrastructures. Importantly, unlike its competitors, SageMaker Canvas does not currently include prompt-based interaction features.

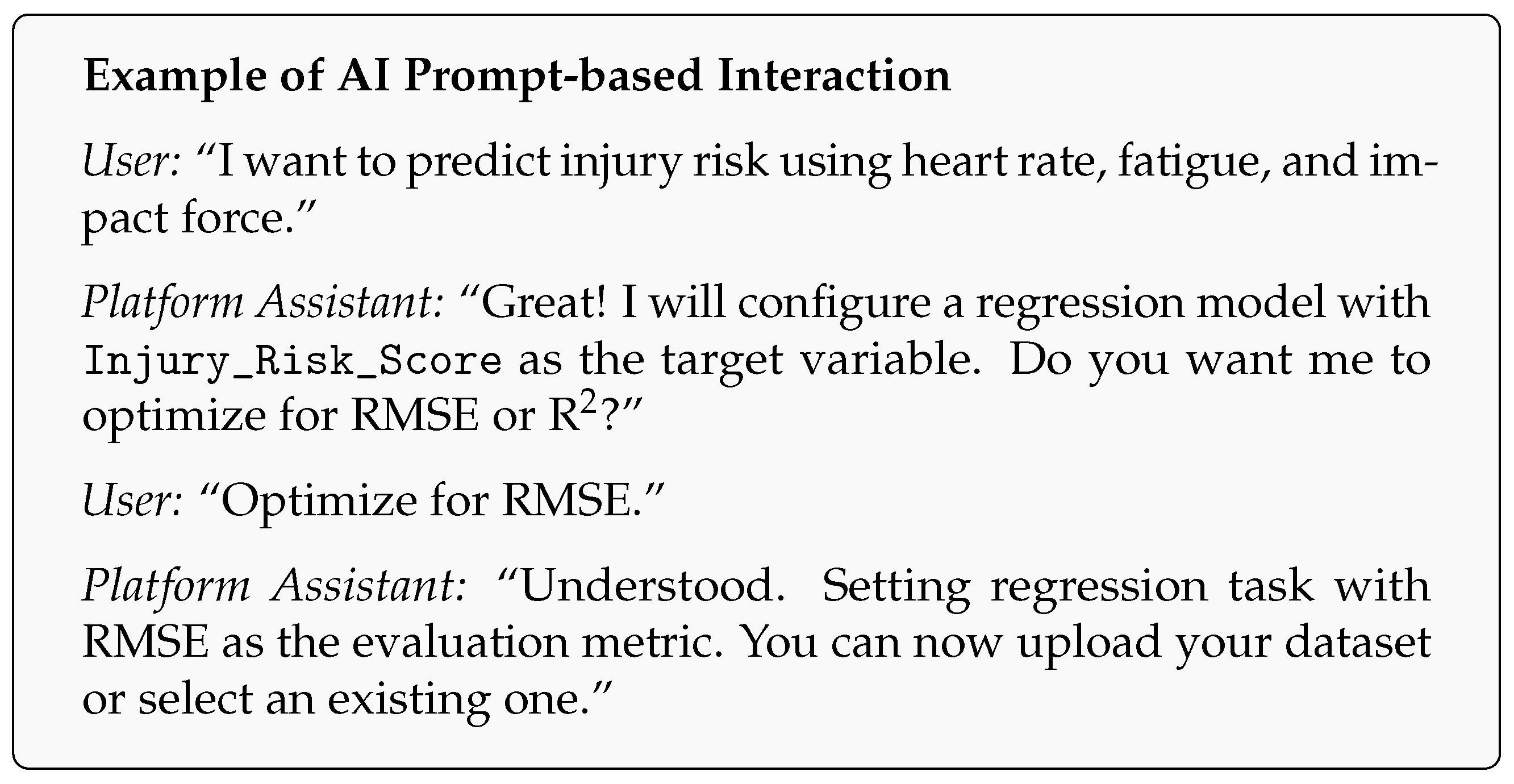

Google Cloud Vertex AI provided a highly seamless user experience. Thanks to its integration within the unified Google Cloud Console, all ML stages, from dataset upload (via Cloud Storage) to model configuration and result analysis, were centralized, reducing cognitive overhead and navigation effort. Vertex AI includes a Prompt Assistant, enabling natural language guidance to generate pipelines, models, or explanations [51]. This feature exemplifies how LC/NC tools are evolving toward hybrid interaction models that blend visual design with natural-language guidance, as illustrated in Figure 7.

Azure ML Studio, while also offering a visual interface, proved more complex. Initial usage required the manual configuration of a workspace and a compute cluster, concepts that can be intimidating for users unfamiliar with cloud resources. Despite this, the AutoML interface itself was guided and well-documented, offering powerful options such as early stopping, metric thresholds, and model explainability settings. While this depth supports customization and control, it may overwhelm beginners, especially when encountering platform-specific errors or warnings related to compute availability or quota limits. In addition, Azure offers Prompt Flow, a framework that allows integrating large language models (LLMs) into ML workflows through natural language instructions, which enhances usability for non-expert users [52].

These observations underline a broader insight: While LC/NC platforms significantly reduce the barriers to model training with AutoML, a basic understanding of ML workflows and platform-specific infrastructure remains necessary for effective use. Concepts such as data splitting, optimization metrics, and resource allocation must be understood, even if superficially, to make informed choices beyond default settings.

Table 10 compares the platforms across key usability dimensions, conceptually inspired by Nielsen’s usability heuristics and the general structure of the System Usability Scale (SUS), which were used as guiding frameworks for organizing qualitative observations from the experimentation.

4.4.5. Cost Management and Free Tier Usability

Economic accessibility is a key factor in the broader adoption of ML tools, particularly among non-expert users, students, or small organizations. While all three platforms analyzed in this study offer free-tier usage or credit-based experimentation, the transparency and ease of cost management varied considerably across services.

Amazon SageMaker Canvas provides an initial free-tier offering; however, practical experimentation revealed a risk of incurring unintended charges. One of the most critical issues was the complexity of understanding and managing the underlying infrastructure, especially for users unfamiliar with AWS resource billing. For instance, in one case, charges continued to accumulate (approximately EUR 4) despite no active training, due to a SageMaker domain remaining provisioned in the background. Identifying and terminating the source of these charges was non-trivial and required navigating multiple billing menus and finding the specific resource responsible. This lack of clear cost visibility increases the potential for unwanted billing, particularly for new users who assume that “not training” equals “not paying”.

Google Cloud Vertex AI, by contrast, provided a much more transparent and controlled cost experience. Although some AutoML operations may be more expensive once the free tier is exceeded, posing potential constraints for budget-conscious projects, Google’s interface consistently warns users of possible charges before initiating operations. Additionally, Google’s free-tier credits were generous in both duration and usage scope. This system offered a greater sense of security, as it ensured that no charges would be incurred without explicit user authorization. This clarity significantly reduces the risk of surprise billing, making the platform particularly user-friendly from a financial standpoint.

Azure ML also performed well in terms of cost transparency and predictability. Like Google, Azure provides a structured free-tier environment and a dedicated program for students, including free credits and pre-configured compute resources. Once the workspace and compute cluster were correctly set up, no unexpected charges were experienced throughout the AutoML workflow. Azure’s user interface also helped reinforce cost awareness by clearly indicating resource usage, contributing to a perception of safety regarding billing management.

Table 11 summarizes the practical cost management experience across platforms, highlighting differences in billing transparency, risk of unexpected charges, and suitability for low-budget usage.

In conclusion, while all platforms offer some form of no-cost experimentation, Google Cloud and Azure stand out in terms of transparency, safety, and overall ease of cost management. Amazon SageMaker Canvas, despite its intuitive interface, was hindered by the complexity of its resource architecture and the opaque nature of its billing system. For non-expert users aiming to avoid unexpected expenses, clarity and control over cost-related processes are just as essential as usability and model performance.

5. Discussion

The results of this study support the hypothesis that LC/NC platforms can empower domain professionals to build effective ML models without deep technical knowledge. All three platforms successfully produced high-performing models on structured, tabular data with minimal user input. The visual guidance and automation of feature engineering, model selection, and training significantly lower the entry barrier to ML. In addition, it is worth noting that the usability assessment was conducted with the participation of domain professionals from the sports field, who do not possess technical expertise in computer science. Their involvement provided valuable insight into the accessibility and clarity of LC/NC AutoML interfaces from the perspective of real end-users.

Nonetheless, predictive accuracy alone does not capture the full picture. In practice, usability and transparency emerged as decisive factors for adoption. Google Cloud Vertex AI offered the most seamless end-to-end experience, while Azure ML Studio provided powerful customization options at the cost of greater complexity in infrastructure setup. Amazon SageMaker Canvas was accessible in terms of workflow design, but its integration within the broader AWS ecosystem introduced additional friction for first-time users.

The main technical challenge for non-experts was not in using the AutoML interface, but in configuring and understanding the surrounding cloud infrastructure. Terms such as “workspace”, “domain”, “hyperparameters”, or “compute cluster” remain opaque to first-time users and may hinder adoption. Similarly, resolving platform-specific errors still requires a degree of technical literacy. However, the transparency of results, through visualizations such as feature importance and clear metric reporting, further contributed to user confidence, underlining the importance of interpretability for non-technical professionals.

Training time and cost management emerged as fundamental aspects in evaluating the suitability of LC/NC platforms. SageMaker Canvas completed training relatively quickly, while Vertex AI required considerably longer runtimes and Azure ML extended even further due to its reliance on ensemble-based approaches. These differences are critical when rapid experimentation or iterative workflows are required. Equally important, cost transparency strongly influenced the user experience. Google Cloud and Azure provided clear safeguards through free-tier credits and usage alerts, whereas AWS introduced hidden charges, such as persistent billing for idle resources, which were not immediately visible and led to unexpected costs during our experiments. For non-expert users, such surprises can represent a significant obstacle to adoption, underscoring the importance of both efficiency and cost clarity in real-world decision-making.

Furthermore, while these platforms abstract away most of the modeling complexity, users are still responsible for framing appropriate questions, preparing clean data, and interpreting results. Familiarity with basic ML concepts such as evaluation metrics, data leakage, and model overfitting is essential for responsible use.

Finally, practical deployment of models in real-world workflows (e.g., regular risk prediction for athletes) would require integration steps beyond the capabilities of current LC/NC interfaces. These include monitoring, versioning, and connecting the model to operational systems, tasks that fall under the domain of machine learning operations (MLOps) and often demand software engineering expertise.

Beyond these practical considerations, it is important to acknowledge broader research directions in machine learning that could further enhance the capabilities of future AutoML systems. Although this study focused exclusively on supervised learning workflows within cloud-based AutoML platforms, it is worth noting that emerging paradigms such as self-supervised and semi-supervised learning are becoming increasingly relevant, particularly in scenarios with limited or imbalanced data [53, 54, 55]. However, these methods are not yet available through the LC/NC interfaces analyzed in this work. Integrating such advanced learning strategies into accessible AutoML workflows represents an interesting avenue for future research, especially regarding data efficiency and model generalization in real-world applications [56].

6. Conclusions

This study set out to explore the viability of LC/NC platforms for democratizing access to ML, with a focus on a real-world use case in the sports domain: injury risk prediction. By conducting a structured practical comparison of three leading LC/NC platforms, Amazon SageMaker Canvas, Google Cloud Vertex AI, and Azure ML Studio, this research assessed their effectiveness in enabling non-expert users to build and interpret regression and classification models with zero technical intervention.

The findings confirm that LC/NC platforms can deliver highly accurate predictive models even when applied by users with no background in programming or data science, and predictive accuracy was consistently strong across all three platforms. However, predictive performance alone does not capture the full picture. Usability and transparency were also decisive: Google Cloud Vertex AI provided the most seamless and intuitive experience thanks to its fully integrated workflow, whereas Azure ML offered powerful functionality but required more technical configuration and a steeper learning curve. Amazon SageMaker Canvas stood out for its visual design and structured workflow, though some initial setup steps created additional friction for non-expert users. Training duration further distinguished the platforms: Amazon SageMaker Canvas completed training in less than 30 min, Vertex AI required approximately 2 h, and Azure ML extended to more than 6 h. Finally, cost management played a crucial role. Google Cloud and Azure ML offered clearer safeguards through credits and explicit usage alerts, while Amazon SageMaker Canvas, despite its accessible interface, raised concerns about hidden charges caused by persistent background resource billing.

Future work could examine user interaction within LC/NC AutoML workflows in greater depth, identifying which stages or interface components generate the most confusion or cognitive load for users without technical knowledge, building on the terminology-related confusion observed in this study. The performance of these platforms could also be analyzed with noisier or more imbalanced datasets, or when deployed for real-time decision-making in operational environments.