1. Introduction

The world population is projected to increase by 2 billion over the next 30 years, according to the United Nations (UN) [1]. According to the latest “State of Food Security and Nutrition in the World” (SOFI) report, published in July 2024 by the Food and Agriculture Organization of the United Nations (FAO), the World Food Programme (WFP), and other UN agencies, an estimated 713–757 million people faced hunger in 2023, approximately 152 million more than in 2019 [2]. Furthermore, about 2.33 billion people currently experience moderate to severe food insecurity. Reports by FAO, the World Health Organization (WHO), and other UN organizations indicate that, if current trends persist, approximately 582 million people will be chronically undernourished in 2030 [3].

Consequently, the need to increase both the quantity and the quality of food is more urgent than ever and calls for appropriate research efforts. Oilseeds, including but not limited to soybean, oil palm, sunflower, and groundnut, can help alleviate malnutrition. These crops are among the most important sources of vegetable fats globally and rank among the top ten crops in terms of total calorie production [4]. Sunflower (Helianthus annuus) is well suited to cultivation in different climatic zones, from temperate to subtropical regions. Sunflower meal contains up to 50% protein, as well as various amino acids, vitamins B and E, minerals, antioxidants, fiber, and lipids, making it an important component of human and farm animal diets [5].

The yield of any crop may be reduced by up to 40% as a consequence of various diseases, pests, and phytopathogens [6]. Conventional pesticides have been associated with many adverse consequences, including loss of biodiversity [7], degradation of soil, water, and air quality, and health hazards that can progress to chronic diseases [8]. Phytosanitary regulations are rules, requirements, and measures implemented to protect crops from diseases, pests, weeds, and other harmful organisms. They are put into practice through the responsible use of agrochemicals and biological protection methods, with the overarching aim of minimizing environmental damage. Rapid implementation of phytosanitary measures requires continuous monitoring of agricultural fields, so that when a disease is detected, appropriate measures can be taken before it spreads across the entire field. Monitoring even a single field is complex and resource-intensive, because the symptoms of different diseases and pests may be similar or may occur together, making accurate diagnosis difficult. Moreover, it is impractical to systematically inspect every plant during the growing season, especially where access to individual fields is difficult.

The use of computer vision and artificial intelligence for automated crop monitoring is a growing trend [9]. Although several reviews have addressed intelligent systems in sunflower cultivation, they often have limitations that prevent a comprehensive overview. For instance, review [10] provides a thorough analysis of hyperspectral imaging; however, this technology is currently inaccessible to farmers, and the review offers only a limited comparison with more practical alternatives. Similarly, [11] offers significant insights into the application of deep learning for disease diagnosis, but its primary focus on Indian oil crops and its omission of contemporary hybrid models limit its global relevance and applicability. These works highlight a clear gap in research: the lack of a globally relevant systematic review that covers the full range of modern data sources (such as UAVs and Sentinel-2) and intelligent processing methods specifically for sunflower cultivation.

The main goal of this systematic review is to investigate methods and algorithms for processing sunflower images and data in order to monitor plant health during the growing season and prevent disease outbreaks. The paper discusses the most relevant and significant algorithms, such as convolutional neural networks (CNN) [12,13], multilayer perceptrons (MLP) [14], random forests (RF) [15,16], and support vector machines (SVM) [13]. Several other machine learning approaches have been employed for sunflower disease classification, including federated learning (FL) [6], the K-Nearest Neighbour (KNN) algorithm, K-Means, and others. For segmentation tasks, the reviewed studies chose models such as YOLO [17], UNet [18], Feature Pyramid Network (FPN) [19], and SVM [13]. This review is the first systematic synthesis to combine the analysis of satellite and UAV data with classification and segmentation methods for disease diagnosis and yield prediction in sunflowers. Unlike existing reviews, this work systematically compares algorithm accuracy and synthesizes findings from 50 recent papers (2020–2025) gathered from various journals and conferences. The review focuses on the practical applicability of the algorithms to sunflower crops in the agricultural sector.

The rest of the paper is organized as follows. Section 2 details the search methods, the databases used, and the selection criteria for identifying suitable papers. Section 3 characterizes the most common sunflower diseases and describes the datasets most frequently used in the reviewed papers, including Sentinel-2 satellite and UAV data. Section 4 presents the metrics used to evaluate the accuracy of the methods in the reviewed works, as well as vegetation indices widely used for vegetation monitoring and plant health assessment. Section 5 provides an overview of existing intelligent processing methods, including deep neural networks, random forests, and other algorithms, which are used for tasks such as classification and processing of sunflower data, disease diagnosis, and yield prediction. Section 6 describes the YOLO neural network architecture, which is regarded as the most prevalent segmentation method, and summarizes studies that employ alternative methods for segmenting sunflower crops and lesions. Section 7 analyzes advanced methods of sunflower data processing and highlights promising directions for further research, including improving model accuracy and adapting models to different agronomic conditions. Section 8 considers the main problems of the reviewed works and the issues of processing and integrating data from different sources; it also puts forward suggestions for future research, including improving model interpretability, using time-series data, and integrating additional factors such as weather and soil characteristics. Finally, Section 9 summarizes the results of the review, the conclusions drawn, and suggestions for future research on sunflower crop monitoring using intelligent systems.

2. Strategy for Finding Studies for Systematic Review and Criteria for Selecting Papers

A meticulous search strategy and rigorous selection criteria were employed to conduct a systematic review of intelligent data processing methods and algorithms applied to sunflower crops, following the PRISMA methodology [20] and the Kitchenham and Charters guidelines [21]. The PRISMA 2020 checklist is available in the Supplementary Materials. This review was retrospectively registered in the Open Science Framework (OSF), registration DOI: https://doi.org/10.17605/OSF.IO/MEVSX. The authors did not use any AI-assisted tools for the preparation of this manuscript. This review systematically analyzes a wide range of studies on sunflower image analysis, selected through clear inclusion criteria based on relevance and publication date. The primary objective is to synthesize research that explores various machine learning techniques, including deep neural networks and other data processing methods, for applications such as mapping and disease detection. The studies cover data captured by cameras, satellites, and UAVs.

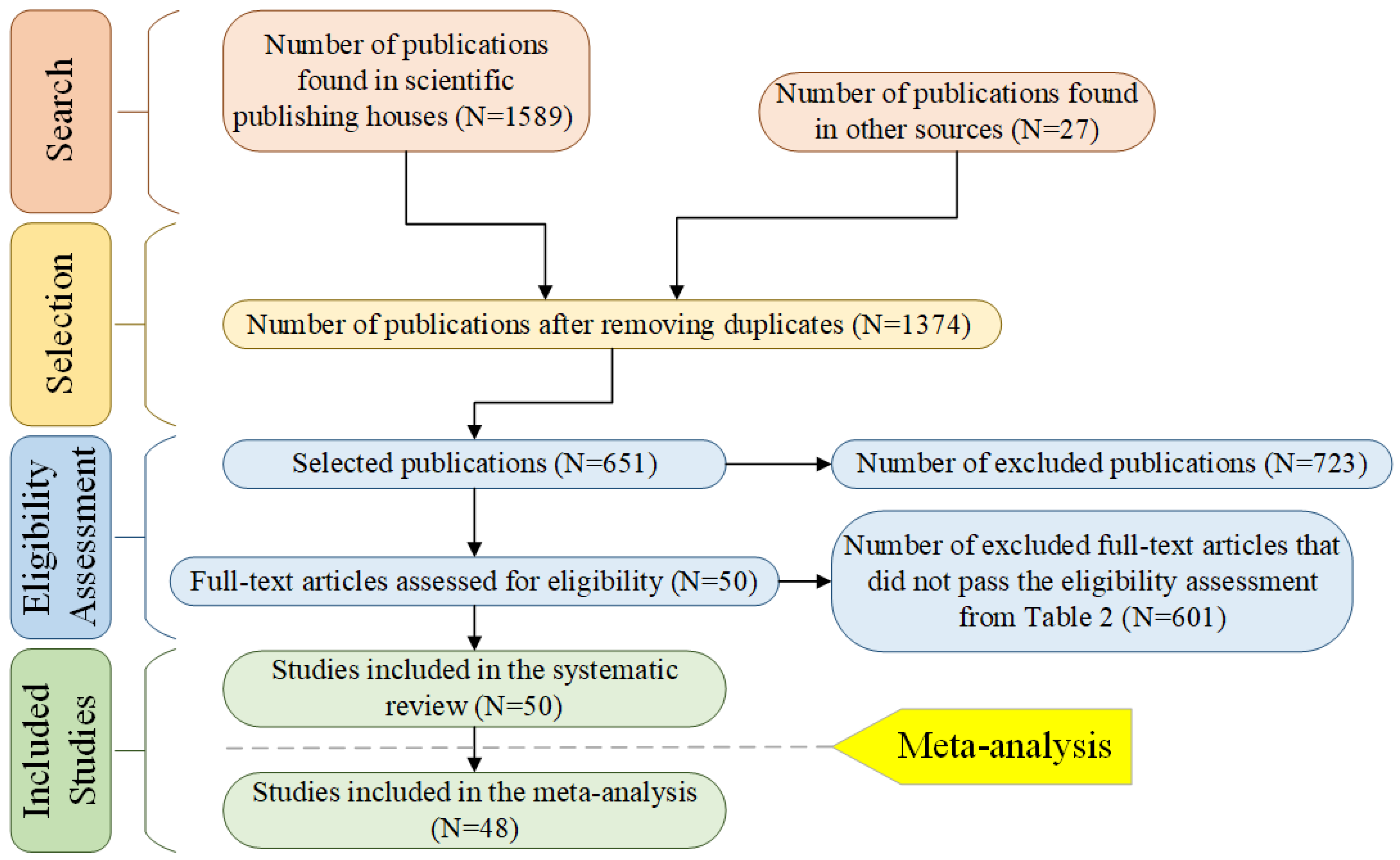

The search for relevant papers was conducted in major scientific databases, including Google Scholar, IEEE Xplore, SpringerLink, Scopus, and Wiley. The review includes studies published between 2020 and February 2025. Table 1 lists the primary keywords used to identify studies on methods and algorithms for the intelligent processing of agricultural data concerning sunflower crops. The search also covered papers on the application of remote sensing data, such as Sentinel-1 and Sentinel-2 imagery, as well as UAV-derived data, for monitoring and diagnosing sunflower diseases.

The selection and assessment of papers for inclusion in the review were based on the criteria outlined in Table 2. A key limitation identified in this review is the narrow scope of existing research. Because the application of machine learning to sunflower crop monitoring and disease detection is a specialized field, only a limited number of studies meet all of the strict selection criteria. Consequently, the systematic review includes some papers that do not fully meet the established criteria, particularly with regard to completeness of method descriptions, data quality, or clarity of result validation. This was necessary to obtain a representative sample of studies, given the restricted number of publications available in the field. Scientific publications were selected following the step-by-step scheme for processing studies devoted to sunflower data processing methods presented in Figure 1.

The search strategy and the paper selection criteria ensured that the most relevant and methodologically sound studies on sunflowers were included in the review. The selected works, together with detailed descriptions of their algorithms and the characteristics of the data used, provide the foundation for further analysis. Most of the papers rely on specific types of data required for detecting and monitoring diseases in sunflower crops; the next section addresses these data types in greater detail.

3. Data Used in Sunflower Crop Detection and Condition Studies

The studies selected in this systematic review use diverse sunflower data for crop analysis, mapping, or disease classification. Most works consider four to five diseases affecting sunflowers, each caused by a different pathogen. Disease classification relies primarily on high-resolution images collected with conventional cameras, while whole-field monitoring uses UAV or satellite data. In addition, multispectral imaging provides further insight for crop analysis, including assessment of green biomass condition, water stress, and the relationship between plant condition and the stage of the growing season.

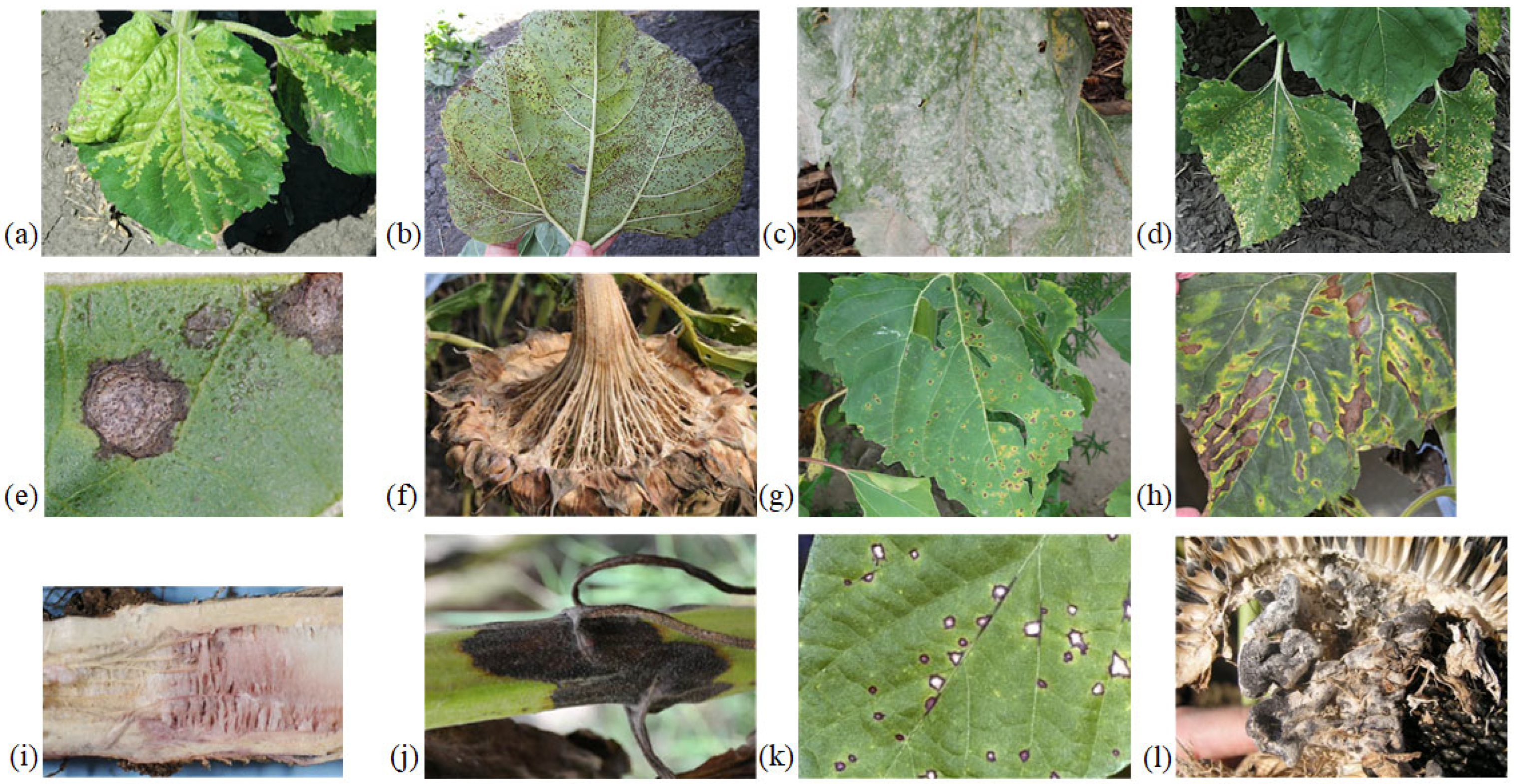

3.1. Common Diseases Affecting Sunflower Crops

The sunflower datasets considered in the reviewed papers typically contain multiple categories, including, but not limited to, healthy plants, downy mildew (false powdery mildew), powdery mildew, rust, Alternaria, Septoria, and Sclerotinia diseases, bacterial spot, Verticillium and Fusarium wilts, and others. Gray rot, a severe sunflower disease caused by the fungus Botrytis cinerea, affects various parts of the plant. Downy mildew (Plasmopara halstedii) affects young plants under high humidity, causing chlorotic spots and white patches on the undersides of leaves, which lead to stunting and deformation [22,23]. Rust (Puccinia helianthi) is characterized by orange pustules on leaves that reduce the photosynthetic rate [24]. Powdery mildew (Golovinomyces cichoracearum) appears as a white powdery coating on leaves and stems and reduces yield quality; it also compromises the plant’s immune system.

Alternaria (

Alternariaster helianthi) causes brown necrotic lesions on leaves during early growth stages [

25]. Septoriosis (

Septoria helianthi) is characterized by the presence of gray or brown spots on leaves, which coalesce as the disease progresses, leading to leaf withering. Sclerotiniosis (

Sclerotinia sclerotiorum) is a fungal pathogen that affects stems, baskets and roots, causing them to rot. The presence of white mycelium with black sclerotia has been observed in the affected areas. The bacterium

Pseudomonas syringae, commonly known as bacterial spotting, produces watery spots that darken and cause leaf desiccation. Verticillium wilt (

Verticillium dahliae) and Fusarium wilt cause root infection and plant wilting [

26,

27]. The fungus

Phoma macdonaldii, the causative agent of Phoma black stem, and Cercosporosis (

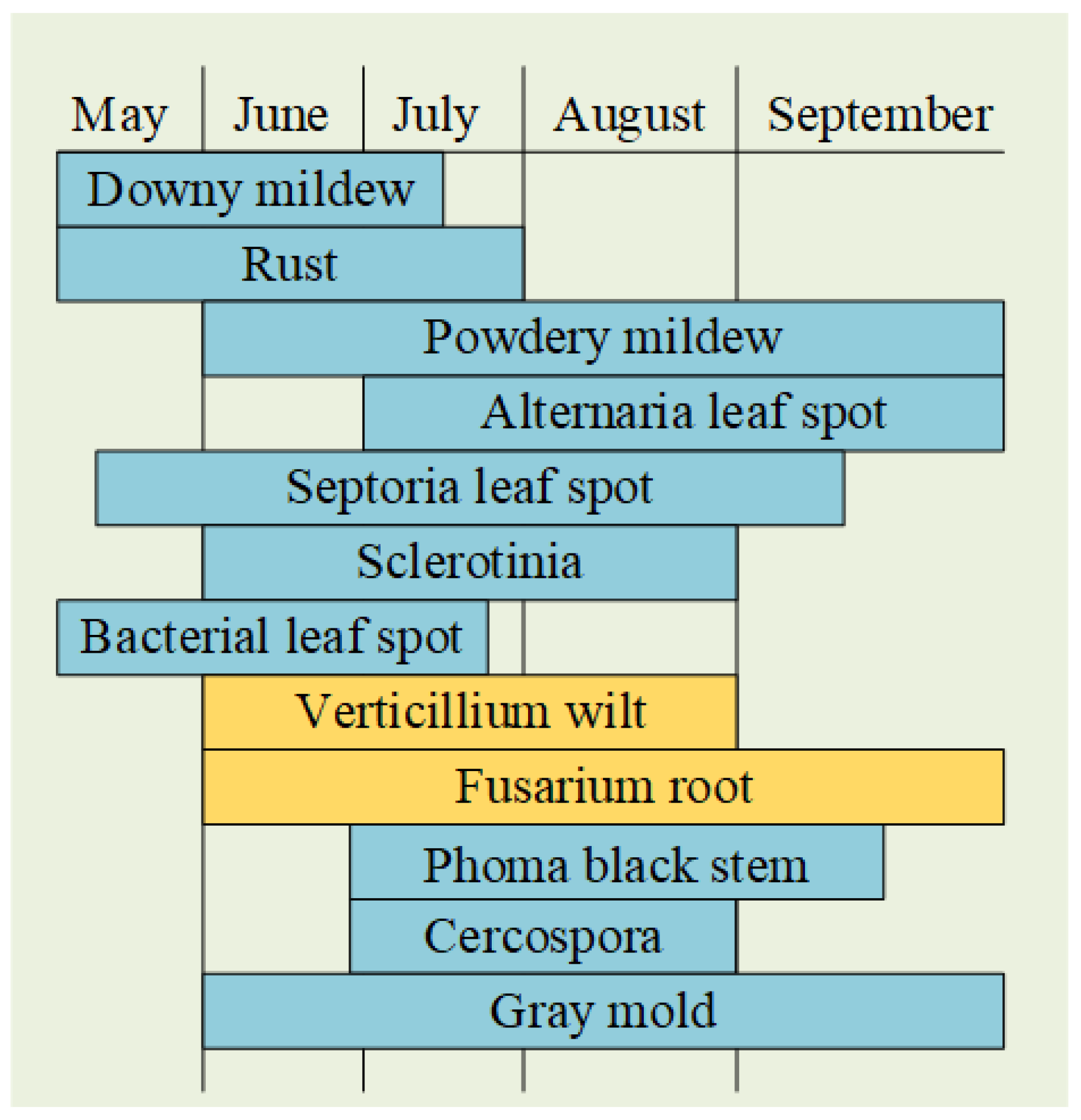

Cercospora helianthin) produce stem lesions and leaf spots, respectively. The conditions and periods of occurrence of diseases affecting sunflowers are presented in

Figure 2. Diseases that are contingent upon warm weather and high humidity are indicated by the color blue, while those diseases that require dry conditions are indicated by the color yellow. As illustrated in

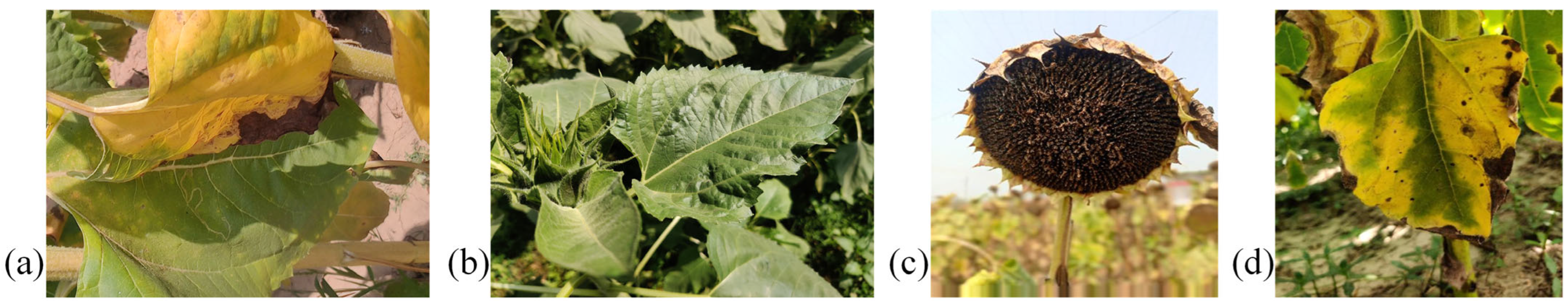

Figure 3, the following examples of plants have been observed to be affected by the diseases considered.

This review does not cover all existing infections and fungi, because the reviewed papers used data for a limited set of diseases. Multiple diseases can affect a field concurrently, which complicates diagnosis. It is therefore essential to monitor crop growth closely and to implement remedial measures promptly to control pests and diseases, preventing further infection and preserving yield.

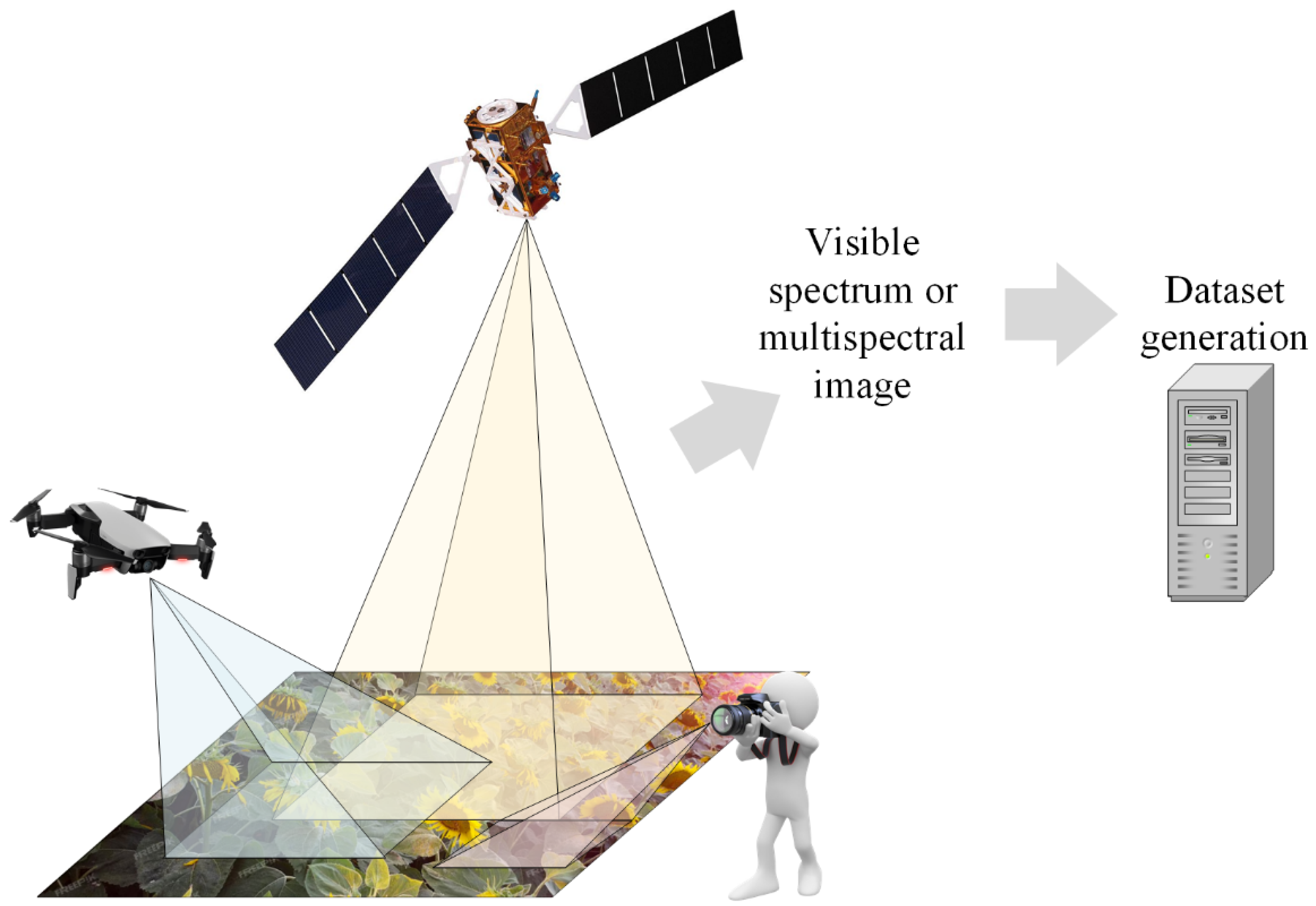

3.2. Sunflower Photo Datasets and Methods of Obtaining Them

There are currently two publicly available sunflower datasets: BARI-Sun and a set of synthetic images generated for segmentation tasks. Most of the reviewed papers use datasets that the authors collected themselves with conventional cameras, UAVs, or satellites (Figure 4). A multispectral camera makes it possible to analyze plant characteristics that are not visible in ordinary photographs, such as growth stage and water stress. Existing sunflower datasets and imaging techniques for various crop monitoring applications are presented below.

3.2.1. Known Datasets

PlantVillage is one of the most widely used datasets for automatic plant disease detection and classification with machine learning and computer vision methods [28]. It contains over 54,000 images in 38 categories, including images of healthy leaves and of diseased crops such as tomatoes, potatoes, and corn. BARI-Sunflower (BARI-Sun) is the most commonly used open dataset for sunflower disease recognition [12,29,30,31,32,33,34,35,36,37]. It was collected in 2021 at the demonstration farm of the Bangladesh Agricultural Research Institute (BARI) in Gazipur, Bangladesh, and consists of 467 original images of healthy and diseased sunflowers divided into four categories: fresh leaf, downy mildew, leaf scars, and gray mold [38]. The authors further augmented the dataset using techniques such as rotation, scaling, and shearing, which added 1668 images. The photographs are close-ups (Figure 5), allowing plant physiologists to compare the structures of different sunflower species. Because the images were taken under heterogeneous weather and lighting conditions, detecting diseases by the naked eye is difficult.
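The rotation, scaling, and shearing augmentations mentioned for BARI-Sun can all be expressed as a single affine transform. The sketch below is a minimal NumPy version, assuming images are arrays of shape H × W or H × W × C; the function name `affine_augment`, its parameters, and the choice of nearest-neighbour sampling with zero fill are illustrative assumptions, not the procedure used by the dataset authors.

```python
import numpy as np


def affine_augment(image: np.ndarray, angle_deg: float = 0.0,
                   scale: float = 1.0, shear: float = 0.0) -> np.ndarray:
    """Rotate, scale and shear an image about its centre (hypothetical helper).

    Uses inverse mapping with nearest-neighbour sampling; output pixels whose
    source location falls outside the image are filled with zeros.
    `scale` must be non-zero, otherwise the transform is not invertible.
    """
    h, w = image.shape[:2]
    theta = np.deg2rad(angle_deg)
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta), np.cos(theta)]])
    shearing = np.array([[1.0, shear],
                         [0.0, 1.0]])
    # Forward map: centred source (x, y) -> centred output (x, y).
    forward = scale * (rotation @ shearing)
    inverse = np.linalg.inv(forward)

    # Output pixel grid in centred coordinates (x = column, y = row).
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    ys, xs = ys.ravel(), xs.ravel()
    coords = np.stack([xs - cx, ys - cy])  # shape 2 x (H*W)

    # Map every output pixel back to the nearest source pixel.
    src = inverse @ coords
    src_x = np.rint(src[0] + cx).astype(int)
    src_y = np.rint(src[1] + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = np.zeros_like(image)
    out[ys[valid], xs[valid]] = image[src_y[valid], src_x[valid]]
    return out


# Usage: derive several augmented copies from one (synthetic) leaf image.
rng = np.random.default_rng(0)
leaf = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
augmented = [affine_augment(leaf, angle_deg=a, scale=s, shear=sh)
             for a, s, sh in [(15, 1.0, 0.0), (0, 1.2, 0.0), (0, 1.0, 0.2)]]
```

Inverse mapping is used so that every output pixel receives a value; a forward mapping would leave holes when the image is enlarged or sheared.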

A synthetic image dataset generated with a conditional generative adversarial network (cGAN) is presented in [18]. The original images were collected by agricultural robots equipped with a four-channel camera that records RGB channels and a near-infrared (NIR) channel. The dataset contains beet and sunflower data categorized into crops, soil, and weeds. The sunflower data were collected in spring over one month, from the seedling stage until the end of the period suitable for chemical treatments. Synthetic multispectral scenes were created by replacing objects (crops and weeds) with generated counterparts while preserving the realism of the scene. This approach increased both the size and the diversity of the training set for segmentation [36].
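The object-replacement step described above can be sketched as mask-based compositing. This is a minimal NumPy illustration, not the method of [18]: it assumes a generator conditioned on the object’s segmentation mask, so that the original label mask stays valid for the synthetic scene. The trained cGAN is replaced here by a random stand-in, and both function names (`composite_generated_object`, `fake_generator`) are hypothetical.

```python
import numpy as np


def composite_generated_object(scene: np.ndarray, object_mask: np.ndarray,
                               generated: np.ndarray) -> np.ndarray:
    """Replace one labelled object in a multichannel scene (hypothetical helper).

    scene:       H x W x C image, e.g. C = 4 for R, G, B and NIR
    object_mask: H x W boolean mask of the crop or weed to be replaced
    generated:   H x W x C output of a mask-conditioned generator (assumed)
    """
    if generated.shape != scene.shape:
        raise ValueError("generated image must match the scene shape")
    if object_mask.shape != scene.shape[:2]:
        raise ValueError("mask must match the scene's height and width")
    synthetic = scene.copy()
    # Only pixels inside the object mask change; soil and other objects are kept.
    synthetic[object_mask] = generated[object_mask]
    return synthetic


def fake_generator(mask: np.ndarray, channels: int = 4) -> np.ndarray:
    """Stand-in for a trained cGAN generator: returns random reflectances."""
    rng = np.random.default_rng(42)
    return rng.uniform(0.0, 1.0, size=mask.shape + (channels,))


# Usage: the existing label mask can be reused for the synthetic scene.
scene = np.zeros((8, 8, 4))
weed_mask = np.zeros((8, 8), dtype=bool)
weed_mask[2:4, 5:7] = True
synthetic_scene = composite_generated_object(scene, weed_mask,
                                             fake_generator(weed_mask))
```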

The BARI-Sun dataset is widely used for disease classification because of its open access, its representation of several disease classes, and its high-quality images. Because the images cover varied shooting conditions and diverse classes, models trained on this dataset are likely to generalize reasonably well and can serve as a starting point for fine-tuning. Generating synthetic scenes or plants enables high-quality, realistic augmentation of any dataset and, combined with other augmentation techniques, can expand the dataset considerably.

3.2.2. Methods of Acquiring Sunflower Images from Agricultural Lands

In recent years, remote sensing methods have become widely used in agriculture. Multispectral and hyperspectral imaging technologies give farmers and agronomists valuable information about crop health, growth dynamics, and potential stress responses [39]. Multispectral imaging enables the analysis of various plant characteristics, opening new possibilities for precision agriculture [40]. Multispectral images record several ranges of the electromagnetic spectrum, typically four to ten channels. The visible (particularly red) and near-infrared bands are most commonly used to assess how plants absorb sunlight and the state of their photosynthetic apparatus. Hyperspectral images capture reflected light across hundreds of narrow spectral bands, allowing detailed analysis of plant chemical composition, pigment levels, moisture, and nutrients.

These data can be acquired in three main ways: from satellite platforms [40], from UAVs [17], and with ground-based spectrometric sensors. Satellite images provide global coverage and allow long-term crop analysis, but their spatial resolution is relatively low, which makes them best suited to monitoring large fields. UAVs equipped with multispectral cameras are a more expensive solution but provide high-resolution images of any part of the field at a flight altitude chosen for the task. Ground-based sensors are placed directly within the crop canopy and provide precise measurements of the spectral characteristics of individual plants and soil. These sensors can also be used to calibrate aerial and satellite data.
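One common way to use ground measurements for calibrating aerial or satellite data is the empirical line method: for each band, a straight line is fitted between the image values of a few reference targets and their reflectance measured on the ground, and the line is then applied to the whole band. The NumPy sketch below assumes such reference measurements exist and that a linear relation is adequate for the sensor; the function names and panel values are hypothetical.

```python
import numpy as np


def fit_empirical_line(image_values, ground_reflectance):
    """Fit reflectance = gain * image_value + offset for one spectral band.

    image_values:       raw values (e.g. digital numbers) of reference targets
                        as they appear in the aerial or satellite image
    ground_reflectance: reflectance of the same targets measured on the ground
    """
    x = np.asarray(image_values, dtype=float)
    y = np.asarray(ground_reflectance, dtype=float)
    gain, offset = np.polyfit(x, y, deg=1)  # least-squares straight line
    return float(gain), float(offset)


def calibrate_band(band, gain, offset):
    """Convert a whole image band to reflectance with the fitted line."""
    return gain * np.asarray(band, dtype=float) + offset


# Usage with three hypothetical reference panels (dark, grey, bright).
dn = [1000, 5000, 12000]
measured = [0.06, 0.26, 0.61]
gain, offset = fit_empirical_line(dn, measured)
band_reflectance = calibrate_band(np.array([[2000, 8000]]), gain, offset)
```

At least two targets with clearly different brightness are needed; more targets make the fit less sensitive to measurement noise on any single panel.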

The spectral data can be used to calculate vegetation indices such as the normalized difference vegetation index (NDVI) [41], enhanced vegetation index (EVI), soil-adjusted vegetation index (SAVI), normalized difference moisture index (NDMI), and others [42], as well as to detect pathogens and pests, optimize irrigation or fertilization, and predict yield from time-series data. Remote sensing methods, including multispectral and hyperspectral imaging, have become an integral part of modern agriculture, providing accurate diagnostics of crop conditions. The variety of data sources allows information to be collected both for individual fields and for entire regions. Vegetation indices calculated from these data are key tools for precision farming and for decision-making based on objective plant health parameters.

3.2.3. Sentinel-2 Data

Sentinel-2 data, provided by the European Space Agency (ESA), are widely used to monitor various agricultural crops, including sunflowers [15,43,44,45,46,47]. These data offer relatively high spatial resolution (10–60 m per pixel, depending on the band) and high temporal resolution, so time-series analysis can track phenological changes, assess crop conditions, and forecast yields. Sentinel-2 multispectral images comprise 13 spectral bands covering the visible, near-infrared, and shortwave infrared ranges, with a revisit time of five days [40]. Satellite data make it possible to determine vegetation status using indices such as NDVI, EVI, and the normalized difference red edge index (NDRE). These indices are used for phenological analysis, monitoring crop growth stages, detecting stress, and mapping crop fields. Time series of satellite data also support crop development modeling, yield prediction, and assessment of climatic impacts for optimizing growing conditions, as well as detection of deviations from the norm caused by pests, diseases, or adverse weather. Given the accessibility and extensive coverage of Sentinel-2 data [15], there is an opportunity to develop powerful tools for efficient agricultural management that minimize losses due to diseases and increase crop yields.

Owing to its spatial and temporal resolution, satellite data is a vital tool for crop monitoring. Its multispectral capabilities allow key vegetation indices to be calculated and the phenological stages of plant growth to be analyzed. Regular updates make it possible to build the time series needed to identify deviations, forecast yields, and assess the impact of climatic factors. The broad availability and coverage of satellite imagery provide the foundation for scalable agricultural management systems that can effectively minimize crop losses and support sustainable development of the industry.
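As a sketch of the time-series use of Sentinel-2 data described above, the code computes NDVI and NDRE from Sentinel-2 bands and flags sudden drops in a field’s mean index. It assumes surface-reflectance arrays scaled to 0–1 and keyed by band name (B04 red, B05 red edge, B08 near-infrared), with cloudy acquisitions stored as NaN. The drop rule (comparison with the median of recent acquisitions), its window and threshold, and all function names are hypothetical choices for illustration, not a method from the reviewed papers.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), returning NaN where the denominator is zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, (a - b) / denom, np.nan)


def sentinel2_indices(bands):
    """bands: dict of reflectance arrays keyed 'B04', 'B05', 'B08' (assumed)."""
    return {
        "NDVI": normalized_difference(bands["B08"], bands["B04"]),
        "NDRE": normalized_difference(bands["B08"], bands["B05"]),
    }


def flag_drops(series, window=3, threshold=0.1):
    """Flag dates whose index is more than `threshold` below the median of
    the cloud-free values among the previous `window` acquisitions."""
    series = np.asarray(series, dtype=float)
    flags = np.zeros(series.shape, dtype=bool)
    for t in range(series.size):
        past = series[max(0, t - window):t]
        past = past[~np.isnan(past)]  # skip cloudy (NaN) acquisitions
        if past.size > 0 and not np.isnan(series[t]):
            flags[t] = series[t] < np.median(past) - threshold
    return flags


# Usage: two pixels of one scene, then a field-mean NDVI time series.
field = sentinel2_indices({"B04": np.array([0.05, 0.10]),
                           "B05": np.array([0.15, 0.20]),
                           "B08": np.array([0.45, 0.40])})
ndvi_series = [0.30, 0.45, 0.60, 0.70, 0.40, 0.72]
drops = flag_drops(ndvi_series)
```

A median over several past dates is less affected by a single noisy or partly cloudy acquisition than a comparison with the previous date alone.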

3.2.4. Unmanned Aerial Vehicles Data

Drones equipped with multispectral and hyperspectral cameras provide highly accurate data on the condition of vegetation on agricultural land, its stress levels, and its need for water and fertilizers [13,17,19,42,48,49]. This data collection method is flexible and timely because surveys can be flown on demand and below the cloud layer, whereas satellite data are updated every five days and may be unusable because of cloud cover or precipitation [13]. In addition, UAV images can reach a resolution of a few centimeters per pixel, which is crucial for analyzing soil heterogeneity, identifying areas of low fertility, detecting pathogenic lesions, and evaluating the effectiveness of agrotechnical measures and phytosanitary interventions. Using UAVs for crop monitoring reduces costs associated with fertilizers, irrigation, and plant protection, and increases yield by enabling timely responses to emerging issues. Combined with satellite monitoring data, UAVs form the foundation for intelligent agricultural management systems that make the process more efficient and sustainable.
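The centimetre-level resolution of UAV imagery follows from the standard ground sampling distance (GSD) relation for a nadir-looking camera: GSD = sensor width × flight altitude / (focal length × image width in pixels). The sketch below evaluates this relation and its inverse; the function names and the camera parameters in the example are hypothetical, and real values should be taken from the sensor’s specification.

```python
def ground_sampling_distance_cm(altitude_m: float, focal_length_mm: float,
                                sensor_width_mm: float, image_width_px: int) -> float:
    """Ground distance covered by one pixel, in centimetres (nadir view)."""
    # mm / mm cancels, so this is metres of ground per pixel.
    gsd_m = sensor_width_mm * altitude_m / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


def altitude_for_gsd_m(target_gsd_cm: float, focal_length_mm: float,
                       sensor_width_mm: float, image_width_px: int) -> float:
    """Flight altitude (m) needed to reach a target ground sampling distance."""
    return (target_gsd_cm / 100.0) * focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical multispectral camera: 4.8 mm sensor width, 5.5 mm lens, 1280 px wide.
gsd = ground_sampling_distance_cm(60.0, 5.5, 4.8, 1280)  # about 4.1 cm per pixel
alt = altitude_for_gsd_m(2.0, 5.5, 4.8, 1280)            # about 29 m for 2 cm per pixel
```

Halving the flight altitude halves the GSD but also quarters the ground area covered by each image, which lengthens the flight needed to cover a field.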

In the studies reviewed, UAV imagery, Sentinel-2 satellite data, and ground-based sensors are most commonly used to detect diseases at various plant growth stages. A significant portion of the research on sunflowers is dedicated to disease recognition, so this systematic review includes descriptions of common pathogens and their visual manifestations, which are crucial for subsequent automated image processing. The analysis of the presented datasets confirms the need for specialized methods of data processing that account for the specifics of agricultural objects. The next section presents statistical methods for evaluating intelligent systems and the application of vegetation indices for crop condition monitoring.

4. Methods for Statistical Evaluation of Intelligent Systems and Vegetation Indices

Processing sunflower data, whether multispectral satellite images or simple photographs of diseased plants, requires techniques such as machine learning algorithms, and several metrics are used to evaluate the accuracy of the resulting models. This section also characterizes several vegetation indices that improve the accuracy of diagnostics and forecasting.

4.1. Accuracy Metrics for Algorithms

Evaluating the accuracy of machine learning methods and neural network algorithms applied to sunflower image processing is crucial for ensuring their reliability and effectiveness in real agricultural conditions. Accuracy metrics not only assess model performance but also allow different approaches to be compared, so that the most suitable method can be selected for a specific task [50]. One of the main metrics is accuracy, the proportion of correctly classified objects among all objects in the test dataset:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where $TP$ is the number of true positives, $TN$ the number of true negatives, $FP$ the number of false positives, and $FN$ the number of false negatives. In multiclass tasks, such as diagnosing various sunflower diseases, accuracy alone may be insufficient. In these cases, more specific metrics such as precision and recall provide more detailed information about the model’s performance for each class. Precision is the proportion of correctly classified objects of a given class among all objects classified as that class:

$$\mathrm{Precision} = \frac{TP}{TP + FP}.$$

For a model classifying diseases, precision indicates how many of the predicted disease cases are actually diseased [51]. Recall, on the other hand, is the proportion of correctly classified objects of a given class among all objects that truly belong to that class:

$$\mathrm{Recall} = \frac{TP}{TP + FN}.$$

This metric is crucial when minimizing missed cases (e.g., undiagnosed diseases) is important [23]. For a more comprehensive evaluation of model performance, the F1-score is often used; it is the harmonic mean of precision and recall and balances the two metrics:

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$

The F1-score is especially useful in tasks with imbalanced classes, where some diseases or plant conditions occur less frequently and the model might tend to ignore them [52]. The $R^2$ coefficient, or coefficient of determination, measures how well the model explains the variation of the dependent variable [44]:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2},$$

where $y_i$ are the actual values, $\hat{y}_i$ the predicted values, $\bar{y}$ the mean of the actual values, and $n$ the number of observations. A value of 1 indicates a perfect fit, and values usually lie between 0 and 1; on test data, $R^2$ becomes negative when the model performs worse than simply predicting the mean. Error metrics such as the Root Mean Squared Error (RMSE) are commonly used for yield forecasting and for assessing plant growth dynamics from time series [45]. RMSE measures the average deviation of forecasts from actual values, which is essential for evaluating long-term forecasts such as yield predictions; the lower the RMSE, the more accurate the model:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}.$$

IoU (Intersection over Union) [48] is used in segmentation and object detection tasks and measures the overlap between the predicted region and the true (labeled) region:

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|},$$

where $A$ is the predicted region and $B$ is the true region. Mean Average Precision at an IoU threshold of 0.5 (mAP@0.5) is the average precision across all classes when a detection is counted as correct if its IoU with the ground truth is 0.5 or more. This metric is used to evaluate the quality of object detection models [53]. Average Precision ($AP$) is first calculated for each class and then averaged:

$$\mathrm{mAP@0.5} = \frac{1}{N} \sum_{i=1}^{N} AP_i,$$

where $N$ is the number of classes. Selecting and using appropriate accuracy metrics is therefore key to developing reliable and effective monitoring and diagnostic systems for agriculture, especially when working with large datasets from various sources, such as satellites, UAVs, and ground sensors.
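The accuracy metrics of this section translate directly into code. The NumPy sketch below computes overall accuracy, per-class precision, recall, and F1 (one class against the rest), $R^2$, RMSE, IoU for binary masks, and mAP as a plain mean of per-class AP values; computing AP itself requires matching detections to ground truth and is omitted. The function names, the convention that the IoU of two empty masks is 1.0, and the toy disease labels are illustrative assumptions.

```python
import numpy as np


def accuracy(y_true, y_pred):
    """Share of correctly classified samples ((TP + TN) / all in the binary case)."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall and F1 for one class, treated as one-vs-rest."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == positive) & (y_true == positive)))
    fp = int(np.sum((y_pred == positive) & (y_true != positive)))
    fn = int(np.sum((y_pred != positive) & (y_true == positive)))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def r2_score(y, y_hat):
    """Coefficient of determination; negative if worse than predicting the mean."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def rmse(y, y_hat):
    """Root mean squared error between actual and predicted values."""
    diff = np.asarray(y, dtype=float) - np.asarray(y_hat, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def iou(pred_mask, true_mask):
    """Intersection over union of two boolean masks (1.0 if both are empty)."""
    a = np.asarray(pred_mask, dtype=bool)
    b = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 1.0


def mean_average_precision(ap_per_class):
    """mAP as the mean of per-class AP values computed at one IoU threshold."""
    return float(np.mean(ap_per_class))


# Usage: per-class metrics for a small multiclass disease prediction.
labels = ["rust", "healthy", "rust", "mildew", "healthy", "rust"]
preds = ["rust", "rust", "rust", "mildew", "healthy", "healthy"]
per_class = {c: precision_recall_f1(labels, preds, c) for c in sorted(set(labels))}
```

Reporting the per-class values alongside overall accuracy exposes classes that a model handles poorly even when its overall accuracy looks acceptable.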

4.2. Vegetation Indices

Vegetation indices are numerical indicators calculated from the reflectance of vegetation in various spectral bands, such as blue, green, red, red edge, and near-infrared. They are used to assess vegetation condition, productivity, moisture content, stress levels, and other agronomically significant parameters. The Normalized Difference Vegetation Index (NDVI) is one of the most widely used indices for evaluating vegetation density and health [41]. It is based on the difference in reflectance between the near-infrared (NIR) and red (Red) bands: chlorophyll in leaves strongly absorbs red light, while the leaf structure reflects near-infrared light. NDVI is calculated as:

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + \mathrm{Red}},$$

where $\mathrm{NIR}$ is the reflectance in the near-infrared band (≈780–900 nm) and $\mathrm{Red}$ is the reflectance in the red band (≈630–680 nm). NDVI ranges from −1 to 1. Values from −1 to 0 typically correspond to water bodies, clouds, or snow, which obscure the vegetation signal. Values between 0 and 0.2 indicate very low vegetation or bare soil, values between 0.2 and 0.5 represent sparse vegetation, and values above 0.5 indicate dense, healthy vegetation. Although NDVI is widely used because it is simple to calculate and interpret, it is sensitive to soil background and to atmospheric conditions such as haze, clouds, and precipitation.
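The NDVI formula and the value ranges given for it can be sketched as follows. This NumPy illustration assumes the NIR and red bands are reflectance arrays of equal shape; the class labels mirror the text’s ranges, and assigning the boundary values 0.2 and 0.5 to the higher class is an arbitrary choice of this sketch. The function and constant names are hypothetical.

```python
import numpy as np

NDVI_CLASSES = ("water, cloud or snow", "bare soil or very low vegetation",
                "sparse vegetation", "dense vegetation")


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); NaN where both bands are zero."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, (nir - red) / denom, np.nan)


def classify_ndvi(values):
    """Map NDVI values to the ranges <0, 0-0.2, 0.2-0.5 and >0.5."""
    values = np.asarray(values, dtype=float).ravel()
    # np.digitize returns 0 for v < 0, 1 for 0 <= v < 0.2, 2 for 0.2 <= v < 0.5,
    # and 3 for v >= 0.5; NaN would also land in bin 3, so it is handled first.
    idx = np.digitize(values, bins=[0.0, 0.2, 0.5])
    return ["no data" if np.isnan(v) else NDVI_CLASSES[i]
            for v, i in zip(values, idx)]


# Usage: four pixels (water, bare soil, sparse canopy, dense canopy).
nir = np.array([0.02, 0.25, 0.30, 0.50])
red = np.array([0.05, 0.20, 0.12, 0.05])
pixel_classes = classify_ndvi(ndvi(nir, red))
```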

The Enhanced Vegetation Index (EVI) was developed as an alternative to NDVI that corrects for atmospheric effects and soil background noise [42]. It includes aerosol correction coefficients and coefficients that normalize the sensitivity of the spectral channels:

$$\mathrm{EVI} = G \cdot \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + C_1 \cdot \mathrm{Red} - C_2 \cdot \mathrm{Blue} + L},$$

where $\mathrm{Blue}$ is the reflectance in the blue channel (≈450–490 nm), $G = 2.5$ is the contrast enhancement (gain) factor, $C_1 = 6$ and $C_2 = 7.5$ are aerosol correction coefficients, and $L = 0.5$ is the background correction factor. The coefficient $G$ amplifies the difference between the $\mathrm{NIR}$ and $\mathrm{Red}$ reflectances, making the index more sensitive to changes in vegetation cover. The value $G = 2.5$ was determined empirically in multiple studies to provide good contrast between vegetation and background objects such as soil; for dense forests it may be increased to 3, while for sparse vegetation or areas with high atmospheric noise it may be decreased. The coefficients $C_1$ and $C_2$ compensate for the influence of atmospheric particles (aerosols), using the blue channel to correct the red channel. The background coefficient $L$ reduces the influence of soil and the errors caused by differences in reflectance between soil types. $L$ can vary from 0 to 1, where 1 corresponds to sparse vegetation, deserts, and semi-deserts, and 0 corresponds to dense vegetation such as forests. In agricultural landscapes, vegetation density varies with the growth stage, so $L$ can be set to 0.5, the value typical of grasslands and pastures, and further adjusted after analyzing vegetation cover for a more accurate EVI calculation. The standard MODIS EVI product uses $L = 1$.
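A sketch of the EVI calculation, assuming blue, red, and NIR surface-reflectance arrays of equal shape in the range 0–1. The coefficients are exposed as parameters with defaults taken from the values discussed in the text (G = 2.5, C1 = 6, C2 = 7.5, L = 0.5), so that they can be changed, for example to L = 1 as in the MODIS EVI product; the function name `evi` is hypothetical.

```python
import numpy as np


def evi(nir, red, blue, G=2.5, C1=6.0, C2=7.5, L=0.5):
    """EVI = G * (NIR - Red) / (NIR + C1*Red - C2*Blue + L).

    Defaults follow the coefficients given in the text; the MODIS EVI product
    uses L = 1. Returns NaN where the denominator is zero.
    """
    nir, red, blue = (np.asarray(b, dtype=float) for b in (nir, red, blue))
    denom = nir + C1 * red - C2 * blue + L
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom != 0, G * (nir - red) / denom, np.nan)


# Usage: the same canopy pixel with two background settings.
pixel = dict(nir=0.50, red=0.10, blue=0.05)
evi_text = evi(**pixel)          # L = 0.5, as discussed in the text
evi_modis = evi(**pixel, L=1.0)  # MODIS coefficient
```

A larger L increases the denominator and therefore lowers EVI for the same pixel, which is why the background term should be chosen consistently across a time series.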

To correct for soil influence on spectral reflectance in areas with sparse vegetation, the Soil Adjusted Vegetation Index (SAVI) is used:

$$SAVI = \frac{(NIR - Red)(1 + L)}{NIR + Red + L}$$

where $L$ is the soil adjustment factor, commonly set to 0.5. SAVI helps correct for the soil background in dry areas, but it is less useful in regions with high vegetation density, where the soil contributes little to the measured reflectance. The Normalized Difference Moisture Index (NDMI) is used to assess the moisture content of vegetation and soil [42]. It is calculated from the reflectance in the near-infrared and shortwave infrared ($SWIR$) bands:

$$NDMI = \frac{NIR - SWIR}{NIR + SWIR}$$

where $SWIR$ is the shortwave infrared channel (≈1550–1750 nm). High NDMI values indicate good vegetation hydration, while low values indicate water stress or drought. NDMI is widely used for monitoring droughts, assessing crop conditions, and predicting fires and soil degradation zones. A limitation of NDMI is that it requires $SWIR$ data, which not all sensors provide, making the index less accessible. In general, the choice of index depends on the task at hand: a general assessment of vegetation, an estimate of vegetation density, or an analysis of water balance. Combining vegetation indices with machine learning methods and geographic information systems opens new possibilities for precision agriculture and ecosystem monitoring.
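Both indices, together with NDVI for comparison, follow directly from their standard definitions, SAVI = (NIR - Red)(1 + L)/(NIR + Red + L) and NDMI = (NIR - SWIR)/(NIR + SWIR). The sketch below is illustrative only: the function names and pixel values are hypothetical, and inputs are assumed to be reflectances in [0, 1]. Setting L = 0 reduces SAVI to NDVI, which is a convenient sanity check.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index, included for comparison."""
    return (nir - red) / (nir + red)


def savi(nir, red, l=0.5):
    """Soil Adjusted Vegetation Index: (NIR - Red) * (1 + L) / (NIR + Red + L)."""
    return (nir - red) * (1 + l) / (nir + red + l)


def ndmi(nir, swir):
    """Normalized Difference Moisture Index: (NIR - SWIR) / (NIR + SWIR)."""
    total = nir + swir
    if total == 0:
        raise ValueError("NIR + SWIR is zero")
    return (nir - swir) / total


# Hypothetical reflectances for a sparsely vegetated pixel on bright soil.
nir, red, swir = 0.30, 0.20, 0.28
print(f"NDVI={ndvi(nir, red):.3f}  SAVI={savi(nir, red):.3f}  NDMI={ndmi(nir, swir):.3f}")
```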

Vegetation indices are among the most important tools for evaluating vegetation condition and monitoring crop health. They allow a thorough examination of crop condition, the detection of stress, the identification of diseases, and the estimation of yield. Combining machine learning with vegetation indices is a foundation for intelligent monitoring systems that can effectively support agricultural decision-making. An integrated approach that couples machine learning models with vegetation index calculation can assess current plant health, diagnose diseases, and predict yield more accurately. Understanding and skillfully applying these methods and indices is therefore essential for building robust intelligent systems in agriculture. Further research should prioritize the integration of heterogeneous data sources and the refinement of processing algorithms to improve the precision of monitoring and forecasting under real field conditions. The next section presents the most common methods of sunflower data processing, including machine learning, clustering, and segmentation methods.

5. Methods of Machine Learning for Sunflower Image Processing

Artificial intelligence technologies enable efficient disease recognition, assessment of the extent of plant damage, and yield prediction. Machine learning helps automate image classification, segmentation, anomaly detection, and other processing of data obtained from remote sensing platforms (satellites, UAVs, ground sensors). Algorithms such as CNN, SVM, and RF are widely used in this context because they handle large data volumes and complex classification and prediction tasks efficiently.

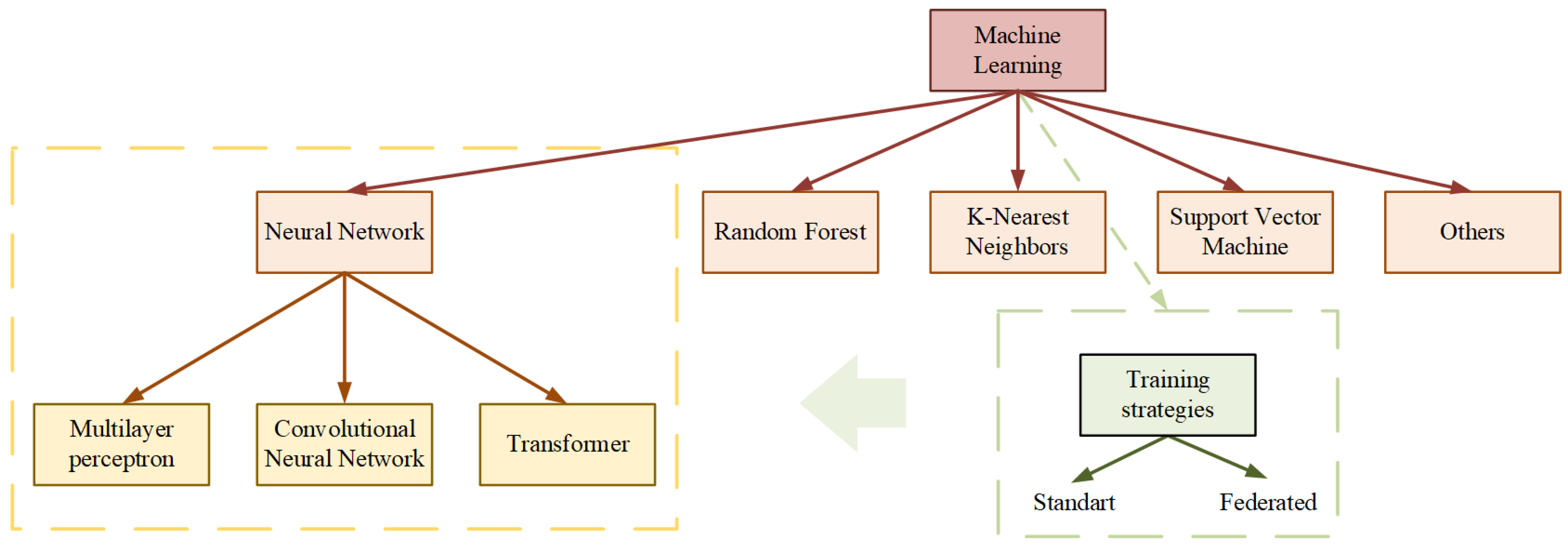

Figure 6 illustrates the schematic of machine learning methods used for sunflower image processing. This section considers the most effective machine learning methods employed for sunflower image processing and their applicability for agricultural monitoring.

5.1. Convolutional Neural Networks

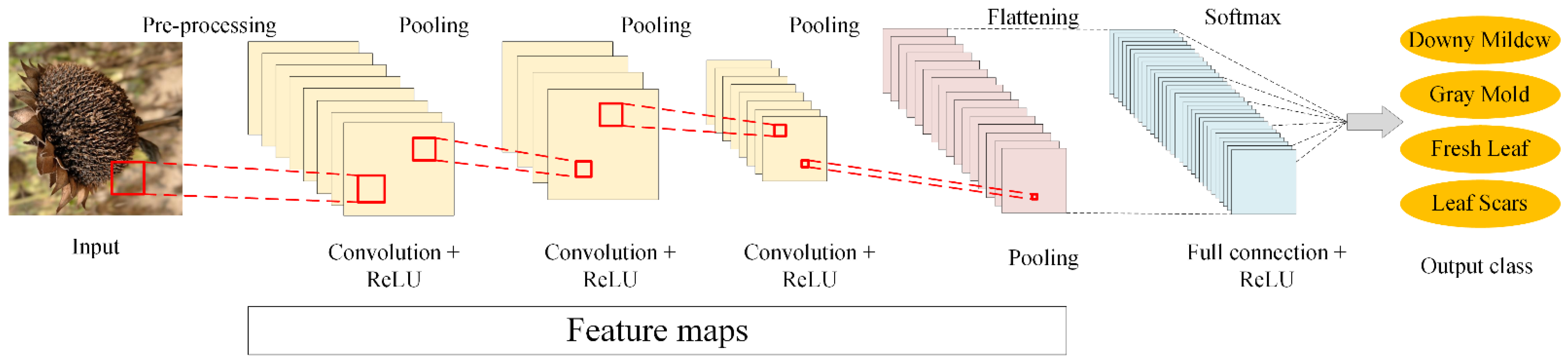

CNNs remain among the most popular deep learning models for processing images of crop diseases. A CNN architecture begins with an input layer that accepts a multidimensional array (the image) (Figure 7). Convolutional layers then extract local features from this image using learned filters. After each convolution, an activation function, typically ReLU (Rectified Linear Unit), is applied to add nonlinearity and help the network learn more complex dependencies. Convolutional layers are usually followed by a pooling (subsampling) layer, which reduces the spatial dimensions of the feature map, lowering computational costs and helping to limit overfitting. These components may be repeated several times, allowing the network to extract increasingly complex, abstract features. Next, a flattening step transforms the multidimensional feature tensor into a one-dimensional vector. A fully connected layer then processes this vector and outputs the classification or prediction result. The activation function of the output layer depends on the task; for multi-class classification, Softmax is used.
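To make the layer sequence concrete, here is a minimal, dependency-free forward pass for a single-channel image and one filter. It is a teaching sketch under simplifying assumptions (one channel, one hand-set filter instead of learned ones, no training, no batching) and is not any of the architectures reviewed below; the toy image, filter, and dense weights are hypothetical. Practical models are built and trained with frameworks such as PyTorch or TensorFlow.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as in most deep learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def flatten(fmap):
    return [v for row in fmap for v in row]


def dense(vector, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]


def softmax(logits):
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image, kernel, weights, biases):
    """Input -> convolution -> ReLU -> max pooling -> flatten -> dense -> Softmax."""
    features = flatten(max_pool(relu(conv2d(image, kernel))))
    return softmax(dense(features, weights, biases))


# Hypothetical 6x6 image containing a vertical edge and a 3x3 edge-detecting filter.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1] for _ in range(3)]
weights = [[0.5] * 4, [-0.5] * 4]  # 4 pooled features -> 2 classes
biases = [0.0, 0.0]
print(forward(image, kernel, weights, biases))
```

The 6×6 input shrinks to a 4×4 feature map after the 3×3 convolution and to 2×2 after pooling, so the dense layer receives four features; deeper networks repeat the convolution and pooling stages before flattening.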

In [12], the authors proposed a hybrid model combining VGG19 with a simple CNN (a convolutional layer, max and average pooling layers, and a fully connected layer) trained with transfer learning, which achieved 93.00% accuracy on the BARI dataset. Other models, such as Xception, Inception, MobileNet, ResNet, and DenseNet, were also evaluated in combination with the CNN but performed worse. The authors of [14] assessed sunflower seed yield using MLP, radial basis function networks, artificial neural networks (ANN), CNN, and linear regression. The CNN gave the best result, with an R² of 0.914, MAPE of 4.950, MAE of 0.163, and RMSE of 1.699. The authors of [54] used ResNet152 to improve the accuracy of pathogen detection in sunflower fields. Their dataset covered rust, downy mildew, powdery mildew, and alternaria leaf blight, with 405 photos per category, and ResNet152 reached a test accuracy of 98.02% on the original dataset. The study [29] presents a comparative analysis of 38 neural network models trained with transfer learning on datasets of cauliflower and sunflower diseases. Some models achieved 100% recognition accuracy on each of the datasets, which raises questions about the reliability of the results. The EfficientNet_V2B2 model achieved a relatively high accuracy of 96.83%. Similarly, [30] demonstrated the advantage of EfficientNet over other deep models, reaching an accuracy of 97.90% with k-fold cross-validation on the BARI-Sunflower dataset.

Other works, such as [39], combined deep fuzzy dimensionality reduction, CNNs, and graph networks with an attention module for the multispectral visualization, analysis, and classification of hyperspectral images. In [36], a CNN architecture called LeafNet was proposed and evaluated on several datasets, including [38]. Based on the popular VGG19 architecture augmented with an attention module, this model achieved 96.00% accuracy in detecting sunflower diseases. The authors of [55] proposed a seven-layer CNN model for classifying sunflower diseases such as alternaria leaf blight, downy mildew, phoma blight, and verticillium wilt. After feature extraction by the CNN, an SVM classified leaves into two categories, healthy and diseased, with an overall disease recognition accuracy of 98.00%. Other studies, such as [23], focused on detecting downy mildew and the pests causing it, achieving a detection accuracy of 98.16% using a CNN. Reference [56] introduced an ensemble of VGG-16 and MobileNet to detect sunflower diseases such as alternaria leaf blight, downy mildew, phoma blight, and verticillium wilt, which achieved 89.20% accuracy; the authors extended this research in [57]. The authors of [33,34] also used a classic CNN for training and classification, with slight augmentation of the dataset [38], achieving a recognition accuracy of 97.88%. Image preprocessing techniques such as histogram equalization, contrast stretching, gamma correction, Gaussian noise addition, and Gaussian filtering improved the accuracy of sunflower disease recognition to 98.70% [34]. In [58], ResNet152 was used to classify four sunflower leaf diseases with similar symptoms, achieving an accuracy of 98.80%.

CNNs are among the most efficient and widely used models for image processing in agriculture. They perform well in classification and segmentation tasks by extracting informative features from images. For sunflowers, CNNs are used to diagnose diseases such as powdery mildew and rust and to predict yield. Their advantages include high image classification accuracy, the ability to handle large amounts of data and generalize well, and the ability to extract complex abstract features. Their main disadvantages are the need for large volumes of labeled data and the high computational requirements of deep architectures.

5.2. Random Forest Algorithm

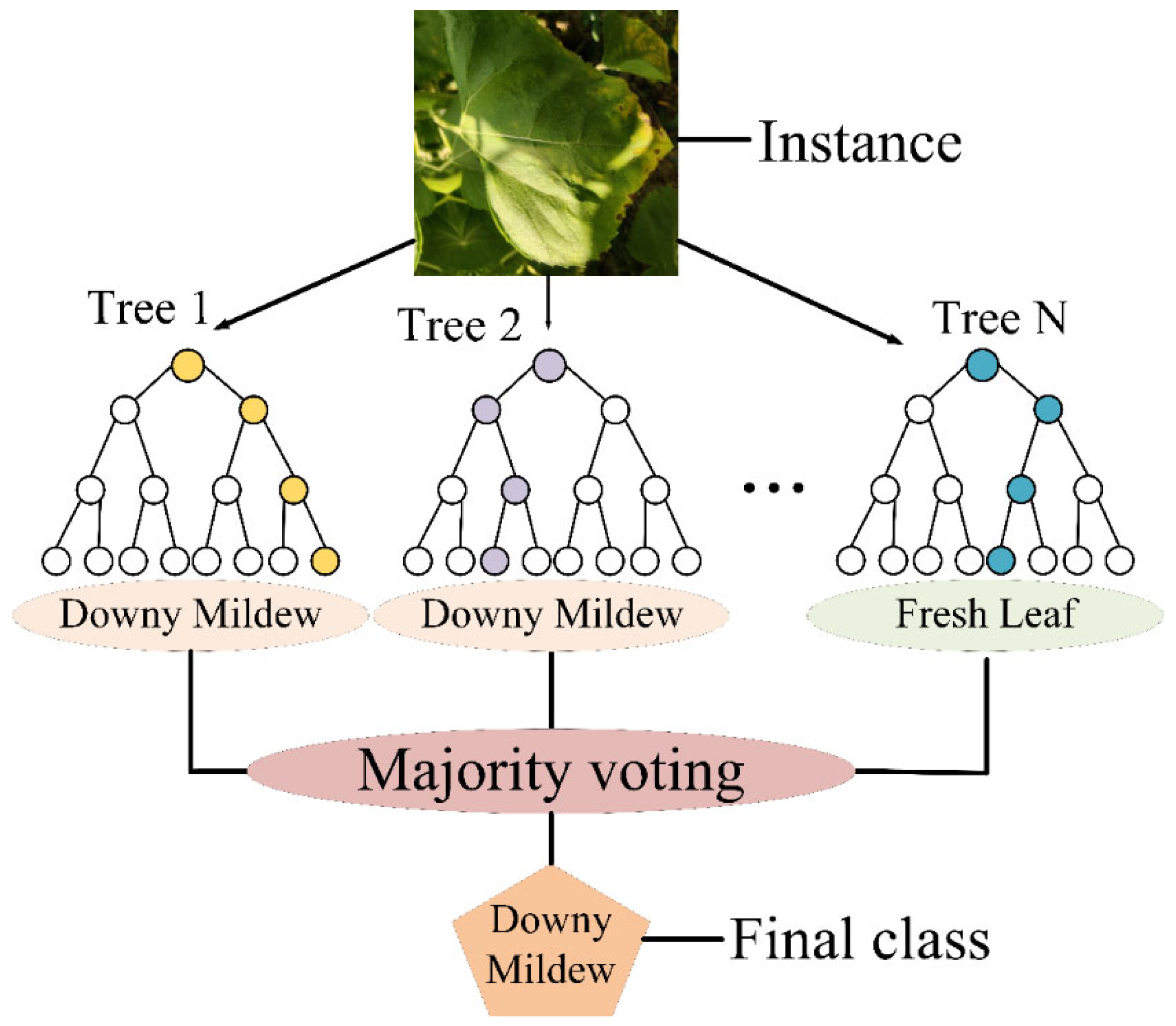

The RF classifier is very popular for classification tasks in precision agriculture. It is an ensemble learning method that aggregates multiple decision trees to improve prediction accuracy and reduce model variance. The algorithm was proposed in 2001 [59] and has since become one of the most popular tools in machine learning. It constructs multiple decision trees, each trained on a random subsample of the training data drawn with replacement (bootstrapping) (Figure 8). At each node split, only a random subset of features is considered, which creates diversity between the trees and reduces the correlation between them. This reduces overfitting and improves the ability of the ensemble to generalize. For classification, the trees vote; for regression, their predictions are averaged. The algorithm can process large datasets with many features and is relatively robust to noise and, in some implementations, to missing values, but the resulting ensemble is difficult to interpret and can be computationally expensive to train on large datasets.
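The three ingredients described above (bootstrap samples, a random feature subset at each split, and majority voting) can be shown in a compact pure-Python sketch. It is illustrative only: the Gini split criterion, the depth limit, all function names, and the toy [NDVI, NDMI] plot features are assumptions of this sketch rather than details of the cited studies, and practical work would use a library implementation such as scikit-learn's `RandomForestClassifier`.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def build_tree(X, y, n_features, max_depth, rng):
    """Grow a classification tree; every split considers a random subset of features.

    A leaf is a class label (a string); an internal node is (feature, threshold, left, right).
    """
    majority = Counter(y).most_common(1)[0][0]
    if max_depth == 0 or len(set(y)) == 1:
        return majority
    best = None
    for f in rng.sample(range(len(X[0])), n_features):  # random feature subset
        for t in sorted(set(row[f] for row in X)):
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if not left or not right:
                continue
            # Weighted Gini impurity of the two child nodes.
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right])) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:  # no valid split among the sampled features
        return majority
    _, f, t, left, right = best
    return (f, t,
            build_tree([X[i] for i in left], [y[i] for i in left], n_features, max_depth - 1, rng),
            build_tree([X[i] for i in right], [y[i] for i in right], n_features, max_depth - 1, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def random_forest(X, y, n_trees=25, n_features=1, max_depth=3, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 n_features, max_depth, rng))
    return forest


def predict_forest(forest, x):
    """Majority vote over all trees."""
    return Counter(predict_tree(tree, x) for tree in forest).most_common(1)[0][0]


# Hypothetical plot features: [NDVI, NDMI].
X = [[0.80, 0.40], [0.75, 0.35], [0.70, 0.38], [0.30, 0.10], [0.25, 0.12], [0.35, 0.05]]
y = ["healthy"] * 3 + ["diseased"] * 3
forest = random_forest(X, y)
print(predict_forest(forest, [0.78, 0.37]), predict_forest(forest, [0.20, 0.03]))
```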

Sunflower crop mapping with the RF algorithm has reached a classification accuracy of 80% in Europe and the USA. This result relies on a consistent predictor dataset derived from monthly and seasonal composites of Sentinel-1 data. Automatic mapping can also be performed with a wavelet-inspired neural network, which outperformed traditional RF and SVM with an overall accuracy of 97.89% [16]. The authors also applied factor analysis to reduce the dimensionality of the hyperspectral images, and the wavelet transform allowed the extraction of important features which, together with a CNN spectral attention mechanism, gave a high result in the mapping automation task. A combination of CNN and RF achieved an overall accuracy of 81.54% for seven sunflower disease classes, including downy mildew, rust, verticillium wilt, phoma black stem, alternaria leaf spot, sclerotinia stem rot, and phomopsis stem canker [16]. The proposed hybrid model was trained with back-propagation and gradient descent and consisted of two convolutional layers, max-pooling, and 14 decision trees. A sunflower disease dataset of five classes (including gray mold, downy mildew, leaf scar, and leaf rust) collected from different sources was segmented using the k-means algorithm [60], which performed poorly at extracting disease features. Using Sentinel-1 data, the work [43] focuses on sunflower area mapping and reports a 5% decrease in planted area. Its RF model, based on multi-temporal metrics of satellite data, takes into account phenological characteristics of sunflowers, such as the directional behavior of the plants during flowering. The paper [41] investigates the performance of different machine learning algorithms and datasets for mapping sunflower, soybean, and maize using Sentinel-1 and Sentinel-2 satellite data. RF, MLP, and XGBoost classifiers were compared with InceptionTime, a classifier developed specifically for time series analysis, which performed best with a maximum accuracy of 91.60%.

RF is an ensemble method that uses multiple decision trees for classification and prediction. It is a powerful tool owing to its high accuracy, robustness to overfitting, and ability to handle noisy data. However, RF can be less accurate than more sophisticated neural networks when dealing with large numbers of classes or high-dimensional data. In addition, training a random forest on large datasets can require significant computational resources.

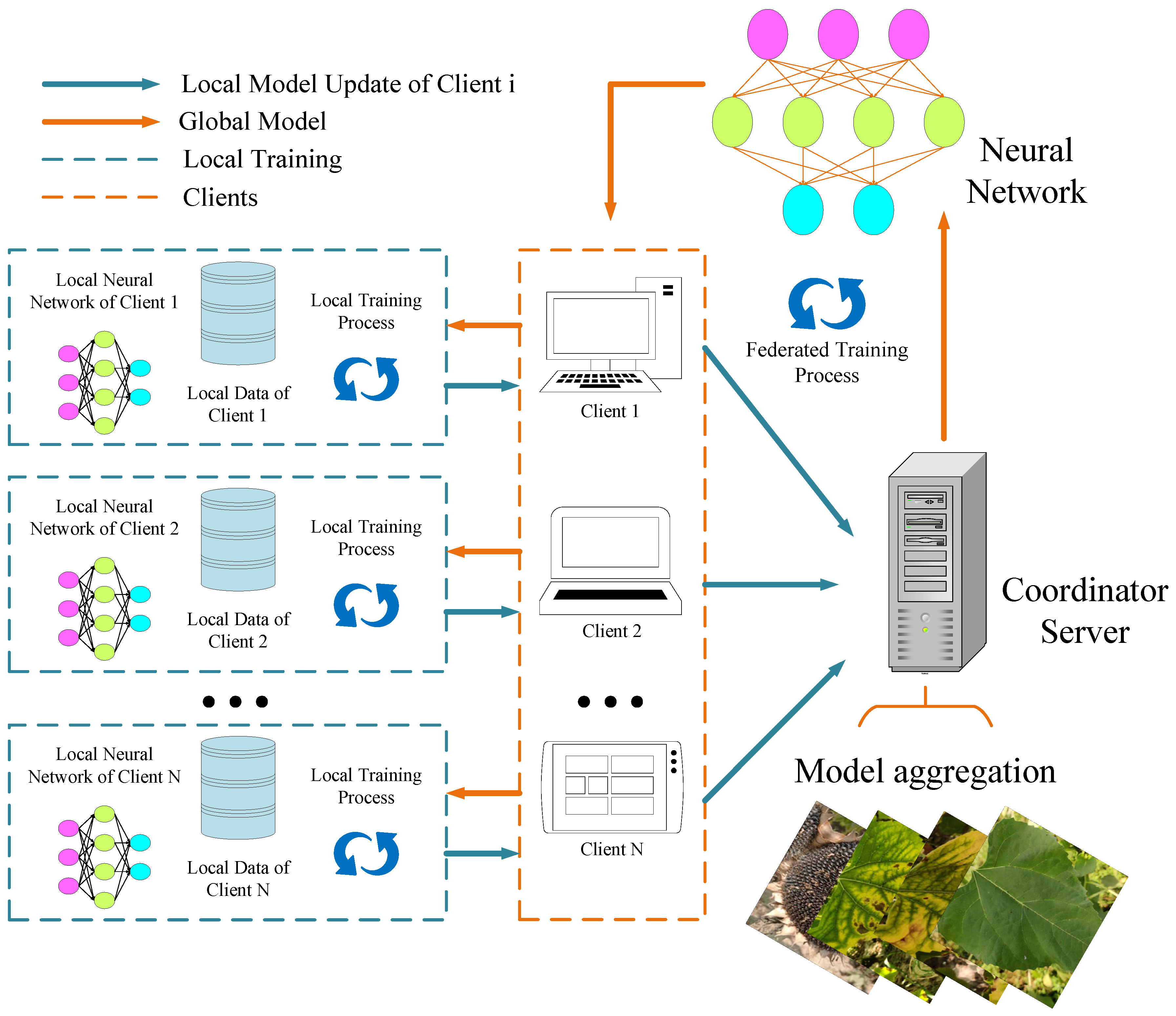

5.3. Federated Learning

In FL, models are not trained on a single server but across multiple devices or nodes (e.g., cell phones, drones, local servers). Training takes place on each local device, and the resulting model weights or gradients are then transmitted to a central server for merging, as illustrated in Figure 9. This approach has proven effective for managing sensitive data and for handling large volumes of data. In precision agriculture, federated learning has emerged as a significant technological advance. It enables the local analysis of data from UAVs, field sensors, and satellites and allows models to be adapted individually to diverse geographical regions without centralized data collection. This has been shown to enhance privacy, reduce network load, and allow models to be customized to the characteristics of each farm. The works discussed below use a common dataset of images of rust, downy mildew, Verticillium wilt, Septoria leaf spot, and powdery mildew. The paper [61] proposes the classical FL approach combined with a CNN comprising three convolution layers, four max-pooling layers, and two fully connected layers for sunflower disease detection. Local models trained on data from five clients are combined into a single global model using Federated Averaging. The model shows high precision, recall, and F1-score, with accuracy ranging from 85.31% to 91.86%. Addressing data imbalance, for example by rebalancing the input data, was shown to improve overall classification accuracy and reduce prediction error for rare classes.
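The aggregation step of Federated Averaging can be sketched in a few lines: the server averages the parameters returned by each client, weighting each client by its number of local training samples. This minimal sketch assumes flat parameter lists and omits local training, client selection, and communication; the client parameters and sample counts are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Federated Averaging (FedAvg) aggregation step.

    client_weights: one flat list of model parameters per client (equal lengths).
    client_sizes:   number of local training samples on each client.
    Returns the global parameters as the sample-weighted mean of the client parameters.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [sum(w[p] * n for w, n in zip(client_weights, client_sizes)) / total
            for p in range(n_params)]


# Parameters returned by three hypothetical clients (e.g. farms) after local training.
clients = [[0.2, -1.0, 0.5], [0.4, -0.8, 0.1], [0.0, -1.2, 0.3]]
sizes = [100, 300, 100]
global_weights = federated_average(clients, sizes)
print(global_weights)
```

Weighting by sample count lets clients with more data pull the global model further toward their local solution, which is one reason class and client imbalance matter in the studies discussed here.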

The study [50] examines how the model improves as the number of clients increases and investigates the impact of federated averaging strategies. The experiment combines FL with a CNN (three convolutional layers with ReLU activation, three max-pooling layers, and two fully connected layers with ReLU and Softmax activation). The effectiveness of incremental federated learning under uneven data distribution is confirmed by a gradual increase in accuracy, from 82.95% in the first stage to 94.87% in the final version of the model. The findings show that the choice of data pooling and averaging mechanisms can substantially influence the final classification result. The paper [51] examines the role of local and global learning in sunflower disease classification. Its analysis of data from five clients with differing data distributions shows that FL helps address data imbalance, improving classification quality from 88.76% to 95.72%. The main distinction of the study [6] is that it classifies disease severity into four levels rather than only dividing images into disease classes, as is conventional. The method is strengthened by a hybrid of a CNN and an SVM, in which the CNN comprises three convolutional layers with ReLU activation, three pooling layers, two fully connected layers with ReLU activation, and dropout and Softmax layers. This approach yielded macro-averaged accuracies of 87.00% to 90.35% and weighted accuracies of 90.41% to 94.87%.

The FL approach can improve data confidentiality, since raw data never leave the device. Federated learning also reduces the load on central servers and the network, thus conserving resources. A key benefit is the ability to adapt models to local data, which makes them suitable for tasks such as monitoring sunflower crops from drones or mobile devices. However, synchronizing model updates from multiple devices can be challenging, and the devices must have enough computing power to train models locally. Furthermore, the limited data collected on a single device may degrade model quality, making such systems less versatile.

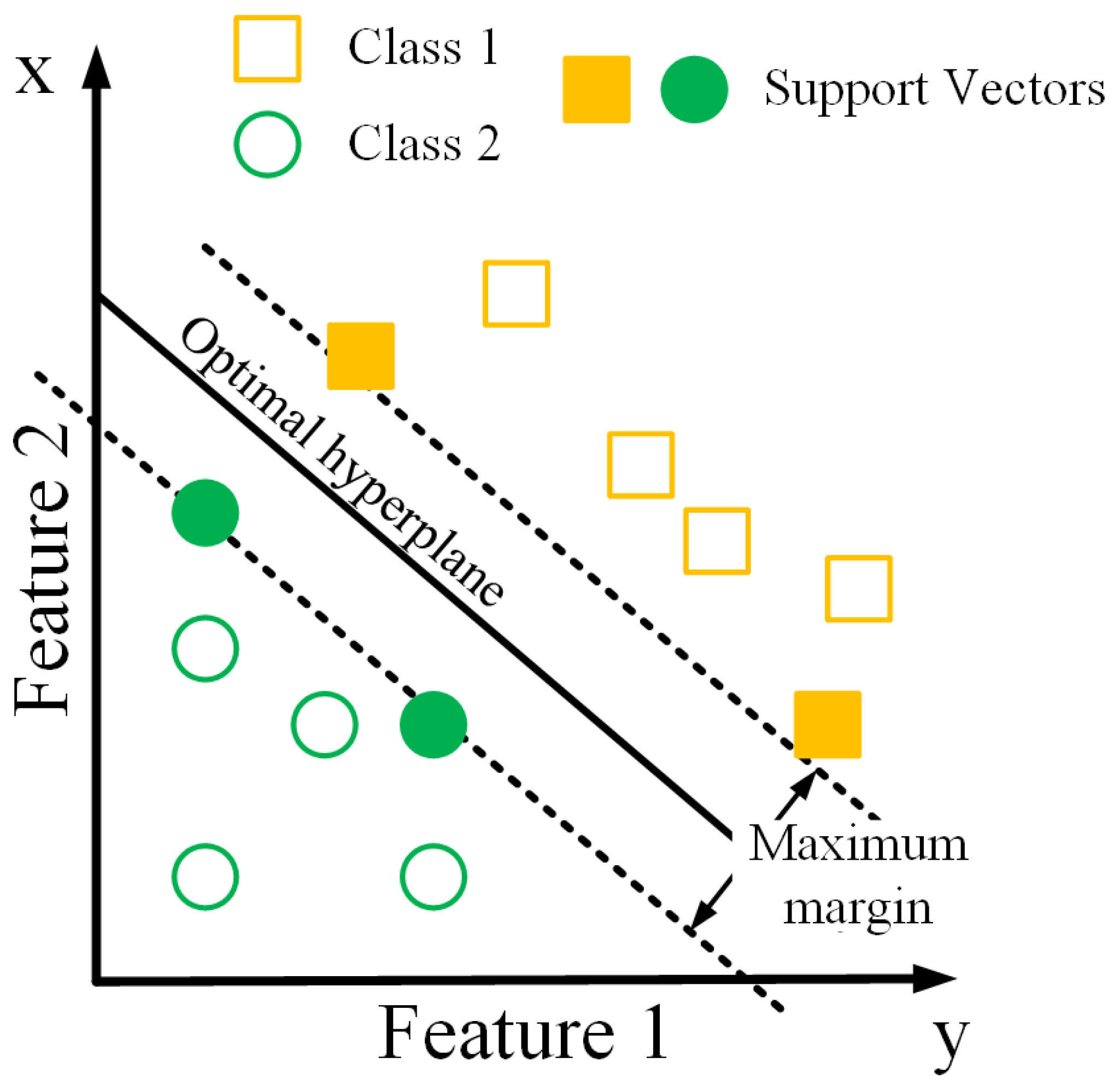

5.4. Support Vector Machine

Support vector machines (SVMs) are machine learning algorithms used for classification and regression. The algorithm seeks the hyperplane that separates the data into distinct classes with the largest possible margin between them. The training objects closest to this separating hyperplane, which determine its position, are called support vectors (Figure 10). When the data are not linearly separable, the kernel trick implicitly maps them into a higher-dimensional space in which a separating hyperplane can be found. In agriculture, this method is widely used to analyze images and data of various modalities. It trains efficiently on small datasets and can yield good recognition results, but it can become resource-intensive on large volumes of data. The study [35] presents a hybrid approach that combines SVMs with CNNs for the multi-class classification of sunflower diseases. The model comprises three convolutional layers, three subsampling (max-pooling) layers, and two fully connected layers, the second of which uses an SVM for the final classification. It achieved an accuracy of 83.59% and an F1-score of 83.45%, with per-class accuracies ranging from 80.65% to 85.37%.
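As an illustration of the margin-maximization idea, a linear SVM can be trained by stochastic sub-gradient descent on the regularized hinge loss (the Pegasos algorithm). This is a sketch under stated assumptions: labels are +1/−1, a constant feature is appended so that the bias is learned (and regularized) as an ordinary weight, no kernel is used, and the two-feature toy data and function names are hypothetical. Kernel SVMs such as those in the hybrid models above are normally trained with dedicated solvers such as LIBSVM.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=300, seed=0):
    """Linear SVM trained with Pegasos-style stochastic sub-gradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w . x_i)).
    Labels must be +1 or -1. A constant feature 1.0 is appended to every sample so that
    the bias is learned as an ordinary (regularized) weight, a common simplification.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink: regularizer gradient
            if margin < 1.0:  # inside the margin: add the hinge-loss sub-gradient
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def svm_predict(w, x):
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical two-feature samples: +1 = healthy, -1 = diseased.
X = [[0.80, 0.40], [0.75, 0.35], [0.70, 0.38], [0.30, 0.10], [0.25, 0.12], [0.35, 0.05]]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([svm_predict(w, x) for x in X])
```

Only samples that fall inside the margin change the weights beyond the shrinkage step, which mirrors the role of support vectors in fixing the position of the hyperplane.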

SVMs are frequently used for classification with limited data and in tasks with clearly defined boundaries between classes, such as distinguishing healthy from diseased plants. They are less computationally expensive than deep neural networks and can be effective with small amounts of data. However, SVMs can perform poorly on complex data where the boundaries between classes are not obvious, and kernel SVMs scale poorly to very large training sets. Furthermore, using SVMs efficiently requires tuning hyperparameters such as the regularization parameter C and the kernel parameters.

5.5. K-Nearest Neighbor

The k-nearest neighbor (KNN) algorithm classifies a new point by finding the k closest points to it in the feature space and assigning the most frequent class among these neighbors. The authors of [62] used 280 artificially generated images to classify six types of flowering sunflowers with KNN, achieving an accuracy of 88.52%.
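The neighbor search and majority vote can be sketched directly. This minimal version assumes Euclidean distance, k = 3, and hypothetical [NDVI, NDMI] features; it uses `math.dist`, available from Python 3.8. The brute-force scan over the whole training set is exactly the cost that makes KNN slow on large datasets.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Label x with the most frequent class among its k nearest training points."""
    # Distances to *all* training points are computed for every query,
    # which is why KNN prediction slows down on large training sets.
    neighbors = sorted(
        (math.dist(p, x), label) for p, label in zip(train_X, train_y)
    )[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


# Hypothetical [NDVI, NDMI] features for labeled plots.
X = [[0.80, 0.40], [0.75, 0.35], [0.70, 0.38], [0.30, 0.10], [0.25, 0.12], [0.35, 0.05]]
y = ["healthy"] * 3 + ["diseased"] * 3
print(knn_predict(X, y, [0.72, 0.36]), knn_predict(X, y, [0.30, 0.08]))
```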

On large, multi-class datasets, KNN has the disadvantage that classification quality deteriorates as the number of features grows. Prediction is also slow on large datasets, because the distances to all points in the training set must be recomputed for each new prediction. KNN is also sensitive to noise in the data, which can reduce accuracy. Despite these limitations, the algorithm remains a viable option for classification in settings with limited resources and data, such as the early detection of sunflower diseases.

5.6. Other Methods for Processing Sunflower Data

K-Means is a popular clustering algorithm that divides an input sample into k groups, or clusters, based on similarity and without prior knowledge of class membership. The algorithm works as follows: the number of clusters k is specified; k initial points (centroids) in the feature space are selected arbitrarily; each point is assigned to the nearest centroid (by Euclidean distance); and a new centroid is calculated for each cluster as the mean of all points assigned to it. The last two steps are repeated until the centroids no longer change (i.e., until convergence) or until a predetermined number of iterations is reached. The algorithm has proven effective in plant disease recognition, where it is used for lesion extraction (segmentation), for clustering leaf texture and color to identify disease features, and for optimizing data processing.
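The assignment and update steps translate directly into code. The following is a minimal sketch of the standard (Lloyd's) k-means iteration; the random initialization, the iteration cap, the handling of empty clusters, and the toy one-dimensional NDVI values are assumptions chosen for illustration, not settings from the cited studies.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's k-means on a list of equal-length tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # arbitrary initial centroids
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: each centroid moves to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: centroids no longer change
            break
        centroids = new_centroids
    return centroids, labels


# Hypothetical per-pixel NDVI values: bare or damaged patches vs. healthy canopy.
ndvi_pixels = [(0.10,), (0.15,), (0.12,), (0.70,), (0.75,), (0.80,)]
centroids, labels = kmeans(ndvi_pixels, k=2)
print(centroids, labels)
```

Because the result depends on the initial centroids, practical implementations usually run several initializations or use a smarter seeding scheme such as k-means++.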

The study [40] evaluated the suitability of NDVI maps derived from Sentinel-2 satellite data for identifying sunflower crops. The k-means clustering algorithm was applied to the NDVI data to identify homogeneous zones (subfields) within crop areas. The results demonstrated the efficacy of the method for monitoring and managing sunflower crops in individual field plots. A coefficient of determination (R²) of 0.73 was obtained between yield and NDVI at approximately 63 days of crop maturity. The authors of [63] used an enhanced YOLO_v4 model to detect verticillium wilt, powdery mildew, and rust. To improve the algorithm, k-means clustering was used to generate new anchor box sizes, enabling the network to detect small lesions. Furthermore, the backbone feature extraction network was replaced with MobileNet_V1, MobileNet_V2, and MobileNet_V3 to reduce the computational complexity of the method. Precision, recall, and F1 score were all 0.84, and the accuracy was 96.46%, outperforming the original YOLO_v4. The authors of the dataset [38] developed a method [60] that segments affected sunflower patches using k-means clustering, then extracts features and classifies them using Logistic Regression (LR), Naïve Bayes (NB), J48 [64] (a decision tree algorithm based on recursive attribute partitioning), K-star, and RF. RF outperformed the other algorithms, attaining an average accuracy of 90.68%.

The study [65] investigated the potential for early detection of sunflower infestation by the parasitic weed Orobanche cumana using hyperspectral imaging. Logistic regression was used to distinguish infested from uninfested plants, with an accuracy of up to 89.00%. Furthermore, analysis of a reduced spectral set showed that multispectral cameras could potentially be used to detect the weed. The study [46] investigated the effect of climatic conditions on satellite signals using long time series of optical and Sentinel-1 C-band Synthetic Aperture Radar (SAR) data. The authors processed six years of data from 940 sunflower fields. Using backscatter analysis together with the NDVI, GAI (green area index), and FCover (fraction of vegetation cover) indices to monitor crop phenology demonstrated the efficacy of SAR data for detecting anomalies in sunflower development, particularly when dual-orbit data were used and the radar acquisition geometry was taken into account. In recent years, Vision Transformer (ViT) architectures used in ensembles with deep neural networks have become increasingly popular, achieving a notable 97.02% accuracy on the BARI-Sunflower dataset [37]. On the same dataset, the BEiT architecture (Bidirectional Encoder representation from Image Transformers) achieved the highest accuracy, 99.58% [66]. The Near-Real-Time Cumulative Sum (CuSum-NRT) algorithm [47], applied to Sentinel-1 SAR data, exploits the sensitivity of SAR to soil, moisture, and crop condition to track changes throughout the growing season. Several seasonal patterns of change were identified. First, VV polarization (the radar signal is transmitted and received vertically) was more sensitive during bare-soil periods. Second, VH polarization (the signal is transmitted vertically and received horizontally) was more sensitive during vegetation growth. The authors of [42] proposed a new yield prediction method that integrates time series of vegetation indices with plant physiological parameters, including fresh weight, dry weight, and moisture factor. Including the physiological parameters gave better results than ignoring them, with a root mean square error of 0.4075. Such an approach may be generalized to other crops in the future.
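The cumulative-sum idea behind change detectors such as CuSum-NRT [47] can be illustrated with the classic two-sided tabular CUSUM applied to a single backscatter time series. This is a generic textbook CUSUM, not the CuSum-NRT implementation of [47]; the reference level, drift, threshold, and the synthetic VH series in dB are assumptions chosen for illustration.

```python
def cusum_alarms(series, reference, drift, threshold):
    """Two-sided tabular CUSUM change detector (generic textbook form).

    series:    observations in time order (e.g. VH backscatter in dB).
    reference: expected level before any change.
    drift:     allowance subtracted at each step so small fluctuations are ignored.
    threshold: decision limit; an alarm is raised when either sum exceeds it.
    Returns the indices at which alarms were raised.
    """
    upper = lower = 0.0
    alarms = []
    for i, x in enumerate(series):
        upper = max(0.0, upper + (x - reference - drift))  # accumulates increases
        lower = max(0.0, lower + (reference - x - drift))  # accumulates decreases
        if upper > threshold or lower > threshold:
            alarms.append(i)
            upper = lower = 0.0  # restart accumulation after an alarm
    return alarms


# Synthetic VH series (dB): stable over bare soil, then rising as the canopy develops.
vh = [-18.0, -18.2, -17.9, -18.1, -16.5, -15.0, -14.2, -14.0]
print(cusum_alarms(vh, reference=-18.0, drift=0.5, threshold=3.0))
```

The drift term trades sensitivity for robustness: a larger drift ignores more of the natural scatter in the backscatter signal but delays detection of a genuine change.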

The alternative methods presented above, in contrast to neural network approaches, offer distinct advantages in processing heterogeneous data and in tasks that require classification or the extraction of hidden patterns. For instance, the K-Means algorithm can effectively segment images and identify anomalies in vegetation, which aids in the early detection of sunflower diseases. Nevertheless, such methods may be less effective than state-of-the-art deep neural networks at processing high-dimensional or complex data. Furthermore, combining machine learning with time series analysis offers new perspectives for monitoring changes in crop health throughout the season. These methods use information collected at different phases of plant growth to forecast yields or identify plant stress. However, each of these approaches has drawbacks, such as difficulty in interpreting the results and the need for large volumes of pre-processed data.

Machine learning methods, including CNN, RF, SVM, KNN, and FL, have proven effective in sunflower image processing, particularly for disease diagnosis, yield prediction, and crop mapping. Despite their high accuracy, several challenges remain, such as the need for large amounts of labeled training data, high computational complexity, and sensitivity to noise in the data. Adapting machine learning methods to differing agronomic conditions and specific tasks also remains a challenge. Prospects in this area include further model improvement, such as integrating additional factors like climate and soil data to improve the accuracy of predictions and monitoring. There is also a need for methods that are less dependent on large amounts of data and more adaptable to real-world conditions. Segmentation is an integral step in the image preprocessing pipeline; it has been shown to increase disease recognition accuracy and to allow precise lesion diagnosis across an agricultural site.

6. Segmentation Methods Applied to Sunflower Crop Analysis

Image segmentation is currently one of the most significant data processing steps in machine learning and computer vision, particularly for crop monitoring, including sunflowers. It isolates and classifies specific regions within an image, which is essential for precise disease diagnosis, plant health evaluation, and yield estimation. Segmentation divides an image into zones corresponding to different objects or features, and it can improve the accuracy and efficiency of processing data from different remote sensing technologies, such as satellite imagery, UAV data, or multispectral cameras. To analyze the condition of sunflower crops accurately, specific areas, such as disease-affected parts of the plant or healthy areas, must be identified. Combining segmentation methods with more advanced approaches can therefore significantly improve the accuracy and efficiency of sunflower data processing. Accurate segmentation also improves the precision of yield forecasts and helps optimize agronomic measures.

Image segmentation relies on diverse approaches and techniques, ranging from conventional algorithms to sophisticated neural networks. To present this diversity, the section is divided into subsections that examine the architectures and approaches in more detail, including the well-known You Only Look Once (YOLO) architecture and other methods used for vegetation segmentation and lesion detection.

6.1. Utilization of YOLO for the Purpose of Segmentation

YOLO is a popular model for the classification, detection, and segmentation of objects in images. Its working principle, based on a convolutional architecture, can be outlined as follows. The input image is divided into a grid of cells. If the center of an object falls within a cell, that cell is responsible for detecting the object and constructs a bounding box for it. YOLO predicts the parameters of each bounding box by regression. The intersection over union (IoU) metric measures how well a predicted bounding box matches the ground-truth box, which helps to eliminate erroneous predictions.
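The cell-assignment rule can be sketched as follows, in the style of the original YOLO formulation, in which an S × S grid is used and the cell containing the box center is made responsible for the object. The (x1, y1, x2, y2) box format, the image size, and the grid size in the example are assumptions for illustration; later YOLO versions assign targets differently (for example, across several feature-map scales and anchors).

```python
def responsible_cell(box, image_w, image_h, grid_size):
    """Return (row, col, dx, dy) for the grid cell containing the box center.

    box is (x1, y1, x2, y2) in pixels; the image is split into grid_size x grid_size cells.
    dx, dy are the center's offsets inside that cell, in [0, 1), as used for regression targets.
    """
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    gx = cx / image_w * grid_size  # center position in grid units
    gy = cy / image_h * grid_size
    col = min(int(gx), grid_size - 1)  # clamp centers lying exactly on the right/bottom edge
    row = min(int(gy), grid_size - 1)
    return row, col, gx - col, gy - row


# Hypothetical sunflower-head box in a 448 x 448 image with a 7 x 7 grid.
print(responsible_cell((100, 200, 200, 300), image_w=448, image_h=448, grid_size=7))
```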

Each cell predicts bounding boxes together with a confidence score, and then the probability that the object belongs to a particular class. When several cells predict the same object, the result is often many repetitive, redundant boxes. To address this, YOLO applies a Non-Maximum Suppression (NMS) algorithm. From a group of overlapping bounding boxes, the box with the highest score is selected, and all other boxes that overlap it strongly but have lower scores are suppressed and excluded from further processing. This process is repeated iteratively, so that only the final boxes representing the most accurate predictions are retained, which significantly improves the quality of object recognition (Figure 11). The accuracy of YOLO models is evaluated using the mean average precision (mAP) metric.
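The IoU computation and the greedy suppression loop can be sketched as follows. The (x1, y1, x2, y2) box format, the 0.5 IoU threshold, and the class-agnostic treatment of all boxes are assumptions of this sketch; YOLO implementations typically run NMS per class and use vectorized code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining boxes that overlap the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Two hypothetical detections of the same lesion plus one separate lesion.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))
```

Lowering the IoU threshold suppresses more aggressively, which removes duplicates but can also merge genuinely adjacent lesions into a single detection.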

Each new YOLO version introduces innovations that improve the accuracy and efficiency of the model. The latest model at the time of writing is YOLO_v12, released on 18 February 2025; the YOLO_v9, YOLO_v10, and YOLO_v11 models were released in 2024. The latest YOLO models support detection, classification, segmentation, human pose estimation, object tracking, and oriented bounding box detection, which localizes rotated objects with rotated boxes [67]. The algorithm has also found wide application in precision agriculture. Different versions of YOLO are used to detect weeds or diseases, which, together with UAVs, allows for large-scale crop monitoring. For example, the authors of [17] used a drone to collect more than 1500 sunflower images to detect and classify sunflower capitula. The precision, recall, and mAP@0.5 achieved were 92.30%, 89.70%, and 93.00%, respectively, outperforming the original YOLO model. The work in [31] was based on the older YOLO_v5 model, extended with an attention pyramid module. The first module of the resulting system extracts subtle feature information, and the second highlights affected plant patches in the image. Evaluated on three datasets, Katra-Twelve, BARI-Sunflower [38], and FGVC8, the system achieved an average F1 score of 95.27%. The same architecture was used in another study [32], where, in combination with the Dwarf Mongoose Optimization Algorithm, it achieved 95.87% accuracy on the dataset [38]. The authors of [53] collected a dataset of weed photos in fields using an unmanned ground vehicle (UGV), segmented the images using U-Net, and then labeled them. YOLO_v7 and YOLO_v8 were used for detection on sunflower crops and achieved a mAP@0.5 of 0.971 for sunflower detection, higher than EfficientNet, RetinaNet, and Detection Transformer. The highest recognition score for the two classes was 0.770, which suggests that the main novelty of the work is the labeled dataset.

The YOLO segmentation method has proven to be one of the most effective for image processing tasks, including sunflower crop analysis. YOLO allows the simultaneous detection and segmentation of objects in an image, making it particularly useful for the rapid and accurate detection of lesions and diseases on plants and enabling the real-time analysis of large amounts of data, which is essential for monitoring in agricultural environments. The main challenge is accuracy when processing small objects or complex backgrounds where objects can be difficult to distinguish. In addition, YOLO models require significant computational resources, which can limit their use on devices with limited capabilities. Despite these drawbacks, YOLO remains one of the most popular tools for agricultural segmentation.

6.2. Other Segmentation Methods

Different versions of YOLO are used for weed or disease detection, which, together with the use of UAVs, enables large-scale crop monitoring. For example, the authors of [17] used a drone to collect more than 1500 sunflower images to detect and classify sunflower capitula. YOLO_v7-tiny was adopted as the base model and refined by changing the activation function to SiLU, improving the feature map for the capitula detection task, and introducing deformable convolution and the SimAM attention mechanism. The achieved precision, recall, and mAP@0.5 were 92.30%, 89.70%, and 93.00%, respectively, outperforming the original YOLO model.

The joint application of FPN with ResNet18 showed an accuracy higher than the U-Net architecture by an average of 1.05 percentage points. Despite their simplicity and efficiency, these methods may be limited in the case of complex images with high noise or a large number of classes. They require considerable effort to optimize the parameters and may not always handle subtle differences between objects. However, their use in combination with more sophisticated neural network architectures can provide good results for analyzing data with less computational effort.

Image segmentation is a key step in data processing for crop monitoring, including sunflowers. Segmentation methods have greatly improved the accuracy of disease diagnosis and crop health assessment. However, despite the successes, there are several challenges such as high computational requirements, the need for accurate parameter tuning and the sensitivity of the methods to data quality. The need for large amounts of labeled data for model training is also an important limitation. Prospects for segmentation lie in the further development of more adaptive and efficient methods, such as improving neural network models to deal with small objects or complex backgrounds. One of the most important directions is the automatic annotation of large image sets using large language models, which will significantly speed up the process of generating training data and increase the availability of high-quality labeled sets for research.

The analysis of different segmentation methods, their comparative evaluation and the identification of the best approaches serve as a basis for the selection of the most effective solutions in agricultural practice. Systematic comparison allows us to summarize the results of different studies, to highlight promising directions and to assess their practical applicability in real agricultural conditions.

7. Systematic Comparison and Synthesis of Results

Due to the significant heterogeneity of the reviewed studies, which address different tasks (e.g., classification, segmentation and yield prediction), use different datasets and report incompatible metrics (e.g., accuracy, mAP and R²), a formal quantitative meta-analysis with pooled effect sizes was not feasible. Instead, this section presents a structured qualitative synthesis and systematic comparison of the results. The results were stratified by main task and algorithm family to identify common trends, performance benchmarks and the most promising approaches described in the literature.

To ensure a structured and transparent summary of the results of the 47 selected studies, the following procedure was adopted. First, key performance indicators (e.g., accuracy and F1 score) and important study characteristics (algorithm used, data type, and task, such as classification, segmentation, or yield prediction) were systematically extracted from each article and compiled into a comparative table (Appendix A). Next, because the fundamental heterogeneity of the tasks and evaluation metrics made statistical aggregation impossible, the studies were stratified by major task: disease classification and recognition, object detection, image segmentation, yield prediction, and crop mapping. This allowed for meaningful comparisons within groups. Within each task group, algorithms were compared using the most relevant and frequently reported metrics: accuracy and the F1 score for classification, mAP@0.5 and IoU for detection and segmentation, and R² and RMSE for yield prediction. This task-specific qualitative comparison revealed trends, the most effective models, and common challenges. The synthesis focused on identifying high-level trends, such as the dominance of certain architectures, the influence of data sources, and how methods have evolved over the five-year review period.
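For reference, the task-specific metrics named above can be written in a few lines of Python. This is a minimal sketch of the standard textbook definitions (a single class for F1, binary masks for IoU); individual studies may use variants such as macro-averaging over classes, and mAP@0.5 additionally requires matching predictions to ground truth at IoU ≥ 0.5 and averaging precision over recall levels, which is not shown here.

```python
import math
from typing import List, Sequence


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall, computed from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mask_iou(pred: List[List[int]], truth: List[List[int]]) -> float:
    """IoU of two equally sized binary segmentation masks (1 = object pixel)."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += int(bool(p) and bool(t))
            union += int(bool(p) or bool(t))
    # Convention: two empty masks agree perfectly.
    return inter / union if union else 1.0


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, in the units of the target (e.g. yield in t/ha)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant target")
    return 1.0 - ss_res / ss_tot
```

Note that R² can be negative when a model does worse than always predicting the mean, while RMSE keeps the target's units; this is one reason the two are usually reported together for yield prediction.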

The following sections present the results of this systematic comparison, stratified by algorithm and task. For instance, the RF method is widely used for classification in precision agriculture and has been applied to Sentinel-2 data in combination with vegetation indices. In one study, RF achieved a sunflower crop classification accuracy of over 98%. This approach can effectively distinguish the different growth stages of sunflowers and assess plant health, including stress conditions and diseases. Vegetation indices such as the NDVI greatly improve both classification outcomes and the detection of anomalies in the field.
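As an illustration of the vegetation-index step, the sketch below computes NDVI = (NIR − Red) / (NIR + Red) per pixel from the two Sentinel-2 bands commonly used for it, B8 (near-infrared) and B4 (red), both at 10 m resolution. It assumes co-registered rasters of surface reflectance already scaled to the range 0 to 1 and stored as nested lists. The `field_ndvi_series` helper is hypothetical: averaging NDVI per acquisition date is one simple way to build a time-series feature vector for a classifier such as RF, not the feature set of the cited study.

```python
from typing import List, Tuple

Raster = List[List[float]]  # rows x columns of reflectance values


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for a single pixel."""
    denom = nir + red
    return (nir - red) / denom if denom != 0 else 0.0  # guard against division by zero


def ndvi_raster(b8: Raster, b4: Raster) -> Raster:
    """Per-pixel NDVI from co-registered Sentinel-2 bands B8 (NIR) and B4 (red)."""
    return [[ndvi(n, r) for n, r in zip(row_n, row_r)] for row_n, row_r in zip(b8, b4)]


def field_ndvi_series(scenes: List[Tuple[Raster, Raster]]) -> List[float]:
    """Hypothetical helper: mean NDVI of one field for each acquisition date.

    `scenes` holds (b8, b4) raster pairs clipped to the field, ordered by date.
    """
    series = []
    for b8, b4 in scenes:
        values = [v for row in ndvi_raster(b8, b4) for v in row]
        series.append(sum(values) / len(values))
    return series


if __name__ == "__main__":
    print(round(ndvi(0.5, 0.1), 3))  # 0.667: dense green vegetation reflects strongly in NIR
```

Each element of the resulting series is one feature, so a season of cloud-free scenes becomes a fixed-length vector per field that any tabular classifier can consume.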

To classify the stages of sunflower development, some studies have employed SVM and LR algorithms trained on a variety of vegetation indices. When tested on separate plots, the model's accuracy reached 98%, with the highest values obtained using index time series derived from Sentinel-2. This illustrates how these methods can be used to evaluate crop phenological stages in real field settings. The review also highlights the use of U-Net and FPN models for semantic image segmentation aimed at distinguishing sunflowers at various growth stages.
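To show what training such a classifier on index time series involves, the following is a minimal pure-Python logistic regression fitted by batch gradient descent. The binary setup (early vs. late stage), the feature layout (one NDVI value per acquisition date), the learning rate, and the epoch count are illustrative assumptions; this is not the implementation used in the cited studies, and real stage classification is usually multi-class.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X: List[Sequence[float]], y: List[int],
                 lr: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit binary logistic regression (labels 0/1) by batch gradient descent on log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # The log-loss gradient w.r.t. the logit is (predicted probability - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return class 1 when the predicted probability is at least 0.5."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5)


if __name__ == "__main__":
    # Synthetic toy data: NDVI at two dates; 0 = early stage, 1 = late stage.
    X = [[0.2, 0.3], [0.25, 0.35], [0.7, 0.8], [0.75, 0.85]]
    y = [0, 0, 1, 1]
    w, b = train_logreg(X, y)
    print([predict(w, b, x) for x in X])
```

A linear SVM would use the same inputs but optimize a hinge loss instead of the log-loss; the feature construction from index time series is what both approaches share.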

Convolutional architectures remain one of the most effective tools for image processing in agriculture. CNNs have been used to classify sunflower diseases with very high accuracy. On the BARI-Sun dataset, for instance, CNNs built on pre-trained models (VGG19, ResNet152) achieved a detection accuracy of up to 98.70%. Classifying images from the BARI-Sunflower dataset with the EfficientNet_V2B2 architecture yielded an accuracy of 96.83%, one of the best results in the field; compared with other architectures such as Inception or Xception, this model gave superior results at a lower computational cost. Hybrid CNN and SVM models achieved a classification accuracy of 98.00% for sunflower diseases such as downy mildew and Alternaria leaf blight.

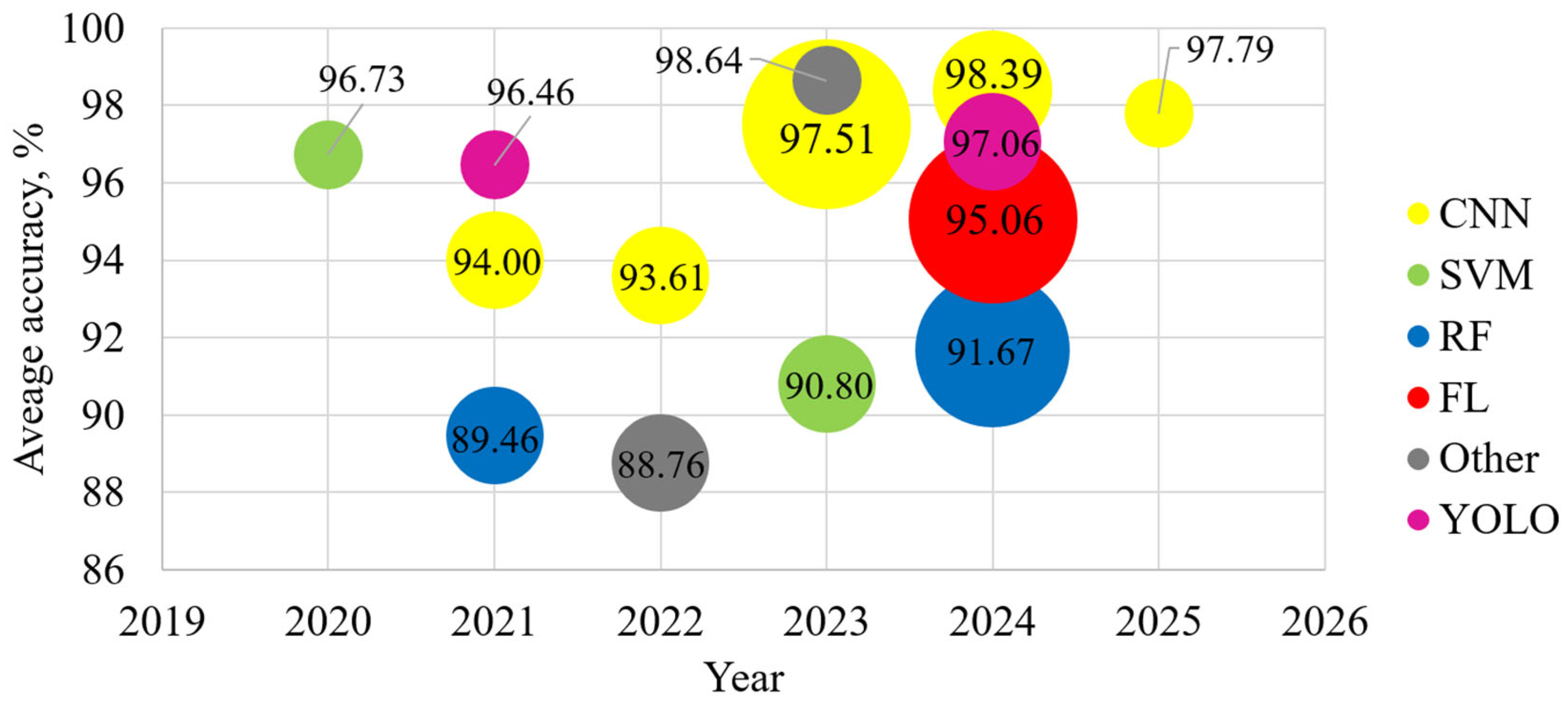

YOLO models were used for the segmentation task of identifying damaged plant patches. For example, YOLO_v4 combined with k-means clustering achieved an accuracy of 96.46% in detecting diseases such as powdery mildew and Verticillium wilt. In another study, an older YOLO_v5 model with pyramidal attention achieved an average F1 score of 95.87% on three datasets, including BARI-Sunflower. The YOLO_v5 architecture combined with pyramidal compressed attention and multiple convolutional layers achieved an accuracy of 98.25%, higher than the other works reviewed.
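The review does not state what the k-means clustering was applied to in the YOLO_v4 study. One well-known role for k-means in YOLO pipelines, introduced with YOLOv2 and used again in YOLOv3 and YOLOv4, is selecting anchor-box sizes by clustering the widths and heights of the training boxes with the distance d = 1 − IoU. The sketch below assumes that use; the deterministic initialization and the iteration limit are illustrative choices.

```python
from typing import List, Tuple

WH = Tuple[float, float]  # a box described only by (width, height)


def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes aligned at the same corner, so only their sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(sizes: List[WH], k: int, iters: int = 50) -> List[WH]:
    """Cluster box sizes into k anchors using the distance d = 1 - IoU."""
    # Deterministic initialization: k sizes spread evenly over the list sorted by area.
    ordered = sorted(sizes, key=lambda s: s[0] * s[1])
    centroids = [ordered[i * len(ordered) // k] for i in range(k)]
    for _ in range(iters):
        clusters: List[List[WH]] = [[] for _ in range(k)]
        for s in sizes:
            # Highest IoU is the same as lowest 1 - IoU distance.
            best = max(range(k), key=lambda c: wh_iou(s, centroids[c]))
            clusters[best].append(s)
        new: List[WH] = []
        for c, members in enumerate(clusters):
            if members:  # new centroid = mean width and mean height of its members
                new.append((sum(m[0] for m in members) / len(members),
                            sum(m[1] for m in members) / len(members)))
            else:  # keep an empty cluster's previous centroid
                new.append(centroids[c])
        if new == centroids:  # assignments have stabilized
            break
        centroids = new
    return sorted(centroids, key=lambda s: s[0] * s[1])


if __name__ == "__main__":
    sizes = [(10, 10), (11, 11), (12, 12), (100, 100), (101, 101), (102, 102)]
    print(kmeans_anchors(sizes, k=2))
```

Using 1 − IoU instead of Euclidean distance keeps large boxes from dominating the clustering, which is why this distance is preferred for anchor selection.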

Figure 12 shows how the use of the different methods is distributed over time, together with their average reported accuracy in each year. CNN-based methods are both the most prevalent and among the most accurate, with accuracies above 96%. Early detection techniques, such as combining hyperspectral imagery with machine learning, allow diseases to be identified at an early stage, which significantly reduces the damage they cause. RF methods also perform well, with accuracies of around 92–96%, and are used consistently for sunflower disease detection, although much less frequently than CNNs. The use of federated learning (FL) grew significantly in 2024, indicating growing interest in this approach despite its currently lower accuracy. YOLO is also used for detection tasks and, despite its relatively high accuracy (over 96%), remains less popular than the other algorithms. Overall, the graph shows that CNN- and FL-based approaches have gained popularity in recent years, along with some increase in interest in YOLO, reflecting changing preferences in machine learning for analyzing sunflower data. A comparative table of the sunflower data processing methods included in the systematic comparison is presented in Appendix A. Note that the reported accuracy values come from diverse studies with different datasets and problem formulations, and are often achieved by hybrid or ensemble methods. The values are therefore intended to illustrate general performance trends rather than to support direct numerical comparison between algorithms.

One of the key factors affecting prediction accuracy is the quality of the input data. Many studies show that high spatial and spectral resolution of satellite imagery improves classification results. Using data with a resolution of 10 m per pixel allows detailed analysis of crops, while data with a resolution of 30 m gives less accurate results. In addition, combining different data sources, such as satellite imagery and drone data, can reduce prediction errors. Transfer learning is actively used to improve accuracy with a limited dataset, significantly reducing the amount of training data required and speeding up the model training process.
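A quick calculation shows why moving from 30 m to 10 m pixels matters for field-level analysis. The sketch below counts how many pixels cover a field of a given area; it ignores the mixed pixels along field boundaries (which make the coarser case even worse), and the 1 ha field size is an arbitrary example.

```python
def pixels_per_field(field_area_ha: float, pixel_size_m: float) -> float:
    """Approximate number of pixels covering a field (edge/mixed pixels ignored)."""
    field_area_m2 = field_area_ha * 10_000  # 1 ha = 10,000 m^2
    pixel_area_m2 = pixel_size_m ** 2       # square pixel with side pixel_size_m
    return field_area_m2 / pixel_area_m2


if __name__ == "__main__":
    for size in (10, 30):
        print(f"{size} m pixels: {pixels_per_field(1.0, size):.1f} per hectare")
    # 10 m pixels: 100.0 per hectare
    # 30 m pixels: 11.1 per hectare
```

With nine times fewer pixels per hectare at 30 m, a small field or a within-field stress patch may be represented by only a handful of pixels, many of them mixing crop and non-crop signal.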

This systematic comparison demonstrates that machine learning and satellite monitoring methods are highly effective in agricultural tasks. In addition to increasing classification and prediction accuracy, contemporary algorithms lower the cost of monitoring agricultural fields. Continued advances in machine learning techniques and their integration with other data sources are expected to yield more precise and dependable systems for yield forecasting and plant disease detection.

8. Problems and Future Directions

Despite notable advances in applying machine learning and satellite data processing methods to sunflower crop monitoring, a number of issues and limitations in current research hinder the scaling and transfer of the developed models to real agrotechnological processes. Small and inconsistent datasets are one of the primary drawbacks of these studies, particularly for sunflowers. Most studies use local or proprietary datasets collected under specific geographical or climatic conditions, which makes it difficult to generalize the resulting models. In addition, information on plant phenological stages, agronomic practices, or soil types is often missing, reducing the applicability of models in other regions. Machine learning models, especially deep neural networks, are also sensitive to changes in external conditions such as illumination, viewing angle, and atmospheric conditions. This limits the use of models trained in one agro-ecological zone and applied in another. Many models are trained on specific sunflower varieties, local conditions, and farming techniques, and depending on the region, downy mildew may be accompanied by a different disease or pest or may present slightly different symptoms. As a result, accuracy can drop drastically when a model is transferred to a different zone.
This highlights the need for more versatile, adaptive solutions that can be pre-trained once and then adapted to new conditions without being completely retrained from scratch.