1. Introduction

Modern decision-making systems increasingly operate under distributional uncertainty, where data-generating processes are subject to shifts, perturbations, or misspecification. This is most salient in black-box optimization, where expensive function evaluations are performed under uncertain contextual settings. Although vanilla Bayesian Optimization (BO) is known to be effective in deterministic or well-characterized stochastic settings [1,2,3], it can exhibit poor out-of-sample performance when the true distribution deviates from prior assumptions. In Sample Average Approximation (SAA) methods, this phenomenon is often referred to as the “optimizer’s curse”.

Distributionally Robust Optimization (DRO) emerged as a principled methodology for addressing such uncertainty by optimizing against the worst case over carefully constructed ambiguity sets around empirical samples, e.g., historical data. Instead of relying on point estimates of unknown distributions, DRO considers all distributions within a neighborhood of the empirical distribution, providing performance guarantees that degrade gracefully under shifts of the underlying distribution. The underlying intuition is that, by hedging against distributional uncertainty, decision-makers obtain solutions that perform robustly across a variety of plausible scenarios, even at the cost of nominal optimality under ideal conditions.

Applying DRO concepts to BO presents a number of characteristic challenges that distinguish it from classical robust optimization [4]. First, the black-box nature of expensive objective functions demands explicit balancing of exploration versus exploitation while simultaneously coping with distributional uncertainty. Traditional acquisition functions must be fundamentally reconsidered to incorporate robustness considerations without sacrificing the sample efficiency that makes BO attractive for expensive optimization problems. Second, the construction of appropriate ambiguity sets becomes critical in the BO setting, where limited samples must inform both the surrogate model of the black-box objective function and the quantification of distributional uncertainty. The choice of distance metric for defining ambiguity sets (whether based on φ-divergences, Wasserstein distances, or moment-based constraints) has a significant impact on both computational tractability and the quality of robust solutions. Recent theoretical work in Ref. [5] shows that worst-case expectation problems can be transformed into finite convex problems for many practical loss functions, which provides a foundation for tractable distributionally robust formulations. Third, the high-dimensional nature of many practical optimization problems exacerbates the curse of dimensionality in distributional uncertainty quantification. As noted in Ref. [6], distance metrics defined in high-dimensional spaces can lead to exceedingly large ambiguity sets, potentially resulting in overly conservative solutions. This observation points to the need for more sophisticated approaches that can capture the geometric structure of data distributions while maintaining computational efficiency.

Current approaches to Distributionally Robust Bayesian Optimization (DRBO) have several limitations that this work addresses. Existing methods usually rely on simple empirical averaging or uniform sampling within ambiguity sets, which fail to capture the underlying geometric structure of the context distribution. This can lead to a suboptimal representation of distributional uncertainty and, consequently, to poor exploration strategies in the robust optimization landscape [7,8].

Furthermore, many existing robust BO methods exhibit excessive conservatism, especially when ambiguity sets are large compared to the available data. The resulting solutions may sacrifice significant performance under nominal conditions to hedge against unlikely worst-case scenarios. The challenge lies in developing acquisition functions that can adaptively balance robustness and performance as more information becomes available through the optimization process. Computational complexity presents another significant barrier, as the nested min–max formulation inherent in distributionally robust optimization introduces substantial computational overhead. Traditional approaches often need to solve complex optimization problems at each iteration, making them impractical for high-dimensional or time-sensitive applications. Recent work in Ref. [9] addressed Wasserstein Distributionally Robust Bayesian Optimization (WDRBO) with continuous contexts, establishing sublinear regret bounds for settings where context distributions are uncertain but lie within Wasserstein ball ambiguity sets. However, both approaches proposed there still have difficulty efficiently aggregating distributional information while preserving geometric structure.

This paper introduces a novel methodology that addresses these limitations through the principled application of the Wasserstein Barycenter, efficiently computed via Sinkhorn regularization. Our key insight is that the geometric structure of distributional uncertainty can be effectively captured using the Wasserstein Barycenter, which provides a natural generalization of the notion of mean distribution in the space of probability measures. The Wasserstein Barycenter, also known as the Fréchet mean, computed in the space of probability distributions endowed with the Wasserstein distance [10], offers a non-linear interpolation mechanism that preserves the geometric characteristics of the constituent distributions. Unlike simple weighted averages that ignore spatial structure, the Wasserstein Barycenter gives us a principled approach to aggregating multiple plausible distributions while maintaining their essential geometric properties. Our methodology takes advantage of the computational efficiency of the Sinkhorn algorithm to approximate the Wasserstein Barycenter. While this approach trades the traditional distance properties for divergence measures, it provides crucial advantages: computational tractability for practical applications and guaranteed uniqueness of solutions. By combining entropic regularization with iterative scaling techniques, Sinkhorn achieves significant computational speedups while preserving the essential geometric properties required for robust optimization. The resulting Distributionally Robust Sinkhorn Barycenter Upper Confidence Bound acquisition function integrates distributional robustness considerations into the BO framework while maintaining the exploration–exploitation balance that characterizes effective acquisition strategies. Our approach incorporates adaptive robustness scheduling and distributional Lipschitz regularization to ensure that the level of conservatism adapts to the available information and problem structure.

The main contributions of this work can be summarized as follows:

We propose SWBBO, a novel Bayesian Optimization algorithm that integrates Sinkhorn-regularized Wasserstein Barycenters into a robust acquisition strategy, providing geometry-aware modeling of distributional uncertainty in continuous contexts.

We develop a computationally efficient and theoretically grounded acquisition function that combines entropic optimal transport, adaptive robustness scheduling, and distributional Lipschitz regularization, offering strong regret guarantees under distributional shifts.

We demonstrate that SWBBO outperforms existing DRBO methods on both synthetic benchmarks and real-world-inspired optimization tasks, achieving faster convergence and improved robustness under repeated evaluations.

The rest of this paper proceeds as follows. Section 2 covers essential background on DRO and Wasserstein Barycenters. Section 3 describes the DRBO framework, along with the Sinkhorn-regularized barycenter computation and the acquisition function. Section 4 formalizes the problem setting, introduces our distributional robustness framework, and presents the core methodology. Section 5 presents a comprehensive experimental evaluation, and Section 6 concludes with a discussion of future research directions.

2. Literature Review

2.1. Theoretical Foundations of DRO

The theoretical foundations of Distributionally Robust Optimization (DRO) have advanced significantly in recent years, revealing important connections between robust statistical methods and optimization under distributional uncertainty. In 2022, Rahimian and Mehrotra published a comprehensive survey [11] that covers the field, tracing its evolution from classical robust optimization to modern distributionally robust approaches. Their work categorizes different types of ambiguity sets (including moment-based, distance-based, and divergence-based formulations) and examines the trade-offs between robustness guarantees and computational feasibility. Building on this foundation, Ref. [5] demonstrates that many DRO problems can be seen as extensions of robust statistical estimation methods. Rather than simply looking for robust parameter estimates, the focus shifts toward finding decisions that work well across multiple plausible data-generating distributions. The authors in Ref. [12] took these concepts further by developing adaptive DRO frameworks that dynamically adjust ambiguity sets as new data become available. This method addresses the fundamental challenge of balancing robustness with performance in sequential decision-making, providing a way to reduce the excessive conservatism typical of worst-case optimization while maintaining strong out-of-sample guarantees. This work tackles key problems found in traditional stochastic optimization (SO) methods that depend on fixed probability distributions.

DRO’s mathematical framework handles uncertainty using three main paradigms. SO represents the first approach, which maximizes expected performance under a known distribution [7]:

$$\max_{x} \; \mathbb{E}_{c \sim P}\left[ f(x, c) \right] \tag{1}$$

Let $x$ denote the decision variables, and $c$ denote the context. The function $f(x, c)$ is the black-box objective function to be optimized, which depends on both decision and contextual variables. Decisions are made assuming perfect knowledge of the probability distribution $P$ governing the uncertain parameters $c$. Worst-case Robust Optimization (RO) adopts an extremely conservative approach by optimizing against the worst possible realization:

$$\max_{x} \; \min_{c \in \Delta} f(x, c) \tag{2}$$

where no probabilistic assumptions are made about the uncertainty set $\Delta$.

Finally, DRO achieves a balanced compromise by optimizing against the worst-case expectation over all distributions $Q$ within an ambiguity set $\mathcal{U}$:

$$\max_{x} \; \inf_{Q \in \mathcal{U}} \mathbb{E}_{c \sim Q}\left[ f(x, c) \right] \tag{3}$$

In these formulations, $f(x, c)$ represents the objective function evaluated at decision $x$ under context $c$. Depending on the optimization paradigm, we take expectations over $P$, worst-case realizations, or robust expectations over an ambiguity set.
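The difference between the three paradigms is easiest to see on a finite toy problem. The sketch below is illustrative only: the payoff table, the nominal distribution, and the three-element stand-in for the ambiguity set are all made-up assumptions, and the objective is treated as a payoff to be maximized (for a cost, swap the roles of max and min).

```python
# Toy comparison of SO, RO, and DRO on a finite problem.
# All numbers are illustrative assumptions, not data from the paper.

# payoff[x][c]: value of f(x, c) for 3 decisions and 3 contexts
payoff = [
    [10.0, 2.0, 0.0],  # x = 0: excellent in context 0, poor elsewhere
    [6.0, 5.0, 4.0],   # x = 1: balanced across contexts
    [4.5, 4.5, 4.5],   # x = 2: constant payoff
]

nominal = [0.7, 0.2, 0.1]  # distribution P assumed known by SO
ambiguity_set = [          # finite stand-in for the ambiguity set U
    [0.7, 0.2, 0.1],
    [0.4, 0.3, 0.3],
    [0.2, 0.3, 0.5],
]


def expectation(values, probs):
    """Expected value of a discrete random variable."""
    return sum(v * p for v, p in zip(values, probs))


def argmax(scores):
    """Index of the largest score."""
    return max(range(len(scores)), key=scores.__getitem__)


# SO: expected payoff under the nominal distribution
so_scores = [expectation(row, nominal) for row in payoff]
# RO: worst payoff over all contexts, no probabilities involved
ro_scores = [min(row) for row in payoff]
# DRO: worst expected payoff over the ambiguity set
dro_scores = [min(expectation(row, q) for q in ambiguity_set) for row in payoff]

x_so, x_ro, x_dro = argmax(so_scores), argmax(ro_scores), argmax(dro_scores)
print(x_so, x_ro, x_dro)
```

With these numbers the three paradigms pick three different decisions: SO chooses the high-variance option, RO the constant one, and DRO the balanced one in between.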

2.2. Wasserstein Distance and Optimal Transport

In statistics and machine learning, the Wasserstein distance has gained importance because it allows the comparison of probability distributions of different types while satisfying all properties of a distance metric under certain assumptions. It has recently been applied to extend BO to complicated (also known as exotic) tasks such as grey-box optimization [13] and multiple information source optimization [14].

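The $p$-Wasserstein distance between probability measures $\alpha$ and $\beta$ in $\mathcal{P}(\Omega)$ is defined as [15]

$$W_p(\alpha, \beta) = \left( \min_{\pi \in \Pi(\alpha, \beta)} \int_{\Omega \times \Omega} d(\omega, \omega')^p \, \mathrm{d}\pi(\omega, \omega') \right)^{1/p} \tag{4}$$

where $\alpha$ and $\beta$ are probability measures (i.e., distributions) over the space $\Omega$, $\Pi(\alpha, \beta)$ is the set of probability measures on $\Omega \times \Omega$ having marginals $\alpha$ and $\beta$ (the joint couplings, or transport plans), $d$ is a distance on $\Omega$, and $p \geq 1$. Setting $p = 2$ and $d$ to the Euclidean distance leads to the so-called 2-Wasserstein distance, for which Equation (4) can be rewritten, when an optimal transport map exists, as

$$W_2(\alpha, \beta) = \left( \inf_{T:\, T_{\#}\alpha = \beta} \int_{\Omega} \lVert \omega - T(\omega) \rVert_2^2 \, \mathrm{d}\alpha(\omega) \right)^{1/2}$$

which is intended as an optimal transport problem [10]. This formulation interprets the distance as the minimum cost of transporting probability mass $\alpha$ to match the target probability mass $\beta$, where the symbol # denotes the push-forward operator ensuring that $\alpha$ matches $\beta$ following the transport plan $T$.

For Gaussian distributions, that is $\alpha = \mathcal{N}(m_\alpha, \Sigma_\alpha)$ and $\beta = \mathcal{N}(m_\beta, \Sigma_\beta)$, the 2-Wasserstein distance simplifies to [9]

$$W_2^2(\alpha, \beta) = \lVert m_\alpha - m_\beta \rVert_2^2 + \mathcal{B}(\Sigma_\alpha, \Sigma_\beta)^2$$

where $\mathcal{B}$ represents the Bures metric between positive definite matrices [16,17]:

$$\mathcal{B}(\Sigma_\alpha, \Sigma_\beta)^2 = \operatorname{tr}\left( \Sigma_\alpha + \Sigma_\beta - 2 \left( \Sigma_\alpha^{1/2} \Sigma_\beta \Sigma_\alpha^{1/2} \right)^{1/2} \right)$$

Thus, in the case of centered Gaussian distributions (i.e., $m_\alpha = m_\beta = 0$), the 2-Wasserstein distance reduces to the Bures metric. Moreover, if $\Sigma_\alpha$ and $\Sigma_\beta$ are diagonal, the Bures metric is the Hellinger distance, while in the commutative case, that is $\Sigma_\alpha \Sigma_\beta = \Sigma_\beta \Sigma_\alpha$, the Bures metric is equal to the Frobenius norm $\lVert \Sigma_\alpha^{1/2} - \Sigma_\beta^{1/2} \rVert_F$.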
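The Gaussian closed form can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper names (`psd_sqrt`, `bures_sq`, `w2_gaussian`) and the example matrices are hypothetical choices made for illustration. It evaluates the squared Bures term through symmetric matrix square roots and confirms that, for commuting (here diagonal) covariances, it coincides with the squared Frobenius norm of the difference of the square roots.

```python
import numpy as np


def psd_sqrt(S):
    """Symmetric square root of a symmetric positive semidefinite matrix."""
    w, V = np.linalg.eigh(S)
    w = np.clip(w, 0.0, None)  # guard against tiny negative round-off
    return (V * np.sqrt(w)) @ V.T


def bures_sq(S1, S2):
    """Squared Bures metric: tr(S1 + S2 - 2 (S1^{1/2} S2 S1^{1/2})^{1/2})."""
    r = psd_sqrt(S1)
    cross = psd_sqrt(r @ S2 @ r)
    return float(np.trace(S1 + S2 - 2.0 * cross))


def w2_gaussian(m1, S1, m2, S2):
    """2-Wasserstein distance between N(m1, S1) and N(m2, S2)."""
    mean_term = float(np.sum((np.asarray(m1) - np.asarray(m2)) ** 2))
    return float(np.sqrt(max(mean_term + bures_sq(S1, S2), 0.0)))


# Illustrative example with commuting (diagonal) covariances
S1 = np.diag([1.0, 4.0])
S2 = np.diag([9.0, 1.0])
frob_sq = float(np.sum((psd_sqrt(S1) - psd_sqrt(S2)) ** 2))

print(bures_sq(S1, S2), frob_sq)                   # both equal 5 up to round-off
print(w2_gaussian([0.0, 0.0], S1, [3.0, 4.0], S2))  # sqrt(25 + 5)
```

The eigendecomposition route is used here only to keep the sketch dependency-light; any routine that returns the principal square root of a symmetric positive semidefinite matrix would serve the same purpose.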

When dealing with general probability distributions beyond the Gaussian case, computing the Wasserstein distance becomes much more difficult and requires solving the Monge or Kantorovich optimal transport problems. The Kantorovich formulation turns this into a linear program with complexity $O(n^3 \log n)$ for discrete measures on $n$ points, which makes exact computation impractical for large-scale applications. This computational burden has motivated the development of entropic regularization techniques that approximate the optimal transport solution while maintaining computational tractability.

The computational tractability of Wasserstein-based methods has been revolutionized by entropic regularization approaches, most notably the introduction of Sinkhorn distances [18], which enable rapid computation of optimal transport through entropic penalties. Recent complexity analyses have improved our understanding of entropic regularized algorithms for optimal transport between discrete probability measures, establishing refined convergence bounds and computational guarantees [19]. The extension to Wasserstein Barycenter computation through fast Sinkhorn-based algorithms [15] has established the algorithmic foundations for practical implementation, maintaining essential geometric properties with significant computational speedups.
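To make the entropic approach concrete, the following is a minimal sketch of the basic Sinkhorn matrix-scaling iteration between two discrete histograms, assuming NumPy. The function name, the five-point grid, the histograms, and the value of the regularization strength `eps` are illustrative assumptions; production code would normally work in the log domain for small `eps` and add a stopping criterion.

```python
import numpy as np


def sinkhorn(a, b, C, eps=0.2, n_iter=1000):
    """Entropy-regularized transport plan between histograms a and b.

    C is the pairwise cost matrix and eps the regularization strength.
    Returns the plan P = diag(u) K diag(v) and its transport cost <P, C>.
    """
    K = np.exp(-C / eps)           # Gibbs kernel
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)            # rescale rows to match marginal a
        v = b / (K.T @ u)          # rescale columns to match marginal b
    P = u[:, None] * K * v[None, :]
    return P, float(np.sum(P * C))


# Illustrative example: two histograms on a 5-point grid in [0, 1]
x = np.linspace(0.0, 1.0, 5)
C = (x[:, None] - x[None, :]) ** 2  # squared Euclidean cost
a = np.array([0.5, 0.3, 0.1, 0.05, 0.05])
b = a[::-1].copy()

P, cost = sinkhorn(a, b, C)
print(np.round(P.sum(axis=1), 6), np.round(P.sum(axis=0), 6), cost)
```

Each iteration costs two matrix-vector products, which is the source of the speedup over solving the linear program exactly; the price is a plan that is blurred relative to the unregularized optimum, more so as `eps` grows.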

2.3. Wasserstein Barycenters and Geometric Averaging

The mathematical foundations for geometric averaging of probability distributions are rooted in optimal transport theory and the concept of Wasserstein Barycenters.

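Given a set of probability measures $\{\alpha_1, \ldots, \alpha_N\}$ in $\mathcal{P}(\Omega)$ with associated weights $\lambda_1, \ldots, \lambda_N \geq 0$ with $\sum_{i=1}^{N} \lambda_i = 1$, their Wasserstein Barycenter $\bar{\alpha}$ is defined as

$$\bar{\alpha} = \arg\min_{\alpha \in \mathcal{P}(\Omega)} \sum_{i=1}^{N} \lambda_i \, W_2^2(\alpha, \alpha_i)$$

where $\bar{\alpha}$ is uniquely defined by the weights $\lambda_1, \ldots, \lambda_N$. Thus, different weights lead to a different Wasserstein Barycenter. The most common situation is to consider equally weighted probability measures, that is $\lambda_i = 1/N$, leading to

$$\bar{\alpha} = \arg\min_{\alpha \in \mathcal{P}(\Omega)} \frac{1}{N} \sum_{i=1}^{N} W_2^2(\alpha, \alpha_i)$$

For univariate Gaussian distributions $\alpha_i = \mathcal{N}(m_i, \sigma_i^2)$, the Wasserstein Barycenter $\bar{\alpha} = \mathcal{N}(\bar{m}, \bar{\sigma}^2)$ has parameters determined by

$$(\bar{m}, \bar{\sigma}) = \arg\min_{m, \sigma} \sum_{i=1}^{N} \lambda_i \left[ (m - m_i)^2 + (\sigma - \sigma_i)^2 \right]$$

The solution is elegantly simple: $\bar{m} = \sum_{i=1}^{N} \lambda_i m_i$ and $\bar{\sigma} = \sum_{i=1}^{N} \lambda_i \sigma_i$. This demonstrates that Wasserstein Barycenters of Gaussian distributions preserve the Gaussian structure while averaging parameters in the natural geometric space.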
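A short numerical check of the univariate Gaussian case is sketched below. The function names and the two example Gaussians are illustrative assumptions. The sketch computes the barycenter parameters as weighted averages of means and standard deviations, verifies that they minimize the weighted sum of squared 2-Wasserstein distances, and contrasts the result with the variance of the plain mixture (the density average), which is much wider because the mixture keeps both modes.

```python
def gaussian_barycenter_1d(means, stds, weights=None):
    """Wasserstein barycenter N(m_bar, s_bar^2) of univariate Gaussians."""
    n = len(means)
    if weights is None:
        weights = [1.0 / n] * n  # equally weighted case
    m_bar = sum(w * m for w, m in zip(weights, means))
    s_bar = sum(w * s for w, s in zip(weights, stds))
    return m_bar, s_bar


def barycenter_objective(m, s, means, stds, weights):
    """Weighted sum of squared 2-Wasserstein distances to each Gaussian."""
    return sum(
        w * ((m - mi) ** 2 + (s - si) ** 2)
        for w, mi, si in zip(weights, means, stds)
    )


# Illustrative example: N(-2, 0.5^2) and N(2, 1.5^2), equal weights
means, stds, weights = [-2.0, 2.0], [0.5, 1.5], [0.5, 0.5]
m_bar, s_bar = gaussian_barycenter_1d(means, stds, weights)

# Variance of the 50/50 mixture of the two densities, for comparison
mixture_var = sum(w * (s ** 2 + m ** 2) for w, m, s in zip(weights, means, stds)) - m_bar ** 2

print(m_bar, s_bar)       # barycenter: mean 0.0, standard deviation 1.0
print(mixture_var ** 0.5)  # mixture standard deviation, about 2.29
```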

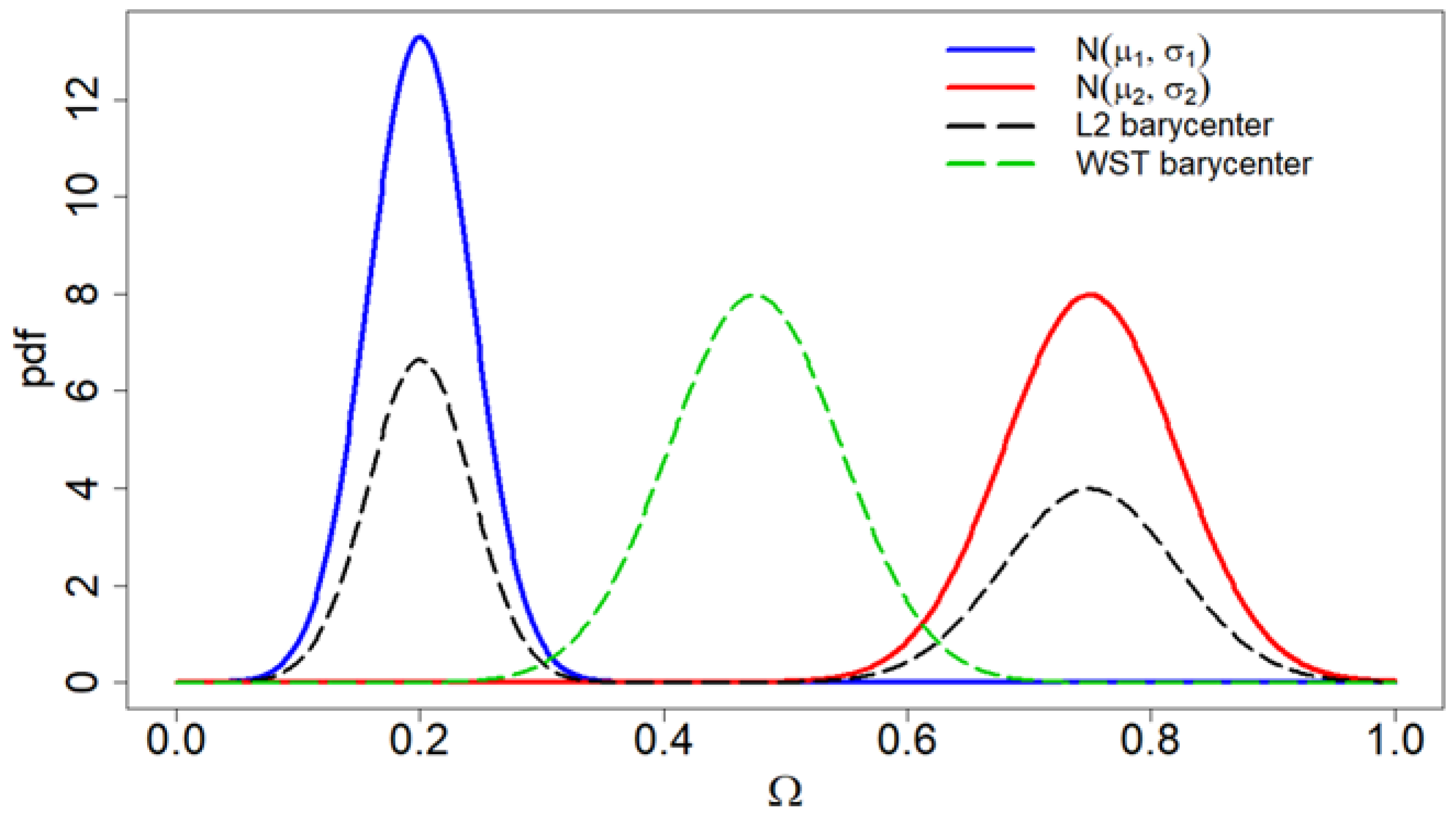

Figure 1 provides a practical and simple example showing the difference between the Wasserstein Barycenter of two (equally weighted) normal distributions and the L2-norm barycenter obtained by considering, as the distance, the L2-norm between the probability density functions aligned on the support. The shape preservation guaranteed by the Wasserstein Barycenter is clearly visible: it is normal, instead of bimodal.

Wasserstein Barycenters generalize McCann’s interpolation to the case of more than two measures, providing a natural extension of mean distributions that preserves geometric structure in probability spaces [20]. Recent theoretical advances have established both the fundamental importance and the computational complexity of these objects, with Wasserstein Barycenters being proven NP-hard to compute in general settings [21], highlighting the necessity for efficient algorithmic approximations.
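One standard approximation scheme is the entropic barycenter computed by iterative Bregman projections, which reuses the Sinkhorn scaling updates with a geometric-mean step in between. The sketch below assumes NumPy and histograms on a shared one-dimensional grid; the function name, grid, bump shapes, and `eps` value are illustrative assumptions, and it is not claimed to be the exact procedure used by SWBBO. It also contrasts the result with the plain average of the two densities, which stays bimodal.

```python
import numpy as np


def sinkhorn_barycenter(A, C, weights, eps=0.01, n_iter=500):
    """Entropic Wasserstein barycenter of the histograms in the rows of A.

    A has shape (N, n); C is the (n, n) cost matrix on the shared grid;
    weights has shape (N,) and sums to one.
    """
    K = np.exp(-C / eps)                       # Gibbs kernel
    v = np.ones_like(A)
    for _ in range(n_iter):
        u = A / (v @ K.T)                      # u_s = a_s / (K v_s)
        Ktu = u @ K                            # rows hold K^T u_s
        b = np.exp(weights @ np.log(Ktu))      # weighted geometric mean
        v = b / Ktu                            # v_s = b / (K^T u_s)
    return b


# Illustrative example: two Gaussian-shaped bumps on a grid in [0, 1]
x = np.linspace(0.0, 1.0, 101)
C = (x[:, None] - x[None, :]) ** 2


def bump(center, width=0.05):
    h = np.exp(-((x - center) ** 2) / (2.0 * width ** 2))
    return h / h.sum()


A = np.vstack([bump(0.25), bump(0.75)])
weights = np.array([0.5, 0.5])

bary = sinkhorn_barycenter(A, C, weights)
mix = weights @ A                              # plain average of the densities

# Barycenter concentrates between the inputs; the mixture keeps two modes
print(bary[50] > bary[25], mix[50] < mix[25])
```

The entropic term blurs the barycenter relative to the exact one, so the returned histogram is somewhat wider than either input; smaller `eps` reduces the blur at the cost of slower convergence and a need for log-domain arithmetic.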

2.4. Ambiguity Set Construction and Distance Metrics

Constructing ambiguity sets is one of the most important design decisions in DRO, since different distance metrics create entirely different optimization problems and computational challenges. Ref. [22] proposed a data-driven approach that relies on statistical hypothesis tests to build uncertainty sets that balance robustness with performance. This yields principled statistical frameworks instead of arbitrary specifications: historical data are used to determine appropriate robustness levels, and the testing procedures can handle intricate dependency structures.

There are several basic ways to construct ambiguity sets in DRO. Moment-based ambiguity sets work by constraining the mean, variance, and higher-order moments. While these are computationally manageable, they often miss important aspects of the distribution. Divergence-based ambiguity sets create neighborhoods around reference measures using measures such as the Kullback–Leibler divergence [17] or the Total Variation distance. These have solid theoretical backing but can be hard to interpret geometrically. Wasserstein-based ambiguity sets offer flexible and intuitive frameworks that use optimal transport metrics to measure distributional distances, which provides both computational feasibility and meaningful geometric understanding.

Wasserstein-based methods have become popular among these options because they can handle realistic transportation costs and come with strong theoretical guarantees.
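As a small illustration of a Wasserstein-ball ambiguity set, the sketch below checks whether a candidate empirical distribution lies within a given radius of a reference sample. It relies on the fact that, on the real line and for two samples of equal size with uniform weights, the optimal coupling matches sorted values. The function names, the sample values, and the radii are illustrative assumptions.

```python
def wasserstein_1d(xs, ys, p=1):
    """p-Wasserstein distance between two equal-size empirical samples on the line."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    xs, ys = sorted(xs), sorted(ys)
    # In one dimension the optimal coupling pairs the sorted samples.
    cost = sum(abs(x - y) ** p for x, y in zip(xs, ys)) / len(xs)
    return cost ** (1.0 / p)


def in_wasserstein_ball(candidate, reference, radius, p=1):
    """True if the candidate sample lies in the ball around the reference sample."""
    return wasserstein_1d(candidate, reference, p) <= radius


# Illustrative example: a reference sample and a shifted copy of it
reference = [0.1, 0.4, 0.5, 0.9]
shifted = [x + 0.2 for x in reference]

dist = wasserstein_1d(shifted, reference)
print(dist)  # about 0.2: every point moved by 0.2
print(in_wasserstein_ball(shifted, reference, radius=0.25))
print(in_wasserstein_ball(shifted, reference, radius=0.10))
```

The distance equals the size of the shift, which is the transportation-cost intuition behind Wasserstein balls: the radius bounds how far, on average, probability mass may be moved away from the reference distribution.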

Figure 2 shows the three main ways to construct ambiguity sets in DRO, illustrating how moment-based, divergence-based, and Wasserstein-based methods create different-sized uncertainty neighborhoods around the reference distribution. The figure highlights the trade-offs between computational ease, geometric understanding, and methodological flexibility across these different approaches.

Computational Speed. How fast and practical it is to solve the resulting optimization problem.

Geometric Clarity. How easy it is to understand the geometric meaning of distributional distances and uncertainty neighborhoods (Wasserstein methods give clear transportation cost intuition, while moment-based methods involve abstract statistical constraints).

Adaptability. How well the method can handle different problem types, cost functions, and distributional characteristics (Wasserstein methods can adjust cost functions for specific uses, while moment-based methods are stuck with fixed moment structures).

Building on these foundations, Ref. [24] made significant theoretical contributions to optimal transport-based DRO, establishing crucial structural properties of the value function in Wasserstein-based DRO problems and developing efficient iterative solution schemes. The computational implications of different ambiguity set constructions vary significantly depending on the chosen distance metric, with Wasserstein-based methods requiring more sophisticated optimal transport algorithms but providing valuable geometric insights, particularly in high-dimensional settings where traditional distance metrics may be overly conservative.

2.5. BO Challenges

Traditional BO approaches run into several basic problems that have pushed researchers to develop distributionally robust extensions. DRBO methods specifically handle uncertainty in the underlying probability distribution of the objective function, which is affected by unknown stochastic noise. Ref. [9] shows how Wasserstein-based distributionally robust approaches can give theoretical guarantees even when the model is misspecified. Meanwhile, Ref. [4] lays out the mathematical foundations for incorporating distributional ambiguity. More generally, Wasserstein Distributionally Robust Optimization (WDRO) has become a solid framework for dealing with distributional uncertainty across different optimization problems [25,26]. It provides robustness guarantees that stay valid when the true distribution falls within a specified Wasserstein ball around the empirical distribution. This way of thinking about distributional robustness changes the focus from obtaining a perfect model specification to developing optimization strategies that work well across a range of plausible distributions. This makes the approach more practical for real-world applications where distributional assumptions are inherently uncertain.

2.6. Acquisition Functions for DRBO

Standard acquisition functions must be adapted for the distributionally robust setting. The key challenge is incorporating distributional uncertainty into the acquisition strategy while maintaining computational tractability.

For UCB-based approaches, the robust acquisition function typically takes the following form:

$$a_t(x) = \inf_{Q \in \mathcal{U}} \mathbb{E}_{c \sim Q}\left[ \mu_t(x, c) + \beta_t \, \sigma_t(x, c) \right]$$

where $\mathcal{U}$ is the ambiguity set over distributions of the context, which is unknown a priori, $\mu_t$ and $\sigma_t$ are the GP’s posterior mean and standard deviation after $t$ observations, and $\beta_t$ is a parameter controlling the exploration–exploitation trade-off.

However, simple empirical averaging over observed contexts may not capture the underlying structure of the context distribution, motivating the adoption of the Wasserstein ball ambiguity set and, due to the need for computational efficiency, the Sinkhorn regularization.
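A worst-case expected UCB can be sketched on a discretized problem as follows. This is a simplified illustration rather than the acquisition function proposed in this paper: the ambiguity set is replaced by a small finite list of candidate context distributions, the posterior means and standard deviations are made-up numbers standing in for a fitted GP, the objective is treated as a payoff to be maximized, and the function name and `beta` value are hypothetical.

```python
def robust_ucb(mu, sigma, candidates, beta=2.0):
    """Worst-case expected UCB for each decision.

    mu[x][c], sigma[x][c]: posterior mean and standard deviation on a grid
    of decisions x and contexts c; candidates: list of context distributions
    acting as a finite stand-in for the ambiguity set.
    """
    scores = []
    for mu_row, sigma_row in zip(mu, sigma):
        ucb_row = [m + beta * s for m, s in zip(mu_row, sigma_row)]
        expected = [sum(u * q for u, q in zip(ucb_row, dist)) for dist in candidates]
        scores.append(min(expected))  # worst case over the candidate set
    return scores


# Illustrative posterior for 2 decisions and 2 contexts
mu = [[1.0, 0.0], [0.6, 0.5]]
sigma = [[0.1, 0.1], [0.1, 0.1]]
candidates = [[0.8, 0.2], [0.3, 0.7]]  # first entry plays the role of the empirical distribution

robust = robust_ucb(mu, sigma, candidates)
nominal = robust_ucb(mu, sigma, candidates[:1])  # only the empirical distribution

x_robust = max(range(len(robust)), key=robust.__getitem__)
x_nominal = max(range(len(nominal)), key=nominal.__getitem__)
print(robust, x_robust)    # robust scores favor decision 1
print(nominal, x_nominal)  # nominal scores favor decision 0
```

In this toy case the nominal criterion queries the decision that looks best under the empirical context distribution, while the robust criterion prefers the decision whose optimistic value holds up when the context distribution shifts.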

2.7. Integrating Distributionally Robust Principles into BO

Combining BO with DRO has emerged as a framework for tackling both parametric uncertainty and distributional uncertainty in data-driven optimization problems. Ref. [27] introduced Bayesian Distributionally Robust Optimization (BDRO), which builds a comprehensive theoretical foundation that differs from traditional DRO approaches by including Bayesian estimation of unknown parametric distributions. Their framework builds ambiguity sets using parametric distributions as reference points, which allows for robust optimization that keeps the benefits of Bayesian estimation when data are scarce while providing robustness against model uncertainty.

Extending distributionally robust principles to BO is a recent development that tackles expensive function evaluation under distributional uncertainty. Ref. [28] worked on black-box optimization problems that include both design variables and uncertain context variables. They handled both aleatoric and epistemic uncertainty sources using adaptive and safe optimization strategies in high-dimensional spaces. Ref. [9] made important progress by tackling situations where context distributions are uncertain but are known to lie within ambiguity sets defined as balls in the Wasserstein space. The authors established sublinear regret bounds that provide theoretical guarantees on the convergence of DRBO algorithms. Together, these developments show that DRBO has matured into a unified framework that can handle both parametric and distributional uncertainties in complex decision-making situations.

DRBO brings several unique challenges compared to standard BO:

Ambiguity Set Construction: The choice of ambiguity set $\mathcal{U}$ significantly impacts the robustness–performance trade-off. Common choices are Wasserstein balls, ϕ-divergence balls, and moment-based sets [23].

Computational Complexity: The min–max formulation creates a nested optimization problem that is still computationally difficult even with recent algorithmic improvements. Modern approaches use dual formulations and cutting-plane methods to deal with the computational burden of continuous ambiguity sets [11,29,30,31], but scaling up to high-dimensional problems is still an active area of research.

Conservative Solutions: DRBO can produce overly conservative solutions when the ambiguity set is not sized correctly. Recent work has focused on developing principled approaches for ambiguity set calibration that balance conservatism with good performance. This includes adaptive shrinking strategies and risk-aware formulations [32,33]. One key limitation is sample efficiency: under distributional uncertainty, the algorithm takes longer to converge than standard Bayesian Optimization, because it must protect itself against multiple possible scenarios. To address this problem, current research is working on better acquisition function designs and multi-fidelity approaches that can reduce the computational burden that comes with distributional robustness [34].

2.8. Computational Challenges and High-Dimensional Considerations

The curse of dimensionality creates fundamental challenges in DRO, since traditional distance metrics can produce prohibitively large ambiguity sets and overly conservative performance guarantees in high-dimensional spaces. Ref. [35] tackled this critical limitation by developing the first finite-sample guarantees for Wasserstein DRO that break the curse of dimensionality. Their work demonstrates how the out-of-sample performance of robust solutions depends on sample size, uncertainty dimension, and loss function complexity in a nearly optimal manner, providing theoretical foundations that make high-dimensional Wasserstein DRO practically viable. The theoretical foundations established by Ref. [23] provided essential performance guarantees and tractable reformulations for data-driven DRO using the Wasserstein metric, enabling practical implementations that have significantly influenced subsequent algorithmic developments. Ref. [25] showed that data-driven Wasserstein DRO can achieve tractable reformulations and strong performance guarantees when the underlying geometry of the problem is properly accounted for. They found that Wasserstein ambiguity sets act as a natural regularizer, which helps prevent overfitting when working with limited samples.

Recent developments include advanced generic column generation algorithms for high-dimensional multimarginal optimal transport problems, which allow for accurate mesh-free Wasserstein Barycenters and cubic Wasserstein splines [36]. The comprehensive theoretical framework from Ref. [10] continues to support modern computational optimal transport applications with solid convergence properties and approximation bounds for practical data science uses.

2.9. Research Gaps

This literature review shows critical computational and theoretical gaps in DRBO that standard approaches simply cannot address. Current robust BO methods face two key problems: first, naive aggregation strategies like uniform weighting across uncertainty sets completely ignore how probability spaces are actually structured, which leads to suboptimal acquisition functions that work less efficiently than methods that understand distributions better. Second, existing Wasserstein-based approaches need to solve transport problems at every single BO iteration, which creates major bottlenecks and makes them too slow to use in practice. The real problem here is keeping distributional geometry intact during optimization. When uncertainty sets contain distributions that have completely different support or covariance structures, simply averaging them destroys the geometric relationships that actually drive good exploration–exploitation trade-offs. Our Sinkhorn-regularized approach tackles this computational barrier head-on by approximating Wasserstein Barycenters while keeping geometric fidelity within ε-accuracy of exact solutions. But we still face three unresolved challenges: handling distributional uncertainty sets that span different support dimensions, keeping barycenter approximation quality stable as the ambient space dimension grows, and developing adaptive regularization parameters that can balance computational speed against geometric precision across different types of problems. This points to a clear need for developing computationally practical methods that preserve essential distributional geometry without giving up the convergence guarantees that make BO useful for expensive optimization problems.

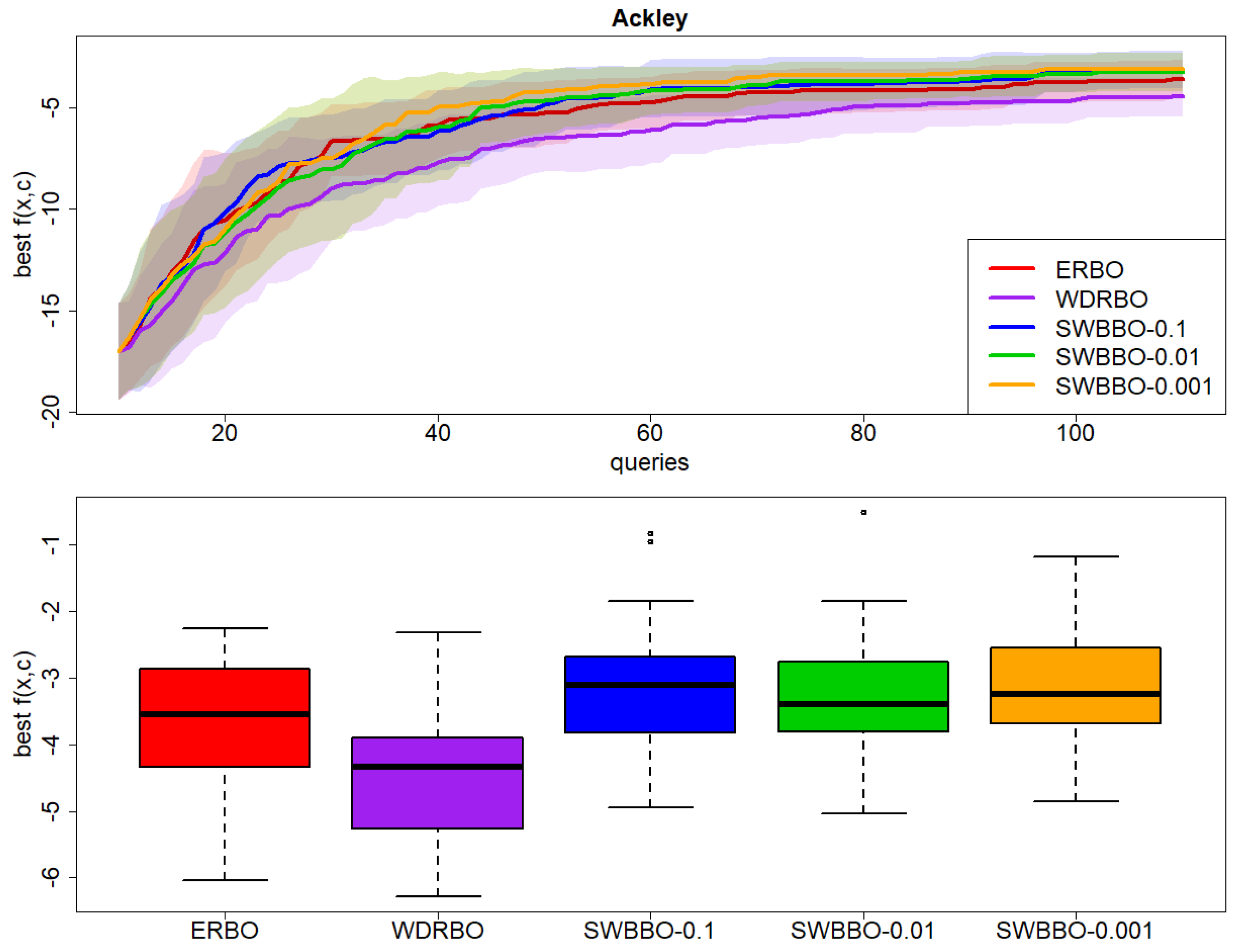

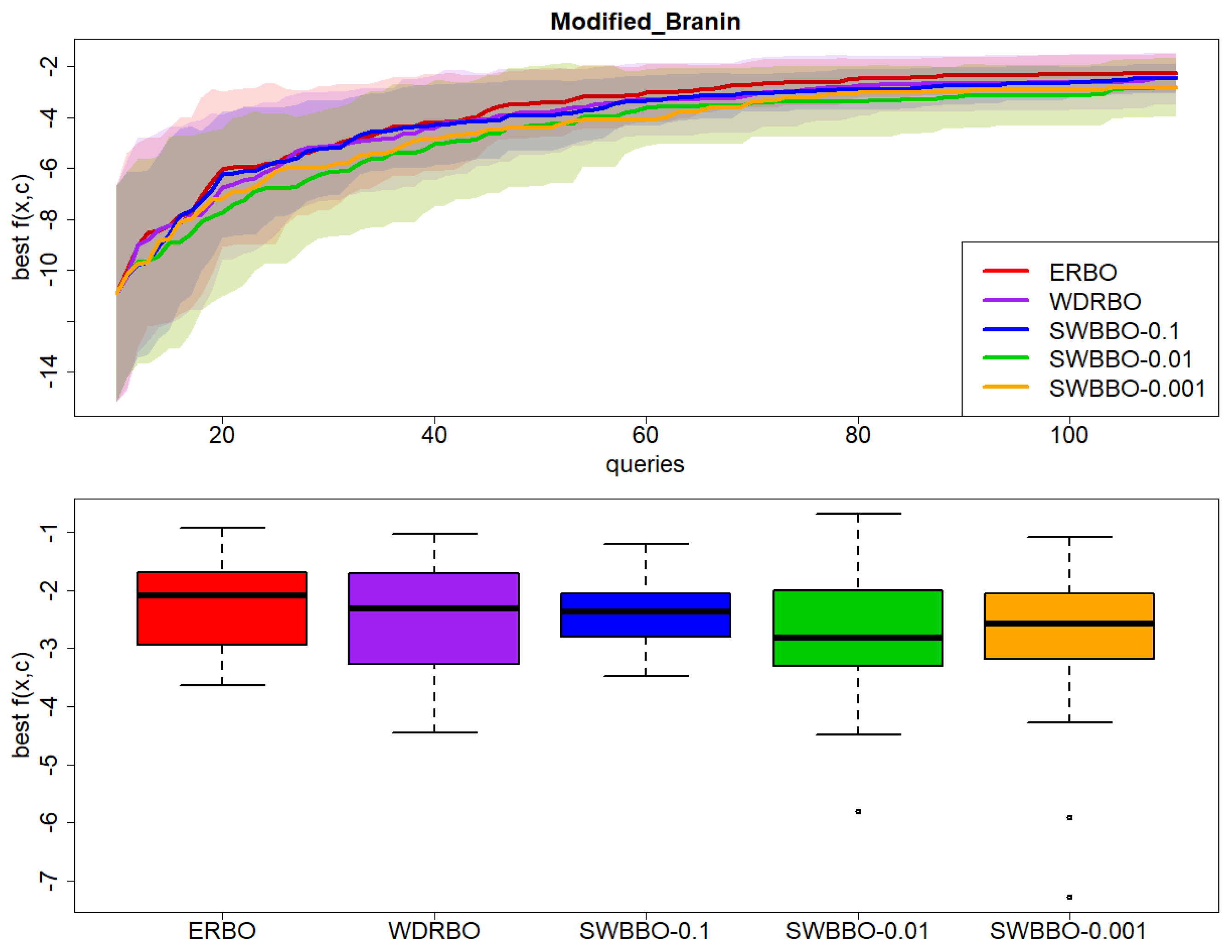

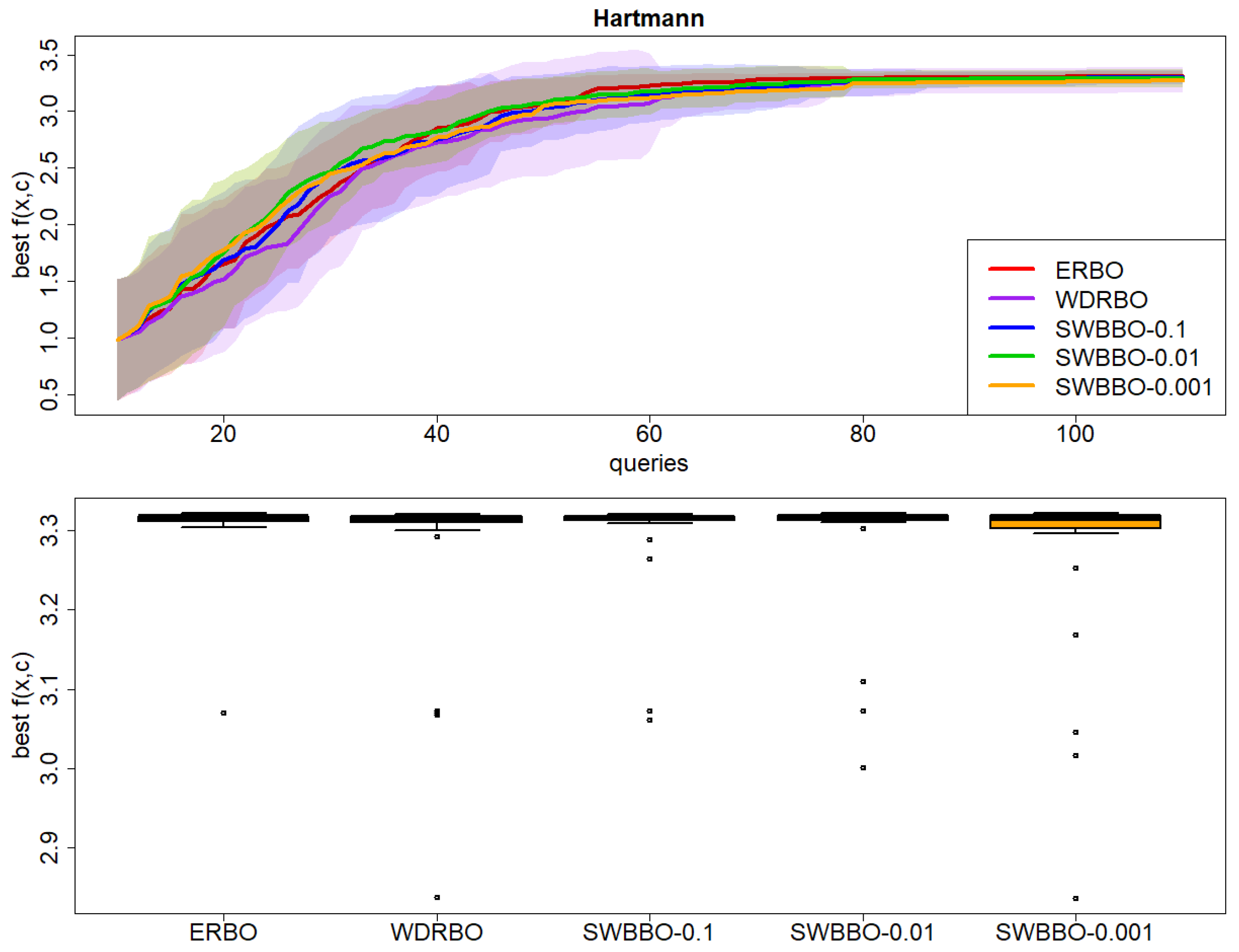

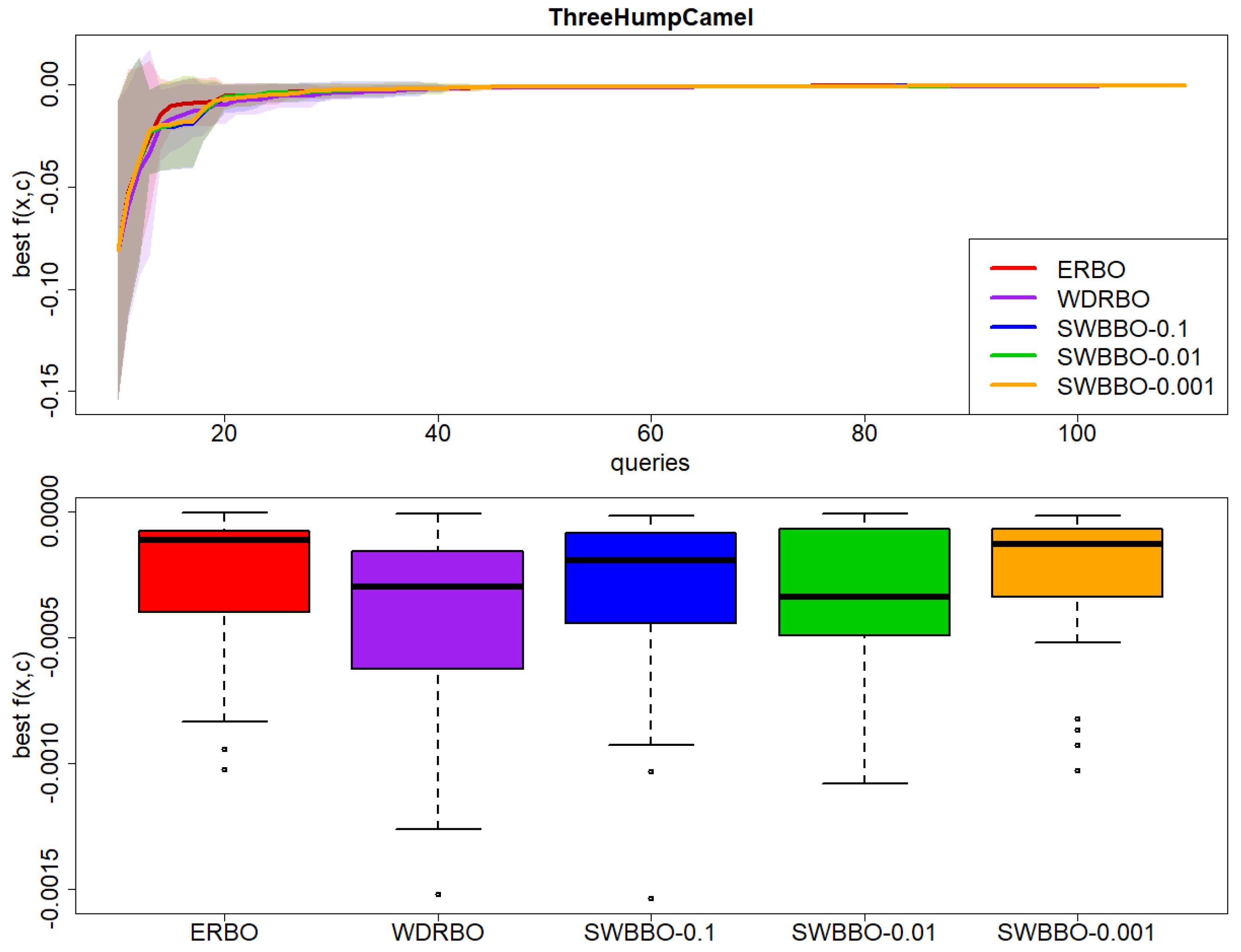

5. Experiments and Results

Following the experimental setting presented in Ref. [3], we have compared three DRBO algorithms on five benchmark test functions (Ackley, Modified Branin, Hartmann, Three Hump Camel, and Six Hump Camel) and three real-life related problems, specifically Continuous Vendor, Portfolio, and Portfolio Normal Optimization. The algorithms compared are Empirical Risk Bayesian Optimization (ERBO), Wasserstein Distributionally Robust Bayesian Optimization (WDRBO), and SWBBO (with three parameter settings: 0.1, 0.01, and 0.001). Each algorithm is evaluated over 30 independent runs to support statistical comparison. The baseline methods, ERBO and WDRBO, are taken from the implementation provided by the authors of Ref. [9], which is freely accessible on their GitHub repository.

The following table summarizes the best objective value observed at the end of the optimization processes performed by the different algorithms (median and standard deviation over 30 independent runs), separately for each test problem.

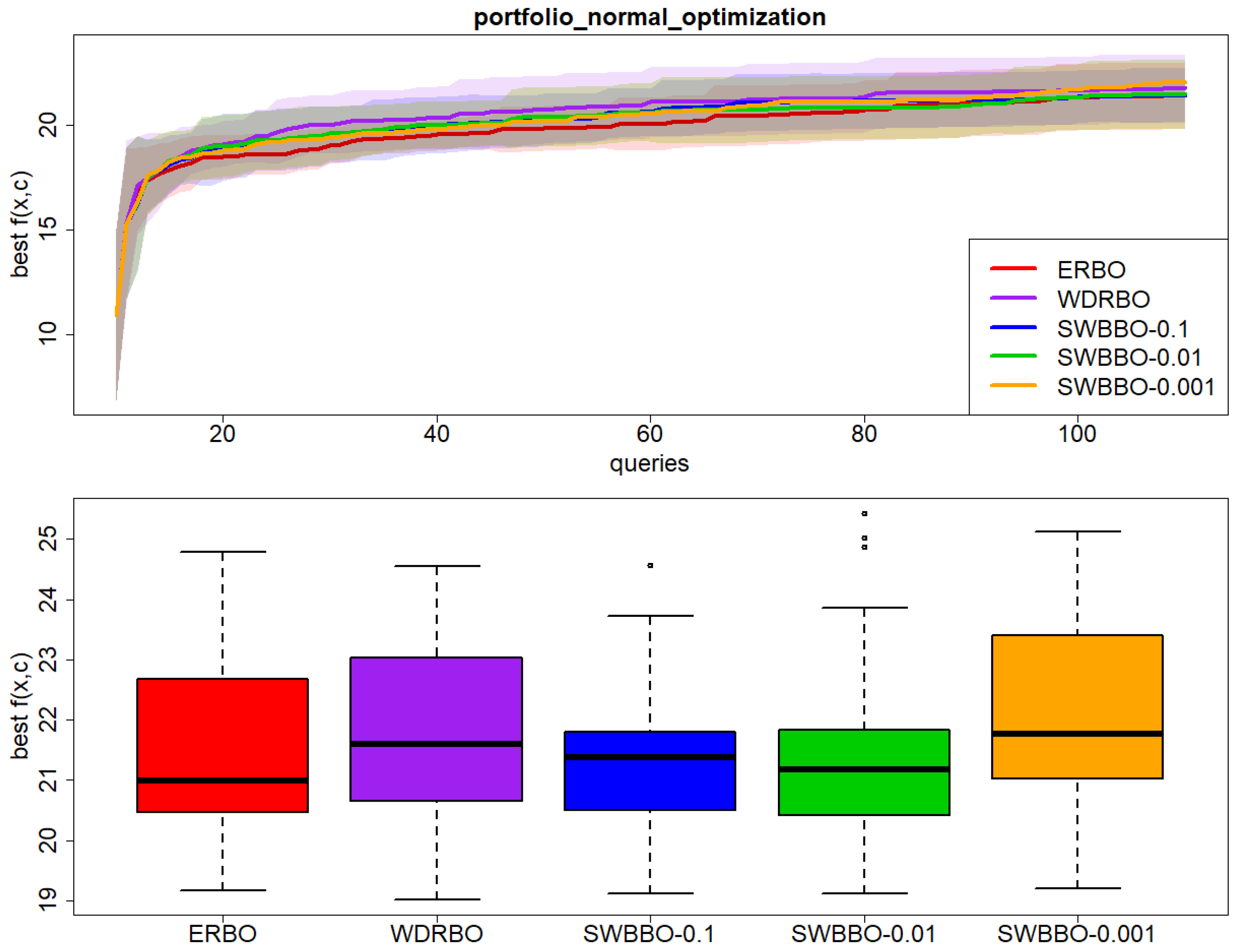

As reported in Ref. [9], ERBO is always better (on median) than WDRBO, apart from the case of Portfolio Normal Optimization. Moreover, in our experiments, which are more extensive than those reported in Ref. [9], this difference was statistically significant (according to a Wilcoxon test) in the following cases: Ackley (p-value = 0.001), Three Hump Camel (p-value = 0.046), and Six Hump Camel (p-value = 0.015), while it was not statistically significant in the following: Modified Branin (p-value = 0.532), Hartmann (p-value = 0.260), Continuous Vendor (p-value = 0.605), Portfolio Optimization (p-value = 0.665), and Portfolio Normal Optimization (p-value = 0.254).

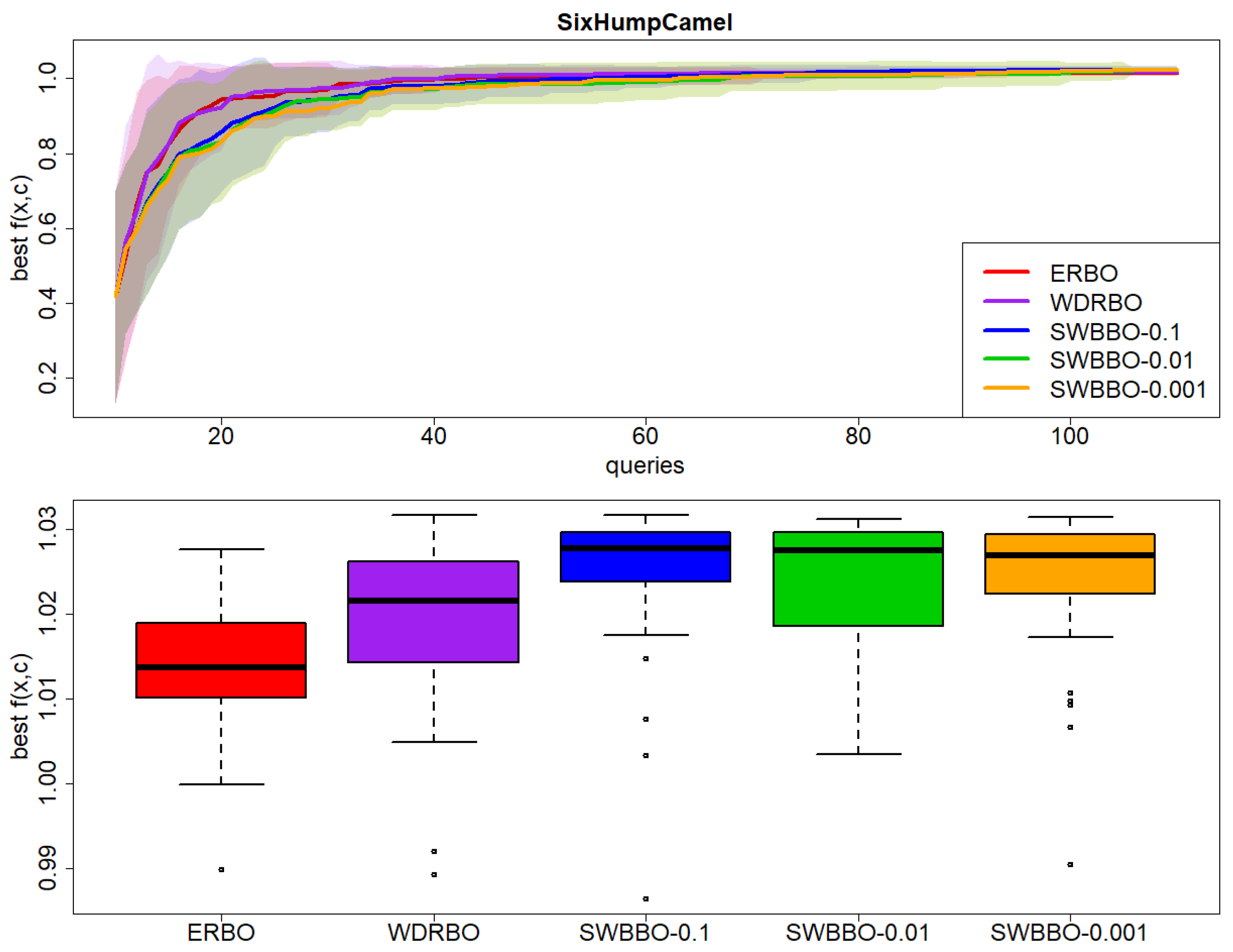

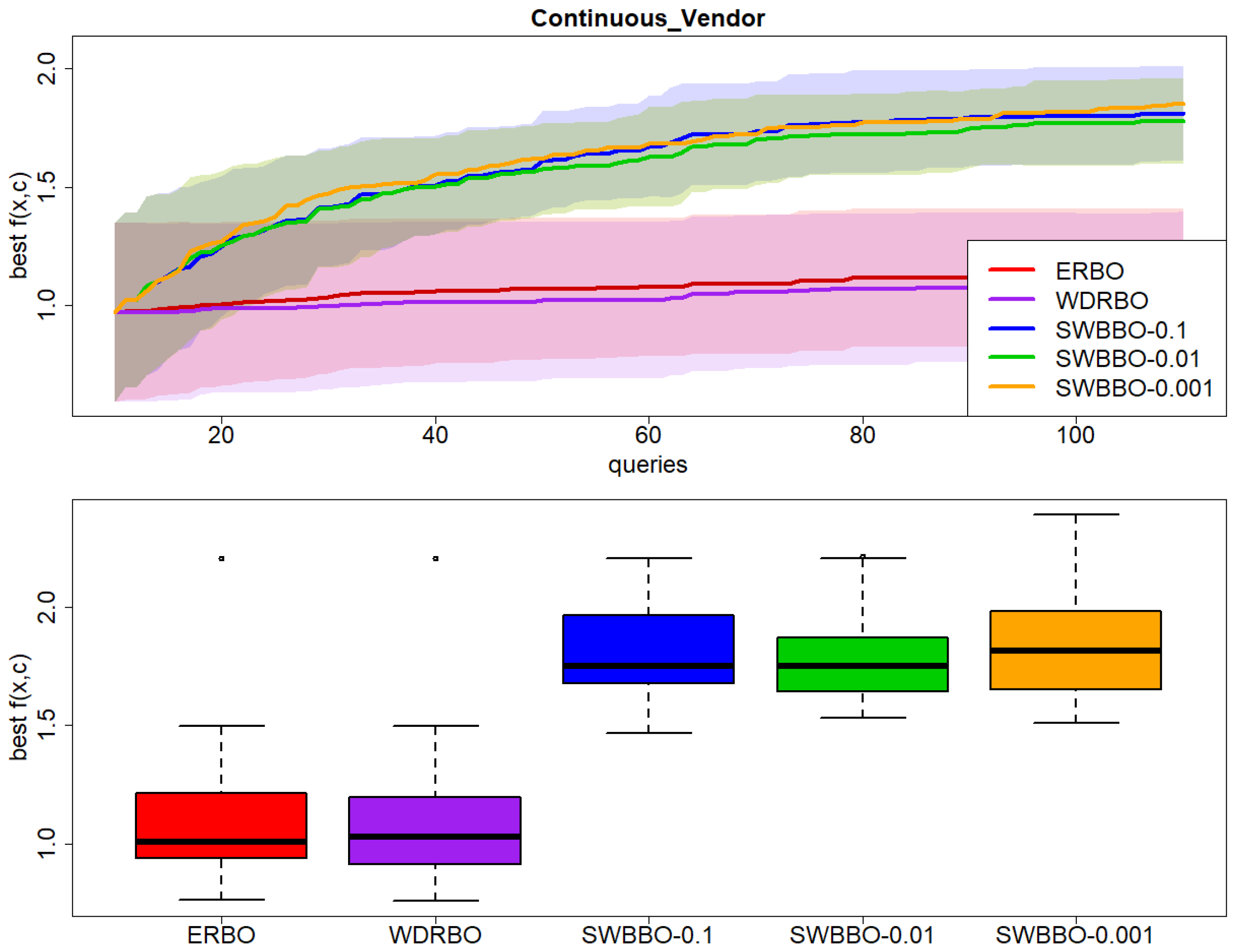

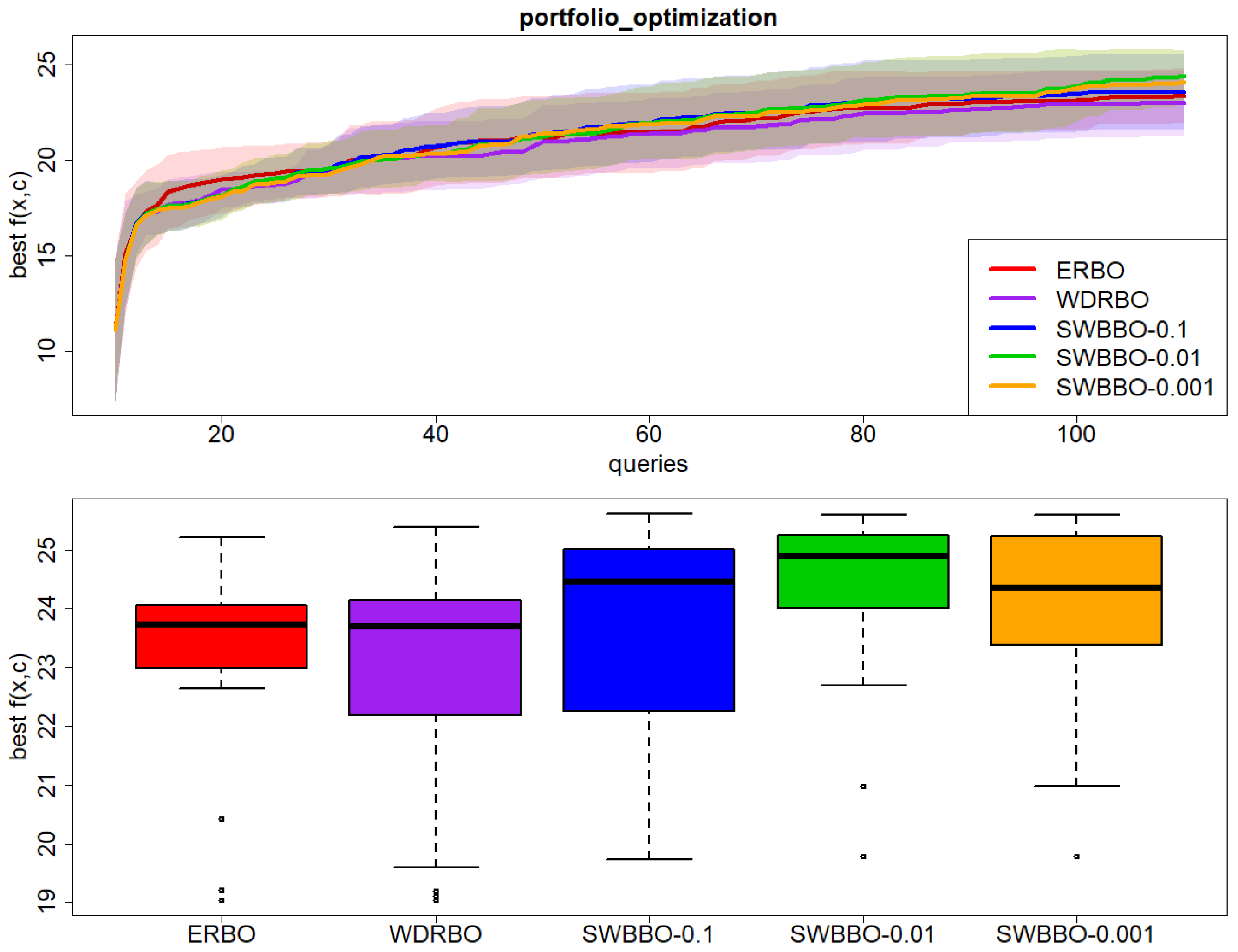

When ERBO is compared against the best among the three SWBBO alternatives (Table 1), its results are better (on median) only in the case of Modified Branin, but this difference is not statistically significant (p-value = 0.290). In all the other test problems, the best SWBBO alternative is (on median) better than ERBO; more precisely, it is significantly better in the following: Six Hump Camel (p-value < 0.001), Continuous Vendor (p-value < 0.001), and Portfolio Optimization (p-value < 0.001), while the difference is not statistically significant in Ackley (p-value = 0.127), Hartmann (p-value = 0.708), Three Hump Camel (p-value = 0.440), and Portfolio Optimization (p-value = 0.088). Results are also reported in Figures 4–11.

Figure 4.

Results on Ackley over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 4.

Results on Ackley over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 5.

Results on Modified_Branin over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 5.

Results on Modified_Branin over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 6.

Results on Hartmann over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 6.

Results on Hartmann over 30 independent runs: (top) best observed value of with respect to BO queries and (bottom) boxplot of the final best value of .

Figure 7.

Results on Three Hump Camel over 30 independent runs: (top) best observed value with respect to BO queries and (bottom) boxplot of the final best value.

Figure 8.

Results on Six Hump Camel over 30 independent runs: (top) best observed value with respect to BO queries and (bottom) boxplot of the final best value.

Figure 9.

Results on Continuous Vendor over 30 independent runs: (top) best observed value with respect to BO queries and (bottom) boxplot of the final best value.

Figure 10.

Results on Portfolio Optimization over 30 independent runs: (top) best observed value with respect to BO queries and (bottom) boxplot of the final best value.

Figure 11.

Results on Portfolio Normal Optimization over 30 independent runs: (top) best observed value with respect to BO queries and (bottom) boxplot of the final best value.

Finally, Table 2 summarizes the results related to the Cumulative Stochastic Regret (CSR) and computational time. ERBO offered a lower median CSR more frequently than the other methods, even in cases in which SWBBO provided a better best observed value. The most plausible reason is a more explorative behaviour of SWBBO after the identification of its own best observed value.

As far as computational time is concerned, there are no relevant differences between the approaches, nor is there an evident relationship between CSR and time. As shown in Table 2, SWBBO1 and SWBBO3 are often faster than or comparable to the baseline methods. SWBBO2, which uses a moderate regularization value, incurs slightly higher cost in some cases but offers improved robustness. Thus, computational trade-offs depend on the specific configuration and should be considered jointly with regret performance.

5.1. Evaluation Metrics

To evaluate how well our computed barycenters and transport maps perform, we use two established metrics from the entropic optimal transport literature [46]. The BW₂²-UVP (Bures–Wasserstein Unexplained Variance Percentage) metric measures the quality of the generated barycenter compared to the ground truth. It is built on the Bures–Wasserstein metric, which is computed from the respective means and covariances of the two distributions, and it provides a normalized measure of the distance between the computed and true barycenter, expressed as a percentage of the target distribution’s variance. The L₂-UVP (L₂ Unexplained Variance Percentage) metric assesses the quality of individual transport maps from each marginal distribution to the barycenter: it compares the learned transport map from the marginal to the barycenter with the ground truth mapping. This metric captures how well the learned transport maps approximate the optimal transport plans, normalized by the variance of the target distribution.
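For reference, the two metrics can be written as follows. This is a sketch in the form commonly used in the neural optimal transport literature, not a restatement of the exact expressions in [46]. The symbols $\hat{\mathbb{Q}}$ (computed barycenter), $\mathbb{Q}^{*}$ (ground truth barycenter), $\hat{T}_k$ and $T_k^{*}$ (learned and ground truth maps from the k-th marginal $\mathbb{P}_k$), and the ½-scaled quadratic cost are assumptions made here.

```latex
\begin{align}
\mathrm{BW}_2^2\text{-UVP}\big(\hat{\mathbb{Q}}, \mathbb{Q}^{*}\big)
  &= 100 \cdot \frac{\mathrm{BW}_2^2\big(\hat{\mathbb{Q}}, \mathbb{Q}^{*}\big)}
                    {\tfrac{1}{2}\,\mathrm{Var}(\mathbb{Q}^{*})}\,\%, \\
\mathrm{BW}_2^2(\mathbb{P}, \mathbb{Q})
  &= \tfrac{1}{2}\lVert \mu_{\mathbb{P}} - \mu_{\mathbb{Q}} \rVert^2
   + \tfrac{1}{2}\operatorname{Tr}\Sigma_{\mathbb{P}}
   + \tfrac{1}{2}\operatorname{Tr}\Sigma_{\mathbb{Q}}
   - \operatorname{Tr}\big(\Sigma_{\mathbb{P}}^{1/2}\,\Sigma_{\mathbb{Q}}\,\Sigma_{\mathbb{P}}^{1/2}\big)^{1/2}, \\
\mathcal{L}_2\text{-UVP}\big(\hat{T}_k\big)
  &= 100 \cdot \frac{\lVert \hat{T}_k - T_k^{*} \rVert^2_{\mathcal{L}_2(\mathbb{P}_k)}}
                    {\mathrm{Var}(\mathbb{Q}^{*})}\,\%.
\end{align}
```

Here $\mu$ and $\Sigma$ denote the mean and covariance of the subscripted distribution and $\mathrm{Var}(\mathbb{Q}^{*}) = \operatorname{Tr}\Sigma_{\mathbb{Q}^{*}}$ is its total variance. Under this convention, a BW₂²-UVP of 0% means that the mean and covariance of the computed barycenter match those of the ground truth.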

For illustration, we compute these metrics for the Ackley function across different regularization parameter values. The results demonstrate how regularization strength affects both barycenter quality and transport map accuracy.

Table 3 shows how well the barycenter computation and transport map estimation perform for the Ackley test function across different regularization parameter values (ε = 0.1, 0.01, 0.001), using the two evaluation metrics, BW₂²-UVP and L₂-UVP. The BW₂²-UVP metric varies with regularization strength: the larger values (ε = 0.1, 0.01) yield acceptable barycenter quality, with BW₂²-UVP values of 0.35% and 0.77%, respectively, while the smallest value (ε = 0.001) yields an essentially exact barycenter approximation, with BW₂²-UVP = 0.0000%. The L₂-UVP metric stays consistently around 7–8% across all regularization levels, which indicates that the learned transport maps maintain reasonable accuracy regardless of the regularization parameter. Since a smaller ε corresponds to weaker entropic regularization, these results show that the weakest regularization (ε = 0.001) gives the best barycenter quality for the Ackley function, although the trend is not monotone (0.35% at ε = 0.1 versus 0.77% at ε = 0.01), while transport map fidelity stays stable. This suggests our method performs robustly across different regularization settings for this test problem.

5.2. Sinkhorn vs. LP Barycenter Comparison

Table 4 shows a quantitative comparison between barycenters computed using the Sinkhorn Wasserstein method and those obtained through Linear Programming (LP) for the Ackley test function across different regularization parameters (γ = 0.1, 0.01, 0.001). We use three metrics to evaluate them: Wasserstein Distance (WD), Mean Euclidean Distance (MED), and Maximum Mean Discrepancy (MMD). The results demonstrate that the regularization parameter has a significant impact on the agreement between Sinkhorn and LP Barycenters. For the Wasserstein Distance, the deviation decreases as regularization becomes weaker: from 0.002593 at γ = 0.1 to 0.001621 at γ = 0.01, and further to 0.001208 at γ = 0.001. This pattern indicates that, for smaller γ values, the two methods agree more closely under the optimal transport geometry. The Mean Euclidean Distance behaves the same way, dropping from 0.010303 at γ = 0.1 to 0.007938 at γ = 0.01, and then to 0.003189 at γ = 0.001. That is, the pointwise differences between the barycenters become much smaller as the regularization weakens. The MMD Approximation, which measures distributional similarity through kernel-based comparison, shows the largest relative improvement: from 0.000604 at γ = 0.1 to 0.000299 at γ = 0.01, and finally to 0.000059 at γ = 0.001.

Overall, the results show that both Sinkhorn and LP methods produce increasingly aligned barycenters as the regularization parameter becomes smaller, with the strongest agreement achieved at γ = 0.001 across all evaluation metrics for the Ackley function.

The BW₂²-UVP and L₂-UVP metrics may not fully capture the convergence behaviour of entropic optimal transport, since they mainly look at pointwise differences rather than the underlying geometric structure of the barycenter computation. The WD, MED, and MMD metrics appear better suited for this evaluation because they measure how well the distributions align and whether the geometry stays consistent between different ways of computing Barycenters.
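The three agreement metrics can be sketched for two barycenters represented as equal-size lists of one-dimensional support points. The exact definitions behind Table 4 are not restated in this section, so the choices below are assumptions: WD is the 1-D Wasserstein-1 distance between sorted samples, MED is the mean distance between index-matched points, and MMD uses a Gaussian kernel with a hypothetical `bandwidth` parameter. All function names are illustrative.

```python
import math


def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size 1-D samples (sorted matching)."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes samples of equal size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def mean_euclidean_distance(a, b):
    """Mean distance between index-matched points (no re-ordering)."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes samples of equal size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def mmd_squared(a, b, bandwidth=1.0):
    """Biased estimate of the squared MMD with a Gaussian kernel."""
    def mean_kernel(u, v):
        total = sum(math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2)) for x in u for y in v)
        return total / (len(u) * len(v))

    return mean_kernel(a, a) + mean_kernel(b, b) - 2.0 * mean_kernel(a, b)


# Toy support points standing in for a Sinkhorn and an LP barycenter.
sinkhorn_points = [0.0, 1.0, 2.0]
lp_points = [2.5, 0.5, 1.5]
print(wasserstein_1d(sinkhorn_points, lp_points))
print(mean_euclidean_distance(sinkhorn_points, lp_points))
print(mmd_squared(sinkhorn_points, lp_points))
```

In this toy case WD is smaller than MED because WD compares the two point sets as distributions, while MED depends on how the points happen to be indexed. All three quantities vanish when the two barycenters coincide.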

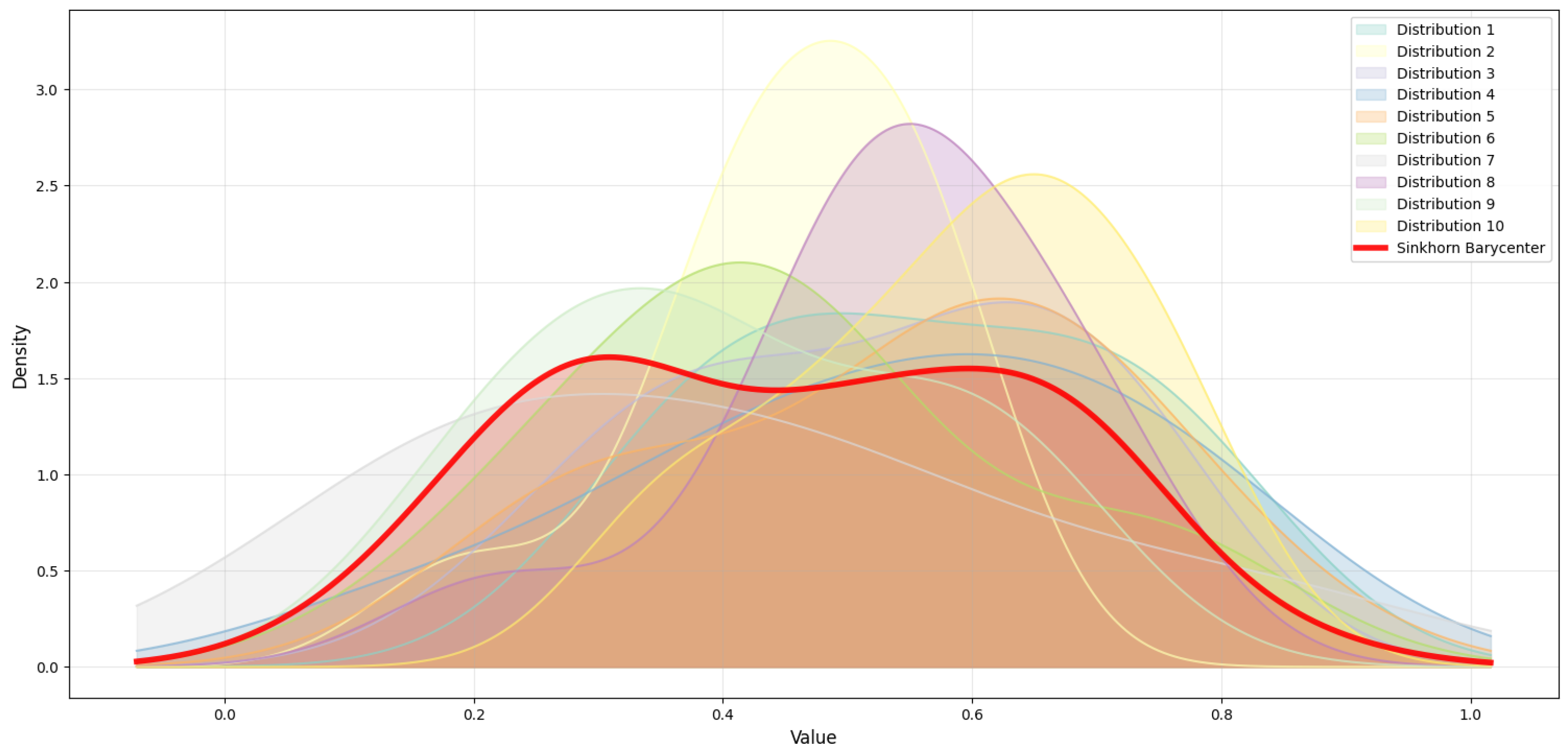

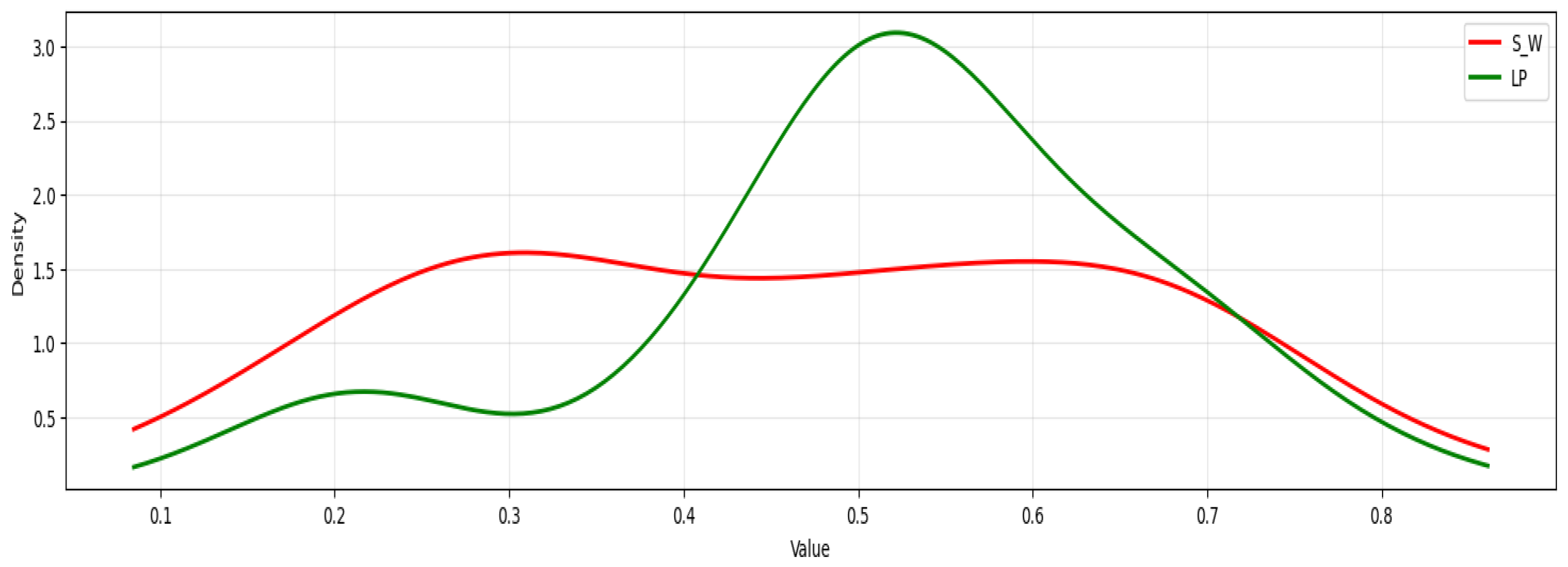

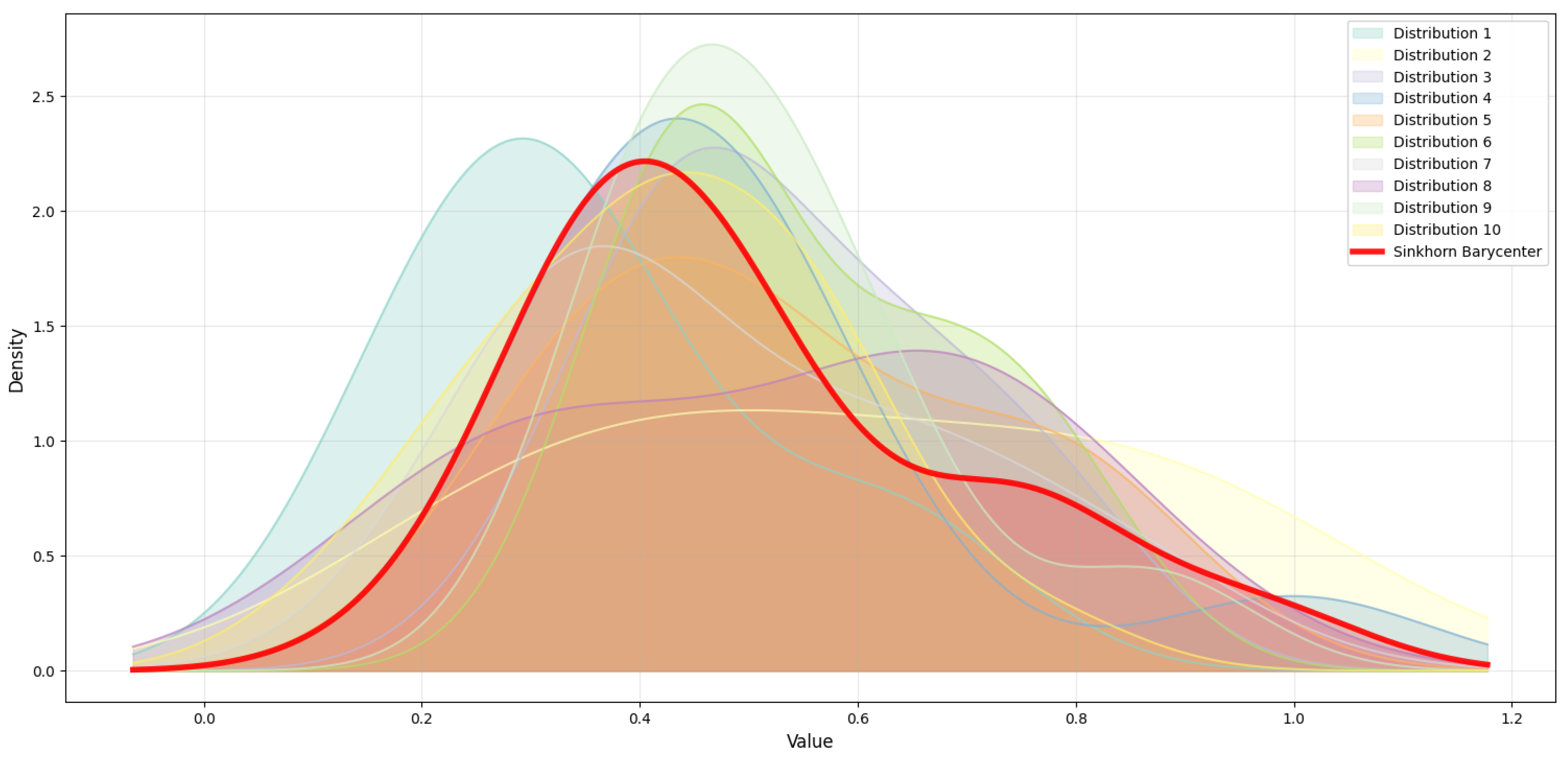

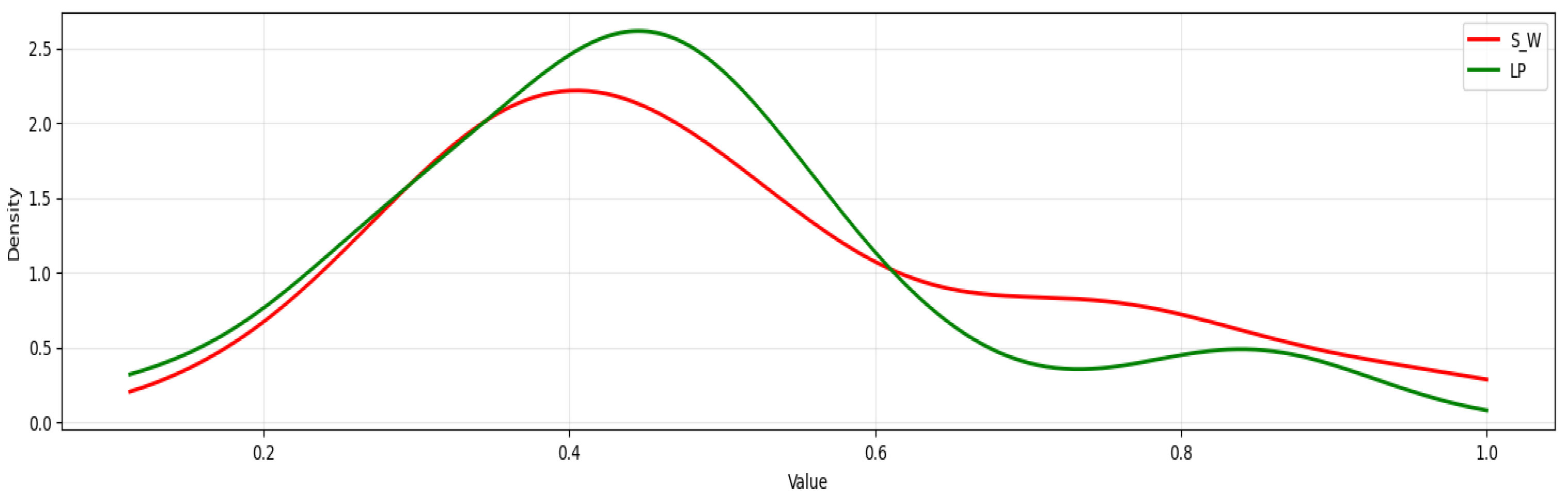

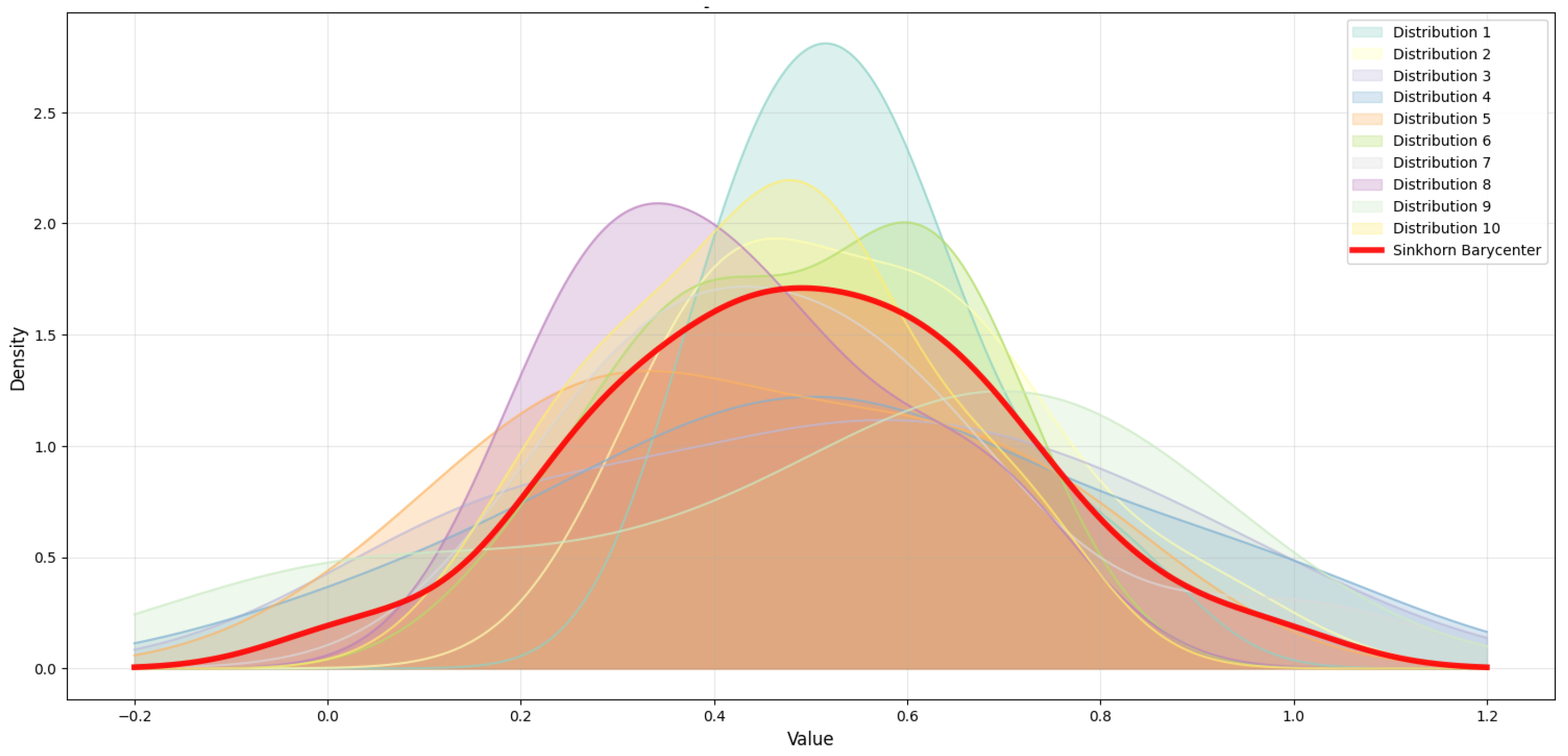

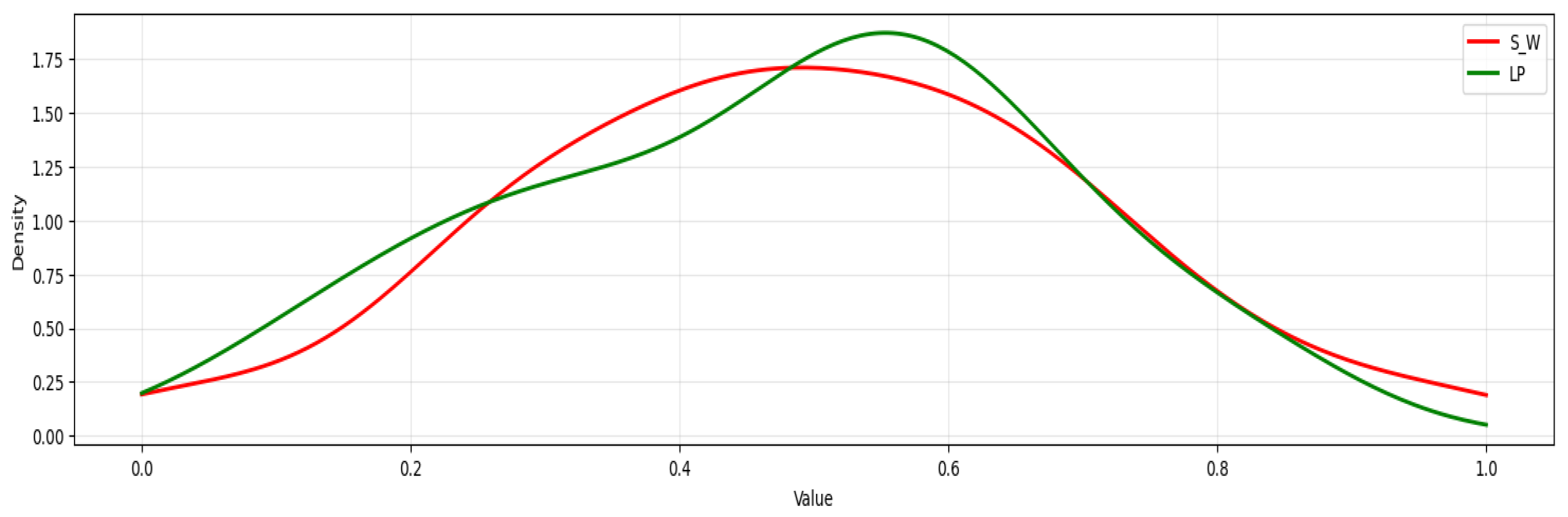

Figure 12, Figure 13, Figure 14, Figure 15, Figure 16 and Figure 17 present a comparative analysis of Sinkhorn Barycenter computation versus LP Barycenter methods across different regularization strengths. The analysis is organized in pairs: the even-numbered Figure 12, Figure 14 and Figure 16 show the Sinkhorn Barycenter among multiple distributions, with the computed barycenter highlighted in red, while the odd-numbered Figure 13, Figure 15 and Figure 17 give direct comparisons between the Sinkhorn–Wasserstein (S-W) method (red curves) and the LP method (green curves). The regularization parameter γ decreases progressively across the figure pairs: Figure 12 and Figure 13 use γ = 0.1, Figure 14 and Figure 15 use γ = 0.01, and Figure 16 and Figure 17 use γ = 0.001. This progression reveals the relationship between regularization strength and solution characteristics.

With high regularization (γ = 0.1, Figure 12 and Figure 13), the Sinkhorn method produces highly smooth, almost flat distributions that differ markedly from the LP solution. The strong entropic regularization pushes the barycenter toward maximum entropy, resulting in broad, spread-out distributions. Meanwhile, the LP method keeps sharper, more concentrated peaks that better preserve the shape of the original distributions. With moderate regularization (γ = 0.01, Figure 14 and Figure 15), the Sinkhorn Barycenter moves closer to the LP solution while remaining smooth. The entropic regularization still has a noticeable smoothing effect, but the barycenter now captures the underlying distribution structure better, with more defined peaks and valleys. At low regularization (γ = 0.001, Figure 16 and Figure 17), the Sinkhorn and LP methods converge toward nearly identical solutions. The minimal regularization lets the Sinkhorn method closely approximate the true optimal transport solution while still keeping its computational advantages. The distributions show sharp, well-defined features that match closely between both methods.

This progression illustrates the trade-off between computational speed and solution accuracy in regularized optimal transport. Higher regularization gives faster convergence but may oversmooth important features of the distributions. Lower regularization stays more faithful to the true barycenter but requires more computational work.
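The smoothing effect described above can be reproduced on a toy problem. The sketch below is a minimal pure-Python version of the iterative Bregman projection scheme that is commonly used to compute entropic (Sinkhorn) barycenters of histograms on a shared grid; it is not the implementation used in the experiments. The grid, the two Gaussian input histograms, the squared-distance cost, the fixed iteration count, and all function names are assumptions chosen for illustration.

```python
import math


def sinkhorn_barycenter(histograms, grid, gamma, weights=None, iterations=200):
    """Entropic barycenter of histograms on a common 1-D grid (iterative Bregman projections)."""
    n, m = len(grid), len(histograms)
    weights = weights or [1.0 / m] * m
    # Gibbs kernel for the squared-distance cost; symmetric, so K^T = K.
    kernel = [[math.exp(-((xi - xj) ** 2) / gamma) for xj in grid] for xi in grid]

    def apply_kernel(vec):
        return [sum(k * v for k, v in zip(row, vec)) for row in kernel]

    scalings = [[1.0] * n for _ in range(m)]
    barycenter = [1.0 / n] * n
    for _ in range(iterations):
        projected = []
        for hist, v in zip(histograms, scalings):
            kv = apply_kernel(v)
            u = [h / s for h, s in zip(hist, kv)]   # match the fixed input marginal
            projected.append(apply_kernel(u))
        # The barycenter is the weighted geometric mean of the projected marginals.
        barycenter = [
            math.exp(sum(w * math.log(p[i]) for w, p in zip(weights, projected)))
            for i in range(n)
        ]
        scalings = [[b / q for b, q in zip(barycenter, p)] for p in projected]
    total = sum(barycenter)
    return [b / total for b in barycenter]


def entropy(p):
    return -sum(x * math.log(x) for x in p if x > 0)


def gaussian_histogram(grid, mean, std, floor=1e-12):
    # The small floor keeps every bin strictly positive.
    raw = [math.exp(-0.5 * ((x - mean) / std) ** 2) + floor for x in grid]
    total = sum(raw)
    return [r / total for r in raw]


grid = [i / 39 for i in range(40)]
inputs = [gaussian_histogram(grid, 0.25, 0.05), gaussian_histogram(grid, 0.75, 0.05)]
smooth = sinkhorn_barycenter(inputs, grid, gamma=0.1)    # strong regularization
sharp = sinkhorn_barycenter(inputs, grid, gamma=0.01)    # weaker regularization
print(entropy(smooth), entropy(sharp))
```

With these toy inputs, the barycenter computed at γ = 0.1 is flatter (higher entropy) than the one computed at γ = 0.01. Much smaller values of γ typically call for a log-domain implementation, because the kernel entries underflow in plain floating-point arithmetic.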

Figure 12.

Sinkhorn Barycenter on Ackley at γ = 0.1.

Figure 13.

Sinkhorn vs. LP Barycenter comparison on Ackley at γ = 0.1.

Figure 14.

Sinkhorn Barycenter on Ackley at γ = 0.01.

Figure 15.

Sinkhorn vs. LP Barycenter comparison on Ackley at γ = 0.01.

Figure 16.

Sinkhorn Barycenter on Ackley at γ = 0.001.

Figure 17.

Sinkhorn vs. LP Barycenter comparison on Ackley at γ = 0.001.

6. Conclusions: Limitations and Perspectives

BO is effective for optimizing data-driven systems under uncertainty, where randomness arises from contextual conditions, model parameters, or noisy observations. In these situations, uncertainty is multifaceted, which makes developing DRBO algorithms both important and challenging. One of the main challenges is that context distributions are infinite-dimensional, especially for continuous contexts. Solving BO problems robust to such uncertainty requires tractable approximations of otherwise intractable constrained optimization problems over probability spaces. Historically, robust BO formulations have employed φ-divergences to define ambiguity sets over context distributions. However, Wasserstein distances, arising from OT theory, have recently gained prominence due to their desirable geometric, topological, and statistical properties. Unlike φ-divergences, Wasserstein distances naturally incorporate the geometry of the underlying space and provide meaningful comparisons even when distributions have disjoint supports. This geometry-aware notion of robustness allows the Wasserstein metric to capture subtle shifts in distribution and thus leads to better-behaved optimization landscapes. As a result, Wasserstein-based ambiguity sets have become increasingly popular for modeling uncertainty in robust machine learning, including BO.

Despite these advantages, computational complexity remains a well-known bottleneck. The exact computation of Wasserstein distances has a complexity of O(n³ log n) with respect to the number of samples n. This makes exact OT prohibitive for large-scale, high-dimensional problems. To address this, entropic regularization via the Sinkhorn algorithm has emerged as a practical alternative, offering a per-iteration complexity of O(n²). Moreover, Sliced Wasserstein approaches and neural OT methods (e.g., via learned transport maps) have enabled further scaling by approximating high-dimensional OT through lower-dimensional projections or learned parametrizations. The SWBBO algorithm developed in this study contributes to this growing intersection of Bayesian Optimization and optimal transport. By leveraging Wasserstein Barycenters, our methods offer tractable and robust BO strategies that adapt to distributional shifts in context while maintaining computational efficiency through entropic regularization. In conclusion, Wasserstein-based BO methods such as SWBBO offer promising tools for robust and geometry-aware optimization under uncertainty. While computational challenges remain, the flexibility and strong theoretical foundations of optimal transport provide a compelling framework for advancing robust machine learning, with exciting future applications in optimization, control, and learning under uncertainty.
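To illustrate the projection idea behind the Sliced Wasserstein approaches mentioned above, the sketch below estimates a sliced Wasserstein-2 distance between two equal-size point clouds: each random direction reduces the problem to one dimension, where optimal transport only requires sorting. This is a generic Monte Carlo sketch and is not part of SWBBO; the function name, the number of projections, and the toy point clouds are assumptions.

```python
import math
import random


def sliced_wasserstein(xs, ys, num_projections=64, seed=0):
    """Monte Carlo sliced Wasserstein-2 distance between equal-size point clouds."""
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes point clouds of equal size")
    rng = random.Random(seed)
    dim = len(xs[0])
    total = 0.0
    for _ in range(num_projections):
        # Random unit direction obtained by normalizing a Gaussian vector.
        direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(d * d for d in direction))
        direction = [d / norm for d in direction]
        # Project both clouds; in 1-D the optimal coupling matches sorted values.
        px = sorted(sum(c * d for c, d in zip(p, direction)) for p in xs)
        py = sorted(sum(c * d for c, d in zip(p, direction)) for p in ys)
        total += sum((a - b) ** 2 for a, b in zip(px, py)) / len(px)
    return math.sqrt(total / num_projections)


# Toy clouds: the second one is the first one translated by the vector (3, 4).
cloud = [(0.0, 0.0), (1.0, 0.5), (2.0, -1.0), (0.5, 2.0)]
moved = [(x + 3.0, y + 4.0) for x, y in cloud]
print(sliced_wasserstein(cloud, moved))
```

Each projection costs one sort per cloud, so the estimate scales as O(n log n) per direction. For a pure translation, the result can never exceed the length of the shift, which is 5 in this toy case.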

Looking ahead, we expect this direction to keep expanding, especially through integration with generative modeling, Distributional Reinforcement Learning, and distributional robustness. In generative modeling, for example, Wasserstein distances are increasingly used as loss functions because they compare entire distributions rather than just point estimates. In dictionary learning, Wasserstein Barycenters are used to combine atoms meaningfully across distributional structures. Similarly, Distributional Reinforcement Learning extends the learning target from mean rewards to full return distributions, often using Wasserstein-based metrics for more informative policy updates.