1. Introduction

Small targets account for a large proportion of the visual targets in underground coal mines; taking helmet-wearing as an example, more than 25% of the visual targets are small targets according to the definition used in the COCO dataset. The safety of workers in the production and operation environment of underground coal mines has always been the primary concern. Because the underground environment is complex and variable, there are many potential dangers, such as rib spalling and roof coal or rock falls, and wearing a helmet is a basic requirement for protecting workers’ lives. However, existing helmet detection methods face several challenges when applied in underground coal mine scenarios: (1) Effective samples are few and the spatial bias is strong. Helmet detection in underground coal mines must detect not only workers wearing helmets but also workers not wearing them, and the latter is the focus of coal mine safety management. Because of safety regulations, workers underground rarely work without helmets, so effective samples of this class are scarce; in addition, because surveillance cameras are usually installed on the two sidewalls of the roadway, the distribution of helmet positions shows a strong spatial bias. (2) The underground background is complex: the scene contains a variety of production and transportation equipment, and the visual data are easily degraded by dust and water mist. (3) Helmet-wearing targets are usually small in the image [1], which makes them difficult to detect. These problems make it difficult for the accuracy and reliability of existing detection systems to meet practical needs.

In recent years, deep learning algorithms represented by convolutional neural networks [2] and Transformer networks [3] have developed rapidly in the field of object detection, accompanied by the release of general-scene datasets such as COCO [4] and Pascal VOC [5]. Object detection has been widely studied and applied, and in real-time object detection the YOLO [6] series has produced increasingly mature models for target recognition, detection, and semantic segmentation. For underground coal mine helmet-wearing detection, these techniques can efficiently detect whether workers are wearing helmets by analyzing underground surveillance data [1] and send timely alerts when workers are not wearing helmets. However, directly applying these algorithms to helmet-wearing detection in coal mine scenes faces the challenges described above. The complex conditions and data distribution of the underground environment require detection algorithms to resist background interference, generalize across scenes, and represent small helmet-wearing targets well, while maintaining high detection accuracy with few samples and complex backgrounds.

Safety problems are prominent in the underground coal mine environment, which calls for real-time detection of whether workers are wearing helmets. Therefore, this study proposes a few-sample helmet-wearing detection method for the underground coal mine environment based on the YOLOv10 real-time object detection algorithm. The method improves detection accuracy by expanding the training samples and optimizing the network structure, thus supporting the safety management of workers during underground coal mine operations.

The main task of object detection is to determine the location of an object in a given image and to label the category to which it belongs. Convolutional neural networks pioneered the breakthrough in visual object classification [1], and researchers subsequently applied them to visual object detection. Existing object detection algorithms can be broadly categorized as single-stage or two-stage. In two-stage detection, Girshick et al. proposed R-CNN [7], an object detection algorithm based on a regional convolutional neural network, in 2014, which pioneered the deep learning-based object detection paradigm. Ren et al. [8] then proposed Faster R-CNN, which adds a region proposal network to the R-CNN framework to achieve faster and more accurate detection. He et al. [9] proposed Mask R-CNN, which adds instance segmentation capability, and TridentNet [10] later introduced a multi-scale fusion module for higher-accuracy object detection. Two-stage algorithms usually excel in accuracy because they perform fine-grained classification and regression after candidate region generation, but they are less computationally efficient, so single-stage algorithms are more commonly used. Liu et al. [11] proposed SSD, which is based on multi-scale convolutional detection and can detect targets of different sizes. Lin et al. [12] proposed focal loss, which addresses positive–negative sample imbalance by focusing on the categories with fewer samples, thereby enhancing detection performance. Zhu et al. [13] proposed the BiFPN network to improve feature fusion efficiency while maintaining detection accuracy.

The YOLO (You Only Look Once) family has fundamentally reshaped real-time object detection since its introduction by Redmon et al. [6], and it has since been iterated to YOLOv12 [14]. Subsequent iterations show a progressive evolution: YOLOv2 [15], YOLOv3 [16], and YOLOv4 [17] enhanced feature extraction through multi-scale predictions and anchor box optimization; YOLOv5 [18], YOLOv6 [19], and YOLOv7 [20] introduced modular design patterns that enable architecture scaling; and YOLOv8 [21], YOLOv9 [22], and YOLOv10 [23] achieved state-of-the-art speed–accuracy tradeoffs through reparametrized convolutions and spatial-channel decoupling. YOLOv10 removes the time-consuming NMS step at inference while achieving high detection accuracy. YOLOv11 [24] enhances performance in complex vision tasks such as semantic segmentation through spatial attention mechanisms. YOLOv12 achieves computationally efficient attention by integrating the hardware-aware Flash Attention framework. This continuous innovation, driven by computational efficiency demands across embedded systems, robotics, and industrial applications, positions YOLO as the de facto solution for latency-critical vision systems, and the highly efficient YOLOv10 architecture forms the foundation of this study. Recently, transformer-based approaches have also been introduced to the field of object detection. Inspired by the channel-wise attention mechanism in SENet [25], the Convolutional Block Attention Module (CBAM) [26] applies sequential channel and spatial attention to enhance feature extraction. RT-DETR [27] shows latency comparable to the YOLO series when NMS is taken into account. RF-DETR [28] pushes the latency bar further with multi-scale self-attention.

In coal mine scenes, helmet detection faces the challenges of few effective samples and low sample quality. Li et al. [29] improved the accuracy of underground small object detection by combining convolution and attention to enhance feature extraction. Yang et al. [30] proposed a local enhancement module that maps low-illumination pixels to a normal state for image processing.

Another challenge of helmet detection in coal mine scenarios is the complex background: dust and water mist in the underground environment, together with the distribution of underground illumination and its changes, can adversely affect helmet detection. Feng et al. [31] proposed applying a recursive feature pyramid for more efficient multi-scale feature fusion, further improving helmet detection accuracy and reducing missed detections. Sun & Liu [32] introduced a parameter-free attention mechanism into YOLOv7, which enhances helmet feature extraction and enriches the contextual information captured by the model, thus improving the speed and accuracy of helmet detection in underground environments.

Underground coal mine helmet detection also faces the difficulty of small targets. Hou et al. [33] used Ghost convolution to improve the YOLOv5 backbone network and combined it with BiFPN for feature fusion to detect small helmet targets in underground scenes. Cao et al. [34] optimized the initial anchor frame estimates in YOLOv7 with K-Means to improve the accuracy of underground small object detection.

Helmet-wearing detection algorithms have achieved certain results in coal mine scenarios; however, existing methods still have the following problems: (1) Detection algorithms focus on detecting the helmet itself while overlooking underground safety concerns, namely whether workers are wearing helmets, and the distribution of underground helmet data has not been analyzed. (2) The underground background is complex, and effective schemes for reducing background interference in small object detection are still lacking. (3) Helmet detection is a small object detection task, but the perceptual ability of the detection head still leaves considerable room for optimization. For real-time detection tasks, the YOLO series remains the predominant solution because of its balance between performance and efficiency. Although YOLOv11 and YOLOv12 are more recent, they either increase architectural complexity without commensurate gains in small-object detection accuracy or impose stringent hardware constraints during deployment. Consequently, we select YOLOv10 as the foundational architecture for our helmet detection algorithm because of its real-time performance and detection accuracy in underground mining environments.

Based on the YOLOv10 architecture, this paper makes the following contributions. First, it analyzes the distribution of underground visual data and the spatial bias of helmet positions, and applies data expansion and data augmentation to increase the number of samples. Second, it introduces a background-aware convolution module into the backbone network to suppress the interference of complex backgrounds on helmet detection. Third, it integrates convolution and attention modules in the detection head to enlarge the receptive field and improve the model’s perception of small helmet-wearing targets. Fourth, it optimizes the loss function using the prior information that helmet aspect ratios are concentrated, which strengthens the constraint on helmet-wearing targets. Experiments show that the optimized algorithm improves the detection accuracy of small helmet-wearing targets in underground environments, which helps to promote the safety management of underground workers.

2. Methodology of the Paper

The underground environment of coal mines has different characteristics from the surface environment. (1) The underground environment faces a complex background and more interference. (2) The underground area of coal mines presents restricted spatial characteristics, and safety helmets often appear in certain areas of the visual data, thus causing spatial bias. (3) From the data level, the underground helmet visual data has an obvious imbalance, with more data of workers wearing helmets and few samples of workers not wearing helmets due to safety management regulations. (4) The target of helmet-wearing is small, further increasing the difficulty of detection.

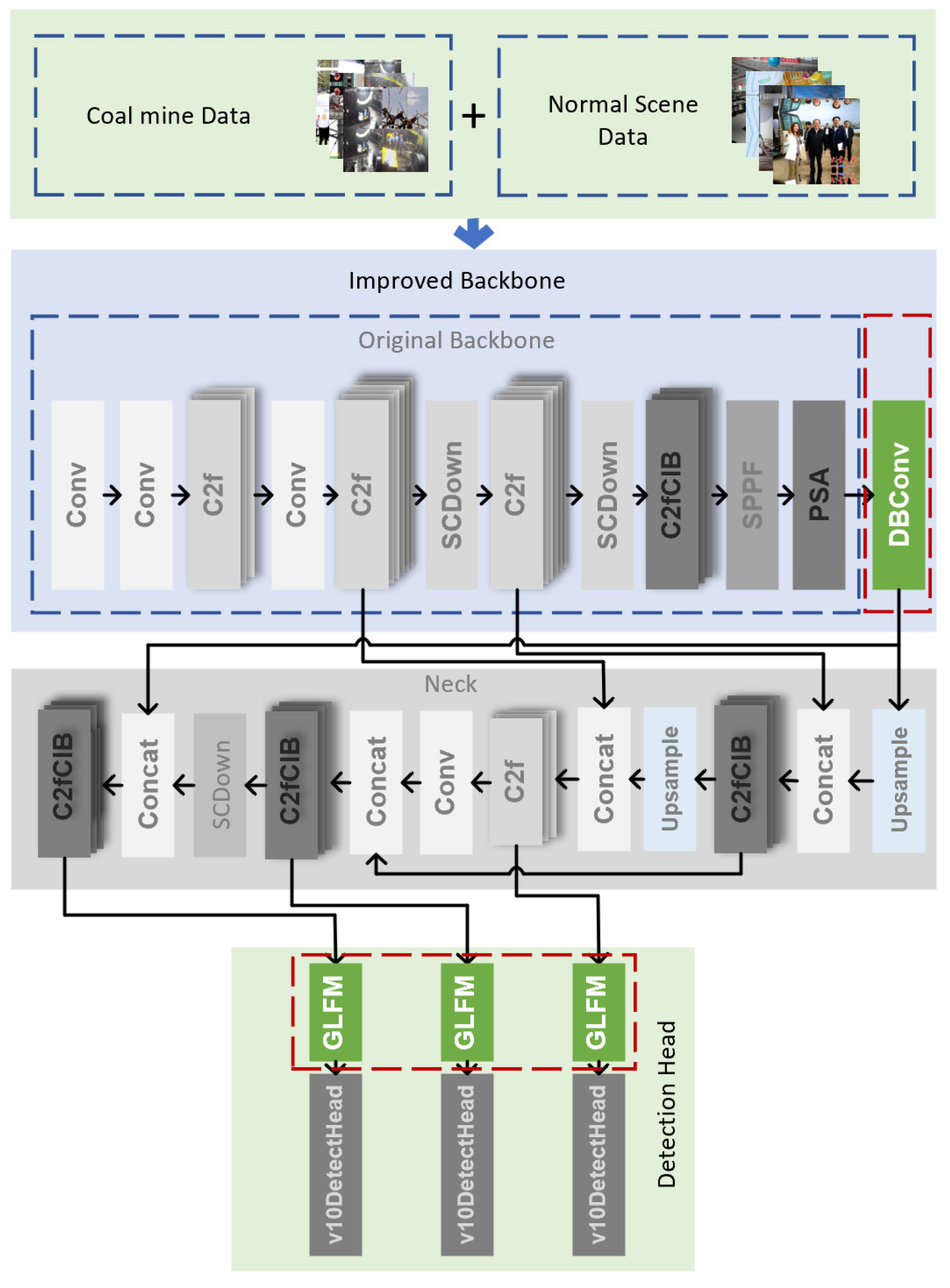

To address these problems, this paper optimizes a YOLOv10-based helmet-wearing detection model for underground scenes and proposes a few-sample underground helmet-wearing detection method based on fused dynamic background perception, which is applied to helmet detection in complex underground environments. The structure of the model is shown in Figure 1, and the improved modules proposed in this paper are marked with red dashed boxes.

In this model, the backbone network is mainly used for feature extraction, the neck network generates multi-scale detection information, and the detection head computes the detection outputs at different scales and the corresponding loss. To address the significant spatial bias in the underground environment, this paper analyzes the distribution of the underground visual data and the spatial bias of helmet positions, and proposes to expand the data with the open-source surface helmet-wearing detection dataset provided by Baidu PaddlePaddle [35], so that the data distribution becomes more uniform and the model generalizes more easily. For the false alarms caused by complex underground background interference, this paper proposes a dynamic background-aware convolution module (DBConv, Dynamic Background Convolution) for background masking and applies it to the backbone network of YOLOv10. By introducing dynamic background mask regions, the module guides the network to learn the important areas and reduces background interference; combined with large-kernel depth-separable convolution, it enlarges the receptive field while further reducing the number of parameters. To cope with the small size of helmet targets, this paper applies a local and global feature fusion module (GLFM, Global-Local Fusion Module) in the detection head. The convolution branch extracts local high-frequency features, and the attention branch focuses on global low-frequency features [36]; fusing the two improves the perception of small targets, reduces scale sensitivity, and further reduces the number of parameters, so that small targets are detected stably. Finally, the learning process is improved by tuning the hyperparameters used in model training.

2.1. Helmet Data Enhancement

Underground environment helmet data presents the following characteristics: (1) The existing open-source underground scene helmet dataset [

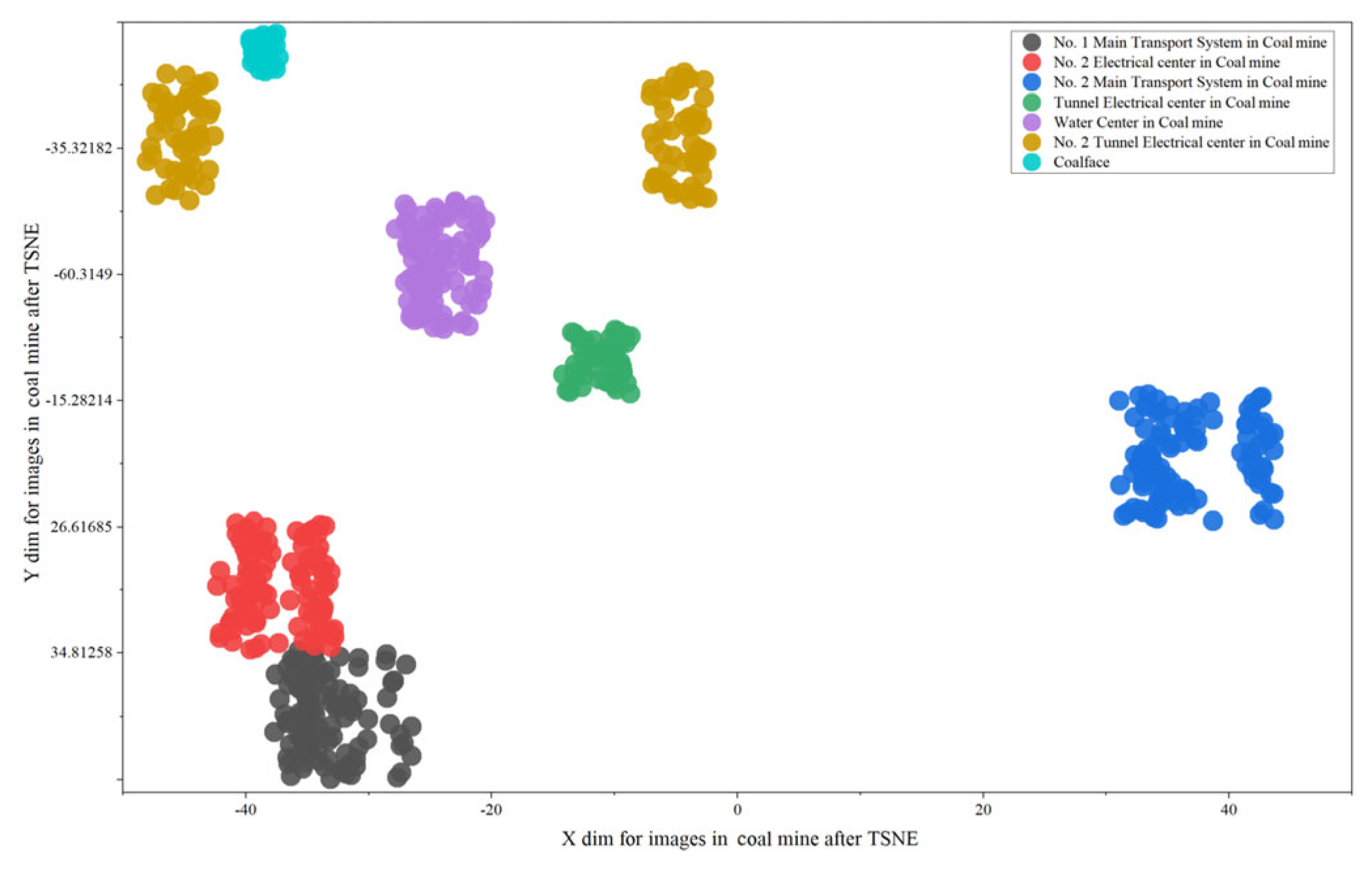

37] mainly detects helmets in the underground environment; however, in practice, the underground safety management needs to target the detection of operating workers wearing helmets/not wearing helmets, so the existing dataset is difficult to be directly applied to the detection of helmet-wearing in the underground. (2) The underground scene of coal mines’ data distribution shows an island-like shape; the data in similar scenes underground are more accumulated and less dispersed, and the data in different scenes are distributed farther away with fewer intersections, e.g.,

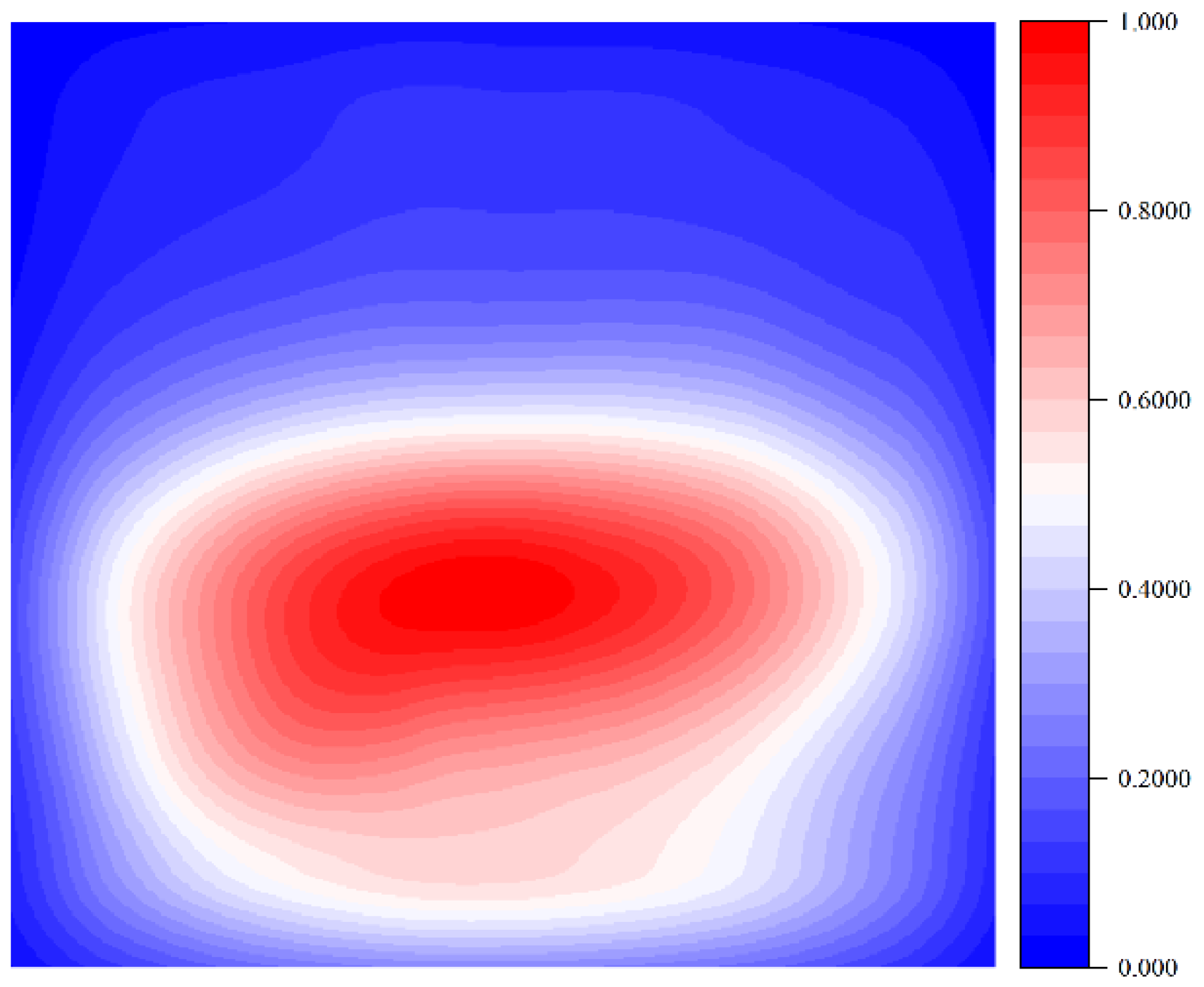

Figure 2. (3) Due to the safety management regulations, there are very few samples without helmets in underground environments, and a large number of samples are samples of normal wearing of helmets. (4) The visual acquisition cameras in the underground scene are usually installed at fixed points and are rarely changed, resulting in the data in the locations where helmets appear showing significant spatial bias, as shown in

Figure 3.

Data distribution. Because of the regional characteristics of the underground environment, the underground data show a significant island effect, which impairs the network’s ability to learn features across different scenes; with underground data alone, the distribution lacks diversity, and the network finds it difficult to learn continuous changes in the environment.

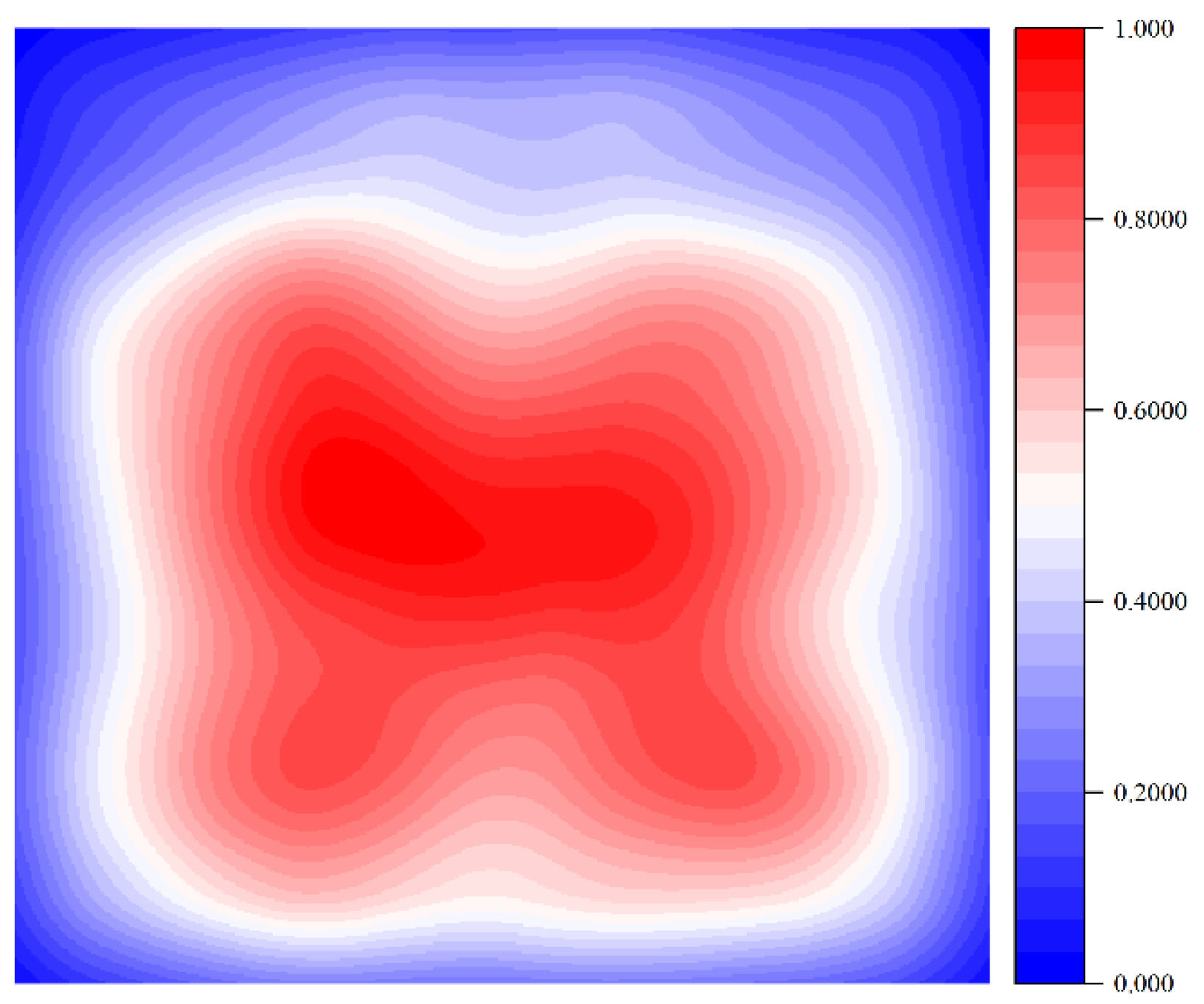

Spatial Bias. Underground cameras are installed at fixed positions, mostly on the upper part of the two sidewalls of the roadway, so the images are captured from an oblique downward side view. As a result, personnel with or without helmets never appear in a large portion of the pixel area, and during training the network learns a spatial bias toward the pixel regions where helmet-wearing targets do occur, which leads to poor generalization ability. The spatial bias of the underground dataset used in this paper is shown in Figure 3. To draw the spatial bias, we use the mollifier kernel function to smooth the helmet-wearing target frame regions.
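The smoothing step can be illustrated with a minimal sketch. It assumes normalized `(cx, cy, w, h)` annotation frames and uses the standard bump-function mollifier $\exp(-1/(1-r^2))$ on $|r|<1$; the grid size, the `margin` factor, and the function names are hypothetical choices for illustration, not settings taken from the paper.

```python
import math

def mollifier(r: float) -> float:
    """Standard bump function: exp(-1 / (1 - r^2)) for |r| < 1, otherwise 0."""
    d = 1.0 - r * r
    if d <= 0.0:
        return 0.0
    return math.exp(-1.0 / d)

def spatial_bias_map(boxes, grid_w=32, grid_h=32, margin=1.5):
    """Accumulate a smoothed occupancy map from normalized (cx, cy, w, h) frames.

    Each frame contributes a mollifier bump centered on the frame; `margin`
    (an assumed value) widens the support slightly beyond the frame itself.
    """
    heat = [[0.0] * grid_w for _ in range(grid_h)]
    for cx, cy, w, h in boxes:
        if w <= 0 or h <= 0:
            continue  # skip degenerate annotations
        rx, ry = margin * w / 2.0, margin * h / 2.0
        for gy in range(grid_h):
            for gx in range(grid_w):
                x = (gx + 0.5) / grid_w  # cell center in normalized coordinates
                y = (gy + 0.5) / grid_h
                r = math.hypot((x - cx) / rx, (y - cy) / ry)
                heat[gy][gx] += mollifier(r)
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[v / peak for v in row] for row in heat]  # normalize to [0, 1]
    return heat
```

Cells that no frame ever covers stay at zero, which makes the unused image regions described above directly visible in the map.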

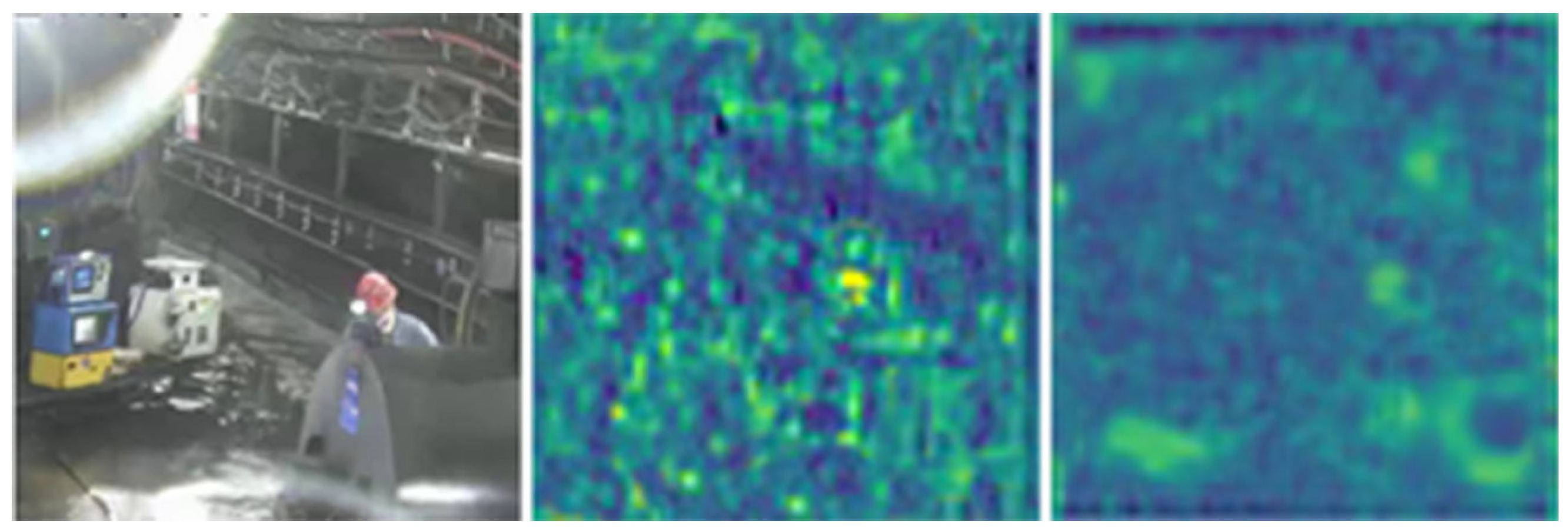

2.2. Convolutional Module for Dynamic Background Awareness in Backbone Networks

Helmet data from underground coal mine scenes are affected by dust and water mist, and the underground environment suffers from complex backgrounds, illumination changes, and monotonous scene colors. These factors make the underground helmet data low in clarity and dark in overall color, so helmet detection is vulnerable to background interference: the network may detect a background region as a helmet, or even as a worker not wearing a helmet, resulting in false alarms for safety management. In addition, some background areas containing mechanical equipment look similar to personnel helmets, which can also cause the detector to focus on the wrong area. In object detection algorithms, the backbone network is mainly responsible for representing the input image; because of the adverse effects described above, the backbone output is susceptible to background interference, which makes it difficult for the subsequent detection head to fuse the helmet target area effectively. Drawing on Dynamic Region Convolution (DRConv) [39], this paper proposes the Dynamic Background Sensing Convolution Module (DBConv) and applies it to the backbone network of YOLOv10. The module dynamically generates background region masks and aggregates the masked features through an element-wise product, which further increases the dimensionality of the module’s feature space [40]. At the same time, the mask is generated directly by convolution inside the module, which avoids the inconsistency between the forward and backward passes and the non-differentiability of the backward pass caused by the argmax operation in DRConv.

The original DRConv module uses argmax to obtain region perception and split the input data into different regions. At the same time, it uses a convolution kernel generation module to generate convolution kernels and applies different kernels to the different regions for feature extraction, thereby obtaining the high-dimensional feature representation output by the module. This module can be applied effectively to face recognition, where it extracts different regions of the face, such as the eyes and nose, but when it is applied directly to helmet object detection it is difficult to distinguish the specific meaning of the background regions. In addition, because the argmax operation used to obtain the region-aware mask is non-differentiable, the backward pass contains non-differentiable links, which requires a complex computational structure for approximation.

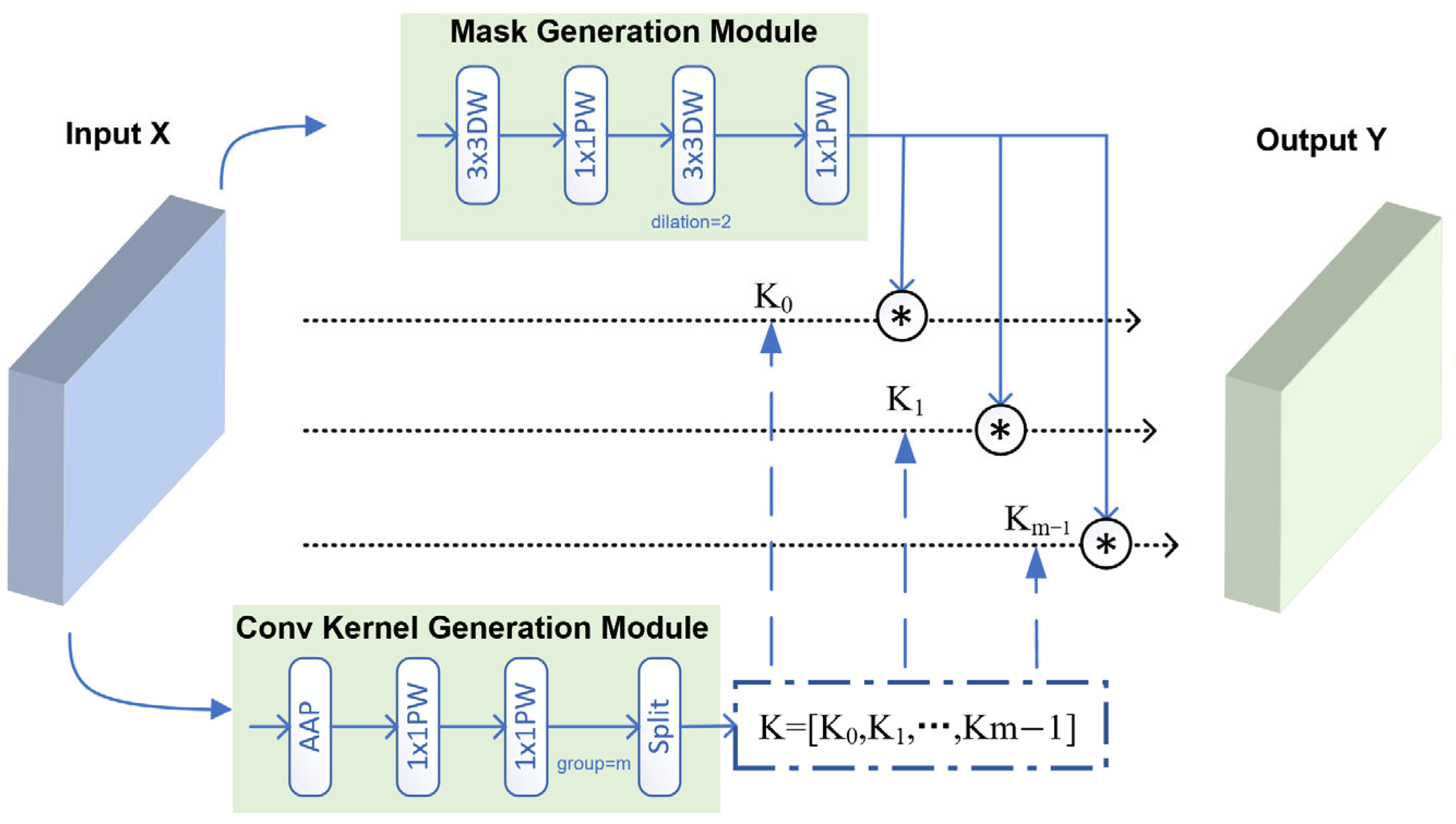

The structure of the DBConv module proposed in this paper is shown in Figure 4. Let the input feature be $X \in \mathbb{R}^{B \times C \times H \times W}$, where $B$ represents the batch size and $C$ represents the number of channels. The input feature passes through the mask-generating convolution module in the upper pathway to obtain a single-channel mask feature for background sensing, $M \in \mathbb{R}^{B \times 1 \times H \times W}$. To keep the mask size the same as the input feature size, the stride is set to $s = 1$ and the padding and kernel size are related by $p = (k - 1)/2$, where $p$ is the padding size and $k$ is the convolution kernel size. Thus, the output size constraint is satisfied without up-sampling or transposed convolution. The design of this module mainly considers computational efficiency and accuracy. For efficiency, the convolution module adopts depth-separable convolution to reduce the number of parameters and the amount of computation; for accuracy, it combines an enlarged convolution kernel with dilated convolution to further expand the receptive field. The module uses two convolution operations: the first mainly extracts the mask region, and the second expands the receptive field through spatial convolution to form a mask that perceives the general background region. After the mask-generating convolution module, the mask features used for dynamic background-aware feature fusion are obtained.
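The size constraint and the parameter saving discussed above can be checked with standard convolution arithmetic. The sketch below is illustrative only: the helper names and the example kernel and channel sizes are assumptions, not values reported in the paper. It uses the usual output-size formula, under which a dilated kernel needs padding $d(k-1)/2$ to preserve the spatial size at stride 1.

```python
def conv_out_size(n: int, k: int, s: int = 1, p: int = 0, d: int = 1) -> int:
    """Spatial output size of a convolution (standard formula)."""
    return (n + 2 * p - d * (k - 1) - 1) // s + 1

def same_padding(k: int, d: int = 1) -> int:
    """Padding that keeps the size unchanged at stride 1."""
    span = d * (k - 1)
    if span % 2:
        raise ValueError("d * (k - 1) must be even for symmetric padding")
    return span // 2

def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a dense k x k convolution (bias ignored)."""
    return c_in * c_out * k * k

def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Depth-wise k x k convolution followed by a 1 x 1 point-wise convolution."""
    return c_in * k * k + c_in * c_out

# Hypothetical example: an 80 x 80 feature map, 7 x 7 kernel, 64 channels.
size_plain = conv_out_size(80, 7, s=1, p=same_padding(7))
size_dilated = conv_out_size(80, 7, s=1, p=same_padding(7, d=2), d=2)
dense = standard_conv_params(64, 64, 7)
separable = depthwise_separable_params(64, 64, 7)
```

In this example both `size_plain` and `size_dilated` remain 80, and the depth-separable layer needs 7232 weights against 200704 for the dense layer, which is why a large kernel stays affordable.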

The lower side pathway of DBConv is the convolutional kernel generation module. For a given input feature representation

, the main role of this module is to generate

convolution kernels, each of size

, where

is the number of channels of the DBConv output module and

is the convolution kernel size. The formula for this module is as follows:

where

stands for Adaptive Average Pooling operation,

stands for Point Wise Convolution operation,

stands for Depth Wise Convolution operation, and the outermost

is computed by using the group mechanism to get the convolution kernel of size

, and undergoes the Chunk operation to get the final convolution kernel size.

After the mask generation module and the convolution kernel generation module are constructed, the generated convolution kernels are applied to each channel of the input features, and after channel aggregation, an element-wise product (the ‘*’ operation) is performed with the mask features. Theoretical analysis shows that the element-wise product can effectively increase the dimensionality of the feature representation, making the features more distinguishable from one another. In addition, DBConv uses convolution rather than an attention mechanism for feature extraction, aggregation, and representation. This is because feature extraction in the backbone network should retain as much high-frequency information as possible, and theoretical analysis [36] suggests that the attention mechanism is better at capturing global features than local high-frequency features; that is, the attention computation can be regarded as a low-pass filter.

2.3. Detection Head Convolution and Attention Fusion Module

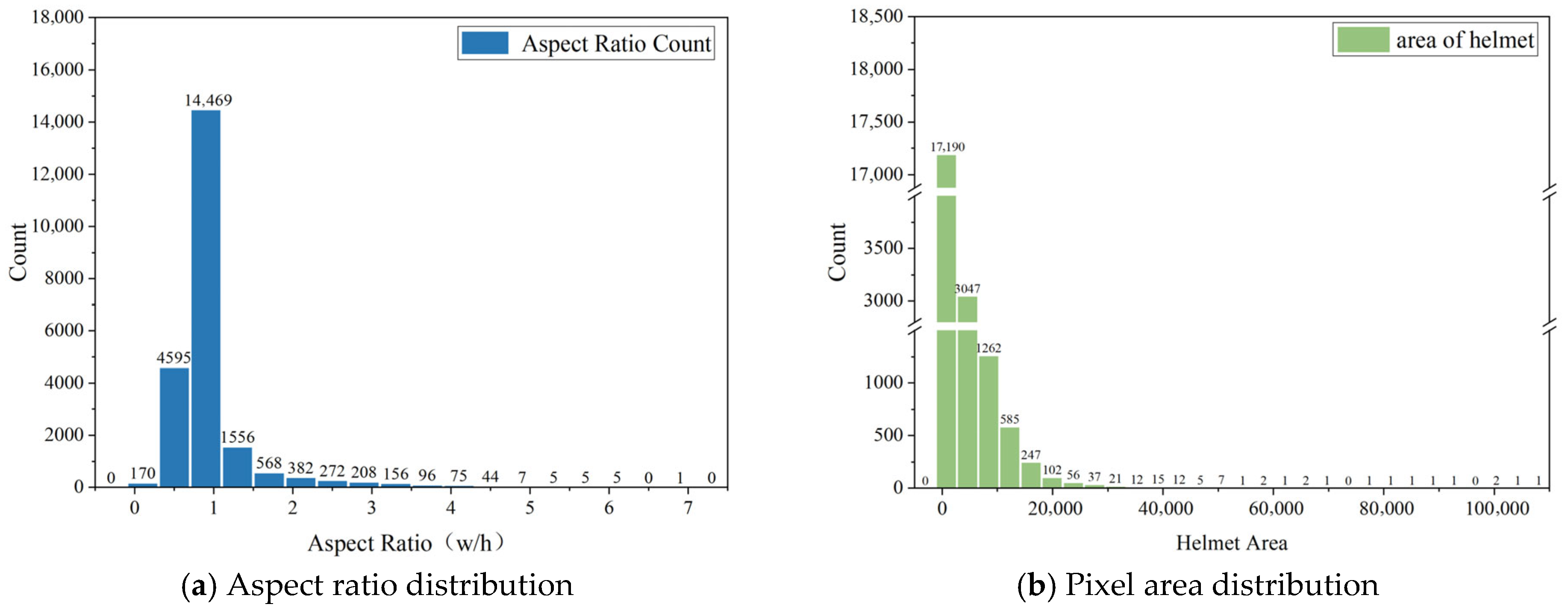

The helmet detection target occupies a relatively small area in the image, so the detection head must be able to extract and represent helmet features effectively. The area distribution and aspect ratio of the helmet targets in the dataset used in this paper are shown in Figure 5. According to the COCO dataset definition, targets with a pixel area of less than $32 \times 32$ (the resolution of the input image of the COCO dataset is 640 × 640) are small targets, and 25.81% of the underground helmet targets are small targets, so a substantial proportion of underground helmet detection is small object detection. In small object detection, because little pixel information is available, the effective information of the target is easily lost in the feature extraction of the backbone network. It is also difficult to represent the shape and material information of small targets effectively, so the network’s detection performance drops sharply for small targets.
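The small-target proportion quoted above can be computed from annotation sizes with a few lines. This is a minimal sketch that assumes frame widths and heights are already expressed in pixels at the input resolution; the function name and the sample values are illustrative and are not the paper's data.

```python
COCO_SMALL_AREA = 32 * 32  # COCO treats objects with area below 32^2 pixels as small

def small_target_ratio(boxes_wh, threshold=COCO_SMALL_AREA):
    """Fraction of (width, height) frames whose pixel area is below the threshold."""
    if not boxes_wh:
        return 0.0
    small = sum(1 for w, h in boxes_wh if w * h < threshold)
    return small / len(boxes_wh)

# Hypothetical annotation sizes in pixels (not taken from the dataset).
sample = [(20, 20), (40, 40), (31, 32), (32, 32)]
ratio = small_target_ratio(sample)  # two of the four frames are below 1024 px^2
```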

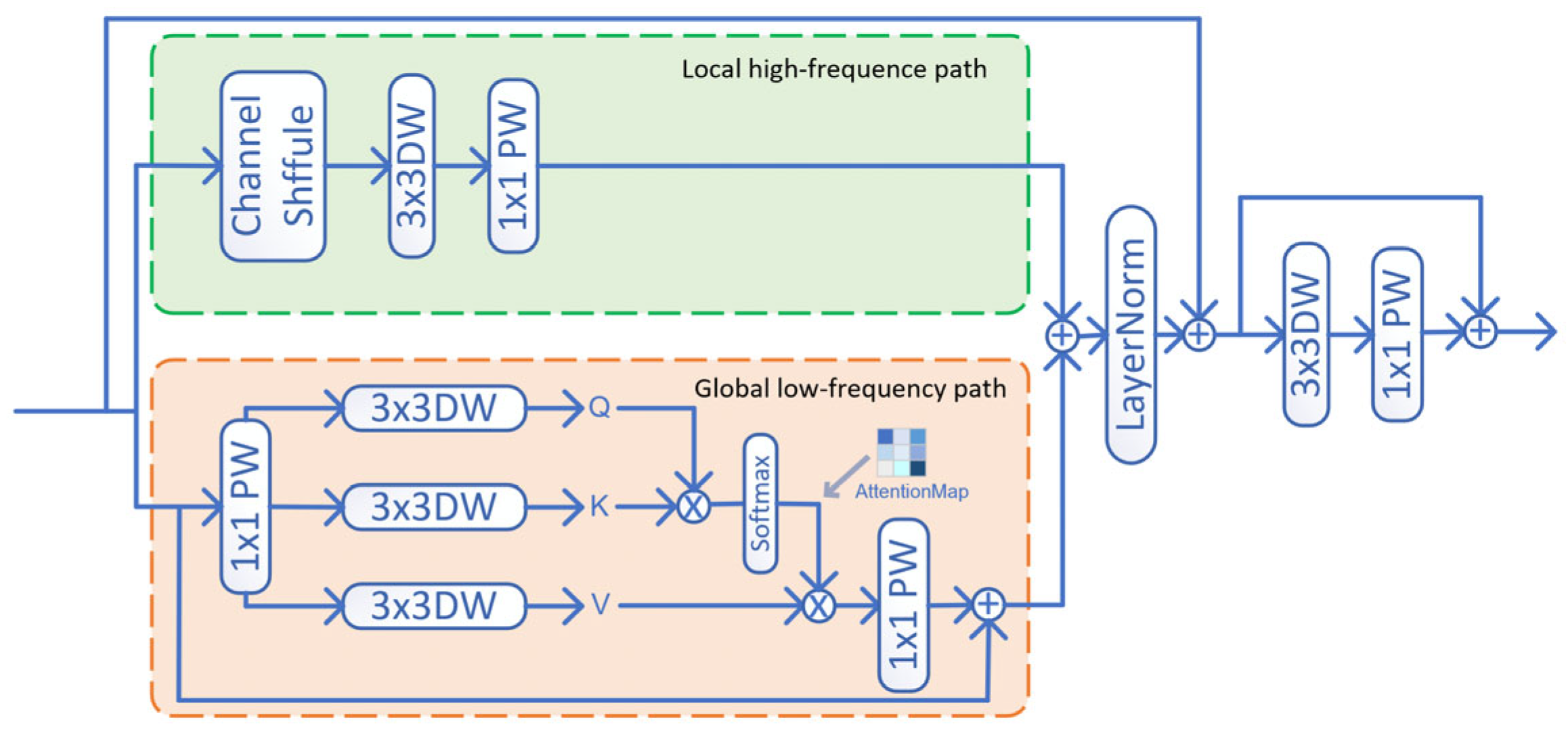

In this paper, we propose a joint optimization strategy of feature fusion and attention in the detection head for small helmet target detection. The structure of this module is shown in Figure 6. To improve feature perception for small helmet targets, we propose a global–local fusion module (GLFM, Global–Local Fusion Module) that fuses high-frequency local sensing information with low-frequency global sensing information, so as to overcome the tendency of small-target responses to vanish or be disturbed during detection. The GLFM contains two computational pathways: (1) a low-frequency global detection sensing module and (2) a high-frequency local detection sensing module. GLFM applies the attention mechanism to extract low-frequency global information, which addresses the susceptibility of small helmet targets to global interference. Combining attention with the feature data makes it easier for the network to learn large weights for important features and to suppress the weights of unimportant ones, thus reducing global background interference. Convolution is applied to extract local high-frequency information, which further amplifies the feature response in the local region of the small target and prevents the target region from being over-smoothed by pooling operations, which would otherwise cause the helmet target frame to be lost. The outputs of the two pathways are summed to form the detection head output.

The low-frequency global detection sensing module uses depth-separable convolutions to approximate the attention computation: three independent parallel depth-separable convolutions compute $Q$, $K$, and $V$, and $Q$ and $K$ are used to compute the required Attention Map. The Attention Map is multiplied with $V$ to obtain the Attention Output, to which Point Wise Convolution (PW) is applied to obtain the Global Output.
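As a rough illustration of the Attention Map and Attention Output steps, the sketch below applies the standard scaled dot-product softmax attention to small query, key, and value matrices. This is an assumption: the text does not give the exact normalization used, and the depth-separable convolutions that produce the three inputs, as well as the final point-wise convolution, are omitted. The names `softmax` and `attention` are illustrative.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention on row-major lists of equal-length vectors."""
    scale = 1.0 / math.sqrt(len(q[0]))
    attn_map = []
    for q_row in q:
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        attn_map.append(softmax(scores))
    out = []
    for weights in attn_map:
        out.append([sum(w * v_row[c] for w, v_row in zip(weights, v))
                    for c in range(len(v[0]))])
    return attn_map, out

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[0.0], [10.0]]
attn_uniform, out_uniform = attention([[0.0, 0.0]], keys, values)
attn_peaked, out_peaked = attention([[4.0, 0.0]], keys, values)
```

Because every row of the attention map is a set of non-negative weights summing to one, each output is a weighted average of the value vectors, which is consistent with the low-pass behaviour attributed to attention in the text.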

The high-frequency local detection perceptual module first performs channel mixing on the input features to introduce randomness and, at the same time, reduce the dependence of the subsequent convolution operation on different channels; then it utilizes depth-separable convolution for local high-frequency detection information extraction to obtain the output of the local detection perceptual pathway. The high-frequency local detection perception module is calculated as follows:

The GLFM is applied to the detection head of YOLOv10 to form the structure shown in Figure 7. Detection heads at multiple resolutions perform classification and regression of the helmet targets, and the network parameters are optimized through back-propagation.

2.4. Loss Function Optimization

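The true aspect ratios of the annotated frames in the helmet data annotation files are concentrated, and their distribution is shown in Figure 5. As can be seen from the figure, the helmet aspect ratio is distributed in the Y~Z interval, which is more uniform overall; however, YOLOv10 adopts the CIoU loss, which is calculated as follows:

$$L_{CIoU} = 1 - IoU + \frac{\rho^{2}}{c^{2}} + \alpha v$$

where $IoU$ is the intersection over union of the prediction frame and the truth frame, $\rho$ is the distance between the centers of the prediction frame and the truth frame, $c$ is the farthest distance between the prediction frame and the truth frame (the diagonal of their smallest enclosing frame), $v$ is the error term considering the aspect ratios of the truth frame and the prediction frame, $\alpha$ is the balancing coefficient of the different losses, and the formulas of $v$ and $\alpha$ are as follows:

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}, \qquad \alpha = \frac{v}{(1 - IoU) + v}$$

Here $w$, $h$ and $w^{gt}$, $h^{gt}$ are the widths and heights of the prediction frame and the truth frame, respectively.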

Although CIoU comprehensively considers the aspect ratio, the distance between the prediction frame and the truth frame, and other factors, it is usually applied to object detection tasks in which the aspect ratio varies widely. For the helmet, a target whose aspect ratio varies little, dynamic weighting can be applied to penalize predictions that deviate from the true aspect ratio, and the balancing coefficient $\alpha$ can be corrected to:

where

is a predetermined hyperparameter. The balancing parameter

dynamically penalizes aspect ratio inconsistencies by increasing the aspect ratio error when the predicted frame aspect ratio differs significantly from the true frame aspect ratio.
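For reference, the terms of the standard CIoU loss described above can be sketched in plain Python. This implements the commonly used baseline formulation only; the corrected balancing coefficient proposed in this paper and its hyperparameter are not reproduced here because their exact form is not available in this text. Frames are assumed to be given as `(x1, y1, x2, y2)` corners, and `eps` is a small stabilizer added for illustration.

```python
import math

def ciou_loss(pred, gt, eps=1e-9):
    """Standard CIoU loss for two frames given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Squared center distance and squared diagonal of the enclosing frame
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 \
         + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio error term and standard balancing coefficient
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v
```

A modified balancing coefficient would replace only the `alpha` line, leaving the overlap and distance terms unchanged.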

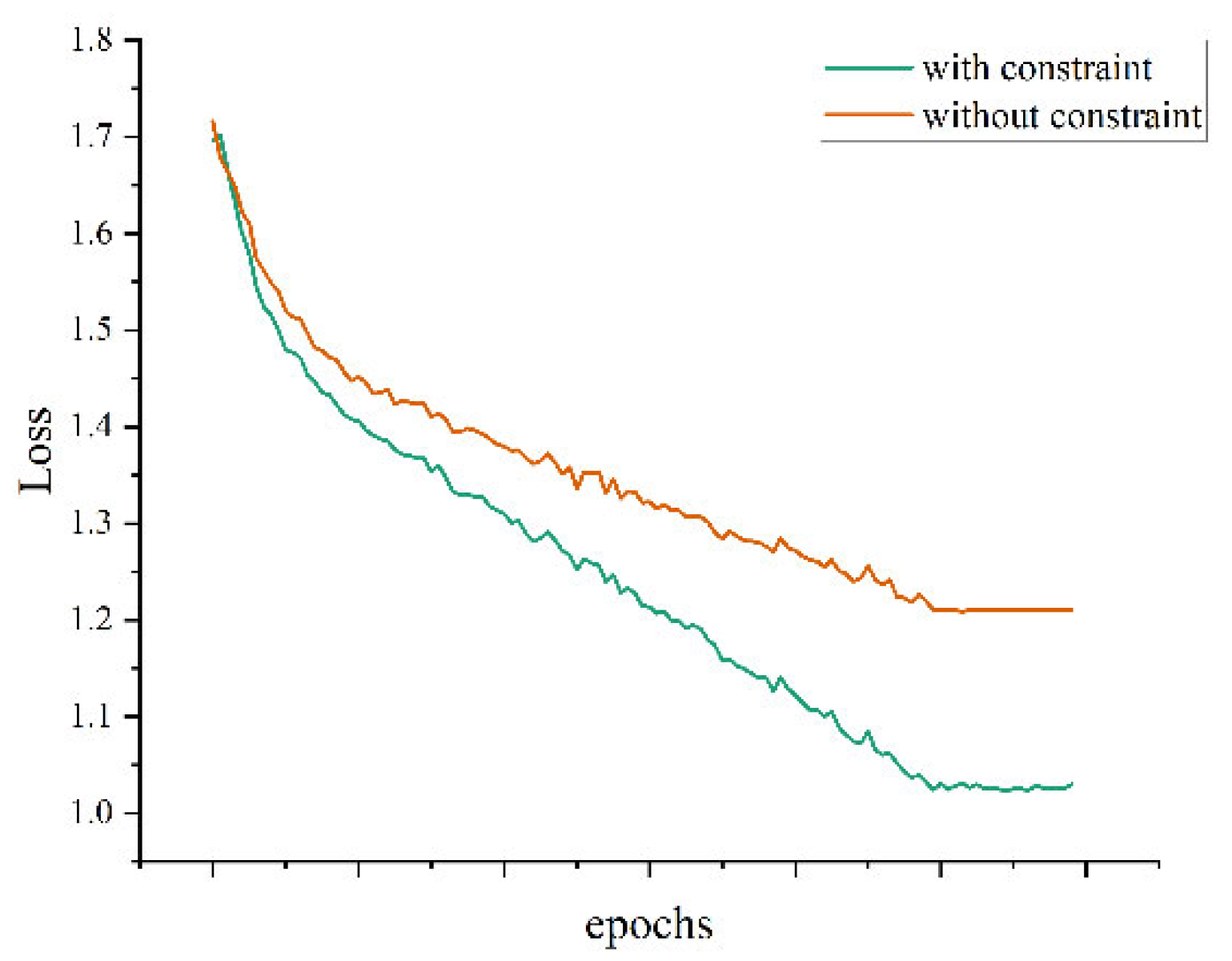

In this paper, OneCycleLR is used to adjust the learning rate dynamically during training. At the beginning of training, the learning rate is gradually increased so that the parameters can be updated quickly; once training has progressed to a certain extent, the learning rate is gradually reduced and stabilized so that the network is more likely to converge to parameters with a smaller loss.
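The rise-then-decay behaviour can be illustrated with a simplified one-cycle schedule. The sketch below is not the scheduler implementation used in the experiments: it is a plain-Python approximation with cosine annealing, and the parameter names and default values (`pct_start`, `div_factor`, `final_div_factor`) are borrowed from PyTorch's `torch.optim.lr_scheduler.OneCycleLR` as assumptions, since the paper does not state its settings.

```python
import math

def one_cycle_lr(step, total_steps, max_lr,
                 pct_start=0.3, div_factor=25.0, final_div_factor=1e4):
    """Simplified one-cycle schedule: cosine warm-up to max_lr, then cosine decay."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    warmup_steps = max(1, int(pct_start * total_steps))

    def cos_anneal(start, end, pct):
        # pct = 0 returns start, pct = 1 returns end
        return end + (start - end) / 2.0 * (1.0 + math.cos(math.pi * pct))

    if step < warmup_steps:
        return cos_anneal(initial_lr, max_lr, step / warmup_steps)
    decay_steps = max(1, total_steps - 1 - warmup_steps)
    pct = min(1.0, (step - warmup_steps) / decay_steps)
    return cos_anneal(max_lr, min_lr, pct)

# Hypothetical run of 100 steps with a peak learning rate of 0.01.
schedule = [one_cycle_lr(s, 100, 0.01) for s in range(100)]
```

With these assumed defaults the rate starts at `max_lr / 25`, peaks at step 30, and ends four orders of magnitude below its starting value.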

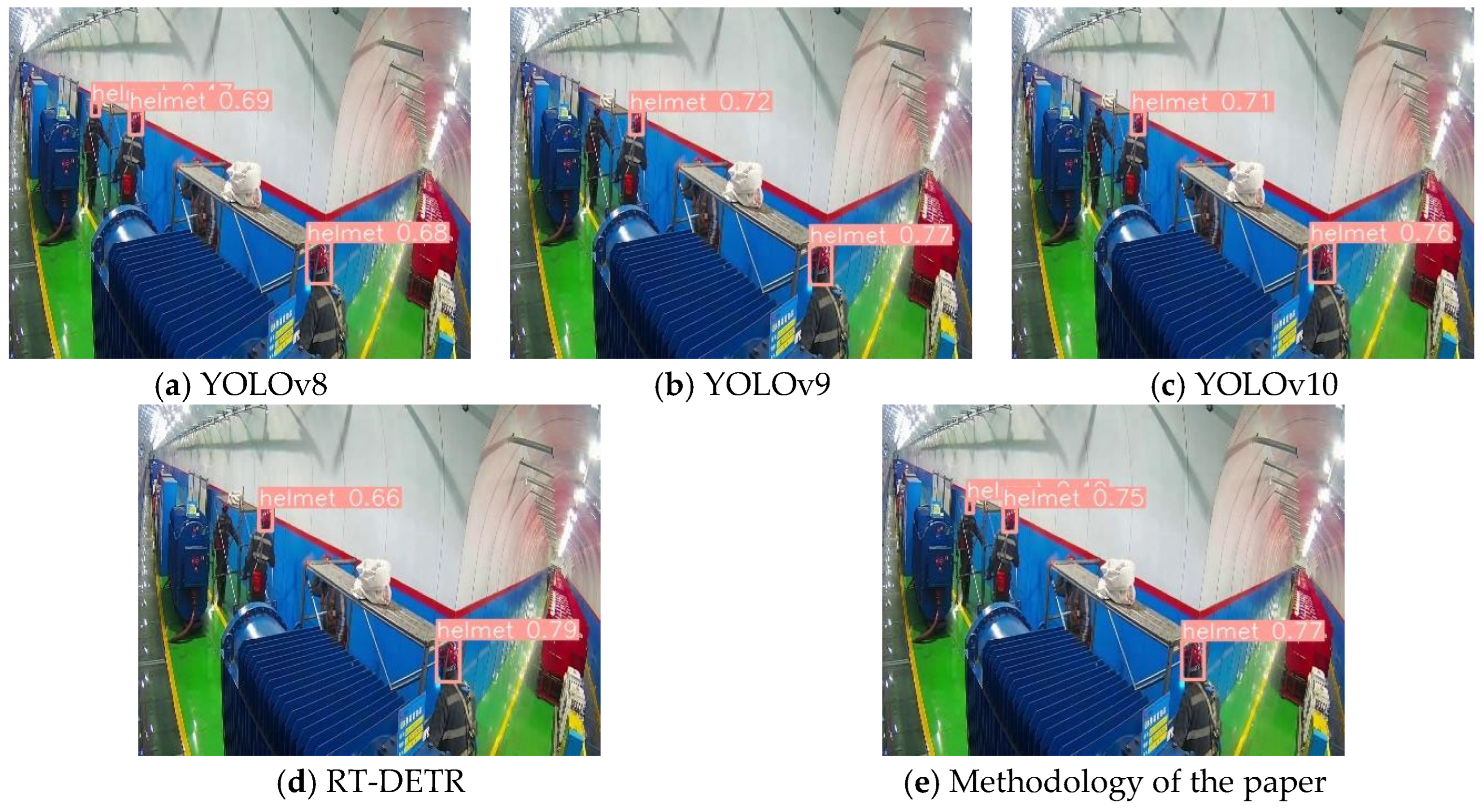

4. Conclusions

In this paper, we proposed a novel yet practical method for detecting small helmet-wearing targets underground under strong background interference. The method consists of two parts: data processing and structure design. First, to deal with the spatial bias of helmet objects in the underground coal mine environment, we apply data augmentation to mitigate this strong bias. Second, to overcome the extreme imbalance of helmet-wearing data in real coal mine environments, we mix the underground samples with publicly available samples from ordinary scenes, which enhances sample diversity. Because the helmet is a small target whose aspect ratio changes little, we design a backbone optimization module based on dynamic background perception to mask the background and reduce its interference; we optimize the detection head by fusing global and local information to improve small object detection; and we design the loss function with dynamic weight adjustment based on the aspect ratio to improve helmet-wearing detection performance.

Experiments show that the proposed algorithm improves the accuracy of small helmet-wearing target detection in underground coal mine scenes: its accuracy is 5.1% higher than that of the baseline model YOLOv10l, and its detection performance also exceeds that of current convolution-based methods (the YOLO series) and attention-based methods (e.g., RT-DETR).

Although the proposed method is effective in our experimental evaluation, we acknowledge that emerging model architectures will offer alternative solutions. The core design principles underlying our approach, particularly those regarding data processing and structure design, nevertheless remain transferable to future real-time detection systems. In addition, the data mixing strategy requires careful consideration of the domain distribution: when the domain discrepancy is large, mixing risks degrading performance and may hinder model convergence. In helmet detection, however, such large domain gaps do not arise, because the feature distributions are inherently consistent across mining environments.