1. Introduction

Systematic reviews are a cornerstone of evidence-based decision making across a range of disciplines. Unlike traditional literature reviews, systematic reviews follow a rigorous methodology to identify, evaluate, and synthesize all available evidence relevant to a specific research question. This process typically involves a comprehensive search of the literature, predefined inclusion and exclusion criteria, and systematic data extraction and analysis. While essential in building cumulative knowledge, systematic reviews are also time-consuming and labor-intensive, often requiring months of work by domain experts [1].

In medicine, systematic reviews play a critical role in synthesizing clinical evidence to inform guidelines, policy decisions, and individual patient care. These reviews are particularly demanding due to the volume and heterogeneity of biomedical literature, the need for high precision and recall, and the importance of reproducibility and transparency [2]. Given these challenges, technology-assisted review (TAR) systems have emerged as crucial tools in the healthcare domain, particularly in supporting the growing demand for timely and comprehensive systematic reviews. As the volume of biomedical literature continues to rise, traditional manual review processes are becoming increasingly insufficient to ensure that healthcare decisions are informed by the best available evidence. TAR systems leverage intelligent algorithms, ranging from classical machine learning to neural ranking models and active learning frameworks, to enhance the efficiency, accuracy, and scalability of the systematic review pipeline [3]. These systems not only reduce the cognitive and temporal burden on human reviewers but also contribute to reproducibility, transparency, and adaptability in evidence-based medicine [4].

Evaluating TAR systems presents distinct challenges that go beyond those of traditional information retrieval settings. Standard metrics used in text classification and information retrieval, such as recall, precision, and the area under the curve, primarily measure the system's ability to retrieve relevant documents [5]. However, these metrics often assume an idealized environment where resources (time, money, and human effort) are unlimited. In practice, systematic reviews, particularly in medicine, operate under strict resource constraints: reviewers must balance the goal of achieving high recall with the reality of limited budgets and tight timelines [6,7]. Furthermore, existing metrics rarely account for the actual cost of reviewing documents, which includes the cognitive burden and time demands placed on human experts. Another complication arises when the true recall is unknown or difficult to estimate, especially in live systematic review projects where the entire set of relevant documents is not available [8]. As a result, there is growing recognition that the evaluation of TAR systems should incorporate cost awareness, risk management, and practical stopping criteria, rather than relying solely on traditional retrieval performance indicators. Cost-aware perspectives are therefore critical in assessing the true utility of TAR systems and guiding their design [9].

The CLEF eHealth (https://clefehealth.imag.fr/ (accessed on 1 May 2025)) initiative has been a key venue in evaluating the performance of TAR systems in the context of medical systematic reviews. Between 2017 and 2019 [10,11,12], the CLEF eHealth TAR tasks provided a benchmark for comparing automated and semi-automated approaches using realistic, large-scale datasets derived from Cochrane reviews (https://www.cochranelibrary.com/ (accessed on 1 May 2025)). These tasks aimed to simulate real-world conditions by offering a continuous active learning setup, assessing systems based on their ability to retrieve relevant documents efficiently and effectively. This paper provides a critical overview of the experiments conducted in the CLEF eHealth TAR tasks over these three years, highlighting methodological trends, performance outcomes, and implications for future development in medical TAR systems.

Our re-examination of the CLEF eHealth TAR tasks conducted from 2017 to 2019 makes the following key contributions.

Reproduction of Official Results: We collect, organize, and verify the official participant runs from the CLEF eHealth TAR tasks (2017–2019), ensuring reproducibility and providing a unified view of system performance across editions.

Exploration of Budget Allocation Strategies: We investigate alternative strategies for allocating limited review budgets across multiple topics. By analyzing budget-aware screening performance, we provide practical insights for the optimization of systematic reviews under resource constraints.

Introduction of Cost-Aware Evaluation Metrics: We propose two novel measures—relevant found per cost unit (RFCU@k) and utility gain at budget (UG@B)—designed to capture the trade-offs between retrieval effectiveness and resource consumption in TAR systems.

This study makes a distinct contribution to the TAR literature by shifting the focus from single-topic ranking performance to the broader problem of budget allocation across multiple topics under realistic constraints. Whereas most prior evaluations have assumed unconstrained or uniform review budgets, we formalize allocation as a policy optimization problem and introduce two novel budget-aware metrics, RFCU and UG@B, that capture the complementary dimensions of efficiency and utility. To the best of our knowledge, no prior study has systematically investigated budget allocation strategies in evaluating TAR systems. By reanalyzing three years of CLEF eHealth TAR tasks under standardized budget assumptions, we show that allocation policies, whether simple heuristics or adaptive approaches, can materially influence review outcomes. These results provide both methodological advances and practical insights, offering the community new tools and benchmarks to better align TAR evaluation with the realities of systematic review practice.

The remainder of this paper is organized as follows. In Section 2, we introduce budget-aware perspectives on technology-assisted reviews and discuss their implications for screening strategies. Section 3 presents the CLEF eHealth TAR lab, describing its setting, tasks, and relevance for empirical medicine. Section 4 reports on the experimental analysis, evaluating different budget allocation strategies. Finally, Section 5 concludes the paper and outlines directions for future work.

2. Beyond Recall: Budget-Aware Perspectives in Technology-Assisted Reviews

Although metrics such as recall and workload reduction are standard in evaluating TAR systems, they only partially capture the practical constraints under which systematic reviews are conducted. In real-world settings—especially in medical domains—reviewers often operate under limited time, budgets, and cognitive resources. As such, cost-aware perspectives are critical in assessing the true utility of TAR systems and guiding their design. Several dimensions of cost remain underexplored in current TAR research.

First, most evaluation frameworks assume unlimited resources for document screening, with few efforts to simulate budget-constrained or time-sensitive review scenarios. Integrating explicit constraints, such as fixed review time or monetary limits, could yield insights into the robustness of different systems under realistic operational pressures. Second, human-in-the-loop efficiency remains largely unquantified. The time per decision, fatigue effects, and the interpretability of ranking outputs are rarely measured but have substantial impacts on reviewer performance and user acceptance. Third, the computational cost of TAR systems, especially neural architectures, is seldom considered relative to their performance gains. Lightweight, non-neural models may offer similar effectiveness at a significantly lower cost in terms of infrastructure, energy consumption, and deployment complexity, factors that are particularly relevant for smaller institutions or global health contexts. Fourth, few studies account for the opportunity costs of screening inefficiencies or misclassifications. The delayed identification of relevant studies can affect the timeliness of clinical guideline implementation or policy decisions, which carries downstream consequences that are not reflected in retrieval metrics alone.

To address these limitations, research on budget allocation should adopt broader experimental protocols that model cost explicitly. This includes collecting timing data, incorporating economic analyses, and conducting mixed-method user studies. Comparative benchmarks should evaluate TAR systems not only by their retrieval effectiveness but also by their efficiency, transparency, and long-term sustainability.

2.1. Examples of Budget Allocation in Information Retrieval

While considerable research in the technology-assisted review (TAR) field has focused on stopping strategies and recall-oriented optimization, the problem of how to distribute review effort across multiple topics or tasks has received far less attention. In particular, budget allocation—how limited annotation or screening resources should be apportioned across topics—remains an underexplored area. To the best of our knowledge, budget allocation in TAR systems has not yet been systematically investigated, despite its growing relevance in scenarios involving large-scale, multitopic systematic reviews.

In this section, we examine related work on budget allocation in adjacent areas, highlighting strategies and frameworks that could inform future developments in TAR.

The TREC 2017 Common Core track explored how bandit techniques could be used not only to simulate but also to construct a test collection in real time. This requires addressing practical challenges beyond simulations, such as allocating annotation effort across topics and enabling assessors to familiarize themselves with each topic, while also developing infrastructure for real-time document selection and judgment acquisition [13].

Constructing reliable and low-cost IR test collections often depends on how annotation budgets are distributed across topics. Traditional strategies, as used in TREC, typically assign a uniform budget per topic, often tied to a fixed top-k pool size. However, more recent work has highlighted the limitations of such static allocation and has proposed dynamic approaches based on topic-specific needs [14].

Flexible budget models have also been studied in the context of sequential decision making. A generalization of restless multiarmed bandits (RMABs), known as flexible RMABs (F-RMABs), has been proposed to allow resource redistribution across decision rounds. This framework supports more realistic planning scenarios and provides a theoretically grounded algorithm for optimal budget allocation over time [15].

In multichannel advertising, reinforcement learning techniques have been applied to optimize long-term outcomes under budget constraints. A hybrid Q-learning and differential evolution (DE) approach has been introduced to dynamically allocate resources across channels, taking into account delayed effects and interdependencies between actions and outcomes [16].

Budget-sensitive approaches have also emerged in data annotation settings such as multilabel classification. A reverse auction framework has been proposed to select crowd workers based on cost and confidence, combined with systematic budget selection strategies (e.g., greedy and multicover selection) to address domain coverage limitations within fixed budgets [17].

Finally, contextual bandits, widely used in online recommendation systems, have been extended to handle biased user feedback caused by herding effects. This line of research, while not directly focused on budget, provides useful insights into sequential decision making under partial observability and feedback bias, which are relevant for adaptive resource allocation [18].

2.2. A Proposal of Budget Allocation Strategies in TAR Systems

In cost-constrained learning scenarios such as active learning, annotation campaigns, or document screening workflows, a central challenge is how to allocate a fixed labeling or review budget in order to maximize utility [16,19,20]. The budget may represent time, a monetary cost, or human effort and must be judiciously spent across a sequence of uncertain decisions. While most traditional active learning approaches focus on selecting the next most informative item, they often assume a static or uniform cost per query and overlook the global allocation question: how much effort should be spent on which data, tasks, or domains?

This budget allocation problem has been relatively understudied in TAR systems, despite its crucial role in real-world deployments. For example, in multiclass classification, multidomain retrieval, or document triage tasks, allocating too much effort to low-yield areas can waste resources, while underinvesting in promising areas reduces overall effectiveness. In [21], the authors mention that “it has been estimated that systematic reviews take, on average, 1139 h (range 216–2518 h) to complete and usually require a budget of at least $100,000”. Therefore, a robust allocation strategy must consider both the expected gain from labeling additional examples and the associated cost, potentially adapting over time as feedback is observed. This requires balancing the exploitation of known high-utility areas with the exploration of uncertain regions and motivates strategies that incorporate cost awareness, utility estimation, or uncertainty modeling into the allocation process.

Despite the importance of this aspect, most evaluations of TAR systems focus on within-topic ranking performance, assuming unlimited or per-topic isolated review budgets. However, in real-world scenarios such as legal reviews, systematic medical literature reviews, or multitopic classification, practitioners operate under a global screening budget that must be distributed across heterogeneous topics of varying size, relevance prevalence, and ranking quality [9,22,23,24,25,26,27,28].

In this study, we present a set of straightforward yet informative strategies for distributing review effort across topics. Rather than proposing complex adaptive policies, our objective is to analyze how different allocation schemes affect system performance and evaluation metrics under constrained review conditions. To this end, we wish to re-evaluate the CLEF eHealth TAR experiments from the 2017 to 2019 editions by applying a budget allocation strategy across all runs. This approach ensures that each TAR system is assessed under the same labeling cost constraints, allowing for a fair and consistent comparison of their effectiveness. By standardizing the annotation budget, we eliminate variability caused by differing cost assumptions in the original submissions, thereby focusing the evaluation on the intrinsic capabilities of the TAR methods themselves, rather than on disparities in resource expenditure.

In Sections 2.2.1–2.2.4, we present four baseline strategies for budget allocation: even, proportional, inversely proportional, and threshold-capped greedy allocation.

We selected these four strategies to reflect a range of simple, interpretable policies with different assumptions about topic relevance distribution. These strategies provide a useful contrast between uniform effort (even), topic prior-based effort (proportional/inverse), and a greedy baseline driven by estimated gains.

We assume that we have a budget, $B$, available, and we need to allocate it across the set of topics $T = \{t_1, \dots, t_N\}$, with a total number of topics equal to $N$. $B_i$ will be the budget allocated for the $i$-th topic $t_i$. The total number of documents available for the $i$-th topic is $n_i$.

2.2.1. Even Allocation

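The even allocation strategy divides the total review budget equally across all topics:

$$B_i = \frac{B}{N}$$

where $B_i$ is the budget allocated to the $i$-th topic and $N$ is the number of topics.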

This approach ensures the uniform treatment of all topics, regardless of their size or difficulty. It is simple, fair, and effective in scenarios where topics are equally important or we lack prior estimations of relevance prevalence or difficulty. It may underperform when topic sizes are highly imbalanced or when some topics are significantly more relevant than others.
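As a minimal sketch of this rule, the Python function below splits a budget evenly across topics. The function name, the topic identifiers, and the choice to round down to whole documents are illustrative assumptions; they are not taken from the CLEF eHealth tooling.

```python
def even_allocation(total_budget: int, topic_sizes: dict[str, int]) -> dict[str, int]:
    """Give every topic the same share of the budget, B_i = B / N.

    Assumption: budgets are whole documents, so the share is rounded down
    and any leftover documents remain unassigned.
    """
    n_topics = len(topic_sizes)
    if n_topics == 0:
        return {}
    share = total_budget // n_topics
    return {topic: share for topic in topic_sizes}


# Hypothetical topics with very different sizes.
sizes = {"topic_a": 200, "topic_b": 1000, "topic_c": 4800}
allocation = even_allocation(600, sizes)
```

Note that the share does not depend on the topic size, so in this sketch a small topic may receive a budget close to (or above) its total number of documents.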

2.2.2. Proportional Allocation

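Proportional allocation distributes the budget in proportion to the number of documents in each topic:

$$B_i = B \cdot \frac{n_i}{\sum_{j=1}^{N} n_j}$$

where $n_i$ is the number of documents available for the $i$-th topic and $N$ is the number of topics.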

This strategy assumes that larger topics deserve more review effort. This approach reflects the topic scale and avoids undersampling large collections, but it can overcommit to large topics with low relevance prevalence, reducing the overall retrieval efficiency.
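The proportional rule can be sketched in a few lines of Python. As before, the function name, the topic identifiers, and the rounding down to whole documents are assumptions made for illustration only.

```python
def proportional_allocation(total_budget: int, topic_sizes: dict[str, int]) -> dict[str, int]:
    """Allocate the budget in proportion to topic size, B_i = B * n_i / sum_j n_j.

    Assumption: budgets are whole documents, so each share is rounded down.
    """
    total_docs = sum(topic_sizes.values())
    if total_docs == 0:
        return {topic: 0 for topic in topic_sizes}
    return {
        topic: total_budget * n_docs // total_docs
        for topic, n_docs in topic_sizes.items()
    }


# Hypothetical topics: the largest topic receives most of the budget.
sizes = {"topic_a": 200, "topic_b": 1000, "topic_c": 4800}
allocation = proportional_allocation(600, sizes)
```

With these illustrative sizes, every topic is screened to the same depth (10% of its documents), which is what makes the strategy sensitive to large topics with few relevant documents.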

2.2.3. Inversely Proportional Allocation

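Inversely proportional allocation gives more effort to smaller topics, based on the notion that they can be reviewed more thoroughly and may yield higher accuracy averaged across the topics:

$$B_i = B \cdot \frac{1/n_i}{\sum_{j=1}^{N} 1/n_j}$$

where $n_i$ is the number of documents available for the $i$-th topic and $N$ is the number of topics.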

It prioritizes small topics that may otherwise be overlooked, and it should improve the recall by ensuring better topic coverage under tight budgets. The limitation of this approach is that it may underallocate effort to large but relevance-rich topics.
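A possible implementation of the inverse rule is sketched below, assuming that the weight of each topic is the reciprocal of its size, normalized over all topics. Exact fractions are used only to keep the illustrative arithmetic free of rounding noise; the function name and topic identifiers are hypothetical.

```python
from fractions import Fraction


def inverse_proportional_allocation(total_budget: int, topic_sizes: dict[str, int]) -> dict[str, int]:
    """Allocate the budget with weights 1 / n_i, normalized over all topics.

    Assumption: budgets are whole documents, so each share is rounded down.
    """
    if any(n_docs <= 0 for n_docs in topic_sizes.values()):
        raise ValueError("every topic must contain at least one document")
    weights = {topic: Fraction(1, n_docs) for topic, n_docs in topic_sizes.items()}
    total_weight = sum(weights.values())
    return {
        topic: int(total_budget * weight / total_weight)
        for topic, weight in weights.items()
    }


# Hypothetical topics: the smallest topic receives the largest share.
sizes = {"topic_a": 100, "topic_b": 200, "topic_c": 400}
allocation = inverse_proportional_allocation(70, sizes)
```

Because the share grows as the topic shrinks, a practical variant would probably cap each allocation at the topic size; that cap is left out here to stay close to the rule as described.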

2.2.4. Threshold-Capped Greedy Allocation

This strategy allocates budget in a greedy, depth-first manner, prioritizing smaller topics. Topics are sorted in ascending order of the available number of documents $n_i$, and the budget is allocated sequentially until exhaustion. For each topic, allocation is capped at either a fraction $\tau$ of its total size or the remaining budget, $B_{\mathrm{rem}}$:

$$B_i = \min\left(\tau \cdot n_i,\; B_{\mathrm{rem}}\right)$$

The idea of this approach is to quickly screen small topics, enabling broader coverage when only partial review is feasible. The drawback is that it may lead to the neglect of later (often larger) topics unless combined with a secondary reallocation strategy.
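The sequential procedure described above can be sketched as follows. The function name, the parameter name `fraction` for the cap, and the rounding down of the cap to whole documents are assumptions for illustration.

```python
def threshold_capped_greedy(
    total_budget: int, topic_sizes: dict[str, int], fraction: float
) -> dict[str, int]:
    """Visit topics from smallest to largest and spend up to a fraction of each.

    Each topic receives min(fraction * size, remaining budget); topics reached
    after the budget is exhausted receive zero.
    """
    remaining = total_budget
    allocation: dict[str, int] = {}
    for topic, n_docs in sorted(topic_sizes.items(), key=lambda item: item[1]):
        cap = int(fraction * n_docs)  # assumption: round the cap down
        spend = min(cap, remaining)
        allocation[topic] = spend
        remaining -= spend
    return allocation


# Hypothetical topics: the largest topic only gets what is left over.
sizes = {"topic_c": 400, "topic_a": 100, "topic_b": 200}
allocation = threshold_capped_greedy(200, sizes, fraction=0.5)
```

In this illustrative run the two smaller topics reach their 50% cap, while the largest topic receives only the 50 documents that remain, which is the neglect of later topics noted in the text.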

These strategies provide foundational tools for managing review effort in multitopic TAR settings. While simple, they offer contrasting trade-offs between fairness, coverage, and efficiency. In Section 4, we analyze their empirical performance and explore adaptive alternatives based on relevance prevalence and utility gain.

One final remark concerns the fact that the budget allocation strategies may operate in parallel with a stopping strategy at the topic level. While a global screening budget constrains the total effort across all topics, individual topics may consume less than their allocated share if an early stopping condition is met—for example, when an estimated recall threshold is achieved or the marginal utility drops below a predefined threshold. In practice, this allows the reallocation of unused budget to other topics that remain uncertain or underexplored. However, the problem of recall estimation and stopping decision making introduces additional complexity, requiring reliable recall estimators or predictive models. In this work, we focus exclusively on the budget allocation problem under the assumption of fixed topic-level effort, and we leave the investigation of stopping strategies to future work.

2.3. Budget-Aware Evaluation Metrics

Traditional evaluations of TAR systems often prioritize effectiveness metrics, such as recall, while focusing on reducing the workload [10,11,12]. However, these metrics only offer a partial perspective on system utility, particularly when reviews must be performed under real-world resource constraints. To address this gap, we propose a budget-aware framework for evaluating TAR systems where we treat the systematic review process as a decision-making problem under budget constraints. Each document-screening action is associated with a cost (whether in terms of time, cognitive load, or financial expenditure), while the benefit is measured by how effectively the system retrieves relevant studies. To quantify this balance, we introduce budget-sensitive metrics like the relevant found per cost unit (RFCU) and utility gain at budget (UG@B), which measure system effectiveness while factoring in resource limitations.

These budget-aware metrics are intended to complement, rather than replace, traditional evaluation measures such as recall, precision, or F1. While standard metrics provide essential insights into the overall effectiveness of TAR systems, they do not capture how efficiently this performance is achieved in terms of the annotation cost. Budget-aware metrics fill this gap by explicitly linking performance to resource usage, offering a more refined view of system behavior under realistic constraints. By considering both sets of metrics, we gain a more comprehensive understanding of each system’s strengths—balancing absolute effectiveness with cost-efficiency—which is crucial for informed deployment in high-cost, time-sensitive review settings.

The proposed budget-aware metrics used in our evaluation operate under the realistic assumption that the total number of relevant documents is unknown—a typical scenario in technology-assisted review tasks. Rather than relying on oracle knowledge of how many relevant items remain undiscovered, these measures focus exclusively on the performance achieved within the constraints of the allocated budget. This means that we evaluate systems based on the actual annotation cost incurred and the utility of the results retrieved and not based on hypothetical outcomes that assume full awareness of the relevance distribution. This approach ensures that our evaluation remains grounded in the practical limitations of real-world applications, where exhaustive relevance judgments are rarely available.

We begin our presentation of the new metrics by reviewing Recall@k, which measures the proportion of relevant documents retrieved within the first $k$ screened documents:

$$\mathrm{Recall@}k = \frac{TP_k}{P}$$

where $TP_k$ is the number of relevant documents (true positives, TP) found up to rank $k$, and $P$ is the total number of relevant documents (positives, P) in the collection. While Recall@k effectively captures early retrieval performance, it assumes that screening cost is uniform and unlimited. In real-world TAR scenarios, reviewers operate under strict resource constraints. Consequently, Recall@k may fail to reflect the efficiency with which relevant documents are identified relative to the effort expended.
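For concreteness, Recall@k can be computed from a ranked list of binary relevance labels as in the sketch below. The function name and the toy label list are assumptions made for illustration; the total number of relevant documents is passed explicitly because some relevant documents may lie beyond the screened portion of the ranking.

```python
def recall_at_k(labels: list[int], k: int, total_relevant: int) -> float:
    """Fraction of all relevant documents found in the first k ranked documents.

    labels[i] is 1 if the document at rank i + 1 is relevant and 0 otherwise.
    """
    if total_relevant <= 0:
        raise ValueError("total_relevant must be positive")
    return sum(labels[:k]) / total_relevant


# Hypothetical ranking: 3 of the 4 relevant documents appear in the top 10.
ranked_labels = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
recall_top5 = recall_at_k(ranked_labels, k=5, total_relevant=4)
```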

To address these limitations, we propose two complementary baseline budget-aware evaluation metrics: relevant found per cost unit (RFCU) and utility gain at budget (UG@B). Let B denote the reviewing budget, expressed as the number of documents that experts can examine for a systematic review. Given a ranked list of documents and their binary relevance labels, we wish to measure how effectively a system identifies relevant documents while minimizing wasted effort on nonrelevant ones.

2.3.1. Relevant Found per Cost Unit@k

RFCU@k measures how many relevant documents are retrieved per unit of cost within the first $k$ documents. It is defined as

$$\mathrm{RFCU@}k = \frac{TP_k}{k \cdot c}$$

where

$TP_k$ is the number of relevant documents found within the first $k$ screened documents;

$c$ is the cost of reviewing a single document (e.g., in units of time, money, or effort).

RFCU@k captures the cost-efficiency of the screening process. Unlike Recall@k, it directly accounts for the screening cost, making it particularly appropriate when the objective is to maximize the yield of relevant documents per dollar or hour spent.
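A minimal sketch of RFCU@k is given below, assuming a constant cost per document so that screening the first k documents costs k times that unit cost. The function name, the default cost, and the toy ranking are illustrative assumptions.

```python
def rfcu_at_k(labels: list[int], k: int, cost_per_doc: float = 1.0) -> float:
    """Relevant documents found in the top k, divided by the cost of screening them.

    Assumption: every document costs the same, so the total cost is k * cost_per_doc.
    """
    if k <= 0 or cost_per_doc <= 0:
        raise ValueError("k and cost_per_doc must be positive")
    return sum(labels[:k]) / (k * cost_per_doc)


# Hypothetical ranking: the same top 5 becomes less efficient as the unit cost grows.
ranked_labels = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
cheap = rfcu_at_k(ranked_labels, k=5, cost_per_doc=2.0)
expensive = rfcu_at_k(ranked_labels, k=5, cost_per_doc=4.0)
```

Doubling the unit cost halves the score even though the ranking itself is unchanged, which is the behavior that distinguishes this measure from Recall@k.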

To better illustrate the difference between Recall@k and RFCU@k, consider the following hypothetical scenario: suppose that a document collection contains $P = 100$ relevant documents. A reviewer screens the top $k = 50$ documents, finding $TP_k = 20$ relevant documents. The cost per document assessed is $c = 2$ cost units (e.g., due to document length or review complexity).

Recall@k measures the proportion of relevant documents retrieved within the top $k$ documents:

$$\mathrm{Recall@}50 = \frac{20}{100} = 0.2$$

Thus, 20% of all relevant documents are found after screening 50 documents.

RFCU@k measures the number of relevant documents retrieved in the top-$k$ documents per unit of cost spent:

$$\mathrm{RFCU@}50 = \frac{20}{50 \cdot 2} = 0.2$$

Thus, the system achieves an efficiency of $0.2$ relevant documents per budget unit.

Although, in this example, the numerical values of Recall@k and RFCU@k coincide, they measure fundamentally different aspects:

Recall@k evaluates how much of the total relevant information has been retrieved;

RFCU@k evaluates how efficiently the review effort has been translated into relevant findings, accounting for the cost per document.

If the cost per document $c$ were higher (e.g., $c = 4$), RFCU@k would drop:

$$\mathrm{RFCU@}50 = \frac{20}{50 \cdot 4} = 0.1$$

whereas Recall@k would remain unchanged.

Another important aspect is that RFCU@k resembles Precision@k, although the interpretation and objectives of the two measures differ. Precision@k measures the proportion of relevant documents among the top-$k$ retrieved documents:

$$\mathrm{Precision@}k = \frac{TP_k}{k}$$

Precision@k reflects the purity of the top-$k$ results and is bounded between 0 and 1. It assumes that the cost of assessing each document is constant and does not influence the evaluation. RFCU@k accounts explicitly for the screening cost. When $c = 1$, RFCU@k reduces to Precision@k. However, when the document assessment costs vary (e.g., due to the document length or reviewer expertise requirements), RFCU@k provides an evaluation of system efficiency that takes screening costs into account.
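The relationship between the two measures can be checked numerically with the short sketch below, which assumes a constant cost per document. Both function names and the toy ranking are hypothetical.

```python
def precision_at_k(labels: list[int], k: int) -> float:
    """Proportion of relevant documents among the first k ranked documents."""
    return sum(labels[:k]) / k


def rfcu_at_k(labels: list[int], k: int, cost_per_doc: float) -> float:
    """Relevant documents in the top k per unit of screening cost (constant cost assumed)."""
    return sum(labels[:k]) / (k * cost_per_doc)


ranked_labels = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]

# With a unit cost of 1, the two measures coincide.
same = precision_at_k(ranked_labels, 5) == rfcu_at_k(ranked_labels, 5, cost_per_doc=1.0)

# With a higher unit cost, only RFCU@k changes.
costly = rfcu_at_k(ranked_labels, 5, cost_per_doc=2.5)
```

Under this assumption the two measures differ only by the factor 1/c, so RFCU@k is no longer bounded by 1 when the unit cost is smaller than one.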

2.3.2. Utility Gain at Budget (UG@B)

UG@B formalizes the balance between the gain in finding relevant documents and the cost of reviewing nonrelevant ones. It is defined as

$$\mathrm{UG@}B = g \cdot TP_{B_i} - c \cdot \left(B_i - TP_{B_i}\right)$$

where

$B_i$ is the total number of documents reviewed for the $i$-th topic, of which $TP_{B_i}$ are relevant;

$g$ is the gain associated with reviewing a relevant document;

$c$ is the penalty associated with reviewing a nonrelevant document.
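The sketch below shows one way to compute a utility gain for a single topic, assuming the utility is the gain times the number of relevant documents reviewed minus the penalty times the number of nonrelevant documents reviewed within the budget. The function name, the default values of the gain and penalty, and the toy ranking are assumptions; how per-topic values are aggregated is not addressed here.

```python
def utility_gain_at_budget(
    labels: list[int], budget: int, gain: float = 1.0, penalty: float = 1.0
) -> float:
    """Gain from relevant documents minus penalty for nonrelevant ones within the budget.

    labels[i] is 1 if the document at rank i + 1 is relevant and 0 otherwise.
    Only the first `budget` documents are considered reviewed.
    """
    reviewed = labels[:budget]
    relevant = sum(reviewed)
    nonrelevant = len(reviewed) - relevant
    return gain * relevant - penalty * nonrelevant


# Hypothetical ranking: 2 relevant and 3 nonrelevant documents in the top 5.
ranked_labels = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
utility = utility_gain_at_budget(ranked_labels, budget=5, gain=2.0, penalty=1.0)
```

The score becomes negative as soon as the penalty accumulated on nonrelevant documents outweighs the gain, which makes the choice of gain and penalty a modeling decision rather than a fixed constant.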

While Recall@k, like other traditional measures, remains valuable in assessing early retrieval effectiveness, it does not account for the practical costs associated with reviewing documents. RFCU@k and UG@B extend the traditional evaluation by incorporating budget awareness and cost-efficiency, providing a more actionable framework for the assessment of TAR systems under real-world constraints.

3. The CLEF eHealth Technology-Assisted Review Lab

CLEF eHealth was a large and extensive evaluation challenge in the medical and biomedical domain within the CLEF initiative. The goal was to provide researchers with datasets, evaluation frameworks, and events. The CLEF eHealth TAR lab, conducted from 2017 to 2019 [10,11,12], aimed to advance the development of intelligent systems to support the systematic review process in evidence-based medicine. Systematic reviews are vital in synthesizing research findings and guiding clinical and policy decisions, yet they are often labor-intensive and time-consuming due to the exponential growth of the medical literature. The TAR lab addressed this challenge by providing a benchmark platform for evaluating automated and semi-automated methods capable of assisting reviewers in identifying relevant scientific articles.

The main objectives of the TAR lab were threefold:

To develop and evaluate technology-assisted methods that support different stages of systematic reviews, including document retrieval, title and abstract screening, and ranking;

To assess how well such systems could replicate or improve upon expert-generated results, particularly in the context of diagnostic test accuracy (DTA) reviews;

To create reusable test collections and protocols that could foster reproducible and comparative experimentation across the research community.

Each year, the lab introduced realistic tasks simulating distinct phases of the systematic review process. In 2017, the lab focused on prioritizing citations retrieved by expert Boolean queries. In 2018 and 2019, the lab expanded to two subtasks: (1) retrieving relevant documents using only structured review protocols (no Boolean search) and (2) ranking documents retrieved by expert searches (title and abstract screening). In particular, some of the challenges posed by the TAR tasks were as follows:

Data sparsity and imbalance: Relevant studies were a small minority within large retrieval sets, mimicking the real-world screening burden.

Domain specificity: DTA reviews required an understanding of complex medical terminology and concepts.

Limited supervision: Especially in the no Boolean search subtask, systems had to operate with minimal training data and no expert-crafted queries.

Across the three editions, the TAR lab demonstrated that automatic and semi-automatic methods could substantially reduce the human effort required to complete systematic reviews without compromising their comprehensiveness. Participants employed a wide range of techniques, including classic machine learning, neural ranking models, and query expansion strategies. Some of the general findings were as follows:

High recall achievable with limited review effort: Several systems reached near-complete recall for relevant studies after reviewing only a fraction of the dataset.

Protocol-based retrieval remains challenging: The no Boolean search subtask proved to be the most difficult, with mixed results across teams and significant room for improvement.

Iterative strategies outperform static ones: Approaches that leveraged feedback loops, such as active learning or reranking, were generally more effective than static ranking methods.

3.1. CLEF eHealth 2017 Technology-Assisted Review Task

The CLEF eHealth 2017 evaluation lab introduced a new pilot task focusing on technology-assisted reviews (TAR) in empirical medicine [12,29]. The primary objective of this task was to support health scientists and policymakers by enhancing information access during the systematic review process. The TAR task specifically addressed the abstract and title screening phase of conducting diagnostic test accuracy (DTA) systematic reviews. Participants were challenged to develop and evaluate search algorithms aimed at efficiently and effectively ranking studies during this screening phase. The task involved constructing a benchmark collection of fifty systematic reviews, along with corresponding relevant and irrelevant articles identified through original Boolean queries. Fourteen teams participated, submitting a total of 68 automatic and semi-automatic runs, utilizing various information retrieval and machine learning algorithms over diverse text representations, in both batch and iterative modes.

3.2. CLEF eHealth 2018 Technology-Assisted Review Task

The CLEF eHealth 2018 evaluation lab continued its exploration into technology-assisted reviews (TAR) within empirical medicine, aiming to enhance the efficiency and effectiveness of systematic reviews [11,30]. Building upon the foundation laid in 2017, the 2018 lab introduced refinements to its tasks and methodologies. The 2018 TAR task was structured into two subtasks, each designed to simulate different stages of the systematic review process.

Subtask 1: No Boolean Search—Participants were provided with elements of a systematic review protocol, such as the objective, study type, participant criteria, index tests, target conditions, and reference standards. Using this information, they were to retrieve relevant studies from the PubMed database without constructing explicit Boolean queries. The goal was to emulate the initial search phase of a systematic review, relying solely on protocol information to identify pertinent literature.

Subtask 2: Title and Abstract Screening—In this subtask, participants received the results of a Boolean search executed by Cochrane experts, including the set of PubMed Document Identifiers (PMIDs) retrieved. The task involved ranking these abstracts to prioritize the most relevant studies, effectively simulating the screening phase, when reviewers assess titles and abstracts to determine inclusion in the systematic review.

3.3. CLEF eHealth 2019 Technology-Assisted Review Task

The CLEF eHealth 2019 evaluation lab, building upon the foundations established in previous years, introduced refinements to its tasks and methodologies [10,31]. The 2019 TAR task comprised the same two subtasks, each designed to simulate different stages of the systematic review process: subtask 1 (no Boolean search) and subtask 2 (title and abstract screening).

4. Experimental Analysis

In this section, we present the experimental framework adopted to analyze the results of CLEF eHealth TAR tasks from 2017 to 2019. Our primary focus is on reproducing and systematically studying the official runs submitted by participants, with particular attention to the title and abstract screening task. We restrict our analysis to this task because it is the one with sufficient evaluation data across all three editions, whereas the other subtask (no Boolean search) was less consistently populated by participants and thus less suitable for comprehensive comparison. The goal of this experimental analysis is to reproduce the retrieval effectiveness of TAR systems under a recall-oriented perspective, reflecting the critical importance of high recall in systematic reviews. Accordingly, we adopt a set of well-established evaluation metrics that were used in the evaluation of the CLEF TAR labs, each capturing complementary aspects of system performance.

Recall@k: This measures the proportion of relevant documents retrieved within the top k screened documents. This metric provides a direct view of how quickly systems identify relevant studies. We will evaluate the runs when k is equal to the total number of relevant documents.

Area Under the Curve (AUC): Specifically, we compute the AUC of the recall versus documents screened curve, offering a global assessment of the retrieval efficiency across the screening process.

Mean Average Precision (MAP): This summarizes the precision achieved for each relevant document retrieved, providing an aggregate measure of the ranking quality. Although it is traditionally precision-biased, we have seen that this measure is strongly correlated with RFCU@k.

Work Saved over Sampling at Recall (WSS@r): This quantifies the reduction in screening effort relative to random sampling, at a given level of recall, thus highlighting the practical workload benefits offered by TAR systems.

These metrics collectively allow us to capture both the effectiveness (in terms of the retrieval and ranking of relevant documents) and the efficiency (in terms of effort saved) of the systems evaluated during the CLEF eHealth TAR challenges. This analysis serves as the foundation for comparing results under the new cost- and budget-aware evaluation measures proposed in Section 2.3.

We implemented all measures in R and did not rely on the official scripts in order to be consistent with the R programming framework that we established for this analysis.
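As a rough illustration of the four measures listed above, the following Python sketch computes them for a single topic from a ranked list of binary relevance labels. It is neither the R implementation used in this study nor the official CLEF script. The function names, the step-curve approximation of the AUC, the WSS formulation (documents left unscreened at recall r, minus 1 - r), and the assumption that the ranking contains every relevant document are illustrative choices only.

```python
import math
from typing import Sequence


def recall_at_k(rels: Sequence[int], k: int) -> float:
    """Fraction of all relevant documents found in the top k positions."""
    total = sum(rels)
    return sum(rels[:k]) / total if total else 0.0


def average_precision(rels: Sequence[int]) -> float:
    """Mean of the precision values at the rank of each relevant document."""
    total, hits, score = sum(rels), 0, 0.0
    for rank, rel in enumerate(rels, start=1):
        if rel:
            hits += 1
            score += hits / rank
    return score / total if total else 0.0


def recall_curve_auc(rels: Sequence[int]) -> float:
    """Normalized area under the recall-vs-documents-screened step curve."""
    total, hits, area = sum(rels), 0, 0.0
    if not total:
        return 0.0
    for rel in rels:
        hits += rel
        area += hits / total
    return area / len(rels)


def wss_at_r(rels: Sequence[int], r: float) -> float:
    """Work saved over sampling: (N - rank reaching recall r) / N - (1 - r)."""
    total, n, hits = sum(rels), len(rels), 0
    if not total:
        return 0.0
    # Number of relevant documents required; the small offset guards
    # against floating-point overshoot in r * total.
    needed = max(1, math.ceil(r * total - 1e-9))
    for rank, rel in enumerate(rels, start=1):
        hits += rel
        if hits >= needed:
            return (n - rank) / n - (1.0 - r)
    return 0.0  # not reached for 0/1 labels and r <= 1


ranking = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]  # made-up labels, 1 = relevant
k = sum(ranking)  # k equals the number of relevant documents
scores = {
    "recall@k": recall_at_k(ranking, k),
    "auc": recall_curve_auc(ranking),
    "ap": average_precision(ranking),
    "wss@95": wss_at_r(ranking, 0.95),
}
```

MAP is then the mean of `average_precision` over all topics of a run. Runs that miss relevant documents would need an explicit convention for the unretrieved items, which this sketch does not attempt to define.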

4.1. Adaptive Baseline: Epsilon-Greedy Multiarmed Bandit

While our main analysis focuses on fixed, hand-crafted allocation strategies, we also include in this work a simple adaptive baseline to illustrate how learning-based methods can dynamically adjust budget allocation. We adopt an epsilon-greedy multiarmed bandit approach [32,33], where each review topic is treated as an “arm” that yields a reward when relevant documents are discovered. At each allocation step, the policy selects the topic with the highest estimated reward (exploitation) with probability $1 - \epsilon$ or a random topic (exploration) with probability $\epsilon$. In this work, the reward is defined as the number of relevant documents identified in the screened set, consistent with prior work in active learning [33,34].

This adaptive baseline highlights two key properties absent in static heuristics: (1) the ability to incorporate observed feedback into future allocation decisions and (2) the trade-off between exploiting high-yield topics and exploring uncertain ones. While epsilon-greedy is intentionally simple and does not model topic heterogeneity or review costs, it provides a principled adaptive comparison point and a foundation for more sophisticated strategies.
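The allocation loop described in this subsection can be sketched in Python as follows. This is a minimal illustration rather than the implementation used in the experiments. It assumes that one document is screened per allocation step, that the estimated reward of a topic is the fraction of relevant documents among those already screened for it, and that untried topics are visited first. The function name `epsilon_greedy_allocation` and its parameters are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def epsilon_greedy_allocation(
    topics: Dict[str, List[int]],  # topic -> ranked 0/1 relevance labels
    budget: int,                   # total number of documents to screen
    epsilon: float = 0.1,
    seed: int = 0,
) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Return (documents screened, relevant documents found) per topic."""
    rng = random.Random(seed)
    screened = {t: 0 for t in topics}
    found = {t: 0 for t in topics}

    def estimate(t: str) -> float:
        # Assumption: untried topics get an optimistic estimate so that
        # every arm is pulled at least once before exploitation starts.
        return found[t] / screened[t] if screened[t] else float("inf")

    for _ in range(budget):
        # Only topics that still have unscreened documents can be chosen.
        active = [t for t in topics if screened[t] < len(topics[t])]
        if not active:
            break
        if rng.random() < epsilon:
            choice = rng.choice(active)        # exploration
        else:
            choice = max(active, key=estimate)  # exploitation
        found[choice] += topics[choice][screened[choice]]
        screened[choice] += 1
    return screened, found
```

With `epsilon=0.0` the policy is purely greedy and deterministic, while larger values spread more of the budget across topics regardless of their observed yield.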

4.2. Datasets and Data Availability

All data used in this study were obtained from the official CLEF TAR GitHub repository (https://github.com/CLEF-TAR/tar/ (accessed on 1 May 2025)). This repository contains the complete set of topics, document collections, and submitted runs for the CLEF eHealth TAR tasks from 2017 to 2019, as well as associated relevance judgments and evaluation scripts. By relying on this openly accessible and curated resource, we ensure the transparency, reproducibility, and consistency of our experimental setup and results. We will also make available all the code that we implemented to reproduce the study online (https://github.com/gmdn (accessed on 1 May 2025)).

In Table 1, we provide a summary of the number of topics, documents, and relevant documents for each year of the CLEF eHealth TAR lab.

4.3. Reproducing the Original Results

In this section, we revisit and reproduce the results of the CLEF eHealth TAR lab from the 2017 to 2019 editions. By systematically reproducing the original runs, we aim to create a consistent evaluation framework that allows for direct comparison across years, systems, and strategies. This reproduction effort not only reinforces the transparency and reliability of previous findings but also lays the groundwork for introducing new, budget-aware evaluation methods that better reflect the practical constraints of real-world TAR deployments.

To ensure completeness, we have included not only the runs that were officially evaluated during the original campaigns but also those that were submitted but not formally assessed at the time. This comprehensive approach allows for a more thorough comparison across systems and years and supports the introduction of new budget-aware evaluation metrics that reflect the practical constraints and priorities of real-world screening workflows.

In Table 2, Table 3 and Table 4, we show the reproduced results of the three editions, CLEF 2017, CLEF 2018, and CLEF 2019, respectively. Our replicated evaluation closely matched the original scores. In fewer than 10% of the evaluated system–topic combinations, we observed minor deviations, with absolute differences typically ranging from 2% to 3%, all of which occurred in the work saved over sampling (WSS) metric. These deviations are due to the behavior of trec_eval (https://github.com/usnistgov/trec_eval (accessed on 1 May 2025)), the software used by CLEF lab organizers to compute results, which applies a truncation mechanism when a run fails to retrieve all relevant documents, leading to slight variations in WSS calculation. These isolated cases were limited in scope and did not exhibit any systematic bias in favor of a particular system. For each system–topic–year tuple, we computed both absolute and relative differences, confirming that the observed discrepancies were negligible and did not compromise the integrity or fairness of the comparative evaluation, which is a key objective of the CLEF benchmarking initiative. This high level of agreement confirms the reliability of the reproduction process and provides a solid foundation for further analysis. With this faithfully reproduced dataset, we can confidently proceed to study the impact of budget allocation strategies, ensuring that any observed differences in performance are due to the evaluation framework rather than inconsistencies in the data or original runs.
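The comparison between official and reproduced scores can be illustrated with a short Python sketch. It computes absolute and relative differences per (system, topic) pair and the share of pairs that deviate beyond a tolerance. The function name `compare_scores`, the tolerance value, and the example scores are hypothetical and are not taken from the CLEF data.

```python
from typing import Dict, Optional, Tuple

Key = Tuple[str, str]  # (system, topic)


def compare_scores(
    official: Dict[Key, float],
    reproduced: Dict[Key, float],
    tol: float = 1e-4,  # assumed threshold for calling two scores different
) -> Tuple[Dict[Key, Tuple[float, Optional[float]]], float]:
    """Return per-key (absolute, relative) differences and the deviating share."""
    diffs: Dict[Key, Tuple[float, Optional[float]]] = {}
    deviating = 0
    shared = official.keys() & reproduced.keys()
    for key in shared:
        abs_diff = abs(reproduced[key] - official[key])
        # The relative difference is undefined when the official score is zero.
        rel_diff = abs_diff / abs(official[key]) if official[key] else None
        diffs[key] = (abs_diff, rel_diff)
        if abs_diff > tol:
            deviating += 1
    share = deviating / len(shared) if shared else 0.0
    return diffs, share


# Made-up WSS values for two system-topic pairs.
official = {("sysA", "T1"): 0.50, ("sysA", "T2"): 0.40}
reproduced = {("sysA", "T1"): 0.50, ("sysA", "T2"): 0.42}
diffs, share = compare_scores(official, reproduced)
```

Reporting both differences is useful because a small absolute gap can still be a large relative gap when the official score is close to zero.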

4.4. Analysis of Budget Allocation Strategies

To explore the impact of budget-aware evaluation, we designed an experimental setting in which each TAR system is allocated a fixed annotation budget equivalent to 10% of the total number of documents in the collection and a value of 1 for both cost and gain. This budget simulates a realistic constraint on the number of documents that can be manually reviewed or labeled, reflecting common limitations in resources. All systems are evaluated under this uniform budget threshold to ensure comparability, and selection strategies are assumed to proceed until the budget is exhausted. This controlled setting enables us to assess not only how effectively systems retrieve relevant documents but also how efficiently they operate within predefined resource limits.

For better readability, we show the tables of all results in

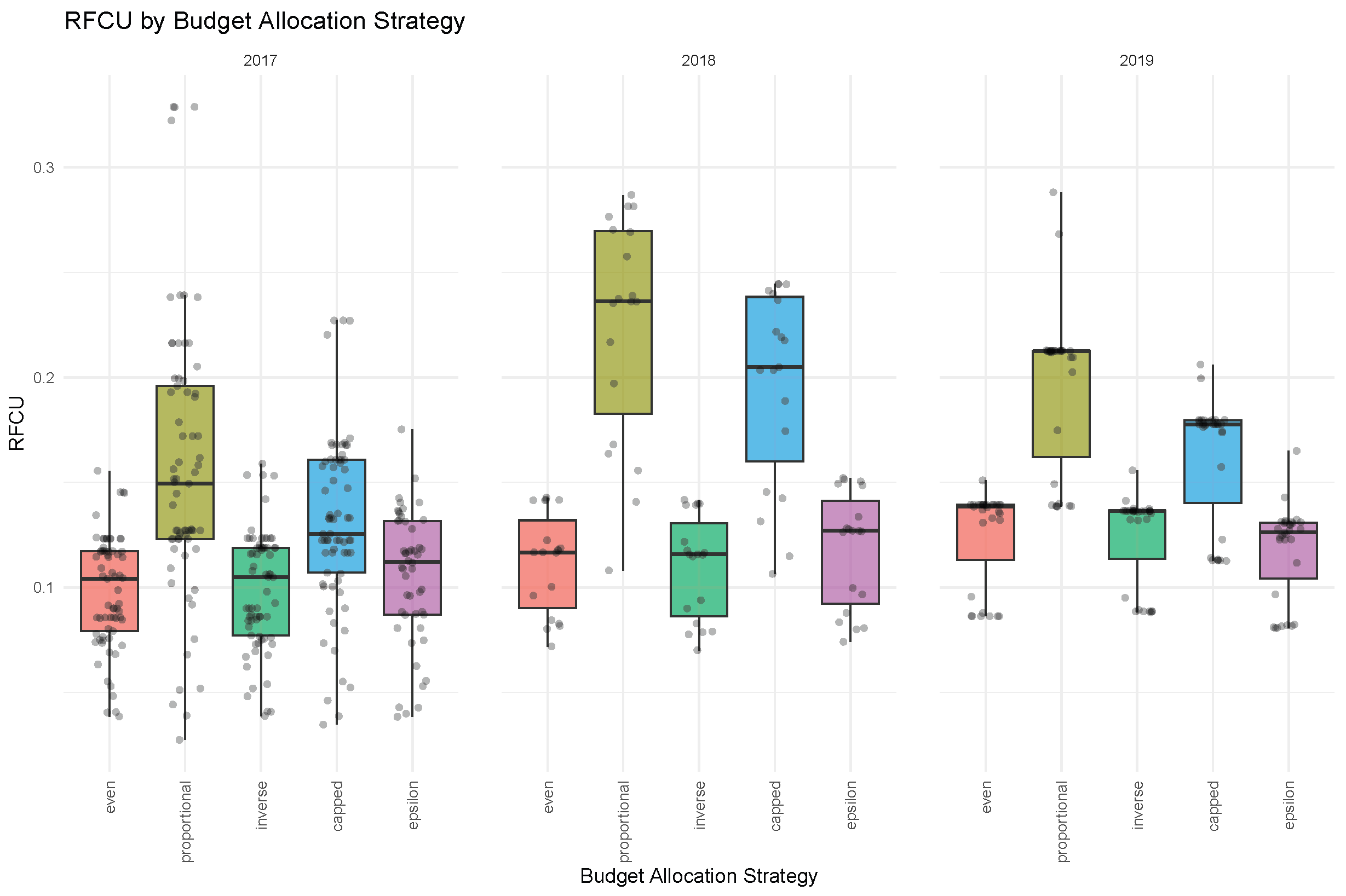

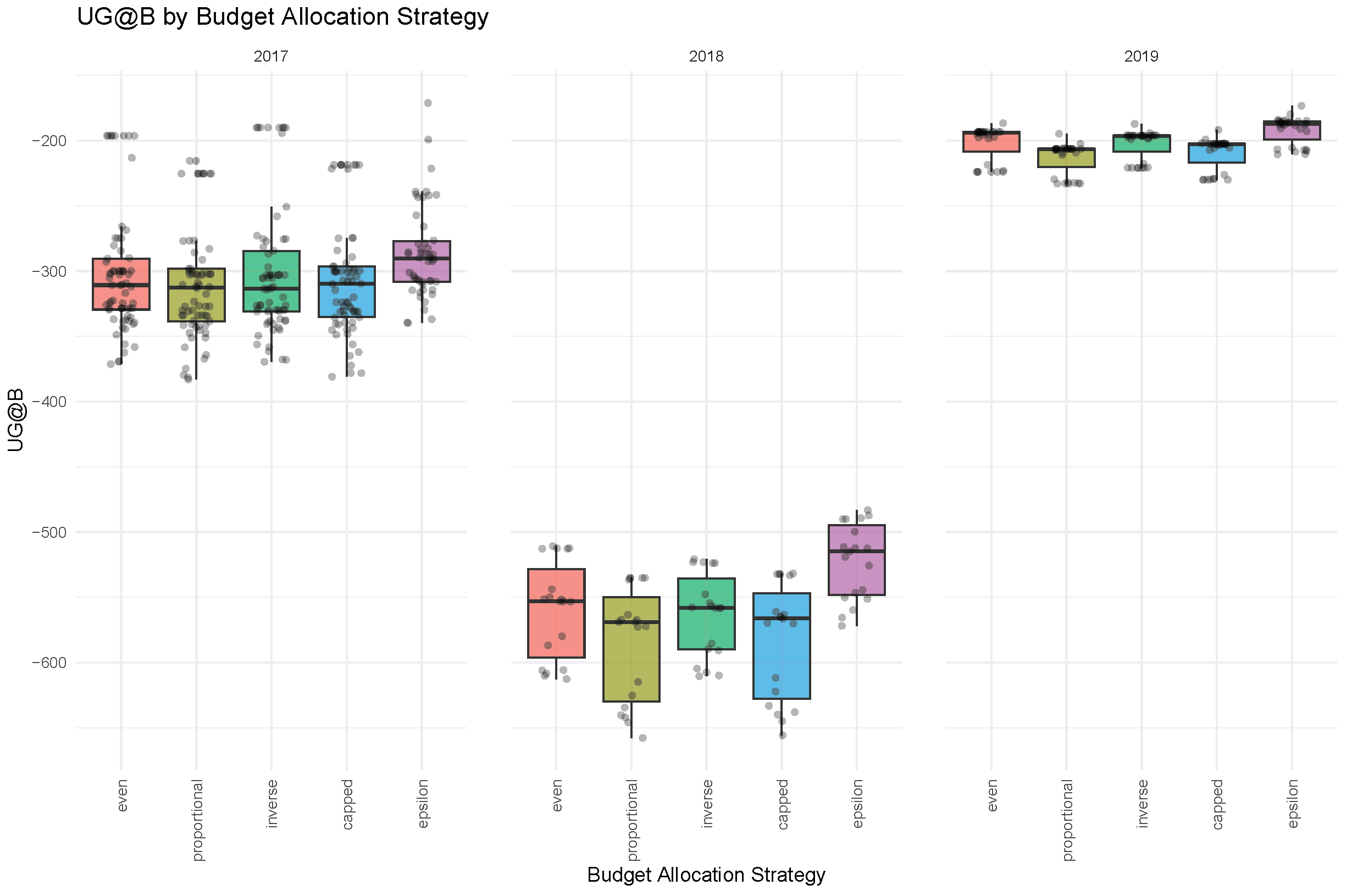

Appendix A. Our comparative evaluation across multiple years reveals that the effectiveness of budget allocation strategies varies substantially depending on the evaluation metric used. In particular, when optimizing for recall—the proportion of relevant documents retrieved—the best-performing allocation strategies were even allocation and inverse proportional allocation. These strategies prioritize topic coverage and allocate resources in a way that avoids overcommitting to large, potentially low-yield topics. In particular, inverse proportional allocation favors smaller topics, which may contain concentrated pockets of relevant documents and benefit from deeper review. This behavior aligns with recall’s sensitivity to missing relevant items, especially in underrepresented or niche topics.

In contrast, when optimizing for RFCU, the highest scores were achieved by the proportional and threshold-capped greedy strategies. These methods allocate budget relative to the topic size or limit effort given to large topics and thus avoid overspending review effort where relevant documents are sparse. RFCU emphasizes efficiency—finding the most relevant documents per unit cost—rather than coverage and is therefore better served by strategies that exploit expected high-yield regions while avoiding low-utility areas.
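To make the contrast between the static strategies concrete, the Python sketch below splits a global budget across topics by topic size. It covers only the even, proportional, and inverse proportional rules. The exact definitions used in the experiments, including the threshold-capped greedy rule, are given elsewhere in the paper and are not reproduced here. The function names, the rounding down of shares, and the choice not to redistribute leftover budget are illustrative assumptions.

```python
import math
from fractions import Fraction
from typing import Dict


def even_allocation(sizes: Dict[str, int], budget: int) -> Dict[str, int]:
    """Give every topic the same share, capped by the topic size."""
    share = budget // len(sizes)
    return {t: min(n, share) for t, n in sizes.items()}


def proportional_allocation(sizes: Dict[str, int], budget: int) -> Dict[str, int]:
    """Give each topic a share proportional to its number of documents."""
    total = sum(sizes.values())
    return {t: min(n, budget * n // total) for t, n in sizes.items()}


def inverse_proportional_allocation(sizes: Dict[str, int], budget: int) -> Dict[str, int]:
    """Give each topic a share proportional to 1 / size (sizes must be > 0)."""
    weights = {t: Fraction(1, n) for t, n in sizes.items()}
    norm = sum(weights.values())
    return {
        t: min(sizes[t], math.floor(budget * w / norm))
        for t, w in weights.items()
    }


# Hypothetical collection: three topics, budget equal to 10% of all documents.
sizes = {"t1": 100, "t2": 300, "t3": 600}
budget = sum(sizes.values()) // 10
even = even_allocation(sizes, budget)
prop = proportional_allocation(sizes, budget)
inv = inverse_proportional_allocation(sizes, budget)
```

In this toy collection the smallest topic receives 10 documents under proportional allocation and 66 under inverse proportional allocation, which shows how strongly the latter shifts effort toward small topics.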

For UG@B, we observed no significant performance differences among the four allocation strategies. This suggests that UG@B is less sensitive to the specific distribution of the budget, possibly due to its balancing of gains (true positives) and penalties (false positives). UG@B captures the net benefit rather than pure coverage or efficiency and may tolerate a wider range of allocation behaviors without large variance in utility.

These results are better visualized in Figure 1, Figure 2 and Figure 3. For each year, we show a boxplot of the metrics of all experiments according to the allocation strategy. Strategies like even allocation and inverse proportional allocation promote fairness and topic-wide coverage, which benefits recall. Conversely, strategies such as proportional allocation and capped greedy allocation optimize for cost-effectiveness, which aligns with RFCU. UG@B’s neutrality reflects its more balanced formulation, integrating both gain and cost into a single score and, in this specific case, the fact that gains and costs are equal.

The epsilon-greedy bandit adaptive baseline achieved performance that was broadly comparable to that of the static policies, although it did not consistently outperform them. In particular, its ability to dynamically reallocate effort allowed it to avoid severe underinvestment in small but relevant-rich topics, yielding recall scores close to those of even and inverse proportional allocation. At the same time, the exploration component prevented the systematic neglect of large topics, producing RFCU values that were again similar to those of even allocation and inverse proportional allocation. However, under our uniform cost/gain assumptions, epsilon-greedy did show an advantage in terms of UG@B for all years. This behavior is similar to what we observed when using UG@B as the reward, rather than the number of relevant documents, for the adaptive baseline [35]. This reinforces the view that, in budget-constrained TAR settings, adaptive strategies offer flexibility, but their advantages depend strongly on the chosen rewards, task characteristics, and evaluation criteria. Overall, these results confirm that even the simplest adaptive strategy can provide a reasonable middle ground between coverage- and efficiency-oriented heuristics, and they highlight the potential of more advanced learning-based allocation methods.

4.5. Analysis of Correlations Between Evaluation Metrics

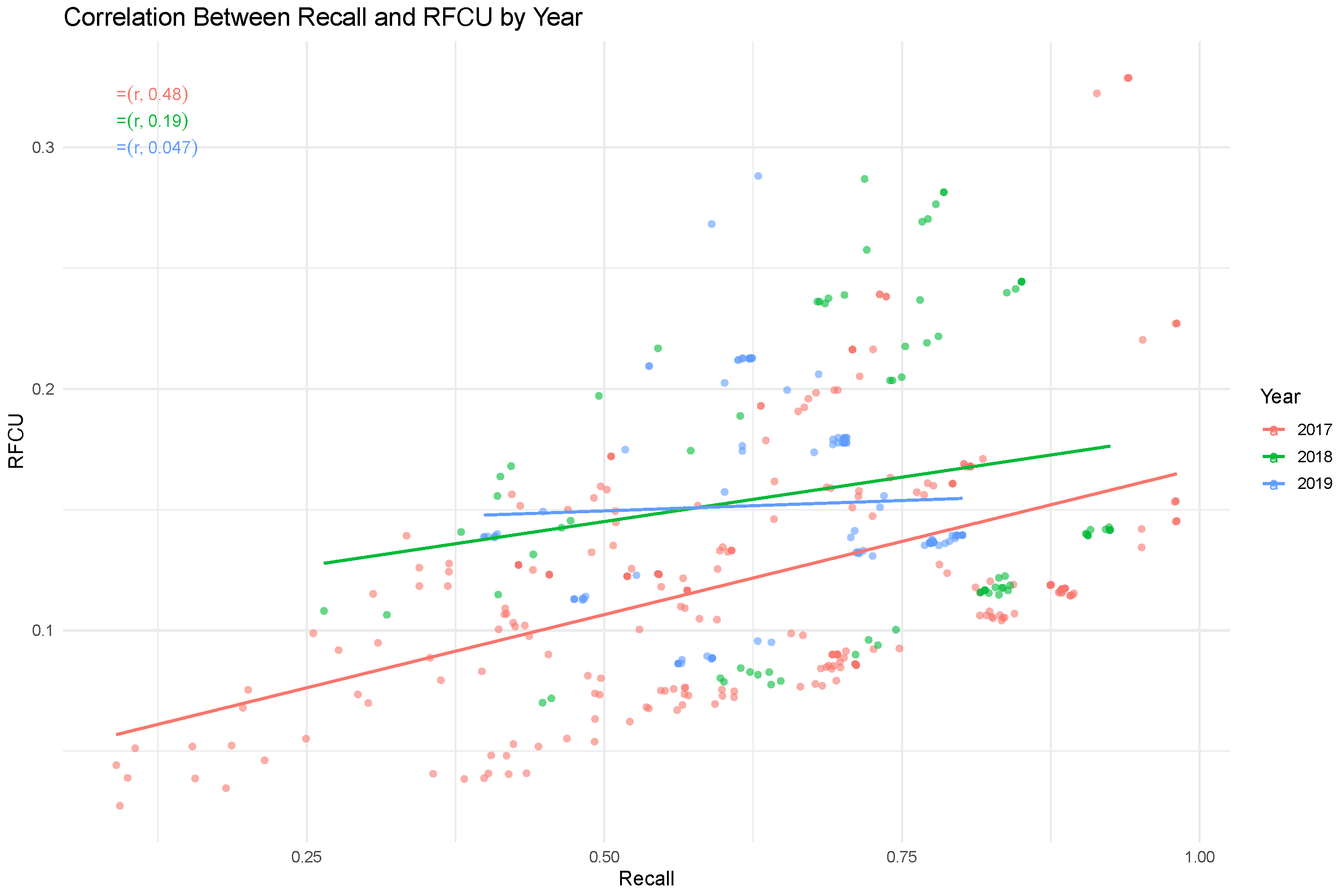

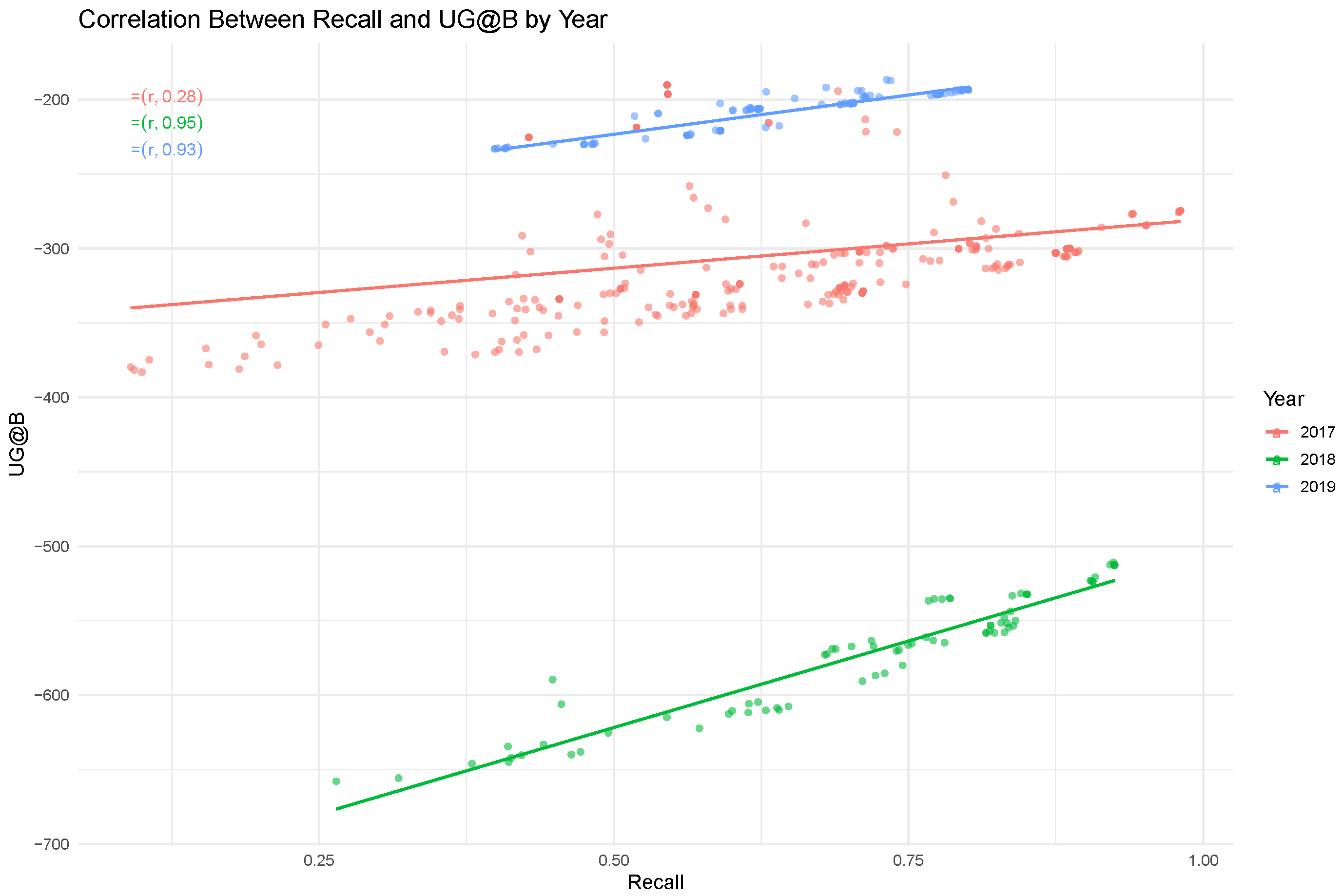

To better understand the relationships between evaluation metrics, we conducted a year-by-year correlation analysis comparing recall with RFCU and UG@B. The results, plotted in Figure 4 and Figure 5, show that RFCU is, in some cases, only weakly correlated with recall, demonstrating that systems that achieve high recall may do so inefficiently, while others can yield high RFCU with relatively low recall. This divergence reinforces the view that RFCU captures a distinct, efficiency-oriented dimension of performance. In contrast, UG@B shows a more consistent and moderate correlation with recall across years, suggesting that it partially aligns with traditional effectiveness goals while also incorporating cost sensitivity. Importantly, the strength of these correlations varies year by year, reflecting differences in system behavior, task design, and relevance prevalence across CLEF eHealth editions. These findings support the use of multiple, complementary metrics in TAR evaluation to provide a broader understanding of system trade-offs under budget constraints.
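A per-year correlation of this kind can be sketched as follows. The text does not state which correlation coefficient was used, so the rank-based Kendall tau below is an assumption chosen for illustration. The function name `kendall_tau` and the score vectors are made up and do not come from the CLEF runs.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall tau-a: (concordant - discordant) / number of pairs."""
    concordant = discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        sign = (x1 - x2) * (y1 - y2)
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
        # Tied pairs count as neither concordant nor discordant.
    pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / pairs if pairs else 0.0


# Hypothetical per-run scores for one year (one value per submitted run).
recall = [0.2, 0.4, 0.6, 0.8]
rfcu = [0.1, 0.3, 0.2, 0.4]
tau_recall_rfcu = kendall_tau(recall, rfcu)
```

A value near 1 means that the two metrics rank the runs almost identically, whereas a value near 0 means that one metric says little about the ordering induced by the other.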

4.6. On the Effect of Gain/Loss Ratios in UG@B

In our main experiments, the budget $B$ was defined as the number of documents that could be reviewed. Under this interpretation, every reviewed document is either a true positive ($TP$) or a false positive ($FP$) and thus $TP + FP = B$. Let us rewrite Equation (8) in the following manner:

$$\mathrm{UG@B} = g \cdot TP - c \cdot FP,$$

where $g$ is the gain per relevant document found within the budget $B$, and $c$ is the cost per nonrelevant document reviewed.

Substituting $FP = B - TP$, we obtain

$$\mathrm{UG@B} = g \cdot TP - c \, (B - TP) = (g + c) \cdot TP - c \cdot B.$$

Since $B$ is constant for all systems, the ranking induced by UG@B is strictly increasing in $TP$. This implies that, when the budget is defined in terms of the number of documents reviewed, the relative weighting of gain and loss (i.e., the ratio $g/c$) does not affect the ranking between systems. A system that retrieves more relevant documents within the fixed number of screened documents will always achieve a higher UG@B, regardless of how much losses are penalized. In this setting, no crossover between systems (a crossover occurs when a system performs better or worse than another system according to the parameters of the metric; in this case, $g$ and $c$) can occur unless a stopping strategy is implemented for one of the systems (more details are given in the Conclusions).
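The invariance argument can be checked numerically with a small Python sketch. It assumes the net-utility form UG@B = g·TP − c·FP implied by the definitions of the gain and cost above, a document-count budget with FP = B − TP, and positive g and c. The function name `ug_at_b` and the true-positive counts are hypothetical.

```python
from typing import Dict, List


def ug_at_b(tp: int, budget: int, g: float, c: float) -> float:
    """Utility gain when exactly `budget` documents are screened."""
    fp = budget - tp  # every screened document is either a TP or an FP
    return g * tp - c * fp


budget = 100
tp_by_system: Dict[str, int] = {"S1": 10, "S2": 25, "S3": 40}  # made-up counts


def ranking(g: float, c: float) -> List[str]:
    """Systems ordered from highest to lowest UG@B for given g and c."""
    return sorted(
        tp_by_system,
        key=lambda s: ug_at_b(tp_by_system[s], budget, g, c),
        reverse=True,
    )


# Very different gain/cost settings, from gain-dominant to loss-dominant.
settings = [(1.0, 1.0), (5.0, 1.0), (1.0, 5.0), (0.1, 10.0)]
rankings = [ranking(g, c) for g, c in settings]
```

All four settings yield the same ordering of systems, since under a document-count budget the utility reduces to (g + c)·TP − c·B and only TP differs between systems.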

As an alternative, the budget can be defined in terms of time rather than the number of screened documents. In this case, $B$ represents the total annotation time available, where each reviewed document consumes a variable amount of time depending on whether it is relevant or not, and possibly also on the system’s workflow. For example, a system might route likely negatives through a faster triage process, while relevant items require more careful reading. Under this interpretation, the number of documents reviewed within the same time budget may differ across systems, and the mix of $TP$ and $FP$ achieved depends on both the effectiveness and review cost. Let us consider this version of UG@B, which highlights the gain/loss ratio up to a multiplicative constant $c$:

$$\mathrm{UG@B} = c \left( \frac{g}{c} \cdot TP - FP \right).$$

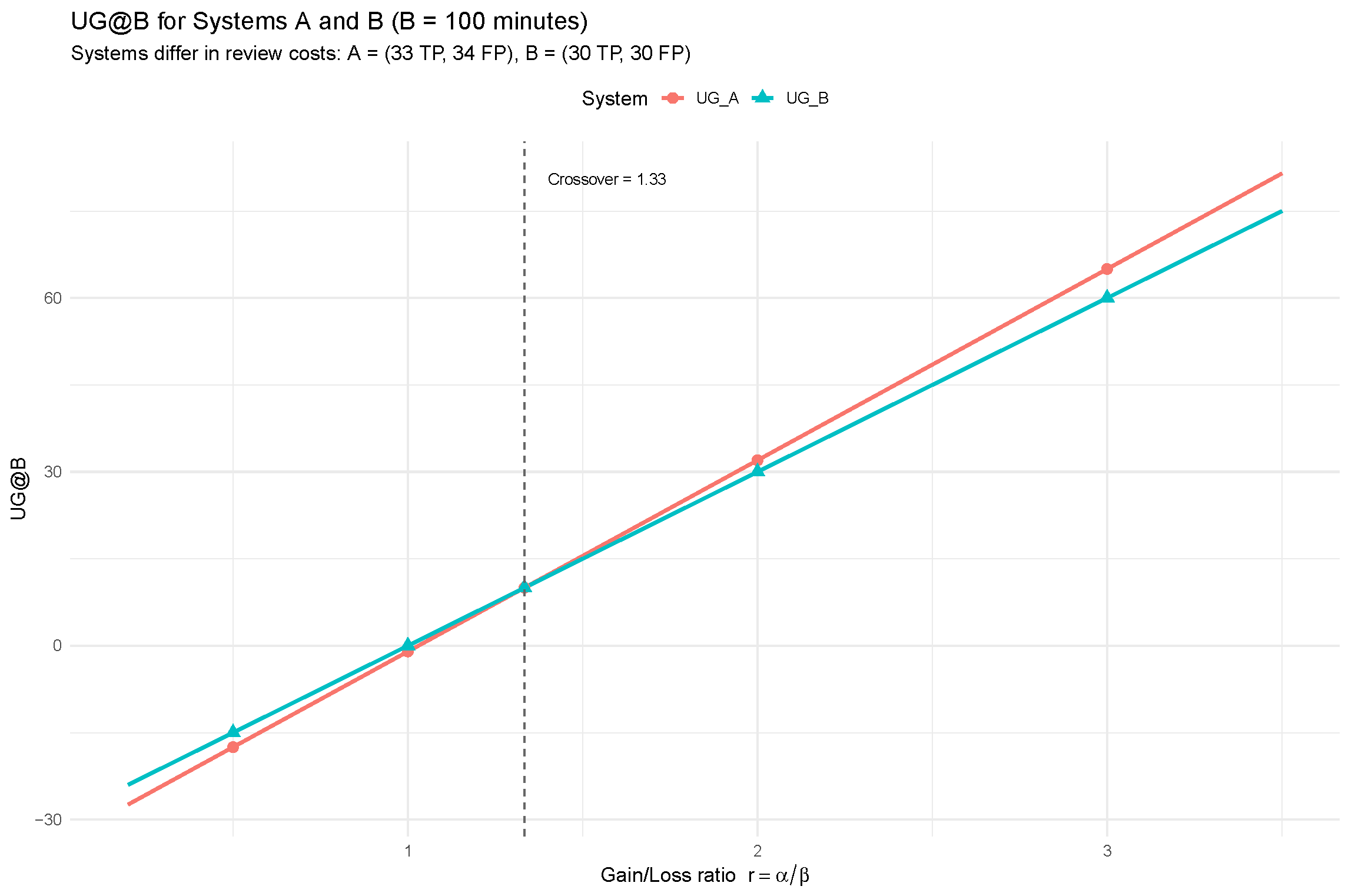

This scenario renders UG@B more sensitive to the gain/loss ratio, and crossovers between systems become possible. To illustrate this, consider the following example. Suppose that the time budget is fixed at $B = 100$ min. We compare two systems with different review dynamics:

System A: True positives take min to review, and false positives take min. Within 100 min, this system can review documents, since .

System B: Both true and false positives take and min to review, respectively. Within 100 min, this system can review documents, since .

In this situation, the evaluation of the two systems would be

and system A would be preferred in terms of UG@B. The question now is the following: is there a gain/cost ratio value for which the preference of one system over the other would switch? Let us rewrite the utilities as a function of the gain/loss ratio

(with

without loss of generality):

The crossover point occurs when

:

This means that

For (loss-dominant setting), system B is preferred because it minimizes wasted effort on nonrelevant items;

For (gain-dominant setting), system A is preferred because its higher number of true positives outweighs the additional cost of false positives.

This example, displayed in Figure 6, shows that when the budget is defined in terms of time, the choice of gain/loss ratio directly influences the ranking of systems. UG@B thus provides a tunable framework to reflect different operational scenarios, such as prioritizing recall (high $g/c$) versus minimizing annotation effort (low $g/c$).
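The crossover mechanism can also be sketched in Python. The true-positive and false-positive counts below are hypothetical and are not the figures of the example above. The sketch assumes a cost of 1 per nonrelevant document, so that the utility is ratio·TP − FP, and the names `utility` and `crossover_ratio` are illustrative.

```python
from typing import Optional, Tuple


def utility(tp: int, fp: int, ratio: float) -> float:
    """UG@B with unit cost per false positive; `ratio` is the gain/loss ratio."""
    return ratio * tp - fp


def crossover_ratio(tp_a: int, fp_a: int, tp_b: int, fp_b: int) -> Optional[float]:
    """Ratio at which both systems have equal utility, or None if lines are parallel."""
    if tp_a == tp_b:
        return None
    # Solve ratio * tp_a - fp_a = ratio * tp_b - fp_b for ratio.
    return (fp_a - fp_b) / (tp_a - tp_b)


# Hypothetical outcomes within the same time budget: (TP, FP).
system_a: Tuple[int, int] = (20, 40)  # finds more relevant items, reads more noise
system_b: Tuple[int, int] = (15, 20)  # finds fewer relevant items, wastes less time

ratio_star = crossover_ratio(*system_a, *system_b)
prefers_b_below = utility(*system_b, 2.0) > utility(*system_a, 2.0)
prefers_a_above = utility(*system_a, 6.0) > utility(*system_b, 6.0)
```

With these made-up counts the two utilities meet at a ratio of 4: below it the system with fewer false positives is preferred, and above it the system with more true positives is preferred.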

5. Conclusions and Future Work

This study presents a comprehensive, budget-aware analysis of document screening strategies in technology-assisted review using real-world evaluation data from the CLEF eHealth shared tasks (2017–2019). Our investigation emphasizes the importance of modeling both retrieval performance and resource constraints when designing and evaluating TAR systems.

In particular, our main contributions are the following.

Reproduction of Official Results: We collect, organize, and verify the official participant runs from the CLEF eHealth TAR tasks, providing a unified and reproducible dataset spanning three years of evaluations.

Exploration of Budget Allocation Strategies: We investigate how limited screening budgets can be distributed across topics using several allocation strategies, including even, proportional, inverse proportional, and capped greedy approaches. Our analysis reveals that different strategies optimize different objectives: even and inverse proportional strategies favor recall, while proportional and capped strategies enhance efficiency.

Introduction of Cost-Aware Evaluation Metrics: We propose two novel measures—relevant found per cost unit (RFCU) and utility gain at budget (UG@B)—that directly account for resource expenditure. These metrics complement traditional recall by quantifying screening efficiency and net utility, and they help to expose trade-offs not visible through recall alone.

Our findings show that no single allocation strategy dominates across all evaluation criteria, underscoring the need to align strategy selection with screening goals, whether completeness, efficiency, or balanced utility. Furthermore, our correlation analysis demonstrates that cost-aware metrics like RFCU and UG@B provide complementary insights alongside recall, rather than duplicating it. The choice of evaluation metric in TAR should reflect the goals and constraints of the review task. In resource-unconstrained environments, such as legal e-discovery with completeness mandates, recall remains the gold standard. However, in practice, many reviews operate under strict cost or time budgets. In such settings, traditional recall may fail to capture a system’s efficiency or utility under constraints. To address this, we propose the following guideline for selecting evaluation metrics that align with practical review objectives: for a comprehensive performance analysis, we recommend a composite evaluation approach in which recall is used to ensure topic coverage, while RFCU and UG@B provide complementary perspectives on efficiency and cost sensitivity. This multimetric framework offers a more robust and actionable view of TAR performance, especially in real-world screening scenarios where budgets are finite and reviewers must prioritize effort.

This work opens up several promising directions. We envision the development of cost–risk frontiers, which plot the relationship between resource expenditure and the risk of missing relevant documents. These frontiers would enable a nuanced comparison of TAR strategies, not only by their performance but also by how well they handle the degradation of results under limited resources. Evaluating systems along cost–risk frontiers will provide a deeper understanding of their robustness when constrained by resource availability.

Incorporating human decision making into TAR system evaluation is a crucial avenue for future work, as the effectiveness of TAR workflows is ultimately shaped by human-in-the-loop dynamics. Reviewer behavior can vary widely in terms of speed, risk tolerance, consistency, fatigue, and stopping strategies, introducing variance in the effective cost and duration of screening. These behavioral factors mean that the performance and utility of a policy can diverge from the outcomes predicted under the assumptions of this paper. To capture this complexity, research in this area will require access to real-world data on reviewer behavior. While simulations can approximate variations in speed, risk tolerance, or stopping strategies, they inevitably rely on simplifying assumptions that may not capture the full complexity of human decision making. Synthetic data can therefore be misleading, particularly when subtle patterns of fatigue, inconsistency, or adaptation to system guidance play a decisive role. To obtain reliable insights, future work should complement simulations with empirical studies involving actual users, ensuring that evaluations reflect the realities of human interaction with TAR systems.

Automatic early stopping is a crucial aspect of TAR systems, as it directly affects the trade-off between recall and cost. Stopping too early risks missing a substantial portion of relevant documents, while stopping too late expends resources without meaningful gains. Reviewer behavior further complicates this picture: a conservative reviewer may continue screening well beyond the optimal point, consuming unnecessary resources, while a more aggressive one may stop prematurely, jeopardizing recall. These behavioral and policy-driven factors highlight the importance of jointly modeling human decision making and system-level stopping strategies, ensuring that evaluations reflect both theoretical performance and the realities of practice. We propose the exploration of adaptive stopping mechanisms that rely on marginal utility or predictive confidence thresholds, allowing the review process to adjust dynamically to budget constraints. In future work, we aim to estimate the opportunity cost of early stopping by quantifying the risk of missing relevant documents when resources are limited, thereby identifying the most effective strategies for cost-sensitive review settings.

Looking ahead, we aim to move beyond static budget allocation by enabling each TAR system to dynamically determine its own optimal budget usage based on performance signals and uncertainty estimates. Rather than imposing a fixed budget ceiling, future evaluations will explore adaptive budget strategies where systems decide how much annotation effort is necessary to achieve a given level of recall or confidence.