1. Introduction

Machine learning (ML) models are increasingly used in applications such as image recognition, natural language processing, and medical diagnosis, some of which are life-critical [1]. These models can learn from increasingly complex datasets, which enables more precise findings and improves the ability to identify patterns and relationships among different aspects of the data. ML models are particularly valuable in scenarios such as medical decision making, where distinguishing between different types of illness is crucial. The ML process typically involves training specialized classifiers on data while also determining which attributes to prioritize for optimal results. Moreover, there are various types of machine learning models, as shown in Figure 1, and practitioners can choose among a range of approaches, use specific classifiers, and evaluate which method best aligns with their objectives.

One of the most challenging aspects of machine learning, particularly with large datasets, is the speed of data processing. Long training times on massive datasets can significantly slow down both training and evaluation. Moreover, large datasets may require substantial computational power and memory, further increasing processing delays [2]. Addressing these challenges requires efficient computing methods and optimized resource allocation. Techniques such as parallel processing, distributed computing, and cloud computing offer viable ways to improve processing efficiency.

Several research efforts have explored parallelization to accelerate supervised machine learning. A significant body of work has focused on data parallelism, where the dataset is partitioned across multiple cores to speed up training and prediction. Techniques such as data partitioning, feature partitioning, and model partitioning have been investigated to distribute the computational load efficiently [3,4]. Researchers have also studied algorithm-level parallelism, parallelizing specific operations within machine learning algorithms. For instance, parallel implementations of matrix operations, gradient calculations, and optimization algorithms have been developed to exploit multicore architectures [5,6].

While existing research has made considerable progress in parallelizing supervised machine learning, several challenges and limitations still persist. Many proposed approaches exhibit limited scalability, failing to effectively utilize the full potential of modern multicore processors as the problem size grows. Furthermore, achieving optimal performance often requires intricate algorithm-specific optimizations, hindering the development of general-purpose parallelization frameworks. Consequently, there remains a need for innovative techniques that can address these challenges and deliver substantial performance improvements across a wide range of machine learning algorithms and hardware platforms.

This paper proposes a novel parallel processing technique tailored to ensemble classifiers and the k-nearest neighbors (KNN) algorithm. The research aims to address these limitations and to explore how parallel processing can improve performance on multicore architectures. By partitioning data, parallelizing classifier training, and optimizing communication, the proposed approach seeks to improve computational efficiency and scalability in multicore environments. The methodology is evaluated on real-world datasets to demonstrate its effectiveness on large-scale machine learning tasks.

The proposed parallel approach to supervised machine learning has potential applications across diverse sectors. By accelerating model training and inference while preserving accuracy, it could benefit industries such as healthcare, finance, and retail [7]. For instance, in healthcare, it can speed up drug discovery, enable real-time patient monitoring, and support precision medicine [8]. In finance, it can strengthen fraud detection, risk assessment, and algorithmic trading. Retailers can benefit from optimized inventory management, personalized marketing, and improved customer experience. The potential applications extend to manufacturing, agriculture, and environmental science, where rapid data processing and analysis are essential.

The remainder of this paper is organized as follows. Section 3 discusses work related to this research, and Section 4 describes the data and materials used. Section 5 presents the proposed parallel processing methodology in detail, including data partitioning, parallel classifier training, and optimization techniques. Section 6 presents the experimental results, comparing the performance of the proposed method with existing approaches. Finally, Section 7 summarizes the findings, highlighting the contributions of this research and outlining potential avenues for future work.

3. Related Works

Numerous advances in parallel neural network training have been proposed to improve the efficiency and speed of the training process. One notable contribution is a face detection model that achieves a 1.3-fold speedup over sequential neural network training by analyzing sub-images at each level and filtering out non-faces at successive levels; the use of three neural network cascades, however, reveals functional limitations of the technique [9].

A map-reduce approach was applied in an ML technique that partitions the training data into smaller groups and distributes them randomly across multiple GPU processes before each epoch. This method uses sequence bucketing to group training sequences by input length, improving training performance through parallel processing [10]. In addition, the backpropagation algorithm was used to build a hardware-independent prediction tool, which achieved faster response times by parallelizing artificial neural network (ANN) algorithms for applications such as financial forecasting [11].

Another study focused on improving the efficiency of parallelizing the historical data method across parallel computer networks. It showed that the number of input neurons that single-layer and multi-layer perceptrons need to predict sensor drift values depends on the neural network architecture [12]. This highlights the challenges and strategies involved in implementing complex parallel models on single-processor systems.

The complexity and inefficiency of implementing numerous parallel models on single-processor systems have been addressed by a novel parallel ML approach that simplifies complex ML models using a parallel processing framework, with implementations on parallel computing systems such as systolic arrays, Warp, MasPar, and the Connection Machine [13]. Parallel neural network training is strongly influenced by the large amount of training data involved, especially in voice recognition. Dividing the batch among multiple training processors is crucial for achieving results comparable to single-processor training; however, this division can slow down the overall training process because of the overhead associated with parallelism [13].

Iterative K-means clustering, which is typically unsuitable for large data volumes because of its long completion times, has been adapted for parallel processing, achieving notable performance improvements with a reported accuracy of 74.76 and an execution time of 56 s. In addition, exemplar parallelism in backpropagation neural network training incurs minimal overhead, significantly improving speed and inter-process communication [14,15].

The ensemble–compression approach aggregates local models through an ensemble, aligning their outputs rather than their parameters. Although this increases model size, the method performs better in modular networks, significantly reducing test error [16,17]. Deep convolutional neural networks (DNNs) have also been proposed to improve face recognition and feature point calibration, despite the considerable time required for training and testing [18].

A parallel implementation of the backpropagation algorithm and the use of the Levenberg–Marquardt (LM) nonlinear optimization algorithm have shown promise for training neural networks efficiently across multiple processors. These methods achieve significant speedups and are suited to real-world, high-dimensional, large-scale problems because the Jacobian computation is parallelizable [19,20].

Neural network training for applications such as price forecasting in finance has been improved through parallel and multithreaded backpropagation algorithms, which outperform conventional autoregression models in accuracy. A comparison of OpenMP and MPI implementations further illustrates the effectiveness of training set parallelism [21].

Various general-purpose hardware techniques and parallel architecture models have been surveyed in the literature, highlighting the importance of cost-effective approaches to parallel ML implementation [22]. Moreover, applying Ant Colony Optimization (ACO) to the Traveling Salesman Problem (TSP) shows how parallelizing metaheuristics can speed up the solution process while maintaining solution quality [23].

Reference [21] presents a neural network training method for financial applications such as price forecasting. The model implements four distinct parallel and multithreaded backpropagation neural network algorithms. The authors evaluated the performance of these algorithms and compared the outcomes with those of a conventional autoregression model. In the comparison of OpenMP and MPI implementations, training set parallelism outperformed all other parallelization schemes considered.

A survey of the studies conducted in this area is presented in [22]. It then describes various general-purpose hardware techniques, followed by parallel architecture models and topologies, and concludes with an assessment based on the costs of the techniques.

Deep convolutional neural network (DNN)-based models have been proposed to improve face recognition and feature point calibration techniques [24]. The same work proposes enlarging the training set and developing a cascade-structure algorithm. The proposed algorithm is resilient to interference from lighting, occlusion, pose, expression, and other factors, and it can be used to improve the accuracy of facial recognition programs.

A parallel machine, ArMenX, is proposed together with a demonstration of how parallel learning can be implemented on it [25]. The machine combines digital signal processors (DSPs) and transputers: neural network calculations are carried out on the DSPs, while the transputers handle the dynamic allocation of the DSPs, so that a pre-existing learning algorithm can be used.

A parallel neural network with a confidence algorithm and a parallel neural network with a success/failure algorithm are presented to achieve high performance on the test problem of letter recognition from a set of phonemes [26]. In this method, data partitioning decomposes a complex problem into smaller, more manageable subproblems, allowing each neural network to better adapt to its own subproblem.

To address the complexity of time-dependent systems, a parallel neural network prediction method has been proposed. By dividing the process into short time intervals and distributing the load across the network, this method improves load balancing as well as approximation and forecasting capabilities, as demonstrated on sunspot time series forecasting [27].

Ant Colony Optimization (ACO), a nature-inspired metaheuristic, has been parallelized to solve problems such as the Traveling Salesman Problem (TSP). This parallelization aims to speed up the solution process while maintaining the quality of the algorithm, reflecting the growing interest in parallelizing algorithms and metaheuristics with the advent of parallel architectures [28].

Finally, recurrent neural network (RNN) training has been accelerated through parallelization and a two-stage network structure, significantly speeding up training for tasks with varying input sequence lengths. Sequence bucketing and multi-GPU parallelization improve training speed and efficiency, as illustrated by the application of an LSTM RNN to online handwriting recognition [29].

The existing literature underscores the need for efficient processing of large datasets using parallel computing techniques. Studies such as [23,30] have demonstrated the effectiveness of parallel algorithms in reducing computation time and improving the scalability of machine learning models. However, the approach in [23] focuses on a specific algorithm, Ant Colony Optimization, and [30] is limited to image-based datasets.

The proposed method builds upon this foundational work by offering a generalized parallel approach that is versatile across various machine learning models and datasets. With the increasing popularity of ensemble modeling due to its robustness, stability, and reduction in overfitting, our algorithm enhances the parallel processing of ensemble models by integrating advanced multicore processing capabilities. Our approach not only achieves significant speedup, but it also maintains high accuracy, addressing the limitations identified in previous studies. This advancement provides a more holistic solution that can be widely applied, bridging the gap between theoretical research and practical implementation in diverse industrial applications.

5. Proposed Model

5.1. Overview

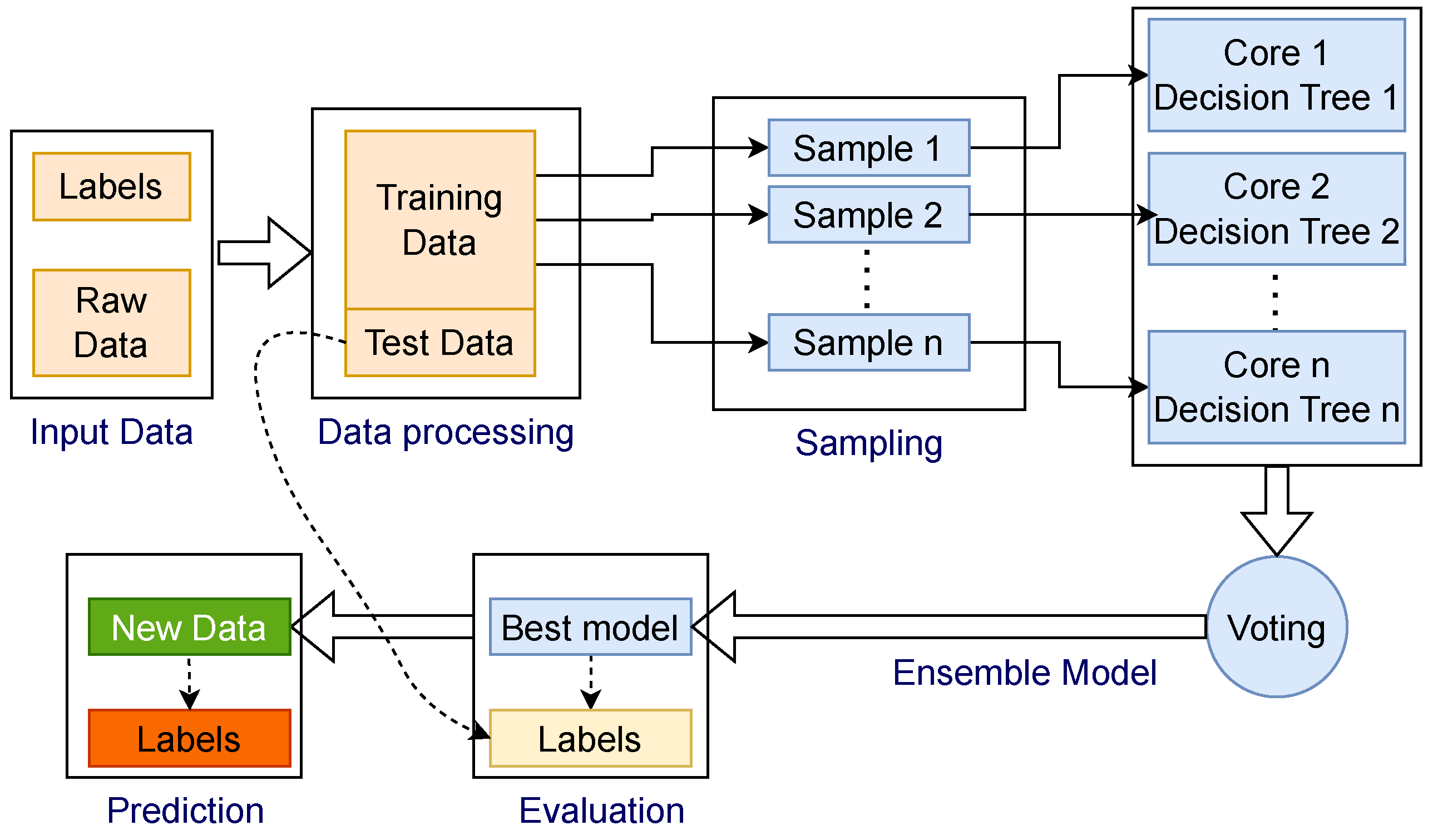

In this study, a parallel processing technique is presented for quickly and efficiently applying an ensemble classifier and KNN to classify large amounts of data into multiple categories. The workflow for solving the problem with a Random Forest machine learning model is shown in Figure 2. Two problems were considered to evaluate the proposed parallel processing of ensemble models. The overall implementation design of the proposed algorithm is explained in Figure 3.

The first problem was detecting fraudulent credit card transactions based on the transaction details and amount. The features were compressed into a lower-dimensional subspace using dimensionality reduction techniques; reducing the dimension of the feature space lowers storage requirements and lets the learning algorithm run faster. The second problem was estimating the quality of a wine, for which useful attributes were first extracted from the raw dataset. Some of the attributes were redundant because of their high correlation with each other. The quality of each wine was used as the label of Dataset2, and the class of each transaction was used as the label of Dataset1. The labels were kept separate for training and evaluation.

The technique targets the ensemble classifiers Random Forest, AdaBoost, and XGBoost, as well as KNN, for large-scale data categorization. Random Forest is an ensemble learning method for classification, regression, and related tasks; it constructs a multitude of decision trees at training time and outputs the class that is the mode of the classes predicted by the individual trees (classification) or their mean prediction (regression). Such an ensemble of decision trees can generalize better than a single decision tree.

The training data are fed into the ensemble classifier together with a parameter specifying the number of cores, n, used for parallel processing. The ensemble is first divided into groups of decision trees so that the classifier can use all available cores on the machine. Once the classifier is trained, it is used to predict the class labels of the test data. The accuracy of the classifier is then computed, and the training execution time is recorded.
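Dividing the ensemble into per-core groups of trees, as described above, can be sketched in Python. This is a minimal illustration under stated assumptions rather than the authors' implementation: `partition_estimators` and `group_bounds` are hypothetical helper names, trees are assumed to be indexed 0 to n_trees - 1, and each core is assumed to receive one contiguous block of indices.

```python
def partition_estimators(n_estimators: int, n_jobs: int) -> list[int]:
    """Split n_estimators trees into per-core groups whose sizes differ by at most one."""
    if n_estimators < 1 or n_jobs < 1:
        raise ValueError("n_estimators and n_jobs must be positive")
    n_jobs = min(n_jobs, n_estimators)  # never hand a core an empty group
    base, extra = divmod(n_estimators, n_jobs)
    # The first `extra` groups receive one additional tree.
    return [base + 1 if i < extra else base for i in range(n_jobs)]


def group_bounds(sizes: list[int]) -> list[tuple[int, int]]:
    """Convert group sizes into [start, stop) ranges of tree indices, one per core."""
    bounds, start = [], 0
    for size in sizes:
        bounds.append((start, start + size))
        start += size
    return bounds


# Example: 100 trees on a 16-core machine.
sizes = partition_estimators(100, 16)
print(sizes)                    # four groups of 7 trees, then twelve groups of 6
print(group_bounds(sizes)[:2])  # [(0, 7), (7, 14)]
```

Keeping group sizes within one tree of each other balances the work per core when all trees cost roughly the same to build. Libraries such as scikit-learn expose this kind of tree-level parallelism through an `n_jobs` parameter.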

To evaluate the models comprehensively and generalize their performance, three distinct hardware configurations were selected, and the chosen algorithms were executed on all three devices using both datasets. The analysis and comparison of these results are detailed in Section 6, providing insights into how the algorithms performed across the different hardware setups.

5.2. Data Preprocessing

This subsection details the preprocessing steps applied to each dataset described in Section 4. For Dataset1, initial features obtained from credit card transactions were reduced to 28 components using Principal Component Analysis (PCA) [33]. To ensure user confidentiality, the original features were transformed into a new set of orthogonal features through PCA, resulting in anonymized columns. As the open-source dataset already had PCA applied, it was unnecessary to repeat this preprocessing in the experiments.

For both datasets, standardization was applied so that each feature contributes equally to the analysis. Standardization scales each feature to a mean of zero and a standard deviation of one, which makes features comparable by centering them on the mean and scaling them by the standard deviation. It matters most for models that are sensitive to the scale of the data, such as the distance-based KNN; tree-based ensembles such as Random Forest, XGBoost, and AdaBoost with decision-tree base learners split on per-feature thresholds and are therefore largely insensitive to feature scaling. Standardization can also improve the convergence speed of gradient-based algorithms by making the optimization process more stable.
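The z-score transformation z = (x - mean) / std can be sketched with the standard library. The helper names are hypothetical, and the sketch assumes, as is common practice, that the mean and standard deviation are estimated on the training rows and reused unchanged for the test rows so that no test information leaks into preprocessing.

```python
from statistics import fmean, pstdev


def fit_standardizer(rows: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-feature mean and population standard deviation, estimated from training rows."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    # A constant feature has std 0; use 1.0 so it maps to zeros instead of dividing by 0.
    stds = [pstdev(col) or 1.0 for col in columns]
    return means, stds


def standardize(rows, means, stds):
    """Apply z = (x - mean) / std to every feature of every row."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


train = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
test = [[2.5, 100.0]]
means, stds = fit_standardizer(train)    # statistics come from the training split only
train_z = standardize(train, means, stds)
test_z = standardize(test, means, stds)  # test rows reuse the training statistics
```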

The final preprocessing step was to split the dataset into training and testing sets, with 80% of the data used for training and 20% for testing, which is a common approach in many studies [34]. Splitting data into training and testing sets is crucial in machine learning to ensure unbiased evaluation, to detect overfitting, and to assess the generalization ability of a model to new and unseen data.
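A reproducible 80/20 split can be sketched by shuffling row indices with a seeded generator. `train_test_split_indices` and the seed value 42 are illustrative assumptions, and the split shown is purely random rather than stratified.

```python
import random


def train_test_split_indices(n_rows: int, test_fraction: float = 0.2, seed: int = 42):
    """Shuffle row indices reproducibly and split them into (train, test) index lists."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)  # private generator: reproducible, no global state
    n_test = round(n_rows * test_fraction)
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = train_test_split_indices(1000)
print(len(train_idx), len(test_idx))  # 800 200
```

For a strongly imbalanced label such as fraud, a stratified split that preserves the class ratio in both parts is a common alternative.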

5.3. Multicore Processing for Ensemble Models

An ensemble learning method constructs multiple classifiers, such as the decision trees of a Random Forest (RF), and combines their predictions. Parallelizing the training process can significantly improve computational efficiency, especially for large datasets. The core idea is to train the individual decision trees in parallel, exploiting the fact that the trees are independent of each other.

Figure 3 shows the training of an ensemble classifier with a parallel processing technique. The training of the classifiers is parallelized through the following three major steps.

5.3.1. Data Partitioning

The training data are partitioned into multiple subsets by randomly sampling instances, a technique called bootstrap sampling. In the ensemble model (EM), it is used to create a separate subset of the training data for building each individual classifier. Instances are sampled randomly from the original training dataset with replacement, so each subset can contain duplicate instances, and some instances from the original dataset may be absent from a given subset. Because each classifier in the EM is trained on a slightly different bootstrap sample, the trees become diverse, which reduces the overall variance and improves the generalization performance of the EM. The bootstrap sampling process is given in Algorithm 1.

Algorithm 1: Bootstrap Sampling Algorithm for Generating Subsets from the Original Dataset
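A minimal sketch of the bootstrap sampling described above, using only the Python standard library. It illustrates the standard technique and is not a transcription of Algorithm 1; the function names are hypothetical, and each ensemble member is assumed to get its own seeded generator so that the subsets are reproducible.

```python
import random


def bootstrap_sample(n_rows: int, rng: random.Random) -> tuple[list[int], list[int]]:
    """Draw n_rows row indices with replacement; also return the rows never drawn (out-of-bag)."""
    sampled = [rng.randrange(n_rows) for _ in range(n_rows)]
    drawn = set(sampled)
    out_of_bag = [i for i in range(n_rows) if i not in drawn]
    return sampled, out_of_bag


def bootstrap_subsets(n_rows: int, n_subsets: int, seed: int = 0):
    """One bootstrap subset per ensemble member, each drawn from its own seeded generator."""
    return [bootstrap_sample(n_rows, random.Random(seed + m)) for m in range(n_subsets)]


subsets = bootstrap_subsets(n_rows=1000, n_subsets=5)
sampled, oob = subsets[0]
print(len(sampled), len(set(sampled)), len(oob))  # 1000 draws; distinct + out-of-bag = 1000
```

Each row is left out of a given sample with probability (1 - 1/n)^n, which approaches 1/e ≈ 0.368 as n grows, so a bootstrap sample contains about 63.2% of the distinct rows on average; the rows left out form the out-of-bag set.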

5.3.2. Parallel Tree Construction

Each subset of the training data is assigned to a separate processor or computing node, which independently builds a decision tree from its assigned subset. During tree construction, a random subset of features is considered at each node split, further increasing the diversity among the trees. The tree construction algorithm, CART (Classification and Regression Trees), is executed in parallel on each processor or node [35]. Synchronization mechanisms are required to ensure that the parallel tree construction processes do not interfere with each other and that the final results are correctly aggregated. Parallelizing tree construction distributes the computational load across multiple processors or nodes, which leads to significant performance improvements, especially for large datasets.
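The parallel construction step can be sketched with Python's `concurrent.futures`. To stay short, this sketch replaces CART with decision stumps (depth-1 trees) that consider a random subset of features, so it illustrates the parallel structure rather than the authors' tree learner; `fit_stump`, `fit_group`, and `fit_forest` are hypothetical names. Each tree is assumed to receive its own seed, which makes the trained ensemble independent of how trees are grouped across cores.

```python
import random
from collections import Counter
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def fit_stump(X, y, seed, n_split_features):
    """Fit one depth-1 tree (a simplified stand-in for a CART tree) on a bootstrap sample."""
    rng = random.Random(seed)
    n = len(X)
    rows = [rng.randrange(n) for _ in range(n)]                 # bootstrap sample
    features = rng.sample(range(len(X[0])), n_split_features)    # random feature subset
    overall = Counter(y[r] for r in rows).most_common(1)[0][0]
    best = None
    for f in features:
        for threshold in sorted({X[r][f] for r in rows}):
            left = Counter(y[r] for r in rows if X[r][f] <= threshold)
            right = Counter(y[r] for r in rows if X[r][f] > threshold)
            # Training errors if each side predicts its own majority label.
            errors = (sum(left.values()) - max(left.values(), default=0)
                      + sum(right.values()) - max(right.values(), default=0))
            if best is None or errors < best[0]:
                left_label = left.most_common(1)[0][0] if left else overall
                right_label = right.most_common(1)[0][0] if right else overall
                best = (errors, f, threshold, left_label, right_label)
    return best[1:]  # (feature, threshold, left_label, right_label)


def fit_group(task):
    """Worker: fit every tree whose seed was assigned to this core."""
    X, y, seeds, n_split_features = task
    return [(seed, fit_stump(X, y, seed, n_split_features)) for seed in seeds]


def fit_forest(X, y, n_trees, n_jobs, n_split_features=1, base_seed=0,
               executor_cls=ProcessPoolExecutor):
    """Train n_trees stumps on n_jobs workers (ThreadPoolExecutor also accepted)."""
    seeds = [base_seed + t for t in range(n_trees)]
    groups = [seeds[j::n_jobs] for j in range(n_jobs) if seeds[j::n_jobs]]  # round-robin
    tasks = [(X, y, group, n_split_features) for group in groups]
    with executor_cls(max_workers=len(groups)) as pool:
        results = list(pool.map(fit_group, tasks))
    # Gathering the workers' results is the only synchronization point; sorting by
    # seed makes the forest identical whatever the grouping of trees across cores.
    pairs = sorted((pair for batch in results for pair in batch), key=lambda p: p[0])
    return [stump for _, stump in pairs]
```

`ProcessPoolExecutor` is the default because, in standard CPython builds, the global interpreter lock prevents threads from running pure-Python code in parallel; passing `ThreadPoolExecutor` is convenient for quick single-process checks. When using processes, call `fit_forest` from inside an `if __name__ == "__main__":` block, which is required on platforms that start workers with the spawn or forkserver method.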

5.3.3. Ensemble Combination

Once all classifiers are trained, they are combined to form the final model. The combination step is typically straightforward and does not require parallelization, since it only aggregates the predictions of the individual classifiers through simple operations such as counting votes or computing averages.

In a Random Forest classifier, the final prediction is made in two steps. First, for a given test instance, each decision tree in the forest predicts a class. The final prediction is then determined by majority vote among the individual tree predictions: the class with the most votes is assigned as the final prediction.
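The majority vote can be sketched in a few lines. `majority_vote` is a hypothetical helper, and breaking ties in favor of the label encountered first is an assumed rule. Note that scikit-learn's `RandomForestClassifier` combines trees by averaging their predicted class probabilities (soft voting) rather than counting hard votes, so its predictions can occasionally differ from this scheme.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Combine per-tree labels; tree_predictions[t][i] is tree t's label for test instance i."""
    n_instances = len(tree_predictions[0])
    final = []
    for i in range(n_instances):
        votes = Counter(preds[i] for preds in tree_predictions)
        # Counter.most_common lists equal counts in first-encountered order,
        # so a tie goes to the label of the earliest tree.
        final.append(votes.most_common(1)[0][0])
    return final


# Three trees, three test instances.
print(majority_vote([[0, 1, 1],
                     [0, 0, 1],
                     [1, 0, 1]]))  # [0, 0, 1]
```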

5.4. Parallelization of the K-Nearest Neighbors Algorithm

The parallelization of KNN follows a process similar to the multicore processing of ensemble models. First, the training data are partitioned into multiple subsets, as explained in Section 5.3.1, and each subset is assigned to a separate processor/node. Because KNN is a lazy learner, training a KNN model on a processor/node amounts to storing its assigned subset; the distance computations take place when a new data point has to be classified.

When a new data point requires classification, it is distributed to all processors/nodes. Each processor/node computes the distances between the new point and the instances in its subset, finds the k nearest neighbors, and makes a class prediction based on those neighbors. The predictions from all processors/nodes are then combined using one of two techniques.

The first technique is majority voting, where the class predicted by the largest number of processors/nodes is chosen as the final prediction. This approach is simple and effective but does not consider the relative distances of the neighbors. The second technique is distance-weighted voting, where the class predictions are weighted by the inverse of the distances to the neighbors, and the class with the highest weighted sum is chosen as the final prediction. Because it accounts for the relative distances of the neighbors, this approach can improve the accuracy of the final prediction.
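The sharded KNN scheme and its two combination rules can be sketched as follows. The names are hypothetical, the training set is assumed to be split into disjoint round-robin shards for simplicity (bootstrap subsets as in Section 5.3.1 would plug in the same way), and distance-weighted voting is read here as every neighbor returned by every node adding 1/distance to its class score. The `mapper` argument runs the per-shard searches sequentially by default; passing `ProcessPoolExecutor().map` would run them in separate processes.

```python
import heapq
import math
from collections import Counter, defaultdict


def make_shards(points, labels, n_shards):
    """Round-robin partition of the training set into n_shards disjoint subsets."""
    return [list(zip(points[j::n_shards], labels[j::n_shards])) for j in range(n_shards)]


def shard_neighbors(task):
    """Worker: the k nearest (distance, label) pairs inside one shard."""
    shard, query, k = task
    return heapq.nsmallest(k, ((math.dist(x, query), label) for x, label in shard),
                           key=lambda pair: pair[0])


def classify(shards, query, k, mode="majority", mapper=map):
    """Combine per-shard k-NN results by shard majority voting or distance-weighted voting."""
    per_shard = list(mapper(shard_neighbors, [(s, query, k) for s in shards if s]))
    if mode == "majority":
        # Each shard votes once, with the majority label among its own k neighbors.
        shard_votes = Counter(Counter(label for _, label in nbrs).most_common(1)[0][0]
                              for nbrs in per_shard)
        return shard_votes.most_common(1)[0][0]
    # Distance-weighted: every returned neighbor adds 1/distance to its class score.
    scores = defaultdict(float)
    for nbrs in per_shard:
        for dist, label in nbrs:
            scores[label] += 1.0 / (dist + 1e-12)  # epsilon guards exact matches
    return max(scores, key=scores.get)


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
labels = ["a"] * 4 + ["b"] * 4
shards = make_shards(points, labels, n_shards=2)
print(classify(shards, (0.5, 0.5), k=2))                     # a
print(classify(shards, (10.4, 10.6), k=2, mode="weighted"))  # b
```

Note that combining one vote per node is not guaranteed to match a single global KNN search; merging each node's k nearest (distance, label) pairs and keeping the overall k nearest would reproduce exact KNN.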

5.5. Hardware Specifications

The experiments were conducted on three multicore environments with the specifications given in Table 2.

6. Results and Discussion

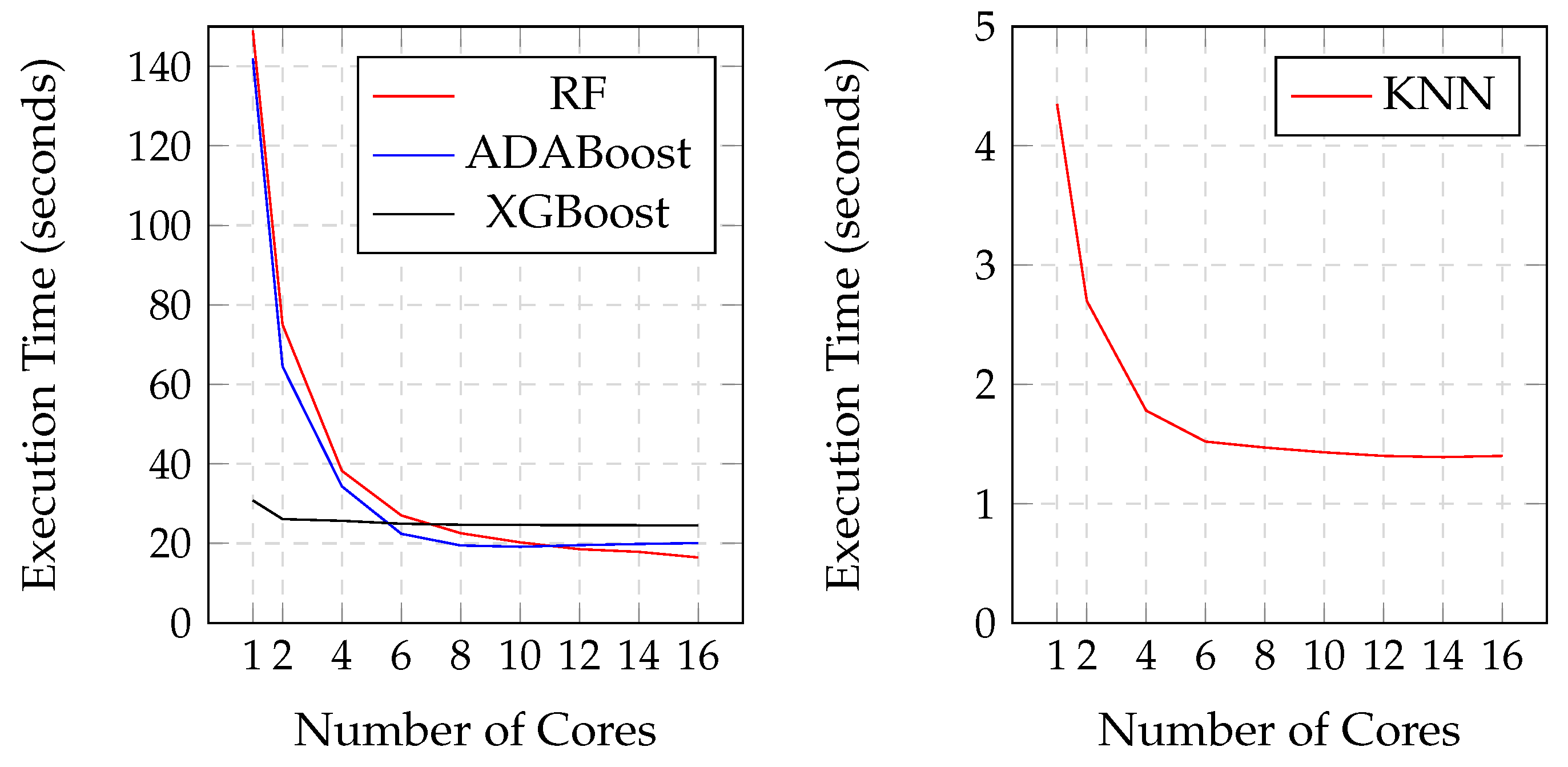

This study investigated the impact of increasing the number of cores on the accuracy and speed of machine learning algorithms within a parallel processing architecture. The results show that the accuracy remains essentially constant, while the execution time decreases as the number of cores increases. The results are analyzed in the following subsections.

6.1. Performance with Dataset1

For the larger dataset in the experiment, Dataset1, the classification models achieved high accuracy, with the highest being 99.96% for Device1. The results show that the accuracy obtained by Random Forest, ADABoost, and XGBoost were 99.96%, 99.91%, and 99.92%, respectively. Despite slight differences in accuracy among the models, the accuracy was maintained regardless of the number of cores used.

Figure 4 also shows the execution times of the various models trained with Dataset1 on Device1. For better visualization, Figure 4 is divided into two parts based on the range of execution times. XGBoost and KNN had lower training times than Random Forest and ADABoost. Without parallel processing, the slowest model was Random Forest, with an execution time of 148.97 s, while with 16 cores the slowest model was ADABoost, at 20.08 s. With single-core processing, the fastest model was KNN (4.35 s), followed by XGBoost (30.80 s); with 16 cores, XGBoost took 24.55 s to train and KNN took 1.40 s.

6.1.1. Generalization across Various Hardware Configurations

The experiment was extended to different devices with varying hardware configurations, as detailed in Section 5.5. This analysis was crucial for generalizing and evaluating the proposed algorithm across different platforms with varying operating systems and processors.

Figure 5 compares the performance of the Random Forest and XGBoost classifiers across three devices. The graph shows that Device1 was the fastest for both single-core and multicore processing for both models. Despite this, the pattern of reduction in the execution time with the addition of more cores was similar for each model across all devices.

When the accuracy of the four models trained with Dataset1 was compared across all three devices, the accuracy of every model was preserved, indicating that the models' performance is robust to different hardware environments. In this comparison, the Random Forest, XGBoost, ADABoost, and KNN classifiers achieved accuracies of 99.96%, 99.92%, 99.91%, and 99.84%, respectively.

6.1.2. Speed Improvement Analysis

Table 3 presents the multicore speedups of the models trained with Dataset1 on Device1. The Random Forest model improved steadily with the number of cores up to 16, achieving a speedup of 9.05 times, which corresponds to a parallel efficiency of about 57% (9.05/16). The ADABoost model showed a similar trend, with its speedup peaking at 7.28 times with 8 cores and then decreasing slightly with 16 cores. XGBoost and KNNs showed more modest improvements, with maximum speedups of 2.25 and 3.1 times, respectively, indicating that they use multiple cores less efficiently than Random Forest and ADABoost.

Table 4 illustrates the performance improvements on Device2. Random Forest continued to show significant speedup, reaching up to 4.01 times with 8 cores, although this was less than the improvement seen on Device1. ADABoost also performed well, achieving a speedup of 4.39 times with 8 cores. XGBoost and KNNs displayed more limited improvements, with XGBoost peaking at 1.87 times and KNNs at 2.12 times. The reduced speedup compared to Device1 suggests that Device2 may have less efficient multicore processing capabilities or other hardware limitations affecting performance.

Table 5 provides the multicore speedups on Device3. Random Forest showed a maximum speedup of 4.52 times with 8 cores, while ADABoost reached a speedup of 4.4 times, indicating strong parallel processing capabilities on this device. XGBoost and KNNs again showed more modest improvements, with XGBoost peaking at 2.13 times and KNNs at 2.15 times. Overall, Device3 performed better than Device2 but not as well as Device1 in terms of multicore speedup, highlighting the variability in multicore processing efficiency across hardware configurations.
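The speedups in Tables 3 to 6 compare single-core and multicore execution times. The usual definitions, speedup S_n = T_1 / T_n and parallel efficiency E_n = S_n / n, can be sketched and checked against training times reported in Section 6. The helper names are hypothetical, and taking the fastest of several timed runs is one common measurement convention, not necessarily the one used for the reported times.

```python
import time


def best_time(fn, repeats=3):
    """Wall-clock seconds of the fastest of `repeats` calls, which damps timing noise."""
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        times.append(time.perf_counter() - start)
    return min(times)


def speedup(t_one_core, t_n_cores):
    """S_n = T_1 / T_n: how many times faster the n-core run is than the single-core run."""
    return t_one_core / t_n_cores


def efficiency(t_one_core, t_n_cores, n_cores):
    """E_n = S_n / n: fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    return speedup(t_one_core, t_n_cores) / n_cores


# Training times reported in Section 6 for Dataset1 on Device1.
print(speedup(148.97, 38.24), efficiency(148.97, 38.24, 4))  # Random Forest, 4 cores
print(speedup(4.35, 1.40), efficiency(4.35, 1.40, 16))       # KNN, 16 cores
```

With these figures, Random Forest reaches a speedup of about 3.9 on 4 cores (efficiency of about 0.97), whereas KNN reaches about 3.1 on 16 cores (efficiency of about 0.19), which quantifies the gap in multicore utilization discussed above.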

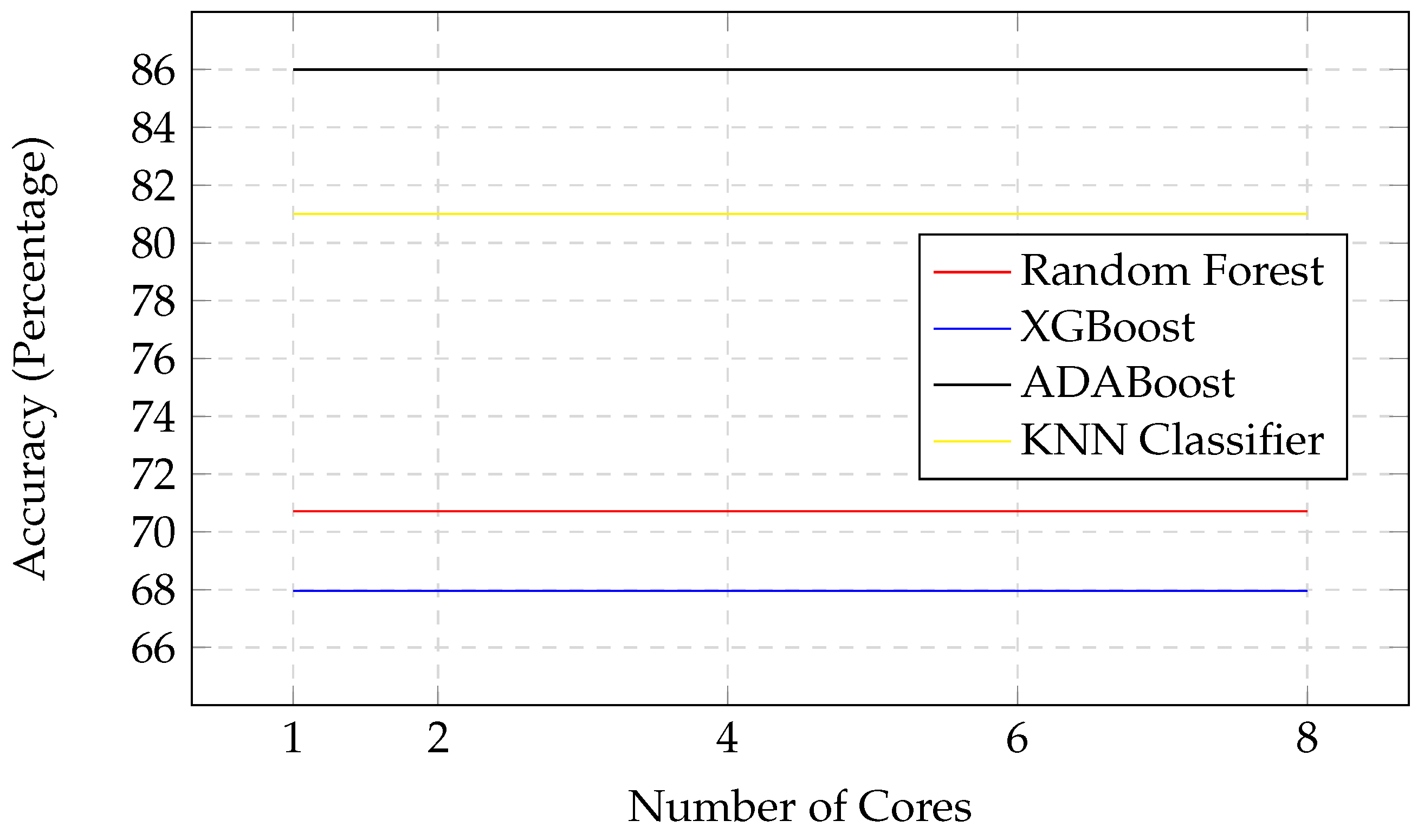

6.2. Performance with Dataset2

Figure 6 illustrates the accuracy of the machine learning models (Random Forest, XGBoost, ADABoost, and KNNs) when trained on Dataset2 and run on Device2. The accuracy remained constant across all core configurations, indicating that increasing the number of cores does not affect the accuracy of these models. Random Forest and KNNs achieved stable accuracies of 70.71% and 81.0%, respectively. ADABoost achieved the highest accuracy at 86.0%, while XGBoost maintained an accuracy of 67.96%. This consistency suggests that the classification performance of the models is robust to changes in computational resources when they are parallelized by the proposed algorithm.

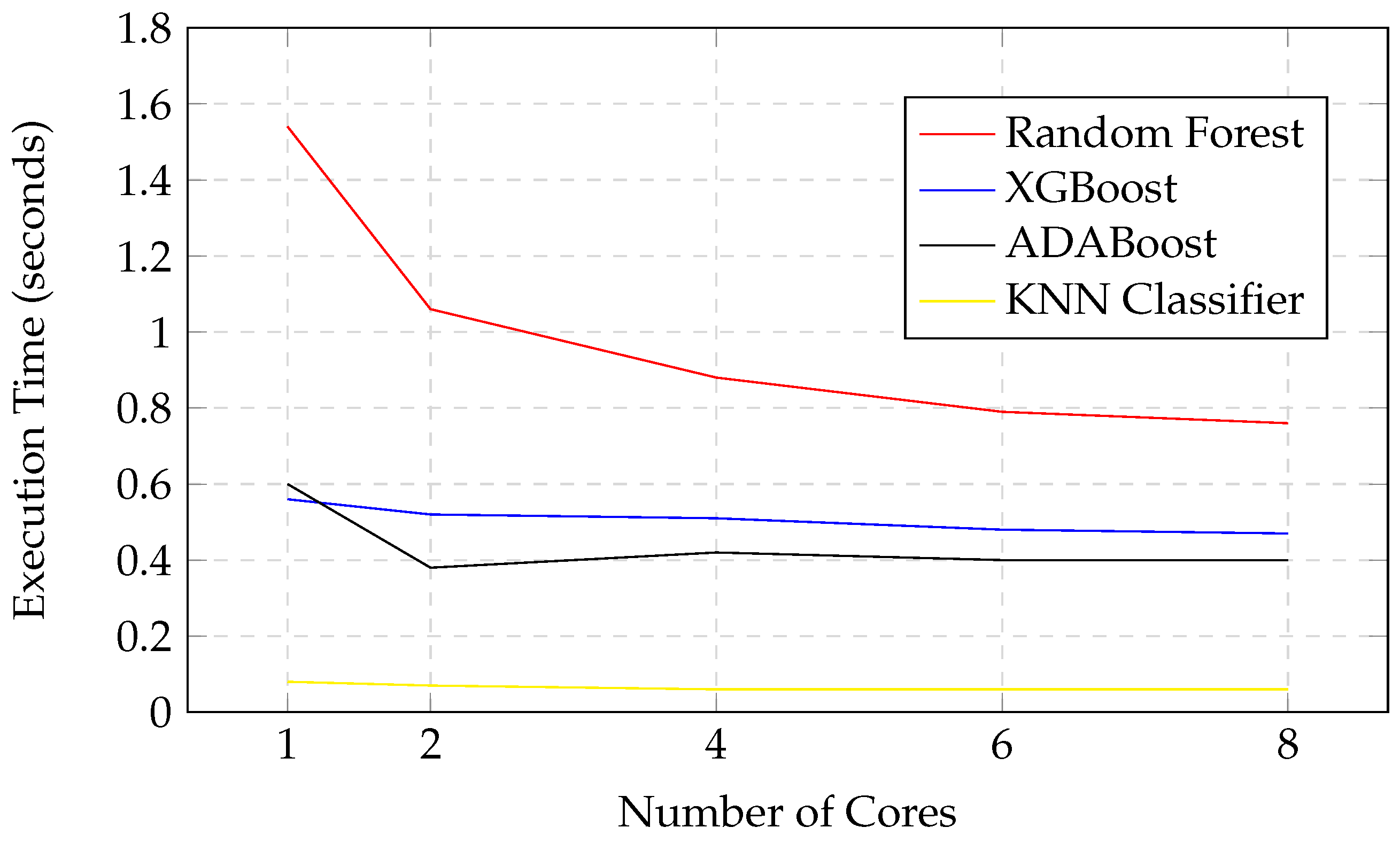

Figure 7 displays the execution times of the same models when trained on Dataset2 and run on Device2. As the number of cores increased, the execution time decreased noticeably for all models. Random Forest, which had the highest single-core execution time (1.54 s), dropped to 0.76 s with 8 cores. Similarly, ADABoost’s execution time decreased from 0.60 s to 0.40 s, and XGBoost’s from 0.56 s to 0.47 s. KNNs consistently had the lowest execution times, starting at 0.08 s and decreasing slightly to 0.06 s with 8 cores. This reduction in execution time with additional cores demonstrates the efficiency gains achieved through parallel processing.

Table 6 presents the multicore speedups of the models trained with Dataset2 on Device2, expressed as ratios of single-core to multicore execution time. The speedup values for Random Forest, XGBoost, ADABoost, and KNNs show how well these models use multiple cores. Random Forest showed a consistent and significant improvement, with its speedup increasing from 1.45 times with 2 cores to 2.02 times with 8 cores. XGBoost exhibited a more modest speedup, peaking at 1.19 times with 8 cores, indicating limited scalability with additional cores. ADABoost showed a variable pattern, achieving a speedup of 1.57 times with 2 cores, decreasing slightly with 4 cores, and stabilizing at 1.5 times with 8 cores. KNNs showed moderate improvement, with the speedup rising from 1.14 times with 2 cores to 1.33 times with 4 and 8 cores. Overall, Random Forest and ADABoost benefit significantly from multicore processing, whereas XGBoost and KNNs show more limited gains, underscoring that the efficiency of parallel processing varies across models.

6.3. Comparison of the Speed Improvements between Datasets

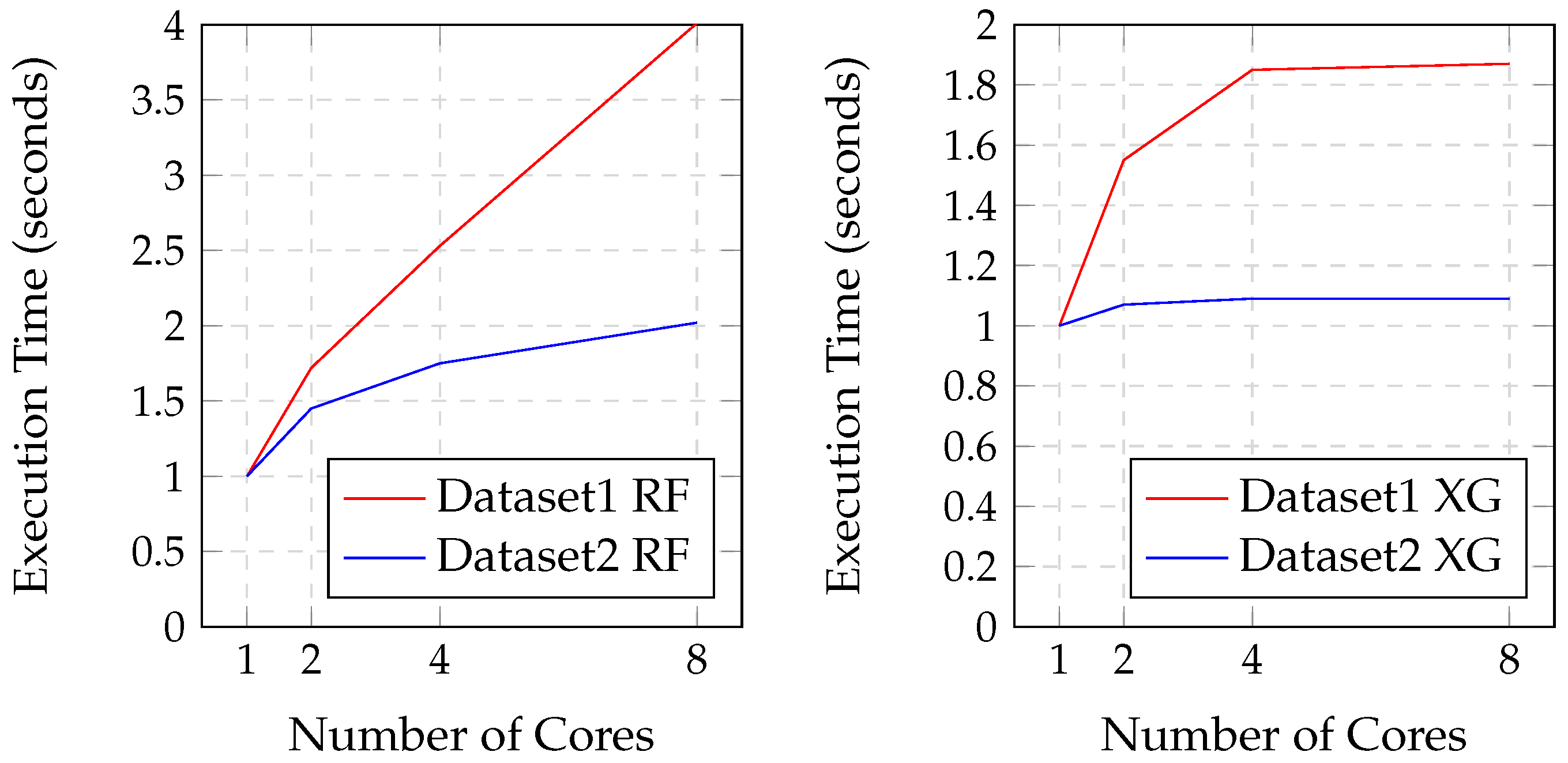

Figure 8 illustrates the performance speed of the Random Forest and XGBoost models on the two datasets, comparing their execution times across different numbers of cores. For Random Forest, Dataset1 demonstrated a substantial decrease in execution time with increasing cores, achieving the most significant speedup by reaching 4.01 times with 8 cores. Dataset2 also showed improved performance with more cores, though to a lesser extent, peaking at 2.02 times with 8 cores. In contrast, XGBoost’s execution times exhibited modest improvements. For Dataset1, the speedup reached 1.87 times with 8 cores, while for Dataset2, the performance gains were minimal, with a peak speedup of only 1.09 times regardless of the number of cores. This comparison highlights that, while Random Forest benefited significantly from parallel processing on both datasets, XGBoost’s performance improvements were more restrained, particularly for Dataset2.

6.4. Comparison of the Results with Existing Works

Table 7 compares the proposed technique with existing works on various problems. For the Traveling Salesman Problem, parallelizing the Ant Colony Optimization (ACO) algorithm yielded a 1.007 times faster execution. For handwriting recognition on the MNIST digits dataset, a backpropagation algorithm using exemplar parallelism for neural networks (NNs) achieved a 2.4 times speedup, albeit with a 6.7 percent decrease in accuracy. For image classification using convolutional neural networks (CNNs), data partitioning combined with parallel training on multiple cores resulted in a 1.05 times faster execution. For wine quality classification and fraud detection with the Random Forest classifier, the technique proposed in this work achieved 2.22 times and 3.89 times faster execution, respectively, with only minimal fluctuation in accuracy.

6.5. Discussions

The results of the implemented parallel computing algorithm show substantial reductions in execution time while maintaining high accuracy across different machine learning models. The Random Forest classifier, in particular, improved more than the other models. Its ensemble structure is naturally suited to parallel processing: each tree in the forest is trained on its own bootstrap sample, independently of the others, so the trees can be built simultaneously. This independence allows the Random Forest classifier to make full use of the available cores, substantially reducing execution time without sacrificing accuracy. For instance, accuracy remained at 99.96% across different core counts, while execution time fell from 148.97 s with a single core to 38.24 s with four cores. This demonstrates that the Random Forest classifier is particularly well suited to parallel processing, offering both efficiency and reliability when handling large datasets.
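To make the independence argument concrete, the following is a minimal, hypothetical sketch of bagging with decision stumps (depth-1 trees) on one-dimensional data; it is not the authors' implementation, and the names `fit_stump`, `fit_forest`, and `predict` are our own. Each tree draws its own bootstrap sample from a private seed, so the trees need no communication with one another. Because every tree depends only on its seed, the fitted ensemble, and therefore its accuracy, is identical for any number of workers, mirroring the constant accuracy observed across core counts.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def fit_stump(task):
    """Fit one decision stump on its own bootstrap sample.

    The task carries a private seed and only reads the shared training data,
    so no stump depends on any other: they can be fitted in any order or at once.
    """
    seed, xs, ys = task
    rng = random.Random(seed)
    n = len(xs)
    sample = [rng.randrange(n) for _ in range(n)]  # bootstrap: n rows drawn with replacement
    best_errors, best_threshold = n + 1, xs[sample[0]]
    for i in sample:
        threshold = xs[i]
        # The stump predicts class 1 when x >= threshold; count its training errors.
        errors = sum((xs[j] >= threshold) != (ys[j] == 1) for j in sample)
        if errors < best_errors:
            best_errors, best_threshold = errors, threshold
    return best_threshold


def fit_forest(xs, ys, n_trees, n_workers):
    """Fit n_trees stumps concurrently; pool.map returns them in seed order."""
    tasks = [(seed, xs, ys) for seed in range(n_trees)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(fit_stump, tasks))


def predict(thresholds, x):
    """Majority vote over all stumps."""
    votes = sum(1 for t in thresholds if x >= t)
    return 1 if 2 * votes > len(thresholds) else 0


if __name__ == "__main__":
    xs = list(range(20))
    ys = [1 if x >= 10 else 0 for x in xs]
    serial = fit_forest(xs, ys, n_trees=25, n_workers=1)
    parallel = fit_forest(xs, ys, n_trees=25, n_workers=4)
    print("identical ensembles:", serial == parallel)
    print("predictions for x = 2 and x = 15:", predict(parallel, 2), predict(parallel, 15))
```

`ThreadPoolExecutor` is used here only so the sketch runs anywhere. In CPython, the global interpreter lock prevents threads from speeding up this pure-Python loop, so real multicore gains require `ProcessPoolExecutor` or a library whose tree building runs in native code, such as scikit-learn's `RandomForestClassifier`, whose `n_jobs` parameter sets the number of parallel jobs.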

In contrast, other models such as K-Nearest Neighbors (KNNs) showed smaller improvements. Although the KNNs model did benefit from parallel computing, its algorithm relies heavily on distance calculations for classification and did not parallelize as efficiently as the tree-based structure of Random Forest. As a result, the KNNs model's execution time decreased from 4.35 s with one core to 1.52 s with eight cores, a smaller gain per core than Random Forest achieved, while accuracy remained stable at 99.84%. This comparison highlights that the characteristics of each algorithm largely determine how much it gains from parallel processing. Models like Random Forest, whose tasks are inherently independent and parallelizable, show more pronounced performance gains, whereas models with more interdependent calculations, such as KNNs, show relatively modest improvements.
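The Random Forest and KNNs timings quoted in this section can be placed on a common footing using speedup $S(p) = T_1 / T_p$ and parallel efficiency $E(p) = S(p)/p$. The worked arithmetic below uses only the reported execution times; note that the two models were measured at different core counts (four versus eight), so the comparison is illustrative rather than like-for-like.

$$
S_{\text{RF}}(4) = \frac{148.97}{38.24} \approx 3.90, \qquad E_{\text{RF}}(4) = \frac{148.97}{4 \times 38.24} \approx 0.97
$$

$$
S_{\text{KNN}}(8) = \frac{4.35}{1.52} \approx 2.86, \qquad E_{\text{KNN}}(8) = \frac{4.35}{8 \times 1.52} \approx 0.36
$$

Random Forest thus comes close to ideal linear scaling on four cores, whereas KNNs uses only about a third of the capacity of eight cores, even though its absolute speedup is still nearly threefold.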

6.6. Limitations

Despite the promising results, several limitations need to be acknowledged. First, the performance gains observed were heavily dependent on the hardware configuration, particularly the number of available cores and the architecture of the multicore processor. In environments with fewer cores or less advanced hardware, the speedup may be less significant. Second, the parallel approach introduced additional complexity in terms of implementation and debugging, which could pose challenges for practitioners with limited experience in parallel computing. Additionally, while the method was tested on a diverse set of machine learning algorithms, certain algorithms or models with inherently sequential operations may not benefit as much from parallelization. Finally, the overhead associated with parallel processing, such as inter-thread communication and synchronization, can diminish the performance improvements if not managed efficiently. Future work should focus on addressing these limitations by optimizing the parallelization strategies and exploring adaptive approaches that can dynamically adjust based on the hardware and dataset characteristics.