3.1. The Proposed ORC Algorithm

Here, we introduce ORC in the proposed SSA. Previous research studies [5,6,7,8,9,14,18,19] have shown that UML K-means is very susceptible to outliers (denoted O) and tends to converge to locally optimal centroids. Including an outlier-removal step in every iteration of the clustering process makes it possible to obtain good outcomes even on datasets that contain many outliers, because points far from a cluster centroid no longer affect the centroid calculation. To keep things simple, we learn a single hyper-parameter: the fraction of outlier samples to remove.

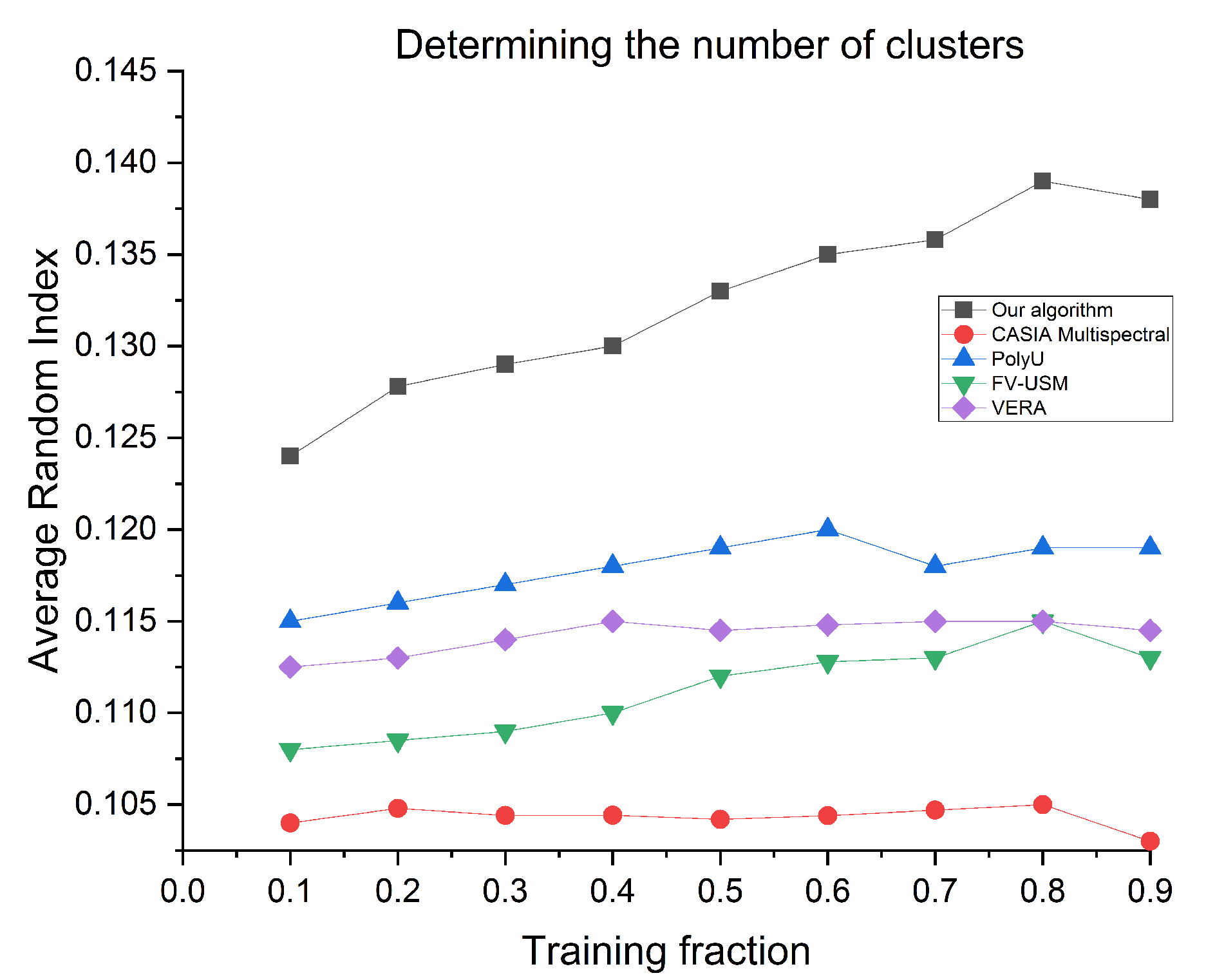

Algorithm 1 presents the proposed ORC, while Figure 3a shows the original and normalized data. Our technique (with 95% confidence intervals) is compared with traditional clustering baselines. Given enough training problems, our method outperforms the best baseline algorithms by more than 5%. Note that ARI is a demanding performance indicator because it corrects for chance and can even be negative. Furthermore, the best attainable ARI, determined by running the optimal procedure for each dataset, was about 0.16, which makes this improvement substantial.
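To make the "corrects for chance and can even be negative" point concrete, here is a minimal sketch of ARI computed from the contingency table in the standard Hubert and Arabie form. The function name `adjusted_rand_index` is hypothetical and the code is illustrative, not the evaluation code used in this study.

```python
from collections import Counter
from math import comb


def _pairs(counts):
    """Number of unordered pairs that can be drawn inside each group."""
    return sum(comb(c, 2) for c in counts)


def adjusted_rand_index(labels_true, labels_pred):
    """ARI = (index - expected) / (max_index - expected)."""
    n = len(labels_true)
    index = _pairs(Counter(zip(labels_true, labels_pred)).values())
    sum_a = _pairs(Counter(labels_true).values())
    sum_b = _pairs(Counter(labels_pred).values())
    expected = sum_a * sum_b / comb(n, 2)  # agreement expected by chance
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:  # degenerate case, e.g. both labelings trivial
        return 1.0
    return (index - expected) / (max_index - expected)
```

For example, `adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])` gives -0.5: the prediction agrees with the ground truth less often than a random labeling with the same cluster sizes would.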

Figure 3b shows that outlier removal yields much better outcomes. Removing 1% of the observations as outliers markedly increases the ARI score. Surprisingly, removing more than 1% brings performance back down to the level observed before outlier elimination. Our system therefore determines the appropriate amount (1%) from the data.

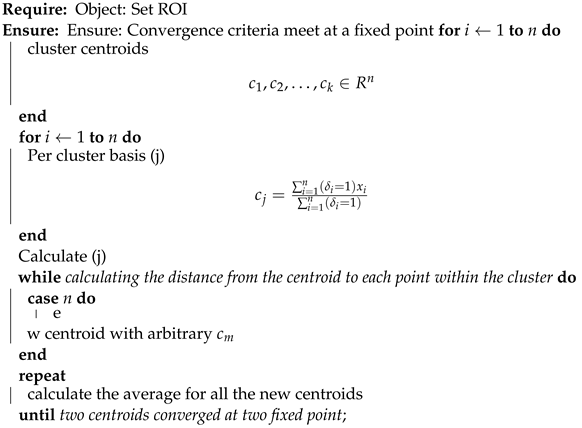

| Algorithm 1: Proposed ORC algorithm. |

- Require: Classification training problems
- Ensure: Centroid computation
- 1: The classification cases for training problems are
- 2: With method C and some parameter
- 3: Define
- 4:
- 5: Set aside the proportion of samples that is furthest from
- 6: in Euclidean distance as outliers,
- 7: Cluster the data with outliers removed using C, and assign each outlier to the nearest cluster center
- 8: END

|
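Several symbols in Algorithm 1 did not survive extraction, so the following is only a minimal sketch of the idea under stated assumptions. It assumes that C is Lloyd's K-means and that the outlier proportion is a fraction `outlier_frac` of all points. In each iteration, the points farthest (in Euclidean distance) from their nearest centroid are set aside before the centroids are updated. At the end, every point, outliers included, is assigned to its nearest center. The names `orc_kmeans`, `outlier_frac`, and `init` are hypothetical.

```python
import numpy as np


def _dists(X, C):
    """Euclidean distance from every point to every centroid, shape (n, k)."""
    return np.linalg.norm(X[:, None, :] - C[None, :, :], axis=2)


def orc_kmeans(X, k, outlier_frac=0.01, n_iter=100, init=None, seed=0):
    """Hypothetical ORC sketch wrapped around Lloyd's K-means."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    if init is None:
        C = X[rng.choice(len(X), size=k, replace=False)]  # random initial centroids
    else:
        C = np.asarray(init, dtype=float).copy()
    n_out = int(np.floor(outlier_frac * len(X)))  # points to set aside

    def split(C):
        d = _dists(X, C)
        labels = d.argmin(axis=1)
        nearest = d[np.arange(len(X)), labels]
        outlier = np.zeros(len(X), dtype=bool)
        if n_out > 0:
            outlier[np.argsort(nearest)[-n_out:]] = True  # the farthest points
        return labels, outlier

    for _ in range(n_iter):
        labels, outlier = split(C)
        new_C = C.copy()
        for j in range(k):
            members = X[~outlier & (labels == j)]
            if len(members):  # keep the old centroid if a cluster empties
                new_C[j] = members.mean(axis=0)
        converged = np.allclose(new_C, C)
        C = new_C
        if converged:
            break
    # Final pass: recompute the outlier set, then label every point
    # (outliers included) with its nearest center.
    labels, outlier = split(C)
    return C, labels, outlier
```

With `outlier_frac=0.0`, no points are set aside and the sketch reduces to plain Lloyd's K-means. This makes it easy to compare the two settings, as in Figure 3b.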

In our method, the algorithm selects the setting with the highest estimated ARI as a function of the SC [5] and k. We evaluate on the same 90 clustering datasets as the baseline. In this experiment, we employ simple linear regression to estimate ARI: for each k, all training-set data in the partition associated with k are used to fit ARI as a linear function of SC. Each dataset in the training set contributes 10 target values, corresponding to different runs with the number of clusters fixed at k.
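The per-k regression step can be sketched as follows. This assumes that SC values have already been computed for every training run and are grouped by k. One least-squares line ARI ≈ slope · SC + intercept is fitted per k, and for a new dataset the k with the highest predicted ARI is chosen. The names `fit_ari_models` and `choose_k` and the dictionary layout are hypothetical.

```python
import numpy as np


def fit_ari_models(train):
    """train maps k -> (sc_values, ari_values) pooled over all training runs."""
    models = {}
    for k, (sc, ari) in train.items():
        slope, intercept = np.polyfit(sc, ari, deg=1)  # least-squares line
        models[k] = (slope, intercept)
    return models


def choose_k(models, sc_by_k):
    """Pick the k whose linear model predicts the highest ARI for a new dataset."""
    predicted = {k: models[k][0] * sc + models[k][1] for k, sc in sc_by_k.items()}
    return max(predicted, key=predicted.get), predicted
```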

To illustrate, consider a clustering method C (e.g., K-means clustering [20]). Let a parameter represent the proportion of outliers to disregard during fitting, and define the variant of C that uses this parameter on the data. To maximize performance, we can select the best value of this parameter. The outlier-removal method can be directly modified to eliminate a variable proportion of outliers from each test dataset. The approach can be viewed as assigning weights to the points of a test set of size l: each of the o outlier points identified by the learned threshold receives weight zero, while all other points share equal weight.

This hard assignment can be relaxed into a distribution in which the weights are readjusted to give a small but non-zero mass to the o outlier points. The weights are then used in methods such as the weighted K-means algorithm, which arranges the points into clusters plus a collection of noisy or outlier points.
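The hard and relaxed weighting schemes described above can be sketched as follows, together with the weighted K-means centroid update that consumes them. We assume that outliers are the o = ⌊fraction · l⌋ points farthest from their centers and that each receives a small mass `eps` (with `eps = 0` giving hard removal). The remaining l − o points split the rest of the unit mass equally. The names `outlier_weights`, `weighted_centroids`, and `eps` are hypothetical.

```python
import numpy as np


def outlier_weights(dist_to_center, outlier_frac, eps=0.0):
    """Weights for l points: the o farthest get eps, the others share the rest."""
    d = np.asarray(dist_to_center, dtype=float)
    l = len(d)
    o = int(np.floor(outlier_frac * l))
    w = np.full(l, (1.0 - o * eps) / (l - o))  # total mass sums to 1
    if o > 0:
        w[np.argsort(d)[-o:]] = eps
    return w


def weighted_centroids(X, labels, w, k):
    """Weighted K-means update: sum(w_i * x_i) / sum(w_i) within each cluster."""
    X = np.asarray(X, dtype=float)
    C = np.zeros((k, X.shape[1]))
    for j in range(k):
        m = labels == j
        total = w[m].sum()
        # A cluster whose members all carry zero weight has no defined centroid.
        C[j] = (w[m][:, None] * X[m]).sum(axis=0) / total if total > 0 else np.nan
    return C
```

With `eps = 0`, a far-away point has no influence on its centroid. With a small positive `eps`, it pulls the centroid only slightly, which is the relaxation described in the text.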

3.2. Proposed GPO Algorithm for Visualization

In this section, we determine the orientation of the distinctive vein features. These features are captured by the orientation image of the vascular image, in which the local feature orientation specifies the invariant coordinates of ridges and furrows.

Algorithm 2, which we name GPO, describes the main phase of the feature-orientation estimation technique. A correlation technique is used to measure the similarity between the registered and tester images; alternatively, simple distance-based matching metrics such as the Hausdorff distance can be used. In the vein recognition experiments, backpropagation neural networks, Radial Basis Function Neural Networks, and Probabilistic Neural Networks are used as classifiers.
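The two matching options mentioned above can be sketched as follows. We assume that "correlation" means the Pearson correlation between two equally sized grayscale images, and that the Hausdorff distance is taken symmetrically between two point sets (for example, the coordinates of vein pixels). The function names are hypothetical.

```python
import numpy as np


def correlation_score(registered, tester):
    """Pearson correlation between two equally sized grayscale images."""
    a = np.asarray(registered, dtype=float).ravel()
    b = np.asarray(tester, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float((a @ b) / np.sqrt((a @ a) * (b @ b)))


def hausdorff(A, B):
    """Symmetric Hausdorff distance between two point sets of shape (n, 2)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    d = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    # Largest nearest-neighbour gap, taken in both directions.
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```

A correlation near 1 and a small Hausdorff distance both indicate a likely match. Correlation compares raw intensities, whereas the Hausdorff distance compares only the extracted vein geometry.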

| Algorithm 2: Proposed GPO algorithm. |

- Require: img: normalized vein image
- Ensure: local ridge positions
- for … do
- end
- Divide the normalized image into kernels;
- Apply the Sobel kernel filter along the different axes to determine the magnitude variations;
- while … do
- end
- … and estimate the data mean;
- 2: Estimate the magnitude using the equations below;
- 3: Convert img into a continuous vector field;
- 4: Compute the direction of the localized ridges at each point (x, y), where the angular direction is obtained by least-squares estimation.

|
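The equations of Algorithm 2 were lost in extraction. The following sketch therefore uses the widely known least-squares gradient orientation estimator, which matches the steps listed: Sobel gradients, then a per-block angle θ = ½·atan2(Σ2GxGy, Σ(Gx² − Gy²)), rotated by 90° because ridges run perpendicular to the dominant gradient. The block size of 8 and the names `filter3x3` and `ridge_orientation` are assumptions, not values from the paper.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img, k):
    """3x3 cross-correlation with edge padding; output has the input's size."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def ridge_orientation(img, block=8):
    """Least-squares ridge orientation per block, in radians within [0, pi)."""
    img = np.asarray(img, dtype=float)
    gx, gy = filter3x3(img, SOBEL_X), filter3x3(img, SOBEL_Y)
    h, w = img.shape
    theta = np.zeros((h // block, w // block))
    for i in range(h // block):
        for j in range(w // block):
            sl = (slice(i * block, (i + 1) * block), slice(j * block, (j + 1) * block))
            vx = np.sum(2 * gx[sl] * gy[sl])
            vy = np.sum(gx[sl] ** 2 - gy[sl] ** 2)
            # 0.5*atan2(vx, vy) is the dominant gradient angle; ridges are perpendicular.
            theta[i, j] = (0.5 * np.arctan2(vx, vy) + np.pi / 2) % np.pi
    return theta
```

Doubling the angle before averaging is the key design choice. It makes opposite gradient directions (θ and θ + π) reinforce rather than cancel, which suits ridge lines that have no preferred sign.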

3.3. The RDM Algorithm

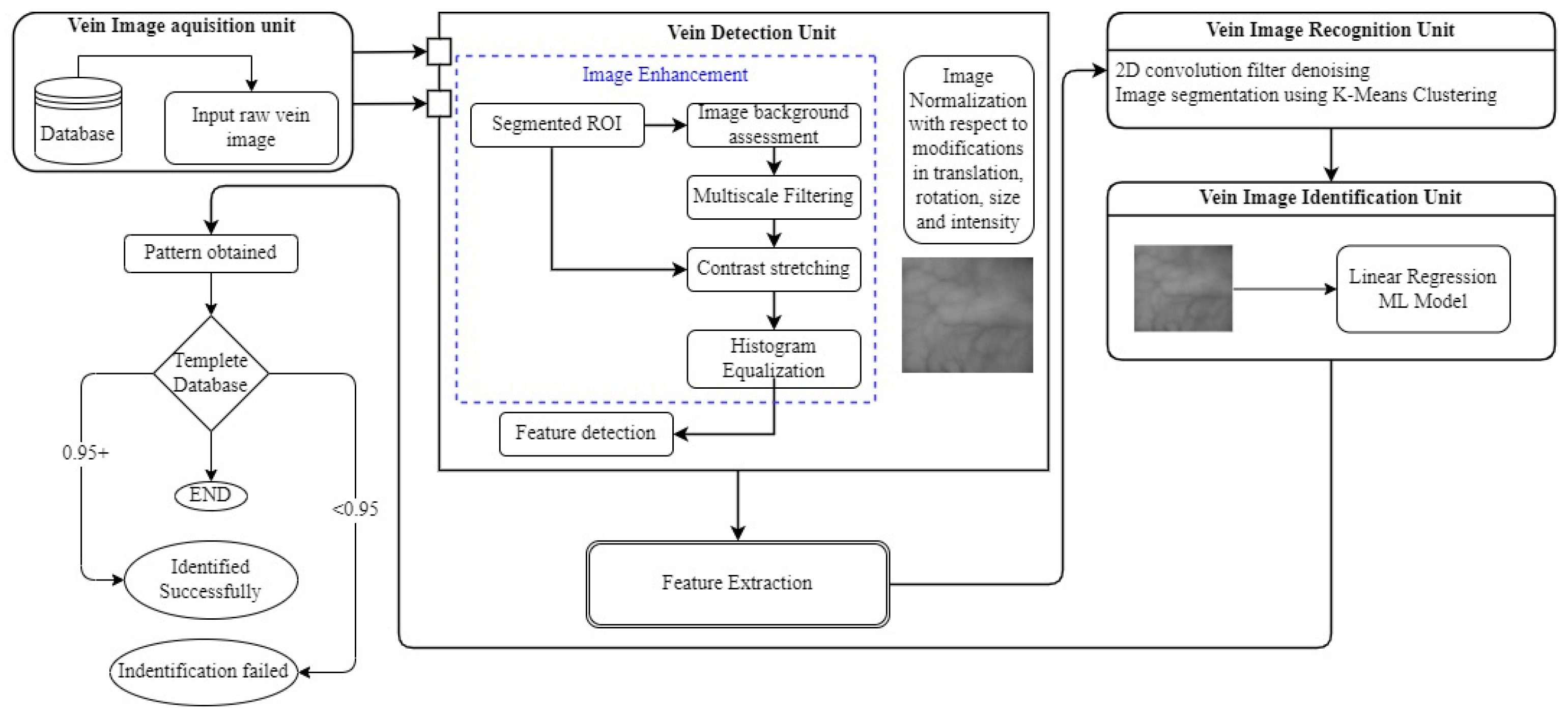

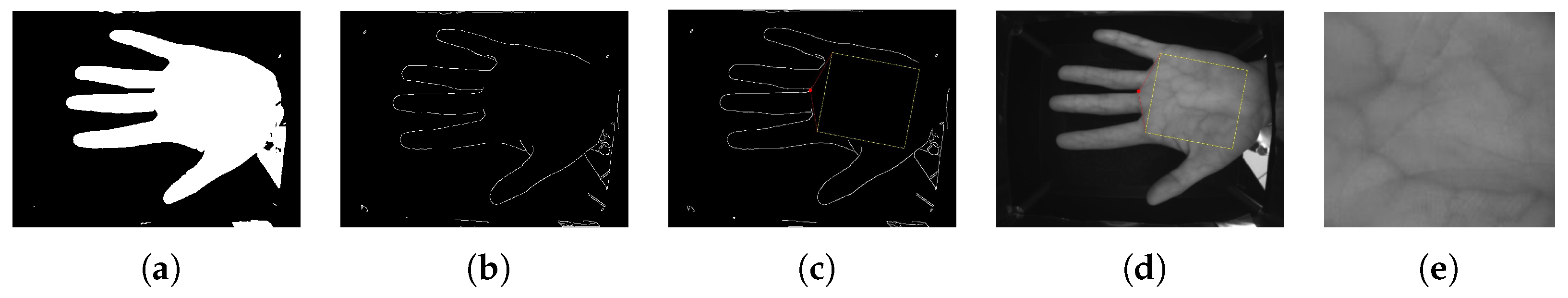

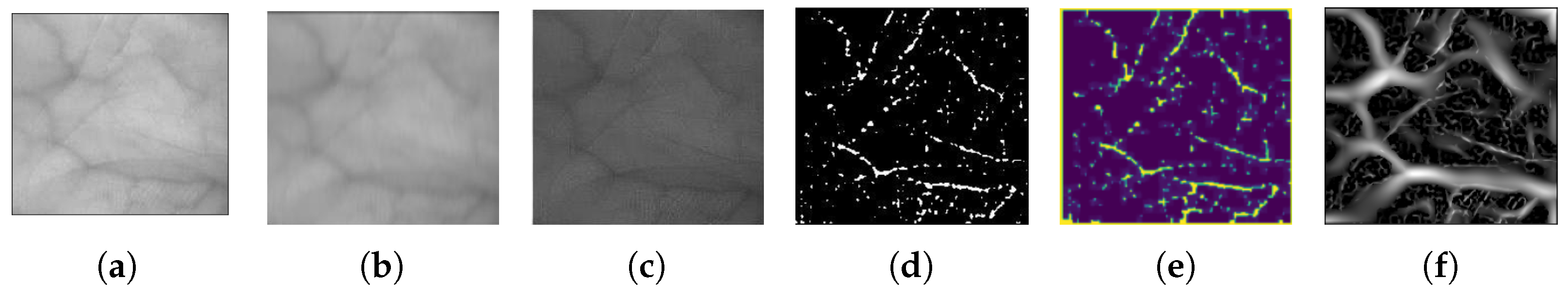

It is necessary to extract the most essential and valuable information from the vein for detection. First, we identify and use the extracted ROI (see Figure 2). To improve the imaging contrast, gamma correction [18] is applied first. We test several well-known image-enhancement methods to bring out the detail of the input vascular ridge pattern (see Figure 4). Although these approaches are practical for sharpening images, they over-emphasize the noise. The LRE [21] technique adopted here instead produces a sharper image without exaggerating the noise: it determines which portions of the image contain critical lines and ridge patterns and then enhances only those areas. To extract the vein structures in an image, the LRE approach employs an “RDM algorithm” (see Figure 4).
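Gamma correction, the first enhancement step above, is a power-law mapping of normalized intensities. The paper does not report the gamma value it used, so `gamma=0.5` below is an assumption, and `gamma_correct` is a hypothetical name. Values below 1 brighten dark regions, and values above 1 darken them.

```python
import numpy as np


def gamma_correct(img, gamma=0.5):
    """Power-law (gamma) correction for an 8-bit grayscale image."""
    norm = np.asarray(img, dtype=float) / 255.0  # map intensities to [0, 1]
    return np.clip(np.round(255.0 * norm ** gamma), 0, 255).astype(np.uint8)
```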

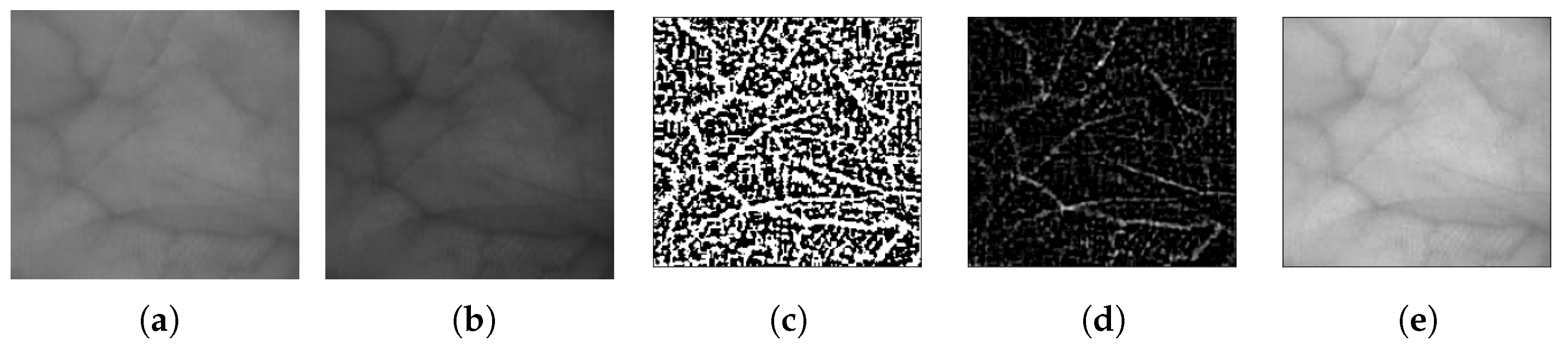

To generate a soft-focus representation m(x, y) of an image, LRE first applies an LPF l(x, y) to the original image i(x, y), as illustrated in Figure 5 and represented in (12):

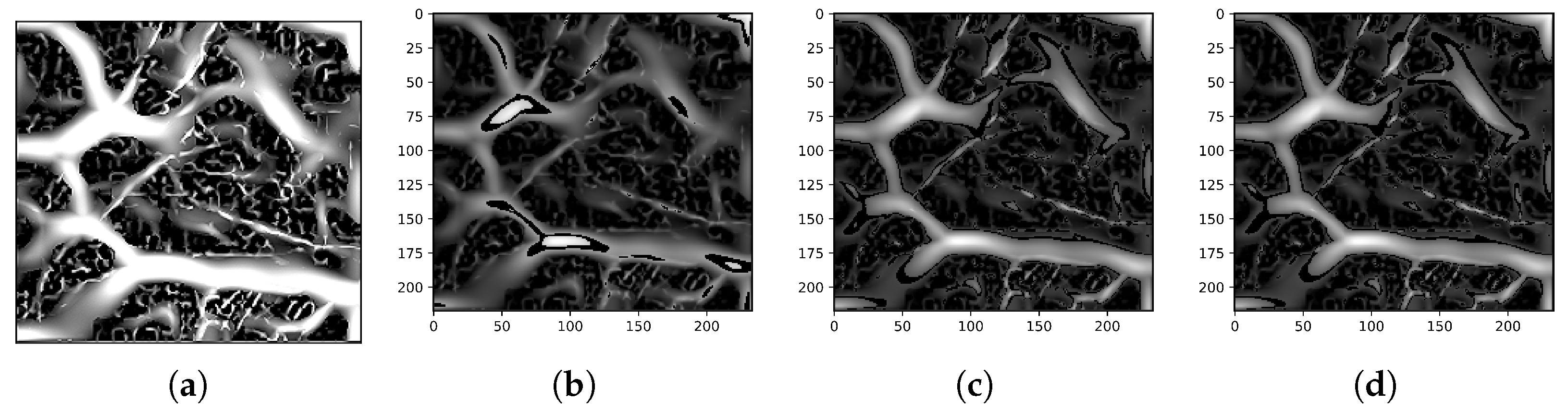

In this study, we use a Gaussian filter with a 5 × 5 kernel as the LPF, followed by an HPF h(x, y) that detects the ridges in the image, as represented in (13):

Only the edges of the predominant ridges remain in M′(x, y), since the relatively insignificant ridge patterns have been “filtered” out.

Further, the Laplacian filter is used as the HPF, and M′(x, y) at this stage displays the boundaries of the primary ridge structure (see Figure 6). We binarize M′ using a threshold value and apply morphological operators to eliminate undesirable noise zones. The resulting structure, labeled “mask”, identifies the location of the strong ridge pattern. We “overlay” M′(x, y) on I(x, y) to enhance the ridge region as in (14):

where I′(x, y) is the augmented image and “c” is the “intensity coefficient” used to emphasize and recognize the ridge area. The more intense the ridge pattern, the lower the value of c (i.e., the darker the area will be).

The value of “c” is set experimentally to 0.9. Additional permutations could be incorporated to determine alternative values of “c” that distinguish ridge sections by their strength levels. For instance, gray-level slicing could assign a larger weight “c” to the more robust ridge pattern, and vice versa. We do not perform this additional step because of its computational overhead (computation time is critical for an online application).
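The LRE pipeline described above can be sketched end to end: Gaussian low-pass, Laplacian high-pass, thresholding, morphological clean-up, and overlay with c = 0.9. Equations (13) and (14) are not recoverable here, so several parts are assumptions. We assume σ = 1 for the 5 × 5 Gaussian and the 4-neighbour Laplacian kernel. We assume the threshold is applied to the absolute Laplacian response and that a 3 × 3 opening stands in for the unspecified morphological operators. We also assume that the overlay multiplies the masked ridge pixels by c. All function names are hypothetical.

```python
import numpy as np

LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)


def conv2d(img, k):
    """Same-size 2D convolution with edge padding (odd square kernel)."""
    k = np.flipud(np.fliplr(np.asarray(k, dtype=float)))  # flip for true convolution
    r = k.shape[0] // 2
    p = np.pad(img, r, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(k.shape[0]):
        for dx in range(k.shape[1]):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out


def gaussian_kernel(size=5, sigma=1.0):
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2 * sigma ** 2))
    return g / g.sum()  # normalized so flat regions keep their intensity


def binary_open(mask):
    """3x3 opening (erosion, then dilation) that removes small noise blobs."""
    def shifts(m, fill):
        p = np.pad(m, 1, constant_values=fill)
        h, w = m.shape
        return [p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    eroded = np.logical_and.reduce(shifts(mask, True))
    return np.logical_or.reduce(shifts(eroded, False))


def lre(img, threshold, c=0.9):
    I = np.asarray(img, dtype=float)
    m = conv2d(I, gaussian_kernel(5))       # LPF: soft-focus image
    M1 = conv2d(m, LAPLACIAN)               # HPF: ridge boundaries
    mask = binary_open(np.abs(M1) > threshold)
    out = I.copy()
    out[mask] *= c                          # darken the strong-ridge region
    return out, mask
```

Applying the high-pass filter only after the Gaussian blur is what keeps the method from amplifying pixel-level noise, which is the weakness of the generic sharpening methods compared in Figure 4.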

Figure 4 depicts several examples of contrast-enhancement results on vein images, while Figure 5 depicts the enhanced image.

Vein-pattern feature recognition using various ML approaches is described in Sections 2 and 3. The following shows how the image is processed to extract the characteristics required to recognize the feature/pattern. The first step in this experiment is to denoise the image with a 2D convolution filter. Convolution is a mathematical operation on two functions that produces a third function; in image processing, each pixel is combined with its neighbors through a weighted sum. The kernel defines the convolution's size, weights, and anchor point (usually the center). For denoising, the algorithm convolves this kernel with the image.

In this study, a kernel size of 25 × 25 is used. The resulting 2D convolution-filtered image is obtained from the vein pattern detected as described in Section 3.
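The kernel weights of the 25 × 25 filter are not stated, so this sketch assumes a normalized box (mean) kernel with edge padding. A box kernel can be applied in constant time per pixel with a summed-area table instead of 625 multiply-adds. The name `box_denoise` is hypothetical, and `ksize` must be odd for the output to keep the input's size.

```python
import numpy as np


def box_denoise(img, ksize=25):
    """Mean filter with an odd ksize x ksize kernel via a summed-area table."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    r = ksize // 2
    p = np.pad(img, r, mode="edge")
    s = np.zeros((p.shape[0] + 1, p.shape[1] + 1))
    s[1:, 1:] = p.cumsum(axis=0).cumsum(axis=1)  # s[a, b] = sum of p[:a, :b]
    # Window sum for output (i, j) covers p[i:i+ksize, j:j+ksize].
    total = (s[ksize:ksize + h, ksize:ksize + w] - s[:h, ksize:ksize + w]
             - s[ksize:ksize + h, :w] + s[:h, :w])
    return total / ksize ** 2
```

A single bright pixel of value 625 spreads into a 25 × 25 patch of value 1. This shows how strongly such a large kernel smooths isolated noise, and also fine detail.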

Figure 6 illustrates the 2D convolution-filter denoising. Image segmentation splits an image into multiple regions (or segments) to turn the image's representation into more meaningful data. Prior research has applied UML image-segmentation techniques to various datasets. Here we use K-means, the most basic and widely used iterative UML method.

In this technique, we randomly initialize K centroids in the data (K is determined using the elbow method) and update these centroids iteratively until their positions no longer change. Algorithm 3 lists the steps of the UML clustering experiment. The centroids are chosen so that the sum of squared distances between the data points and their corresponding centroids is minimal. The maximum number of iterations is 500, and the epsilon value is 1. This UML algorithm partitions N observations into K clusters, placing every observation in the cluster with the closest mean. A cluster is a collection of data points grouped by specific similarities. The image obtained (Figure 6a) is segmented with K = 10, 15, and 20 clusters, as shown in Figure 6.

| Algorithm 3: UML K-means pseudoalgo model. |

![Make 06 00056 i001]() |
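Algorithm 3 survives only as an image, so the following is a minimal sketch of K-means segmentation under stated assumptions. We assume that clustering runs on grayscale intensities and that the random initialization samples K distinct intensity values. We read epsilon = 1 as the largest centroid shift that still counts as converged, alongside the 500-iteration cap. The elbow method is represented by the inertia (within-cluster sum of squares) for each candidate K. All names are hypothetical.

```python
import numpy as np


def kmeans_1d(values, k, max_iter=500, eps=1.0, seed=0):
    """K-means on pixel intensities; stops at max_iter or when shifts < eps."""
    x = np.asarray(values, dtype=float).ravel()
    rng = np.random.default_rng(seed)
    c = rng.choice(np.unique(x), size=k, replace=False)  # distinct random starts
    for _ in range(max_iter):
        labels = np.abs(x[:, None] - c[None, :]).argmin(axis=1)
        new_c = np.array([x[labels == j].mean() if np.any(labels == j) else c[j]
                          for j in range(k)])
        shift = np.abs(new_c - c).max()
        c = new_c
        if shift < eps:
            break
    labels = np.abs(x[:, None] - c[None, :]).argmin(axis=1)
    inertia = float(((x - c[labels]) ** 2).sum())
    return c, labels, inertia


def segment(img, k, **kw):
    """Replace every pixel by its cluster centroid (the segmented image)."""
    img = np.asarray(img, dtype=float)
    c, labels, _ = kmeans_1d(img, k, **kw)
    return c[labels].reshape(img.shape)


def elbow_curve(img, ks, **kw):
    """Inertia for each candidate K; the bend ("elbow") of this curve suggests K."""
    return [kmeans_1d(img, k, **kw)[2] for k in ks]
```

For the experiment described above, one would call `segment(img, k)` for k in 10, 15, and 20 on the denoised image, after inspecting `elbow_curve` over a range of K.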