More Capable, Less Benevolent: Trust Perceptions of AI Systems across Societal Contexts

Abstract

1. Introduction

2. Related Work

2.1. Defining Generative/Interactive AI

2.2. Embedding Public Perception into Human-Centered AI Design

2.3. AI Trust

2.3.1. Engendering Trust through System Capabilities

2.3.2. Engendering Trust through Ethical AI

2.3.3. Engendering Trust beyond System Performance

2.3.4. Individual Factors Influencing AI Trust

2.4. AI Trust across Different Contexts

2.4.1. AI in Healthcare

2.4.2. AI in Education

2.4.3. AI in Creative Arts

3. Method

3.1. Design and Participants

3.2. Measurement

3.2.1. AI Trust

3.2.2. Individual Traits

3.3. Data Analysis

4. Results

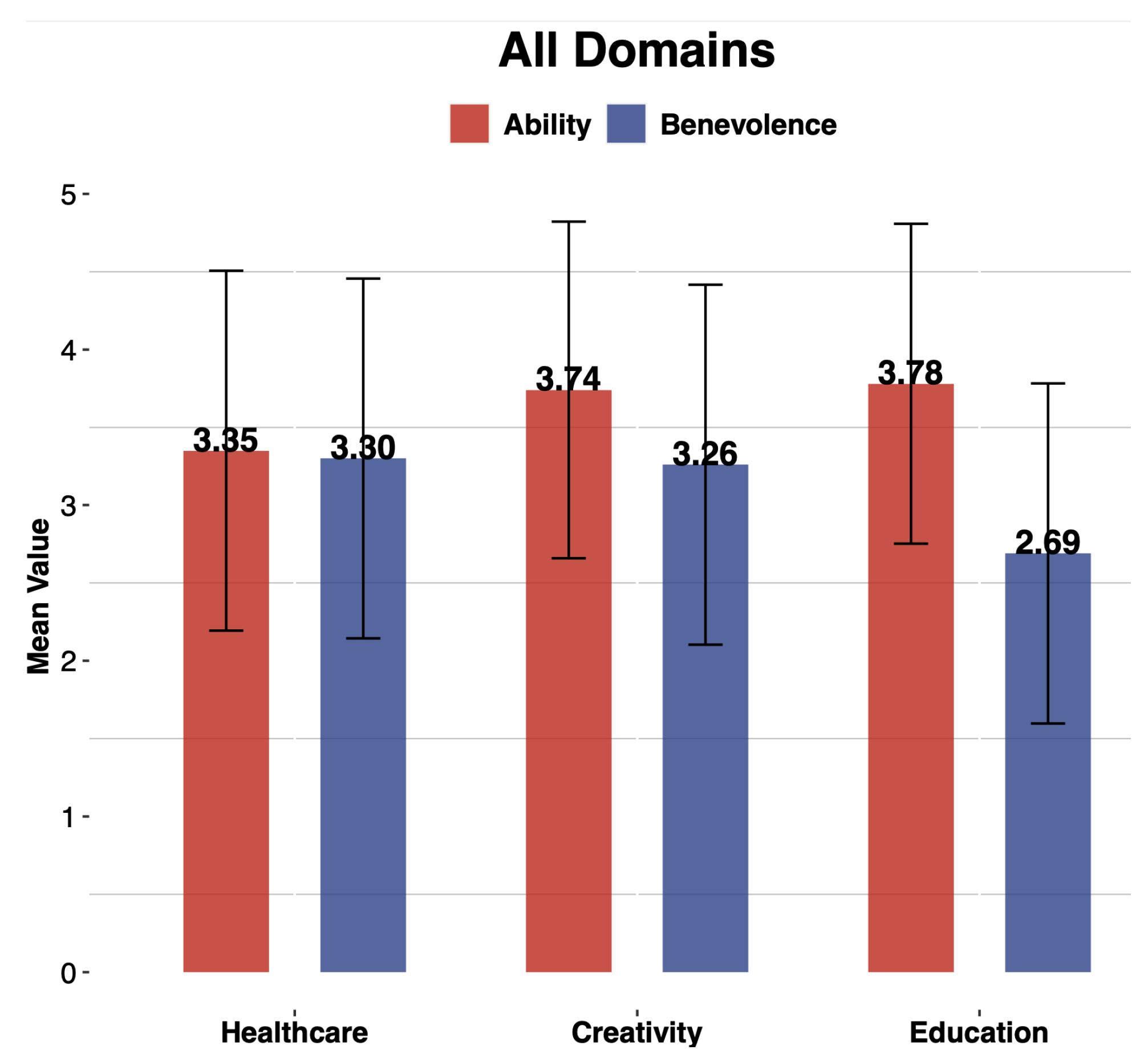

4.1. AI Trust

4.2. Individual Traits

4.2.1. Factors That Influence Trust in AI Ability across Domains

4.2.2. Factors That Influence Trust in AI Benevolence across Domains

5. Discussion

5.1. More Capable, Less Benevolent

5.2. Preference for Humans over AI

5.3. Policy Implications

6. Limitations and Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Scales and Measurements

- AI Knowledge

- A website or application that translates languages (for example, Google Translate).

- An application that identifies or categorizes people and objects in your photos or videos.

- A self-driving car.

- A chatbot that offers advice or customer support.

- A digital personal assistant on your phone or a device in your home that can help schedule meetings, answer questions, and complete tasks (for example, Google Home).

- A social robot that can interact with humans.

- A website or application that recommends movies or television shows based on your prior viewing habits.

- A website that suggests advertisements for you based on your browser history.

- A search engine (for example, Google).

- An industrial robot, such as those used in manufacturing.

- A website or application where users can input text to generate images (e.g., DALL-E).

- A chatbot that can generate essays, poems, and computer code based on users’ input (e.g., ChatGPT).

- A calculator that can do basic math (add, subtract, multiply, divide, etc.).

- A set of rules that determines whether students receive a college scholarship based on their high school grades and SAT scores.

- Yes.

- No.

- I don’t know.

- AI Familiarity

- I have never heard of AI.

- I have heard about AI in the news, from friends or family, etc.

- I closely follow AI-related news.

- I have some formal education or work experience relating to AI.

- I have extensive experience in AI research and/or development.

- AI Experience

- Yes.

- No.

- I don’t know.

- AI Domains—Education

Appendix A.1. Capability

- Entirely capable.

- Mostly capable.

- Somewhat capable.

- Only a little capable.

- Not at all capable.

- I don’t know.

Appendix A.2. Benevolence

- Strongly disagree.

- Disagree.

- Neither disagree nor agree.

- Agree.

- Strongly agree.

- I don’t know.

| Survey item | Short label |
|---|---|
| Drafting lesson plans for teachers | Drafting lesson plans |
| Grading students’ work | Grading |
| Drafting essays for students | Drafting essays |
| Providing answers to students’ questions | Providing answers |
| Giving learning support based on individual students’ needs | Giving learning support |
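To illustrate how the response options in Appendices A.1 and A.2 might be turned into scale scores, the following minimal Python sketch assumes a 1 to 5 coding (higher values meaning more perceived capability or benevolence), treats “I don’t know” as missing, and averages the remaining items. The coding direction, the handling of “I don’t know”, and the names `CAPABILITY_CODES`, `BENEVOLENCE_CODES`, `normalize`, and `scale_score` are assumptions made for illustration; they are not taken from the study.

```python
from typing import Dict, List, Optional

# Hypothetical numeric coding of the response options (assumption, not from the study).
CAPABILITY_CODES: Dict[str, int] = {
    "Entirely capable": 5,
    "Mostly capable": 4,
    "Somewhat capable": 3,
    "Only a little capable": 2,
    "Not at all capable": 1,
}
BENEVOLENCE_CODES: Dict[str, int] = {
    "Strongly agree": 5,
    "Agree": 4,
    "Neither disagree nor agree": 3,
    "Disagree": 2,
    "Strongly disagree": 1,
}
MISSING = "I don't know"  # assumed to be excluded from the score


def normalize(label: str) -> str:
    """Strip whitespace and the trailing period, and straighten curly apostrophes."""
    return label.strip().rstrip(".").replace("\u2019", "'")


def scale_score(responses: List[str], codes: Dict[str, int]) -> Optional[float]:
    """Average the coded responses, skipping "I don't know"; None if nothing is left."""
    values = []
    for raw in responses:
        label = normalize(raw)
        if label == MISSING:
            continue
        values.append(codes[label])  # a KeyError flags an unexpected label
    return sum(values) / len(values) if values else None


# One hypothetical respondent answering the five education capability items.
example = [
    "Mostly capable.",
    "Entirely capable.",
    "I don\u2019t know.",
    "Somewhat capable.",
    "Mostly capable.",
]
capability_score = scale_score(example, CAPABILITY_CODES)  # (4 + 5 + 3 + 4) / 4 = 4.0
```

Averaging over the answered items keeps respondents who selected “I don’t know” on some items in the sample; dropping such respondents entirely would be an equally defensible choice.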

Appendix B. Healthcare

Appendix B.1. Capability

- Entirely capable.

- Mostly capable.

- Somewhat capable.

- Only a little capable.

- Not at all capable.

- I don’t know.

Appendix B.2. Benevolence

- Strongly disagree.

- Disagree.

- Neither disagree nor agree.

- Agree.

- Strongly agree.

| Survey item | Short label |
|---|---|
| Providing answers to patients’ medical questions | Answering patients’ medical questions |
| Helping healthcare professionals diagnose diseases | Helping diagnose diseases |
| Assisting healthcare professionals in medical research | Assisting medical research |
| Conversing with patients in therapy settings | Conversing in therapy |
| Using patient data to determine health risks | Determining health risks |

Appendix C. Creativity

Appendix C.1. Capability

- Entirely capable.

- Mostly capable.

- Somewhat capable.

- Only a little capable.

- Not at all capable.

- I don’t know.

Appendix C.2. Benevolence

- Strongly disagree.

- Disagree.

- Neither disagree nor agree.

- Agree.

- Strongly agree.

- I don’t know.

| Survey item | Short label |
|---|---|
| Content creation (e.g., generating blog posts) | Content creation |
| Creative writing (e.g., generating scripts or novels) | Creative writing |
| Music composition (e.g., generating lyrics or melodies) | Music composition |
| Creating art (e.g., generating digital paintings) | Creating art |
| Creating videos (e.g., generating video content) | Creating videos |

Appendix D. Principal Components Analysis

| Scale (domain) | Kaiser–Meyer–Olkin Measure of Sampling Adequacy | Bartlett’s Test of Sphericity (df) | Eigenvalue | Variance Explained | Factor Loadings (Range) |
|---|---|---|---|---|---|
| Capability | | | | | |
| Creativity | 0.89 | 1127.87(10) *** | 3.66 | 73.26% | 0.84–0.86 |
| Education | 0.87 | 1099.49(10) *** | 3.55 | 71.03% | 0.82–0.86 |
| Healthcare | 0.89 | 1236.79(10) *** | 3.69 | 73.75% | 0.81–0.89 |
| Benevolence | | | | | |
| Creativity | 0.9 | 1619.71(10) *** | 4.07 | 81.39% | 0.88–0.93 |
| Education | 0.87 | 909.85(10) *** | 3.38 | 67.57% | 0.80–0.84 |
| Healthcare | 0.89 | 1338.87(10) *** | 3.76 | 75.20% | 0.81–0.91 |
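The quantities reported in the table above can be computed from an item correlation matrix. The sketch below is a minimal illustration using NumPy on synthetic data, not the authors' analysis code: the function name `pca_summary`, the simulated responses, and the sample size of 500 are all assumptions. It returns the Kaiser–Meyer–Olkin measure, the chi-square statistic and degrees of freedom of Bartlett's test of sphericity, and the eigenvalue, share of variance, and loadings of the first principal component.

```python
import numpy as np


def pca_summary(data: np.ndarray) -> dict:
    """One-component PCA diagnostics for an (n_respondents, n_items) matrix."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)

    # Eigen-decomposition of the correlation matrix (eigh sorts ascending).
    eigvals, eigvecs = np.linalg.eigh(corr)
    top_val = eigvals[-1]
    top_vec = eigvecs[:, -1]
    if top_vec.sum() < 0:  # eigenvector sign is arbitrary; orient it positively
        top_vec = -top_vec
    loadings = top_vec * np.sqrt(top_val)

    # Bartlett's test of sphericity: chi-square statistic and degrees of freedom.
    chi2 = -(n - 1 - (2 * p + 5) / 6) * np.log(np.linalg.det(corr))
    df = p * (p - 1) // 2

    # KMO: squared correlations relative to squared partial correlations.
    inv = np.linalg.inv(corr)
    partial = -inv / np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    off_diag = ~np.eye(p, dtype=bool)
    r2 = (corr[off_diag] ** 2).sum()
    q2 = (partial[off_diag] ** 2).sum()

    return {
        "kmo": r2 / (r2 + q2),
        "bartlett_chi2": chi2,
        "bartlett_df": df,
        "eigenvalue": top_val,
        "variance_explained": top_val / p,  # trace of a correlation matrix is p
        "loadings": loadings,
    }


# Synthetic example: five items driven by one latent factor (assumed data).
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 1))
items = 0.8 * latent + 0.6 * rng.normal(size=(500, 5))
summary = pca_summary(items)
```

Two figures in the table follow directly from this arithmetic. With five items per scale, Bartlett's test has 5 × 4 / 2 = 10 degrees of freedom, and the variance explained is the eigenvalue divided by five: 3.66 / 5 ≈ 0.732, which agrees with the reported 73.26% up to rounding of the eigenvalue.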

References

- Grace, K.; Salvatier, J.; Dafoe, A.; Zhang, B.; Evans, O. When Will AI Exceed Human Performance? Evidence from AI Experts. J. Artif. Intell. Res. 2018, 62, 729–754. [Google Scholar] [CrossRef]

- Hsu, T.; Myers, S.L. Can We No Longer Believe Anything We See? The New York Times. 8 April 2023. Available online: https://www.nytimes.com/2023/04/08/business/media/ai-generated-images.html (accessed on 26 August 2023).

- Lonas, L. Professor Attempts to Fail Students After Falsely Accusing Them of Using Chatgpt to Cheat. The Hill. 18 May 2023. Available online: https://thehill.com/homenews/education/4010647-professor-attempts-to-fail-students-after-falsely-accusing-them-of-using-chatgpt-to-cheat/ (accessed on 26 August 2023).

- Shaffi, S. “It’s the Opposite of Art”: Why Illustrators Are Furious About AI. The Guardian. 8 April 2023. Available online: https://www.theguardian.com/artanddesign/2023/jan/23/its-the-opposite-of-art-why-illustrators-are-furious-about-ai (accessed on 26 August 2023).

- Tyson, A.; Kikuchi, E. Growing Public Concern About the Role of Artificial Intelligence in Daily Life. Pew Research Center. 2023. Available online: https://policycommons.net/artifacts/4809713/growing-public-concern-about-the-role-of-artificial-intelligence-in-daily-life/5646039/ (accessed on 11 January 2024).

- Faverio, M.; Tyson, A. What the Data Says About Americans’ Views of Artificial Intelligence. 2023. Available online: https://www.pewresearch.org/short-reads/2023/11/21/what-the-data-says-about-americans-views-of-artificial-intelligence/ (accessed on 11 January 2024).

- The White House. Blueprint for an AI Bill of Rights. Office of Science and Technology Policy. 2022. Available online: https://www.whitehouse.gov/ostp/ai-bill-of-rights/ (accessed on 11 January 2024).

- The Act | EU Artificial Intelligence Act. European Commission. 2021. Available online: https://artificialintelligenceact.eu/the-act/ (accessed on 11 January 2024).

- Rebedea, T.; Dinu, R.; Sreedhar, M.; Parisien, C.; Cohen, J. Nemo guardrails: A toolkit for controllable and safe llm applications with programmable rails. arXiv 2023, arXiv:2310.10501. [Google Scholar] [CrossRef]

- Christian, B. The Alignment Problem: Machine Learning and Human Values; WW Norton & Company: New York, NY, USA, 2020. [Google Scholar]

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258. [Google Scholar] [CrossRef]

- Xu, W. Toward human-centered AI. Interactions 2019, 26, 42–46. [Google Scholar] [CrossRef]

- Ozmen Garibay, O.; Winslow, B.; Andolina, S.; Antona, M.; Bodenschatz, A.; Coursaris, C.; Xu, W. Six Human-Centered Artificial Intelligence Grand Challenges. Int. J. Hum. Comput. Interact. 2023, 39, 391–437. [Google Scholar] [CrossRef]

- Choung, H.; Seberger, J.S.; David, P. When AI is Perceived to Be Fairer than a Human: Understanding Perceptions of Algorithmic Decisions in a Job Application Context. SSRN Electron. J. 2023. [Google Scholar] [CrossRef]

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80. [Google Scholar] [CrossRef]

- Cheng, X.; Zhang, X.; Cohen, J.; Mou, J. Human vs. AI: Understanding the impact of anthropomorphism on consumer response to chatbots from the perspective of trust and relationship norms. Inf. Process. Manag. 2022, 59, 102940. [Google Scholar] [CrossRef]

- Angerschmid, A.; Zhou, J.; Theuermann, K.; Chen, F.; Holzinger, A. Fairness and explanation in AI-informed decision making. Mach. Learn. Knowl. Extr. 2022, 4, 556–579. [Google Scholar] [CrossRef]

- Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum. Comput. Stud. 2021, 146, 102551. [Google Scholar] [CrossRef]

- Pickering, B. Trust, but verify: Informed consent, AI technologies, and public health emergencies. Future Internet 2021, 13, 132. [Google Scholar] [CrossRef]

- Bedué, P.; Fritzsche, A. Can we trust AI? An empirical investigation of trust requirements and guide to successful AI adoption. J. Enterp. Inf. Manag. 2022, 35, 530–549. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An Integrative Model of Organizational Trust. Acad. Manag. Rev. 1995, 20, 709–734. [Google Scholar] [CrossRef]

- Choung, H.; David, P.; Seberger, J.S. A multilevel framework for AI governance. arXiv 2023, arXiv:2307.03198. [Google Scholar] [CrossRef]

- Rheu, M.; Shin, J.Y.; Peng, W.; Huh-Yoo, J. Systematic review: Trust-building factors and implications for conversational agent design. Int. J. Hum. Comput. Interact. 2021, 37, 81–96. [Google Scholar] [CrossRef]

- Agarwal, N.; Moehring, A.; Rajpurkar, P.; Salz, T. Combining Human Expertise with Artificial Intelligence: Experimental Evidence from Radiology; Technical Report; National Bureau of Economic Research: Cambridge, MA, USA, 2023. [Google Scholar] [CrossRef]

- de Haan, Y.; van den Berg, E.; Goutier, N.; Kruikemeier, S.; Lecheler, S. Invisible friend or foe? How journalists use and perceive algorithmic-driven tools in their research process. Digit. J. 2022, 10, 1775–1793. [Google Scholar] [CrossRef]

- Balaram, B.; Greenham, T.; Leonard, J. Artificial Intelligence: Real Public Engagement. 2018. Available online: https://www.thersa.org/reports/artificial-intelligence-real-public-engagement (accessed on 26 August 2023).

- Copeland, B.J. Artificial Intelligence. Encyclopaedia Britannica. 2023. Available online: https://www.britannica.com/technology/artificial-intelligence/Reasoning (accessed on 26 August 2023).

- West, D.M. What Is Artificial Intelligence; Brookings Institution: Washington, DC, USA, 2018; Available online: https://www.brookings.edu/articles/what-is-artificial-intelligence/ (accessed on 12 January 2024).

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson: London, UK, 2010. [Google Scholar]

- Goodfellow, I.; Bengio, Y.B.; Courville, A. Adaptive Computation and Machine Learning Series (Deep Learning); MIT Press: Cambridge, MA, USA, 2016. [Google Scholar] [CrossRef]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Sastry, G.; Askell, A.; Agarwal, S. Language models are few-shot learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 1877–1901. [Google Scholar] [CrossRef]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sutskever, I. Learning transferable visual models from natural language supervision. In Proceedings of the 2021 International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Brock, A.; Donahue, J.; Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096. [Google Scholar] [CrossRef]

- Engel, J.; Agrawal, K.K.; Chen, S.; Gulrajani, I.; Donahue, C.; Roberts, A. Gansynth: Adversarial neural audio synthesis. arXiv 2019, arXiv:1902.08710. [Google Scholar] [CrossRef]

- Shneiderman, B. Human-Centered AI; Oxford University Press: Oxford, UK, 2022. [Google Scholar]

- Heaven, W.D. DeepMind’S Cofounder: Generative AI Is Just a Phase. What’S Next Is Interactive AI. MIT Technology Review. 2023. Available online: https://www.technologyreview.com/2023/09/15/1079624/deepmind-inflection-generative-ai-whats-next-mustafa-suleyman/ (accessed on 26 August 2023).

- Terry, M.; Kulkarni, C.; Wattenberg, M.; Dixon, L.; Morris, M.R. AI Alignment in the Design of Interactive AI: Specification Alignment, Process Alignment, and Evaluation Support. arXiv 2023, arXiv:2311.00710. [Google Scholar] [CrossRef]

- Generate Text, Images, Code, and More with Google Cloud AI. 2023. Available online: https://cloud.google.com/use-cases/generative-ai (accessed on 11 September 2023).

- Kelly, S.M. So Long, Robotic Alexa. Amazon’s Voice Assistant Gets More Human-Like with Generative AI. CNN Business. 2023. Available online: https://edition.cnn.com/2023/09/20/tech/amazon-alexa-human-like-generative-ai/index.html (accessed on 11 September 2023).

- Syu, J.H.; Lin, J.C.W.; Srivastava, G.; Yu, K. A Comprehensive Survey on Artificial Intelligence Empowered Edge Computing on Consumer Electronics. Proc. IEEE Trans. Consum. Electron. 2023. [Google Scholar] [CrossRef]

- Chouldechova, A. Fair Prediction with Disparate Impact: A Study of Bias in Recidivism Prediction Instruments. Big Data 2017, 5, 153–163. [Google Scholar] [CrossRef]

- Raghavan, M.; Barocas, S.; Kleinberg, J.; Levy, K. Mitigating bias in algorithmic hiring. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; ACM: New York, NY, USA, 2020. [Google Scholar] [CrossRef]

- Balaji, T.; Annavarapu, C.S.R.; Bablani, A. Machine learning algorithms for social media analysis: A survey. Comput. Sci. Rev. 2021, 40, 100395. [Google Scholar] [CrossRef]

- Chen, C.; Shu, K. Can llm-generated misinformation be detected? arXiv 2023, arXiv:2309.13788. [Google Scholar] [CrossRef]

- Sowa, K.; Przegalinska, A.; Ciechanowski, L. Cobots in knowledge work: Human–AI collaboration in managerial professions. J. Bus. Res. 2021, 125, 135–142. [Google Scholar] [CrossRef]

- Tiron-Tudor, A.; Deliu, D. Reflections on the human-algorithm complex duality perspectives in the auditing process. Qual. Res. Account. Manag. 2022, 19, 255–285. [Google Scholar]

- Xu, W.; Dainoff, M.J.; Ge, L.; Gao, Z. Transitioning to human interaction with AI systems: New challenges and opportunities for HCI professionals to enable human-centered AI. Int. J. Hum. Comput. Interact. 2023, 39, 494–518. [Google Scholar] [CrossRef]

- Yang, R.; Wibowo, S. User trust in artificial intelligence: A comprehensive conceptual framework. Electron. Mark. 2022, 32, 2053–2077. [Google Scholar] [CrossRef]

- Lv, X.; Yang, Y.; Qin, D.; Cao, X.; Xu, H. Artificial intelligence service recovery: The role of empathic response in hospitality customers’ continuous usage intention. Comput. Hum. Behav. 2022, 126, 106993. [Google Scholar] [CrossRef]

- Lee, J.C.; Chen, X. Exploring users’ adoption intentions in the evolution of artificial intelligence mobile banking applications: The intelligent and anthropomorphic perspectives. Int. J. Bank Mark. 2022, 40, 631–658. [Google Scholar] [CrossRef]

- Lu, L.; McDonald, C.; Kelleher, T.; Lee, S.; Chung, Y.J.; Mueller, S.; Yue, C.A. Measuring consumer-perceived humanness of online organizational agents. Comput. Hum. Behav. 2022, 128, 107092. [Google Scholar] [CrossRef]

- Pelau, C.; Dabija, D.C.; Ene, I. What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Comput. Hum. Behav. 2021, 122, 106855. [Google Scholar] [CrossRef]

- Shi, S.; Gong, Y.; Gursoy, D. Antecedents of Trust and Adoption Intention Toward Artificially Intelligent Recommendation Systems in Travel Planning: A Heuristic–Systematic Model. J. Travel Res. 2021, 60, 1714–1734. [Google Scholar] [CrossRef]

- Moussawi, S.; Koufaris, M.; Benbunan-Fich, R. How perceptions of intelligence and anthropomorphism affect adoption of personal intelligent agents. Electron. Mark. 2021, 31, 343–364. [Google Scholar] [CrossRef]

- Al Shamsi, J.H.; Al-Emran, M.; Shaalan, K. Understanding key drivers affecting students’ use of artificial intelligence-based voice assistants. Educ. Inf. Technol. 2022, 27, 8071–8091. [Google Scholar] [CrossRef]

- He, H.; Gray, J.; Cangelosi, A.; Meng, Q.; McGinnity, T.M.; Mehnen, J. The Challenges and Opportunities of Human-Centered AI for Trustworthy Robots and Autonomous Systems. IEEE Trans. Cogn. Dev. Syst. 2022, 14, 1398–1412. [Google Scholar] [CrossRef]

- Shin, D. User Perceptions of Algorithmic Decisions in the Personalized AI System: Perceptual Evaluation of Fairness, Accountability, Transparency, and Explainability. J. Broadcast. Electron. Media 2020, 64, 541–565. [Google Scholar] [CrossRef]

- Schoeffer, J.; De-Arteaga, M.; Kuehl, N. On explanations, fairness, and appropriate reliance in human-AI decision-making. arXiv 2022, arXiv:2209.11812. [Google Scholar] [CrossRef]

- Ghassemi, M.; Oakden-Rayner, L.; Beam, A.L. The false hope of current approaches to explainable artificial intelligence in healthcare. Lancet Digit. Health 2021, 3, e745–e750. [Google Scholar] [CrossRef]

- Muir, B.M. Trust in automation: Part I. Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics 1994, 37, 1905–1922. [Google Scholar] [CrossRef]

- Hoff, K.A.; Bashir, M. Trust in Automation. Hum. Factors J. Hum. Factors Ergon. Soc. 2014, 57, 407–434. [Google Scholar] [CrossRef]

- Thurman, N.; Moeller, J.; Helberger, N.; Trilling, D. My Friends, Editors, Algorithms, and I. Digit. J. 2018, 7, 447–469. [Google Scholar] [CrossRef]

- Smith, A. Public Attitudes toward Computer Algorithms. Policy Commons. 2018. Available online: https://policycommons.net/artifacts/617047/public-attitudes-toward-computer-algorithms/1597791/ (accessed on 24 August 2023).

- Araujo, T.; Helberger, N.; Kruikemeier, S.; de Vreese, C.H. In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI Soc. 2020, 35, 611–623. [Google Scholar] [CrossRef]

- Choung, H.; David, P.; Ross, A. Trust and ethics in AI. AI Soc. 2022, 38, 733–745. [Google Scholar] [CrossRef]

- Mays, K.K.; Lei, Y.; Giovanetti, R.; Katz, J.E. AI as a boss? A national US survey of predispositions governing comfort with expanded AI roles in society. AI Soc. 2021, 37, 1587–1600. [Google Scholar] [CrossRef]

- Novozhilova, E.; Mays, K.; Katz, J. Looking towards an automated future: U.S. attitudes towards future artificial intelligence instantiations and their effect. Humanit. Soc. Sci. Commun. 2024. [Google Scholar] [CrossRef]

- Shamout, M.; Ben-Abdallah, R.; Alshurideh, M.; Alzoubi, H.; Al Kurdi, B.; Hamadneh, S. A conceptual model for the adoption of autonomous robots in supply chain and logistics industry. Uncertain Supply Chain. Manag. 2022, 10, 577–592. [Google Scholar] [CrossRef]

- Oliveira, T.; Martins, R.; Sarker, S.; Thomas, M.; Popovič, A. Understanding SaaS adoption: The moderating impact of the environment context. Int. J. Inf. Manag. 2019, 49, 1–12. [Google Scholar] [CrossRef]

- Zhang, B.; Dafoe, A. U.S. Public Opinion on the Governance of Artificial Intelligence. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, Montreal, QC, Canada, 7–8 February 2020; ACM: New York, NY, USA, 2020. [Google Scholar] [CrossRef]

- Nazaretsky, T.; Ariely, M.; Cukurova, M.; Alexandron, G. Teachers’ trust in AI-powered educational technology and a professional development program to improve it. Br. J. Educ. Technol. 2022, 53, 914–931. [Google Scholar] [CrossRef]

- Nazaretsky, T.; Cukurova, M.; Alexandron, G. An Instrument for Measuring Teachers’ Trust in AI-Based Educational Technology. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, Online, 21–25 March 2022. [Google Scholar] [CrossRef]

- Law, E.L.C.; van As, N.; Følstad, A. Effects of Prior Experience, Gender, and Age on Trust in a Banking Chatbot With(Out) Breakdown and Repair. In Proceedings of the Human-Computer Interaction—INTERACT 2023, Copenhagen, Denmark, 23–28 July 2023; pp. 277–296. [Google Scholar] [CrossRef]

- Stanton, B.; Jensen, T. Trust and Artificial Intelligence; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2021. [Google Scholar] [CrossRef]

- Szalavitz, M.; Rigg, K.K.; Wakeman, S.E. Drug dependence is not addiction—And it matters. Ann. Med. 2021, 53, 1989–1992. [Google Scholar] [CrossRef]

- Okaniwa, F.; Yoshida, H. Evaluation of Dietary Management Using Artificial Intelligence and Human Interventions: Nonrandomized Controlled Trial. JMIR Form. Res. 2021, 6, e30630. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Y.; Tang, N.; Omar, R.; Hu, Z.; Duong, T.; Wang, J.; Haick, H. Smart Materials Enabled with Artificial Intelligence for Healthcare Wearables. Adv. Funct. Mater. 2021, 31, 2105482. [Google Scholar] [CrossRef]

- Huang, P.; Li, S.; Li, P.; Jia, B. The Learning Curve of Da Vinci Robot-Assisted Hemicolectomy for Colon Cancer: A Retrospective Study of 76 Cases at a Single Center. Front. Surg. 2022, 9, 897103. [Google Scholar] [CrossRef]

- Tustumi, F.; Andreollo, N.A.; de Aguilar-Nascimento, J.E. Future of the Language Models in Healthcare: The Role of ChatGPT. Arquivos Brasileiros de Cirurgia Digestiva 2023, 36, e1727. [Google Scholar] [CrossRef]

- Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit. Health 2023, 2, e0000198. [Google Scholar] [CrossRef]

- West, D.M.; Allen, J.R. Turning Point: Policymaking in the Era of Artificial Intelligence; Brookings Institution Press: Washington, DC, USA, 2020. [Google Scholar]

- Drukker, K.; Chen, W.; Gichoya, J.; Gruszauskas, N.; Kalpathy-Cramer, J.; Koyejo, S.; Myers, K.; Sá, R.C.; Sahiner, B.; Whitney, H.; et al. Toward fairness in artificial intelligence for medical image analysis: Identification and mitigation of potential biases in the roadmap from data collection to model deployment. J. Med. Imaging 2023, 10, 061104. [Google Scholar] [CrossRef]

- Knight, D.; Aakre, C.A.; Anstine, C.V.; Munipalli, B.; Biazar, P.; Mitri, G.; Halamka, J.D. Artificial Intelligence for Patient Scheduling in the Real-World Health Care Setting: A Metanarrative Review. Health Policy Technol. 2023, 12, 100824. [Google Scholar] [CrossRef]

- Samorani, M.; Harris, S.L.; Blount, L.G.; Lu, H.; Santoro, M.A. Overbooked and overlooked: Machine learning and racial bias in medical appointment scheduling. Manuf. Serv. Oper. Manag. 2022, 24, 2825–2842. [Google Scholar] [CrossRef]

- Li, R.C.; Asch, S.M.; Shah, N.H. Developing a delivery science for artificial intelligence in healthcare. NPJ Digit. Med. 2020, 3, 107. [Google Scholar] [CrossRef] [PubMed]

- Yu, K.H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731. [Google Scholar] [CrossRef] [PubMed]

- Baldauf, M.; Fröehlich, P.; Endl, R. Trust Me, I’m a Doctor—User Perceptions of AI-Driven Apps for Mobile Health Diagnosis. In Proceedings of the 19th International Conference on Mobile and Ubiquitous Multimedia, Essen, Germany, 22–25 November 2020; ACM: New York, NY, USA, 2020. [Google Scholar] [CrossRef]

- Ghafur, S.; Van Dael, J.; Leis, M.; Darzi, A.; Sheikh, A. Public perceptions on data sharing: Key insights from the UK and the USA. Lancet Digit. Health 2020, 2, e444–e446. [Google Scholar] [CrossRef] [PubMed]

- Rienties, B. Understanding academics’ resistance towards (online) student evaluation. Assess. Eval. High. Educ. 2014, 39, 987–1001. [Google Scholar] [CrossRef][Green Version]

- Johnson, A. Chatgpt in Schools: Here’s Where It’s Banned-and How It Could Potentially Help Students. Forbes. 2023. Available online: https://www.forbes.com/sites/ariannajohnson/2023/01/18/chatgpt-in-schools-heres-where-its-banned-and-how-it-could-potentially-help-students/ (accessed on 31 January 2024).

- Jones, B.; Perez, J.; Touré, M. More Schools Want Your Kids to Use CHATGPT Really. Politico. 2023. Available online: https://www.politico.com/news/2023/08/23/chatgpt-ai-chatbots-in-classrooms-00111662 (accessed on 31 January 2024).

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Kasneci, G. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. arXiv 2023. [Google Scholar] [CrossRef]

- Hwang, G.J.; Chen, N.S. Editorial Position Paper. Educ. Technol. Soc. 2023, 26. Available online: https://www.jstor.org/stable/48720991 (accessed on 31 January 2024).

- Williams, B.A.; Brooks, C.F.; Shmargad, Y. How algorithms discriminate based on data they lack: Challenges, solutions, and policy implications. J. Inf. Policy 2018, 8, 78–115. [Google Scholar] [CrossRef]

- Hao, K. The UK Exam Debacle Reminds Us That Algorithms Can’t Fix Broken Systems. MIT Technology Review. 2020. Available online: https://www.technologyreview.com/2020/08/20/1007502/uk-exam-algorithm-cant-fix-broken-system/ (accessed on 31 January 2024).

- Engler, A. Enrollment Algorithms Are Contributing to the Crises of Higher Education. Brookings Institute. 2021. Available online: https://www.brookings.edu/articles/enrollment-algorithms-are-contributing-to-the-crises-of-higher-education/ (accessed on 31 January 2024).

- Lo, C.K. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ. Sci. 2023, 13, 410. [Google Scholar] [CrossRef]

- Conijn, R.; Kahr, P.; Snijders, C. The Effects of Explanations in Automated Essay Scoring Systems on Student Trust and Motivation. J. Learn. Anal. 2023, 10, 37–53. [Google Scholar] [CrossRef]

- Qin, F.; Li, K.; Yan, J. Understanding user trust in artificial intelligence-based educational systems: Evidence from China. Br. J. Educ. Technol. 2020, 51, 1693–1710. [Google Scholar] [CrossRef]

- Vinichenko, M.V.; Melnichuk, A.V.; Karácsony, P. Technologies of improving the university efficiency by using artificial intelligence: Motivational aspect. Entrep. Sustain. Issues 2020, 7, 2696. [Google Scholar] [CrossRef] [PubMed]

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with clip latents. arXiv 2022, arXiv:2204.06125. Available online: https://3dvar.com/Ramesh2022Hierarchical.pdf (accessed on 31 January 2024).

- Roose, K. GPT-4 Is Exciting And Scary. The New York Times. 2023. Available online: https://www.nytimes.com/2023/03/15/technology/gpt-4-artificial-intelligence-openai.html (accessed on 31 January 2024).

- Plut, C.; Pasquier, P. Generative music in video games: State of the art, challenges, and prospects. Entertain. Comput. 2020, 33, 100337. [Google Scholar] [CrossRef]

- Zhou, V.; Dosunmu, D.; Maina, J.; Kumar, R. AI Is Already Taking Video Game Illustrators’ Jobs in China. Rest of World. 2023. Available online: https://restofworld.org/2023/ai-image-china-video-game-layoffs/ (accessed on 31 January 2024).

- Coyle, J.; Press, T.A. Chatgpt Is the “Terrifying” Subtext of the Writers’ Strike That Is Reshaping Hollywood. The Fortune. 2023. Available online: https://fortune.com/2023/05/05/hollywood-writers-strike-wga-chatgpt-ai-terrifying-replace-writers/ (accessed on 31 January 2024).

- Bianchi, F.; Kalluri, P.; Durmus, E.; Ladhak, F.; Cheng, M.; Nozza, D.; Hashimoto, T.; Jurafsky, D.; Zou, J.; Caliskan, A. Easily Accessible Text-to-Image Generation Amplifies Demographic Stereotypes at Large Scale. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; ACM: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Srinivasan, R.; Uchino, K. Biases in Generative Art. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual, 3–10 March 2021; ACM: New York, NY, USA, 2021. [Google Scholar] [CrossRef]

- Romero, A. How to Get the Most Out of Chatgpt. The Algorithmic Bridge. 2022. Available online: https://thealgorithmicbridge.substack.com/p/how-to-get-the-most-out-of-chatgpt (accessed on 31 January 2024).

- Lyu, Y.; Wang, X.; Lin, R.; Wu, J. Communication in Human–AI Co-Creation: Perceptual Analysis of Paintings Generated by Text-to-Image System. Appl. Sci. 2022, 12, 11312. [Google Scholar] [CrossRef]

- Mazzone, M.; Elgammal, A. Art, Creativity, and the Potential of Artificial Intelligence. Arts 2019, 8, 26. [Google Scholar] [CrossRef]

- Rasrichai, K.; Chantarutai, T.; Kerdvibulvech, C. Recent Roles of Artificial Intelligence Artists in Art Circulation. Digit. Soc. 2023, 2, 15. [Google Scholar] [CrossRef]

- Bosonogov, S.D.; Suvorova, A.V. Perception of AI-generated art: Text analysis of online discussions. Sci. Semin. Pom 2023, 529, 6–23. Available online: https://www.mathnet.ru/eng/znsl7416 (accessed on 31 January 2024).

- Alves da Veiga, P. Generative Ominous Dataset: Testing the Current Public Perception of Generative Art. In Proceedings of the 20th International Conference on Culture and Computer Science: Code and Materiality, Lisbon, Portugal, 28–29 September 2023; pp. 1–10. [Google Scholar] [CrossRef]

- Katz, J.E.; Halpern, D. Attitudes towards robots suitability for various jobs as affected robot appearance. Behav. Inf. Technol. 2013, 33, 941–953. [Google Scholar] [CrossRef]

- Kates, S.; Ladd, J.; Tucker, J.A. How Americans’ Confidence in Technology Firms Has Dropped: Evidence From the Second Wave of the American Institutional Confidence Poll. Brookings Institute. 2023. Available online: https://www.brookings.edu/articles/how-americans-confidence-in-technology-firms-has-dropped-evidence-from-the-second-wave-of-the-american-institutional-confidence-poll/ (accessed on 31 January 2024).

- Yin, J.; Chen, Z.; Zhou, K.; Yu, C. A deep learning based chatbot for campus psychological therapy. arXiv 2019, arXiv:1910.06707. [Google Scholar] [CrossRef]

- Bidarian, N. Meet Khan Academy’s Chatbot Tutor. CNN. 2023. Available online: https://www.cnn.com/2023/08/21/tech/khan-academy-ai-tutor/index.html (accessed on 31 January 2024).

- Mays, K.K.; Katz, J.E.; Lei, Y.S. Opening education through emerging technology: What are the prospects? Public perceptions of Artificial Intelligence and Virtual Reality in the classroom. Opus Educ. 2021, 8, 28. [Google Scholar] [CrossRef]

- Molina, M.D.; Sundar, S.S. Does distrust in humans predict greater trust in AI? Role of individual differences in user responses to content moderation. New Media Soc. 2022. [Google Scholar] [CrossRef]

- Raj, M.; Berg, J.; Seamans, R. Art-ificial Intelligence: The Effect of AI Disclosure on Evaluations of Creative Content. arXiv 2023, arXiv:2303.06217. [Google Scholar] [CrossRef]

- Heikkilä, M. AI Literacy Might Be CHATGPT’S Biggest Lesson for Schools. MIT Technology Review. 2023. Available online: https://www.technologyreview.com/2023/04/12/1071397/ai-literacy-might-be-chatgpts-biggest-lesson-for-schools/ (accessed on 31 January 2024).

- Elements of AI. A Free Online Introduction to Artificial Intelligence for Non-Experts. 2023. Available online: https://www.elementsofai.com/ (accessed on 31 January 2024).

- U.S. Department of Education. Artificial Intelligence and the Future of Teaching and Learning; Office of Educational Technology U.S. Department of Education: Washington, DC, USA, 2023. Available online: https://www2.ed.gov/documents/ai-report/ai-report.pdf (accessed on 31 January 2024).

- U.S. Food and Drug Administration. FDA Releases Artificial Intelligence/Machine Learning Action Plan; U.S. Food and Drug Administration: Washington, DC, USA, 2023.

- Office of the Chief Information Officer Washington State. Generative AI Guidelines; Office of the Chief Information Officer Washington State: Washington, DC, USA, 2023. Available online: https://ocio.wa.gov/policy/generative-ai-guidelines (accessed on 31 January 2024).

- Holt, K. You Can’t Copyright AI-Created Art, According to US Officials. Engadget. 2022. Available online: https://www.engadget.com/us-copyright-office-art-ai-creativity-machine-190722809.html (accessed on 31 January 2024).

- Engler, A. The AI Bill of Rights Makes Uneven Progress on Algorithmic Protections. Lawfare Blog. 2022. Available online: https://www.lawfareblog.com/ai-bill-rights-makes-uneven-progress-algorithmic-protections (accessed on 31 January 2024).

- Ye, J. China Says Generative AI Rules to Apply Only to Products for the Public. Reuters. 2023. Available online: https://www.reuters.com/technology/china-issues-temporary-rules-generative-ai-services-2023-07-13/ (accessed on 31 January 2024).

- Meaker, M. The EU Just Passed Sweeping New Rules to Regulate AI. Wired. 2023. Available online: https://www.wired.com/story/eu-ai-act/ (accessed on 31 January 2024).

- Ernst, C. Artificial Intelligence and Autonomy: Self-Determination in the Age of Automated Systems. In Regulating Artificial Intelligence; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 53–73.

- van der Veer, S.N.; Riste, L.; Cheraghi-Sohi, S.; Phipps, D.L.; Tully, M.P.; Bozentko, K.; Peek, N. Trading off accuracy and explainability in AI decision-making: Findings from 2 citizens’ juries. J. Am. Med. Inform. Assoc. 2021, 28, 2128–2138.

- Engler, A. The EU and US Diverge on AI Regulation: A Transatlantic Comparison and Steps to Alignment. Brookings Institution. 2023. Available online: https://www.brookings.edu/articles/the-eu-and-us-diverge-on-ai-regulation-a-transatlantic-comparison-and-steps-to-alignment/ (accessed on 31 January 2024).

- Dreksler, N.; McCaffary, D.; Kahn, L.; Mays, K.; Anderljung, M.; Dafoe, A.; Horowitz, M.; Zhang, B. Preliminary Survey Results: US and European Publics Overwhelmingly and Increasingly Agree That AI Needs to Be Managed Carefully. Centre for the Governance of AI. 2023. Available online: https://www.governance.ai/post/increasing-consensus-ai-requires-careful-management (accessed on 31 January 2024).

| Domain | Traditional Machine Learning AI | Generative, Interactive AI |
|---|---|---|
| Healthcare | Diagnostic tools for disease detection using ML algorithms (e.g., analyzing X-rays or MRI scans for tumors). | Personalized medicine platforms generating customized treatment plans based on patient data and feedback. |
| Education | AI-powered adaptive learning platforms adjusting educational content based on a student’s learning pace and style. | AI systems for interactive learning scenarios, creating dynamic educational content like virtual lab experiments. |
| Creative Arts | Recommendation algorithms in music or video streaming services analyzing user habits to suggest content. | Generative music composition or digital-art-creation platforms, adapting outputs based on user inputs. |

| Variable | Mean (SD) | % |
|---|---|---|
| Gender | | |
| Male | | 47.9% |
| Female | | 52.1% |
| Age (18–95) | 44.90 (17.78) | |
| Education | | |
| Less than High School diploma | | 3.2% |
| High School diploma/GED | | 27.5% |
| Some college (no degree) | | 23.7% |
| Associate’s degree | | 10.0% |
| Bachelor’s degree | | 22.6% |
| Graduate degree | | 13.0% |
| Race/Ethnicity | | |
| White/Caucasian | | 63.7% |
| Black/African American | | 11.1% |
| American Hispanic/Latino | | 17.9% |
| Asian or Pacific Islander | | 4.8% |
| American Indian or Alaska Native or Other | | 2.5% |
| Political Ideology | | |
| Republican | | 23.8% |
| Democrat | | 43.6% |
| Independent or no affiliation | | 30.5% |
| Other | | 2.2% |

| | Healthcare | Education | Creative Arts |
|---|---|---|---|
| Constant | 0.42 | 1.44 | 1.46 |
| Gender (male = 1, female = 2) | 0.10 *** | 0.04 | |
| Age | 0.04 | 0.03 | |
| Education | 0.04 | 0.03 | 0.02 |
| R² change | 12.3% | 9.5% | 5.8% |
| Perceived knowledge of AI | 0.26 *** | 0.17 *** | 0.17 *** |
| Objective knowledge of AI | 0.07 ** | 0.10 *** | 0.11 *** |
| Perceived technology competence | 0.07 ** | 0.14 *** | 0.11 *** |
| Familiarity with AI | 0.13 *** | 0.12 ** | 0.13 ** |
| Prior experience with LLMs | 0.07 * | 0.07 * | 0.04 |
| R² change | 14.5% | 12.7% | 11.8% |
| Total adjusted R² | 26.1% | 21.7% | 17.1% |

| | Healthcare | Education | Creative Arts |
|---|---|---|---|
| Constant | 1.07 | 4.17 | 1.44 |
| Gender (male = 1, female = 2) | 0.11 *** | 0.04 | |
| Age | 0.08 ** | | |
| Education | 0.05 | 0.14 *** | 0.05 |
| R² change | 12.2% | 10.2% | 9.3% |
| Perceived knowledge of AI | 0.19 *** | *** | 0.08 * |
| Objective knowledge of AI | 0.01 | | |
| Perceived technology competence | 0.08 ** | 0.07 * | |
| Familiarity with AI | 0.014 *** | *** | 0.27 *** |
| Prior experience with LLMs | 0.08 * | 0.02 | |
| R² change | 9.0% | 7.8% | 9.3% |
| Total adjusted R² | 20.7% | 17.5% | 18.1% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Novozhilova, E.; Mays, K.; Paik, S.; Katz, J.E. More Capable, Less Benevolent: Trust Perceptions of AI Systems across Societal Contexts. Mach. Learn. Knowl. Extr. 2024, 6, 342-366. https://doi.org/10.3390/make6010017
