1. Introduction

Epilepsy is a chronic neurological disorder of the central nervous system that manifests primarily in the brain and is characterized by sudden, recurrent seizures [1]. It is the third most prevalent neurological condition, after stroke and Alzheimer’s disease [2]. An epileptic seizure is a transient event in which abnormal, excessive activity of brain neurons produces signs or symptoms [3]. Seizures can be identified by observing and analyzing physiological indicators such as brain and muscle activity, heart rate, oxygen levels, synthetic speech, or visual patterns. Techniques used for this purpose include electroencephalography (EEG), electrocardiography (ECG), electromyography (EMG), movement tracking, and video capture of a person’s head and body [4].

Human activity recognition (HAR) can be applied to detect anomalous behaviors and enable early detection of epileptic seizures before they escalate into more severe states [5]. Recent studies have explored a range of techniques for identifying anomalous behaviors [6], including wearable technologies, sensor-based approaches, and ambient instrument methodologies. The activated notification system ensures the verification of action identification. However, the accuracy of detecting these behaviors depends on thorough analysis and precise acquisition of feature patterns [7].

EEG signals are preferred for their cost-effectiveness, portability, and ability to exhibit distinct frequency-dependent patterns [8]. EEG captures the brain’s bioelectric activity by recording voltage fluctuations that result from the ionic flow of neurons [9]. Identifying epileptic seizures accurately requires recording signals over an extended duration, which adds complexity because data from multiple channels must be stored. Studies have also raised concerns that the energy use and data storage limits of wearable devices pose challenges for building seizure forecasting tools [10]. These portable devices tend to drain their batteries quickly and lack the capacity to store the large data streams needed to predict seizures reliably over extended periods. Overcoming these power and memory constraints to enable precise ambulatory monitoring will require further innovation. In addition, EEG signals can be contaminated by disturbances from several sources, including the mains power supply, electrode movement, and muscle activity [11]. Noisy EEG signals make it significantly harder for healthcare professionals to diagnose epileptic seizures effectively. To address these difficulties, extensive research is under way to detect and forecast epileptic seizures using EEG alongside tools such as magnetic resonance imaging (MRI) and artificial intelligence (AI) methods [12]. Within AI, both traditional machine learning (ML) and deep learning (DL) techniques have been applied to the diagnosis of epileptic seizures [13].

Numerous ML algorithms have been developed for epileptic seizure identification, incorporating statistical, temporal, spectral, time–frequency domain, and nonlinear features [14]. Traditional ML approaches rely on trial and error to select features and classifiers [15], so constructing accurate models requires a thorough grasp of signal processing and data mining techniques. A recent study [16] used three ML models (support vector machines, linear discriminant analysis, and multilayer perceptrons) to differentiate resting-state EEG data between healthy subjects and those with psychogenic non-epileptic seizures. Specifically, these algorithms aimed to uncover connections between measures of functional brain connectivity and the eventual categorical diagnosis, classifying each data point as belonging to either the healthy control group or the psychogenic seizure group. Initial findings showed promise in using EEG-derived functional connectivity patterns to predict, fully automatically, which subjects were experiencing non-epileptic events. However, further validation is still needed, particularly regarding generalizability to diverse patient subgroups. While such ML models perform well with small datasets, the field has also adopted sophisticated DL techniques for epileptic seizure identification [17]. Unlike traditional ML methods, DL models require a substantial amount of training data because of their complex feature mapping spaces, and they tend to overfit when data are insufficient.

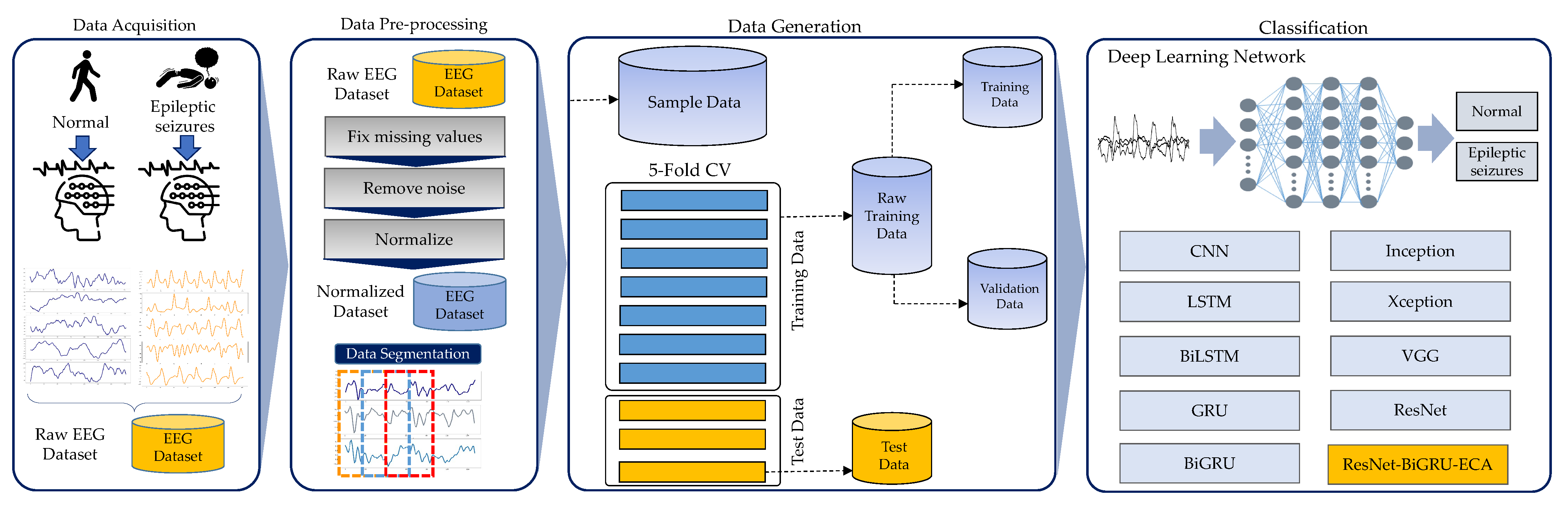

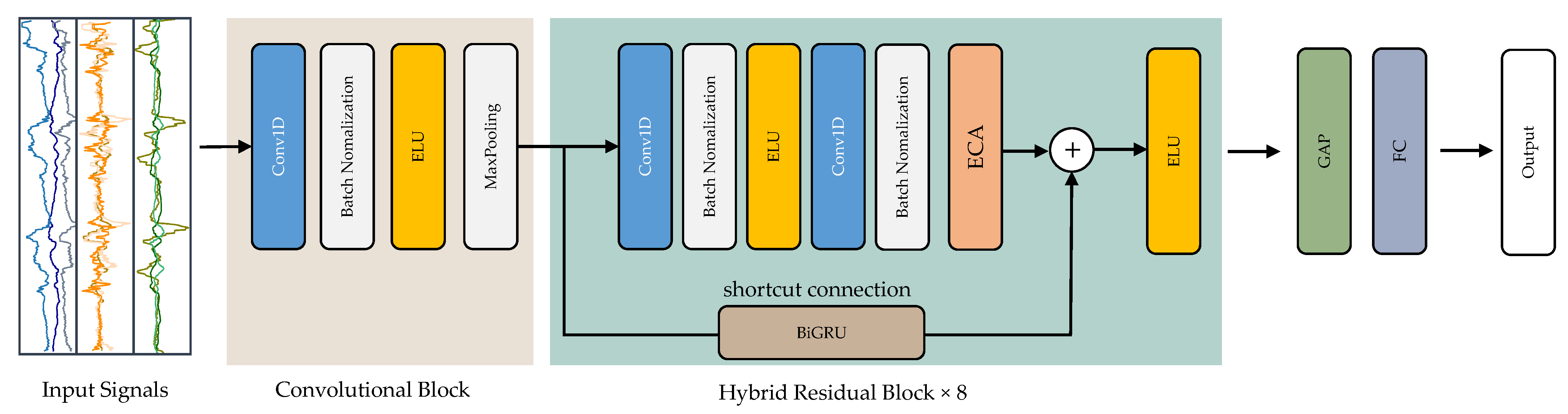

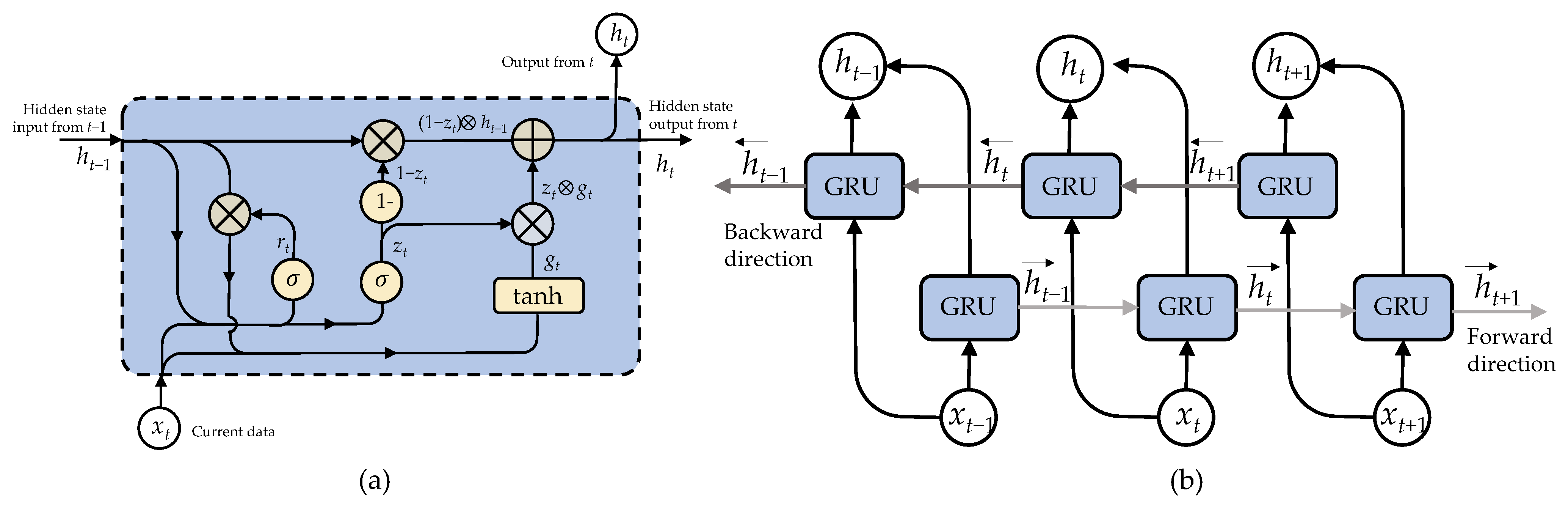

The primary objective of this research is to utilize DL networks to detect epilepsy by automatically processing EEG signals and recognizing patterns. The aim is to identify the spatial distribution and temporal characteristics of spikes and seizures. Convolutional neural networks (CNN), long short-term memory (LSTM), and gated recurrent unit (GRU) are among the methods employed in this research. We introduce a new deep residual model, ResNet-BiGRU-ECA, to accurately identify epileptic seizures by analyzing EEG data.

To evaluate the performance of our model, we utilized a publicly available epilepsy dataset. This benchmark compilation contained EEG readings segmented into five distinct health categories—one representing active epileptic seizures and the remaining four encompassing normal, non-seizure brain activity. Leveraging this diverse test set enabled robust assessment of the model’s ability to accurately differentiate between the pathological seizure state and healthy function. Through this analysis, we aim to demonstrate several key contributions of the current research, summarized as follows:

This research presents a framework for epileptic seizure detection (ESD) using EEG data to assess the performance of various DL structures within this particular domain.

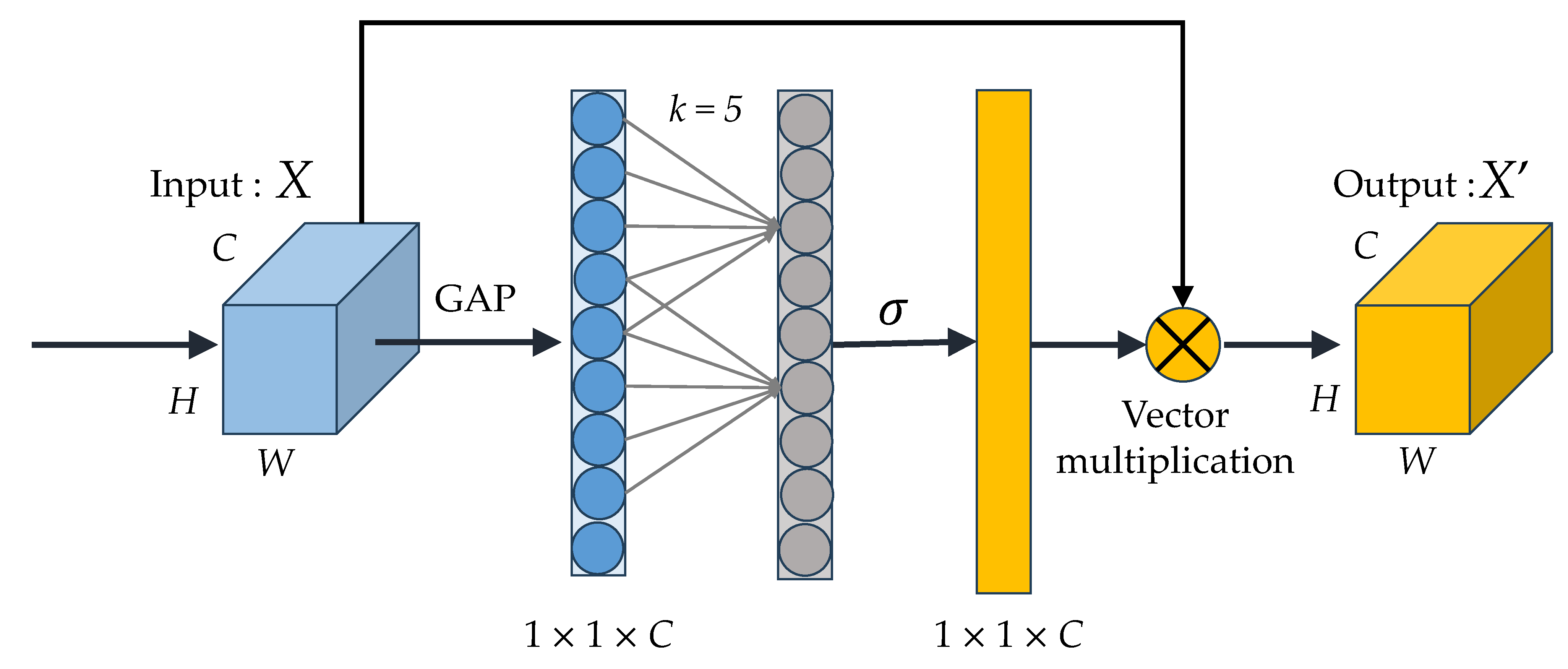

The suggested method introduces a deep residual model that incorporates residual blocks, an efficient channel attention (ECA) module, and a bidirectional GRU (BiGRU). This model adeptly captures extended data sequences, extracts spatio-temporal features, and carries out EEG classification.

The remainder of the paper is organized as follows. Section 2 summarizes pertinent literature and prior studies. Section 3 outlines the proposed DL-based framework for epileptic seizure recognition. Section 4 presents and examines the results, and Section 5 concludes and discusses potential avenues for future research.

2. Related Work

In this section, we review several studies that have used DL to classify epileptic and non-epileptic activities. One study introduced a DL model called the pyramidal one-dimensional CNN, a refined design with fewer trainable parameters than conventional CNNs. The model achieved an accuracy of 99.1% when distinguishing between different behaviors, including typical behaviors such as eyes closed, eyes open, and pre-ictal, as well as unusual behaviors such as inter-ictal and ictal [18].

Conventional DL models struggle to process lengthy, variable-length inputs such as text, sensor readings over time, or video [19], yet these sequential data types carry abundant real-world information. Recurrent neural networks (RNNs) emerged to enable DL on such ordered series with temporal dynamics. Through internal state units that retain context, RNNs can analyze signals that change over time, such as EEG, and have gained widespread use in physiology. By propagating context, RNNs overcome the challenges of long inputs with fluctuations and redundancy across temporal or spatial dimensions [7]. This feedback architecture provides the short-term memory that feedforward networks lack, allowing better pattern extraction from sequential data such as measurements over time.

The primary limitation of a basic RNN is its inability to retain information effectively over long periods of time. RNNs struggle to propagate information from earlier time steps to later ones, especially when dealing with long sequential data [20]. A related challenge is the vanishing gradient problem [21], in which gradients diminish significantly during backpropagation. To overcome this short-term memory issue, researchers devised LSTM neural networks [22]. LSTM networks allow the model to selectively store and access relevant information, making them better at handling long sequences and retaining essential details.
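The vanishing gradient effect described above can be illustrated with a toy scalar recurrence. The sketch below is not taken from the cited works: the recurrence `h_t = tanh(w * h_{t-1})`, the weight `w`, and the function name `gradient_through_time` are assumptions chosen only to make the effect visible in a few lines.

```python
import math


def gradient_through_time(w, h0, steps):
    """Return |d h_t / d h_0| for t = 1..steps in the toy RNN h_t = tanh(w * h_{t-1})."""
    h, grad, history = h0, 1.0, []
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t ** 2)
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history


# Hypothetical settings: each factor has magnitude at most |w| = 0.5,
# so the product shrinks at least geometrically with sequence length.
grads = gradient_through_time(w=0.5, h0=0.8, steps=50)
print(f"after 1 step: {grads[0]:.3e}, after 50 steps: {grads[-1]:.3e}")
```

Because the gradient reaching early time steps is a product of per-step factors, any recurrent weight of magnitude below one drives it toward zero. Gated cells such as LSTM and GRU are designed to ease this problem.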

Golmohammadi et al. [23] evaluated two LSTM designs, one with three layers and the other with four, both combined with a softmax classifier, and reported satisfactory results. In another study [24], a three-layer LSTM architecture was used for feature extraction and classification, with a final fully connected layer that employed the sigmoid activation function for classification. Another group [25] investigated two structures, LSTM and GRU. Their LSTM/GRU model consisted of a reshape layer, followed by four LSTM/GRU layers with an activation function, and finally a fully connected layer with a sigmoid activation function. In summary, these studies explored various LSTM designs and activation functions to improve feature extraction and classification accuracy.

Among more sophisticated DL models, deeper and more intricate architectures have been observed to achieve higher accuracy than the feature learning approach discussed earlier. These models employ CNNs to learn features automatically [26]. In object recognition in particular, the CNN feature extractor is often called the backbone, which sets it apart from the complete model architecture. In this investigation, we adopt a CNN-based feature extractor as the backbone. DL techniques such as CNNs and RNNs have demonstrated state-of-the-art performance by automatically learning the underlying characteristics from raw sensor data. The concept of deepening neural networks has evolved with the emergence of hybrid networks, which combine diverse architectural designs to improve feature representation and enhance both computational and network performance. Moreover, this development opens up opportunities for DL-based techniques in portable electronics.

4. Experimental Results and Discussion

In this section, we will detail the experimental setup and showcase the outcomes acquired through our assessment of DL models for the purpose of identifying epileptic seizures.

4.1. Experiments

The experiments in this study were conducted on Google Colab Pro, which provides access to Tesla V100 GPUs. The implementation used Python 3.6.9 with TensorFlow 2.2.0 for building the neural network models, Keras 2.3.1 as the high-level API, scikit-learn for machine learning utilities, NumPy 1.18.5 for numerical processing, and pandas 1.0.5 for data manipulation. This setup allowed the experiments to run efficiently on GPU hardware while exploring deep neural network architectures for the research questions under investigation.

This empirical study compared five main DL architectures for ESD on the ESRD dataset: CNNs, LSTMs, bidirectional LSTMs (BiLSTMs), GRUs, and BiGRUs. These represented the state-of-the-art approaches. Our proposed model, ResNet-BiGRU-ECA, was benchmarked against them to assess how well it identifies seizures relative to conventional architectures. By evaluating both unidirectional and bidirectional variants of the LSTM and GRU models, we aimed to compare our approach thoroughly with existing methods on these epileptic seizure data.
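The difference between unidirectional and bidirectional recurrent models can be sketched with a toy example. The code below is illustrative only: it uses a plain scalar tanh recurrence rather than an LSTM or GRU cell, and the function names and weight values are hypothetical.

```python
import math


def rnn_pass(sequence, w_in, w_rec):
    """Run a scalar tanh RNN over the sequence and return the state at every step."""
    h, states = 0.0, []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def bidirectional_pass(sequence, fwd=(0.5, 0.5), bwd=(0.3, 0.7)):
    """Pair each time step's forward state with its backward state."""
    forward = rnn_pass(sequence, *fwd)
    # Process the reversed sequence, then re-reverse so indices align with time.
    backward = rnn_pass(sequence[::-1], *bwd)[::-1]
    return list(zip(forward, backward))


# A single spike at t = 0: the forward state at the last step still carries a
# trace of it, while the backward state at the last step has not seen it yet.
for t, (f, b) in enumerate(bidirectional_pass([1.0, 0.0, 0.0])):
    print(t, round(f, 4), round(b, 4))
```

Each direction has its own weights, and the paired states give every time step access to both past and future context. This is the property that distinguishes the BiLSTM and BiGRU variants from their unidirectional counterparts.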

Additionally, we conducted an in-depth investigation of several CNN backbone models. Specifically, we examined VGG16 [36], ResNet18 [37], Pyramid-Net18 [38], Inception [39], and Xception [40] in a comprehensive experimental comparison. These models were considered as potential solutions for time-series classification, so we restructured each of them to suit the context of epileptic seizure recognition (ESR).

In this study, we examined two distinct scenarios involving EEG data from the ESRD dataset. In each scenario, we used separate datasets for training and testing the DL models, as detailed in Table 3.

4.2. Experimental Results

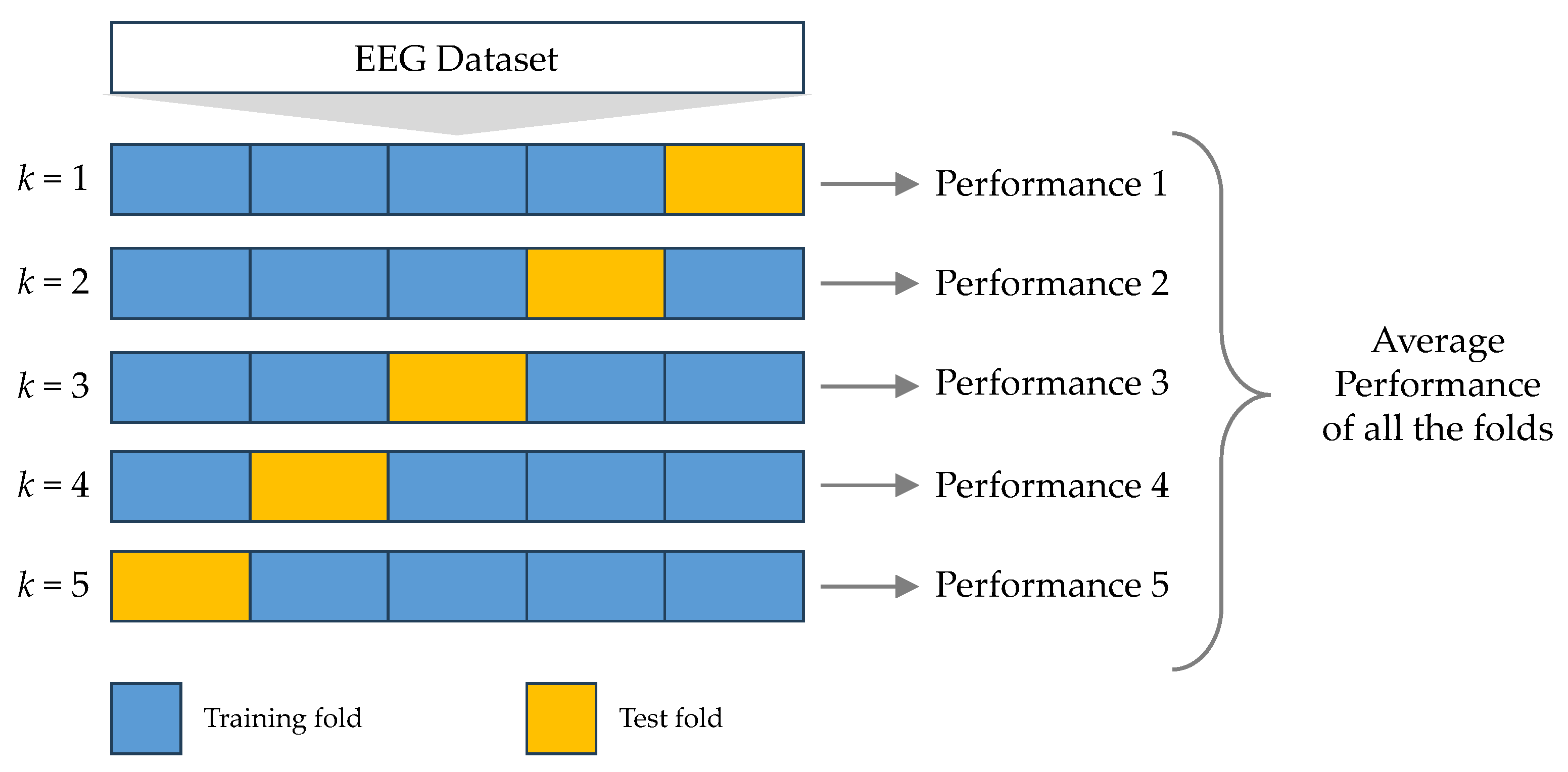

In each of our experiments, we used the ESRD dataset to train the DL models and evaluated them with 5-fold cross-validation (5-CV). Our study assessed the effectiveness of five core DL models (CNN, LSTM, BiLSTM, GRU, and BiGRU), in addition to cutting-edge DL models, within the framework of the two scenarios outlined in Table 4 and Table 5.
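As a rough illustration of the 5-CV protocol, read here as 5-fold cross-validation, the sketch below partitions sample indices into five disjoint folds and uses each fold once as the test set. It is a minimal stand-in, not the authors' code: the function name `k_fold_indices`, the shuffling seed, and the sample count are assumptions. The split is not stratified, and the source does not say whether stratification was used.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


# Hypothetical usage with 23 samples; a model would be trained on train_idx and
# scored on test_idx in each round, and the five scores would then be averaged.
for fold, (train_idx, test_idx) in enumerate(k_fold_indices(23), start=1):
    print(f"fold {fold}: {len(train_idx)} train / {len(test_idx)} test")
```

Averaging over folds is what makes the reported accuracy and F1-score "average" values: every sample is used for testing exactly once.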

The DL models were trained and tested on EEG data from scenario I, as detailed in Table 4. In these experiments, the proposed ResNet-BiGRU-ECA model achieved an average accuracy of 0.998 and an average F1-score of 0.998.

The DL models were then trained and tested on EEG data from scenario II, as specified in Table 5. The proposed ResNet-BiGRU-ECA model again proved the most effective, with an average accuracy of 0.996 and an average F1-score of 0.994.
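For reference, the two reported metrics can be computed from true and predicted labels as sketched below. This assumes a binary framing with the seizure class as the positive label, and the function name and example labels are hypothetical. The source does not state whether its F1-score is per-class, macro-averaged, or weighted, so this is only one plausible reading.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Compute accuracy and the F1-score of the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    # F1 = 2TP / (2TP + FP + FN); reported as 0.0 when that denominator is zero.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


# Made-up labels (1 = seizure, 0 = non-seizure), for illustration only.
acc, f1 = accuracy_and_f1([1, 1, 0, 0, 0, 1], [1, 0, 0, 0, 1, 1])
print(f"accuracy={acc:.3f}, F1={f1:.3f}")
```

Reporting F1 alongside accuracy matters when seizure segments are a minority of the data, since accuracy alone can look high even if many seizures are missed.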

4.3. Comparative Results with ML Models

Guided by prior analyses [5], we selected leading ML classifiers for comparative benchmarking: k-nearest neighbors (KNN), naive Bayes (NB), logistic regression (LR), random forest (RF), decision trees (DT), a stochastic gradient descent classifier (SGDC), and gradient boosting (GB). Recent studies confirm the utility of these algorithms, used alongside neurologists, in accurately detecting seizures and characterizing epileptiform EEG dynamics [41, 42]. Our experiments used the standard ESRD dataset under equivalent scenarios to examine how model performance varies when raw EEG readings versus extracted feature sets are used as inputs. Table 6 presents the accuracy of our proposed deep ResNet-BiGRU-ECA architecture against these widely adopted shallow ML approaches. By evaluating on equal inputs, we aimed to isolate the performance gains stemming solely from algorithmic and architectural optimizations rather than data pre-processing.

Results showed that NB reached 95% accuracy, making it the only ML technique with comparable proficiency. However, our deep ResNet-BiGRU-ECA architecture significantly exceeded all benchmark methods under both data scenarios. Surpassing 99% in both accuracy and F1-score, the network demonstrated superior feature extraction and pattern recognition even from raw EEG readings. This substantial performance gap despite shared inputs suggests that deep networks are better than shallow ML at deriving nuanced relationships within complex physiological signals. Rather than relying on predefined assumptions in simplified models, DL constructs intricate representations via hierarchical data transformations. Although some conventional methods are adequate for classification tasks, deep neural networks attain state-of-the-art performance by learning embeddings tuned to the data through backpropagation and gradient descent optimization.

4.4. Comparative Results with DL Models

The proposed ResNet-BiGRU-ECA model was compared with previously developed models on the same dataset, the ESRD dataset. Prior research studies [36, 37, 38, 39, 40] have consistently shown that CNN-based DL architectures yield remarkable results in time-series classification. Following the existing literature, we employed the 5-CV methodology in our study. The comparative results are summarized in Table 7 and Table 8. These outcomes reveal that the ResNet-BiGRU-ECA model exhibits superior accuracy compared to the earlier models across the majority of actions.

4.5. Effect of BiGRU and ECA Modules

Additional investigations were conducted to assess more comprehensively the contributions of the BiGRU and ECA modules within the proposed ResNet-BiGRU-ECA architecture. As shown in Table 9, the findings clearly indicate that both the BiGRU module and the ECA module contribute significantly to the model’s effectiveness in classifying data from the two scenarios.
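For readers unfamiliar with channel attention, the sketch below shows the general ECA idea as it is commonly described: pool each channel over time, mix neighboring channel descriptors with a small 1D convolution, squash the result with a sigmoid, and rescale the channels. It is not the authors' implementation. The function name `eca`, the `[channels][time]` layout, and the fixed uniform kernel (standing in for learned convolution weights) are all assumptions.

```python
import math


def eca(features, kernel_size=3):
    """Apply ECA-style gating to a feature map laid out as [channels][time]."""
    # Assumption: uniform weights stand in for the learned 1D convolution kernel.
    weights = [1.0 / kernel_size] * kernel_size
    # Squeeze: global average pooling over time gives one descriptor per channel.
    pooled = [sum(channel) / len(channel) for channel in features]
    # Local cross-channel interaction: 1D convolution with zero padding
    # (kernel_size is expected to be odd so the output keeps one score per channel).
    pad = kernel_size // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    scores = [
        sum(w * padded[i + j] for j, w in enumerate(weights))
        for i in range(len(pooled))
    ]
    # Sigmoid gate in (0, 1), then rescale every channel by its gate.
    gates = [1.0 / (1.0 + math.exp(-s)) for s in scores]
    scaled = [[g * x for x in channel] for g, channel in zip(gates, features)]
    return scaled, gates


# Toy feature map with three channels and two time steps.
scaled, gates = eca([[1.0, 3.0], [0.0, 0.0], [-2.0, -2.0]])
print([round(g, 3) for g in gates])
```

The gate for each channel depends only on that channel and its immediate neighbors, so the module adds very few parameters. In a trained network the kernel weights would be learned rather than fixed.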

5. Conclusions and Future Work

Epilepsy, a neurological disorder, can be significantly mitigated if detected early. This study introduces a novel hybrid DL model named ResNet-BiGRU-ECA, designed to accurately identify epileptic seizures using EEG signals. This model combines residual blocks, an ECA module, and a BiGRU to recognize epileptic seizures in pre-processed multichannel EEG recordings. To gauge the efficiency of our suggested model, we carried out a thorough assessment by contrasting it with five fundamental DL models and leading time-series classification models, all applied to the same publicly available dataset. The DL models underwent training and evaluation using a 5-CV approach. We analyzed model performance using standard evaluation metrics, and the ResNet-BiGRU-ECA model consistently outperformed other models, achieving an average accuracy of 0.998 and an F1-score of 0.998. Furthermore, our model surpassed most existing systems in terms of effectiveness when compared using the same dataset. The objective of this research is to make a meaningful contribution to the field of neurology by investigating the potential benefits of employing EEG data in the context of epilepsy detection and related matters. Our primary goal is to investigate the possibility of reducing examination duration while simultaneously improving diagnostic efficiency and effectiveness.

In future research, we intend to employ the ResNet-BiGRU-ECA model to address other detection issues relying on EEG signals. Additionally, we plan to delve into the explainability of our model to gain insights into the mechanisms and reasoning behind its accurate decision-making process.