Live Fish Species Classification in Underwater Images by Using Convolutional Neural Networks Based on Incremental Learning with Knowledge Distillation Loss

Abstract

1. Introduction

- We propose a novel approach that uses knowledge distillation to train a CNN for classifying live coral reef fish species in unconstrained underwater images.

- We propose to train a pre-trained ResNet50 [36] progressively, focusing first on the hard fish species and then integrating the easier ones.

- We present extensive experiments and comparisons with other methods. The proposed approach outperforms state-of-the-art fish identification approaches on the LifeClef 2015 Fish benchmark dataset (www.imageclef.org/lifeclef/2015/fish, accessed on 7 July 2022).

2. Related Works

2.1. Fish Species Classification

2.2. Incremental Learning

- It should be able to learn additional knowledge from new data;

- It should not require access to the original data (i.e., the data that were used to learn the current classifier);

- It should preserve previously acquired knowledge;

- It should be able to learn new classes that may be introduced with new data.

- Architectural strategy [56]: this strategy modifies the architecture of the model to mitigate forgetting, e.g., adding layers, fixing weights…

- Repetition strategy [59]: old data are periodically replayed in the model to strengthen the connections associated with the learned knowledge. A simple approach is to store some of the previous training data and interleave it with new data for future training.
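The repetition strategy described in the last item can be illustrated with a minimal sketch. It is not the method proposed in this paper, which relies on distillation rather than stored data. The function name `interleave_with_replay`, the `replay_ratio` parameter, and the toy samples are all hypothetical and chosen only for illustration.

```python
import random


def interleave_with_replay(new_data, old_memory, replay_ratio=0.5, seed=0):
    """Mix a subset of stored old samples into the new training data, then shuffle.

    replay_ratio is a hypothetical knob: the number of replayed old samples
    as a fraction of the number of new samples.
    """
    rng = random.Random(seed)
    n_replay = min(len(old_memory), int(len(new_data) * replay_ratio))
    replayed = rng.sample(old_memory, n_replay)
    mixed = list(new_data) + replayed
    rng.shuffle(mixed)
    return mixed


# Toy (image name, label) pairs; the file names are placeholders.
old_memory = [("old_img_%d" % i, "AN") for i in range(10)]
new_data = [("new_img_%d" % i, "AC") for i in range(8)]
mixed = interleave_with_replay(new_data, old_memory)
```

With these toy inputs, half as many old samples as new ones are replayed, so the network sees both groups in every pass over `mixed`.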

3. Proposed Approach

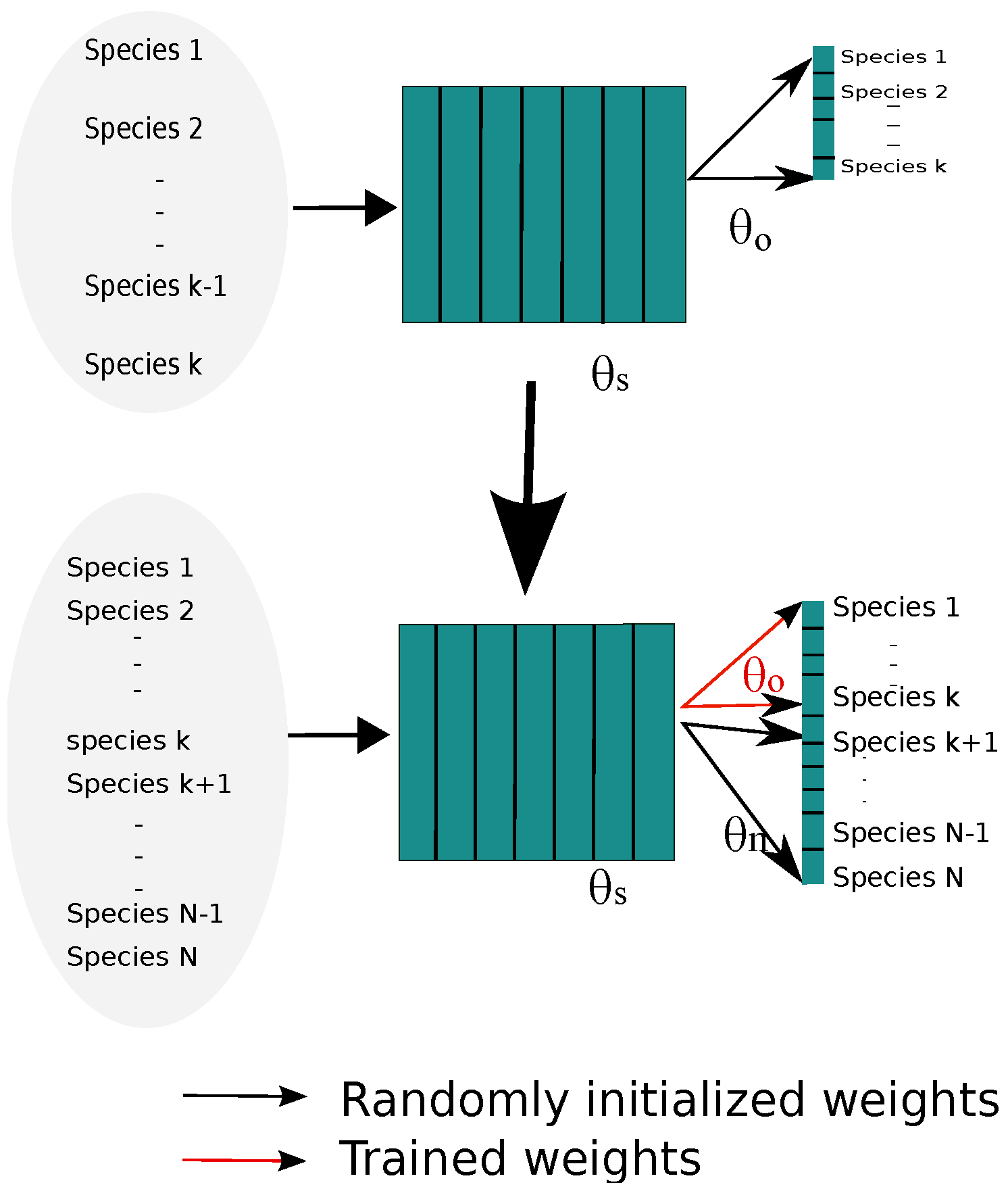

3.1. Architecture of the Approach

3.2. Learning Phase

- Step 1: train the shared and old-species parameters: First, using classical transfer learning, we train a pre-trained network, here ResNet50, on the old species.

- Step 2: calculate probabilities: At the end of the first step, each image is passed through the trained CNN (with its shared and old-species parameters) to generate a vector of probabilities of belonging to the k old species. This set of probabilities serves as the labels for the training image set X; it is the output of the CNN under the parameters obtained in Step 1. The objective is to train the network without shifting these predictions much.

- Step 3: train all parameters: To incorporate the new species, we add one node per new species to the classification layer, with randomly initialized weights (the new-species parameters). When training the new model, we jointly train all model parameters (shared, old-species, and new-species) until convergence. This procedure, called joint-optimize training, encourages the computed output probabilities to approximate the recorded probabilities. To achieve this, we modify the network loss function by adding a knowledge distillation term.
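The three steps above can be sketched in plain Python on a toy linear classification layer. This is a minimal illustration under stated assumptions, not the authors' implementation: the distillation term is taken to be a temperature-softened cross-entropy in the style of [60], the weight `lam`, the temperature default `T=2.0`, and all function names are hypothetical, and the toy logits stand in for real network outputs.

```python
import math
import random


def softmax(logits, T=1.0):
    """Softmax with temperature T (T > 1 gives a softer distribution)."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def expand_head(old_rows, n_new, rng):
    """Step 3: keep the old-species weight rows, append random rows for new species."""
    n_features = len(old_rows[0])
    new_rows = [[rng.gauss(0.0, 0.01) for _ in range(n_features)]
                for _ in range(n_new)]
    return [row[:] for row in old_rows] + new_rows


def distillation_term(old_logits_now, recorded_probs, T=2.0):
    """Cross-entropy between recorded and current old-species probabilities."""
    current = softmax(old_logits_now, T)
    return -sum(q * math.log(p) for q, p in zip(recorded_probs, current))


def joint_loss(logits, true_class, recorded_probs, lam=1.0, T=2.0):
    """Classification loss on all species plus a weighted distillation term (assumed form)."""
    k = len(recorded_probs)  # number of old species
    ce = -math.log(softmax(logits)[true_class])
    return ce + lam * distillation_term(logits[:k], recorded_probs, T)


# Toy usage: 2 old species, 1 new species, 2 features.
rng = random.Random(0)
head = expand_head([[1.0, 2.0], [3.0, 4.0]], n_new=1, rng=rng)
recorded = softmax([2.0, 0.5], T=2.0)  # Step 2: recorded soft labels
loss = joint_loss([2.0, 0.5, 1.0], true_class=2, recorded_probs=recorded)
```

The distillation term is smallest when the current old-species outputs reproduce the recorded probabilities, which is what keeps the predictions from moving much during joint training.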

3.3. Knowledge Distillation

3.4. Total Loss Function

4. Experiments

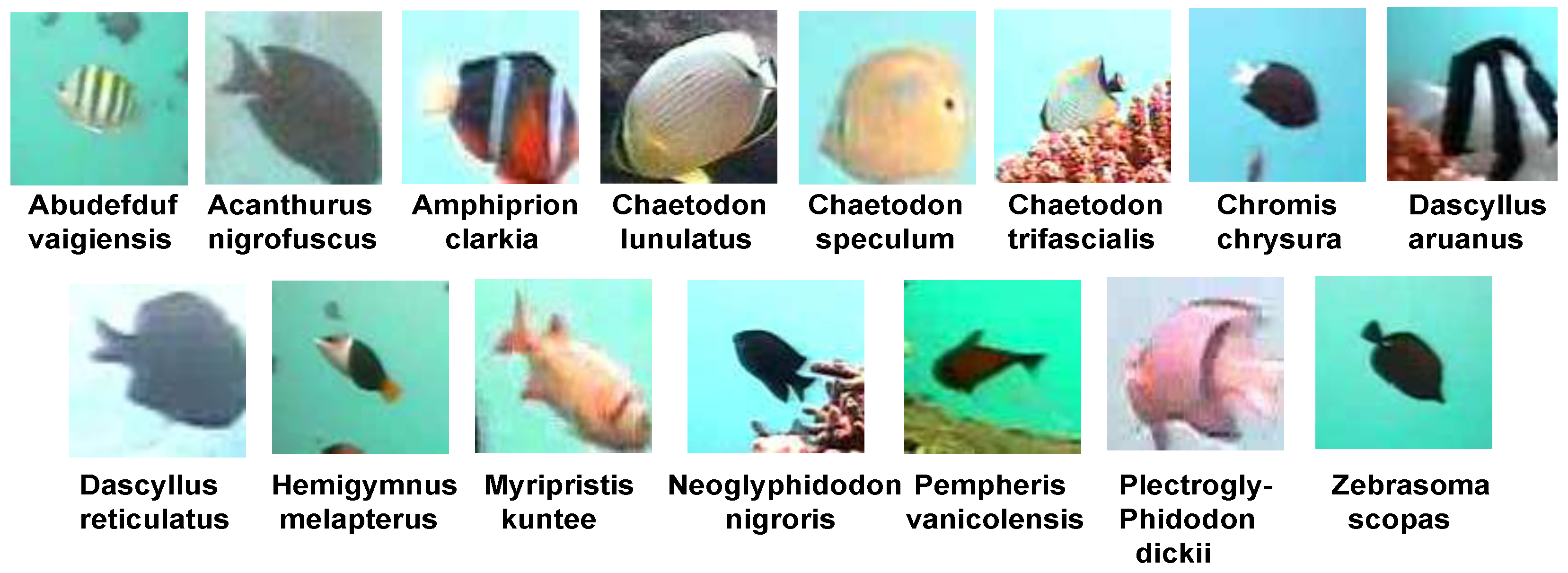

4.1. LifeClef 2015 Fish (LCF-15) Benchmark Dataset

4.2. Learning Strategy for Live Fish Species Classification

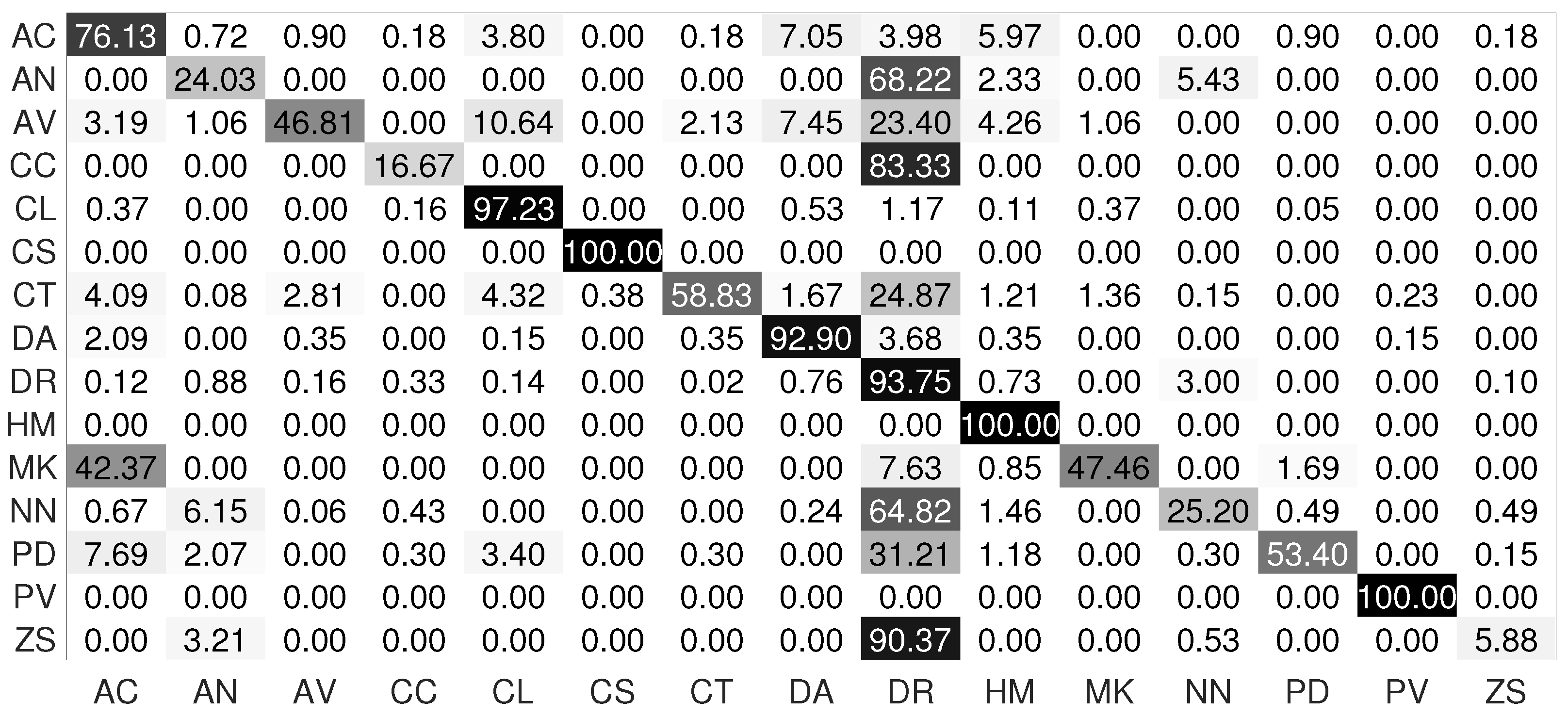

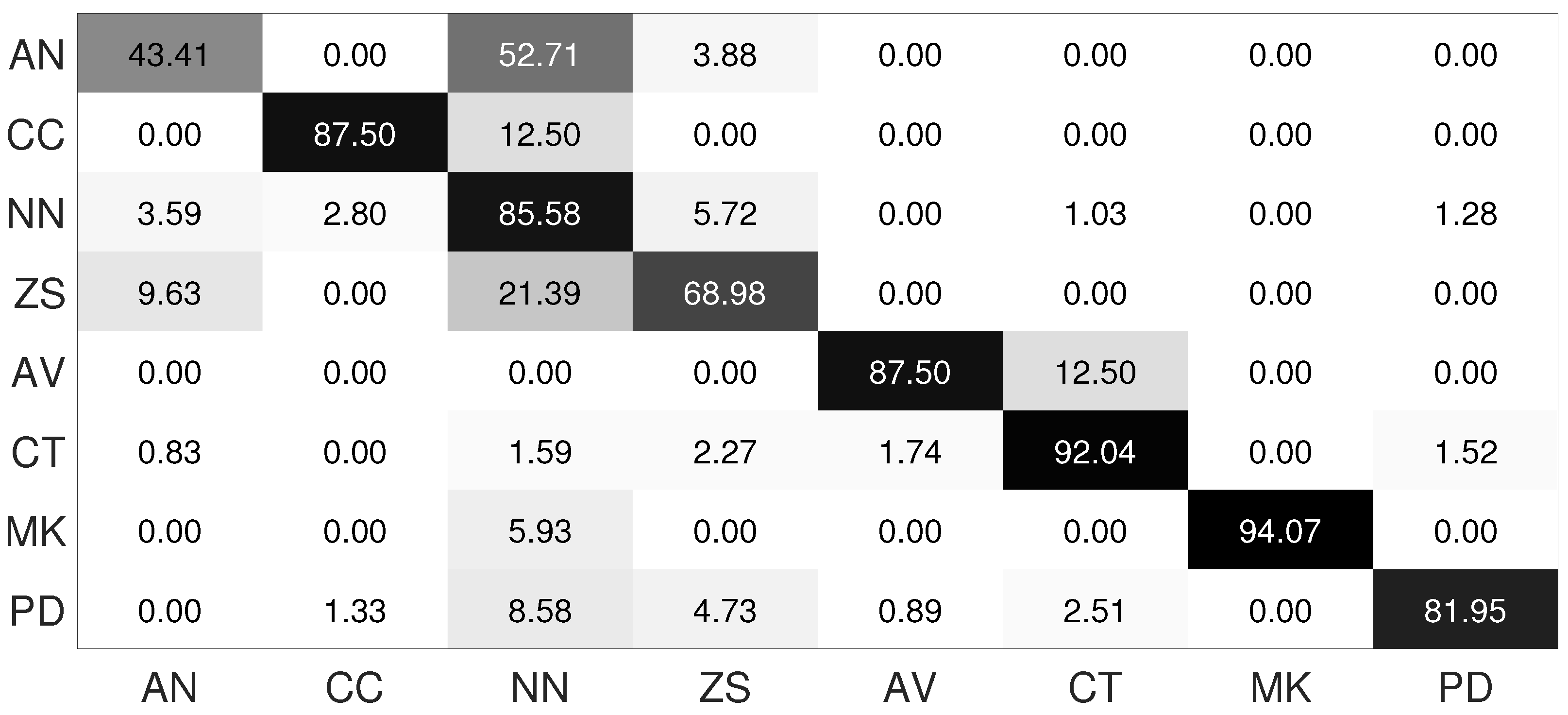

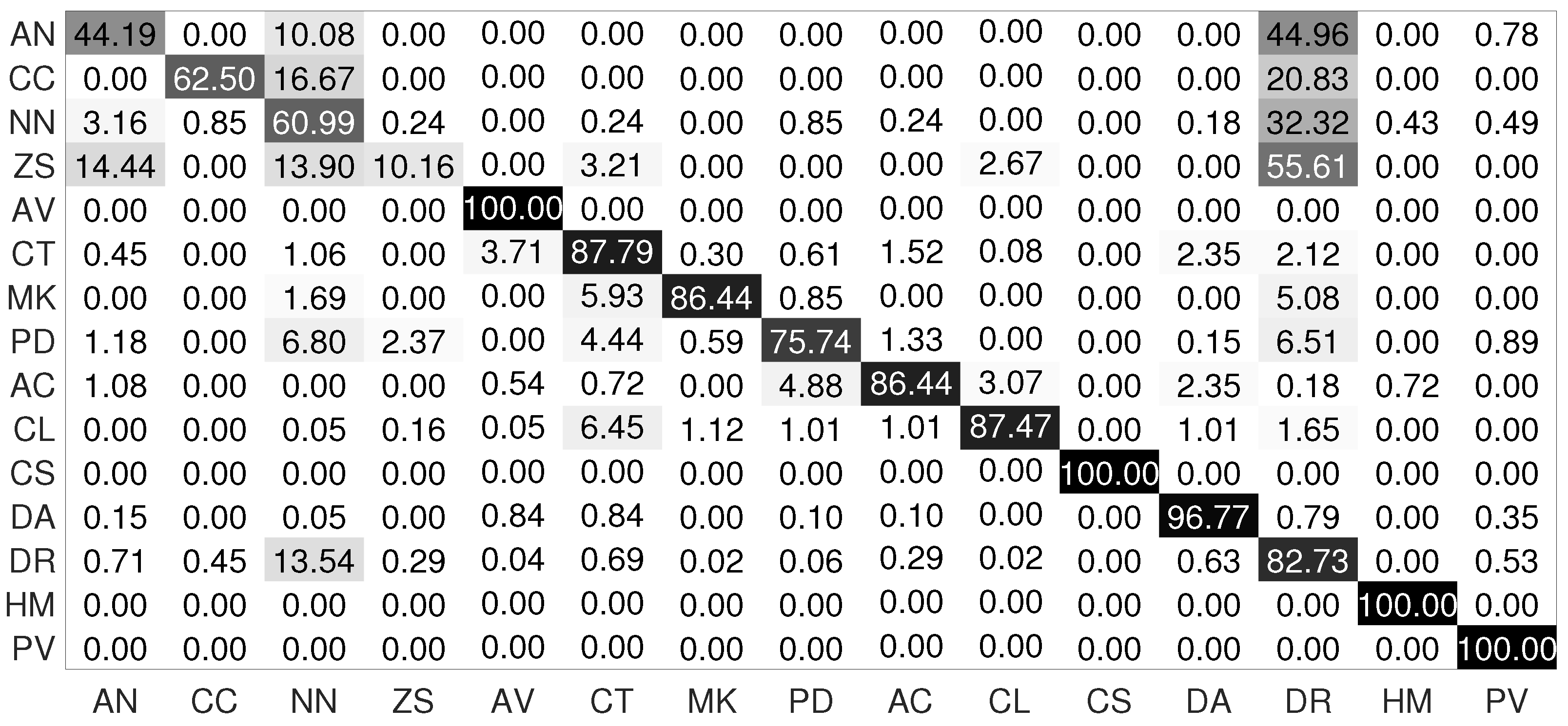

- Construction of two groups: to separate the species into a difficult and an easy subset, we train the pre-trained ResNet50 network on all species of the LCF-15 dataset with transfer learning. Figure 4 shows the resulting confusion matrix. From this matrix, we form two main groups: difficult species, which have low precision (AN, AV, CC, CT, MK, NN, PD, ZS), and easy species, which have high precision (AC, CL, CS, DA, DR, HM, PV).

- Step 1 (difficult species): We first train the model on the first group, starting from a pre-trained ResNet50 model. Because we want the model to focus on this subset, we apply data augmentation as follows. We flip each fish sample horizontally to simulate a new sample in which the fish swims in the opposite direction; then, we rescale each fish image to several sizes (smaller and larger). We also crop the images by removing one quarter from each side to eliminate parts of the background. Finally, we rotate the fish images by two different angles to make recognition invariant to rotation. At the end of this training, the model has learned the shared parameters and the parameters specific to the first group.

- Step 2 (all species): Then, we add the species of the second group. To integrate these new species, we add to the classification layer a number of neurons equal to the number of species in this group. We randomly initialize the weights of these new neurons (the new-species parameters) and keep the weights corresponding to the old species (the shared and first-group parameters). In this second training, we apply the new loss function to learn the new species while retaining the knowledge learned in the first training.
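The grouping and two of the augmentation operations described above can be sketched as follows. This is only an illustration: the precision threshold of 0.7, the 3-species confusion matrix, and the function names are hypothetical and not taken from the paper, and images are represented as nested lists rather than real image arrays.

```python
def per_class_precision(confusion, labels):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    precision = {}
    for j, name in enumerate(labels):
        predicted_as_j = sum(row[j] for row in confusion)
        precision[name] = confusion[j][j] / predicted_as_j if predicted_as_j else 0.0
    return precision


def split_by_precision(precision, threshold):
    """Species below the (hypothetical) threshold are 'difficult', the rest 'easy'."""
    difficult = sorted(n for n, p in precision.items() if p < threshold)
    easy = sorted(n for n, p in precision.items() if p >= threshold)
    return difficult, easy


def hflip(image):
    """Horizontal flip: the fish appears to swim in the opposite direction."""
    return [row[::-1] for row in image]


def crop_quarter_each_side(image):
    """Remove one quarter from each side, keeping the central region."""
    h, w = len(image), len(image[0])
    return [row[w // 4: w - w // 4] for row in image[h // 4: h - h // 4]]


# Toy confusion matrix (made-up counts) for three species.
labels = ["AN", "AC", "DR"]
confusion = [[6, 3, 1],
             [0, 9, 1],
             [4, 0, 16]]
precision = per_class_precision(confusion, labels)
difficult, easy = split_by_precision(precision, threshold=0.7)

# Toy 4x4 "image" whose pixel values are just their flat indices.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
flipped = hflip(image)
cropped = crop_quarter_each_side(image)
```

In practice the threshold would be read off the real confusion matrix (Figure 4), and the scaling and rotation steps would be handled by an image library.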

4.3. Results

4.3.1. Model Trained on Difficult Species

4.3.2. Model Trained on All Species

- i.

- Optimization technique

- ii.

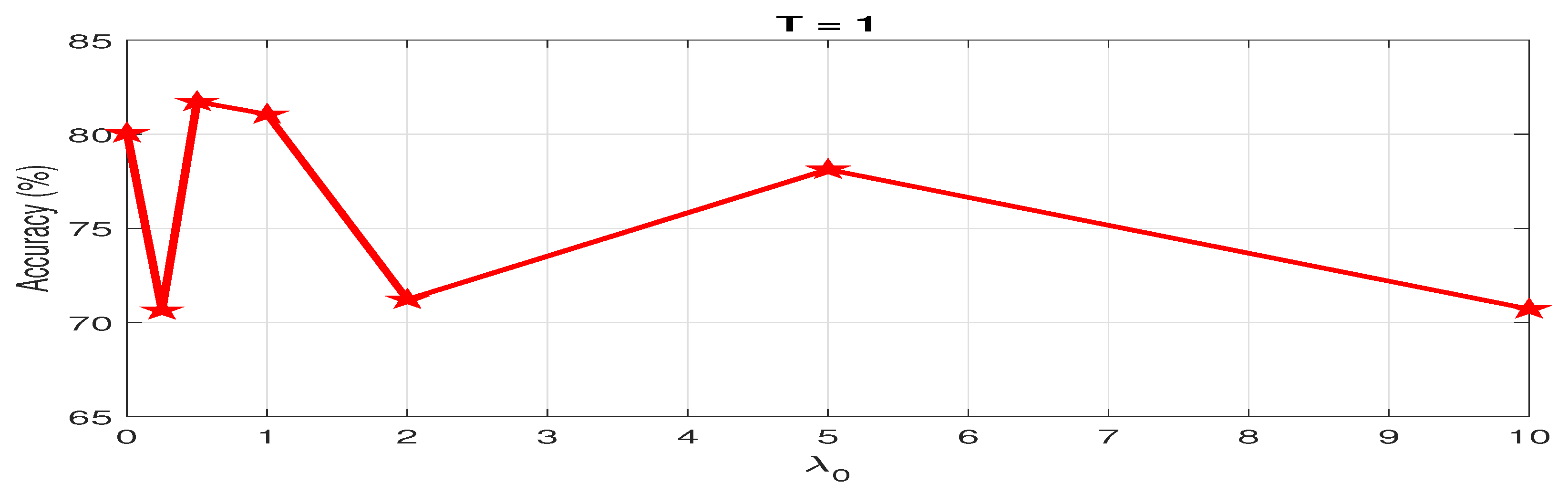

- Effect of parameter

- iii.

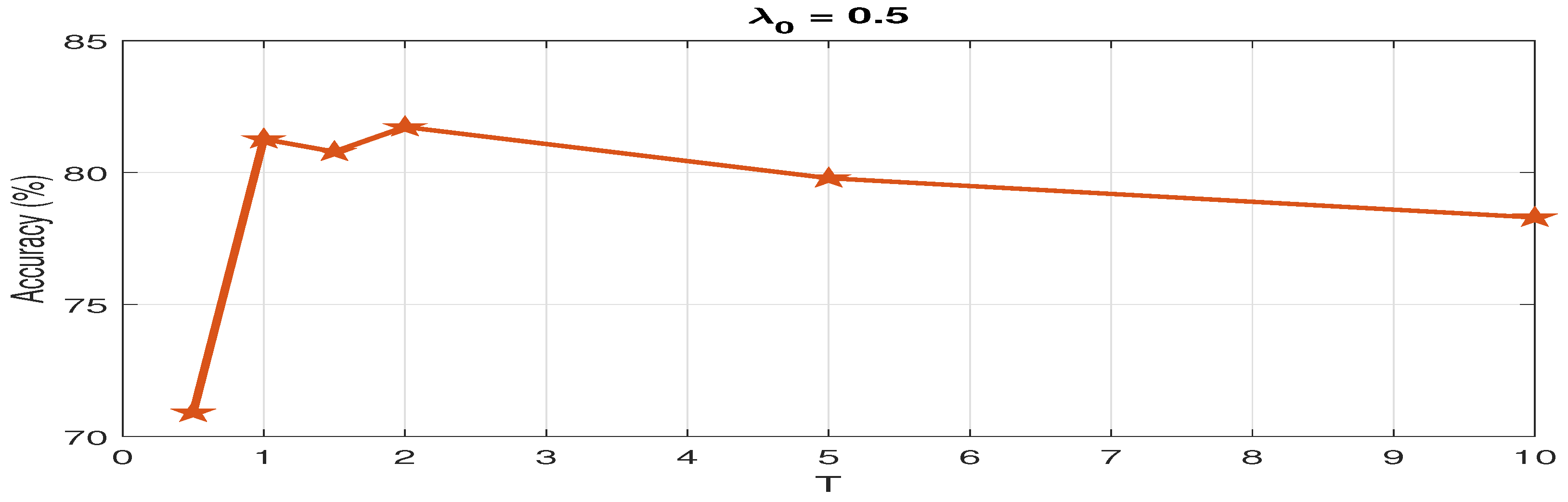

- Effect of temperature parameter T

- iv.

- Performance analysis

4.3.3. Comparative Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Brandl, S.J.; Goatley, C.H.; Bellwood, D.R.; Tornabene, L. The hidden half: Ecology and evolution of cryptobenthic fishes on coral reefs. Biol. Rev. 2018, 93, 1846–1873.

- Johannes, R. Pollution and degradation of coral reef communities. In Elsevier Oceanography Series; Elsevier: Amsterdam, The Netherlands, 1975; Volume 12, pp. 13–51.

- Robinson, J.P.; Williams, I.D.; Edwards, A.M.; McPherson, J.; Yeager, L.; Vigliola, L.; Brainard, R.E.; Baum, J.K. Fishing degrades size structure of coral reef fish communities. Glob. Chang. Biol. 2017, 23, 1009–1022.

- Leggat, W.P.; Camp, E.F.; Suggett, D.J.; Heron, S.F.; Fordyce, A.J.; Gardner, S.; Deakin, L.; Turner, M.; Beeching, L.J.; Kuzhiumparambil, U.; et al. Rapid coral decay is associated with marine heatwave mortality events on reefs. Curr. Biol. 2019, 29, 2723–2730.

- D’agata, S.; Mouillot, D.; Kulbicki, M.; Andréfouët, S.; Bellwood, D.R.; Cinner, J.E.; Cowman, P.F.; Kronen, M.; Pinca, S.; Vigliola, L. Human-mediated loss of phylogenetic and functional diversity in coral reef fishes. Curr. Biol. 2014, 24, 555–560.

- Hughes, T.P.; Barnes, M.L.; Bellwood, D.R.; Cinner, J.E.; Cumming, G.S.; Jackson, J.B.; Kleypas, J.; Van De Leemput, I.A.; Lough, J.M.; Morrison, T.H.; et al. Coral reefs in the Anthropocene. Nature 2017, 546, 82–90.

- Jackson, J.B.; Kirby, M.X.; Berger, W.H.; Bjorndal, K.A.; Botsford, L.W.; Bourque, B.J.; Bradbury, R.H.; Cooke, R.; Erlandson, J.; Estes, J.A.; et al. Historical overfishing and the recent collapse of coastal ecosystems. Science 2001, 293, 629–637.

- Jennings, S.; Pinnegar, J.K.; Polunin, N.V.; Warr, K.J. Impacts of trawling disturbance on the trophic structure of benthic invertebrate communities. Mar. Ecol. Prog. Ser. 2001, 213, 127–142.

- Fernandes, I.; Bastos, Y.; Barreto, D.; Lourenço, L.; Penha, J. The efficacy of clove oil as an anaesthetic and in euthanasia procedure for small-sized tropical fishes. Braz. J. Biol. 2016, 77, 444–450.

- Thresher, R.E.; Gunn, J.S. Comparative analysis of visual census techniques for highly mobile, reef-associated piscivores (Carangidae). Environ. Biol. Fishes 1986, 17, 93–116.

- Ben Tamou, A.; Benzinou, A.; Nasreddine, K. Multi-stream fish detection in unconstrained underwater videos by the fusion of two convolutional neural network detectors. Appl. Intell. 2021, 51, 5809–5821.

- Huang, P.X.; Boom, B.J.; Fisher, R.B. Underwater live fish recognition using a balance-guaranteed optimized tree. In Proceedings of the Asian Conference on Computer Vision, Daejeon, Korea, 5–9 November 2012; pp. 422–433.

- Spampinato, C.; Giordano, D.; Di Salvo, R.; Chen-Burger, Y.H.J.; Fisher, R.B.; Nadarajan, G. Automatic fish classification for underwater species behavior understanding. In Proceedings of the first ACM International Workshop on Analysis and Retrieval of Tracked Events and Motion in Imagery Streams, Firenze, Italy, 29 October 2010; pp. 45–50.

- Cabrera-Gámez, J.; Castrillón-Santana, M.; Dominguez-Brito, A.; Hernández Sosa, J.D.; Isern-González, J.; Lorenzo-Navarro, J. Exploring the use of local descriptors for fish recognition in lifeclef 2015. In Proceedings of the CEUR Workshop Proceedings, Toledo, Spain, 11 September 2015.

- Szucs, G.; Papp, D.; Lovas, D. SVM classification of moving objects tracked by Kalman filter and Hungarian method. In Proceedings of the Working Notes of CLEF 2015 Conference, Toulouse, France, 8–11 September 2015.

- Hu, K.; Weng, C.; Zhang, Y.; Jin, J.; Xia, Q. An Overview of Underwater Vision Enhancement: From Traditional Methods to Recent Deep Learning. J. Mar. Sci. Eng. 2022, 10, 241.

- Edge, C.; Islam, M.J.; Morse, C.; Sattar, J. A Generative Approach for Detection-driven Underwater Image Enhancement. arXiv 2020, arXiv:2012.05990.

- Li, X.; Shang, M.; Qin, H.; Chen, L. Fast accurate fish detection and recognition of underwater images with fast r-cnn. In Proceedings of the OCEANS 2015-MTS/IEEE, Washington, DC, USA, 19–22 October 2015; pp. 1–5.

- Jalal, A.; Salman, A.; Mian, A.; Shortis, M.; Shafait, F. Fish detection and species classification in underwater environments using deep learning with temporal information. Ecol. Inform. 2020, 57, 101088.

- Zhang, D.; O’Conner, N.E.; Simpson, A.J.; Cao, C.; Little, S.; Wu, B. Coastal fisheries resource monitoring through A deep learning-based underwater video analysis. Estuar. Coast. Shelf Sci. 2022, 269, 107815.

- Jäger, J.; Rodner, E.; Denzler, J.; Wolff, V.; Fricke-Neuderth, K. SeaCLEF 2016: Object Proposal Classification for Fish Detection in Underwater Videos. In Proceedings of the CLEF (Working Notes), Évora, Portugal, 5–8 September 2016; pp. 481–489.

- Sun, X.; Shi, J.; Liu, L.; Dong, J.; Plant, C.; Wang, X.; Zhou, H. Transferring deep knowledge for object recognition in low-quality underwater videos. Neurocomputing 2018, 275, 897–908.

- Ju, Z.; Xue, Y. Fish species recognition using an improved AlexNet model. Optik 2020, 223, 165499.

- Iqbal, M.A.; Wang, Z.; Ali, Z.A.; Riaz, S. Automatic fish species classification using deep convolutional neural networks. Wirel. Pers. Commun. 2021, 116, 1043–1053.

- Villon, S.; Chaumont, M.; Subsol, G.; Villéger, S.; Claverie, T.; Mouillot, D. Coral reef fish detection and recognition in underwater videos by supervised machine learning: Comparison between Deep Learning and HOG + SVM methods. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Lecce, Italy, 24–27 October 2016; pp. 160–171.

- Murugaiyan, J.S.; Palaniappan, M.; Durairaj, T.; Muthukumar, V. Fish species recognition using transfer learning techniques. Int. J. Adv. Intell. Inform. 2021, 7, 188–197.

- Mathur, M.; Vasudev, D.; Sahoo, S.; Jain, D.; Goel, N. Crosspooled FishNet: Transfer learning based fish species classification model. Multimed. Tools Appl. 2020, 79, 31625–31643.

- Mathur, M.; Goel, N. FishResNet: Automatic Fish Classification Approach in Underwater Scenario. SN Comput. Sci. 2021, 2, 273.

- Zhang, Z.; Du, X.; Jin, L.; Wang, S.; Wang, L.; Liu, X. Large-scale underwater fish recognition via deep adversarial learning. Knowl. Inf. Syst. 2022, 64, 353–379.

- Qin, H.; Li, X.; Yang, Z.; Shang, M. When underwater imagery analysis meets deep learning: A solution at the age of big visual data. In Proceedings of the OCEANS 2015-MTS/IEEE, Washington, DC, USA, 19–22 October 2015; pp. 1–5.

- Salman, A.; Jalal, A.; Shafait, F.; Mian, A.; Shortis, M.; Seager, J.; Harvey, E. Fish species classification in unconstrained underwater environments based on deep learning. Limnol. Oceanogr. Methods 2016, 14, 570–585.

- Paraschiv, M.; Padrino, R.; Casari, P.; Bigal, E.; Scheinin, A.; Tchernov, D.; Fernández Anta, A. Classification of Underwater Fish Images and Videos via Very Small Convolutional Neural Networks. J. Mar. Sci. Eng. 2022, 10, 736.

- Qin, H.; Li, X.; Liang, J.; Peng, Y.; Zhang, C. DeepFish: Accurate underwater live fish recognition with a deep architecture. Neurocomputing 2016, 187, 49–58.

- Sun, X.; Shi, J.; Dong, J.; Wang, X. Fish recognition from low-resolution underwater images. In Proceedings of the 2016 9th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Datong, China, 15–17 October 2016; pp. 471–476.

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish detection and species recognition from low-quality underwater videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Dhar, P.; Guha, S. Fish Image Classification by XgBoost Based on Gist and GLCM Features. Int. J. Inf. Technol. Comput. Sci. 2021, 4, 17–23.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Grauman, K.; Darrell, T. The pyramid match kernel: Discriminative classification with sets of image features. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1–2, pp. 1458–1465.

- Chan, T.H.; Jia, K.; Gao, S.; Lu, J.; Zeng, Z.; Ma, Y. PCANet: A simple deep learning baseline for image classification? IEEE Trans. Image Process. 2015, 24, 5017–5032.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. Available online: https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf (accessed on 7 July 2022).

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Pang, J.; Liu, W.; Liu, B.; Tao, D.; Zhang, K.; Lu, X. Interference Distillation for Underwater Fish Recognition. In Proceedings of the Asian Conference on Pattern Recognition, Macau SAR, China, 4–8 December 2022; pp. 62–74.

- Cheng, L.; He, C. Fish Recognition Based on Deep Residual Shrinkage Network. In Proceedings of the 2021 4th International Conference on Robotics, Control and Automation Engineering (RCAE), Wuhan, China, 4–6 November 2021; pp. 36–39.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Knausgård, K.M.; Wiklund, A.; Sørdalen, T.K.; Halvorsen, K.T.; Kleiven, A.R.; Jiao, L.; Goodwin, M. Temperate fish detection and classification: A deep learning based approach. Appl. Intell. 2022, 52, 6988–7001.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Olsvik, E.; Trinh, C.; Knausgård, K.M.; Wiklund, A.; Sørdalen, T.K.; Kleiven, A.R.; Jiao, L.; Goodwin, M. Biometric fish classification of temperate species using convolutional neural network with squeeze-and-excitation. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Graz, Austria, 9–11 July 2019; pp. 89–101.

- Mittal, S.; Srivastava, S.; Jayanth, J.P. A Survey of Deep Learning Techniques for Underwater Image Classification. Available online: https://www.researchgate.net/profile/Sparsh-Mittal-2/publication/357826927_A_Survey_of_Deep_Learning_Techniques_for_Underwater_Image_Classification/links/61e145aec5e310337591ec08/A-Survey-of-Deep-Learning-Techniques-for-Underwater-Image-Classification.pdf (accessed on 7 July 2022).

- Saleh, A.; Sheaves, M.; Rahimi Azghadi, M. Computer vision and deep learning for fish classification in underwater habitats: A survey. Fish Fish. 2022, 23, 977–999.

- Shmelkov, K.; Schmid, C.; Alahari, K. Incremental learning of object detectors without catastrophic forgetting. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3400–3409.

- Xiao, T.; Zhang, J.; Yang, K.; Peng, Y.; Zhang, Z. Error-driven incremental learning in deep convolutional neural network for large-scale image classification. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 177–186.

- Polikar, R.; Upda, L.; Upda, S.S.; Honavar, V. Learn++: An incremental learning algorithm for supervised neural networks. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2001, 31, 497–508.

- Rusu, A.A.; Rabinowitz, N.C.; Desjardins, G.; Soyer, H.; Kirkpatrick, J.; Kavukcuoglu, K.; Pascanu, R.; Hadsell, R. Progressive neural networks. arXiv 2016, arXiv:1606.04671.

- Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

- Li, Z.; Hoiem, D. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2935–2947.

- Hayes, T.L.; Cahill, N.D.; Kanan, C. Memory efficient experience replay for streaming learning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, Canada, 20–24 May 2019; pp. 9769–9776.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Boom, B.J.; Huang, P.X.; He, J.; Fisher, R.B. Supporting ground-truth annotation of image datasets using clustering. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1542–1545.

| ID | Species | Training Set Size | Test Set Size |
|---|---|---|---|
| AV | Abudefduf vaigiensis | 436 | 94 |
| AN | Acanthurus nigrofuscus | 2805 | 129 |
| AC | Amphiprion clarkii | 3346 | 553 |
| CL | Chaetodon lunulatus | 3711 | 1876 |
| CS | Chaetodon speculum | 162 | 0 |
| CT | Chaetodon trifascialis | 681 | 1319 |
| CC | Chromis chrysura | 3858 | 24 |
| DA | Dascyllus aruanus | 1777 | 2013 |
| DR | Dascyllus reticulatus | 6333 | 4898 |
| HM | Hemigymnus melapterus | 356 | 0 |
| MK | Myripristis kuntee | 3246 | 118 |
| NN | Neoglyphidodon nigroris | 114 | 1643 |
| PV | Pempheris vanicolensis | 1048 | 0 |
| PD | Plectroglyphidodon dickii | 2944 | 676 |
| ZS | Zebrasoma scopas | 343 | 187 |
| Total | | 31,260 | 13,530 |

| Optimizer | Accuracy |
|---|---|
| RMSprop | 71.79% |
| Adamax | 79.08% |
| SGD | 79.16% |
| Adam | 80.06% |

| Approach | Accuracy |
|---|---|
| SURF-SVM [15] | 51% |
| FishResNet [28] | 54.24% |
| CNN-SVM [21] | 66% |
| NIN-SVM [34] | 69.84% |
| Modified AlexNet [24] | 72.25% |
| Yolov3 [19] | 72.63% |
| AdvFish [29] | 74.54% |
| ResNet50 (non-incremental learning) | 76.90% |
| PCANET-SVM [34] | 77.27% |
| ResNet50 (proposed incremental learning) | 81.83% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ben Tamou, A.; Benzinou, A.; Nasreddine, K. Live Fish Species Classification in Underwater Images by Using Convolutional Neural Networks Based on Incremental Learning with Knowledge Distillation Loss. Mach. Learn. Knowl. Extr. 2022, 4, 753-767. https://doi.org/10.3390/make4030036
