Abstract

Differential equations (DEs) are used as mathematical models to describe physical phenomena throughout engineering and science, including heat and fluid flow, structural bending, and systems dynamics. While there are many techniques for finding approximate solutions to these equations, this paper compares the application of the Theory of Functional Connections (TFC) with one based on least-squares support vector machines (LS-SVM). The TFC method uses a constrained expression, an expression that always satisfies the DE constraints, which transforms the process of solving a DE into solving an unconstrained optimization problem that is ultimately solved via least-squares (LS). In addition to individual analysis, the two methods are merged into a new methodology, called constrained SVMs (CSVM), by incorporating the LS-SVM method into the TFC framework to solve unconstrained problems. Numerical tests are conducted on four sample problems: one first order linear ordinary differential equation (ODE), one first order nonlinear ODE, one second order linear ODE, and one two-dimensional linear partial differential equation (PDE). Using the LS-SVM method as a benchmark, a speed comparison is made for all the problems by timing the training period, and an accuracy comparison is made using the maximum error and mean squared error on the training and test sets. In general, TFC is shown to be slightly faster (by an order of magnitude or less) and more accurate (by multiple orders of magnitude) than the LS-SVM and CSVM approaches.

1. Introduction

Differential equations (DE) and their solutions are important topics in science and engineering. The solutions drive the design of predictive systems models and optimization tools. Currently, these equations are solved by a variety of existing approaches with the most popular based on the Runge-Kutta family [1]. Other methods include those which leverage low-order Taylor expansions, namely Gauss-Jackson [2] and Chebyshev-Picard iteration [3,4,5], which have proven to be highly effective. More recently developed techniques are based on spectral collocation methods [6]. This approach discretizes the domain about collocation points, and the solution of the DE is expressed by a sum of “basis” functions with unknown coefficients that are approximated in order to satisfy the DE as closely as possible. Yet, in order to incorporate boundary conditions, one or more equations must be added to enforce the constraints.

The Theory of Functional Connections (TFC) is a new technique that analytically derives a constrained expression which satisfies the problem’s constraints exactly while maintaining a function that can be freely chosen [7]. This theory, initially called “Theory of Connections”, has been renamed for two reasons. First, the “Theory of Connections” already identifies a specific theory in differential geometry, and second, what this theory is actually doing is “functional interpolation”, as it provides all functions satisfying a set of constraints in terms of a function and any derivative in rectangular domains of n-dimensional spaces. This process transforms the DE into an unconstrained optimization problem where the free function is used to search for the solution of the DE. Prior studies [8,9,10,11] have defined this free function as a summation of basis functions; more specifically, orthogonal polynomials.

This work was motivated by recent results that solve ordinary DEs using a least-squares support vector machine (LS-SVM) approach [12]. While this article focuses on the application of LS-SVMs to solve DEs, the study and use of LS-SVMs remains relevant in many areas. In reference [13] the authors use the support vector machines to predict the risk of mold growth on concrete tiles. The mold growth on roofs affects the dynamics of heat and moisture through buildings. The approach leads to reduced computational effort and simulation time. The work presented in reference [14] uses LS-SVMs to predict annual runoff in the context of water resource management. The modeling process starts with building a stationary set of runoff data based on mode functions which are used as input points in the prediction by the SVM technique when chaotic characteristics are present. Furthermore, reference [15] uses the technique of LS-SVMs as a less costly computational alternative that provides superior accuracy compared to other machine learning techniques in the civil engineering problem of predicting the stability of breakwaters. The LS-SVM framework was applied to tool fault diagnosis for ensuring manufacturing quality [16]. In this work, a fault diagnosis method was proposed based on stationary subspace analysis (SSA) used to generate input data used for training with LS-SVMs.

In this article, LS-SVMs are incorporated into the TFC framework as the free function, and the combination of these two methods is used to solve DEs. Hence, the contributions of this article are twofold: (1) this article demonstrates how boundary conditions can be analytically embedded, via TFC, into machine learning algorithms and (2) this article compares using an LS-SVM as the free function in TFC with the standard linear combination of Chebyshev polynomials (CP). Like vanilla TFC, the SVM model for function estimation [17] also uses a linear combination of functions that depend on the input data points. While in the first uses of SVMs the prediction for an output value was made based on a linear combination of the inputs, a later technique uses a mapping of the inputs to feature space, and the SVM model becomes a linear combination of feature functions. Further, with the kernel trick, the function to be evaluated is determined based on a linear combination of kernel functions; Gaussian kernels are a popular choice, and are used in this article.

This article compares the combined method, referred to hereafter as CSVM for constrained LS-SVMs, to vanilla versions of TFC [8,9] and LS-SVM [12] over a variety of DEs. In all cases, the vanilla version of TFC outperforms both the LS-SVM and the CSVM methods in terms of accuracy and speed. The CSVM method does not provide much accuracy or speed benefit over LS-SVM, except in the PDE problem, and in some cases has a less accurate or slower solution. However, in every case the CSVM satisfies the boundary conditions of the problem exactly, whereas the vanilla LS-SVM method solves the boundary condition with the same accuracy as the remainder of the data points in the problem. Thus, this article provides support that in the application of solving DEs, CP are a better choice for the TFC free function than LS-SVMs.

While the CSVM method underperforms vanilla TFC when solving DEs, its implementation and numerical verification in this article still provides an important contribution to the scientific community. CSVM demonstrates that the TFC framework provides a robust way to analytically embed constraints into machine learning algorithms; an important problem in machine learning. This technique can be extended to any machine learning algorithm, for example deep neural networks. Previous techniques have enforced constraints in deep neural networks by creating parallel structures, such as radial basis networks [18], adding the constraints to the loss function to be minimized [19], or by modifying the optimization process to include the constraints [20]. However, all of these techniques significantly modify the deep neural network architecture or the training process. In contrast, embedding the constraints with TFC does not require this. Instead, TFC provides a way to analytically embed these constraints into the deep neural network. In fact, any machine learning algorithm that is differentiable up to the order of the DE can be seamlessly incorporated into TFC. Future work will leverage this benefit to analyze the ability to solve DEs using other machine learning algorithms.

2. Background on the Theory of Functional Connections

The Theory of Functional Connections (TFC) is a generalized interpolation method, which provides a mathematical framework to analytically embed constraints. The univariate approach [7] to derive the expression for all functions satisfying k linear constraints follows,

$$y(t) = g(t) + \sum_{i=1}^{k} \eta_i \, p_i(t), \tag{1}$$

where $g(t)$ represents a “freely chosen” function, $\eta_i$ are the coefficients derived from the k linear constraints, and $p_i(t)$ are user selected functions that must be linearly independent from $g(t)$. Recent research has applied this technique to embedding DE constraints using Equation (1), allowing for least-squares (LS) solutions of initial-value (IVP), boundary-value (BVP), and multi-value (MVP) problems on both linear [8] and nonlinear [9] ordinary differential equations (ODEs). In general, this approach has developed a fast, accurate, and robust unified framework to solve DEs. The application of this theory can be explored for a second-order DE such that,

$$F(t, y, \dot{y}, \ddot{y}) = 0 \quad \text{subject to:} \quad y(t_0) = y_0, \quad \dot{y}(t_0) = \dot{y}_0. \tag{2}$$

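By using Equation (1) and selecting $p_1(t) = 1$ and $p_2(t) = t$, the constrained expression becomes,

$$y(t) = g(t) + \eta_1 + \eta_2 \, t. \tag{3}$$

By evaluating this function and its derivative at the two constraint conditions a system of equations is formed in terms of $\eta_1$ and $\eta_2$,

$$\begin{Bmatrix} y_0 - g_0 \\ \dot{y}_0 - \dot{g}_0 \end{Bmatrix} = \begin{bmatrix} 1 & t_0 \\ 0 & 1 \end{bmatrix} \begin{Bmatrix} \eta_1 \\ \eta_2 \end{Bmatrix},$$

where $g_0 = g(t_0)$ and $\dot{g}_0 = \dot{g}(t_0)$, which can be solved for $\eta_1$ and $\eta_2$ by matrix inversion leading to,

$$\eta_1 = (y_0 - g_0) - t_0 \, (\dot{y}_0 - \dot{g}_0), \qquad \eta_2 = \dot{y}_0 - \dot{g}_0.$$

These terms are substituted in Equation (3) and the final constrained expression is realized,

$$y(t) = g(t) + (y_0 - g_0) + (t - t_0)(\dot{y}_0 - \dot{g}_0).$$

By observation, it can be seen that the function for $y(t)$ always satisfies the initial value constraints regardless of the function $g(t)$. Substituting this function into the original DE specified by Equation (2) transforms the problem into a new DE with no constraints,

$$\tilde{F}(t, g, \dot{g}, \ddot{g}) = 0. \tag{4}$$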
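The claim that the constraints hold for any free function can be checked numerically. The following is a minimal sketch, assuming the support functions $p_1(t) = 1$ and $p_2(t) = t$, for which the constrained expression reduces to $y(t) = g(t) + (y_0 - g_0) + (t - t_0)(\dot{y}_0 - \dot{g}_0)$. The helper name `constrained_expression` and the sample values are hypothetical and chosen only for illustration.

```python
import math

def constrained_expression(g, dg, t0, y0, dy0):
    """Build y(t) and y'(t) from a free function g and its derivative dg.

    Assumes p1(t) = 1 and p2(t) = t, so that
    y(t) = g(t) + (y0 - g(t0)) + (t - t0) * (dy0 - dg(t0)).
    """
    g0, dg0 = g(t0), dg(t0)

    def y(t):
        return g(t) + (y0 - g0) + (t - t0) * (dy0 - dg0)

    def dy(t):
        return dg(t) + (dy0 - dg0)

    return y, dy

# Hypothetical constraint values: y(0.5) = 2 and y'(0.5) = -1.
t0, y0, dy0 = 0.5, 2.0, -1.0

# Three unrelated free functions, each paired with its analytical derivative.
free_functions = [
    (math.sin, math.cos),
    (math.exp, math.exp),
    (lambda t: t**3, lambda t: 3.0 * t**2),
]

constraint_errors = []
for g, dg in free_functions:
    y, dy = constrained_expression(g, dg, t0, y0, dy0)
    constraint_errors.append((abs(y(t0) - y0), abs(dy(t0) - dy0)))

print(constraint_errors)
```

Each pair of errors is zero up to floating-point rounding, whichever free function is used, which is the property the least-squares step relies on.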

Aside from the independent variable t, this equation is only a function of the unknown function $g(t)$. By discretizing the domain and expressing $g(t)$ as some universal function approximator, the problem can be posed as an unconstrained optimization problem where the loss function is defined by the residuals of the DE. Initial applications of the TFC method to solve DEs [8,9] expanded $g(t)$ in some basis (namely Chebyshev or Legendre orthogonal polynomials); however, the incorporation of a machine learning framework into this free function has yet to be explored. This is discussed in the following sections. The original formulation expressed $g(t)$ as,

$$g(t) = \xi^T h(x),$$

where $\xi$ is an unknown vector of m coefficients and $h(x)$ is the vector of m basis functions. In general the independent variable is $t \in [t_0, t_f]$ while the orthogonal polynomials are defined in $x \in [-1, +1]$. This gives the linear mapping between x and t,

$$x = x_0 + \frac{x_f - x_0}{t_f - t_0}(t - t_0) \quad \longleftrightarrow \quad t = t_0 + \frac{t_f - t_0}{x_f - x_0}(x - x_0),$$

with $x_0 = -1$ and $x_f = +1$. Using this mapping, the derivative of the free function becomes,

$$\frac{dg}{dt} = \xi^T \frac{dh}{dx} \cdot \frac{dx}{dt},$$

where it can be seen that the term $dx/dt$ is a constant such that,

$$c := \frac{dx}{dt} = \frac{x_f - x_0}{t_f - t_0}.$$

Using this definition, it follows that all subsequent derivatives are,

$$\frac{d^j g}{dt^j} = c^j \, \xi^T \frac{d^j h}{dx^j}.$$

Lastly, the DE given by Equation (4) is discretized over a set of N + 1 values of t (and inherently x). When using orthogonal polynomials, the optimal point distribution (in terms of numerical efficiency) is provided by collocation points [21,22], defined as,

$$x_i = -\cos\left(\frac{i \pi}{N}\right) \quad \text{for} \quad i = 0, 1, \ldots, N.$$

By discretizing the domain, Equation (4) becomes a function solely of the unknown parameter $\xi$,

$$\tilde{F}(\xi) = 0, \tag{5}$$

which can be solved using a variety of optimization schemes. If the original DE is linear then the new DE defined by Equation (5) is also linear. In this case, Equation (5) is a linear system,

$$A \, \xi = b,$$

which can be solved using LS [8]. If the DE is nonlinear, a nonlinear LS approach is needed, which requires an initial guess for $\xi$. This initial guess can be obtained by an LS fit of a lower order integrator solution, such as one provided by a simple improved Euler method. By defining the residuals of the DE as the loss function $\mathcal{L}(\xi)$, with Jacobian $J$ with respect to $\xi$, the nonlinear Newton iteration is,

$$\xi_{k+1} = \xi_k - \left(J^T J\right)^{-1} J^T \mathcal{L}(\xi_k),$$

where k is the iteration and $J$ is evaluated at $\xi_k$. Convergence is obtained when the $L_2$-norm of $\mathcal{L}$ satisfies $\|\mathcal{L}(\xi_k)\|_2 < \varepsilon$, where $\varepsilon$ is a specified convergence tolerance. The final value of $\xi$ is then used in the constrained expression to provide an approximated analytical solution that perfectly satisfies the constraints. Since the function is analytical, the solution can then be used for further manipulation (e.g., differentiation, integration, etc.). The process to solve PDEs is similar, with the major difference involving the derivative of the constrained expression. The TFC extension to n-dimensions and a detailed explanation of the derivation of these constrained expressions are provided in references [23,24]. Additionally, the free function also becomes multivariate, increasing the complexity when using CP.
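The linear case described above can be sketched end to end in a few lines. The example below is an illustration under stated assumptions, not the authors' MATLAB implementation: it uses a hypothetical test problem, $\dot{y} + y = 0$ with $y(0) = 1$ on $[0, 1]$ (not one of the four problems in this paper), a one-point constrained expression $y = g + (y_0 - g(t_0))$ with $g = \xi^T h(x)$, Chebyshev polynomials from NumPy, and collocation points $x_i = -\cos(i\pi/N)$. The constant polynomial $T_0$ is dropped because it is not linearly independent from the support function $p_1 = 1$.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def cheb_basis(x, m):
    """Chebyshev polynomials T_1..T_m and their x-derivatives at the points x."""
    H = C.chebvander(x, m)[:, 1:]  # drop T_0, which duplicates p_1 = 1
    dH = np.column_stack([
        C.chebval(x, C.chebder(np.eye(m + 1)[k])) for k in range(1, m + 1)
    ])
    return H, dH

def tfc_linear_ivp(t0, tf, y0, m=12, N=30):
    """Least-squares TFC solve of y' + y = 0 with y(t0) = y0 on [t0, tf]."""
    x = -np.cos(np.arange(N + 1) * np.pi / N)  # collocation points in [-1, 1]
    c = 2.0 / (tf - t0)                        # c = dx/dt
    t = t0 + (x + 1.0) / c                     # map x back to t
    H, dH = cheb_basis(x, m)
    H0, _ = cheb_basis(np.array([-1.0]), m)    # basis evaluated at t0 (x = -1)
    # Constrained expression: y = xi^T (h(x) - h(x0)) + y0, y' = c * xi^T h'(x).
    # Residual of y' + y = 0 is linear in xi: A xi = b.
    A = c * dH + (H - H0)
    b = -y0 * np.ones(N + 1)
    xi, *_ = np.linalg.lstsq(A, b, rcond=None)
    y = (H - H0) @ xi + y0
    return t, y

t, y = tfc_linear_ivp(0.0, 1.0, 1.0)
max_err = np.max(np.abs(y - np.exp(-t)))
print("initial value:", y[0], "max error:", max_err)
```

The initial condition is reproduced exactly because the term multiplying $\xi$ vanishes at $t_0$, while the accuracy elsewhere depends on the number of basis functions m and collocation points N, both of which are tuning choices here.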

3. The Support Vector Machine Technique

3.1. An Overview of SVMs

Support vector machines (SVMs) were originally introduced to solve classification problems [17]. A classification problem consists of determining if a given input, x, belongs to one of two possible classes. The proposed solution was to find a decision boundary surface that separates the two classes. The equation of the separating boundary depended only on a few input vectors called the support vectors.

The training data is assumed to be separable by a linear decision boundary. Hence, a separating hyperplane, H, with equation $w^T x + b = 0$, is sought. The parameters are rescaled such that the closest training point to the hyperplane H, say $x_i$, is on a parallel hyperplane $H_1$ with equation $w^T x + b = 1$. By using the formula for orthogonal projection, if $x$ satisfies the equation of one of the hyperplanes, then the signed distance from the origin of the space to the corresponding hyperplane is given by $w^T x / \|w\|$. Since $w^T x$ equals $-b$ for H, and $1 - b$ for $H_1$, it follows that the distance between the two hyperplanes, called the “separating margin”, is $1/\|w\|$. Thus to find the largest separating margin, one needs to minimize $\|w\|$. With class labels $y_i \in \{-1, +1\}$, the optimization problem becomes,

$$\min_{w, b} \; \frac{1}{2} w^T w \quad \text{subject to:} \quad y_i \left(w^T x_i + b\right) \geq 1, \quad i = 1, \ldots, N.$$

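If a separating hyperplane does not exist, the problem is reformulated by taking into account the classification errors, or slack variables, $\xi_i$, and a linear or quadratic expression in these variables is added to the cost function. The optimization problem in the non-separable case is,

$$\min_{w, b, \xi} \; \frac{1}{2} w^T w + C \sum_{i=1}^{N} \xi_i^{\,p} \quad \text{subject to:} \quad y_i \left(w^T x_i + b\right) \geq 1 - \xi_i, \quad \xi_i \geq 0,$$

where $p = 1$ gives the linear penalty, $p = 2$ gives the quadratic penalty, and $C > 0$ weights the penalty against the margin term.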

When solving the optimization problem by using Lagrange multipliers, the feature map $\varphi$ always shows up as a dot product with itself; thus, the kernel trick [25] can be applied. In this research, the kernel function chosen is the radial basis function (RBF) kernel proposed in [12]. Hence, the inner product can be written using the kernel [25],

$$K(x_i, x_j) = \varphi(x_i)^T \varphi(x_j) = \exp\left(-\frac{(x_i - x_j)^2}{\sigma^2}\right), \tag{6}$$

and its partial derivatives [12,26],

where the kernel bandwidth, $\sigma$, is a tuning parameter that must be chosen by the user.
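As an illustration of the kernel and one of its partial derivatives, the sketch below assumes the RBF form $K(u, v) = \exp(-(u - v)^2/\sigma^2)$ and checks the analytical derivative with respect to the first argument against a central finite difference. The function names and the sample grid are hypothetical.

```python
import numpy as np

def rbf_kernel(u, v, sigma):
    """Kernel matrix K[i, j] = exp(-(u_i - v_j)^2 / sigma^2)."""
    d = np.subtract.outer(u, v)
    return np.exp(-d**2 / sigma**2)

def rbf_kernel_du(u, v, sigma):
    """Partial derivative of the kernel with respect to its first argument."""
    d = np.subtract.outer(u, v)
    return -2.0 * d / sigma**2 * np.exp(-d**2 / sigma**2)

t = np.linspace(0.0, 1.0, 5)  # hypothetical grid points
sigma = 0.7                   # hypothetical kernel bandwidth

K = rbf_kernel(t, t, sigma)
dK = rbf_kernel_du(t, t, sigma)

# Central finite difference in the first argument as an independent check.
h = 1e-6
dK_fd = (rbf_kernel(t + h, t, sigma) - rbf_kernel(t - h, t, sigma)) / (2.0 * h)
fd_gap = np.max(np.abs(dK - dK_fd))
print("largest gap between analytical and finite-difference derivative:", fd_gap)
```

A smaller bandwidth makes the kernel matrix closer to the identity, and a larger one makes its rows nearly identical, which is one reason the bandwidth has to be tuned per problem.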

We follow the method of solving DEs using RBF kernels proposed in [12]. As an example, we take a first order linear initial value problem,

to be solved on the interval $[t_0, t_f]$. The domain is partitioned into N sub-intervals using grid points $t_0, t_1, \ldots, t_N = t_f$, which from a machine learning perspective represent the training points. The model,

$$\hat{y}(t) = \sum_{i=1}^{N} w_i \varphi_i(t) + b = w^T \varphi(t) + b, \tag{8}$$

is proposed for the solution $\hat{y}(t)$. Note that the number of coefficients $w_i$ equals the number of grid points $t_i$, and thus the system of equations used to solve for the coefficients has a square matrix. Let $e$ be the vector of residuals obtained when using the model solution $\hat{y}(t)$ in the DE, that is, $e_i$ is the amount by which $\hat{y}(t_i)$ fails to satisfy the DE,

This results in,

and for the initial condition, it is desired that,

is satisfied exactly. In order to have the model close to the exact solution, the sum of the squares of the residuals, $e^T e$, is to be minimized. This expression can be viewed as a regularization term added to the objective of maximizing the margin between separating hyperplanes. The problem is formulated as an optimization problem with constraints,

Using the method of Lagrange multipliers, a loss function is defined using the objective function from the optimization problem and appending the constraints with their corresponding Lagrange multipliers.

The values where the gradient of the loss function is zero give candidates for the minimum.

Note that the conditions found by differentiating with respect to the Lagrange multipliers are simply the constraint conditions, while the remaining conditions are the standard Lagrange multiplier conditions that the gradient of the function to be minimized is a linear combination of the gradients of the constraints. Using,

we obtain a new formulation of the approximate solution

where the inner products of the feature map $\varphi$ can be re-written using Equations (6) and (7), and the kernel bandwidth in the kernel matrix is a value that is learned during the training period together with the coefficients of the model. The remaining gradients of the loss function can be used to form a linear system of equations in which the Lagrange multipliers and b are the only unknowns. Note that this system of equations can also be expressed using the kernel matrix and its partial derivatives rather than inner products of $\varphi$.

3.2. Constrained SVM (CSVM) Technique

In the TFC method [7], the general constrained expression can be written for an initial value constraint, $y(t_0) = y_0$, as,

$$y(t) = g(t) + \left(y_0 - g(t_0)\right),$$

where $g(t)$ is a “freely chosen” function. In prior studies [8,9,11], this free function was defined by a set of orthogonal basis functions, but this function can also be defined using SVMs,

$$g(t) = w^T \varphi(t),$$

where $g(t_0)$ becomes,

$$g(t_0) = w^T \varphi(t_0).$$

This leads to the equation,

$$\hat{y}(t) = w^T \left(\varphi(t) - \varphi(t_0)\right) + y_0, \tag{9}$$

where the initial value constraint is always satisfied regardless of the values of the weights and the feature map. Through this process, the constraints only remain on the residuals and the problem becomes,

Again, using the method of Lagrange multipliers, a term is introduced for the constraint on the residuals, leading to the expression,

The values where the gradient of the loss function is zero give candidates for the minimum,

Using,

we obtain a new formulation of the approximate solution given by Equation (9), that can be expressed in terms of the kernel and its derivatives. Combining the three equations for the gradients of the loss function, we can obtain a linear system in which the Lagrange multipliers are the only unknowns,

The coefficient matrix is given by,

where we use the notation,

Finally, in terms of the kernel matrix, the approximate solution at the grid points is given by,

and a formula for the approximate solution at an arbitrary point t is given by,
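To make the dual formulation concrete, the following is a minimal sketch of a CSVM-style solve for a linear first-order IVP written as $\dot{y} - f(t)\,y = r(t)$, $y(t_0) = y_0$. Several things here are assumptions rather than the authors' implementation: the bias-free model $\hat{y}(t) = w^T(\varphi(t) - \varphi(t_0)) + y_0$, the RBF kernel $\exp(-(u - v)^2/\sigma^2)$, and the dual system $(\Psi + I/\gamma)\,\alpha = r + f y_0$, which follows from setting the gradient of the Lagrangian to zero under those assumptions. The names `rbf` and `csvm_solve`, the test problem $\dot{y} + y = 0$, and the parameter values are hypothetical.

```python
import numpy as np

def rbf(u, v, sigma):
    """Gaussian kernel K(u, v) and the derivatives dK/du, dK/dv, d2K/(du dv)."""
    d = u[:, None] - v[None, :]
    K = np.exp(-d**2 / sigma**2)
    K10 = -2.0 * d / sigma**2 * K                        # dK/du
    K01 = 2.0 * d / sigma**2 * K                         # dK/dv
    K11 = (2.0 / sigma**2 - 4.0 * d**2 / sigma**4) * K   # d2K/(du dv)
    return K, K10, K01, K11

def csvm_solve(f, r, t0, y0, t_train, sigma, gamma):
    """Return y_hat(t) for y' - f(t) y = r(t), y(t0) = y0 (assumed dual form)."""
    ti = np.asarray(t_train, dtype=float)
    a = np.array([t0], dtype=float)
    fi, ri = f(ti), r(ti)
    K, K10, K01, K11 = rbf(ti, ti, sigma)
    Ka, K10a, _, _ = rbf(ti, a, sigma)   # K(t_i, t0) and dK/du(t_i, t0)
    Kb, _, K01b, _ = rbf(a, ti, sigma)   # K(t0, t_j) and dK/dv(t0, t_j)
    Kaa = 1.0                            # K(t0, t0)
    # Gram matrix of psi_i = phi'(t_i) - f_i * (phi(t_i) - phi(t0)).
    Psi = (K11
           - fi[None, :] * (K10 - K10a)
           - fi[:, None] * (K01 - K01b)
           + fi[:, None] * fi[None, :] * (K - Ka - Kb + Kaa))
    alpha = np.linalg.solve(Psi + np.eye(len(ti)) / gamma, ri + fi * y0)

    def y_hat(t):
        t = np.atleast_1d(np.asarray(t, dtype=float))
        Kt, K10t, _, _ = rbf(ti, t, sigma)
        Kat = rbf(a, t, sigma)[0]
        # psi_i^T (phi(t) - phi(t0)); every term cancels at t = t0.
        basis = (K10t - K10a) - fi[:, None] * (Kt - Ka - Kat + Kaa)
        return alpha @ basis + y0

    return y_hat

# Hypothetical test problem: y' + y = 0, y(0) = 1, so f = -1 and r = 0.
y_hat = csvm_solve(lambda t: -np.ones_like(t), lambda t: np.zeros_like(t),
                   t0=0.0, y0=1.0, t_train=np.linspace(0.0, 1.0, 21)[1:],
                   sigma=1.0, gamma=1e10)
t_test = np.linspace(0.0, 1.0, 101)
max_err = np.max(np.abs(y_hat(t_test) - np.exp(-t_test)))
print("y_hat(t0):", y_hat(0.0)[0], "max test error:", max_err)
```

Because every kernel term multiplying the Lagrange multipliers cancels at $t = t_0$, the initial condition is returned exactly, independent of how well the linear system is conditioned or how the bandwidth and regularization parameter are chosen.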

3.3. Nonlinear ODEs

The method for solving nonlinear, first-order ODEs with LS-SVM comes from reference [12]. Nonlinear, first-order ODEs with initial value boundary conditions can be written generally using the form,

The solution form is again the one given in Equation (8) and the domain is again discretized into N sub-intervals, $t_0, t_1, \ldots, t_N$ (training points). Let $e_i$ be the residuals for the solution $\hat{y}(t_i)$,

To minimize the error, the sum of the squares of the residuals is minimized. As in the linear case, the regularization term is added to the expression to be minimized. Now, the problem can be formulated as an optimization problem with constraints,

Auxiliary variables are introduced into the optimization problem to keep track of the nonlinear function f at the values corresponding to the grid points. The method of Lagrange multipliers is used for this optimization problem just as in the linear case. This leads to a system of equations that can be solved using a multivariate Newton’s method. As with the linear ODE case, the set of equations to be solved and the dual form of the model solution can be written in terms of the kernel matrix and its derivatives.

The solution for nonlinear ODEs when using the CSVM technique is found in a similar manner, but the primal form of the solution is based on the constraint function from TFC. Just as the linear ODE case changes to encompass this new primal form, so does the nonlinear case. A complete derivation for nonlinear ODEs using LS-SVM and CSVM is provided in Appendix B.

3.4. Linear PDEs

The steps for solving linear PDEs using LS-SVM are the same as when solving linear ODEs, and are shown in detail in reference [27]. The first step is to write out the optimization problem to be solved. The second is to solve that optimization problem using the Lagrange multipliers technique. The third is to write the resultant set of equations and dual-form of the solution in terms of the kernel matrix and its derivatives.

Solving linear PDEs using the CSVM technique follows the same solution steps except the primal form of the solution is derived from a TFC constrained expression. A complete derivation for the PDE shown in problem #4 of the numerical results section using CSVM is provided in Appendix C. The main difficulty in this derivation stems from the number of times the feature map appears in the TFC constrained expression. As a result, the set of equations produced by taking gradients of the loss function contains hundreds of kernel matrices and their derivatives. The only way to make this practical (in terms of the derivation and programming the result) was to write the constrained expression in tensor form. This was reasonable to perform for the simple linear PDE used in this paper, but would become prohibitively complicated for higher dimensional PDEs. Consequently, future work will investigate using other machine learning algorithms, such as neural networks, as the free function in the TFC framework.

4. Numerical Results

This section compares the methodologies described in the previous sections on four problems given in references [12] and [27]. Problem #1 is a first order linear ODE, problem #2 is a first order nonlinear ODE, problem #3 is a second order linear ODE, and problem #4 is a second order linear PDE. All problems were solved in MATLAB R2018b (MathWorks, Natick, MA, USA) on a Windows 10 operating system running on an Intel® Core™ i7-7700 CPU at 3.60 GHz with 16.0 GB of RAM. Since all test problems have analytical solutions, absolute error and mean-squared error (MSE) were used to quantify the error of the methods. MSE is defined as,

$$\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2,$$

where n is the number of points, $y_i$ is the true value of the solution, and $\hat{y}_i$ is the estimated value of the solution at the i-th point.
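The two error measures used in the comparison can be written compactly. The sketch below uses hypothetical function names and made-up sample values purely to show the arithmetic.

```python
def mse(y_true, y_est):
    """Mean squared error: (1/n) * sum of (y_i - yhat_i)^2."""
    n = len(y_true)
    return sum((yt - ye) ** 2 for yt, ye in zip(y_true, y_est)) / n

def max_abs_error(y_true, y_est):
    """Largest absolute error over all points."""
    return max(abs(yt - ye) for yt, ye in zip(y_true, y_est))

# Hypothetical values, chosen only to illustrate the two measures.
y_true = [1.0, 2.0, 3.0, 4.0]
y_est = [1.0, 2.0, 3.0, 6.0]

sample_mse = mse(y_true, y_est)
sample_max = max_abs_error(y_true, y_est)
print(sample_mse, sample_max)
```

A single large error dominates the maximum error but is averaged down in the MSE, which is why both measures are reported on the training and test sets.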

The tabulated results from this comparison are included in Appendix A. A graphical illustration and summary of those tabulated values is included in the subsections that follow, along with a short description of each problem. These tabulated results also include the tuning parameters for each of the methods. For TFC, the number of basis functions, m, was found using a grid search method, where the residual of the differential equation was used to choose the best value of m. For LS-SVM and CSVM, the kernel bandwidth, $\sigma$, and the regularization parameter, $\gamma$, were found using a grid search method for problems #1, #3, and #4. For problem #2, the value of $\sigma$ for the LS-SVM and CSVM methods was tuned using fminsearch while the value of $\gamma$ was held fixed, following [12]. This method was used in problem #2 rather than grid search because it did a much better job of choosing tuning parameters that reduced the error of the solution. For all problems, a validation set was used to choose the best values for $\sigma$ and $\gamma$ [12,26]. It should be noted that the tuning parameter choice affects the accuracy of the solution. Thus, it may be possible to achieve more accurate results if a different method is used to find the value of the tuning parameters. For example, an algorithm that is better suited to finding global optima, such as a genetic algorithm, may find better tuning parameter values than the methods used here.

4.1. Problem #1

Problem #1 is the linear ODE,

which has the analytic solution,

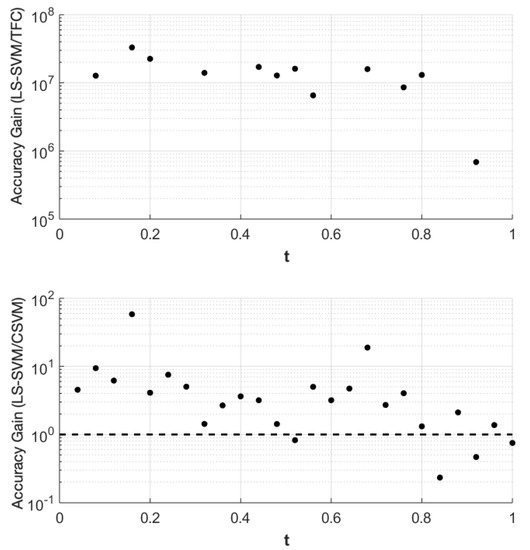

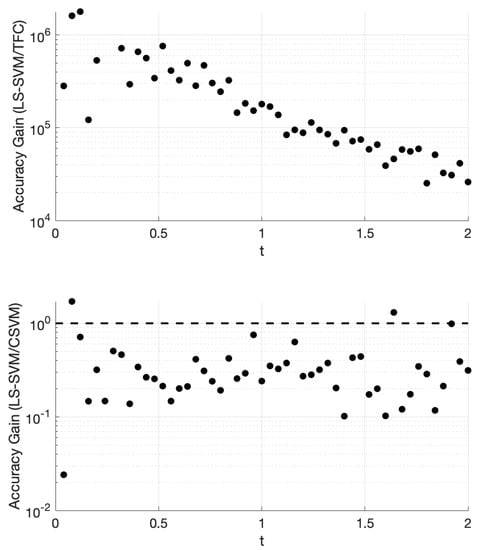

The accuracy gain of TFC and CSVM compared to LS-SVM for problem #1 is shown in Figure 1. The results were obtained using 100 training points. The top plot shows the error of the LS-SVM solution divided by the error in the TFC solution, and the bottom plot shows the error of the LS-SVM solution divided by the error of the CSVM solution. Values greater than one indicate that the compared method is more accurate than the LS-SVM method, and vice-versa for values less than one.

Figure 1.

Accuracy gain for the Theory of Functional Connections (TFC) and constrained support vector machine (CSVM) methods over least-squares support vector machines (LS-SVMs) for problem #1 using 100 training points.

Figure 1 shows that TFC is the most accurate of the three methods followed by CSVM and finally LS-SVM. The error reduction when using CSVM instead of LS-SVM is typically an order of magnitude or less. However, the error reduction when using TFC instead of the other two methods is multiple orders of magnitude. The attentive reader will notice that the TFC plot in Figure 1 has fewer data points than the CSVM plot. This is because, at those points, the computed and true solutions differ by less than machine precision, so their difference evaluates to exactly zero and the accuracy gain is undefined.
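The missing points can be reproduced with a small sketch of the accuracy-gain computation. The helper name `accuracy_gain` and the sample values are hypothetical; the point is only that a difference below double-precision resolution evaluates to zero, which leaves the ratio undefined.

```python
def accuracy_gain(err_reference, err_other):
    """Ratio err_reference / err_other, or None where err_other is exactly zero."""
    return [
        ref / other if other != 0.0 else None
        for ref, other in zip(err_reference, err_other)
    ]

y_true = 1.0
y_accurate = 1.0 + 1e-17  # below double-precision resolution near 1.0
y_coarse = 1.0 + 1e-7     # a hypothetical, less accurate estimate

err_accurate = abs(y_accurate - y_true)
err_coarse = abs(y_coarse - y_true)

gain = accuracy_gain([err_coarse], [err_accurate])
print(err_accurate, gain)
```

Points where the gain is undefined cannot be placed on a logarithmic axis, so they are absent from the plot rather than shown as a very large value.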

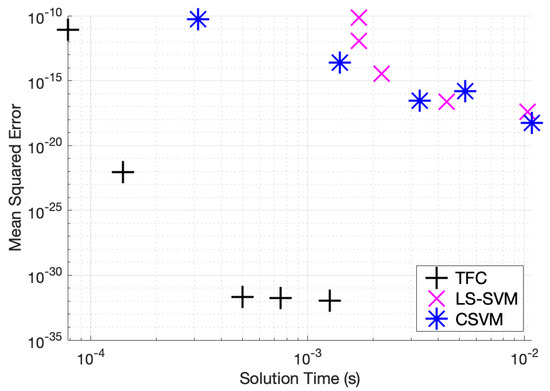

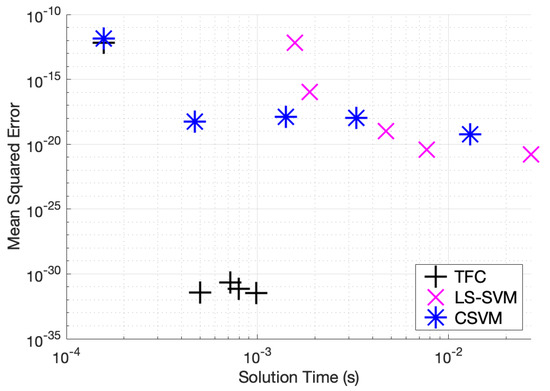

Table A1, Table A2 and Table A3 in the appendix compare the three methods for various numbers of training points when solving problem #1. Additionally, these tables show that TFC provides the shortest training time and the lowest maximum error and mean square error (MSE) on both the training set and test set. The CSVM results are the slowest, but they are more accurate than the LS-SVM results. The accuracy gained when using CSVM compared to LS-SVM is typically less than an order of magnitude. On the other hand, the accuracy gained when using TFC is multiple orders of magnitude. Moreover, the speed gained when using LS-SVM compared to CSVM is typically less than an order of magnitude, whereas the speed gained when using TFC is approximately one order of magnitude. An accuracy versus speed comparison is shown graphically in Figure 2, where the MSE on the test set is plotted against training time for five specific cases: 8, 16, 32, 50, and 100 training points.

Figure 2.

Mean squared error vs. solution time for problem #1.

4.2. Problem #2

Problem #2 is the nonlinear ODE given by,

which has the analytic solution,

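where $\Gamma$ is the gamma function defined as,

$$\Gamma(z) = \int_0^{\infty} x^{z-1} e^{-x} \, dx,$$

and $J$ is the Bessel function of the first kind defined as,

$$J_\nu(z) = \sum_{k=0}^{\infty} \frac{(-1)^k}{k! \, \Gamma(k + \nu + 1)} \left(\frac{z}{2}\right)^{2k + \nu}.$$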

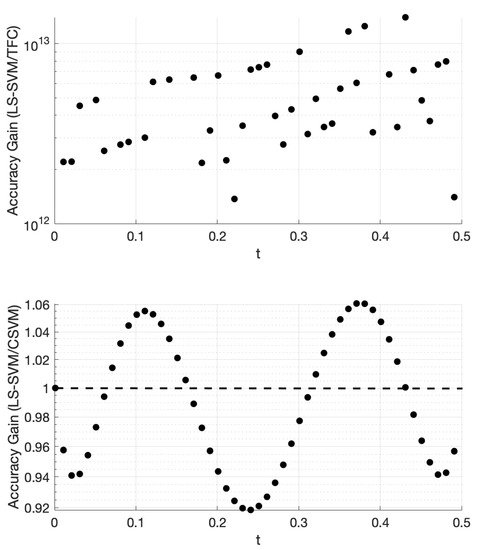

The accuracy gain of TFC and CSVM compared to LS-SVM for problem #2 is shown in Figure 3. This figure was created using 100 training points. The top plot shows the error in the LS-SVM solution divided by the error in the TFC solution. The bottom plot shows the error in the LS-SVM solution divided by the error in the CSVM solution.

Figure 3.

Accuracy gain for TFC and CSVM methods over LS-SVM for problem #2 using 100 training points.

Figure 3 shows that TFC is the most accurate of the three methods, with an error several orders of magnitude lower than that of the LS-SVM method. The difference in accuracy between the CSVM and LS-SVM methods was observed to be negligible. The small variations in accuracy are a function of the specific method. For this problem, the solution accuracy of both methods decreases monotonically as t increases; however, the two decrease at different, non-constant rates, which produces a sine wave-like plot of the accuracy gain.

Table A4, Table A5 and Table A6 in the appendix compare the three methods for various numbers of training points when solving problem #2. These tables show that solving the DE using TFC is faster than using the LS-SVM method for all cases except the second case (using 16 training points). However, the speed gained using TFC is less than one order of magnitude. Furthermore, TFC is more accurate by multiple orders of magnitude as compared to the LS-SVM method over the entire range of test cases. In addition, TFC continues to reduce the MSE and maximum error on the test and training sets as more training points are added, whereas the LS-SVM method error increases slightly between 8 and 16 points and then stays approximately the same. The CSVM method follows the same trend as the LS-SVM method; however, it requires more time to train than the LS-SVM method. This is highlighted in an accuracy versus speed comparison, shown graphically in Figure 4, where the MSE on the test set is plotted against training time for five specific cases: 8, 16, 32, 50, and 100 training points.

Figure 4.

Mean squared error vs. solution time for problem #2.

4.3. Problem #3

Problem #3 is the second order linear ODE given by,

which has the analytic solution,

The accuracy gain of TFC and CSVM compared to LS-SVM for problem #3 is shown in Figure 5. The figure was created using 100 training points. The top plot shows the error in the LS-SVM solution divided by the error in the TFC solution, and the bottom plot shows the error in the LS-SVM solution divided by the error in the CSVM solution.

Figure 5.

Accuracy gain for TFC and CSVM methods over LS-SVMs for problem #3 using 100 training points.

Table A7, Table A8 and Table A9 in the appendix compare the three methods for various numbers of training points when solving problem #3. These tables show that solving the DE using TFC is approximately an order of magnitude faster than using the LS-SVM method for all cases. Furthermore, TFC is more accurate than the LS-SVM method for all of the test cases. One interesting note is that when moving from 16 to 32 training points TFC actually loses a bit of accuracy, whereas the LS-SVM method continues to gain accuracy. Despite this, TFC is still multiple orders of magnitude more accurate than the LS-SVM method. Additionally, these tables show that the CSVM method is faster than the LS-SVM method for all cases. The speed difference varies from approximately twice as fast to an order of magnitude faster. The LS-SVM and CSVM methods have a similar amount of error, and which method is more accurate depends on how many training points are used; LS-SVM is the slightly more accurate of the two in the majority of cases. An accuracy versus speed comparison is shown graphically in Figure 6, where the MSE on the test set is plotted against training time for five specific cases: 8, 16, 32, 50, and 100 training points.

Figure 6.

Mean squared error vs. solution time for problem #3.

Figure 5 shows that TFC is the most accurate of the three methods. The TFC error is 4–6 orders of magnitude lower than the LS-SVM method. The LS-SVM method has error that is lower than the error in the CSVM method by an order of magnitude or less.

4.4. Problem #4

Problem #4 is the second order linear PDE on given by,

which has the analytical solution,

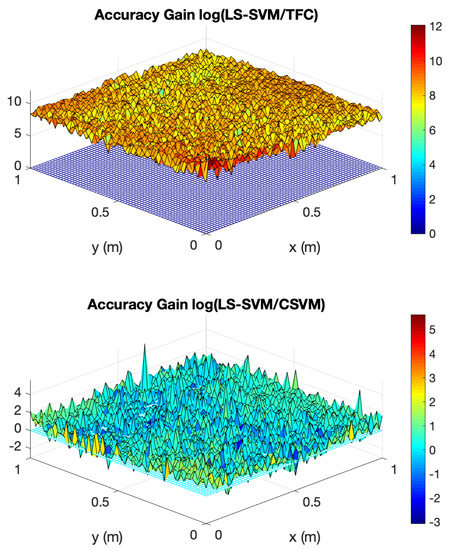

The accuracy gain of TFC and CSVM compared to LS-SVM for problem #4 is shown in Figure 7. The figure was created using 100 training points in the domain, that is, training points that do not lie on one of the four boundaries. The top plot shows the log base 10 of the error in the LS-SVM solution divided by the error in the TFC solution, and the bottom plot shows the log base 10 of the error in the LS-SVM solution divided by the error in the CSVM solution.

Figure 7.

Accuracy gain for TFC and CSVM methods over LS-SVMs for problem #4 using 100 training points in the domain.

Figure 7 shows that TFC is the most accurate of the three methods. The TFC error is orders of magnitude lower than the LS-SVM error. The CSVM error is, on average, approximately one order of magnitude lower than the LS-SVM error, but is still orders of magnitude higher than the TFC error.

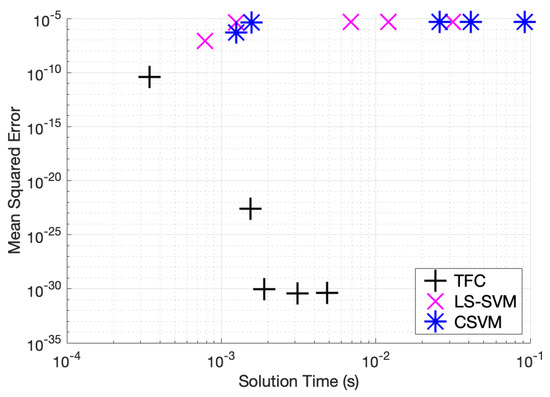

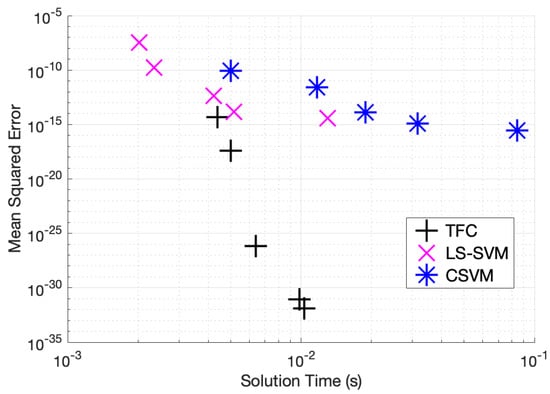

Tables A10 to A12 in the appendix compare the three methods for various numbers of training points in the domain when solving problem #4. These tables show that solving the DE using TFC is slower than LS-SVM by less than an order of magnitude for all test cases. The MSE on the test set for TFC is lower than for LS-SVM in all of the test cases, by between 7 and 18 orders of magnitude. In addition, these tables show that the training time for CSVM is greater than for LS-SVM by approximately an order of magnitude or less. The MSE on the test set for CSVM is lower than for LS-SVM in all the test cases, by between one and three orders of magnitude. An accuracy versus speed comparison is shown graphically in Figure 8, where the MSE on the test set is plotted against training time for five specific cases: 9, 16, 36, 64, and 100 training points in the domain.

Figure 8.

Mean squared error vs. solution time for problem #4.

5. Conclusions

This article presented three methods to solve various types of DEs: TFC, LS-SVM, and CSVM. The CSVM method is a combination of the other two methods; it incorporates LS-SVM into the TFC framework. Four problems were presented: a linear first order ODE, a nonlinear first order ODE, a linear second order ODE, and a linear second order PDE. The results showed that, in general, TFC is faster, by approximately an order of magnitude or less, and more accurate, by multiple orders of magnitude, than the LS-SVM and CSVM methods. The CSVM method has similar performance to the LS-SVM method, but the CSVM method satisfies the boundary constraints exactly whereas the LS-SVM method does not. While the CSVM method underperforms vanilla TFC, it shows the ease with which machine learning algorithms can be incorporated into the TFC framework. This capability is extremely important, as it provides a systematic way to analytically embed many different types of constraints into machine learning algorithms.

This feature will be exploited in future studies, specifically for higher-dimensional PDEs, where the scalability of machine learning algorithms may give a major advantage over the orthogonal basis functions used in TFC. In this article, the authors found that the number of terms when using SVMs may become prohibitive at higher dimensions. Therefore, future work should focus on other machine learning algorithms, such as neural networks, that do not have this issue. Additionally, comparison problems other than initial value problems should be considered. For example, the comparison could use problems that have boundary value constraints, differential constraints, and integral constraints.
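To make the idea of analytically embedding a constraint concrete, the following is a minimal sketch of a TFC-style least-squares solve. It assumes a toy initial value problem, $y' + y = 0$ with $y(0) = 1$ on $[0, 1]$, which is chosen here for illustration and is not claimed to be one of the four test problems. The function name, the number of points, and the basis degree are likewise assumptions. The constrained expression $y(x) = 1 + (h(x) - h(0))^{\mathsf{T}} \xi$ satisfies the initial condition for any coefficient vector $\xi$, so the remaining problem is an unconstrained linear least-squares fit.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def tfc_linear_ivp(n_points=32, degree=12):
    """Sketch: solve y' + y = 0, y(0) = 1 on [0, 1] with a constrained expression."""
    # Chebyshev-Gauss-Lobatto points in z on [-1, 1]; the map is x = (z + 1) / 2.
    z = -np.cos(np.pi * np.arange(n_points) / (n_points - 1))

    def basis(zq):
        # T_1..T_degree. The constant T_0 is dropped because it cancels in
        # h(x) - h(0) and would only add a zero column.
        return cheb.chebvander(zq, degree)[:, 1:]

    # Column j holds the Chebyshev coefficients of dT_j/dz.
    D = cheb.chebder(np.eye(degree + 1), axis=0)

    def dbasis_dx(zq):
        # Chain rule: dz/dx = 2 for the map above.
        return 2.0 * (cheb.chebvander(zq, degree - 1) @ D)[:, 1:]

    h0 = basis(np.array([-1.0]))  # basis evaluated at x = 0

    # Substituting y = 1 + (h - h0) xi into y' + y = 0 gives A xi = b.
    A = dbasis_dx(z) + (basis(z) - h0)
    b = -np.ones(n_points)
    xi, *_ = np.linalg.lstsq(A, b, rcond=None)

    def y(xq):
        zq = 2.0 * np.atleast_1d(np.asarray(xq, dtype=float)) - 1.0
        return 1.0 + (basis(zq) - h0) @ xi

    return y

y = tfc_linear_ivp()
x_test = np.linspace(0.0, 1.0, 101)
max_err = np.max(np.abs(y(x_test) - np.exp(-x_test)))  # exact solution is exp(-x)
```

Because the initial condition is built into the expression itself, it holds regardless of how well the least-squares step converges; only the residual of the differential equation is minimized.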

Furthermore, future work should analyze the effect of the regularization term. One observation from the experiments is that the parameter $\gamma$ is very large, making the contribution from $w^{\mathsf{T}} w$ insignificant. However, $w^{\mathsf{T}} w$ is the term meant to provide the best separating boundary surfaces. Going further in this direction, one could analyze whether applying the kernel trick is beneficial (if the separating margin is not really achieved), or if an expansion similar to Chebyshev polynomials (CP), but using Gaussians, could provide a more accurate solution with a simpler algorithm.
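The role of the regularization weight can be illustrated outside the DE setting. The sketch below assumes the standard LS-SVM function-estimation dual system, in which a weight called `gamma` here multiplies the error term and appears as `I / gamma` on the kernel block, together with an RBF kernel of the form $\exp(-(x - x')^2 / \sigma^2)$. It fits plain data rather than a differential equation, and all names and values are illustrative rather than the paper's.

```python
import numpy as np

def rbf_kernel(xa, xb, sigma):
    """Assumed RBF form: exp(-(x - x')^2 / sigma^2) for 1-D inputs."""
    d = xa[:, None] - xb[None, :]
    return np.exp(-(d ** 2) / sigma ** 2)

def lssvm_fit(x, y, gamma, sigma):
    """Solve the LS-SVM regression dual system for the bias b and multipliers alpha."""
    n = x.size
    M = np.zeros((n + 1, n + 1))
    M[0, 1:] = 1.0
    M[1:, 0] = 1.0
    # The regularization enters only through the I / gamma term.
    M[1:, 1:] = rbf_kernel(x, x, sigma) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(M, rhs)
    return sol[0], sol[1:]

def lssvm_predict(xq, x, b, alpha, sigma):
    """Evaluate the dual-form model at query points xq."""
    return rbf_kernel(xq, x, sigma) @ alpha + b

# Compare a small and a large regularization weight on made-up data.
x = np.linspace(0.0, 1.0, 15)
y = np.sin(2.0 * np.pi * x)
train_mse = {}
for gamma in (1.0, 1e8):
    b, alpha = lssvm_fit(x, y, gamma, sigma=0.3)
    residual = lssvm_predict(x, x, b, alpha, sigma=0.3) - y
    train_mse[gamma] = float(np.mean(residual ** 2))
```

With a very large `gamma`, the `I / gamma` term is tiny next to the kernel entries and the model nearly interpolates the data, which is one way to see why the regularization contribution becomes insignificant in that regime.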

Author Contributions

Conceptualization, C.L., H.J. and L.S.; Software, C.L. and H.J.; Supervision, L.S. and D.M.; Writing original draft, C.L., H.J. and L.S.; Writing review and editing, C.L., H.J. and L.S.

Funding

This work was supported by a NASA Space Technology Research Fellowship, Leake [NSTRF 2019] Grant #: 80NSSC19K1152 and Johnston [NSTRF 2019] Grant #: 80NSSC19K1149.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| BVP | boundary-value problem |
| CP | Chebyshev polynomial |
| CSVM | constrained support vector machines |
| DE | differential equation |
| IVP | initial-value problem |
| LS | least-squares |
| LS-SVM | least-squares support vector machines |
| MSE | mean square error |
| MVP | multi-value problem |
| ODE | ordinary differential equation |
| PDE | partial differential equation |
| RBF | radial basis function |
| SVM | support vector machines |
| TFC | Theory of Functional Connections |

Appendix A Numerical Data

Table A1.

TFC results for problem #1.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | m |
|---|---|---|---|---|---|---|
| 8 | 7.813 × | 6.035 × | 1.057 × | 6.187 × | 8.651 × | 7 |
| 16 | 1.406 × | 2.012 × | 1.257 × | 1.814 × | 8.964 × | 17 |
| 32 | 5.000 × | 2.220 × | 1.887 × | 3.331 × | 2.086 × | 25 |
| 50 | 7.500 × | 2.220 × | 9.368 × | 2.220 × | 1.801 × | 25 |
| 100 | 1.266 × | 4.441 × | 1.750 × | 2.220 × | 1.138 × | 26 |

Table A2.

LS-SVM results for problem #1.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 1.719 × | 1.179 × | 5.638 × | 1.439 × | 7.251 × | 5.995 × | 3.162 × |
| 16 | 1.719 × | 1.710 × | 1.107 × | 1.849 × | 1.161 × | 3.594 × | 6.813 × |
| 32 | 2.188 × | 9.792 × | 3.439 × | 9.525 × | 3.359 × | 3.594 × | 3.162 × |
| 50 | 4.375 × | 1.440 × | 2.983 × | 8.586 × | 2.356 × | 3.594 × | 3.162 × |
| 100 | 1.031 × | 3.671 × | 3.781 × | 3.673 × | 3.947 × | 2.154 × | 3.162 × |

Table A3.

CSVM results for problem #1.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 3.125 × | 1.018 × | 4.131 × | 1.357 × | 5.547 × | 2.154 × | 3.162 × |
| 16 | 1.406 × | 2.894 × | 2.588 × | 2.818 × | 2.468 × | 5.995 × | 6.813 × |
| 32 | 5.313 × | 2.283 × | 1.355 × | 2.576 × | 1.494 × | 3.594 × | 3.162 × |
| 50 | 3.281 × | 8.887 × | 2.055 × | 1.072 × | 2.783 × | 7.743 × | 3.162 × |
| 100 | 1.078 × | 2.230 × | 5.571 × | 2.163 × | 5.337 × | 3.594 × | 1.468 × |

Table A4.

TFC results for problem #2.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | m |
|---|---|---|---|---|---|---|
| 8 | 3.437 × | 8.994 × | 2.242 × | 1.192 × | 4.132 × | 8 |
| 16 | 1.547 × | 4.586 × | 6.514 × | 9.183 × | 2.431 × | 16 |
| 32 | 1.891 × | 3.109 × | 9.291 × | 4.885 × | 9.590 × | 32 |
| 50 | 3.125 × | 1.110 × | 2.100 × | 2.665 × | 3.954 × | 32 |
| 100 | 4.828 × | 1.776 × | 3.722 × | 2.665 × | 4.321 × | 32 |

Table A5.

LS-SVM results for problem #2.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 7.813 × | 1.001 × | 1.965 × | 1.001 × | 7.904 × | 1.000 × | 3.704 × |
| 16 | 1.250 × | 4.017 × | 4.909 × | 3.872 × | 4.514 × | 1.000 × | 4.198 × |
| 32 | 6.875 × | 4.046 × | 4.834 × | 3.900 × | 4.575 × | 1.000 × | 4.536 × |
| 50 | 1.203 × | 4.048 × | 4.792 × | 3.902 × | 4.580 × | 1.000 × | 4.666 × |
| 100 | 3.156 × | 4.050 × | 4.752 × | 3.903 × | 4.582 × | 1.000 × | 4.853 × |

Table A6.

CSVM results for problem #2.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 1.250 × | 1.556 × | 7.644 × | 1.480 × | 5.325 × | 1.000 × | 3.452 × |
| 16 | 1.563 × | 4.021 × | 4.914 × | 3.876 × | 4.517 × | 1.000 × | 4.719 × |
| 32 | 2.594 × | 4.047 × | 4.834 × | 3.901 × | 4.575 × | 1.000 × | 5.109 × |
| 50 | 4.109 × | 4.050 × | 4.792 × | 3.903 × | 4.580 × | 1.000 × | 5.252 × |
| 100 | 9.219 × | 4.051 × | 4.753 × | 3.904 × | 4.583 × | 1.000 × | 5.469 × |

Table A7.

TFC results for problem #3.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | m |
|---|---|---|---|---|---|---|
| 8 | 1.563 × | 1.313 × | 5.184 × | 1.456 × | 6.818 × | 8 |
| 16 | 7.969 × | 5.551 × | 6.123 × | 8.882 × | 7.229 × | 15 |
| 32 | 7.187 × | 1.221 × | 2.377 × | 9.992 × | 2.229 × | 15 |
| 50 | 5.000 × | 7.772 × | 3.991 × | 5.551 × | 3.672 × | 15 |
| 100 | 9.844 × | 7.772 × | 5.525 × | 6.661 × | 3.518 × | 15 |

Table A8.

LS-SVM results for problem #3.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 1.563 × | 1.420 × | 8.300 × | 1.638 × | 6.522 × | 5.995 × | 6.813 × |
| 16 | 1.875 × | 1.811 × | 1.015 × | 1.871 × | 1.014 × | 3.594 × | 3.162 × |
| 32 | 4.687 × | 5.455 × | 1.025 × | 9.005 × | 1.015 × | 5.995 × | 1.468 × |
| 50 | 7.656 × | 8.563 × | 3.771 × | 8.391 × | 3.646 × | 2.154 × | 1.468 × |
| 100 | 2.688 × | 6.441 × | 1.500 × | 6.128 × | 1.640 × | 2.154 × | 1.468 × |

Table A9.

CSVM results for problem #3.

| Number of Training Points | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 8 | 1.563 × | 1.263 × | 7.737 × | 2.017 × | 1.339 × | 1.000 × | 6.813 × |
| 16 | 4.687 × | 1.269 × | 4.961 × | 1.631 × | 5.342 × | 3.594 × | 3.162 × |
| 32 | 1.406 × | 1.763 × | 8.308 × | 2.230 × | 1.248 × | 3.594 × | 3.162 × |
| 50 | 3.281 × | 1.429 × | 1.045 × | 1.569 × | 1.017 × | 2.154 × | 1.468 × |
| 100 | 1.297 × | 8.261 × | 8.832 × | 7.209 × | 5.589 × | 2.154 × | 1.468 × |

Table A10.

TFC results for problem #4.

| Number of Training Points in Domain | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | m |
|---|---|---|---|---|---|---|
| 9 | 4.375 × | 1.107 × | 1.904 × | 1.543 × | 4.633 × | 8 |
| 16 | 5.000 × | 3.336 × | 2.131 × | 4.938 × | 3.964 × | 9 |
| 36 | 6.406 × | 6.628 × | 5.165 × | 2.333 × | 6.961 × | 12 |
| 64 | 9.844 × | 4.441 × | 2.091 × | 8.882 × | 8.320 × | 15 |
| 100 | 1.031 × | 3.331 × | 1.229 × | 6.661 × | 1.246 × | 15 |

Table A11.

LS-SVM results for problem #4.

| Number of Training Points in Domain | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 9 | 2.031 × | 2.578 × | 9.984 × | 3.941 × | 3.533 × | 1.000 × | 6.635 × |
| 16 | 2.344 × | 2.229 × | 6.277 × | 3.794 × | 1.731 × | 1.000 × | 3.577 × |
| 36 | 4.219 × | 1.254 × | 2.542 × | 2.435 × | 4.517 × | 1.000 × | 1.894 × |
| 64 | 5.156 × | 2.916 × | 1.193 × | 4.962 × | 1.390 × | 1.000 × | 1.589 × |
| 100 | 1.297 × | 1.730 × | 3.028 × | 2.673 × | 3.668 × | 1.000 × | 9.484 × |

Table A12.

CSVM results for problem #4.

| Number of Training Points in Domain | Training Time (s) | Maximum Error on Training Set | MSE on Training Set | Maximum Error on Test Set | MSE on Test Set | $\gamma$ | $\sigma$ |
|---|---|---|---|---|---|---|---|
| 9 | 5.000 × | 1.305 × | 1.936 × | 3.325 × | 8.262 × | 1.000 × | 6.948 × |
| 16 | 1.172 × | 2.121 × | 7.965 × | 5.507 × | 2.530 × | 1.000 × | 4.894 × |
| 36 | 1.891 × | 2.393 × | 6.242 × | 3.738 × | 1.341 × | 1.000 × | 2.154 × |
| 64 | 3.156 × | 9.501 × | 1.021 × | 1.251 × | 1.165 × | 1.000 × | 1.371 × |
| 100 | 8.453 × | 4.362 × | 2.687 × | 5.561 × | 2.951 × | 1.000 × | 8.891 × |

Appendix B Nonlinear ODE LS-SVM and CSVM Derivation

This appendix shows how the method of Lagrange multipliers is used to solve nonlinear ODEs using the LS-SVM and CSVM methods. Equation (A1) shows the Lagrangian for the LS-SVM method. The points where the gradients of the Lagrangian are zero give candidates for the minimum.

A system of equations can be set up by substituting the results found by differentiating with respect to and into the remaining five equations found by taking the gradients of . This will lead to a set of equations and unknowns, which are , , , , and b. This system of equations is given in Equation (A2),

where . The system of equations given in Equation (A2) is the same as the system of equations in Equation (20) of reference [12] with one exception: the regularization term, , in the second row of the second column entry is missing from this set of equations. The reason is that, in the experiments presented in this paper, that regularization term had an insignificant effect on the overall accuracy of the method. Moreover, as has been demonstrated here, it is not necessary in the setup of the problem. Once the set of equations has been solved, the model solution is given in the dual form by,

As with the linear ODE case, the set of equations that need to be solved and dual form of the model solution can be written in terms of the kernel matrix and its derivatives. The method for solving the nonlinear ODEs with CSVM is the same, except the Lagrangian function is,

where the initial value constraint has been embedded via TFC by taking the primal form of the solution to be,

Similar to the SVM derivation, taking the gradients of the Lagrangian and setting them equal to zero leads to a system of equations,

where . This can be solved using Newton’s method for the unknowns , , and . In addition, the gradients can be used to re-write the estimated solution, , in the dual form,

As with the SVM derivation, the system of equations that must be solved and the dual form of the estimated solution can each be written in terms of the kernel matrix and its derivatives.
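The Newton iteration mentioned in this appendix can be sketched generically. The example below is not the CSVM system itself: the function names and the toy two-equation system are hypothetical stand-ins, chosen only to show the update $u \leftarrow u - J(u)^{-1} F(u)$ for a square nonlinear system.

```python
import numpy as np

def newton_solve(residual, jacobian, u0, tol=1e-12, max_iter=50):
    """Newton's method for F(u) = 0 with a user-supplied Jacobian."""
    u = np.asarray(u0, dtype=float).copy()
    for _ in range(max_iter):
        r = residual(u)
        if np.linalg.norm(r) < tol:
            break
        # Solve J(u) du = F(u) and take the full Newton step.
        u = u - np.linalg.solve(jacobian(u), r)
    return u

# Toy system (illustrative only): u0^2 + u1 = 3 and u0 + u1^2 = 5.
def toy_residual(u):
    return np.array([u[0] ** 2 + u[1] - 3.0, u[0] + u[1] ** 2 - 5.0])

def toy_jacobian(u):
    return np.array([[2.0 * u[0], 1.0], [1.0, 2.0 * u[1]]])

root = newton_solve(toy_residual, toy_jacobian, u0=[1.5, 1.5])
```

In the setting of this appendix, the residual would be the stacked system of gradient equations and the Jacobian would be assembled from the kernel matrix and its derivatives; convergence depends on the initial guess, as with any Newton scheme.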

Appendix C Linear PDE CSVM Derivation

This appendix shows how to solve the PDE given by,

using the CSVM method. Note that this is the same PDE as shown in problem number four of the numerical results section, where the right-hand side of the PDE has been replaced by a more general function and the boundary-value type constraints have been replaced by more general functions where . Note that throughout this section all matrices will be written using tensor notation rather than vector-matrix form for compactness. In this article, superscripts denote a derivative with respect to the superscript variable, and subscripts are normal tensor indices. For example, the symbol would denote a second-order derivative of the second-order tensor with respect to the variable x (i.e., ).

Using the Multivariate TFC [23], the constrained expression for this problem can be written as,

where will satisfy the boundary constraints regardless of the choice of w and . Now, the Lagrange multipliers are added in to form the Lagrangian,

where is the vector composed of the elements where and there are training points. The gradients of give candidates for the minimum,

where is the second order tensor composed of the vectors , is the fourth order tensor composed of the third order tensors , and . The gradients of can be used to form a system of simultaneous linear equations to solve for the unknowns and write in the dual form. The system of simultaneous linear equations is,

where , , and is the fourth order tensor composed of the third order tensors where . The dual-form of the solution is,

The system of simultaneous linear equations and the dual form of the solution can both be written, and were solved, using the kernel matrix and its partial derivatives.
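As a small illustration of what "the kernel matrix and its partial derivatives" involves, the sketch below assumes an RBF kernel of the form $K(p, q) = \exp(-\lVert p - q \rVert^2 / \sigma^2)$ on two-dimensional points. The exact kernel scaling is not restated in this section, so this form, the function names, and the sample points are assumptions made for illustration.

```python
import numpy as np

def rbf(p, q, sigma):
    """Kernel matrix K[i, j] = exp(-||p_i - q_j||^2 / sigma^2) for rows of p and q."""
    diff = p[:, None, :] - q[None, :, :]
    return np.exp(-np.sum(diff ** 2, axis=-1) / sigma ** 2)

def rbf_d1(p, q, sigma, axis=0):
    """First partial derivative of K with respect to coordinate `axis` of p."""
    d = p[:, None, axis] - q[None, :, axis]
    return -2.0 * d / sigma ** 2 * rbf(p, q, sigma)

def rbf_d2(p, q, sigma, axis=0):
    """Second partial derivative of K with respect to coordinate `axis` of p."""
    d = p[:, None, axis] - q[None, :, axis]
    return (4.0 * d ** 2 / sigma ** 4 - 2.0 / sigma ** 2) * rbf(p, q, sigma)

# Made-up points in the unit square.
rng = np.random.default_rng(0)
pts_a = rng.random((4, 2))
pts_b = rng.random((3, 2))
K = rbf(pts_a, pts_b, sigma=0.7)
K_xx = rbf_d2(pts_a, pts_b, sigma=0.7, axis=0)
K_yy = rbf_d2(pts_a, pts_b, sigma=0.7, axis=1)
```

Closed-form derivatives like these are what allow the system to be assembled without ever forming the feature map explicitly; derivatives with respect to the second argument follow from the symmetry of the kernel.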

References

1. Dormand, J.; Prince, P. A Family of Embedded Runge-Kutta Formulae. J. Comp. Appl. Math. 1980, 6, 19–26.

2. Berry, M.M.; Healy, L.M. Implementation of Gauss-Jackson integration for orbit propagation. J. Astronaut. Sci. 2004, 52, 351–357.

3. Bai, X.; Junkins, J.L. Modified Chebyshev-Picard Iteration Methods for Orbit Propagation. J. Astronaut. Sci. 2011, 58, 583–613.

4. Junkins, J.L.; Younes, A.B.; Woollands, R.; Bai, X. Picard Iteration, Chebyshev Polynomials, and Chebyshev Picard Methods: Application in Astrodynamics. J. Astronaut. Sci. 2015, 60, 623–653.

5. Reed, J.; Younes, A.B.; Macomber, B.; Junkins, J.L.; Turner, D.J. State Transition Matrix for Perturbed Orbital Motion using Modified Chebyshev Picard Iteration. J. Astronaut. Sci. 2015, 6, 148–167.

6. Driscoll, T.A.; Hale, N. Rectangular spectral collocation. IMA J. Numer. Anal. 2016, 36, 108–132.

7. Mortari, D. The Theory of Connections: Connecting Points. Mathematics 2017, 5, 57.

8. Mortari, D. Least-squares Solutions of Linear Differential Equations. Mathematics 2017, 5, 48.

9. Mortari, D.; Johnston, H.; Smith, L. High accuracy least-squares solutions of nonlinear differential equations. J. Comput. Appl. Math. 2019, 352, 293–307.

10. Johnston, H.; Mortari, D. Linear Differential Equations Subject to Relative, Integral, and Infinite Constraints. In Proceedings of the 2018 AAS/AIAA Astrodynamics Specialist Conference, Snowbird, UT, USA, 19–23 August 2018.

11. Johnston, H.; Leake, C.; Efendiev, Y.; Mortari, D. Selected Applications of the Theory of Connections: A Technique for Analytical Constraint Embedding. Mathematics 2019, 7, 537.

12. Mehrkanoon, S.; Falck, T.; Suykens, J.A.K. Approximate Solutions to Ordinary Differential Equations using Least-squares Support Vector Machines. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1356–1367.

13. Freire, R.Z.; Santos, G.H.d.; Coelho, L.d.S. Hygrothermal Dynamic and Mould Growth Risk Predictions for Concrete Tiles by Using Least Squares Support Vector Machines. Energies 2017, 10, 1093.

14. Zhao, X.; Chen, X.; Xu, Y.; Xi, D.; Zhang, Y.; Zheng, X. An EMD-Based Chaotic Least Squares Support Vector Machine Hybrid Model for Annual Runoff Forecasting. Water 2017, 9, 153.

15. Gedik, N. Least Squares Support Vector Mechanics to Predict the Stability Number of Rubble-Mound Breakwaters. Water 2018, 10, 1452.

16. Gao, C.; Xue, W.; Ren, Y.; Zhou, Y. Numerical Control Machine Tool Fault Diagnosis Using Hybrid Stationary Subspace Analysis and Least Squares Support Vector Machine with a Single Sensor. Appl. Sci. 2017, 7.

17. Vapnik, V.N. Statistical Learning Theory; Wiley: Hoboken, NJ, USA, 1998.

18. Kramer, M.A.; Thompson, M.L.; Bhagat, P.M. Embedding Theoretical Models in Neural Networks. In Proceedings of the 1992 American Control Conference, Chicago, IL, USA, 24–26 June 1992; pp. 475–479.

19. Pathak, D.; Krähenbühl, P.; Darrell, T. Constrained Convolutional Neural Networks for Weakly Supervised Segmentation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015; pp. 1796–1804.

20. Márquez-Neila, P.; Salzmann, M.; Fua, P. Imposing Hard Constraints on Deep Networks: Promises and Limitations. arXiv 2017, arXiv:1706.02025.

21. Lanczos, C. Applied Analysis. In Progress in Industrial Mathematics at ECMI 2008; Dover Publications, Inc.: New York, NY, USA, 1957; Chapter 7; p. 504.

22. Wright, K. Chebyshev Collocation Methods for Ordinary Differential Equations. Comput. J. 1964, 6, 358–365.

23. Mortari, D.; Leake, C. The Multivariate Theory of Connections. Mathematics 2019, 7, 296.

24. Leake, C.; Mortari, D. An Explanation and Implementation of Multivariate Theory of Functional Connections via Examples. In Proceedings of the 2019 AAS/AIAA Astrodynamics Specialist Conference, Portland, ME, USA, 11–15 August 2019.

25. Theodoridis, S.; Koutroumbas, K. Pattern Recognition; Academic Press: Cambridge, MA, USA, 2008.

26. Mehrkanoon, S.; Suykens, J.A. LS-SVM Approximate Solution to Linear Time Varying Descriptor Systems. Automatica 2012, 48, 2502–2511.

27. Mehrkanoon, S.; Suykens, J. Learning Solutions to Partial Differential Equations using LS-SVM. Neurocomputing 2015, 159, 105–116.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).