Abstract

The present study introduces an artificial intelligence (AI) framework for surface roughness assessment in milling operations through sound signal processing. As industrial demand for in-process quality control grows, the proposed system leverages audio data to estimate surface finish states without interrupting production. To this end, a novel classification approach was developed that maps audio waveform data to predictive indicators of surface quality. In particular, an experimental dataset was employed consisting of sound signals captured during milling under various machining conditions, where each signal was labeled with a corresponding roughness quality obtained via offline metrology. The classification pipeline commences with audio acquisition, resampling, and normalization to ensure consistency across the dataset. These signals are then transformed into Mel-Frequency Cepstral Coefficients (MFCCs), which yield a compact time–frequency representation modeled on human auditory perception. Next, several AI algorithms were trained to classify these MFCCs into predefined surface roughness categories. Finally, the results demonstrate that sound signals can contain sufficient discriminatory information to enable reliable classification of surface finish quality. This approach not only facilitates in-process monitoring but also provides a foundation for intelligent manufacturing systems capable of real-time quality assurance.

1. Introduction

Machining procedures hold significant value in contemporary manufacturing due to their versatility, precision, and ability to produce complex geometries with high dimensional accuracy [1]. As a core subtractive process, milling is widely utilized across industries ranging from aerospace and automotive to biomedical and electronics, where the demand for high-quality components continues to rise. In the context of modern manufacturing applications, the efficiency of milling operations must be evaluated not only in terms of productivity and material removal rates but also through critical performance indicators such as the surface finish quality of the fabricated products [2]. Surface roughness constitutes a critical factor in evaluating process effectiveness, as it is inherently connected to the functional performance, durability, and assembly compatibility of machined components [3]. Therefore, monitoring and optimizing the machining process in order to achieve superior surface finish is essential for ensuring both the technical reliability and economic competitiveness of leading manufacturing companies [4]. This necessitates a systematic approach to process evaluation and control, especially as industries increasingly adopt intelligent manufacturing systems and data-driven optimization strategies.

In general, surface finish quality is a fundamental determinant of both the functional and esthetic performance of a product, particularly within the domains of precision engineering and advanced manufacturing. Notably, a high-quality surface finish contributes significantly to the enhancement of mechanical properties such as fatigue strength, wear resistance, and corrosion resistance, primarily by reducing surface irregularities that often serve as initiation sites for mechanical failure [5]. Furthermore, the surface condition critically affects the fit and tribological behavior of interfacing components, thereby supporting accurate assembly, dimensional stability, and consistent operational performance [6]. In applications where visual and tactile attributes are pivotal, such as consumer electronics or biomedical devices, the surface finish also plays a key role in shaping user perception and product acceptance. Surface finish quality should therefore not be regarded as a superficial attribute but rather as an intrinsic factor that governs the functional integrity and the overall lifecycle performance of machined components. Specifically, it represents a crucial interface between material characteristics and system-level outcomes, impacting interactions at both micro and macro scales. As such, the ability to monitor and control surface finish in real time constitutes a vital capability in the context of smart manufacturing systems, where precision, adaptability, and predictive quality assurance are paramount [7].

Consequently, real-time evaluation of surface finish quality has become critically important in the modern manufacturing industry, particularly within the context of smart manufacturing and Industry 4.0. This importance stems from the increasing demand for higher productivity, tighter tolerances, zero-defect manufacturing, and adaptive process control [8]. In traditional manufacturing environments, surface quality control is predominantly performed offline using tactile or optical profilometers. While these post-process techniques provide high accuracy, they inherently delay the detection of surface anomalies until after the component has been fully machined. Such delays not only result in material waste but also increase the likelihood of propagating defects through downstream operations [9]. Real-time surface finish evaluation circumvents these limitations by providing immediate feedback on surface quality during the machining process itself. This approach could enable timely corrective actions such as tool path modification, feed rate adjustments, or spindle speed regulation.

In addition, the real-time evaluation of surface quality is crucial for advancing modern manufacturing systems toward greater efficiency, consistency, and autonomy, enabling a proactive conceptual framework for quality control [10]. By monitoring signals from several sensors during machining and predicting the surface outcome on the fly, manufacturers can immediately detect deviations from optimal cutting conditions. This capability supports early intervention to adjust process parameters before defects occur [11]. Moreover, it allows for dynamic process optimization, where the system continuously adapts in response to material variability, tool degradation, or thermal effects. From a broader perspective, in situ surface finish prediction aligns with the principles of intelligent manufacturing, where digital integration, data-driven decision-making, and cyber–physical systems play central roles. Through the integration of real-time analytics into the milling workflow, manufacturers could gain enhanced process visibility, reduced inspection time, and the ability to implement predictive maintenance strategies. This not only improves product quality and consistency but also reduces costs associated with rework, scrap, and machine downtime. Hence, ensuring optimal surface finish is essential for both product performance and compliance with applicable industry standards.

The prediction of surface finish in machining operations has garnered significant attention in precision manufacturing, driven by demands for zero-defect production and Industry 4.0 adoption. Traditional methods relying on post-process metrology are limited by their offline nature, prompting research into in-process monitoring techniques and quality prediction based on certain manufacturing parameters. Among these, AI techniques, such as machine learning (ML) and deep learning (DL), are employed alongside mathematical modeling and statistical analysis, including regression methods and analysis of variance (ANOVA), in order to accurately capture the complex, nonlinear relationships between cutting parameters and surface roughness [12]. These approaches are supported by systematically designed experiments that generate high-quality data for model training and optimization.

In particular, the study in [13] aimed to develop a mathematical model for predicting surface roughness in face milling operations, based on the geometric characteristics of the cutting tool’s surface profile. Unlike previous approaches, which often relied on the simplifying assumption of a perfectly circular tool nose or employed purely statistical analyses derived from extensive experimental datasets, this research introduced a geometry-based modeling framework. Additionally, the research presented in [14] employed both Artificial Neural Networks (ANN) and Response Surface Methodology (RSM) to develop accurate surface roughness prediction models. Network construction involved evaluating five learning algorithms: conjugate gradient backpropagation, Levenberg–Marquardt, scaled conjugate gradient, quasi-Newton backpropagation, and resilient backpropagation. In parallel, a second-order polynomial model was developed using RSM, and statistical analysis through ANOVA was conducted to evaluate the influence of machining parameters. The predictions from both models closely matched the experimental results; however, the RSM model exhibited a higher R² value, indicating superior stability and robustness compared to the ANN approach. An interesting approach was suggested in [15], where random forest (RF), decision tree, and support vector machine (SVM) models were trained using a central composite design framework to incorporate both controllable process parameters and noise variables. The models’ performance in predicting surface roughness was assessed through validation experiments taking into account Root Mean Square Error (RMSE), with the RF demonstrating the highest prediction accuracy.

The authors in [16] explored the application of ML techniques for predicting surface roughness in milling operations, utilizing sensor-acquired data as the principal input source. The proposed sensor suite, comprising current transformers, a microphone, and displacement sensors, highlighted the effectiveness of integrating data-driven methodologies with advanced predictive modeling to improve both the quality and precision of machined surfaces. It must be noted that conventional ML-based approaches could face limitations related to convergence to local minima, potentially resulting in suboptimal generalization and predictions that may conflict with established physical principles. To address these issues, a study [17] introduced a physics-informed DL framework for surface roughness prediction in milling processes, integrating domain-specific physical knowledge into both the input and training phases of the learning model. Another work that utilized a learning-based optimization approach was suggested in [18] to predict the surface finish quality based on cutting parameters, vibrations, and sound characteristics in face milling. The developed system obviates the necessity for manual, physical measurement of surface roughness by enabling automated real-time data acquisition.

Insights into optimizing process parameters in turning in order to achieve superior surface integrity were reported in [19,20]. Specifically, ML models including SVM, Gaussian process regression (GPR), adaptive neuro-fuzzy inference systems (ANFIS), and ANN were utilized to predict surface roughness with high accuracy. The RSM analysis revealed, among other findings, that the feed rate was the most influential parameter affecting surface roughness. Another ML approach was introduced in [21], where the AI models leveraged sensor data on cutting forces, temperatures, and vibrations to predict the workpiece surface roughness in cylindrical turning operations. The work presented in [22] formulated a dual-method approach to surface roughness prediction in CNC milling operations. Initially, a Design of Experiments (DOE) methodology was employed to identify the most influential process parameters. Subsequently, a fuzzy logic-based predictive model was developed using experimental data obtained from CNC milling tests. The significant parameters identified through DOE were then used as inputs to the fuzzy logic system, which estimated the surface roughness via an empirically derived model.

In order to improve data utilization while minimizing computational overhead, a novel dual-task monitoring approach for simultaneous prediction of surface roughness and tool wear was proposed in [23]. This method modifies the enhancement layer of each sub-task in the traditional broad learning system by incorporating a reservoir with echo state network characteristics. By enabling information sharing and capturing dynamic features unique to each task, a broad echo state dual-task learning system with incremental learning capabilities was established, outperforming existing dual-task learning approaches in terms of overall monitoring accuracy and computational efficiency. Furthermore, an interesting methodology [24] employing long short-term memory (LSTM) networks demonstrated its effectiveness in handling time-series data of varying lengths and capturing long-range dependencies. In this context, the LSTM approach was applied to predict surface roughness during milling, where the results highlighted the potential of LSTM models in data-driven smart manufacturing environments, particularly for surface roughness prediction and real-time decision-making in machining processes.

This study presents a comprehensive methodology for predicting surface roughness in milling operations by classifying audio signals using multiple AI-based algorithms. Each audio signal is associated with a corresponding surface roughness value, which enables supervised learning. The proposed pipeline includes audio preprocessing, feature extraction via Mel-Frequency Cepstral Coefficients (MFCCs), and classification using ML-based models tailored for time–frequency representations. Essential preprocessing steps, including channel normalization, resampling, and image-like representation methods, were employed to ensure data consistency and enhance model efficiency. Finally, the experimental results demonstrated robust classification accuracy and strong resilience to noise, establishing the proposed framework as an effective, lightweight solution for real-time and non-contact surface roughness estimation in intelligent manufacturing systems.

2. Materials and Methods

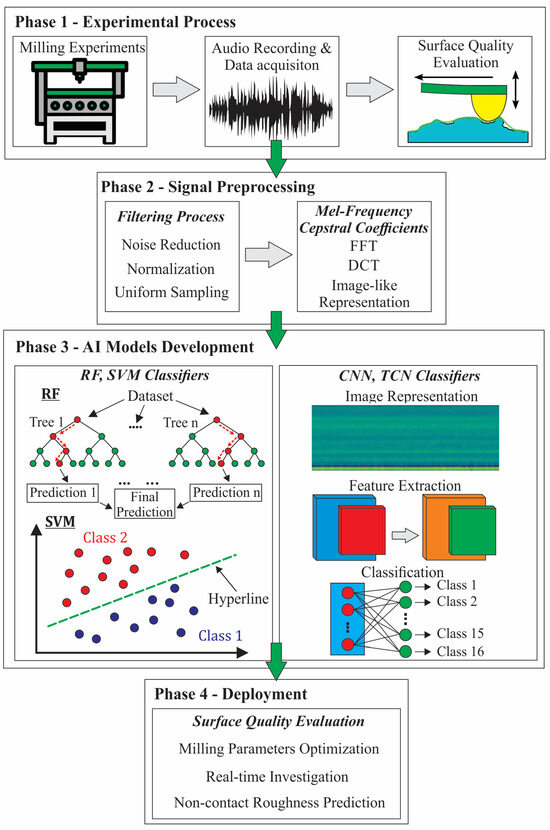

The introduced methodology follows a structured pipeline designed to evaluate and classify surface roughness based on sound data captured during milling operations, as exhibited in Figure 1. An open experimental dataset was considered, containing a series of controlled milling experiments that were conducted under predefined machining conditions, as described in detail in [25,26]. Specifically, a BFW YF1 vertical milling machine was employed using tungsten carbide tools, where the workpiece material consisted of mild steel with dimensions of 8 × 20 × 8 [mm]. During these experiments, sound signals were collected using appropriate sensors, and the surface roughness of the machined components was measured post-process using standardized evaluation techniques to establish a corresponding label for each recorded signal, thereby constructing a labeled dataset. In the proposed workflow, each audio signal undergoes a preprocessing phase aimed at standardizing and enhancing the quality of the input. Accordingly, the following operations were applied: noise reduction to remove ambient or irrelevant signal components, channel normalization to convert stereo recordings into mono by averaging, and finally resampling of all audio waveforms to a uniform sampling rate. These preprocessing steps ensure consistency across the dataset and eliminate potential variability introduced by differences in recording conditions.
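The channel normalization and resampling steps described above can be illustrated with a minimal standard-library sketch. It is not the authors' implementation: the function names are hypothetical, linear interpolation stands in for whatever resampling filter was actually used, and noise reduction is omitted because the text does not specify the method. The zero-mean, unit-variance standardization is included because it is applied to the signals before classification.

```python
import math


def to_mono(channels):
    """Average the channels sample by sample (stereo -> mono)."""
    return [sum(samples) / len(samples) for samples in zip(*channels)]


def resample_linear(signal, sr_in, sr_out):
    """Resample to sr_out by linear interpolation.

    Simplification: a production pipeline would low-pass filter
    before downsampling to avoid aliasing.
    """
    if sr_in == sr_out or len(signal) < 2:
        return list(signal)
    n_out = int(round(len(signal) * sr_out / sr_in))
    out = []
    for i in range(n_out):
        pos = i * sr_in / sr_out               # position in input samples
        lo = min(int(pos), len(signal) - 2)    # left neighbour index
        frac = min(pos - lo, 1.0)              # clamp at the last sample
        out.append(signal[lo] * (1.0 - frac) + signal[lo + 1] * frac)
    return out


def standardize(signal):
    """Scale a waveform to zero mean and unit variance."""
    mean = sum(signal) / len(signal)
    var = sum((s - mean) ** 2 for s in signal) / len(signal)
    std = math.sqrt(var) or 1.0                # guard against silent clips
    return [(s - mean) / std for s in signal]
```

Applying `to_mono`, then `resample_linear`, then `standardize` to every recording gives waveforms with a common channel count, sampling rate, and amplitude scale.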

Figure 1.

Conceptual architecture of the proposed data processing and analysis pipeline.

Once preprocessed, the audio waveforms were transformed into two-dimensional (2D) time–frequency representations using MFCCs. This transformation emulates the perceptual scale of the human auditory system, providing a compact, image-like representation of the audio data that captures relevant spectral features. The resulting MFCC matrices serve as the input to the applied ML models designed for classification. In order to explore the effectiveness of different learning paradigms, a convolutional neural network (CNN), a temporal convolutional network (TCN), an RF classifier, and an SVM classifier were trained to classify the MFCC representations according to the defined surface roughness categories. The CNN and TCN consider the full MFCC time series as an image or 2D matrix, so they can model temporal and frequency patterns directly, whereas the RF and SVM rely on aggregated statistics of each coefficient, namely its mean and standard deviation over time.

To further investigate the temporal efficiency and real-time applicability of the trained models, the original audio signals were segmented into fixed-duration clips of five seconds. These cropped sample audio files are then processed through the developed pipeline employing the trained ML models in order to assess whether the models can maintain accurate classification performance with limited temporal information. This step serves to evaluate the feasibility of implementing the proposed system in practical, real-time industrial scenarios, where rapid surface condition assessment is essential. Overall, the proposed pipeline integrates experimental machining, signal processing, and ML approaches into a unified framework to enable automated and data-driven prediction of surface finish quality, supporting advancements in intelligent manufacturing systems aligned with Industry 4.0 objectives.
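The five-second segmentation described above can be sketched as follows. The function name and the handling of a trailing partial clip are assumptions, since the text does not state whether incomplete clips were kept or discarded.

```python
def split_into_clips(signal, sample_rate, clip_seconds=5.0, keep_remainder=False):
    """Cut a waveform into consecutive, non-overlapping fixed-duration clips.

    Assumption: a trailing clip shorter than clip_seconds is dropped
    unless keep_remainder is True.
    """
    clip_len = int(round(clip_seconds * sample_rate))
    if clip_len <= 0:
        raise ValueError("clip length must be at least one sample")
    clips = [signal[start:start + clip_len]
             for start in range(0, len(signal), clip_len)]
    if clips and len(clips[-1]) < clip_len and not keep_remainder:
        clips.pop()
    return clips
```

Each returned clip can then be passed through the same preprocessing and MFCC steps as a full recording before being classified by the trained models.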

2.1. Data Acquisition Process and Experimental Evaluation

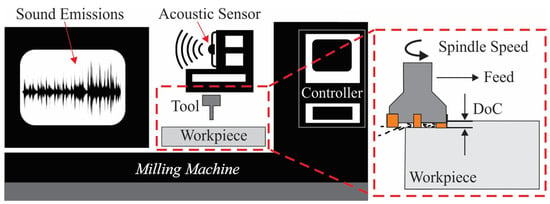

Regarding the data acquisition procedure and the evaluation of the surface quality, an experimental dataset was utilized that consists of 7444 audio recordings of sound signals captured during the milling procedure [25,26], as schematically illustrated in Figure 2. The purpose of this dataset was to explore how variations in certain milling conditions affect the surface roughness, which ultimately determines the quality of the machined products. These audio data were collected for different combinations of spindle speed, feed rate, and depth of cut. Moreover, the experiments were carried out in a controlled environment to minimize external noise that could compromise the accuracy of the recorded audio data. It is noteworthy that mechanical vibrations and equipment interference can significantly impact the quality and interpretation of sound emission signals during milling operations [27]. Structural vibrations from the spindle, guideways, or drive systems may introduce low-frequency noise that modulates the high-frequency sound emissions generated by tool-workpiece interactions. Additionally, electromagnetic interference from motors and controllers may introduce broadband electrical noise that contaminates the raw audio signal [28]. These interference effects can lead to misinterpretation of signal features, particularly when using time–frequency analysis methods where overlapping spectral components may obscure the characteristic signatures of surface formation mechanisms. Accordingly, the controlled setup was essential to ensure the integrity of the experimental data, enabling a more reliable analysis and interpretation of the sound characteristics associated with milling operations.

Figure 2.

Experimental setup for audio data acquisition.

The experiments were conducted for all combinations of feed rate (5 and 10 mm), depth of cut (0.25, 0.50, 0.75, and 1 mm), and spindle speed (500 and 1000 rpm), creating 2 × 4 × 2 = 16 different classes. The 7444 audio recordings were then grouped into 20 sets per class, with the recordings of each set merged into a single file, resulting in a total of 320 (20 × 16) sets of data. In addition, roughness was measured for each combination using a Carl Zeiss E-35B profilometer after the completion of each test. The evaluation of these measurements was also included in the dataset, providing key insights regarding the correlation between the surface quality of the machined part and the corresponding audio files. The results of the surface finish quality investigations in each class are documented in Table 1. It is noteworthy that while measurement uncertainty may affect tabulated roughness values, the developed model’s input features (audio waveforms) remain invariant to such post hoc uncertainties, as they directly reflect the physical dynamics of the milling process.
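As a quick check of the class arithmetic above, the experimental grid can be enumerated directly. The parameter values come from the text (the feed rate is listed without an explicit time unit there), while the class index order is purely illustrative and not taken from the dataset.

```python
from itertools import product

FEED_RATES = (5, 10)                        # values as given in the text
DEPTHS_OF_CUT_MM = (0.25, 0.50, 0.75, 1.0)
SPINDLE_SPEEDS_RPM = (500, 1000)
SETS_PER_CLASS = 20

# Hypothetical class index -> (feed rate, depth of cut, spindle speed).
classes = dict(enumerate(product(FEED_RATES, DEPTHS_OF_CUT_MM, SPINDLE_SPEEDS_RPM)))

total_sets = len(classes) * SETS_PER_CLASS
print(len(classes), total_sets)
```

Two feed rates, four depths of cut, and two spindle speeds give the 16 classes, and 20 merged files per class give the 320 data sets.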

Table 1.

Evaluation and classification of the surface roughness measurements.

2.2. Transformation of Audio Data into Image-like Representations

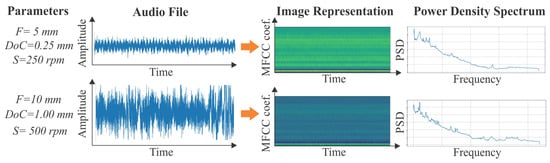

In order to enable the application of sophisticated networks and image-based DL architectures for classification tasks, the sound signals were transformed into 2D image-like representations using MFCCs [29]. Although the MFCC representation can be visualized as an image, owing to its 2D structure, it fundamentally constitutes a multivariate time series, where each MFCC corresponds to a frequency-related feature that evolves over time. This process leverages the time–frequency characteristics of audio signals, encoding relevant temporal and spectral information into a compact, structured format suitable for image-based analysis. Specifically, this audio-to-image conversion bridges the gap between signal processing and DL, leveraging the pattern recognition capabilities of DL architectures originally developed for computer vision tasks. It should be noted that spectral representations highlight transient frequency content and energy distribution over time, thereby offering a compressed yet information-rich depiction of the audio environment during milling. In this form, sound signatures corresponding to different surface finish qualities manifest as distinct visual textures or patterns, which can be learned and discriminated by a suitably trained ML model. Moreover, the proposed approach is particularly advantageous for real-time monitoring applications. Once trained, the models could classify incoming sound signals with minimal latency, providing immediate estimations of surface quality without interrupting the machining process. As such, the use of image-like audio representations constitutes a powerful, non-invasive, and scalable methodology for in-process surface finish evaluation.

The transformation of raw sound signals begins with segmenting the continuous waveform into short, overlapping frames using a sliding window with predefined frame length and hop size. For each frame, the power spectrum is calculated via the Fast Fourier Transform (FFT), providing a spectral representation of the signal. This spectrum is subsequently processed through a bank of triangular filters distributed according to the Mel scale, which models the nonlinear frequency sensitivity of human auditory perception. This filtering emphasizes perceptually significant frequency components while attenuating less informative regions. Following this, the logarithm of the filter-bank energies is taken and the Discrete Cosine Transform (DCT) is applied to decorrelate the resulting features and reduce dimensionality, resulting in a fixed number of MFCCs per frame. The temporal sequence of these MFCC vectors forms a two-dimensional representation, with rows corresponding to individual cepstral coefficients and columns representing time frames. These MFCC matrices are then standardized and optionally resized to conform with the input dimensions of the AI models, thus enabling efficient learning of discriminative patterns from audio data in a visual domain. Characteristic results of the applied transformation method are displayed in Figure 3. Finally, it is worth noting that even though the Mel scale is conventionally employed in audio feature extraction, its mapping, which is derived from human auditory perception, may not be fully aligned with machine-generated sound data. An alternative approach for analyzing such data, particularly with emphasis on spectral characteristics, involves the examination of the Power Spectral Density (PSD) of the signals. This can be effectively visualized through characteristic diagrams depicting the PSD as a function of frequency, as shown on the right of Figure 3. However, in the present work, the MFCC approach demonstrated robust efficacy in assessing surface quality characteristics of the machined products, proving particularly effective at capturing subtle texture variations and finish irregularities.
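The MFCC steps described above (framing, power spectrum, Mel filter bank, logarithm, DCT) can be sketched in plain Python. This is an illustration under stated assumptions rather than the authors' code: the Hann window, the common 2595·log10(1 + f/700) Mel formula, the unnormalized DCT-II, and the small default sizes are all choices made here, and a naive DFT replaces the FFT for self-containment. In practice a library routine such as `librosa.feature.mfcc` would normally be used.

```python
import cmath
import math


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def frame_signal(x, frame_len, hop):
    """Overlapping frames taken with a sliding window."""
    return [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]


def power_spectrum(frame):
    """Hann-windowed power spectrum (naive DFT, bins 0..n/2)."""
    n = len(frame)
    win = [0.5 - 0.5 * math.cos(2.0 * math.pi * k / (n - 1)) for k in range(n)]
    xs = [a * w for a, w in zip(frame, win)]
    return [abs(sum(xs[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) ** 2 / n
            for k in range(n // 2 + 1)]


def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters whose centres are equally spaced on the Mel scale."""
    top = hz_to_mel(sr / 2.0)
    mel_pts = [i * top / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [mel_to_hz(m) * n_fft / sr for m in mel_pts]  # fractional bin index
    bank = []
    for j in range(1, n_filters + 1):
        lo, ce, hi = bins[j - 1], bins[j], bins[j + 1]
        row = []
        for k in range(n_fft // 2 + 1):
            if lo < k <= ce:
                row.append((k - lo) / (ce - lo))     # rising edge
            elif ce < k < hi:
                row.append((hi - k) / (hi - ce))     # falling edge
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def dct2(v, n_out):
    """Unnormalized DCT-II, keeping the first n_out coefficients."""
    n = len(v)
    return [sum(v[i] * math.cos(math.pi * c * (i + 0.5) / n) for i in range(n))
            for c in range(n_out)]


def mfcc(x, sr, frame_len=64, hop=32, n_filters=12, n_mfcc=8):
    """Return a matrix with rows = coefficients and columns = time frames."""
    bank = mel_filterbank(n_filters, frame_len, sr)
    frames = []
    for fr in frame_signal(x, frame_len, hop):
        p = power_spectrum(fr)
        log_e = [math.log(sum(w * q for w, q in zip(row, p)) + 1e-10)
                 for row in bank]
        frames.append(dct2(log_e, n_mfcc))
    return [list(col) for col in zip(*frames)]
```

The defaults are deliberately small so that the naive DFT stays fast; the configuration in this work uses 40 coefficients per frame.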

Figure 3.

Two-dimensional image representation of sound signals.

2.3. Artificial Intelligence Algorithms for Real-Time Evaluation of the Milling Procedure

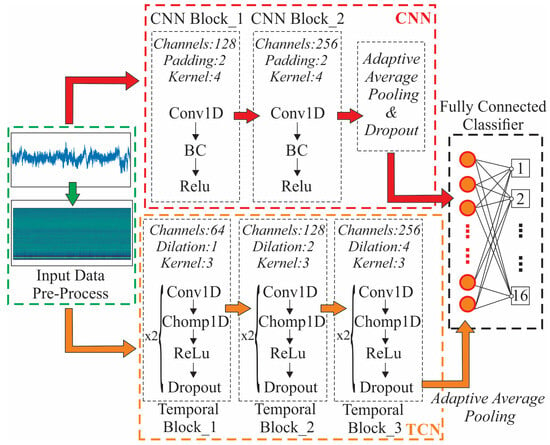

The main objective of the present work is to develop an autonomous system that can receive audio signals from a milling center in real time and evaluate the surface finish of the manufactured component. To this end, four AI models (CNN, TCN, RF, and SVM) were developed to classify audio signals captured during milling operations. The CNN and TCN models take as input a 2D representation of MFCCs, which encodes the time–frequency information of the audio signals as described in the previous section. Upon loading, the audio signals are converted to mono and normalized to zero mean and unit variance. Considering the CNN, the upper part of Figure 4 displays the employed network, which comprises two convolutional layers with ReLU activations and batch normalization to enhance training stability and convergence. The first convolutional layer maps the input MFCC features with 40 coefficients to 128 channels, followed by a second layer that increases this to 256 channels. Both layers use a kernel size of four with padding to preserve temporal dimensions. An adaptive average pooling layer reduces the temporal dimension to a fixed size of one, followed by a dropout layer with a rate of 0.2 to mitigate overfitting. The final fully connected layer maps the resulting feature representation to 16 output classes corresponding to the different experimental configurations. The training is conducted for 50 epochs using the Adam optimizer with a learning rate of 0.001. Finally, the loss function used is categorical cross-entropy, which is suitable for multi-class classification.
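To make the layer dimensions above concrete, the following sketch traces tensor shapes and counts trainable parameters for the described CNN. It assumes one-dimensional convolutions over time with the 40 MFCCs treated as input channels, bias terms in the convolutional and linear layers, and a learnable scale and shift per batch-normalization channel. None of these details is stated explicitly in the text, so the resulting total is indicative only.

```python
N_MFCC, KERNEL, N_CLASSES = 40, 4, 16


def conv1d_params(c_in, c_out, kernel):
    return c_in * c_out * kernel + c_out      # weights + biases (assumed)


def batchnorm_params(channels):
    return 2 * channels                       # scale and shift (assumed)


def linear_params(n_in, n_out):
    return n_in * n_out + n_out


layers = [
    ("conv1 40->128, k=4", conv1d_params(N_MFCC, 128, KERNEL)),
    ("batchnorm1", batchnorm_params(128)),
    ("conv2 128->256, k=4", conv1d_params(128, 256, KERNEL)),
    ("batchnorm2", batchnorm_params(256)),
    ("fc 256->16", linear_params(256, N_CLASSES)),
]
total_params = sum(count for _, count in layers)


def trace_shapes(n_frames):
    """Shapes per sample as (channels, time), assuming padding keeps the length.

    With an even kernel of 4, preserving the length requires a total
    padding of kernel - 1 = 3 samples, split unevenly between both ends.
    """
    return [
        ("input", (N_MFCC, n_frames)),
        ("conv1 + ReLU + BN", (128, n_frames)),
        ("conv2 + ReLU + BN", (256, n_frames)),
        ("adaptive avg pool", (256, 1)),
        ("dropout 0.2 + fc", (N_CLASSES,)),
    ]
```

Because the adaptive pooling collapses the time axis to one value per channel, the classifier accepts recordings of any length, which is what allows the same network to be applied to shorter clips.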

Figure 4.

Architectural diagrams of the image-based models.

The primary differences between the CNN classifier and the TCN classifier lie in their architectural design and temporal modeling capabilities, as exhibited in the bottom part of Figure 4. The TCN classifier is specifically designed to model sequential dependencies through the use of dilated causal convolutions. In particular, the model comprises three stacked temporal blocks, each containing two causal, dilated 1D convolutional layers, where each convolution is followed by a ReLU activation and dropout. Causality is enforced by a custom Chomp1d layer that trims the excess padding introduced by dilation, and the dilation rate doubles with each subsequent block, yielding an exponentially increasing receptive field. Finally, the network processes sequences of MFCCs and aggregates the temporal information via adaptive average pooling, followed by a fully connected output layer for final classification. This enables the TCN to capture long-term temporal patterns while preserving the temporal ordering of the input data.
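The interplay of padding, trimming, and dilation described above can be illustrated with a single-channel sketch in plain Python. The kernel size is not given in the text, so the value of 3 used in the usage note is a placeholder, and the function names are hypothetical. The trimming step mirrors what a Chomp1d-style layer does after a symmetrically padded convolution.

```python
def causal_dilated_conv(x, weights, dilation):
    """Single-channel dilated convolution made causal by trimming.

    The input is padded by (k - 1) * dilation on both sides, as a standard
    convolution layer would do, and the trailing outputs are then removed
    so that output t depends only on inputs at times <= t.
    """
    k = len(weights)
    pad = (k - 1) * dilation
    padded = [0.0] * pad + list(x) + [0.0] * pad
    full = [sum(weights[j] * padded[t + j * dilation] for j in range(k))
            for t in range(len(padded) - pad)]
    return full[:len(x)]                      # the "chomp" step


def receptive_field(kernel_size, n_blocks=3, convs_per_block=2):
    """Receptive field in frames when the dilation doubles per block."""
    field = 1
    for block in range(n_blocks):
        dilation = 2 ** block                 # 1, 2, 4, ...
        field += convs_per_block * (kernel_size - 1) * dilation
    return field
```

With three blocks of two convolutions each and an assumed kernel size of 3, `receptive_field(3)` evaluates to 29 frames, whereas the same six layers without dilation would cover only 13.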

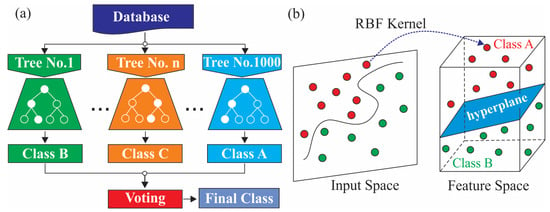

The RF classifier employed in this study utilizes an ensemble learning approach, constructing multiple decision trees during training and determining the final output based on the majority vote of the individual tree predictions. To generate a compact feature set suitable for the RF classifier, statistical descriptors are computed by taking the mean and standard deviation of each MFCC across the time dimension, resulting in an 80-dimensional feature vector (40 MFCC means and 40 MFCC standard deviations). The designed classifier consists of 1000 decision trees, generated through bootstrap aggregation. Each tree is trained on a random subset of features and samples, promoting diversity and reducing overfitting. During inference, the ensemble assigns class labels by aggregating the outputs of individual trees through majority voting, as presented in Figure 5a. This architecture is particularly well suited to audio classification tasks where feature interpretability and robustness to noise are prioritized, while the non-parametric nature of the algorithm accommodates the potentially nonlinear relationships inherent in audio feature spaces. The SVM classifier, in turn, is a margin-based learning algorithm that seeks an optimal hyperplane separating the classes with the maximum margin in a high-dimensional space. As in the case of the RF model, fixed-length feature vectors were produced from the mean and standard deviation of each MFCC across time, yielding an 80-dimensional representation for each audio file. This approach ensures that variations in signal duration do not affect the input dimensionality, thereby standardizing the input space for classification. Moreover, the classification model is based on a radial basis function (RBF) kernel SVM, chosen for its capability to model nonlinear decision boundaries in high-dimensional spaces, as schematically illustrated in Figure 5b. Finally, regarding the model’s configuration, the penalty parameter ‘C’ was set to 40, balancing margin maximization and classification errors, and the kernel coefficient ‘γ’ was set to ‘scale’, which automatically adjusts based on the input feature variance.
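The fixed-length feature vector shared by the RF and SVM models can be sketched as follows. The function name is hypothetical, and the use of the population standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import fmean, pstdev


def summarize_mfcc(mfcc_matrix):
    """Collapse an MFCC matrix (rows = coefficients, columns = frames)
    into per-coefficient means followed by standard deviations."""
    means = [fmean(row) for row in mfcc_matrix]
    stds = [pstdev(row) for row in mfcc_matrix]   # population std (assumed)
    return means + stds
```

For 40 coefficients this yields 80 values regardless of clip duration. If scikit-learn were used, the stated hyperparameters would correspond to `RandomForestClassifier(n_estimators=1000)` and `SVC(kernel="rbf", C=40, gamma="scale")`, although the text does not name the library that was actually employed.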

Figure 5.

Typical workflow of the (a) RF and (b) SVM classifiers.

3. Results

The performance evaluation of the developed AI models was based on a comprehensive set of metrics, each offering unique insights into different aspects of classification effectiveness [30]. More specifically, the performance of ML models for evaluating surface roughness during milling processes is assessed using four key metrics: accuracy, precision, recall, and F1-score. Accuracy provides a general indication of classification correctness, which is reliable given the balanced dataset. Precision reflects the model’s ability to correctly identify specific surface roughness categories without misclassifying others, thereby minimizing false alarms. Recall indicates how effectively the model detects all instances of a given roughness level, ensuring that critical surface defects are not overlooked. The F1-score balances these two aspects, offering a robust single measure particularly useful in scenarios where both over- and under-detection carry practical consequences. Together, these metrics translate directly to the system’s ability to distinguish subtle variations in surface quality using sound signals. Importantly, all classifiers are evaluated using this unified framework, ensuring fair and objective comparison across different learning-based methodologies tailored for tool condition monitoring and surface quality prediction.
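The four metrics above can be computed from true and predicted labels as in the following sketch. Macro averaging across classes is an assumption, because the text does not state how the per-class precision, recall, and F1 values were combined.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, per-class (precision, recall, F1), and macro averages."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    per_class = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2.0 * precision * recall / denom if denom else 0.0
        per_class[c] = (precision, recall, f1)

    # Macro average: every class contributes equally (assumed convention).
    macro = tuple(sum(v[i] for v in per_class.values()) / len(per_class)
                  for i in range(3))
    return accuracy, per_class, macro
```

Since the dataset is balanced across the 16 classes, macro and support-weighted averages would coincide for recall and differ only slightly for precision and F1.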

3.1. Comparative Analysis of the Classification Performance

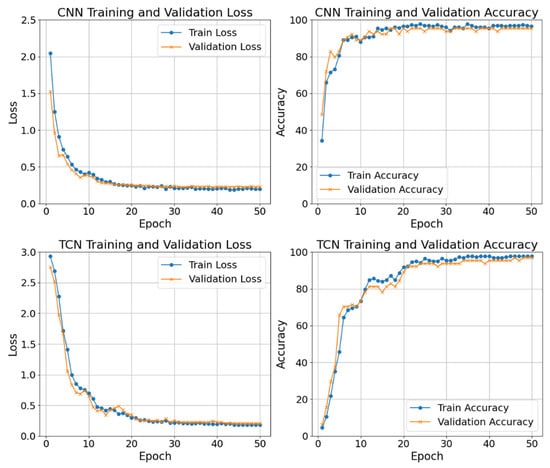

The effectiveness of the CNN and TCN models was also assessed through two diagnostic plots, namely loss versus epochs and accuracy versus epochs. The diagrams in Figure 6 provide insights into the learning behavior of the models throughout the training process and serve as indicators of generalization capability and convergence dynamics. The loss versus epochs diagrams illustrate how the value of the loss function, specifically the cross-entropy loss used for multi-class classification, evolves across successive training epochs. For both the CNN and TCN models, these plots include two distinct curves representing the loss on the training set and the validation set, respectively. A consistent decline in the training loss indicates that both models progressively minimized the discrepancy between predicted and true class labels during training. In addition, the validation loss follows a similar decreasing trend, suggesting effective generalization to unseen data. In the case of the CNN model, the training loss drops rapidly within the first ten epochs, reflecting the model’s ability to quickly capture spatial features from the MFCC-based input representations, and stabilizes below 0.3 after approximately 20 epochs, suggesting convergence. The CNN validation loss follows a similar trend, albeit with slightly higher values than the training loss, indicating a moderate generalization gap and the absence of significant overfitting, and it tends to plateau after a certain number of epochs. By comparison, the TCN model demonstrates a more gradual reduction in training loss, while maintaining a marginally more stable and consistently low validation loss throughout the training phase. This behavior underscores the TCN model’s slightly enhanced ability to capture temporal dependencies within the audio signal, along with its increased resilience to overfitting.

Figure 6.

Training versus validation loss and accuracy curves for the CNN and TCN models.
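For reference, the cross-entropy loss tracked in the loss curves of Figure 6 can be sketched as follows. This is a generic illustration of multi-class cross-entropy computed from raw network outputs (logits), not the training code of the study; the function names and the three-class example are assumptions.

```python
import math


def softmax(logits):
    """Convert raw scores into class probabilities (shifted by the max for stability)."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits_batch, labels):
    """Mean multi-class cross-entropy; `labels` holds integer class indices."""
    losses = []
    for logits, label in zip(logits_batch, labels):
        probs = softmax(logits)
        losses.append(-math.log(probs[label]))
    return sum(losses) / len(losses)


# Hypothetical three-class example: an undecided output versus a confident, correct one.
undecided = cross_entropy([[0.0, 0.0, 0.0]], [0])  # equals ln(3)
confident = cross_entropy([[5.0, 0.0, 0.0]], [0])  # much closer to zero
print(undecided, confident)
```

A loss that stabilizes below 0.3, as reported for the CNN, therefore corresponds to the correct class receiving, on a geometric-mean basis, a probability above exp(−0.3) ≈ 0.74.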

Furthermore, the accuracy versus epochs diagrams complement the loss plots by showing the classification accuracy attained on both the training and validation datasets over the course of training. For the CNN model, both training and validation accuracy increase swiftly and exceed 90% by epoch 15, as displayed in the upper right part of Figure 6. After this point, the accuracy curves plateau, with the training accuracy slightly surpassing the validation accuracy across the entire training period. The TCN model, on the other hand, displays a steadier increase in both training and validation accuracy, with its validation accuracy ultimately surpassing that of the CNN in later epochs. This behavior suggests that the TCN architecture, which incorporates dilated causal convolutions and residual connections, is more proficient at modeling sequential information and generalizes better in time-dependent audio classification tasks. Overall, the loss and accuracy trajectories indicate that while CNNs are effective at capturing localized spectral features, TCNs could offer a more powerful mechanism for leveraging temporal patterns within the MFCC representations, resulting in more stable training dynamics and improved validation performance. These findings underscore the importance of temporal modeling in sound signal classification, as well as the potential advantage of TCNs over conventional CNNs in such contexts.
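To make the notion of a dilated causal convolution concrete, the following minimal sketch applies a single such filter to a one-dimensional sequence. It is a didactic, pure-Python illustration rather than the TCN layer used in this work; the function name, the zero-padding of past samples, and the example weights are assumptions.

```python
def causal_dilated_conv1d(x, weights, dilation=1):
    """1-D causal convolution with dilation.

    output[t] depends only on x[t], x[t - dilation], x[t - 2 * dilation], ...
    weights[0] multiplies the current sample and weights[j] the sample that lies
    j * dilation steps in the past; positions before the sequence start count as zero.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for j, w in enumerate(weights):
            idx = t - j * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


# With dilation 2 and unit weights, each output is x[t] + x[t - 2].
print(causal_dilated_conv1d([1, 2, 3, 4, 5], [1.0, 1.0], dilation=2))
# [1.0, 2.0, 4.0, 6.0, 8.0]
```

Because no output position reads from future samples, stacking such layers with growing dilation lets a network cover long temporal contexts without violating the time order of the MFCC frames.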

Additionally, the performance of the developed CNN, TCN, RF, and SVM classifiers was evaluated using the aforementioned metrics (accuracy, precision, recall, and F1-score), as documented in Table 2. The TCN classifier achieved the highest performance across all metrics, indicating a superior capability in capturing temporal dependencies within the input MFCC features. Its advantage is particularly notable in precision and F1-score, indicating both a low false positive rate and a well-balanced trade-off between precision and recall. The CNN model follows closely behind with only marginally lower effectiveness, benefiting from its hierarchical feature extraction ability. It maintains a solid balance across all metrics, making it a reliable alternative, especially in contexts where temporal modeling is less critical. The RF algorithm achieves respectable results by leveraging its ensemble approach to handle feature interactions in the sound data, but it lags behind the DL models, particularly in balancing recall and precision. This gap can be attributed to its reliance on summary statistical features, such as the mean and standard deviation of the MFCCs, rather than the full temporal structure, which limits its capacity to capture complex patterns. Finally, the SVM classifier yields the lowest accuracy, precision, and F1-score, likely due to its reduced capacity to model complex nonlinear patterns in the high-dimensional feature space, especially in the absence of sequential information. Overall, the comparative analysis highlights the strength of DL architectures, particularly those designed for temporal modeling, in audio-based classification tasks. These findings underscore the importance of preserving temporal dynamics in the feature representation, as demonstrated by the performance advantage of the TCN and CNN models over traditional ML approaches.

Table 2.

Comparative performance evaluation of the developed AI models.
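The loss of temporal structure in the summary features supplied to the RF and SVM classifiers can be illustrated with a short sketch. It assumes an MFCC matrix stored as one row per coefficient and one column per frame, and uses the population standard deviation; the function name `summarize_mfcc` and the toy values are hypothetical.

```python
from statistics import fmean, pstdev


def summarize_mfcc(mfcc):
    """Collapse an MFCC matrix (rows: coefficients, columns: frames) into a
    fixed-length vector holding all per-coefficient means followed by all
    per-coefficient standard deviations."""
    means = [fmean(row) for row in mfcc]
    stds = [pstdev(row) for row in mfcc]
    return means + stds


# Two toy single-coefficient trajectories with opposite temporal trends.
rising = [[1.0, 2.0, 3.0]]
falling = [[3.0, 2.0, 1.0]]
print(summarize_mfcc(rising))
print(summarize_mfcc(falling))
```

Both trajectories share the same mean and standard deviation, so a classifier operating on these summaries cannot distinguish them, whereas a sequence model that receives the full frame-by-frame representation can.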

3.2. Impact of MFCCs on the Milling Conditions and the Surface Roughness

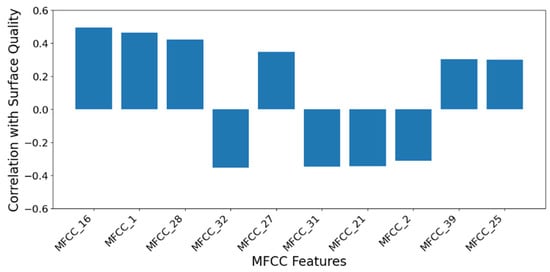

A Pearson correlation plot between individual MFCCs and surface roughness quality reveals the degree of association between each audio feature and the roughness output, as demonstrated in Figure 7. The plot displays a diverse range of correlation values, reflecting the varying sensitivities of the most dominant MFCC features to the sound characteristics of the milling procedure. A positive correlation indicates that an increase in the corresponding coefficient is associated with higher roughness values, whereas a negative correlation implies an inverse relationship, in which higher values of the coefficient correspond to smoother surfaces. The figure thus serves to identify which spectral components of the milling audio signals exhibit the strongest relationships with surface finish, thereby informing feature selection and model interpretability in the context of audio-based machining quality assessment. Consequently, the MFCCs could be utilized not only as input features for classification models but also as a means of gaining interpretable, frequency-specific insight into the physical phenomena that occur during the milling process.

Figure 7.

Dominant MFCC features correlated with surface roughness.
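A correlation ranking of the kind shown in Figure 7 can be sketched as follows. The sketch assumes that each recording is represented by its per-coefficient MFCC means and that the roughness quality is encoded numerically; both the encoding and the toy values are assumptions, and the function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        return 0.0  # convention of this sketch for a constant feature or target
    return cov / (norm_x * norm_y)


def rank_mfcc_by_correlation(features, target):
    """Rank MFCC indices by |r| with the target.

    `features` holds one row per recording and one column per MFCC mean.
    """
    n_coeff = len(features[0])
    scores = [
        (k, pearson([row[k] for row in features], target)) for k in range(n_coeff)
    ]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Toy data: four recordings, two MFCC means each, with a numeric roughness value.
features = [[1.0, 5.0], [2.0, 3.0], [3.0, 4.0], [4.0, 1.0]]
roughness = [0.8, 1.6, 2.4, 3.2]
for index, r in rank_mfcc_by_correlation(features, roughness):
    print(f"MFCC column {index}: r = {r:+.3f}")
```

In this toy case the first column rises together with roughness (positive correlation), while the second tends to fall as roughness increases (negative correlation), mirroring the two kinds of relationship discussed above.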

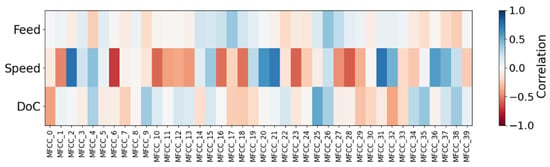

In addition, Figure 8 provides insight into the relationship between the process parameters and their audio signatures. The resulting correlations are visualized using a heatmap-style bar plot, where the horizontal axis represents the MFCC indices and the vertical axis denotes the corresponding Pearson correlation values, ranging from −1 to 1. These correlations may reflect underlying physical phenomena such as tool-workpiece interactions, chatter vibrations, or chip formation dynamics, which are known to influence both the sound emissions and the resulting surface quality [31]. In particular, low- to mid-order coefficients exhibit strong positive and negative correlations with the investigated milling conditions, indicating that variations in these parameters introduce dominant changes in the audio signals, likely related to tool-workpiece interaction dynamics. The correlation patterns could therefore assist in selecting MFCC features for real-time process monitoring and control, guiding downstream model design and dimensionality reduction strategies by highlighting the most responsive spectral features, and could lend physical interpretability to data-driven models. This approach could help bridge the gap between operational parameters and their audio consequences, enabling a more fundamental understanding of the milling operation through its emitted sound characteristics. Finally, this capability could also support enhanced diagnostics and monitoring within data-driven manufacturing systems, aligning with the broader goals of intelligent machining in Industry 4.0 applications.

Figure 8.

Correlation analysis between MFCC features and milling process parameters.

It is relevant to highlight that each MFCC corresponds to a different region of the mel-scaled frequency spectrum, which reflects how sound frequencies are perceived and is particularly suitable for analyzing sound signals from machining operations [32]. Moreover, the correlation analysis revealed strong relationships between specific MFCCs and key milling conditions and outcomes. Notably, each machining variable correlates with a distinct set of coefficients: the spindle speed is strongly correlated with MFCC_1, MFCC_2, MFCC_16, MFCC_21, and MFCC_28; the depth of cut aligns with MFCC_25 and MFCC_32; and the feed rate shows a relationship with MFCC_4 and MFCC_26, as indicated by Figure 8. By identifying which MFCCs are strongly correlated with key milling conditions, it becomes feasible to infer process states or surface quality directly from the acoustic signal, without additional contact-based sensors or process interruptions. Hence, in practical terms, these correlations allow the development of quality control systems that estimate the surface finish quality in situ from audio signals alone. Integrating such models into CNC systems could potentially enable real-time adjustment of the applied milling parameters based on sound feedback, which can minimize tool damage, improve surface quality, and reduce scrap rates. Therefore, mapping the correlations between individual MFCCs, surface roughness, and cutting conditions may enable a more sophisticated interpretation of the relationship between audio features and machining outcomes.

Additionally, to gain further insight into the relevance of MFCC features with respect to the milling process parameters, a correlation analysis was also conducted between the mean values of the MFCCs and the corresponding feed rate, spindle speed, and depth of cut. The results indicate that specific MFCCs, most notably those in the lower-order range (MFCC_1 through MFCC_3), demonstrate relatively strong correlations with the spindle speed, suggesting a relationship between spectral content and the fundamental rotational dynamics of the spindle. This observation is consistent with the nature of sound emission signals, where periodic mechanical components generate harmonic structures predominantly captured in the lower frequency range. These frequency components are emphasized by the Mel filter bank and subsequently encoded in the initial MFCCs. As such, the lower-order MFCCs can be interpreted as capturing the fundamental harmonic and its multiples, which are directly influenced by changes in spindle rotational velocity. This analysis not only reinforces the discriminative power of MFCC features for regime classification but also establishes a physical connection between signal representation and machining dynamics.
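The link between spindle speed and low-frequency spectral content can be made concrete with a short calculation of the periodic excitation frequencies and their position on the mel scale. The spindle speed of 3000 rpm and the four-tooth cutter are hypothetical values that are not taken from the experiments, and the HTK-style mel formula is one common convention that may differ from the one used in this work.

```python
import math


def hz_to_mel(f_hz):
    """HTK-style mel-scale conversion (one of several conventions in use)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def tooth_passing_harmonics(spindle_rpm, n_teeth, n_harmonics=3):
    """Tooth-passing frequency and its first multiples, in Hz."""
    rotation_hz = spindle_rpm / 60.0        # spindle revolutions per second
    tooth_pass_hz = rotation_hz * n_teeth   # cutting-edge engagements per second
    return [tooth_pass_hz * k for k in range(1, n_harmonics + 1)]


# Hypothetical operating point: 3000 rpm with a four-tooth cutter.
for f in tooth_passing_harmonics(3000, 4):  # 200, 400, and 600 Hz
    print(f"{f:.0f} Hz -> {hz_to_mel(f):.1f} mel")
```

Under these assumed values the fundamental and its first multiples lie well below 1 kHz, where the mel scale is close to linear and the filter bank is densest, which is consistent with the lower-order coefficients responding to changes in spindle speed.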

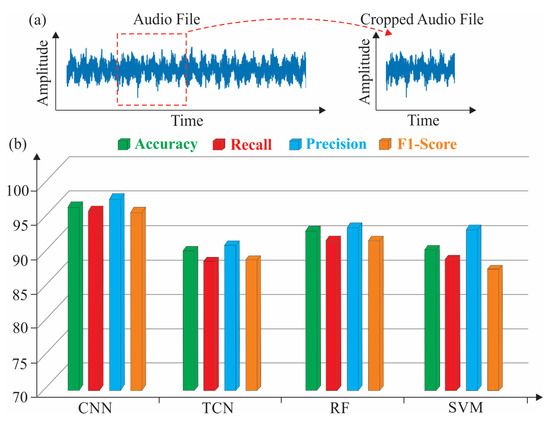

3.3. Assessment of the AI Models Performance on Short-Duration Test Data

To assess the robustness and generalizability of the developed AI classifiers, it is crucial to evaluate their performance on unseen data, particularly when the input structure deviates from the training conditions. One meaningful approach is to evaluate the AI models on audio signals that have been cropped to a shorter duration than those used during the training phase (10–15% of the original duration), as displayed in Figure 9a. This strategy simulates more realistic industrial scenarios where rapid decision-making based on limited audio information is often required. Reducing the signal length also reduces the amount of available temporal and spectral information, which naturally leads to a decline in classification accuracy. Moreover, the relative performance ranking among the models did not remain consistent with that observed on the full-length signals.

Figure 9.

(a) Test set extraction based on a machining duration of five seconds and (b) the corresponding performance comparison of the developed AI models.
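The cropping step used to build the short-duration test set can be sketched as below. The position of the excerpt within the recording is an assumption of this sketch (the text only fixes its relative length), and the function name `crop_signal` and its parameters are hypothetical.

```python
def crop_signal(samples, fraction, start_fraction=0.0):
    """Return a contiguous excerpt covering `fraction` of the recording.

    `start_fraction` sets where the excerpt begins; the start is shifted back
    if the excerpt would otherwise run past the end of the recording.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must lie in (0, 1]")
    n = len(samples)
    length = max(1, round(n * fraction))
    start = min(int(n * start_fraction), n - length)
    return samples[start:start + length]


# Toy recording of 1000 samples, cropped to 10% of its original length.
signal = list(range(1000))
short_clip = crop_signal(signal, fraction=0.10)
print(len(short_clip))  # 100
```

The shortened clips would then pass through the same resampling, normalization, and MFCC extraction as the full-length recordings before being classified.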

In particular, the performance of the TCN model degrades significantly, making it the least effective among the examined classifiers. This decline can be explained by the model’s dependency on longer input sequences to fully leverage its receptive field. With shorter inputs, the TCN can no longer use its dilated convolutions effectively, as the sequential patterns become truncated and insufficient for the model to recognize the long-range dependencies it was trained to detect. The drop in TCN performance on shorter audio samples thus illustrates a limitation of sequence models that rely on extended context, highlighting the importance of input length compatibility in temporal DL architectures. On the other hand, models such as the CNN, which rely more on local feature extraction and less on the temporal extent of the signal, retain comparatively high performance under these conditions, since spatial patterns can still be extracted from the MFCC representation of shorter clips, as exhibited in Figure 9b. The RF classifier, by contrast, relies heavily on aggregated statistical features computed over the full audio duration. With shorter signals, these statistical features become less stable and less representative of the underlying audio class, leading to higher variability and weaker decision boundaries across the decision trees. As a result, the ensemble of trees in the RF lacks the discriminative power it exhibited when trained and evaluated on longer, more information-rich signals. The SVM classifier is less affected: its kernel-based mapping, coupled with the margin maximization principle, allows it to retain a higher degree of generalization when the feature set becomes less descriptive. Therefore, although both the RF and SVM classifiers underperform on short-duration audio inputs compared to their performance on the original full-length data, the SVM’s performance remains comparatively more stable.
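The dependence of a TCN on input length can be quantified through its receptive field, i.e., the number of input frames that influence a single output. The sketch below uses the standard expression for a stack of residual blocks in which each block applies the same dilation in all of its convolutions; the kernel size, dilation schedule, and clip length are hypothetical values and do not describe the architecture used in this study.

```python
def tcn_receptive_field(kernel_size, dilations, convs_per_block=2):
    """Receptive field (in frames) of stacked dilated causal convolutions.

    Each residual block is assumed to contain `convs_per_block` convolutions
    that share one dilation, so every block adds
    convs_per_block * (kernel_size - 1) * dilation frames of context.
    """
    return 1 + convs_per_block * (kernel_size - 1) * sum(dilations)


# Hypothetical configuration: kernel size 3 with dilations doubling from 1 to 32.
field = tcn_receptive_field(3, [1, 2, 4, 8, 16, 32])
clip_frames = 100  # hypothetical number of MFCC frames in a cropped clip
print(field)                # 253
print(clip_frames < field)  # True: the clip is shorter than the receptive field
```

When a cropped clip provides fewer frames than the receptive field, the deepest layers mostly read padding rather than signal, which offers one plausible reading of the performance drop described above.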

4. Conclusions and Outlook

The present study demonstrated a robust pipeline for evaluating the surface roughness in milling processes using sound data and learning-based methodologies. By associating each recorded sound signal with a corresponding surface roughness quality, the proposed methodology enables precise classification through a series of well-integrated stages, ranging from signal acquisition and preprocessing to feature extraction, model training, and evaluation. The signal preprocessing pipeline ensures data consistency and maximizes the information retained for classification purposes. In addition, the use of MFCCs as the primary feature representation effectively captures relevant spectral and temporal information from the sound signals, enabling the applied AI classifiers to distinguish between various surface finish classes. The architectures of the developed models successfully extract discriminative patterns and demonstrate efficient training convergence and generalization capabilities when validated on a test dataset. In addition, due to the model’s learned representation space, unseen sound signals would be mapped to the closest existing cluster in the feature space, enabling reliable classification even with minor variations. Overall, the proposed framework provides a lightweight, non-intrusive, and scalable solution for surface roughness classification and enhancement, making it well-suited for deployment in intelligent manufacturing environments. However, it is important to note that this study has limitations stemming from its specific experimental setup. The analysis was conducted using a single material, cutting tool, and milling machine under fixed operating parameters. These restrictions underscore the need for additional validation across a wider range of operational conditions. To enhance generalizability, future research could expand the dataset to include multiple machines, tool types, workpiece materials, and cutting conditions beyond those used in training. This approach would allow the model to develop more robust and generalizable sound signal representations. Furthermore, domain adaptation and transfer learning methods could be investigated to fine-tune pre-trained models for new operating environments using limited labeled data. Another promising strategy is to augment the training dataset with synthetic or semi-synthetic audio signals that emulate diverse machining conditions, thereby increasing data variability and improving operational resilience.

Finally, the introduced research paves the way for further studies that explore real-time deployment, multi-sensor fusion, and broader generalization across different machining conditions and materials. By integrating AI models into CNC systems, it could become possible to monitor the machining quality and dynamically adjust cutting parameters to maintain optimal surface finish quality. Such closed-loop control could also reduce production defects and extend the cutting tool’s life. Another promising direction involves deploying AI models on edge computing devices, enabling decentralized processing and decision-making directly on the shop floor. This approach would support faster response times and reduce dependence on cloud infrastructure, aligning with the real-time demands of Industry 4.0 environments. Further advancements could explore multi-modal data integration, combining signals from various sensors such as dynamometers, thermal cameras, and vision cameras. By training learning-based models on fused sensor data, the corresponding predictions and recommendations could achieve greater accuracy and resilience under varying operating conditions during machining.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The author declares no conflicts of interest.

References

1. Val, S.; Lambán, M.P.; Lucia, J.; Royo, J. Analysis and Prediction of Wear in Interchangeable Milling Insert Tools Using Artificial Intelligence Techniques. Appl. Sci. 2024, 14, 11840.

2. Zhao, C.; Tian, W.; Yan, Q.; Bai, Y. Prediction of Surface Roughness in Milling Additively Manufactured High-Strength Maraging Steel Using Broad Learning System. Coatings 2025, 15, 566.

3. Palová, K.; Kelemenová, T.; Kelemen, M. Measuring Procedures for Evaluating the Surface Roughness of Machined Parts. Appl. Sci. 2023, 13, 9385.

4. Manjunath, K.; Tewary, S.; Khatri, N.; Cheng, K. Monitoring and Predicting the Surface Generation and Surface Roughness in Ultraprecision Machining: A Critical Review. Machines 2021, 9, 369.

5. Ercetin, A.; Der, O.; Akkoyun, F.; Chandrashekarappa, M.P.G.; Şener, R.; Çalışan, M.; Olgun, N.; Chate, G.; Bharath, K.N. Review of Image Processing Methods for Surface and Tool Condition Assessments in Machining. J. Manuf. Mater. Process. 2024, 8, 244.

6. Mehmood, T.; Khalil, M.S. Enhancement of Machining Performance of Ti-6Al-4V Alloy Though Nanoparticle-Based Minimum Quantity Lubrication: Insights into Surface Roughness, Material Removal Rate, Temperature, and Tool Wear. J. Manuf. Mater. Process. 2024, 8, 293.

7. García Plaza, E.; Núñez López, P.J.; Beamud González, E.M. Multi-Sensor Data Fusion for Real-Time Surface Quality Control in Automated Machining Systems. Sensors 2018, 18, 4381.

8. Lario, J.; Mateos, J.; Psarommatis, F.; Ortiz, Á. Towards Zero Defect and Zero Waste Manufacturing by Implementing Non-Destructive Inspection Technologies. J. Manuf. Mater. Process. 2025, 9, 29.

9. Khazal, H.; Belaziz, A.; Al-Sabur, R.; Khalaf, H.I.; Abdelwahab, Z. Unveiling Surface Roughness Trends and Mechanical Properties in Friction Stir Welded Similar Alloys Joints Using Adaptive Thresholding and Grayscale Histogram Analysis. J. Manuf. Mater. Process. 2025, 9, 159.

10. Tsai, M.-H.; Lee, J.-N.; Tsai, H.-D.; Shie, M.-J.; Hsu, T.-L.; Chen, H.-S. Applying a Neural Network to Predict Surface Roughness and Machining Accuracy in the Milling of SUS304. Electronics 2023, 12, 981.

11. Teti, R.; Jemielniak, K.; O’Donnell, G.; Dornfeld, D. Advanced monitoring of machining operations. CIRP Annals 2010, 59, 717–739.

12. Ghosh, S.; Knoblauch, R.; Mansori, M.E.; Corleto, C. Towards AI driven surface roughness evaluation in manufacturing: A prospective study. J. Intell. Manuf. 2024.

13. Wang, R.; Wang, B.; Barber, G.C.; Gu, J.; Schall, J.D. Models for Prediction of Surface Roughness in a Face Milling Process Using Triangular Inserts. Lubricants 2019, 7, 9.

14. Eser, A.; Ayyıldız, E.A.; Ayyıldız, M.; Kara, F. Artificial Intelligence-Based Surface Roughness Estimation Modelling for Milling of AA6061 Alloy. Adv. Mater. Sci. Eng. 2021, 6, 1–10.

15. Vasconcelos, G.A.V.B.; Francisco, M.B.; Oliveira, C.H.d.; Barbedo, E.L.; Souza, L.G.P.d.; Melo, M.d.L.N.M. Prediction of surface roughness in duplex stainless steel top milling using machine learning techniques. Int. J. Adv. Manuf. Technol. 2024, 134, 2939–2953.

16. Antosz, K.; Kozłowski, E.; Sęp, J.; Prucnal, S. Application of Machine Learning to the Prediction of Surface Roughness in the Milling Process on the Basis of Sensor Signals. Materials 2025, 18, 148.

17. Zeng, S.; Pi, D. Milling Surface Roughness Prediction Based on Physics-Informed Machine Learning. Sensors 2023, 23, 4969.

18. Raju, R.S.; Kottala, R.K.; Varma, B.M.; Krishna, P.; Barmavatu, P. Internet of Things and hybrid models-based interpretation systems for surface roughness estimation. Artif. Intell. Eng. Des. Anal. Manuf. 2024, 38, 1–19.

19. Adizue, U.L.; Tura, A.D.; Isaya, E.O.; Farkas, B.Z.; Takács, M. Surface quality prediction by machine learning methods and process parameter optimization in ultra-precision machining of AISI D2 using CBN tool. Int. J. Adv. Manuf. Technol. 2023, 129, 1375–1394.

20. Nguyen, V.H.; Le, T.T. Predicting surface roughness in machining aluminum alloys taking into account material properties. Int. J. Comput. Integr. Manuf. 2024, 38, 555–576.

21. Motta, M.P.; Pelaingre, C.; Delamézière, A.; Ayed, L.B.; Barlier, C. Machine learning models for surface roughness monitoring in machining operations. Procedia CIRP 2022, 108, 710–715.

22. Tseng, T.L.; Konada, U.; Kwon, Y. A novel approach to predict surface roughness in machining operations using fuzzy set theory. J. Comput. Des. Eng. 2016, 3, 1–13.

23. Liu, R.; Tian, W. A novel simultaneous monitoring method for surface roughness and tool wear in milling process. Sci. Rep. 2025, 15, 8079.

24. Manjunath, K.; Tewary, S.; Khatri, N. Surface roughness prediction in milling using long-short term memory modelling. Mater. Today Proc. 2022, 64, 1300–1304.

25. Sakthivel, N.R.; Cherian, J.; Nair, B.B.; Sahasransu, A.; Aratipamula, L.N.V.P.; Gupta, S.A. An acoustic dataset for surface roughness estimation in milling process. Data Brief 2024, 57, 111108.

26. Sakthivel, N.R.; Nair, B. Milling Surface Roughness Acoustic Sensor Dataset. Mendeley Data 2024, V1, Licensed under CC BY 4.0.

27. Umar, M.; Siddique, M.F.; Ullah, N.; Kim, J.-M. Milling Machine Fault Diagnosis Using Acoustic Emission and Hybrid Deep Learning with Feature Optimization. Appl. Sci. 2024, 14, 10404.

28. Mauricio, A.; Qi, J.; Smith, W.A.; Sarazin, M.; Randall, R.B.; Janssens, K.; Gryllias, K. Bearing diagnostics under strong electromagnetic interference based on Integrated Spectral Coherence. Mech. Syst. Signal Process. 2020, 140, 106673.

29. Liu, H.; Xu, Y.; Qi, Y.; Yang, H.; Bi, W. Rapid Diagnosis of Distributed Acoustic Sensing Vibration Signals Using Mel-Frequency Cepstral Coefficients and Liquid Neural Networks. Sensors 2025, 25, 3090.

30. Orozco-Arias, S.; Piña, J.S.; Tabares-Soto, R.; Castillo-Ossa, L.F.; Guyot, R.; Isaza, G. Measuring Performance Metrics of Machine Learning Algorithms for Detecting and Classifying Transposable Elements. Processes 2020, 8, 638.

31. Uhlmann, E.; Holznagel, T.; Clemens, R. Practical Approaches for Acoustic Emission Attenuation Modelling to Enable the Process Monitoring of CFRP Machining. J. Manuf. Mater. Process. 2022, 6, 118.

32. Salm, T.; Tatar, K.; Chilo, J. Real-Time Acoustic Measurement System for Cutting-Tool Analysis During Stainless Steel Machining. Machines 2024, 12, 892.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).