SAUCF: A Framework for Secure, Natural-Language-Guided UAS Control

Highlights

- SAUCF achieves secure voice-driven mission planning in under 105 s, with 97.5% safety-classification accuracy and 0.52 m GPS trajectory precision.

- The decision-tree-based framework demonstrated safety compliance in both simulation and field trials under continuous human-in-the-loop supervision.

- Eliminates complex manual mission planning, enabling non-expert agricultural operators to control UAS through natural-language commands.

- Provides a validated framework integrating biometric security, LLM-based planning, and operator oversight for safe agricultural UAS deployment.

Abstract

1. Introduction

Objectives of SAUCF

- Develop a secure and modular SAUCF architecture that converts spoken instructions into decision-tree-encoded UAS survey missions, thereby reducing operational barriers associated with manual mission setup while enforcing dual-factor biometric authentication.

- Develop and verify an integrated pipeline that combines automatic speech recognition (ASR), LLM-based mission planning, mission execution with continuous telemetry, LLM-based safety filtering through few-shot SAFE/UNSAFE command classification, and an explicit human-in-the-loop override to ensure secure and reliable mission execution.

- Perform a simulation-backed assessment of sim-to-real transfer to a field UAS platform, quantifying safety compliance and the applicability of natural-language-to-action pipelines.
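The few-shot SAFE/UNSAFE filtering named in the second objective can be illustrated with a minimal sketch. It is not the authors' prompt: the example commands, the system instruction, and the helpers `build_safety_request` and `parse_safety_label` are hypothetical. The request body follows the general shape of a chat-style JSON/HTTP request (a model name plus a list of role/content messages), with the labelled examples supplied as prior conversation turns. No network call is made; the sketch only builds the request and parses a reply.

```python
import json

# Hypothetical few-shot examples; the SAFE/UNSAFE label scheme follows the text,
# but these particular commands are illustrative only.
FEW_SHOT_EXAMPLES = [
    ("Survey the north field at 20 meters altitude", "SAFE"),
    ("Fly over the crowd at the county fair", "UNSAFE"),
    ("Map the soybean plot at 10 meters with 70 percent overlap", "SAFE"),
    ("Disable geofencing and climb to 500 meters", "UNSAFE"),
]

SYSTEM_PROMPT = (
    "You are a safety filter for agricultural UAS missions. "
    "Reply with exactly one word: SAFE or UNSAFE."
)


def build_safety_request(utterance: str, model: str = "gpt-4") -> str:
    """Return a JSON request body with the few-shot examples as prior turns."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for command, label in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": command})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": utterance})
    # temperature 0 requests the least random completion for a label task
    return json.dumps({"model": model, "messages": messages, "temperature": 0})


def parse_safety_label(reply: str) -> str:
    """Map a raw model reply to SAFE/UNSAFE, failing closed on anything else."""
    token = reply.strip().upper().rstrip(".")
    if token == "SAFE":
        return "SAFE"
    # Ambiguous or malformed replies are treated as UNSAFE so the command
    # is blocked and escalated to the human operator.
    return "UNSAFE"


if __name__ == "__main__":
    body = json.loads(build_safety_request("Survey field B at 30 meters"))
    print(len(body["messages"]))  # 1 system + 8 few-shot turns + 1 query
    print(parse_safety_label(" safe. "))
    print(parse_safety_label("I think it is fine"))
```

Failing closed on unparseable replies is one reasonable design choice that pairs naturally with human-in-the-loop override; the source does not state how malformed LLM replies are handled.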

2. Methodology

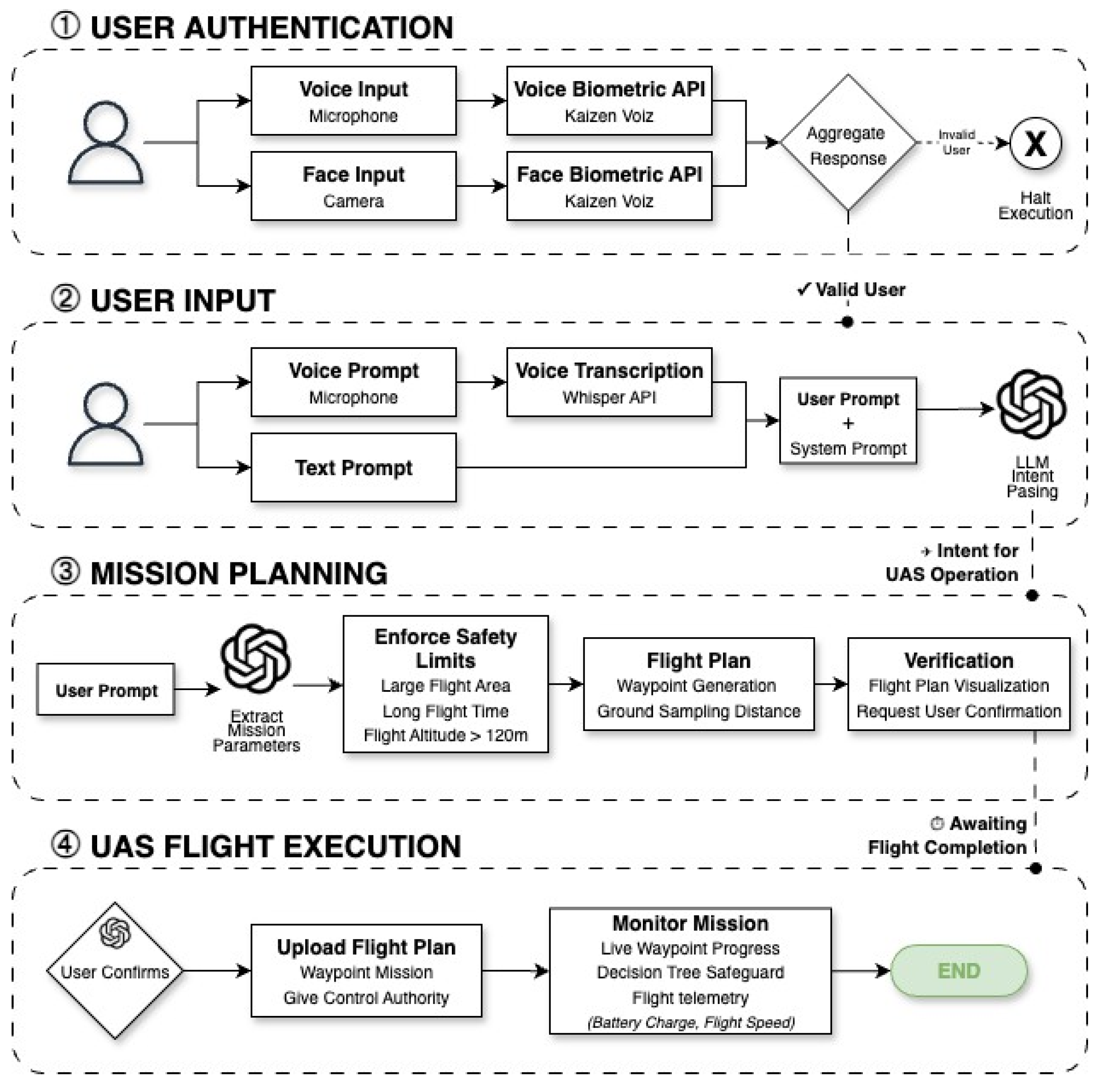

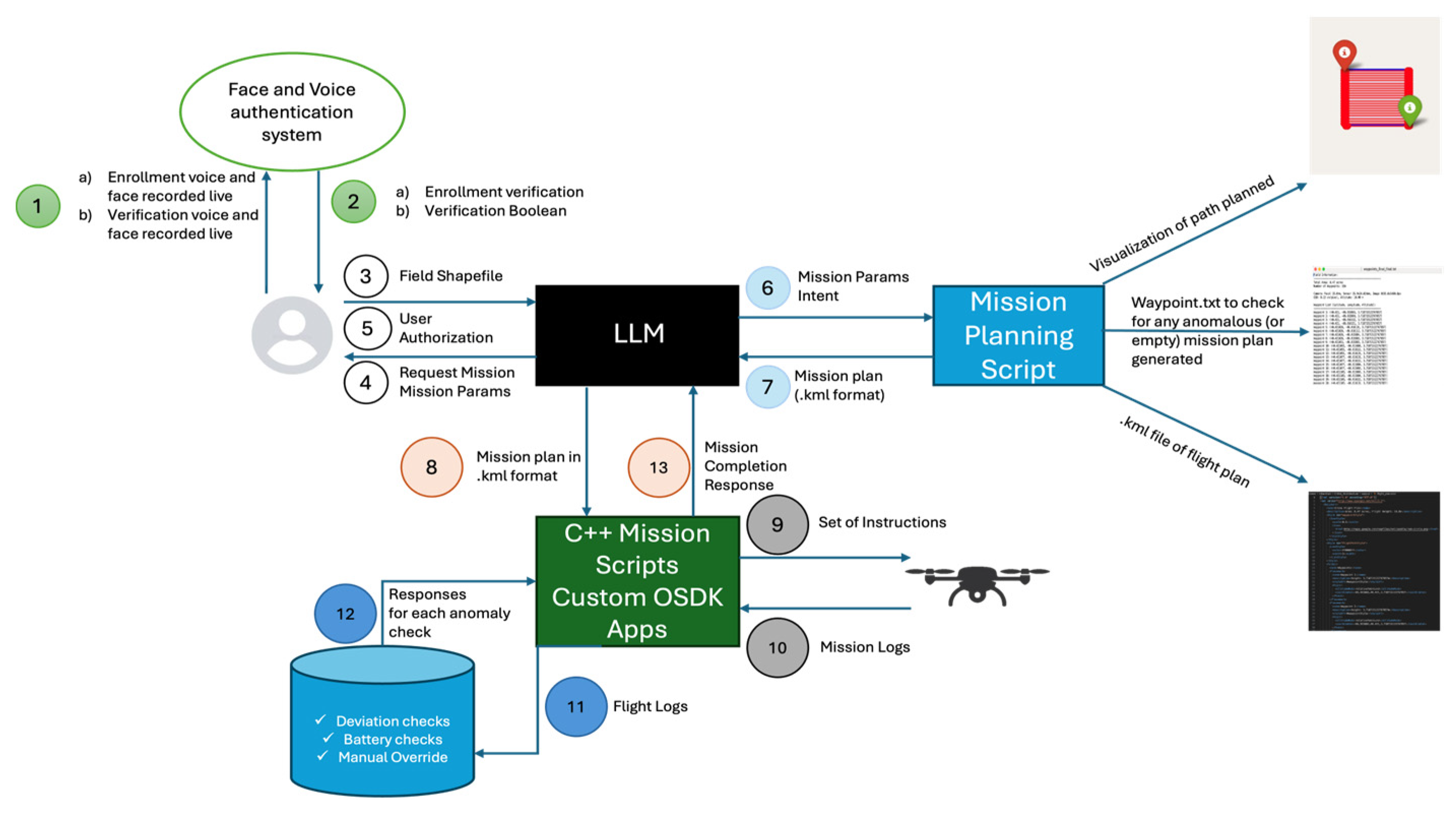

2.1. System Architecture and Design Philosophy

- (i) reducing technical complexity for non-expert operators;

- (ii) ensuring secure access control and mission auditability; and

- (iii) preserving human authority through comprehensive safety oversight while ensuring reliable sim-to-real mission execution.

2.2. Security and Authentication Framework

2.3. Natural Language Processing Pipeline

2.3.1. Voice Command Acquisition and Speech Recognition

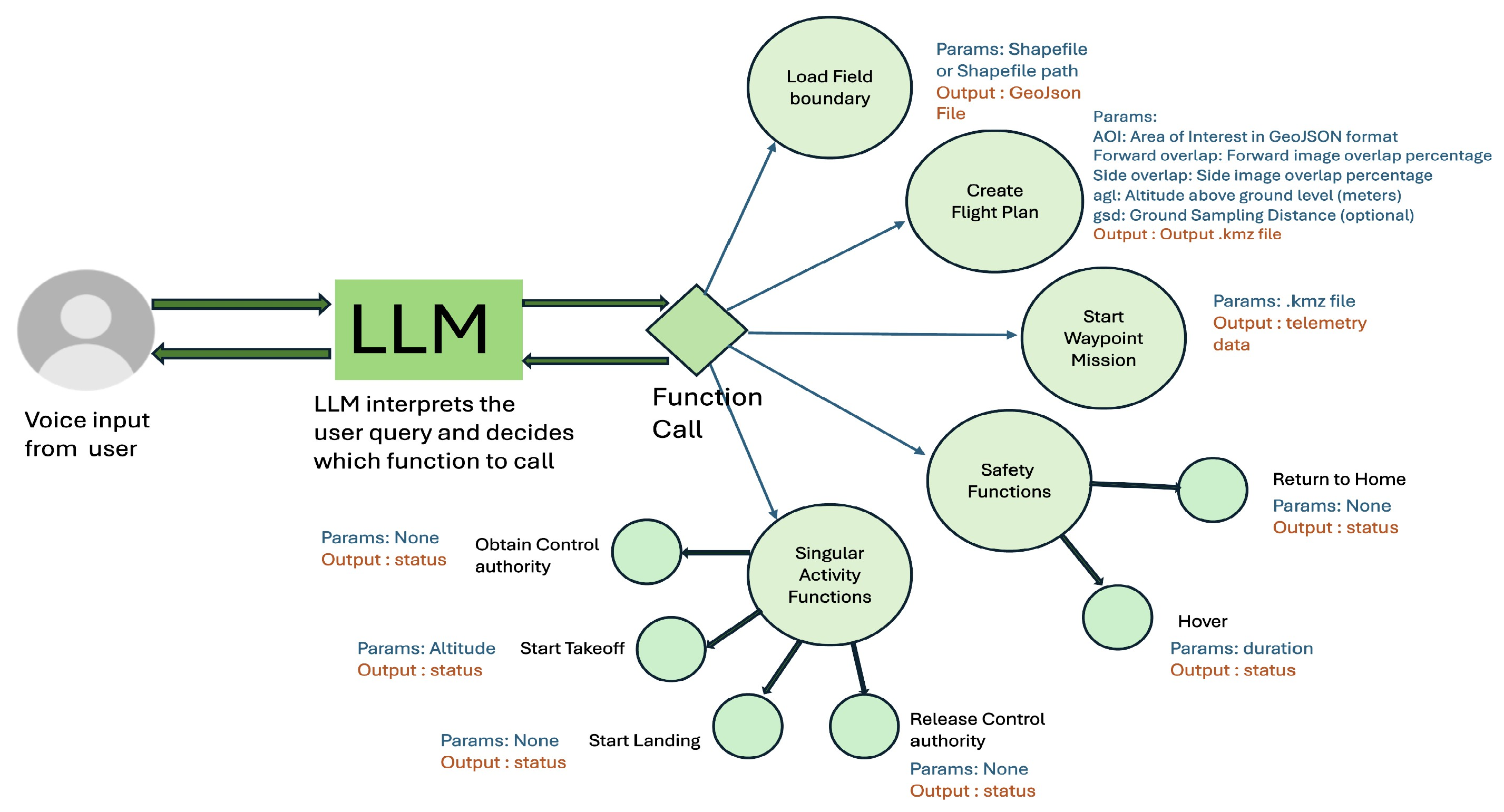

2.3.2. Large Language Model Integration for Intent Parsing

2.4. Automated Mission Planning and Path Generation

2.4.1. Flight Pattern Optimization

2.4.2. Mission Serialization and Visualization

2.5. Onboard Execution and Safety Monitoring

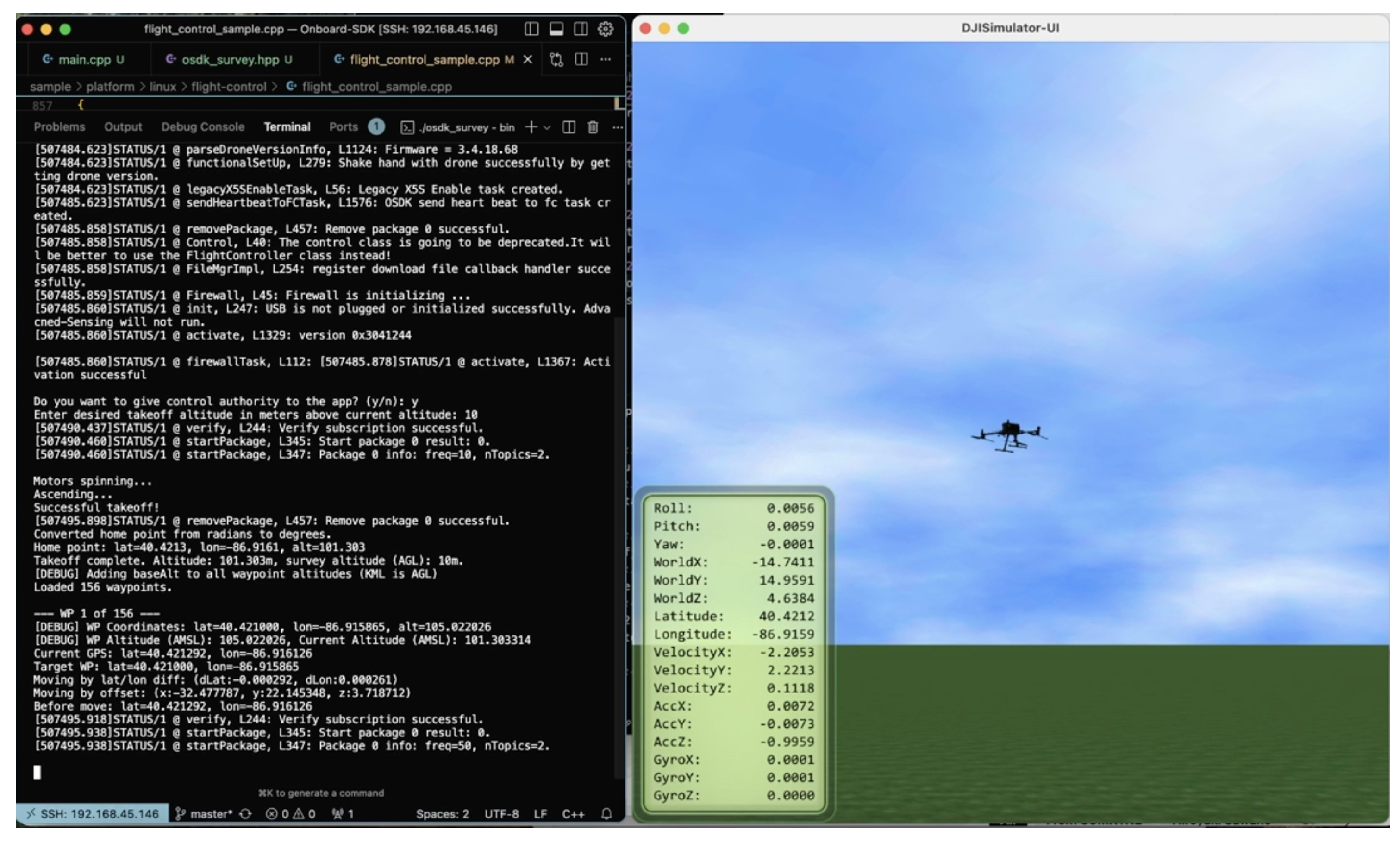

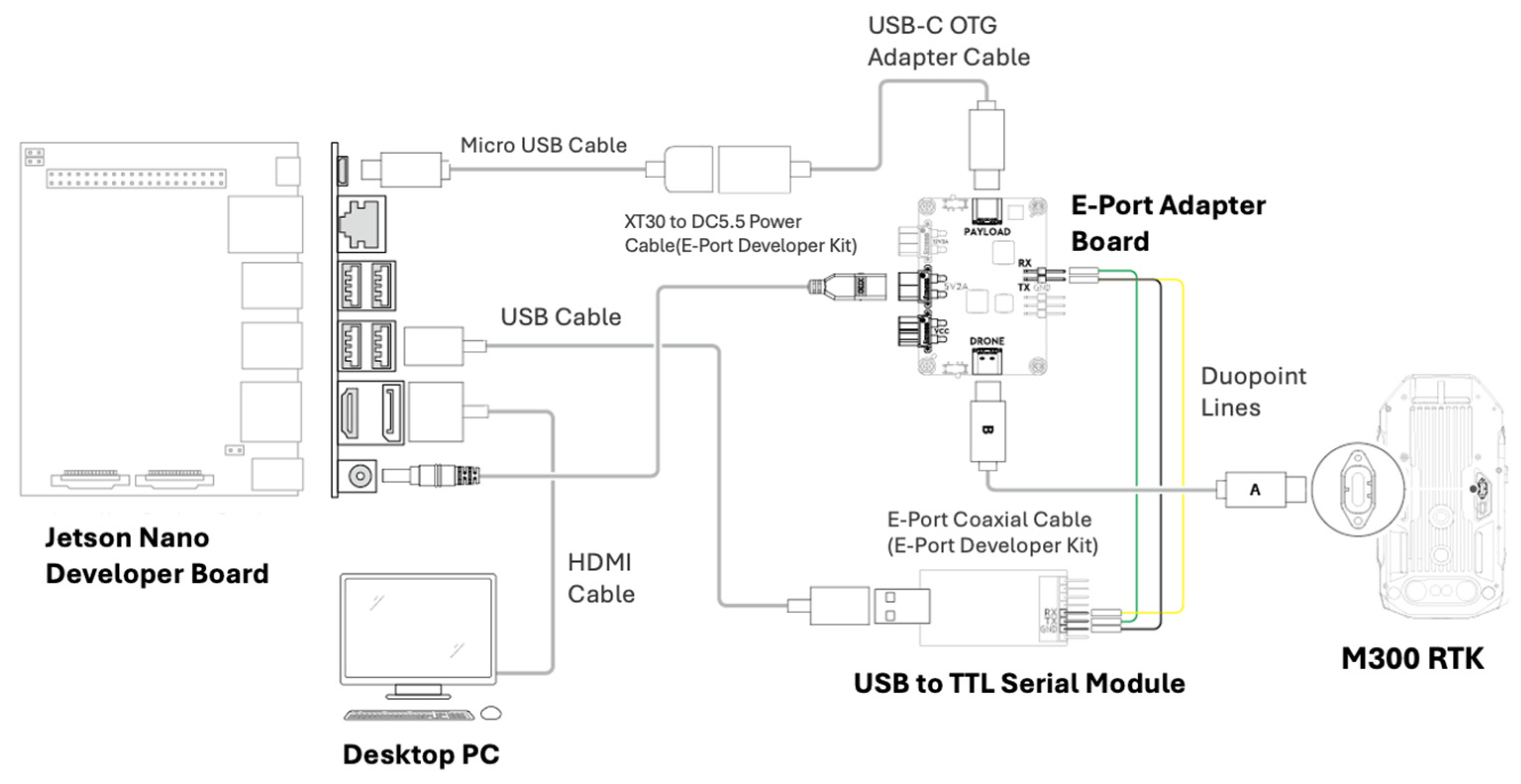

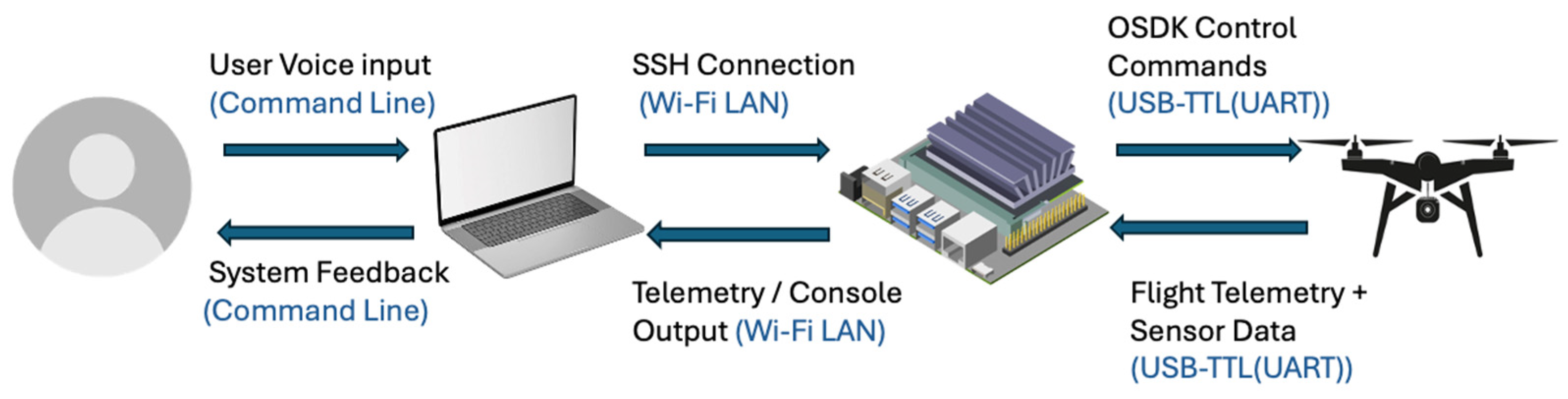

2.5.1. Companion Computer Integration

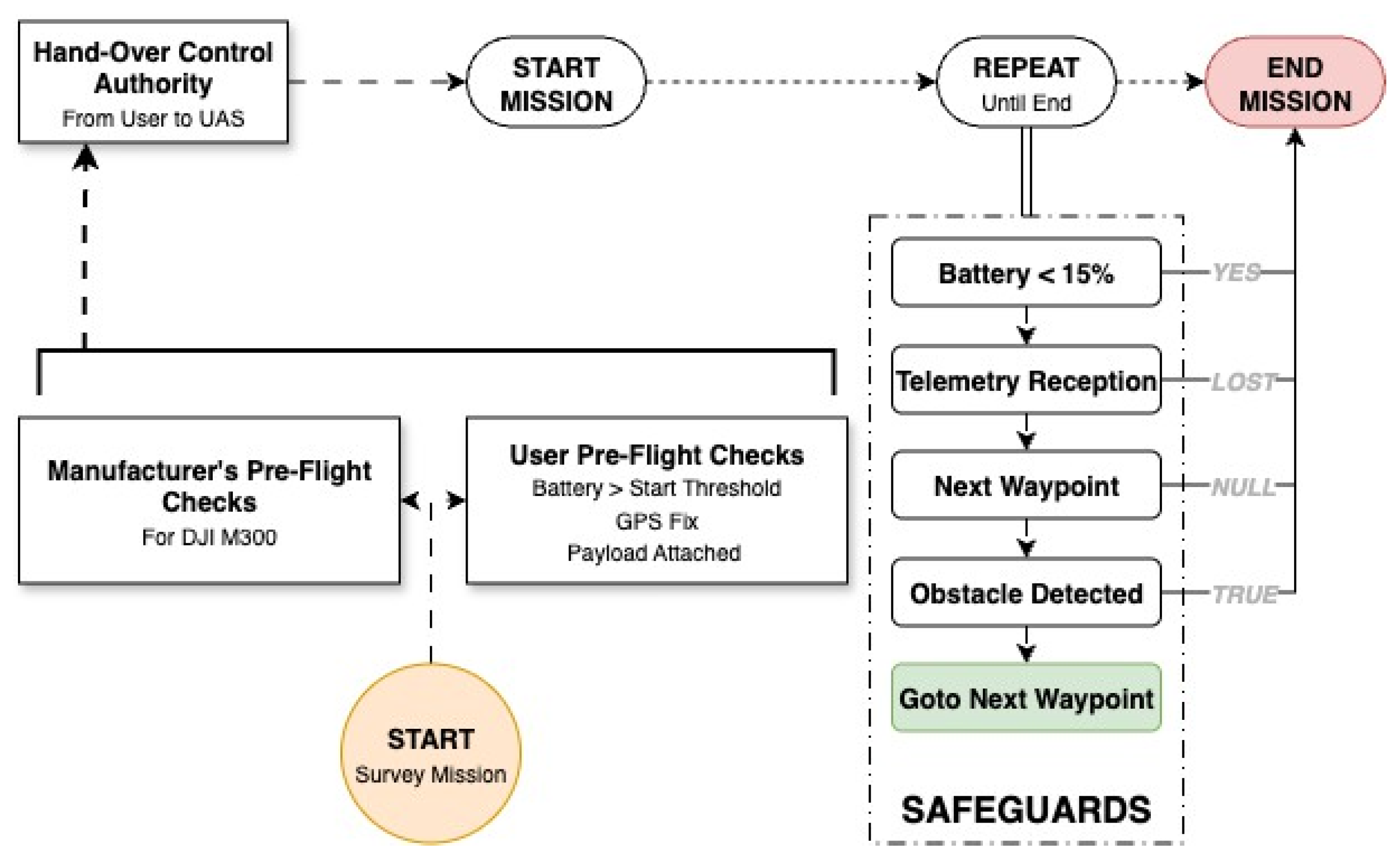

2.5.2. Decision Tree Based Safeguards

2.5.3. Human-in-the-Loop Safety Integration

3. Experimental Settings

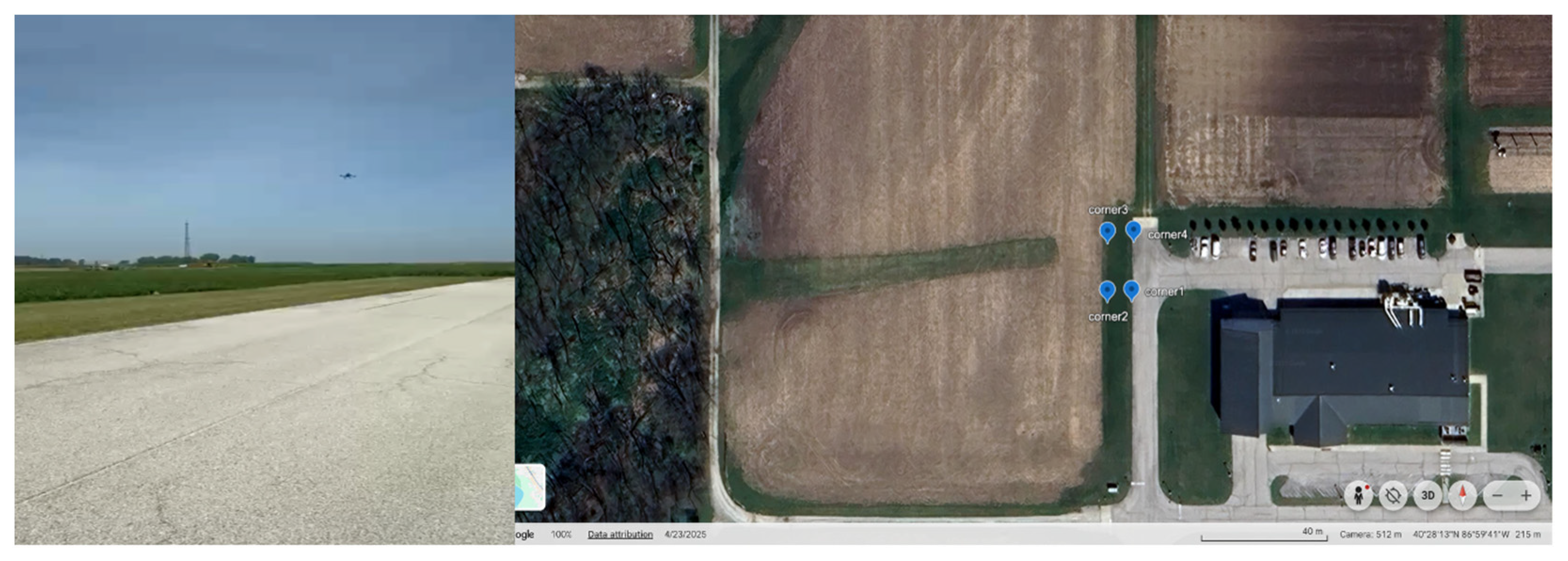

3.1. Experimental Environment

3.2. Evaluation Metrics

- Flight-Control Unit Tests: Unit tests were used to validate the correctness of control authority acquisition and release, takeoff, landing, and a complete three-waypoint micro-mission sequence.

- Altitude-Robust Mission Execution: Survey missions were executed at 5 m, 10 m, 20 m, 30 m, and 50 m above ground level to evaluate stability, waypoint accuracy, and safety-guard compliance across varying altitudes.

- Battery-Safety Compliance: Low-battery tests checked whether the system correctly detected a low-battery condition and automatically initiated return-to-home (RTH).

- Mission Planning Time: Time elapsed from voice input to a deployable mission plan.

- Decision Tree Compliance: Agreement of executed behaviors with the predefined decision-tree sequences.

- Operational Safety Classification: Ability of the LLM to correctly classify mission commands as SAFE or UNSAFE before intent parsing.

- Authentication Reliability: Biometric system performance measured by the false acceptance rate (FAR) and false rejection rate (FRR) across modalities.

- Execution Consistency over Varying Survey Altitudes: Comparison of planned and executed GPS coordinates at each survey altitude.
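Two of the metrics above reduce to simple counting and can be made concrete. The sketch below rests on stated assumptions: biometric match scores are taken to be similarity scores where higher means more likely genuine and an attempt is accepted when its score reaches the threshold; decision-tree compliance is taken to mean an exact match between the logged behavior sequence and the predefined one; and the state names in `LOW_BATTERY_EXPECTED` are hypothetical labels, not the system's actual log vocabulary.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """FAR and FRR at a score threshold (accept when score >= threshold).

    FAR = impostor attempts accepted / total impostor attempts
    FRR = genuine attempts rejected / total genuine attempts
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor attempt")
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


def sequence_compliant(executed: Sequence[str], expected: Sequence[str]) -> bool:
    """True when the executed behavior log matches the predefined sequence exactly."""
    return list(executed) == list(expected)


def compliance_rate(trials: Sequence[Sequence[str]], expected: Sequence[str]) -> float:
    """Fraction of trials whose behavior log is fully compliant."""
    return sum(sequence_compliant(t, expected) for t in trials) / len(trials)


# Hypothetical expected branch for a low-battery test flight
LOW_BATTERY_EXPECTED = ["AUTH_OK", "TAKEOFF", "MISSION", "LOW_BATTERY", "RTH", "LAND"]

if __name__ == "__main__":
    far, frr = far_frr([0.91, 0.88, 0.95, 0.42, 0.97], [0.10, 0.35, 0.72, 0.20], 0.5)
    print(far, frr)
    print(compliance_rate([LOW_BATTERY_EXPECTED, LOW_BATTERY_EXPECTED[:-1]],
                          LOW_BATTERY_EXPECTED))
```

Raising the threshold trades a lower FAR for a higher FRR, which is why the two rates are reported together for each modality.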

4. Experimental Results and Analysis

4.1. Unit Testing of Core Flight Actions

4.2. Mission Planning Time

4.3. Decision Tree Compliance

4.4. Operational Safety Classification

4.5. Authentication Reliability

4.6. Flight Trajectory Consistency over Varying Survey Altitudes

4.7. Summary

5. Discussion

5.1. Mission Planning and Usability

5.2. Reliability of Execution Framework

5.3. Safety in Natural Language Command Processing

5.4. Authentication Performance

5.5. Trajectory Consistency over Varying Survey Altitudes

5.6. Limitations and Future Work

5.7. Implications

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, N. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

- Hunt, E.R.; Daughtry, C.S.T. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376.

- Mekdad, Y.; Aris, A.; Babun, L.; Fergougui, A.E.; Conti, M.; Lazzeretti, R.; Uluagac, A.S. A Survey on Security and Privacy Issues of UAVs. Comput. Netw. 2023, 224, 109626.

- Yang, Z.; Zhang, Y.; Zeng, J.; Yang, Y.; Jia, Y.; Song, H.; Lv, T.; Sun, Q.; An, J. AI-Driven Safety and Security for UAVs: From Machine Learning to Large Language Models. Drones 2025, 9, 392.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. (NeurIPS) 2020, 33, 1877–1901.

- Contreras, R.; Ayala, A.; Cruz, F. Unmanned Aerial Vehicle Control through Domain-Based Automatic Speech Recognition. Computers 2020, 9, 75.

- Fagundes-Júnior, L.A.; Barcelos, C.O.; Silvatti, A.P.; Brandão, A.S. UAV–UGV Formation for Delivery Missions: A Practical Case Study. Drones 2025, 9, 48.

- Ghilom, M.; Latifi, S. The Role of Machine Learning in Advanced Biometric Systems. Electronics 2024, 13, 2667.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Zhang, Y.; Yao, S.; Wang, P.; Wu, H.; Xu, Z.; Wang, Y.; Zhang, Y. Building Natural Language Interfaces Using Natural Language Understanding and Generation: A Case Study on Human–Machine Interaction in Agriculture. Appl. Sci. 2022, 12, 11830.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep Learning for Remote Sensing Image Classification: A Survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, e1264.

- OpenAI. Whisper: General-Purpose Speech Recognition Model; GitHub Repository. 2022. Available online: https://github.com/openai/whisper (accessed on 15 June 2025).

- Adhikari, N. Drone Flight Plan Generator, Version 0.3.6; GitHub: San Francisco, CA, USA, 2025. Available online: https://github.com/hotosm/drone-flightplan (accessed on 15 June 2025).

- DJI Developers. Jetson Nano Quick Guide. 2023. Available online: https://developer.dji.com/doc/payload-sdk-tutorial/en/quick-start/quick-guide/jetson-nano.html (accessed on 12 September 2025).

- Zeeshan, N.; Bakyt, M.; Moradpoor, N.; La Spada, L. Continuous Authentication in Resource-Constrained Devices via Biometric and Environmental Fusion. Sensors 2025, 25, 5711.

- Zhu, H.; Chen, Q.; Zhu, X.; Yao, W.; Chen, X. Edge computing powers aerial swarms in sensing, communication, and planning. Innovation 2023, 4, 100506.

- Hoffpauir, K.; Simmons, J.; Schmidt, N.; Pittala, R.; Briggs, I.; Makani, S.; Jararweh, Y. A Survey on Edge Intelligence and Lightweight Machine Learning Support for Future Applications and Services. J. Data Inf. Qual. 2023, 15, 1–30.

| Component | Description |
|---|---|
| Companion computer | NVIDIA Jetson Nano (CUDA GPU) for low-latency ASR and edge inference. |
| UAS platform | DJI Matrice 300 RTK (M300RTK) with DJI Onboard SDK (OSDK) for programmatic flight control. |
| Input subsystems | USB microphone for audio capture and an onboard camera for biometric/visual authentication. |
| Communications | FT232 USB-serial adapter for direct serial links and integration via DJI E-Port for reliable access to the flight controller. |

| Module | Description |
|---|---|
| ASR processing | Whisper (Python implementation) executed with GPU acceleration for robust, low-latency transcription. |
| Intent parsing | LLM integration (GPT-4 API) via JSON/HTTP to convert transcribed utterances into structured mission parameters. |
| Mission planning | Python-based planner leveraging the UAS-flightplan library for waypoint generation and flight-pattern optimization. |
| Flight control | Custom C++ applications built atop the DJI OSDK to implement mission execution, safety checks, and telemetry logging. |
| System integration | Lightweight file and serial protocols for exchanging mission plans, telemetry, and control messages between components. |
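The mission-planning row above relies on an existing library for waypoint generation; the underlying idea of a survey (lawnmower, or boustrophedon) pattern can be sketched independently. This is not that library's API: the function `lawnmower_waypoints`, its parameters, and the local flat-Earth conversion from meters to degrees are assumptions for illustration, reasonable only over field-scale areas of a few hundred meters.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical assumption)


def lawnmower_waypoints(origin_lat: float, origin_lon: float, width_m: float,
                        height_m: float, line_spacing_m: float
                        ) -> List[Tuple[float, float]]:
    """Generate a back-and-forth survey pattern over a rectangle.

    The rectangle starts at (origin_lat, origin_lon) and extends `width_m`
    east and `height_m` north. Passes run east-west, `line_spacing_m` apart,
    and every other pass is reversed so there is no dead leg back to the
    start of each line.
    """
    # Local flat-Earth conversion factors (meters per degree)
    m_per_deg_lat = math.pi * EARTH_RADIUS_M / 180.0
    m_per_deg_lon = m_per_deg_lat * math.cos(math.radians(origin_lat))

    n_lines = int(height_m // line_spacing_m) + 1
    west = origin_lon
    east = origin_lon + width_m / m_per_deg_lon
    waypoints: List[Tuple[float, float]] = []
    for i in range(n_lines):
        lat = origin_lat + (i * line_spacing_m) / m_per_deg_lat
        # Alternate the flight direction on each pass
        ends = (west, east) if i % 2 == 0 else (east, west)
        waypoints.extend((lat, lon) for lon in ends)
    return waypoints


if __name__ == "__main__":
    for wp in lawnmower_waypoints(40.470297, -86.995387, 40.0, 30.0, 10.0):
        print(f"{wp[0]:.6f}, {wp[1]:.6f}")
```

For example, a 40 m by 30 m block with 10 m line spacing yields four passes and eight waypoints. A production planner would additionally derive the spacing from camera footprint, altitude, and desired side overlap.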

| Interface | Format/Protocol |
|---|---|
| LLM API | JSON over HTTP for request/response exchanges with authenticated LLM endpoints. |
| ASR output | Plain text files or messages containing transcribed utterances. |
| Mission planning | File-based interchange (KML/JSON) for waypoint and mission specification. |
| Flight control | Serial and SDK APIs for deterministic command and telemetry exchange with the flight controller. |
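The file-based JSON interchange listed above can be illustrated with a small round trip. The schema (keys `mission_id`, `altitude_m`, `speed_mps`, `waypoints`) and the 120 m altitude ceiling are hypothetical; the source states only that waypoint and mission specifications are exchanged as KML/JSON files. The validation step reflects the general principle of rejecting a malformed plan before it reaches the flight controller.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical operating envelope used only for this sanity check
MAX_ALTITUDE_M = 120.0


def mission_to_json(mission_id: str, altitude_m: float, speed_mps: float,
                    waypoints: List[Tuple[float, float]]) -> str:
    """Serialize a survey mission to JSON text (hypothetical schema)."""
    doc = {
        "mission_id": mission_id,
        "altitude_m": altitude_m,
        "speed_mps": speed_mps,
        "waypoints": [{"lat": lat, "lon": lon} for lat, lon in waypoints],
    }
    return json.dumps(doc, indent=2)


def mission_from_json(text: str) -> Dict[str, Any]:
    """Parse mission JSON and reject plans that fail basic sanity checks."""
    doc = json.loads(text)
    if not doc.get("waypoints"):
        raise ValueError("mission has no waypoints")
    if not 0 < doc["altitude_m"] <= MAX_ALTITUDE_M:
        raise ValueError(f"altitude {doc['altitude_m']} m outside 0-{MAX_ALTITUDE_M} m")
    for wp in doc["waypoints"]:
        if not (-90.0 <= wp["lat"] <= 90.0 and -180.0 <= wp["lon"] <= 180.0):
            raise ValueError(f"invalid coordinate: {wp}")
    return doc


if __name__ == "__main__":
    text = mission_to_json("field-b", 20.0, 5.0,
                           [(40.470297, -86.995236), (40.470297, -86.995387)])
    print(mission_from_json(text)["waypoints"][0])
```

Persisting the plan is then a single write of the returned string to a `.json` file, which the flight-control process can read and re-validate independently.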

| Waypoint | Target Lat (°) | Target Lon (°) | Actual Lat (°) | Actual Lon (°) | Deviation (m) |
|---|---|---|---|---|---|
| 1 | 40.470297 | −86.995236 | 40.470298 | −86.995239 | 0.28 |
| 2 | 40.470297 | −86.995255 | 40.470299 | −86.995248 | 0.63 |
| 3 | 40.470297 | −86.995368 | 40.470297 | −86.995364 | 0.34 |
| 4 | 40.470297 | −86.995387 | 40.470296 | −86.995380 | 0.60 |
| 5 | 40.470322 | −86.995387 | 40.470316 | −86.995384 | 0.71 |
| 6 | 40.470322 | −86.995368 | 40.470321 | −86.995377 | 0.77 |
| 7 | 40.470322 | −86.995255 | 40.470322 | −86.995262 | 0.59 |
| 8 | 40.470322 | −86.995236 | 40.470323 | −86.995243 | 0.60 |
| 9 | 40.470348 | −86.995236 | 40.470342 | −86.995239 | 0.71 |
| 10 | 40.470348 | −86.995255 | 40.470349 | −86.995252 | 0.28 |
| 11 | 40.470348 | −86.995368 | 40.470351 | −86.995365 | 0.42 |
| 12 | 40.470348 | −86.995387 | 40.470349 | −86.995387 | 0.11 |
| 13 | 40.470373 | −86.995387 | 40.470368 | −86.995387 | 0.56 |
| 14 | 40.470373 | −86.995368 | 40.470371 | −86.995375 | 0.63 |
| 15 | 40.470301 | −86.995233 | 40.470302 | −86.995239 | 0.52 |
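The Deviation column is consistent with the great-circle distance between each target and actual coordinate. The sketch below recomputes it with the haversine formula on a spherical Earth (mean radius 6,371 km); that the authors used exactly this formula is an assumption, although it reproduces every tabulated value to within rounding. The mean of the fifteen deviations is about 0.52 m, the same value quoted in the Highlights.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# (waypoint, target_lat, target_lon, actual_lat, actual_lon, reported_deviation_m),
# transcribed from the waypoint table above
TABLE = [
    (1, 40.470297, -86.995236, 40.470298, -86.995239, 0.28),
    (2, 40.470297, -86.995255, 40.470299, -86.995248, 0.63),
    (3, 40.470297, -86.995368, 40.470297, -86.995364, 0.34),
    (4, 40.470297, -86.995387, 40.470296, -86.995380, 0.60),
    (5, 40.470322, -86.995387, 40.470316, -86.995384, 0.71),
    (6, 40.470322, -86.995368, 40.470321, -86.995377, 0.77),
    (7, 40.470322, -86.995255, 40.470322, -86.995262, 0.59),
    (8, 40.470322, -86.995236, 40.470323, -86.995243, 0.60),
    (9, 40.470348, -86.995236, 40.470342, -86.995239, 0.71),
    (10, 40.470348, -86.995255, 40.470349, -86.995252, 0.28),
    (11, 40.470348, -86.995368, 40.470351, -86.995365, 0.42),
    (12, 40.470348, -86.995387, 40.470349, -86.995387, 0.11),
    (13, 40.470373, -86.995387, 40.470368, -86.995387, 0.56),
    (14, 40.470373, -86.995368, 40.470371, -86.995375, 0.63),
    (15, 40.470301, -86.995233, 40.470302, -86.995239, 0.52),
]

# Recompute each deviation from the target and actual coordinates
computed = [haversine_m(t_lat, t_lon, a_lat, a_lon)
            for _, t_lat, t_lon, a_lat, a_lon, _ in TABLE]

if __name__ == "__main__":
    for row, dev in zip(TABLE, computed):
        print(f"WP {row[0]:2d}: computed {dev:.2f} m, reported {row[5]:.2f} m")
    print(f"mean deviation: {sum(computed) / len(computed):.2f} m")
```

At these sub-meter scales an ellipsoidal (WGS84) distance would differ only in the third decimal place, so the spherical model is adequate for this comparison.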

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shah, N.; Aggarwal, V.; Saraswat, D. SAUCF: A Framework for Secure, Natural-Language-Guided UAS Control. Drones 2025, 9, 860. https://doi.org/10.3390/drones9120860
