UAV Path Planning via Semantic Segmentation of 3D Reality Mesh Models

Abstract

1. Introduction

- (1)

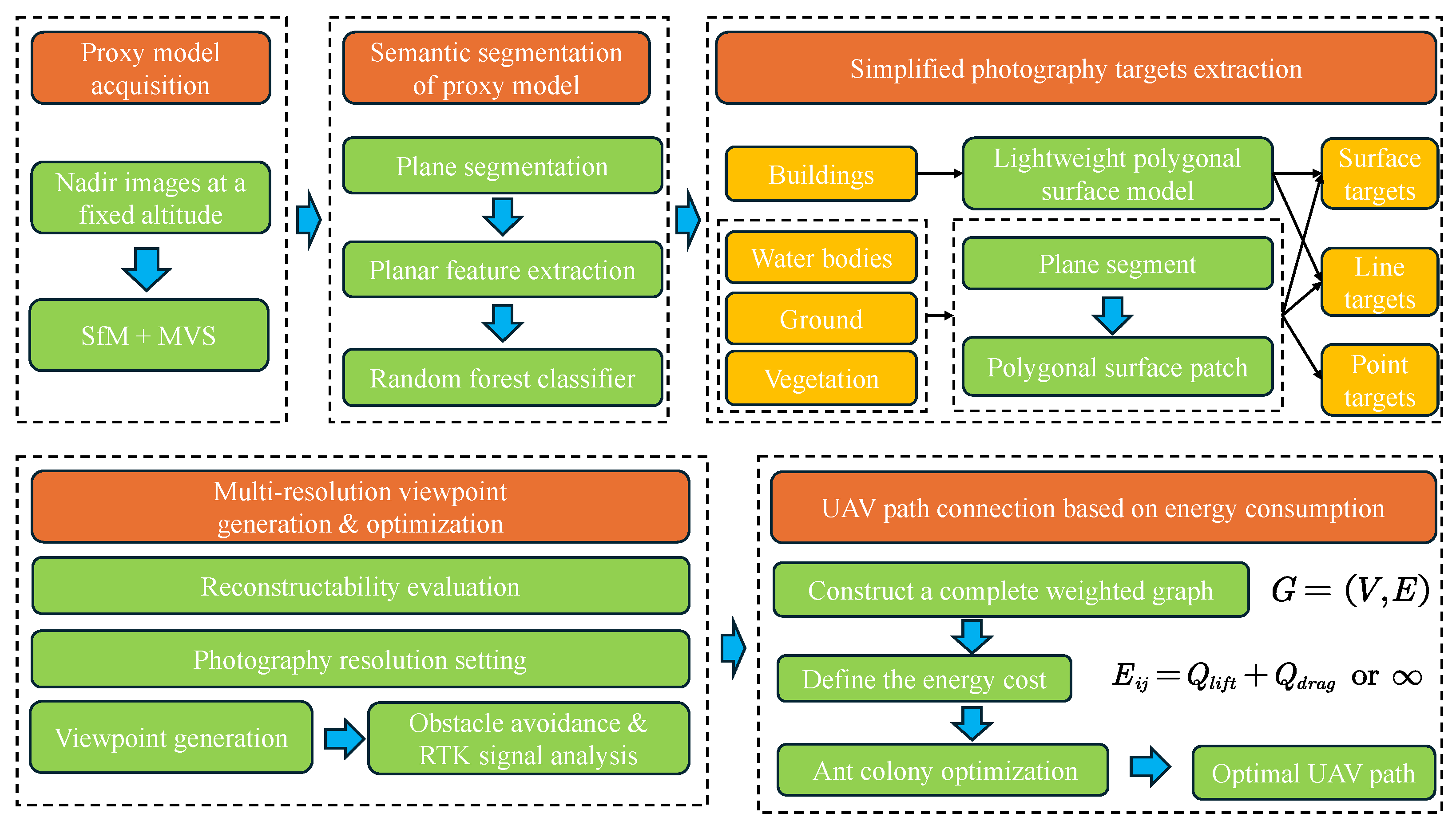

- An automatic method is implemented to extract simplified photography targets from the proxy model. By performing semantic segmentation on the proxy model, the scene is divided into object categories such as buildings, vegetation, ground, and water bodies. For buildings, which are of primary interest, a lightweight polygonal surface model [14] is reconstructed to produce simplified photography targets. For non-building objects, surface and line targets are extracted, and those with areas below a certain threshold are simplified into point targets.

- (2)

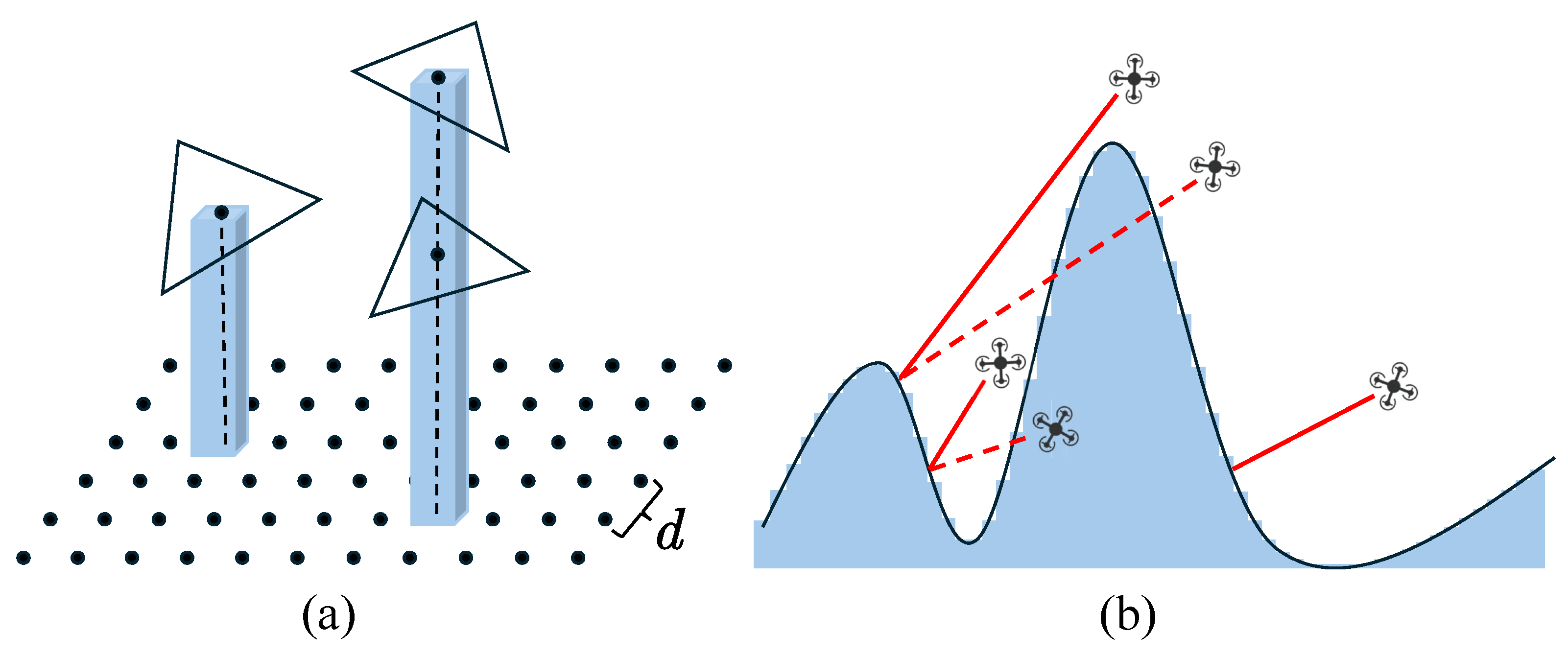

- A multi-resolution UAV path planning strategy that considers object categories is proposed. Based on the characteristics of different object types and their corresponding observation accuracy requirements, different image capture strategies are designed at multiple resolutions, enabling high-resolution coverage of key areas while allowing for rapid scanning of general regions.

- (3) A Real-Time Kinematic (RTK) signal evaluation method for UAV photography points based on sky-view constraints is proposed. Using the proxy model and the positions of candidate photography points, the sky view at each point is simulated [15]. The RTK signal quality at each point is then assessed from the proportion of visible sky in the simulated sky view, and points where the RTK signal is likely to be occluded are adjusted, improving both flight safety and the continuity of the planned flight path.

2. Related Works

2.1. Semantic Segmentation Methods for 3D Models

2.2. UAV Path Planning Methods for 3D Reconstruction

2.2.1. Model-Free Approaches

2.2.2. Model-Based Approaches

3. Proposed Method

3.1. Overview

3.2. Proxy Model Acquisition

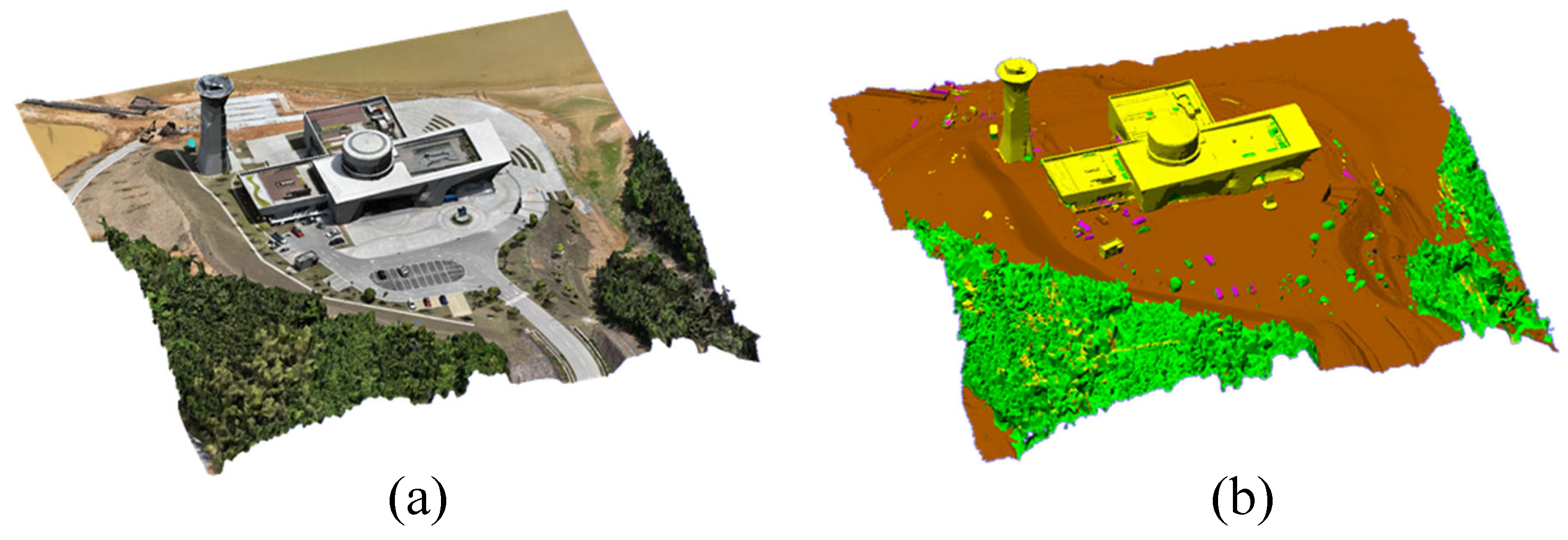

3.3. Semantic Segmentation of Proxy Model

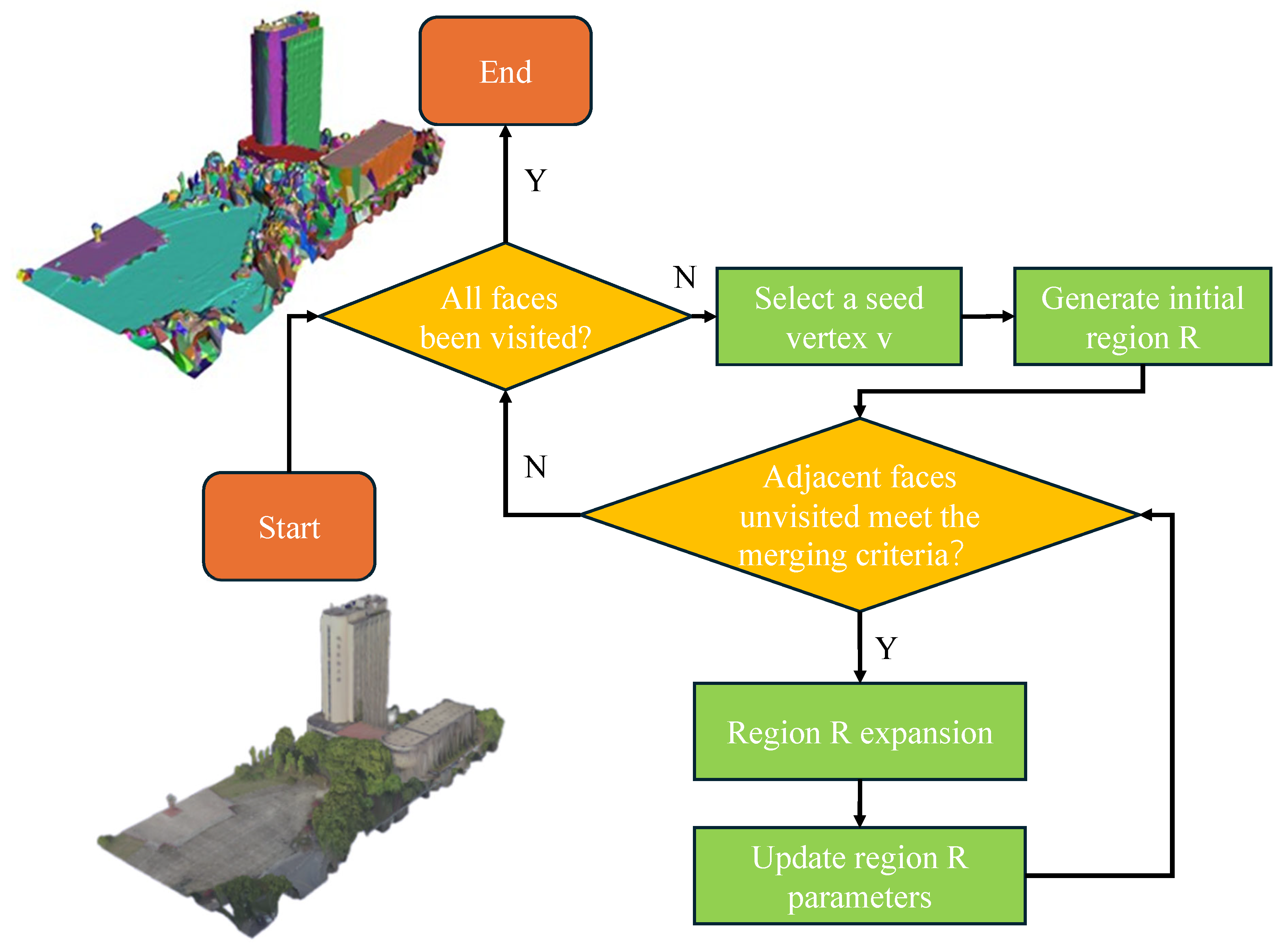

3.3.1. Plane Segmentation Based on Least Squares Plane Fitting

- Seed Vertex Selection and Initial Region Construction

- (1) Mark the face f as visited;

- (2) Calculate the area of region R and its cumulative color value, then compute the region's average color, with colors represented in the RGB color space;

- (3) Perform least squares plane fitting on all faces in region R to obtain the unit normal vector of the plane and the distance d from the origin of the coordinate system to the plane.

- Region Growing and Merging Criteria

- (1) Mark the face f as visited;

- (2) Update the area and cumulative color of the region, and recalculate its average color;

- (3) Refit the plane parameters using the least squares method, updating the unit normal vector and the distance d to the origin.
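Both step lists reduce to keeping running statistics for a region and refitting a plane whenever a face joins it. The following is a minimal, dependency-free sketch under stated assumptions, not the authors' implementation. It assumes that each face is represented by its centroid, area, and RGB color, and that the average color is area-weighted (cumulative color divided by region area). It also assumes the least-squares plane is the total-least-squares fit through the area-weighted face centroids, so the normal is the eigenvector of the smallest eigenvalue of their covariance matrix. `Region`, `add_face`, and `fit_plane` are hypothetical names.

```python
import math


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def _smallest_eigvec(c):
    """Unit eigenvector for the smallest eigenvalue of a symmetric 3x3 matrix."""
    p1 = c[0][1] ** 2 + c[0][2] ** 2 + c[1][2] ** 2
    if p1 == 0.0:  # already diagonal: the axis with the least variance is the normal
        k = min(range(3), key=lambda i: c[i][i])
        return tuple(1.0 if i == k else 0.0 for i in range(3))
    # Closed-form (trigonometric) smallest eigenvalue of a symmetric 3x3 matrix.
    q = (c[0][0] + c[1][1] + c[2][2]) / 3.0
    p = math.sqrt(((c[0][0] - q) ** 2 + (c[1][1] - q) ** 2 + (c[2][2] - q) ** 2 + 2 * p1) / 6.0)
    b = [[(c[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    r = max(-1.0, min(1.0, _det3(b) / 2.0))
    lam = q + 2 * p * math.cos(math.acos(r) / 3.0 + 2 * math.pi / 3.0)
    # The eigenvector spans the null space of (C - lam*I), so it is orthogonal to its rows.
    m = [[c[i][j] - (lam if i == j else 0.0) for j in range(3)] for i in range(3)]
    v = max((_cross(m[0], m[1]), _cross(m[0], m[2]), _cross(m[1], m[2])),
            key=lambda w: w[0] ** 2 + w[1] ** 2 + w[2] ** 2)
    norm = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if norm < 1e-12:
        raise ValueError("degenerate region: centroids are collinear")
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def fit_plane(centroids, weights):
    """Weighted least-squares plane n . x = d through face centroids."""
    w = sum(weights)
    mean = [sum(wi * c[k] for wi, c in zip(weights, centroids)) / w for k in range(3)]
    cov = [[sum(wi * (c[i] - mean[i]) * (c[j] - mean[j]) for wi, c in zip(weights, centroids)) / w
            for j in range(3)] for i in range(3)]
    n = _smallest_eigvec(cov)
    return n, sum(n[k] * mean[k] for k in range(3))


class Region:
    """Running statistics of one planar region (hypothetical data layout)."""

    def __init__(self):
        self.centroids, self.areas = [], []
        self.area = 0.0
        self.color_sum = [0.0, 0.0, 0.0]
        self.normal, self.d = None, None  # undefined until the region has 3 faces

    def add_face(self, face_id, centroid, area, rgb, visited):
        visited.add(face_id)                                   # step (1): mark visited
        self.centroids.append(centroid)
        self.areas.append(area)
        self.area += area                                      # step (2): area and color
        self.color_sum = [s + area * c for s, c in zip(self.color_sum, rgb)]
        if len(self.centroids) >= 3:                           # step (3): refit the plane
            self.normal, self.d = fit_plane(self.centroids, self.areas)

    @property
    def mean_color(self):
        return tuple(s / self.area for s in self.color_sum)
```

Refitting from scratch on every insertion keeps the sketch short. A production version would more likely accumulate weighted first and second moments so that each refit costs constant time.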

3.3.2. Planar Feature Extraction and Random Forest Classifier

3.4. Semantic Segmentation-Based Viewpoint Generation

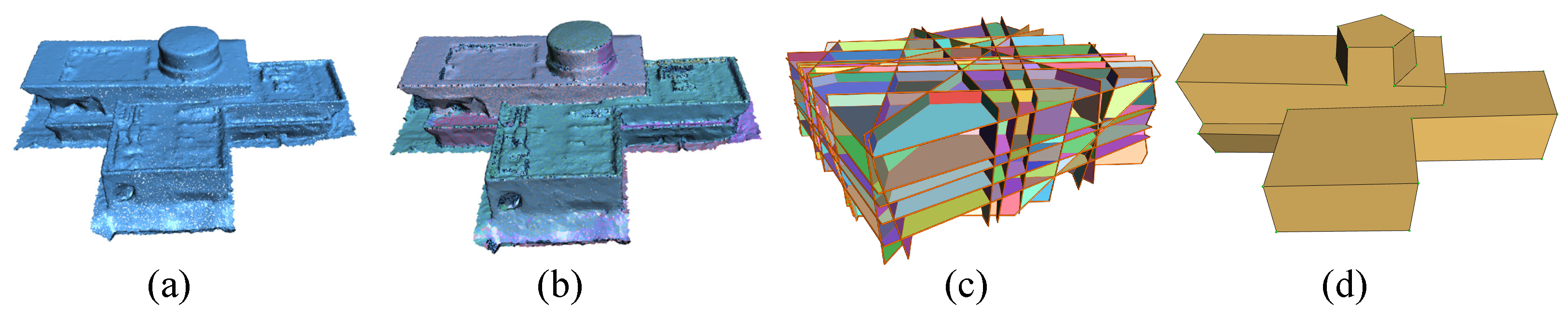

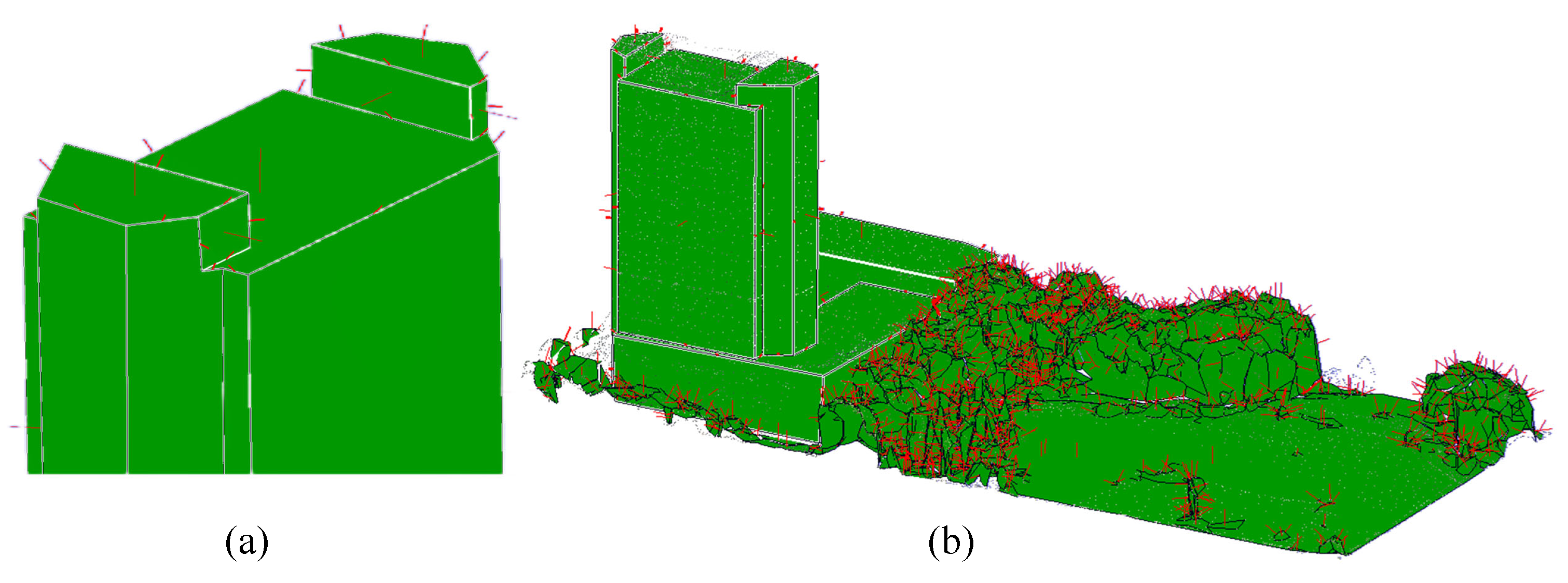

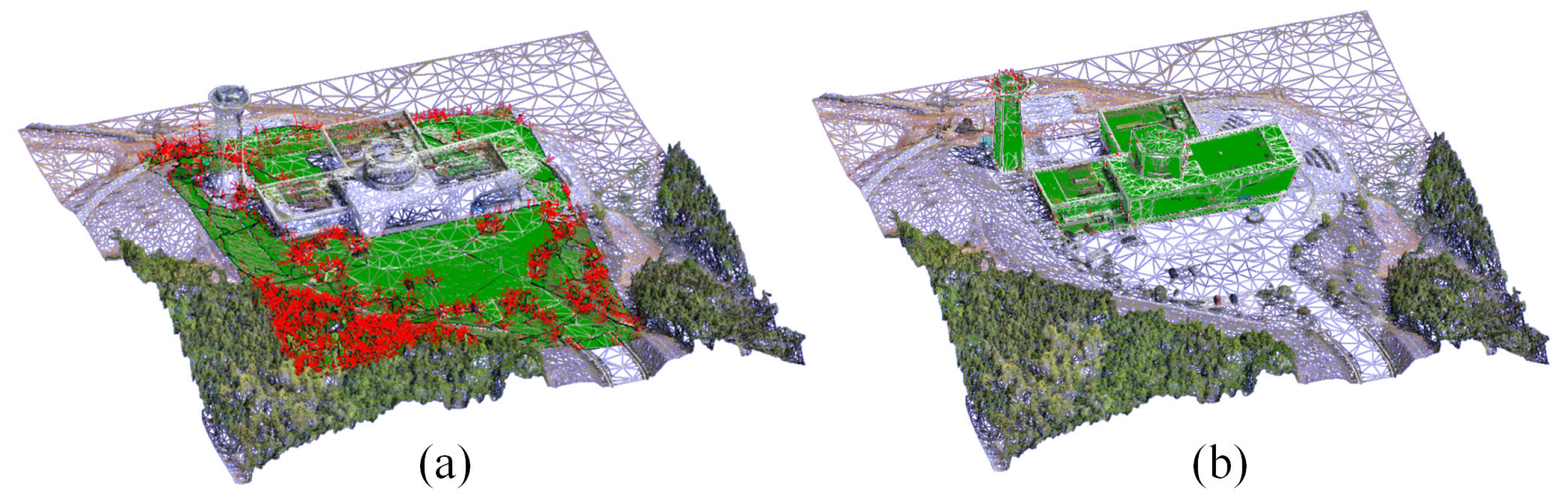

3.4.1. Simplified Photography Targets Extraction

- Lightweight Polygonal Surface Model Reconstruction of Buildings

- Non-Building Area Photography Target Extraction

- (1) For a given input point set S, consider every pair of points p, q ∈ S;

- (2) If there exists a circle of radius r passing through p and q that contains no other points of S, retain the edge pq as a boundary edge;

- (3) If the distance between p and q exceeds twice the radius r, skip this pair, since no circle of radius r can pass through both points;

- (4) For each pair of points, there are generally two such circles, symmetric about the edge pq. If either circle satisfies the above condition, the edge is considered a boundary edge.
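The four steps amount to the classic α-shape edge test. The sketch below is a brute-force version for 2D points, for example the projected vertices of a non-building surface. It is illustrative only and runs in O(n³) time; because α-shape edges are a subset of Delaunay edges, a practical implementation would test only Delaunay edges. `boundary_edges` is a hypothetical name, and points lying exactly on a circle are treated as outside it.

```python
import math


def boundary_edges(points, r):
    """Return index pairs (i, j) whose edge passes the empty-circle test of radius r."""
    edges = []
    n = len(points)
    for i in range(n):
        for j in range(i + 1, n):
            (px, py), (qx, qy) = points[i], points[j]
            dx, dy = qx - px, qy - py
            dist = math.hypot(dx, dy)
            if dist == 0.0 or dist > 2 * r:  # step (3): no circle of radius r fits
                continue
            # Step (4): the two candidate centres lie on the perpendicular bisector of pq.
            mx, my = (px + qx) / 2.0, (py + qy) / 2.0
            h = math.sqrt(max(r * r - (dist / 2.0) ** 2, 0.0))
            ux, uy = -dy / dist, dx / dist  # unit vector perpendicular to pq
            for cx, cy in ((mx + h * ux, my + h * uy), (mx - h * ux, my - h * uy)):
                # Step (2): the circle must contain no other input point.
                empty = all(math.hypot(sx - cx, sy - cy) >= r - 1e-9
                            for k, (sx, sy) in enumerate(points) if k not in (i, j))
                if empty:
                    edges.append((i, j))
                    break
    return edges


# A unit square with a centre point: with r = 1 only the four sides are boundary edges.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.5, 0.5)]
print(boundary_edges(square, 1.0))
```

Larger radii give a smoother, more convex outline, and smaller radii follow concavities more closely. The choice of r therefore controls how tightly the extracted surface targets hug the segmented region.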

- Photography Target Decomposition and Processing

3.4.2. Multi-Resolution Viewpoint Generation and Optimization

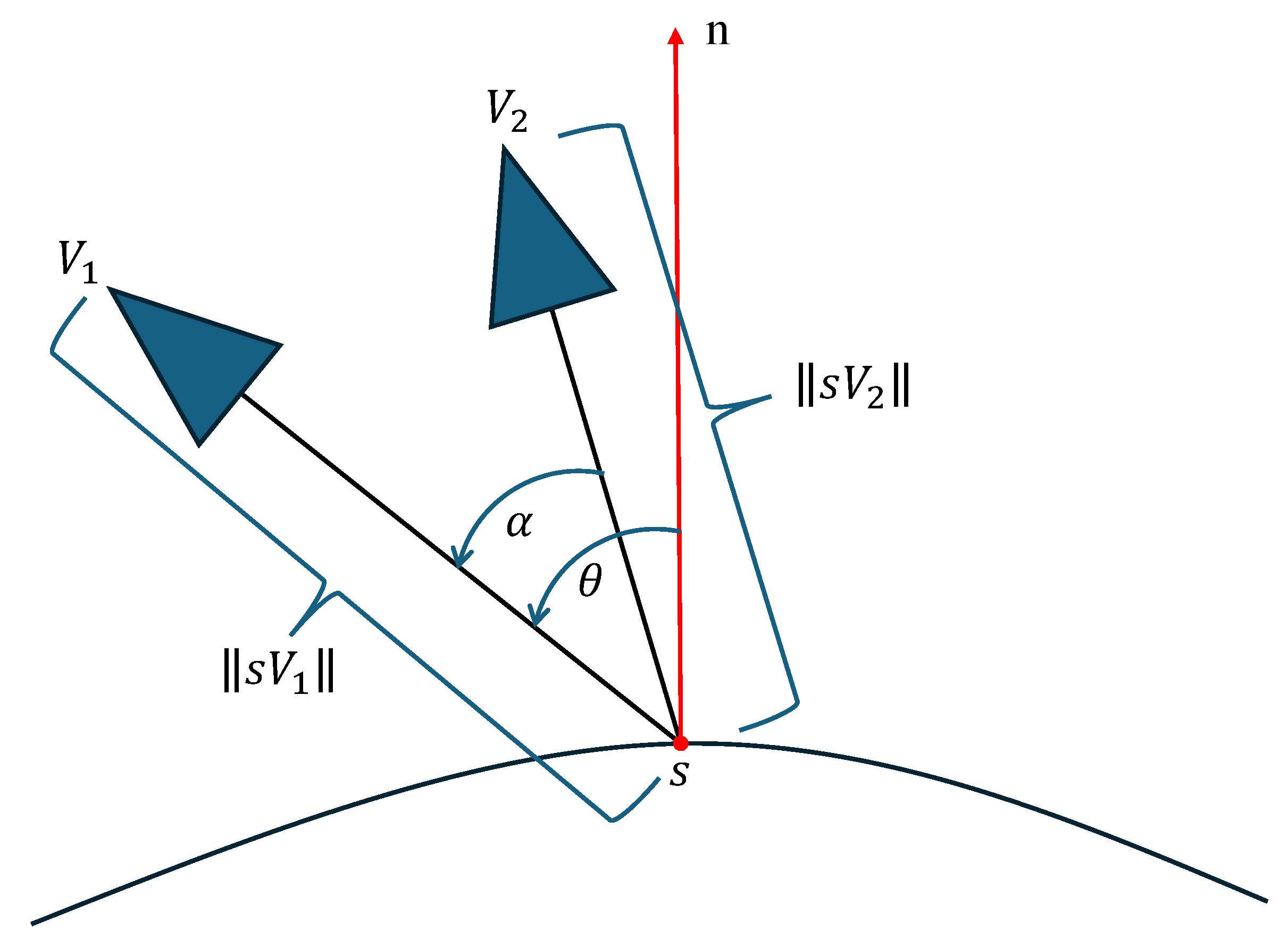

- Reconstructability Evaluation of Viewpoints

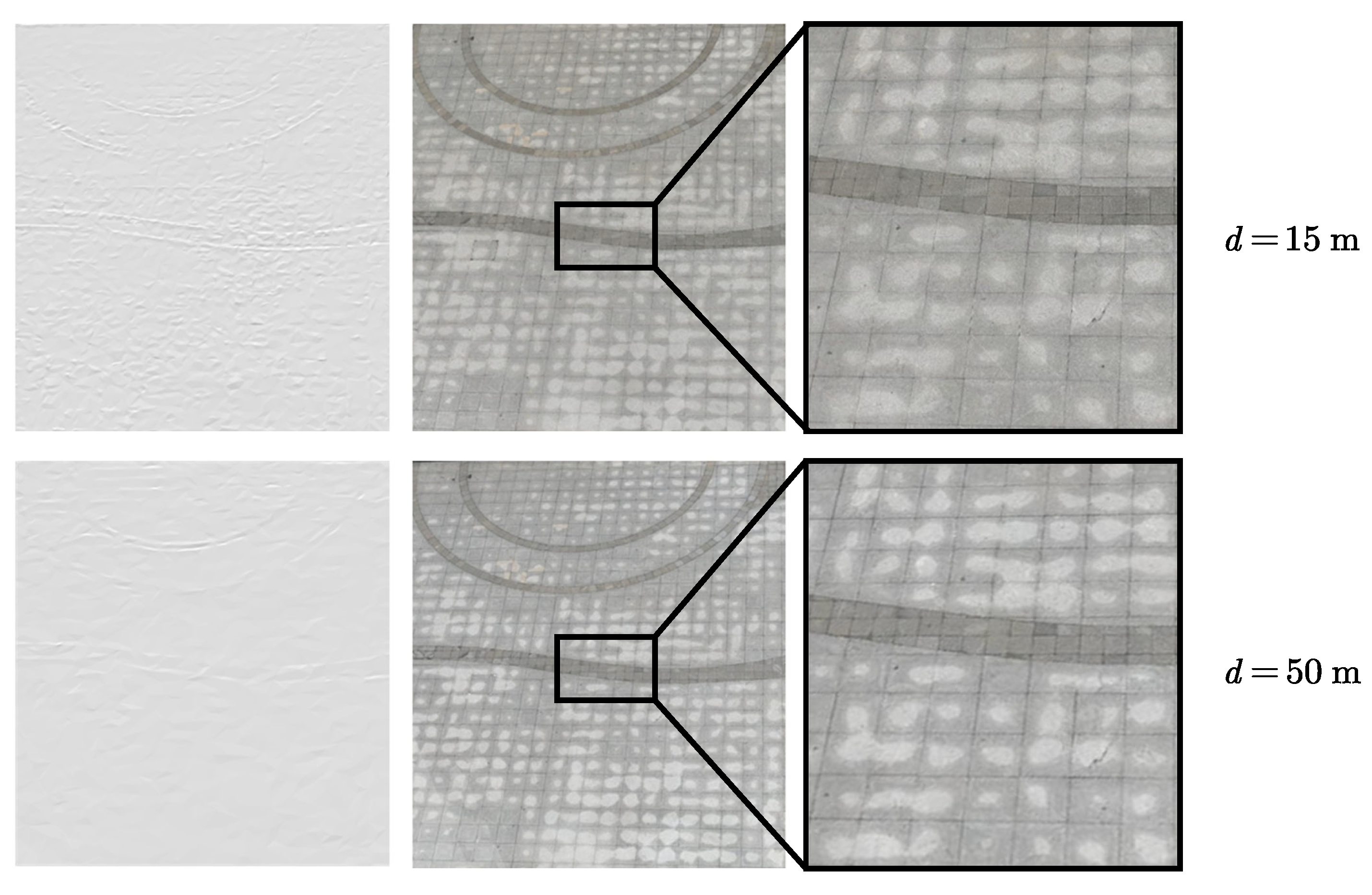

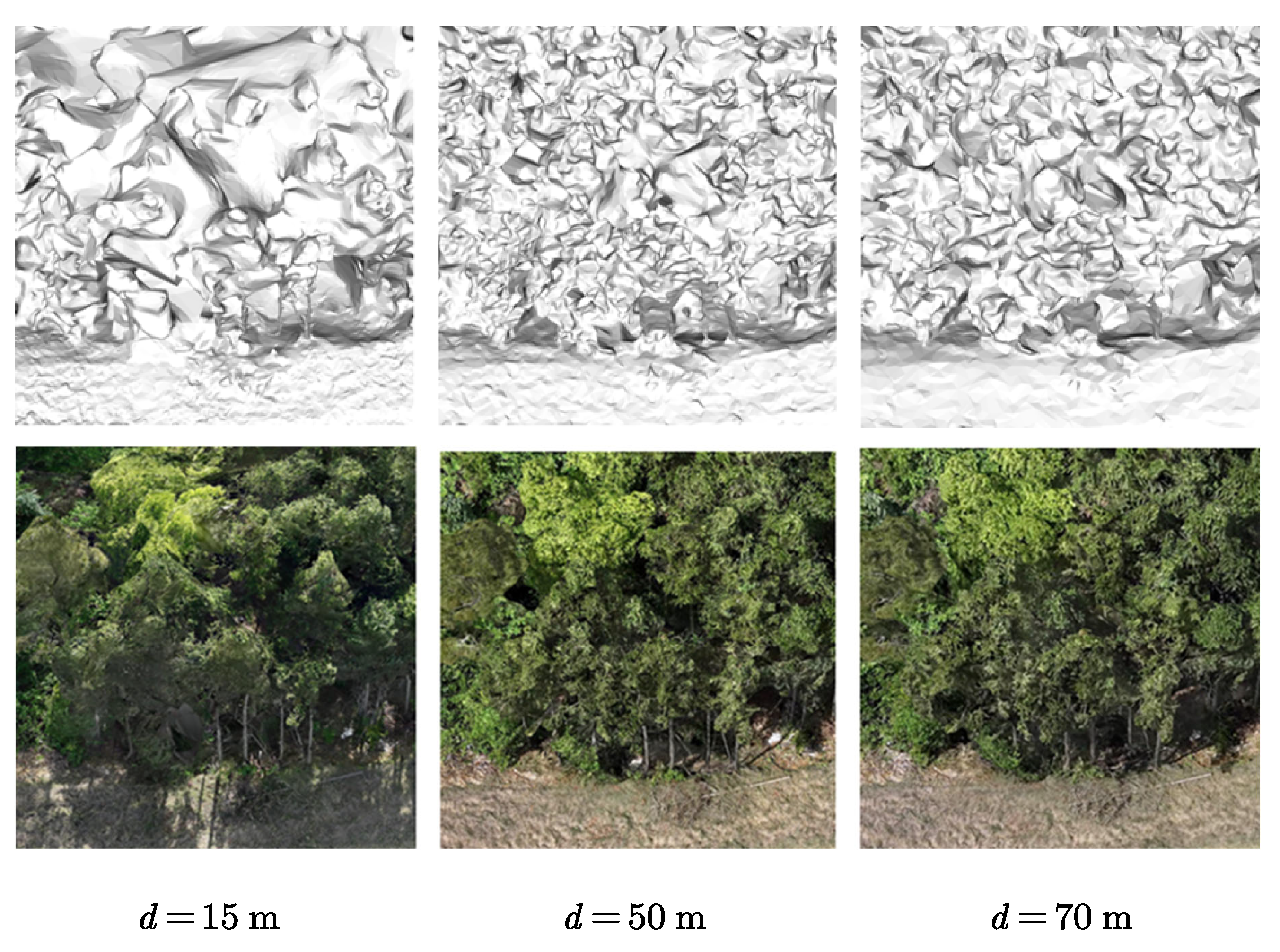

- Photography Resolution Setting Based on Object Categories

- Viewpoint Generation Based on Reconstructability

3.5. Obstacle-Aware and RTK Signal-Based Viewpoint Optimization

3.5.1. Obstacle Avoidance Analysis Based on DSM Safety Shell

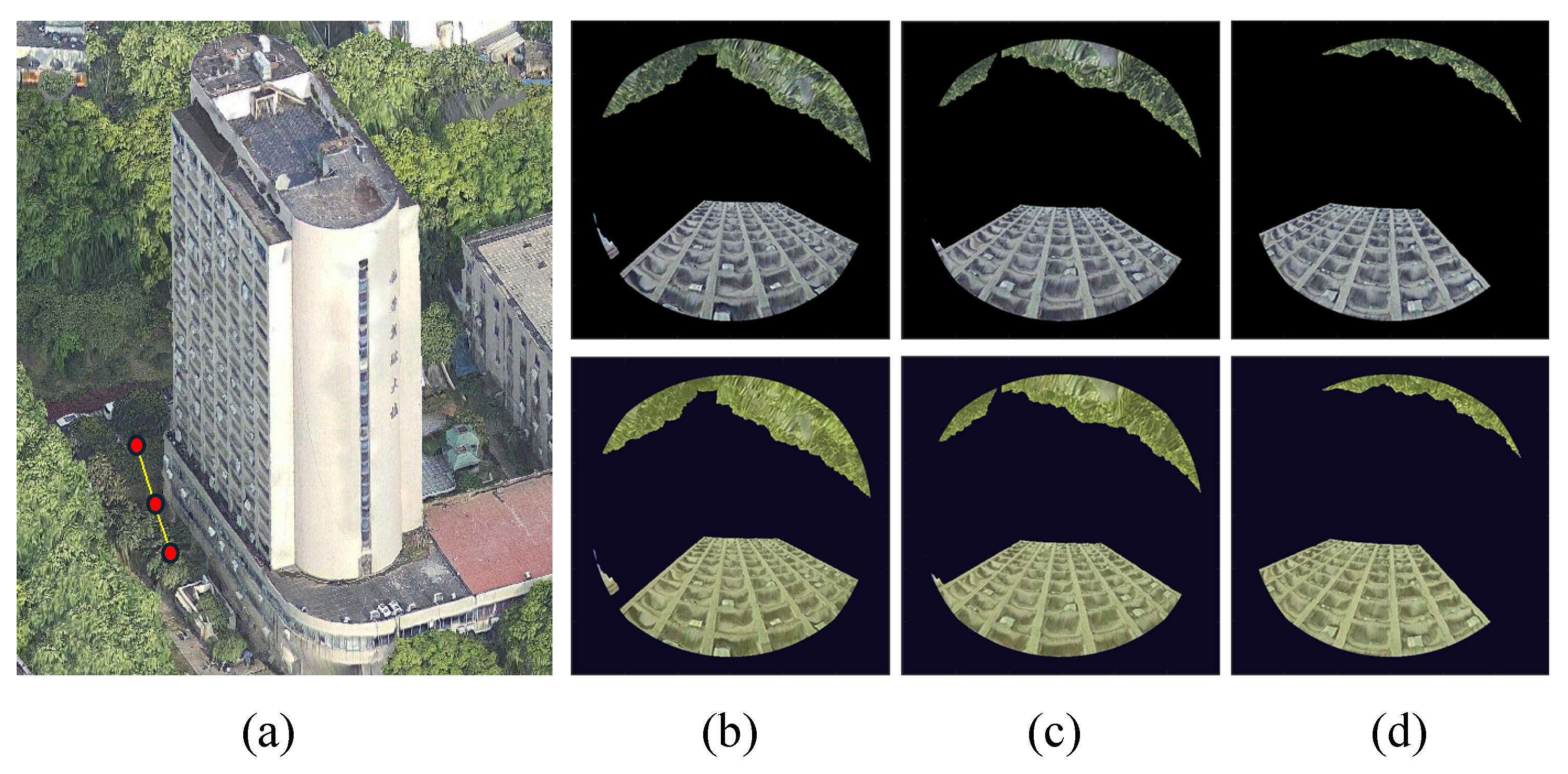

3.5.2. RTK Signal Analysis and Optimization Based on Sky-View Maps

- (1) Among the viewpoints generated for buildings, identify those whose heights are less than half the height of the corresponding building.

- (2) For these viewpoints, use an OpenGL renderer to generate upward-facing virtual images of the proxy model, then convert them into fisheye images. Based on a sky mask, extract the non-sky area and calculate the sky-view ratio.

- (3) For viewpoints with a sky-view ratio below 50%, incrementally raise their height in 2 m steps. During elevation, keep the camera oriented toward the original sample point.

- (4) After each elevation step, recalculate the sky-view ratio at the new position. Continue until the ratio exceeds 50% or the elevation causes the viewing angle to exceed a predefined threshold (60°).
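The control flow of steps (1) to (4) can be sketched as follows. This sketch assumes the upward fisheye render has already been classified into a square boolean sky mask (True = sky). It also assumes the sky-view ratio is the share of sky pixels inside the inscribed fisheye circle. The caller-supplied `ratio_at` stands in for the OpenGL rendering and masking step; it and all other function names are hypothetical. The source does not define the "viewing angle", so here it is assumed to be the angle between the line of sight and the surface normal at the sample point. Heights are measured from the building's ground level.

```python
import math


def needs_sky_check(viewpoint_height, building_height):
    """Step (1): viewpoints below half the building height are checked."""
    return viewpoint_height < 0.5 * building_height


def sky_view_ratio(sky_mask):
    """Step (2): share of sky pixels inside the inscribed circle of a square fisheye mask."""
    n = len(sky_mask)
    c = (n - 1) / 2.0
    r2 = (n / 2.0) ** 2
    inside = sky = 0
    for i, row in enumerate(sky_mask):
        for j, is_sky in enumerate(row):
            if (i - c) ** 2 + (j - c) ** 2 <= r2:
                inside += 1
                sky += 1 if is_sky else 0
    return sky / inside if inside else 0.0


def viewing_angle_deg(camera, target, normal):
    """Angle between the line of sight (target -> camera) and the surface normal at target."""
    v = [camera[k] - target[k] for k in range(3)]
    cos_a = sum(v[k] * normal[k] for k in range(3)) / (math.hypot(*v) * math.hypot(*normal))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def raise_viewpoint(position, target, normal, ratio_at,
                    step=2.0, min_ratio=0.5, max_angle_deg=60.0, max_steps=100):
    """Steps (3)-(4): climb in `step` metres until the sky-view ratio reaches min_ratio.

    The camera keeps aiming at `target`; a step that would push the viewing angle
    past max_angle_deg is not taken, and the last valid position is returned.
    """
    x, y, z = position
    for _ in range(max_steps):
        if ratio_at((x, y, z)) >= min_ratio:
            break
        if viewing_angle_deg((x, y, z + step), target, normal) > max_angle_deg:
            break
        z += step
    return (x, y, z)
```

Keeping the last position that still satisfies the angle limit, rather than the first one that violates it, is a design choice of this sketch. The `max_steps` guard only prevents an endless loop when neither stopping condition is ever met.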

3.6. UAV Path Connection Based on Energy Consumption

- (1) The flight path intersects an obstacle: the line segment connecting a pair of photography points intersects the safety shell, as determined by collision detection.

- (2) When a viewpoint lies on a flight strip unit, only the energy costs between adjacent points on the same strip are retained; the energy costs between non-adjacent points are set to infinity. In this way, each flight strip unit is treated as an indivisible segment during route connection.
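The two rules can be folded into the cost matrix that a route solver receives. The sketch below relies on several assumptions. `segment_blocked` stands in for the collision test against the DSM safety shell, and `energy` stands in for the energy-consumption model; both are hypothetical callables supplied by the caller. It also assumes that interior points of a strip connect only to their strip neighbours, while strip end points may connect to points outside the strip. This is one way to keep each strip indivisible; the source does not spell out how strip ends are treated.

```python
import math


def build_cost_matrix(points, strips, segment_blocked, energy):
    """Directed energy costs between photography points; math.inf marks forbidden moves.

    points: list of (x, y, z); strips: list of flight strips, each an ordered list of point indices.
    """
    n = len(points)
    strip_of, interior, adjacent = {}, set(), set()
    for s, members in enumerate(strips):
        for k, idx in enumerate(members):
            strip_of[idx] = s
            if 0 < k < len(members) - 1:
                interior.add(idx)  # interior points may only link to strip neighbours
        for a, b in zip(members, members[1:]):
            adjacent.update({(a, b), (b, a)})

    cost = [[math.inf] * n for _ in range(n)]
    for i in range(n):
        cost[i][i] = 0.0
        for j in range(n):
            if i == j:
                continue
            same_strip = i in strip_of and strip_of.get(j) == strip_of[i]
            # Rule (2): inside a strip only consecutive points stay connected.
            if (i, j) not in adjacent and (same_strip or i in interior or j in interior):
                continue
            # Rule (1): a segment that hits the safety shell is infeasible.
            if segment_blocked(points[i], points[j]):
                continue
            cost[i][j] = energy(points[i], points[j])
    return cost
```

For example, with five collinear points, one strip `[0, 1, 2]`, `math.dist` as a stand-in energy model, and a blocker on segments with both ends at x ≥ 3, `cost[0][2]`, `cost[1][3]`, and `cost[3][4]` come out infinite while `cost[2][3]` is 1.0. Any ordering heuristic that avoids infinite-cost moves, such as a travelling-salesman solver, can then sequence the viewpoints.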

4. Experimental Results

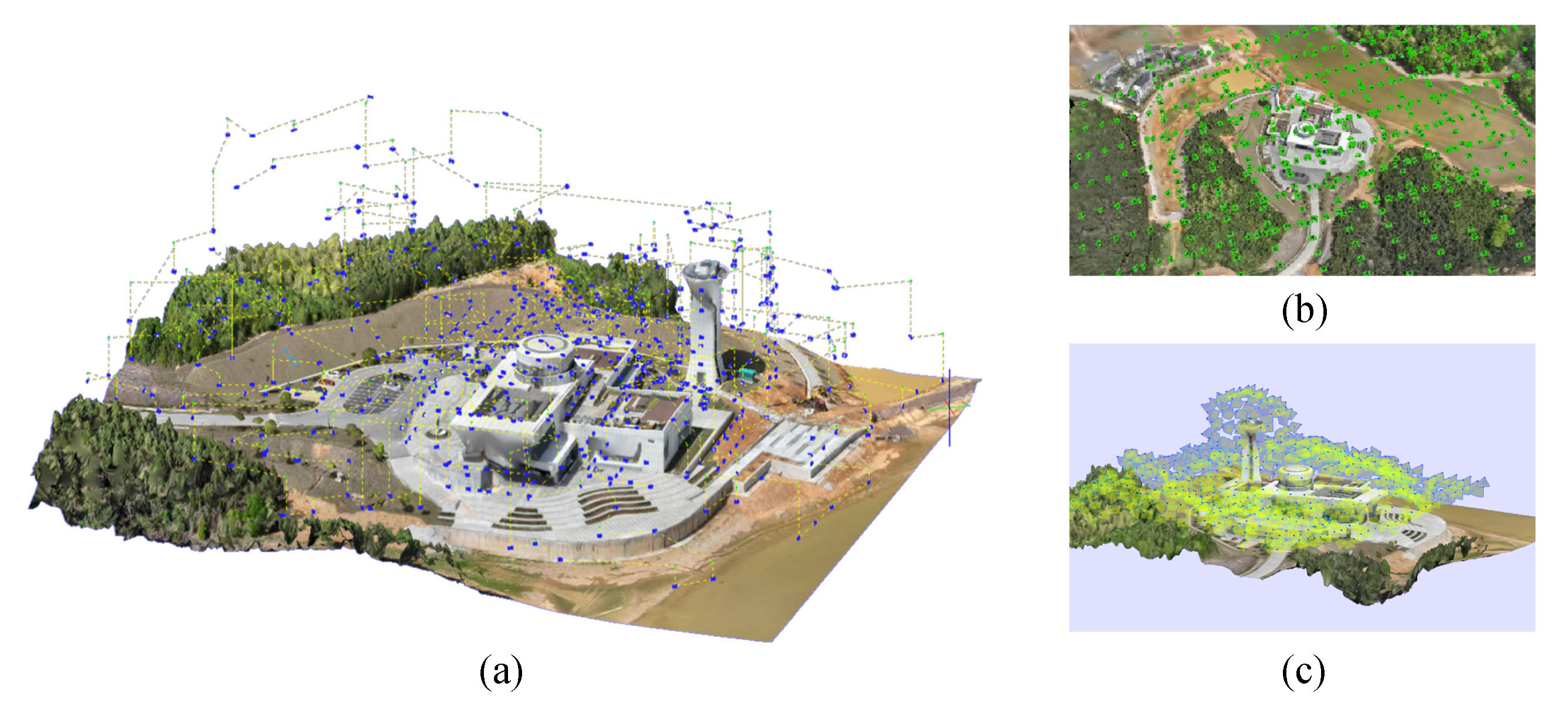

4.1. Experimental Setup

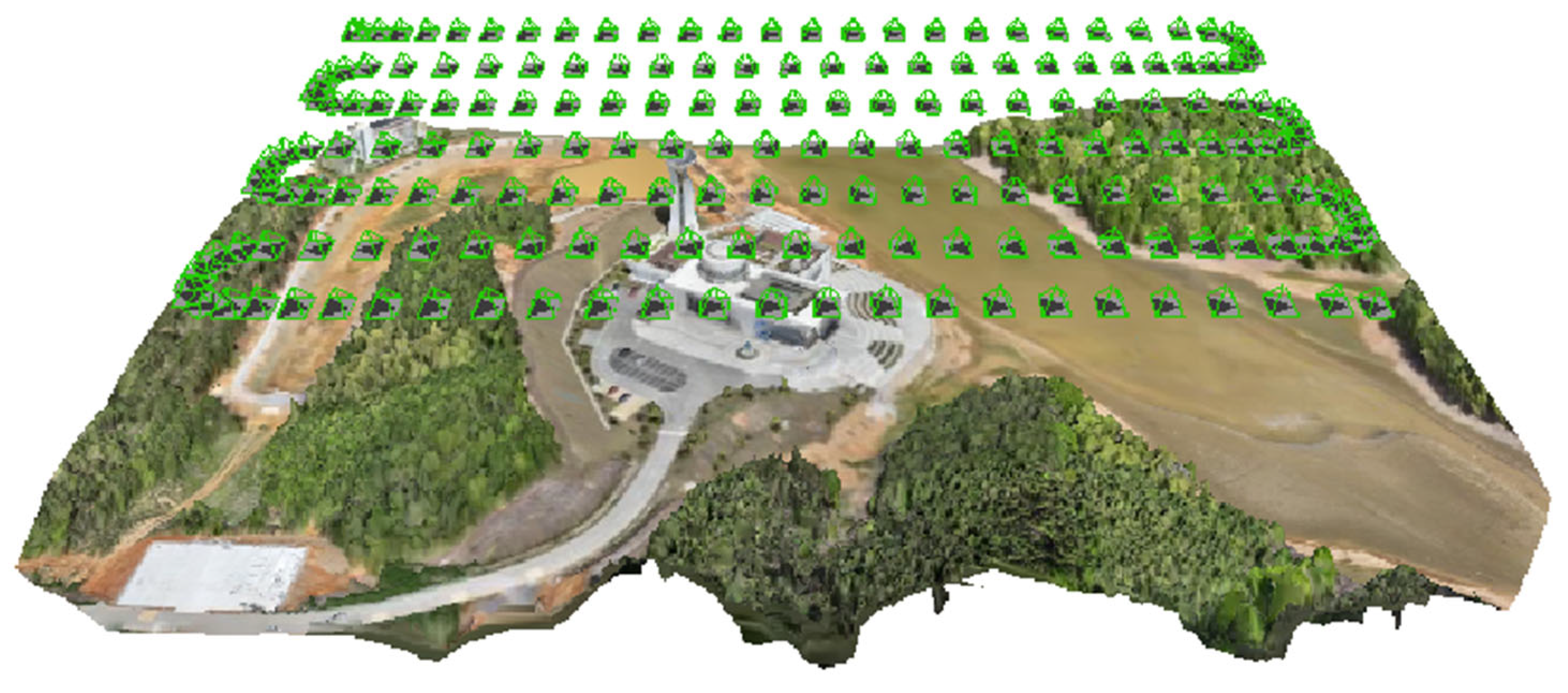

4.2. Experimental Procedure

4.2.1. Proposed Method

4.2.2. Oblique Photogrammetry

4.2.3. Metashape (MS) Method

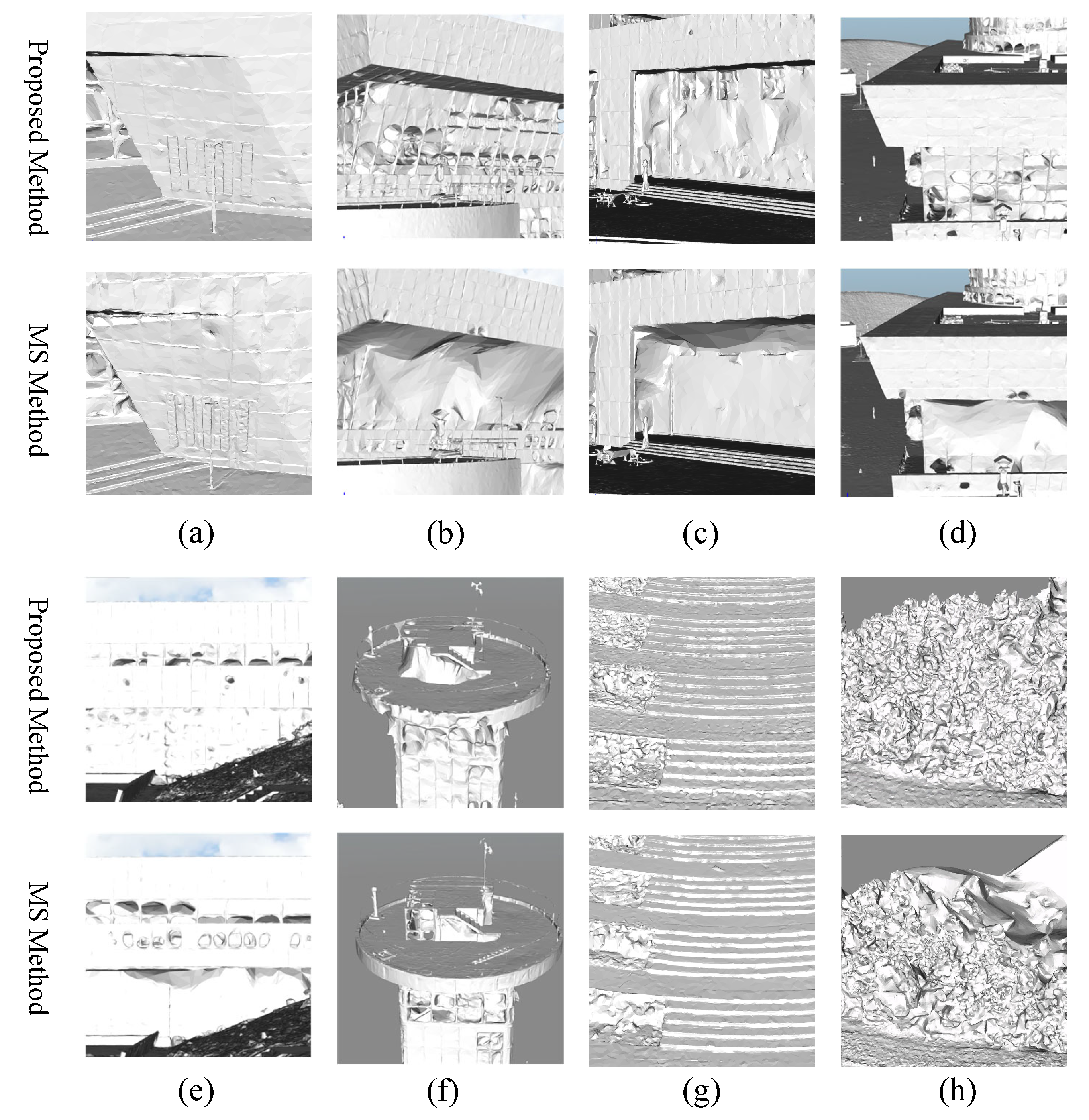

4.3. UAV Path Planning Method Comparison

4.3.1. Quality Evaluation Metrics

4.3.2. Comparison with Oblique Photogrammetry

4.3.3. Comparison with Metashape (MS) Method

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jovanović, D.; Milovanov, S.; Ruskovski, I.; Govedarica, M.; Sladić, D.; Radulović, A.; Pajić, V. Building virtual 3D city model for smart cities applications: A case study on campus area of the university of novi sad. ISPRS Int. J. Geo-Inf. 2020, 9, 476.

- Ketzler, B.; Naserentin, V.; Latino, F.; Zangelidis, C.; Thuvander, L.; Logg, A. Digital twins for cities: A state of the art review. Built Environ. 2020, 46, 547–573.

- Remondino, F.; Rizzi, A. Reality-based 3D documentation of natural and cultural heritage sites—Techniques, problems, and examples. Appl. Geomat. 2010, 2, 85–100.

- Xu, J.; Wang, C.; Li, S.; Qiao, P. Emergency evacuation shelter management and online drill method driven by real scene 3D model. Int. J. Disaster Risk Reduct. 2023, 97, 104057.

- Amraoui, M.; Kellouch, S. Comparison assessment of digital 3d models obtained by drone-based lidar and drone imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 46, 113–118.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Furukawa, Y.; Hernández, C. Multi-view stereo: A tutorial. Found. Trends® Comput. Graph. Vis. 2015, 9, 1–148.

- Maboudi, M.; Homaei, M.; Song, S.; Malihi, S.; Saadatseresht, M.; Gerke, M. A review on viewpoints and path planning for UAV-based 3-D reconstruction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5026–5048.

- DJI. P4RTK System Specifications. 2019. Available online: https://www.dji.com/cn/support/product/phantom-4-rtk (accessed on 16 June 2025).

- Zhang, S.; Liu, C.; Haala, N. Three-dimensional path planning of uavs imaging for complete photogrammetric reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 1, 325–331.

- Jing, W.; Polden, J.; Tao, P.Y.; Lin, W.; Shimada, K. View planning for 3D shape reconstruction of buildings with unmanned aerial vehicles. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–6.

- Yan, F.; Xia, E.; Li, Z.; Zhou, Z. Sampling-based path planning for high-quality aerial 3D reconstruction of urban scenes. Remote Sens. 2021, 13, 989.

- Wei, R.; Pei, H.; Wu, D.; Zeng, C.; Ai, X.; Duan, H. A Semantically Aware Multi-View 3D Reconstruction Method for Urban Applications. Appl. Sci. 2024, 14, 2218.

- Nan, L.; Wonka, P. Polyfit: Polygonal surface reconstruction from point clouds. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2353–2361.

- Lee, M.J.L.; Lee, S.; Ng, H.F.; Hsu, L.T. Skymask matching aided positioning using sky-pointing fisheye camera and 3D City models in urban canyons. Sensors 2020, 20, 4728.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. arXiv 2017, arXiv:1706.02413.

- Engel, N.; Belagiannis, V.; Dietmayer, K. Point Transformer. IEEE Access 2021, 9, 134826–134840.

- Wu, P.; Chai, B.; Li, H.; Zheng, M.; Peng, Y.; Wang, Z.; Nie, X.; Zhang, Y.; Sun, X. Spiking Point Transformer for Point Cloud Classification. Proc. AAAI Conf. Artif. Intell. 2025, 39, 21563–21571.

- Feng, Y.; Feng, Y.; You, H.; Zhao, X.; Gao, Y. Meshnet: Mesh neural network for 3d shape representation. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8279–8286.

- Singh, V.V.; Sheshappanavar, S.V.; Kambhamettu, C. Mesh Classification With Dilated Mesh Convolutions. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 3138–3142.

- Rouhani, M.; Lafarge, F.; Alliez, P. Semantic segmentation of 3D textured meshes for urban scene analysis. ISPRS J. Photogramm. Remote Sens. 2017, 123, 124–139.

- Gao, W.; Nan, L.; Boom, B.; Ledoux, H. SUM: A benchmark dataset of semantic urban meshes. ISPRS J. Photogramm. Remote Sens. 2021, 179, 108–120.

- Gao, W.; Nan, L.; Boom, B.; Ledoux, H. PSSNet: Planarity-sensible semantic segmentation of large-scale urban meshes. ISPRS J. Photogramm. Remote Sens. 2023, 196, 32–44.

- Yang, Y.; Tang, R.; Xia, M.; Zhang, C. A Texture Integrated Deep Neural Network for Semantic Segmentation of Urban Meshes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 4670–4684.

- Zhang, R.; Zhang, G.; Yin, J.; Jia, X.; Mian, A. Mesh-Based DGCNN: Semantic Segmentation of Textured 3-D Urban Scenes. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

- Schreiber, Q.; Wolpert, N.; Schömer, E. METNet: A mesh exploring approach for segmenting 3D textured urban scenes. ISPRS J. Photogramm. Remote Sens. 2024, 218, 498–509.

- Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 922–928.

- Choy, C.; Gwak, J.; Savarese, S. 4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3070–3079.

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 945–953.

- Boulch, A.; Guerry, J.; Le Saux, B.; Audebert, N. SnapNet: 3D point cloud semantic labeling with 2D deep segmentation networks. Comput. Graph. 2018, 71, 189–198.

- Meng, W.; Zhang, X.; Zhou, L.; Guo, H.; Hu, X. Advances in UAV Path Planning: A Comprehensive Review of Methods, Challenges, and Future Directions. Drones 2025, 9, 376.

- Hepp, B.; Dey, D.; Sinha, S.N.; Kapoor, A.; Joshi, N.; Hilliges, O. Learn-to-Score: Efficient 3D Scene Exploration by Predicting View Utility. arXiv 2018, arXiv:1806.10354.

- Kuang, Q.; Wu, J.; Pan, J.; Zhou, B. Real-Time UAV Path Planning for Autonomous Urban Scene Reconstruction. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1156–1162.

- Liu, Y.; Cui, R.; Xie, K.; Gong, M.; Huang, H. Aerial path planning for online real-time exploration and offline high-quality reconstruction of large-scale urban scenes. ACM Trans. Graph. (TOG) 2021, 40, 1–16.

- Palazzolo, E.; Stachniss, C. Effective Exploration for MAVs Based on the Expected Information Gain. Drones 2018, 2, 9.

- Wu, S.; Sun, W.; Long, P.; Huang, H.; Cohen-Or, D.; Gong, M.; Deussen, O.; Chen, B. Quality-driven Poisson-guided Autoscanning. ACM Trans. Graph. 2014, 33, 1–12.

- Xu, K.; Shi, Y.; Zheng, L.; Zhang, J.; Liu, M.; Huang, H.; Su, H.; Cohen-Or, D.; Chen, B. 3D attention-driven depth acquisition for object identification. ACM Trans. Graph. (TOG) 2016, 35, 1–14.

- Almadhoun, R.; Abduldayem, A.; Taha, T.; Seneviratne, L.; Zweiri, Y. Guided next best view for 3D reconstruction of large complex structures. Remote Sens. 2019, 11, 2440.

- Chen, S.; Li, Y. Vision sensor planning for 3-D model acquisition. IEEE Trans. Syst. Man, Cybern. Part B (Cybern.) 2005, 35, 894–904.

- Lee, I.D.; Seo, J.H.; Kim, Y.M.; Choi, J.; Han, S.; Yoo, B. Automatic Pose Generation for Robotic 3-D Scanning of Mechanical Parts. IEEE Trans. Robot. 2020, 36, 1219–1238.

- Song, S.; Jo, S. Surface-Based Exploration for Autonomous 3D Modeling. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 4319–4326.

- Smith, N.; Moehrle, N.; Goesele, M.; Heidrich, W. Aerial path planning for urban scene reconstruction: A continuous optimization method and benchmark. ACM Trans. Graph. 2018, 37, 1–15.

- Koch, T.; Körner, M.; Fraundorfer, F. Automatic and semantically-aware 3D UAV flight planning for image-based 3D reconstruction. Remote Sens. 2019, 11, 1550.

- Zhou, X.; Xie, K.; Huang, K.; Liu, Y.; Zhou, Y.; Gong, M.; Huang, H. Offsite aerial path planning for efficient urban scene reconstruction. ACM Trans. Graph. (TOG) 2020, 39, 1–16.

- Zhang, H.; Yao, Y.; Xie, K.; Fu, C.W.; Zhang, H.; Huang, H. Continuous aerial path planning for 3D urban scene reconstruction. ACM Trans. Graph. 2021, 40, 1–15.

- Zhou, H.; Ji, Z.; You, X.; Liu, Y.; Chen, L.; Zhao, K.; Lin, S.; Huang, X. Geometric primitive-guided UAV path planning for high-quality image-based reconstruction. Remote Sens. 2023, 15, 2632.

- Roberts, M.; Shah, S.; Dey, D.; Truong, A.; Sinha, S.; Kapoor, A.; Hanrahan, P.; Joshi, N. Submodular Trajectory Optimization for Aerial 3D Scanning. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5334–5343.

- Hepp, B.; Nießner, M.; Hilliges, O. Plan3d: Viewpoint and trajectory optimization for aerial multi-view stereo reconstruction. ACM Trans. Graph. (TOG) 2018, 38, 1–17.

- Peng, C.; Isler, V. Adaptive View Planning for Aerial 3D Reconstruction. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2981–2987.

- Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for point-cloud shape detection. Comput. Graph. Forum 2007, 26, 214–226.

- Lee, D.T.; Schachter, B.J. Two algorithms for constructing a Delaunay triangulation. Int. J. Comput. Inf. Sci. 1980, 9, 219–242.

- Edelsbrunner, H.; Kirkpatrick, D.; Seidel, R. On the shape of a set of points in the plane. IEEE Trans. Inf. Theory 1983, 29, 551–559.

- Douglas, D.H.; Peucker, T.K. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Cartogr. Int. J. Geogr. Inf. Geovisualization 1973, 10, 112–122.

- El-Latif, E.I.A.; El-dosuky, M. Predicting power consumption of drones using explainable optimized mathematical and machine learning models. J. Supercomput. 2025, 81, 646.

- Dorigo, M.; Gambardella, L.M. Ant colony system: A cooperative learning approach to the traveling salesman problem. IEEE Trans. Evol. Comput. 1997, 1, 53–66.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Ji, Z.; Chen, L.; Lyu, Y. UAV Path Planning via Semantic Segmentation of 3D Reality Mesh Models. Drones 2025, 9, 578. https://doi.org/10.3390/drones9080578


Zhang, Xiaoxinxi, Zheng Ji, Lingfeng Chen, and Yang Lyu. 2025. "UAV Path Planning via Semantic Segmentation of 3D Reality Mesh Models" Drones 9, no. 8: 578. https://doi.org/10.3390/drones9080578
