1. Introduction

In recent years, multi-UAV systems have emerged as a cornerstone in advancing low-altitude economies and intelligent autonomous systems owing to their advantages in task flexibility, environmental adaptability, and cooperative autonomy. Technically, system architectures have evolved from centralized schemes to distributed cooperative frameworks, thereby enhancing the robustness, scalability, and resilience in complex and dynamic environments. These advancements have facilitated the widespread deployment of multi-UAV systems across various domains. For example, in precision agriculture, UAV swarms are employed for real-time crop monitoring, field mapping, and pesticide application [1,2]. In urban logistics, UAV-based delivery networks enable efficient last-mile transportation and emergency responses [3,4]. In the energy sector, UAVs are increasingly used to inspect power lines, substations, and wind farms, providing high-frequency data acquisition with reduced operational risks [5,6]. These diverse applications highlight the critical role of multi-UAV systems in addressing real-world challenges across civil, industrial, and environmental domains.

Among various coordination strategies, containment tracking has emerged as a fundamental problem in the cooperative control of multi-UAV systems. The primary objective is to drive the follower UAVs to converge into the convex hull formed by multiple leader agents under a leader–follower architecture. Depending on the motion behavior of the leaders, containment control problems are generally classified into two categories: static and dynamic containment [7]. Static containment considers scenarios where the leaders remain stationary, and the followers are required to converge to a fixed convex region. This formulation simplifies the controller design by eliminating the need for velocity measurements and is well suited to applications such as satellite formation surveillance and area monitoring [8,9,10]. In contrast, dynamic containment addresses cases where the leaders follow time-varying trajectories. It necessitates the development of distributed velocity or acceleration observers to enable the followers to track a moving convex region, thereby enhancing the robustness under dynamic operating conditions. Dynamic containment has demonstrated substantial practical relevance in time-sensitive missions such as UAV-based logistics and aerial surveillance [11,12,13]. Nevertheless, practical deployments of multi-UAV systems often involve non-cooperative behaviors and resource contention, especially in congested airspace or adversarial environments [14,15]. Such scenarios naturally give rise to both cooperative and antagonistic interactions among agents, which conventional containment control frameworks fail to adequately address. To overcome these limitations, the concept of bipartite containment control has been introduced as a significant extension. This framework is designed to ensure group-level convergence even in the presence of both positive (cooperative) and negative (competitive) relationships among agents.

Within the framework of bipartite containment control, follower agents are guided to converge to the union of two convex hulls, each constructed by a distinct subgroup of cooperative or competitive leaders. These leader subgroups independently span their respective convex regions, while the system collectively ensures global convergence and stability [16]. Several recent studies have explored this problem from various perspectives. For instance, Wu et al. [17] investigated bipartite containment for heterogeneous marine aerial–surface systems and proposed an adaptive control scheme with prescribed performance guarantees. He et al. [18] tackled the containment tracking problem in nonlinear systems subject to false data injection (FDI) attacks using a model-free adaptive iterative learning control method. Furthermore, Fan et al. [19] formulated a problem under adversarial disturbances as a zero-sum game and employed game-theoretic strategies to achieve bipartite containment. The works in [20,21] have further advanced the field by enhancing robustness and addressing adversarial scenarios. However, most of the existing approaches focus primarily on feasibility and stability, while a critical gap persists in performance-oriented design. In particular, aspects such as minimizing the control effort, reducing the energy consumption, and improving the transient behavior have received insufficient attention, despite their practical significance in real-world multi-UAV applications that operate under resource constraints and mission-critical timelines.

Moreover, most of the aforementioned studies do not explicitly account for the presence of uncertainties, which are ubiquitous in practical multi-UAV control scenarios. In real-world deployments, UAVs are often subjected to external disturbances such as gusty winds, atmospheric turbulence, and aerodynamic interactions with neighboring vehicles [22]. Simultaneously, internal uncertainties may stem from actuator degradation, sensor misalignment, mounting errors, and structural deformations [23]. These compound uncertainties introduce significant challenges into the design of control strategies that are both robust and performance-optimal. Consequently, there is a pressing need for control frameworks capable of achieving high-performance coordination while effectively accommodating such uncertainties.

In recent years, adaptive dynamic programming (ADP) has garnered considerable attention as an effective approach to solving optimal control problems [24]. ADP facilitates online learning of the optimal control policies via iterative value function approximation and is particularly appealing due to its model-free nature, enabling adaptation to unknown or time-varying system dynamics [25]. Representative studies [26,27] show that ADP exhibits adaptivity and practicality in both energy system optimization and UAV cooperative control. Meanwhile, to address the uncertainty in the control process, some works [28,29] propose robust ADP methods. Despite these advances, the majority of the existing ADP approaches rely on the generalized policy iteration (GPI) framework [30,31], which typically assumes a preassigned and fixed number of internal iterations. Such static iteration strategies may result in suboptimal computational efficiency, particularly in scenarios with heterogeneous learning speeds or dynamic environmental conditions, thereby hindering real-time applicability in resource-constrained systems.

Therefore, to address the aforementioned challenges, this paper proposes a data-driven Dynamic Iterative Reward Shaping (DIRS) approach to achieve optimal bipartite containment control for multi-UAV systems subject to compound model uncertainties. The main contributions of this paper are summarized as follows:

(1) A data-driven control scheme is developed to address the problem of optimal bipartite containment tracking control for multi-UAV systems under compound uncertainties. The proposed scheme integrates task-specific cost function design with online policy learning, enabling performance-oriented and adaptive control. Compared with the existing approaches [32,33], this framework provides greater flexibility and applicability in real-world scenarios by allowing the control strategies to be tailored to mission-specific objectives and environmental conditions.

(2) By leveraging a critic–actor neural network architecture, the proposed scheme realizes a data-driven, online iterative control process that operates independently of explicit system model parameters. This model-free formulation enhances the robustness against compound uncertainties, including external disturbances and internal structural or parametric errors, thereby ensuring a reliable performance in uncertain and dynamically evolving multi-UAV environments.

(3) To enhance the computational efficiency of traditional ADP algorithms [34,35], this paper designs a novel DIRS method. By incorporating a dynamic regulation mechanism and an adaptive regulation threshold, the DIRS enables task-dependent adjustment of the learning iteration steps. This mechanism effectively eliminates redundant iterations, improves the computational efficiency, and facilitates a better trade-off between the control performance and resource cost, thereby making this approach more suitable for real-time deployment in complex multi-UAV systems.

The remainder of this paper is organized as follows. Section 2 presents the preliminary concepts in graph theory and formulates the optimal bipartite containment tracking problem for multi-UAV systems. Section 3 introduces a novel DIRS-based iterative strategy for solving the optimal control problem, analyzes its performance, and details the implementation of a critic–actor neural network for online approximation of the optimal control law. Section 4 provides numerical simulations to demonstrate the effectiveness of the proposed method. Finally, Section 5 concludes the paper.

3. DIRS Algorithm Design, Analysis, and Online Implementation

In this section, a novel DIRS is proposed to adaptively adjust the sub-iteration step size. The proposed algorithm effectively eliminates redundant iterations and facilitates the solution of the optimal value function and the corresponding control inputs. A neural-network-based approximation scheme is then employed to enable an online implementation of the optimal bipartite containment tracking control. The overall DIRS-based control framework is illustrated in Figure 1.

3.1. The DIRS Algorithm Design

Let and represent the control law and the value function, respectively. Furthermore, to simplify the iteration process, is defined as an adaptive sub-iteration value function with the sub-iteration indexes . The symbol denotes the number of sub-iterations that are adaptively adjusted based on the convergence of the value function, while represents the predetermined maximum number of sub-iterations. In order to facilitate the representation, all polynomials of a similar form have , for those mentioned later.

For the progress of the

th sub-iteration, the value function is

The DIRS algorithm’s iterative process is summarized below:

(1): Initializing the admissible control law

, the initial value function

is determined as

For

, the iteration control law

is

(2): Let the control law remain a constant value. For the bth step of the iteration, the value function can be solved as follows.

For

,

where the initial value function of the sub-iterations is

If the difference in the value function between two successive sub-iterations satisfies the following condition,

then the

d sub-iteration terminates, while its iteration number is recorded as

. In particular, the threshold size is set to

. In fact, a smaller

imposes stricter accuracy requirements, leading to more internal iterations to achieve a higher control accuracy. Conversely, a larger

relaxes the accuracy requirements, allowing the system to reach a steady state faster with fewer iterations.

Otherwise, the adaptive sub-iteration value function is

This subsection presents a DIRS algorithm with an adaptive termination mechanism (18), which enables online solution of the HJB equation via alternating policy evaluations and policy improvements. Specifically, the algorithm reduces the number of iterations once sub-iteration convergence is detected, thereby accelerating computation and conserving resources. Moreover, by adjusting the termination threshold, the DIRS algorithm balances computational efficiency and solution accuracy, ensuring reliable approximation of the optimal value function with reduced computational costs.
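As a rough illustration of the adaptive termination idea, the sketch below applies it to a scalar discrete-time linear-quadratic problem rather than to the multi-UAV model. The system x_{k+1} = a·x_k + b·u_k, the quadratic value function p·x², the function name `adaptive_policy_iteration`, and all numerical values are assumptions made for this example. Only the structure follows the description above: a policy improvement step, then sub-iterations that stop once two successive value functions differ by at most a threshold, or once a preset maximum count is reached.

```python
def adaptive_policy_iteration(a, b, q, r, k0, eps, n_max, outer_iters=20):
    """Policy iteration with adaptively terminated sub-iterations (illustrative).

    Hypothetical scalar setting: x_next = a*x + b*u, stage cost q*x^2 + r*u^2,
    control law u = -k*x, value function V(x) = p*x^2.
    """
    closed = a - b * k0
    if abs(closed) >= 1.0:
        raise ValueError("k0 must be stabilizing (admissible)")
    # Initial value function: exact cost of the admissible initial control law.
    p = (q + r * k0 * k0) / (1.0 - closed * closed)
    k = k0
    sub_counts = []
    for _ in range(outer_iters):
        # Policy improvement: minimize r*u^2 + p*(a*x + b*u)^2 over u.
        k = a * b * p / (r + b * b * p)
        closed = a - b * k
        stage = q + r * k * k
        # Policy evaluation with the control law held fixed.
        steps = 0
        while steps < n_max:
            p_next = stage + closed * closed * p
            steps += 1
            converged = abs(p_next - p) <= eps  # adaptive termination test
            p = p_next
            if converged:
                break
        sub_counts.append(steps)  # number of sub-iterations actually used
    return p, k, sub_counts


if __name__ == "__main__":
    for eps in (1e-2, 1e-10):
        p, k, counts = adaptive_policy_iteration(
            1.0, 1.0, 1.0, 1.0, k0=0.5, eps=eps, n_max=50
        )
        print(eps, p, k, sum(counts))
```

In this toy setting, a tighter threshold spends more sub-iterations per outer step, while a looser one stops earlier, which mirrors the accuracy and efficiency trade-off discussed for the threshold.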

Remark 3. Compared with existing ADP algorithms [32,35] for which the number of internal iterations needs to be set in advance, the DIRS algorithm proposed in this paper can adaptively and dynamically adjust the iterative process when (18) is satisfied, avoiding unnecessary iterations after the internal iterations converge. Furthermore, the algorithm can satisfy the required control accuracy while performing the minimum number of iterations, thus saving computational resources and improving the efficiency of optimal bipartite containment control.

3.2. Stability, Convergence, and Optimality

In this subsection, the stability, convergence, and optimality properties of the proposed DIRS algorithm are analyzed, and the corresponding theoretical results are rigorously established. These results lay the theoretical foundation for solving the bipartite containment tracking control problem in multi-UAV systems.

Theorem 1. The bipartite tracking control error in the multi-UAV system can be asymptotically stabilized under the application of the optimal control input , which is obtained via the proposed DIRS algorithm. That is, the following condition holds:

Proof. According to the DIRS algorithm, the optimal value function

satisfies the HJB equation as given in Equation (11) and consequently obtains

Multiply both sides of Equation (22) by

:

Define

as the Lyapunov function for all agents. Consequently, the first-order difference form of the Lyapunov equation is derived as

By combining Equations (23) and (24), we obtain the following result.

From Equation (25), it is evident that the bipartite tracking control error

of multi-UAV systems is asymptotically stable, i.e.,

when

. This indicates that the system driven by the designed DIRS algorithm achieves bipartite tracking control and the agents track the leader’s trajectory.

The proof is completed. □

Theorem 2. The value function, as determined by Equations (14) to (20) of the DIRS algorithm, forms a monotonically non-increasing sequence, satisfying the following conditions:

Proof. Mathematical induction is employed to prove Equations (26) and (27).

As the DIRS algorithm incorporates an adaptive termination mechanism, the maximum number of sub-iterations for the value function becomes uncertain. The algorithm is designed to automatically terminate when the change in the sub-iteration value function is insignificant, recording the maximum number of sub-iterations as , or if it is not a termination. Therefore, in the subsequent proof, the maximum number of sub-iterations within a single iteration is denoted as ℑ, where .

Let

, and denote the value function as follows:

Furthermore, when

, it follows that

Let

, where

is a positive integer such that

. From this, it follows that

Based on Equations (28) to (30), it is straightforward to demonstrate that inequality (26) from Theorem 2 is satisfied when

. Suppose further that inequality (26) from Theorem 2 holds for

. This leads to

Consequently, it remains to establish that inequality (26) is also valid for

:

By using (29), when

,

Similarly, let

and

be a positive integer satisfying

; then, it can be derived that

From the derivation process outlined above, it can be summarized that when

The proof is complete. □

An important lemma is introduced before determining the optimality of the DIRS algorithm.

Lemma 1 ([38]). If the sequence is monotonically non-increasing, then it converges to the same limit as its subsequence.

Theorem 3. The value function and the control law , as determined by Equations (14) to (20) of the DIRS algorithm, converge to the optimal values and .

Proof. Initially, construct the sequence associated with the value function according to the DIRS algorithm as follows:

Furthermore, define the sequence of the value function for the outer iterations as follows:

It is evident that the sequence (40) is a subsequence of the sequence (39). Moreover, given that sequence (39) is monotonically non-increasing, it follows from Lemma 1 that

Therefore, Theorem 3 to be proven is transformed into

Let the limit of

as

p approaches infinity be denoted by

. Given that sequence (40) is monotonically non-increasing, it follows that

As

, Equation (43) transforms into

Further, suppose an arbitrarily small real number

exists. Since the sequence

is monotonically non-increasing, there is a positive iteration index Y and

According to Equation (46), it follows that

Based on the prior definition,

is an arbitrarily small real number, thus establishing that

As Equations (44) and (47) are both satisfied, we derive the following conclusion:

It is clear that Equations (9) and (48) have the same right-hand side; hence, it can be concluded that

The proof for Equation (37) is thereby complete. Since the value function converges to the optimal value, it follows from Equation (12) that the control law similarly converges to the optimal control law, i.e.,

.

The proof is complete. □

In this subsection, a data-driven control scheme based on the proposed DIRS algorithm is developed to solve the optimal bipartite containment tracking control problem for multi-UAV systems. The DIRS algorithm incorporates a novel adaptive termination mechanism, which effectively reduces redundant iteration steps and improves the overall computational efficiency. Furthermore, the stability, convergence, and optimality of the proposed control framework are rigorously established using a Lyapunov-based analysis and mathematical induction.

3.3. The Neural Network Framework

The DIRS algorithm is implemented within a neural network framework using an actor–critic architecture to enable data-driven reinforcement learning. Specifically, the critic network is employed to approximate the value function , while the actor network is used to generate the control input . In this paper, two independent fully connected feedforward neural networks are used to approximate the optimal value function and the optimal control law , respectively. These networks are updated using an error back-propagation algorithm.

3.3.1. The Critic Network

The critic network is employed to approximate the optimal value function

. The input to the critic network consists of the error state

and the control inputs

from the local agent, respectively. Accordingly, the output of the critic network is defined as follows:

where

,

is a function expression, and

is the network weight for the critic network.

Subsequently, the error function for the critic network is formulated as

Therefore, the objective function within the critic network is defined as

Furthermore, utilizing a gradient-descent-based approach, we derive the weight update rule for the critic network:

where

represents the learning rate of the critic network for the

ith agent.
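The gradient-descent update described above can be sketched as follows. This is a minimal illustration rather than the update rule of this paper: it assumes a critic that is linear in a feature vector, a squared-error objective of the form 0.5·e², and a target value that is held fixed during the gradient step. The names `critic_output` and `critic_update` and the numerical values are hypothetical.

```python
def critic_output(weights, features):
    """Critic estimate: inner product of the weights and the feature vector."""
    return sum(w * f for w, f in zip(weights, features))


def critic_update(weights, features, target, learning_rate):
    """One gradient-descent step on the objective 0.5 * error**2.

    The target (e.g., stage cost plus next-step value estimate) is treated
    as a constant, so the gradient with respect to the weights is
    error * features.
    """
    error = critic_output(weights, features) - target
    new_weights = [w - learning_rate * error * f
                   for w, f in zip(weights, features)]
    return new_weights, error


if __name__ == "__main__":
    weights = [0.0, 0.0]
    features = [1.0, 0.5]   # hypothetical features of (error state, input)
    for _ in range(100):
        weights, error = critic_update(weights, features, 2.0, 0.1)
    print(weights, error)
```

For a fixed sample, each step scales the error by 1 − α‖φ‖², where α is the learning rate and φ the feature vector, so the learning rate has to be small enough for that factor to stay below one in magnitude.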

3.3.2. The Actor Network

The actor network is used to approximate the optimal control law

. The output vector of the actor network is given by

where

denotes the function expression, and

represents the network weight of the actor network.

When the value function

of the

ith agent converges to its optimal form, this implies that the corresponding control law is optimal. Based on this observation, the objective function for the actor neural network is designed as follows:

where

.

Similarly, the actor network updates its weights using a gradient-descent-based method, which can be expressed as follows:

where

is the learning rate of the actor network,

, and

is a constant matrix.
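To illustrate how an actor can be trained toward the control implied by a learned value function, the sketch below uses a scalar linear-quadratic setting. It is an assumption-laden example, not the actor update of this paper: the value function is taken as p·x² with p already known, the system is x_next = a·x + b·u, the actor is the single-weight map u = w·x, and the names `greedy_target` and `actor_update` are hypothetical.

```python
def greedy_target(x, p, a, b, r):
    """Control that minimizes r*u^2 + p*(a*x + b*u)^2 for a given state x."""
    return -(a * b * p) / (r + b * b * p) * x


def actor_update(w_actor, x, u_target, learning_rate):
    """One gradient-descent step on 0.5 * (w_actor*x - u_target)**2."""
    error = w_actor * x - u_target
    return w_actor - learning_rate * error * x, error


if __name__ == "__main__":
    p = (1 + 5 ** 0.5) / 2          # assumed converged critic parameter
    w = 0.0
    for x in [1.0, -0.5, 0.8] * 100:  # hypothetical state samples
        u_star = greedy_target(x, p, a=1.0, b=1.0, r=1.0)
        w, _ = actor_update(w, x, u_star, learning_rate=0.5)
    print(w)  # approaches the feedback gain implied by p
```

The actor never uses the model parameters directly in its own update; they enter only through the target, which in a model-free scheme would instead be obtained from the critic and measured data.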

This subsection has established a reinforcement learning framework for the online approximation and implementation of the DIRS algorithm. The framework consists of two main components. First, the critic network evaluates the control law using real-time error data and estimates the approximate optimal value function, thereby enabling online learning of the system’s control behavior. Second, the actor network approximates the optimal control law using the learned value function, without relying on explicit system models, thus facilitating model-free optimal control. In the following section, this framework is applied to implementing the DIRS algorithm to achieve optimal bipartite containment tracking.

Remark 4. It is worth noting that the designed DIRS-based controller is a data-driven model-free online control scheme. As mentioned in the literature [35,39], the neural network architectures introduced to solve the ADP-based control problem have only been used to fit the optimal value function and the optimal control inputs to solve nonlinear, non-analytic HJB equations. The data required for the neural network is generated entirely through the online interaction of the system, without the need for pre-collected or offline datasets. The whole training process is synchronized with the mission, and there is no independent offline phase.

4. Simulation Results

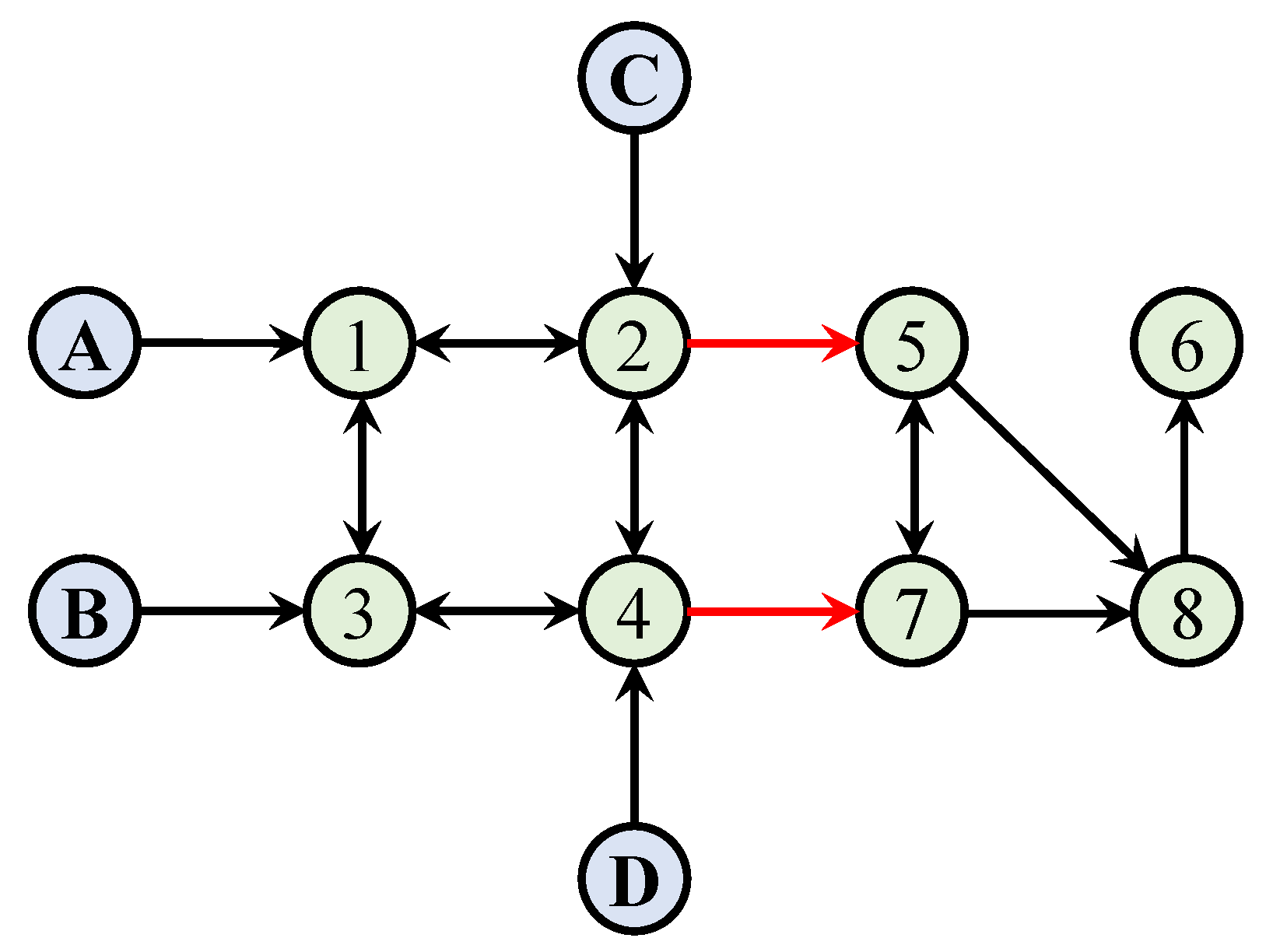

In this section, numerical simulations are conducted to validate the effectiveness of the proposed DIRS-based control framework and the neural network approximation scheme. The communication topology of the multi-UAV system considered, which incorporates both cooperative and competitive interactions, is depicted in Figure 2. In this topology, black arrows denote cooperative relationships, while red arrows represent competitive ones. The blue nodes correspond to the leader UAVs labeled A, B, C, and D, whereas the green nodes indicate the follower UAVs.
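Topologies with cooperative and competitive links are commonly represented as signed graphs, and bipartite results usually rely on the graph being structurally balanced, meaning the agents can be split into two camps with cooperative links inside each camp and competitive links between them. The sketch below checks that property for a small hypothetical edge list. It does not reproduce the topology of Figure 2, it treats links as undirected for simplicity, and the function name `split_into_two_camps` is an assumption.

```python
from collections import deque


def split_into_two_camps(num_nodes, signed_edges):
    """Return camp labels (0 or 1) per node, or None if no valid split exists.

    signed_edges holds tuples (i, j, sign) with sign > 0 for a cooperative
    link and sign < 0 for a competitive link.
    """
    neighbors = [[] for _ in range(num_nodes)]
    for i, j, sign in signed_edges:
        neighbors[i].append((j, sign))
        neighbors[j].append((i, sign))

    camp = [None] * num_nodes
    for start in range(num_nodes):
        if camp[start] is not None:
            continue
        camp[start] = 0
        queue = deque([start])
        while queue:
            i = queue.popleft()
            for j, sign in neighbors[i]:
                # Cooperative link: same camp; competitive link: opposite camp.
                expected = camp[i] if sign > 0 else 1 - camp[i]
                if camp[j] is None:
                    camp[j] = expected
                    queue.append(j)
                elif camp[j] != expected:
                    return None  # conflicting labels: not structurally balanced
    return camp


if __name__ == "__main__":
    balanced = [(0, 1, 1), (1, 2, -1), (0, 2, -1)]
    unbalanced = [(0, 1, 1), (1, 2, 1), (0, 2, -1)]
    print(split_into_two_camps(3, balanced))
    print(split_into_two_camps(3, unbalanced))
```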

In the simulation, the value function parameters are initialized as

and

. The dynamic behaviors of both the leader and follower UAVs are modeled according to (4) and (3), with a sampling time of

. The uncertainties are defined as

and

, while the external disturbances are set as

and

.

For spatial initialization, the leader UAVs are placed at fixed coordinates

,

,

, and

, whereas the initial positions of the follower UAVs are randomly generated. The hyperparameter variables used in the neural network architecture are summarized in Table 1.

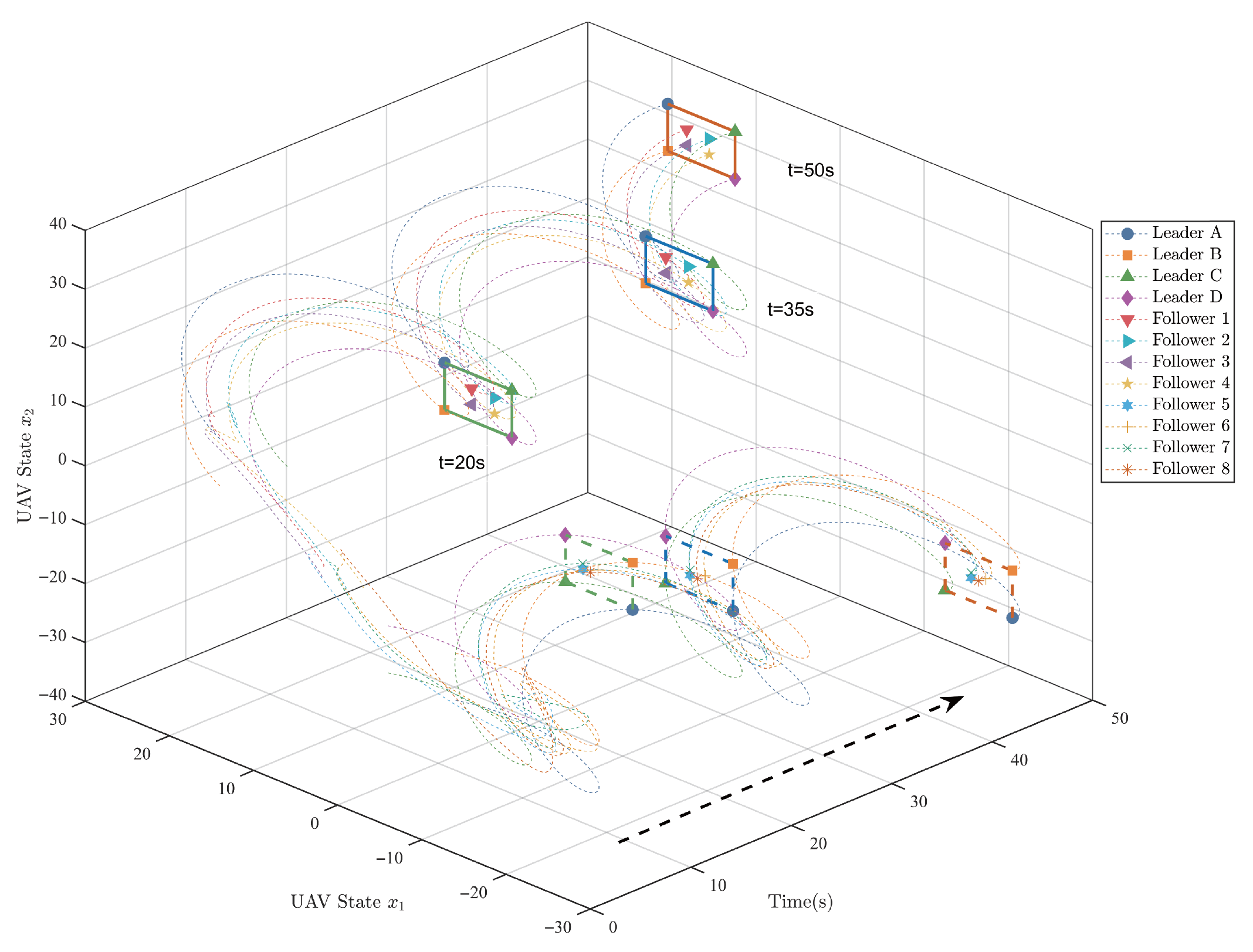

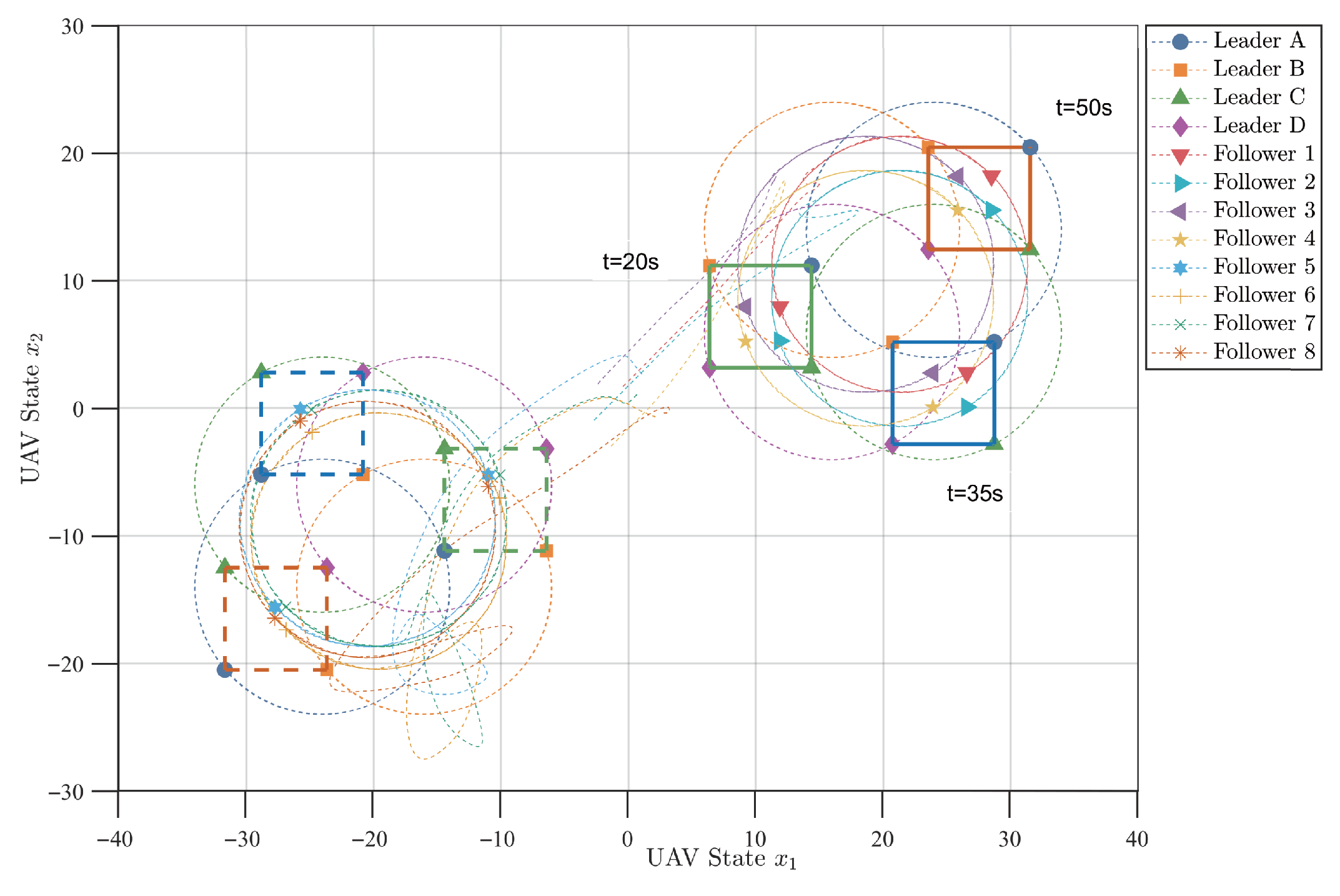

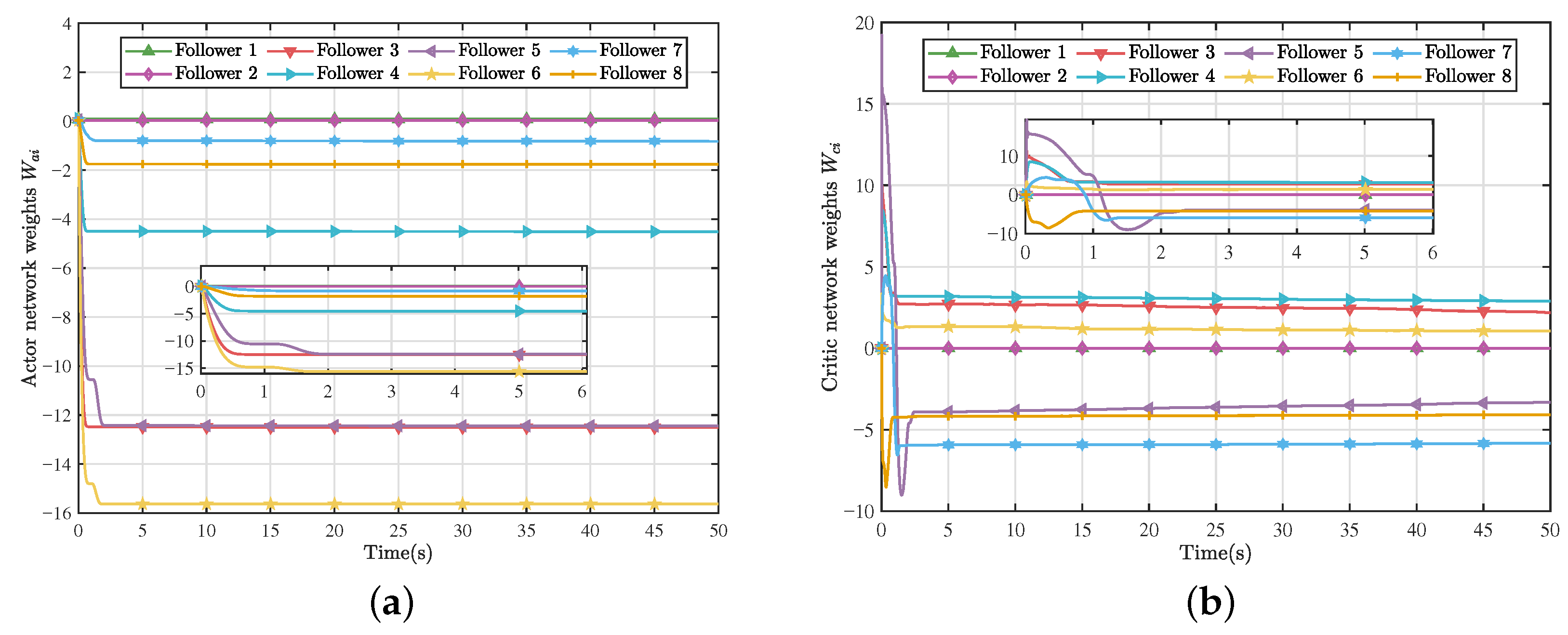

Figure 3 and

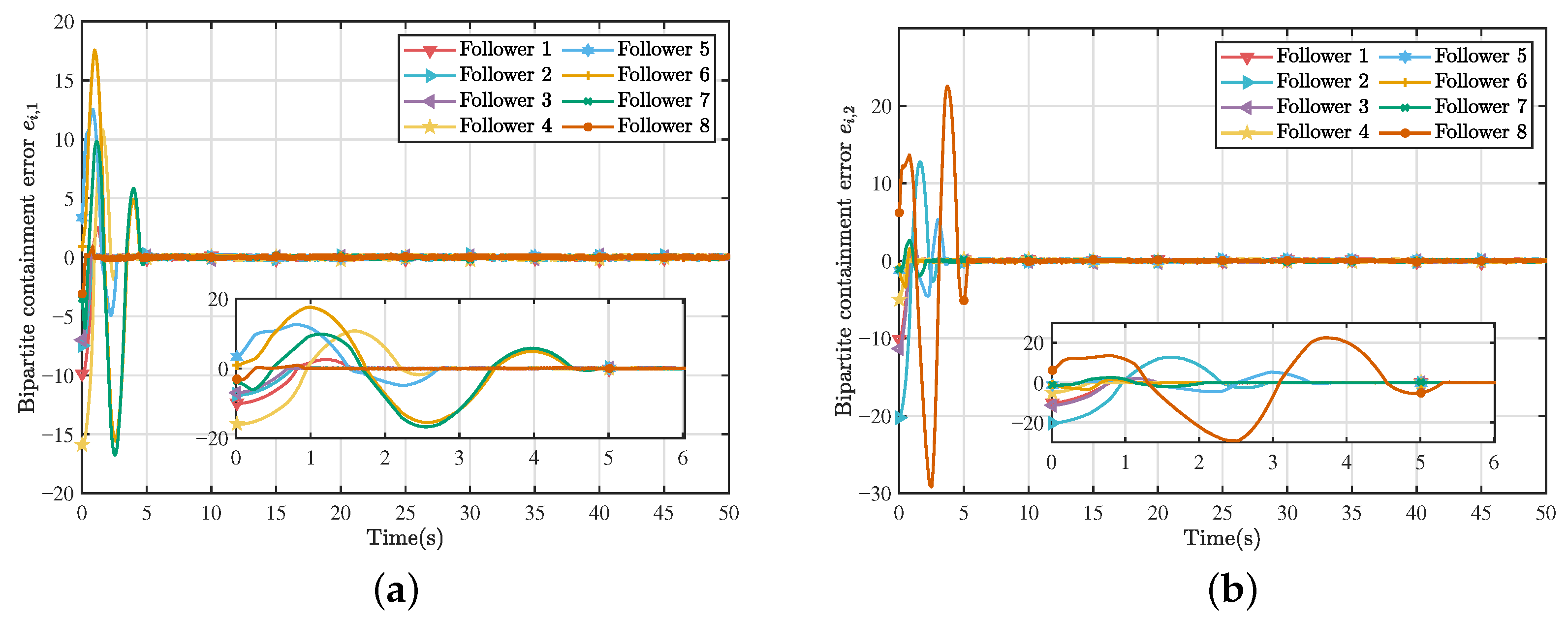

Figure 4 depict the two-dimensional and three-dimensional trajectories of the optimal bipartite containment tracking for the multi-UAV system. Under the proposed optimal control strategy, all follower UAVs successfully converge to the bipartite convex hull spanned by the leader UAVs and achieve stable containment tracking. The corresponding bipartite containment tracking errors are illustrated in

Figure 5, where the tracking errors of all follower UAVs asymptotically converge to zero, thereby validating the effectiveness of the proposed control law. Furthermore,

Figure 6 presents the evolution of the weight vectors of the critic and actor networks, demonstrating the convergence behavior of the neural-network-based approximation process. Equally, according to the enlarged transient states in

Figure 5 and

Figure 6, it can be found that the system is in the regulation process in the first 6 s. The DIRS algorithm learns and continuously generates the optimal control inputs through the system data, and finally, the system achieves convergence after 6 s.

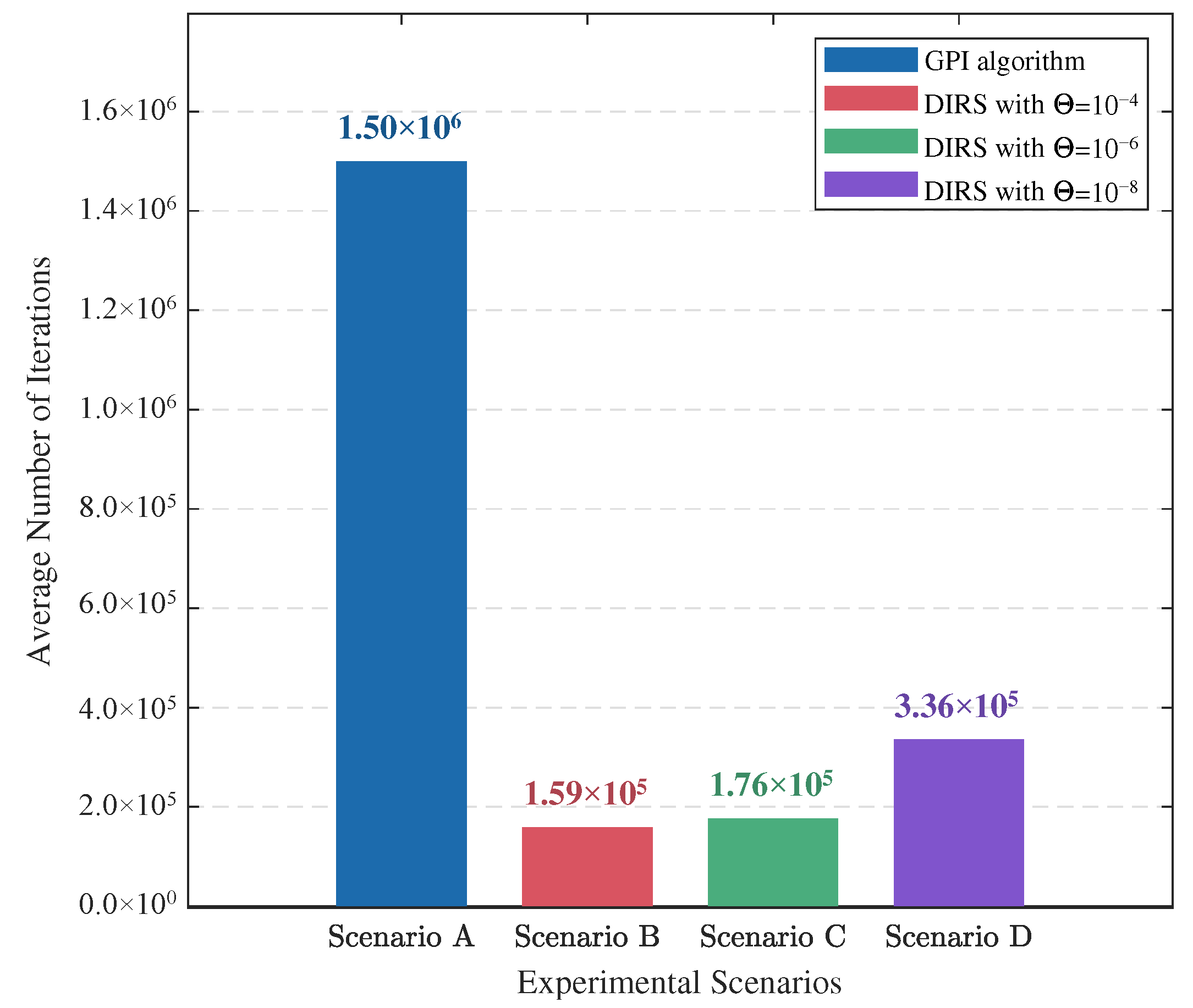

To further demonstrate the advantages of the designed DIRS in improving the efficiency of ADP computation, the same experimental conditions are used for comparison with the method in [34], and the average number of iterations for each UAV is calculated over the whole control process. In addition, comparative experiments are conducted to assess the impact of the dynamic iteration threshold

on the iteration efficiency. The comparison results are shown in Figure 7. Compared with the existing ADP algorithm, the DIRS effectively reduces the average number of iterations, and the DIRS with

reduces the total number of iterations by 88.27% compared to that with the GPI. Meanwhile, the simulation results under different

values further illustrate the importance of choosing a suitable iteration threshold to trade off control accuracy against computational efficiency in practical applications.

Remark 5. In this paper, numerical simulations are conducted for the optimal bipartite containment tracking control problem for multi-UAV systems, mainly to verify the effectiveness of the designed DIRS algorithm-based controller. As mentioned in the literature [40,41,42], by extending the Newton’s second-law-compliant second-order integrator model and the disturbance model investigated in this paper, it could be deployed and developed in Gazebo, Webots, and IsaacSim. This has also inspired us to conduct more realistic flight experiments using Gazebo and other tools in the future.

5. Conclusions

In this paper, a data-driven optimal bipartite containment tracking control scheme is developed for multi-UAV systems subject to compound uncertainties. The dynamics of the multi-UAV system and the associated uncertainties are first modeled, followed by formulation of the optimal bipartite containment tracking problem and derivation of the corresponding coupled HJB equations. To alleviate the computational burden, a DIRS is introduced to adaptively adjust the number of iterations, thereby balancing the control accuracy and computational efficiency. Rigorous proofs are provided to establish the stability, convergence, and optimality of the proposed control framework. Furthermore, a neural-network-based online approximation method is employed to realize a model-free solution to the optimal control problem. Finally, the simulation results are also presented and compared with the existing ADP methods to verify the effectiveness of the proposed DIRS in saving computational resources.

The proposed DIRS framework is applicable to multi-UAV systems with compound uncertainties (e.g., external disturbances and internal modeling errors) and communication topologies that contain both cooperative and competitive interactions. Nonetheless, the framework has several limitations. The current design assumes a structurally balanced communication graph, which may be violated under communication failures or switching topologies, and practical constraints such as actuator saturation, failures, and communication delays have not yet been considered. In addition, the current results rely on numerical simulations to verify the effectiveness of the control algorithm. Future work will therefore address these issues and extend the results to flight experiments with a UAV swarm to enhance robustness and applicability.