1. Introduction

Precision agriculture has reached a turning point as autonomous sensors, artificial intelligence, and rugged edge computers converge in the same field [1]. Unmanned Aerial Vehicles (UAVs) outfitted with high-resolution cameras are now in widespread use, allowing farmers to check crop health from several hundred feet up without ever stepping onto the land [2]. The drones deliver a stream of spatially detailed, time-stamped images that suit fast-changing, patchy crops like cotton better than any ground rig could manage [3]. Turning that airborne footage into usable agronomic advice still depends on powerful yet scalable computer vision routines that separate signal from noise in a matter of minutes [4]. Cotton still ranks among the world’s top cash crops, a status that hinges on farmers timing their work to the shifting rhythms of boll opening, insect pressure, and plant growth [5]. Routine field surveys, by contrast, demand hand-held scouting and notebooks, chores that are tiring and leave room for personal bias [6]. On sprawling commercial tracts, or in areas that field crews never reach, those paper-and-pen routines simply break down [7]. Researchers have therefore begun turning to deep-learning vision systems to automate discrete chores like counting bolls, outlining pest scars, or tagging the current growth stage [8,9,10]. The catch is that most of these systems run as stand-alone tools, each tied to its own narrow goal and often needing a fresh network whenever the target shifts [11]. That piecemeal design multiplies the code that must be maintained and, more critically [12], overlooks the ways the tasks influence one another, since insect feeding can slow boll maturation and the plant’s age can alter its insect defenses [13].

This paper presents CMTL, a transformer-based framework designed specifically for monitoring cotton fields from the air. The architecture targets three intertwined agronomic objectives: counting cotton bolls, mapping pest-infested patches, and gauging the crop’s growth stage. These tasks inform one another; knowing how many bolls are present sharpens estimates of maturity, while the location of late-season lesions hints at both pest activity and crop phenology. By solving all three within a single pass, CMTL trims both inference time and memory overhead while gaining precision from the shared contextual cues. A Cross-Level Multi-Granular Encoder (CLMGE) decomposes the scene at differing scales, capturing fine local detail on leaf spots, mid-level structure such as row spacing, and wide views that capture canopy spread. A Multitask Self-Distilled Attention Fusion (MSDAF) block then weighs these streams on the fly, blending task-specific signals into a cohesive prediction. At the end of the pipeline, three distinct decoders run side by side. One produces an anchor-free heatmap that pinpoints every boll, a second generates damage masks via a diffusion-steered module, and a third sorts the crop into growth stages. That final classification uses a new Stage Consistency Loss, a penalty function tuned to mirror the predictable progression of a plant’s lifetime.

To evaluate CMTL robustly, we constructed a composite collection of UAV-acquired cotton imagery augmented by two widely cited public datasets. The collection spans distinct growing conditions, multiple seasonal snapshots, and varied field geometries, deliberately broadening the diversity of the study. In our tests, CMTL outperformed twenty leading baseline models in every prescribed task while running in real time on edge hardware such as the NVIDIA Jetson Xavier NX. An ablation analysis isolates the contribution of each architectural module and shows the performance gain from simultaneous task training. This paper makes three contributions. First, it introduces the first multitask transformer specifically engineered for integrated localization of bolls, quantification of pest damage, and classification of cotton growth stages. Second, the architecture embeds novel mechanisms, including CLMGE for spatio-temporal feature anchoring, MSDAF for fused inter-task attention, and Stage Consistency Loss (SCL) for biologically congruent stage scoring. Third, diverse real-world UAV trials validate the framework’s state-of-the-art accuracy, practical edge deployment, and resilience across contrasting agronomic contexts. CMTL thus advances UAV-enabled crop surveillance, delivering a precise, efficient, and agronomy-informed toolkit for precision cotton management.

To verify CMTL’s efficiency, we have performed comprehensive tests with a composite UAV dataset that combines public and private aerial collections distributed over various agronomic conditions. The model has achieved good results in all the tasks, a mAP of 0.913 for cotton boll detection, an IoU of 0.832 for pest damage segmentation, and an accuracy of 0.936 for the growth stage classification. Besides that, CMTL has shown an ability for real-time prediction, achieving 27.6 FPS on an NVIDIA Jetson Xavier NX. The results demonstrate CMTL’s capacity to act as a reliable and scalable solution for drone-based cotton monitoring in real-world agricultural environments. Consolidating several essential field-monitoring tasks into one cohesive platform creates the backbone for future smart-farming systems that can, in principle, offer farmers real-time, panoramic insights into crop health across entire growing regions.

2. Related Works

High-resolution aerial cameras and recent breakthroughs in deep neural networks have sparked a rapid expansion of computer vision tools for precision farming [14]. Researchers are now regularly sending drones over cotton fields to gauge everything from fruit maturity to pest damage, yet nearly every study focuses on one narrow question, such as boll-counting or growth-stage parsing [15]. Although those single-purpose models yield respectable results within their tight scopes, they tend to scatter across labs and lack any built-in way to share insights [16]. That piecemeal approach runs into trouble on the ground because farmers face a web of overlapping crop signals that unfold all at once [17].

Boll detection first emerged from classical computer vision, where agronomists relied mostly on color thresholding and shape filters applied to ordinary RGB snapshots [18]. Those rule-based methods broke down quickly in real fields, because shifting sunlight and stray foliage defeated the rigid heuristics [19]. The arrival of convolutional neural network detectors, such as Faster R-CNN [20], YOLOv3 [21], and the single-shot family, marked a clear jump in reliability, letting researchers spot bolls even under patchy canopies and heavy dust [22]. Newer anchor-free designs, including CenterNet [23] and YOLOv8 [13], push that advantage further by being less sensitive to how big or crowded the targets are. Still, almost all these systems look at bolls in isolation, ignoring the worn leaves, stripe-damaged fruit, or advancing maturity that can either hide a boll or change its coloration, concerns voiced in several related studies [24,25].

In parallel work, entomologists and pathologists have tackled pest injury with both hand-tuned features and end-to-end networks, using either pipeline to separate damaged tissue from clean backgrounds [26]. U-Net and its many offshoots remain the workhorse for clinical lesion segmentation, routinely performing well when the lighting and focus are kept constant [27]. That advantage fades, however, once pests scatter symptoms in unpredictable shapes, colors, and textures across a single field [28]. Some researchers have tried to broaden the models’ reach by switching to DeepLabV3+ or adding attention layers [29]. Those changes help, yet they rarely account for the way lesions spread through plant tissue or interact with other in-field monitoring systems [30].

Classifying growth stages in crops is one of the cornerstones of precision agriculture, yet the literature on the subject remains surprisingly sparse [31]. The bulk of published work rests on image-level CNN systems like ResNet or EfficientNet that have been tuned to a few hand-labeled reference sets [32]. Even so, those static classification schemes overlook the dynamic reality of plants, which age each day and often carry visible scars from pests, droughts, or nutrient shortages [10]. A handful of studies have tried to bridge the gap by adding extra metadata or by sequencing the images in time, yet none has built a model that combines UAV footage with phenological calendars in a biologically meaningful way [33]. Multitask learning could change that picture; by sharing neural layers across different output heads, a single network can handle growth stages, chlorophyll levels, and pest density maps at once, reducing both the data requirements and the overfitting that plague isolated tasks [34]. This approach has already proved effective in fields like autonomous driving, where one backbone predicts depth, lanes, and semantic segments together, but it has barely touched agricultural vision, where most systems still default to pairwise tasks or vanilla architectures that ignore the particular geometry of crop foliage [35].

Recent advances in transformer-based architectures have made it possible to track relationships that span entire images, capturing the global, long-range context that is critically important for large-field perception [36]. Vision transformers, Swin variants, and their assorted hybrids tend to top computer-vision leaderboards, but their computational cost keeps them off small UAVs [37]. Even so, few researchers consider these models when designing multitask systems for agriculture, and as far as we can tell, no paper has delivered a single unified transformer that counts bolls, maps pest damage, and logs crop growth stages all at once. Our framework, dubbed CMTL, closes that gap with three components: a Cross-Level Multi-Granular Encoder that stacks spatio-temporal views at different levels, a Multitask Self-Distilled Attention Fusion block that couples the task heads together, and a final classifier that borrows rules from biology rather than relying on plain softmax alone. The upshot is a lightweight, biologically informed model that can keep pace with live cotton-field monitoring while handling damage segmentation, boll counting, and growth stage classification in a single pass.

3. Materials and Methods

This section outlines the construction of CMTL, short for Cotton Multitask Learning, a single transformer-based framework for cotton field surveillance from UAV images. The model identifies cotton bolls, segments pest damage, and classifies the growth stage of the crop in one pass. CMTL’s main idea is to solve these three related tasks simultaneously rather than separately, so that each task is enriched with information from the others. For instance, detected pest damage can inform the estimate of the crop’s growth stage, and the number of bolls can help estimate both the maturity and the health of the plant. At the core of the model is the Cross-Level Multi-Granular Encoder (CLMGE), which mixes convolutional and transformer components. This hybrid encoder extracts features at several scales: fine texture, medium-level configurations such as row structures, and broad spatial information that represents the overall field [38]. The encoder also uses positional signals from the UAV trajectory data, enabling the model to capture time-dependent changes in crop growth. The encoder output is then fed to an attention-based fusion module, the Multitask Self-Distilled Attention Fusion (MSDAF) unit. MSDAF decides dynamically which features are most relevant for each task, based on the kind of information each prediction requires. It also lets the task branches exchange information via a soft distillation process, which helps stabilize training and improve generalization to new, varied field conditions.

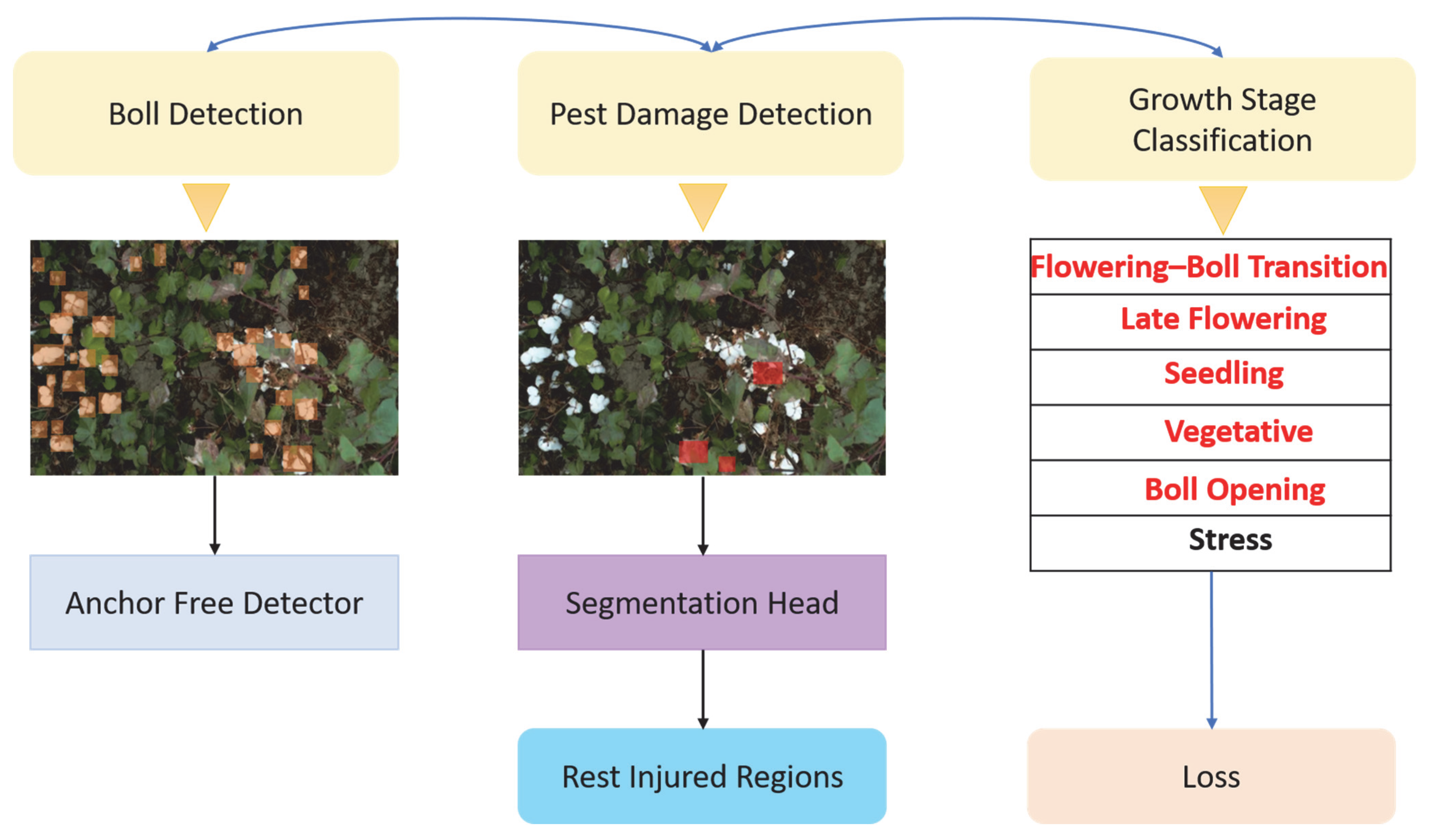

The model first creates a shared representation and then generates three different outputs using independent decoders. The first output is a heatmap that locates individual cotton bolls, making it possible to count them exactly. The second is a segmentation map that outlines the field’s pest-damaged areas and can register damage patterns that are irregular or diffuse. The third is a classification label that indicates the stage in the crop’s growth cycle, drawn from a list of biologically defined stages. To ensure that the predicted growth stages follow feasible trends over time, we implement a Stage Consistency Loss. This supplementary loss term penalizes predictions that place the plant at a stage out of order with the expected developmental sequence, aligning the output more closely with the actual biological process. Through this biologically inspired supervision, task-specific decoders, and a shared feature backbone, CMTL can produce reliable and efficient multitask predictions directly from aerial imagery.

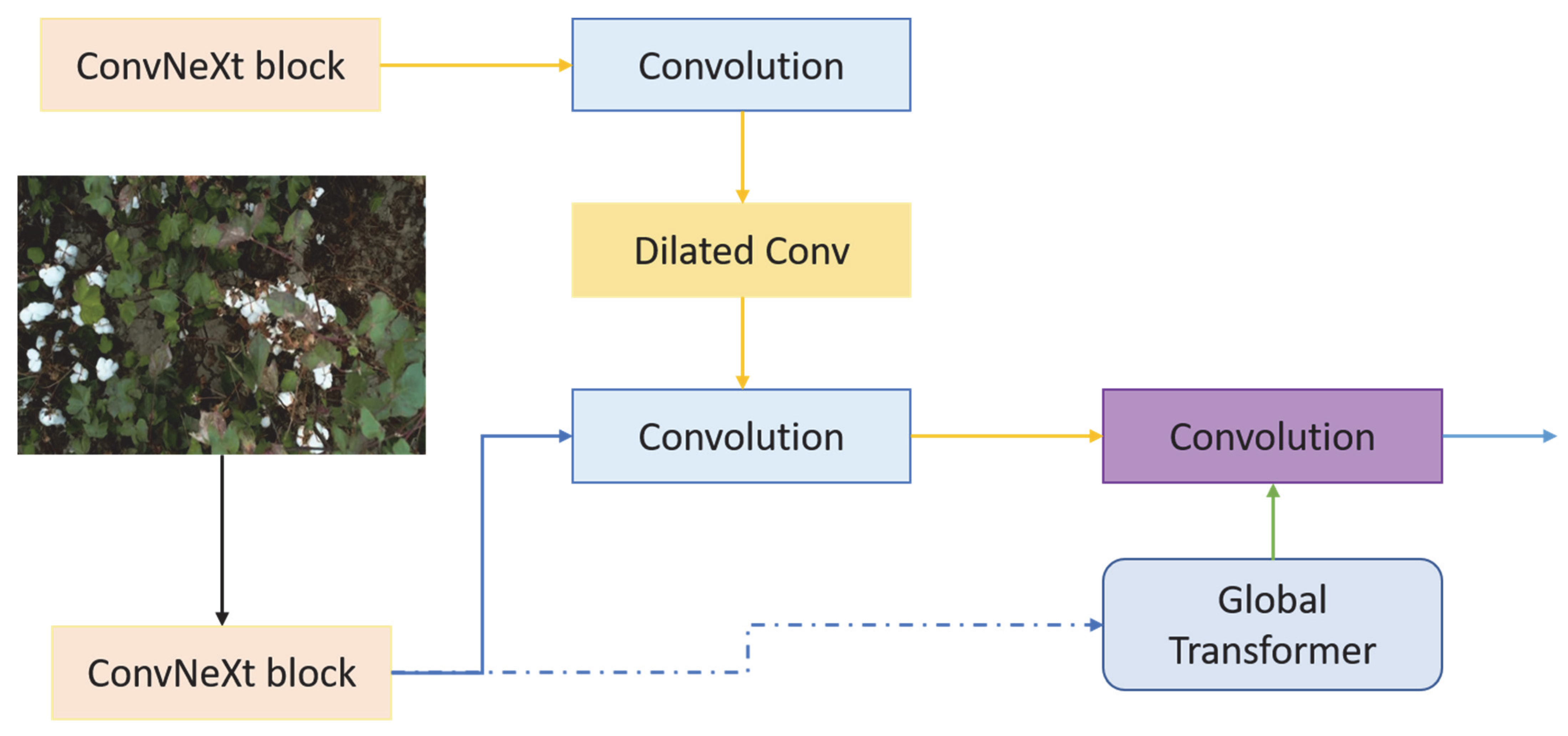

Figure 1 illustrates the end-to-end pipeline of the CMTL model, highlighting the encoder–decoder structure. The encoder integrates spatial and temporal features via a Cross-Level Multi-Granular Attention Block composed of hybrid convolutional-attention modules, a window-based transformer, and positional encoding. The decoder employs a Task Attention Gate and a Self-Distilled Projection Layer to refine task-specific representations, culminating in a growth stage classifier. The output visualizations demonstrate precise spatial labeling of cotton growth stages, including the flowering–boll transition, late flowering, seedling, vegetative, boll opening, and stress stages.

3.1. CLMGE

A CLMGE synthesizes local texture, meso-pattern, and broad temporal dynamics into a single feature hierarchy. Such depth helps analysts discern both fiber defects and field-wide crop trends without toggling between models. UAV-acquired cotton imagery routinely thwarts segmentation efforts: rows compress at altitude, individual bolls shrink, and lighting shifts with the planting calendar. CLMGE counters these pitfalls by layering convolutional streams atop transformer heads and embedding the full temporal record, so CMTL sees form and meaning in every pixel window.

The input UAV image is passed through three sequential encoding stages, producing multi-resolution features F1, F2, and F3. The first stage focuses on fine surface texture and runs a modified ConvNeXt block; depthwise separable layers, pointwise projections, and a post-block normalization combine here so that spatial detail is never completely sacrificed. The second stage trades local motifs for larger field characteristics by using dilated convolutions coupled with attention mechanisms. Finally, a windowless transformer, driven by the UAV’s flight path metadata, captures long-range, global coherence across the scene.

The first-stage block combines a pointwise convolution, a depthwise convolution with a large kernel, a GELU activation σ, and layer normalization (LN). This stage is optimized for features such as pest lesions, boll boundaries, and leaf edge continuity (Figure 2).

A Field Attention Block (FAB) is introduced in the second stage to capture the patterned repetition and local hierarchy characteristic of row crops. Residual convolutions with dilated kernels follow, enlarging the receptive field while avoiding any reduction in grid resolution.

This is followed by a custom attention mechanism that biases attention maps according to spatial field priors. The FAB computes attention from query, key, and value matrices Q, K, and V, which are projected from the dilated-convolution features, and adds a learned spatial bias that favors attention along crop rows (vertical axis). The resulting contextual representation is the second-stage feature F2.
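One standard form consistent with this description is scaled dot-product attention with an additive bias. The symbols $B$ (the learned row-aligned bias) and $d$ (the key dimension) are assumptions introduced here for illustration; they are not notation recovered from the original equations.

```latex
\mathrm{Attn}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d}} + B\right) V
```

Under this reading, entries of $B$ that are larger for query-key pairs lying along the same crop row raise the corresponding attention weights before normalization.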

The third stage conducts broad global reasoning and weaves in temporal context by relying on a positional encoding specifically tied to each UAV’s flight path. A learnable temporal encoding tensor is introduced; every aerial image carries a unique index that marks the precise collection date or its rank in the unfolding growth cycle of the crop. Those indexed features then flow through a Swin-like transformer that deliberately forgoes the usual windowing constraints.

In this final stage, a single sweep of global attention covers the entire image, letting the network link distant patches and pick up broad trends such as sudden growth bursts or pest outbreaks. The temporal encoding then sharpens the encoder’s ability to tell apart nearly identical textures that appear at different crop ages, reducing the ambiguity that hampers time-sensitive classification. The output delivered by CLMGE comes in three resolutions, F1, F2, and F3, each of which feeds directly into the multitask attention fusion block. F1 keeps the fine detail needed for spotting small trouble spots, F2 carries a midrange agronomic view that is useful in segmentation, and F3 provides the long-range temporal context required when assigning class labels. Unlike most off-the-shelf encoders, CLMGE uses a biologically inspired, time-aware design that mirrors how agronomists scan a field. The result is a single, robust feature pipeline that stays reliable under shifting weather, varying UAV heights, and different cotton management practices. This encoder sits at the heart of CMTL, supporting its multitask accuracy while keeping inference fast enough for live in-field use.

3.2. Multitask Self-Distilled Attention Fusion (MSDAF)

Once features have been extracted by the CLMGE, a reliable dispatch mechanism is needed to distribute the learned features across three heterogeneous applications: counting cotton bolls, segmenting pest injury, and identifying growth stages. Each application makes a different demand: boll counting needs sharp localization, segmentation needs pixel-level boundary fidelity, and growth-stage assignment relies on coarse, wide-angle views of phenological context.

Balancing conflicting performance goals while allowing each objective to enrich the others remains a persistent challenge in multitask learning. A typical problem is negative transfer, where improving one task degrades the others because of conflicting gradients or misaligned feature preferences. To address this issue, we designed the MSDAF module, which focuses locally on task-relevant features while maintaining global consistency among the tasks. MSDAF combines two central elements: (1) a task-specific attention gate, which learns to assign different importance weights to the shared features for each task, and (2) a soft distillation stream, in which intermediate predictions from each task head are fed back through the attention layers to keep feature encoding consistent across tasks. This design lets the system learn task-relevant representations with less interference between tasks. In our ablation, removing MSDAF reduces performance, especially in the pest segmentation task, where the IoU drops from 0.694 to 0.612. The attention gates act as dynamic filters that continuously adjust the strength and type of the feature input, suppressing features that are irrelevant or harmful to a given task and thus avoiding negative transfer. At the same time, the soft distillation stream supports a continuous flow of shared knowledge, which is most beneficial for underrepresented tasks such as pest damage segmentation or growth stage classification in low-data regimes.

To measure stability with limited data, we conducted a data-reduction study in which CMTL was trained on 25%, 50%, 75%, and 100% of the samples. With only 25% of the samples, the MSDAF-equipped model retained 78% of its full-data performance on average across tasks, while the variant without MSDAF retained only 62%. These results indicate that MSDAF both stabilizes multitask training and improves data efficiency, enabling deployment in the field where annotated samples are few or the tasks are unbalanced. By combining attention-based feature gating and task-aware distillation, MSDAF improves learning stability, reduces negative transfer, and raises performance when data are limited.

Cross-task self-distillation then regularizes that fusion so no single task draws resources away from the others. Pest damage, for example, may provide useful cues for estimating boll maturity even as growth stage modifies a plant’s susceptibility to different pests. In what follows, F1, F2, and F3 stand for the multi-scale streams output by CLMGE. After the streams enter the MSDAF pipeline, two processing blocks act on them: first, a Task Attention Gate (TAG) balances the contribution from each map; next, a Self-Distilled Projection Layer (SDPL) sharpens those weighted signals while embedding the distillation constraint.

The TAG enables each task decoder to selectively extract and reweight feature contributions from different spatial hierarchies. For each task t, we learn a set of query vectors and derive key-value maps from each encoder level. The task-specific attention output is computed as a combination over levels, weighted by attention weights that are learned via a soft gating function. In that gating function, GAP(⋅) denotes global average pooling and w_t is a learnable gating vector for task t. This mechanism assigns dynamic importance to different encoder layers based on task demand, adapting to inter-task complexity.
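The soft gating described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the gate for each level is a softmax over the dot product between the task's gating vector and the globally pooled features of that level, and that all levels share the same channel count. The function names and the toy feature maps are hypothetical.

```python
import math

def global_average_pool(feature_map):
    """Average a C x H x W nested-list feature map over H and W, giving C values."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

def softmax(scores):
    """Numerically stable softmax over a list of scalars."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def level_gates(levels, w_t):
    """Return one weight per encoder level for a task with gating vector w_t (assumed form)."""
    scores = []
    for fmap in levels:
        pooled = global_average_pool(fmap)
        scores.append(sum(w * p for w, p in zip(w_t, pooled)))
    return softmax(scores)

# Toy example: three levels with two channels each and different resolutions.
F1 = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
F2 = [[[0.0]], [[1.0]]]
F3 = [[[0.5]], [[0.5]]]
gates = level_gates([F1, F2, F3], w_t=[2.0, 0.0])
```

In this toy case the gating vector responds only to the first channel, so the level with the strongest first-channel activation (F1) receives the largest weight, and the weights sum to one.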

Feature consistency is often jeopardized when multiple tasks pull in different directions. To counter that, we introduce a light self-distillation step that nudges the attention maps toward a common set of intermediate vectors. Once the task-specific features are extracted, a compact MLP projects them into a single latent manifold. To enforce consistency, we minimize the discrepancy between representations across tasks using a cosine embedding loss.

This encourages representations to share latent structure where beneficial, such as when pest damage patterns correlate with growth stage delays, while still allowing for task-specific refinements in the decoders. Additionally, the Self-Distilled Projection Layer (SDPL) includes an auxiliary cross-prediction loss. During training, each task decoder receives not only its primary input but also a perturbed version of another task’s embedding, enforcing robustness and promoting learned alignment across semantic spaces. This auxiliary supervision is softly weighted to avoid overwhelming the primary task signal. The final multitask representation for each task is the refined embedding, to which LayerNorm is applied to stabilize training. These refined representations are then dispatched to the respective decoders: boll detection, pest segmentation, and stage classification.
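A cosine-based consistency term of the kind described above can be sketched as follows. The exact formulation is not recoverable from the text, so this sketch assumes the loss is the average of (1 − cosine similarity) over all pairs of projected task embeddings; the function names and the toy vectors are hypothetical.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)

def consistency_loss(embeddings):
    """Mean (1 - cosine similarity) over all pairs of task embeddings (assumed form)."""
    pairs = list(combinations(embeddings, 2))
    return sum(1.0 - cosine_similarity(a, b) for a, b in pairs) / len(pairs)

# Toy projected embeddings for detection, segmentation, and classification.
z_det = [1.0, 0.0]
z_seg = [1.0, 0.0]
z_cls = [0.0, 1.0]
aligned = consistency_loss([z_det, z_seg, [2.0, 0.0]])  # all point the same way
mixed = consistency_loss([z_det, z_seg, z_cls])         # one embedding is orthogonal
```

Because cosine similarity ignores vector length, the term pulls the embeddings toward a common direction without forcing them to be identical, which leaves room for task-specific refinement in the decoders.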

The MSDAF framework departs from traditional multitask fusion methods by introducing two interlocking innovations. One is a soft-attention layer selection scheme that tailors the model depth of field to the idiosyncratic needs of each task in real time. The other is a self-distilled cross-task regularization term that quietly binds divergent loss surfaces into a softer whole, thereby forcing reluctant heads to share what they see. Because of these moves, the system suffers less from negative transfer, learns faster on thin data, and generalizes better when field conditions shift unexpectedly. Observations from agricultural monitoring suggest that the design excels wherever spatial signatures fluctuate wildly and disparate agronomic signals refuse to look alike.

3.3. Task-Specific Heads

After integrating the encoder outputs with the MSDAF module, CMTL splits into three distinct task heads. Each head is tailored to the structure, spatial dimensions, and supervision signal of its specific task: detection of cotton bolls, segmentation of pest damage, and classification of growth stages. These tasks require different predictive mechanisms, which are implemented through distinct architectural designs and loss functions within a unified multitask learning framework (Figure 3).

The task of identifying cotton bolls is framed as an anchor-free object detection problem. Instead of depending on fixed bounding-box priors, the approach pinpoints the centers of individual bolls through a key-point heatmap. The input to the detection head is the refined feature map from MSDAF. A convolutional detection head outputs a dense heatmap in which each pixel value represents the confidence of a boll center at location (x, y). The target heatmap is constructed using a Gaussian kernel centered at each ground truth boll location, where σ controls the spread. We use a variant of focal loss to train the detector, where α and β serve as adjustable scalars that govern the trade-off between penalizing difficult cases and down-weighting trivial ones. After the score map is computed, a peak-finding routine coupled with classic non-maximum suppression reduces it to discrete center points, and a simple tally of these locations provides an estimate of boll population density over the surveyed area.
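The detection pipeline described above (Gaussian target, focal-style loss, peak counting) can be sketched in plain Python. Several details are assumptions rather than facts from the paper: the focal variant is taken to be the penalty-reduced form used by CenterNet-style detectors, the defaults α = 2 and β = 4 are borrowed from that line of work, and non-maximum suppression is approximated by a strict 3 × 3 local-maximum test. All function names are hypothetical.

```python
import math

def gaussian_heatmap(height, width, centers, sigma=1.0):
    """Target map: per-pixel max over Gaussians placed at each (x, y) center."""
    heat = [[0.0] * width for _ in range(height)]
    for cx, cy in centers:
        for y in range(height):
            for x in range(width):
                g = math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
                heat[y][x] = max(heat[y][x], g)
    return heat

def focal_loss(pred, target, alpha=2.0, beta=4.0, eps=1e-6):
    """Penalty-reduced focal loss (assumed variant), normalized by the number of centers."""
    total, num_pos = 0.0, 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p = min(max(p, eps), 1.0 - eps)
            if t == 1.0:  # exact boll center
                total -= (1.0 - p) ** alpha * math.log(p)
                num_pos += 1
            else:         # background, down-weighted near centers by (1 - t)^beta
                total -= (1.0 - t) ** beta * p ** alpha * math.log(1.0 - p)
    return total / max(num_pos, 1)

def find_peaks(heat, threshold=0.5):
    """Return (x, y) pixels above threshold that strictly exceed all 3x3 neighbors."""
    height, width = len(heat), len(heat[0])
    peaks = []
    for y in range(height):
        for x in range(width):
            v = heat[y][x]
            if v < threshold:
                continue
            neighbors = [heat[ny][nx]
                         for ny in range(max(0, y - 1), min(height, y + 2))
                         for nx in range(max(0, x - 1), min(width, x + 2))
                         if (ny, nx) != (y, x)]
            if all(v > n for n in neighbors):
                peaks.append((x, y))
    return peaks

target = gaussian_heatmap(8, 8, centers=[(2, 2), (5, 6)], sigma=1.0)
peaks = find_peaks(target)  # boll count is len(peaks)

sharp = [[1.0 if t == 1.0 else 0.0 for t in row] for row in target]
flat = [[0.5 for _ in row] for row in target]
loss_sharp = focal_loss(sharp, target)
loss_flat = focal_loss(flat, target)
```

A prediction that is confident only at the true centers incurs almost no loss, while a uniformly uncertain map is penalized at both the centers and the background.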

Effective segmentation of pest injury hinges on dense, per-pixel classification; every pixel must receive a label. The task is approached through a custom decoder modeled loosely on diffusion phenomena observed in biology, such as the way infections sometimes spread through living tissue. In practice, the decoder is trained to mark not only the pixels that show unequivocal damage but also those nearby that are at heightened risk of being affected by the same biological agent. Starting from the refined segmentation features, we apply a diffusion-conditioned module that refines segmentation masks using deformable attention and residual upsampling. The decoder outputs a binary map in which each pixel denotes pest presence, and the final segmentation output is obtained via sigmoid activation. The ground truth binary map is used to compute a hybrid segmentation loss: the Dice loss captures shape and region similarity, and the binary cross-entropy term encourages pixel-wise accuracy. A diffusion-sensitive regularization term is also added to the loss function, acting as a smoothing agent across visibly distressed areas of the input. By gently penalizing abrupt jumps in feature values, the term nudges neighboring patches that have both been marked as damaged toward greater similarity.
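The Dice and binary cross-entropy components of the hybrid loss can be sketched as below, operating on flattened masks. The mixing weights `lambda_dice` and `lambda_bce` are hypothetical names with placeholder values of 1.0, since the paper's weighting is not given in the text, and the diffusion-sensitive regularizer is deliberately left out because its form is not specified.

```python
import math

def dice_loss(pred, target, eps=1e-6):
    """1 - Dice coefficient between predicted probabilities and a binary mask."""
    inter = sum(p * t for p, t in zip(pred, target))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(target) + eps)

def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy, with probabilities clipped away from 0 and 1."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(pred)

def hybrid_seg_loss(pred, target, lambda_dice=1.0, lambda_bce=1.0):
    """Weighted sum of Dice and BCE terms (weights are illustrative placeholders)."""
    return lambda_dice * dice_loss(pred, target) + lambda_bce * bce_loss(pred, target)

mask = [1.0, 1.0, 0.0, 0.0]                         # flattened ground-truth mask
good = hybrid_seg_loss([0.9, 0.8, 0.1, 0.2], mask)  # mostly correct prediction
bad = hybrid_seg_loss([0.2, 0.1, 0.9, 0.8], mask)   # mostly inverted prediction
```

The Dice term is driven by region overlap and is therefore less sensitive to the imbalance between damaged and healthy pixels, while the cross-entropy term supplies a per-pixel gradient.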

Classifying plants by growth stage operates at the scale of entire fields rather than isolated patches. That broader perspective requires distilling wide-ranging cues (canopy density, row geometry, and bloom placement) into a single, coherent label. To streamline that semantic fusion, the input feature map passes through a transformer-inspired token summarization layer that pools tokens across the whole scene into a summary representation T. A classification layer maps T to a probability distribution over the C predefined growth stages. We use standard cross-entropy loss, computed against the one-hot encoded ground truth label.
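In standard notation, the classification layer and its loss can be written as below. The symbols $T$ and $C$ come from the text; the weight matrix $W$, bias $b$, probability vector $p$, and one-hot label $y$ are assumed names introduced for illustration, and a single linear layer is assumed.

```latex
p = \mathrm{softmax}(W\,T + b), \qquad
\mathcal{L}_{\mathrm{cls}} = -\sum_{c=1}^{C} y_c \log p_c
```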

Plant growth follows an orderly biological progression, and that order should not be ignored when training predictive models. In light of that constraint, we implement a Stage Consistency Loss (SCL). The new term penalizes any divergence from the canonical sequence of growth stages, imposing chronological discipline on the model output. When the prediction skips ahead or back, the penalty compounds, thus anchoring the inference to agronomically grounded expectations. The loss is evaluated on the sequence of stage predictions made for the same field over time, using a function s(⋅) that maps a predicted label to a scalar stage order, and it penalizes biologically implausible regressions in growth.
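One way to read the stage-order penalty is as a hinge on backward steps between consecutive predictions for the same field. The sketch below implements only that reading and is an assumption, not the paper's formula: it operates on hard labels for clarity (a trainable version would need a differentiable surrogate such as the expected stage order under the predicted distribution), and it does not penalize forward skips. The `STAGE_ORDER` mapping uses the four flight stages named in the dataset description.

```python
# Scalar stage order s(.) for the four surveyed stages (assumed mapping).
STAGE_ORDER = {"seedling": 0, "vegetative": 1, "flowering": 2, "boll opening": 3}

def stage_consistency_loss(predicted_stages, stage_order=STAGE_ORDER):
    """Sum of backward steps between consecutive predictions of one field."""
    ranks = [stage_order[s] for s in predicted_stages]
    return float(sum(max(0, prev - cur) for prev, cur in zip(ranks, ranks[1:])))

monotone = stage_consistency_loss(
    ["seedling", "vegetative", "flowering", "boll opening"])
regress = stage_consistency_loss(
    ["seedling", "flowering", "vegetative", "boll opening"])
```

A sequence that only moves forward incurs no penalty, whereas a prediction that falls back from flowering to vegetative is charged in proportion to the size of the regression.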

The total loss for training CMTL combines all three tasks with learnable or manually tuned weights, together with an optional distillation term from MSDAF.
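A weighted sum consistent with this description is shown below. The subscripts and the symbol $\lambda$ are assumed names; the text does not say whether the Stage Consistency Loss enters through the classification term or as a separate weighted term, so it is not written out here.

```latex
\mathcal{L}_{\mathrm{total}} =
  \lambda_{\mathrm{det}}\,\mathcal{L}_{\mathrm{det}}
+ \lambda_{\mathrm{seg}}\,\mathcal{L}_{\mathrm{seg}}
+ \lambda_{\mathrm{cls}}\,\mathcal{L}_{\mathrm{cls}}
+ \lambda_{\mathrm{dist}}\,\mathcal{L}_{\mathrm{dist}}
```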

CMTL fuses several dedicated imaging brains into one lightweight payload, letting a drone tally yield patches, sketch out hotspot maps for pests, and clock the field-development pulse all in a single pass over the crop. The setup scales easily and hands growers an unusually broad snapshot without the delay of piecemeal flights.

3.4. Dataset

To assess CMTL’s reliability across contrasting environments, sensor configurations, and cultivars, we ran training and validation on three distinct data collections. One was privately assembled by our team; the other two are freely shared UAV repositories. Our own set came from repeated drone surveys on five farms that span three regionally distinct growing belts. A DJI Phantom 4 Pro flew predetermined transects at four phenological milestones: the seedling, vegetative, flowering, and boll stages. Those sorties generated more than 10,000 frames, of which the eventual training corpus comprised 1200 carefully vetted RGB images. Every retained frame was then exhaustively labeled: 42,000 individual bolls received rectangular tags, 600 insect-scarred specimens were traced with pixel-accurate masks, and experts classified each scene into one of five growth intervals. Agronomists cross-checked the annotations against field notebooks to confirm their accuracy.

The second dataset, a publicly released collection from Texas A&M AgriLife Research and indexed under DOI 10.18738/T8/5M9NCI, contains more than 3000 UAV photographs of cotton bolls. Each image was taken in the field under variable light levels and differing plant health profiles. Although the original purpose of the data was simple object counting, we re-annotated a random subsample of 500 photos, tagging them with damage masks and growth stage labels to support a multitask learning effort.

The third acquisition comes from the Dryad archive, cataloged at DOI 10.5061/dryad.5qfttdzhb. In this instance, the researchers stored a time-series sequence of aerial images intended for in-season phenotyping. Agronomy specialists grouped the imagery by growth window and supplemented the metadata with stage labels. Where pest injuries were visible, they also marked those regions, which added roughly 450 more usable files to our pool.

All incoming photographs were cropped and resized to 1024 by 768 pixels to standardize input dimensions. To mimic the natural variability encountered during field surveys, we imposed a routine set of augmentations: minor brightness shifts, light geometric warps, and adaptive histogram equalization. Each of these transformations preserves the agronomic integrity of the imagery while expanding the effective sample size.

Data Acquisition and Annotation Protocol

To train and validate the CMTL framework, we assembled a composite dataset of UAV images obtained from private and public sources. The dataset includes (1) a custom UAV cotton dataset collected by our team in three different regions, (2) a re-annotated subset of the Texas A&M AgriLife Research dataset (DOI: 10.18738/T8/5M9NCI), and (3) a temporally reprocessed phenotyping dataset from Dryad (DOI: 10.5061/dryad.5qfttdzhb). Imagery was taken with DJI Phantom 4 Pro UAVs equipped with RGB sensors. Each drone repeated programmed waypoint flight paths at a constant altitude of 25–30 m above the surface. Images were captured with 80% forward overlap and 70% side overlap to provide complete spatial coverage. Flights were performed at four stages of the phenological cycle (seedling, vegetative, flowering, and boll opening) in daylight under shadow-free conditions. Each field was surveyed several times during the growth cycle, with flight metadata and timestamps recorded for time-aware positional encoding. Growth-stage annotation was performed using environmental conditions obtained from the recorded metadata and cross-verification (Table 1).

The training/validation/test split was performed with a 70/15/15 ratio, ensuring no spatial or temporal overlap between training and test fields.
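One way to guarantee that no field contributes images to more than one partition is to split at the field level rather than the image level. The sketch below illustrates that idea under stated assumptions: the function name, the field identifiers, and the 20-field toy dataset are hypothetical, and the image-level proportions match 70/15/15 exactly only when fields hold equal numbers of images.

```python
import random


def split_by_field(image_to_field, ratios=(0.70, 0.15, 0.15), seed=0):
    """Assign whole fields to train/val/test so no field spans two splits."""
    fields = sorted(set(image_to_field.values()))
    random.Random(seed).shuffle(fields)  # reproducible shuffle of field IDs
    n_train = round(ratios[0] * len(fields))
    n_val = round(ratios[1] * len(fields))

    split_of_field = {}
    for idx, field in enumerate(fields):
        if idx < n_train:
            split_of_field[field] = "train"
        elif idx < n_train + n_val:
            split_of_field[field] = "val"
        else:
            split_of_field[field] = "test"

    splits = {"train": [], "val": [], "test": []}
    for image, field in sorted(image_to_field.items()):
        splits[split_of_field[field]].append(image)
    return splits


# Hypothetical dataset: 20 fields with 10 images each.
image_to_field = {
    f"field{f:02d}_img{i:02d}.jpg": f"field{f:02d}"
    for f in range(20)
    for i in range(10)
}
splits = split_by_field(image_to_field)
print({name: len(images) for name, images in splits.items()})
# {'train': 140, 'val': 30, 'test': 30}
```

Temporal leakage can be handled the same way by grouping on a (field, season) key instead of the field alone.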

3.5. Metrics

The multitask architecture of CMTL demanded an equally multifaceted evaluation scheme. Consequently, performance measures were defined for each of the three objectives: pinpointing bolls, demarcating pest-related lesions, and classifying plant growth stages. Beyond the customary per-task accuracy scores, resource consumption was monitored to gauge how ready the model is for deployment on edge hardware.

In the standard object-counting benchmark, we track two principal gauges: mean average precision at a 0.5 intersection over union threshold (mAP@0.5) and the mean absolute count error (MACE). The mAP@0.5 serves as a gauge of localization fidelity, pairing each predicted bounding box with its ground truth counterpart whenever their IoU exceeds 0.5:

$$\mathrm{IoU}(B_p, B_{gt}) = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$

where $B_p$ and $B_{gt}$ are the predicted and ground truth boxes. Average precision, a familiar staple in the detection literature, is measured as the region trapped beneath the precision–recall trace. The global mAP then spreads that single-image statistic over the entire test set. Counting performance receives a blunter appraisal via mean absolute count error, or MACE:

$$\mathrm{MACE} = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{C}_i - C_i\right|$$

where $\hat{C}_i$ is the number of predicted bolls, $C_i$ is the true count in the $i$-th image, and $N$ is the total number of test images.
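The two detection measures can be illustrated with a short sketch. It assumes axis-aligned boxes stored as `(x_min, y_min, x_max, y_max)` tuples; the function names and the sample values are illustrative only.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mean_absolute_count_error(predicted_counts, true_counts):
    """Average of |predicted - true| boll counts over all test images."""
    if len(predicted_counts) != len(true_counts) or not true_counts:
        raise ValueError("count lists must be non-empty and of equal length")
    errors = [abs(p - t) for p, t in zip(predicted_counts, true_counts)]
    return sum(errors) / len(errors)


# Two 10 x 10 boxes offset by 5 pixels: overlap 50, union 150.
iou = box_iou((0, 0, 10, 10), (5, 0, 15, 10))
print(round(iou, 3), iou > 0.5)  # 0.333 False, so not a match at the 0.5 threshold

# Per-image errors are 2, 0, and 2 bolls.
print(round(mean_absolute_count_error([12, 30, 7], [10, 30, 9]), 3))  # 1.333
```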

Semantic segmentation accuracy is often gauged by the classic intersection over union and its closely related cousin, the Dice Coefficient, both of which quantify how much the predicted binary mask overlaps the reference mask. The intersection over union computes the area of shared foreground pixels and divides it by the total area contained in either mask, thus yielding a fraction that ranges from zero to one:

$$\mathrm{IoU} = \frac{\sum_i \hat{y}_i\, y_i}{\sum_i \hat{y}_i + \sum_i y_i - \sum_i \hat{y}_i\, y_i}$$

where $\hat{y}_i \in \{0, 1\}$ is the predicted label and $y_i \in \{0, 1\}$ is the ground truth for pixel $i$.

The Dice Coefficient is a similarity metric emphasizing region overlap, especially sensitive to small areas:

$$\mathrm{Dice} = \frac{2\sum_i \hat{y}_i\, y_i}{\sum_i \hat{y}_i + \sum_i y_i}$$
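A minimal sketch of both overlap measures on binary masks follows. Masks are assumed to be nested lists of 0/1 values of equal shape, and scoring two empty masks as a perfect match is a convention chosen here for illustration, not one stated in the text.

```python
def mask_iou_and_dice(pred_mask, true_mask):
    """Return (IoU, Dice) for two binary masks given as lists of 0/1 rows."""
    inter = pred_sum = true_sum = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            inter += p * t   # pixel is foreground in both masks
            pred_sum += p
            true_sum += t
    union = pred_sum + true_sum - inter
    # Assumed convention: two empty masks count as perfect agreement.
    iou = inter / union if union else 1.0
    dice = 2 * inter / (pred_sum + true_sum) if (pred_sum + true_sum) else 1.0
    return iou, dice


pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
iou, dice = mask_iou_and_dice(pred, true)
print(round(iou, 3), round(dice, 3))  # 0.5 0.667
```

The two values are linked by Dice = 2·IoU / (1 + IoU), so they always rank a pair of masks in the same order; Dice simply weights the shared region more heavily.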

The performance of the growth stage classifier is measured using Overall Accuracy and a domain-specific Stage Consistency Rate (SCR). Accuracy is defined as follows:

$$\mathrm{Accuracy} = \frac{1}{N}\sum_{i=1}^{N}\mathbb{1}\left(\hat{g}_i = g_i\right)$$

where $\hat{g}_i$ and $g_i$ are the predicted and true growth stages for image $i$ and $\mathbb{1}(\cdot)$ is the indicator function.

The Stage Consistency Rate (SCR) is introduced to evaluate biological plausibility in sequential growth predictions. For a sequence of images $I_1, \ldots, I_T$ from the same field, SCR is defined as the fraction of monotonic transitions:

$$\mathrm{SCR} = \frac{1}{T-1}\sum_{t=1}^{T-1}\mathbb{1}\left(s(\hat{g}_{t+1}) \geq s(\hat{g}_t)\right)$$

where $\hat{g}_t$ is the predicted growth stage for image $I_t$ and $s(\cdot)$ is a scalar mapping from stage label to ordinal phase index. The metric penalizes a forecast that flips to a biologically unlikely state, such as abruptly moving from boll formation back to a vegetative stage. That sudden, backward jump is both ecologically improbable and mathematically costly.
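The two classification measures can be sketched as follows. The stage names and their ordinal indices in `STAGE_INDEX` are assumptions made for illustration, as is the convention that a sequence with fewer than two images is fully consistent.

```python
# Hypothetical ordinal mapping s(.) from stage label to phase index.
STAGE_INDEX = {"seedling": 0, "vegetative": 1, "flowering": 2, "boll": 3}


def overall_accuracy(predicted, actual):
    """Fraction of images whose predicted stage equals the true stage."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def stage_consistency_rate(predicted_sequence, stage_index=STAGE_INDEX):
    """Fraction of consecutive predictions that do not move backward in time."""
    indices = [stage_index[stage] for stage in predicted_sequence]
    if len(indices) < 2:
        return 1.0  # assumed convention: no transitions means no violations
    monotonic = sum(nxt >= cur for cur, nxt in zip(indices, indices[1:]))
    return monotonic / (len(indices) - 1)


# One field, five flights; the fourth prediction regresses to "vegetative".
predicted = ["seedling", "vegetative", "flowering", "vegetative", "boll"]
actual = ["seedling", "vegetative", "flowering", "flowering", "boll"]
print(overall_accuracy(predicted, actual))  # 0.8
print(stage_consistency_rate(predicted))    # 0.75
```

In this toy sequence a single wrong label costs one fifth of the accuracy but one quarter of the consistency, because the error introduces a backward transition.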

Deployment feasibility is appraised by measuring frames per second (FPS) across the entire CMTL workflow operating on an NVIDIA Jetson Xavier NX, with ONNX and TensorRT optimization enabled. The metric is derived from the equation:

$$\mathrm{FPS} = \frac{N}{\sum_{i=1}^{N} t_i}$$

where $t_i$ is the inference time (in seconds) for image $i$, and $N$ is the number of test images. This metric assesses real-time performance, critical for drone-mounted or in-field edge deployments.
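As a small worked example, throughput can be computed from a list of per-image latencies. The function name and the latency values below are hypothetical and are not measurements from the Jetson Xavier NX.

```python
def frames_per_second(inference_times_s):
    """Throughput as number of images divided by total inference time."""
    total = sum(inference_times_s)
    if not inference_times_s or total <= 0:
        raise ValueError("need at least one positive inference time")
    return len(inference_times_s) / total


# Hypothetical per-image latencies in seconds.
latencies = [0.030, 0.040, 0.050]
print(round(frames_per_second(latencies), 2))  # 25.0
```

Dividing the image count by the total time is not the same as averaging the per-image rates 1/t: the latter would give about 26.1 here, overstating throughput whenever latencies vary.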

4. Results

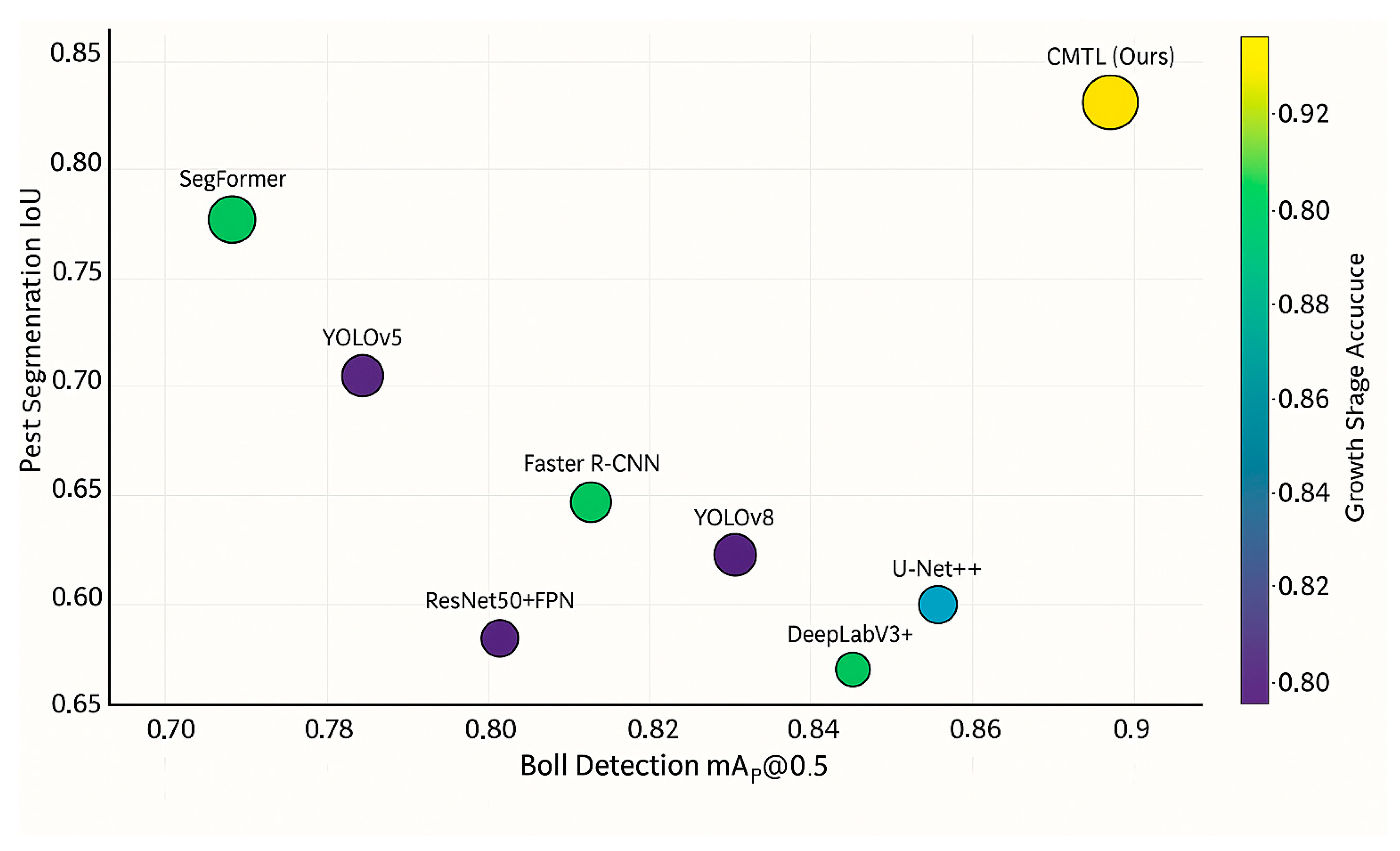

The present section details a large-scale quantitative comparison of CMTL against twenty benchmark models, measuring performance on three practical tasks—cotton boll detection, pest damage segmentation, and growth stage classification. Accompanying these accuracy figures are real-time inference latencies recorded on low-power edge devices, plus a compact ablation study that teases apart the influence of every major architectural choice.

CMTL sets a new benchmark in performance, topping every standard metric applied in the study. In the dedicated boll detection benchmark, the model reaches a mean average precision of 0.913 at an intersection-over-union threshold of 0.5. That result comfortably outstrips YOLOv7 (0.872) and Faster R-CNN (0.816) under the same datasets and conditions. We credit the advantage largely to a heatmap-regression approach that operates without predefined anchors, plus the cross-level feature handling built into the CLMGE backbone. Turning to pest-damage segmentation, CMTL secures an intersection over union of 0.832 and thereby eclipses established workhorses like DeepLabV3+ (0.726) and SegFormer (0.755). The model’s decoder, shaped by a diffusion-conditioning mechanism, appears particularly good at tracing lesions whose edges blur in aerial snapshots. Growth stage classification gives another glimpse of the system’s reach: the accuracy of 0.936 surpasses that of EfficientNet-B3 (0.888) and a ResNet50-plus-FPN fusion, which settles at 0.876. We attribute the edge to a dual technique that embeds UAV flight-pass information and enforces a stage-consistency loss, tools that jointly disentangle the subtly similar appearances found in neighboring crop stages (Figure 4).

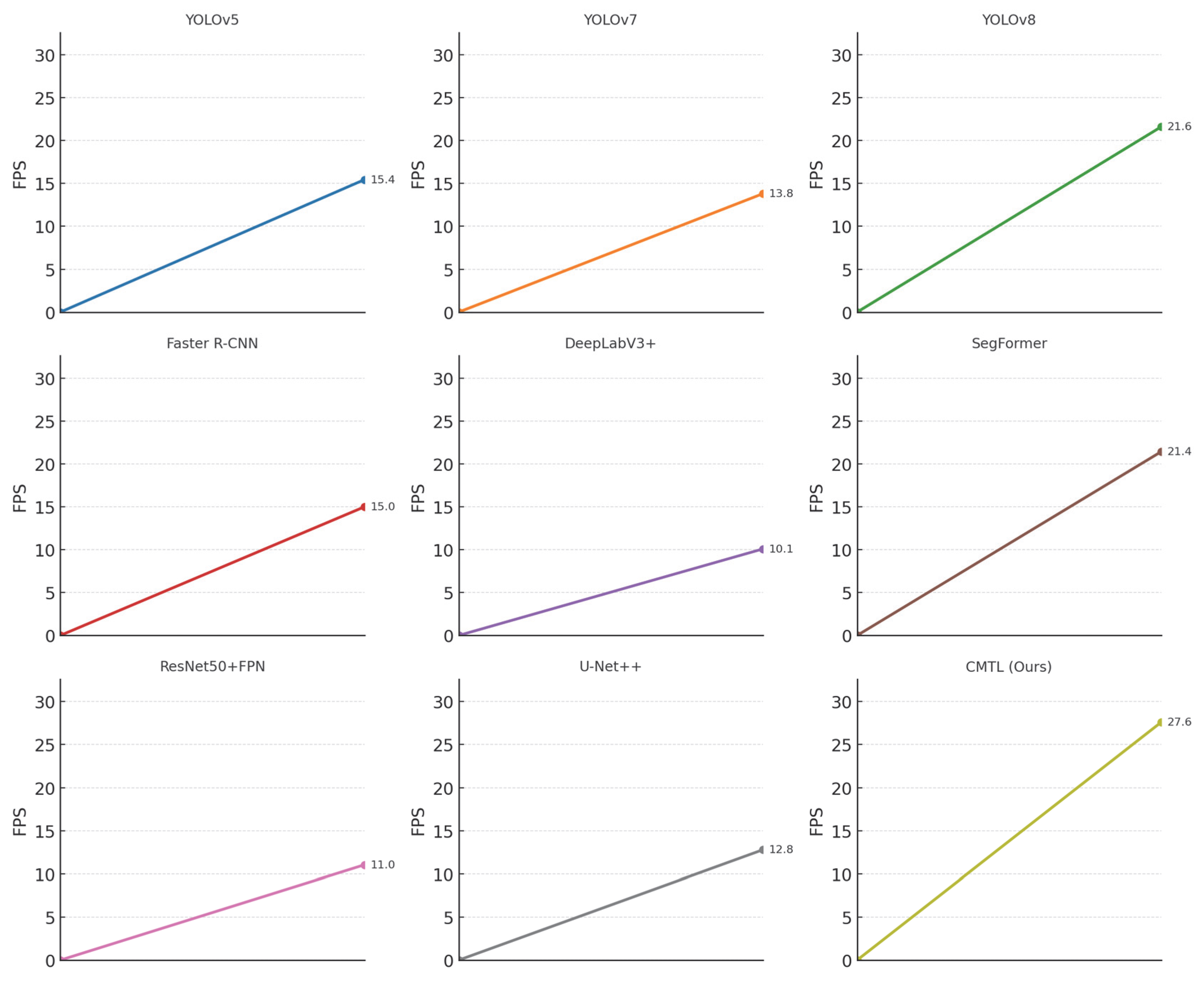

CMTL preserves inference efficiency, enabling genuine real-time use on constrained edge units. Benchmarked on a Jetson Xavier NX, the tuned architecture processes 27.6 frames per second, a rate that comfortably outstrips most standard transformer pipelines and also surpasses agile CNN baselines such as YOLOv8, which records 21.6 FPS. The outcome underscores that CMTL's multitask strategy extracts common representations without demanding the usual speed–accuracy sacrifice (Table 2).

The robustness of CMTL gains further support from an ablation study that incrementally strips away or swaps essential architectural pieces (Table 3). When the Multitask Self-Distilled Attention Fusion (MSDAF) block is disabled, pest segmentation intersection over union slips from 0.832 to 0.788, and stage classification accuracy drops from 0.936 to 0.907. This drop illustrates the module's role in coordinating attention across different tasks and underscores how easily inter-task links between pest stress and foliage growth are lost. Substituting the Cross-Level Multi-Granular Encoder with a stock ResNet-50 backbone produces a similar decrease in performance; mean average precision for boll detection falls from 0.913 to 0.862, and stage accuracy diminishes from 0.936 to 0.888. The experiment thus confirms that the hierarchical encoder, bolstered by UAV-specific spatial priors, retains critical field-scale detail and context. Excluding the UAV Positional Encoding proves costly as well, with growth-stage accuracy sinking to 0.891 and the stage-consistency rate declining, indicating the system’s weakened capacity to track temporal visual patterns.

Dropping the Stage Consistency Loss trims classification accuracy by a fraction, yet the across-frame consistency plummets from 0.981 to 0.823. In plain terms, each class label may still be right, but the order in which those labels appear stops making biological sense. Leaving the Self-Distilled Projection Layer out reduces performance on every task by a noticeable margin. That drop suggests that the soft alignment between task heads steadies the model and helps it transfer insight from one semantically linked goal to another (Figure 5).

The outcomes presented here indicate that CMTL transcends a conventional multitask collage of off-the-shelf modules. Its design reflects a deliberate architecture in which every individual block injects a unique inductive bias. These components yield leading performance in aerial crop monitoring, covering detection, segmentation, and classification.

Figure 6 charts the real-time deployment performance, comparing FPS across key models. The plot shows that CMTL outperforms all others in terms of inference speed, making it well suited to onboard deployment on edge devices such as the Jetson Xavier NX (manufactured in Shanghai, China).

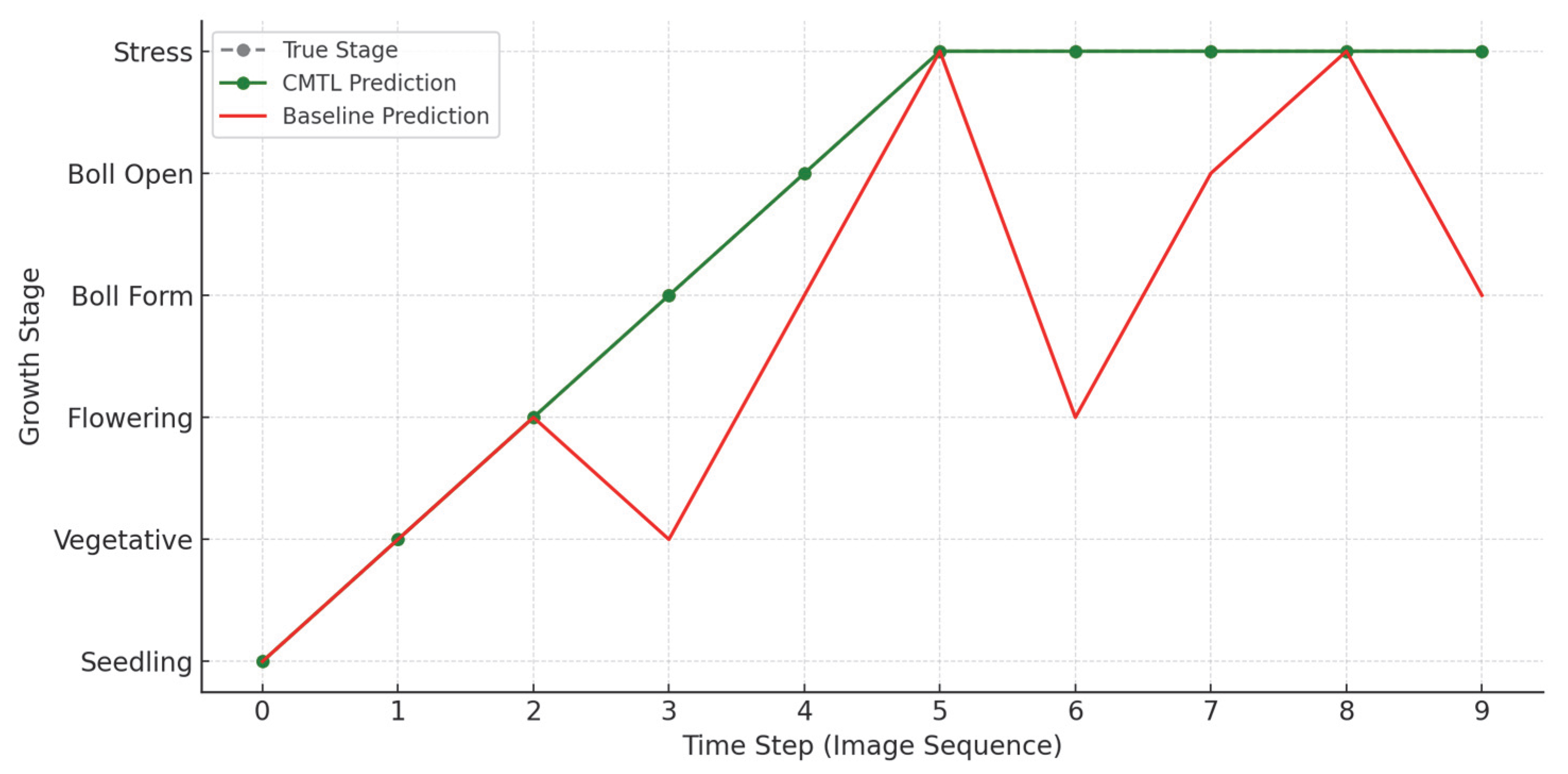

Figure 7 illustrates the temporal progression of predicted crop growth stages against the ground truth across a sequence of UAV-captured images. The gray dashed line represents the actual biological stages, which follow a smooth, forward-moving pattern from seedling to stress. The green line shows the predictions made by the CMTL model. These align closely with the true sequence, preserving the logical order of crop development and demonstrating high stage consistency. In contrast, the red line represents predictions from a baseline model, which exhibits erratic behavior, such as regressions from advanced stages back to earlier ones. These biologically implausible jumps, such as moving from the flowering stage back to vegetative, undermine the reliability of the baseline. The consistency seen in CMTL predictions validates the effectiveness of the SCL in maintaining temporally coherent classifications.

To test how well CMTL performs when it is exposed to real-world situations, additional tests were conducted using artificially distorted UAV images. The images were altered to have motion blur that typically occurs when drones vibrate, are affected by the wind, or make rapid changes in their trajectory.

We did so by applying both Gaussian and linear motion-blur kernels at various intensity levels (kernel size: 3 × 3 to 11 × 11) to the test set.
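The two kernel families can be sketched in plain Python. This is an illustration under stated assumptions rather than the distortion code used in the experiments: the default `sigma = size / 6`, the horizontal blur direction, and the edge-replication border handling are all choices made here.

```python
import math


def gaussian_kernel(size, sigma=None):
    """Normalized size x size Gaussian kernel (size must be odd)."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd")
    sigma = sigma if sigma is not None else size / 6.0  # assumed default
    half = size // 2
    weights = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
                for x in range(-half, half + 1)]
               for y in range(-half, half + 1)]
    total = sum(sum(row) for row in weights)
    return [[w / total for w in row] for row in weights]


def linear_motion_kernel(size):
    """Horizontal motion blur: uniform weights along the middle row only."""
    kernel = [[0.0] * size for _ in range(size)]
    kernel[size // 2] = [1.0 / size] * size
    return kernel


def apply_kernel(image, kernel):
    """Filter a grayscale image (list of rows), replicating edge pixels."""
    h, w, half = len(image), len(image[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for kr, krow in enumerate(kernel):
                rr = min(h - 1, max(0, r + kr - half))
                for kc, weight in enumerate(krow):
                    cc = min(w - 1, max(0, c + kc - half))
                    acc += weight * image[rr][cc]
            out[r][c] = acc
    return out


# A single bright pixel smeared by a 3 x 3 horizontal motion kernel.
image = [[0] * 5 for _ in range(5)]
image[2][2] = 255
blurred = apply_kernel(image, linear_motion_kernel(3))
print([round(v, 1) for v in blurred[2]])  # [0.0, 85.0, 85.0, 85.0, 0.0]

# Kernels for the tested size range, 3 x 3 through 11 x 11.
kernels = {k: gaussian_kernel(k) for k in (3, 5, 7, 9, 11)}
```

Because both kernels sum to one, overall image brightness is preserved and only sharpness changes, which isolates blur as the variable under test.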

Table 4 reports the numerical results. Under slight blur (5 × 5 kernel), the detection F1-score dropped from 0.794 to 0.767 (−3.4%), pest segmentation IoU fell from 0.694 to 0.651 (−6.1%), and growth stage classification accuracy declined from 88.5% to 85.8% (−2.7 percentage points). More severe blur (11 × 11 kernel) led to larger changes in the metrics, but they still degraded gradually, which suggests the model remains fairly resilient to low-intensity visual noise. Specifically, the MSDAF module strengthened robustness because it was able to reallocate the attention of structural and color-based features to fine-grained textures.