The proposed system for tactile paving inspection is designed to address the unique challenges posed by tactile paving elements, including real-time detection, obstacle handling, and lightweight operation. This section details the system architecture, hardware configuration, and control algorithms, emphasizing the integration of the YOLOv8-OBB model for accurate object detection.

3.1. Structure of the System

The inspection task for tactile pavings is multifaceted, involving detection of both go-blocks and stop-blocks, analysis of block dimensions, identification of obstructions, following the tactile paving wayfinding map, and other cloud/networking tasks. Deploying all these tasks onto a drone for real-time operation is challenging. Therefore, this system focuses on the detection and video acquisition of tactile paths, ensuring comprehensive coverage so that it can later be targeted and analyzed in detail in the cloud. The inspection tasks of this system are:

- (1)

Due to the different design requirements of the go-block and the stop-block, they are differentiated by the detection task so that the drone can follow the go-block.

- (2)

Obtain the steering trend of the go-block through the OBB task and adjust the drone heading in real time to ensure that the tactile path is always in the center of the inspection video.

- (3)

Objects such as cars and bicycles commonly occupy the tactile path and hide parts of it from the drone’s field of view, which makes inspection and detection considerably more difficult. The system therefore identifies each occupation of the tactile path and processes it individually.
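Inspection task (2) can be illustrated with a minimal sketch that turns one oriented bounding box into a lateral offset and a heading deviation. The box format `(cx, cy, w, h, angle)`, the assumption that the flight direction points up in the image, the sign conventions, and the function name `path_errors` are all illustrative choices and are not taken from the system described here.

```python
import math

def path_errors(cx, cy, w, h, angle_rad, image_width):
    """Return (lateral_error, heading_error) for one oriented bounding box.

    Assumptions (hypothetical): angle_rad is the rotation of the box's
    w-side from the image x-axis, and the drone's forward direction is
    the image's vertical axis.
    """
    # Direction of the long side of the box, i.e. the direction of the path.
    long_axis = angle_rad if w >= h else angle_rad + math.pi / 2
    # Deviation of the path from the image's vertical axis.
    heading_error = long_axis - math.pi / 2
    # A path is an undirected line, so wrap the error into [-pi/2, pi/2).
    heading_error = (heading_error + math.pi / 2) % math.pi - math.pi / 2
    # Horizontal offset of the box centre from the image centre, in [-1, 1].
    lateral_error = (cx - image_width / 2) / (image_width / 2)
    return lateral_error, heading_error

lat, head = path_errors(480, 240, 200, 50, math.radians(60), 640)
print(lat, math.degrees(head))
```

A heading error of zero means the path already runs along the flight direction, and a lateral error of zero means the path is centered in the frame.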

As illustrated in Figure 3, the drone executes an inspection task in the following steps:

- (1)

Safety Check, Receive Task and Map—The drone performs a safety check before deployment. The cloud central station then computes the waypoints from a wayfinding map and the specific task, which consists of a start point and an end point, and sends the task details to the drone as a list of N waypoints expressed in the WGS84 geodetic coordinate system.

- (2)

Fly to the Start Point—The drone moves to the designated starting position for inspection. The initial attitude is maintained throughout the flight unless there is an obstacle. When the millimeter-wave forward radar detects an obstacle, it triggers an obstacle avoidance algorithm, which logs the event and then computes an avoidance waypoint that has higher priority than the cloud waypoint [23]. More advanced obstacle avoidance can be implemented by fusing data from another sensor, such as a camera [24].

- (3)

OBB Detect (Oriented Bounding Box Detection)—The drone detects the tactile paving path and occupations using the YOLOv8 model. In the experimental section, we will show an example of an OBB detect task where the detection quality is also indicated. Three distinct situations are defined as follows:

- (a)

If a tactile paving path is detected, then it classifies the block type, i.e., whether the block is a go-block (guiding block) or a stop-block (warning block). If the go-block path is detected and is clear, then the drone follows the centerline of the tactile paving for continuous inspection. If the stop-block path is detected and is clear, then the drone hovers first and then aligns the drone heading following the wayfinding map.

- (b)

If an occupation, such as a car or a bicycle, is detected, then the drone logs its position with reference to the tactile paving wayfinding map and continues the task using the wayfinding map.

- (c)

If an error occurs (e.g., no tactile paving is detected), then the drone transitions to the error state and proceeds to the goal position of the task.

- (4)

Land on the Specified Area—The drone reaches the end of the inspection route and lands safely in the predesignated area.

- (5)

Task Completion—At the end of the inspection, the complete inspection video and the tactile path occupancy information are uploaded to the cloud central station to support subsequent, more detailed inspection operations, such as identifying cracks and damage and measuring block dimensions.
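The three situations defined in step (3) amount to a small decision rule. The sketch below is one possible way to express it; the label strings (`"go_block"`, `"stop_block"`, `"car"`, `"bicycle"`) and the names `Action` and `decide` are hypothetical and only serve the illustration.

```python
from enum import Enum
from typing import Optional

class Action(Enum):
    FOLLOW_CENTERLINE = "follow the go-block centerline"
    HOVER_THEN_ALIGN = "hover, then align heading with the wayfinding map"
    LOG_AND_CONTINUE = "log the occupation, continue along the wayfinding map"
    ERROR_GO_TO_GOAL = "enter the error state and proceed to the goal position"

# Hypothetical class names for objects that occupy the tactile path.
OCCUPATION_LABELS = {"car", "bicycle"}

def decide(label: Optional[str]) -> Action:
    """Map the class of the current detection to the behaviour of step (3)."""
    if label == "go_block":            # situation (a), guiding block
        return Action.FOLLOW_CENTERLINE
    if label == "stop_block":          # situation (a), warning block
        return Action.HOVER_THEN_ALIGN
    if label in OCCUPATION_LABELS:     # situation (b)
        return Action.LOG_AND_CONTINUE
    return Action.ERROR_GO_TO_GOAL     # situation (c), e.g. nothing detected

for label in ("go_block", "stop_block", "car", None):
    print(label, "->", decide(label).value)
```

Keeping the rule in one pure function makes the behaviour easy to test offline against recorded detections before it is connected to the flight controller.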

Figure 2.

The structure of the system.

Figure 3.

Task execution process.

3.2. Hardware Structure of the Drone

The hardware system of the drone is shown in Figure 4. The drone is equipped with a multi-sensor system and edge-computing hardware to ensure accurate and stable operation during inspection tasks. Key components include the autopilot (AP), GPS, magnetometer (MAG), inertial measurement unit (IMU), millimeter-wave forward radar, LTE module, ground proximity radar, Jetson AGX Xavier, and a fisheye camera.

The drone acquires precise position, attitude, and pose information through an integrated multi-sensor system that includes a GPS, magnetometer (MAG), and inertial measurement unit (IMU). Altitude is maintained using the IMU’s inbuilt barometer module to ensure the vehicle’s altitude stability, while forward radar and ground radar are used to provide environmental awareness to ensure safe flight.

Through the LTE module, particularly the core SIMCOM communication module, the drone achieves real-time interaction with the central station. This enables timely updates and transmission of flight status and operational progress while providing a critical remote intervention interface for operators. The capability to remotely send inspection waypoint tasks and execute basic control commands—such as hovering, takeoff, and landing—serves as the most direct and effective intervention measure for handling unexpected situations during flight (e.g., sudden obstacles or mission objective changes). This robust communication ensures real-time uploading of inspection data and timely reception of remote commands, safeguarding the integrity and smooth execution of inspection tasks.

Additionally, leveraging the Jetson AGX Xavier platform and a high-performance fisheye camera, the drone performs target detection tasks based on oriented bounding boxes (OBB). The fisheye camera features a diagonal field of view (FOV) of 160 degrees—significantly wider than conventional lenses (approximately 72 degrees)—dramatically expanding the information coverage per capture. This capability ensures that even during low-altitude operations, it captures expansive scenes (such as a full panorama of tactile paving) while minimizing the probability of target loss due to limited visibility. The AGX Xavier’s high-performance computing power meets the real-time requirements of OBB detection tasks, and the fisheye camera’s wide-angle coverage synergistically enhances detection accuracy and the overall reliability of drone inspection operations.
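To give a rough sense of what the wider field of view means on the ground, the following sketch compares the diagonal ground coverage of a 160-degree and a 72-degree lens. It assumes a camera pointing straight down over flat ground and a flight altitude of 2 m; both are illustrative assumptions rather than parameters of the system.

```python
import math

def ground_coverage(altitude_m: float, fov_deg: float) -> float:
    """Length of ground spanned by a field of view, for a camera that
    looks straight down at flat ground (assumed geometry)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)

ALTITUDE_M = 2.0  # hypothetical flight altitude

wide = ground_coverage(ALTITUDE_M, 160.0)    # fisheye lens
narrow = ground_coverage(ALTITUDE_M, 72.0)   # conventional lens
print(f"160 deg: {wide:.1f} m, 72 deg: {narrow:.1f} m, ratio: {wide / narrow:.1f}")
```

Under these assumptions the fisheye lens spans roughly 7.8 times the ground distance of the conventional lens, independent of altitude. The outer part of a fisheye image is strongly compressed, so the usable resolution near the edges is lower than this ratio alone suggests.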

3.3. Control Algorithm

The drone control system shown in Figure 5 uses a layered control architecture to meet the real-time and stability requirements of the tactile paving detection task. The architecture is divided into high-level navigation and target tracking control and low-level attitude and thrust control.

All layers implement PID control:

$$u(t) = K_p\, e(t) + K_i \int_0^t e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt}$$

where $e(t)$ is the error and $u(t)$ is the controller output. $K_p$, $K_i$, and $K_d$ denote the proportional, integral, and derivative gains. OBB-based detection provides forward/lateral position errors to the P-position controller, converting these to x/y-direction velocity targets. These enable constant speed tracking along tactile paving curves. The PID velocity controller then converts velocity commands to attitude angle targets (x/y-directions), while OBB directly provides heading targets. This allows adaptive heading adjustment for continuous path tracking.

Attitude targets feed a P-attitude controller for roll/pitch fine-tuning, generating angular velocity references. This enables rapid response to high-level adjustments while minimizing positional errors. The PID angular velocity controller then converts attitude errors to precise angular rate commands. Finally, the mixer integrates all signals for motor thrust distribution.
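The cascade of a P-position controller feeding a PID velocity controller can be sketched in a few lines. This is a simplified single-axis illustration, not the flight code: the class `PID`, the function `outer_loop`, and all gain values are hypothetical and are unrelated to the parameters in Table 1.

```python
class PID:
    """Discrete PID controller; setting ki = kd = 0 gives a pure P controller."""

    def __init__(self, kp, ki=0.0, kd=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, when no previous error exists.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Hypothetical gains for one horizontal axis.
position_p = PID(kp=0.8)
velocity_pid = PID(kp=0.5, ki=0.1, kd=0.05)

def outer_loop(position_error_m, measured_velocity, dt):
    """Position error (from OBB detection) -> velocity target -> attitude target."""
    velocity_target = position_p.update(position_error_m, dt)
    attitude_target = velocity_pid.update(velocity_target - measured_velocity, dt)
    return velocity_target, attitude_target

print(outer_loop(position_error_m=0.5, measured_velocity=0.1, dt=0.02))
```

The low-level attitude and angular velocity loops follow the same pattern, each one consuming the target produced by the layer above it.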

The controller parameters are specified in Table 1.

This layered architecture enables specialized functionality: Low-level controllers reject high-frequency disturbances through rapid attitude and thrust adjustments, while high-level controllers adapt navigation paths using real-time tactile paving detection. The coordinated operation maintains stable tracking in complex urban environments.

3.4. Improved YOLOv8-OBB

This study builds upon the YOLO architecture’s inherent real-time capabilities and proven effectiveness in medium-to-large target detection, focusing on lightweight structural modifications to YOLOv8. The proposed enhancements to YOLOv8-OBB specifically aim to optimize tactile paving detection while maintaining robust performance across diverse datasets, including public benchmarks. Through computational efficiency improvements and refined feature representation capabilities, these architectural adaptations preserve the model’s real-time advantages while achieving enhanced detection accuracy—ultimately elevating the benchmark model’s comprehensive performance without compromising its core operational efficiency.

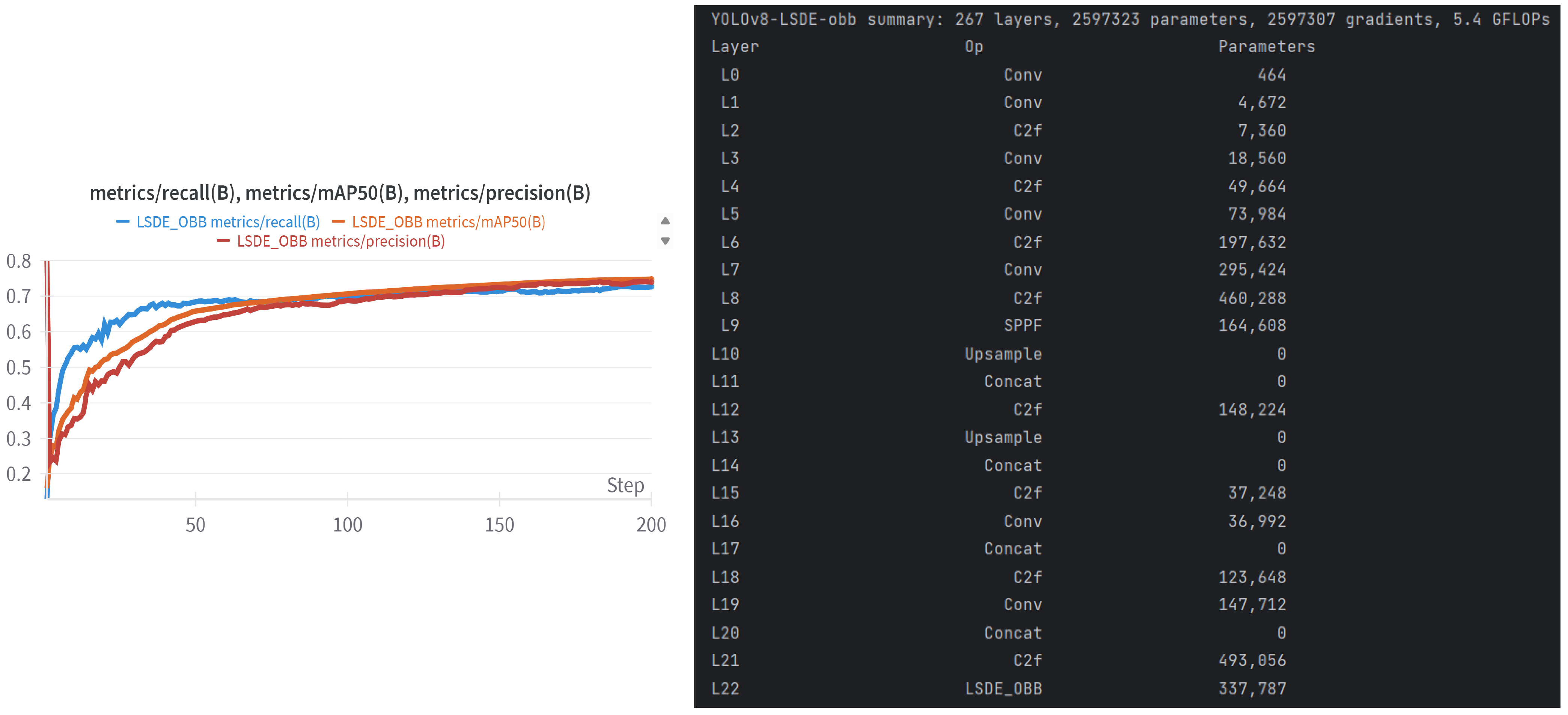

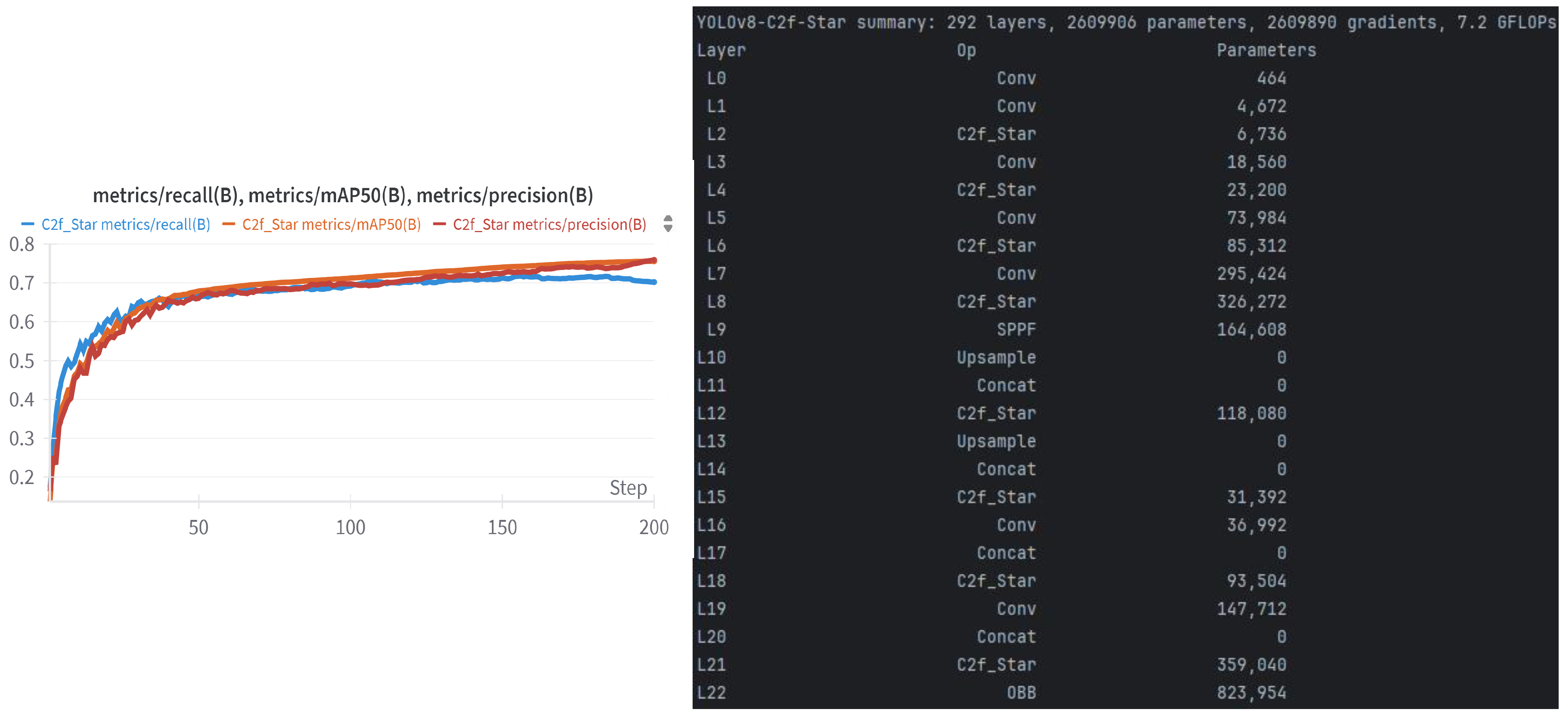

Figure 6 illustrates the refined model structure. Through targeted component optimizations, we enhance computational speed (LSDE-OBB) and reduce the number of parameters (C2f-Star) while preserving detection precision (CAA). The optimizations aim to address the critical need for compact model deployment on edge devices while performing tactile paving detection tasks. The following section provides a detailed description of these key improvements.

In the YOLO architecture, multi-scale detection heads conventionally employ three independent branches to process object features at varying scales. This decoupled design pattern, while effective in feature representation, introduces two critical limitations:

- (1)

Parameter redundancy caused by duplicated convolution operations across branches.

- (2)

Increased risk of overfitting due to insufficient regularization in small-batch training scenarios.

Such architectural constraints particularly hinder deployment on resource-constrained edge devices [8]. To address these challenges, we implement the LSDE-OBB detection head with two key innovations. First, we introduce shared convolutional layers across scale branches, effectively reducing network parameters through weight sharing while maintaining multi-scale representation capacity. The shared features are subsequently processed through scale-specific transformation layers to generate outputs at three resolution levels [25]. Second, we replace conventional batch normalization with group normalization (GN) [26] to enhance training stability. This modification proves particularly crucial given the reduced batch sizes required for edge-device compatibility, where traditional batch normalization often suffers from inaccurate statistical estimation that degrades detection performance. In addition, we replace the convolution used for feature extraction with Detail-Enhanced Convolution (DEConv) [27], proposed in DEA-Net, which enhances representation and generalization capabilities and can be equivalently converted to a standard convolution by a reparameterization technique without additional computational cost.

The C2f module is a crucial component in YOLOv8. It enhances the C3 module from YOLOv5 by providing richer gradient flow information while keeping the architecture lightweight. The C2f module in YOLOv8n introduces multiple bottleneck structures, which mitigate vanishing and exploding gradients, improve network performance, and facilitate the use of a deeper architecture. However, excessive stacking of bottleneck structures in the C2f module introduces feature redundancy and irrelevant information into the feature maps, increasing computational complexity while impairing detection accuracy [28]. To optimize this architecture, we use the Star Block [29] as a replacement for the conventional bottleneck components. This substitution maintains equivalent functionality but reduces computational cost through more efficient feature processing, thereby improving both efficiency and accuracy.

The Contextual Anchor Attention Module (CAA) is an advanced attention mechanism that efficiently balances computational cost with robust feature extraction [30]. Despite its streamlined design, CAA harnesses both global average pooling and 1D strip convolution to effectively model long-range pixel dependencies while emphasizing central features. In this way, it can efficiently extract local contextual information in images containing different scales and complex backgrounds and combine it with global contextual information to improve feature representation.

3.4.1. LSDE-OBB

Within the YOLO framework, conventional detection heads employ three independent branches for multi-scale target processing. However, this architecture can result in inefficient parameter utilization and an increased risk of overfitting due to isolated operations. To address these limitations, we introduce the Lightweight Shared Detail Enhanced Oriented Bounding Box (LSDE-OBB) head, as illustrated in Figure 7.

This unified detection head implements parameter sharing across all scales (highlighted in green), replacing the traditional triple-branch detection modules (depicted in Figure 7), thereby reducing model complexity and enhancing computational efficiency. The computational benefits of LSDE-OBB are further validated through comparative performance evaluations presented in Section 4.

The rationale behind this approach stems from the consistent structural patterns exhibited by tactile pavements, making parameter sharing a viable optimization strategy without significantly compromising detection accuracy. However, to mitigate any potential loss in performance due to parameter sharing, two complementary strategies are incorporated.

- (1)

Normalization Strategy for Small-Batch Training: Conventionally, each CBS block comprises a standard convolution layer (C), batch normalization (BN), and SiLU activation (S). In our approach, batch normalization (BN) is replaced with group normalization (GN), which groups channels instead of relying on batch statistics. This substitution enhances stability in small-batch training and improves model robustness, as demonstrated in prior classification and localization research.

- (2)

Detail-Enhanced Convolution for Feature Representation: To further enhance feature extraction, the shared convolutional layers integrate Detail-Enhanced Convolution (DEConv) from DEA-Net (see Figure 8). Unlike conventional convolutions, DEConv combines standard convolution (SC) with four specialized differential convolution operators:

Center Differential Convolution (CDC): Enhances edge sharpness.

Angle Differential Convolution (ADC): Captures angular variations.

Horizontal Differential Convolution (HDC): Refines horizontal structural details.

Vertical Differential Convolution (VDC): Improves vertical directional information.

Each of these convolutional branches works in parallel, capturing complementary feature information. While SC extracts intensity features, CDC, ADC, HDC, and VDC enhance spatial structure details, facilitating more robust tactile paving detection.
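The motivation for replacing BN with GN in strategy (1) can be made concrete with a small numerical sketch. It normalizes the same sample twice with training-mode batch normalization, each time next to a different batch mate, and once with group normalization. The learnable scale and shift parameters are omitted, and all values are made up for illustration.

```python
import math

def _standardize(values, eps=1e-5):
    """Shift to zero mean and scale to unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]

def group_norm(sample, num_groups):
    """Normalize one sample; sample[c] is the flat list of values in channel c."""
    per_group = len(sample) // num_groups
    n = len(sample[0])
    out = []
    for g in range(num_groups):
        channels = sample[g * per_group:(g + 1) * per_group]
        flat = _standardize([v for ch in channels for v in ch])
        out.extend(flat[i * n:(i + 1) * n] for i in range(per_group))
    return out

def batch_norm(batch):
    """Training-mode BN: one mean and variance per channel, taken over the batch."""
    n = len(batch[0][0])
    out = [[] for _ in batch]
    for c in range(len(batch[0])):
        flat = _standardize([v for sample in batch for v in sample[c]])
        for b in range(len(batch)):
            out[b].append(flat[b * n:(b + 1) * n])
    return out

sample = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # 4 channels, 2 positions
mate_a = [[0.0, 1.0]] * 4      # two different batch mates for the same sample
mate_b = [[10.0, 20.0]] * 4

gn = group_norm(sample, num_groups=2)
bn_a = batch_norm([sample, mate_a])[0]
bn_b = batch_norm([sample, mate_b])[0]
print("GN, channel 0:", gn[0])
print("BN, channel 0:", bn_a[0], "vs", bn_b[0])
```

The BN output for `sample` changes with its batch mate, which is why its statistics become noisy when the batch is small. The GN output depends only on the sample itself.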

Figure 8.

Detail-enhanced convolution (DEConv).

A reparameterization technique is employed to address the computational trade-off between multi-branch feature representation and inference efficiency. The transformation is expressed mathematically as

$$F_{out} = \mathrm{DEConv}(F_{in}) = \sum_{i=1}^{5} F_{in} * K_i = F_{in} * \left( \sum_{i=1}^{5} K_i \right) = F_{in} * K_{cvt}$$

where $K_1$, $K_2$, $K_3$, $K_4$, and $K_5$ correspond to the five independent convolutional kernels associated with SC, CDC, ADC, HDC, and VDC, respectively, $*$ denotes convolution, and $F_{in}$ and $F_{out}$ are the input and output of the module. These kernels enable differential feature learning during the training phase. For deployment, these kernels are mathematically fused into a single equivalent kernel $K_{cvt}$ through parameter fusion, ensuring that inference speed and computational resource usage remain comparable to standard convolution while preserving the enhanced representational capabilities of DEConv. This optimization allows DEConv to efficiently capture tactile path textures, which are crucial for tactile paving detection, without incurring additional computational overhead.
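The fusion step relies on the linearity of convolution: summing the outputs of several parallel convolutions gives the same result as one convolution with the summed kernel. The sketch below checks this numerically for five random 3 × 3 kernels on a single channel. It assumes that each difference convolution has already been expressed as an ordinary convolution with a rearranged kernel, which is a separate step not shown here, and the helper `conv2d` is written only for this illustration.

```python
import random

def conv2d(image, kernel):
    """Plain 2D cross-correlation, no padding, stride 1, single channel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]

rng = random.Random(0)
image = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(6)]
# Five kernels standing in for the SC, CDC, ADC, HDC, and VDC branches.
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(5)]

# Training-time view: five parallel branches whose outputs are added.
branch_outputs = [conv2d(image, k) for k in kernels]
multi_branch = [[sum(o[i][j] for o in branch_outputs) for j in range(4)]
                for i in range(4)]

# Deployment view: one convolution with the fused (summed) kernel.
fused_kernel = [[sum(k[a][b] for k in kernels) for b in range(3)]
                for a in range(3)]
single_branch = conv2d(image, fused_kernel)

max_diff = max(abs(multi_branch[i][j] - single_branch[i][j])
               for i in range(4) for j in range(4))
print("largest difference between the two outputs:", max_diff)
```

The two outputs agree up to floating-point rounding, so the fused kernel costs the same at inference time as a single standard convolution.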

To further enhance detection stability across varying object scales, scale adjustment layers are incorporated after each shared convolutional layer in the regression head. These layers dynamically adjust feature resolutions, ensuring balanced multi-scale feature extraction for improved robustness in tactile paving detection. The implementation details can be found in Algorithm A1.

3.4.2. C2f-Star

The C2f module serves as a critical component for feature extraction, incorporating multiple bottleneck structures to enhance network performance. This design not only boosts gradient flow efficiency but also alleviates common issues such as vanishing and exploding gradients while maintaining manageable computational overhead. Nevertheless, excessive use of bottleneck modules leads to redundant feature information. This redundancy increases computational costs and resource consumption while compromising detection accuracy [31]. Recent advancements in network architectures have introduced the Star Block, a module sharing functional similarities with traditional bottlenecks. This design maintains comparable structural simplicity while enhancing feature extraction capabilities and optimizing residual pathways to prevent gradient issues. After a depthwise convolution, the Star Block captures feature diversity through two separate pointwise convolutions and then multiplies the outputs of the two branches element-wise, so that the network can integrate information more accurately across feature scales [29]. Therefore, we replace the bottleneck module with the Star Block module, which is lighter and has a strong feature extraction capability, as shown in Figure 9.

The primary innovation of Star Block lies in cross-layer element-wise multiplication, which fuses multi-level features without increasing network width, thereby effectively mapping inputs to higher-dimensional non-linear spaces. The inclusion of depthwise convolution DWConv, which applies a single filter per input channel with no cross-channel mixing, preserves feature richness while enhancing feature interactions. This improves feature representation accuracy and effectiveness.
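The core of the Star Block, the element-wise product of two linear branches, can be shown on a two-element input. The sketch is deliberately reduced: the depthwise convolutions, the activation function, and the residual connection of the full block are left out, and the weight values are arbitrary.

```python
def linear(weights, x):
    """Matrix-vector product; each row of weights yields one output feature."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def star(w1, w2, x):
    """Star operation: element-wise product of two linear branches."""
    return [a * b for a, b in zip(linear(w1, x), linear(w2, x))]

# Arbitrary example weights for the two pointwise branches.
w1 = [[1.0, 2.0], [0.5, -1.0]]
w2 = [[3.0, 0.0], [1.0, 1.0]]

x = [1.0, 2.0]
y = star(w1, w2, x)
print(y)  # [15.0, -4.5]

# First output: (x1 + 2*x2) * (3*x1) = 3*x1**2 + 6*x1*x2.
# The product contains the pairwise terms x1*x1 and x1*x2, which is how
# the operation reaches a higher-dimensional feature space without
# widening the network.
print(star(w1, w2, [2.0, 4.0]))  # doubling x quadruples y: [60.0, -18.0]
```

The quadratic scaling in the last line confirms that each output is a weighted sum of pairwise products of the inputs rather than a linear combination.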

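The computational cost of the depthwise convolution is

$$\mathrm{FLOPs}_{\mathrm{DWConv}} = H \times W \times C_{in} \times K^2$$

Here, $H$ and $W$ represent the height and width of the input feature map, $C_{in}$ denotes the number of input channels, and $K$ corresponds to the kernel size. The FLOPs metric (floating-point operations) quantifies the computational cost as the number of floating-point operations (additions, multiplications, etc.) required to process an input; it should not be confused with FLOPS, which measures operations per second.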
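A short calculation shows how much cheaper a depthwise convolution is than a standard one of the same kernel size. The sketch counts one multiply-accumulate per kernel tap and assumes an output of the same spatial size as the input; the feature map size and channel counts are hypothetical and are not taken from the model.

```python
def dwconv_flops(h, w, c_in, k):
    """Depthwise convolution: one k x k filter per input channel."""
    return h * w * c_in * k * k

def conv_flops(h, w, c_in, c_out, k):
    """Standard convolution: every output channel sees every input channel."""
    return h * w * c_in * c_out * k * k

# Hypothetical layer: 80 x 80 feature map, 64 channels in and out, 3 x 3 kernel.
H, W, C_IN, C_OUT, K = 80, 80, 64, 64, 3

dw = dwconv_flops(H, W, C_IN, K)
std = conv_flops(H, W, C_IN, C_OUT, K)
print(dw, std, std // dw)  # 3686400 235929600 64
```

The ratio equals the number of output channels, because the depthwise layer performs no cross-channel mixing.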

By incorporating Star Block into the C2f module, the resulting C2f-Star variant is optimized for tactile pavement detection. This architecture enhances boundary and detail recognition by propagating low-level texture information through cross-layer connections. This design is particularly well suited to capturing subtle texture variations in Tenji images and improves detection accuracy for complex shapes.

In addition, the high computational efficiency of the C2f-Star module reduces redundant computations and ensures that the computational burden is kept low when processing large-scale data. This allows the model to operate efficiently in real-time applications without sacrificing detection accuracy. With the optimized network structure, C2f-Star better adapts to the complex texture and shape variations in the Tenji task, further improving the overall detection performance and robustness. For instance, compared to the standard C2f module, the reconstructed modules achieved parameter retentions of 91%, 46%, 43%, 70%, 79%, 84%, 75%, and 72% across eight C2f modules, leading to a significant improvement in computational efficiency.

3.4.3. CAA

The Contextual Anchor Attention (CAA) mechanism extracts contextual information through a hierarchical approach, first via global average pooling, then sequential 1D convolutions applied horizontally and vertically. This multi-step process strengthens pixel-level feature relationships while enhancing central region details.

Figure 10 illustrates the structure of the CAA attention module.

As illustrated in the schematic of the CAA module, the input feature maps first pass through a stage that aggregates local spatial characteristics into a comprehensive global–spatial feature representation. To achieve this, the input features undergo a 7 × 7 average pooling operation with a stride of 1 and a padding of 3, which smooths local features and suppresses noise interference. Because the stride is 1 and the padding is 3, the spatial size of the feature maps is unchanged. This process is mathematically represented as

$$X_{avg} = \mathrm{AvgPool2d}(X, 7, 1, 3)$$

where $X$ denotes the input feature maps, $X_{avg}$ represents the output feature maps after average pooling, “AvgPool2d” is the 2D average pooling function, 7 is the kernel size, 1 is the stride, and 3 is the padding size.

In the average pooling operation, each output value is the mean of a local region of the input feature maps, which effectively smooths the feature maps, reduces the interference of noise, and makes the extracted features more robust. This reduces the tendency of the model to overfit local noise and details, improves the generalization ability, and alleviates feature fluctuations, making the texture, color, and other features of the tactile path less susceptible to small-scale noise. At the same time, the feature maps after average pooling contain less redundant high-frequency information. The relationship between feature channels is then enhanced by a convolution to improve the information flow:

$$X_1 = \mathrm{Conv}_1(X_{avg})$$

$X_{avg}$ is the input from the previous average pooling layer, $\mathrm{Conv}_1$ represents a convolutional operation enhancing channel relationships, and $X_1$ is the output feature maps from this convolution.

Then, the feature maps are passed sequentially through a horizontal 1D convolution layer with kernel size $1 \times k_h$ and a vertical 1D convolution layer with kernel size $k_v \times 1$, each with the number of groups equal to the number of channels, in order to capture contextual information in different directions. These strip convolutions also capture the features of elongated objects more effectively, as shown below:

$$X_h = \mathrm{Conv}_h(X_1), \qquad X_v = \mathrm{Conv}_v(X_h)$$

$X_1$ is the input to the horizontal convolution, $\mathrm{Conv}_h$ denotes the horizontal 1D convolution with kernel size $1 \times k_h$, and $X_h$ is the output. Then, $X_h$ is the input to the vertical convolution, $\mathrm{Conv}_v$ denotes the vertical 1D convolution with kernel size $k_v \times 1$, and $X_v$ is the output.

Traditional convolutional operations are typically implemented through standard convolution layers, whereas depthwise separable convolution decomposes this process into two sequential stages: a spatial depthwise convolution (DWConv) and a channel-wise pointwise convolution. From a parameter efficiency perspective, a conventional convolution layer has a parameter count of $K \times K \times C_{in} \times C_{out}$, where $K$ represents the kernel dimension, and $C_{in}$ and $C_{out}$ denote the input and output channel counts. The spatial processing stage in DWConv has a computational complexity that scales as $O(K^2 \times C_{in} \times H \times W)$, where $H \times W$ specifies the feature map’s spatial dimensions. Unlike conventional depthwise separable convolutions, the dual-directional convolutional design of CAA is specifically optimized to capture elongated object features and cross-pixel relationships. The use of strip-shaped kernels provides a receptive field comparable to that of large-kernel convolutions with far fewer parameters. The horizontal convolution extracts row-wise contextual patterns, while the vertical convolution focuses on columnar dependencies, collectively modeling the structural characteristics of tactile paths.

Next, high-level contextual features are extracted from the feature maps using a pointwise convolution and the Sigmoid activation function to obtain the attention weights. By applying these adaptive weights to the input feature representations, the mechanism amplifies key features while diminishing less critical ones, which improves the model’s ability to focus on the key regions of interest:

$$X_2 = \mathrm{Conv}_2(X_v), \qquad A = \mathrm{Sigmoid}(X_2)$$

$X_v$ is the input from the vertical convolution, $\mathrm{Conv}_2$ represents a pointwise convolution operation used to generate high-level contextual features, $X_2$ is the output from this pointwise convolution, and $A$ denotes the attention weights obtained after applying the Sigmoid activation function to $X_2$.

Subsequent point-wise convolution followed by sigmoid activation generates channel-aware attention weights, adaptively amplifying critical regions while suppressing irrelevant features. This hierarchical fusion mechanism dynamically integrates multi-scale contextual information through three coordinated effects:

- (1)

Noise suppression: The initial pooling operation establishes noise-resistant base features.

- (2)

Shape-specific sensitivity: Directional convolutions enhance object structure detection.

- (3)

Adaptive feature weighting: The attention mechanism prioritizes discriminative texture patterns.

For tactile paving detection, the CAA module demonstrates improved attention allocation precision by leveraging context-aware feature selection.
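The sequence of operations in the CAA module (average pooling, pointwise convolution, horizontal and vertical strip convolutions, pointwise convolution, Sigmoid) can be traced end to end with a minimal sketch in plain Python. It operates on a small C × H × W nested list with random weights; the strip length of 5, the tensor size, the omission of normalization and activation layers inside the convolutions, and all function names are assumptions made for the illustration.

```python
import math
import random

def avg_pool(channel, k=7, pad=3):
    """Stride-1 k x k average pooling with zero padding (padded zeros count)."""
    h, w = len(channel), len(channel[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            total = 0.0
            for di in range(-pad, k - pad):
                for dj in range(-pad, k - pad):
                    if 0 <= i + di < h and 0 <= j + dj < w:
                        total += channel[i + di][j + dj]
            row.append(total / (k * k))
        out.append(row)
    return out

def pointwise(x, weight):
    """1 x 1 convolution; weight has shape C_out x C_in and mixes channels."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(wt * x[c][i][j] for c, wt in enumerate(row))
              for j in range(w)] for i in range(h)] for row in weight]

def strip_conv(channel, taps, horizontal):
    """Depthwise 1D convolution along rows or columns, zero padded."""
    h, w = len(channel), len(channel[0])
    r = len(taps) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for t, tap in enumerate(taps):
                ii, jj = (i, j + t - r) if horizontal else (i + t - r, j)
                if 0 <= ii < h and 0 <= jj < w:
                    out[i][j] += tap * channel[ii][jj]
    return out

def caa_attention(x, w_in, taps_h, taps_v, w_out):
    """Return attention weights A with the same shape as x."""
    pooled = [avg_pool(ch) for ch in x]
    mixed = pointwise(pooled, w_in)
    horiz = [strip_conv(ch, taps_h[c], True) for c, ch in enumerate(mixed)]
    vert = [strip_conv(ch, taps_v[c], False) for c, ch in enumerate(horiz)]
    logits = pointwise(vert, w_out)
    return [[[1.0 / (1.0 + math.exp(-v)) for v in row] for row in ch]
            for ch in logits]

rng = random.Random(0)
C, H, W, STRIP = 2, 8, 8, 5  # hypothetical sizes
rand = lambda: rng.uniform(-1, 1)
x = [[[rand() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w_in = [[rand() for _ in range(C)] for _ in range(C)]
w_out = [[rand() for _ in range(C)] for _ in range(C)]
taps_h = [[rand() for _ in range(STRIP)] for _ in range(C)]
taps_v = [[rand() for _ in range(STRIP)] for _ in range(C)]

attention = caa_attention(x, w_in, taps_h, taps_v, w_out)
# Apply the weights to the input features element by element.
weighted = [[[a * v for a, v in zip(ar, xr)] for ar, xr in zip(ac, xc)]
            for ac, xc in zip(attention, x)]
print(len(attention), len(attention[0]), len(attention[0][0]))
```

Every attention weight lies strictly between 0 and 1 and the output keeps the shape of the input, so the module can rescale features in place without changing the layout of the surrounding layers.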