Intelligent Queue Scheduling Method for SPMA-Based UAV Networks

Abstract

1. Introduction

- We propose a method named ACBS-RL to address the unfair transmission problem of SPMA in UAV networks. We leverage CBS to determine the transmission order of the queues by credit value rather than by priority.

- We employ Q-learning to dynamically adjust the CBS parameters in ACBS-RL to achieve better performance.

- We conduct extensive simulations to evaluate the performance of the proposed ACBS-RL, and the results confirm its effectiveness.

2. Related Work

3. Motivation and Problem Formulation

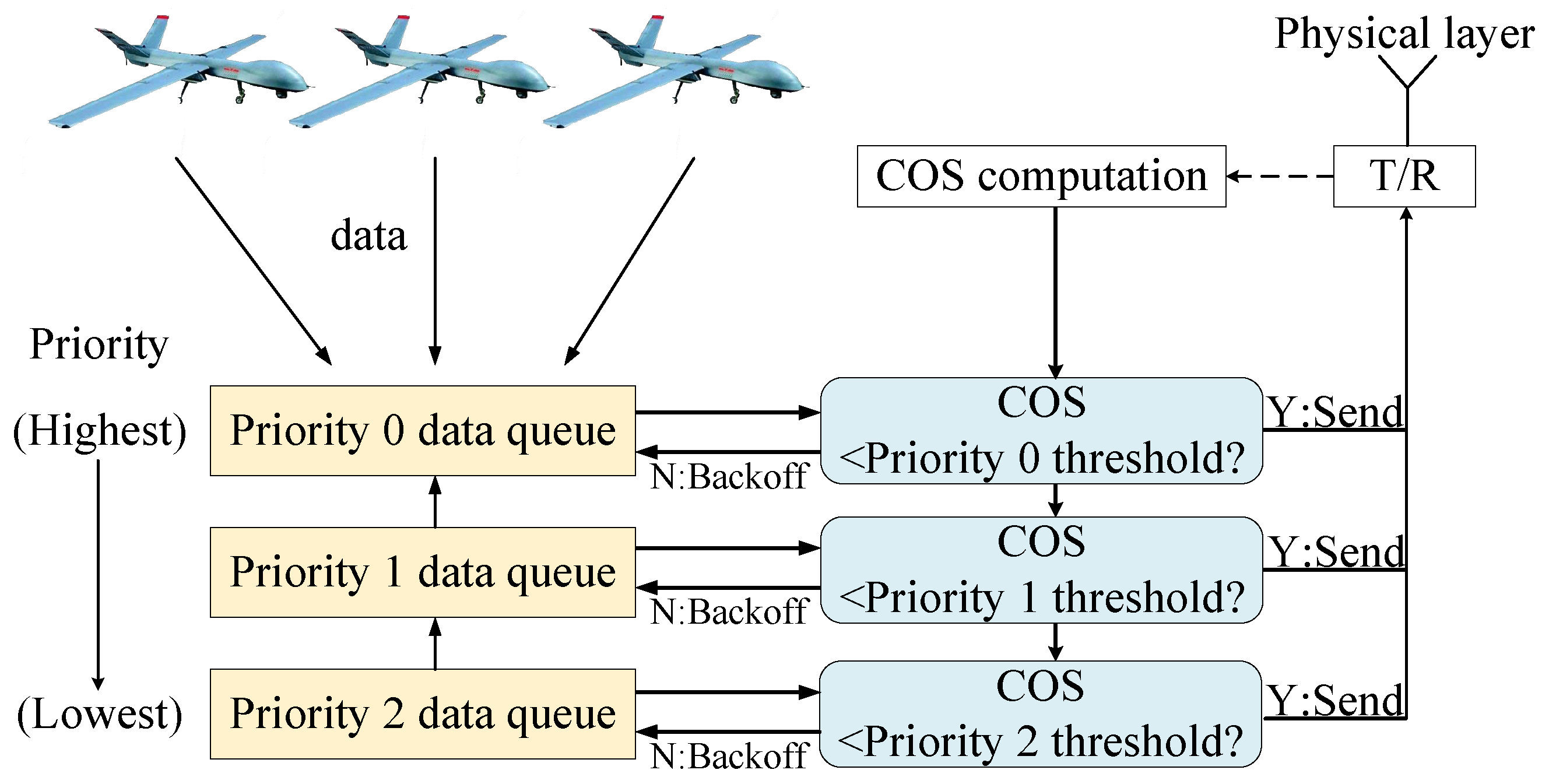

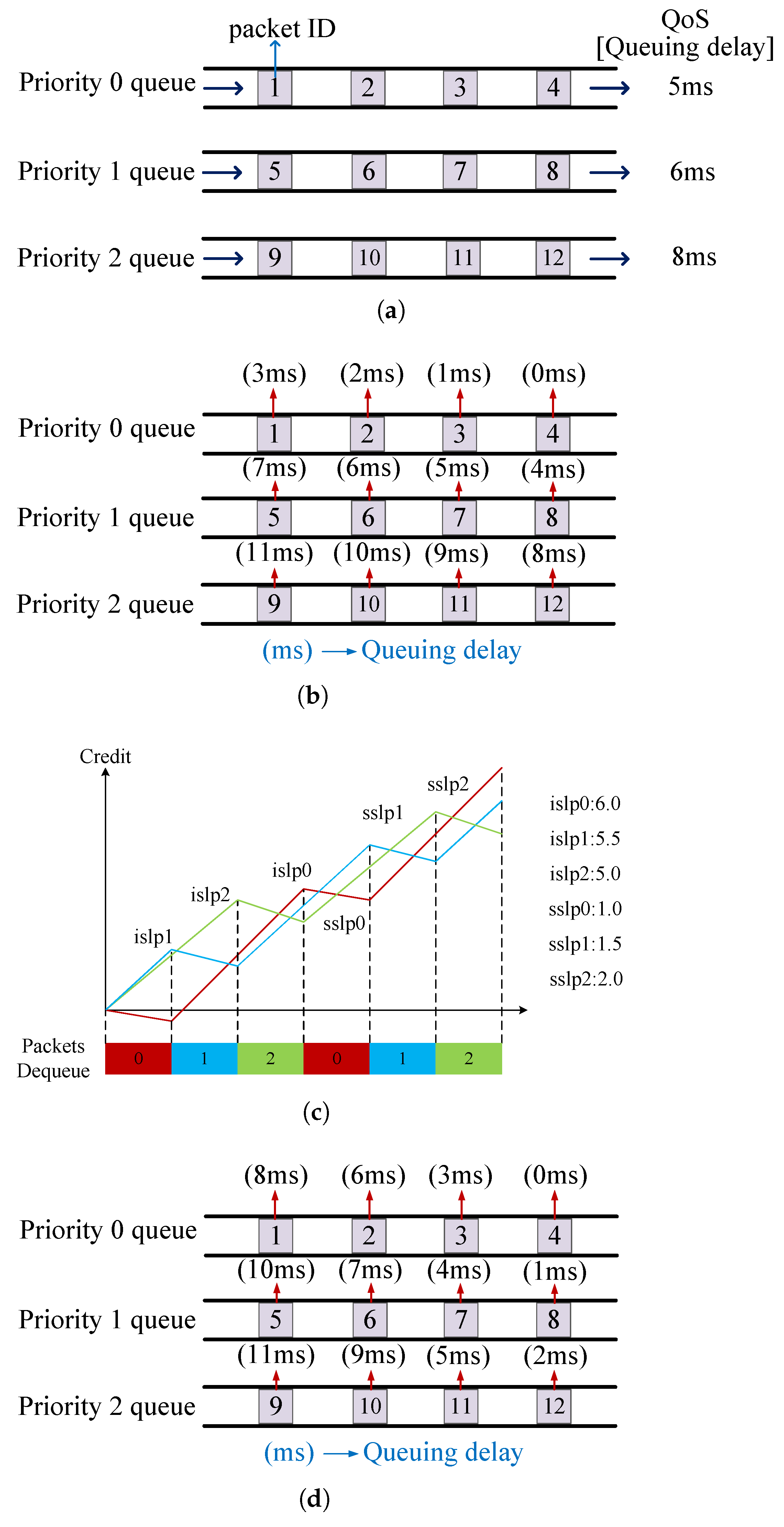

3.1. Motivation Example

- When transmission begins, all queues hold the same credit, so the packet from the highest-priority queue, Queue 0, is transmitted first.

- During this transmission, the credit of Queue 0 decreases to −1 at its sending rate, while the credits of Queues 1 and 2 increase to 5.5 and 5, respectively, at their idle rates.

- After the transmission finishes, Queue 1 holds the highest credit, so the Priority 1 packet starts to dequeue.

- During this time, the credit of Queue 1 decreases to 4, while the credits of Queues 0 and 2 become 5 and 10, respectively.

- Queue 2 then becomes the next transmission queue, and all queues follow this mechanism until every packet has been sent.
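The numbers in this example can be reproduced with a minimal sketch. It assumes, for illustration only, that every queue starts with zero credit and that the per-transmission credit changes are the ones implied by the values above (Queue 0 loses 1 while sending and gains 6 while idle, Queue 1 loses 1.5 and gains 5.5, Queue 2 gains 5). The credit that Queue 2 loses when it sends is a hypothetical value, and all function and variable names are illustrative rather than taken from the paper.

```python
from typing import List, Tuple


def pick_queue(credits: List[float]) -> int:
    """Return the queue with the highest credit.

    Ties are broken in favour of the lowest index, i.e. the highest priority.
    """
    return max(range(len(credits)), key=lambda i: (credits[i], -i))


def transmit_once(
    credits: List[float],
    idle_gain: List[float],
    send_cost: List[float],
) -> Tuple[int, List[float]]:
    """Serve one packet and return (served queue, credits after the transmission)."""
    served = pick_queue(credits)
    updated = [
        c - send_cost[i] if i == served else c + idle_gain[i]
        for i, c in enumerate(credits)
    ]
    return served, updated


# Per-transmission credit changes inferred from the example (assumptions).
IDLE_GAIN = [6.0, 5.5, 5.0]
SEND_COST = [1.0, 1.5, 2.0]  # the value for Queue 2 is hypothetical

credits = [0.0, 0.0, 0.0]  # assumed equal starting credit
order = []
history = []
for _ in range(3):
    served, credits = transmit_once(credits, IDLE_GAIN, SEND_COST)
    order.append(served)
    history.append(credits)

print(order)       # [0, 1, 2]
print(history[0])  # [-1.0, 5.5, 5.0]
print(history[1])  # [5.0, 4.0, 10.0]
```

Because the selection depends on credit rather than on a fixed priority order, the lower-priority queues are served as soon as their accumulated credit exceeds that of the others, which is the behavior the example is meant to show.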

3.2. Problem Formulation

4. Algorithm Design

Algorithm 1. ACBS-RL algorithm.

Algorithm 2. Procedure Initialization.

Algorithm 3. CBS function.

Algorithm 4. Q-learning function.

4.1. Procedure Initialization

4.2. CBS Function

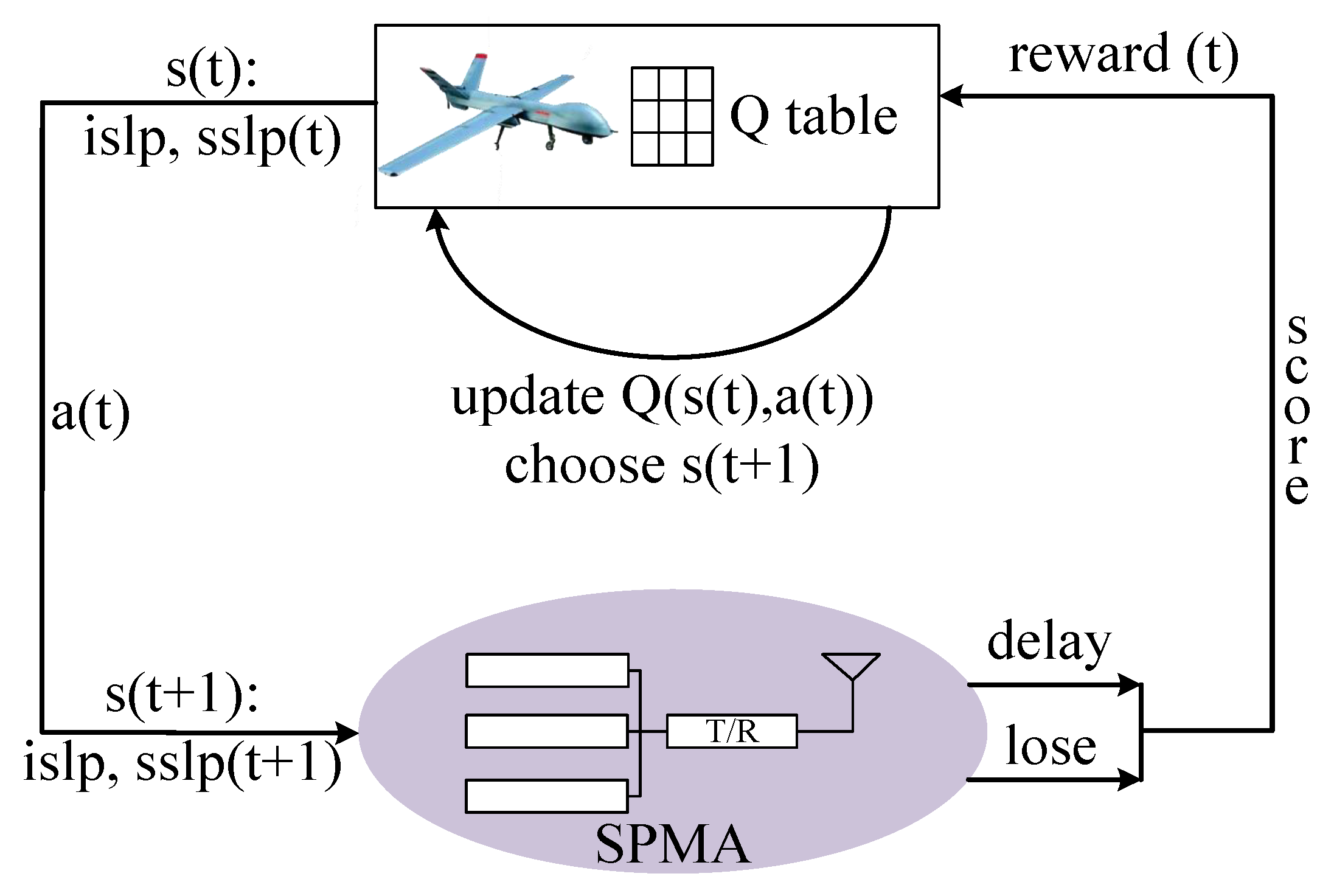

4.3. Q-Learning Function

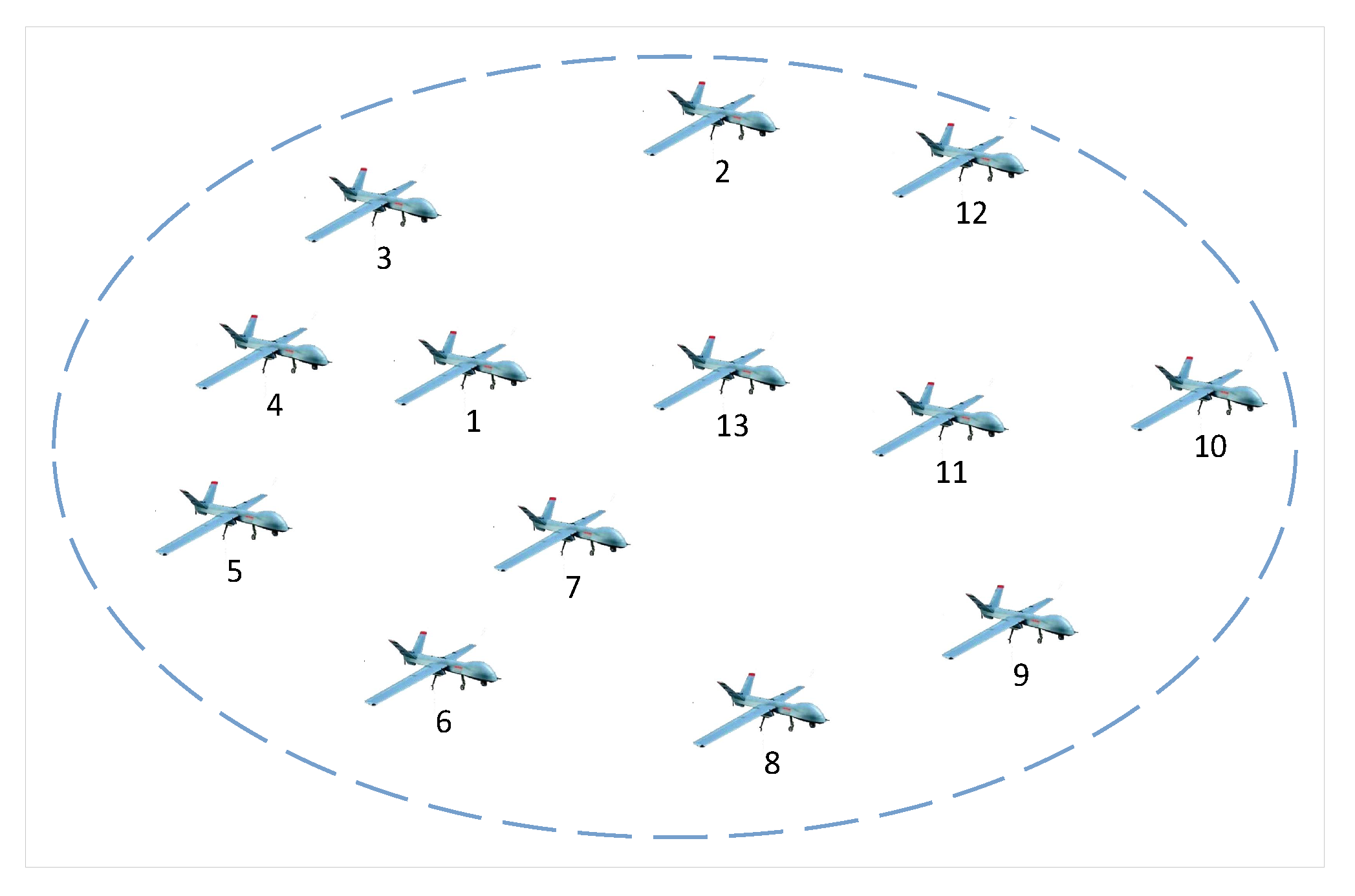
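A tabular Q-learning update of the standard form, using a learning rate and a discount factor as listed in the notation, can be sketched as follows. The state labels, the three-action set, and the function names are hypothetical and are not taken from Algorithm 4; the sketch shows only the generic update rule, not the authors' state or reward design.

```python
import random
from collections import defaultdict

# Hypothetical action set: lower, keep, or raise a CBS parameter.
ACTIONS = ("decrease", "keep", "increase")


def choose_action(q_table, state, epsilon, rng):
    """Epsilon-greedy selection over the hypothetical action set."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q_table[(state, a)])


def q_update(q_table, state, action, reward, next_state, alpha, gamma):
    """Apply one standard Q-learning update and return the new Q-value.

    alpha is the learning rate and gamma is the discount factor.
    """
    best_next = max(q_table[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
first = q_update(q_table, "s0", "increase", 1.0, "s1", alpha=0.5, gamma=0.9)
print(first)  # 0.5
```

In a scheduler of this kind, the reward passed to `q_update` would be computed from the observed delay and packet loss after the chosen action has been applied for one adjustment interval.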

5. Simulation

5.1. Simulation Setting

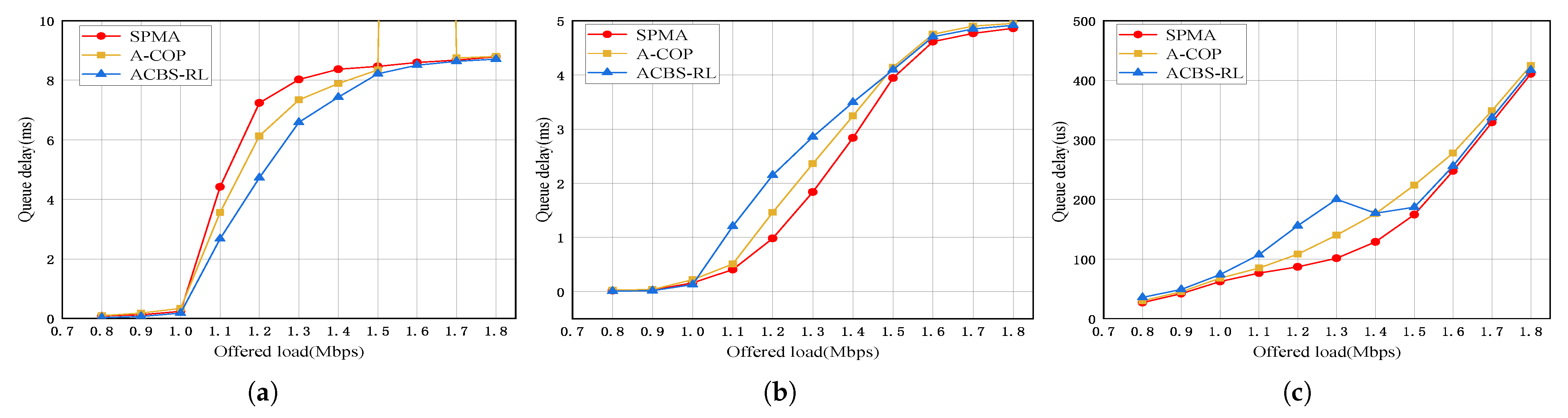

- Baseline: The standard SPMA algorithm without any modification.

- A-COP: Unlike standard SPMA, this method adapts the backoff time based on the channel occupancy statistics (COS) and the packet priority.

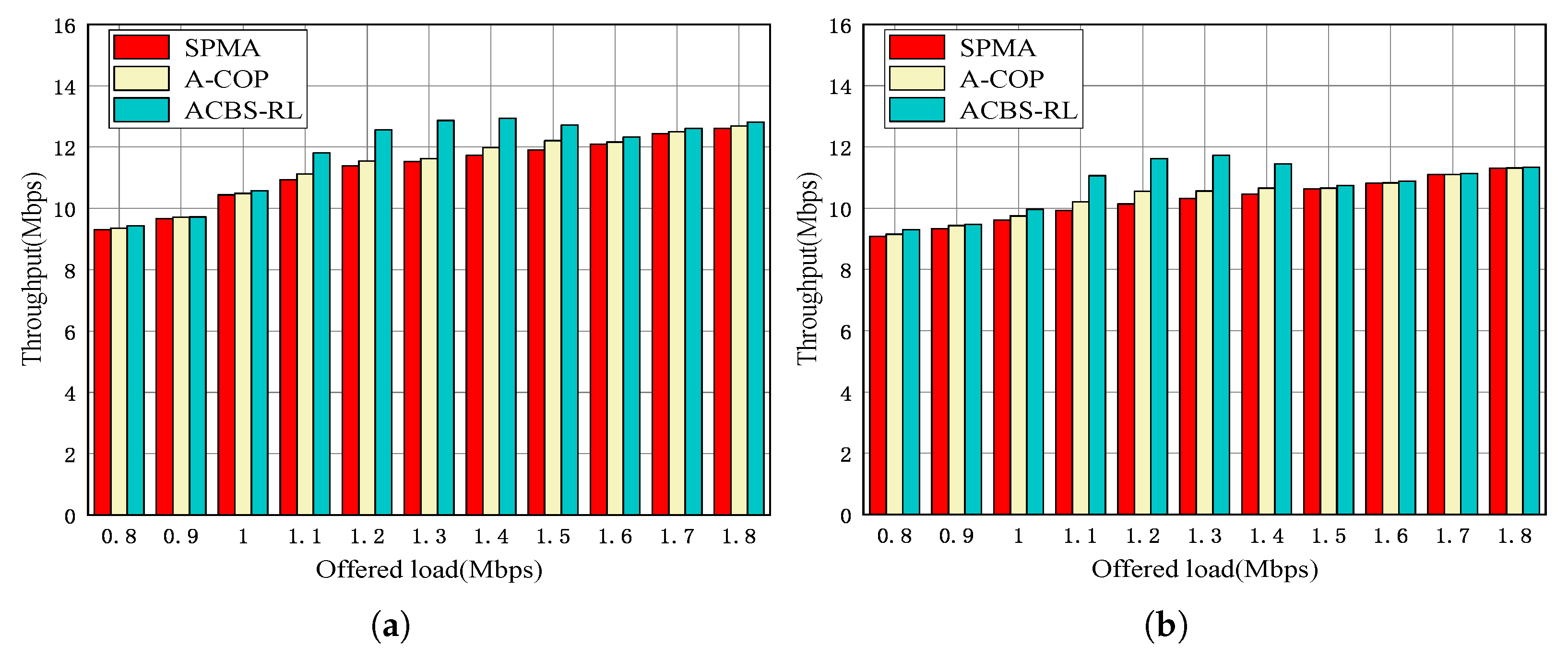

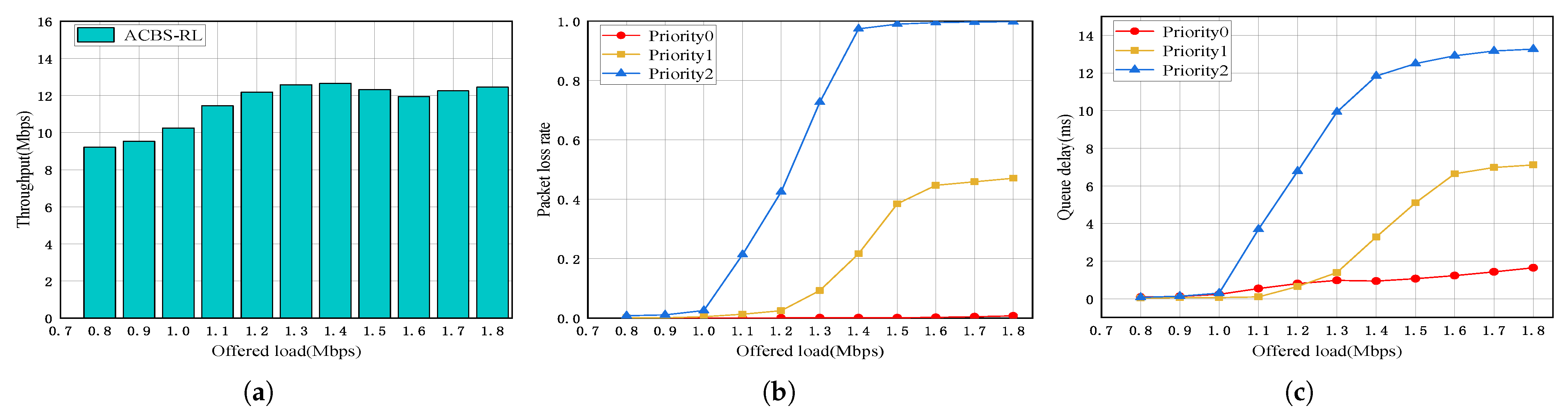

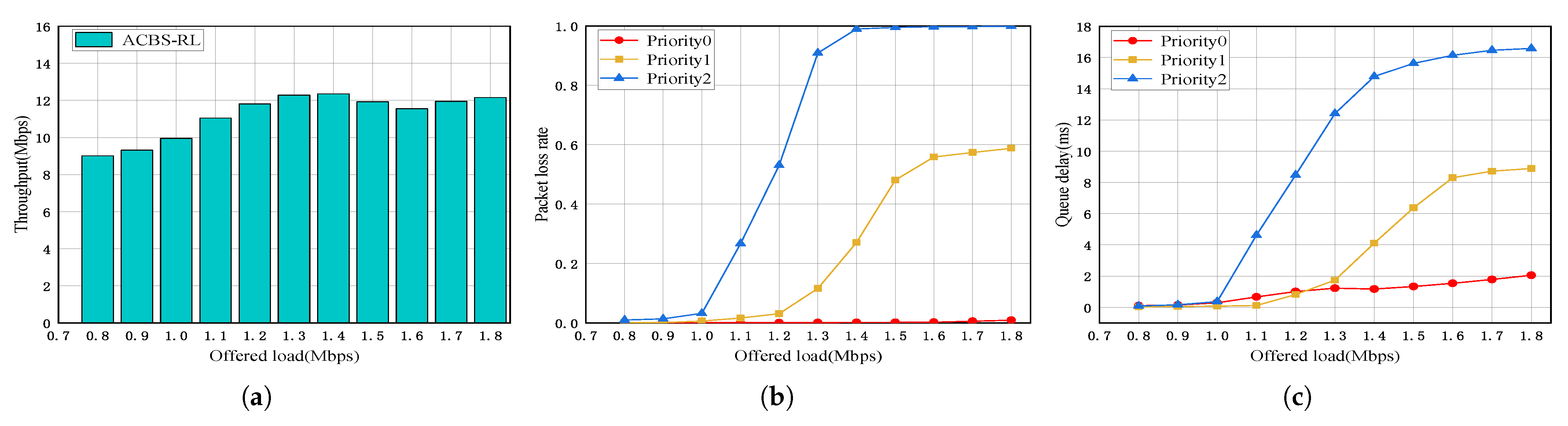

- Network throughput: The total amount of data that passes through the UAV network. This metric measures the data-processing capacity of the system.

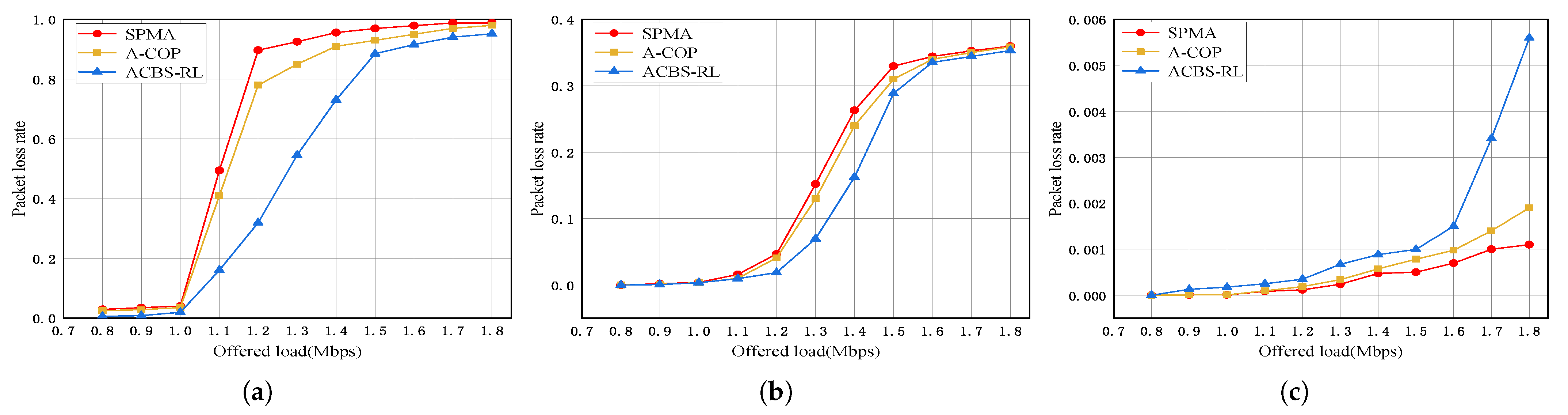

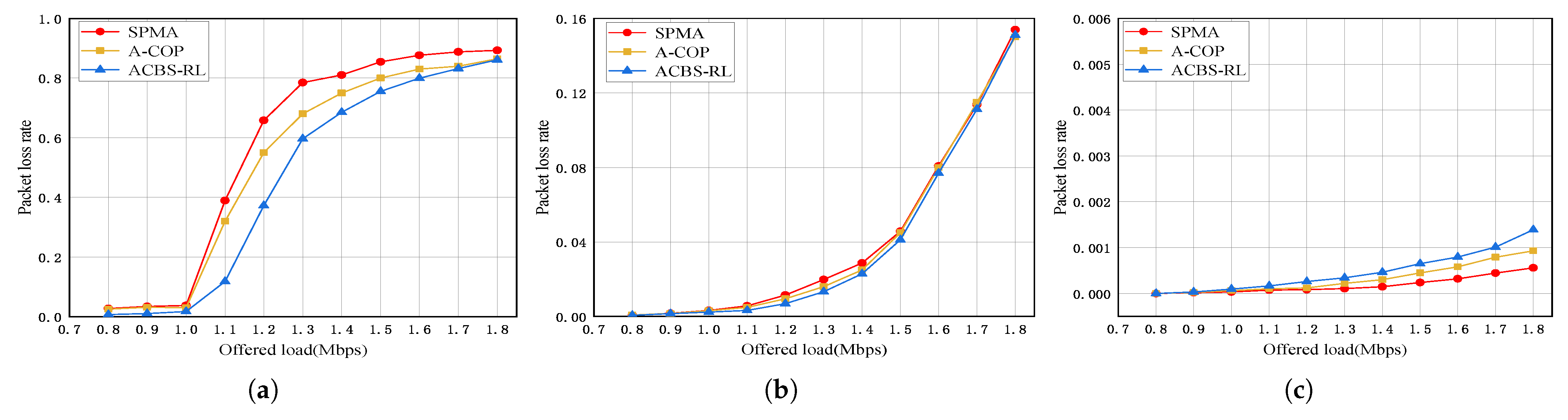

- Packet loss rate: The ratio of lost packets to sent packets in the system. It reflects the transmission quality, and we use it to evaluate how reliably packets are received.

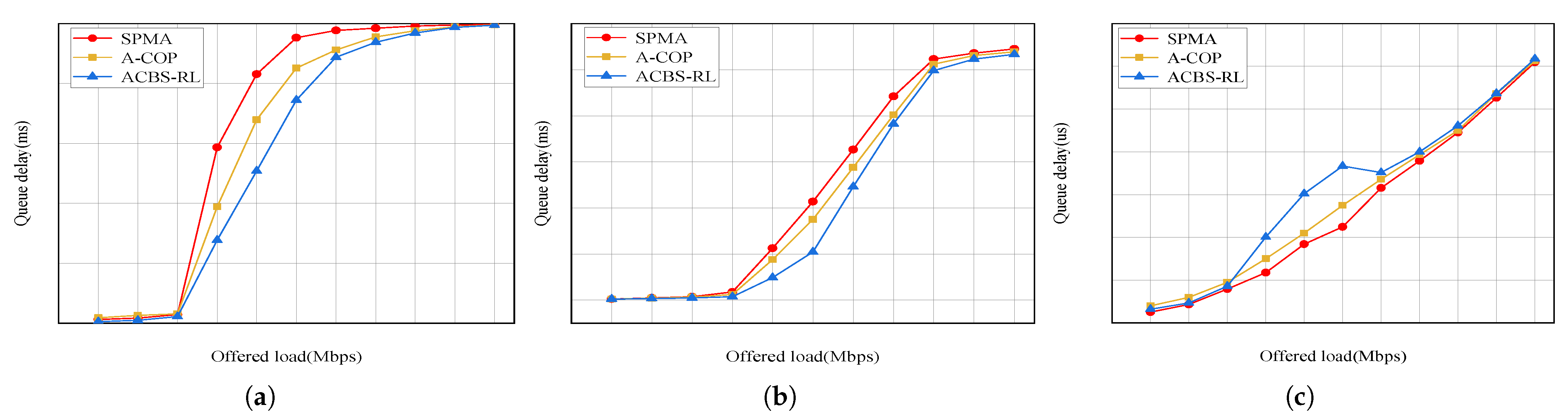

- Queuing delay: The time that a packet waits before it is dequeued. This is an essential metric for quantifying the algorithm's performance in a given environment.

- Network scalability: We test the network performance under different numbers of network nodes to evaluate its scalability in dynamic UAV networks.
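The per-queue metrics listed above can be computed from a packet log along the following lines. This is a minimal sketch under stated assumptions: the `PacketRecord` type and its field names are hypothetical, a packet with no dequeue time is treated as lost, every dequeued packet is treated as successfully delivered, and the number of sent packets is taken as lost plus delivered. The default packet size of 7200 bits matches the simulation parameters.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PacketRecord:
    """One logged packet (hypothetical log format)."""
    priority: int
    enqueue_time: float            # seconds
    dequeue_time: Optional[float]  # seconds, or None if the packet was lost
    bits: int = 7200


def queue_metrics(
    records: List[PacketRecord], priority: int, duration_s: float
) -> Dict[str, float]:
    """Return loss rate, mean queuing delay, and throughput for one priority."""
    mine = [r for r in records if r.priority == priority]
    delivered = [r for r in mine if r.dequeue_time is not None]
    lost = len(mine) - len(delivered)

    # Packet loss rate: lost packets over all packets offered to this queue.
    loss_rate = lost / len(mine) if mine else 0.0

    # Queuing delay: dequeue time minus enqueue time, averaged over delivered packets.
    mean_delay = (
        sum(r.dequeue_time - r.enqueue_time for r in delivered) / len(delivered)
        if delivered
        else 0.0
    )

    # Throughput: delivered bits per second over the observation window.
    throughput_bps = sum(r.bits for r in delivered) / duration_s

    return {
        "loss_rate": loss_rate,
        "mean_delay_s": mean_delay,
        "throughput_bps": throughput_bps,
    }


log = [
    PacketRecord(priority=0, enqueue_time=0.000, dequeue_time=0.002),
    PacketRecord(priority=0, enqueue_time=0.001, dequeue_time=0.005),
    PacketRecord(priority=0, enqueue_time=0.002, dequeue_time=None),
]
metrics = queue_metrics(log, priority=0, duration_s=1.0)
```

Computing the metrics per priority makes it possible to compare how each queue fares under the different scheduling methods, rather than only the aggregate.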

5.2. Results and Analysis

6. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Notation | Description |
|---|---|
| N | The number of UAV nodes |
|  | The increase rate of the credit value of a queue while it is idle |
|  | The decrease rate of the credit value of a queue while it sends packets |
|  | The value of updated by action a |
|  | The value of updated by action a |
|  | The head waiting delay requirement for Queue i |
|  | The actual head waiting delay for Queue i |
| L | The packet size for simulation |
|  | The simulation duration |
| COS | Channel occupancy statistics |
|  | The threshold at which each priority queue can send packets |
|  | The throughput of node i |
| C | The set of credit values of all priority queues |
|  | The set of credit values of all priority queues, in descending order |
| T | The transmission time of a packet |
|  | The maximum credit value |
|  | The ID of the queue with the maximum credit value |
|  | The ID of the queue that is ready to transmit |
|  | The Q-value table of the Q-learning algorithm |
| a | The action that adjusts the CBS parameters |
| Q | The list of all priority queues |
|  | The reward for the parameter adjustment at time t |
|  | The learning rate |
|  | The discount factor |
| R | The total reward in Q-learning |
|  | The queuing delay of Queue i |
|  | The packet loss rate of Queue i |
|  | The total number of priority i packets transmitted |
|  | The time when the packet is dequeued |
|  | The time when the packet is enqueued |
|  | The number of lost packets for priority i |
|  | The number of successfully transmitted packets for priority i |
|  | The score for the queuing delay of packets |
|  | The score for the packet loss rate |
|  | The maximum waiting time of packets |
|  | The importance factor for delay in parameter adjustment |
|  | The importance factor for packet loss in parameter adjustment |
|  | The weight of |

| Parameter Name | Parameter Value |
|---|---|
| Simulation time | 100 s |
| Channel occupancy calculation window size | 1 ms |
| Number of packet priorities | 3 |
| Packet size | 7200 bits |
| Transmission rate | 40 Mbps |
| Number of orthogonal channels | 5 |
| Number of sub-pulses per packet | 27 |
| Sub-pulse length | 100 bits |
| Backoff window length | 1000 µs |
| Duty cycle | 15% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, K.; Xu, C.; Qiao, G.; Zhong, J.; Zhang, X. Intelligent Queue Scheduling Method for SPMA-Based UAV Networks. Drones 2025, 9, 552. https://doi.org/10.3390/drones9080552