BiDGCNLLM: A Graph–Language Model for Drone State Forecasting and Separation in Urban Air Mobility Using Digital Twin-Augmented Remote ID Data

Abstract

1. Introduction

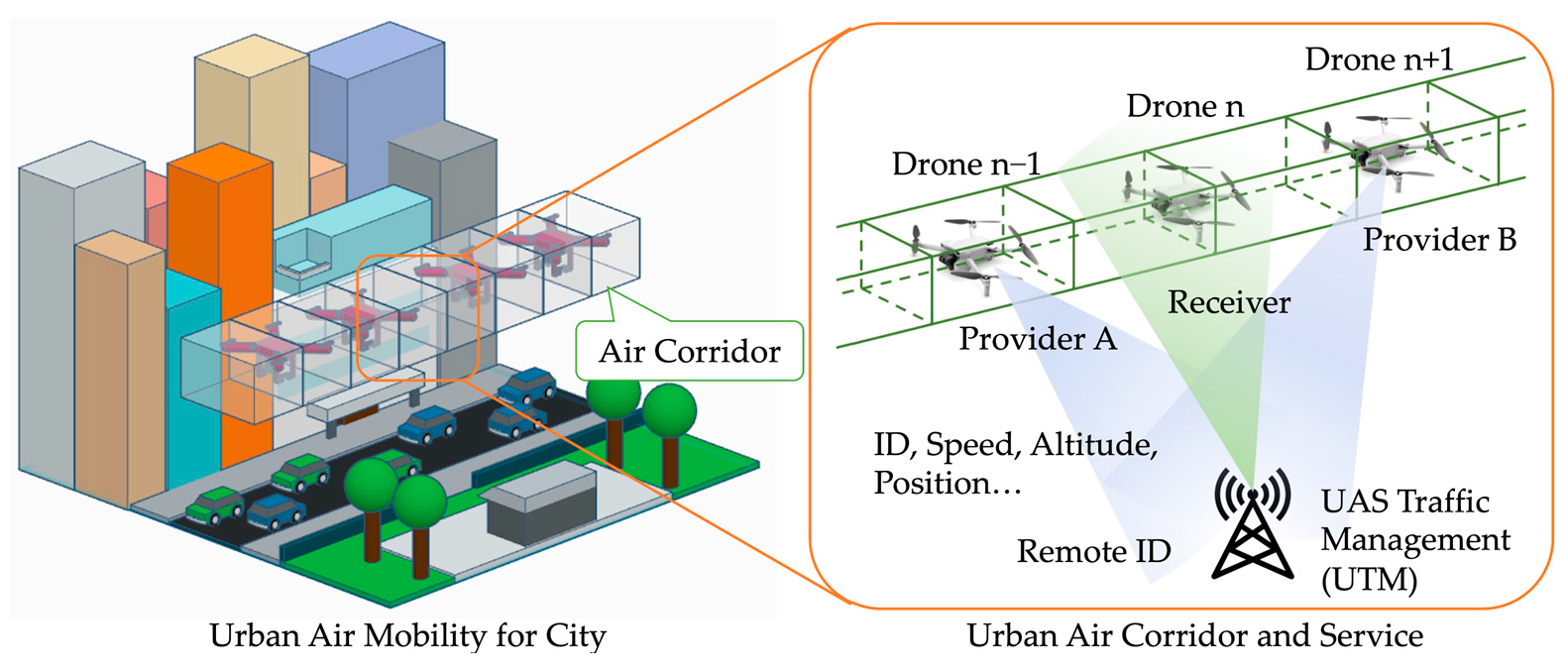

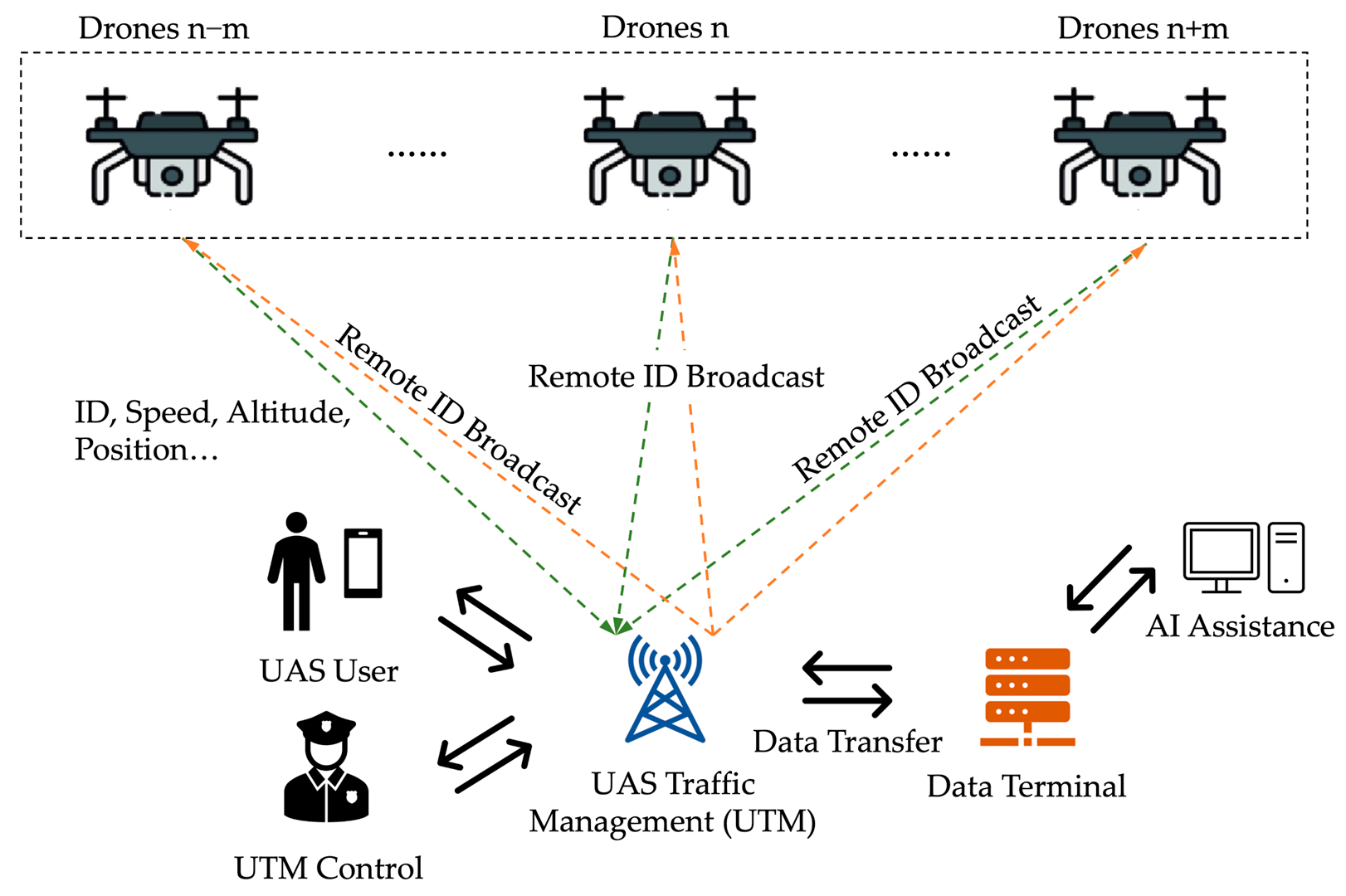

1.1. Background

1.2. Related Work

1.3. Contributions

- This study is the first to explore the use of an LLM for predicting UAV speed within air corridors, with the aim of improving the applicability of autonomous UAS operations.

- Remote ID broadcasts are augmented using AirSUMO to generate high-fidelity UAV telemetry data, enabling reliable speed prediction, conflict risk assessment, and DT-based evaluation under UAM scenarios.

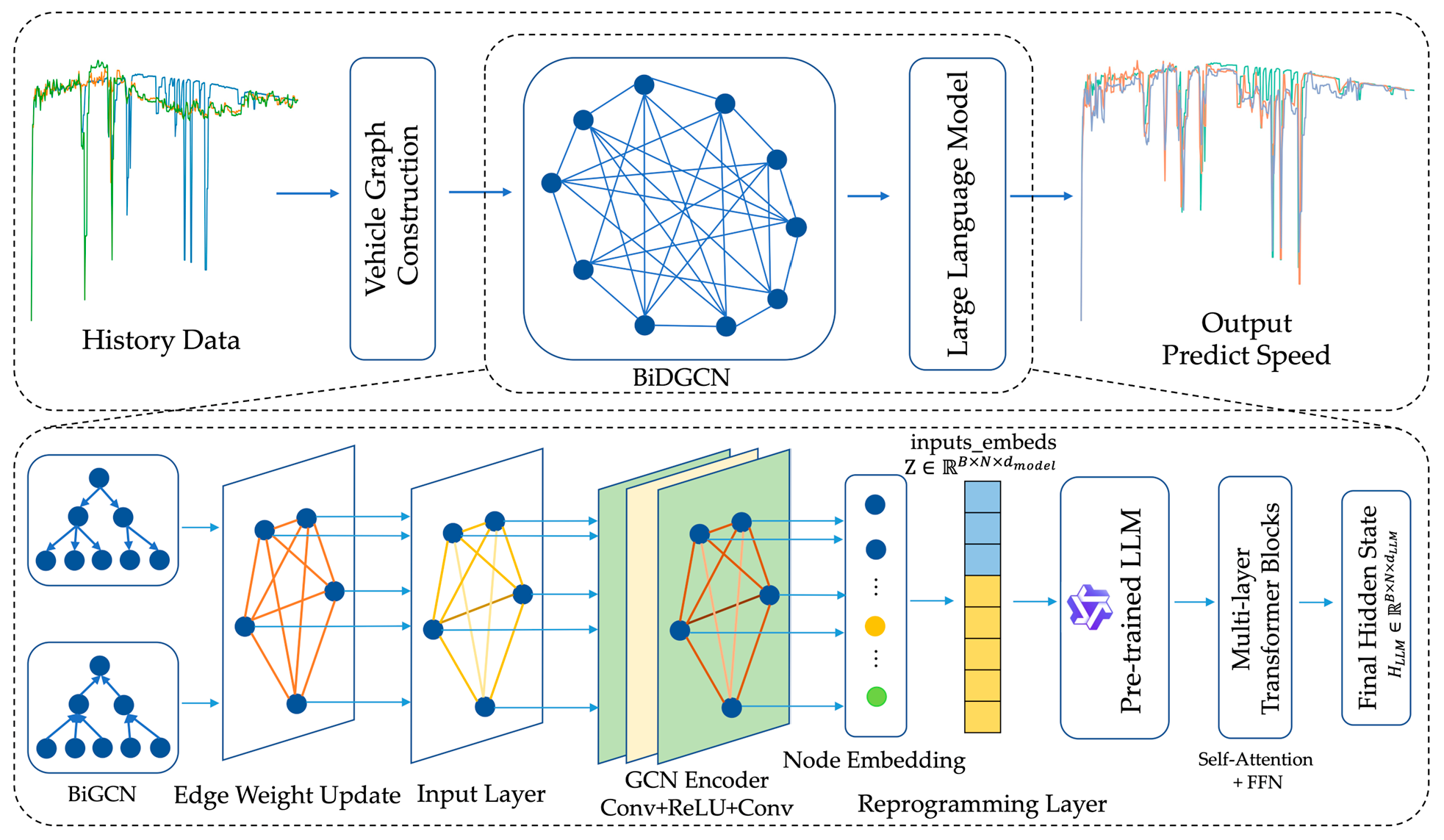

- BiDGCNLLM is designed as a novel model that combines a BiGCN with Dynamic Edge Weight and uses the Qwen2.5-0.5B LLM as its backbone. The architecture leverages the LLM's knowledge and adaptability to handle time-series prediction tasks, achieving high performance while maintaining computational efficiency.

- The proposed model is evaluated through short-term prediction tasks, ablation studies, and comparisons with state-of-the-art time series forecasting baselines. Results show that BiDGCNLLM outperforms most existing methods in prediction accuracy.

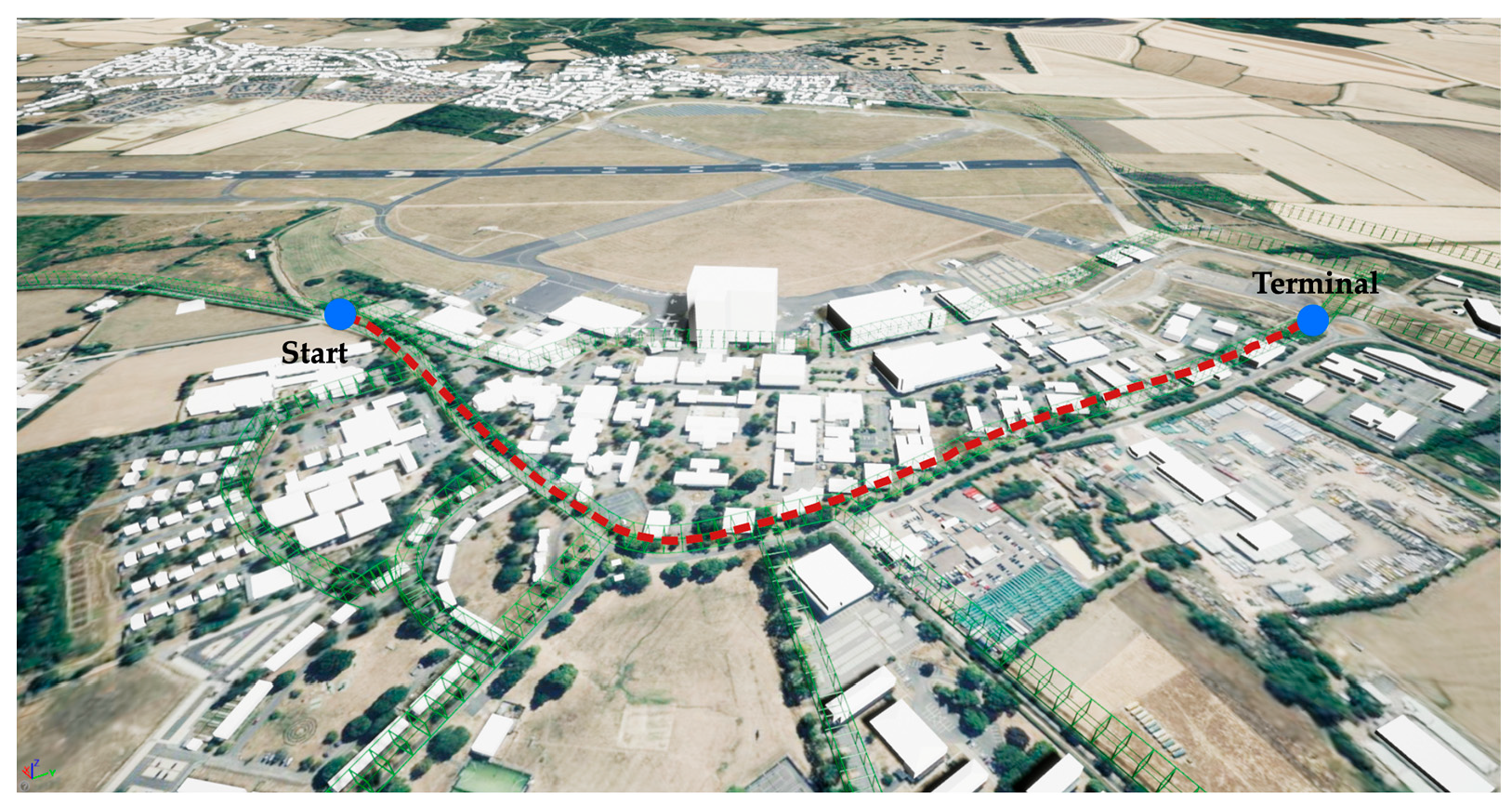

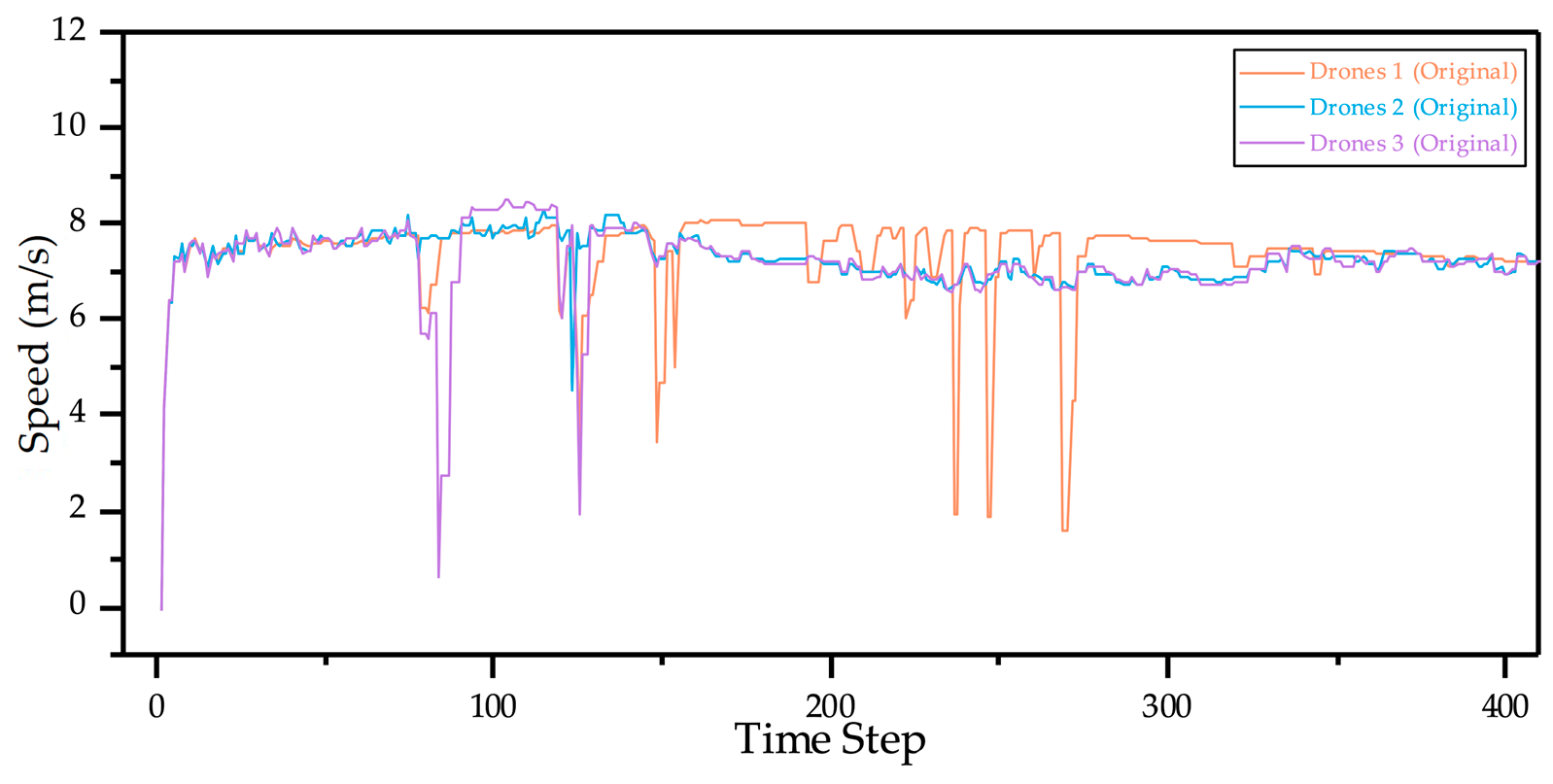

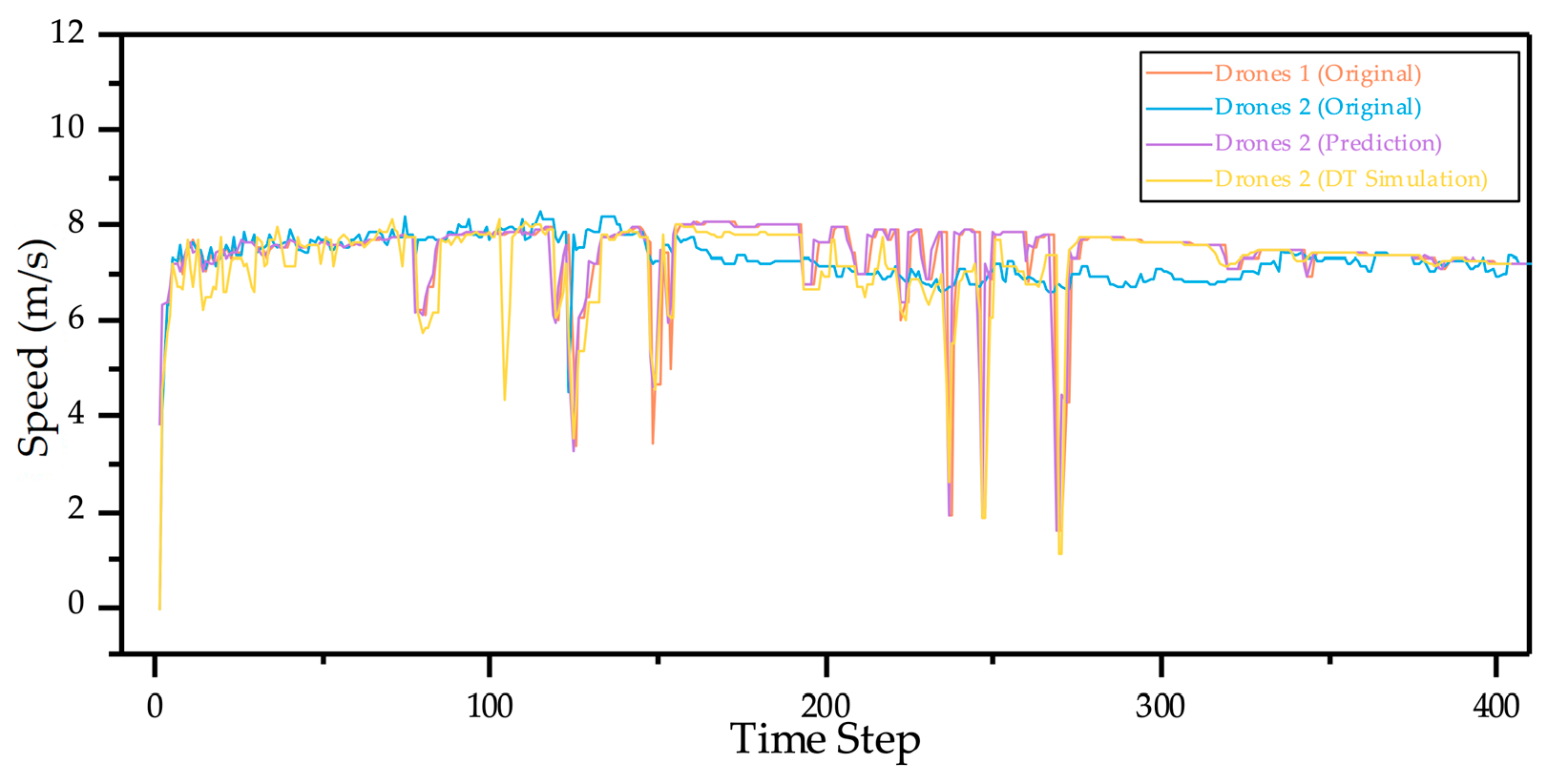

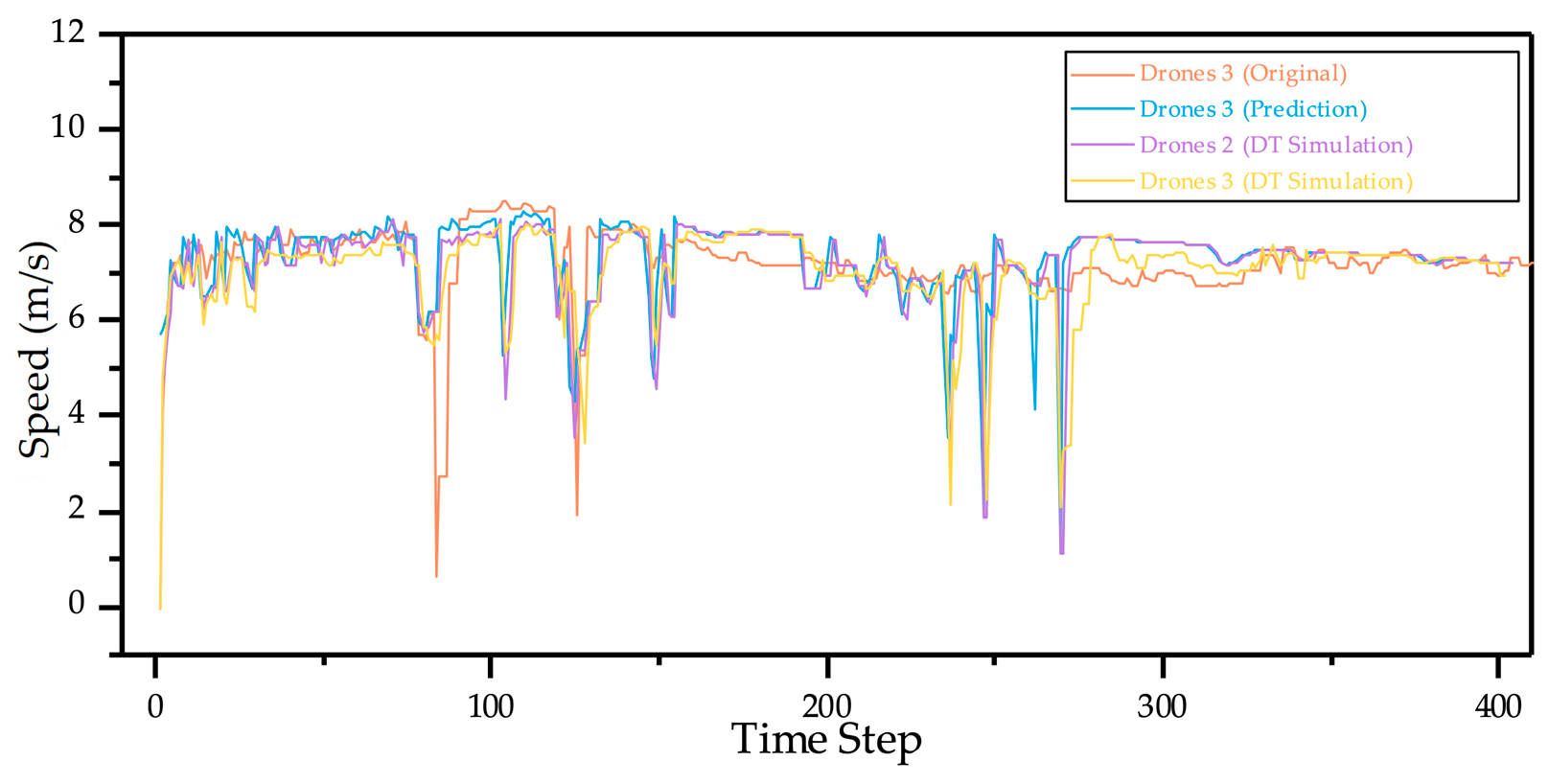

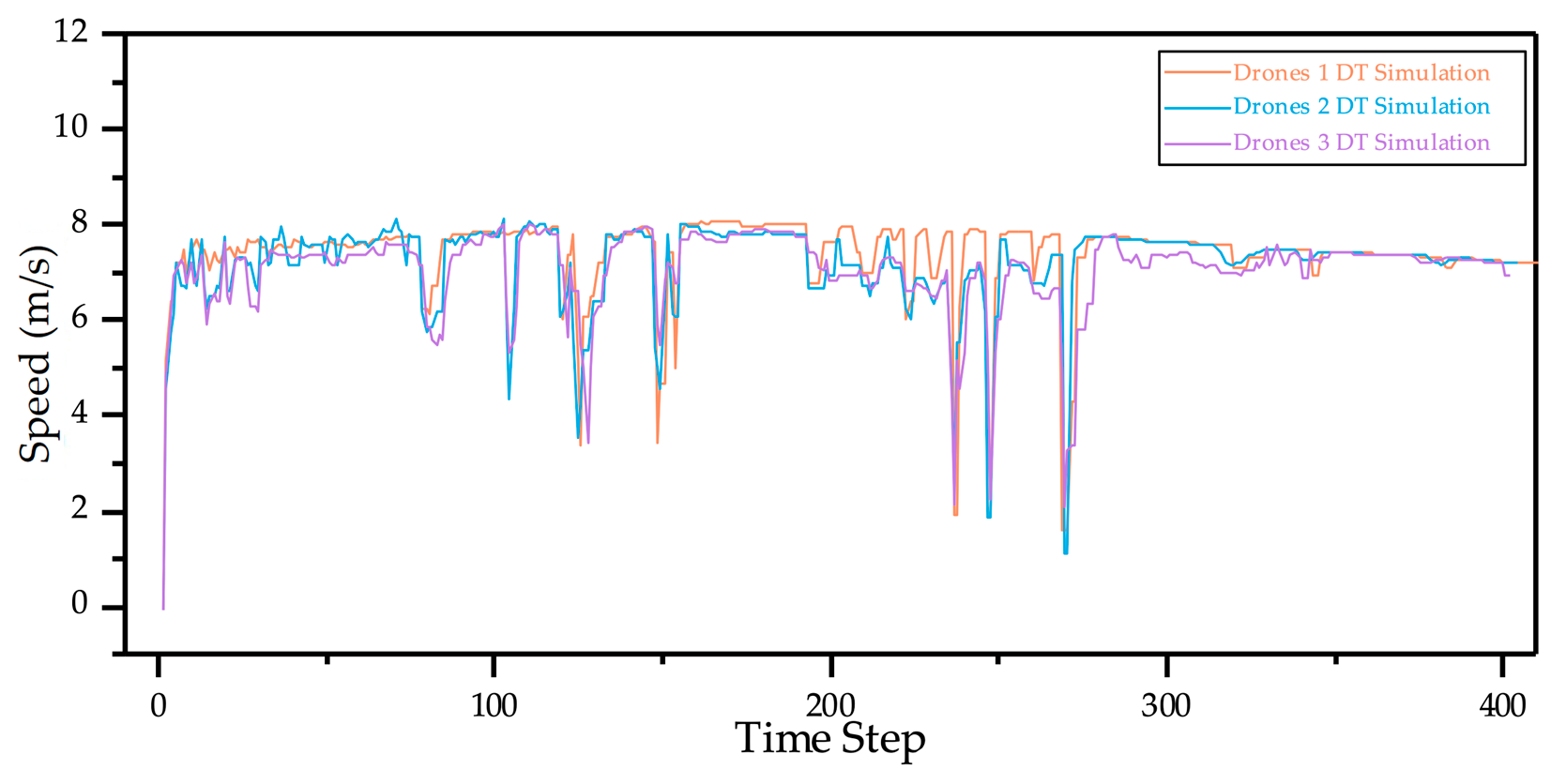

- The model is deployed in AirSUMO and tested in a DT of the Cranfield campus. Speed curves of three UAVs before and after LLM optimisation demonstrate the effectiveness of BiDGCNLLM in improving stability and predictability.

1.4. Organisation

2. Methodology

2.1. Overview of Methodology

2.2. BiDGCNLLM

2.2.1. Algorithm Overview

2.2.2. Data Preprocessing and Dynamic Graph Construction

2.2.3. GCN Encoder

- Layer 1:

- Layer 2:
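The layer equations are not reproduced in this copy. As a hedged illustration only, the sketch below implements a two-layer encoder with the standard propagation rule of Kipf and Welling, ReLU(Â H W) with Â = D^(-1/2) (A + I) D^(-1/2). The function names, the three-drone chain graph, the feature sizes, and the random weights are all assumptions made for the example, not the authors' configuration.

```python
import numpy as np

def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a dense, non-negative adjacency matrix."""
    a_hat = adj + np.eye(adj.shape[0])          # add self-loops
    deg = a_hat.sum(axis=1)                     # degree is >= 1 because of self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_encoder(x, adj, w1, w2):
    """Two-layer GCN: ReLU(A_norm X W1), then A_norm H1 W2."""
    a_norm = normalize_adjacency(adj)
    h1 = np.maximum(a_norm @ x @ w1, 0.0)       # layer 1 with ReLU
    return a_norm @ h1 @ w2                     # layer 2 (linear output)

# Illustrative inputs (hypothetical): 3 drones connected in a chain.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
x = rng.normal(size=(3, 4))                     # 4 features per drone
w1 = rng.normal(size=(4, 8))
w2 = rng.normal(size=(8, 2))
embeddings = gcn_encoder(x, adj, w1, w2)        # shape (3, 2)
```

In a trained model the weight matrices would be learned; the random values here only demonstrate the shapes and the flow of information between neighbouring nodes.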

2.2.4. Reprogramming Layer

2.2.5. Large Language Model

- Query matrix Q, key matrix K, and value matrix V

- The multi-head mechanism allows the model to capture temporal dependencies in parallel across different subspaces.
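To make the query, key, and value roles concrete, the following is a minimal NumPy sketch of generic multi-head scaled dot-product attention. It is not the Qwen2.5 implementation: causal masking, positional encoding, and other backbone details are omitted, and the function names, dimensions, and random projection matrices are assumptions for illustration.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, wq, wk, wv, wo, num_heads):
    """x: (T, d_model); wq, wk, wv, wo: (d_model, d_model)."""
    t, d_model = x.shape
    d_head = d_model // num_heads

    def split(m):
        # (T, d_model) -> (heads, T, d_head): each head sees its own subspace
        return m.reshape(t, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)    # (heads, T, T)
    weights = softmax(scores, axis=-1)                      # each row sums to 1
    heads = weights @ v                                     # (heads, T, d_head)
    concat = heads.transpose(1, 0, 2).reshape(t, d_model)   # merge heads
    return concat @ wo, weights

# Illustrative run (hypothetical sizes): 6 time steps, d_model = 8, 2 heads.
rng = np.random.default_rng(0)
x = rng.normal(size=(6, 8))
wq, wk, wv, wo = (rng.normal(size=(8, 8)) for _ in range(4))
out, attn = multi_head_attention(x, wq, wk, wv, wo, num_heads=2)
```

Each head computes its own attention weights over the time steps, which is what lets different heads attend to different temporal patterns at once.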

2.2.6. Output Projection

3. Experimental Results

3.1. Data and Preprocessing

3.2. Experimental Setup and Model Training

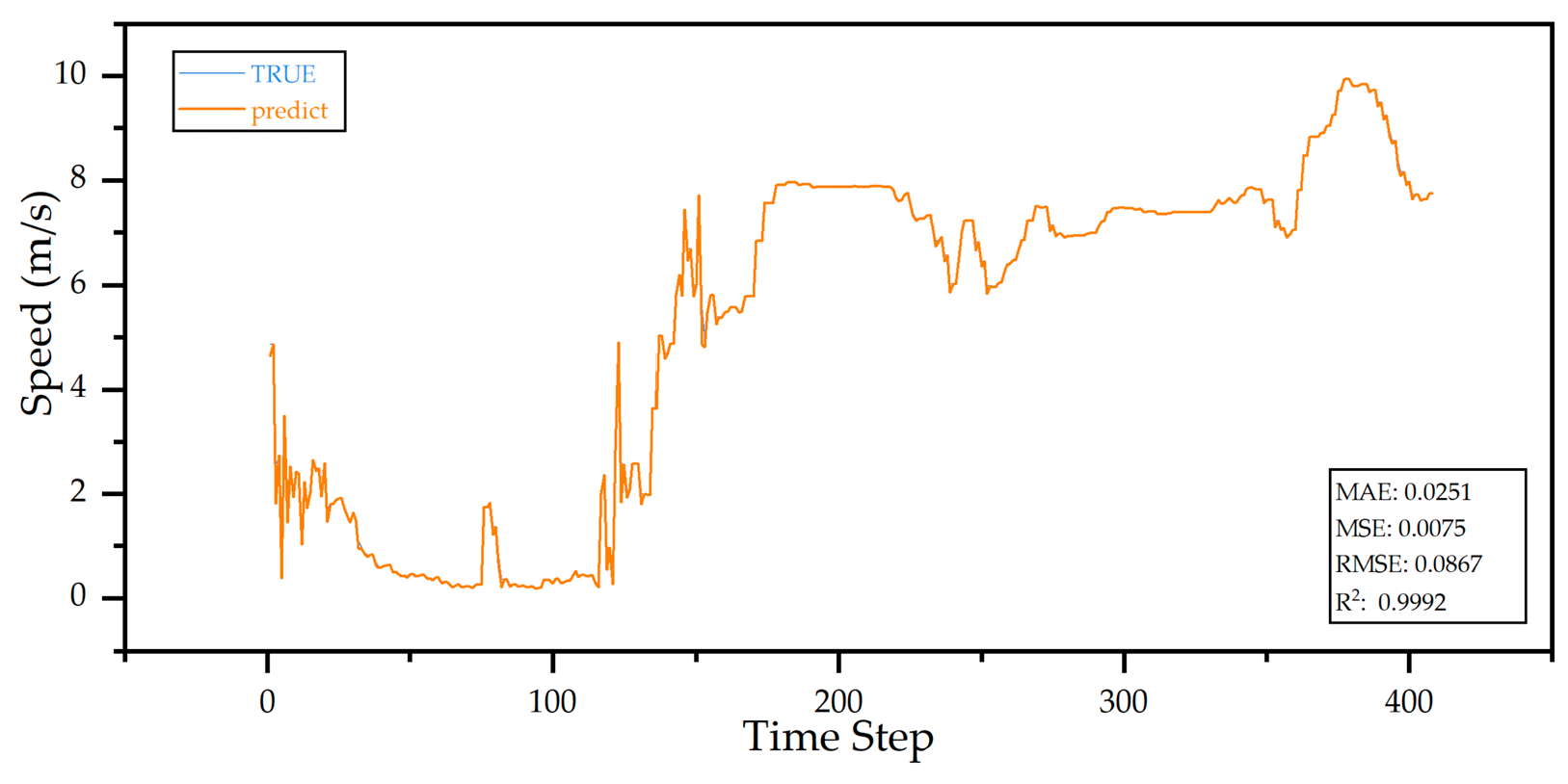

3.3. Experimental Prediction

3.4. Ablation Study

- Baseline: GCN and LLM are combined as the basic comparison model to verify the effect of the collaborative modelling of the two.

- Baseline + BiGCN: BiGCN is added to the Baseline to explore the effect of forward and backward graph information transmission on prediction performance.

- Baseline + Dynamic Edge Weight: The Dynamic Edge Weight mechanism is introduced on Baseline to model the correlation strength between drones as it evolves over time and evaluate the effect of Dynamic Edge Weight adjustment.

- Full model (BiDGCNLLM): A complete prediction framework that integrates BiGCN, Dynamic Edge Weight, and LLM, representing the final model structure proposed in this paper.
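The two ablated components can be pictured with a small sketch. The exact formulation used in BiDGCNLLM is not reproduced in this copy, so everything below is an assumption: edge weights are recomputed at each time step from inter-drone distance with a Gaussian kernel (hypothetical `sigma`), and the bidirectional layer propagates features along the directed graph and along its transpose before concatenating the two results.

```python
import numpy as np

def dynamic_edge_weights(positions, edges, sigma=20.0):
    """Directed weighted adjacency from current positions (assumed Gaussian distance kernel)."""
    n = positions.shape[0]
    adj = np.zeros((n, n))
    for src, dst in edges:
        dist = np.linalg.norm(positions[src] - positions[dst])
        adj[src, dst] = np.exp(-(dist ** 2) / (2.0 * sigma ** 2))
    return adj

def row_normalize(adj):
    """Add self-loops and scale each row to sum to 1."""
    a_hat = adj + np.eye(adj.shape[0])
    return a_hat / a_hat.sum(axis=1, keepdims=True)

def bigcn_layer(x, adj, w_fwd, w_bwd):
    """Propagate along the edges (forward) and against them (backward), then concatenate."""
    fwd = row_normalize(adj) @ x @ w_fwd
    bwd = row_normalize(adj.T) @ x @ w_bwd
    return np.maximum(np.concatenate([fwd, bwd], axis=1), 0.0)   # ReLU

# Illustrative inputs (hypothetical): three drones in a line, 15 m apart, at 50 m altitude.
positions = np.array([[0.0, 0.0, 50.0], [15.0, 0.0, 50.0], [30.0, 0.0, 50.0]])
edges = [(0, 1), (1, 2)]                        # leader -> middle -> end
adj = dynamic_edge_weights(positions, edges)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 4))                     # 4 features per drone
out = bigcn_layer(x, adj, rng.normal(size=(4, 4)), rng.normal(size=(4, 4)))
```

Calling `dynamic_edge_weights` again with updated positions yields a new adjacency matrix, which is the sense in which the correlation strength between drones evolves over time in this sketch.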

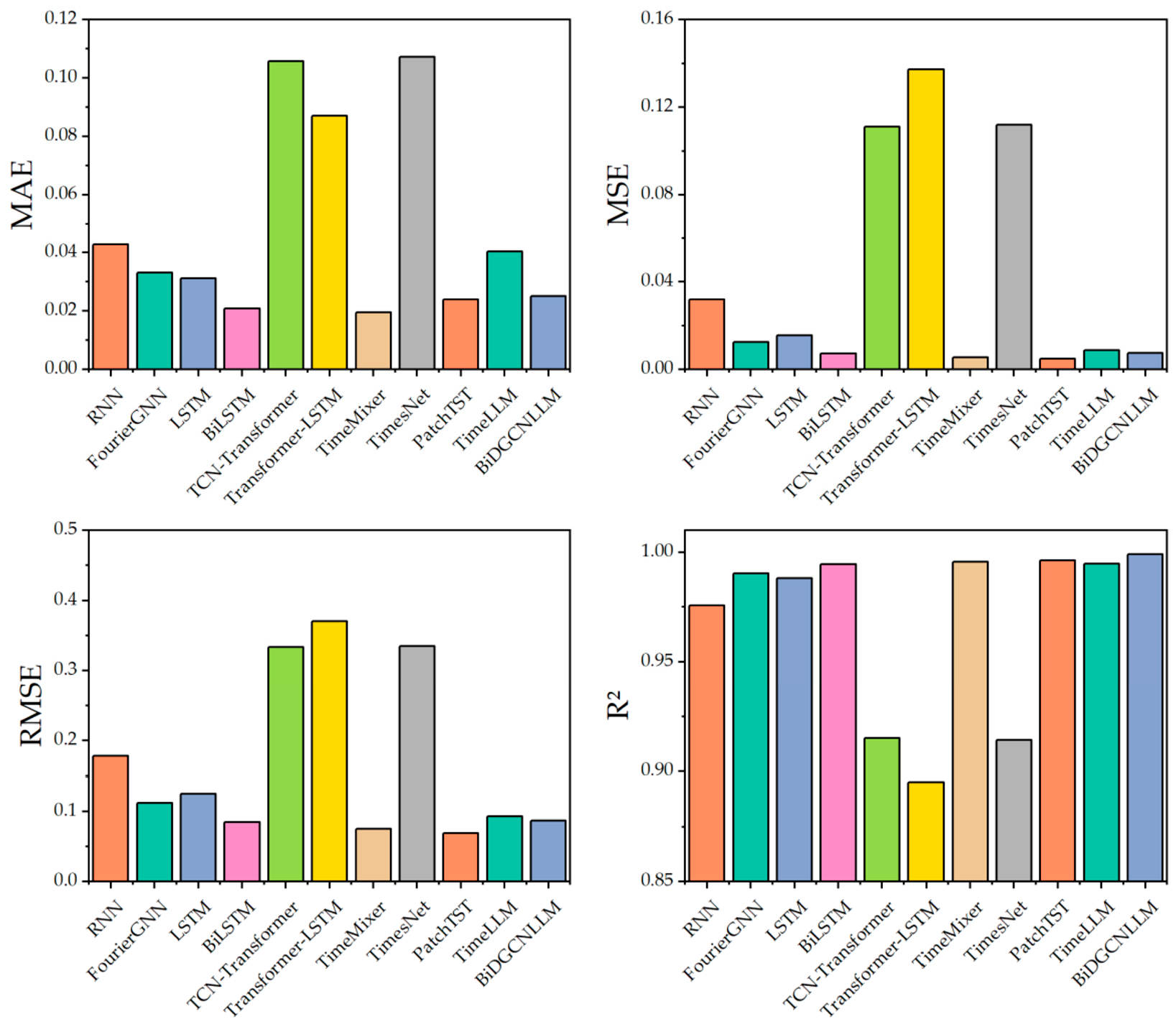

3.5. Comparative Analysis

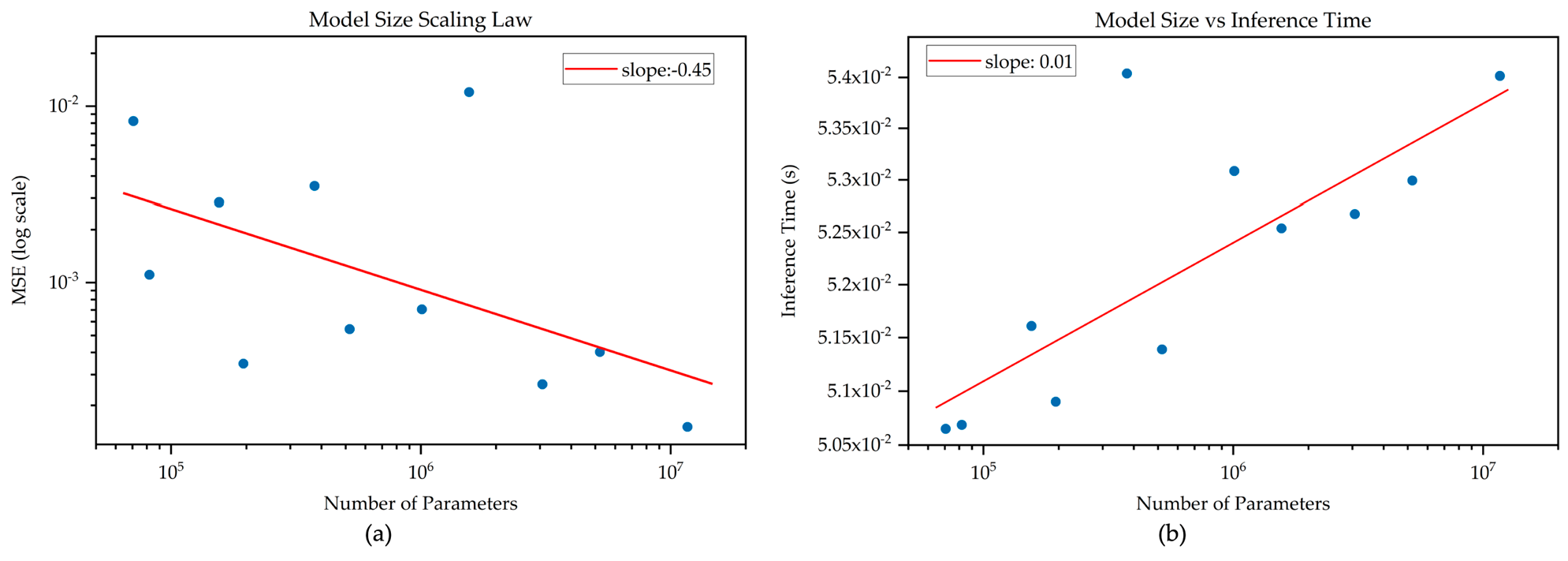

3.6. Scaling Law Analysis

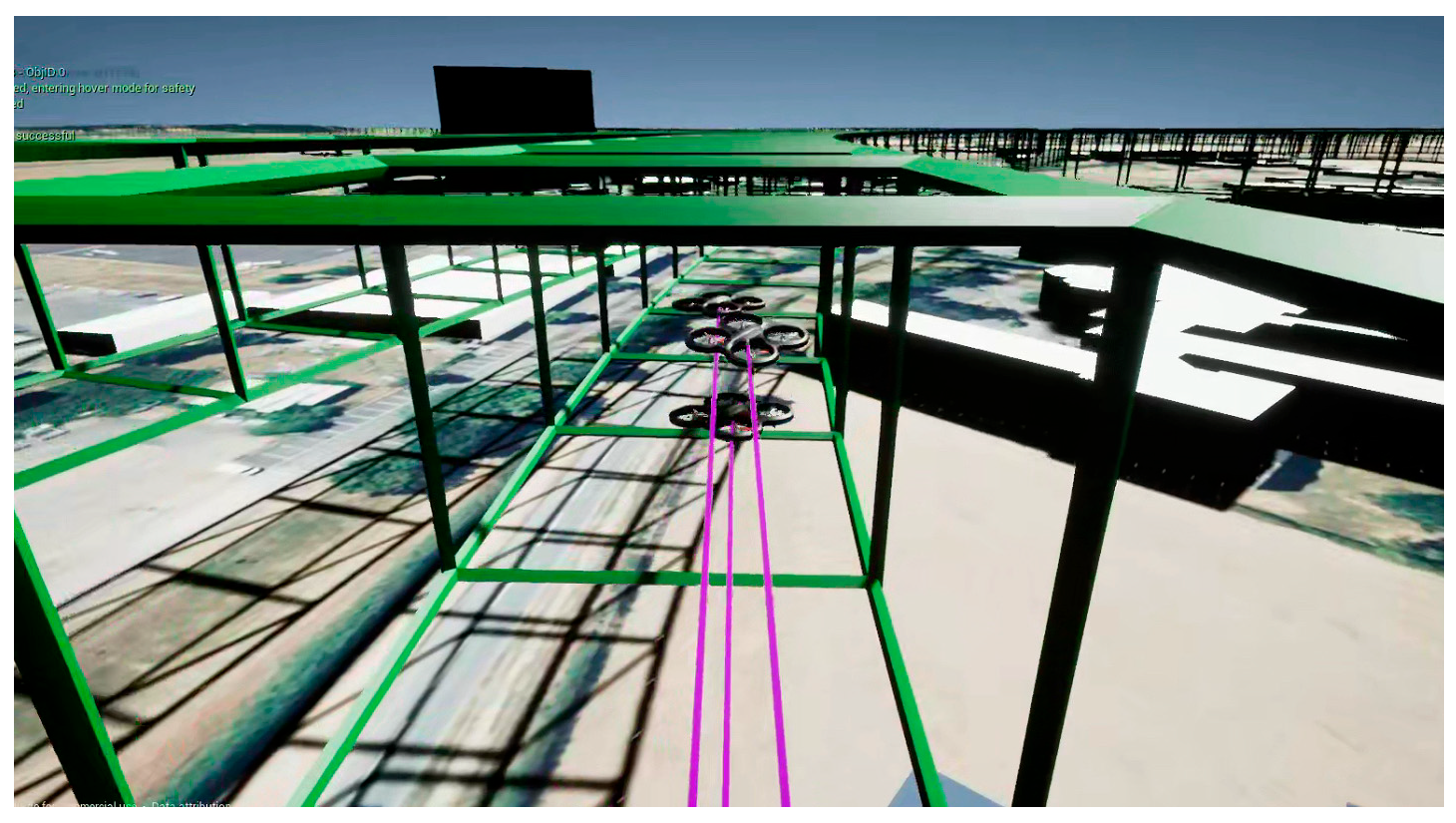

4. BiDGCNLLM Integration and Digital Twin Visualisation Simulation

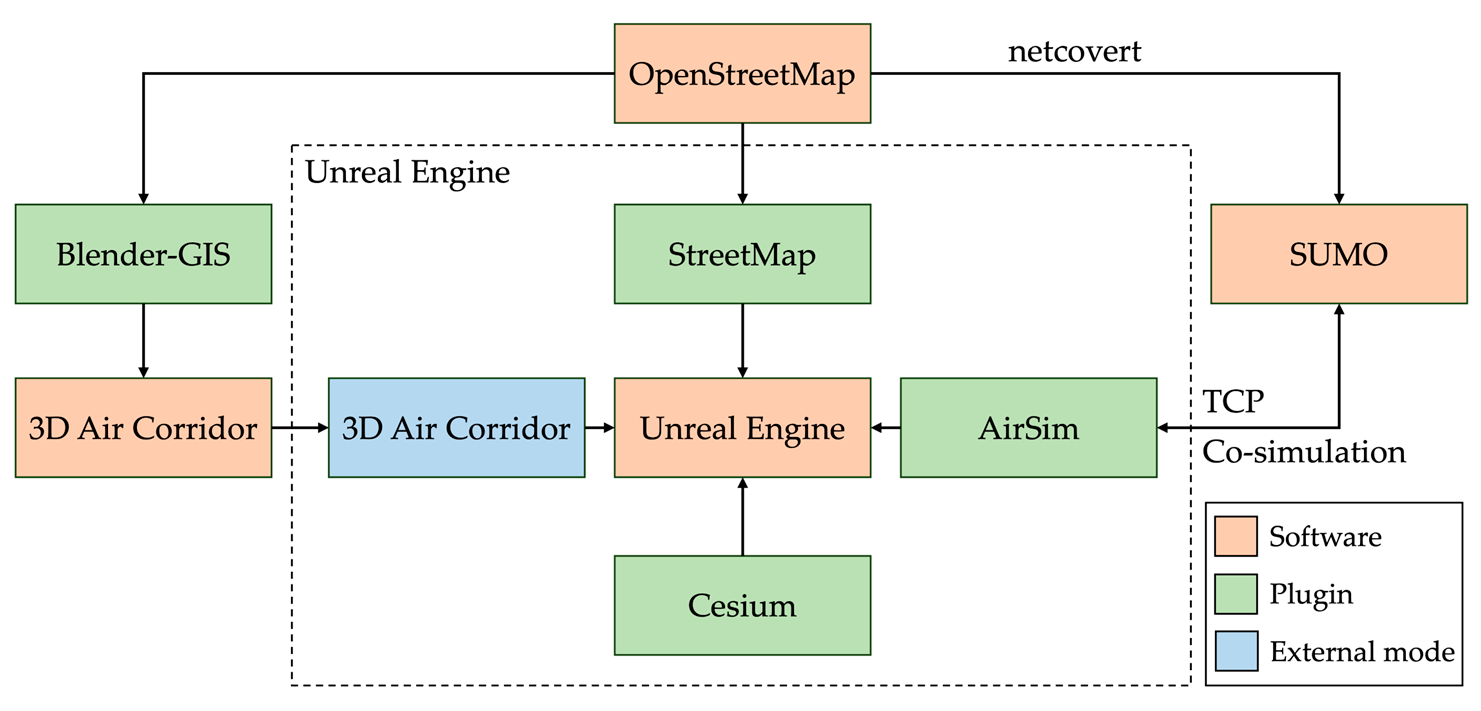

4.1. BiDGCNLLM and Digital Twin Integration

4.2. Digital Twin Construction and Deployment

4.3. Digital Twin Operation Simulation and Optimisation

4.4. Safety Analysis

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kopardekar, P.; Rios, J.; Prevot, T.; Johnson, M.; Jung, J.; Robinson, J.E. Unmanned aircraft system traffic management (UTM) concept of operations. In Proceedings of the AIAA AVIATION Forum and Exposition (No. ARC-E-DAA-TN32838), Washington, DC, USA, 13–17 June 2016; Available online: https://www.faa.gov/sites/faa.gov/files/2022-08/UTM_ConOps_v2.pdf (accessed on 15 July 2025).

2. Goyal, R.; Reiche, C.; Fernando, C.; Serrao, J.; Kimmel, S.; Cohen, A.; Shaheen, S. Urban Air Mobility (UAM) Market Study (No. HQ-E-DAA-TN65181). 2018. Available online: https://ntrs.nasa.gov/api/citations/20190002046/downloads/20190002046.pdf (accessed on 15 July 2025).

3. Belwafi, K.; Alkadi, R.; Alameri, S.A.; Al Hamadi, H.; Shoufan, A. Unmanned aerial vehicles’ remote identification: A tutorial and survey. IEEE Access 2022, 10, 87577–87601.

4. Ministry of Land, Infrastructure, Transport and Tourism, Japan. Concept of Operations for Advanced Air Mobility. 2024. Available online: https://www.mlit.go.jp/koku/content/001757082.pdf (accessed on 15 July 2025).

5. Zhang, Z.; Zheng, Y.; Li, C.; Jiang, B.; Li, Y. Designing an Urban Air Mobility Corridor Network: A Multi-Objective Optimization Approach Using U-NSGA-III. Aerospace 2025, 12, 229.

6. Wang, X.; Yang, P.P.J.; Balchanos, M.; Mavris, D. Urban Airspace Route Planning for Advanced Air Mobility Operations. In Proceedings of the International Conference on Computers in Urban Planning and Urban Management, Montréal, QC, Canada, 12–14 June 2023; Springer: Cham, Switzerland; pp. 193–211.

7. Li, S.; Zhang, H.; Li, Z.; Liu, H. Air Route Design of Multi-Rotor UAVs for Urban Air Mobility. Drones 2024, 8, 601.

8. Altun, A.T.; Hasanzade, M.; Saldiran, E.; Guner, G.; Uzun, M.; Fremond, R.; Tang, Y.; Bhundoo, P.; Su, Y.; Xu, Y.; et al. AMU-LED cranfield flight trials for demonstrating the advanced air mobility concept. Aerospace 2023, 10, 775.

9. Shi, Z.; Zhang, J.; Shi, G.; Ji, L.; Wang, D.; Wu, Y. Design of a UAV trajectory prediction system based on multi-flight modes. Drones 2024, 8, 255.

10. Li, M.; Huang, Z.; Bi, W.; Hou, T.; Yang, P.; Zhang, A. A fish evasion behavior-based vector field histogram method for obstacle avoidance of multi-UAVs. Aerosp. Sci. Technol. 2025, 159, 109974.

11. Duan, X.; Fan, Q.; Bi, W.; Zhang, A. Belief Exponential Divergence for DS Evidence Theory and its Application in Multi-Source Information Fusion. J. Syst. Eng. Electron. 2024, 35, 1454–1468.

12. Bagnall, T.M.; Kriz, A.; Briggs, R.; Takamizawa, K. Demonstration and Validation of Remote ID Detect and Avoid. 2025; Federal Aviation Administration. Available online: https://www.faa.gov/uas/programs_partnerships/BAA/BAA004-MosaicATM-Demonstration-and-Validation-of-RID-DAA.pdf (accessed on 15 July 2025).

13. Sacharny, D.; Henderson, T.C.; Marston, V.V. Lane-based large-scale uas traffic management. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18835–18844.

14. McCorkendale, Z.; McCorkendale, L.; Kidane, M.F.; Namuduri, K. Digital Traffic Lights: UAS Collision Avoidance Strategy for Advanced Air Mobility Services. Drones 2024, 8, 590.

15. Huang, C.; Petrunin, I.; Tsourdos, A. Strategic conflict management using recurrent multi-agent reinforcement learning for urban air mobility operations considering uncertainties. J. Intell. Robot. Syst. 2023, 107, 20.

16. Yi, J.; Zhang, H.; Wang, F.; Ning, C.; Liu, H.; Zhong, G. An operational capacity assessment method for an urban low-altitude unmanned aerial vehicle logistics route network. Drones 2023, 7, 582.

17. Yi, J.; Zhang, H.; Li, S.; Feng, O.; Zhong, G.; Liu, H. Logistics UAV air route network capacity evaluation method based on traffic flow allocation. IEEE Access 2023, 11, 63701–63713.

18. Ruseno, N.; Lin, C.Y.; Guan, W.L. Flight test analysis of UTM conflict detection based on a network remote ID using a random forest algorithm. Drones 2023, 7, 436.

19. Cook, B.; Cohen, K.; Kivelevitch, E.H. A fuzzy logic approach for low altitude UAS traffic management (UTM). In Proceedings of the AIAA infotech@ Aerospace, California, CA, USA, 4–8 January 2016; p. 1905.

20. Neelakandan, D.S.; Al Ali, H. Enhancing trajectory-based operations for UAVs through hexagonal grid indexing: A step towards 4D integration of UTM and ATM. Int. J. Aviat. Aeronaut. Aerosp. 2023, 10, 5.

21. Xue, M. Coordination between federated scheduling and conflict resolution in UAM operations. In Proceedings of the AIAA AVIATION 2021 FORUM, Seattle, WA, USA, 2–6 August 2021; p. 2349.

22. Yahi, N.; Matute, J.; Karimoddini, A. Receding horizon based collision avoidance for uam aircraft at intersections. Green Energy Intell. Transp. 2024, 3, 100205.

23. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

24. Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv 2017, arXiv:1709.04875.

25. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 1.

26. Bai, L.; Yao, L.; Li, C.; Wang, X.; Wang, C. Adaptive graph convolutional recurrent network for traffic forecasting. Adv. Neural Inf. Process. Syst. 2020, 33, 17804–17815.

27. Nie, Y.; Nguyen, N.H.; Sinthong, P.; Kalagnanam, J. A time series is worth 64 words: Long-term forecasting with transformers. arXiv 2022, arXiv:2211.14730.

28. Zhao, Y.; Ma, Z.; Zhou, T.; Ye, M.; Sun, L.; Qian, Y. Gcformer: An efficient solution for accurate and scalable long-term multivariate time series forecasting. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 3464–3473.

29. Garza, A.; Challu, C.; Mergenthaler-Canseco, M. TimeGPT-1. arXiv 2023, arXiv:2310.03589.

30. Jin, M.; Wang, S.; Ma, L.; Chu, Z.; Zhang, J.Y.; Shi, X.; Chen, P.Y.; Liang, Y.; Li, Y.F.; Pan, S.; et al. Time-LLM: Time Series Forecasting by Reprogramming Large Language Models. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

31. Ditta, C.C.; Postorino, M.N. Three-Dimensional Urban Air Networks for Future Urban Air Transport Systems. Sustainability 2023, 15, 13551.

32. Hohmann, N.; Brulin, S.; Adamy, J.; Olhofer, M. Three-dimensional urban path planning for aerial vehicles regarding many objectives. IEEE Open J. Intell. Transp. Syst. 2023, 4, 639–652.

33. Brunelli, M.; Ditta, C.C.; Postorino, M.N. A framework to develop urban aerial networks by using a digital twin approach. Drones 2022, 6, 387.

34. Pradhan, P.; Omorodion, J.; Rostami, M.; Venkatesh, A.; Kamoonpuri, J.; Chung, J. Digital Framework for Urban Air Mobility Simulation. In Proceedings of the 2024 IEEE International Symposium on Emerging Metaverse (ISEMV), Bellevue, WA, USA, 21–23 October 2024; pp. 37–40.

35. Ywet, N.L.; Maw, A.A.; Nguyen, T.A.; Lee, J.W. Yolotransfer-Dt: An operational digital twin framework with deep and transfer learning for collision detection and situation awareness in urban aerial mobility. Aerospace 2024, 11, 179.

36. Ancel, E.; Capristan, F.M.; Foster, J.V.; Condotta, R.C. Real-time risk assessment framework for unmanned aircraft system (UAS) traffic management (UTM). In Proceedings of the 17th AIAA AVIation Technology, Integration, and Operations Conference, Colorado, CO, USA, 5–9 June 2017; p. 3273. Available online: https://ntrs.nasa.gov/api/citations/20170005780/downloads/20170005780.pdf (accessed on 15 July 2025).

37. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

38. Yi, K.; Zhang, Q.; Fan, W.; He, H.; Hu, L.; Wang, P.; An, N.; Cao, L.; Niu, Z. FourierGNN: Rethinking multivariate time series forecasting from a pure graph perspective. Adv. Neural Inf. Process. Syst. 2023, 36, 69638–69660.

39. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

40. Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), California, CA, USA, 9–12 December 2019; IEEE: Piscataway, NJ, USA; pp. 3285–3292.

41. Chen, H.; Tian, A.; Zhang, Y.; Liu, Y. Early time series classification using tcn-transformer. In Proceedings of the 2022 IEEE 4th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 14–16 October 2022; IEEE: Piscataway, NJ, USA; pp. 1079–1082.

42. Shi, J.; Wang, S.; Qu, P.; Shao, J. Time series prediction model using LSTM-Transformer neural network for mine water inflow. Sci. Rep. 2024, 14, 18284.

43. Wang, S.; Wu, H.; Shi, X.; Hu, T.; Luo, H.; Ma, L.; Zhang, J.Y.; Zhou, J. Timemixer: Decomposable multiscale mixing for time series forecasting. arXiv 2024, arXiv:2405.14616.

44. Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. Timesnet: Temporal 2d-variation modeling for general time series analysis. arXiv 2022, arXiv:2210.02186.

45. Wen, Z.; Zhao, J.; Xu, Y.; Tsourdos, A. A co-simulation digital twin with SUMO and AirSim for testing lane-based UTM system concept. In Proceedings of the 2024 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2024; IEEE: Piscataway, NJ, USA; pp. 1–11.

46. Unreal Engine. The Most Powerful Real-Time 3D Creation Tool. 2023. Available online: https://www.unrealengine.com/en-US (accessed on 15 July 2025).

47. Cesium. Cesium for Unreal. Cesium. 2022. Available online: https://cesium.com/platform/cesium-for-unreal/ (accessed on 15 July 2025).

48. Conrad, C.; Delezenne, Q.; Mukherjee, A.; Mhowwala, A.A.; Ahmed, M.; Zhao, J.; Xu, Y.; Tsourdos, A. Developing a digital twin for testing multi-agent systems in advanced air mobility: A case study of cranfield university and airport. In Proceedings of the 2023 IEEE/AIAA 42nd Digital Avionics Systems Conference (DASC), Barcelona, Spain, 1–5 October 2023; IEEE: Piscataway, NJ, USA; pp. 1–10.

49. Zhao, J.; Conrad, C.; Delezenne, Q.; Xu, Y.; Tsourdos, A. A digital twin mixed-reality system for testing future advanced air mobility concepts: A prototype. In Proceedings of the 2023 Integrated Communication, Navigation and Surveillance Conference (ICNS), Herndon, VA, USA, 18–20 April 2023; IEEE: Piscataway, NJ, USA; pp. 1–10.

| Method | MAE | MSE | RMSE | R² |
|---|---|---|---|---|
| Baseline (GCNLLM) | 0.0235 | 0.0056 | 0.0747 | 0.9965 |
| Baseline + BiGCN | 0.0259 | 0.0040 | 0.0636 | 0.9975 |
| Baseline + Dynamic Edge Weight | 0.0205 | 0.0029 | 0.0537 | 0.9982 |
| Full model (BiDGCNLLM) | 0.0251 | 0.0075 | 0.0867 | 0.9992 |
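For readers who want to reproduce the style of evaluation in the table above, the following sketch computes MAE, MSE, and RMSE, plus the coefficient of determination, on the assumption that the final score column reports R². The function name and the toy values are illustrative and are not taken from the paper's data.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R^2 for two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mae = np.mean(np.abs(err))
    mse = np.mean(err ** 2)
    rmse = np.sqrt(mse)                          # RMSE is the square root of MSE
    ss_res = np.sum(err ** 2)                    # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}

# Toy example (hypothetical speeds, not from the paper).
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
```

Because RMSE is the square root of MSE, the two columns can be cross-checked: for the baseline row, the square root of 0.0056 is about 0.0748, in line with the reported 0.0747 once rounding of the MSE is taken into account.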

| Method Name | MAE | MSE | RMSE | R² | Ref. |
|---|---|---|---|---|---|
| RNN | 0.0429 | 0.0319 | 0.1786 | 0.9757 | [37] |
| FourierGNN | 0.0332 | 0.0125 | 0.1119 | 0.9904 | [38] |
| LSTM | 0.0312 | 0.0156 | 0.1250 | 0.9881 | [39] |
| BiLSTM | 0.0209 | 0.0072 | 0.0846 | 0.9945 | [40] |
| TCN-Transformer | 0.1057 | 0.1111 | 0.3333 | 0.9151 | [41] |
| Transformer-LSTM | 0.0871 | 0.1373 | 0.3706 | 0.8954 | [42] |
| TimeMixer | 0.0195 | 0.0056 | 0.0750 | 0.9957 | [43] |
| TimesNet | 0.1072 | 0.1119 | 0.3346 | 0.9144 | [44] |
| PatchTST | 0.0239 | 0.0048 | 0.0695 | 0.9963 | [27] |
| TimeLLM | 0.0404 | 0.0087 | 0.0933 | 0.9947 | [30] |
| BiDGCNLLM | 0.0251 | 0.0075 | 0.0867 | 0.9992 | / |

| Number | AirSim ID | Position |
|---|---|---|
| 1 | Drones 1 | Front (Leader) |
| 2 | Drones 2 | Middle |
| 3 | Drones 3 | End |

| Type | Mavic Air 2 | Inspire 2 | MK 300 RTK |
|---|---|---|---|
| Mavic Air 2 | 10 m | 15 m | 20 m |
| Inspire 2 | 15 m | 15 m | 20 m |
| MK 300 RTK | 15 m | 10 m | 25 m |
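A type-pair table like the one above lends itself to a simple lookup. The sketch below is an illustration under explicit assumptions: the table's caption is not available in this copy, so the entries are read here as minimum separation distances in metres, with the row taken as the first drone of a pair and the column as the second. The function name and the example positions are hypothetical.

```python
import math

# Values copied from the table; the (row, column) interpretation is an assumption.
MIN_SEPARATION_M = {
    ("Mavic Air 2", "Mavic Air 2"): 10.0,
    ("Mavic Air 2", "Inspire 2"): 15.0,
    ("Mavic Air 2", "MK 300 RTK"): 20.0,
    ("Inspire 2", "Mavic Air 2"): 15.0,
    ("Inspire 2", "Inspire 2"): 15.0,
    ("Inspire 2", "MK 300 RTK"): 20.0,
    ("MK 300 RTK", "Mavic Air 2"): 15.0,
    ("MK 300 RTK", "Inspire 2"): 10.0,
    ("MK 300 RTK", "MK 300 RTK"): 25.0,
}

def separation_ok(type_a, pos_a, type_b, pos_b):
    """Return (is_separated, actual_distance_m, required_distance_m)."""
    required = MIN_SEPARATION_M[(type_a, type_b)]
    actual = math.dist(pos_a, pos_b)             # Euclidean distance in metres
    return actual >= required, actual, required

# Hypothetical positions 12 m apart along the corridor at 50 m altitude.
result_a = separation_ok("Mavic Air 2", (0.0, 0.0, 50.0), "Inspire 2", (12.0, 0.0, 50.0))
result_b = separation_ok("MK 300 RTK", (0.0, 0.0, 50.0), "Inspire 2", (12.0, 0.0, 50.0))
```

Note that the table is not symmetric, so the order of the two types matters in the lookup; if the intended reading of rows and columns differs from the assumption here, the dictionary keys would need to be swapped.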

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wen, Z.; Zhao, J.; Zhang, A.; Bi, W.; Kuang, B.; Su, Y.; Wang, R. BiDGCNLLM: A Graph–Language Model for Drone State Forecasting and Separation in Urban Air Mobility Using Digital Twin-Augmented Remote ID Data. Drones 2025, 9, 508. https://doi.org/10.3390/drones9070508
