Abstract

The construction of hydroelectric dams leads to substantial land-cover alterations, particularly through the removal of vegetation in wetland and valley areas. This results in exposed sediment that is susceptible to erosion, potentially leading to dust storms. While the reintroduction of vegetation plays a crucial role in restoring these landscapes and mitigating erosion, such efforts incur substantial costs and require detailed information to help optimize vegetation densities that effectively reduce dust storm risk. This study evaluates the performance of drones for measuring the growth of introduced low-lying grasses on reservoir beaches. A set of test flights was conducted to compare LiDAR and photogrammetry data, assessing factors such as flight altitude, speed, and image side overlap. The results indicate that, for this specific vegetation type, photogrammetry at lower altitudes significantly enhanced the accuracy of vegetation classification, permitting effective quantitative assessments of vegetation densities for dust storm risk reduction.

1. Introduction

Hydroelectric reservoirs play a vital role in energy production in Canada. From 2016 to 2022, 60% of the country’s energy was produced from hydroelectric turbines [1], with the province of British Columbia (BC) relying on hydroelectricity for as much as 89% of its total energy production [2]. While this energy production is an important part of the province’s infrastructure and economy, the creation of reservoirs can have significant adverse impacts through the removal of natural vegetation from wetlands and valleys, thus altering critical ecological processes and causing irreparable damage to wildlife habitats [3]. In addition, the removal of vegetation over large areas exposes sediment such as soil and sand, which in some locations in BC has led to severe dust storms when strong winds lift this sediment and carry it over long distances. In some cases, such dust storms have been deemed responsible for poor air quality and significant human health impacts [4].

Hydroelectric reservoirs pose a unique challenge for environmental restoration efforts as water levels are controlled by both natural impacts and energy consumption demands. During times of high precipitation or seasonal snow melt, water volume in reservoirs is increased, while drier seasons or periods with temperatures below freezing see a decrease in reservoir water volume due to lack of input [5]. These natural processes must be balanced with human demands for electricity that also vary throughout the year [6]. As a result, the sandy beaches of some reservoir shorelines experience ongoing fluctuations in their water cover, creating an environment that is uniquely vulnerable to dust storms. Finer and drier grains, such as those found on the beach-like shorelines of hydroelectric reservoirs, are more susceptible to entrainment as their ability to counteract the wind’s force is weaker than that of larger sediments. Furthermore, as water levels drop and sediment particles dry, the sediment moisture that acts as a bonding agent between particles, and thereby limits their susceptibility to erosion, declines [7]. The reservoir fluctuations often result in a brief growing season for grasses planted below the waterline as part of a restoration effort, preventing vegetation from growing to a sustainable size or going to seed.

Multiple reservoirs across the province of BC experience dust storm events when water levels are low. The Williston Reservoir in the northeast, the Kinbasket Reservoir in the southeast, and the Carpenter Reservoir in the southwest region of the province have a documented history of increased dust storms because of lowered water levels [8,9,10]. Previous dust storms in the province have been deemed responsible for impacts on human health. Air contamination due to dust content can result in premature death by way of lung cancer or cardio-pulmonary diseases [11]. Finer dust particles are known to trigger respiratory disorders like asthma [12]. Such health risks will only increase as BC’s largest hydroelectric reservoir (Site C Dam) becomes operational in 2025, and the transition to more carbon-neutral energy systems becomes more prevalent. Therefore, understanding how to mitigate dust storms in these systems is critical.

Several dust storm mitigation tactics have recently been explored, most of which attempt to reduce the carrying potential of wind. This is typically accomplished by building barriers to create protected areas on the lee side of the structure [13]. Alternatively, some efforts have used irrigation to strengthen the capillary bond between particles, or chemical sprays which bond the particles together forming a crust, both of which increase the wind strength required to entrain the sediment [14]. Another common mitigation tactic employs vegetation to act as a natural barrier from the wind while also strengthening the soil’s resistance to erosion through its root systems [15,16,17,18]. While this method may be more labor intensive and costly than the construction of a fence, it restores the affected area to a condition most similar to its previous state, prior to dam construction [19]. Due to the drastic change in the environment caused by the construction of hydroelectric reservoirs, successfully growing native vegetation is not always possible [20], but the implementation of vegetation that is suited to the new environment is still less intrusive as it avoids the introduction of human-made infrastructure. The destruction of the surrounding environment is a widely recognized impact and downside of hydroelectricity, an otherwise cleaner alternative to fossil fuels [20,21].

Reservoir beaches are a unique and challenging environment for the successful planting of vegetation as they are typically submerged for a significant portion of the year and, in many areas across BC, face freezing conditions. In addition, beaches are also subject to variable topography and poor soil nutrients, which further affect vegetation growth. These challenges highlight the need for a robust method to quantify how well grasses are growing in the reservoir beach environment.

Typically, grass is quantified through in situ measurements in specific locations across the study area. These data are then extrapolated to estimate vegetation coverage values for the entire study area. However, given the scale of reservoir beaches (e.g., many reservoir beaches span over 100 hectares), this method is extremely time-consuming and not always feasible. An alternative to this traditional method is to use remote-sensing data acquired by an unmanned aerial vehicle (UAV). UAVs are an increasingly popular tool for monitoring small areas as, due to their small size, they can fly at much lower altitudes, collecting finer detail with a relatively lower cost than could be achieved via satellite or manned aircraft [22]. However, the size of UAVs limits their ability to fly in conditions with high winds or precipitation, and the low altitude at which they fly increases the amount of time required to cover an area, making them ill-suited for monitoring or data collection over large areas. Conversely, capturing data via fixed-wing aircraft may have much higher upfront costs, but such platforms enable data capture over a much larger area. As such, these costs and benefits must be weighed when planning data collection. A UAV is a sensible option if the size of the study area is such that the data can be collected at the required resolution within a few days, which has led UAV-based photogrammetry and LiDAR data to become increasingly popular tools for fine-scale vegetation monitoring, especially in the agricultural [23,24,25] and environmental management sectors [22,26].

Both LiDAR and photogrammetric sensors are commonly paired with UAVs and benefit from the lower flight altitude that they can provide. LiDAR sensors send out laser pulses and time their return in order to calculate the distance between the sensor and the object/ground. This creates a point cloud that can be processed into digital terrain or surface models. Photogrammetric sensors take a series of timed and spatially referenced images, which can later be stitched together to form an orthomosaic image. The low altitude of the UAV allows both LiDAR and photogrammetric data products to have a smaller ground sample distance (GSD), and the LiDAR sensor can obtain a higher point density, both of which improve the resolution of the end product. This, coupled with its ability to penetrate through vegetation, has seen UAV-based LiDAR used in many environmental fields, especially where dense vegetation is of interest, as is often the case in forestry applications [22,25,27]. While UAV-based photogrammetry and LiDAR data have demonstrated efficacy for reservoir vegetation monitoring, minimal research to date has been conducted that guides how best to conduct UAV mapping missions to quantify the success of planting efforts. Mapping missions (i.e., the flying of a UAV in a systematic planned pattern to capture consistent data over a defined area) and the subsequent mapping products are sensitive to a variety of conditions, such as the height of the UAV when flying, the speed of the UAV, and the amount of overlap between images and successive flight lines [28]. While mapping missions frequently emphasize minimizing flight duration as a means to reduce personnel costs and minimize fluctuations in illumination or atmospheric conditions, this can result in flying with sub-optimal parameters that could cause poor data outputs. Operational guidance is needed to ensure that researchers and practitioners understand the impacts of flight parameters, specifically the nuanced trade-offs between flight time and data resolution, as these will impact operational costs and the extent to which restoration efforts can be applied.
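The trade-off between flight time and resolution described above can be illustrated with a rough planning calculation. The following is a minimal sketch, assuming a simple back-and-forth (lawnmower) pattern over a rectangular area; the function name `plan_mission`, the 60° field of view, and the altitude, speed, and sidelap values are hypothetical illustrations rather than the parameters used in this study.

```python
import math

def plan_mission(width_m, length_m, altitude_m, speed_ms, sidelap, fov_deg):
    """Estimate line count and flight time for a simple lawnmower pattern."""
    # Across-track footprint of a single pass at this altitude.
    swath_m = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    # Adjacent lines are spaced so that a fraction `sidelap` of each swath is shared.
    spacing_m = swath_m * (1.0 - sidelap)
    n_lines = math.ceil(width_m / spacing_m) + 1
    # Time on the survey lines only; turns, take-off, and landing are ignored.
    minutes = n_lines * length_m / speed_ms / 60.0
    return n_lines, minutes

# Hypothetical "default" versus "optimized" settings over a 305 m x 540 m block.
print(plan_mission(305, 540, altitude_m=100, speed_ms=10, sidelap=0.2, fov_deg=60))
# (5, 4.5)
print(plan_mission(305, 540, altitude_m=50, speed_ms=6, sidelap=0.6, fov_deg=60))
# (15, 22.5)
```

Under these assumed values, halving the altitude while slowing the aircraft and increasing the sidelap multiplies the time on the survey lines by five, which is the kind of cost that has to be weighed against the gain in resolution.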

This study aims to provide guidance on the impact of flight times and desired data resolutions on the ability to capture fine-scale vegetation distribution on reservoir beaches. This research will evaluate flight parameters—specifically altitude, speed, and flight line overlap—when collecting LiDAR and photogrammetric data. The study will not only assess the optimal parameter configurations but also analyze the differing capabilities of LiDAR and photogrammetric sensors in capturing vegetation. This analysis will be performed on a single large beach of the Williston Reservoir in northern BC. As part of an ongoing dust storm mitigation effort, several beaches in this reservoir have been seeded with agronomic grasses to reduce sediment erosion and the resulting dust storms that occur when reservoir levels are low. By developing a comprehensive comparative analysis of LiDAR and photogrammetric methodologies, this research aims to assist other studies and applications that aim to use UAVs to map, monitor, and quantify small scale vegetation.

The main motivation for this study is to address the following gaps in understanding how UAVs can be utilized in monitoring vegetation:

- Understanding specific flight parameters that are best suited to collect data in a barren beach environment where there is little elevation change or obstacles but the vegetation is relatively small;

- Determining how LiDAR versus photogrammetry data perform in this specific environment and task;

- Understanding how the information collected from this study can be used to inform future UAV data collection.

2. Materials and Methods

2.1. Study Area

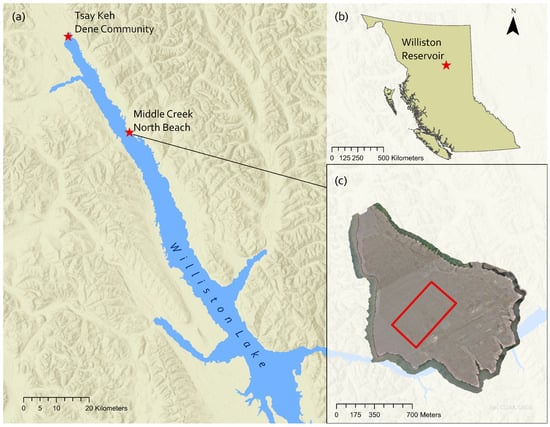

In the 1960s, the Peace River, which flows east from the traditional territory of the Sekani peoples in northern British Columbia, through the Rocky Mountains to the Dunne-Za territory in northern Alberta, was identified as a prolific potential power source by BC’s provincial government [29]. This led to the creation of the W.A.C. Bennett Dam in 1968. The construction of the dam flooded the Peace River Valley and created the Williston Reservoir, which, at 250 km long and 150 km across, is the largest human-made body of water in BC (Figure 1). This displaced the Tsay Keh Dene peoples, who had moved seasonally through the river valley for thousands of years, and forced them to consolidate into one location at the northern end of the reservoir. Due to the topography of the landscape, the Tsay Keh Dene community now experiences severe dust storms whenever reservoir levels are low and the fine sediment of the lakebed is exposed. To address this issue, dust storm mitigation efforts have been ongoing since the mid-1990s through a variety of measures, one of which has been planting vegetation, in the form of fast-growing grasses, on the surface of a selection of beaches that have been previously identified as high dust emitters. These grasses are planted annually once reservoir levels are low and the ground is thawed.

Figure 1.

(a) The northern end of the Williston Reservoir. Middle Creek North Beach (the study area) and the Tsay Keh Dene community are highlighted. (b) Williston Reservoir’s location within the province of British Columbia. (c) Middle Creek North Beach. The red outline indicates the study area that was used for the test flights.

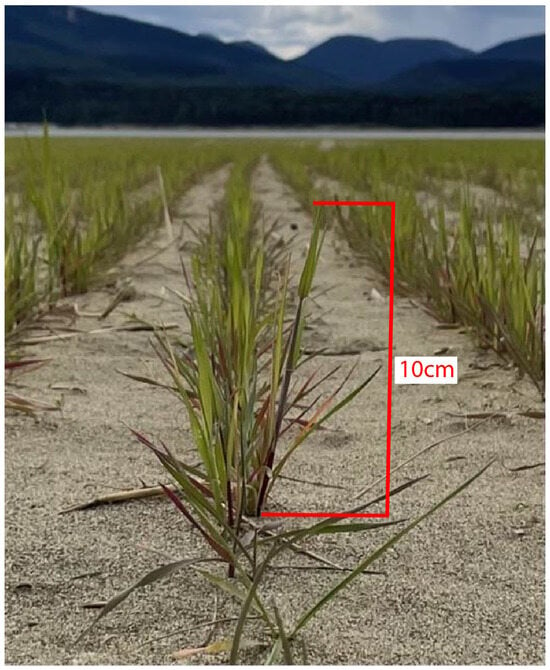

A single beach, Middle Creek North (MCN), on the eastern side of the reservoir, was selected for this study. MCN is 1.85 km² and just over 1 km at its widest point. MCN was selected because it has been identified as a high dust emitter beach and, as the site of a seeding trial from a previous mitigation effort that compared the impact of different seeding rates, was already being planted. Sections of the beach were seeded using a tractor and seeder with a seeding rate of 100, 200, or 300 kg per hectare depending on the location. This resulted in lines of vegetation that, at the time of data collection, were around 10 cm tall (Figure 2). The data collected in this study included all three seeding densities. Winds typically travel north from the southern end of the beach, and there is minimal elevation change, so no topographical features act as a barrier to the wind. This study focuses specifically on a 305 m by 540 m subsection of the beach that was small enough to allow for several UAV flights to be flown with the parameters of interest within an effective time frame.

Figure 2.

Planted grasses on the Williston Reservoir, roughly 2 months after seeding.

2.2. Data Collection

All data were collected using the DJI Matrice 300 RTK UAV, a four-propeller, 6.3 kg drone that is able to fly in light wind and rain, making it an appropriate choice for the remote study area where field days are not easily rescheduled [30]. This drone has a vertical accuracy of 1.5 cm and a horizontal accuracy of 1 cm when paired with the DJI D-RTK2 as it was for this research. The DJI Zenmuse L1 payload was used to collect the LiDAR point data and the DJI Zenmuse P1 sensor with a 35 mm lens was used to collect the photogrammetric data. All flights were completed on 19 June 2023. There was consistent cloud cover throughout the day and minimal wind, ensuring clear and accurate flight results. A total of ten flights were flown, three collecting photogrammetry data and seven collecting LiDAR data. It should be emphasized here that the broader intent of collecting data for this research is to provide metrics on vegetation cover, which will later be analyzed alongside measures of dust particles that are transmitted during dust storms. As such, the UAV data are not collected during dust storm events as UAVs with the above-mentioned instruments cannot be safely flown during dust storms nor can we ensure the health and safety of research personnel collecting the data.

2.2.1. LiDAR Flights

The purpose of the LiDAR flights was to compare how flight altitude, speed, and side overlap (or sidelap) affected the point density of the resulting product and, in turn, the ability to classify vegetation in the data. Each parameter was given a default value (as set by the manufacturers) and an optimized value, which should improve the resolution and quality of results. For flight altitude and speed parameters, the optimized value was obtained by reducing the default value, and for the sidelap parameter, the default value was increased to create the optimized value. Seven LiDAR flights were flown in total with the range of parameter values listed in Table 1. All flights were flown on the same day, within a short time window to maintain consistent lighting conditions.

Table 1.

LiDAR test flights and the parameters used.
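To make the expected effect of each parameter on point density concrete, the following is a rough sketch of a first-order estimate. It assumes points are spread evenly across a swath whose width grows linearly with altitude, and that overlapping passes simply add their points together; the function name `lidar_point_density`, the point rate, and the field of view are hypothetical inputs, not specifications taken from this study.

```python
import math

def lidar_point_density(altitude_m, speed_ms, sidelap, points_per_s, fov_deg):
    """Approximate mean points per square metre for parallel flight lines."""
    # Across-track width of ground covered by one pass.
    swath_m = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    # Points recorded per second divided by the ground area swept per second.
    single_pass = points_per_s / (speed_ms * swath_m)
    # With sidelap, each spot on the ground is covered about 1 / (1 - sidelap) times.
    return single_pass / (1.0 - sidelap)

# Hypothetical settings: each change below doubles the estimated density.
base = lidar_point_density(100, 10, 0.2, points_per_s=240_000, fov_deg=70)
lower = lidar_point_density(50, 10, 0.2, points_per_s=240_000, fov_deg=70)
slower = lidar_point_density(100, 5, 0.2, points_per_s=240_000, fov_deg=70)
more_sidelap = lidar_point_density(100, 10, 0.6, points_per_s=240_000, fov_deg=70)
print(base, lower, slower, more_sidelap)
```

In this simplified model, halving the altitude, halving the speed, or raising the sidelap from 20% to 60% each doubles the density, which is why all three were treated as candidates for optimization. Real scan patterns and multiple returns will deviate from this estimate.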

2.2.2. Photogrammetry Flights

As with the LiDAR flights, the goal of the photogrammetry flights was to determine how each flight parameter impacts the quality of the outputs as well as how suitable the resulting data are for determining vegetation density. However, photogrammetry requires a high sidelap as its default to produce a complete orthoimage, so for these flights sidelap was held at its high default value and only altitude and speed were compared at their default and optimal values. This resulted in a total of only three flights: one with an optimized altitude, one with an optimized speed, and one with both parameters optimized. The flight parameters used for the photogrammetry flights can be seen in Table 2.

Table 2.

Photogrammetry test flights and the parameters used.
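The link between flight altitude and image resolution can be sketched with the standard ground sample distance relation for a nadir-pointing camera over flat ground. The 35 mm focal length is the lens stated above; the 4.4 µm pixel pitch and the 100 m comparison altitude are assumptions made for illustration and should be checked against the sensor documentation and Table 2.

```python
def ground_sample_distance_cm(altitude_m, pixel_pitch_um, focal_length_mm):
    """GSD in cm per pixel for a nadir-pointing frame camera over flat ground."""
    # Similar triangles: ground pixel size / altitude = pixel pitch / focal length.
    gsd_m = altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)
    return gsd_m * 100.0

# Assumed 4.4 um pixel pitch with the 35 mm lens.
print(round(ground_sample_distance_cm(50, 4.4, 35), 2))   # 0.63
print(round(ground_sample_distance_cm(100, 4.4, 35), 2))  # 1.26
```

Because GSD scales linearly with altitude, halving the flight height halves the pixel size on the ground, whereas flight speed mainly affects motion blur and forward overlap rather than the nominal GSD.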

2.2.3. Ground Truthing

To ensure the spatial accuracy of the results and allow for an accurate comparison, an Emlid Reach RS2+ GNSS system consisting of a base station and rover was used throughout the flights. The base station was operating throughout the entirety of all the flights, and a rover was used to obtain highly precise locations of the five ground control points (GCPs) and the UAV’s take-off point. This ensures high spatial accuracy and allows for more precise comparison between the resulting products of different flights.

2.3. Processing

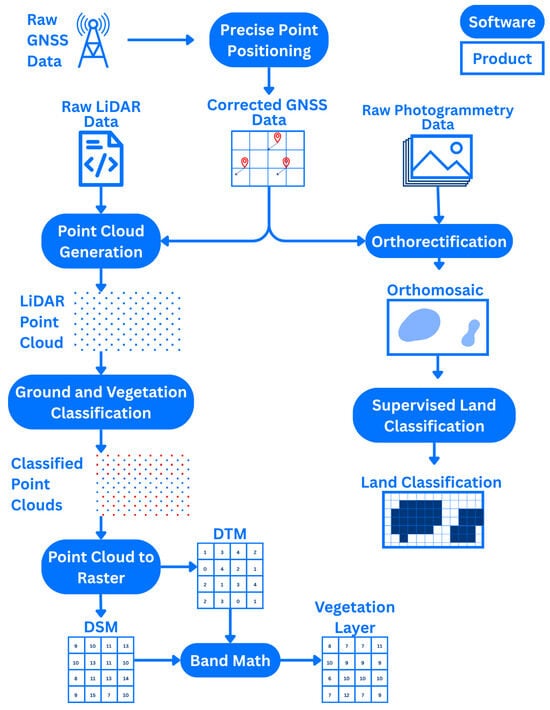

The processing workflow for both the LiDAR and photogrammetry data can be seen in Figure 3.

Figure 3.

Workflow used for processing the LiDAR and photogrammetry data.

2.3.1. LiDAR

To ensure a robust comparison, the LiDAR data collected were processed using DJI Terra [31] to apply the relevant spatial corrections as determined by the ground control points (GCPs), the RTK base station, and precise point positioning that was estimated using Natural Resources Canada’s Precise Point Positioning service. Data noise, such as that generated by direction changes between flight lines, was filtered using TerraScan software [32]. TerraScan was also used to produce ground and vegetation classifications. The ground classification was exported as a digital terrain model (DTM), and a combination of the ground and vegetation classifications was used to create a digital surface model (DSM). ArcGIS Pro [33] was then used to subtract the DTM from the DSM to create a raster of just vegetation. The above process was repeated for all seven LiDAR flights.
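The DSM minus DTM step can be sketched outside a GIS as a cell-by-cell subtraction. This is a minimal NumPy illustration, not the ArcGIS Pro workflow itself; it assumes both rasters are already aligned on the same grid, and the function name and the `nodata` value of -9999 are hypothetical.

```python
import numpy as np

def vegetation_height(dsm, dtm, nodata=-9999.0):
    """Subtract the terrain model from the surface model, cell by cell."""
    dsm = np.asarray(dsm, dtype=float)
    dtm = np.asarray(dtm, dtype=float)
    height = dsm - dtm
    # Cells missing in either model carry no usable height information.
    height[(dsm == nodata) | (dtm == nodata)] = np.nan
    return height

# Tiny example grid (elevations in metres).
dsm = [[100.10, 100.00], [-9999.0, 100.30]]
dtm = [[100.00, 100.00], [100.00, 100.05]]
print(vegetation_height(dsm, dtm))
```

The result is a height-above-ground raster; whether those heights represent grass or other surface features depends on how well the ground and vegetation points were separated in the classification step.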

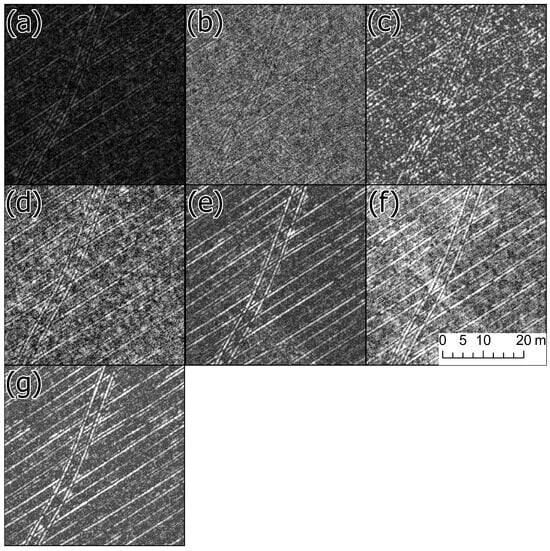

The vegetation layers were visually assessed to determine the flight that had the clearest definition of vegetation lines and the highest resolution (Figure 4). This was determined to be the vegetation layer produced by the lower altitude and higher sidelap flight (Figure 4g). This raster dataset was then reclassified, with each pixel being given a value of 1 (indicating vegetation) or 0 (indicating sand) based on whether its initial value fell above or below the elevation threshold of 0 cm. This process was carried out for six thresholds in total, increasing the elevation threshold by 10 cm each time (0 to 50 cm).
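The reclassification described above can be sketched as a set of binary rasters, one per threshold. This is an illustrative NumPy version, assuming heights are stored in metres and that a cell exactly at the threshold counts as sand (the treatment of ties is an assumption); the function name `threshold_stack` is hypothetical.

```python
import numpy as np

def threshold_stack(height_m, thresholds_cm=(0, 10, 20, 30, 40, 50)):
    """Return {threshold_cm: binary raster} with 1 = vegetation and 0 = sand."""
    height_cm = np.asarray(height_m, dtype=float) * 100.0
    # Strictly above the threshold is vegetation; missing (NaN) cells fall to 0.
    return {t: (height_cm > t).astype(np.uint8) for t in thresholds_cm}

heights = np.array([[0.00, 0.05], [0.15, 0.55]])  # metres above ground
stack = threshold_stack(heights)
print(stack[0])   # [[0 1] [1 1]]
print(stack[10])  # [[0 0] [1 1]]
print(stack[50])  # [[0 0] [0 1]]
```

Each raster in the stack can then be compared against the same reference points, so that the effect of the threshold is isolated from any change in the underlying height layer.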

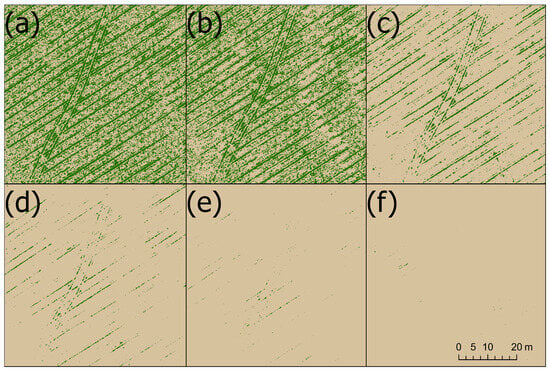

Figure 4.

LiDAR vegetation layer results. These were produced by subtracting the DTM from the DSM, a standard procedure that should result in a layer of just vegetation. (a) Higher sidelap and slower speed. (b) Slower speed. (c) Higher sidelap. (d) Lower altitude. (e) Lower altitude, higher sidelap, and slower speed. (f) Lower altitude and slower speed. (g) Lower altitude and higher sidelap.

2.3.2. Photogrammetry

The photogrammetry data were also processed in DJI Terra to ensure spatial accuracy between the flights. Both the LiDAR flights and the photogrammetry flights were collected on the same day and used the same RTK set up and GCPs to allow for spatial alignment between data types as well as between flights. In addition to applying spatial corrections, DJI Terra stitched the images from each flight into orthomosaics. These orthomosaics could then be used to perform a supervised land classification.

The supervised land classification was performed using ArcGIS Pro. To do so, training sets (groups of user-defined pixels that are known to represent a certain land-cover type) were created. Typically, each land-cover type will have around 10 groups or samples of pixels that represent all the differing shades in which this land cover can appear. The software then uses the pixel shades associated with each land cover to classify the entire image. Due to differences in the lighting during the flights, a training set had to be created for each flight to avoid misclassification caused by a poorly suited training set. Each flight’s training set had 10 samples for barren/sandy areas, 10 samples for vegetated areas, and five artificial samples. The artificial samples included the pixels making up the black and white GCP markers that had been laid out in the study area to aid with processing and spatial correction. To avoid having these values potentially impact the classification, they were given their own category. An automated supervised land classification was then performed using these training samples.
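The idea of classifying every pixel from a handful of labelled training samples can be illustrated with a minimum-distance-to-mean classifier. This is a deliberately simple stand-in chosen for illustration: the classifier used inside ArcGIS Pro is not specified here and is likely more sophisticated, and the function names and RGB values below are hypothetical.

```python
import numpy as np

def train_centroids(samples):
    """samples: {class_name: (n, 3) array-like of RGB training pixels}."""
    names = list(samples)
    centroids = np.array(
        [np.mean(np.asarray(samples[n], dtype=float), axis=0) for n in names]
    )
    return names, centroids

def classify(image, centroids):
    """Assign each pixel of a (rows, cols, 3) image to its nearest class mean."""
    pixels = np.asarray(image, dtype=float)[:, :, None, :]          # (r, c, 1, 3)
    dist = np.linalg.norm(pixels - centroids[None, None], axis=-1)  # (r, c, k)
    return np.argmin(dist, axis=-1)                                 # class index per pixel

# Hypothetical training pixels for the three categories described above.
samples = {
    "sand": [[200, 190, 150], [210, 200, 160]],
    "vegetation": [[60, 120, 50], [70, 130, 60]],
    "marker": [[250, 250, 250], [255, 255, 255]],
}
names, centroids = train_centroids(samples)
image = [[[205, 195, 155], [65, 125, 55]]]
print([[names[i] for i in row] for row in classify(image, centroids)])
# [['sand', 'vegetation']]
```

Because the class means depend on the training pixels, a change in illumination between flights shifts the pixel values away from the means, which is consistent with the need for a separate training set per flight.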

2.3.3. Comparison

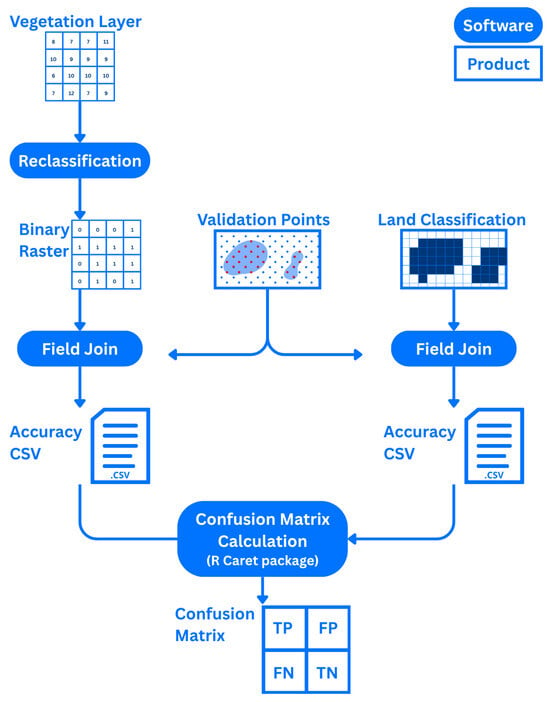

The workflow used to compare products across sensors can be seen in Figure 5.

Figure 5.

Workflow used for comparing the accuracy of the LiDAR and photogrammetry-based vegetation classifications.

A manual classification of the study area was completed to assess the accuracy of both the LiDAR and photogrammetry vegetation classifications [34]. As manually classifying each pixel was not feasible, a point layer with 200 points randomly distributed across the study area was created. These points were displayed over each orthomosaic image and adjusted slightly to ensure that 100 were located on pixels known to be vegetation (based on visual assessment) and 100 were located on pixels known to be sand. This information was used to populate the "actual" field of the point layer attribute table with either a 1 (indicating vegetation) or a 0 (indicating sand). The same points were then displayed over the orthomosaic’s corresponding land classification. The "predicted" field of the point attribute table could then be populated based on the value of the pixels that the points now resided over, where, again, 1 represented vegetation and 0 represented sand. This process was repeated for each photogrammetry flight and for each binary vegetation raster produced from the best-performing LiDAR flight. This created a series of accuracy CSV tables that compare the results predicted by the automated classifications and vegetation binaries with what existed in reality.
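Populating the predicted field amounts to reading the classified raster value under each reference point. The following sketch shows one way this lookup could be done with NumPy, assuming a north-up raster with square cells and points that all fall inside its extent; the function name and the coordinates are hypothetical.

```python
import numpy as np

def sample_raster(raster, xs, ys, x_min, y_max, cell_size):
    """Return the raster value under each (x, y) point for a north-up grid."""
    # Columns increase eastward from the left edge; rows increase southward from the top edge.
    cols = np.floor((np.asarray(xs, dtype=float) - x_min) / cell_size).astype(int)
    rows = np.floor((y_max - np.asarray(ys, dtype=float)) / cell_size).astype(int)
    return np.asarray(raster)[rows, cols]

classified = np.array([[1, 0], [0, 1]])  # 1 = vegetation, 0 = sand
xs, ys = [0.5, 1.5, 1.5], [1.5, 1.5, 0.5]
print(sample_raster(classified, xs, ys, x_min=0.0, y_max=2.0, cell_size=1.0))
# [1 0 1]
```

Using the same point coordinates for every classification keeps the reference sample fixed, so that differences in the resulting tables reflect the classifications rather than the sampling.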

These accuracy tables could then be used to calculate the accuracy of each prediction. Analysis was done in RStudio using the R package caret [35,36,37], which used the data to create confusion matrices and determine the accuracy of each classification. The accuracy is calculated by dividing the sum of the True Positives and True Negatives (in this case True Vegetation and True Sand) by the total number of samples. The matrices provide Cohen’s Kappa values as well as precision and recall values [38]. Cohen’s Kappa is calculated by subtracting the expected agreement from the observed agreement and dividing the result by the difference between 1 and the expected agreement.
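The accuracy and Cohen’s Kappa calculations described above can be written out directly. The sketch below is a plain Python illustration rather than the caret implementation used in the analysis; the function name is hypothetical, and the example counts are those reported in the Results for the low-altitude photogrammetry flight (all 100 vegetation points correct, 3 of 100 sand points classified as vegetation).

```python
def classification_metrics(actual, predicted):
    """Accuracy, Cohen's Kappa, precision, and recall for 1 = vegetation, 0 = sand."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    n = len(pairs)
    observed = (tp + tn) / n  # overall accuracy
    # Agreement expected by chance, from the actual and predicted class totals.
    expected = ((tp + fn) / n) * ((tp + fp) / n) + ((tn + fp) / n) * ((tn + fn) / n)
    kappa = (observed - expected) / (1 - expected) if expected != 1 else float("nan")
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return {"accuracy": observed, "kappa": kappa, "precision": precision, "recall": recall}

# Low-altitude photogrammetry flight: 100 true vegetation, 97 true sand, 3 false positives.
actual = [1] * 100 + [0] * 100
predicted = [1] * 100 + [1] * 3 + [0] * 97
m = classification_metrics(actual, predicted)
print({k: round(v, 3) for k, v in m.items()})
# {'accuracy': 0.985, 'kappa': 0.97, 'precision': 0.971, 'recall': 1.0}
```

Because the reference sample is balanced (100 points per class), the expected agreement is always 0.5 here, so Kappa reduces to twice the accuracy minus one.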

3. Results

3.1. LiDAR

The best-performing LiDAR flight, based on visual assessment of vegetation lines, was flown at low altitude with a default speed and high overlap (Figure 4). This flight became the focus of the study, and six vegetation classifications were created based on the vegetation layers created from the DSM and DTM of this flight.

All elevation thresholds created binary vegetation rasters that performed poorly (Table 3). The baseline threshold of 0 cm produced the land classification results with the highest accuracy; however, this accuracy was relatively low, at only 55.5%, and the classification erred on the side of under-classifying vegetation. At a vegetation threshold of 10 cm, an accuracy of 55% was obtained and this classification still tended towards under-classifying vegetation. The following vegetation height thresholds continued this pattern of under-classifying vegetation when compared to the manually classified points. Although the overarching issue in the data is the under-classification of vegetation (i.e., classifying vegetation as sand), the mere presence of vegetation in the classifications based on higher elevation thresholds (such as 30, 40, and 50 cm) indicates an issue with the vegetation layers (Figure 6). If the vegetation layer that resulted from subtracting the DTM from the DSM was actually showing vegetation, there would be nothing over 10 cm in height, as this is the known height of the grasses. The height of the features that do occur suggests that the layer is instead picking up larger features in the sand, such as the small dunes that form around the tire tracks of the equipment used for planting and watering the vegetation. Even with these findings in mind, future research should not rule out the application of LiDAR for mapping small grasses. In a smaller area that can be flown at a lower altitude, LiDAR could potentially perform well with additional GCPs and a novel technique for isolating vegetation within the LiDAR point cloud.

Table 3.

Classification and accuracy results for LiDAR flights.

Figure 6.

LiDAR-based vegetation classification results. Pixels in the vegetation layer with a value higher than a certain threshold were classified as vegetation. Each pane shows the results at a different threshold. (a) 0 cm threshold. (b) 10 cm threshold. (c) 20 cm threshold. (d) 30 cm threshold. (e) 40 cm threshold. (f) 50 cm threshold.

3.2. Photogrammetry

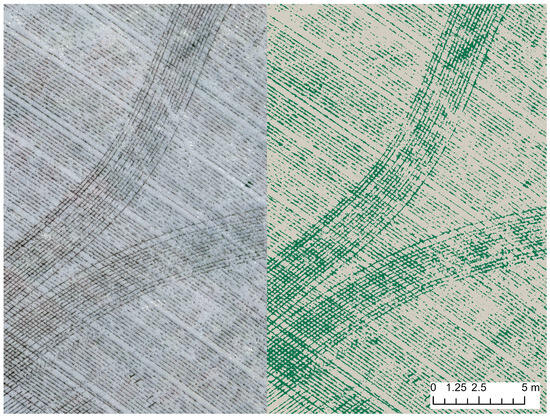

The low flight classification had relatively few errors (Figure 7). Every cell that was known to be vegetation was classified as such. However, there were three instances of false positives where a sand cell was misclassified as vegetation. So, while overall this classification was accurate and precise, it had a slight tendency to over-classify vegetation.

Figure 7.

The image on the left shows the orthomosaic created from the data collected by the low-altitude photogrammetry flight. The image on the right shows the results from the supervised land classification that was run on the orthoimagery.

The slow flight classification had a total of 16 misclassifications overall. This classification had only two instances where sand was falsely classified as vegetation (false positives) but 14 instances where cells that were known to be vegetation were falsely classified as sand (false negatives), so this classification was more prone to under-classify vegetation. The low and slow flight classification had a total of 18 misclassifications. This classification had 13 instances of known vegetation being classified as sand (false negatives), but five instances of sand being classified as vegetation, a higher count of false positives than the previous two flights. While this classification scheme still tends towards under-classifying vegetation, there is generally more error present as indicated by the relatively lower accuracy and kappa values. However, the accuracy and Kappa values are considered relatively high for all three flights (Table 4).

Table 4.

Classification and accuracy results for photogrammetric flights.

4. Discussion

The overall objective of this study was to identify which parameters and sensors allow for the most accurate collection of data when monitoring coverage of introduced grasses on the beaches of a hydroelectric reservoir. The results from multiple flights with varying parameters on Middle Creek North Beach demonstrated that, in general, photogrammetry data can identify small grasses on reservoir beaches with higher accuracy than the LiDAR data. The low-altitude photogrammetry flight performed best with 98.5% accuracy, while the slow-speed flight and the combined low-altitude and slow-speed flight had accuracies of 92% and 91%, respectively. The respective kappa values of 0.97, 0.84, and 0.82 indicate that all the flights produced classifications that had near-perfect agreement, well beyond what would be obtained by random occurrence [38]. Although all values are above the 0.81 threshold of near-perfect agreement [39], the low flight classification produced a value that was closest to 1.

In contrast, the best-performing LiDAR flight could be classified with only a 55.5% accuracy and a Kappa of 0.1. The Kappa values for all LiDAR flights are close to zero, indicating that they are not significantly different from results that could be obtained by chance. This, more than accuracy or precision results, is particularly indicative of the quality of the land classifications produced from the LiDAR flights.

The extreme discrepancy between the LiDAR and photogrammetry results is likely due to the small size of the vegetation (5 cm), which was easily lost in the noise of the LiDAR point cloud, even when flying with parameters that should reduce noise. Subtracting the DTM from the DSM, which should have resulted in a vegetation elevation model [40], instead showed only noise and some larger features in the landscape such as tire tracks, small dunes, and woody debris.

In contrast, the orthomosaic-based land classification identified vegetation based on pixel colour, and as a result, it was much better able to identify the small grasses as they only had to be large enough to affect the colour of the 1 cm pixel. All flights tended to underrepresent vegetation, with the exception of the low-altitude photogrammetry flight, which over-classified. However, the low altitude flight also performed with the highest accuracy. It is also important to note that while the classifications produced by the other two photogrammetry flights were not as reliable as the low-altitude flight, they produced results beyond what could be expected by random chance.

While both lower altitude and slower speed can improve the resulting product resolution, for this application a lower altitude had a greater impact on the quality of the land classification produced by the data. Slowing the flight speed produced lower-quality results than lowering the flight altitude; when these parameters were combined, the results resembled those of the slower flight. A low flight altitude paired with default speed and sidelap parameters performed best for photogrammetry. It is important to note that the default sidelap value for photogrammetric data collection is much higher than the default for LiDAR flights.

Through visual assessment, the LiDAR results with the clearest vegetation lines were those from the flight flown with a low altitude and default speed. Sidelap was also considered for LiDAR flights and, in this case, an optimized high sidelap was also used, which meant this flight was flown with similar parameters to the best-performing photogrammetry flight; both were at an altitude of 50 m, the LiDAR flight flew at 6 m/s with an overlap of 60 m while the photogrammetry flight flew at 7 m/s with an overlap of 70%. Regardless of the vegetation threshold used, the classification results produced were suboptimal, although there is a distinct decrease in the accuracy and kappa values after the 10 cm threshold (Figure 4).

While previous studies comparing the efficacy of LiDAR and photogrammetry tend to find that LiDAR outperforms photogrammetry across a variety of applications [41,42], these studies operate on a vastly different scale from that of this research. One of the key motivations for this study was the lack of prior research on this very specific environment, which consists of a vast space with very fine vegetation. As our results have shown, these previous findings do not necessarily transfer to this study area. However, photogrammetry outperforming LiDAR in a specific context is not entirely unprecedented: Man et al. [43] also found that the spectral information provided by RGB imagery could be used to improve the classification ability of UAV-photogrammetry data such that it was able to classify tree species at the pixel level with more accuracy than UAV-based LiDAR.

Reservoir restoration poses a unique challenge because the shortened growing season leads to smaller plant sizes, which necessitated precise mapping and monitoring techniques in this study. However, the need for detailed vegetation monitoring extends beyond reservoirs. For instance, in mining restoration, accurate vegetation mapping supports the reclamation of disturbed lands by tracking plant establishment and growth over time. In the oil and gas sector, effective vegetation monitoring is critical for assessing the recovery of habitats impacted by extraction activities. Similarly, in dune restoration, precise mapping can aid in controlling erosion and promoting the establishment of native plant species. Therefore, the methodologies developed in this study have valuable applications in these varied contexts, enhancing restoration success across diverse environments.

Limitations

Due to time limitations, each flight parameter combination was flown only once. This limited the statistical analysis that could be performed on the results, and although the flight results aligned with what was expected, it is possible that the data collected is not an accurate representation of the expected quality of results from specific parameter combinations. It would be beneficial for future studies to repeat the flights so that averages could be obtained. Additionally, it could also be beneficial to fly a series of flights over time to see how the quality of results changes as the grass height increases. The test flights in this study were flown near the end of the growing season when grass was anticipated to be at its peak, so the results do not represent the best parameter fit for smaller grass varieties or grasses in the early stages of growth.

Additionally, this study only tested two variations of each parameter (the default and optimized setting). While this served as a good basis for a preliminary study and allowed the data to be collected in the allotted time, it would be beneficial for future studies to take a more detailed approach. Given the results of this study, focusing just on photogrammetry and altitude while holding all other parameters at default could be beneficial and would allow more time for a wider variety of flight altitudes to be tested.

As previously mentioned, this research had intended to calculate a biomass estimate for the planted vegetation from the LiDAR data. However, because the range of noise exceeded the size of the vegetation, this was not feasible under the study conditions. Having a biomass estimate would better inform researchers and practitioners looking to optimize their mitigation efforts. Future studies could consider using field measurements to train and validate a model that would be better able to calculate biomass; this objective fell outside the scope of the current investigation.

This research builds on previous literature that has aimed to identify the optimal parameters for vegetation monitoring [27,28,44,45,46]. A common theme amongst this past research is that the best-fit parameters typically differ depending on the terrain and the goal of the project [28]. As such, it is important to continue investigating the sensitivity of UAV-based vegetation monitoring to specific flight parameters so that optimal parameters can be documented for as many different terrains as possible. Resolution must always be weighed against time and budget constraints; the finding from this study that speed reduction (which can dramatically increase flight time) did not make a significant difference to resolution or the quality of the land classification may enhance the efficiency of future field planning efforts.
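The trade-off between flight parameters and time in the field can be approximated with a simple coverage model. The following is a rough sketch only: it assumes parallel flight lines over a rectangular area and ignores turns, take-off, and landing, and the site dimensions, field of view, and function name are hypothetical rather than taken from this study.

```python
import math


def estimate_flight(area_width_m, area_length_m, altitude_m, fov_deg, sidelap, speed_m_s):
    """Return (number of flight lines, time on the lines in seconds).

    Turns, take-off and landing are ignored, so the time is a lower bound.
    """
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be a fraction in [0, 1)")
    # Across-track ground coverage of a single pass.
    swath_m = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    # Distance between adjacent lines after accounting for sidelap.
    spacing_m = swath_m * (1.0 - sidelap)
    # Lines needed to cover the full width of the area.
    n_lines = math.ceil(area_width_m / spacing_m)
    seconds = n_lines * area_length_m / speed_m_s
    return n_lines, seconds


# Hypothetical 310 m x 500 m site and 90 degree field of view, for illustration only.
for altitude_m in (100.0, 50.0):
    for speed_m_s in (7.0, 3.5):
        lines, seconds = estimate_flight(310.0, 500.0, altitude_m, 90.0, 0.7, speed_m_s)
        print(f"{altitude_m:5.0f} m at {speed_m_s:3.1f} m/s: "
              f"{lines} lines, about {seconds / 60:.1f} min on the lines")
```

In this model, halving the speed doubles the time spent on the flight lines, while lowering the altitude increases the number of lines required; both costs can be weighed against the gain in classification quality when planning field campaigns.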

5. Conclusions

The primary findings of this study are as follows:

- For vegetation of this size (10 cm high grasses) to be monitored over a large area, photogrammetry is a better-suited instrument than LiDAR as the small-scale vegetation is too easily lost in the noise of the LiDAR.

- The altitude of the flight was the most impactful parameter for improving the quality of the results for both photogrammetry and LiDAR.

As energy demands increase, so too may the construction of dams and the length of periods during which dam levels are low. Prolonged exposure of fine sediments may exacerbate sediment transport, emphasizing the need for effective dust storm mitigation techniques. The findings from this study can act as a guide for future studies aiming to accurately monitor small-scale vegetation in hydroelectric reservoirs using drones. These findings may inform practitioners involved in dust storm mitigation projects who are looking to incorporate UAVs as an effective tool for measuring the success of vegetation in these unique and challenging environments.

Author Contributions

Conceptualization, M.M., C.B. and N.S.; methodology, C.B., G.V. and J.K.; software, G.V., R.S. and J.K.; validation, C.B. and G.V.; formal analysis, G.V.; resources, C.B., N.S., M.M. and R.S.; data curation, M.M., G.V., C.B., N.S. and J.K.; writing—original draft preparation, G.V.; writing—review and editing, C.B. and N.S.; visualization, G.V.; supervision, C.B. and N.S.; project administration, M.M., C.B. and N.S.; funding acquisition, M.M. and N.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the NSERC Alliance Grant [566939-21], a partnership between Chu Cho Industries LP through the Mitacs Accelerate program; BC Hydro; the Tsay Keh Dene Nation; and the University of Victoria.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data collected and created are the property of Chu Cho Industries LP, Chu Cho Environmental LLP, and Tsay Keh Dene Nation, whose land it depicts. Therefore, the datasets presented in this article are not available for public use at this time.

DURC Statement

Current research is limited to the field of geography and is beneficial for environmental restoration and dust storm mitigation. This research does not pose a threat to public health or national security. Authors acknowledge the dual-use potential of the research involving drones and confirm that all necessary precautions have been taken to prevent potential misuse. As an ethical responsibility, authors strictly adhere to relevant national and international laws about DURC. Authors advocate for responsible deployment, ethical considerations, regulatory compliance, and transparent reporting to mitigate misuse risks and foster beneficial outcomes.

Acknowledgments

This research was made possible by the Tsay Keh Dene Nation, whose traditional land this project took place on. We are thankful for their guidance and support. The equipment was provided in combination by Chu Cho Industries LP, Chu Cho Environmental LLP, and Surreal Lab at the University of Victoria.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hydroelectricity Generation Dries Up Amid Low Precipitation and Record High Temperatures: Electricity Year in Review 2023; Statistics Canada: Ottawa, ON, Canada, 2024.

2. BACKGROUNDER: BC’s Energy System; Government of British Columbia: Victoria, BC, Canada, 2024.

3. Wu, W.; Eamen, L.; Dandy, G.; Razavi, S.; Kuczera, G.; Maier, H.R. Beyond engineering: A review of reservoir management through the lens of wickedness, competing objectives and uncertainty. Environ. Model. Softw. 2023, 167, 105777.

4. World Commission on Dams. Dams and Development: A New Framework for Decision-Making; Earthscan: Abingdon, UK, 2013.

5. Olsson, G. Water and Energy: Threats and Opportunities, 2nd ed.; IWA Publishing: London, UK, 2015.

6. Darghouth, S.; Ward, C.; Gambarelli, G.; Styger, E.; Roux, J. Watershed Management Approaches, Policies, and Operations: Lessons for Scaling Up; World Bank: Washington, DC, USA, 2008; Volume 11.

7. Willett, C.D.; Adams, M.J.; Johnson, S.A.; Seville, J.P. Capillary bridges between two spherical bodies. Langmuir 2000, 16, 9396–9405.

8. Kinbasket Reservoir: Dust; BC Hydro: Vancouver, BC, Canada, 2010.

9. Williston Dust Control Trials and Monitoring; BC Hydro: Vancouver, BC, Canada, 2016.

10. Carpenter Reservoir Drawdown Zone Riparian Enhancement Program 2020 Yearly Report; BC Hydro: Vancouver, BC, Canada, 2021.

11. Giannadaki, D.; Pozzer, A.; Lelieveld, J. Modeled global effects of airborne desert dust on air quality and premature mortality. Atmos. Chem. Phys. 2014, 14, 957–968.

12. Derbyshire, E. Natural Minerogenic Dust and Human Health. AMBIO J. Hum. Environ. 2007, 36, 73–77.

13. Middleton, N.; Kang, U. Sand and dust storms: Impact mitigation. Sustainability 2017, 9, 1053.

14. Zhang, K.; Tang, C.S.; Jiang, N.J.; Pan, X.H.; Liu, B.; Wang, Y.J.; Shi, B. Microbial-induced carbonate precipitation (MICP) technology: A review on the fundamentals and engineering applications. Environ. Earth Sci. 2023, 82, 229.

15. Webb, N.P.; McCord, S.E.; Edwards, B.L.; Herrick, J.E.; Kachergis, E.; Okin, G.S.; Van Zee, J.W. Vegetation Canopy Gap Size and Height: Critical Indicators for Wind Erosion Monitoring and Management. Rangel. Ecol. Manag. 2021, 76, 78–83.

16. Maleki, S.; Miri, A.; Rahdari, V.; Dragovich, D. A method to select sites for sand and dust storm source mitigation: case study in the Sistan region of southeast Iran. J. Environ. Plan. Manag. 2021, 64, 2192–2213.

17. Su, Y.; Zhang, Y.; Wang, H.; Zhang, T. Effects of vegetation spatial pattern on erosion and sediment particle sorting in the loess convex hillslope. Sci. Rep. 2022, 12, 14187.

18. Zomorodian, S.M.A.; Ghaffari, H.; O’Kelly, B.C. Stabilisation of crustal sand layer using biocementation technique for wind erosion control. Aeolian Res. 2019, 40, 34–41.

19. Betz, F.; Halik, Ü.; Kuba, M.; Tayierjiang, A.; Cyffka, B. Controls on aeolian sediment dynamics by natural riparian vegetation in the Eastern Tarim Basin, NW China. Aeolian Res. 2015, 18, 23–34.

20. Auestad, I.; Nilsen, Y.; Rydgren, K. Environmental Restoration in Hydropower Development—Lessons from Norway. Sustainability 2018, 10, 3358.

21. Harada, J.; Yasuda, N. Conservation and improvement of the environment in dam reservoirs. Int. J. Water Resour. Dev. 2004, 20, 77–96.

22. Ancin-Murguzur, F.J.; Munoz, L.; Monz, C.; Hausner, V.H. Drones as a tool to monitor human impacts and vegetation changes in parks and protected areas. Remote Sens. Ecol. Conserv. 2020, 6, 105–113.

23. Qubaa, A.R.; Aljawwadi, T.A.; Hamdoon, A.N.; Mohammed, R.M. Using UAVs/Drones and vegetation indices in the visible spectrum to monitoring agricultural lands. Iraqi J. Agric. Sci. 2021, 52, 601–610.

24. Hama, A.; Tanaka, K.; Chen, B.; Kondoh, A. Examination of appropriate observation time and correction of vegetation index for drone-based crop monitoring. J. Agric. Meteorol. 2021, 77, 200–209.

25. Atanasov, A.; Mihaylov, R.; Stoyanov, S.; Mihaylova, D.; Benov, P. Drone-based Monitoring of Sunflower Crops. Annu. J. Tech. Univ. Varna Bulg. 2022, 6, 1–9.

26. Pérez-Luque, A.J.; Ramos-Font, M.E.; Tognetti Barbieri, M.J.; Tarragona Pérez, C.; Calvo Renta, G.; Robles Cruz, A.B. Vegetation Cover Estimation in Semi-Arid Shrublands after Prescribed Burning: Field-Ground and Drone Image Comparison. Drones 2022, 6, 370.

27. Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43.

28. Gonçalves, G.; Andriolo, U.; Gonçalves, L.M.; Sobral, P.; Bessa, F. Beach litter survey by drones: Mini-review and discussion of a potential standardization. Environ. Pollut. 2022, 315, 120370.

29. Stanley, M. Voices from Two Rivers: Harnessing the Power of the Peace and Columbia; Douglas & McIntyre: Vancouver, BC, Canada, 2010.

30. DJI. Support for Matrice 300 RTK [Web Page]; SZ DJI Technology Co., Ltd.: Shenzhen, China, 2025; Available online: https://www.dji.com/support/product/matrice-300 (accessed on 5 July 2025).

31. DJI Terra, Version 4.0 [Computer Software]; SZ DJI Technology Co., Ltd.: Shenzhen, China, 2024. Available online: https://www.dji.com/dji-terra (accessed on 5 July 2025).

32. TerraScan. TerraSolid, Version 0.023.005 [Computer Software]; TerraSolid Ltd.: Helsinki, Finland, 2025. Available online: https://terrasolid.com/terrascan/ (accessed on 5 July 2025).

33. ArcGIS Pro, Version 3.0 [Computer Software]; Environmental Systems Research Institute: Redlands, CA, USA, 2022. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview (accessed on 5 July 2025).

34. Abdullah Abbas, H.; Sabah Jaber, H. Accuracy Assessment of land use maps classification based on remote sensing and GIS techniques. BIO Web Conf. 2024, 97, 00063.

35. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024.

36. Kuhn, M. Building Predictive Models in R Using the caret Package. J. Stat. Softw. 2008, 28, 1–26.

37. Kuhn, M. caret: Classification and Regression Training (Version 7.0-1) [R Package]. 2024. Available online: https://CRAN.R-project.org/package=caret (accessed on 5 July 2025).

38. Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46.

39. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: London, UK, 2013.

40. Pirotti, F.; Guarnieri, A.; Vettore, A. Ground filtering and vegetation mapping using multi-return terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2013, 76, 56–63.

41. Storch, M.; Kisliuk, B.; Jarmer, T.; Waske, B.; de Lange, N. Comparative analysis of UAV-based LiDAR and photogrammetric systems for the detection of terrain anomalies in a historical conflict landscape. Sci. Remote Sens. 2025, 11, 100191.

42. Guan, T.; Shen, Y.; Wang, Y.; Zhang, P.; Wang, R.; Yan, F. Advancing Forest Plot Surveys: A Comparative Study of Visual vs. LiDAR SLAM Technologies. Forests 2024, 15, 2083.

43. Man, Q.; Yang, X.; Liu, H.; Zhang, B.; Dong, P.; Wu, J.; Liu, C.; Han, C.; Zhou, C.; Tan, Z.; et al. Comparison of UAV-Based LiDAR and Photogrammetric Point Cloud for Individual Tree Species Classification of Urban Areas. Remote Sens. 2025, 17, 1212.

44. Oldeland, J.; Revermann, R.; Luther-Mosebach, J.; Buttschardt, T.; Lehmann, J.R. New tools for old problems—Comparing drone- and field-based assessments of a problematic plant species. Environ. Monit. Assess. 2021, 193, 90.

45. Mora-Felix, Z.D.; Sanhouse-Garcia, A.J.; Bustos-Terrones, Y.A.; Loaiza, J.G.; Monjardin-Armenta, S.A.; Rangel-Peraza, J.G. Effect of photogrammetric RPAS flight parameters on plani-altimetric accuracy of DTM. Open Geosci. 2020, 12, 1017–1035.

46. Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; van Aardt, J.; Kunneke, A.; Seifert, T. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019, 11, 1252.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).