Cluster-Based Flight Path Construction for Drone-Assisted Pear Pollination Using RGB-D Image Processing

Abstract

1. Introduction

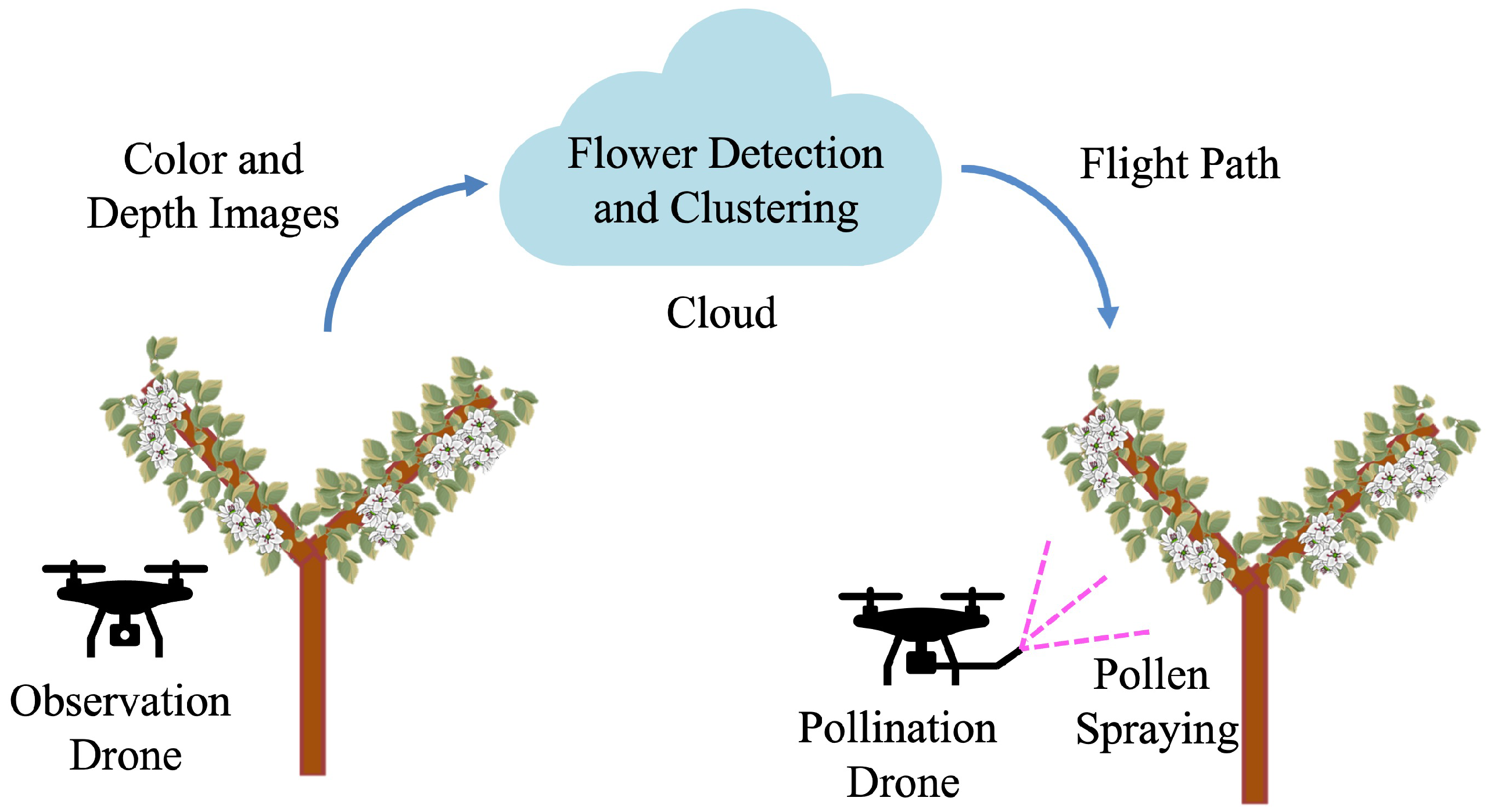

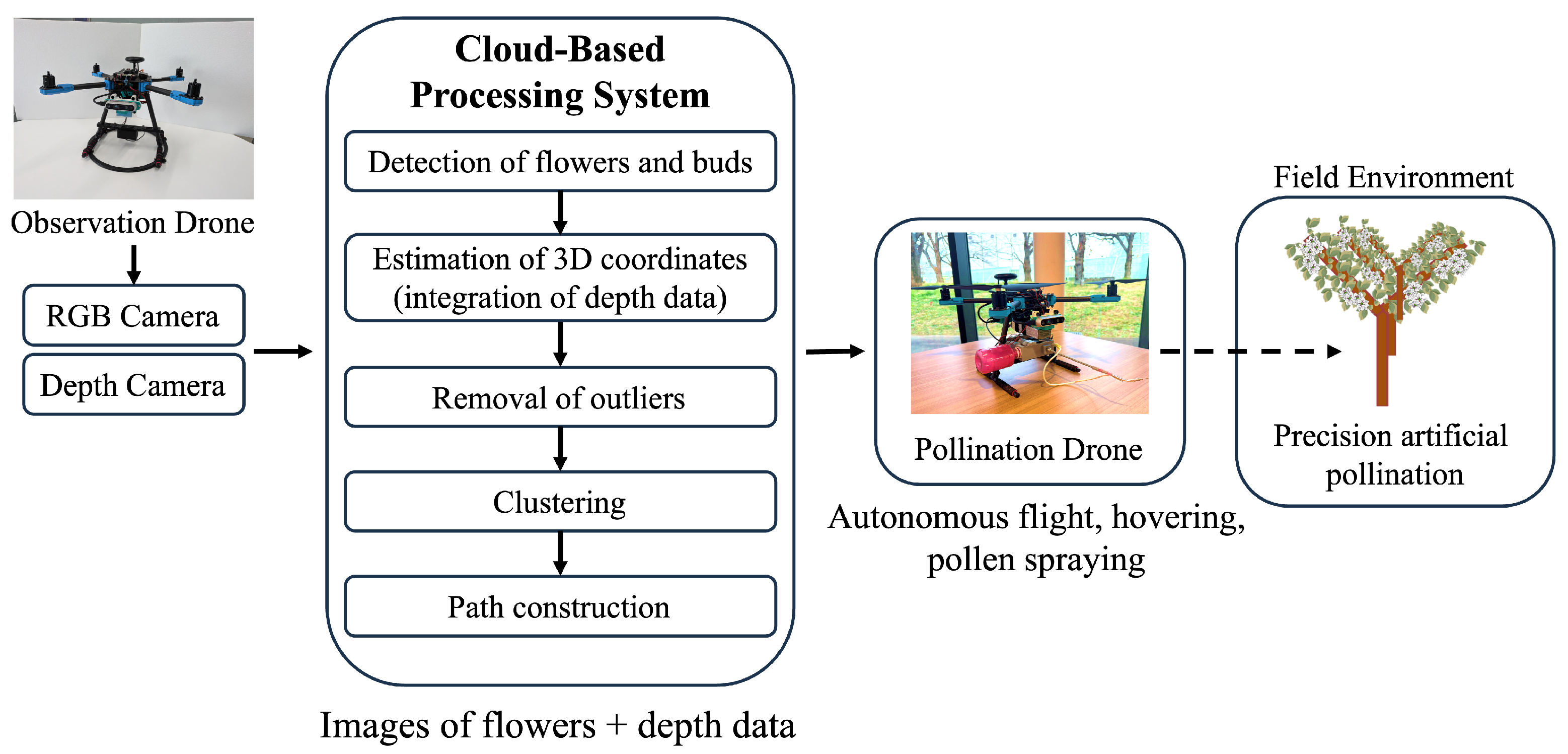

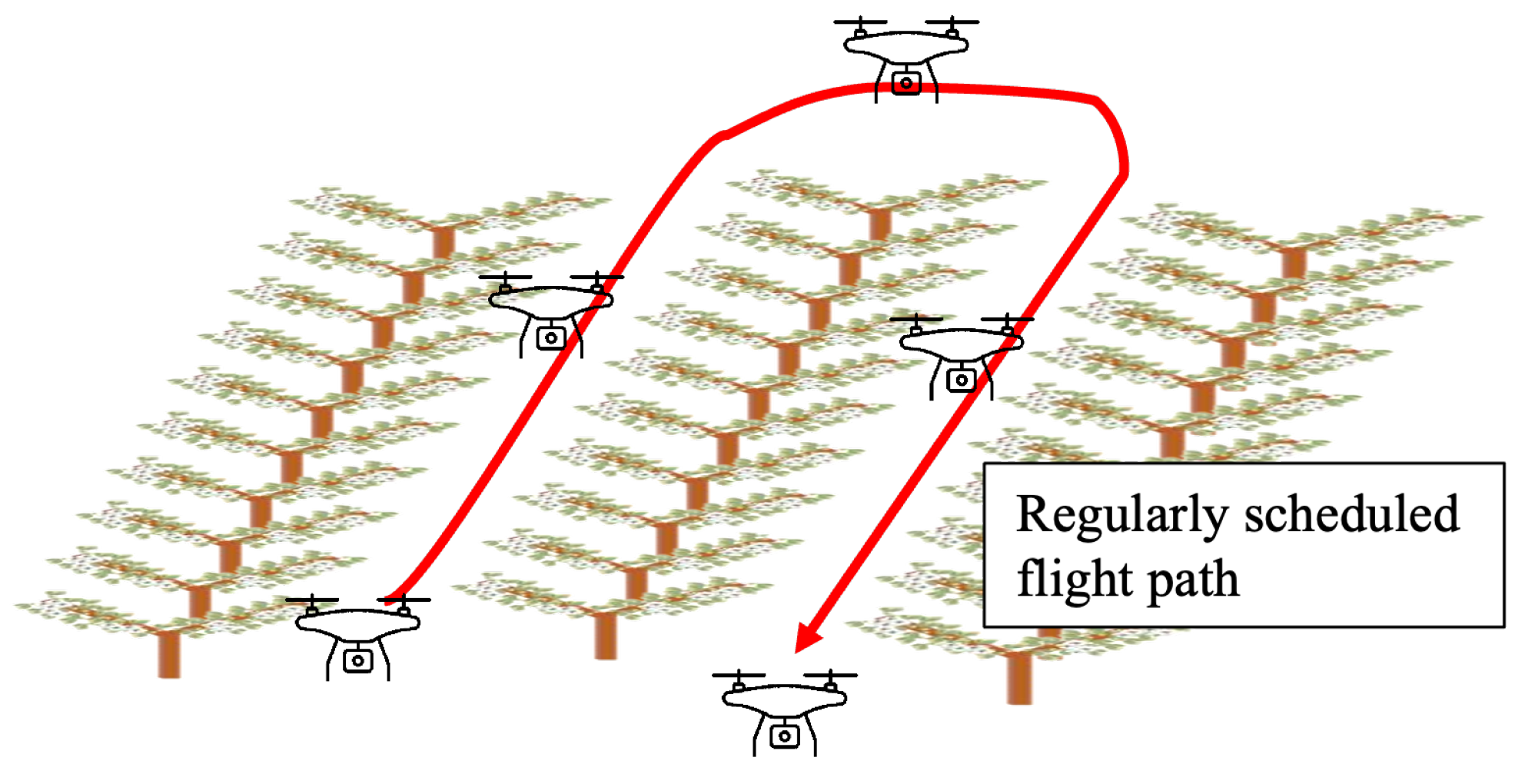

- Integrated Flower Detection and 3D Localization: The proposed system uses an observation drone equipped with RGB and depth cameras to detect pear flowers and estimate their 3D coordinates with high accuracy, thereby enabling precise guidance for autonomous pollination.

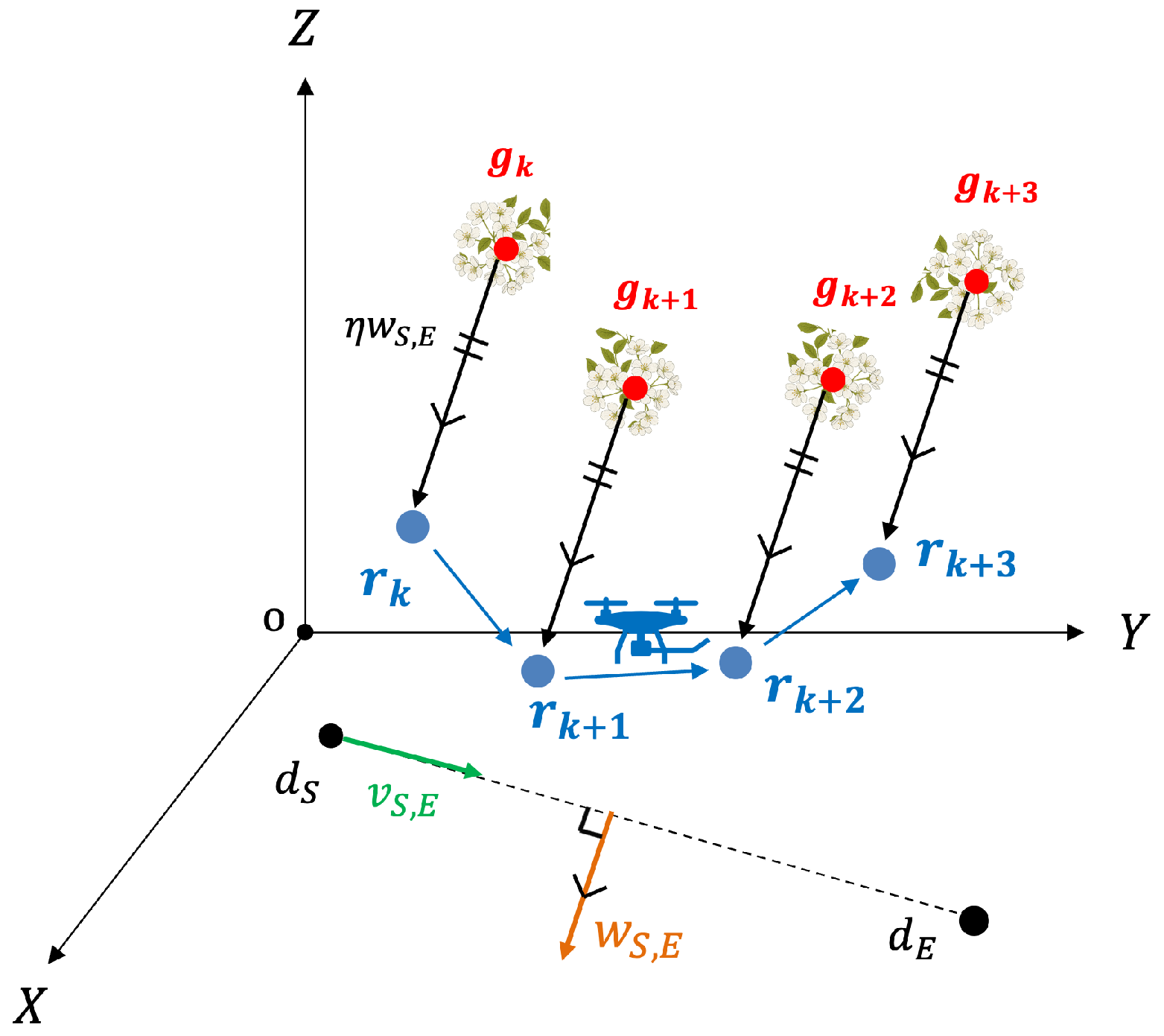

- Clustering-Based Flight Path Planning: We introduce a novel flight path construction method that applies a density-based clustering algorithm (OPTICS) to group spatially proximate flowers and determine efficient, branch-level waypoints. This approach reduces unnecessary drone movement and improves overall pollination efficiency.

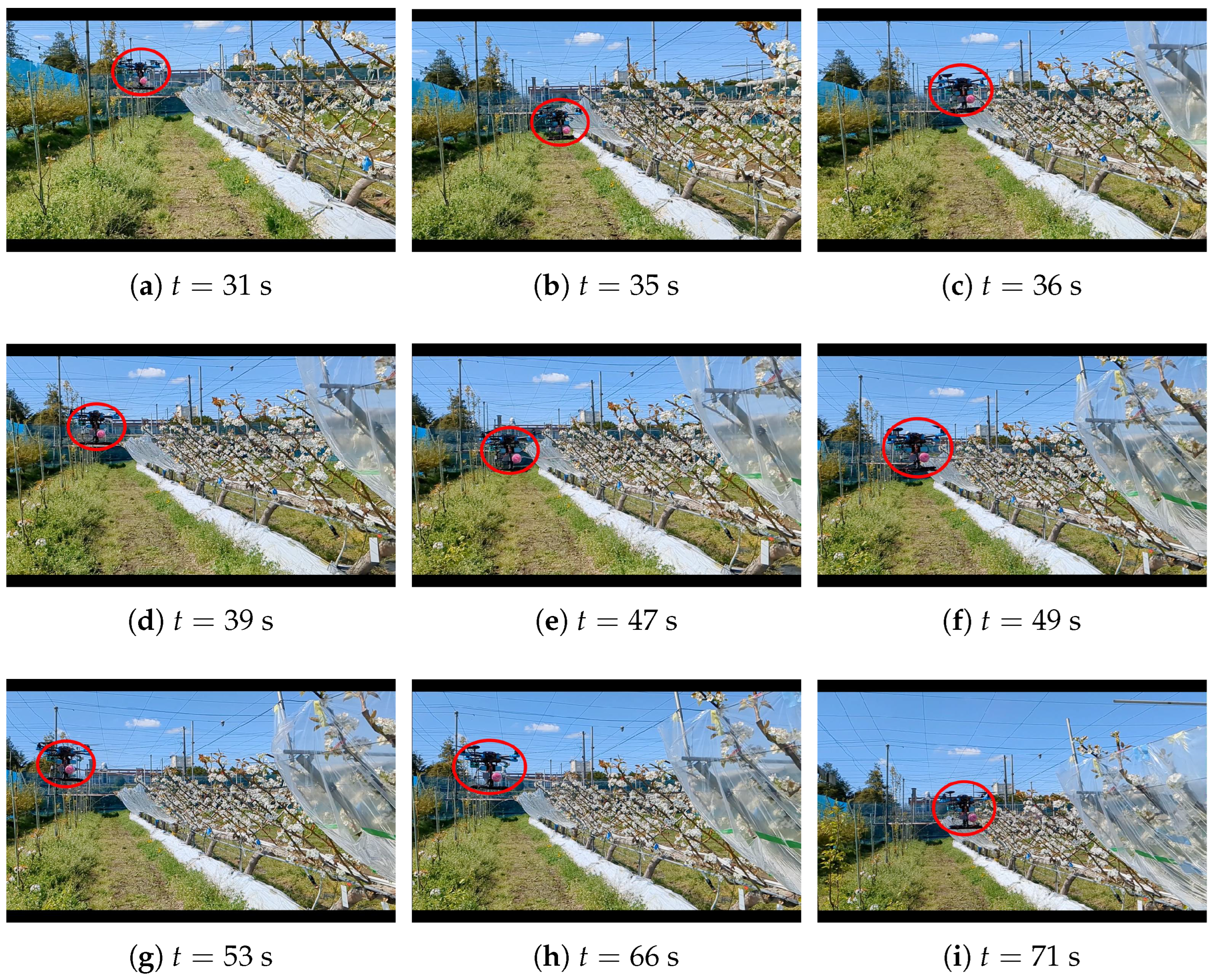

- Experimental Validation with RTK-Based Control: We demonstrate the system’s effectiveness through real-world orchard experiments using RTK-GNSS (real-time kinematic global navigation satellite system)-based flight control, confirming the accuracy of flower detection, position estimation, and autonomous pollination.

- Fruit Set Rate Evaluation: We further validated the proposed method by comparing its fruit set rate with those of natural and manual pollination. The drone-based method achieved a fruit set rate higher than that of natural pollination (62.1% vs. 53.6%) and comparable to that of manual pollination (61.9%), demonstrating its practical utility in real-world agricultural settings.
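The clustering-based waypoint idea in the second contribution can be illustrated with a short sketch. It assumes, for illustration only, that each cluster's waypoint is the centroid of its member flower coordinates and that waypoints are visited in greedy nearest-neighbor order from a start position. This excerpt does not state how the authors place or order branch-level waypoints, and `cluster_waypoints` and `greedy_route` are hypothetical names. Points labeled -1 are treated as noise, following the usual convention of density-based clustering.

```python
import math
from typing import Sequence

Vec3 = tuple[float, float, float]


def cluster_waypoints(points: Sequence[Sequence[float]],
                      labels: Sequence[int]) -> dict[int, Vec3]:
    """Return the centroid of each cluster; label -1 (noise) is skipped."""
    sums: dict[int, list[float]] = {}
    counts: dict[int, int] = {}
    for p, lab in zip(points, labels):
        if lab < 0:
            continue  # noise points do not get a waypoint
        acc = sums.setdefault(lab, [0.0, 0.0, 0.0])
        for k in range(3):
            acc[k] += p[k]
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: (acc[0] / counts[lab], acc[1] / counts[lab], acc[2] / counts[lab])
            for lab, acc in sums.items()}


def greedy_route(start: Sequence[float],
                 waypoints: dict[int, Vec3]) -> list[tuple[int, Vec3]]:
    """Visit waypoints in nearest-neighbor order, starting from `start`."""
    remaining = dict(waypoints)
    route: list[tuple[int, Vec3]] = []
    here = start
    while remaining:
        # Pick the closest unvisited waypoint to the current position.
        lab = min(remaining, key=lambda k: math.dist(here, remaining[k]))
        here = remaining.pop(lab)
        route.append((lab, here))
    return route
```

Greedy nearest-neighbor ordering is only a simple heuristic for the visiting order; it does not guarantee the shortest tour.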

2. Related Work

2.1. Vision-Based Machine Learning Applications in Agriculture

2.2. Flight Path Planning for Drones

3. Proposed Flight Path Construction Method for the Pollination Drone

3.1. Overview

- Step 1. Train the flower detection algorithm.

- Step 2. Capture video footage with the observation drone.

- Step 3. Apply the flower detection algorithm to the video.

- Step 4. Extract flower coordinates.

- Step 5. Remove unnecessary points.

- Step 6. Determine the flight path.

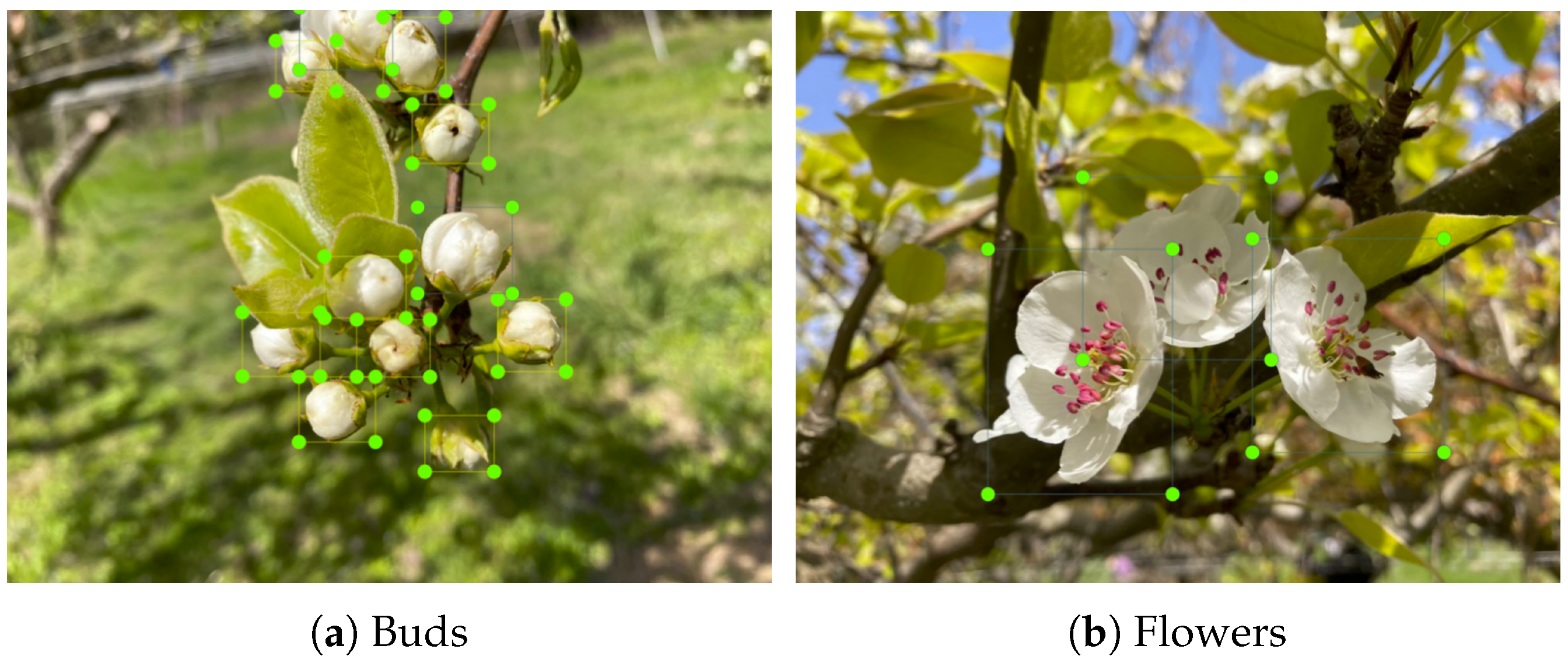

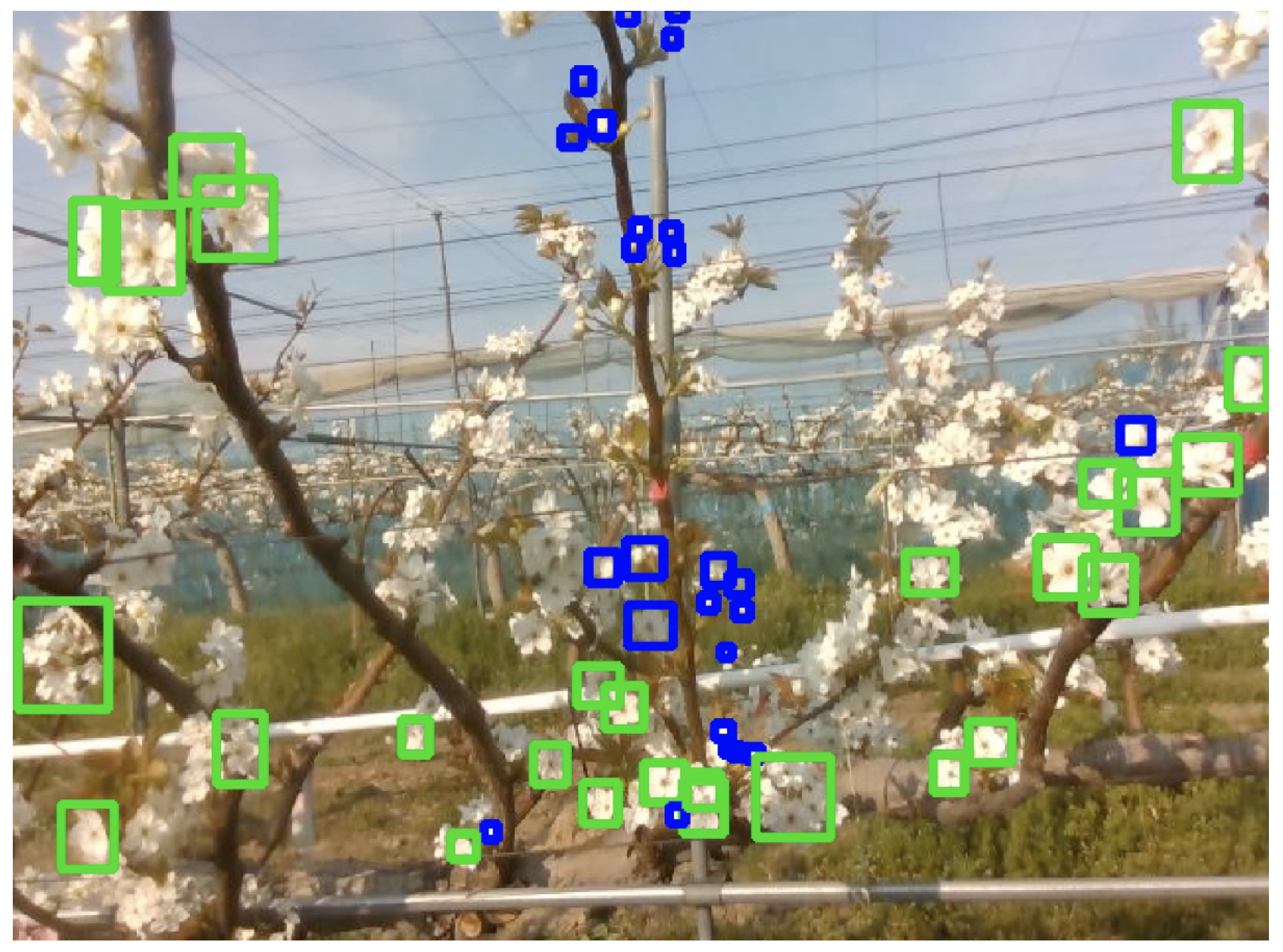

3.2. Training the Flower Detection Algorithm

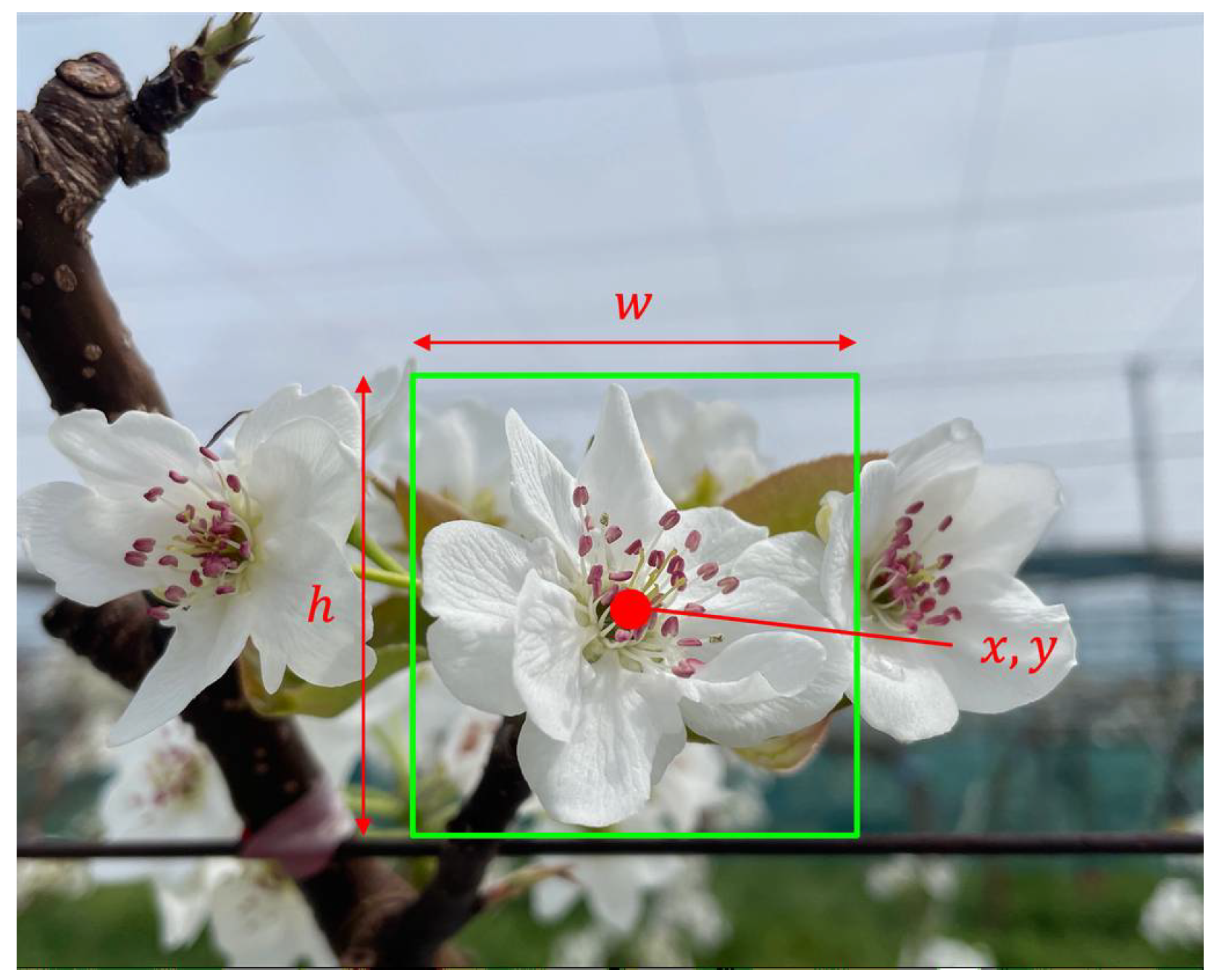

- Bounding Box: A bounding box is a rectangle that indicates the region where an object is detected, representing the position of the detected object within the image (Figure 6). It is typically defined by four parameters: the central coordinates $(x, y)$, width $w$, and height $h$. For example, if an object is detected in an image at position $(x, y)$ with width $w$ and height $h$, the bounding box can be represented as:

  $$B = (x, y, w, h)$$

- Confidence Score: Each bounding box is assigned a confidence score that reflects both the probability that an object is present within the box and the accuracy of the predicted box. The confidence score $C$ is calculated as follows:

  $$C = P(\text{Object}) \times \mathrm{IoU}^{\text{truth}}_{\text{pred}}$$

  where $P(\text{Object})$ is the probability that an object is present inside the bounding box, and $\mathrm{IoU}^{\text{truth}}_{\text{pred}}$ (intersection over union) is the overlap ratio between the predicted bounding box and the ground-truth bounding box, i.e., the area of their intersection divided by the area of their union. A higher confidence score indicates both a higher probability that the bounding box contains an object and a closer match between the predicted box and the actual object’s position.
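The bounding box and confidence definitions can be made concrete in a few lines. This is a minimal sketch, assuming the center-format box $(x, y, w, h)$ and the YOLO-style confidence target $C = P(\text{Object}) \times \mathrm{IoU}$; the names `Box`, `iou`, and `confidence` are illustrative and are not taken from the authors' code.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Bounding box in center format: (x, y) is the center, w and h the size."""
    x: float
    y: float
    w: float
    h: float

    def corners(self) -> tuple[float, float, float, float]:
        # Convert (x, y, w, h) to (x_min, y_min, x_max, y_max).
        return (self.x - self.w / 2, self.y - self.h / 2,
                self.x + self.w / 2, self.y + self.h / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two center-format boxes."""
    ax1, ay1, ax2, ay2 = a.corners()
    bx1, by1, bx2, by2 = b.corners()
    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = a.w * a.h + b.w * b.h - inter
    return inter / union if union > 0 else 0.0


def confidence(p_object: float, pred: Box, truth: Box) -> float:
    """C = P(Object) * IoU(pred, truth)."""
    return p_object * iou(pred, truth)
```

For example, a 20 × 20 predicted box shifted 5 pixels horizontally from a 20 × 20 ground-truth box overlaps in a 15 × 20 region, giving IoU = 300 / 500 = 0.6; with $P(\text{Object}) = 0.9$, the confidence is 0.54.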

3.3. Capture of the Observation Drone Video

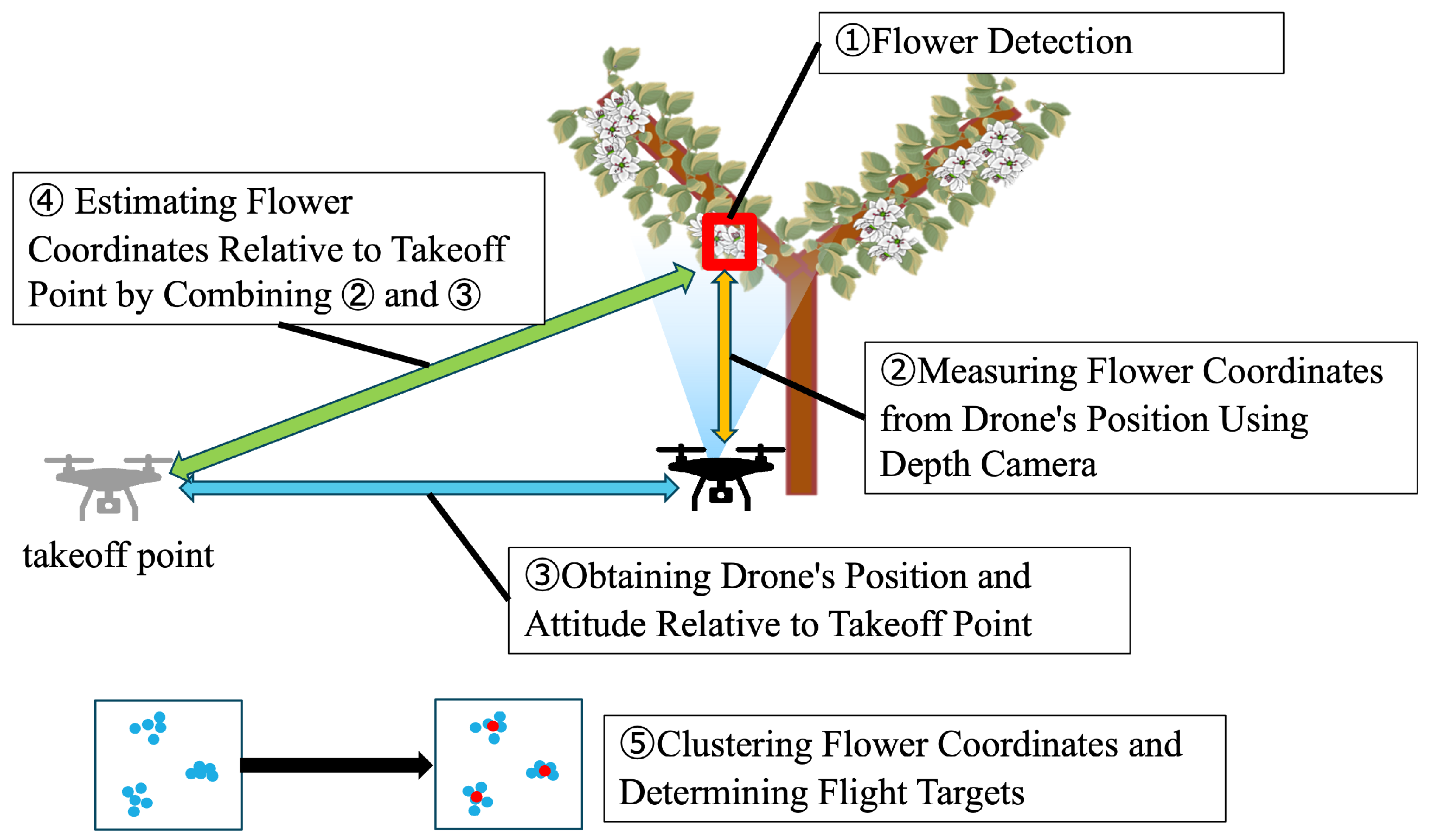

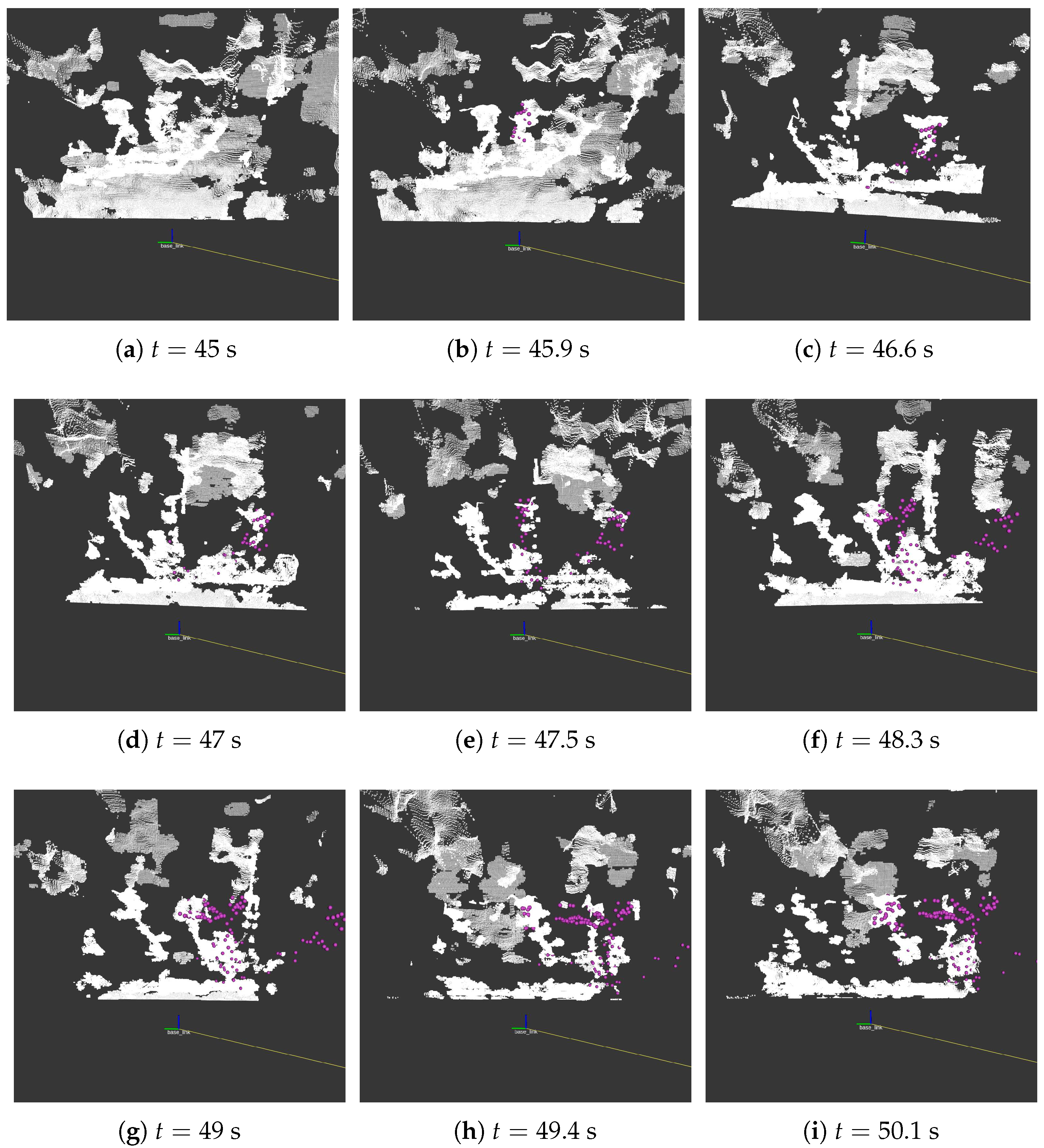

3.4. Estimation of Flower Coordinates

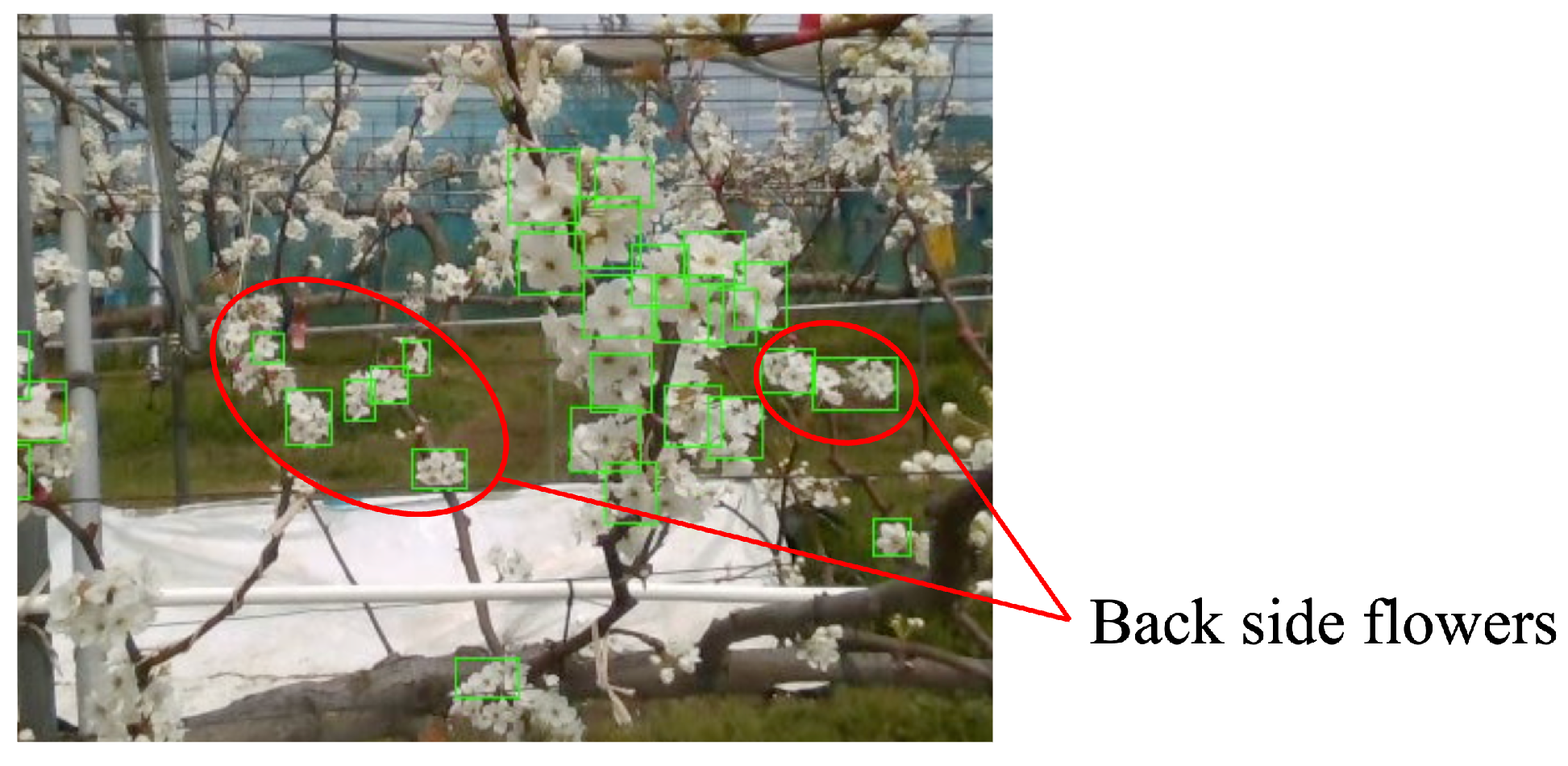
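This excerpt does not spell out how detected pixels are converted into 3D flower coordinates. As a hedged illustration only, a common approach with a depth image aligned to the color image (such as the `/camera/aligned_depth_to_color/image_raw` topic listed in the recorded data) is pinhole back-projection followed by a rigid transform into the drone's local frame using a camera pose. The intrinsics `fx`, `fy`, `cx`, `cy`, the function names, and the single combined camera-to-world pose are assumptions; lens distortion is ignored.

```python
from typing import Sequence

Vec3 = tuple[float, float, float]


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) with metric depth.

    Assumes an undistorted image; the camera frame has x right, y down,
    and z along the optical axis.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def quat_rotate(q: Sequence[float], p: Sequence[float]) -> Vec3:
    """Rotate vector p by the unit quaternion q = (w, x, y, z)."""
    w, qx, qy, qz = q
    px, py, pz = p
    # t = 2 * (q_vec x p)
    tx = 2.0 * (qy * pz - qz * py)
    ty = 2.0 * (qz * px - qx * pz)
    tz = 2.0 * (qx * py - qy * px)
    # p' = p + w * t + q_vec x t
    return (px + w * tx + (qy * tz - qz * ty),
            py + w * ty + (qz * tx - qx * tz),
            pz + w * tz + (qx * ty - qy * tx))


def camera_to_world(p_cam: Sequence[float], q_wc: Sequence[float],
                    t_wc: Sequence[float]) -> Vec3:
    """Apply a camera-to-world pose (rotation q_wc, then translation t_wc)."""
    r = quat_rotate(q_wc, p_cam)
    return (r[0] + t_wc[0], r[1] + t_wc[1], r[2] + t_wc[2])
```

In practice, the intrinsics would come from the camera calibration, and the camera-to-world pose would be composed from the drone pose and the camera mounting transform (for example, via `/tf`). For RealSense cameras, `pyrealsense2.rs2_deproject_pixel_to_point` performs the back-projection using the device-reported intrinsics.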

3.5. Removal of Unnecessary Points

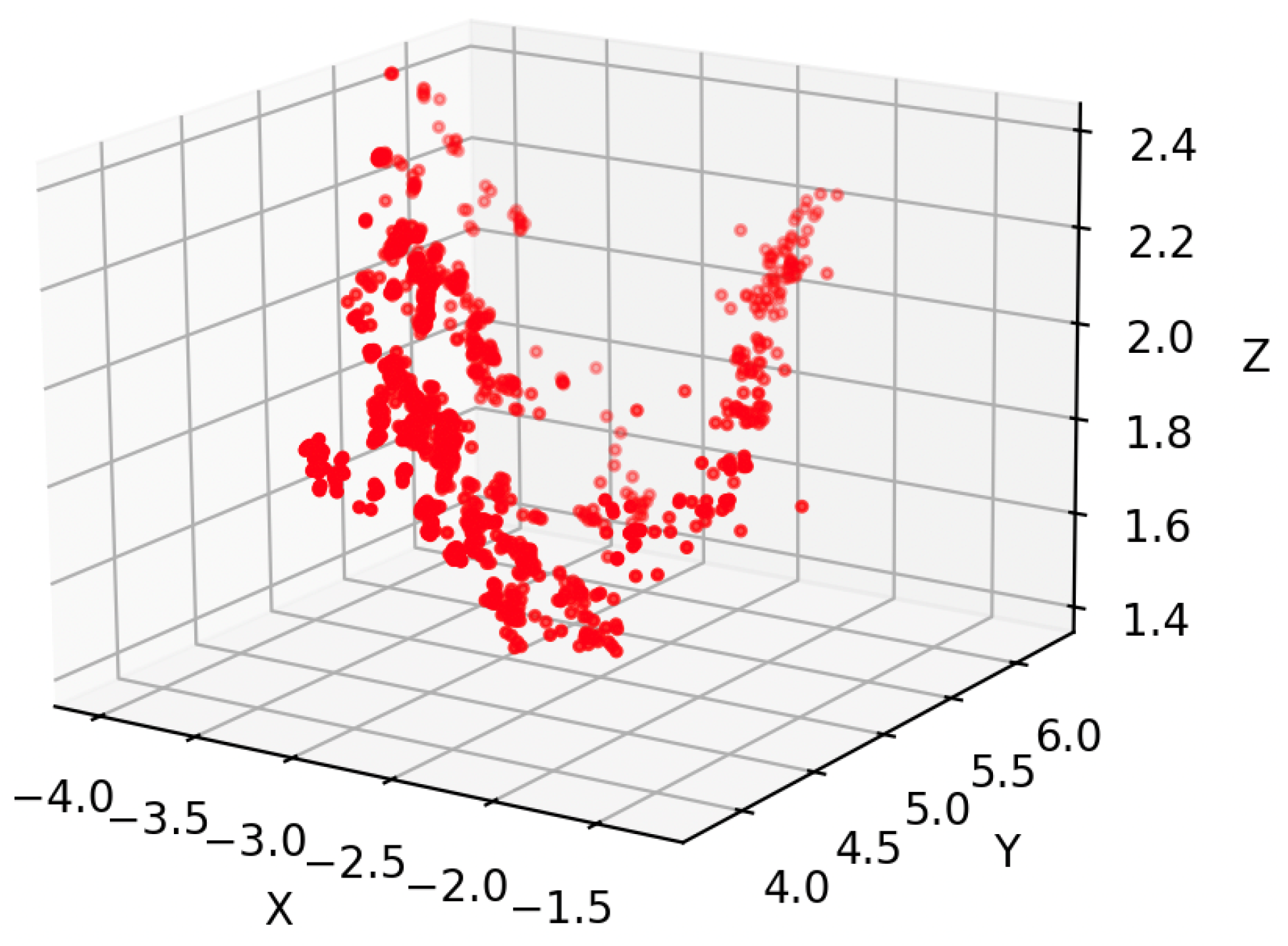

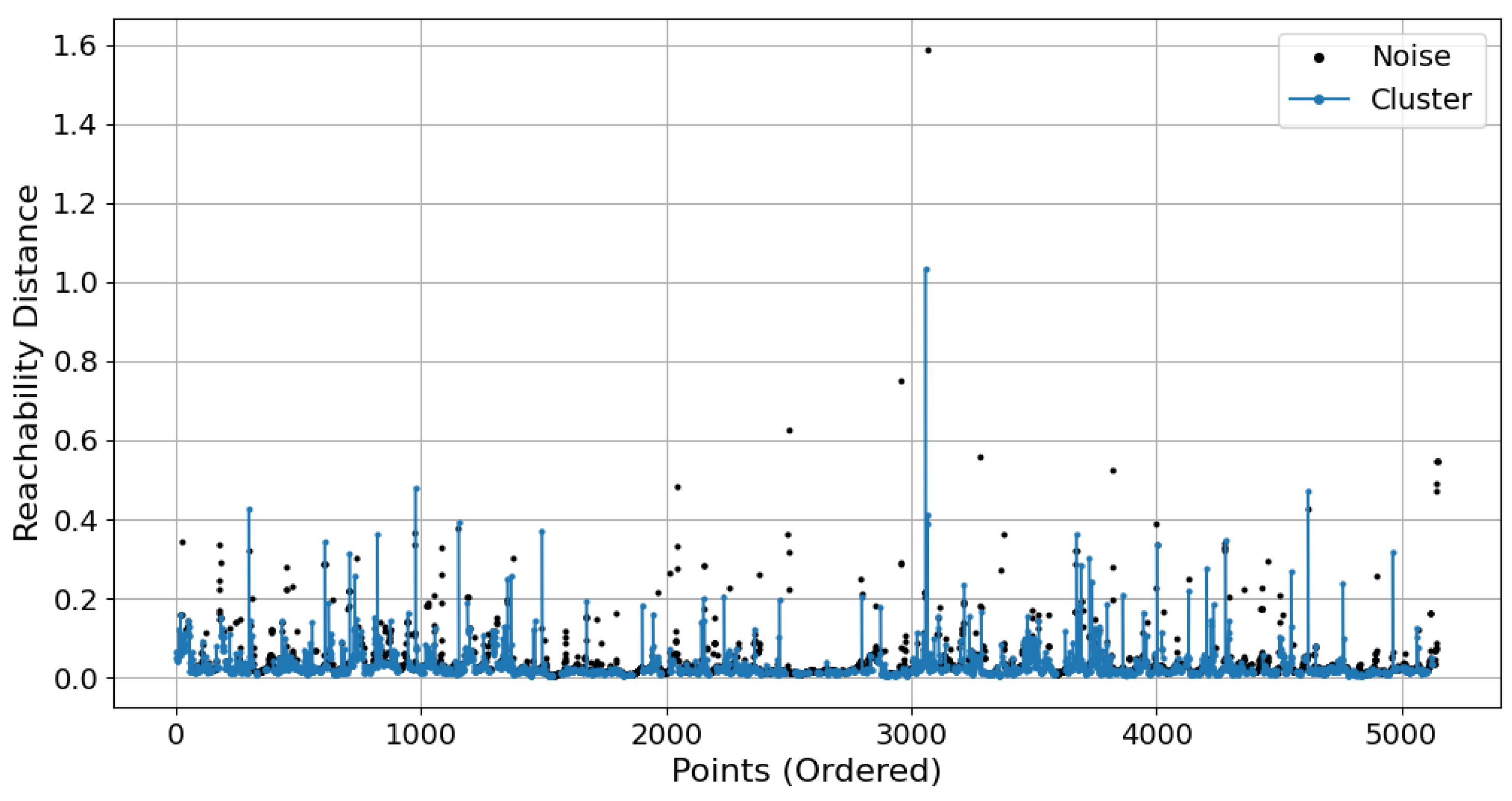

3.6. Clustering of Flower Coordinates Using OPTICS

- Step 1. Initialize each element $p \in D$ as unprocessed, where $D$ is the set of estimated flower coordinates. Subsequently, initialize $U$ as $D$.

- Step 2. If $U = \emptyset$, terminate the algorithm; otherwise, select an element $p$ from $U$. Update $U$ as $U \setminus \{p\}$.

- Step 3. If $p$ is processed, return to Step 2; otherwise, compute the neighborhood $N_\varepsilon(p)$ as the set of points within a distance $\varepsilon$ of $p$. If $N_\varepsilon(p)$ contains at least $\mathit{MinPts}$ points, consider $p$ a core point. Define the core distance $\mathrm{core}(p)$ as the distance from $p$ to its $\mathit{MinPts}$-th nearest neighbor within $N_\varepsilon(p)$:

  $$\mathrm{core}(p) = \begin{cases} \text{undefined}, & |N_\varepsilon(p)| < \mathit{MinPts}, \\ d\bigl(p, \mathrm{NN}_{\mathit{MinPts}}(p)\bigr), & \text{otherwise}, \end{cases}$$

  where $d(\cdot, \cdot)$ is the distance between two points and $\mathrm{NN}_{\mathit{MinPts}}(p)$ is the $\mathit{MinPts}$-th nearest neighbor of $p$. When the computation of the neighborhood is complete, mark $p$ as processed and append it to the ordered list $O$ (initially empty) to preserve the density-based traversal order.

- Step 4. If $\mathrm{core}(p)$ is defined, initialize a priority queue $Q$. For each unprocessed point $o \in N_\varepsilon(p)$, compute its reachability distance from $p$ as

  $$\mathrm{reach}(o, p) = \max\bigl\{\mathrm{core}(p),\ d(p, o)\bigr\}$$

  If $o$ does not yet have a reachability distance assigned to it, assign the computed value and insert $o$ into $Q$. If $o$ is already in $Q$ with a larger reachability distance, update its value and adjust its position in the queue accordingly.

- Step 5. While $Q$ is not empty, remove the point $q$ with the smallest reachability distance from $Q$. If $q$ is unprocessed, mark it as processed and append it to $O$. Then, compute its $\varepsilon$-neighborhood $N_\varepsilon(q)$ and its core distance $\mathrm{core}(q)$. If $q$ is a core point, process its neighbors in the same manner as described in Step 4, inserting or updating them in $Q$.

- Step 6. When all points reachable from $p$ have been processed, return to Step 2.
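The OPTICS steps above can be sketched in plain Python. This is a minimal sketch, not the authors' implementation: it assumes Euclidean distance on 3D flower coordinates, a neighborhood radius `eps` and a minimum point count `min_pts`, a brute-force O(n²) neighborhood search, and counts a point in its own neighborhood as in the original OPTICS paper. A binary heap with lazy deletion (push a new entry, skip stale ones when popped) stands in for the decrease-key priority queue of Step 4. The helper `extract_clusters` is a hypothetical addition that cuts the ordering at a threshold `eps_prime`, following the DBSCAN-style extraction described by Ankerst et al.; this excerpt does not state which extraction the authors used.

```python
import heapq
import math
from typing import Optional, Sequence

Point = Sequence[float]


def optics(points: list[Point], eps: float, min_pts: int):
    """Compute the OPTICS ordering.

    Returns (order, reach, core):
      order - point indices in density-based traversal order (the ordered list)
      reach - reachability distance per point (math.inf if never reached)
      core  - core distance per point (None if the point is not a core point)
    """
    n = len(points)
    processed = [False] * n
    reach = [math.inf] * n
    core: list[Optional[float]] = [None] * n
    order: list[int] = []

    def neighbors(i: int) -> list[int]:
        # Brute-force eps-neighborhood; includes i itself (distance 0).
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    def core_distance(i: int, nbrs: list[int]) -> Optional[float]:
        if len(nbrs) < min_pts:
            return None
        dists = sorted(math.dist(points[i], points[j]) for j in nbrs)
        return dists[min_pts - 1]  # MinPts-th nearest, counting i itself

    def update(p: int, nbrs: list[int], queue: list) -> None:
        # Step 4: assign or lower the reachability of unprocessed neighbors.
        for o in nbrs:
            if processed[o]:
                continue
            new_reach = max(core[p], math.dist(points[p], points[o]))
            if new_reach < reach[o]:
                reach[o] = new_reach
                # Push a fresh entry; the stale one is skipped when popped.
                heapq.heappush(queue, (new_reach, o))

    for p in range(n):                      # Step 2: next element of U
        if processed[p]:
            continue
        nbrs = neighbors(p)                 # Step 3
        core[p] = core_distance(p, nbrs)
        processed[p] = True
        order.append(p)
        if core[p] is None:
            continue
        queue: list[tuple[float, int]] = []
        update(p, nbrs, queue)
        while queue:                        # Step 5
            r, q = heapq.heappop(queue)
            if processed[q] or r > reach[q]:
                continue                    # already handled or stale entry
            q_nbrs = neighbors(q)
            core[q] = core_distance(q, q_nbrs)
            processed[q] = True
            order.append(q)
            if core[q] is not None:
                update(q, q_nbrs, queue)
    return order, reach, core               # Step 6 is the outer loop


def extract_clusters(order, reach, core, eps_prime: float) -> list[int]:
    """DBSCAN-style cut of the ordering at eps_prime; -1 marks noise."""
    labels = [-1] * len(order)
    cluster = -1
    for i in order:
        if reach[i] > eps_prime:
            if core[i] is not None and core[i] <= eps_prime:
                cluster += 1                # start a new cluster
                labels[i] = cluster
            # otherwise i stays labeled as noise
        else:
            labels[i] = cluster
    return labels
```

The brute-force neighborhood search keeps the sketch short; for larger point sets, a spatial index such as a k-d tree would normally replace it.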

4. Performance Evaluation

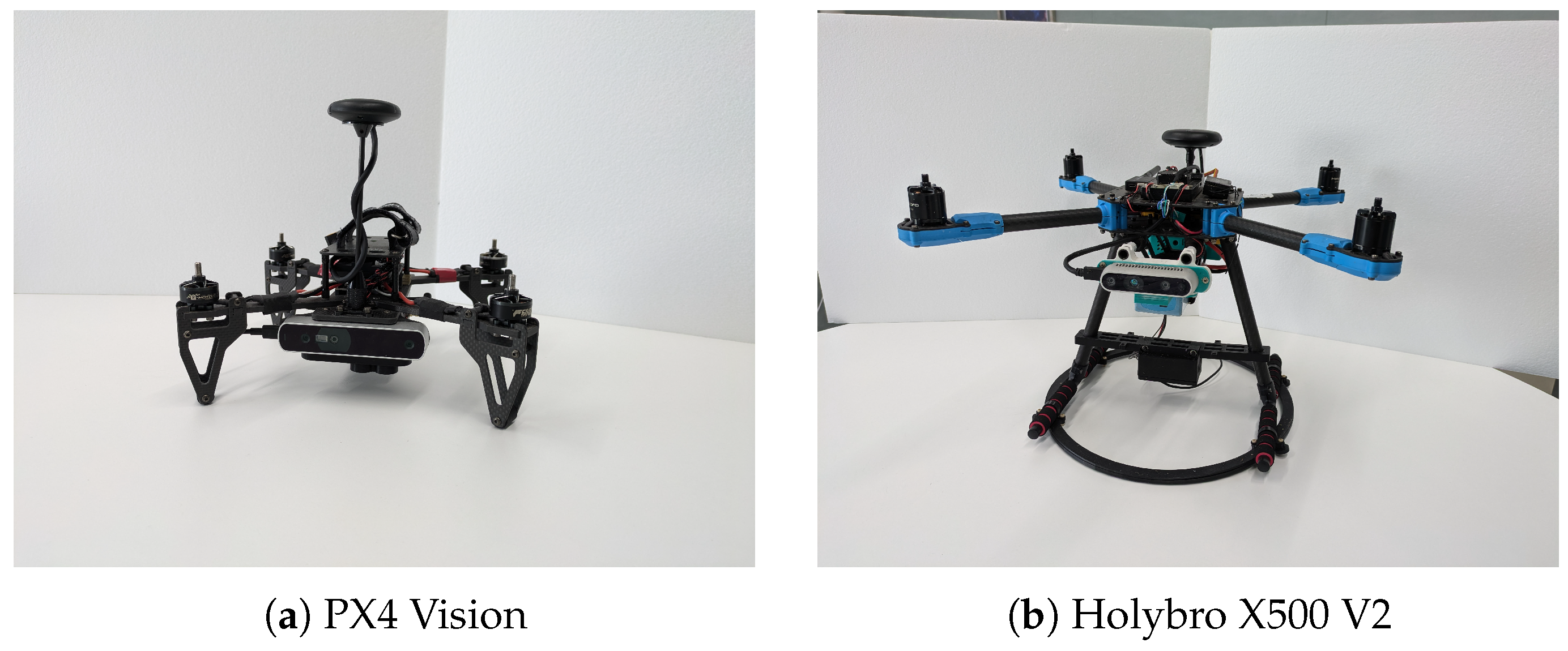

4.1. Experimental Setting

4.2. Results

4.2.1. Flower Detection Performance

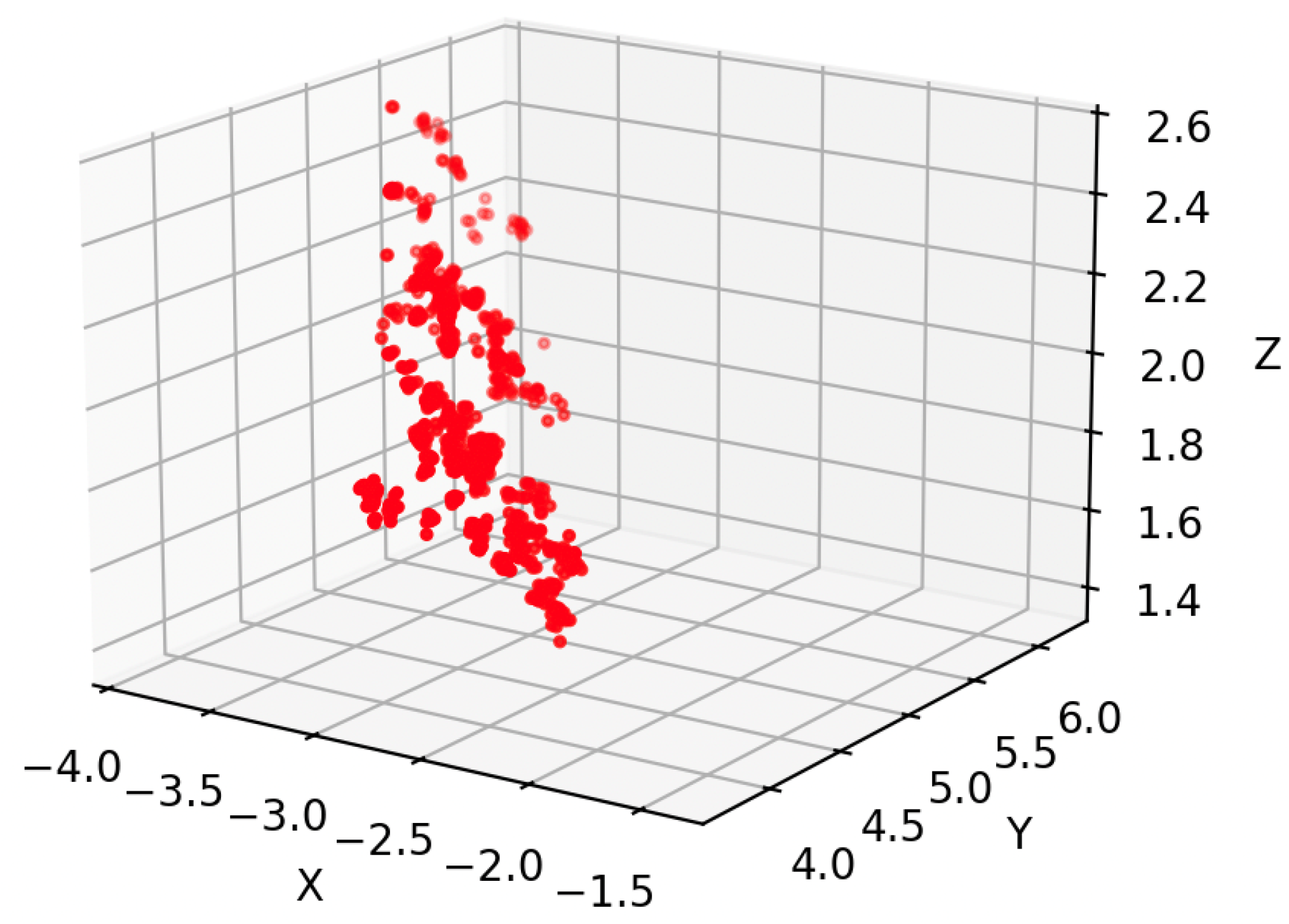

4.2.2. Detection Accuracy of Flower Positions

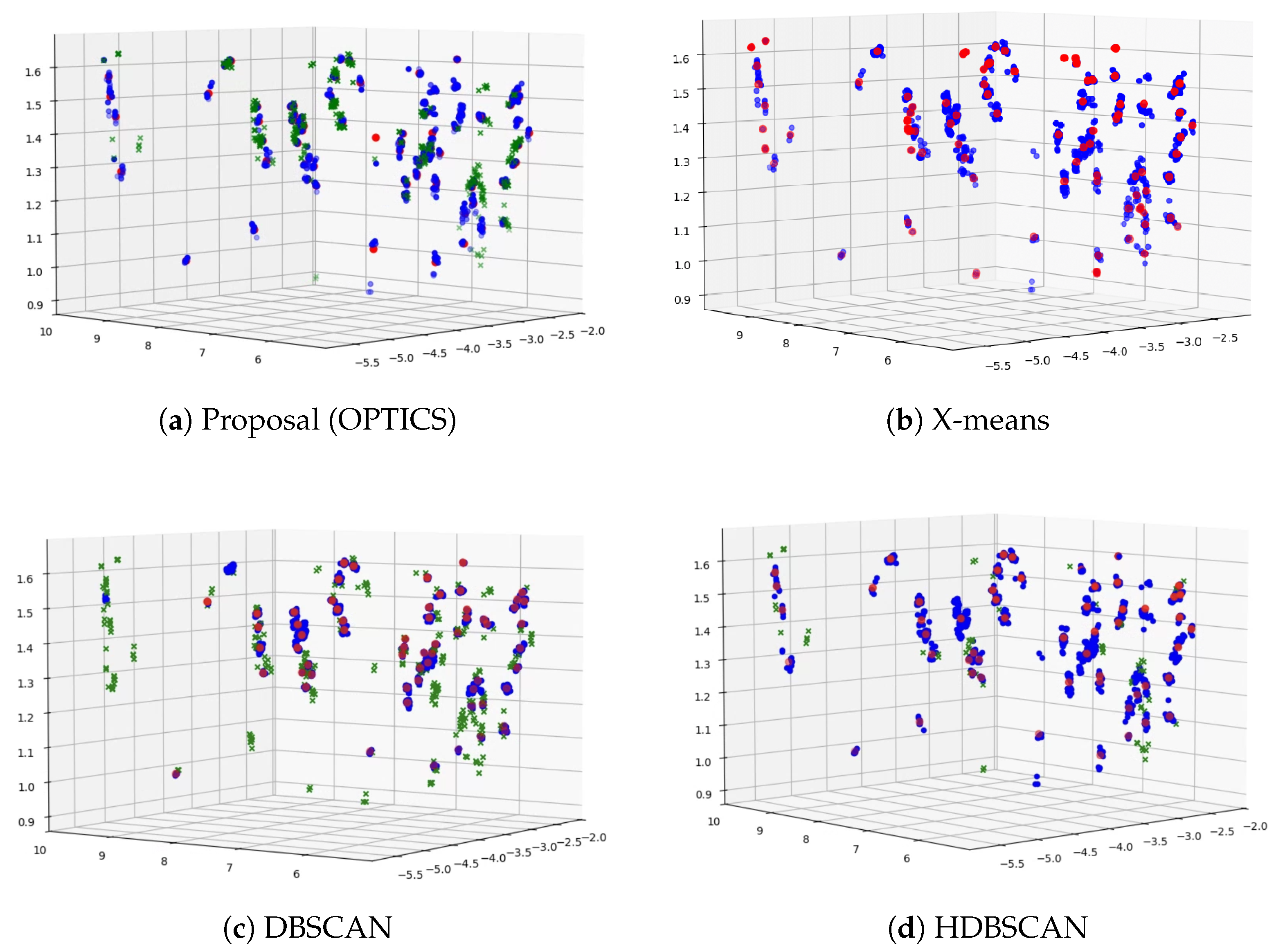

4.2.3. Clustering Performance

- X-means: X-means is an extension of the K-means clustering algorithm that automatically determines the optimal number of clusters by iteratively splitting existing clusters based on a statistical criterion, such as the Bayesian information criterion.

- DBSCAN: DBSCAN is a density-based clustering algorithm that groups closely packed points and marks points in low-density regions as outliers (noise). It requires two parameters: a neighborhood radius and the minimum number of points required to form a dense region.

- HDBSCAN: HDBSCAN is a hierarchical density-based clustering algorithm that extends DBSCAN by building a hierarchy of clusters across density levels and extracting the most stable clusters. It can handle clusters of varying densities and is less sensitive to parameter selection.
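DBSCAN, as described in the list above, can be sketched as a breadth-first expansion from core points. This is a minimal, brute-force sketch assuming Euclidean distance on 3D flower coordinates and counting a point in its own neighborhood; it is not the implementation used in the evaluation, and the name `dbscan` is illustrative.

```python
import math
from collections import deque
from typing import Sequence


def dbscan(points: list[Sequence[float]], eps: float, min_pts: int) -> list[int]:
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    UNVISITED, NOISE = -2, -1
    labels = [UNVISITED] * n

    def region(i: int) -> list[int]:
        # Epsilon-neighborhood, including i itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] != UNVISITED:
            continue
        nbrs = region(i)
        if len(nbrs) < min_pts:
            labels[i] = NOISE          # may later be relabeled as a border point
            continue
        labels[i] = cluster
        frontier = deque(nbrs)
        while frontier:
            j = frontier.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point: joins but does not expand
            if labels[j] != UNVISITED:
                continue
            labels[j] = cluster
            j_nbrs = region(j)
            if len(j_nbrs) >= min_pts:  # j is a core point: keep expanding
                frontier.extend(j_nbrs)
        cluster += 1
    return labels
```

Unlike OPTICS, this produces a single flat clustering for one fixed radius, which is why its results depend more directly on the choice of `eps`.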

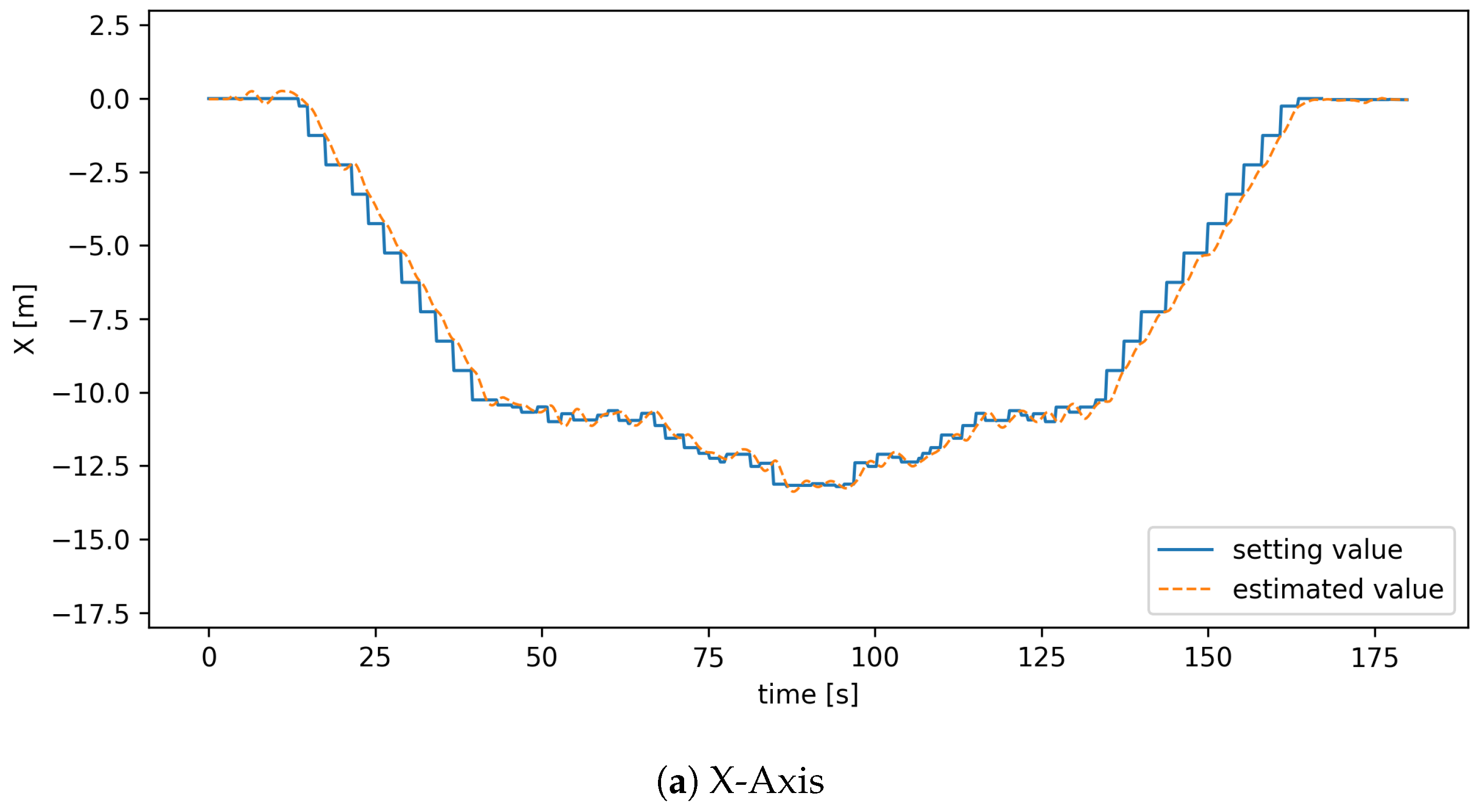

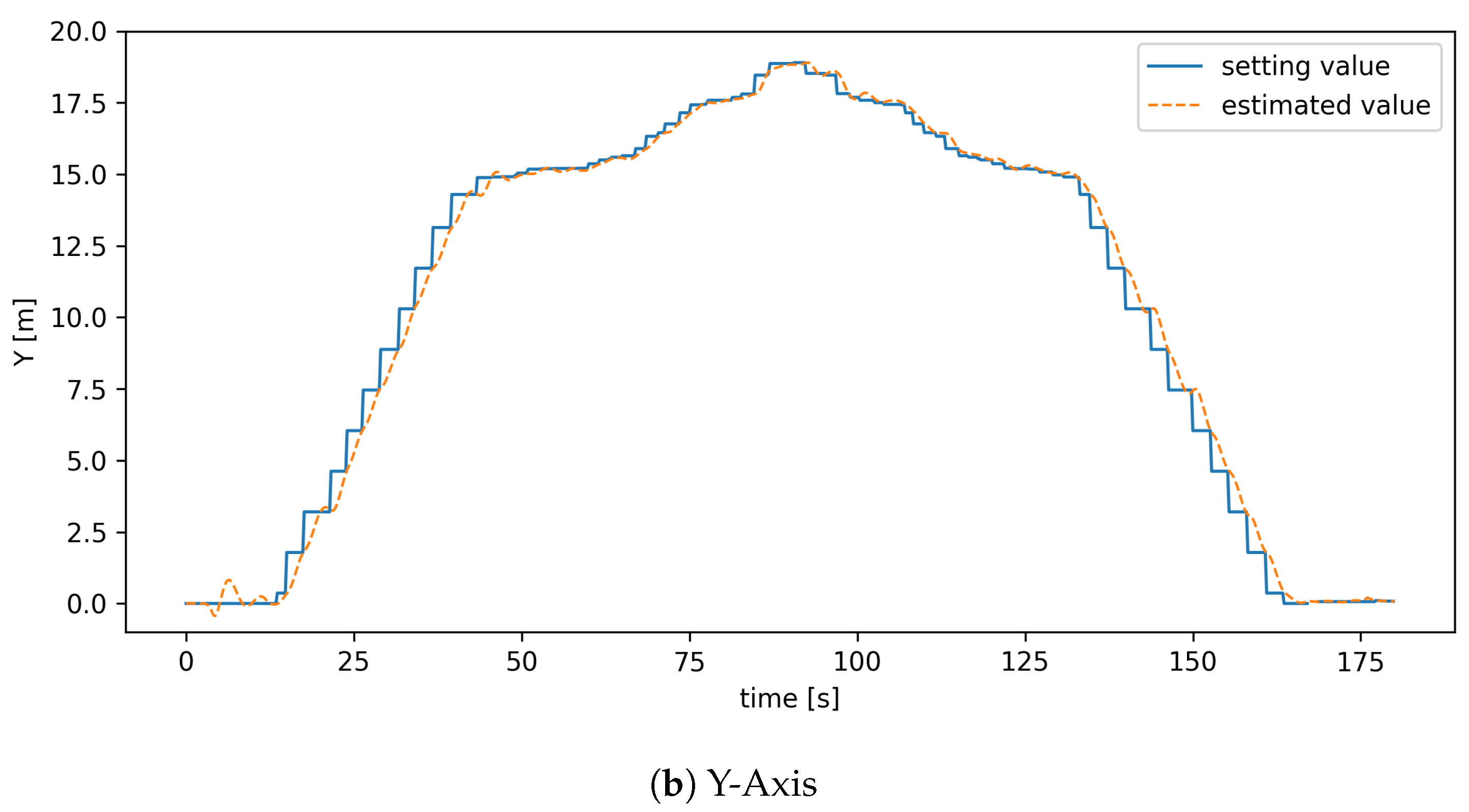

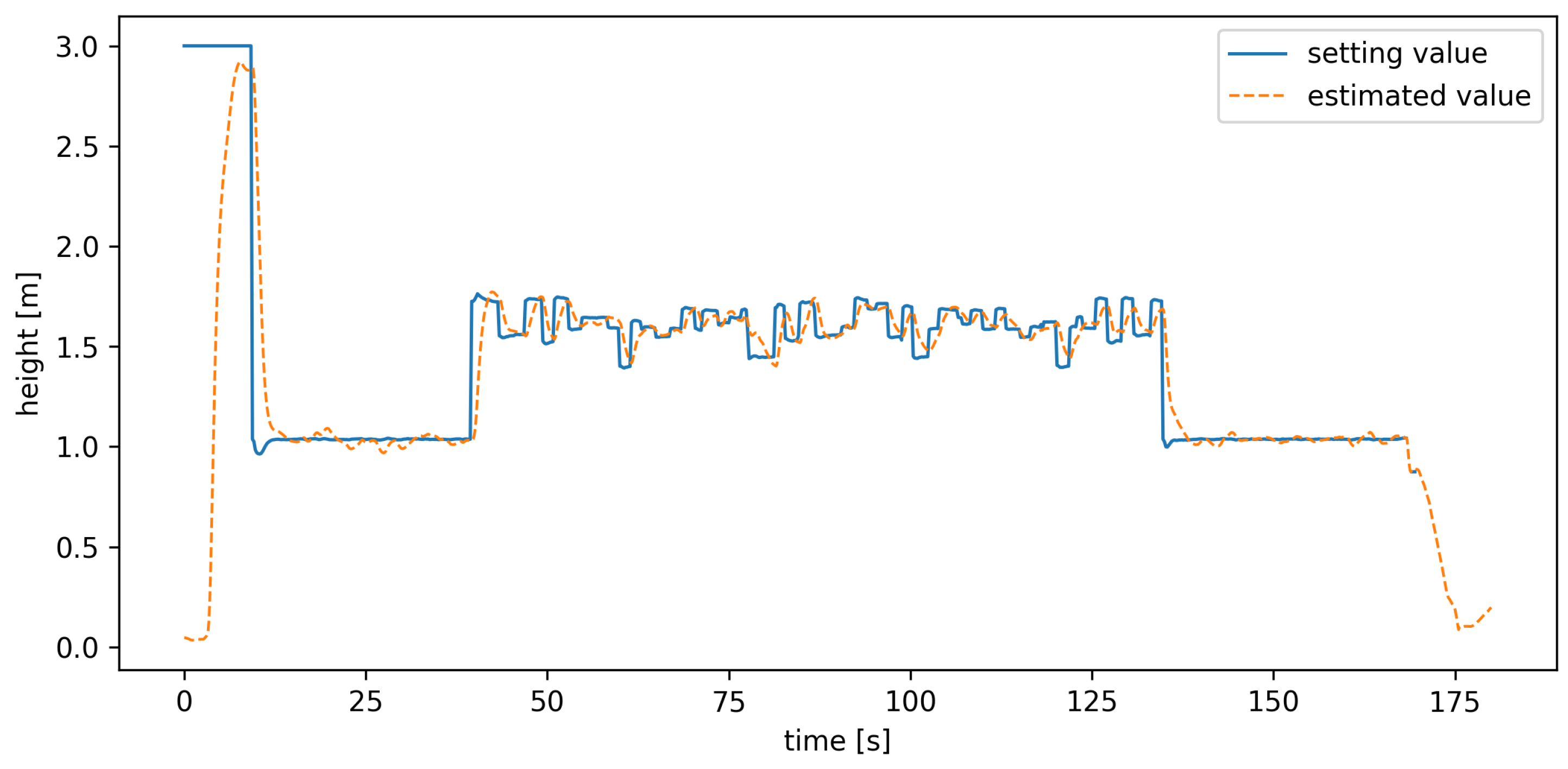

4.2.4. Flight Path Performance

4.2.5. Fruit Set Rate

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Potts, S.G.; Biesmeijer, J.C.; Kremen, C.; Neumann, P.; Schweiger, O.; Kunin, W.E. Global pollinator declines: Trends, impacts and drivers. Trends Ecol. Evol. 2010, 25, 345–353.

- Chaffer, L. Pollinators, bees and food. Geogr. Bull. 2020, 52, 68–69.

- Martén-Rodríguez, S.; Cristobal-Pérez, E.J.; de Santiago-Hernández, M.H.; Huerta-Ramos, G.; Clemente-Martínez, L.; Krupnick, G.; Taylor, O.; Lopezaraiza-Mikel, M.; Balvino-Olvera, F.J.; Sentíes-Aguilar, E.M.; et al. Untangling the complexity of climate change effects on plant reproductive traits and pollinators: A systematic global synthesis. Glob. Change Biol. 2025, 31, e70081.

- Kerr, J.T.; Pindar, A.; Galpern, P.; Packer, L.; Potts, S.G.; Roberts, S.M.; Rasmont, P.; Schweiger, O.; Colla, S.R.; Richardson, L.L.; et al. Climate change impacts on bumblebees converge across continents. Science 2015, 349, 177–180.

- Broussard, M.A.; Coates, M.; Martinsen, P. Artificial pollination technologies: A review. Agronomy 2023, 13, 1351.

- Quinet, M.; Jacquemart, A.L. Troubles in pear pollination: Effects of collection and storage method on pollen viability and fruit production. Acta Oecol. 2020, 105, 103558.

- Wurz, A.; Grass, I.; Tscharntke, T. Hand pollination of global crops–A systematic review. Basic Appl. Ecol. 2021, 56, 299–321.

- Olson, D.; Anderson, J. Review on unmanned aerial vehicles, remote sensors, imagery processing, and their applications in agriculture. Agron. J. 2021, 113, 971–992.

- Ayamga, M.; Akaba, S.; Nyaaba, A.A. Multifaceted applicability of drones: A review. Technol. Forecast. Soc. Chang. 2021, 167, 120677.

- Rejeb, A.; Rejeb, K.; Simske, S.J.; Treiblmaier, H. Drones for supply chain management and logistics: A review and research agenda. Int. J. Logist. Res. Appl. 2023, 26, 708–731.

- Goodchild, A.; Toy, J. Delivery by drone: An evaluation of unmanned aerial vehicle technology in reducing CO2 emissions in the delivery service industry. Transp. Res. Part D Transp. Environ. 2018, 61, 58–67.

- Rakha, T.; Gorodetsky, A. Review of Unmanned Aerial System (UAS) applications in the built environment: Towards automated building inspection procedures using drones. Autom. Constr. 2018, 93, 252–264.

- Rymer, N.; Moore, A.J. A Review of Unmanned Aerial Vehicle Technology in Power Line Inspection. In Proceedings of the AIAA Scitech 2021, Virtual, 11–15 January 2021.

- Erdelj, M.; Król, M.; Natalizio, E. Wireless sensor networks and multi-UAV systems for natural disaster management. Comput. Netw. 2017, 124, 72–86.

- Yucesoy, E.; Balcik, B.; Coban, E. The role of drones in disaster response: A literature review of operations research applications. Int. Trans. Oper. Res. 2025, 32, 545–589.

- Koh, L.P.; Wich, S.A. Dawn of drone ecology: Low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 2012, 5, 121–132.

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in agriculture: A review and bibliometric analysis. Comput. Electron. Agric. 2022, 198, 107017.

- Marzuki, O.F.; Teo, E.Y.L.; Rafie, A.S.M. The mechanism of drone seeding technology: A review. Malays. For. 2021, 84, 349–358.

- Miyoshi, K.; Hiraguri, T.; Shimizu, H.; Hattori, K.; Kimura, T.; Okubo, S.; Endo, K.; Shimada, T.; Shibasaki, A.; Takemura, Y. Development of Pear Pollination System Using Autonomous Drones. AgriEngineering 2025, 7, 68.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Radočaj, D.; Plaščak, I.; Jurišić, M. Global navigation satellite systems as state-of-the-art solutions in precision agriculture: A review of studies indexed in the web of science. Agriculture 2023, 13, 1417.

- Okubo, S.; Tsunewaki, K.; Kimura, T.; Shimizu, H.; Hiraguri, T.; Shibasaki, A.; Shimada, T.; Takemura, Y. Pear Blossom Counting System with Drones Using YOLO and Deep SORT. In Proceedings of the 2024 International Conference on Image Processing and Robotics (ICIPRoB), Colombo, Sri Lanka, 9–10 March 2024; pp. 1–4.

- Lim, J.; Ahn, H.S.; Nejati, M.; Bell, J.; Williams, H.; MacDonald, B.A. Deep neural network based real-time kiwi fruit flower detection in an orchard environment. arXiv 2020, arXiv:2006.04343.

- Shang, Y.; Xu, X.; Jiao, Y.; Wang, Z.; Hua, Z.; Song, H. Using lightweight deep learning algorithm for real-time detection of apple flowers in natural environments. Comput. Electron. Agric. 2023, 207, 107765.

- Mu, X.; He, L.; Heinemann, P.; Schupp, J.; Karkee, M. Mask R-CNN based apple flower detection and king flower identification for precision pollination. Smart Agric. Technol. 2023, 4, 100151.

- Wang, C.; Liu, S.; Wang, Y.; Xiong, J.; Zhang, Z.; Zhao, B.; Luo, L.; Lin, G.; He, P. Application of convolutional neural network-based detection methods in fresh fruit production: A comprehensive review. Front. Plant Sci. 2022, 13, 868745.

- Milicevic, M.; Zubrinic, K.; Grbavac, I.; Obradovic, I. Application of deep learning architectures for accurate detection of olive tree flowering phenophase. Remote Sens. 2020, 12, 2120.

- Wu, S.; Liu, J.; Lei, X.; Zhao, S.; Lu, J.; Jiang, Y.; Xie, B.; Wang, M. Research progress on efficient pollination technology of crops. Agronomy 2022, 12, 2872.

- Yang, L.; Li, P.; Qian, S.; Quan, H.; Miao, J.; Liu, M.; Hu, Y.; Memetimin, E. Path planning technique for mobile robots: A review. Machines 2023, 11, 980.

- Manasherov, O.; Degani, A. Multi-agent target allocation and safe trajectory planning for artificial pollination tasks. Smart Agric. Technol. 2024, 8, 100461.

- Zhang, Q.; Zhang, Z.; Manzoor, S.; Li, T.; Igathinathane, C.; Li, W.; Zhang, M.; Mhamed, M.; Javidan, S.; Abdelhamid, M. A comprehensive review of autonomous flower pollination techniques: Progress, challenges, and future directions. Comput. Electron. Agric. 2025, 237, 110577.

- Rice, C.R.; McDonald, S.T.; Shi, Y.; Gan, H.; Lee, W.S.; Chen, Y.; Wang, Z. Perception, path planning, and flight control for a drone-enabled autonomous pollination system. Robotics 2022, 11, 144.

- Hönig, W.; Preiss, J.A.; Kumar, T.S.; Sukhatme, G.S.; Ayanian, N. Trajectory planning for quadrotor swarms. IEEE Trans. Robot. 2018, 34, 856–869.

- Liu, H.; Chen, Z.; Wang, Z.; Li, J. An effective precision afforestation system for UAV. Sustainability 2023, 15, 2212.

- Brundavani, P.; Avanija, Y. Robot Path Planning and Tracking with the Flower Pollination Search Optimization Algorithm. J. Comput. Allied Intell. 2024, 2, 70–81.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Ankerst, M.; Breunig, M.M.; Kriegel, H.P.; Sander, J. OPTICS: Ordering points to identify the clustering structure. ACM Sigmod Rec. 1999, 28, 49–60.

- Saitama Prefectural Agricultural Technology Research Center. 2025. Available online: https://www.pref.saitama.lg.jp/soshiki/b0909/ (accessed on 31 May 2025).

- Intel. Intel RealSense Depth Camera D435i Specifications. 2025. Available online: https://www.intel.co.jp/content/www/jp/ja/products/sku/190004/intel-realsense-depth-camera-d435i/specifications.html (accessed on 31 May 2025).

- Holybro. PX4 Vision Kits. 2025. Available online: https://holybro.com/collections/multicopter-kit/products/px4-vision-dev-kit-v1-5 (accessed on 27 May 2025).

- Holybro. X500 V2 Kits. 2025. Available online: https://holybro.com/collections/x500-kits (accessed on 27 May 2025).

- QGroundControl. QGroundControl. 2025. Available online: https://qgroundcontrol.com/ (accessed on 27 May 2025).

- Open Source Robotics Foundation. Robot Operating System (ROS) Melodic. 2025. Available online: https://wiki.ros.org/melodic (accessed on 27 May 2025).

- Pelleg, D.; Moore, A. X-means: Extending K-means with Efficient Estimation of the Number of Clusters. In Proceedings of the ICML’00: Seventeenth International Conference on Machine Learning, Stanford, CA, USA, 29 June–2 July 2000; pp. 727–734.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD’96: Second International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; Volume 96, pp. 226–231.

- Campello, R.J.; Moulavi, D.; Zimek, A.; Sander, J. Hierarchical density estimates for data clustering, visualization, and outlier detection. ACM Trans. Knowl. Discov. Data (TKDD) 2015, 10, 1–51.

| Topic Name | Data Type | Count |
|---|---|---|
| /camera/color/image_raw/compressed | Color Image | 4468 |
| /camera/aligned_depth_to_color/image_raw | Depth Image | 2328 |
| /mavros/local_position/pose | Drone Position and Orientation | 9090 |
| /tf | Coordinate Transformation Data | 38,887 |

| Label | Precision | Recall | F1 Score | Average Precision |
|---|---|---|---|---|
| Bud | 0.833 | 0.745 | 0.787 | 0.825 |
| Blossom | 0.858 | 0.902 | 0.880 | 0.943 |

| Frontside | Backside | Total |
|---|---|---|
| 1106 | 221 | 1327 |

| Branch 1 | Branch 2 | Branch 3 | Branch 4 | Branch 5 | Branch 6 | Branch 7 | Average |
|---|---|---|---|---|---|---|---|
| 1.4 | 1.5 | 1.4 | 1.3 | 1.4 | 1.4 | 1.4 | 1.4 |

| | 0.01 | 0.02 | 0.05 | 0.08 | 0.1 | 0.12 | 0.15 | 0.18 | 0.2 |
|---|---|---|---|---|---|---|---|---|---|
| Clusters | 95 | 93 | 89 | 87 | 81 | 79 | 74 | 68 | 67 |
| Noise | 249 | 264 | 323 | 335 | 340 | 334 | 351 | 416 | 410 |

| Pollination Method | Fruit Set Rate (%) | Good Fruit Set Rate (%) |
|---|---|---|
| Drone pollination | 62.1 | 13.8 |
| Manual pollination | 61.9 | 22.5 |
| Natural pollination | 53.6 | 10.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kuwahara, A.; Kimura, T.; Okubo, S.; Yoshioka, R.; Endo, K.; Shimizu, H.; Shimada, T.; Suzuki, C.; Takemura, Y.; Hiraguri, T. Cluster-Based Flight Path Construction for Drone-Assisted Pear Pollination Using RGB-D Image Processing. Drones 2025, 9, 475. https://doi.org/10.3390/drones9070475
