A Deep Learning-Based Trajectory and Collision Prediction Framework for Safe Urban Air Mobility

Abstract

1. Introduction

1.1. Urban Air Mobility Trajectory Prediction: Background and Importance

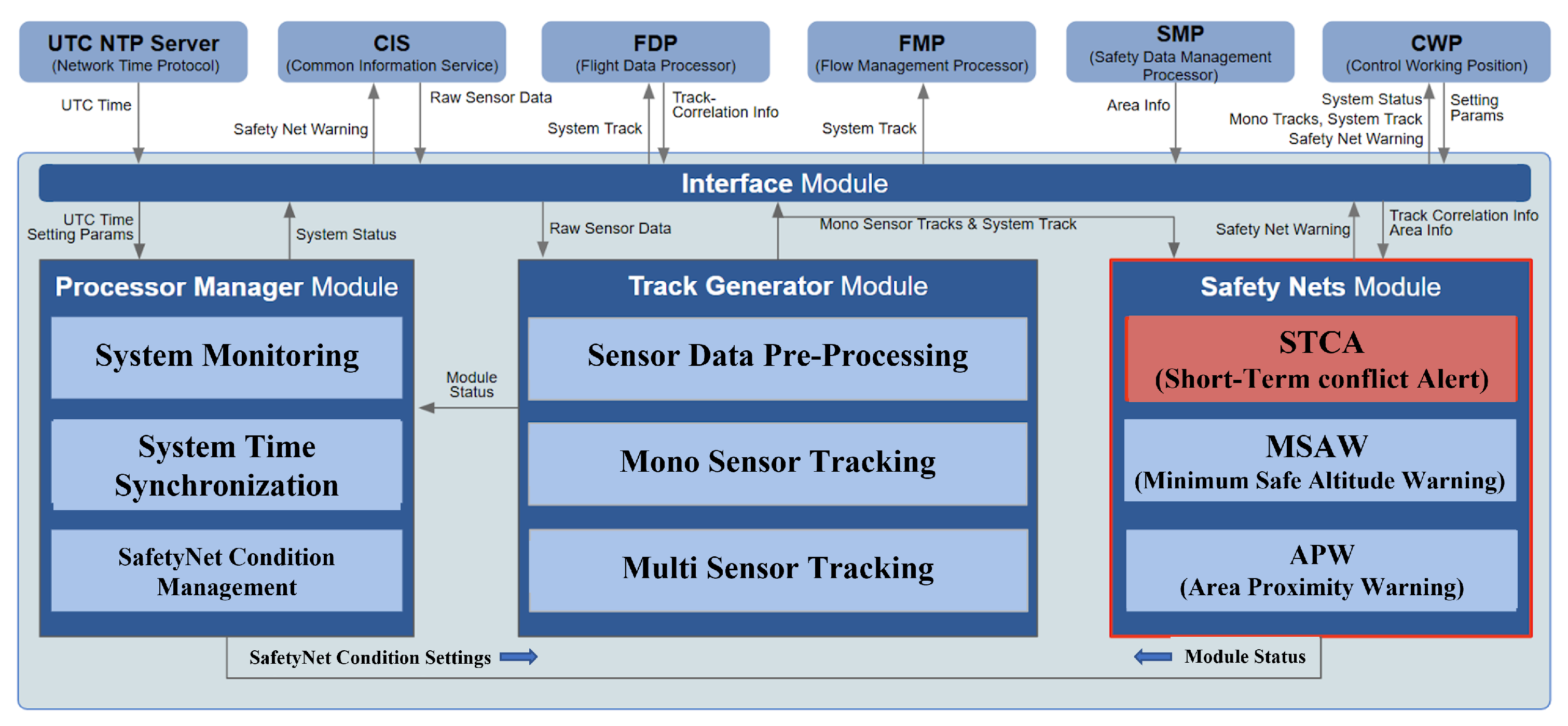

1.2. Integration of Deep Learning-Based Trajectory and Collision Prediction into Short Term Conflict Alert Module

1.3. Related Work

1.3.1. Deep Learning-Based Trajectory Prediction

1.3.2. Trajectory Prediction and Collision Detection

1.3.3. Limitations of Related Works and Proposal of Deep Learning-Based Trajectory and Collision Prediction System for UAM

2. Materials and Methods

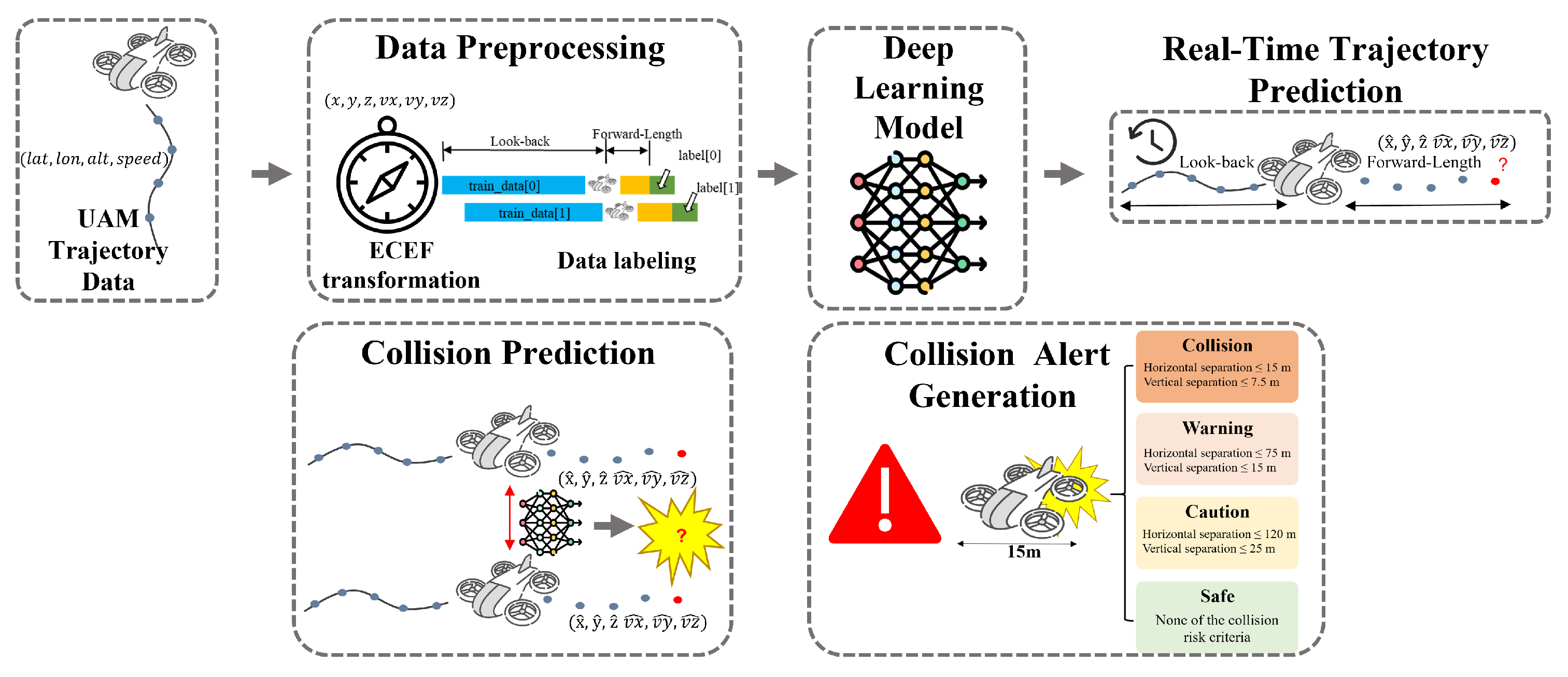

2.1. Overall Workflow for Deep Learning-Based Urban Air Mobility Trajectory Prediction and Collision Risk Assessment

2.2. Dataset and Preprocessing

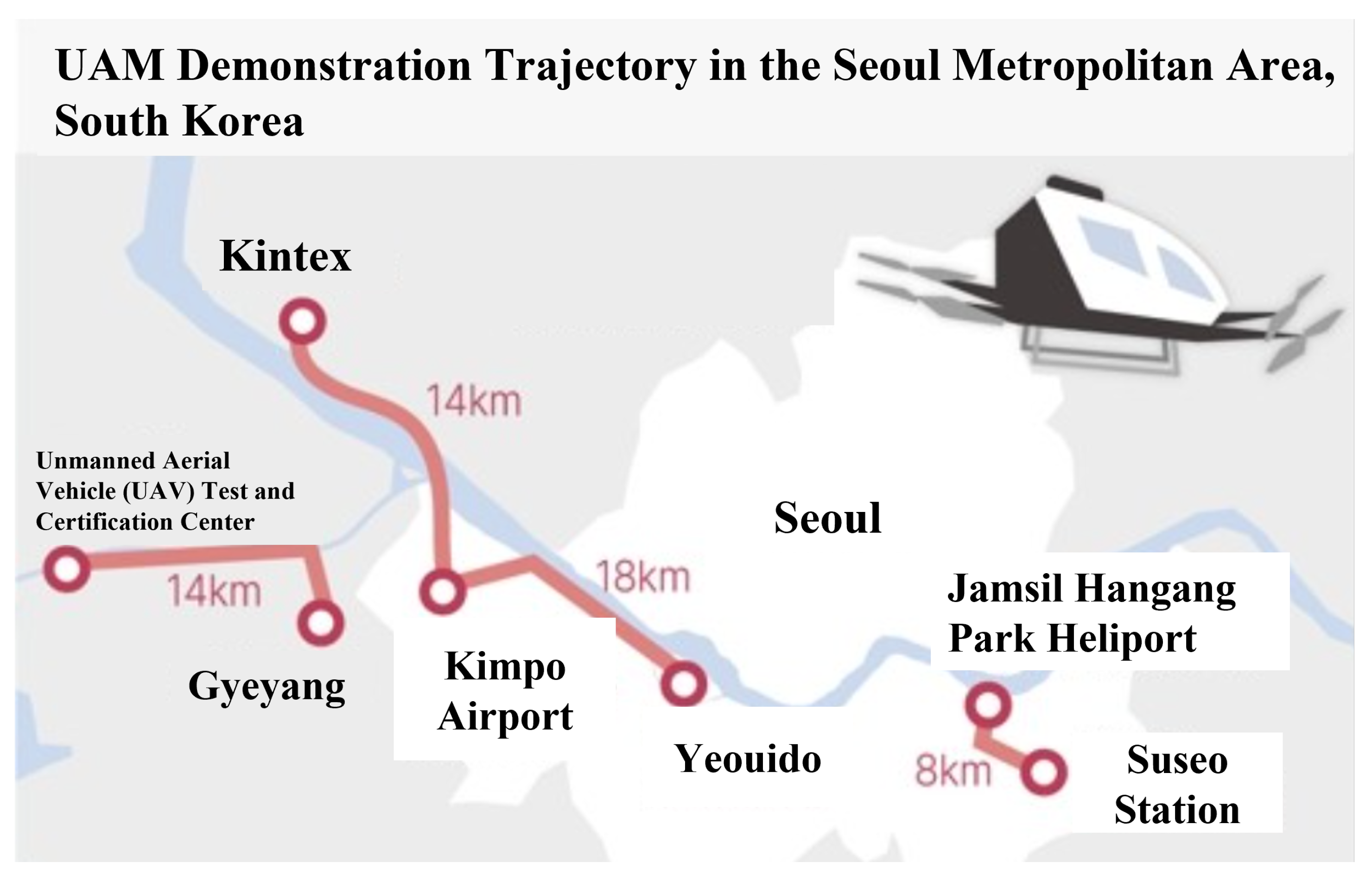

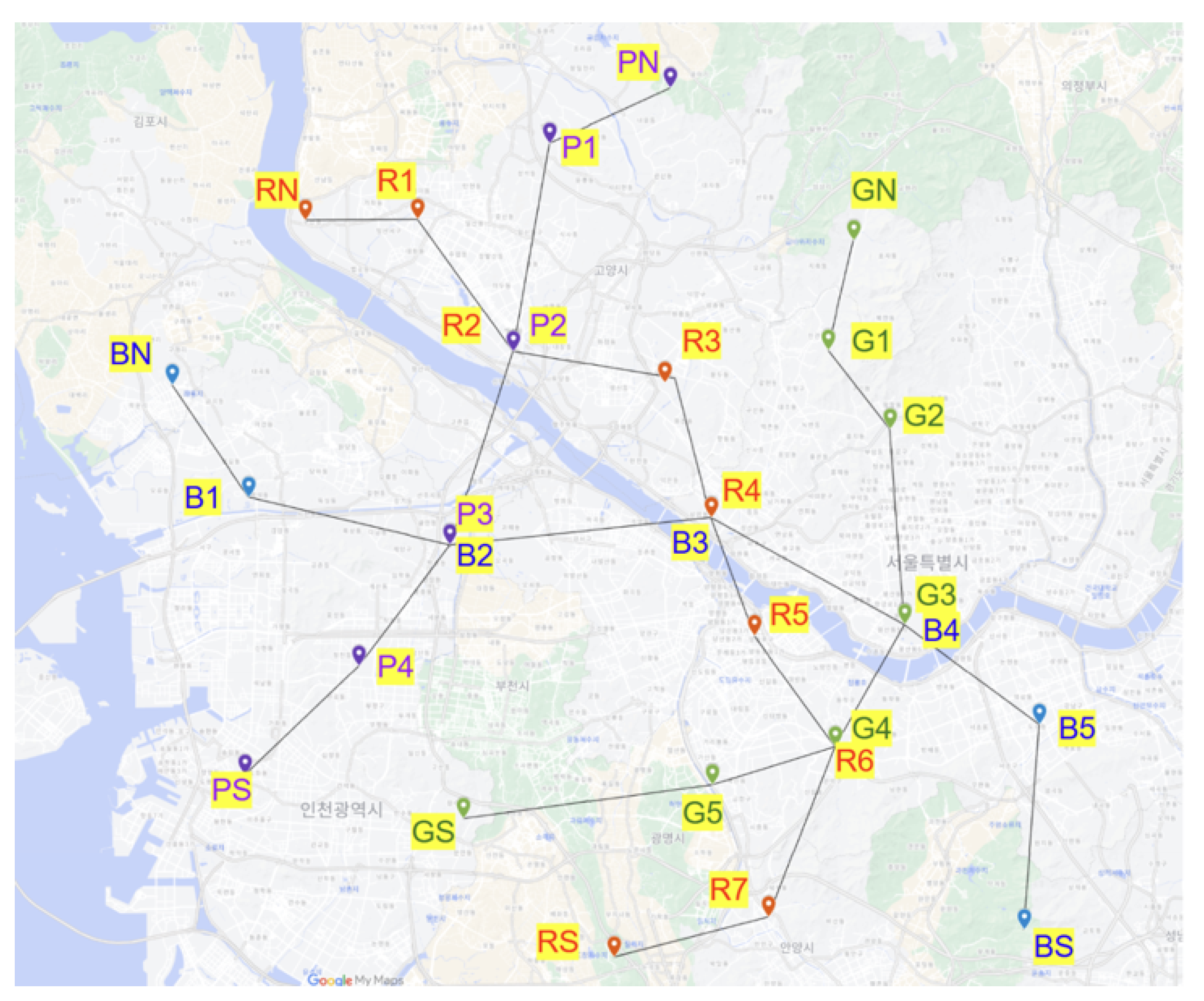

2.2.1. VTG-Based Simulation Dataset for UAM Trajectories

2.2.2. Coordinate Transformation from Geodetic to ECEF
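The geodetic-to-ECEF step can be sketched as follows. This is a minimal sketch that assumes the standard WGS 84 ellipsoid constants from NIMA TR8350.2 (listed in the references) and ellipsoidal altitude rather than height above mean sea level. It also includes a simple fixed-point inverse, which corresponds to the conversion back to latitude, longitude and altitude in step 19 of Algorithm 1. The function names are hypothetical, and the code is not presented as the authors' implementation.

```python
import math

# WGS 84 defining parameters (NIMA TR8350.2)
WGS84_A = 6378137.0                    # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, alt_m: float) -> tuple[float, float, float]:
    """Geodetic latitude/longitude [deg] and ellipsoidal height [m] -> ECEF x, y, z [m]."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    sin_lat = math.sin(lat)
    # Prime-vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    x = (n + alt_m) * math.cos(lat) * math.cos(lon)
    y = (n + alt_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + alt_m) * sin_lat
    return x, y, z


def ecef_to_geodetic(x: float, y: float, z: float, iterations: int = 10) -> tuple[float, float, float]:
    """Inverse transform by fixed-point iteration on latitude.

    Not valid exactly on the polar axis (x = y = 0), where cos(lat) vanishes.
    """
    lon = math.atan2(y, x)
    p = math.hypot(x, y)                        # distance from the polar axis
    lat = math.atan2(z, p * (1.0 - WGS84_E2))   # initial guess assumes h = 0
    for _ in range(iterations):
        sin_lat = math.sin(lat)
        n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
        alt = p / math.cos(lat) - n
        lat = math.atan2(z, p * (1.0 - WGS84_E2 * n / (n + alt)))
    # Recompute the height so it is consistent with the final latitude
    sin_lat = math.sin(lat)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    alt = p / math.cos(lat) - n
    return math.degrees(lat), math.degrees(lon), alt


# Round trip for a point near Seoul at 300 m (illustrative values)
lat, lon, alt = ecef_to_geodetic(*geodetic_to_ecef(37.5665, 126.978, 300.0))
```

Because ECEF is a single Cartesian frame, differences between two aircraft positions are plain vector subtractions. Geodetic coordinates would instead mix degrees and meters.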

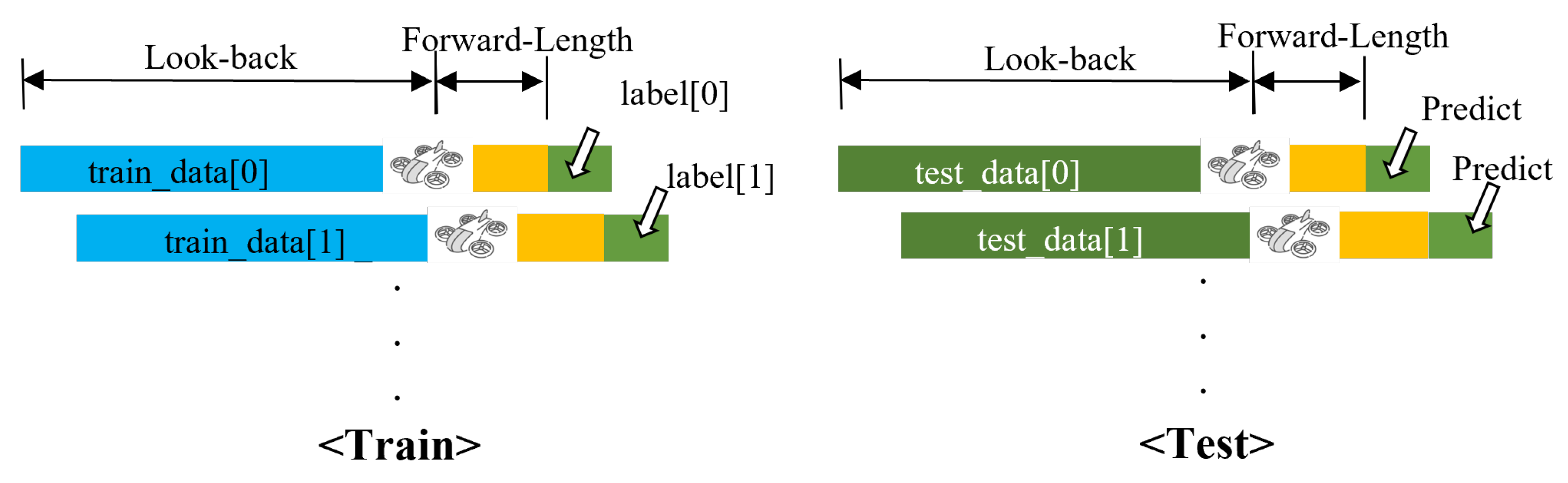

2.2.3. Data Labeling Method for Trajectory Prediction in Urban Air Mobility

- Look-Back: The immediate historical window (e.g., the past several seconds of flight data), used as the input feature sequence.

- Forward-Length: The prediction horizon (e.g., the next several seconds), for which the model predicts positions and velocities.
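The look-back/forward-length labeling can be sketched as a sliding window over each aircraft's trajectory. This is a minimal sketch under several assumptions. Each trajectory is a `(T, F)` NumPy array sampled at a fixed rate; the forward-length table in the results lists 5 timesteps as 0.5 s, which implies 10 Hz. The label is a single state `forward_length` steps after the step that immediately follows the window, so `forward_length = 0` means next-step prediction. That is one plausible reading of the definition above, not a confirmed detail. The function name `make_windows` is hypothetical.

```python
import numpy as np


def make_windows(series: np.ndarray, look_back: int, forward_length: int):
    """Slice one aircraft's (T, F) feature sequence into supervised samples.

    Returns
      X: (N, look_back, F) input windows (the look-back history)
      y: (N, F) target state, `forward_length` steps after the step that
         immediately follows each window (assumed labeling).
    """
    if series.ndim != 2:
        raise ValueError("series must have shape (T, F)")
    T, F = series.shape
    n = T - look_back - forward_length  # number of complete samples
    if n <= 0:
        return np.empty((0, look_back, F)), np.empty((0, F))
    X = np.stack([series[i : i + look_back] for i in range(n)])
    y = np.stack([series[i + look_back + forward_length] for i in range(n)])
    return X, y


# Example: 10 timesteps of 2 features, look-back 3, forward-length 2
demo = np.arange(20, dtype=float).reshape(10, 2)
X, y = make_windows(demo, look_back=3, forward_length=2)
print(X.shape, y.shape)  # (5, 3, 2) (5, 2)
print(y[0])              # [10. 11.]
```

Windows are built per aircraft so that a single sample never spans two different trajectories.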

2.3. Deep Learning Model for Urban Air Mobility Trajectory Prediction

2.3.1. LSTM Model
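For reference, a single LSTM layer (Hochreiter and Schmidhuber) can be written as a plain NumPy forward pass. This is a generic sketch with random weights, not the authors' trained model. The gate stacking order (input, forget, candidate, output), the 6 input features (for example, position and velocity components) and the hidden size of 16 are illustrative assumptions. The 50-step sequence length echoes the best look-back in the results table.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(x_seq, W, U, b):
    """Run one LSTM layer over a sequence.

    x_seq: (T, D) inputs; W: (4H, D); U: (4H, H); b: (4H,)
    Gate blocks are stacked in the assumed order: input, forget, candidate, output.
    Returns the hidden state at every timestep, shape (T, H).
    """
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    outputs = []
    for x_t in x_seq:
        z = W @ x_t + U @ h + b          # all four gate pre-activations at once
        i = sigmoid(z[0:H])              # input gate: how much new content to write
        f = sigmoid(z[H:2 * H])          # forget gate: how much old memory to keep
        g = np.tanh(z[2 * H:3 * H])      # candidate cell content
        o = sigmoid(z[3 * H:4 * H])      # output gate: how much memory to expose
        c = f * c + i * g                # update the cell memory
        h = o * np.tanh(c)               # hidden state passed to the next layer
        outputs.append(h)
    return np.stack(outputs)


rng = np.random.default_rng(0)
T, D, H = 50, 6, 16   # illustrative sizes
x_seq = rng.normal(size=(T, D))
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
hs = lstm_forward(x_seq, W, U, b)
print(hs.shape)  # (50, 16)
```

The full hidden-state sequence, rather than only the last state, is what an attention layer stacked on top would consume.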

2.3.2. Multi-Head Self-Attention with Learnable Pooling
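The sketch below shows the generic scaled dot-product multi-head self-attention of Vaswani et al., followed by one common form of learnable pooling. This section does not detail the authors' exact pooling, so the sketch assumes a single learned scoring vector `w_pool` whose softmax weights collapse the sequence into one context vector. The 4 heads and the 50-step sequence echo the best configuration in the result tables. `d_model = 16` and the random weights are illustrative only.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(X, Wq, Wk, Wv, Wo, num_heads):
    """Scaled dot-product self-attention over a (T, d_model) sequence."""
    T, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must split evenly across heads"
    d_head = d_model // num_heads

    def split(M):
        # (T, d_model) -> (num_heads, T, d_head)
        return M.reshape(T, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ Wq), split(X @ Wk), split(X @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, T, T)
    attn = softmax(scores, axis=-1)                        # each row sums to 1
    heads = attn @ V                                       # (heads, T, d_head)
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)  # re-join the heads
    return concat @ Wo, attn


def learnable_pooling(Z, w):
    """Collapse (T, d) to (d,) using weights from a learned scoring vector w (assumed form)."""
    alpha = softmax(Z @ w)   # (T,) importance of each timestep
    return alpha @ Z, alpha


rng = np.random.default_rng(0)
T, d_model, num_heads = 50, 16, 4
Z_lstm = rng.normal(size=(T, d_model))   # stand-in for LSTM hidden states
Wq, Wk, Wv, Wo = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
w_pool = rng.normal(size=d_model)

Z_attn, attn = multi_head_self_attention(Z_lstm, Wq, Wk, Wv, Wo, num_heads)
context, alpha = learnable_pooling(Z_attn, w_pool)
print(Z_attn.shape, attn.shape, context.shape)  # (50, 16) (4, 50, 50) (16,)
```

The pooling weights `alpha` are one per timestep, which is the kind of quantity an attention visualization would plot. Compared with taking only the last LSTM state, this lets the model weight any part of the look-back window.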

2.3.3. Hyperparameters and Experimental Setup

2.4. Trajectory-Based Multi-Level Collision Prediction for Urban Air Mobility

2.4.1. Overall Collision Risk Assessment Workflow in Urban Air Mobility Systems

2.4.2. Risk-Level Definitions and Threshold Justification

- Level 1—Collision: This is triggered when horizontal separation is less than or equal to 15 m and vertical separation is less than or equal to 7.5 m. These thresholds correspond to the physical overlap or near-overlap of aircraft fuselages, indicating imminent collision.

- Level 2—Warning: This is assigned at the predicted TCA when horizontal separation is less than or equal to 75 m and vertical separation is less than or equal to 15 m. This level represents a high-risk encounter necessitating immediate evasive action.

- Level 3—Caution: This is defined by horizontal separation less than or equal to 120 m and vertical separation less than or equal to 25 m at TCA. This classification allows for the early detection of potential conflicts and timely trajectory adjustments.

- Level 4—Safe: This applies to all predicted aircraft encounters that do not meet the criteria of the above three levels, indicating no immediate conflict.
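The four levels nest: each threshold pair is strictly looser than the previous one. Classification can therefore check levels from most to least severe and return the first match. The sketch below assumes that both inputs are non-negative separations in meters at the predicted TCA. The function name `classify_risk` is hypothetical.

```python
def classify_risk(horizontal_sep_m: float, vertical_sep_m: float) -> int:
    """Map separations at the predicted TCA to risk levels 1 (Collision) to 4 (Safe).

    Levels are checked from most to least severe, so the first match wins.
    Both the horizontal and the vertical condition must hold for a level to apply.
    """
    thresholds = [
        (1, 15.0, 7.5),    # Level 1 (Collision)
        (2, 75.0, 15.0),   # Level 2 (Warning)
        (3, 120.0, 25.0),  # Level 3 (Caution)
    ]
    for level, h_max, v_max in thresholds:
        if horizontal_sep_m <= h_max and vertical_sep_m <= v_max:
            return level
    return 4  # Level 4 (Safe)


print(classify_risk(10.0, 5.0))   # 1
print(classify_risk(60.0, 20.0))  # 3 (vertical 20 m exceeds the Warning limit of 15 m)
print(classify_risk(10.0, 30.0))  # 4 (horizontally close, but vertically separated)
```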

2.4.3. Ground Truth Label Generation

2.4.4. Collision Prediction Algorithm

| Algorithm 1 Collision risk prediction and alert generation. |
|---|
| 1: $\mathcal{A} \leftarrow \emptyset$ ▹ Initialize alert storage |
| 2: for all aircraft trajectories do |
| 3: Normalize input features using pre-fitted scaler |
| 4: Segment trajectory into look-back and forward-length prediction windows |
| 5: Predict future positions and velocities using LSTM–Attention model |
| 6: Denormalize predicted outputs to ECEF coordinates |
| 7: end for |
| 8: for all unique aircraft pairs $(i, j)$ do |
| 9: for all prediction timesteps $t$ do |
| 10: Compute relative position: $\Delta\mathbf{p}(t) = \mathbf{p}_j(t) - \mathbf{p}_i(t)$ |
| 11: Compute relative velocity: $\Delta\mathbf{v}(t) = \mathbf{v}_j(t) - \mathbf{v}_i(t)$ |
| 12: Calculate TCA: $t_{\mathrm{TCA}}(t) = -\frac{\Delta\mathbf{p}(t) \cdot \Delta\mathbf{v}(t)}{\lVert \Delta\mathbf{v}(t) \rVert^{2}}$ |
| 13: end for |
| 14: Identify minimum positive $t_{\mathrm{TCA}}$ over prediction horizon |
| 15: Compute spatial separation at $t_{\mathrm{TCA}}$ |
| 16: Assign risk level based on thresholds |
| 17: if risk level ≠ Safe then |
| 18: Create alert with timestamp, predicted states, and risk level |
| 19: Convert predicted collision coordinates to geodetic format (lat, lon, alt) |
| 20: Append alert to $\mathcal{A}$ |
| 21: end if |
| 22: end for |
| 23: return $\mathcal{A}$ ▹ Return complete alert log |
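Steps 8 to 15 of Algorithm 1 can be sketched in plain Python. The TCA calculation in step 12 follows from minimizing the squared separation $\lVert \Delta\mathbf{p} + \tau\,\Delta\mathbf{v} \rVert^{2}$ over the time offset $\tau$ under constant relative velocity. Setting the derivative to zero gives $\tau^{*} = -(\Delta\mathbf{p}\cdot\Delta\mathbf{v})/\lVert\Delta\mathbf{v}\rVert^{2}$. This sketch makes several assumptions. Predicted states are expressed in a local Cartesian frame with z pointing up (for example, ENU); raw ECEF differences would first need rotating into such a frame, because the ECEF z-axis is the polar axis, not the local vertical. Both tracks share the same prediction timesteps. If no positive TCA exists, the sketch falls back to the separation at the first timestep; that fallback is not part of Algorithm 1. All function names are hypothetical.

```python
import itertools
import math

Vec = tuple[float, float, float]


def tca_offset(dp: Vec, dv: Vec, eps: float = 1e-9):
    """Time offset tau minimizing |dp + tau * dv|, or None if relative velocity is ~0."""
    dv2 = sum(c * c for c in dv)
    if dv2 < eps:
        return None
    return -sum(a * b for a, b in zip(dp, dv)) / dv2


def closest_approach(track_i, track_j):
    """track_*: list of (t, position, velocity) predicted states at matching timesteps.

    Returns (t_ref, tau, horizontal_sep, vertical_sep) for the smallest positive tau,
    with z assumed to be the local vertical.
    """
    best = None
    for (t, p_i, v_i), (_, p_j, v_j) in zip(track_i, track_j):
        dp = tuple(b - a for a, b in zip(p_i, p_j))  # relative position p_j - p_i
        dv = tuple(b - a for a, b in zip(v_i, v_j))  # relative velocity v_j - v_i
        tau = tca_offset(dp, dv)
        if tau is not None and tau > 0 and (best is None or tau < best[1]):
            best = (t, tau, dp, dv)
    if best is None:
        # Assumed fallback (not in Algorithm 1): separation at the first timestep
        t, p_i, _ = track_i[0]
        _, p_j, _ = track_j[0]
        best = (t, 0.0, tuple(b - a for a, b in zip(p_i, p_j)), (0.0, 0.0, 0.0))
    t, tau, dp, dv = best
    sep = [d + tau * w for d, w in zip(dp, dv)]  # relative position at the TCA
    return t, tau, math.hypot(sep[0], sep[1]), abs(sep[2])


def screen_pairs(tracks):
    """tracks: dict aircraft_id -> predicted states. Yields one record per unique pair."""
    for a, b in itertools.combinations(sorted(tracks), 2):
        yield (a, b, *closest_approach(tracks[a], tracks[b]))


# Two aircraft closing head-on along x, offset 10 m laterally and 5 m vertically
tracks = {
    "A": [(0.0, (0.0, 0.0, 100.0), (20.0, 0.0, 0.0)), (1.0, (20.0, 0.0, 100.0), (20.0, 0.0, 0.0))],
    "B": [(0.0, (400.0, 10.0, 105.0), (-20.0, 0.0, 0.0)), (1.0, (380.0, 10.0, 105.0), (-20.0, 0.0, 0.0))],
}
for record in screen_pairs(tracks):
    print(record)  # ('A', 'B', 1.0, 9.0, 10.0, 5.0)
```

In the example, the smallest positive TCA is 9 s after the 1 s timestep, with 10 m horizontal and 5 m vertical separation. Under the thresholds in Section 2.4.2, that falls in Level 1 (Collision). Each pair is screened once (`itertools.combinations`), so the pairwise stage grows quadratically with the number of aircraft.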

3. Results

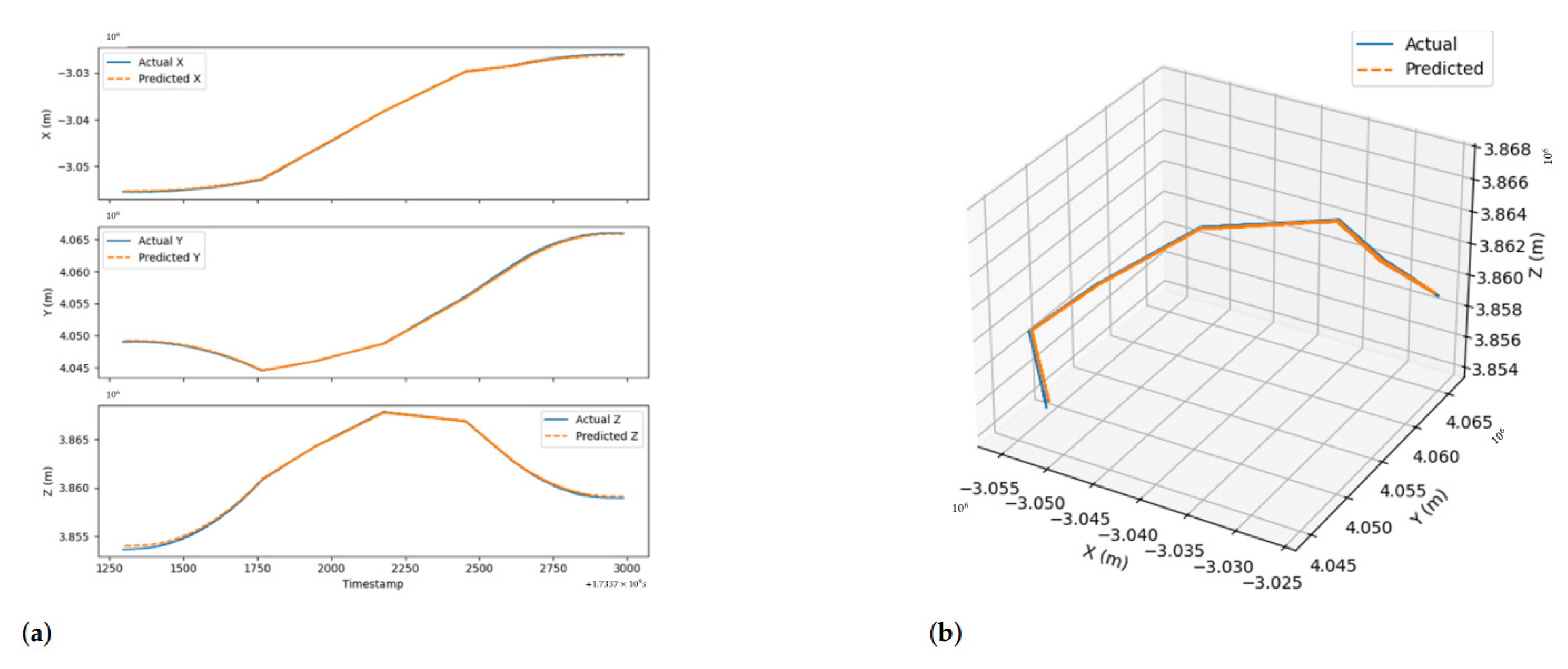

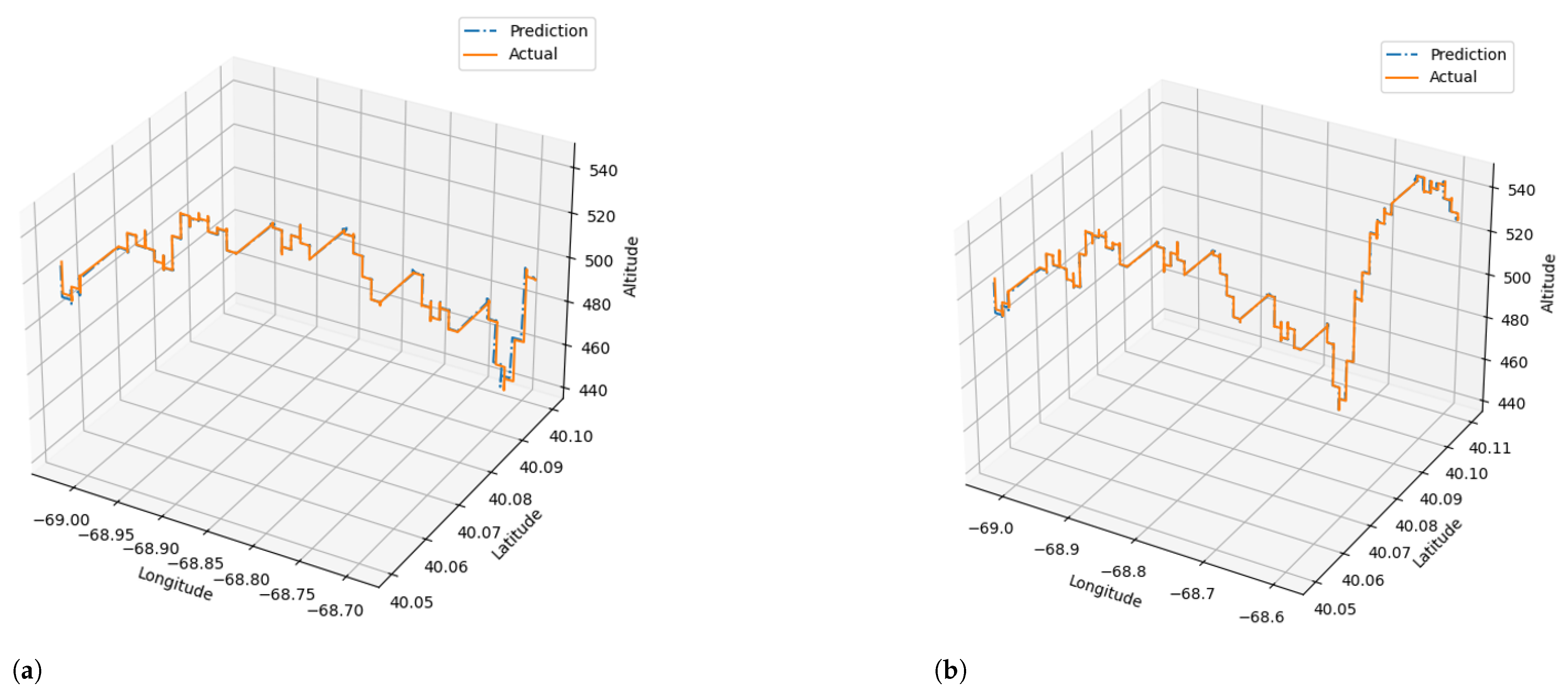

3.1. Integrated Evaluation of the LSTM–Attention Model for UAM Trajectory Prediction

3.1.1. Evaluation Metrics for Trajectory Prediction
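The three regression metrics reported in the tables (RMSE, MAE, MSE) can be computed as below. This is a minimal sketch that pools errors over every sample and every predicted component. Whether the paper pools or averages per axis, and whether errors are on the normalized or the meter scale, is not stated here, so the function simply applies the formulas to whatever arrays it receives. With pooling, RMSE equals the square root of MSE and never falls below MAE, which is a quick sanity check on any metrics table. The function name is hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred) -> dict:
    """MSE, RMSE and MAE pooled over every sample and every predicted component."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    mse = float(np.mean(err ** 2))
    return {
        "RMSE": float(np.sqrt(mse)),          # same units as the targets
        "MAE": float(np.mean(np.abs(err))),   # less sensitive to occasional large errors
        "MSE": mse,
    }


y_true = np.array([[0.0, 0.0], [1.0, 1.0]])
y_pred = np.array([[0.0, 1.0], [1.0, 3.0]])
m = regression_metrics(y_true, y_pred)
print({k: round(v, 4) for k, v in m.items()})  # {'RMSE': 1.118, 'MAE': 0.75, 'MSE': 1.25}
```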

3.1.2. Look-Back Window Configuration for UAM Trajectory Prediction

3.1.3. Comparative Analysis of Geodetic and ECEF Coordinates

3.1.4. Real-Time Inference Evaluation for Urban Air Mobility Trajectory Prediction

3.1.5. Case Study on LSTM–Attention Model

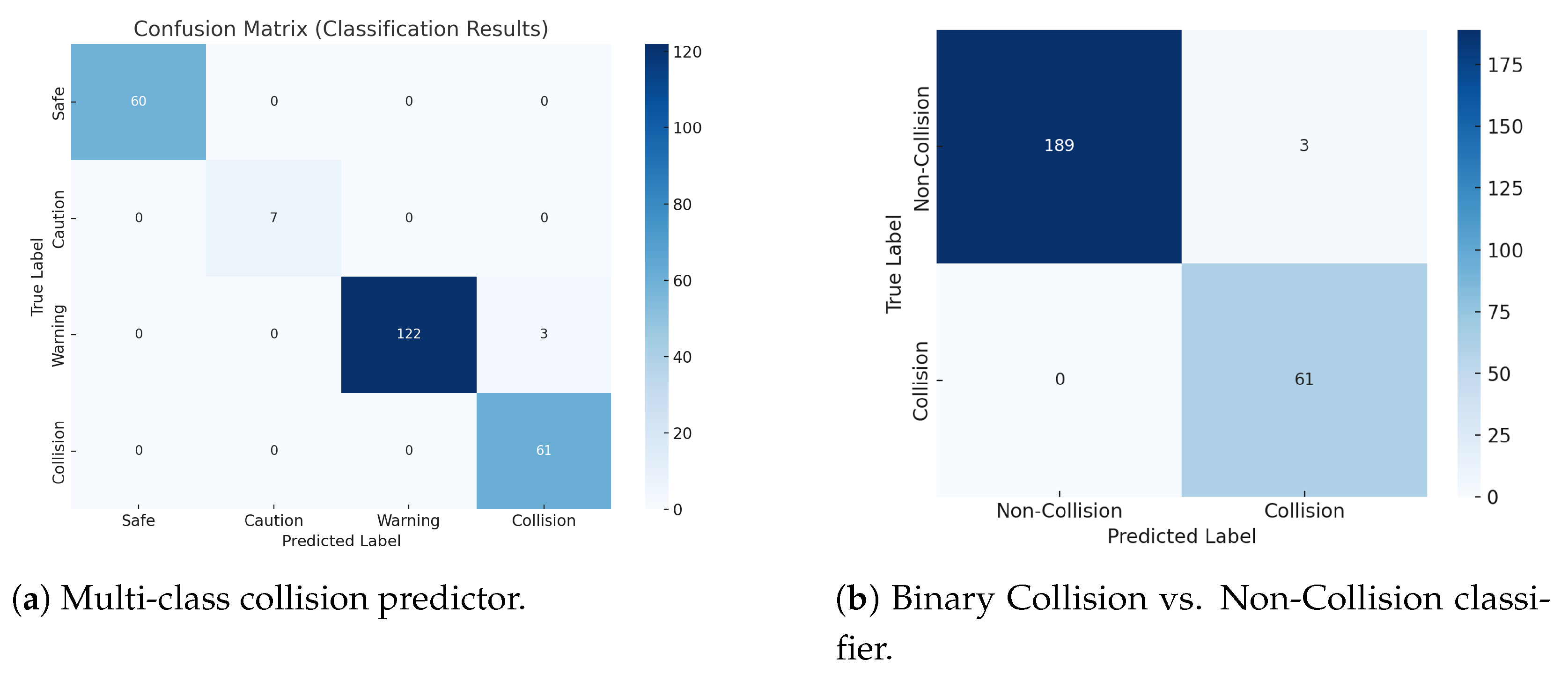

3.2. Experimental Evaluation of the Collision Prediction Algorithm

3.2.1. Collision Prediction Algorithm Performance Evaluation Method
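The collision-prediction results table reports an "Overall" precision, recall, F1-score and accuracy over the four risk levels. The reported recall (0.9881) equals the reported accuracy (0.9881). That is exactly what support-weighted averaging produces, because weighted recall reduces to total true positives over total samples. The sketch below therefore assumes weighted averaging. That is an inference from the numbers, not a stated detail. AUC is omitted because it needs predicted scores rather than hard labels. The function name is hypothetical.

```python
import numpy as np


def weighted_classification_report(y_true, y_pred, labels=(1, 2, 3, 4)):
    """Support-weighted precision/recall/F1 plus accuracy over the risk levels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    precision, recall, f1, support = [], [], [], []
    for c in labels:
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
        support.append(np.sum(y_true == c))
    w = np.array(support, dtype=float) / len(y_true)  # class weights by support
    return {
        "precision": float(w @ np.array(precision)),
        "recall": float(w @ np.array(recall)),
        "f1": float(w @ np.array(f1)),
        "accuracy": float(np.mean(y_true == y_pred)),
    }


y_true = [1, 2, 2, 3, 4, 4, 4, 4]
y_pred = [1, 2, 3, 3, 4, 4, 4, 3]
rep = weighted_classification_report(y_true, y_pred)
print(rep["recall"], rep["accuracy"])  # 0.75 0.75
```

Weighted averaging lets the numerous Safe encounters dominate the overall scores. Per-class recall for Level 1 and Level 2 is therefore worth inspecting separately when the class distribution is imbalanced.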

3.2.2. Results of the Collision Prediction

4. Discussion

4.1. Comparison of RMSE by Number of Attention Heads

4.2. Visualization of the Attention Mechanism

4.3. Contributions of This Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bauranov, A.; Rakas, J. Designing airspace for urban air mobility: A review of concepts and approaches. Prog. Aerosp. Sci. 2021, 125, 100726.

- Straubinger, A.; Rothfeld, R.; Shamiyeh, M.; Rudolph, F.; Plötz, A.; Kaiser, J.; Antoniou, C. An overview of current research and developments in urban air mobility—Setting the scene for UAM introduction. J. Air Transp. Manag. 2020, 87, 101852.

- UAM Team Korea. K-UAM Concept of Operations 1.0. Technical Report Version 1.0; Ministry of Land, Infrastructure and Transport (MOLIT): Sejong City, Republic of Korea, 2021.

- Joby Aviation. Website and Media Assets; Joby Aero, Inc.: Santa Cruz, CA, USA, 2025; Available online: https://www.jobyaviation.com/ (accessed on 5 June 2025).

- Savita, V. Exploration of Near-term Potential Routes and Procedures for Urban Air Mobility. In Proceedings of the Aviation Technology, Integration and Operations Conference, Dallas, TX, USA, 17–21 June 2019.

- Neto, E.C.P.; Baum, D.M.; Junior, J.R.d.A.; Junior, J.B.C.; Cugnasca, P.S. Trajectory-based urban air mobility (UAM) operations simulator (TUS). arXiv 2019, arXiv:1908.08651.

- Zhang, A.; Zhang, B.; Bi, W.; Mao, Z. Attention based trajectory prediction method under the air combat environment. Appl. Intell. 2022, 52, 17341–17355.

- Zhu, R.; Yang, Z.; Chen, J. Conflict risk assessment between non-cooperative drones and manned aircraft in airport terminal areas. Appl. Sci. 2022, 12, 10377.

- Chen, K.; Zhang, P.; You, L.; Sun, J. Research on Kalman Filter Fusion Navigation Algorithm Assisted by CNN-LSTM Neural Network. Appl. Sci. 2024, 14, 5493.

- Wu, S.; Mao, F. UAV collision avoidance system based on ADS-B real-time information. In Proceedings of the 2023 5th International Symposium on Robotics & Intelligent Manufacturing Technology (ISRIMT), Changzhou, China, 22–24 September 2023; pp. 275–279.

- Tong, L.; Gan, X.; Wu, Y.; Yang, N.; Lv, M. An ADS-B information-based collision avoidance methodology to UAV. Actuators 2023, 12, 165.

- Liu, T.; Xu, X.; Lei, Z.; Zhang, X.; Sha, M.; Wang, F. A multi-task deep learning model integrating ship trajectory and collision risk prediction. Ocean Eng. 2023, 287, 115870.

- National Imagery and Mapping Agency (NIMA). Department of Defense World Geodetic System 1984: Its Definition and Relationships with Local Geodetic Systems. Technical Report NIMA TR8350.2; National Imagery and Mapping Agency: Bethesda, MD, USA, 2000.

- Yoon, S.; Jang, D.; Yoon, H.; Park, T.; Lee, K. GRU-based deep learning framework for real-time, accurate, and scalable UAV trajectory prediction. Drones 2025, 9, 142.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- International Civil Aviation Organization. Annex 10—Aeronautical Telecommunications, Volume IV: Surveillance and Collision Avoidance Systems, 7th ed.; ICAO: Montreal, QC, Canada, 2020.

- Radio Technical Commission for Aeronautics. Minimum Operational Performance Standards for Traffic Alert and Collision Avoidance System (TCAS) Change 7.0; RTCA: Washington, DC, USA, 2013.

- EUROCONTROL. Specification for TCAS II Operating Procedures. 2018. Available online: https://www.eurocontrol.int/publication/specification-tcas-ii (accessed on 15 April 2025).

- Stjernberg, J.; Durnford, P.; van Egmond, P.; Krivohlavek, J.; Martijnse-Hartikka, R.; Solbø, S.A.; Wachter, F.; Wigler, K. Guidebook for Urban Air Mobility Integration: AiRMOUR Deliverable 6.4; AiRMOUR Consortium/European Union Aviation Safety Agency. 2023. Available online: https://www.easa.europa.eu/sites/default/files/dfu/airmour_-_d6.4_guidebook_for_uam_integration_process_management.pdf (accessed on 15 April 2025).

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder–decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Chung, J.; Gülçehre, Ç.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Donahue, J.; Hendricks, L.A.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Phadke, A.; Medrano, F.A.; Chu, T.; Sekharan, C.N.; Starek, M.J. Modeling wind and obstacle disturbances for effective performance observations and analysis of resilience in UAV swarms. Aerospace 2024, 11, 237.

| Reference | Data Type | Number of Data | Coordinate Transformation | Deep Learning Model | Evaluation Metric | Max. Prediction Time | t+1 Performance | t+max Performance |
|---|---|---|---|---|---|---|---|---|
| Zhang et al. (2022) [7] | Simulation Flight Data | 3890 | ECEF | AttConv LSTM | MAE | 2.5 s | 2.09 (MAE) | 45.73 (MAE) |
| Zhu et al. (2022) [8] | Drone | 39,548 | ECEF | GRU | RMSE, MAE, MAPE | 40 s | 15.65 (RMSE) | 161.73 (RMSE) |
| Chen et al. (2024) [9] | Drone | - | ECEF | CNN + LSTM | RMSE | 3 s | x, y: 0.1336; z: 0.0108 (RMSE) | - |

| Reference | Data Type | Variables Used | No. of Targets | Trajectory Prediction Method | Prediction Performance |
|---|---|---|---|---|---|
| Wu et al. (2023) [10] | ADS-B UAV | latitude, longitude, altitude, velocity | 7 | Straight-line extrapolation | – |
| Tong et al. (2023) [11] | ADS-B UAV | latitude, longitude, altitude, speed, heading | 2 | Kalman Filter | – |
| Liu et al. (2023) [12] | AIS ship | latitude, longitude, speed, heading, ship length | – | LSTM | Accuracy: 98.3% |

| # | Item | Description | Unit |
|---|---|---|---|
| 1 | Registration number | Aircraft identifier | – |
| 2 | Route | Name of route | – |
| 3 | Timestamp | Time of data | time |
| 4 | Size | Size of aircraft (15 m) | m |
| 5 | Long. | Longitude | deg |
| 6 | Lat. | Latitude | deg |
| 7 | Alt. | Altitude | m |
| 8 | Horizontal speed | Aircraft’s horizontal speed | m/s |
| 9 | Vertical speed | Aircraft’s vertical speed | m/s |
| 10 | Direction | Flight direction | deg |

| Look-Back (Timesteps) | RMSE | MAE | MSE |
|---|---|---|---|
| 10 | 0.2299 | 0.1685 | 0.0528 |
| 30 | 0.2295 | 0.1694 | 0.0527 |
| 50 | 0.2172 | 0.1668 | 0.0524 |
| 100 | 0.2341 | 0.1725 | 0.0548 |

| Model | Geodetic RMSE | ECEF RMSE | Change (ECEF − Geodetic) |
|---|---|---|---|
| GRU | 0.2929 | 0.2440 | −0.0489 |
| LSTM | 0.5177 | 0.2429 | −0.2748 |
| Bi-LSTM | 0.4333 | 0.2435 | −0.1898 |
| Bi-GRU | 0.3068 | 0.2442 | −0.0626 |
| CNN–LSTM | 0.2930 | 0.2436 | −0.0494 |
| Transformer | 0.3056 | 0.2259 | −0.0797 |
| GRU–Attention | 0.3888 | 0.2434 | −0.1454 |
| LSTM–Attention | 0.2531 | 0.2172 | −0.0359 |

| Forward-Length (Timesteps) | RMSE | MAE | MSE | Inference Time (ms) |
|---|---|---|---|---|
| 0 | 0.2172 | 0.1668 | 0.0524 | 0.5088 |
| 5 (0.5 s) | 0.2391 | 0.1711 | 0.0572 | 0.5266 |
| 10 (1 s) | 0.2414 | 0.1737 | 0.0583 | 0.5272 |
| 15 (1.5 s) | 0.2415 | 0.1734 | 0.0583 | 0.5390 |
| 20 (2 s) | 0.2408 | 0.1736 | 0.0580 | 0.5226 |
| 100 (10 s) | 0.2417 | 0.1728 | 0.0584 | 0.5196 |

| Model | RMSE | MAE | MSE |
|---|---|---|---|
| LSTM–Attention | 0.1897 | 0.1157 | 0.0364 |

| Model | RMSE | MAE | MSE |
|---|---|---|---|
| LSTM–Attention | 0.4412 | 0.1654 | 0.1946 |

| | Precision | Recall | F1-Score | Accuracy | AUC |
|---|---|---|---|---|---|
| Overall | 0.9887 | 0.9881 | 0.9882 | 0.9881 | 0.9950 |

| Model | Heads | RMSE | MAE | MSE |
|---|---|---|---|---|
| LSTM–Attention | 4 | 0.2172 | 0.1668 | 0.0524 |
| | 8 | 0.2290 | 0.1686 | 0.0524 |
| GRU–Attention | 4 | 0.2296 | 0.1688 | 0.0527 |
| | 8 | 0.2287 | 0.1683 | 0.0523 |
| CNN–LSTM–Attention | 4 | 0.2292 | 0.1673 | 0.0525 |
| | 8 | 0.2270 | 0.1654 | 0.0515 |

| Reference | Data Type | Number of Data | Coordinate Transformation | Deep Learning Model | Evaluation Metric | Max. Prediction Time | t+1 Performance | t+max Performance |
|---|---|---|---|---|---|---|---|---|
| Zhang et al. (2022) [7] | Simulation Flight Data | 3890 | ECEF | AttConv LSTM | MAE | 2.5 s | 2.09 (MAE) | 45.73 (MAE) |
| Zhu et al. (2022) [8] | Drone | 39,548 | ECEF | QRDGRU | RMSE, MAE, MAPE | 40 s | 15.65 (RMSE) | 161.73 (RMSE) |
| Chen et al. (2024) [9] | Drone | – | ECEF | CNN+LSTM | RMSE | 3 s | x, y: 0.1336, z: 0.0108 (RMSE) | – |
| Ours (2025) | Simulation UAM Data | 670,000 | ECEF | LSTM–Attention | RMSE, MSE, MAE | 10 s | 0.2172 (RMSE) 0.1668 (MAE) 0.0524 (MSE) | 0.2417 (RMSE) 0.1728 (MAE) 0.0584 (MSE) |

| Reference | Data Type | Variables Used | No. of Targets | Trajectory Prediction Method | Prediction Performance |
|---|---|---|---|---|---|
| Wu et al. (2023) [10] | ADS-B UAV | latitude, longitude, altitude, velocity | 7 | Straight-line extrapolation | – |
| Tong et al. (2023) [11] | ADS-B UAV | latitude, longitude, altitude, speed, heading | 2 | Kalman Filter | – |
| Liu et al. (2023) [12] | AIS ship | latitude, longitude, speed, heading, ship length | – | LSTM | Accuracy: 98.3% |
| Ours (2025) | UAM | latitude, longitude, altitude, velocity | 23 | LSTM–Attention | Accuracy: 98.81% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, J.; Yoon, H.; Yoon, S.; Kwon, Y.; Lee, K. A Deep Learning-Based Trajectory and Collision Prediction Framework for Safe Urban Air Mobility. Drones 2025, 9, 460. https://doi.org/10.3390/drones9070460

Kim J, Yoon H, Yoon S, Kwon Y, Lee K. A Deep Learning-Based Trajectory and Collision Prediction Framework for Safe Urban Air Mobility. Drones. 2025; 9(7):460. https://doi.org/10.3390/drones9070460

Chicago/Turabian Style: Kim, Junghoon, Hyewon Yoon, Seungwon Yoon, Yongmin Kwon, and Kyuchul Lee. 2025. "A Deep Learning-Based Trajectory and Collision Prediction Framework for Safe Urban Air Mobility" Drones 9, no. 7: 460. https://doi.org/10.3390/drones9070460

APA Style: Kim, J., Yoon, H., Yoon, S., Kwon, Y., & Lee, K. (2025). A Deep Learning-Based Trajectory and Collision Prediction Framework for Safe Urban Air Mobility. Drones, 9(7), 460. https://doi.org/10.3390/drones9070460