Abstract

In recent years, UAV technology has developed rapidly and has been widely applied across various fields. However, as the adoption of civilian UAVs continues to grow, the number of unauthorized ("black") UAV flights has risen accordingly. Such flights may facilitate criminal activity and raise privacy and security concerns, so it has become necessary to recognize UAVs in the airspace in order to respond to potential threats. This study recognizes UAVs based on the acoustic signals emitted during flight. Because real environments contain various acoustic interferences, more efficient acoustic recognition techniques are needed to meet recognition requirements in complex environments. To address the difficulty caused by the spectral overlap between UAV sound and background noise in low signal-to-noise ratio (SNR) environments, this study proposes an improved lightweight deep learning model, ResNet10_CBAM. A comparison of three feature extraction methods, Spectrogram, Fbank, and MFCC, shows that MFCC performs best in low-SNR environments. The ResNet10_CBAM model, which has fewer layers than ResNet18 and integrates channel and spatial attention mechanisms, significantly improves feature extraction under low-SNR conditions while reducing the number of model parameters. The experimental results show that, compared with ResNet18, the model improves the average accuracy by 14.52%, 17.53%, and 20.71% at SNRs of −20 dB, −25 dB, and −30 dB, respectively, and achieves an F1 score of 94.30%. The study verifies the effectiveness of lightweight design and attention mechanisms in complex acoustic environments.

1. Introduction

In recent years, thanks to rapid advances in control, communication, power, materials, and artificial intelligence technologies, the unmanned aerial vehicle (UAV) industry has grown rapidly, and UAVs are now widely used in various fields. For example, UAVs are used in agriculture to monitor crops accurately [1,2], in forestry to prevent and monitor forest fires and survey forest resources [3,4], in the electric power industry to carry out non-destructive inspection [5], in logistics to deliver parcels [6], and in emergency rescue to assist search and rescue missions [7]. However, while the rapid development of drone technology has brought convenience to many industries, the risk of its misuse is also escalating. In public safety, identifying and intercepting threatening drones has become imperative; in civilian applications such as agricultural monitoring, logistics transportation, and aerial photography, accurately identifying drones and ensuring their regulatory compliance are equally critical to operational safety and efficiency. These cross-cutting demands underscore the social value and practical necessity of developing automated drone identification technologies for target airspaces.

There are various recognition techniques for UAVs, mainly including visual recognition [8,9], radar recognition [10,11], radio frequency recognition [12,13], and acoustic recognition [14,15,16,17]. Visual recognition is intuitive, provides rich contextual information, and works well in good daytime lighting; however, its performance degrades at night and in bad weather, it is sensitive to lighting changes, it is strongly affected by occlusion and line-of-sight limitations, and it demands substantial computational resources. Radar recognition is less affected by weather and lighting conditions, works over medium and long distances, and estimates speed and distance accurately, but its detection probability depends on the target's radar cross-section (RCS). Radio frequency (RF) recognition works day and night in all weather, has a long recognition range, is easy to implement, can detect the UAV startup process, and can locate the operator; however, its performance suffers when other signals coexist, it is ineffective against autonomously flying UAVs, its detection accuracy for small UAVs is low, and multipath propagation and obstacles in urban environments degrade its accuracy. Acoustic recognition has distinct advantages, including independence from line-of-sight constraints, effectiveness in all weather, low cost, and sensitivity to the acoustic characteristics of UAVs; this study therefore adopts a recognition method based on acoustic signals. Current research on acoustic UAV detection focuses mainly on constructing acoustic datasets and on designing and optimizing models.

Acoustic recognition of UAVs is highly dependent on acoustic data. Many researchers have significantly improved the generalization ability and accuracy of their models by augmenting data, constructing diverse datasets, and optimizing acoustic feature extraction. Al-Emadi et al. [18] synthesized hybrid acoustic data using a generative adversarial network (GAN) combined with a convolutional neural network (CNN), achieving high-accuracy detection of unknown UAV types. Wang et al. [19] constructed a dataset containing 15 categories of UAV audio, on which CNN-based classification reached an accuracy of 98.7%. Diao et al. [20] proposed an MFCC-based UAV acoustic fingerprint (voiceprint) authentication technique, providing a biometric-style identification solution for security scenarios. This study likewise employs MFCC feature extraction to characterize acoustic signals from various complex environments and constructs a dedicated database of acoustic features from complex environments.

In the design of UAV acoustic recognition models, traditional machine learning models and lightweight deep learning models are being developed in parallel, with continued efforts both to reduce computational cost [15] and to improve model performance [21]. In addition, improving noise robustness to enhance the environmental adaptability of models is another research hotspot. Encinas et al. [22] separated UAV noise in single-channel audio based on singular spectrum analysis (SSA). Fang et al. [23] achieved high-precision long-range localization by combining fiber optic acoustic sensors (FOASs) with distributed acoustic sensing (DAS). Sun et al. [24] improved the linear prediction spectrum (LSP) method, raising detection accuracy under background noise through covariance matrix reconstruction and filter optimization. Ibrahim et al. [25] analyzed the effect of UAV payload on acoustic features and, by combining MFCC with an SVM, classified UAVs under different payload conditions, verifying the model's environmental adaptability. These works indicate that UAV acoustic recognition models must become more robust to noise interference while keeping computational cost in check, which motivated the design of the attention mechanism in this study.

In summary, although UAV acoustic recognition has made progress, there is still room for development. First, the robustness of acoustic feature extraction is insufficient: the effectiveness of acoustic features is limited in dynamic acoustic environments, and feature variations across different flight regimes have received little study. Second, existing recognition models adapt poorly to complex environments: their recognition performance drops sharply at low signal-to-noise ratios and under strong background noise, and the influence of environmental factors on acoustic propagation is often neglected. Therefore, this study first applies MFCC to extract relevant features from complex acoustic signals and constructs a database of acoustic features, and then designs and optimizes an improved lightweight deep learning model, ResNet10_CBAM, to accurately identify UAV sounds in complex environments. UAV sounds are evaluated under four distinct noise environments at nine different signal-to-noise ratios (SNRs), ranging from −30 dB to 10 dB. The main contributions of this paper are as follows:

- A UAV acoustic feature database is constructed using MFCC feature extraction;

- An improved lightweight ResNet10_CBAM model is proposed;

- Acoustic recognition of UAVs is performed in complex environments, that is, at low signal-to-noise ratios and under varying levels of noise interference.

2. Proposed Method

2.1. Feature Extraction

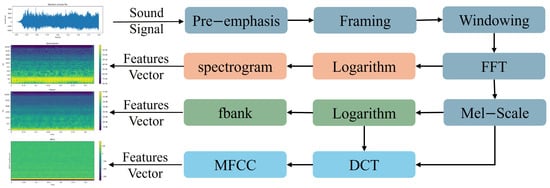

Field-recorded UAV acoustic signatures frequently contain ambient noise whose spectrum overlaps with that of the target signal, which poses significant challenges for source separation and classification. Transforming the raw acoustic data into a feature matrix through feature extraction, and using this matrix as the model input, can improve the classification of UAV acoustic signatures. In this study, three feature extraction methods, namely Spectrogram, Filterbank (Fbank), and Mel-frequency cepstral coefficients (MFCC), are compared to test their recognition performance in low signal-to-noise ratio environments. The flowchart of the three feature extraction methods is shown in Figure 1.

Figure 1.

The feature extraction flowchart of Spectrogram, Fbank, and MFCC.

The spectrogram extraction pipeline applies pre-emphasis to the acoustic signal, followed by framing, windowing, the fast Fourier transform (FFT), squaring of the spectral magnitude, and stacking of the frames over time to produce a 2D time–frequency energy feature matrix. Fbank differs from Spectrogram only in the additional Mel-scale filtering that follows the FFT. MFCC [26] further processes the Fbank output by applying the discrete cosine transform (DCT) to the logarithm of the Mel-scale filter outputs, yielding the final feature matrix.

During the feature extraction of UAV acoustic signals, pre-emphasis is first applied as a high-pass filter that boosts the high-frequency part of the input signal, thereby avoiding the distortion caused by disproportionately strong low-frequency and weak high-frequency components. The processed signal is formulated as follows:

$$ y(n) = x(n) - \alpha\, x(n-1) \tag{1} $$

where $x(n)$ is the raw acoustic signal, $y(n)$ is the pre-emphasized acoustic signal, and $\alpha$ is the pre-emphasis coefficient, which lies between 0.9 and 1. The audio signal is then divided into frames, with adjacent frames overlapping. Each frame is windowed with a Hamming window to reduce spectral leakage, and the windowed signal is transformed from the time domain to the frequency domain via the FFT to obtain the spectrum of each frame. Next, a Mel-scale filter bank divides the frequency axis into several bands, and each band is filtered by a triangular Mel-scale filter to extract its energy distribution. The frequency response of the $m$-th filter, $H_m(k)$, is as follows:

$$
H_m(k) =
\begin{cases}
0, & k < f(m-1) \\
\dfrac{k - f(m-1)}{f(m) - f(m-1)}, & f(m-1) \le k \le f(m) \\
\dfrac{f(m+1) - k}{f(m+1) - f(m)}, & f(m) < k \le f(m+1) \\
0, & k > f(m+1)
\end{cases}
\tag{2}
$$

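where $k$ denotes the frequency bin index and $f(m)$ is the center frequency of the $m$-th filter ($m = 1, 2, \ldots, M$). The logarithmic power spectrum is then obtained by taking the logarithm of each filter output:

$$ s(m) = \ln\!\left( \sum_{k=0}^{N-1} \lvert X(k) \rvert^{2} H_m(k) \right), \quad m = 1, 2, \ldots, M \tag{3} $$

where $X(k)$ is the $N$-point FFT of the windowed frame and $M$ is the number of Mel filters.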

Finally, the logarithmic energies are passed through a DCT to obtain the $L$-order MFCC coefficients:

$$ C(l) = \sum_{m=1}^{M} s(m) \cos\!\left( \frac{\pi l (m - 0.5)}{M} \right), \quad l = 1, 2, \ldots, L \tag{4} $$

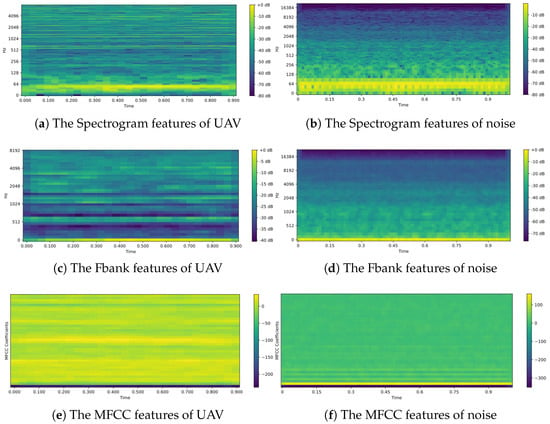

The resulting UAV acoustic features for the different processing pipelines are shown in Figure 2a,c,e, and the corresponding noise features are shown in Figure 2b,d,f.

Figure 2.

The map of UAV acoustic features and noise features.
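The pre-emphasis, framing, Hamming windowing, FFT, Mel filtering, logarithm, and DCT steps described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the 512-sample window and 256-sample hop follow Section 3.2, while the pre-emphasis coefficient (0.97), the number of Mel filters (26), and the number of cepstral coefficients (13) are assumptions chosen for illustration, and Fbank is returned as log Mel energies (a common convention, assumed here). The function returns all three features because they share every step up to the point where they branch.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sr: int) -> np.ndarray:
    """Triangular filters H_m(k); edges f(0)..f(M+1) are FFT bins evenly spaced on the Mel scale."""
    mel_edges = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_edges) / sr).astype(int)
    k = np.arange(n_fft // 2 + 1)
    fb = np.zeros((n_mels, k.size))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        rising = (k - left) / max(center - left, 1)
        falling = (right - k) / max(right - center, 1)
        fb[m - 1] = np.clip(np.minimum(rising, falling), 0.0, None)  # zero outside [left, right]
    return fb


def extract_features(x, sr=16000, n_fft=512, hop=256, alpha=0.97, n_mels=26, n_mfcc=13):
    """Return (spectrogram, fbank, mfcc), each shaped (n_frames, n_features)."""
    y = np.append(x[0], x[1:] - alpha * x[:-1])                   # pre-emphasis y(n) = x(n) - a*x(n-1)
    n_frames = 1 + (len(y) - n_fft) // hop                         # hop = n_fft / 2 gives 50% overlap
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = y[idx] * np.hamming(n_fft)                            # framing + Hamming window
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2      # |X(k)|^2 for each frame
    fbank = np.log(power @ mel_filterbank(n_mels, n_fft, sr).T + 1e-10)  # log Mel energies s(m)
    mel_idx = np.arange(1, n_mels + 1)
    orders = np.arange(1, n_mfcc + 1)
    dct = np.cos(np.pi * orders[:, None] * (mel_idx[None, :] - 0.5) / n_mels)  # (n_mfcc, n_mels)
    mfcc = fbank @ dct.T                                           # C(l) for l = 1..L
    return power, fbank, mfcc
```

For a 1-s clip at 16 kHz, this framing yields 1 + (16000 − 512) // 256 = 61 frames, so the Spectrogram, Fbank, and MFCC matrices are 61 × 257, 61 × 26, and 61 × 13, respectively. The three outputs differ only by the extra Mel filtering (Fbank) and the extra DCT (MFCC), mirroring the flowchart in Figure 1.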

2.2. Deep Model Architecture

2.2.1. Models and Methods

Four typical neural network models are used for comparative analysis, and ResNet is then improved and optimized. AlexNet, proposed by Krizhevsky et al. [27], is a milestone among deep convolutional neural networks. Its structure contains five convolutional layers and three fully connected layers, and it replaces the traditional sigmoid activation, whose saturated regions cause vanishing gradients, with the piecewise-linear ReLU activation function. This significantly accelerates training convergence and enhances the model's nonlinear expressive ability. To prevent overfitting, AlexNet pioneered the use of Dropout in its fully connected layers, dynamically sparsifying the network by randomly dropping neurons with probability p during forward propagation. Subsequently, Szegedy et al. [28] proposed GoogleNet, whose core Inception module achieves multi-scale feature fusion by performing 1 × 1, 3 × 3, and 5 × 5 convolutions and 3 × 3 max pooling in parallel. The output feature maps of the branches are integrated through channel concatenation. This structure increases the width of the network while controlling the amount of computation, and significantly improves its feature expression capability.

To further address the degradation problem in deep networks, He et al. [29] introduced ResNet, which incorporates a residual learning mechanism. Its basic unit uses shortcut connections to realize identity mapping, allowing gradients to bypass layers and propagate directly to shallow layers during backpropagation, which effectively mitigates vanishing gradients. This study also compares a recurrent neural network (RNN) model, which processes sequential data through recurrent connections in which the recurrent units are linked in a chain along the sequence.

Four typical network models, GoogleNet, ResNet, AlexNet, and RNN, are compared in this study. Weighing the strengths and weaknesses of each framework together with preliminary experimental validation, ResNet was found to be more effective than the others, and it is therefore further improved to obtain an optimized model for UAV acoustic recognition.

2.2.2. Model Optimization

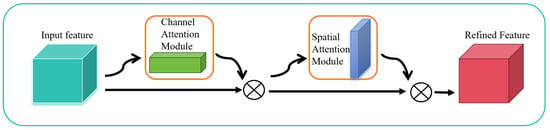

To overcome the limitations of traditional convolutional neural networks in handling information of different scales, shapes, and orientations, the convolutional block attention module (CBAM) is used to optimize the model. CBAM introduces two types of attention: channel attention and spatial attention. The channel attention mechanism, illustrated in Figure 3, first applies global average pooling (GAP) and global max pooling (GMP) to the input feature matrix to obtain two vectors of size $C \times 1 \times 1$. These two vectors are then fed into a shared multi-layer perceptron (MLP) to generate the corresponding channel attention weights. Finally, the two weight vectors are summed and normalized with a sigmoid function to yield the channel attention map. The channel attention mechanism is formulated as follows:

$$ M_c(F) = \sigma\big( \mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F)) \big) \tag{5} $$

where $F$ is the input feature matrix, $C$ is its number of channels, and $\sigma$ is the sigmoid function.

Figure 3.

The channel attention module.

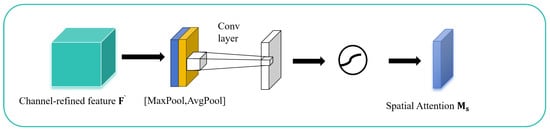

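The spatial attention mechanism applies average pooling and max pooling along the channel dimension of the channel-weighted feature matrix to obtain two feature matrices of size $1 \times H \times W$. After these two matrices are concatenated, a convolutional layer generates the spatial attention feature matrix, and a sigmoid function normalizes it to produce the spatial attention weights. The spatial attention mechanism is formulated as follows:

$$ M_s(F') = \sigma\big( f([\mathrm{AvgPool}(F');\, \mathrm{MaxPool}(F')]) \big) \tag{6} $$

where $F'$ is the channel-weighted feature matrix, $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension, $f$ is the convolution operation (a 7 × 7 kernel in the original CBAM design), and $H$ and $W$ are the height and width of the feature matrix.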

The spatial attention mechanism is shown in Figure 4. Channel attention enhances the feature representation of different channels, while spatial attention extracts key information at different spatial locations. CBAM applies the two modules in sequence: the input features are multiplied element-wise by the channel attention weights, and the result is multiplied element-wise by the spatial attention weights, giving the final attention-enhanced features that serve as input to the subsequent network layers. This attention fusion preserves critical signal information while suppressing noise and irrelevant information. The CBAM is shown in Figure 5.

Figure 4.

The spatial attention module.

Figure 5.

The convolutional block attention module.
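The channel and spatial attention described above can be sketched as PyTorch modules. This follows the standard CBAM formulation; the reduction ratio of 16 in the shared MLP and the 7 × 7 spatial convolution are defaults of the original CBAM design, not values reported in this study, so treat them as assumptions. The shared MLP is written with 1 × 1 convolutions, which is equivalent to a fully connected MLP applied to the pooled C × 1 × 1 vectors.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Channel attention: sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F)))."""

    def __init__(self, channels: int, reduction: int = 16):
        super().__init__()
        hidden = max(channels // reduction, 1)
        # Shared MLP written as 1x1 convolutions acting on C x 1 x 1 pooled vectors
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, hidden, kernel_size=1, bias=False),
            nn.ReLU(),
            nn.Conv2d(hidden, channels, kernel_size=1, bias=False),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        avg = self.mlp(torch.mean(x, dim=(2, 3), keepdim=True))  # GAP branch
        mx = self.mlp(torch.amax(x, dim=(2, 3), keepdim=True))   # GMP branch
        return torch.sigmoid(avg + mx)                            # weights of shape (B, C, 1, 1)


class SpatialAttention(nn.Module):
    """Spatial attention: sigmoid(conv([channel-avg(F); channel-max(F)]))."""

    def __init__(self, kernel_size: int = 7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        avg = torch.mean(x, dim=1, keepdim=True)  # (B, 1, H, W)
        mx = torch.amax(x, dim=1, keepdim=True)   # (B, 1, H, W)
        return torch.sigmoid(self.conv(torch.cat([avg, mx], dim=1)))


class CBAM(nn.Module):
    """Channel attention followed by spatial attention, each applied by element-wise multiplication."""

    def __init__(self, channels: int, reduction: int = 16, kernel_size: int = 7):
        super().__init__()
        self.channel = ChannelAttention(channels, reduction)
        self.spatial = SpatialAttention(kernel_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = x * self.channel(x)     # broadcast over H and W
        return x * self.spatial(x)  # broadcast over channels
```

Because both attention maps pass through a sigmoid, every weight lies in (0, 1), so CBAM can only rescale (attenuate or keep) features; the output always has the same shape as the input, which is what allows it to be dropped into an existing residual block.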

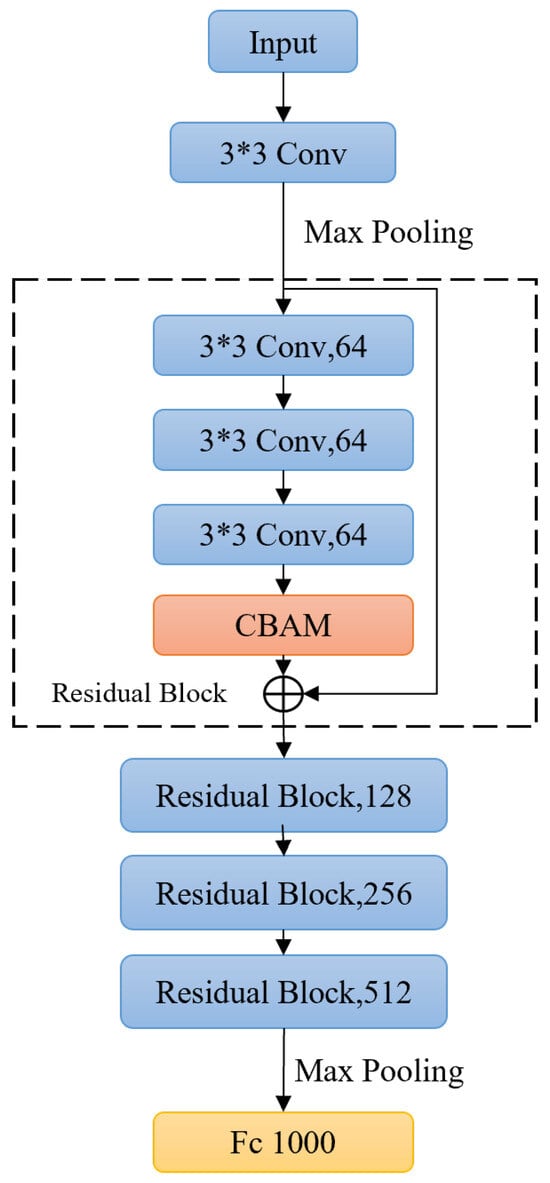

The lightweight ResNet10 network is first built on the basis of ResNet, and CBAM and a dropout layer are then added to the basic residual block. The resulting improved ResNet10_CBAM network model is shown in Figure 6.

Figure 6.

The improved ResNet10_CBAM network model.
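Figure 6 is not reproduced here, so the following PyTorch sketch is one plausible reading of the description above rather than the authors' exact network. Assumptions: ResNet10 is taken as a 3 × 3 stem convolution followed by four stages of one basic residual block each (widths 64, 128, 256, 512), which together with the final fully connected layer gives ten weighted layers; CBAM (reduction 16, 7 × 7 spatial kernel) and a dropout layer with p = 0.2 are placed after the second convolution of each block, before the shortcut addition. The input is a single-channel MFCC matrix, and the class names `BasicBlockCBAM` and `ResNet10CBAM` are hypothetical.

```python
import torch
import torch.nn as nn


class CBAM(nn.Module):
    """Compact CBAM: channel attention, then spatial attention (reduction 16, 7x7 kernel assumed)."""

    def __init__(self, c: int, reduction: int = 16, k: int = 7):
        super().__init__()
        h = max(c // reduction, 1)
        self.mlp = nn.Sequential(nn.Conv2d(c, h, 1, bias=False), nn.ReLU(),
                                 nn.Conv2d(h, c, 1, bias=False))
        self.conv = nn.Conv2d(2, 1, k, padding=k // 2, bias=False)

    def forward(self, x):
        x = x * torch.sigmoid(self.mlp(x.mean((2, 3), keepdim=True)) +
                              self.mlp(x.amax((2, 3), keepdim=True)))
        pooled = torch.cat([x.mean(1, keepdim=True), x.amax(1, keepdim=True)], dim=1)
        return x * torch.sigmoid(self.conv(pooled))


class BasicBlockCBAM(nn.Module):
    """Basic residual block; CBAM and dropout placed before the shortcut addition (assumed)."""

    def __init__(self, in_c: int, out_c: int, stride: int = 1, p_drop: float = 0.2):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(in_c, out_c, 3, stride, 1, bias=False), nn.BatchNorm2d(out_c), nn.ReLU(),
            nn.Conv2d(out_c, out_c, 3, 1, 1, bias=False), nn.BatchNorm2d(out_c),
        )
        self.cbam = CBAM(out_c)
        self.drop = nn.Dropout(p_drop)
        self.shortcut = nn.Identity()
        if stride != 1 or in_c != out_c:
            # 1x1 projection so the shortcut matches the block output shape
            self.shortcut = nn.Sequential(nn.Conv2d(in_c, out_c, 1, stride, bias=False),
                                          nn.BatchNorm2d(out_c))

    def forward(self, x):
        out = self.drop(self.cbam(self.body(x)))
        return torch.relu(out + self.shortcut(x))


class ResNet10CBAM(nn.Module):
    """Stem conv + 4 stages x 1 block (8 convs) + FC = 10 weighted layers."""

    def __init__(self, in_channels: int = 1, num_classes: int = 2):
        super().__init__()
        self.stem = nn.Sequential(nn.Conv2d(in_channels, 64, 3, 1, 1, bias=False),
                                  nn.BatchNorm2d(64), nn.ReLU())
        blocks, c = [], 64
        for i, width in enumerate([64, 128, 256, 512]):
            blocks.append(BasicBlockCBAM(c, width, stride=1 if i == 0 else 2))
            c = width
        self.stages = nn.Sequential(*blocks)
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(c, num_classes)  # two logits: "UAV" vs "Noise"

    def forward(self, x):
        x = self.stages(self.stem(x))
        return self.fc(torch.flatten(self.pool(x), 1))
```

Using one block per stage instead of ResNet18's two is what removes eight of the 3 × 3 convolutions, which is the main source of the parameter reduction relative to ResNet18 in this reading of the design.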

ResNet10_CBAM is a binary classification model that predicts either "UAV" or "Noise" for each input based on the predicted probability distribution. The final layer of the model is a fully connected layer that maps the features extracted by the preceding layers to the category space and outputs a two-element vector whose entries represent the probabilities of the input belonging to each category. The category with the highest probability is selected as the final prediction. During training, the cross-entropy loss function measures the difference between the model output and the true labels, and the Adam optimizer iteratively updates the model parameters to minimize this loss.
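A minimal PyTorch sketch of the training objective and decision rule described above follows. The tiny linear model is a hypothetical stand-in for ResNet10_CBAM so that the snippet runs on its own; the learning rate (1e-3), the input size (13 × 61 MFCC frames), and the label order (0 = Noise, 1 = UAV) are assumptions. `nn.CrossEntropyLoss` applies log-softmax internally, so the network outputs raw scores, and the softmax is applied explicitly only when probabilities are needed for prediction.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for ResNet10_CBAM: maps (B, 1, 13, 61) MFCC inputs to 2 logits
model = nn.Sequential(nn.Flatten(), nn.Linear(13 * 61, 2))
criterion = nn.CrossEntropyLoss()                          # log-softmax + negative log-likelihood
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # learning rate is an assumption
CLASSES = ("Noise", "UAV")                                 # assumed label order: 0 = Noise, 1 = UAV


def train_step(features: torch.Tensor, labels: torch.Tensor) -> float:
    """One Adam update that reduces the cross-entropy between logits and true labels."""
    model.train()
    optimizer.zero_grad()
    loss = criterion(model(features), labels)
    loss.backward()
    optimizer.step()
    return loss.item()


@torch.no_grad()
def predict(features: torch.Tensor) -> list:
    """Pick the class with the highest softmax probability for each input."""
    model.eval()
    probs = torch.softmax(model(features), dim=1)
    return [CLASSES[i] for i in probs.argmax(dim=1).tolist()]
```

Since softmax is monotonic, taking the argmax of the probabilities gives the same class as taking the argmax of the raw logits; the softmax is kept only to mirror the "highest probability" wording.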

3. Experimental Setting and Results

3.1. Datasets

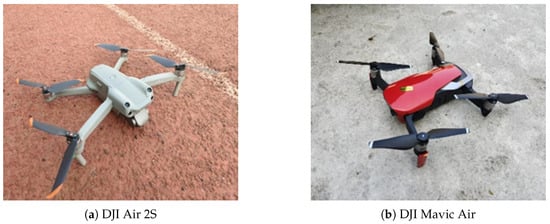

The acoustic training data used in this study, detailed in Table 1, were recorded from five different UAVs. Two of them, Bebop and Membo, come from a public dataset [30], and the other three, DJI Air 2S, DJI Mavic Air, and DJI Spark, were recorded in our laboratory. All acoustic signals were resampled to 16 kHz and segmented into 1-s clips. To increase the signal diversity of the UAV data, augmentation was performed by mixing UAV acoustic signals with noise samples. The augmented portion totals 10,460 s of audio (including the original recordings), and the complete dataset totals 14,407 s.

Table 1.

The acoustic dataset for training.

In the experiment, the laboratory recordings of DJI Air 2S, DJI Mavic Air, and DJI Spark were randomly mixed with various environmental noises to augment the data. Because Bebop and Membo were not acquired with the laboratory's instrumentation, these data were not augmented. Specifically, each second of the 2615 s of self-recorded UAV audio was randomly mixed with a one-second noise clip. Repeating this three times with different noises generated 7845 s of UAV audio containing different background sounds. The augmented portion shown in Table 1 includes the original samples (2615 s + 7845 s = 10,460 s). Finally, the 14,407 s of data include the 1332 s of open-source data and the 10,460 s of augmented data.
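The augmentation procedure can be sketched as below, assuming the recordings are already cut into 1-s, 16 kHz clips stored as NumPy arrays. The text does not state how the UAV and noise clips were weighted during augmentation, so plain addition with unit gain is an assumption, as are the hypothetical function name `augment_with_noise` and the choice of three distinct noise clips per UAV clip drawn without replacement.

```python
import numpy as np


def augment_with_noise(uav_clips: np.ndarray, noise_clips: np.ndarray,
                       n_mixes: int = 3, seed: int = 0) -> np.ndarray:
    """Return the original UAV clips followed by n_mixes noisy copies of each.

    uav_clips:   (n_uav, 16000) array of 1-s UAV clips at 16 kHz
    noise_clips: (n_noise, 16000) array of 1-s environmental noise clips
    """
    rng = np.random.default_rng(seed)
    mixed = []
    for clip in uav_clips:
        # a different, randomly chosen noise clip for each of the n_mixes copies
        for j in rng.choice(len(noise_clips), size=n_mixes, replace=False):
            mixed.append(clip + noise_clips[j])  # additive mixing with unit gain (assumed)
    return np.concatenate([uav_clips, np.stack(mixed)], axis=0)
```

With 2615 one-second UAV clips and three mixes each, the function returns 2615 + 3 × 2615 = 10,460 clips, which matches the size of the augmented portion in Table 1.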

Signal-to-noise ratio (SNR) is an important parameter for measuring signal quality; it is the ratio of the power of the target signal to that of the background noise:

$$ \mathrm{SNR} = \frac{P_s}{P_n} \tag{7} $$

where $P_s$ is the signal power and $P_n$ is the noise power. SNR is usually expressed in decibels (dB):

$$ \mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10}\!\left( \frac{P_s}{P_n} \right) \tag{8} $$

In this study, the signal power was calculated by averaging the squared sample values $s(n)$ of the signal:

$$ P_s = \frac{1}{N} \sum_{n=1}^{N} s^{2}(n) \tag{9} $$

where $N$ is the number of samples.

As shown in Table 2, test data at different SNRs, computed with the above formulas, were generated. Four environmental noises, Car_horn, Drilling, Jackhammer, and Siren, were used. By mixing the UAV acoustic data with background noise at different levels, nine SNRs from 10 dB to −30 dB in steps of 5 dB were obtained. The sample sizes under the four noise backgrounds were 9 × 494, 9 × 302, 9 × 238, and 9 × 518, respectively. These data were used to simulate different noise environments at the different SNRs.
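The SNR definitions above translate directly into a helper that scales a noise clip so that the mixture reaches a target SNR. This is a sketch under the assumption that the noise is rescaled while the UAV signal is left unchanged; the study does not say which component was scaled, and the names `power`, `snr_db`, and `mix_at_snr` are hypothetical.

```python
import numpy as np


def power(x: np.ndarray) -> float:
    # Mean of the squared sample values
    return float(np.mean(np.square(x)))


def snr_db(signal: np.ndarray, noise: np.ndarray) -> float:
    # 10 * log10(P_s / P_n)
    return 10.0 * np.log10(power(signal) / power(noise))


def mix_at_snr(uav: np.ndarray, noise: np.ndarray, target_db: float):
    """Scale the noise so that P_s / P_n equals the target SNR, then add it to the UAV clip."""
    gain = np.sqrt(power(uav) / (power(noise) * 10.0 ** (target_db / 10.0)))
    scaled = gain * noise
    return uav + scaled, scaled


snr_levels = list(range(10, -31, -5))  # 10, 5, ..., -30 dB: the nine test levels
```

The gain follows from requiring gain² · P_n = P_s / 10^(SNR/10): scaling the noise amplitude by `gain` scales its power by gain², which sets the power ratio to exactly the target value in decibels.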

Table 2.

The acoustic dataset for testing.

3.2. Experimental Details

The acoustic data were resampled to 16 kHz, and the framing parameters were set to a 512-sample window with 50% overlap, that is, a 256-sample stride. These settings were used to compute the MFCC feature matrices. The resulting 2D features were fed into the network, and the adaptive moment estimation (Adam) optimizer was used to train the ResNet10_CBAM model.

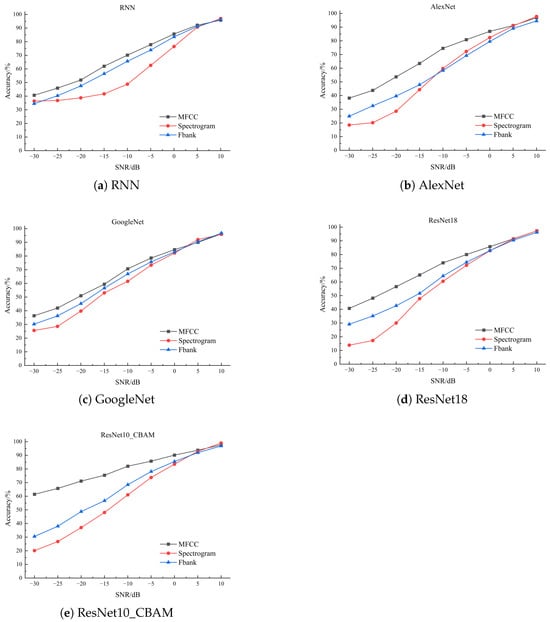

All experiments were conducted in a Python 3.8 environment, using the PyTorch 1.12 framework to build the neural networks and the Librosa 0.9.2 library for feature extraction. The experimental platform ran Windows 11 on a computer with an R5-4600H CPU and a GTX1650 GPU. Part of the experimental equipment is shown in Figure 7. Figure 7a–c shows the laboratory's three UAVs, DJI Air 2S, DJI Mavic Air, and DJI Spark, which were the recording sources of the corresponding datasets. Figure 7d shows the MPA201 microphone used for recording, whose frequency response range is 10 Hz to 20 kHz. Figure 7e shows the Cube4Nano sound card, whose frequency response range is 20 Hz to 20 kHz. Figure 7f shows the recording site, which is surrounded by buildings so that wind noise causes very little interference with the sensors.

Figure 7.

Partial experimental equipment and experimental scenarios.

3.3. Evaluation Metrics

In the experiments, accuracy, precision, recall, and F1 score were used to assess the acoustic recognition performance of the different models. Accuracy is the proportion of input acoustic signals whose category the model recognizes correctly. Precision is the proportion of samples predicted as positive that are actually positive. Recall is the proportion of actual positive samples that the model correctly predicts as positive. The F1 score is the harmonic mean of precision and recall and therefore balances the two; it ranges from 0 to 1, with higher values indicating better performance. Together, these metrics provide a comprehensive evaluation of the UAV acoustic classifiers. The formulas for accuracy, precision, recall, and F1 score are shown in Equations (10)–(13).

$$ \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{10} $$

$$ \mathrm{Precision} = \frac{TP}{TP + FP} \tag{11} $$

$$ \mathrm{Recall} = \frac{TP}{TP + FN} \tag{12} $$

$$ F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{13} $$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives, respectively. TP is the number of positive audio clips correctly predicted as positive, TN is the number of negative audio clips correctly predicted as negative, FP is the number of negative audio clips incorrectly predicted as positive, and FN is the number of positive audio clips incorrectly predicted as negative.
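Equations (10)–(13) can be computed from the confusion-matrix counts with a few lines of Python. The function names are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed here rather than one stated in the study.

```python
def f1_score(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int):
    """Return (accuracy, precision, recall, F1) from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall, f1_score(precision, recall)


# Consistency check with the precision and recall reported for ResNet10_CBAM in Table 3
print(round(100 * f1_score(0.9409, 0.9452), 2))  # prints 94.3
```

Applying the F1 formula to the reported precision (94.09%) and recall (94.52%) gives approximately 94.30%, which matches the F1 score reported for ResNet10_CBAM.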

3.4. Experimental Results

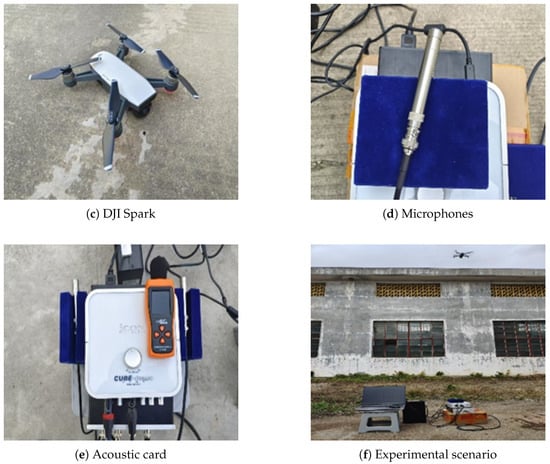

3.4.1. Experimental Results of Feature Extraction

The experiment first compared the recognition performance of the different models at different SNRs using the three feature extraction methods, Spectrogram, Fbank, and MFCC; the results are shown in Figure 8. The same pattern appears in all five models: at most SNRs, the accuracy obtained with MFCC is higher than that obtained with Spectrogram or Fbank. Only when the SNR is above 5 dB is the accuracy with Spectrogram and Fbank slightly higher than with MFCC. MFCC therefore outperforms the other two methods in low-SNR environments and remains competitive at higher SNRs, so MFCC was used for feature extraction in all subsequent experiments.

Figure 8.

The performance of the five models using different feature extraction methods.

3.4.2. Experimental Results of Model Comparison

Four models, RNN, AlexNet, GoogleNet, and ResNet18, were compared with the improved ResNet10_CBAM network model, and the results are shown in Table 3. The proposed ResNet10_CBAM model outperformed the other four models on all four metrics, achieving an accuracy of 94.45%, a precision of 94.09%, a recall of 94.52%, and an F1 score of 94.30%. Moreover, ResNet10_CBAM has significantly fewer parameters than the baseline ResNet18 model.

Table 3.

The evaluation results of each model.

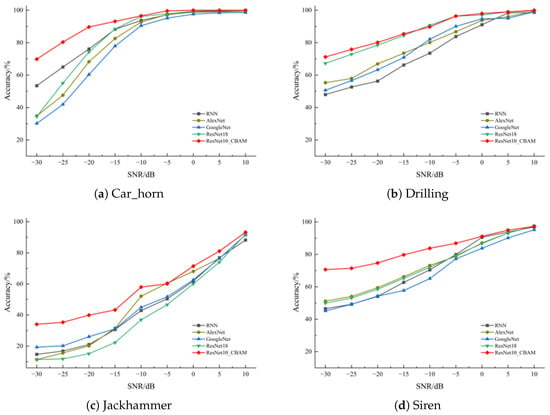

3.4.3. Experimental Results of Model Comparison in Different Environments

Next, we compared the recognition performance of each model in different environments. The test data consist of UAV audio recordings mixed with various background noises at different SNRs, and the results are shown in Figure 9. In all four environments, the improved ResNet10_CBAM model performed well at low SNRs, with the largest gains in the Car_horn, Jackhammer, and Siren environments. In the Drilling environment, however, its accuracy was close to that of ResNet18, indicating that the proposed model still needs improvement there.

Figure 9.

The accuracy of models in different environments with different SNRs.

In Figure 9, every model performed worse under Jackhammer noise than under Siren noise, which may be related to the time-varying characteristics of the two noises. A siren typically exhibits sinusoidal amplitude modulation, meaning its loudness varies periodically over time, so the model can more easily distinguish the UAV sound from the background during the quieter phases of the siren. Jackhammer noise, by contrast, is relatively steady: its loudness and frequency characteristics change little over time, so the model cannot exploit quiet periods like those of the siren for more accurate classification. This result suggests that, under different background noises, recognition accuracy depends not only on the SNR but also on the characteristics of the noise.
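The quiet-period argument can be illustrated with synthetic signals. The sketch below is purely hypothetical: a 200 Hz tone stands in for the UAV, white noise with constant loudness stands in for Jackhammer-like noise, and white noise with a 2 Hz sinusoidal envelope stands in for Siren-like noise; none of these signals or parameters come from the study. Both noises are scaled to the same global SNR of −20 dB, and the per-frame SNR over non-overlapping 1024-sample frames is then compared.

```python
import numpy as np


def frame_snr_db(signal: np.ndarray, noise: np.ndarray, frame_len: int = 1024) -> np.ndarray:
    """Per-frame SNR in dB over non-overlapping frames."""
    n = len(signal) // frame_len
    s = signal[: n * frame_len].reshape(n, frame_len)
    v = noise[: n * frame_len].reshape(n, frame_len)
    return 10 * np.log10(np.mean(s ** 2, axis=1) / np.mean(v ** 2, axis=1))


def scale_to_snr(signal: np.ndarray, noise: np.ndarray, target_db: float) -> np.ndarray:
    """Rescale the noise so that the global SNR equals target_db."""
    gain = np.sqrt(np.mean(signal ** 2) / (np.mean(noise ** 2) * 10 ** (target_db / 10)))
    return gain * noise


sr = 16000
t = np.arange(2 * sr) / sr                            # 2 s of synthetic audio
rng = np.random.default_rng(0)
uav = np.sin(2 * np.pi * 200 * t)                     # hypothetical UAV stand-in
steady = rng.standard_normal(t.size)                  # constant loudness (Jackhammer-like)
envelope = 0.5 * (1 + np.sin(2 * np.pi * 2 * t))      # 2 Hz sinusoidal AM (assumed rate)
modulated = rng.standard_normal(t.size) * envelope    # periodic quiet phases (Siren-like)

steady_snr = frame_snr_db(uav, scale_to_snr(uav, steady, -20))
modulated_snr = frame_snr_db(uav, scale_to_snr(uav, modulated, -20))
print(f"steady noise: max frame SNR {steady_snr.max():.1f} dB")
print(f"modulated noise: max frame SNR {modulated_snr.max():.1f} dB")
```

Although both mixtures have the same overall SNR, the modulated noise produces frames whose local SNR lies far above −20 dB, whereas the steady noise keeps every frame close to −20 dB. This is consistent with, though not proof of, the explanation given above.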

To compare the overall performance of the models, the average accuracy of each of the five models over the four noise environments was further computed at each of the nine SNRs (from 10 dB to −30 dB in 5 dB steps); the results are shown in Table 4.

Table 4.

The average accuracy of the five models at different SNR values.

Using the mean accuracy of each model over the four noise environments at the same SNR as the evaluation index, the improved ResNet10_CBAM model clearly achieved higher accuracy than the other four models. Moreover, the lower the SNR, the larger the improvement in average accuracy achieved by ResNet10_CBAM.

4. Conclusions

This study proposed a UAV acoustic recognition model, ResNet10_CBAM, which accurately identifies UAVs from the acoustic features of UAV flight, aiming to meet the urgent need for low-altitude UAV supervision and safety control in civil scenarios such as agricultural inspection, logistics airspace, and public areas. A UAV acoustic dataset of 14,407 s was built to train and evaluate the models, and the ResNet10_CBAM model was designed by improving ResNet. Three feature extraction methods were compared, and, using the best-performing method, MFCC, the RNN, AlexNet, GoogleNet, ResNet18, and proposed ResNet10_CBAM models were compared in different environments and at different SNRs. The experimental results showed that MFCC feature extraction outperforms the other two methods and that ResNet10_CBAM outperforms the other four models in accuracy, precision, recall, and F1 score. The UAVs considered in this study were all at close range, and each experiment used a single background noise, whereas real environments may contain multiple simultaneous noise sources. Future work could therefore address the recognition of long-range UAV acoustic signals and UAV identification under multiple concurrent background noises in real environments.

Author Contributions

Conceptualization, Z.L. and K.F.; methodology, Z.L. and L.X.; software, Z.L., H.Z., J.Y., and A.F.; validation, K.F., Z.L., Y.C., and A.F.; writing—original draft preparation, Z.L., H.Z., and J.Y.; writing—review and editing, Z.L., L.X., Y.C., and K.F.; funding acquisition, K.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China grant numbers No. 62363014, No. 61763018; the Program of Qingjiang Excellent Young Talents in Jiangxi University of Science and Technology grant number JXUSTQJBJ2019004; the Key Program of Ganzhou Science and Technology grant number GZ2024ZDZ008.

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rejeb, A.; Abdollahi, A.; Rejeb, K.; Treiblmaier, H. Drones in Agriculture: A Review and Bibliometric Analysis. Comput. Electron. Agric. 2022, 198, 107017.

- Misara, R.; Verma, D.; Mishra, N.; Rai, S.K.; Mishra, S. Twenty-Two Years of Precision Agriculture: A Bibliometric Review. Precis. Agric. 2022, 23, 2135–2158.

- Zhang, Y.; Onda, Y.; Kato, H.; Feng, B.; Gomi, T. Understory Biomass Measurement in a Dense Plantation Forest Based on Drone-SfM Data by a Manual Low-Flying Drone under the Canopy. J. Environ. Manag. 2022, 312, 114862.

- McKinney, M.; Wavrek, M.; Carr, E.; Jean-Philippe, S. Drone Remote Sensing in Urban Forest Management: A Case Study. SSRN Electron. J. 2022, 86, 127978.

- Nooralishahi, P.; Ibarra-Castanedo, C.; Deane, S.; López, F.; Pant, S.; Genest, M.; Avdelidis, N.P.; Maldague, X.P.V. Drone-Based Non-Destructive Inspection of Industrial Sites: A Review and Case Studies. Drones 2021, 5, 106.

- Hong, F.; Wu, G.; Luo, Q.; Huan, L.; Fang, X.; Pedrycz, W. Logistics in the Sky: A Two-Phase Optimization Approach for the Drone Package Pickup and Delivery System. IEEE Trans. Intell. Transp. Syst. 2023, 24, 9175–9190.

- Karaca, Y.; Cicek, M.; Tatli, O.; Sahin, A.; Pasli, S.; Beser, M.F.; Turedi, S. The Potential Use of Unmanned Aircraft Systems (Drones) in Mountain Search and Rescue Operations. Am. J. Emerg. Med. 2018, 36, 583–588.

- Samadzadegan, F.; Dadrass Javan, F.; Ashtari Mahini, F.; Gholamshahi, M. Detection and Recognition of Drones Based on a Deep Convolutional Neural Network Using Visible Imagery. Aerospace 2022, 9, 31.

- Liu, H.; Fan, K.; Ouyang, Q.; Li, N. Real-Time Small Drones Detection Based on Pruned YOLOv4. Sensors 2021, 21, 3374.

- Ezuma, M.; Anjinappa, C.K.; Semkin, V.; Guvenc, I. Comparative Analysis of Radar-Cross-Section-Based UAV Recognition Techniques. IEEE Sens. J. 2022, 22, 17932–17949.

- Kumar, P.; Idsøe, H.; Yakkati, R.R.; Kumar, A.; Zafar, M.; Yalavarthy, P.K.; Cenkeramaddi, L.R. Localization and Activity Classification of Unmanned Aerial Vehicle Using MmWave FMCW Radars. IEEE Sens. J. 2021, 21, 16043–16053.

- Xue, C.; Li, T.; Li, Y.; Ruan, Y.; Zhang, R.; Dobre, O.A. Radio-Frequency Identification for Drones with Nonstandard Waveforms Using Deep Learning. IEEE Trans. Instrum. Meas. 2023, 72, 5503713.

- Mohammed, K.K.; El-Latif, E.I.A.; El-Sayad, N.E.; Darwish, A.; Hassanien, A.E. Radio Frequency Fingerprint-Based Drone Identification and Classification Using Mel Spectrograms and Pre-Trained YAMNet Neural. Internet Things 2023, 23, 100879.

- Kümmritz, S. The Sound of Surveillance: Enhancing Machine Learning-Driven Drone Detection with Advanced Acoustic Augmentation. Drones 2024, 8, 105.

- Aydın, İ.; Kızılay, E. Development of a New Light-Weight Convolutional Neural Network for Acoustic-Based Amateur Drone Detection. Appl. Acoust. 2022, 193, 108773.

- Ding, R.; Yang, L.; Shan, Z.; Wang, Y.; Ren, H.; Liu, L.; Yu, L.; Liu, B. Drone Detection and Recognition Based on Fiber-Optic EFPI Acoustic Sensor and CNN-LSTM Network Model. IEEE Sens. J. 2024, 24, 28835–28843.

- Wang, W.; Fan, K.; Ouyang, Q.; Yuan, Y. Acoustic UAV Detection Method Based on Blind Source Separation Framework. Appl. Acoust. 2022, 200, 109057.

- Al-Emadi, S.; Al-Ali, A.; Al-Ali, A. Audio-Based Drone Detection and Identification Using Deep Learning Techniques with Dataset Enhancement through Generative Adversarial Networks. Sensors 2021, 21, 4953.

- Wang, M.Y.; Chu, Z.; Ku, I.; Smith, E.C.; Matson, E.T. A 15-Category Audio Dataset for Drones and an Audio-Based UAV Classification Using Machine Learning. Int. J. Semant. Comput. 2023, 18, 257–272.

- Diao, Y.; Zhang, Y.; Zhao, G.; Khamis, M. Drone Authentication via Acoustic Fingerprint; The University of Glasgow: Enlighten, UK, 2022; pp. 658–668.

- Akbal, E.; Akbal, A.; Dogan, S.; Tuncer, T. An Automated Accurate Sound-Based Amateur Drone Detection Method Based on Skinny Pattern. Digit. Signal Process. 2023, 136, 104012.

- Encinas, F.G.; Silva, L.A.; Mendes, A.S.; Gonzalez, G.V.; Reis, V.; Francisco, J. Singular Spectrum Analysis for Source Separation in Drone-Based Audio Recording. IEEE Access 2021, 9, 43444–43457.

- Fang, J.; Li, Y.; Ji, P.N.; Wang, T. Drone Detection and Localization Using Enhanced Fiber-Optic Acoustic Sensor and Distributed Acoustic Sensing Technology. J. Light. Technol. 2022, 41, 822–831. [Google Scholar] [CrossRef]

- Sun, Y.; Liu, Y.; Wang, L.; Li, J.; Wang, J.; Zhang, A.; Wang, S. Improved Method for Drone Sound Event Detection System Aiming at the Impact of Background Noise and Angle Deviation. Sens. Actuators A Phys. 2024, 377, 115676. [Google Scholar] [CrossRef]

- Ibrahim, O.A.; Sciancalepore, S.; Di Pietro, R. Noise2Weight: On Detecting Payload Weight from Drones Acoustic Emissions. Future Gener. Comput. Syst. 2022, 137, 319–333. [Google Scholar] [CrossRef]

- Rabiner, L.R.; Schafer, R.W. Introduction to Digital Speech Processing. Found. Trends® Signal Process. 2007, 1, 1–194. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2012, 60, 84–90. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; Volume 7, pp. 1–9. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Al-Emadi, S. Saraalemadi/DroneAudioDataset. Available online: https://github.com/saraalemadi/DroneAudioDataset (accessed on 23 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).